Abstract

Cascading failures constitute an important vulnerability of interconnected systems. Here we focus on the study of such failures on networks in which the connectivity of nodes is constrained by geographical distance. Specifically, we use random geometric graphs as representative examples of such spatial networks, and study the properties of cascading failures on them in the presence of distributed flow. The key finding of this study is that the process of cascading failures is non-self-averaging on spatial networks, and thus, aggregate inferences made from analyzing an ensemble of such networks lead to incorrect conclusions when applied to a single network, no matter how large the network is. We demonstrate that this lack of self-averaging disappears with the introduction of a small fraction of long-range links into the network. We simulate the well studied preemptive node removal strategy for cascade mitigation and show that it is largely ineffective in the case of spatial networks. We introduce an altruistic strategy designed to limit the loss of network nodes in the event of a cascade triggering failure and show that it performs better than the preemptive strategy. Finally, we consider a real-world spatial network viz. a European power transmission network and validate that our findings from the study of random geometric graphs are also borne out by simulations of cascading failures on the empirical network.

Introduction

Cascading failures represent a particular vulnerability of networked systems, and their effects have been experienced and documented in several domains such as infrastructure networks [1], financial systems [2], and biological systems [3]. An important feature of real-world networks that has not been incorporated into most studies on cascading failures, with some notable exceptions [4]–[6], is the fact that they are subject to spatial constraints. In other words, in most real-world networks, which node a given node connects to, or interacts with, is largely determined by the geographic distance between the two nodes. This rather severe constraint has important consequences on the network's behavior, and gives rise to significant differences in the scaling behavior of quantities of interest when compared to spatially unconstrained networks [7].

In the context of cascading failures and strategies for their mitigation, studying the effect of spatial constraints is crucial to providing fundamental insights that are practically applicable. A specific context within which studies of cascading failures have proliferated is that of electrical power transmission systems [4], [5], [8]–[12]. However, understanding such failures in the more general context of flow bearing networks is just as important, especially when confronted with the imminent rise of technologies like the Internet of Things [13], which essentially consists of everyday physical objects equipped with sensors to communicate with users or other objects within their range.

Motivated by these reasons, we study a model of load-based cascading failures on networks on a particular class of spatially constrained networks – the Random Geometric Graph (RGG) [14], [15] – carrying distributed flows and compare its behavior to that of unconstrained network classes. Closely related earlier and recent works, employing resistor networks, investigated transport efficiency, flow optimization, and vulnerability in complex networks [16]–[20], and the emergence of traffic gridlocks and congestion in road networks [21], [22].

To validate the insights obtained from these spatially-embedded model networks (RGGs), we also study the same load-based cascading failure process on a real-world network with spatial constraints – the European power transmission network maintained and operated by the Union for the Co-ordination of Transmission of Electricity (UCTE). We find several revealing features that arise from the presence of spatial constraints, the most noticeable being a lack of self-averaging on such networks. This is in stark contrast to the results for unconstrained random networks, and thus points to the potential pitfalls of ignoring spatial constraints when studying cascade mitigation strategies.

Methods

Here, we briefly describe the distributed flow model and cascade model that we utilize in this study. For clarity, we note that we use the term ‘node’ and ‘vertex’ interchangeably in the rest of the paper.

1. Distributed flow

We assume the flow on the network to be both directed and distributed. Specifically, each unit of flow is associated with a source and a sink, and takes advantage of all possible paths between the source and the sink. We adopt a simple model of such flow, by modeling the network as a random resistor network with unit conductances along the links [19], [20]. As each node and edge plays a role in forwarding the current from the source to the target node, each of them experiences a load. For one source-target pair and for unit current flowing between them, the load on an arbitrary edge  is the current along that edge:

is the current along that edge:  ; analogously, the load on an arbitrary node

; analogously, the load on an arbitrary node  is the net current flowing through that node:

is the net current flowing through that node:  . These two loads are related by the expression.

. These two loads are related by the expression.

| (1) |

For all our studies presented here, we assume that unit current flows between  source/target pairs simultaneously. Specifically, we assume that all nodes are simultaneously sources and unit current flows into the network at each source. For each source node, a target is chosen randomly and uniformly from the remaining

source/target pairs simultaneously. Specifically, we assume that all nodes are simultaneously sources and unit current flows into the network at each source. For each source node, a target is chosen randomly and uniformly from the remaining  nodes. Consequently, the load is defined as the superposition of all currents flowing through an arbitrary edge/node, which is identical to the edge/node current-flow betweenness [20], [23], [24]:

nodes. Consequently, the load is defined as the superposition of all currents flowing through an arbitrary edge/node, which is identical to the edge/node current-flow betweenness [20], [23], [24]:

| (2) |

Currents  along the edges due to one source/target pair are obtained by writing down Kirchhoff's law for each node

along the edges due to one source/target pair are obtained by writing down Kirchhoff's law for each node  in the network and solving the system of linear equations:

in the network and solving the system of linear equations:

| (3) |

where we assumed that  units of current flow into the network at a source

units of current flow into the network at a source  and leave at a target

and leave at a target  , and where

, and where  denotes the adjacency matrix of the network. Rewritten in terms of the weighted network Laplacian

denotes the adjacency matrix of the network. Rewritten in terms of the weighted network Laplacian  , where

, where  denotes the degree of node

denotes the degree of node  , the system (3) transforms into the matrix equation

, the system (3) transforms into the matrix equation  , where

, where  is the unknown column voltage vector, while

is the unknown column voltage vector, while  is the net current flowing into the network at node

is the net current flowing into the network at node  , which is zero for all nodes with the exception of the source and target nodes. As the network Laplacian

, which is zero for all nodes with the exception of the source and target nodes. As the network Laplacian  is singular, we use spectral decomposition [18], [25] to find the pseudo-inverse Laplacian

is singular, we use spectral decomposition [18], [25] to find the pseudo-inverse Laplacian  , defined in the space orthogonal to the zero mode. For example, by choosing the reference potential to be the mean voltage [17],

, defined in the space orthogonal to the zero mode. For example, by choosing the reference potential to be the mean voltage [17],  , where

, where  , one obtains:

, one obtains:

| (4) |

for each node  . Thus, for

. Thus, for  units of current and for a given source/target pair, the current flowing through edge

units of current and for a given source/target pair, the current flowing through edge  is:

is:

| (5) |

This relation shows that current along an arbitrary edge is uniquely determined by the network topology. Therefore, this is a fully deterministic model of flow and the only source of randomness in the problem arises in the specific instantiation of the network from its parent ensemble.

Electrical flows when applied to explicitly modeling the power grid have commonly used a DC power flow model [4], [5], [11], [12], [26] wherein links also possess a reactance in addition to resistance. However, as pointed out in [4], the equations for this DC model of power flow bear a close resemblance to that of a simple electrical circuit with the current playing the analogous role of power. Further, Scala et al. [11] have demonstrated that inferences made using a DC power flow model, can still be useful despite neglecting the true AC nature of the power transmission network [27]. We emphasize that although the empirical network on which we validate our results is an electrical grid, our studies are aimed at understanding fundamental aspects of cascades on spatial networks carrying distributed flow, and not towards designing strategies specifically tailored for electrical power transmission systems.

2. Cascade model

We model a cascading failure on a network carrying distributed flow following the seminal model of Motter and Lai [28]. We assume that each node is equipped with a load handling capacity that is proportional to the steady-state load on it when the network is intact. Specifically, the capacity of a node  is

is  where

where  plays the role of a tolerance parameter, and

plays the role of a tolerance parameter, and  is the load on the node for the intact network. If a node on the network fails, i.e. is absent or removed from the network, then the flow undergoes a redistribution, and consequently, so do the loads on the surviving nodes. If the new load on any surviving node exceeds its capacity, i.e. if

is the load on the node for the intact network. If a node on the network fails, i.e. is absent or removed from the network, then the flow undergoes a redistribution, and consequently, so do the loads on the surviving nodes. If the new load on any surviving node exceeds its capacity, i.e. if  , then that node also fails which leads to a further redistribution and possibly further failures. This process constitutes the model of a cascading failure that we utilize here.

, then that node also fails which leads to a further redistribution and possibly further failures. This process constitutes the model of a cascading failure that we utilize here.

3. Network models

We briefly outline the network models used in this paper and the methods employed for generating associated ensembles.

Random geometric graphs

A Random Geometric Graph (RGG) of size  in 2D is constructed by placing

in 2D is constructed by placing  nodes randomly in the unit square with open boundary conditions, and connecting any pair of nodes if the Euclidean distance between them is less than a distance

nodes randomly in the unit square with open boundary conditions, and connecting any pair of nodes if the Euclidean distance between them is less than a distance  , the connection radius

[14], [15]. The average degree of the graph

, the connection radius

[14], [15]. The average degree of the graph  can be controlled by varying

can be controlled by varying  since

since  .

.

Erdös-Rényi graphs

An Erdös-Rényi (ER) graph [29] of size  is constructed by connecting every pair of nodes with probability

is constructed by connecting every pair of nodes with probability  . The average degree of the network can be controlled through

. The average degree of the network can be controlled through  since

since  .

.

Scale-free networks

Scale-free (SF) networks [30] of size  and degree-exponent

and degree-exponent  are constructed by first generating a degree sequence by sampling the prescribed power-law distribution

are constructed by first generating a degree sequence by sampling the prescribed power-law distribution  that yields a desired average-degree

that yields a desired average-degree  . The network is then constructed using this degree sequence following the Configuration Model [31].

. The network is then constructed using this degree sequence following the Configuration Model [31].

Rewired random geometric graphs

To better understand the role of spatial constraints in the observed characteristics of cascades on spatial networks, we generated rewired RGGs as follows. Starting with the original spatial network, we rewire an arbitrarily chosen end of each link to a randomly chosen node in the network with probability  . During this process, we ensure that no self-loops or multiple edges are generated, by rejecting any rewiring step that leads to these undesired features.

. During this process, we ensure that no self-loops or multiple edges are generated, by rejecting any rewiring step that leads to these undesired features.

4. Empirical network

As a realistic testbed on which to validate our results, we use the UCTE European power transmission network from the year 2002 [32]–[34], which we will henceforth refer to simply as the UCTE network. This network is spread over a geographic area that comprises 18 countries, and consists of  buses which constitute the nodes for our purposes. The average degree of the network is

buses which constitute the nodes for our purposes. The average degree of the network is  .

.

Results

1. Load landscapes in RGGs

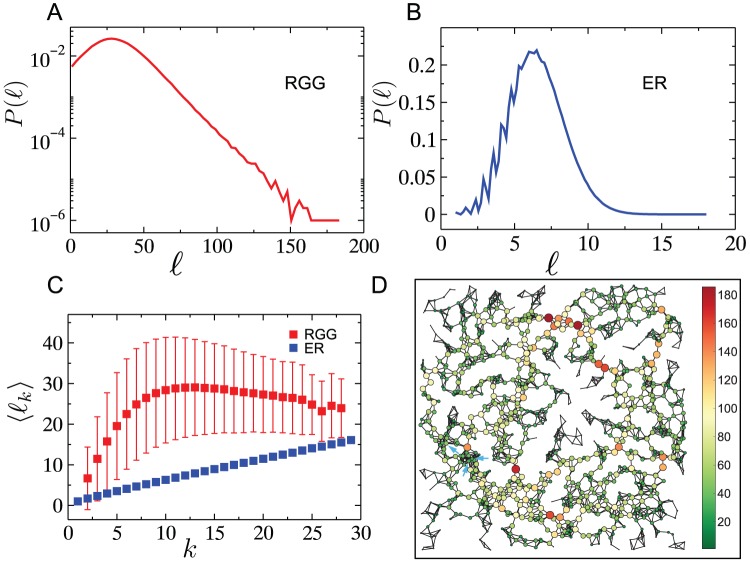

We begin by analyzing the vertex load distributions in RGGs and comparing them to those in ER graphs with the same average degree, the latter playing the role of a null-model where spatial constraints are absent. Both RGGs and ER graphs are characterized by homogeneous (Poissonian) degree distributions [35]. In addition, RGGs exhibit a high clustering coefficient [15], resulting from the spatial dependance of the connectivity and the transitivity of spatial relationships. Path lengths on RGGs scale with the network size  in contrast to the

in contrast to the  scaling found in ER graphs. Given these differences, we expect that the vertex load distribution for RGGs would also differ significantly from that of ER graphs. Indeed, as shown in Figs. 1A and 1B respectively, the vertex load distribution for RGGs has an exponentially decaying tail with a decay constant

scaling found in ER graphs. Given these differences, we expect that the vertex load distribution for RGGs would also differ significantly from that of ER graphs. Indeed, as shown in Figs. 1A and 1B respectively, the vertex load distribution for RGGs has an exponentially decaying tail with a decay constant  , while the distribution for ER graphs is best-fitted by a Gaussian distribution (parameters in caption). For identical average degrees, the mean vertex-load in RGGs, (

, while the distribution for ER graphs is best-fitted by a Gaussian distribution (parameters in caption). For identical average degrees, the mean vertex-load in RGGs, ( ), is almost six times as large as that for ER graphs. Figure 1C shows the average vertex-load conditioned on the vertex-degree, as a function of the degree. Again, in contrast to the case of ER graphs, the plot for RGGs does not display an unambiguously positive correlation of vertex-load with degree over the entire degree range. The vertex-loads are strongly correlated with degrees up until a value close to the average degree, after which they show a subtle decline. A visualization of the network (Fig. 1D) makes it clear that the nodes with the highest loads do not have degrees anywhere as high as the largest degree in the network.

), is almost six times as large as that for ER graphs. Figure 1C shows the average vertex-load conditioned on the vertex-degree, as a function of the degree. Again, in contrast to the case of ER graphs, the plot for RGGs does not display an unambiguously positive correlation of vertex-load with degree over the entire degree range. The vertex-loads are strongly correlated with degrees up until a value close to the average degree, after which they show a subtle decline. A visualization of the network (Fig. 1D) makes it clear that the nodes with the highest loads do not have degrees anywhere as high as the largest degree in the network.

Figure 1. Vertex load profiles in RGGs and ER networks.

Calculated on (A) random geometric graphs and (B) Erdős-Rényi random grahs, composed of  nodes with

nodes with  and averaged over

and averaged over  network realizations. (C) Positive correlations are shown in the case of ER graphs, while these correlations seem to disappear in RGGs for degree classes higher than the average degree of the network ensemble. Data were averaged over more than

network realizations. (C) Positive correlations are shown in the case of ER graphs, while these correlations seem to disappear in RGGs for degree classes higher than the average degree of the network ensemble. Data were averaged over more than  network realizations for networks of

network realizations for networks of  and

and  . The fluctuating tail of the red curve originates from the lack of sufficient number of samples in the specific degree classes. The error bars correspond to one standard deviations. (D) A single network realization (

. The fluctuating tail of the red curve originates from the lack of sufficient number of samples in the specific degree classes. The error bars correspond to one standard deviations. (D) A single network realization ( ,

,  ) showing the vertex loads. Note, that the node with the highest connections (blue arrows indicate the 3 highest degree nodes) does not carry the highest load in the network (loads are color coded, and node sizes are proportional to loads).

) showing the vertex loads. Note, that the node with the highest connections (blue arrows indicate the 3 highest degree nodes) does not carry the highest load in the network (loads are color coded, and node sizes are proportional to loads).

For a network where connections are spatially constrained, we intuitively expect that a high load on a node is indicative of a high load in its neighborhood. To substantiate this, we investigate the spatial correlation between vertex loads on the network. Specifically, we measure the correlation between vertex loads as a function of the distance separating them, by systematically obtaining all pairs of nodes  separated by a distance that lies within

separated by a distance that lies within  , and computing the Pearson correlation coefficient between these pairs:

, and computing the Pearson correlation coefficient between these pairs:

| (6) |

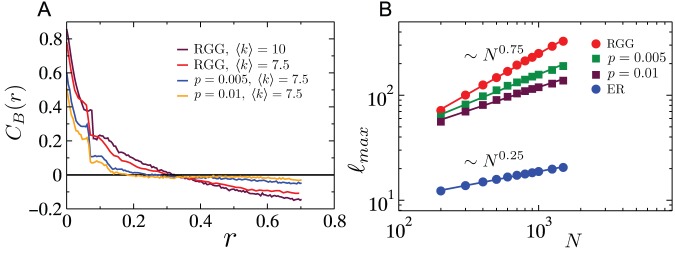

Figure 2A shows the Pearson correlation coefficient between loads at a distance  apart from each other.

apart from each other.  evenly spaced values of

evenly spaced values of  were considered within the complete range

were considered within the complete range  , with

, with  . The resulting picture shows that loads are positively correlated for nodes within a distance

. The resulting picture shows that loads are positively correlated for nodes within a distance  where

where  is the connection radius, while just beyond this value the correlation sharply drops and continues to decrease monotonically thereafter, reaching slightly negative values at very large separations. The picture obtained for networks with different average degrees is qualitatively similar, and does not change significantly for rewired RGGs generated using small values of the rewiring parameter. It is worth mentioning that although the spatial correlation captured by the Pearson correlation coefficient indicates vertex loads being correlated only within a short distance, it does not preclude the existence of lower dimensional correlated structures – such as a 1D backbone formed by vertices with high loads [36] -within the network. To conclude this study of the load profiles, we analyze the extreme value scaling of the load distribution with network size

is the connection radius, while just beyond this value the correlation sharply drops and continues to decrease monotonically thereafter, reaching slightly negative values at very large separations. The picture obtained for networks with different average degrees is qualitatively similar, and does not change significantly for rewired RGGs generated using small values of the rewiring parameter. It is worth mentioning that although the spatial correlation captured by the Pearson correlation coefficient indicates vertex loads being correlated only within a short distance, it does not preclude the existence of lower dimensional correlated structures – such as a 1D backbone formed by vertices with high loads [36] -within the network. To conclude this study of the load profiles, we analyze the extreme value scaling of the load distribution with network size  , a quantity of significance in determining the effective throughput of the network [37]. As shown in Fig. 2B, the maximum vertex load on RGGs scales as a power law with

, a quantity of significance in determining the effective throughput of the network [37]. As shown in Fig. 2B, the maximum vertex load on RGGs scales as a power law with  , with an exponent of

, with an exponent of  . This is a much faster growth in comparison to the scaling,

. This is a much faster growth in comparison to the scaling, found for ER graphs. Rewiring the links of the RGG with increasing probability

found for ER graphs. Rewiring the links of the RGG with increasing probability  , gradually but systematically lowers the loads, and their scaling. (The scaling exponents found for

, gradually but systematically lowers the loads, and their scaling. (The scaling exponents found for  and

and  are

are  and

and  respectively.).

respectively.).

Figure 2. Correlations of vertex loads as a function of distance between vertices and extreme value characteristics of loads.

(A) Load and distance correlations in RGGs and rewired RGGs with rewiring probability  (see text). Pearson correlation coefficient as function of distance

(see text). Pearson correlation coefficient as function of distance  measured between two arbitrary nodes. Data were averaged over

measured between two arbitrary nodes. Data were averaged over  network realizations for networks having

network realizations for networks having  nodes. (B) System size dependence of the maximum vertex load in networks with

nodes. (B) System size dependence of the maximum vertex load in networks with  . Data were averaged over

. Data were averaged over  network realizations. The parameter

network realizations. The parameter  corresponds to the rewiring probability for links in the RGG.

corresponds to the rewiring probability for links in the RGG.

2. Cascades of overload failures

Next, we simulate cascading failures on a network triggered either by random or targeted removals of nodes, and quantify the resilience of the network to such failures. The model used (see Methods) is identical to that used in earlier studies [19], [28], [38], and is parametrized by a single tolerance parameter  which quantifies the excess load bearing capacity of a node. Following the notation introduced in [28], the resilience of a network is quantified in terms of the fractional size of the surviving largest connected component after the cascade ends:

which quantifies the excess load bearing capacity of a node. Following the notation introduced in [28], the resilience of a network is quantified in terms of the fractional size of the surviving largest connected component after the cascade ends:  , where

, where  is the number of nodes belonging to the largest network component after the cascade and

is the number of nodes belonging to the largest network component after the cascade and  is the undamaged (connected) network size.

is the undamaged (connected) network size.

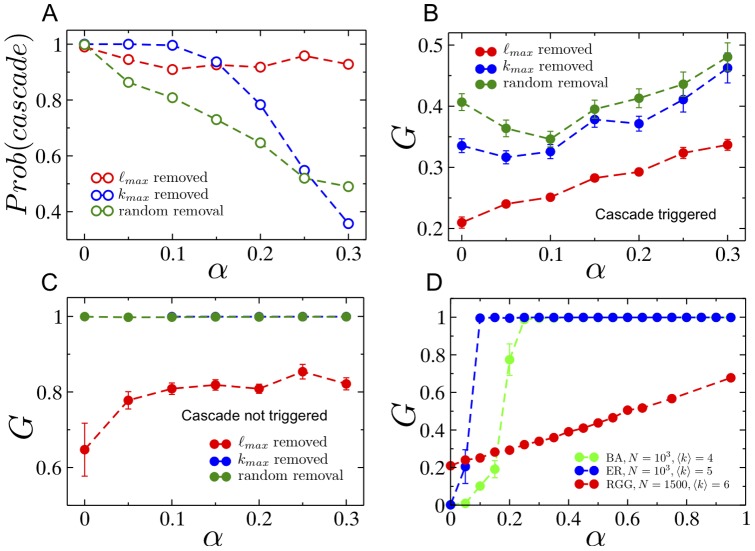

Figure 3A shows the probability that a cascade ensues after an initial node removal. As seen, irrespective of the tolerance parameter, cascades triggered by the removal of the node with the highest load in the network leave behind the largest damage when compared with those resulting from removal of the highest degree node or a random node. Figures 3B,C show the fractional size of the surviving giant component  as a function of the tolerance parameter in the presence and the absence of a cascade. Once again, the damage done is the worst for the case where the initial node removed is the one with the maximal load, even in the case where no cascade is triggered, suggesting that vertices with the highest loads are those responsible for bridging together distinct connected components and ensuring the structural integrity of the network. Finally, Fig. 3D compares the damage done due to cascading failures on RGGs with the damage in SF and ER networks, all having the same average degree. Clearly, while increasing excess capacity does lead to an increase, on average, of the surviving giant component, the growth is profoundly slower for RGGs than for the spatially unconstrained networks. Henceforth, as we further investigate more detailed characteristics of cascades, we restrict our studies to cascades triggered by the removal of the vertex with the highest load, since the damage done to the network is the most severe in this case.

as a function of the tolerance parameter in the presence and the absence of a cascade. Once again, the damage done is the worst for the case where the initial node removed is the one with the maximal load, even in the case where no cascade is triggered, suggesting that vertices with the highest loads are those responsible for bridging together distinct connected components and ensuring the structural integrity of the network. Finally, Fig. 3D compares the damage done due to cascading failures on RGGs with the damage in SF and ER networks, all having the same average degree. Clearly, while increasing excess capacity does lead to an increase, on average, of the surviving giant component, the growth is profoundly slower for RGGs than for the spatially unconstrained networks. Henceforth, as we further investigate more detailed characteristics of cascades, we restrict our studies to cascades triggered by the removal of the vertex with the highest load, since the damage done to the network is the most severe in this case.

Figure 3. Cascades triggered by targeted and random removals.

(A) Probability that a single node removal will trigger a cascade as function of the tolerance parameter. (B) The ratio  of the size of the largest surviving network component to the initial network size, as function of the tolerance parameter

of the size of the largest surviving network component to the initial network size, as function of the tolerance parameter  , when the initial failure triggers a cascade. (C) Similar to (B), except for the case where the initial failure does not trigger a cascade. In all (A), (B) and (C) subplots the red curve corresponds to the case when the triggered node is the node with the highest load, the blue curve to the case when the triggered node is the most connected (highest degree) node in the network and the green curve shows the case when the triggered node was chosen randomly. Network parameters are

, when the initial failure triggers a cascade. (C) Similar to (B), except for the case where the initial failure does not trigger a cascade. In all (A), (B) and (C) subplots the red curve corresponds to the case when the triggered node is the node with the highest load, the blue curve to the case when the triggered node is the most connected (highest degree) node in the network and the green curve shows the case when the triggered node was chosen randomly. Network parameters are  ,

,  , while the data was averaged over

, while the data was averaged over  network realizations. Error bars correspond to the standard error of the mean. (D)

network realizations. Error bars correspond to the standard error of the mean. (D)  as a function of tolerance parameter, unconditioned on whether or not a cascade was triggered for RGGs, SF networks and ER networks. For each point, the data was averaged over

as a function of tolerance parameter, unconditioned on whether or not a cascade was triggered for RGGs, SF networks and ER networks. For each point, the data was averaged over  network realizations.

network realizations.

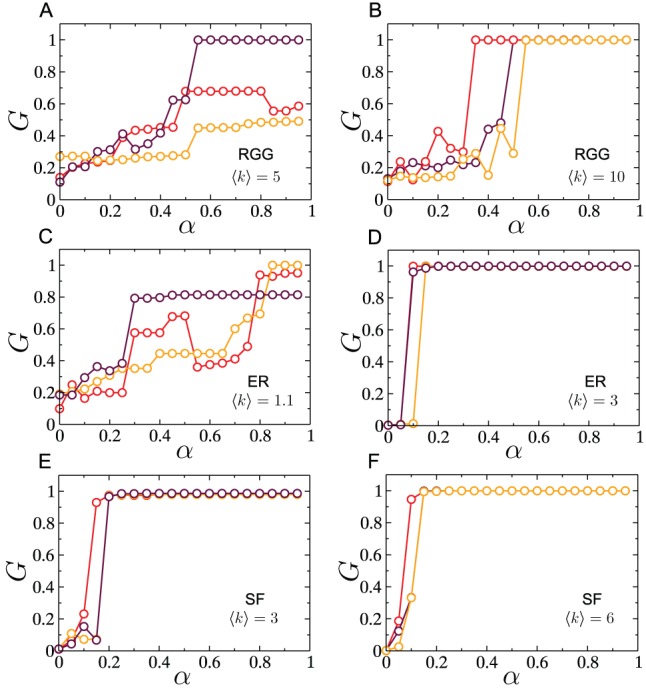

As shown above, increased capacity allocation results in a monotonic increase in the average surviving giant component size, where the averaging is done over an ensemble of network realizations. If such a monotonic increase was also obtained for individual network instances, then increased capacity allocation, although only weakly effective, would at least be a justifiable preventative measure against cascades. Figures 4 A, B show the size of the surviving giant component  as a function of the tolerance parameter

as a function of the tolerance parameter  for three individual instances of RGGs, for different respective average degrees. As is clearly seen, the variation in

for three individual instances of RGGs, for different respective average degrees. As is clearly seen, the variation in  is far from monotonic for a single network instance, and differs significantly across instances. Thus, the trend observed by averaging a macroscopic quantity,

is far from monotonic for a single network instance, and differs significantly across instances. Thus, the trend observed by averaging a macroscopic quantity,  , over an ensemble of RGG networks (as was the case in Fig. 3) provides little indication of the true behavior of the same quantity for an individual network instance. This behavior persists even if the network size is increased (not shown). Such lack of self-averaging has been observed previously in fragmentation processes on lattices, to which cascades bear a close resemblance [39]. In contrast, results of cascades on single instances of ER and SF networks, shown in Figs. 4B,C, are consistent with those obtained by averaging

, over an ensemble of RGG networks (as was the case in Fig. 3) provides little indication of the true behavior of the same quantity for an individual network instance. This behavior persists even if the network size is increased (not shown). Such lack of self-averaging has been observed previously in fragmentation processes on lattices, to which cascades bear a close resemblance [39]. In contrast, results of cascades on single instances of ER and SF networks, shown in Figs. 4B,C, are consistent with those obtained by averaging  over respective network ensembles.

over respective network ensembles.

Figure 4. Cascades on single network realizations.

Simulations were performed on networks of size  . Fractional size of surviving giant component as a function of

. Fractional size of surviving giant component as a function of  for (A),(B) RGGs, (C),(D) ER networks and (E),(F) SF networks.

for (A),(B) RGGs, (C),(D) ER networks and (E),(F) SF networks.

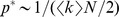

Presumably, this lack of self-averaging is a feature that results from the embedding of the network in two-dimensions (with no shortcuts). To conclusively validate this argument, we study how the presence of a few spatially unconstrained links affects the surviving giant component size, since the addition of such links has the effect of increasing the underlying dimensionality of the space in which the network is embedded. Specifically, for each link, we rewire with probability  one end of the link with a randomly chosen node in the network, without allowing self-loops or multiple edges to form. Similar constructions have been used before in [40]–[42]. By varying

one end of the link with a randomly chosen node in the network, without allowing self-loops or multiple edges to form. Similar constructions have been used before in [40]–[42]. By varying  between

between  and

and  , we can interpolate between RGGs and ER graphs, as is confirmed by the results shown in Fig. 5, where both the degree-conditioned average load and the average load undergo a smooth crossover from the results expected for RGGs to those expected for ER graphs. Figure 6 shows that even with as low as

, we can interpolate between RGGs and ER graphs, as is confirmed by the results shown in Fig. 5, where both the degree-conditioned average load and the average load undergo a smooth crossover from the results expected for RGGs to those expected for ER graphs. Figure 6 shows that even with as low as  of the links of the RGG rewired, the non-monotonicity in

of the links of the RGG rewired, the non-monotonicity in  versus

versus  completely disappears. The interval of

completely disappears. The interval of  within which the crossover takes place contains values larger than the theoretical estimate of

within which the crossover takes place contains values larger than the theoretical estimate of  [43] at which the small-world crossover occurs, likely a finite-size-effect due to the small system sizes considered here. Thus, from a theoretical network-design point of view, the incorporation of a few long-range links would be a simple step in stabilizing flows and managing cascades, since it results in a more predictable relationship between surviving-component size and excess capacity. However, in practical situations the cost of adding such long-range links could be prohibitive, and therefore may not constitute a feasible solution for controlling the grid.

[43] at which the small-world crossover occurs, likely a finite-size-effect due to the small system sizes considered here. Thus, from a theoretical network-design point of view, the incorporation of a few long-range links would be a simple step in stabilizing flows and managing cascades, since it results in a more predictable relationship between surviving-component size and excess capacity. However, in practical situations the cost of adding such long-range links could be prohibitive, and therefore may not constitute a feasible solution for controlling the grid.

Figure 5. Effect of rewiring in RGGs.

(A) Transition in network structure from an RGG towards an ER network through the process of rewiring. Multiple rewired versions (different values of  ) are shown together with the two extreme cases. (B) Average vertex load in RGG, ER and rewired versions of RGG as function of the fraction of rewired links

) are shown together with the two extreme cases. (B) Average vertex load in RGG, ER and rewired versions of RGG as function of the fraction of rewired links  . Network parameters are:

. Network parameters are:  ,

,  . Data were averaged over

. Data were averaged over  network realizations.

network realizations.

Figure 6. Effect of rewiring on cascades in RGGs.

Cascades were triggered by the removal of the highest load. Simulations were performed on networks of size  with

with  . As

. As  is increased the lack of self-averaging that manifests itself in the form of non-monotonicities in the curves for

is increased the lack of self-averaging that manifests itself in the form of non-monotonicities in the curves for  versus

versus  , disappears.

, disappears.

We also studied how length dependent link-conductances affected our results. Specifically, we assumed that  for a link connecting nodes

for a link connecting nodes  and

and  where

where  denotes the Euclidean distance between them, and performed simulations to study the dependence of the surviving giant component size

denotes the Euclidean distance between them, and performed simulations to study the dependence of the surviving giant component size  as a function of the tolerance parameter

as a function of the tolerance parameter  (analogous to results in Fig. 4 A,B), and to investigate the effect of rewiring links to create a few long-range connections in the network (similar to the results in Fig. 6). For both cases, we found no significant quantitative difference in the results for the case where conductances were length-dependent. In particular, the non-self-averaging nature of cascades manifested itself in exactly the same manner as is demonstrated in Figs. S1 and S2.

(analogous to results in Fig. 4 A,B), and to investigate the effect of rewiring links to create a few long-range connections in the network (similar to the results in Fig. 6). For both cases, we found no significant quantitative difference in the results for the case where conductances were length-dependent. In particular, the non-self-averaging nature of cascades manifested itself in exactly the same manner as is demonstrated in Figs. S1 and S2.

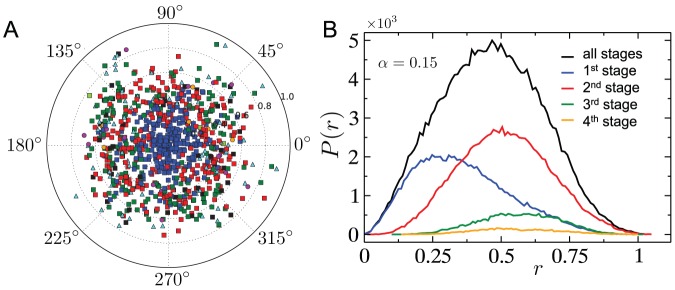

As a next step in understanding the nature of spatial cascades, we measure the spatial correlations between nodes that fail in successive stages of the cascade. Here, a single stage refers to a round of calculating vertex loads, and removing those nodes whose load exceeds their respective capacity. Figure. 7A shows the average location of failing nodes per stage of the cascade, relative to the node that triggers the cascade. The most significant feature observed here, as well as in the distribution of distance (from the cascade-triggering node) for failing nodes in each cascade stage (Fig. 7B) is the separation between the most likely locations for nodes removed in the first and second stages. In subsequent stages, the distribution of the location of failing nodes gets progressively more uniform. In general, as seen from our simulations, cascades last for only a few stages (the longest found in the systems studied here was  stages) with most of the damage occurring by the second stage, and then declining rapidly. The stage-wise distributions in Fig. 7B were obtained by aggregating all nodes removed in a particular stage and belonging to a particular distance bin over

stages) with most of the damage occurring by the second stage, and then declining rapidly. The stage-wise distributions in Fig. 7B were obtained by aggregating all nodes removed in a particular stage and belonging to a particular distance bin over  distinct cascades, and normalizing them by the total number of nodes removed over the distinct cascades. Thus, declining contribution of later stages is due to a combination of two factors: the reduction in the number of nodes removed during later stages, and the decrease in the probability of the cascade surviving up to that stage. The all-stage distribution was generated in a similar fashion as the stage-wise distribution, but disregarding the stages associated with the nodes.

distinct cascades, and normalizing them by the total number of nodes removed over the distinct cascades. Thus, declining contribution of later stages is due to a combination of two factors: the reduction in the number of nodes removed during later stages, and the decrease in the probability of the cascade surviving up to that stage. The all-stage distribution was generated in a similar fashion as the stage-wise distribution, but disregarding the stages associated with the nodes.

Figure 7. Location of overloaded nodes.

(A) Position (distance and angle) of failed nodes relative to the initially removed one, here the highest load in the network. Different colors correspond to different iterations of the cascade: blue squares (1st), red squares (2nd), green squares (3rd), light blue triangles (4th), black squares (5th), magenta circles (6th), orange circles (7th), light green squares (8th), yellow triangles (9th). Network parameters are the same as in Fig. 1, while each data point is the averaged location of nodes removed in a given stage over  independent network cascades. (B) The distribution of the distance

independent network cascades. (B) The distribution of the distance  from the cascade-triggering node for nodes that fail in the course of a cascade (See text for details).

from the cascade-triggering node for nodes that fail in the course of a cascade (See text for details).

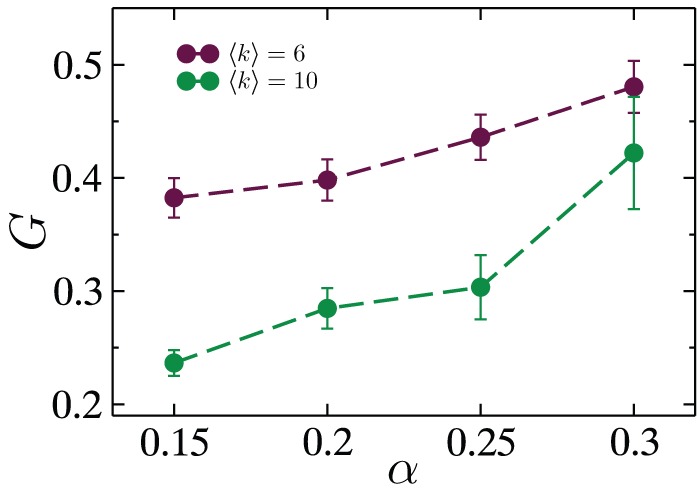

Finally in this section, we study the effect of average degree of RGGs on their resilience to cascading failures. Figure 8 compares the fractional size of the largest connected component as a function of  for networks with average degree

for networks with average degree  and

and  . Surprisingly, the damage caused by cascading failures is far more severe for the more well connected of the two network ensembles. Although, for other dynamical processes such as epidemic spreading and diffusion it is intuitively obvious that more connections lead to more spread, here we would expect that the presence of more paths between any source-sink pair on a denser network would lead to more effective load balancing, and therefore weaker cascading failures. However, while increasing the average degree does cause loads for each node to be lower and more balanced initially, the excess capacity allocation in proportion to these lower and more uniform loads, makes the network ill-equipped to handle variations in load resulting from the initial node removal. As a result, cascades cause more damage for a denser RGG than a sparser one. In contrast, as is well known, denser RGGs are structurally more resilient to random (non-cascading) failures occurring in the network, since the giant component undergoes a transition in size at

. Surprisingly, the damage caused by cascading failures is far more severe for the more well connected of the two network ensembles. Although, for other dynamical processes such as epidemic spreading and diffusion it is intuitively obvious that more connections lead to more spread, here we would expect that the presence of more paths between any source-sink pair on a denser network would lead to more effective load balancing, and therefore weaker cascading failures. However, while increasing the average degree does cause loads for each node to be lower and more balanced initially, the excess capacity allocation in proportion to these lower and more uniform loads, makes the network ill-equipped to handle variations in load resulting from the initial node removal. As a result, cascades cause more damage for a denser RGG than a sparser one. In contrast, as is well known, denser RGGs are structurally more resilient to random (non-cascading) failures occurring in the network, since the giant component undergoes a transition in size at  [44], [45] where

[44], [45] where  is the critical fraction of randomly removed nodes from a RGG, and

is the critical fraction of randomly removed nodes from a RGG, and  is an embedding-dimension dependent constant taking the value

is an embedding-dimension dependent constant taking the value  [15] for two dimensions.

[15] for two dimensions.

Figure 8. The effect of average degree upon cascading failures.

Fraction of the largest surviving network component following cascading failures  triggered by the removal of a single, randomly chosen node as function of

triggered by the removal of a single, randomly chosen node as function of  tolerance parameter. The two curves correspond to two ensembles of random geometric graphs, one with

tolerance parameter. The two curves correspond to two ensembles of random geometric graphs, one with  (maroon) and one with

(maroon) and one with  (green). Data were obtained for RGGs of size

(green). Data were obtained for RGGs of size  , averaged over more than

, averaged over more than  network realizations. The error bars correspond to the standard error of the mean.

network realizations. The error bars correspond to the standard error of the mean.

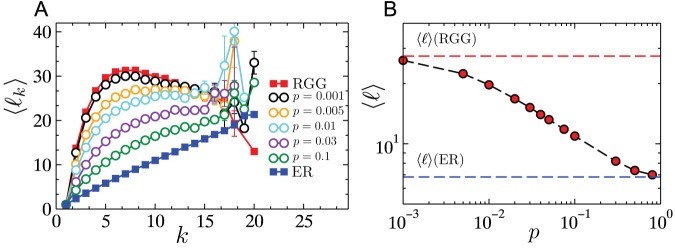

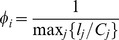

3. Cascade mitigation strategies

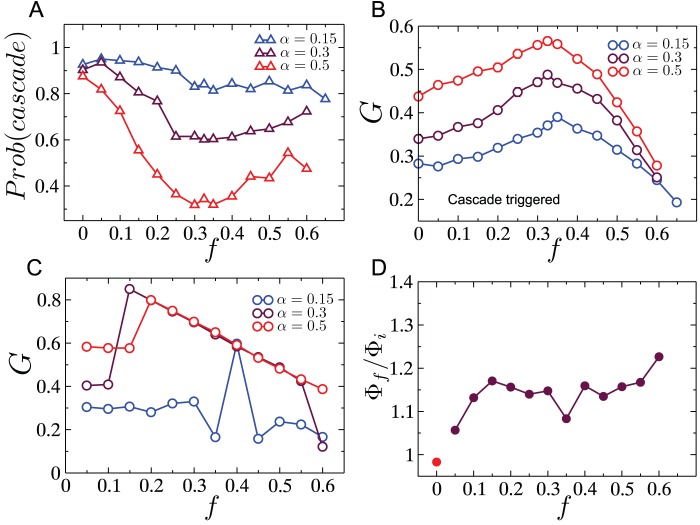

Next, we study two cascade mitigation strategies and evaluate their effectiveness. We begin by analyzing the preemptive node removal strategy proposed by Motter [38]. Intuitively, this method aims to utilize node removal in such a way that the two competing objectives of reducing the load on the network, and keeping the network connected, are balanced. Specifically, the method involves removing a fraction  of the lowest load nodes after the initial node failure. This method was motivated by studies on scale-free networks where the load distribution is heavy-tailed implying that a significant fraction of nodes despite contributing to the total load on the network by acting as sources of current/packets, only frugally participate in the carrying of loads generated by other source-sinks pairs, due to their low betweenness. The load distribution for RGGs however, is comparatively much narrower, and we would therefore expect that preemptive node removal would not yield significant success. The results of investigating the efficacy of preemptive node removal as a cascade mitigation strategy are presented in Fig. 9. As shown in Fig. 9A, the probability of a cascade occurring decreases (with increasing

of the lowest load nodes after the initial node failure. This method was motivated by studies on scale-free networks where the load distribution is heavy-tailed implying that a significant fraction of nodes despite contributing to the total load on the network by acting as sources of current/packets, only frugally participate in the carrying of loads generated by other source-sinks pairs, due to their low betweenness. The load distribution for RGGs however, is comparatively much narrower, and we would therefore expect that preemptive node removal would not yield significant success. The results of investigating the efficacy of preemptive node removal as a cascade mitigation strategy are presented in Fig. 9. As shown in Fig. 9A, the probability of a cascade occurring decreases (with increasing  ) until it reaches a minimum, and beyond which, it increases again. A similar profile is also observed for the ensemble averaged values of the fractional size of the giant surviving component

) until it reaches a minimum, and beyond which, it increases again. A similar profile is also observed for the ensemble averaged values of the fractional size of the giant surviving component  as a function of

as a function of  . Both plots show however, that even at the optimal

. Both plots show however, that even at the optimal  , and for as large as

, and for as large as  additional capacity (i.e.

additional capacity (i.e.  ), the gains obtained are weak. Furthermore, as a consequence of the lack of self-averaging, individual network instances show profiles that are highly variable and showing little resemblance to the ensemble averaged results. Three such examples for individual network instances are shown in Fig. 9C. Finally, we study how the throughput in the giant surviving component after a cascade,

), the gains obtained are weak. Furthermore, as a consequence of the lack of self-averaging, individual network instances show profiles that are highly variable and showing little resemblance to the ensemble averaged results. Three such examples for individual network instances are shown in Fig. 9C. Finally, we study how the throughput in the giant surviving component after a cascade,  , compares to the throughput on the original network,

, compares to the throughput on the original network,  . The throughput captures the maximum current that can be injected per source without the network becoming congested. For

. The throughput captures the maximum current that can be injected per source without the network becoming congested. For  units of current injected at every source, the network is uncongested if for every node

units of current injected at every source, the network is uncongested if for every node  , the inequality,

, the inequality,  holds. Consequently, for the intact network (indicated by subscript

holds. Consequently, for the intact network (indicated by subscript  ), the throughput is

), the throughput is  . The throughput can similarly be calculated for the surviving component after a cascade. As shown in Fig. 9D, the throughput after the cascade

. The throughput can similarly be calculated for the surviving component after a cascade. As shown in Fig. 9D, the throughput after the cascade  is larger than the initial throughput for

is larger than the initial throughput for  . The increase in throughput is expected since the size of the network is smaller after a cascade, leading to a reduction in loads (due to the

. The increase in throughput is expected since the size of the network is smaller after a cascade, leading to a reduction in loads (due to the  dependance in the definition of loads, see Eq. 2) and thereby an increase in the quantity

dependance in the definition of loads, see Eq. 2) and thereby an increase in the quantity  . For the case where

. For the case where  , although the ensemble average of the ratio

, although the ensemble average of the ratio  is smaller than one (

is smaller than one ( ), in most individual instances the ratio is exactly one. In these cases, the throughput after the cascade is determined by a node whose connectivity before and after the cascade is

), in most individual instances the ratio is exactly one. In these cases, the throughput after the cascade is determined by a node whose connectivity before and after the cascade is  . Such a dangling end has initial load equal to

. Such a dangling end has initial load equal to  which remains unchanged after the cascade as well i.e. the reduction in the number of sources and sinks in the system has no effect on its load, unlike for other nodes which have higher connectivity. Therefore the value of

which remains unchanged after the cascade as well i.e. the reduction in the number of sources and sinks in the system has no effect on its load, unlike for other nodes which have higher connectivity. Therefore the value of  after the cascade for such a node often ends up being the highest among all nodes, and by definition results in the throughput after the cascade being identical to that before the cascade i.e.

after the cascade for such a node often ends up being the highest among all nodes, and by definition results in the throughput after the cascade being identical to that before the cascade i.e.  . When

. When  , such dangling ends are removed as part of the preemptive node removal process, and all surviving nodes end up experiencing a reduction in load due to the reduced size of the surviving giant component. As a result, the final throughput is higher than the initial throughput, resulting in

, such dangling ends are removed as part of the preemptive node removal process, and all surviving nodes end up experiencing a reduction in load due to the reduced size of the surviving giant component. As a result, the final throughput is higher than the initial throughput, resulting in  being greater than one.

being greater than one.

Figure 9. Preemptive node removal in RGGs.

(A) Probability that a cascade occurs after removal of the node with highest load, despite a fraction  of nodes being preemptively removed immediately after the initial trigger. (B) Fractional size of the largest surviving network component

of nodes being preemptively removed immediately after the initial trigger. (B) Fractional size of the largest surviving network component  as a function of preemptively removed fraction

as a function of preemptively removed fraction  , when there is a cascade. (C) Fractional size of the largest surviving network component

, when there is a cascade. (C) Fractional size of the largest surviving network component  as a function of preemptively removed fraction

as a function of preemptively removed fraction  for a single network instance for different values of the tolerance parameter

for a single network instance for different values of the tolerance parameter  . (D) The ratio of the throughput (defined in text) of the surviving giant component and the throughput of the original network as a function of the altruist node fraction. The red circle corresponds to the case when there no nodes are preemptively removed. Network parameters are:

. (D) The ratio of the throughput (defined in text) of the surviving giant component and the throughput of the original network as a function of the altruist node fraction. The red circle corresponds to the case when there no nodes are preemptively removed. Network parameters are:  ,

,  , and

, and  for results shown in (A),(B) and (D).

for results shown in (A),(B) and (D).

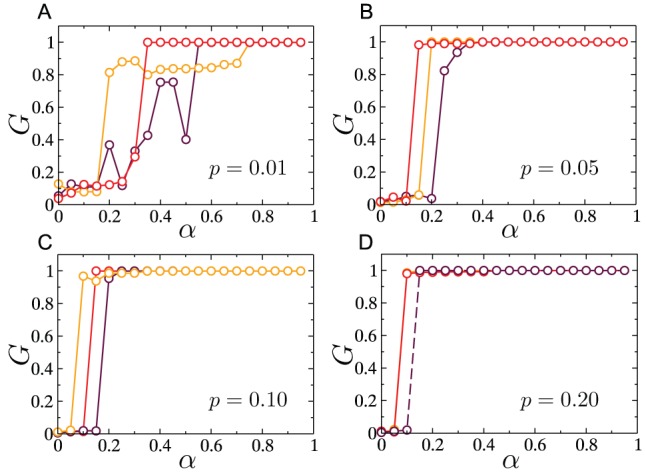

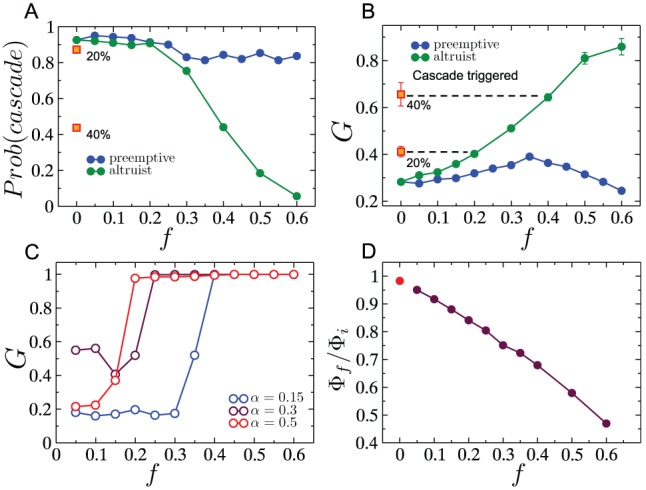

In view of the observation that the pre-cascade vertex load distribution in the RGG is not highly skewed, we propose a cascade mitigation strategy where rather than reducing the total load on the network by the making a fraction of nodes “absent” from the network as we did for the preemptive strategy, we assign a random fraction  of nodes to be altruists who cease to act as sources in the event of a node failure, but continue conducting flow between other source-sink pairs. Figure 10A shows the drop in the probability of a cascade as a function of the fraction

of nodes to be altruists who cease to act as sources in the event of a node failure, but continue conducting flow between other source-sink pairs. Figure 10A shows the drop in the probability of a cascade as a function of the fraction  of altruistic nodes. Clearly, the drop is significant in comparison to that achieved by the preemptive node removal strategy. We also show the results of a third strategy which involves all surviving nodes reducing the net current they inject into the network (per sink) to a fraction

of altruistic nodes. Clearly, the drop is significant in comparison to that achieved by the preemptive node removal strategy. We also show the results of a third strategy which involves all surviving nodes reducing the net current they inject into the network (per sink) to a fraction  of its original value. We show the results (in red) for the two values of

of its original value. We show the results (in red) for the two values of  , and note that the probability of a cascade is approximately the same as that obtained when only a fraction

, and note that the probability of a cascade is approximately the same as that obtained when only a fraction  of nodes are fully altruistic (i.e. inject no current into the network). Figures 10B and C show comparative plots of the size of the surviving giant component obtained for each of these strategies, conditioned on whether a cascade occurs or not. In both cases, the altruistic strategy, as well as the overall current reduction strategy, show a significant improvement over the preemptive node removal strategy. Understandably, this improvement comes at the cost of the overall throughput in the network. Figure 10D shows the effective throughput in the surviving component

of nodes are fully altruistic (i.e. inject no current into the network). Figures 10B and C show comparative plots of the size of the surviving giant component obtained for each of these strategies, conditioned on whether a cascade occurs or not. In both cases, the altruistic strategy, as well as the overall current reduction strategy, show a significant improvement over the preemptive node removal strategy. Understandably, this improvement comes at the cost of the overall throughput in the network. Figure 10D shows the effective throughput in the surviving component  normalized by the initial throughput

normalized by the initial throughput  of the intact network, as a function of the altruist fraction

of the intact network, as a function of the altruist fraction  . For a principled comparison of the throughputs before and after the cascade, we define the effective throughput of the surviving giant component as the current per source on the intact network that would yield the same total current as that flowing through the surviving giant component after the cascade. Mathematically, when the number of altruist nodes in the surviving component is

. For a principled comparison of the throughputs before and after the cascade, we define the effective throughput of the surviving giant component as the current per source on the intact network that would yield the same total current as that flowing through the surviving giant component after the cascade. Mathematically, when the number of altruist nodes in the surviving component is  , this effective throughput is written as:

, this effective throughput is written as:

| (7) |

As seen in Fig. 10D, the ratio  decreases as the altruist fraction is increased, thus indicating that the increased surviving fraction comes at the expense of the throughput of the network.

decreases as the altruist fraction is increased, thus indicating that the increased surviving fraction comes at the expense of the throughput of the network.

Figure 10. Increasing the resilience of the network by introducing altruist nodes.

(A) Probability that a cascade is triggered for an altruist/preemptively removed fraction  . The orange squares indicated the probability of cascade when no nodes (other than the initial cascade-triggering node) are removed, but when the current per source is reduced by

. The orange squares indicated the probability of cascade when no nodes (other than the initial cascade-triggering node) are removed, but when the current per source is reduced by  (upper square) or

(upper square) or  (lower square) immediately after the initial node removal. (B) The fractional size of the surviving giant component

(lower square) immediately after the initial node removal. (B) The fractional size of the surviving giant component  when a cascade is triggered, as a function of the altruist/preemptively-removed node fraction. Also shown are the results when the current per source is reduced by

when a cascade is triggered, as a function of the altruist/preemptively-removed node fraction. Also shown are the results when the current per source is reduced by  (upper square) or

(upper square) or  (lower square) immediately after the initial node removal, which coincide with the

(lower square) immediately after the initial node removal, which coincide with the  and

and  results respectively for altruistic node removal. (C) Similar to (B), but for the cases where a cascade is not triggered. (D) The ratio of the effective throughput (defined in text) of the surviving giant component and the throughput of the original network as a function of the altruist node fraction. The red circle corresponds to the case when there are no altruist nodes. Network parameters for all these plots are:

results respectively for altruistic node removal. (C) Similar to (B), but for the cases where a cascade is not triggered. (D) The ratio of the effective throughput (defined in text) of the surviving giant component and the throughput of the original network as a function of the altruist node fraction. The red circle corresponds to the case when there are no altruist nodes. Network parameters for all these plots are:  ,

,  , and

, and  for results shown in (A),(B) and (D).

for results shown in (A),(B) and (D).

4. Cascade model on an empirical spatial network: The UCTE network

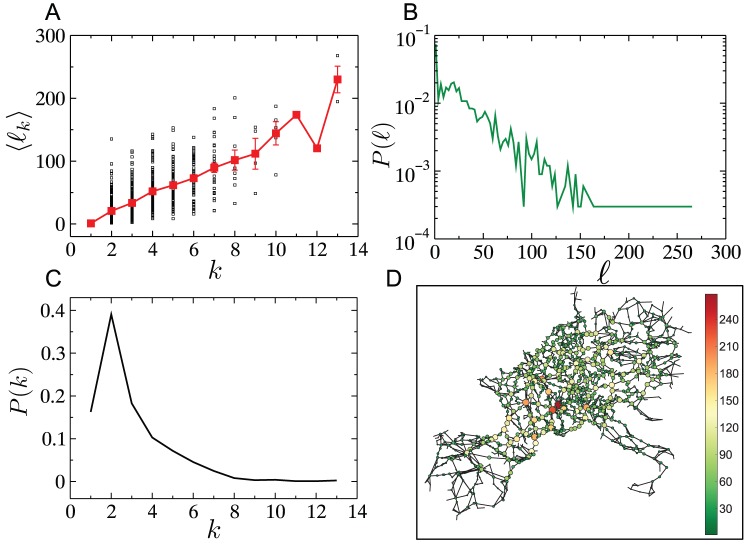

Thus far, our studies have been confined to a stylized model of a spatial network, viz. the RGG. We now study the outcomes of the same cascading failure model on the UCTE network, several aspects of which, have been studied elsewhere [5], [32], [46]. The network consists of  transmission stations, with an average degree

transmission stations, with an average degree  , spanning 18 European countries in 2002. The network is disassortative with an assortativity coefficient of

, spanning 18 European countries in 2002. The network is disassortative with an assortativity coefficient of  , and with a higher average clustering coefficient than an ER graph (

, and with a higher average clustering coefficient than an ER graph ( ). Figure 11 shows several other properties of this network. The load appears to be positively correlated with the degree (Fig. 11A), while the degree and load distributions span a relatively narrow range (Fig. 11B,C respectively), as observed also for RGGs. It is worth noting however, that the variance of loads is significant even for small degree values, which makes it difficult to straightforwardly assess the load bearing responsibility of a node purely from its degree.

). Figure 11 shows several other properties of this network. The load appears to be positively correlated with the degree (Fig. 11A), while the degree and load distributions span a relatively narrow range (Fig. 11B,C respectively), as observed also for RGGs. It is worth noting however, that the variance of loads is significant even for small degree values, which makes it difficult to straightforwardly assess the load bearing responsibility of a node purely from its degree.

Figure 11. Characteristics of the UCTE network.

The network consists of  nodes with an average degree of

nodes with an average degree of  . (A) Scatter of loads as a function of node degree

. (A) Scatter of loads as a function of node degree  (black squares) and the average load (red squares) as a function of node degree

(black squares) and the average load (red squares) as a function of node degree  . (B) The load distribution on the intact UCTE network. (C) The degree distribution of the UCTE network. (D) A visualization of the UCTE network with loads indicated using both node size and color.

. (B) The load distribution on the intact UCTE network. (C) The degree distribution of the UCTE network. (D) A visualization of the UCTE network with loads indicated using both node size and color.

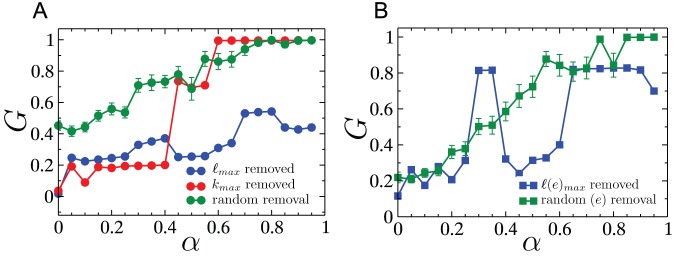

Figures 12 A,B show the cascades triggered on the UCTE network by the removal of a a single edge and a single node, respectively. The non-monotonicity observed in  versus

versus  for the model spatial networks is also observed here, thus reinforcing the non-self-averaging nature of spatially constrained networks. In the case of node-removal triggered cascades, removal of the highest-load node results in the worst overall damage, as was also the case for RGGs.

for the model spatial networks is also observed here, thus reinforcing the non-self-averaging nature of spatially constrained networks. In the case of node-removal triggered cascades, removal of the highest-load node results in the worst overall damage, as was also the case for RGGs.

Figure 12. Cascades on the UCTE network.

(A) Cascades triggered by the removal of a single node where the node was chosen using three different criteria i.e. randomly, highest load or highest degree. (B) Cascades triggered by the removal of a single edge where the edge was either chosen randomly or was the one with the highest load. Data obtained for cascade triggered by the random removal of a single node (edge) were averaged over  different scenarios.

different scenarios.

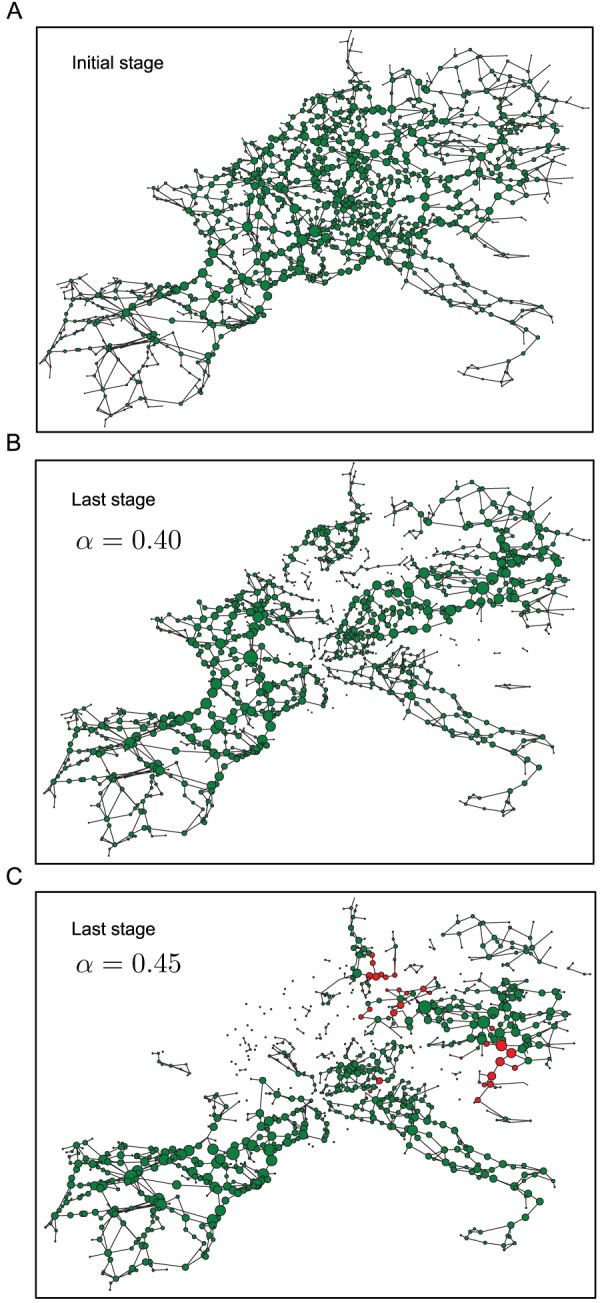

The visualization panels presented in Fig. 13 provide some intuition on the cause of the observed non-monotonicity in  as the tolerance parameter is increased. Figure 13 A shows the landscape of loads on the network before the initiation of a cascade where the size of the node is directly proportional to the load on the node. Figure 13 B shows, the state of the network with tolerance parameter

as the tolerance parameter is increased. Figure 13 A shows the landscape of loads on the network before the initiation of a cascade where the size of the node is directly proportional to the load on the node. Figure 13 B shows, the state of the network with tolerance parameter  after a cascade initiated by the removal of the highest load, has terminated. Figure 13 C shows a similar picture for the case where the tolerance parameter is higher, (

after a cascade initiated by the removal of the highest load, has terminated. Figure 13 C shows a similar picture for the case where the tolerance parameter is higher, ( ), but where the eventual damage is greater (i.e.

), but where the eventual damage is greater (i.e.  is smaller than the value obtained for Fig. 13 B). In this last panel, the network consists of several nodes, indicated in red, that had been removed in the course of the cascade depicted in Fig. 13 B, but are now intact as a consequence of the increased tolerance. However, counter-intuitively, the survival of these nodes result in wider load imbalances, resulting in a larger overall number of failures and a smaller surviving giant component. Thus, to some degree, the nodes shown in red, behave like fuses which if removed in the course of a cascade, end up saving a larger part of the network from failure. Dynamic visualizations of the progression of the cascades resulting in the final states shown in Figs. 13B,C are provided in Movies S1 and S2, respectively. A feature that becomes apparent in these dynamic visualizations is the non-local nature of the progression of the cascade. As pointed out in [4] such non-local progression is commonly observed in real cascade situations, and is a feature which can be reproduced by a more realistic DC power flow model, but not by simpler epidemic or percolation based models. Thus it is worth noting that the model presented in this work, despite being simpler than the DC power flow model used in [4], can nevertheless capture a distinctive attribute of real cascade progression.

is smaller than the value obtained for Fig. 13 B). In this last panel, the network consists of several nodes, indicated in red, that had been removed in the course of the cascade depicted in Fig. 13 B, but are now intact as a consequence of the increased tolerance. However, counter-intuitively, the survival of these nodes result in wider load imbalances, resulting in a larger overall number of failures and a smaller surviving giant component. Thus, to some degree, the nodes shown in red, behave like fuses which if removed in the course of a cascade, end up saving a larger part of the network from failure. Dynamic visualizations of the progression of the cascades resulting in the final states shown in Figs. 13B,C are provided in Movies S1 and S2, respectively. A feature that becomes apparent in these dynamic visualizations is the non-local nature of the progression of the cascade. As pointed out in [4] such non-local progression is commonly observed in real cascade situations, and is a feature which can be reproduced by a more realistic DC power flow model, but not by simpler epidemic or percolation based models. Thus it is worth noting that the model presented in this work, despite being simpler than the DC power flow model used in [4], can nevertheless capture a distinctive attribute of real cascade progression.

Figure 13. UCTE network snapshots before and after cascades.

(A) The intact network with node sizes in proportion to their respective steady-state loads. (B) The network and the loads after a highest-load-removal-triggered cascade has terminated, with the tolerance parameter  . (C) The network and the loads after a highest-load-removal-triggered cascade has terminated, with the tolerance parameter

. (C) The network and the loads after a highest-load-removal-triggered cascade has terminated, with the tolerance parameter  . The red nodes here indicate nodes that were removed in the cascade leading to (B), but survived in the cascade leading to (C).

. The red nodes here indicate nodes that were removed in the cascade leading to (B), but survived in the cascade leading to (C).

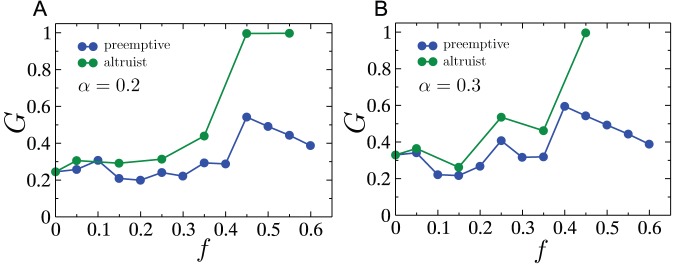

Next, we compare the two cascade mitigation strategies, viz. preemptive node removal and assignment of altruistic nodes, for cascades initiated by highest load removal on the UCTE network. As Fig. 14 A and B show, the altruistic strategy generally results in a larger surviving giant component after the cascade, than in the case when preemptive node removal is employed. It is also worth noting that non-monotonicities due to the lack of self-averaging in the cascade process, manifest themselves in these plots as well.

Figure 14. Cascade mitigation on the UCTE network.

Comparison between the preemptive and altruistic node removal strategies on the UCTE network with tolerance parameter (A)  and (B)

and (B)  .

.

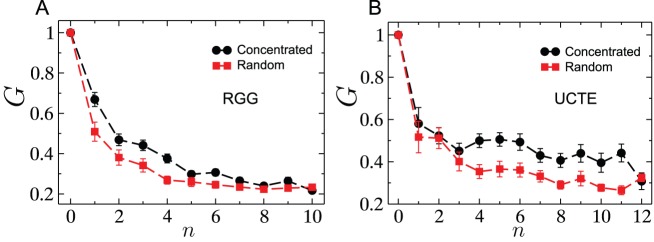

We conclude with an investigation of whether, in the case of multiple initial failures, the failures being spatially localized has any effect on the severity of the cascade. Figure 15A shows for a given value of the tolerance parameter  , the size of the surviving giant component

, the size of the surviving giant component  as a function of the number of nodes removed, for concentrated and random failures on an RGG. Random failures are only marginally more destructive than concentrated ones, which is understandable in light of how the different cascade stages resulting from just a single node's removal can cover a wide spatial spread, as seen in Fig. 7. We arrive at a similar conclusion for the case of concentrated and randomly located failures within the UCTE network from the results shown in Fig. 15B. Dynamic visualizations of the progression of spatially localized and distributed cascades on the UCTE network for the same number of initially removed nodes are provided in Movies S3 and S4, respectively.

as a function of the number of nodes removed, for concentrated and random failures on an RGG. Random failures are only marginally more destructive than concentrated ones, which is understandable in light of how the different cascade stages resulting from just a single node's removal can cover a wide spatial spread, as seen in Fig. 7. We arrive at a similar conclusion for the case of concentrated and randomly located failures within the UCTE network from the results shown in Fig. 15B. Dynamic visualizations of the progression of spatially localized and distributed cascades on the UCTE network for the same number of initially removed nodes are provided in Movies S3 and S4, respectively.

Figure 15. Cascades triggered by concentrated versus randomly distributed removals.

(A) Fractional surviving giant component size after a cascade as a function of number of initial nodes removed in concentrated and random removals for RGGs with  and

and  . (B) Fractional surviving giant component size after a cascade as a function of number of initial nodes removed in concentrated and random removals for the UCTE network. Each data point is an average over more than

. (B) Fractional surviving giant component size after a cascade as a function of number of initial nodes removed in concentrated and random removals for the UCTE network. Each data point is an average over more than  realizations.

realizations.

Discussion

In summary, we have attempted a thorough analysis of the characteristics of cascading failures and strategies for their mitigation on spatially constrained networks, including a model of such networks viz. the random geometric graph, as well as a real-world power transmission network. The key finding worth emphasizing from these studies is the inherent lack of self-averaging for cascade processes on spatial networks. In other words, conclusions gleaned from aggregate statistics on an ensemble of such networks, yield information of little value pertaining to a single network instance. For example, in contrast to the observation for an ensemble of RGGs, for a single network instance, increasing the excess load bearing capacity does not necessarily reduce the severity of the cascade in a monotonic fashion. Thus a straightforward measure for cascade prevention could yield counter-intuitive results. We demonstrate that increasing the effective dimensionality of the system i.e. easing the effect of the spatial constraints by introducing rewired long-range links eliminates these non-intuitive features. A standard cascade mitigation strategy, extensively studied in the past, of preemptively removing a fraction of underperforming nodes does not effectively reduce the severity of cascades on spatially constrained networks, due to the fairly narrow initial range of loads in spatial networks. Instead, the strategy of introducing a fraction of altruistic nodes appears to be a more effective alternative. This holds true both for the model networks as well as for the empirical network. Finally, we also find that cascades resulting from spatially concentrated node failures do not appear to be significantly less destructive than ones that are distributed over the network. Thus, our results paint a complex picture for how failure cascades induced by load redistribution on spatial networks carrying distributed flow propagate through the network. In short, for spatial networks, details specific to a network instance play a very important role in determining strategies to increase the resilience of the network against cascading failures, and methods based on aggregate observations from a network ensemble will present substantial pitfalls.

Note: Data on the UCTE network [32] that we used in this work was obtained from the website http://www.see.ed.ac.uk/~jbialek/Europe_load_flow which is currently non-functional. A processed version of the original data (the UCTE network structure) can be obtained by emailing the corresponding author (SS).

Supporting Information

Cascade realizations on a single RGG of size

N

= 1300 where conductances on links are inversely proportional to their lengths. The behavior of the surviving giant component size  as a function of the tolerance parameter

as a function of the tolerance parameter  (three individual realizations are shown) is practically indistinguishable from that found in the case where conductances on all links are identical, shown in Fig. 4A in the main text. All remaining parameters (besides conductances) and simulation details are identical to that in Fig. 4A.

(three individual realizations are shown) is practically indistinguishable from that found in the case where conductances on all links are identical, shown in Fig. 4A in the main text. All remaining parameters (besides conductances) and simulation details are identical to that in Fig. 4A.

(EPS)

Effect of rewiring links in an RGG with link-length dependent conductances. As the rewiring probability  is increased, the non-monotonicities in

is increased, the non-monotonicities in  as a function of tolerance parameter

as a function of tolerance parameter  gradually disappear, similarly to the case where link conductances are independent of their length (see Fig. 5). Simulations were performed with

gradually disappear, similarly to the case where link conductances are independent of their length (see Fig. 5). Simulations were performed with  and

and  .

.

(EPS)

Progression of the cascade initiated by the removal of the node with the highest load on the UCTE network ( N = 1254) with tolerance parameter α = 0.45. Node sizes are proportional to the load on them. The single orange node at the beginning of the movie indicates the node with the largest node which is removed to trigger a cascade. The overloaded nodes in subsequent stages are shown in orange before they are removed. The total number of nodes removed in the cascade is 167, and the number of nodes in the surviving giant component is 465.

(MP4)

Progression of the cascade initiated by the removal of the node with the highest load on the UCTE network with tolerance parameter

α

= 0.45. Although the tolerance parameter is greater than in the case of Movie S1, a greater number of nodes,  , fail in the cascade, and the resulting giant component is also smaller, with

, fail in the cascade, and the resulting giant component is also smaller, with  nodes. The nodes shown in gray indicate those nodes which failed in course of the cascade occurring for

nodes. The nodes shown in gray indicate those nodes which failed in course of the cascade occurring for  (shown in Movie S1), but survived when

(shown in Movie S1), but survived when  was increased to

was increased to  . The survival of these nodes potentially plays a role in making the cascade more severe. All other color and node size conventions are identical to those in Movie S1.

. The survival of these nodes potentially plays a role in making the cascade more severe. All other color and node size conventions are identical to those in Movie S1.

(MP4)

Progression of a cascade initiated by a spatially localized removal of 9 nodes. Color and node size conventions are as explained in caption for Movie S1. The tolerance parameter used here is  . The number of nodes removed in the course of the cascade is

. The number of nodes removed in the course of the cascade is  , and the number of nodes in the surviving giant component is

, and the number of nodes in the surviving giant component is  .

.

(MP4)

Progression of a cascade initiated by distributed (random) removal of 9 nodes. Color and node size conventions are as explained in caption for Movie S1. The tolerance parameter used here is  . The number of nodes removed in the course of the cascade is

. The number of nodes removed in the course of the cascade is  (same as for Movie S3), and the number of nodes in the surviving giant component is

(same as for Movie S3), and the number of nodes in the surviving giant component is  .

.

(MP4)

Funding Statement

This work was supported by DTRA Award No. HDTRA1-09-1-0049, by the Army Research Laboratory under Cooperative Agreement Number W911NF-09-2-0053 and by NSF Grant No. DMR-1246958. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Northeast blackout of 2003. Available: https://en.wikipedia.org/wiki/Northeast_blackout_of_2003. Accessed 2013 Jun 17.

- 2. Sachs JD (2009) Rethink the global money supply. Scientific American 300: 34. [PubMed] [Google Scholar]