Abstract

Earlier spatial orientation studies used both motion-detection (e.g., did I move?) and direction-recognition (e.g., did I move left/right?) paradigms. The purpose of our study was to compare thresholds measured with motion-detection and direction-recognition tasks on a standard Moog motion platform to see whether a substantial fraction of the reported threshold variation might be explained by the use of different discrimination tasks in the presence of vibrations that vary with motion. Thresholds for the perception of yaw rotation about an earth-vertical axis and for interaural translation in an earth-horizontal plane were determined for four healthy subjects with standard detection and recognition paradigms. For yaw rotation two-interval detection thresholds were, on average, 56 times smaller than two-interval recognition thresholds, and for interaural translation two-interval detection thresholds were, on average, 31 times smaller than two-interval recognition thresholds. This substantive difference between recognition thresholds and detection thresholds is one of our primary findings. For motions near our measured detection threshold, we measured vibrations that matched previously established vibration thresholds. This suggests that vibrations contribute to whole body motion detection. We also recorded yaw rotation thresholds on a second motion device with lower vibration and found direction-recognition and motion-detection thresholds that were not significantly different from one another or from the direction-recognition thresholds recorded on our Moog platform. Taken together, these various findings show that yaw rotation recognition thresholds are relatively unaffected by vibration when moderate (up to ∼0.08 m/s²) vibration cues are present.

Keywords: signal detection theory, perception, psychophysics, discrimination, human

The vestibular organs sense motion of the head (both rotation and translation) as well as the relative orientation of gravity (Goldberg et al. 2011; Merfeld 2012). The vestibular system, alongside visual, tactile, and other sensory systems, contributes to our sense of spatial orientation, where spatial orientation is defined to consist of three interacting sensory modalities: our senses of linear motion, angular motion, and tilt (Merfeld 2012). Unlike other senses (e.g., vision, hearing) where one sensory system is generally predominant, no single sensory system dominates our sense of spatial orientation.

Recently, there has been a resurgence of interest in spatial orientation thresholds (e.g., Barnett-Cowan and Harris 2009; De Vrijer et al. 2008; Dong et al. 2011; Grabherr et al. 2008; Gu et al. 2007; Janssen et al. 2011; MacNeilage et al. 2010; Mallery et al. 2010; Naseri and Grant 2012; Roditi and Crane 2012; Sadeghi et al. 2007; Soyka et al. 2011; Valko et al. 2012; Zupan and Merfeld 2008). With this comes a desire to compare and contrast results obtained from different laboratories as well as a need to put older literature into perspective. Motion thresholds have long been studied (e.g., Benson et al. 1986, 1989; Clark 1967; Clark and Stewart 1968; Doty 1969; Groen and Jongkees 1948; Ormsby 1974), but such studies fell from favor, in part because it was noted that average thresholds across subjects varied by two orders of magnitude (Clark 1967; Guedry 1974).

When combined with signal detection analyses, discrimination tasks provide a standard way to measure thresholds. Discrimination has been defined as the ability to tell two (or more) stimuli apart (Macmillan and Creelman 2005) and can be separated into two distinct categories—detection and recognition (e.g., Macmillan and Creelman 2005; Swets 1973, 1996; Treutwein 1995). Specifically, when one of two stimulus classes is null (no motion), this discrimination task is often called detection (e.g., Green and Swets 1966; Macmillan and Creelman 2005; MacNeilage et al. 2010; Swets 1973, 1996; Treutwein 1995). A patient discriminating between the presence and absence of a tone during a hearing test is performing a detection task. When neither stimulus class is null, this discrimination task is sometimes called recognition (e.g., Macmillan and Creelman 2005; Swets 1973, 1996; Treutwein 1995). Discriminating whole body leftward from rightward motion is a common spatial orientation direction-recognition task. Both techniques are used for other sensory modalities and, when pertinent, often yield similar threshold estimates (Hol and Treue 2001; Nakayama and Silverman 1985). To unambiguously delineate these two distinct forms of discrimination, we utilize these standard historical definitions of detection and recognition throughout this report.

In a recent theoretic analysis (Merfeld 2011), we scrutinized both detection and recognition tasks and, like others (e.g., MacNeilage et al. 2010; Seidman 2008), suggested that vibrations could provide a motion cue that influences spatial orientation perception. Specifically, we suggested that vibration could directly affect responses measured with a detection task, since subjects might detect the presence of vibration and infer motion, but vibration could only influence a recognition task if the subject were able to discern some difference in the vibration for motion in opposing directions (e.g., left/right vibration difference). Since vibration cues are nearly impossible to avoid in controlled motion devices (e.g., actuator vibration is present even with air-bearings), we hypothesized that the use of detection and recognition tasks could contribute to the threshold variations reported in the literature.

Therefore, we investigated motion-detection and direction-recognition paradigms for both whole body rotation and whole body translation on a Moog platform with moderate levels of vibration. (See results for measured vibration levels.) We found that detection thresholds were more than one order of magnitude smaller than recognition thresholds. We also investigated motion detection and direction recognition for whole body rotation on our low-vibration “Rotator” and found that detection and recognition thresholds were indistinguishable from one another and indistinguishable from the direction-recognition thresholds measured on the Moog platform.

METHODS

Subjects

Four healthy volunteers (3 women, 1 man; range 22–36 yr) were recruited to participate in this study. All were screened via a detailed vestibular diagnostic clinical examination to confirm the absence of undiagnosed vestibular disorders. Screening consisted of caloric testing, Hallpike tests, angular vestibuloocular reflex (VOR) evoked via rotation, and posture control measures. A short health history questionnaire was also administered to confirm the absence of dizziness, vertigo, and any other neurological deficits. Informed consent was obtained from all subjects prior to participation in the study. The study was approved by the local ethics committee and was performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki.

Experimental Procedure

In separate test sessions, subjects were rotated in yaw about an earth-vertical axis (“yaw rotation”) or translated laterally along an interaural axis (“y-translation”); subjects were informed of the type of motion at the beginning of the test session. Except for control study 4, which used a separate motion device (our low-vibration “Rotator”), motion stimuli were generated with a Moog 6DOF motion platform. Motion stimuli were single cycles of sinusoidal acceleration [a(t) = A sin(2πft) = A sin(2πt/T), where A is the acceleration amplitude, f is the motion frequency, and T = 1/f is the motion duration]. In this study, all motions had a frequency of 1 Hz and, therefore, a duration of 1 s. For consistency with our earlier human work (e.g., Grabherr et al. 2008; Valko et al. 2012) and that of others (e.g., MacNeilage et al. 2010; Mallery et al. 2010; Roditi and Crane 2012; Soyka et al. 2012), we chose to present velocity thresholds. Since peak acceleration, peak velocity, and total displacement are all proportional to one another for this motion profile, the results are unchanged if presented as displacement thresholds, velocity thresholds, or acceleration thresholds.
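The proportionality between acceleration, velocity, and displacement follows from integrating the single-cycle profile: the velocity is v(t) = A/(2πf)·[1 − cos(2πft)], so the peak velocity is A/(πf) and the total displacement is AT²/(2π). The sketch below illustrates this; the function names are hypothetical and chosen only for this example.

```python
import math

def single_cycle_kinematics(peak_velocity, freq_hz=1.0):
    """Peak acceleration A and total displacement for a(t) = A*sin(2*pi*f*t), 0 <= t <= 1/f.

    Units follow the input: deg/s gives deg/s^2 and deg; cm/s gives cm/s^2 and cm.
    """
    period = 1.0 / freq_hz
    accel_amp = peak_velocity * math.pi * freq_hz           # from v_max = A / (pi * f)
    displacement = accel_amp * period ** 2 / (2 * math.pi)  # d = A * T^2 / (2 * pi)
    return accel_amp, displacement

def velocity(t, accel_amp, freq_hz=1.0):
    """Velocity at time t, obtained by integrating the acceleration from rest."""
    omega = 2 * math.pi * freq_hz
    return accel_amp / omega * (1 - math.cos(omega * t))

# Starting stimulus used for the yaw staircase: 4 deg/s peak velocity at 1 Hz.
accel_amp, displacement = single_cycle_kinematics(4.0)
print(accel_amp, displacement, velocity(0.5, accel_amp))
```

For the 4°/s starting stimulus at 1 Hz, this gives a peak acceleration of 4π ≈ 12.6°/s² and a total displacement of 2°.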

General methods mimic those of a recent study (Valko et al. 2012). Subjects were seated in a chair with a five-point harness in an upright position. Their heads were held in place via an adjustable helmet that was carefully centered. To minimize the influence of visual cues, trials were performed in the dark in a lighttight room. All skin surfaces except the face were covered (long sleeves, light gloves), and a visor attached to the helmet surrounded the face. Auditory motion cues were masked by noise-canceling ear buds, which played white noise (∼60 dB). Tactile cues were distributed as evenly as possible with padding. The efficacy of these abatement techniques is demonstrated by our earlier study, in which we showed significant threshold increases in patients suffering total vestibular loss (Valko et al. 2012).

For each type of motion (i.e., yaw rotation, y-translation), subjects were presented with three blocks of contiguous trials—one block of two-interval recognition trials, one block of two-interval detection trials with motion to the left, and one block of two-interval detection trials with motion to the right. Before each block, a few suprathreshold practice trials were administered to ensure that the subjects understood the task and to minimize training effects. The stimuli, subject responses, and motion profiles were recorded via computer. The two-interval recognition thresholds were measured on a separate day from the two-interval detection thresholds, and the order of the blocks was randomized across subjects.

Testing was performed with a 3-Down/1-Up adaptive staircase paradigm (e.g., Leek 2001). To minimize the effect of initial conditions, the stimuli began at the same level for both recognition and detection tasks. Yaw rotations (y-translations) began well above threshold at vmax = 4°/s (vmax = 4 cm/s), and each block of testing ended after 75 trials. Until the first mistake, the stimulus was halved after three correct responses at each level (e.g., 4, 2, 1, . . .). From this point onward, the size of the change in stimulus magnitude was determined with the parameter estimation by sequential testing (PEST) rules developed by Taylor and Creelman (1967): 1) After each reversal, halve the step size. 2) A step in the same direction as the last uses the same step size, with the following exception. 3) A third step in the same direction uses a doubled step size. Each additional step in the same direction is also doubled, with the following exception. 4) If a reversal immediately follows a step doubling, then one extra same-size step is taken before doubling. 5) Minimum and maximum step sizes are specified. The magnitudes of the minimum and maximum step sizes were chosen to be 0.38 dB [20log10(2^(1/16))] and 6.02 dB [20log10(2)], respectively. No feedback was provided as to the correctness of responses after each trial. Including control studies, each subject was tested on four different days. In total, each subject participated in about 1,000 trials across all conditions.
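As a quick check on the step-size limits, the sketch below converts the two ratios to decibels and applies a step to a stimulus level. It also computes the percent-correct level targeted by a fixed-step 3-Down/1-Up rule (the level p satisfying p³ = 0.5); with PEST-controlled step sizes this target is only approximate. The helper names are hypothetical, and the full PEST bookkeeping is deliberately not implemented here.

```python
import math

def ratio_to_db(ratio):
    """Express an amplitude ratio in decibels (20*log10)."""
    return 20 * math.log10(ratio)

MIN_STEP_DB = ratio_to_db(2 ** (1 / 16))  # smallest step: a factor of 2^(1/16)
MAX_STEP_DB = ratio_to_db(2)              # largest step: a factor of 2

def apply_step(level, step_db, harder):
    """Move the stimulus down (harder) or up (easier) by step_db decibels."""
    factor = 10 ** (step_db / 20)
    return level / factor if harder else level * factor

def n_down_one_up_target(n_down):
    """Fraction correct at which a fixed-step n-Down/1-Up staircase settles."""
    return 0.5 ** (1 / n_down)

print(round(MIN_STEP_DB, 2), round(MAX_STEP_DB, 2))  # 0.38 6.02
print(apply_step(4.0, MAX_STEP_DB, harder=True))     # one maximum step down from 4 is about 2
print(round(n_down_one_up_target(3), 3))             # 0.794
```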

Recognition thresholds were measured with a standard two-interval two-alternative forced-choice paradigm, where each trial was divided into two time intervals. For each trial, the subject was moved to the left or to the right in the first interval and then in the opposite direction in the second interval. Subjects were instructed to push the left button if they perceived a leftward motion in the second interval and to push the right button if they perceived rightward motion in the second interval. There was at least a 3-s period between each interval.

Detection thresholds were also measured with a standard two-interval two-alternative forced-choice paradigm. In one of the intervals a motion stimulus was provided, and in the other interval a null stimulus (no motion) was provided. The motion was to the left for one block of trials and to the right for a separate block of trials. Subjects were informed in advance whether the motion during that block was to the right or to the left. Subjects were instructed to push the left button if they perceived the motion stimulus in the first interval and to push the right button if they perceived the motion stimulus in the second interval. Alerts were provided before the onset and after the conclusion of each interval/trial. There was at least a 3-s period between each interval.

Four additional control experiments were performed. Three were conducted to confirm that the large differences we report between two-interval detection thresholds and two-interval recognition thresholds were not in some way specific to the tasks. Control study 4 was conducted on our low-vibration Rotator.

Control study 1: “two-interval bidirectional detection.”

To verify that using unidirectional motions for detection had a minimal effect on thresholds, we measured each subject's rotation and translation thresholds with a two-interval bidirectional detection task. This first control study was similar to the two-interval unidirectional detection paradigm except that the motion for each trial was randomly chosen to be either left or right.

Control study 2: “one-interval detection.”

To ensure that using a two-interval paradigm for detection did not have a significant effect on thresholds, we also measured each subject's leftward one-interval unidirectional detection threshold for yaw rotation and y-translation. In this second control study there was only one interval per trial, in which the subject was either moved or not moved, and the subject's task was to determine whether there had been motion or not.

Control study 3: “one-interval recognition.”

Recognition thresholds were measured with a standard one-interval forced-choice paradigm. On each direction-recognition trial, the subject was moved either to the left or to the right. Subjects were instructed to push the left button if they perceived a leftward motion and to push the right button if they perceived rightward motion.

Control study 4: “low-vibration two-interval recognition and detection.”

Two-interval yaw rotation recognition and detection thresholds were measured on a device having much less vibration than the Moog platform. (See results for vibration levels on our Rotator.) The tasks were the same two-interval tasks performed on the Moog motion device. Three of the four subjects completed this testing; the fourth subject had become pregnant after completing all other portions of this study.

For control studies 1 and 2, testing was performed with a nonadaptive paradigm, and stimulus levels ranged between 0.1 and 4 times the average detection threshold measured with the primary two-interval detection task. Stimuli at 0.1 times the average threshold were included to act as “catch” trials, as is standard for detection tasks, since preliminary data established that stimuli 10 times lower than threshold provided effective catch trials. These catch trials mimicked threshold-level stimuli in all respects except that the stimuli were 10 times below threshold. Our finding, presented in RESULTS, that the detection thresholds measured in these two control studies were not significantly different from the primary two-interval detection thresholds helps establish the efficacy of these procedures. For control studies 3 and 4, a 3-Down/1-Up adaptive staircase paradigm was used as in the primary study. For all control studies, each block of testing ended after 75 trials and the interstimulus interval was always at least 3 s.

Estimating Threshold and Noise Standard Deviation

For all our analyses reported here, we assume the underlying physiological noise model described in detail elsewhere (Merfeld 2011). This leads to the following equation that defines the probability that the subject perceives a given motion stimulus as positive for this one-interval recognition (1R) task:

where x1R is the peak velocity amplitude of the motion stimulus, x̂ is a random variable that represents the subject's perceived motion, σ represents the standard deviation of the total equivalent physiological noise, μψ is the subject's “psychometric bias,” and Φ is the cumulative standard Gaussian distribution. See APPENDIX A for a detailed derivation. Similarly, for two-interval motion detection, the subject's probability of perceiving that a given two-interval detection stimulus, x2D, contained motion in the first interval is modeled by

where |x2D| is the magnitude of the peak velocity amplitude of the motion stimulus and the sign of x2D encodes the order of the stimuli. Finally, for a two-interval direction-recognition task, the subject's probability of perceiving that a given two-interval recognition stimulus, x2R, contained positive motion in the first interval is modeled by (Merfeld 2011)

where |x2R| is the magnitude of the peak velocity amplitude of the motion stimulus and the sign of x2R records the order of the stimuli. Note that for the two-interval tasks, psychometric bias (μψ) does not appear in the equations. If a bias were present, it would be expected to be the same for the first and second intervals. Therefore, theoretically, the biases in the first and second intervals cancel one another.
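For readers who want to experiment with these three psychometric functions, the following sketch gives one plausible set of forms. It assumes the standard equal-variance Gaussian model in which each interval contributes independent noise of standard deviation σ, so that a two-interval decision variable has standard deviation √2σ. The exact expressions, the sign convention for the bias, and the function names are assumptions made for illustration and should be checked against Merfeld (2011).

```python
from math import sqrt
from statistics import NormalDist

PHI = NormalDist().cdf  # cumulative standard Gaussian distribution

def p_positive_1r(x, sigma, bias=0.0):
    """One-interval recognition: assumed form PHI((x - bias) / sigma)."""
    return PHI((x - bias) / sigma)

def p_first_2d(x, sigma):
    """Two-interval detection: assumed form PHI(x / (sqrt(2) * sigma)).

    The sign of x encodes which interval held the motion (positive = first).
    """
    return PHI(x / (sqrt(2) * sigma))

def p_first_positive_2r(x, sigma):
    """Two-interval recognition: assumed form PHI(2 * x / (sqrt(2) * sigma)).

    The two intervals carry +x and -x, so their difference has mean 2x.
    """
    return PHI(sqrt(2) * x / sigma)

# At a stimulus equal to sigma, the three tasks give different fractions correct.
for f in (p_positive_1r, p_first_2d, p_first_positive_2r):
    print(f.__name__, round(f(1.0, 1.0), 3))
```

Under these assumed forms, a stimulus equal to σ is reported correctly on about 84% of unbiased one-interval recognition trials, about 76% of two-interval detection trials, and about 92% of two-interval recognition trials.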

For all conditions except one-interval detection, the maximum likelihood estimate of σ (and μψ for one-interval recognition) was obtained by fitting the experimental data to these functions via a bias-reduced (Chaudhuri and Merfeld 2013) generalized linear model (BRGLM) fit with a probit link (McCullagh and Nelder 1989). We will refer to σ as the threshold throughout the rest of this report. For one-interval detection, because the decision boundary is not as clearly defined, σ was estimated by binning subject responses and linearly interpolating to determine the stimulus at which the subject would have responded correctly 75% of the time.
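The interpolation used for one-interval detection can be sketched as follows. The binned levels and fractions correct are made-up illustrative numbers, not data from this study, and interpolating linearly in stimulus amplitude (rather than log amplitude) is an assumption of this sketch.

```python
def interpolate_threshold(levels, fraction_correct, target=0.75):
    """Stimulus level at which the binned fraction correct crosses `target`.

    Interpolates linearly between the first pair of adjacent bins that bracket
    the target. Raises ValueError if no pair of bins brackets it.
    """
    pairs = sorted(zip(levels, fraction_correct))
    for (x0, p0), (x1, p1) in zip(pairs, pairs[1:]):
        if p0 <= target <= p1 and p1 > p0:
            return x0 + (target - p0) * (x1 - x0) / (p1 - p0)
    raise ValueError("target fraction correct is not bracketed by the bins")

# Hypothetical binned data: stimulus levels (deg/s) and fraction correct per bin.
levels = [0.01, 0.02, 0.04]
fractions = [0.50, 0.70, 0.90]
print(interpolate_threshold(levels, fractions))  # about 0.025 deg/s
```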

Statistical analyses were performed with a Wilcoxon rank sum test. This test was chosen because it is nonparametric, and therefore the distribution of thresholds across subjects (e.g., log-normal) does not have to be assumed.
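With only four subjects per condition, the rank sum test has a coarse set of attainable P values. The sketch below enumerates all 70 ways of assigning four of the eight pooled ranks to one group and computes an exact two-sided P value. It is applied to the yaw rotation thresholds listed in Table 1. Whether the original analysis used exact enumeration or a normal approximation is not stated, so treat this as an illustration only; the function names are hypothetical.

```python
from itertools import combinations

def midranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def rank_sum_exact_p(a, b):
    """Exact two-sided Wilcoxon rank sum P value by full enumeration."""
    pooled = list(a) + list(b)
    ranks = midranks(pooled)
    n = len(a)
    expected = n * (len(pooled) + 1) / 2
    observed_dev = abs(sum(ranks[:n]) - expected)
    total = extreme = 0
    for idx in combinations(range(len(pooled)), n):
        total += 1
        if abs(sum(ranks[i] for i in idx) - expected) >= observed_dev - 1e-12:
            extreme += 1
    return extreme / total

# Yaw rotation thresholds from Table 1 (deg/s), subjects S1-S4.
recognition = [0.97, 0.98, 1.17, 1.48]
detection = [0.025, 0.018, 0.018, 0.022]
print(rank_sum_exact_p(recognition, detection))  # 2/70, about 0.029
```

Because the two groups are completely separated, the result is the smallest two-sided P value attainable with four observations per group, 2/70 ≈ 0.029.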

Vibration Characterization

Three-dimensional linear acceleration was recorded with a sampling rate of 128 Hz with an Opal sensor attached to each motion platform's chair structure near the torso. Data were acquired during 1-Hz single-cycle yaw sinusoid platform accelerations with peak velocities of 0.89 and 0.02°/s for both our Moog platform and our Rotator and during 1-Hz interaural translation stimuli with peak velocities of 0.32 and 0.023 cm/s for our Moog platform. All calculations described below were performed across a 2-s window that extended 0.5 s before and after the motion trajectory. To minimize the contributions of sensor bias and drift, the mean value for the first and last 0.2 s was subtracted from each data segment. These data were then quantified by calculating the ensemble average and variance for each of the three orthogonal linear acceleration components at each instant in time across 100 independent trials. To measure changes in vibration due to motion, the mean value of the variance for the first and last 0.2 s was subtracted from each of the variance components, leaving the vibration component associated with the motion. The total magnitude of the three-dimensional acceleration variance vector was calculated as the sum of the three variance components at each instant in time. Vibration, defined as oscillations about an equilibrium point, will be quantified with linear acceleration standard deviation, which, of course, is the square root of the variance.
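The processing chain described above can be sketched as follows, using only the Python standard library. The data layout (`trials[k][axis][i]`), the use of the sample variance across trials, and the clamping of small negative baseline-corrected variances to zero before taking the square root are assumptions of this sketch, not details given in the text. The 11-sample peak average mirrors the peak definition used in RESULTS.

```python
import math
import statistics

FS_HZ = 128   # sampling rate of the accelerometer
EDGE_S = 0.2  # length of the quiet windows at the start and end of each segment

def edge_indices(n_samples, fs=FS_HZ, edge_s=EDGE_S):
    """Sample indices covering the first and last edge_s seconds."""
    n_edge = round(edge_s * fs)
    return list(range(n_edge)) + list(range(n_samples - n_edge, n_samples))

def motion_vibration(trials):
    """Vibration (acceleration standard deviation) at each sample.

    trials[k][axis][i] is the acceleration of trial k, axis 0..2, sample i.
    """
    n_samples = len(trials[0][0])
    edges = edge_indices(n_samples)
    total_var = [0.0] * n_samples
    for axis in range(3):
        cleaned = []
        for trial in trials:
            # Remove sensor bias: subtract the mean of the quiet edge windows.
            offset = statistics.fmean([trial[axis][i] for i in edges])
            cleaned.append([x - offset for x in trial[axis]])
        # Ensemble variance across trials at each instant in time.
        var = [statistics.variance([c[i] for c in cleaned]) for i in range(n_samples)]
        # Remove the resting vibration level, leaving the motion-related part.
        baseline = statistics.fmean([var[i] for i in edges])
        for i in range(n_samples):
            total_var[i] += var[i] - baseline
    # Sum of the three variance components, expressed as a standard deviation.
    return [math.sqrt(max(v, 0.0)) for v in total_var]

def peak_vibration(std_trace, n_avg=11):
    """Average of n_avg samples centered on the largest value of the trace."""
    peak = max(range(len(std_trace)), key=std_trace.__getitem__)
    half = n_avg // 2
    return statistics.fmean(std_trace[max(0, peak - half):peak + half + 1])
```

Given 100 recorded trials in this layout, `peak_vibration(motion_vibration(trials))` would return a single peak vibration value in the units of the recorded acceleration.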

RESULTS

Thresholds

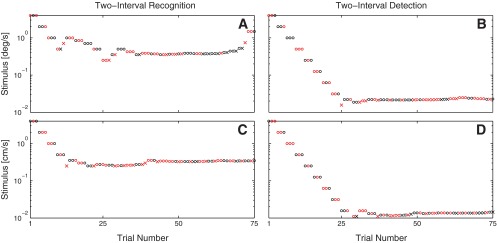

Leftward and rightward two-interval motion-detection thresholds were not statistically different from one another (P = 0.74 for yaw rotation and P = 0.86 for interaural translation). Therefore, two-interval detection thresholds are reported as the average of each subject's leftward and rightward motion-detection thresholds. Figure 1 shows the stimuli and responses for the first subject's (S1) two-interval recognition and two-interval detection test sessions. Table 1 lists the two-interval recognition and average two-interval detection thresholds for yaw rotation and interaural translation for each subject.

Fig. 1.

Stimuli and subject (S1) responses for 2-interval recognition (left) and 2-interval detection with motion to the left (right). A and B: yaw rotation. C and D: y-translation. ○, Correct responses; ×, incorrect responses. Red, positive stimuli; black, negative stimuli.

Table 1.

Two-interval recognition and two-interval detection thresholds

| | Recognition | Detection | Ratio: Rec/Det |
|---|---|---|---|
| Yaw rotation | | | |
| S1 | 0.97 ± 0.24 | 0.025 ± 0.005 | 39 |
| S2 | 0.98 ± 0.21 | 0.018 ± 0.003 | 54 |
| S3 | 1.17 ± 0.33 | 0.018 ± 0.003 | 65 |
| S4 | 1.48 ± 0.30 | 0.022 ± 0.004 | 67 |
| Interaural translation | | | |
| S1 | 0.67 ± 0.18 | 0.021 ± 0.004 | 32 |
| S2 | 0.69 ± 0.14 | 0.026 ± 0.005 | 27 |
| S3 | 0.55 ± 0.19 | 0.024 ± 0.005 | 23 |
| S4 | 1.04 ± 0.28 | 0.026 ± 0.005 | 40 |

Values (in °/s for yaw rotation and in cm/s for interaural translation) are threshold estimates ± SD for head-centered yaw rotations about an earth-vertical axis and earth-horizontal interaural translation at 1 Hz with n = 75 trials for each of the 4 subjects (S1–S4).

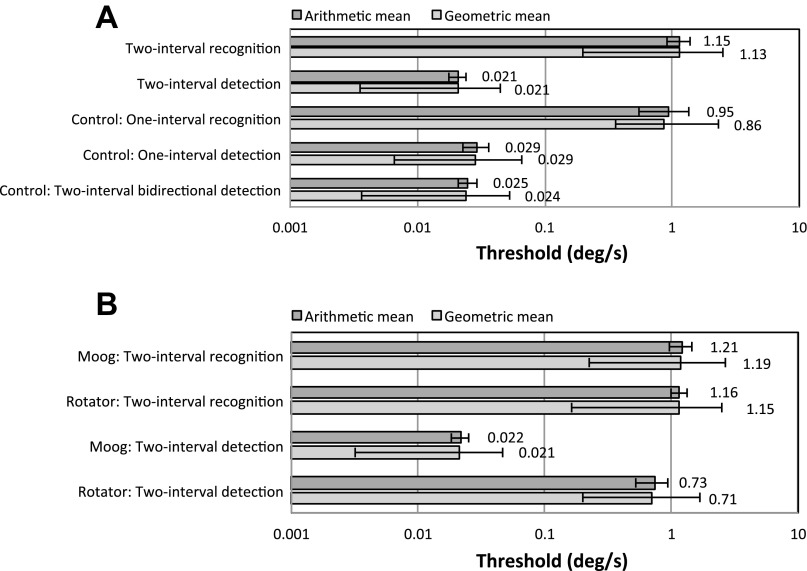

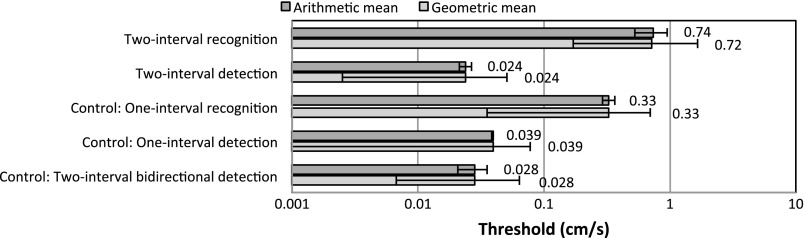

Figure 2A and Figure 3 show the arithmetic and geometric means and standard deviations of the yaw rotation and interaural translation two-interval thresholds measured with our Moog platform across subjects. Both arithmetic and geometric means are shown because both have been reported in the literature; this choice does not affect our findings. For yaw rotation and interaural translation, the two-interval detection thresholds are between 20 and 70 times smaller than the two-interval recognition thresholds. This difference is statistically significant (P < 0.05) and is substantially greater than both intra-recognition threshold and intra-detection threshold variation.
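The summary statistics can be recomputed from the values in Table 1. The short sketch below takes the tabulated (rounded) thresholds and ratios and reports arithmetic and geometric means. The variable names are chosen for this example, and small differences from the plotted means are possible because the table values are rounded.

```python
from statistics import fmean, geometric_mean

# Thresholds from Table 1, subjects S1-S4 (deg/s for yaw, cm/s for translation).
table1 = {
    "yaw rotation": {
        "recognition": [0.97, 0.98, 1.17, 1.48],
        "detection": [0.025, 0.018, 0.018, 0.022],
        "ratio": [39, 54, 65, 67],
    },
    "interaural translation": {
        "recognition": [0.67, 0.69, 0.55, 1.04],
        "detection": [0.021, 0.026, 0.024, 0.026],
        "ratio": [32, 27, 23, 40],
    },
}

for motion, data in table1.items():
    print(motion)
    for task in ("recognition", "detection"):
        values = data[task]
        print(f"  {task}: arithmetic mean {fmean(values):.3f}, "
              f"geometric mean {geometric_mean(values):.3f}")
    print(f"  mean of tabulated ratios: {fmean(data['ratio']):.2f}")
```

The means of the tabulated ratios are 56.25 for yaw rotation and 30.5 for interaural translation, consistent with the reported averages of 56 and 31.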

Fig. 2.

A: arithmetic and geometric means of yaw rotation thresholds (σ) across subjects measured on the Moog platform at 1 Hz with n = 75 trials. B: arithmetic and geometric means of yaw rotation thresholds on both the Moog platform and the low-vibration Rotator for the 3 subjects who completed control study 4.

Fig. 3.

Arithmetic and geometric means of thresholds (σ) across subjects for earth-horizontal interaural translation at 1 Hz with n = 75 trials.

Additionally, our control experiments confirmed that one-interval unidirectional detection thresholds were much smaller than one-interval recognition thresholds and that two-interval bidirectional detection thresholds were much smaller than two-interval recognition thresholds. Both findings held true for both yaw rotation and y-translation. These four findings were each statistically significant (P < 0.05).

Two-interval yaw rotation detection thresholds were not significantly different from one-interval detection thresholds (P = 0.11), nor were two-interval yaw recognition thresholds significantly different from one-interval recognition thresholds (P = 0.69). Two-interval y-translation detection thresholds were significantly different from one-interval detection thresholds (P < 0.05), as were two-interval recognition thresholds from one-interval recognition thresholds (P < 0.05). Although significant, these differences amounted to only a factor of 2–3, which is much smaller than the factors of 20–70 measured between two-interval detection and recognition thresholds.

Figure 2B shows the arithmetic and geometric means and standard deviations of the yaw rotation thresholds on both the Moog platform and the low-vibration Rotator for the three subjects who completed control study 4. The two-interval detection thresholds measured on the Moog platform are between 1 and 2 orders of magnitude smaller than the two-interval recognition thresholds as well as the two-interval detection threshold measured on the low-vibration Rotator.

Bias

For purposes of this study, we consider bias (μψ) a nuisance variable required to fit the one-interval recognition data. This nuisance variable includes both perceptual bias and response bias (García-Pérez and Alcalá-Quintana 2013). The mean bias was 0.12 ± 0.30°/s (13% of threshold) and 0.01 ± 0.10 cm/s (2% of threshold) for yaw rotation and y-translation, respectively. The root mean square of the bias was 0.29°/s (31% of threshold) and 0.08 cm/s (25% of threshold) for yaw rotation and y-translation, respectively.

Vibration

For the near-threshold level yaw rotations and interaural translations investigated, the magnitude of the three-dimensional vibration recorded during positive motion was similar to that recorded during negative motion. Therefore, these data were pooled to yield average vibration. For yaw rotations of 0.893°/s, which was near the average Moog recognition threshold, and 0.02°/s, which was near the average Moog detection threshold, the peak vibrations (taken as the average of the 11 samples centered about the peak vibration) were 0.077 m/s² and 0.005 m/s², respectively. For interaural translations of 0.32 cm/s, which was near the average recognition threshold, and 0.023 cm/s, which was near the average detection threshold, the peak vibrations were 0.060 m/s² and 0.002 m/s², respectively. As discussed below, all vibrations measured near the Moog detection thresholds are near vibration thresholds established in previous literature (Parsons and Griffin 1988).

Vibrations measured on the Rotator used in control study 4 were 0.002 m/s² for yaw rotations of 0.89°/s (near the average Moog recognition threshold, which was not significantly different from the direction-recognition and motion-detection thresholds measured on the Rotator) and were below the resolution (<0.002 m/s²) of our sensor for 0.02°/s, which was near the average Moog detection threshold.

DISCUSSION

Our results show that whole body motion thresholds measured with a standard two-interval recognition task are much greater than for a standard two-interval detection task when both tasks are performed on the same Moog motion platform. This was true for both yaw rotation and interaural translation. As discussed in more detail below, this finding suggests that subjects might utilize vibration as a motion cue during a standard detection task, which would imply that our detection paradigm was assessing a vibration threshold and not a vestibular threshold per se. These findings suggest that motion-detection tasks performed with devices that provide vibrations above the vibration detection thresholds (Parsons and Griffin 1988) must, at a minimum, acknowledge the likely vibration contribution.

Our results also show that thresholds measured with a yaw rotation direction-recognition task were not significantly different from those measured with a yaw rotation motion-detection task when both tasks were performed on a low-vibration Rotator. This finding demonstrates that both direction recognition and motion detection yield similar results when vibrations associated with motion are below vibration threshold levels.

Finally, our results showed that the low-vibration yaw rotation recognition thresholds were not significantly different from the yaw rotation recognition thresholds measured on the Moog platform with vibration. As previously conjectured on theoretic grounds (Merfeld 2011), vibration was likely not a significant factor for these yaw recognition tasks, because it is difficult for subjects to use asymmetric vibration noise as a motion cue to discriminate between leftward and rightward rotations. These findings show that vibration cues, even when varied by almost 2 orders of magnitude, did not have a measurable impact on yaw rotation thresholds measured with a direction-recognition task. This is not to say that vibration cannot impact direction-recognition thresholds. In fact, we explicitly predict that some level of vibration greater than that exhibited by the Moog platform would yield higher direction-recognition thresholds. This would mimic findings for other modalities (Barlow 1956; Carter and Henning 1971; Pelli and Farell 1999; Pollehn and Roehrig 1970; Siegel and Colburn 1983; Swets et al. 1962; Watamaniuk and Heinen 1999), which show increasing thresholds with increases in “external noise” (in our case vibrational noise) as the level of external noise rises above the “internal” (i.e., physiological) noise floor.

Thresholds

To our knowledge, this is the first spatial orientation study in which perceptual detection and recognition thresholds were measured and compared in the same set of subjects with the same motion device, same motion stimuli, and otherwise similar tasks. We found that thresholds measured with a standard two-interval detection task were between 1 and 2 orders of magnitude smaller than thresholds measured with a standard two-interval recognition task. This was true for yaw rotation, where two-interval detection thresholds were, on average, 56 times smaller than two-interval recognition thresholds. This was also true for interaural translation, where two-interval detection thresholds were, on average, 31 times smaller than two-interval recognition thresholds.

As noted, earlier reviews of the spatial orientation threshold literature (Clark 1967; Guedry 1974) reported that estimates of the average angular rotation threshold for healthy subjects varied across studies between 0.035 and 4°/s—an interval that spans just over 2 orders of magnitude. Clark and Guedry each provided several explanations for this variation including the use of different threshold criteria, the inability to provide controlled stimuli, the variation in stimuli duration and frequency, and the use of different psychophysical methods. Clark specifically mentions in his review (1967) that in some studies the “observer's task was merely to report whether he was turning right or left for preestablished angular accelerations of specified durations” while “some investigators have merely asked the observer to report the presence or absence of the stimulus in a typical ‘yes-no’ technique.”

These methodological variations make it difficult to form generalizations from the data, and furthermore, they limit our ability to identify which experimental variables inflate thresholds and which depress them. In this study we isolated one aspect of the psychophysical method, the use of a recognition task compared with a detection task, and examined its effect on spatial orientation thresholds. Our results show that recognition thresholds (right/left) are substantially greater than detection thresholds (yes/no). This suggests that it is likely that the very different thresholds provided by detection and recognition tasks contribute substantially to the large variability found in the literature.

A theoretical analysis of spatial orientation thresholds (Merfeld 2011) supported by experimental data (Seidman 2008) previously suggested that vibration cues could provide a direct cue to tell a subject that he/she is moving, which is what is reported in a detection task. Merfeld (2011) also suggested that a recognition task would only be affected by vibration cues if subjects were able to discern some asymmetric difference in the vibration for rightward motion versus the vibration for leftward motion. Such cues may be present, and are difficult to rule out completely. However, right/left directional vibration cues are always smaller than the total vibration cue available to the subject during a detection task. Finally, it is important to note that even if a direction-specific vibration cue were present, the subject would need to learn to identify the cue in the absence of feedback. Specifically, for the studies reported here, the subjects received no feedback on whether they responded correctly or incorrectly, thus making it difficult for them to identify a directional vibration cue.

That vibration and other nonvestibular sensory cues do not contribute substantially to 1-Hz direction-recognition thresholds is directly supported by data from patients having no vestibular function (Valko et al. 2012) and by the yaw recognition thresholds we report with two different motion platforms (Fig. 2B). For yaw rotation at 1 Hz, vestibular total-loss patients had recognition thresholds that were >10 times greater than normal, clearly indicating that the vestibular system normally provides the predominant sensory cue in the dark. For interaural translation at 1 Hz, vestibular total-loss patients had recognition thresholds that averaged about 5 times greater than normal, again demonstrating the predominance of vestibular signals. Similarly, our data showing indistinguishable direction-recognition thresholds on the Moog platform and our low-vibration Rotator demonstrate that vibrations, at least at the levels reported here, do not impact direction-recognition thresholds.

For detection tasks, the discussion is more complex. A previous study (Mah et al. 1989) determined linear thresholds along the x-body axis (in the horizontal plane perpendicular to the interaural axis) with a two-interval detection paradigm and a linear sled designed to minimize vibration cues. This was accomplished primarily by employing air-bearing technology to eliminate all frictional contacts and by using a ripple-free force coil to avoid disturbance problems associated with motor ripple (Mah et al. 1989). Using this sled and 1-Hz cosine accelerations, a mean 70.7% correct level of 0.28 cm/s was reported, which is approximately equivalent to a threshold (σ) value of 0.36 cm/s with our one-σ threshold definition. Another study (Dong et al. 2011) measured anterior horizontal translation thresholds with a two-interval detection paradigm and a platform that glided on “vibration-free” air-bearings (for platform details see Robinson et al. 1998). This study measured a mean 1-Hz sinusoidal acceleration 75% correct level of 0.86 cm/s, which corresponds to an equivalent one-σ threshold value of 0.90 cm/s.
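The conversions quoted above can be reproduced with a short calculation. The sketch below assumes the standard equal-variance Gaussian model for a two-interval detection task, in which the proportion correct for a stimulus of size x is Φ(x/(√2·σ)). This model and the function name `sigma_from_percent_correct` are assumptions made for illustration; they are not details taken from Mah et al. (1989) or Dong et al. (2011).

```python
from math import sqrt
from statistics import NormalDist


def sigma_from_percent_correct(level, p_correct):
    """Convert a two-interval percent-correct level to a one-sigma threshold.

    Assumes an equal-variance Gaussian model in which the proportion
    correct for a stimulus of size x is Phi(x / (sqrt(2) * sigma)).
    """
    z = NormalDist().inv_cdf(p_correct)  # z-score of the proportion correct
    return level / (sqrt(2) * z)


# Mah et al. (1989): 0.28 cm/s at the 70.7% correct level
sigma_mah = sigma_from_percent_correct(0.28, 0.707)
# Dong et al. (2011): 0.86 cm/s at the 75% correct level
sigma_dong = sigma_from_percent_correct(0.86, 0.75)

print(f"{sigma_mah:.2f} cm/s, {sigma_dong:.2f} cm/s")  # 0.36 cm/s, 0.90 cm/s
```

Under this assumed model the calculation returns approximately 0.36 and 0.90 cm/s, in agreement with the equivalent one-σ values quoted above.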

The two-interval recognition thresholds we report (0.74 cm/s) fall between these detection thresholds measured with air-bearing systems. Furthermore, the average detection thresholds reported with air-bearing systems (where vibration is presumed not to make a substantive contribution) are both within 50% of our average direction-recognition thresholds measured in different subjects. The fact that detection thresholds measured in the near absence of vibration (Dong et al. 2011; Mah et al. 1989) are roughly the same as our recognition thresholds suggests that all are assaying the same vestibular contribution and not another (e.g., vibration) contribution.

Consistent with these air-bearing studies, the yaw-rotation motion-detection thresholds we measured on a low-vibration Rotator were the same as the yaw-rotation direction-recognition thresholds we measured on both the low-vibration Rotator and the Moog platform. Again, the fact that detection thresholds measured with low vibration are roughly the same as recognition thresholds suggests that vibration at these levels does not have a substantial influence on thresholds measured with a recognition task.

These favorable comparisons of motion-detection thresholds to direction-recognition thresholds suggest two things: 1) that detection paradigms can be used to assay vestibular thresholds, if vibration cues are low enough (i.e., below the vibration threshold relevant for the task), and 2) that recognition paradigms can accurately assay vestibular thresholds even in the presence of moderate vibration cues.

Vibration Measurements

The characterization of vibration on our motion platform seems consistent with the hypothesis that our detection task assayed a vibrational threshold. The peak vibrations we measured that were available to assist with motion detection were 0.005 and 0.002 m/s² during yaw rotation and interaural translation, respectively. These vibration levels fall within the range of whole body seated vibration perception thresholds reported previously (Parsons and Griffin 1988), which range between 0.001 and 0.05 m/s² for frequencies between 2 and 63 Hz.

This sensitivity to vibration cues forces us to think carefully about providing artificial vibration cues, especially since no one knows the salient vibrational cue. While the data strongly suggest that vibration cues are contributing, especially for detection thresholds, we simply do not know exactly what vibration cues are being used, but vibration of the head, torso, and behind are likely contributors. Therefore, it is not straightforward to measure vibration at any single point (or even a combination of points) and use that measurement to lead to a definitive conclusion that vibration cues are or are not contributing to the measured detection threshold.

Therefore, we will consider the application of artificial “vibration” with great caution, especially since the “realism” of the vibrational cues could impact the influence of the artificial vibration. In an earlier threshold study (Zupan and Merfeld 2008), we provided such artificial vibration cues on trials in which no motion was present, to encourage subjects to consider that motion might have occurred even when it had not; others have considered similar techniques. Since the findings reported here suggest that humans may be very sensitive to vibration cues and are able to use nonvestibular vibration cues to determine whether they are moving or not, caution is warranted when interpreting data that rely heavily on the application of artificial vibration cues. This may be overly conservative, but we prefer to err on the side of caution until we have a better understanding of how vibration cues influence self-motion thresholds.

That our measured recognition thresholds were much higher than our measured detection thresholds demonstrates that we can infer that we are moving without knowing which way we are moving. In this sense, recognition (did I move to the left or right?) is more complex and requires more information than detection (did I move?).

Nomenclature

If one were interested only in a vestibular sense, vibration would be considered a confounding variable. However, given the multisensory nature of our sense of spatial orientation, where vibration can be considered a normal contributor to our sense of motion (Seidman 2008), it is not clear that vibration should be classified as a confounding variable for spatial orientation tasks. In the end, given the clarity of our findings, the name given to the contribution of the vibration variable is less important than the factual finding summarized in the title. Nonetheless, we consider vibration an uncontrolled variable that is particularly relevant to detection tasks but is often unreported (in part because we do not know the pertinent canonical vibration variable) rather than a confounding variable. Appendix B discusses recognition and detection nomenclature.

Fitted Bias Estimates

We provide but do not interpret the mean of the fitted bias values for one-interval recognition tasks, because this parameter does not directly inform us regarding perception. Therefore, we simply note that bias was always much less than σ when estimated with the one-interval recognition task.

More specifically, studies have shown that the bias fit parameter includes both perceptual and decisional bias (e.g., García-Pérez and Alcalá-Quintana 2011, 2013; Morgan et al. 2012), which cannot be easily separated. As one example, experimental investigations (Morgan et al. 2012) have shown that decisional biases can yield shifts in the psychometric function without substantively affecting the slope or spread, which we report here as threshold σ. This shows that any impacts of criterion biases on σ are not large. Therefore, given the large differences we report here between detection thresholds and recognition thresholds, there is no reason to believe that any such theoretical influence of decisional bias affects our reported threshold findings in any substantive way, especially since any vestibular bias effects would be expected to cancel one another in the two-interval tasks.

Tasks

Historically, unless one is trying to establish the receiver operating characteristic (ROC) curve (Green and Swets 1966; Macmillan and Creelman 2005), two-interval detection has generally been preferred over one-interval detection because 1) two-interval detection is less susceptible to criterion bias (i.e., there is less reason for the subject to prefer one order of stimuli to the other) (Green and Swets 1966; Macmillan and Creelman 2005) and 2) the decision boundary (i.e., the boundary each subject draws that directly partitions the perceptual space into two sets, one for each class) is better defined on each trial for a two-interval detection task. In fact, two-interval detection tasks were developed, in part, because one-interval data demonstrated the arbitrary nature of the decision boundary for one-interval detection tasks. See Merfeld (2011) for further details on decision boundaries in the context of whole body motion thresholds.

More recently, three-response tasks have been shown to yield improved fits (García-Pérez and Alcalá-Quintana 2011, 2013) for some psychometric models that do not include an equivalent to vestibular bias (Merfeld 2011). Even with experimental evidence of bias (e.g., Crane 2012a, 2012b), given the stark differences between detection and recognition that we obtain and report here, we chose not to pursue a three-response task.

Summary

Our results could incorrectly be interpreted as a condemnation of the Moog motion platform. In fact, as noted above, the similarity of our recognition thresholds measured with a Moog platform to 1) detection thresholds measured with air-bearing technologies and 2) recognition thresholds measured on another motion device with lower vibration shows that the vibrations present on a Moog platform do not have a predominant influence on recognition thresholds. On the other hand, there is a substantial difference between detection thresholds measured with a Moog platform and 1) detection thresholds measured with air-bearing technologies and 2) recognition thresholds measured with a Moog platform.

Our findings confirm earlier theoretical analyses (Merfeld 2011) that suggested that recognition tasks are more immune to the influence of vibration cues than detection tasks. Specifically, studies of vestibular loss patients (Valko et al. 2012), alongside the similarity of our recognition thresholds to detection thresholds reported for low-vibration and air-bearing devices (Dong et al. 2011; Mah et al. 1989), strongly suggest that recognition tasks primarily assay vestibular sensation and that detection tasks also assay vestibular sensation when vibrational cues are minimized by the use of air-bearings and other vibration-reduction technologies.

GRANTS

This research was supported by National Institute on Deafness and Other Communication Disorders Grant DC-04158.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

Author contributions: S.E.C. and D.M.M. conception and design of research; S.E.C. and F.K. performed experiments; S.E.C. analyzed data; S.E.C., F.K., and D.M.M. interpreted results of experiments; S.E.C. and D.M.M. prepared figures; S.E.C. and D.M.M. drafted manuscript; S.E.C., F.K., and D.M.M. edited and revised manuscript; S.E.C., F.K., and D.M.M. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank Miguel A. García-Pérez for reviewing a draft of this manuscript. We also thank Dava Newman and Rita Domingues from the Massachusetts Institute of Technology Man Vehicle Laboratory for lending us inertial measurement units.

APPENDIX A

In this appendix we derive the psychometric functions used to fit the data for each task. We assume that a subject's perception of a motion stimulus with peak velocity amplitude x can be modeled by a Gaussian probability density function such that

$$f(\hat{x}' \mid x) = \frac{1}{\sigma' \sqrt{2\pi}} \exp\left[-\frac{\left(\hat{x}' - \mu'(x)\right)^2}{2\sigma'^2}\right]$$

where x̂′ is a random variable that represents the subject's perceived motion over the previous trial, σ′ represents the standard deviation of the perceptual noise in the system, and μ′(x) is the mean psychophysical function that maps physical stimuli, x, to a perceptual variable. To first order, the mean spatial orientation psychophysical function can be modeled as

$$\mu'(x) = \mu'_0 + \kappa' x$$

where μ′0 represents the subject's perceived motion when the stimulus is the null stimulus and κ′ represents linear perceptual scaling of the stimulus. Substitution yields

$$f(\hat{x}' \mid x) = \frac{1}{\sigma' \sqrt{2\pi}} \exp\left[-\frac{\left(\hat{x}' - \mu'_0 - \kappa' x\right)^2}{2\sigma'^2}\right]$$

Since discrimination data can only help estimate the ratios μ′0/σ′ and κ′/σ′, we normalize by κ′, yielding

$$f(\hat{x} \mid x) = \frac{1}{\sigma \sqrt{2\pi}} \exp\left[-\frac{\left(\hat{x} - \mu_0 - x\right)^2}{2\sigma^2}\right]$$

where σ = σ′/κ′, μ0 = μ′0/κ′, and x̂ = x̂′/κ′. One can consider this normalization as mapping the perceptual space near threshold with a slope of 1 (κ′ = 1) or accept it as a convenient parameterization that scales perceived motion (x̂′), perceptual noise (σ′), and perceptual bias to have units of physical stimuli. Earlier analyses (Merfeld 2011) dealt with the normalized quantities, where the noise (σ) and vestibular bias (μ0) were both defined in units of the physical stimuli.

Using this model we see that, for a one-interval direction-recognition task, the subject's probability of perceiving that a given motion stimulus is positive is modeled by (Macmillan and Creelman 2005; Merfeld 2011)

$$P(\hat{x} > 0 \mid x) = \Phi\left(\frac{x + \mu_0}{\sigma}\right)$$

where Φ is the cumulative standard Gaussian distribution. This probability as a function of stimulus level (x) defines the theoretic psychometric function.
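As a numerical sketch of this step, the code below assumes that the Gaussian model implies a probability of a positive percept of Φ((x + μ0)/σ), and checks that the same probability is obtained from the unnormalized parameters as Φ((μ′0 + κ′x)/σ′). The function names and parameter values are hypothetical and chosen only for illustration.

```python
from statistics import NormalDist

PHI = NormalDist().cdf  # cumulative standard Gaussian distribution


def p_positive(x, mu0, sigma):
    """Probability of perceiving stimulus x as positive (normalized form)."""
    return PHI((x + mu0) / sigma)


def p_positive_unnormalized(x, mu0_prime, sigma_prime, kappa_prime):
    """Same probability written with the unnormalized (primed) parameters."""
    return PHI((mu0_prime + kappa_prime * x) / sigma_prime)


# Hypothetical parameter values, not taken from the study.
kappa_prime, sigma_prime, mu0_prime = 2.0, 1.5, 0.3
sigma = sigma_prime / kappa_prime  # sigma = sigma' / kappa'
mu0 = mu0_prime / kappa_prime      # mu0 = mu0' / kappa'

for x in (-1.0, 0.0, 0.5):
    a = p_positive(x, mu0, sigma)
    b = p_positive_unnormalized(x, mu0_prime, sigma_prime, kappa_prime)
    print(f"x={x:+.1f}  normalized={a:.4f}  unnormalized={b:.4f}")
```

The two columns agree for every stimulus level, which illustrates why response data of this kind constrain only the ratios of the primed parameters and not κ′ itself.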

In direct contrast to the perceptual bias (μ0) defined above, we refer to the shift of the psychometric function as “psychometric bias” (μψ). The location of the point of subjective equality (PSE), the stimulus level at which the subject is equally likely to respond left or right, defines psychometric bias. Equivalently, this is the stimulus level that yields a perception of no motion. To help minimize potential confusion, we note that when response bias (García-Pérez and Alcalá-Quintana 2013) equals zero, psychometric bias and perceptual bias have equal magnitudes but opposite signs, μψ = −μ0 (Haburcakova et al. 2012). While it did not label the psychometric function shift “psychometric bias,” Fig. 3 in Merfeld (2011) demonstrates these opposing signs and shows how this arises. Substituting μψ = −μ0, this yields the following equation that defines the psychometric function:

$$P(\hat{x} > 0 \mid x) = \Phi\left(\frac{x - \mu_\psi}{\sigma}\right)$$
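The role of the two fit parameters can be illustrated numerically. The sketch below assumes the psychometric function takes the form Φ((x − μψ)/σ), which follows from substituting μψ = −μ0 into Φ((x + μ0)/σ). The function name `psychometric` and the parameter values are hypothetical.

```python
from statistics import NormalDist

PHI = NormalDist().cdf  # cumulative standard Gaussian distribution


def psychometric(x, mu_psi, sigma):
    """Probability of a positive (e.g., rightward) response at stimulus x."""
    return PHI((x - mu_psi) / sigma)


# Hypothetical fit parameters in units of the physical stimulus.
mu_psi, sigma = 0.2, 0.8

# At the point of subjective equality the two responses are equally likely.
print(psychometric(mu_psi, mu_psi, sigma))  # 0.5

# One sigma above the PSE, about 84% of responses are positive.
print(round(psychometric(mu_psi + sigma, mu_psi, sigma), 4))  # 0.8413
```

In this parameterization a change in μψ shifts the curve along the stimulus axis without changing its spread, whereas σ, reported here as the threshold, sets the spread.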

APPENDIX B

During the review process, we were asked to elaborate on our use of terminology since consistent terminology is not always used across all disciplines. This appendix has been added in response to this suggestion. Our recognition task requires that subjects classify and/or name the motion (e.g., “left” vs. “right”). At first glance, it may not be obvious that this matches the way “recognition” is used in the visual literature, but we see substantial overlap in how the detection/recognition terminology is used. For example, Norton et al. (2006) in their visual psychophysics book define a detection task as asking “a subject whether he or she does or does not see something”; this exactly matches our usage, which we adopted after reading other literature (Swets 1973, 1996; Macmillan and Creelman 2005). For example, Swets (1996) wrote that “[t]he task of detection is to determine whether a stimulus of a specified category (call it category A) is present or not.”

Furthermore, Norton et al. (2006) define a recognition task to be when the subject is asked “to name the object.” This seems very near our definition of recognition that requires subjects to classify/name the motion to be leftward (category A) or rightward (category B), which we adopted from earlier literature (Swets 1973, 1996; Macmillan and Creelman 2005). For example, Swets (1996) wrote that “[t]he task of recognition is to determine whether a stimulus known to be present, as a signal, is a sample from signal category A or signal category B.”

REFERENCES

- Barlow HB. Retinal noise and absolute threshold. J Opt Soc Am 46: 634–639, 1956.

- Barnett-Cowan M, Harris LR. Perceived timing of vestibular stimulation relative to touch, light and sound. Exp Brain Res 198: 221–231, 2009.

- Benson AJ, Hutt E, Brown S. Thresholds for the perception of whole body angular movement about a vertical axis. Aviat Space Environ Med 60: 205–213, 1989.

- Benson AJ, Spencer MB, Stott JR. Thresholds for the detection of the direction of whole-body, linear movement in the horizontal plane. Aviat Space Environ Med 57: 1088–1096, 1986.

- Carter BE, Henning GB. The detection of gratings in narrow-band visual noise. J Physiol 219: 355–365, 1971.

- Chaudhuri SE, Merfeld DM. Signal detection theory and vestibular perception. III. Estimating unbiased fit parameters for psychometric functions. Exp Brain Res 225: 133–146, 2013.

- Clark B. Thresholds for the perception of angular acceleration in man. Aerospace Med 38: 443–450, 1967.

- Clark B, Stewart JD. Comparison of three methods to determine thresholds for perception of angular acceleration. Am J Psychol 81: 207–216, 1968.

- Crane BT. Fore-aft translation aftereffects. Exp Brain Res 219: 477–487, 2012a.

- Crane BT. Roll aftereffects: influence of tilt and inter-stimulus interval. Exp Brain Res 223: 89–98, 2012b.

- De Vrijer M, Medendorp WP, Van Gisbergen JA. Shared computational mechanism for tilt compensation accounts for biased verticality percepts in motion and pattern vision. J Neurophysiol 99: 915–930, 2008.

- Dong X, Robinson CJ, Fulk G. Psychophysical detection thresholds in anterior horizontal translations of seated and standing blindfolded subjects. Conf Proc IEEE Eng Med Biol Soc 2011: 4112–4115, 2011.

- Doty RL. Effect of duration of stimulus presentation on the angular acceleration threshold. J Exp Psychol 80: 317–321, 1969.

- García-Pérez MA, Alcalá-Quintana R. Interval bias in 2AFC detection tasks: sorting out the artifacts. Atten Percept Psychophys 73: 2332–2352, 2011.

- García-Pérez MA, Alcalá-Quintana R. Shifts of the psychometric function: distinguishing bias from perceptual effects. Q J Exp Psychol (Hove) 66: 319–337, 2013.

- Goldberg JM, Wilson VJ, Cullen KE, Angelaki DE, Broussard D, Büttner-Ennever J, Fukushima K, Minor LB. The Vestibular System. A Sixth Sense. New York: Oxford Univ. Press, 2011.

- Grabherr L, Nicoucar K, Mast FW, Merfeld DM. Vestibular thresholds for yaw rotation about an earth-vertical axis as a function of frequency. Exp Brain Res 186: 677–681, 2008.

- Green DM, Swets JA. Signal Detection Theory and Psychophysics. New York: Wiley, 1966.

- Groen JJ, Jongkees LB. The threshold of angular acceleration perception. J Physiol 107: 1–7, 1948.

- Gu Y, DeAngelis GC, Angelaki DE. A functional link between area MSTd and heading perception based on vestibular signals. Nat Neurosci 10: 1038–1047, 2007.

- Guedry F. Psychophysics of vestibular sensation. In: Handbook of Sensory Physiology, edited by Kornhuber HH. New York: Springer, vol. VI, 1974.

- Haburcakova C, Lewis RF, Merfeld DM. Frequency dependence of vestibuloocular reflex thresholds. J Neurophysiol 107: 973–983, 2012.

- Hol K, Treue S. Different populations of neurons contribute to the detection and discrimination of visual motion. Vision Res 41: 685–689, 2001.

- Janssen M, Lauvenberg M, van der Ven W, Bloebaum T, Kingma H. Perception threshold for tilt. Otol Neurotol 32: 818–825, 2011.

- Leek MR. Adaptive procedures in psychophysical research. Percept Psychophys 63: 1279–1292, 2001.

- Macmillan NA, Creelman CD. Detection Theory: a User's Guide. Mahwah, NJ: Erlbaum, 2005.

- MacNeilage PR, Banks MS, DeAngelis GC, Angelaki DE. Vestibular heading discrimination and sensitivity to linear acceleration in head and world coordinates. J Neurosci 30: 9084–9094, 2010.

- Mah RW, Young LR, Steele CR, Schubert ED. Threshold perception of whole-body motion to linear sinusoidal stimulation. In: AIAA Flight Simulation Technologies Conference and Exhibit. Boston, MA: AIAA, vol. AIAA-89-3273, 1989.

- Mallery RM, Olomu OU, Uchanski RM, Militchin VA, Hullar TE. Human discrimination of rotational velocities. Exp Brain Res 204: 11–20, 2010.

- McCullagh P, Nelder J. Generalized Linear Models (2nd ed.). Boca Raton, FL: Chapman and Hall, 1989.

- Merfeld DM. Signal detection theory and vestibular thresholds. I. Basic theory and practical considerations. Exp Brain Res 210: 389–405, 2011.

- Merfeld DM. Spatial orientation and the vestibular system. In: Sensation and Perception (3rd ed.). Sunderland, MA: Sinauer, chapt. 12, 2012.

- Morgan M, Dillenburger B, Raphael S, Solomon JA. Observers can voluntarily shift their psychometric functions without losing sensitivity. Atten Percept Psychophys 74: 185–193, 2012.

- Nakayama K, Silverman GH. Detection and discrimination of sinusoidal grating displacements. J Opt Soc Am A 2: 267–274, 1985.

- Naseri AR, Grant PR. Human discrimination of translational accelerations. Exp Brain Res 218: 455–464, 2012.

- Norton T, Corliss D, Bailey J. The Psychophysical Measurement of Visual Function. Albuquerque, NM: Richmond Products, 2002.

- Ormsby C. Model of Human Dynamic Orientation (PhD thesis). Cambridge, MA: MIT, 1974.

- Parsons KC, Griffin MJ. Whole-body vibration perception thresholds. J Sound Vib 121: 237–258, 1988.

- Pelli DG, Farell B. Why use noise? J Opt Soc Am A 16: 647–653, 1999.

- Pollehn H, Roehrig H. Effect of noise on the modulation transfer function of the visual channel. J Opt Soc Am 60: 842–848, 1970.

- Robinson CJ, Purucker MC, Faulkner LW. Design, control, and characterization of a sliding linear investigative platform for analyzing lower limb stability (SLIP-Falls). IEEE Trans Rehabil Eng 6: 334–350, 1998.

- Roditi RE, Crane BT. Directional asymmetries and age effects in human self-motion perception. J Assoc Res Otolaryngol 13: 381–401, 2012.

- Sadeghi SG, Chacron MJ, Taylor MC, Cullen KE. Neural variability, detection thresholds, and information transmission in the vestibular system. J Neurosci 27: 771–781, 2007.

- Seidman SH. Translational motion perception and vestibuloocular responses in the absence of non-inertial cues. Exp Brain Res 184: 13–29, 2008.

- Siegel RA, Colburn HS. Internal and external noise in binaural detection. Hear Res 11: 117–123, 1983.

- Soyka F, Giordano PR, Barnett-Cowan M, Bülthoff HH. Modeling direction discrimination thresholds for yaw rotations around an earth-vertical axis for arbitrary motion profiles. Exp Brain Res 220: 89–99, 2012.

- Soyka F, Robuffo GP, Beykirch K, Bülthoff HH. Predicting direction detection thresholds for arbitrary translational acceleration profiles in the horizontal plane. Exp Brain Res 209: 95–107, 2011.

- Swets JA. The relative operating characteristic in psychology. Science 182: 990–1000, 1973.

- Swets JA. Signal Detection Theory and ROC Analysis in Psychology and Diagnostics: Collected Papers. Mahwah, NJ: Erlbaum, 1996.

- Swets JA, Green DM, Tanner WP Jr. On the width of critical bands. J Acoust Soc Am 34: 108–113, 1962.

- Taylor MM, Creelman CD. PEST: efficient estimates on probability functions. J Acoust Soc Am 41: 782–787, 1967.

- Treutwein B. Adaptive psychophysical procedures. Vision Res 35: 2503–2522, 1995.

- Valko Y, Lim K, Priesol A, Lewis R, Merfeld DM. Human perceptual direction-recognition thresholds as a function of frequency (Abstract). Assoc Res Otolaryngol Abstr 2012: 200, 2012.

- Watamaniuk SN, Heinen SJ. Human smooth pursuit direction discrimination. Vision Res 39: 59–70, 1999.

- Zupan LH, Merfeld DM. Interaural self-motion linear velocity thresholds are shifted by roll vection. Exp Brain Res 191: 505–511, 2008.