Abstract

Purposes

This paper reports the development and evaluation of a perceived cognitive function (pedsPCF) item bank reported by parents of the pediatric US general population.

Methods

Based on feedback from clinicians, parents, and children, we developed a scale sampling concerns related to children’s cognitive functioning. We administered the scale to 1,409 parents of children aged 7–17 years; 319 of these children had a neurological diagnosis. Dimensionality of the pedsPCF was evaluated via factor analyses, and its clinical utility was studied by comparing parent ratings across patient groups and symptom clusters defined by the Child Behavior Checklist (CBCL).

Results

Forty-four of 45 items met criteria for unidimensionality. The pedsPCF significantly differentiated samples defined by medication use, repeated grades, special education status, neurologic diagnosis, and relevant symptom clusters with large effect sizes (>0.8). It predicted children’s symptom status with correct classification rates ranging from 79% to 89%.

Conclusions

We have provided empirical support for the unidimensionality of the pedsPCF item bank and evidence for its potential clinical utility. The pedsPCF is a promising measurement tool to screen children for further comprehensive cognitive tests.

Keywords: Perceived cognitive function, Children, Brain tumor, Neuro-oncology, Item bank

Introduction

Children with neurological disorders such as brain tumors and epilepsy commonly experience decrements in neurocognitive function [1, 2], which are traditionally assessed via neuropsychological testing. However, such testing is time- and resource-intensive, and practice effects can compromise its validity when it is readministered to monitor change over time. The ecological validity of office-based neuropsychological testing has also been questioned [3–6]. An alternative way to sample neurocognitive functioning is simply to ask a relative such as a parent for their perceptions of their child’s cognitive functioning (PCF). This method offers the advantage of a contextual perspective and improved ecological validity. The relationship between perceived and objectively measured cognitive function has been studied in populations such as geriatrics [7–10], multiple sclerosis [11], and epilepsy [12–14], with mixed results.

Recent studies have supported the validity of PCF ratings in relation to neuroimaging findings. For example, de Groot et al. [15] suggested that PCF might serve as an early indicator of white matter lesion progression and imminent cognitive decline. Ferguson et al. [16] studied a pair of monozygotic twins with similar neuropsychological test scores, and found the twin with poorer PCF to have more white matter hyperintensities on MRI and more diffuse brain activation during working memory processing than the other twin. Mahone et al. [17] found parent ratings of working memory in typically developing children to be significantly correlated with frontal gray matter volumes, while their performance on an objective working memory task was not.

Such results suggest that PCF may not only reflect current function but that it may predict cognitive decline before it can be detected by objective neuropsychological measures [7]. It is therefore worthwhile to consider the assessment of PCF in children, particularly those at risk for atypical cognitive development due to disease or treatment. We have developed a pediatric PCF item bank to address the need for a comprehensive, psychometrically sound, user-friendly tool for populations such as children with brain tumors.

An item bank is a group of questions designed to assess the full continuum of a construct [18, 19]. As long as the items are calibrated on the continuum, raters can respond to different sets of items at different points in time and those ratings can be compared directly, making it possible to monitor changes over time while minimizing practice effects due to repetitive administration of the same items. This method is particularly useful for brain tumor survivors, as their cognitive problems may not surface for years after completion of treatment, necessitating long-term monitoring. The brief-yet-precise format of PCF item bank applications permitted by modern measurement theory makes it an ideal tool for screening in busy clinical settings, to facilitate prompt screening referral for neuropsychological evaluation.

The primary objective of the present paper is to report the development of the pedsPCF item bank and evaluate the extent to which the cognitive complaints sampled by its questions conform to a unidimensional model, which is required for further item calibrations and development of a computerized adaptive testing platform. The first step in such a process is to ensure that items in the bank are comprehensive and correlate with a common underlying single factor. In addition, we also explore the predictive validity of the pedsPCF item bank in samples of children at risk for neurocognitive dysfunction due to reported neurological conditions.

Methods

This study was approved by Institutional Review Boards at all participating sites.

PedsPCF item development

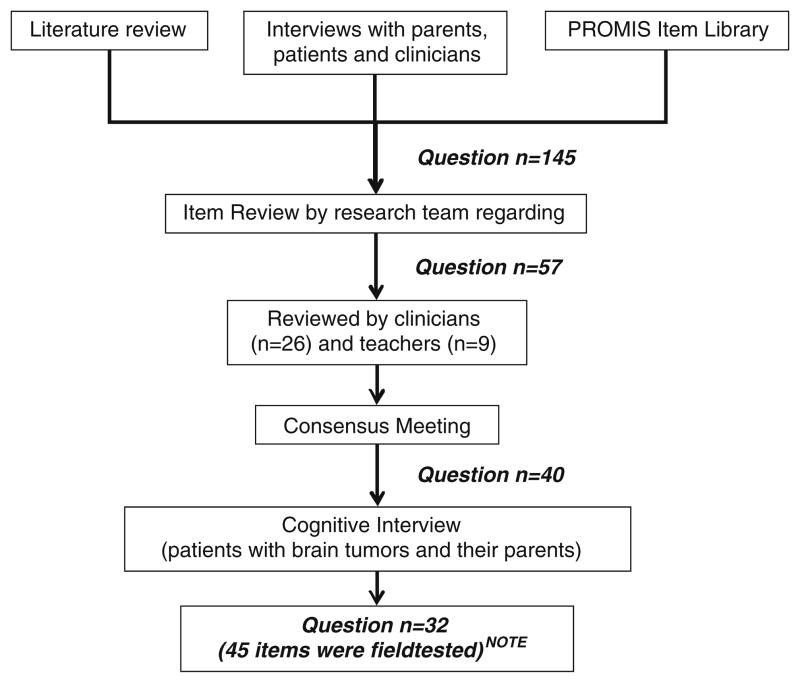

An initial item pool of 145 questions was generated after reviewing (1) existing instruments, (2) the item library of the NIH Patient Reported Outcomes Measurement and Information System initiative (PROMIS, www.nihpromis.org), and (3) interview data from pediatric patients, their parents, teachers, and clinicians with experience treating children with neurological conditions (Fig. 1). For the patient and parent interviews, patients and one of their parents were recruited from the Falk Brain Tumor Center at Children’s Memorial Hospital in Chicago during follow-up appointments between April and August 2002 (patients n=20 and parents n=20) and between July and September 2006 (patients n=20). There were no overlapping participants between these two sets of interviews. Patient inclusion criteria were: (1) at least 1 year post-treatment, (2) aged 7–21 years, (3) cognitively capable of expressing their concerns in their own words, and (4) able to understand and speak English. The pediatric brain tumor population was selected as an initial clinical context for item development because the effects of brain tumors and their treatment upon neurocognitive functioning have been extensively studied [20].

Fig. 1.

Procedures to develop the pedsPCF item pool

NOTE: Of these 32 questions, 13 were tested using both intensity and frequency rating scales. Therefore, 45 items were included in the field test.

Members of the development team (JSL, FZ, ZB) independently rated whether the content of each item was appropriate for self and parent report for children ages 7–12, 13–17, or 18–21 years. Items with overlapping content were reviewed and redundant items removed. The entire development team (JSL, FZ, ZB, DC) then discussed the results of the above ratings and 57 questions were retained. A separate team of content reviewers was invited to read the 57 items via the web, e-mail, or fax, offering comments about appropriateness of item content, wording, and/or content gaps. The content reviewers consisted of 23 neuro-oncology physicians, one neuro-oncology nurse, one neuropsychologist, one speech therapist, and nine teachers who were experienced with students with medical conditions or other special needs (17.2 years of mean professional experience).

A consensus meeting was then held with 13 consulting clinicians/researchers and the development team members (none of whom had been members of the content reviewer team noted above) to review the content reviewers’ comments. At the conclusion of the meeting, 23 items were retained without change, 13 were modified, four new items were generated, and 17 items were deleted, resulting in a 40-question set.

We then evaluated readability and comprehensiveness of the 40 questions by conducting cognitive interviews with 27 children (ages 7–21) who were at least 1 year post-treatment at the Falk Brain Tumor Center, Children’s Memorial Hospital in Chicago. Results were summarized and discussed by the development team, and 32 questions were retained, some of which were modified based on patients’ feedback. All items were reviewed individually for content to determine whether an intensity (from “not at all” to “very much”) or frequency (from “none of the time” to “all of the time”) rating framework was more appropriate for that item. The study team ultimately decided to present 13 of the questions within both of the rating frameworks. Results and conclusions were reviewed and agreed upon by consensus.

In total, 45 items (19 unique items plus 13 item pairs, with the frequency and intensity versions of each pair counted as individual items) were included in the pedsPCF field test. Based on expert review, these items were presumed to tap the content areas of attention, concentration, executive function, language, spatial orientation, visuospatial ability, memory, personal orientation, and processing speed. Though parent-report and self-report versions of the 45-item survey were prepared, the focus of the current paper is the parent-report PCF item set.

Participants

A total of 1,409 parents drawn from the US general population were recruited (51.8% with children aged 7–12 years; 48.2% with adolescents aged 13–17 years) by an internet survey company, Greenfield Online Inc. (www.greenfield.com). Greenfield Online sent e-mails to invite potential participants from their database to participate in the present study. Potential participants were first screened by Greenfield via the internet to ensure their eligibility (i.e., English-speaking, parents of children aged 7–17 years), after which parent-respondents completed a survey of demographic information, the pedsPCF items, and the Child Behavior Checklist (CBCL [21]). Participating parents completed the items with reference to an eligible child in the household who was available and who agreed to participate. Following parent responses, the child completed a self-report version of the pedsPCF items (data not reported here). Procedures for data quality control are described at http://www.greenfield-ciaosurveys.com/html/qualityassurance.htm. Recruitment was terminated when the pre-set goal was reached.

The mean age of children/adolescents rated was 12.3 years (SD=3.0), with 55.5% male and 83% white. Three hundred nineteen (22.6%) parents indicated that they had been told by a physician or a health professional that their child had one of the following conditions: epilepsy (15.0%), traumatic brain injury (3.4%), cerebral palsy (2.6%), or brain tumor (1.6%). Tables 1 and 2 provide detailed demographic and clinical information about parent respondents (Table 1) and the children/adolescents they rated (Table 2).

Table 1.

Sample demographic and clinical information—parent information (n=1,409)

| Variable | Category | Percentage |
|---|---|---|
| Age | Mean=39.95 (SD=8.34); range: 25–65 years | |
| Hispanic origin | % Yes | 16.5 |
| Race^a | White | 83.0 |
| | African American | 8.9 |
| | Asian | 3.4 |
| Marital status | Never married | 7.7 |
| | Married | 68.0 |
| | Live w/partner | 11.4 |
| | Separated | 2.6 |
| | Divorced | 8.9 |
| | Widowed | 1.4 |
| Relationship to child^b | Father | 40.0 |
| | Mother | 60.0 |
| Education (father n=563)^b | Less than high school | 2.5 |
| | Some high school | 4.8 |
| | High school grad | 17.9 |
| | Some college | 31.1 |
| | College degree | 33.6 |
| | Advanced degree | 10.1 |
| Education (mother n=846)^b | Less than high school | 3.1 |
| | Some high school | 5.8 |
| | High school grad | 28.4 |
| | Some college | 37.6 |
| | College degree | 19.7 |
| | Advanced degree | 5.4 |

^a Not mutually exclusive categories

^b Only one parent participated in the study, and only that parent provided education information

Table 2.

Sample demographic and clinical informationb—child/adolescent information

| Variable | Total sample | Neurological diagnosis^a: No | Neurological diagnosis^a: Yes | p value^b |
|---|---|---|---|---|
| N | 1,409 | 1,090 | 319 | |
| Age (mean, SD; years) | 12.3 (3.0) | 12.42 (3.0) | 12.03 (3.9) | 0.039 |
| Days missed school in the past month (mean, SD; days) | 1.98 (3.2) | 1.44 (2.4) | 3.90 (4.7) | <0.001 |
| Gender (%) | | | | |
| Male | 56.8 | 53.7 | 67.4 | <0.001 |
| Have received mental health services (%)^c | | | | |
| Yes | 29.5 | 21.0 | 58.3 | <0.001 |
| No | 70.6 | 79.0 | 41.7 | |
| Have been diagnosed (%) | | | | |
| ADHD/ADD | 26.4 | 21.4 | 43.6 | <0.001 |
| Depression | 16.0 | 9.6 | 37.6 | <0.001 |
| Anxiety | 15.8 | 9.9 | 35.7 | <0.001 |
| Oppositional defiant disorder | 6.0 | 3.9 | 13.2 | <0.001 |
| Conduct disorder | 6.3 | 3.5 | 16.0 | <0.001 |
| Given medication for attentional difficulties (%) | 29.2 | 21.4 | 55.8 | <0.001 |
| Given medication for other mental health problem (in %) | 18.5 | 10.0 | 47.6 | <0.001 |
| Ever repeated a grade (% yes) | 16.3 | 11.3 | 32.3 | <0.001 |
| Quality of life in general (rated by parents; in %) | | | | <0.001 |
| Poor | 0.8 | 0.4 | 2.2 | |
| Fair | 9.9 | 5.3 | 25.7 | |
| Good | 23.0 | 21.8 | 27.0 | |
| Very good | 42.2 | 45.1 | 32.6 | |
| Excellent | 24.1 | 27.4 | 12.5 | |
| CBCL attentional problems (in %) | | | | |
| Normal | 77.6 | 85.1 | 52.4 | <0.001 |
| Borderline clinical | 10.2 | 7.5 | 19.4 | |
| Clinical | 12.1 | 7.4 | 28.2 | |
| CBCL social problems (in %) | | | | |
| Normal | 72.0 | 80.1 | 44.2 | <0.001 |
| Borderline clinical | 9.2 | 7.5 | 15.1 | |
| Clinical | 18.8 | 12.4 | 40.8 | |
| CBCL thought problems (in %) | | | | |
| Normal | 72.5 | 77.8 | 54.2 | <0.001 |
| Borderline clinical | 4.7 | 3.5 | 8.8 | |
| Clinical | 22.9 | 18.7 | 37.0 | |

^a Specifically, epilepsy (15.0%), traumatic brain injury (3.4%), cerebral palsy (2.6%), and brain tumor (1.6%)

^b Significance tests between children described as with and without a neurological diagnosis

^c Types of services (percentages among all respondents who had received mental health services, among those with neurological conditions, and among those without neurological conditions, respectively): social services through school (51.6%, 61.8%, 43.2%), clinic/outpatient counseling (50.7%, 60.2%, 79.0%), day hospital or partial hospitalization (14.0%, 22.6%, 7.0%), hospitalization (15.2%, 21.5%, 10.0%)

Analysis

We evaluated the unidimensionality of items using factor analyses. Data were randomly divided into two datasets using SAS v9.1 (SAS Institute; Cary, NC). We performed an exploratory factor analysis (EFA) on dataset 1 (n=703) to evaluate the number of potential factors among pedsPCF items, followed by a bi-factor analysis [22, 23], a procedure related to confirmatory factor analysis (CFA), on dataset 2 (n=706) to confirm the EFA results. Bi-factor analysis was conducted using MPlus 5.2 (Muthen & Muthen; Los Angeles, CA). In EFA, we determined the number of factors using the following criteria: (1) number of factors with eigenvalue >1, (2) review of the scree plot (i.e., number of factors before the break in the scree plot), and (3) number of factors that explained >5% of variance. A promax rotation was then used to examine the association among factors by examining their loadings (criterion: >0.4) and inter-factor correlations.
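The random split and the two eigenvalue-based retention criteria can be sketched in Python with NumPy. This is only an illustration on simulated data, not the SAS procedure used in the study: the function name `factor_retention_summary`, the simulated one-factor responses, the sample size, and the seed are all assumptions. The scree-plot criterion is a visual judgment and is not automated here.

```python
import numpy as np

def factor_retention_summary(responses, variance_cutoff=0.05):
    """Summarize eigenvalue-based retention criteria.

    responses: 2-D array, rows = respondents, columns = items.
    """
    corr = np.corrcoef(responses, rowvar=False)    # item-by-item correlations
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]   # sorted largest first
    proportion = eigenvalues / eigenvalues.sum()   # share of total variance
    return {
        "eigenvalues": eigenvalues,
        # Criterion 1: number of factors with eigenvalue > 1
        "n_eigen_gt_1": int((eigenvalues > 1.0).sum()),
        # Criterion 3: number of factors explaining more than the cutoff
        "n_var_gt_cutoff": int((proportion > variance_cutoff).sum()),
    }

# Hypothetical data: 700 respondents, 10 items driven by a single trait.
rng = np.random.default_rng(0)
trait = rng.normal(size=(700, 1))
items = 0.8 * trait + 0.6 * rng.normal(size=(700, 10))

# Random split into two halves, mirroring the EFA / bi-factor split.
order = rng.permutation(len(items))
half_1, half_2 = items[order[:350]], items[order[350:]]

summary = factor_retention_summary(half_1)
print(summary["n_eigen_gt_1"], summary["n_var_gt_cutoff"])
```

Keeping the second half untouched until the confirmatory step is the point of the split: the model suggested by `half_1` is then tested on data that played no part in suggesting it.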

Bi-factor analysis is a technique that is relatively new, though its potential contribution to health outcomes research has been recognized, particularly for the development of item banks. The bi-factor model tests the specific hypothesis of whether unidimensionality of items is supported by taking sub-domains into account. The model consists of a general factor (defined by loadings from all of the items) and local factors (defined by loadings from pre-specified groups of items related to their sub-domains). The general and local factors are modeled as orthogonal and therefore the relationship between items and the general factor is not constrained to be proportional to the relationship between the first- and second-order factors as demonstrated in other hierarchical CFA. Consequently, the relationship between items and factors is simpler to interpret. When the general factor explains covariance between items, uniformly high standardized loadings are seen upon the general factor, indicating that it is appropriate to report a single (e.g., pedsPCF) index score. If the sub-domains represent demonstrably separate constructs, loadings on the general factor will not be uniformly high, leading one to reject the conclusion that the items are sufficiently unidimensional, making it more appropriate to report scores of sub-domains separately. We have successfully employed this technique to evaluate the dimensionality of self-reported fatigue [24] and PCF in adult cancer patients [25].

Commonly accepted fit index cutoff values have been derived from historic experience with normally distributed samples and much shorter and more homogeneous item sets than are being tested in typical item banks. Item banks, on the other hand, attempt to cover somewhat more expansive, yet still definable constructs. With large numbers of items, there are many opportunities for subsets of items to have shared variance not accounted for by the dominant trait. Cook et al. [26] recently conducted a simulation study comparing fit indices under various survey lengths and data distributions. They concluded that the CFA fit values are sensitive to influences other than dimensionality of the data and recommended the use of bi-factor analysis as an adequate and informative approach for developing an item bank. Therefore, in this study, we determined the unidimensionality of the pedsPCF item bank using criteria recommended by McDonald [22], Reise et al. [23], and Cook et al. [26]. Specifically, sufficient unidimensionality of the pedsPCF items was supported when (1) items had larger loadings on the general factor than on the local factor, and (2) more variance was explained by the general factor than by the local factors. Local dependency between items was evaluated using residual correlations (criterion: absolute value <0.15).
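As a rough sketch of how these criteria could be checked once standardized bi-factor loadings are available, the Python snippet below compares general and local loadings, computes each factor type's share of total variance, and finds the largest residual correlation. The loadings, group labels, and function names are hypothetical, and the variance shares assume orthogonal factors and standardized items, as in the bi-factor model described above; this is not the Mplus output from the study.

```python
import numpy as np

def bifactor_unidimensionality(general, local):
    """Return (criterion 1 met?, variance share general, variance share local)."""
    general = np.asarray(general, dtype=float)
    local = np.asarray(local, dtype=float)
    n_items = general.size
    # Criterion 1: every item loads more strongly on the general factor.
    general_dominates = bool(np.all(np.abs(general) > np.abs(local)))
    # Criterion 2: share of total variance = sum of squared loadings / n items.
    var_general = float(np.sum(general ** 2) / n_items)
    var_local = float(np.sum(local ** 2) / n_items)
    return general_dominates, var_general, var_local

def max_abs_residual(observed_corr, general, local, groups):
    """Largest absolute off-diagonal residual correlation."""
    general = np.asarray(general, dtype=float)
    local = np.asarray(local, dtype=float)
    groups = np.asarray(groups)
    same_group = groups[:, None] == groups[None, :]
    # Model-implied correlation: general part plus local part within a sub-domain.
    implied = np.outer(general, general) + same_group * np.outer(local, local)
    residual = np.asarray(observed_corr, dtype=float) - implied
    np.fill_diagonal(residual, 0.0)
    return float(np.max(np.abs(residual)))

# Hypothetical loadings for four items in two sub-domains.
general = [0.80, 0.85, 0.75, 0.90]
local = [0.30, 0.20, 0.35, 0.10]
groups = [0, 0, 1, 1]

dominates, var_general, var_local = bifactor_unidimensionality(general, local)
print(dominates, round(var_general, 3), round(var_local, 3))
```

A residual returned by `max_abs_residual` at or above 0.15 would flag an item pair as locally dependent under the cutoff used here.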

Once the final dimensionality (i.e., number of factors/scores that should be reported) of the pedsPCF item set was determined, we explored its criterion-related validity using subsamples of the overall dataset (n=1,409). T tests were used to evaluate whether pedsPCF scores could significantly differentiate subgroups of children described by their parents as follows: (1) those with neurological conditions, (2) those who had repeated a grade, (3) those who had taken “attentional difficulty medication”, and (4) those who were rated in the borderline or clinically significant range by parents on CBCL scales sampling concerns related to attention, social behavior, and thought processes as described in the CBCL manual. Effect sizes (ES; Cohen’s d) were calculated to demonstrate the strength of the comparisons. An ES is considered large when it exceeds 0.8. Finally, we evaluated whether the pedsPCF could predict group membership (neurological condition, normal versus clinical ranges in attentional, social, and thought problems) via discriminant function analysis (DFA) as implemented in the Statistical Package for the Social Sciences (SPSS; Chicago, IL). DFA provided an estimate of how well the pedsPCF item set predicted group membership, as indicated by overall accuracy of classification (i.e., hit ratio). A criterion Press’ Q of p<0.001 was used as indication that the DFA classification hit ratio did not occur by chance. Canonical correlations were used to represent the correlations between the predictors and the discriminant function, interpreted as the proportion of variance explained (r²) by the model being tested.
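For readers unfamiliar with the group-comparison statistics, the following Python sketch computes an independent-samples t statistic and Cohen's d for two small groups. It assumes the pooled-standard-deviation form of both statistics, which the text does not specify, and the ratings shown are invented for illustration only.

```python
import math
from statistics import mean

def pooled_sd(group_1, group_2):
    """Pooled standard deviation of two independent groups."""
    m1, m2 = mean(group_1), mean(group_2)
    ss1 = sum((x - m1) ** 2 for x in group_1)
    ss2 = sum((x - m2) ** 2 for x in group_2)
    return math.sqrt((ss1 + ss2) / (len(group_1) + len(group_2) - 2))

def cohens_d(group_1, group_2):
    """Standardized mean difference (group 1 minus group 2)."""
    return (mean(group_1) - mean(group_2)) / pooled_sd(group_1, group_2)

def t_statistic(group_1, group_2):
    """Student's t for two independent groups, equal variances assumed."""
    n1, n2 = len(group_1), len(group_2)
    standard_error = pooled_sd(group_1, group_2) * math.sqrt(1 / n1 + 1 / n2)
    return (mean(group_1) - mean(group_2)) / standard_error

# Hypothetical mean item ratings (higher = more perceived problems).
normal_range = [1, 2, 1, 2, 2]
clinical_range = [3, 4, 3, 4, 3]

d = cohens_d(normal_range, clinical_range)
t = t_statistic(normal_range, clinical_range)
print(f"d = {d:.2f}, t = {t:.2f}, large effect: {abs(d) > 0.8}")
```

The sign of both statistics depends only on which group is listed first; a negative value here means the first group has the lower mean.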

Results

Analysis results

The item “my child is able to keep his/her mind on things like homework or reading” showed consistently low Spearman’s rho to other items, and a low item-scale correlation. It was suspected that some participants might not have noticed that, unlike the other items, it was positively framed. This item was excluded from further analyses.

EFA results identified eigenvalues greater than one on three factors (31.7, 2.1, and 1.3, respectively), but only the first factor explained more than 5% of variance, and only one factor appeared before the elbow in the scree plot. Results of the promax rotation supported a single common factor among the 44 items (the original 45 items minus the excluded item described above). When factors were assumed to be independent from each other (i.e., standardized regression coefficients), items loaded across three factors; yet inter-factor correlations ranged from 0.6 to 0.7. When factors were assumed to be correlated, all items had loadings greater than 0.4 on all three factors, with loading ranges of 0.5–0.9, 0.6–0.9, and 0.5–0.9 for factors 1, 2, and 3, respectively. The EFA results strongly suggest unidimensionality among these 44 items; yet they could also be divided into three moderately correlated subdomains, which we named “memory retrieval”, “attention/concentration”, and “working memory.”
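The 5% criterion can be checked by hand from the reported eigenvalues. The short Python calculation below assumes the eigenvalues come from a correlation matrix of the 44 retained items, so that total variance equals 44; that assumption is ours and is not stated in the text.

```python
N_ITEMS = 44                     # items retained for the factor analyses
eigenvalues = [31.7, 2.1, 1.3]   # first three eigenvalues reported above

# Assumption: correlation-matrix eigenvalues, so total variance = N_ITEMS.
shares = [100 * value / N_ITEMS for value in eigenvalues]

for index, (value, share) in enumerate(zip(eigenvalues, shares), start=1):
    verdict = "meets" if share > 5 else "fails"
    print(f"Factor {index}: eigenvalue {value}, {share:.1f}% of variance, {verdict} the 5% criterion")
```

Under that assumption the first factor accounts for roughly 72% of the variance, while the second and third each fall below 5%, in line with the statement that only the first factor met the variance criterion.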

A bi-factor analysis was conducted in which the general factor was “PCF” and local factors (i.e., sub-domains) were “memory retrieval”, “attention/concentration”, and “working memory”, based on the promax rotation results. Results showed that all items had loadings >0.3 (range: 0.7–0.9) on the general factor and all were larger than loadings on their local factors. The local factors accounted for 9.3% of total variance and the general factor accounted for 69.6% of total variance. No item pair had a residual correlation greater than 0.15, suggesting local independence (i.e., no redundant items from a measurement perspective) among these 44 items. In particular, for the 13 item pairs that shared the same item content but used two types of rating frameworks, all but two had residual correlations less than 0.1 (range: 0.002–0.084). The two exceptions were 0.147 and 0.126, still less than 0.15. These results indicate that, for these items, the two rating scales provided different perspectives on children’s cognitive function in their daily lives. These results confirm the unidimensionality of the 44 pedsPCF items, and suggest that, from a measurement perspective, a single pedsPCF score is more appropriate than three separate scores.

The pedsPCF demonstrated excellent internal consistency (alpha =0.99), with item-scale correlations ranging from 0.7 to 0.9. The pedsPCF index significantly differentiated clinical subgroups as defined by parent reports: children with and without neurological conditions, t= −14.6, p<0.001; children who had repeated a grade versus those who had not, t= −11.3, p<0.001; and children who had taken “attentional difficulty medication” versus not at all, t=20.1, p<0.001. Of those taking attentional difficulty medication, children who had taken such medication within the past 3 months were discriminated from those who had not, t=4.1, p<0.001. We then compared CBCL parent-report ratings in three problem areas (attention problems, social problems, and thought problems) to national norms, grouping children into putative “normal” and “clinical” ranges. The pedsPCF index significantly (p<0.001) differentiated between children in normal vs. clinical ranges, t= −30.6, −24.6, and −17.8 for scales of attention, social, and thought problems, respectively. Large ESs were found for all three problem areas, ES=3.0, 2.1, and 1.5 for attention, social, and thought problems, respectively (see Table 3).

Table 3.

pedsPCF scores comparisons between groups

| Variable | Mean, group 1 (n) | Mean, group 2 (n) | t value | Effect size^a |
|---|---|---|---|---|
| Attention problems | 1.589 (1,094) | 3.069 (315) | −30.6^b | 3.0 |
| Social problems | 1.583 (1,014) | 2.783 (395) | −24.6^b | 2.1 |
| Thought problems | 1.667 (1,021) | 2.585 (388) | −16.8^b | 1.5 |

Group 1, normal range; group 2, borderline clinical or clinical range

^a Effect size (ES), estimated by Cohen’s d, is considered large when ES≥0.8, medium when 0.5≤ES<0.8, and small when 0.2≤ES<0.5

^b Significance p < 0.0001

DFA results (presented in Table 4) show that the pedsPCF significantly (p<0.001) predicted children’s clinical groupings, with canonical correlations of 0.4 (explained variance=16.9%), 0.7 (49.3%), 0.6 (37.6%), and 0.5 (21.7%) for neurological condition(s) and attentional, social, and thought problems, respectively. Additionally, significant hit ratios were found for all classifications, with Press’ Q greater than the critical value at p<0.001 in every case. Specifically, 78.6%, 88.6%, 82.8%, and 78.6% of the total sample were classified correctly regarding neurological condition(s) and attentional, social, and thought problems, respectively.

Table 4.

Discriminant function analysis results

| Variable | Group | Canonical correlation^a (explained variance; %) | Classification (% correct)^b | Predicted group 1 (%) | Predicted group 2 (%) |
|---|---|---|---|---|---|
| Neurological condition | 1 (No; n=1,090) | 0.4 (16.9) | 78.6 | 92.5 | 7.5 |
| | 2 (Yes; n=319) | | | 69.0 | 31.0 |
| Attentional problems | 1 (Normal; n=1,094) | 0.7 (49.3) | 88.6 | 94.1 | 5.9 |
| | 2 (Clinical; n=315)^c | | | 30.8 | 69.2 |
| Social problems | 1 (Normal; n=1,014) | 0.6 (37.6) | 82.8 | 92.0 | 8.0 |
| | 2 (Clinical; n=395)^c | | | 40.8 | 59.2 |
| Thought problems | 1 (Normal; n=1,021) | 0.5 (21.7) | 78.6 | 91.6 | 8.4 |
| | 2 (Clinical; n=388)^c | | | 55.4 | 44.6 |

^a All correlations are statistically significant, p< 0.0001

^b Percentage of overall correct classification (i.e., hit ratio). Press’ Q was significant, p< 0.001 for all the classification results

^c Defined by CBCL national norm; “clinical” includes children whose scores fall into either the borderline clinical or clinical range
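The overall hit ratios follow from the group sizes and the per-group percentages of correct classification, and Press' Q can be derived from the same counts. The Python sketch below recomputes both from the Table 4 values. It uses the textbook form of Press' Q, (N − nK)² / (N(K − 1)), compared against a chi-square critical value with 1 degree of freedom (10.828 at p=0.001); that this matches the SPSS-based computation in the study is an assumption, and the reconstructed counts are approximate because the published percentages are rounded.

```python
CHI2_CRIT_P001_DF1 = 10.828  # chi-square critical value, 1 df, p = 0.001

# (group sizes), (% of each group classified correctly), from Table 4
table_4 = {
    "Neurological condition": ((1090, 319), (92.5, 31.0)),
    "Attentional problems": ((1094, 315), (94.1, 69.2)),
    "Social problems": ((1014, 395), (92.0, 59.2)),
    "Thought problems": ((1021, 388), (91.6, 44.6)),
}

def hit_ratio_and_press_q(sizes, pct_correct):
    """Overall % correct and Press' Q from per-group classification rates."""
    n_total = sum(sizes)
    n_correct = sum(n * p / 100 for n, p in zip(sizes, pct_correct))
    n_groups = len(sizes)
    hit_ratio = 100 * n_correct / n_total
    press_q = (n_total - n_correct * n_groups) ** 2 / (n_total * (n_groups - 1))
    return hit_ratio, press_q

results = {name: hit_ratio_and_press_q(*cells) for name, cells in table_4.items()}
for name, (hit, q) in results.items():
    print(f"{name}: hit ratio {hit:.1f}%, Press' Q {q:.0f}, "
          f"beyond chance: {q > CHI2_CRIT_P001_DF1}")
```

The recomputed hit ratios agree with the reported ones to within about 0.1 percentage point. Note that the overall hit ratio is dominated by the larger "normal" group, so a high overall rate can coexist with a modest rate of correct classification in the smaller clinical group.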

Discussion

In this study, we developed a pedsPCF item bank that is designed for pediatric brain tumor patients and other populations of children and adolescents at risk for neurocognitive impairment. The pedsPCF demonstrated satisfactory psychometric properties, with excellent internal consistency. The overwhelmingly large variance explained by the general factor and the significant factor loadings on the general factor compared to the local factor support the unidimensionality of the pedsPCF item bank. It significantly differentiated children rated by parents in the normal vs. elevated range on three CBCL symptom scales. Furthermore, it classified children into parent-reported clinical categories at significant accuracy rates. These data indicate that the 44 pedsPCF items hold considerable promise as a tool for research and clinical application. Our next step is to calibrate these items using Item Response Theory models, evaluate the stability of measurement properties across various factors such as race and gender to minimize potential measurement bias, and finally establish a pedsPCF computerized adaptive testing platform.

We focused on parent-report PCF data for three reasons. First, studies comparing parent and child self-report have found the former to be better correlated with clinical indicators than the latter [27–29]. Second, parents typically have primary responsibility for medical decision-making about their children’s care. A third and equally important reason stems from the gradual emergence over time of children’s metacognition or knowledge about their own cognitive processes [30, 31]. Though quality of life is a self-referenced phenomenon which may, even in young children, be at least partly amenable to sampling via self-report, self-assessment of cognitive functioning poses a greater challenge to younger children with limited metacognition. As a result, parents may be more sensitive to the presence of their children’s cognitive difficulties than children themselves [32]. Future studies should be conducted to evaluate similarities and differences between parent-reported and self-reported PCF measures, to understand the emergence of PCF self-awareness with age, and further, to explore the possibility of cross-referencing parent-report and self-report data using rigorous psychometric approaches [33]. The development of such cross-referenced PCF indices will enable longitudinal PCF monitoring from childhood, reported by parents, into adulthood, reported by patients themselves.

The clinical utility of patient self-reported cognition has been questioned, due to its limited associations with traditional neuropsychological test results [12, 34]. Multiple factors likely contribute to inconsistent findings in those studies, including variation in statistical methods and most importantly, the psychometric properties of the scales used to sample PCF. Item content alone may attenuate the level of concordance between PCF and measured cognitive function. For instance, some studies have found low correlations between laboratory tasks and self-reported perceived memory function but significantly stronger associations between neuropsychological tests and PCF when PCF questions focus on “real life” memory skills [35]. In a normal elderly sample, a direct relationship between perceived and measured memory function was only evidenced when self-report items and test variables were both directly related to remote memory [36]. The results of the current study lay the groundwork for future research describing the relationship between “perceived” and “measured” cognitive function in children and adolescents.

This study’s rigorous approach to item development, its large sample size, and its extensive statistical analyses are noteworthy strengths. However, we acknowledge weaknesses of the current study as well. Because our data were collected via the internet, families without internet access at home, particularly those of low socioeconomic status, were likely underrepresented. As a convenience sample, participants were not stratified and cannot, therefore, be assumed to be nationally representative. We plan to evaluate the impact of socioeconomic status upon parent-reported pedsPCF in future studies, with recruitment either in person or by telephone interview. Test–retest reliability was not assessed and should be examined in a future study. By using the IRT framework, we plan to evaluate the reliability of pedsPCF scores over time in a future study by comparing scores from two or more parallel forms of the pedsPCF CAT. Another limitation is that all information in the current study was from parent report, with no objective measures included to evaluate pedsPCF validity. We consider the current findings an initial step that establishes the unidimensionality of PCF, and we look forward to future research allowing us to assess the association of parent-perceived cognitive function with other relevant indicators. We also recognize that the current study does not directly address the ability of pedsPCF to discriminate cognitive function within the brain tumor population; studies that address this specific question are currently in progress.

In conclusion, we have provided empirical support for the unidimensionality of the pedsPCF item bank and preliminary evidence for its potential clinical utility. The pedsPCF is a promising measurement tool to screen children for further comprehensive cognitive tests.

Acknowledgments

This project is supported by the National Cancer Institute (Grant number: R01CA125671; PI: Jin-Shei Lai). Dr. Butt’s time is supported in part by grant KL2RR0254740 from the National Center for Research Resources, National Institutes of Health.

Appendix

Table 5.

Parent-reported pedsPCF item bank (pedsPCF)

| Item stem | Rating scaleᵃ |
|---|---|
| It is hard for your child to find his/her way to a place that he/she has visited several times before | Frequency |
| Your child has trouble remembering where he/she put things, like his/her watch or his/her homework | Frequency |
| Your child has trouble remembering the names of people he/she has just met | Frequency |
| Your child is able to keep his/her mind on things like homework or reading | Frequency |
| It is hard for your child to take notes in class | Intensity |
| It is hard for your child to learn new things | Intensity |
| It is hard for your child to understand pictures that show how to make something | Intensity |
| It is hard for your child to pay attention to something boring he/she has to do | Intensity |
| It is hard for your child to pay attention to one thing for more than 5–10 min | Frequency |
| Your child has trouble recalling the names of things | Frequency |
| Your child has trouble keeping track of what he/she is doing if he/she gets interrupted | Frequency |
| It is hard for your child to do more than one thing at a time | Frequency |
| Your child forgets what his/her parents or teachers ask him/her to do | Frequency |
| Your child walks into a room and forgets what he/she wanted to get or do | Frequency |
| Your child has trouble remembering the names of people he/she knows | Frequency |
| It is hard for your child to add or subtract numbers in his/her head | Intensity |
| Your child has trouble remembering the date or day of the week | Frequency |
| When your child has a big project to do, he/she has trouble deciding where to start | Frequency |
| Your child has to contact his/her friends for homework he/she forgot | Frequency |
| Your child has a hard time keeping track of his/her homework | Intensity and frequencyᵇ |
| Your child forgets to bring things to and from school that he/she needs for homework | Intensity and frequencyᵇ |
| Your child forgets what he/she is going to say | Intensity and frequencyᵇ |
| Your child has to read things several times to understand them | Intensity and frequencyᵇ |
| Your child reacts slower than most people his/her age when he/she plays games | Intensity and frequencyᵇ |
| It is hard for your child to find the right words to say what he/she means | Intensity and frequencyᵇ |
| It takes your child longer than other people to get his/her school work done | Intensity and frequencyᵇ |
| Your child forgets things easily | Intensity and frequencyᵇ |
| Your child has to use written lists more often than other people his/her age so he/she will not forget things | Intensity and frequencyᵇ |
| Your child has trouble remembering to do things like school projects or chores | Intensity and frequencyᵇ |
| It is hard for your child to concentrate in school | Intensity and frequencyᵇ |
| Your child has trouble paying attention to the teacher | Intensity and frequencyᵇ |
| Your child has to work really hard to pay attention or he/she makes mistakes | Intensity and frequencyᵇ |

ᵃ Frequency: 1 = none of the time, 2 = a little of the time, 3 = some of the time, 4 = most of the time, 5 = all of the time. Intensity: 1 = not at all, 2 = a little bit, 3 = somewhat, 4 = quite a bit, 5 = very much.

ᵇ No local dependency was found for items that share the same item stem but are rated on both the frequency and intensity scales. Therefore, both ratings were retained.
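For readers who wish to enter responses electronically, the two rating scales in the footnote above can be represented as simple lookup tables. The following is only a minimal sketch: the response labels and their 1–5 codes are taken from the footnote, whereas the names `FREQUENCY`, `INTENSITY`, `SCALES`, and `code_response` are hypothetical and not part of the pedsPCF. It returns raw response codes only and makes no assumption about how the item bank is scored.

```python
# Response options copied from the Table 5 footnote.
# All identifiers below are illustrative assumptions, not part of the pedsPCF.
FREQUENCY = {
    1: "none of the time",
    2: "a little of the time",
    3: "some of the time",
    4: "most of the time",
    5: "all of the time",
}
INTENSITY = {
    1: "not at all",
    2: "a little bit",
    3: "somewhat",
    4: "quite a bit",
    5: "very much",
}
SCALES = {"frequency": FREQUENCY, "intensity": INTENSITY}


def code_response(scale: str, label: str) -> int:
    """Return the raw 1-5 code for a response label on the named scale."""
    options = SCALES[scale.strip().lower()]
    normalized = label.strip().lower()
    for code, text in options.items():
        if text == normalized:
            return code
    raise ValueError(f"{label!r} is not a valid {scale} response")
```

For example, `code_response("Frequency", "Most of the time")` looks up the label on the frequency scale, and an unrecognized label raises a `ValueError` rather than being silently coded.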

Contributor Information

Jin-Shei Lai, Email: js-lai@northwestern.edu, Department of Medical Social Sciences, Feinberg School of Medicine, Northwestern University, 710 N Lake Shore Drive, #724, Chicago, IL 60611, USA. Pediatrics, Feinberg School of Medicine, Northwestern University, Chicago, IL, USA.

Frank Zelko, Children’s Memorial Hospital, Chicago, IL, USA. Psychiatry and Behavior Science, Feinberg School of Medicine, Northwestern University, Chicago, IL, USA.

Zeeshan Butt, Department of Medical Social Sciences, Feinberg School of Medicine, Northwestern University, 710 N Lake Shore Drive, #724, Chicago, IL 60611, USA. Comprehensive Transplant Center, Northwestern University, Chicago, IL, USA.

David Cella, Department of Medical Social Sciences, Feinberg School of Medicine, Northwestern University, 710 N Lake Shore Drive, #724, Chicago, IL 60611, USA.

Mark W. Kieran, Children’s Hospital Boston and Dana Farber Cancer Institute, Harvard Medical School, Boston, MA, USA

Kevin R. Krull, St Jude Children’s Research Hospital, Memphis, TN, USA

Susan Magasi, Department of Medical Social Sciences, Feinberg School of Medicine, Northwestern University, 710 N Lake Shore Drive, #724, Chicago, IL 60611, USA.

Stewart Goldman, Pediatrics, Feinberg School of Medicine, Northwestern University, Chicago, IL, USA. Children’s Memorial Hospital, Chicago, IL, USA.

References

- 1. Mulhern RK, Merchant TE, Gajjar A, Reddick WE, Kun LE. Late neurocognitive sequelae in survivors of brain tumours in childhood. Lancet Oncol. 2004;5:399–408. doi: 10.1016/S1470-2045(04)01507-4.

- 2. Meador KJ. Cognitive outcomes and predictive factors in epilepsy. Neurology. 2002;58:S21–S26. doi: 10.1212/wnl.58.8_suppl_5.s21.

- 3. Conway MA. In defense of everyday memory. Am Psychol. 1991;46:19–26.

- 4. Loftus EF. The glitter of everyday memory and the gold. Am Psychol. 1991;46:16–18. doi: 10.1037//0003-066x.46.1.16.

- 5. Chaytor N, Schmitter-Edgecombe M. The ecological validity of neuropsychological tests: a review of the literature on everyday cognitive skills. Neuropsychol Rev. 2003;13:181–197. doi: 10.1023/b:nerv.0000009483.91468.fb.

- 6. Silver CH. Ecological validity of neuropsychological assessment in childhood traumatic brain injury. J Head Trauma Rehabil. 2000;15:973–988. doi: 10.1097/00001199-200008000-00002.

- 7. Dufouil C, Fuhrer R, Alperovitch A. Subjective cognitive complaints and cognitive decline: consequence or predictor? The epidemiology of vascular aging study. J Am Geriatr Soc. 2005;53:616–621. doi: 10.1111/j.1532-5415.2005.53209.x.

- 8. Zimprich D, Martin M, Kliegel M. Subjective cognitive complaints, memory performance, and depressive affect in old age: a change-oriented approach. Int J Aging Hum Dev. 2003;57:339–366. doi: 10.2190/G0ER-ARNM-BQVU-YKJN.

- 9. Blazer DG, Hays JC, Fillenbaum GG, Gold DT. Memory complaint as a predictor of cognitive decline: a comparison of African American and white elders. J Aging Health. 1997;9:171–184. doi: 10.1177/089826439700900202.

- 10. Jorm AF, Christensen H, Korten AE, Henderson AS, Jacomb PA, MacKinnon A. Do cognitive complaints either predict future cognitive decline or reflect past cognitive decline? A longitudinal study of an elderly community sample. Psychol Med. 1997;27:91–98. doi: 10.1017/s0033291796003923.

- 11. Christodoulou C, Melville P, Scherl WF, Morgan T, MacAllister WS, Canfora DM, Berry SA, Krupp LB. Perceived cognitive dysfunction and observed neuropsychological performance: longitudinal relation in persons with multiple sclerosis. J Int Neuropsychol Soc. 2005;11:614–619. doi: 10.1017/S1355617705050733.

- 12. Banos JH, LaGory J, Sawrie S, Faught E, Knowlton R, Prasad A, Kuzniecky R, Martin RC. Self-report of cognitive abilities in temporal lobe epilepsy: cognitive, psychosocial, and emotional factors. Epilepsy Behav. 2004;5:575–579. doi: 10.1016/j.yebeh.2004.04.010.

- 13. Allen CC, Ruff RM. Self-rating versus neuropsychological performance of moderate versus severe head-injured patients. Brain Inj. 1990;4:7–17. doi: 10.3109/02699059009026143.

- 14. Kadis DS, Stollstorff M, Elliott I, Lach L, Smith ML. Cognitive and psychological predictors of everyday memory in children with intractable epilepsy. Epilepsy Behav. 2004;5:37–43. doi: 10.1016/j.yebeh.2003.10.008.

- 15. de Groot JC, de Leeuw FE, Oudkerk M, Hofman A, Jolles J, Breteler MM. Cerebral white matter lesions and subjective cognitive dysfunction: the Rotterdam Scan Study. Neurology. 2001;56:1539–1545. doi: 10.1212/wnl.56.11.1539.

- 16. Ferguson RJ, McDonald BC, Saykin AJ, Ahles TA. Brain structure and function differences in monozygotic twins: possible effects of breast cancer chemotherapy. J Clin Oncol. 2007;25:3866–3870. doi: 10.1200/JCO.2007.10.8639.

- 17. Mahone EM, Martin R, Kates WR, Hay T, Horska A. Neuroimaging correlates of parent ratings of working memory in typically developing children. J Int Neuropsychol Soc. 2009;15:31–41. doi: 10.1017/S1355617708090164.

- 18. Soni MK, Cella D. Quality of life and symptom measures in oncology: an overview. Am J Manage Care. 2002;8:S560–S573.

- 19. Hahn EA, Cella D. Health outcomes assessment in vulnerable populations: measurement challenges and recommendations. Arch Phys Med Rehabil. 2003;84:S35–S42. doi: 10.1053/apmr.2003.50245.

- 20. Nathan PC, Patel SK, Dilley K, Goldsby R, Harvey J, Jacobsen C, Kadan-Lottick N, McKinley K, Millham AK, Moore I, Okcu MF, Woodman CL, Brouwers P, Armstrong FD. Guidelines for identification of, advocacy for, and intervention in neurocognitive problems in survivors of childhood cancer: a report from the Children’s Oncology Group. Arch Pediatr Adolesc Med. 2007;161:798–806. doi: 10.1001/archpedi.161.8.798.

- 21. Achenbach TM. Integrative guide to the 1991 CBCL/4–18, YSR, and TRF profiles. University of Vermont, Department of Psychology; Burlington, VT: 1991.

- 22. McDonald RP. Test Theory: A unified treatment. Lawrence Erlbaum Associates, Inc; Mahwah, NJ: 1999.

- 23. Reise SP, Morizot J, Hays RD. The role of the bifactor model in resolving dimensionality issues in health outcomes measures. Qual Life Res. 2007;16(Suppl 1):19–31. doi: 10.1007/s11136-007-9183-7.

- 24. Lai JS, Crane PK, Cella D. Factor analysis techniques for assessing sufficient unidimensionality of cancer related fatigue. Qual Life Res. 2006;15:1179–1190. doi: 10.1007/s11136-006-0060-6.

- 25. Lai JS, Butt Z, Wagner L, Sweet JJ, Beaumont JL, Vardy J, Jacobsen PB, Jacobs SR, Shapiro PJ, Cella D. Evaluating the dimensionality of perceived cognitive function. J Pain Symptom Manage. 2009;37:982–995. doi: 10.1016/j.jpainsymman.2008.07.012.

- 26. Cook KF, Kallen MA, Amtmann D. Having a fit: impact of number of items and distribution of data on traditional criteria for assessing IRT’s unidimensionality assumption. Qual Life Res. 2009;18:447–460. doi: 10.1007/s11136-009-9464-4.

- 27. Czyzewski DI, Mariotto MJ, Bartholomew LK, LeCompte SH, Sockrider MM. Measurement of quality of well being in a child and adolescent cystic fibrosis population. Med Care. 1994;32:965–972. doi: 10.1097/00005650-199409000-00007.

- 28. Hinds PS, Hockenberry M, Rai SN, Zhang L, Razzouk BI, Cremer L, McCarthy K, Rodriguez-Galindo C. Clinical field testing of an enhanced-activity intervention in hospitalized children with cancer. J Pain Symptom Manage. 2007;33:686–697. doi: 10.1016/j.jpainsymman.2006.09.025.

- 29. Lustig RH, Hinds PS, Ringwald-Smith K, Christensen RK, Kaste SC, Schreiber RE, Rai SN, Lensing SY, Wu S, Xiong X. Octreotide therapy of pediatric hypothalamic obesity: a double-blind, placebo-controlled trial. J Clin Endocrinol Metab. 2003;88:2586–2592. doi: 10.1210/jc.2002-030003.

- 30. Flavell JH. Metacognitive and cognitive monitoring: a new area of cognitive developmental inquiry. Am Psychol. 1979;34:906–911.

- 31. Schwartz BL, Perfect TJ, Perfect T. Applied metacognition. Cambridge University Press; West Nyack: 2002. Introduction: toward an applied metacognition; p. 1–10.

- 32. Mahone EM, Zabel TA, Levey E, Verda M, Kinsman S. Parent and self-report ratings of executive function in adolescents with myelomeningocele and hydrocephalus. Child Neuropsychol. 2002;8:258–270. doi: 10.1076/chin.8.4.258.13510.

- 33. Chen WH, Revicki DA, Lai JS, Cook KF, Amtmann D. Linking pain items from two studies onto a common scale using item response theory. J Pain Symptom Manage. 2009;38:615–628. doi: 10.1016/j.jpainsymman.2008.11.016.

- 34. Castellon SA, Ganz PA, Bower JE, Petersen L, Abraham L, Greendale GA. Neurocognitive performance in breast cancer survivors exposed to adjuvant chemotherapy and tamoxifen. J Clin Exp Neuropsychol. 2004;26:955–969. doi: 10.1080/13803390490510905.

- 35. Bennett-Levy J, Polkey CE, Powell GE. Self-report of memory skills after temporal lobectomy: the effect of clinical variables. Cortex. 1980;16:543–557. doi: 10.1016/s0010-9452(80)80002-5.

- 36. Larrabee GJ, Levin HS. Memory self-ratings and objective test performance in a normal elderly sample. J Clin Exp Neuropsychol. 1986;8:275–284. doi: 10.1080/01688638608401318.