Abstract

The graph realization problem has received a great deal of attention in recent years, due to its importance in applications such as wireless sensor networks and structural biology. In this paper, we extend our previous work on 2D-ASAP and propose the 3D-As-Synchronized-As-Possible (3D-ASAP) algorithm for the graph realization problem in ℝ3, given a sparse and noisy set of distance measurements. 3D-ASAP is a divide-and-conquer, non-incremental and non-iterative algorithm that integrates local distance information into a global structure determination. Our approach starts by identifying, for every node, a subgraph of its 1-hop neighborhood graph (a patch) that can be accurately embedded in its own coordinate system. In the noise-free case, the computed coordinates of the sensors in each patch must agree with their global positioning up to some unknown rigid motion, that is, up to translation, rotation and possibly reflection. In other words, to every patch there corresponds an element of the Euclidean group, Euc(3), of rigid transformations in ℝ3, and the goal is to estimate the group elements that will properly align all the patches in a globally consistent way. Furthermore, 3D-ASAP successfully incorporates information specific to the molecule problem in structural biology, in particular information on known substructures and their orientation. We also propose 3D-spectral-partitioning (SP)-ASAP, a faster version of 3D-ASAP, which uses a spectral partitioning algorithm as a pre-processing step for dividing the initial graph into smaller subgraphs. Our extensive numerical simulations show that 3D-ASAP and 3D-SP-ASAP are very robust to high levels of noise in the measured distances and to sparse connectivity in the measurement graph, and that they compare favorably with similar state-of-the-art localization algorithms.

Keywords: graph realization, distance geometry, eigenvectors, synchronization, spectral graph theory, rigidity theory, SDP, the molecule problem, divide and conquer

1. Introduction

In the graph realization problem, one is given a graph G = (V, E) consisting of a set of |V| = n nodes and |E| = m edges, together with a non-negative distance measurement dij associated with each edge, and is asked to compute a realization of G in the Euclidean space ℝd for a given dimension d. In other words, for any pair of adjacent nodes i and j, (i, j) ∈ E, the distance dij = dji is available, and the goal is to find a d-dimensional embedding p1, p2,…, pn ∈ ℝd such that ∥pi − pj∥ = dij for all (i, j) ∈ E.
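As a concrete reading of this definition, the sketch below checks how far a candidate set of points is from realizing the measured distances. It assumes NumPy and stores p1,…, pn as the rows of an array; the helper name `max_distance_violation` is hypothetical and not part of 3D-ASAP.

```python
import numpy as np


def max_distance_violation(P, edges, d):
    """Return the max over (i, j) in E of | ||p_i - p_j|| - d_ij |.

    P     : (n, dim) array; row k holds the candidate point p_k
    edges : list of index pairs (i, j), i.e. the edge set E
    d     : measured distances d_ij, in the same order as `edges`
    A return value of 0 means P is an exact realization of G.
    """
    i, j = np.asarray(edges).T
    lengths = np.linalg.norm(P[i] - P[j], axis=1)
    return float(np.max(np.abs(lengths - np.asarray(d, dtype=float))))


# Usage: three points in R^3 forming a right triangle with unit legs.
P = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
edges = [(0, 1), (0, 2), (1, 2)]
d = [1.0, 1.0, np.sqrt(2.0)]
violation = max_distance_violation(P, edges, d)
```

With noisy measurements no exact realization may exist, which is why the noisy variant below asks for distances realized as closely as possible rather than exactly.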

Owing to its practical significance, the graph realization problem has attracted a lot of attention in recent years, across many communities. The problem and its variants come up naturally in a variety of settings such as wireless sensor networks [10, 54], structural biology [32], dimensionality reduction, Euclidean ball packing and multidimensional scaling (MDS) [20]. In such real-world applications, the given distances dij between adjacent nodes are not accurate, dij = ∥pi − pj∥ + εij, where εij represents the added noise, and the goal is to find an embedding that realizes all known distances dij as closely as possible.

Classical MDS yields an easy solution to the graph realization problem provided that all n(n − 1)/2 pairwise distances are known. Unfortunately, whenever most of the distance constraints are missing, as is typically the case in real applications, the problem becomes significantly more challenging because the rank-d constraint on the solution is not convex. Note that for a fixed embedding, all pairwise distances are invariant to rigid transformations, i.e., compositions of rotations, translations and possibly reflections. Whenever an embedding exists, it is unique (up to rigid transformations) only if there are enough distance constraints, in which case the graph is said to be globally rigid (see, e.g., [31]). The graph realization problem is strongly NP-complete in one dimension and strongly NP-hard in higher dimensions [45, 60]. Despite its difficulty, there exist many approximation algorithms for the graph realization problem, many of which come from the sensor networks community [2, 4, 5, 37] and rely on methods such as global optimization [15], semidefinite programming (SDP) [10, 11, 14, 51, 52, 62] and local-to-global approaches [41, 43, 46, 48, 61].
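The closed-form solution that classical MDS provides when all distances are known can be sketched as follows (NumPy assumed; the function name is our own, and this is the textbook procedure rather than code from this paper): double-center the squared distance matrix to obtain the Gram matrix of the centered points, then keep its top d eigenpairs.

```python
import numpy as np


def classical_mds(D, dim=3):
    """Recover points, up to a rigid motion, from a complete matrix D of
    pairwise Euclidean distances."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n          # centering matrix
    B = -0.5 * J @ (D ** 2) @ J                  # Gram matrix of centered points
    evals, evecs = np.linalg.eigh(B)             # eigenvalues in ascending order
    top = np.argsort(evals)[::-1][:dim]          # indices of the dim largest
    scale = np.sqrt(np.clip(evals[top], 0.0, None))
    return evecs[:, top] * scale                 # n x dim embedding


# Usage: noise-free distances among 20 random points in R^3.
rng = np.random.default_rng(0)
X = rng.random((20, 3))
D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
Y = classical_mds(D)
D_hat = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)
```

Y differs from X by a rigid motion, but all pairwise distances are reproduced. The procedure needs every entry of D, which is exactly what is missing in the sparse setting.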

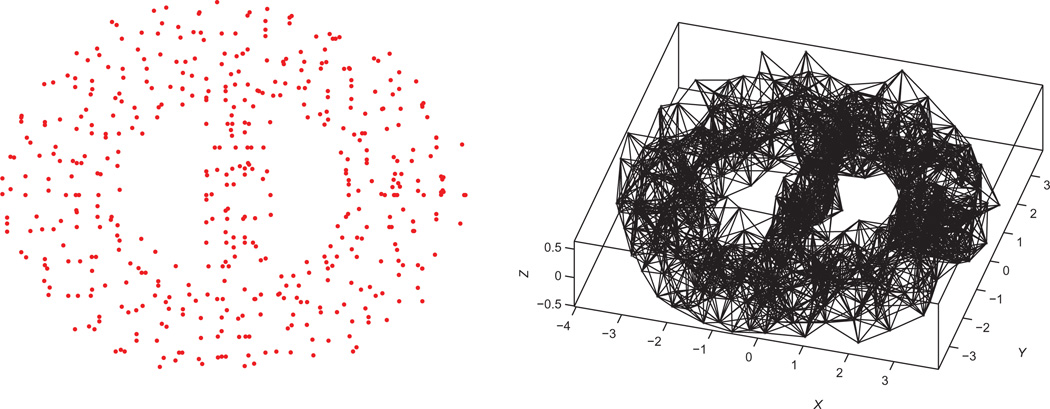

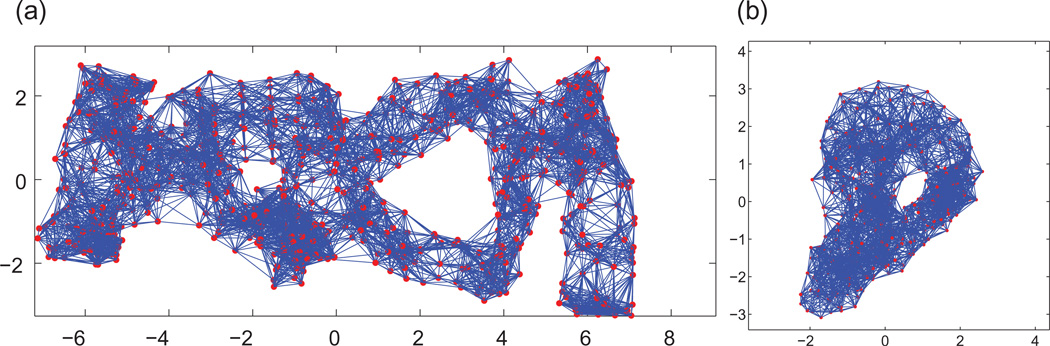

In typical real applications, the available measurements follow a geometric graph model in which the distance between a pair of nodes is available if and only if the nodes are within sensing radius ρ of each other, i.e., (i, j) ∈ E ⇔ dij ≤ ρ. In Fig. 1, we illustrate the measurement graph associated with a dataset of 500 nodes, with sensing radius ρ = 0.092 and average degree deg = 18, i.e., each node knows, on average, the distance to its 18 closest neighbors. It was shown in [4] that the graph realization problem remains NP-hard even under the geometric graph model.
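A minimal sketch of the geometric graph model, assuming NumPy and known true positions (the helper names are hypothetical): an edge is kept whenever two nodes lie within the sensing radius ρ, and the average degree is 2m/n. The noisy measurements dij would then be perturbed versions of the returned lengths, following the noise model of (8.1), which is not reproduced here.

```python
import numpy as np


def geometric_graph(X, rho):
    """Edges of the geometric graph model: (i, j) in E iff ||x_i - x_j|| <= rho.

    X   : (n, 3) array of true node positions
    rho : sensing radius
    Returns the list of edges (i, j) with i < j and their true lengths.
    """
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    i, j = np.nonzero(np.triu(D <= rho, k=1))   # upper triangle: each pair once
    return list(zip(i.tolist(), j.tolist())), D[i, j]


def average_degree(n, edges):
    """Each edge contributes to the degree of both of its endpoints."""
    return 2.0 * len(edges) / n


# Usage: 500 random points in the unit cube (not the BRIDGE-DONUT data).
rng = np.random.default_rng(0)
X = rng.random((500, 3))
edges, lengths = geometric_graph(X, rho=0.2)
deg = average_degree(len(X), edges)
```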

Fig. 1.

2D view of the BRIDGE-DONUT dataset, a cloud of n = 500 points in ℝ3 in the shape of a donut (left), and the associated measurement geometric graph of radius ρ = 0.92 and average degree deg = 18 (right).

The graph realization problem in ℝ3 is of particular importance because it arises naturally in the application of nuclear magnetic resonance (NMR) to structural biology. NMR spectroscopy is a well-established modality for atomic structure determination, especially for relatively small proteins (i.e., with atomic mass <40 kDa) [59], and contributes to progress in structural genomics [1]. General properties of proteins, such as bond lengths and angles, can be translated into accurate distance constraints. In addition, peaks in NOESY experiments are used to infer spatial proximity information between pairs of nearby hydrogen atoms, typically in the range of 1.8–6 Å. The intensity of such a NOESY peak is approximately proportional to the inverse sixth power of the distance, and it is therefore used to infer distance information between pairs of hydrogen atoms (nuclear Overhauser effects, NOEs). Unfortunately, NOEs provide only a rough estimate of the true distance, hence the need for robust algorithms that provide accurate reconstructions even at high levels of noise in the NOE data. In addition, the experimental data often contain ambiguous constraints, because signal overlap results in incomplete assignment [44]. The structure calculation based on the entire set of distance constraints, both accurate measurements and NOE measurements, can be thought of as an instance of the graph realization problem in ℝ3 with noisy data.
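To make the inverse-sixth-power relation concrete, here is a small sketch that turns a peak intensity into a distance. The calibration against a single reference proton pair of known distance is a simplifying assumption made for illustration only, not the NOE processing used in this paper, and `noe_distance` is a hypothetical name.

```python
def noe_distance(intensity, ref_intensity, ref_distance):
    """Distance implied by an NOE peak intensity under I = c * d**(-6).

    The unknown constant c is calibrated with one reference proton pair whose
    distance is known (an illustrative assumption).
    """
    return ref_distance * (ref_intensity / intensity) ** (1.0 / 6.0)


# A peak 64 times weaker than a 2.5 Å reference: 64**(1/6) = 2, so about 5 Å.
d = noe_distance(intensity=1.0, ref_intensity=64.0, ref_distance=2.5)
# The sixth root damps intensity errors: a factor-2 error in the intensity
# changes the inferred distance by only 2**(1/6), roughly 12%.
rel_change = noe_distance(0.5, 64.0, 2.5) / d
```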

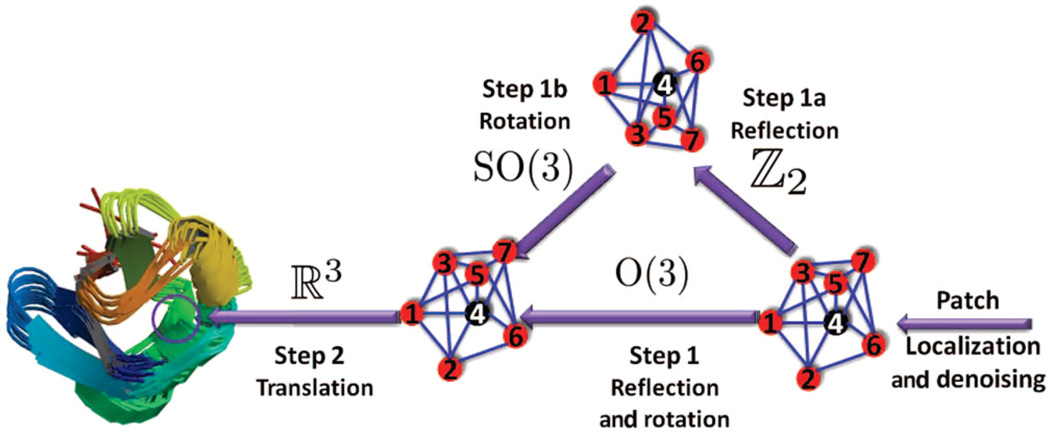

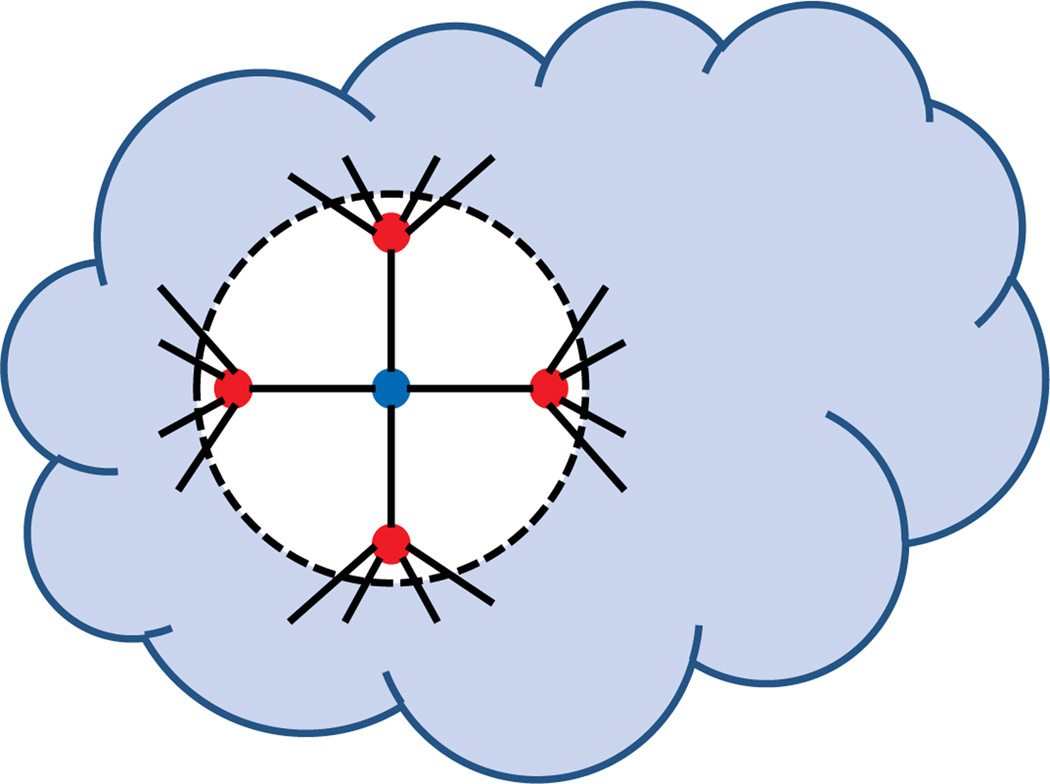

In this paper, we focus on the molecular distance geometry problem (to which we will refer from now on as the molecule problem) in ℝ3, although the approach is applicable to other dimensions as well. In [21], we introduced 2D-ASAP, an eigenvector-based synchronization algorithm that solves the sensor network localization problem in ℝ2. We summarize below the approach used in 2D-ASAP and further explain the differences and improvements that its generalization to three dimensions brings. Figure 2 shows a schematic overview of our algorithm, which we call 3D-As-Synchronized-As-Possible (3D-ASAP).

Fig. 2.

The 3D-ASAP recovery process for a patch in the 1d3z molecule graph. The localization of the rightmost subgraph in its own local frame is obtained from the available accurate bond lengths and noisy NOE measurements by using one of the SDP algorithms. To every patch, like the one shown here, there corresponds an element of Euc(3) that we try to estimate. Using the pairwise alignments, in Step 1 we estimate both the reflection and rotation matrix from an eigenvector synchronization computation over O(3), while in Step 2 we find the estimated coordinates by solving an overdetermined system of linear equations. If there is available information on the reflection or rotations of some patches, one may choose to further divide Step 1 into two consecutive steps. Step 1a is synchronization over ℤ2, while Step 1b is synchronization over SO(3), in which the missing reflections and rotations are estimated.

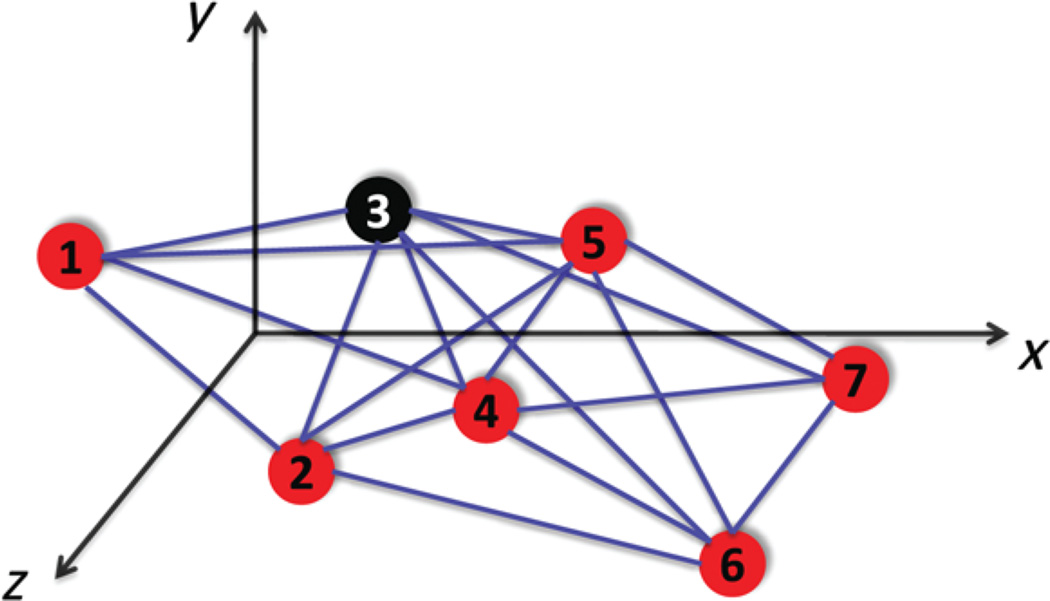

The 2D-ASAP algorithm proposed in [21] belongs to the group of algorithms that integrate local distance information into a global structure determination. For every sensor, we first identify uniquely localizable (UL) subgraphs of its 1-hop neighborhood, which we call patches. For each patch, we compute an approximate localization in a coordinate system of its own, using either the stress minimization approach of [28] or SDP. In the noise-free case, the computed coordinates of the sensors in each patch must agree with their global positioning up to some unknown rigid motion, that is, up to translation, rotation and possibly reflection (Fig. 3). To every patch there corresponds an element of the Euclidean group, Euc(2), of rigid transformations in the plane, and the goal is to estimate the group elements that will properly align all the patches in a globally consistent way. By finding the optimal alignment of all pairs of patches whose intersection is large enough, we obtain measurements for the ratios of the unknown group elements. Finding group elements from noisy measurements of their ratios is known as the synchronization problem [26, 38]. For example, synchronizing clocks in a distributed network from noisy measurements of their time offsets is an instance of synchronization over ℝ. Singer [49] introduced an eigenvector method for solving the synchronization problem over the group SO(2) of planar rotations. This algorithm serves as the basic building block for our 2D-ASAP and 3D-ASAP algorithms. Namely, we reduce the graph realization problem to three consecutive synchronization problems that together solve the synchronization problem over Euc(2). In the first two steps, we use the eigenvector method to solve synchronization problems over the compact groups ℤ2 and SO(2), for the possible reflections and the rotations of the patches, respectively.
In the third step, we solve another synchronization problem over the non-compact group ℝ2 for the translations by solving an overdetermined linear system of equations using the method of least squares. This solution yields the estimated coordinates of all the sensors up to a global rigid transformation.
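The eigenvector method of [49] is easiest to see for ℤ2, the group used in the reflection step. In the sketch below (NumPy assumed; the corruption model, with roughly 20% of the pairwise measurements flipped at random on a complete patch graph, is our own choice for illustration), the measured ratios zij ≈ zizj fill a symmetric matrix whose top eigenvector, rounded to signs, estimates all reflections at once, up to a global sign.

```python
import numpy as np


def z2_synchronization(Z):
    """Eigenvector method for synchronization over Z2 = {+1, -1}.

    Z : symmetric N x N matrix with Z[i, j] = z_ij in {+1, -1} for measured
        pairs (a noisy estimate of z_i * z_j) and 0 for unmeasured pairs.
    Returns estimates of z_1..z_N, defined up to a global sign.
    """
    evals, evecs = np.linalg.eigh(Z)
    v = evecs[:, np.argmax(evals)]          # top eigenvector
    return np.where(v >= 0, 1, -1)


rng = np.random.default_rng(1)
N = 200
z = rng.choice([-1, 1], size=N)                 # ground-truth reflections
Z = np.outer(z, z)
flip = np.triu(rng.random((N, N)) < 0.2, k=1)   # corrupt about 20% of pairs
Z[flip] *= -1
Z = np.triu(Z, k=1)
Z = Z + Z.T                                     # symmetric, zero diagonal
z_hat = z2_synchronization(Z)
# Score against both z and -z because of the global sign ambiguity.
accuracy = max(np.mean(z_hat == z), np.mean(z_hat == -z))
```

Because each estimate pools all measurements through the spectrum, isolated wrong ratios are outvoted. The same idea, with 2 × 2 or 3 × 3 blocks in place of signs, underlies the SO(2) and O(3) steps.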

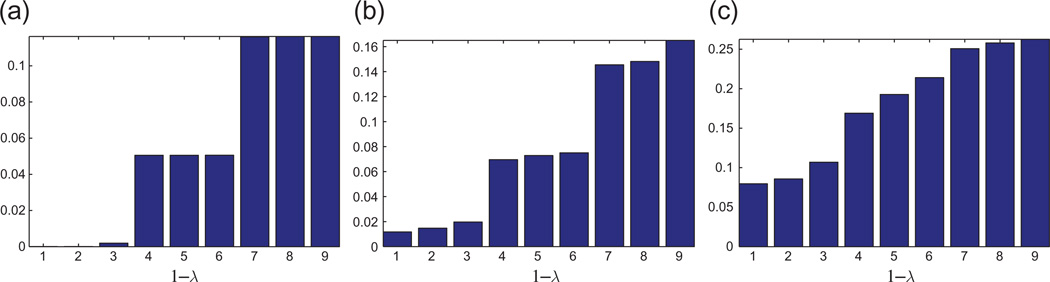

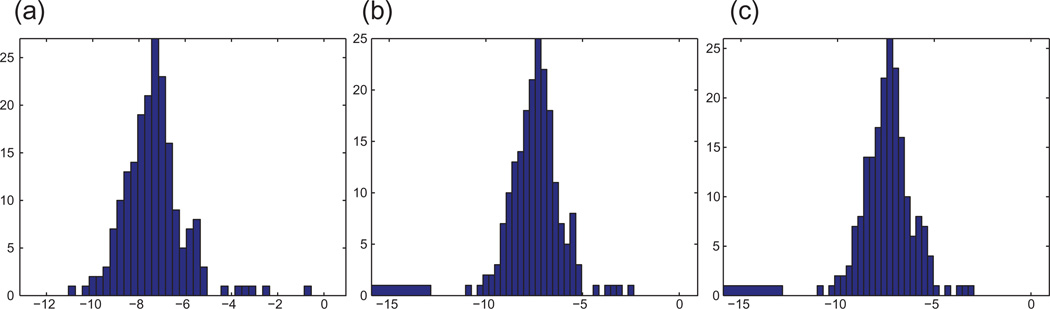

Fig. 3.

Bar plot of the top nine eigenvalues of ℋ for the UNITCUBE graph and various noise levels η. The resulting error rate τ is the percentage of patches whose reflection was incorrectly estimated. To ease the visualization of the eigenvalues of ℋ, we plot 1 − λℋ, because the top eigenvalues of ℋ tend to pile up near 1 and are hard to tell apart in a bar plot of λℋ. (a) η = 0%, τ = 0%, MSE = 6e−4. (b) η = 20%, τ = 0%, MSE = 0.05. (c) η = 40%, τ = 4%, MSE = 0.36.

In the present paper, we extend the approach used in 2D-ASAP to accommodate the additional challenges posed by rigidity theory in ℝ3 and other constraints that are specific to the molecule problem. We also improve the robustness to noise and the speed of the algorithm. The following paragraphs briefly summarize the improvements that 3D-ASAP brings, in the order in which they appear in the algorithm.

First, we address the issue of using a divide-and-conquer approach from the perspective of three-dimensional global rigidity, i.e., of decomposing the initial measurement graph into many small overlapping patches that can be uniquely localized. Necessary and sufficient conditions for two-dimensional combinatorial global rigidity have been established only recently, and they motivated our approach for building patches in 2D-ASAP [31, 35]. Owing to the recent coning result in rigidity theory [18], it is also possible to extract globally rigid patches in dimension three. However, such globally rigid patches cannot always be localized accurately by SDP algorithms, even in the case of noise-free data. To address this, we rely on the notion of unique localizability [52] to localize noise-free graphs and, for noisy data, introduce the notion of a weakly uniquely localizable (WUL) graph.

Secondly, we use a median-based denoising algorithm in the preprocessing step, which produces more accurate patch localizations overall. Our approach is based on the observation that a given edge may belong to several different patches, the localization of each of which may yield a different estimate of the edge length. The median of these estimates from the different patches is a more accurate estimator of the underlying distance.
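The core of this median step can be sketched in a few lines of standard-library Python. The input layout (one dict per localized patch, mapping an edge to its length in that patch's embedding) is a hypothetical choice for illustration, and how the denoised lengths are then fed back into the patch localizations is not shown.

```python
import statistics
from collections import defaultdict


def median_edge_distances(patch_estimates):
    """Combine per-patch estimates of the same edge length by their median.

    patch_estimates : iterable of dicts, one per localized patch, mapping an
                      edge (i, j) with i < j to the distance between i and j
                      in that patch's embedding.
    """
    samples = defaultdict(list)
    for est in patch_estimates:
        for edge, dist in est.items():
            samples[edge].append(dist)
    return {edge: statistics.median(vals) for edge, vals in samples.items()}


patches = [{(0, 1): 1.02, (1, 2): 0.97},
           {(0, 1): 0.99, (1, 2): 1.40},   # badly localized patch for (1, 2)
           {(0, 1): 1.01, (1, 2): 1.00}]
denoised = median_edge_distances(patches)
# {(0, 1): 1.01, (1, 2): 1.0}
```

Unlike the mean, the median ignores the single outlying estimate 1.40 for edge (1, 2).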

Thirdly, we incorporate into 3D-ASAP the possibility of integrating prior information. As is often the case in real applications (such as NMR), structural information is readily available on various parts of the network that we are trying to localize. For example, in the NMR application, there are often subsets of atoms (referred to as ‘molecular fragments’, by analogy with the fragment molecular orbital approach, e.g., [25]) whose relative coordinates are known a priori, and it is desirable to incorporate such information in the reconstruction process. Of course, one may always input into the problem all pairwise distances within the molecular fragments. However, this requires increased computational effort while still not taking full advantage of the available information, i.e., the orientation of the molecular fragment. Nodes that are aware of their location are often referred to as anchors, and anchor-based algorithms make use of them when computing the coordinates of the remaining sensors. Since in some applications the presence of anchors is not a realistic assumption, it is important to have efficient and noise-robust anchor-free algorithms that can also incorporate the location of anchors if provided. Note, however, that having molecular fragments is not the same as having anchors: given a set of (possibly overlapping) molecular fragments, no two of which can be joined in a globally rigid body, only one molecular fragment can be treated as anchor information (the nodes of that molecular fragment will be the anchors), as we do not know a priori how the individual molecular fragments relate to each other in the same global coordinate system.

Fourthly, we allow for the possibility of combining the first two steps (computing the reflections and rotations) into a single step, thus doing synchronization over the group of orthogonal transformations O(3) = ℤ2 × SO(3) rather than over ℤ2 followed by SO(3). However, depending on the problem being considered and the type of available information, one may choose not to combine these two steps. For example, when molecular fragments are present, we first do synchronization over ℤ2 with anchors, as detailed in Section 7, followed by synchronization over SO(3).
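The product structure O(3) = ℤ2 × SO(3) can be made explicit: every orthogonal Q factors as Q = zR with z = det Q ∈ {±1} and R ∈ SO(3), because −I is central and has determinant −1 in odd dimensions. A minimal NumPy sketch (the helper name is hypothetical) shows how an estimated element of O(3) splits into a reflection and a rotation.

```python
import numpy as np


def split_orthogonal(Q):
    """Write Q in O(3) as z * R with z = det(Q) in {+1, -1} and R in SO(3).

    This works in odd dimensions because det(-I) = -1 there, so multiplying
    an improper orthogonal matrix by -1 yields a proper rotation.
    """
    z = 1 if np.linalg.det(Q) > 0 else -1
    return z, z * Q


R = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])  # 90° about z
z, R_hat = split_orthogonal(-R)    # -R is an improper orthogonal matrix
```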

Fifthly, we incorporate another median-based heuristic in the final step, where we compute the translations of each patch by solving, using least squares, three overdetermined linear systems, one for each of the x-, y- and z-axis. For a given axis, the displacement between a pair of nodes appears in multiple patches, each resulting in a different estimation of the displacement along that axis. The median of all these different estimators from different patches provides a more accurate estimator for the displacement. In addition, after the least squares step, we introduce a simple heuristic that corrects the scaling of the noisy distance measurements. Owing to the geometric graph model assumption and the uniform noise model, the distance measurements taken as input by 3D-ASAP are significantly scaled down, and the least-squares step further shrinks the distances between nodes in the initial reconstruction.
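The translation step can be sketched as follows, assuming the patches have already been rotated and reflected, and that each edge (i, j) comes with a list of per-patch estimates of pi − pj (a hypothetical input layout). Each row of the system encodes pi − pj equal to the median estimate. A single `numpy.linalg.lstsq` call with three right-hand sides is equivalent to solving the x-, y- and z-systems separately, since they share the same matrix. Anchors and the scaling correction are omitted, and the exact system (3.20) used in the paper may differ in its details.

```python
import numpy as np


def solve_translations(n, edge_displacements):
    """Least-squares coordinates from displacement estimates, axis by axis.

    n                  : number of nodes
    edge_displacements : dict mapping edge (i, j) to a list of 3-vectors, each
                         an estimate of p_i - p_j from one patch containing
                         both nodes (after rotation and reflection)
    Returns an n x 3 array of coordinates with zero mean.
    """
    edges = list(edge_displacements)
    A = np.zeros((len(edges), n))
    b = np.zeros((len(edges), 3))
    for row, (i, j) in enumerate(edges):
        A[row, i], A[row, j] = 1.0, -1.0
        # Median over patches, separately for the x-, y- and z-components.
        b[row] = np.median(np.asarray(edge_displacements[(i, j)]), axis=0)
    # For a connected graph the null space of A is the constant vector, so the
    # minimum-norm solution fixes the free global translation at zero mean.
    P, *_ = np.linalg.lstsq(A, b, rcond=None)
    return P


# Usage: four nodes, three patch estimates per edge, one of them an outlier.
Xt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]])
est = {}
for (i, j) in [(0, 1), (0, 2), (1, 2), (2, 3)]:
    true = Xt[i] - Xt[j]
    est[(i, j)] = [true, true, true + np.array([5.0, -5.0, 5.0])]
P_hat = solve_translations(4, est)
```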

Finally, we introduce 3D-SP-ASAP, a variant of 3D-ASAP that uses a spectral partitioning algorithm in the pre-processing step of building the patches. This approach is somewhat similar to the recently proposed DIStributed COnformation (DISCO) algorithm of [42]. The philosophy behind DISCO is to recursively divide large problems into two smaller problems, thus building a binary tree of subproblems, which can ultimately be solved by traditional SDP-based localization methods. 3D-ASAP has the disadvantage of generating a number of smaller subproblems (patches) that is linear in the size of the network, and localizing all the resulting patches is computationally expensive, which is exactly the issue addressed by 3D-SP-ASAP.
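For intuition, here is a generic spectral bisection by the sign of the Fiedler vector of the graph Laplacian, the simplest form of spectral partitioning. It is a stand-in for illustration only: the partitioning actually used in 3D-SP-ASAP is described in Section 5 and may differ (for example, in the Laplacian used, the number of parts, or how overlaps are created). NumPy is assumed.

```python
import numpy as np


def spectral_bisection(A):
    """Split a connected graph in two using the sign of the Fiedler vector.

    A : symmetric n x n adjacency matrix. Returns a boolean mask for one side.
    """
    L = np.diag(A.sum(axis=1)) - A        # combinatorial graph Laplacian
    evals, evecs = np.linalg.eigh(L)      # ascending; evecs[:, 0] is constant
    fiedler = evecs[:, 1]
    return fiedler >= 0


# Usage: two 5-cliques joined by a single bridge edge.
A = np.zeros((10, 10))
A[:5, :5] = 1.0
A[5:, 5:] = 1.0
np.fill_diagonal(A, 0.0)
A[4, 5] = A[5, 4] = 1.0
side = spectral_bisection(A)
```

Applied recursively to each side, this yields the kind of binary tree of subproblems that DISCO builds.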

From a computational point of view, all steps of the algorithm can be implemented in a distributed fashion and scale linearly in the size of the network, except for the eigenvector computation, which is nearly linear.¹ We show the results of numerous numerical experiments that demonstrate the robustness of our algorithm to noise and to various topologies of the measurement graph. In all our experiments we used multiplicative and uniform noise, as detailed in equation (8.1). Throughout the paper, ANE denotes the average normalized error, introduced in (8.2), which we use to measure the accuracy of our reconstructions.

This paper is organized as follows: Section 2 is a brief survey of related approaches for solving the graph realization problem in ℝ3. Section 3 gives an overview of the 3D-ASAP algorithm that we propose. Section 4 introduces the notion of WUL graphs used for breaking up the initial large network into patches and explains the process of embedding and aligning the patches. Section 5 proposes a variant of the 3D-ASAP algorithm by using a spectral clustering algorithm as a preprocessing step in breaking up the measurement graph into patches. In Section 6, we introduce a novel median-based denoising technique that improves the localization of individual patches, as well as a heuristic that corrects the shrinkage of the distance measurements. Section 7 gives an analysis of different approaches to the synchronization problem over ℤ2 with anchor information, which is useful for incorporating molecular fragment information when estimating the reflections of the remaining patches. In Section 8, we detail the results of numerical simulations in which we tested the performance of our algorithms in comparison to existing state-of-the-art algorithms. Finally, Section 9 is a summary and a discussion of possible extensions of the algorithm and its usefulness in other applications.

2. Related work

Owing to the importance of the graph realization problem, many heuristic strategies and numerical algorithms have been proposed in the last decade. A popular approach to solving the graph realization problem is based on SDP and has attracted considerable attention in recent years [9–11, 14, 62]. We defer to Section 4.3 a description of existing SDP relaxations of the graph realization problem. Such SDP techniques are usually able to accurately localize small-to-medium-sized problems (up to a few thousand atoms). However, many protein molecules have more than 10,000 atoms, for which the SDP approach by itself is no longer computationally feasible because of its running time. In addition, the performance of the SDP methods is significantly affected by the number and location of the anchors, and by the amount of noise in the data. To overcome the computational challenges posed by the limitations of SDP solvers, several divide-and-conquer approaches have been proposed recently for the graph realization problem. One of the earlier methods appears in [13], and more recent methods include the Distributed Anchor Free Graph Localization (DAFGL) algorithm of [12] and the DISCO algorithm of [42].

One of the critical assumptions required by the distributed SDP algorithm in [13] is that there exist anchor nodes distributed uniformly throughout the physical space. The algorithm relies on the anchor nodes to divide the sensors into clusters, and solves each cluster separately via an SDP relaxation. Combining smaller subproblems can be a challenging task; however, this is not an issue if there are anchors within each smaller subproblem (as happens in the sensor network localization problem), because the solutions to the clusters induce a global positioning; in other words, the alignment process is trivially solved by the anchors within the smaller subproblems. Unfortunately, for the molecule problem, anchor information is scarce, almost non-existent, hence it becomes crucial to develop algorithms that are amenable to a distributed implementation (to allow for solving large-scale problems) despite the absence of anchor information. The DAFGL algorithm of [12] attempted to overcome this difficulty and was successfully applied to molecular conformations, where anchors are non-existent. However, the performance of DAFGL was significantly affected by the sparsity of the measurement graph, and the algorithm could tolerate only up to 5% multiplicative noise in the distance measurements.

The recent DISCO algorithm of [42] addressed some of the shortcomings of DAFGL and used a similar divide-and-conquer approach to successfully reconstruct conformations of very large molecules. At each step, DISCO checks whether the current subproblem is small enough to be solved by itself, and if so, solves it via SDP and further improves the reconstruction by gradient descent. Otherwise, the current subproblem (subgraph) is further divided into two subgraphs, each of which is then solved recursively. To combine two subgraphs into one larger subgraph, DISCO aligns the two overlapping smaller subgraphs and refines the coordinates by applying gradient descent. In general, a divide-and-conquer algorithm consists of two ingredients: dividing a bigger problem into smaller subproblems and combining the solutions of the smaller subproblems into a solution for a larger subproblem. With respect to the former aspect, DISCO minimizes the number of edges between the two subgroups (since such edges are not taken into account when localizing the two smaller subgroups), while maximizing the number of edges within subgroups, since denser graphs are easier to localize both in terms of speed and robustness to noise. As for the latter aspect, DISCO divides a group of atoms in such a way that the two resulting subgroups have many overlapping atoms. Whenever the common subgroup of atoms is accurately localized, the two subgroups can be further joined together in a robust manner. DISCO employs several heuristics that determine when the overlapping atoms are accurately localized, and whether there are atoms that cannot be localized in a given instance (they do not attach to a given subgraph in a globally rigid way). Furthermore, in terms of robustness to noise, DISCO compared favorably with the above-mentioned divide-and-conquer algorithms.

Finally, another graph realization algorithm amenable to large-scale problems is maximum variance unfolding (MVU), a non-linear dimensionality reduction technique proposed by [58]. MVU produces a low-dimensional representation of the data by maximizing the variance of its embedding while preserving the original local distance constraints. MVU builds on the SDP approach and addresses the issue of the possibly high-dimensional solution to the SDP problem. While rank constraints are non-convex and cannot be directly imposed, it has been observed that low-dimensional solutions emerge naturally when maximizing the variance of the embedding (also known as the maximum trace heuristic). A further key observation is that the coordinate vectors of the sensors are often well approximated by just the first few (e.g., 10) low-oscillatory eigenvectors of the graph Laplacian. This allows one to replace the original and possibly large-scale SDP by a much smaller SDP, which leads to a significant reduction in running time.

While there exist many other localization algorithms, we mention two more here. One of the more recent iterative algorithms that was observed to perform well in practice compared with other traditional optimization methods is a variant of the gradient descent approach called the stress majorization algorithm, also known as SMACOF [15], originally introduced in [22]. The main drawback of this approach is that its objective function (commonly referred to as the stress) is not convex, so the search for the global minimum is prone to getting stuck at local minima; as is often the case for gradient descent-based algorithms, the initial guess is therefore important for obtaining satisfactory results. DILAND, recently introduced in [40], is a distributed algorithm for localization with noisy distance measurements. Under appropriate conditions on the connectivity and triangulation of the network, DILAND was shown to converge almost surely to the true solution.
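A minimal dense sketch of stress majorization (SMACOF) with missing distances, assuming NumPy: weights Wij = 0 mark unmeasured pairs, and each iteration applies the Guttman transform X ← V⁺B(X)X, which never increases the weighted stress for a connected graph. This is the textbook update, not the implementation of [15] or [22]; it costs O(n²) memory per iteration and, as noted above, converges only to a local minimum that depends on X0.

```python
import numpy as np


def stress(X, D, W):
    """Weighted raw stress: sum over i < j of W_ij * (||x_i - x_j|| - D_ij)^2."""
    E = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    return 0.5 * np.sum(W * (E - D) ** 2)


def smacof(D, W, X0, iters=100):
    """Stress majorization with Guttman-transform updates.

    D  : n x n symmetric target distances (ignored where W_ij = 0)
    W  : n x n symmetric non-negative weights with zero diagonal
    X0 : n x dim initial configuration
    """
    V = np.diag(W.sum(axis=1)) - W
    V_pinv = np.linalg.pinv(V)
    X = X0.copy()
    for _ in range(iters):
        E = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
        with np.errstate(divide="ignore", invalid="ignore"):
            B = np.where(E > 0, -W * D / E, 0.0)   # off-diagonal entries
        np.fill_diagonal(B, 0.0)
        np.fill_diagonal(B, -B.sum(axis=1))        # rows of B sum to zero
        X = V_pinv @ B @ X                         # Guttman transform
    return X


# Usage: 12 points, about 60% of the pairs measured plus a path for connectivity.
rng = np.random.default_rng(0)
Xt = rng.random((12, 3))
D = np.linalg.norm(Xt[:, None, :] - Xt[None, :, :], axis=-1)
W = np.triu((rng.random((12, 12)) < 0.6).astype(float), k=1)
W = W + W.T
for i in range(11):
    W[i, i + 1] = W[i + 1, i] = 1.0
X0 = rng.random((12, 3))
X = smacof(D, W, X0, iters=50)
```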

3. The 3D-ASAP algorithm

3D-ASAP is a divide-and-conquer algorithm that breaks up the large graph into many smaller overlapping subgraphs, which we call patches, and ‘stitches’ them together consistently in a global coordinate system in order to localize the entire measurement graph. Unlike previous graph localization algorithms, we build patches that are WUL (a notion defined in Section 4.1 that is stronger than global rigidity²), which is required to avoid foldovers in the final solution.³ We also assume that the given measurement graph is UL to begin with; otherwise, the algorithm will discard the parts of the graph that do not attach uniquely to the rest of the graph. Alternatively, one may run the algorithm on the UL subcomponents and later piece them together using application-specific information.

We build the patches in the following way. For every node i we denote by V(i) = {j : (i, j) ∈ E} ∪ {i} the set of its neighbors together with the node itself, and by G(i) = (V(i), E(i)) its subgraph of 1-hop neighbors. If G(i) is globally rigid, which can be checked efficiently using the randomized algorithm of [27], then it has a unique embedding in ℝ3. However, embedding a globally rigid graph is NP-hard, as shown in [4, 5]. As a result, using one of the existing embedding algorithms, such as SDP, for globally rigid (sub)graphs can produce inaccurate localizations, even for noise-free data. To ensure that SDP gives the correct localization, a stronger notion of rigidity is needed, that of unique localizability [52]. However, in practical applications the distance measurements are noisy, so we introduce the notion of weakly uniquely localizable (WUL) subgraphs and use it to build patches that can be accurately localized. The exact way we break up the 1-hop neighborhood subgraphs into smaller WUL subgraphs is detailed in Section 4.1. In Section 5, we describe an alternative method for decomposing the measurement graph into patches, using a spectral-partitioning algorithm. We denote by N the number of patches obtained in the above decomposition of the measurement graph, and note that it may differ from n, the number of nodes in G, since the neighborhood graph of a node may contribute several patches or none. Also, note that the embedding of every patch in ℝ3 is given in its own local frame. To compute such an embedding, we use the following SDP-based algorithms: FULL-SDP for noise-free data [14] and SNL-SDP for noisy data [53]. Once each patch is embedded in its own coordinate system, one must find the reflections, rotations and translations that will stitch all patches together consistently, a process we refer to as synchronization.
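The first ingredient, the 1-hop neighborhood subgraphs G(i) = (V(i), E(i)), can be sketched in plain Python from an edge list with integer node labels (the function name is hypothetical). The subsequent global-rigidity test of [27] and the extraction of WUL patches (Section 4.1) are not shown.

```python
from collections import defaultdict


def one_hop_subgraphs(edges):
    """For every node i, return (V(i), E(i)): i together with its neighbors,
    and all measured edges among them (the induced subgraph G(i))."""
    nbrs = defaultdict(set)
    for i, j in edges:
        nbrs[i].add(j)
        nbrs[j].add(i)
    edge_set = {tuple(sorted(e)) for e in edges}
    result = {}
    for i in nbrs:
        Vi = nbrs[i] | {i}
        Ei = {(a, b) for a in Vi for b in Vi if a < b and (a, b) in edge_set}
        result[i] = (Vi, Ei)
    return result


# Usage: a K4 on nodes 0..3 plus a pendant node 4 attached to node 3.
edges = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3), (3, 4)]
G = one_hop_subgraphs(edges)
```

Here G(0) is the full K4 and G(3) adds the pendant edge (3, 4), while G(4) consists of the single edge (3, 4), which is not rigid in ℝ3 and would contribute no patch.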

We denote the resulting patches by P1, P2,…, PN. To every patch Pi there corresponds an element ei ∈ Euc(3), where Euc(3) is the Euclidean group of rigid motions in ℝ3. The rigid motion ei moves patch Pi to its correct position with respect to the global coordinate system. Our goal is to estimate the rigid motions e1,…, eN (up to a global rigid motion) that will properly align all the patches in a globally consistent way. To achieve this goal, we first estimate the alignment between any pair of patches Pi and Pj that have enough nodes in common, a procedure detailed in Section 4.5. The alignment of patches Pi and Pj provides a (perhaps noisy) measurement for the ratio $e_i e_j^{-1}$ in Euc(3). We solve the resulting synchronization problem in a globally consistent manner, such that information from local alignments propagates to pairs of non-overlapping patches. This is done by replacing the synchronization problem over Euc(3) by two consecutive synchronization problems.
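A pairwise alignment can be sketched with the standard orthogonal Procrustes solution via the SVD, omitting the determinant correction of the Kabsch algorithm so that reflections are allowed. This is an illustrative stand-in, not necessarily the procedure of Section 4.5. The returned O maps coordinates in Pj's frame onto Pi's frame; its identification with hij depends on the convention adopted for the hi. NumPy is assumed.

```python
import numpy as np


def align_patches(X, Y):
    """Orthogonal Procrustes with translation, reflections allowed.

    X, Y : k x 3 coordinates of the same k common nodes, in the local frames
           of patches Pi and Pj, respectively.
    Returns (O, t) with O in O(3) (det may be -1) minimizing
    sum_k ||x_k - (O y_k + t)||^2.
    """
    mx, my = X.mean(axis=0), Y.mean(axis=0)
    M = (X - mx).T @ (Y - my)          # 3 x 3 cross-covariance
    U, _, Vt = np.linalg.svd(M)
    O = U @ Vt                         # no det fix, so reflections are kept
    t = mx - O @ my
    return O, t


# Usage: noise-free common nodes related by a reflection plus a translation.
rng = np.random.default_rng(0)
Y = rng.normal(size=(6, 3))
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
if np.linalg.det(Q) > 0:
    Q[:, 0] *= -1                      # force an improper orthogonal matrix
t_true = np.array([1.0, -2.0, 0.5])
X = Y @ Q.T + t_true
O, t = align_patches(X, Y)
```

At least four non-coplanar common nodes are needed: three common nodes always lie in a plane, and reflecting through that plane fits them equally well, so the reflection would be undetermined.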

In the first synchronization problem, we simultaneously find the reflections and rotations of all the patches using the eigenvector synchronization algorithm over the group O(3) of orthogonal matrices. When prior information on the reflections of some patches is available, one may choose to replace this first step by two consecutive synchronization problems, i.e., first estimate the missing reflections by doing synchronization over ℤ2 with molecular fragment information, as described in Section 7, and then solve another synchronization problem over SO(3) to estimate the rotations of all patches. Once both reflections and rotations are estimated, we estimate the translations by solving an overdetermined linear system. Taken as a whole, the algorithm integrates all the available local information into a global coordinate system over several steps, using the eigenvector synchronization algorithm and least squares over the isometries of the Euclidean space. The main advantage of the eigenvector method is that it can recover the reflections and rotations even if many of the pairwise alignments are incorrect. The algorithm is summarized in Table 1.

Table 1.

Overview of the 3D-ASAP algorithm

| Stage | Operation |
|---|---|
| Input | G = (V, E), \|V\| = n, \|E\| = m, dij for (i, j) ∈ E |
| Pre-processing step | 1. Break the measurement graph G into N WUL patches P1,…, PN. |
| Patch localization | 2. Embed each patch Pi separately using FULL-SDP (for noise-free data), SNL-SDP (for noisy data) or cMDS (for complete patches). |
| Step 1: Estimating reflections and rotations | 1. Align all pairs of patches (Pi, Pj) that have enough nodes in common. |
| | 2. Estimate their relative rotation and possibly reflection hij ∈ O(3). |
| | 3. Build a sparse 3N × 3N symmetric matrix H = (hij) as defined in (3.1). |
| | 4. Define ℋ = D⁻¹H, where D is a diagonal matrix with $D_{3i-2,3i-2} = D_{3i-1,3i-1} = D_{3i,3i} = \deg(i)$, the degree of patch Pi in the patch graph, for i = 1,…, N. |
| | 5. Compute the top three eigenvectors $v_1^{\mathcal{H}}, v_2^{\mathcal{H}}, v_3^{\mathcal{H}}$ of ℋ, satisfying $\mathcal{H} v_k^{\mathcal{H}} = \lambda_k^{\mathcal{H}} v_k^{\mathcal{H}}$ for k = 1, 2, 3. |
| | 6. Estimate the global reflection and rotation of patch Pi by the orthogonal matrix ĥi that is closest to h̃i in Frobenius norm, where h̃i is the 3 × 3 submatrix corresponding to the ith patch in the 3N × 3 matrix $[v_1^{\mathcal{H}}\ v_2^{\mathcal{H}}\ v_3^{\mathcal{H}}]$ formed by the top three eigenvectors. |
| | 7. Update the current embedding of patch Pi by applying the orthogonal transformation ĥi obtained above (rotation and possibly reflection). |
| Step 2: Estimating translations | 1. Build the m × n overdetermined system of linear equations given in (3.20), after applying the median-based denoising heuristic. |
| | 2. Include the anchor information (if available) in the linear system. |
| | 3. Compute the least squares solution for the x-axis, y-axis and z-axis coordinates. |
| Output | Estimated coordinates p̂1,…, p̂n |
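Steps 3 to 6 of Step 1 can be sketched with NumPy as follows. Two choices are our own assumptions: the diagonal blocks of H are left at zero, and the data are synthetic and noise-free, with hij = gi gjᵀ on a random connected patch graph. Since ℋ = D⁻¹H is not symmetric, the sketch diagonalizes the similar symmetric matrix D^{-1/2}HD^{-1/2} and maps its eigenvectors back. Each 3 × 3 block is then rounded to the closest orthogonal matrix via the SVD. For large N, a sparse eigensolver such as `scipy.sparse.linalg.eigsh` would replace the dense `eigh`.

```python
import numpy as np


def o3_synchronization(H, deg):
    """Eigenvector synchronization over O(3), mirroring steps 3-6 of Table 1.

    H   : 3N x 3N symmetric matrix; block (i, j) holds h_ij for aligned patch
          pairs and is zero otherwise (including the diagonal blocks here)
    deg : length-N array; deg[i] is the degree of patch i in the patch graph
    Returns an (N, 3, 3) array of orthogonal matrices, determined only up to
    a common right-multiplication by a global orthogonal matrix.
    """
    N = len(deg)
    d = np.repeat(np.asarray(deg, dtype=float), 3)       # diagonal of D
    # D^{-1} H is similar to the symmetric D^{-1/2} H D^{-1/2}; if u is an
    # eigenvector of the latter, D^{-1/2} u is an eigenvector of D^{-1} H.
    S = H / np.sqrt(np.outer(d, d))
    evals, evecs = np.linalg.eigh(S)
    top = evecs[:, np.argsort(evals)[::-1][:3]] / np.sqrt(d)[:, None]
    h_hat = np.empty((N, 3, 3))
    for i in range(N):
        U, _, Vt = np.linalg.svd(top[3 * i:3 * i + 3])   # block h~_i
        h_hat[i] = U @ Vt    # closest orthogonal matrix in Frobenius norm
    return h_hat


# Usage on noise-free synthetic data with random orthogonal ground truth g_i.
rng = np.random.default_rng(0)
N = 12
g = np.array([np.linalg.qr(rng.normal(size=(3, 3)))[0] for _ in range(N)])
A = np.triu((rng.random((N, N)) < 0.5).astype(float), k=1)
for i in range(N - 1):
    A[i, i + 1] = 1.0        # a path through all patches keeps G_P connected
A = A + A.T
H = np.zeros((3 * N, 3 * N))
for i in range(N):
    for j in range(N):
        if A[i, j]:
            H[3 * i:3 * i + 3, 3 * j:3 * j + 3] = g[i] @ g[j].T
h_hat = o3_synchronization(H, A.sum(axis=1))
O = g[0].T @ h_hat[0]        # the global orthogonal ambiguity
```

In this noise-free setting every estimate satisfies ĥi = gi O for one common orthogonal O. With noisy hij, the top eigenvectors are only approximately of this form, and the SVD rounding absorbs part of the error.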

3.1 Step 1: Synchronization over O(3) to estimate reflections and rotations

As mentioned earlier, for every patch Pi that has already been embedded in its local frame, we need to estimate whether or not it needs to be reflected with respect to the global coordinate system, and which rotation aligns it in that coordinate system. In 2D-ASAP, we first estimated the reflections and, based on them, the rotations. However, it is natural to ask whether one can combine the two steps and perhaps further increase the algorithm's robustness to noise. By doing so, information contained in the pairwise rotation matrices helps in better estimating the reflections and, vice versa, information on the pairwise reflections between patches helps in improving the estimated rotations. Combining these two steps also halves the computational effort, since we need to run the eigenvector synchronization algorithm only once.

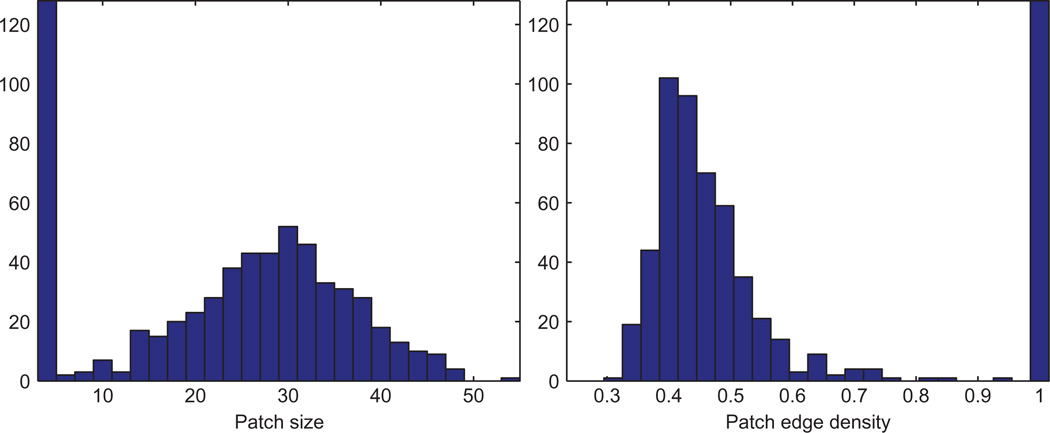

We denote the orthogonal transformation of patch Pi by hi ∈ O(3), which is defined up to a global orthogonal transformation (rotation and possibly reflection). The alignment of every pair of patches Pi and Pj whose intersection is sufficiently large provides a measurement hij (a 3 × 3 orthogonal matrix) for the ratio $h_i h_j^{-1} = h_i h_j^\top$. However, some ratio measurements can be corrupted by errors in the embedding of the patches caused by noise in the measured distances. We denote by GP = (VP, EP) the patch graph whose vertices VP are the patches P1,…, PN, where two patches Pi and Pj are adjacent, (Pi, Pj) ∈ EP, iff they have enough vertices in common to be aligned such that the ratio $h_i h_j^{-1}$ can be estimated. We let AP denote the adjacency matrix of the patch graph, i.e., $A^P_{ij} = 1$ if (Pi, Pj) ∈ EP, and $A^P_{ij} = 0$ otherwise. Obviously, two patches that are far apart and have no common nodes cannot be aligned, and there must be enough⁴ overlapping nodes to make the alignment possible. Figures 4 and 5 show a typical example of the sizes of the patches we consider, as well as their intersection sizes.
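As an illustration of how AP can be assembled, the sketch below assumes patches are given as lists of node indices and declares two patches adjacent when they share at least four nodes (the overlap size mentioned in the caption of Fig. 5; the exact criterion of footnote 4 is not reproduced here). The function name `patch_graph` and the `min_overlap` parameter are hypothetical.

```python
import numpy as np


def patch_graph(patches, min_overlap=4):
    """Adjacency matrix A^P of the patch graph G^P.

    Patches i and j are declared adjacent when they share at least
    `min_overlap` nodes (an assumed alignment criterion).
    """
    sets = [set(p) for p in patches]
    N = len(sets)
    A = np.zeros((N, N), dtype=int)
    for i in range(N):
        for j in range(i + 1, N):
            if len(sets[i] & sets[j]) >= min_overlap:
                A[i, j] = A[j, i] = 1
    return A


# Four toy patches: P0-P1 and P0-P3 share four nodes, all other pairs fewer.
patches = [[0, 1, 2, 3, 4], [1, 2, 3, 4, 5], [4, 5, 6, 7], [0, 1, 2, 3, 8]]
A = patch_graph(patches)
print(A)
```

The double loop is quadratic in the number of patches; for large N one would rather index the patches by node and only compare patches that share a node.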

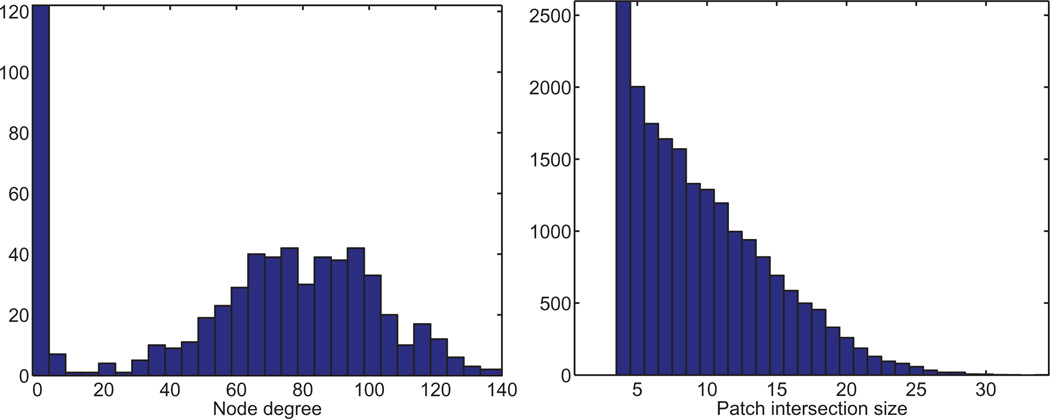

Fig. 4.

Histogram of patch sizes (left) and edge density (right) in the BRIDGE-DONUT graph with n = 500 and deg = 18. Note that a large number of the resulting patches have size 4 and are complete graphs on four nodes (K4), which explains the equally large number of patches with edge density 1.

Fig. 5.

Histogram of the node degrees of patches in the patch graph GP (left) and the intersection size of patches (right), in the BRIDGE-DONUT graph with n=500 and deg=18. GP has N =615 nodes (i.e., patches) and average degree 24, meaning that, on average, a patch overlaps (in at least four nodes) with 24 other patches.

The first step of 3D-ASAP is to estimate the appropriate rotation and reflection of each patch. To that end, we use the eigenvector synchronization method, as it was shown to perform well even in the presence of a large number of errors. The eigenvector method starts off by building the following 3N × 3N sparse symmetric matrix H = (hij), where hij is the 3 × 3 orthogonal matrix that aligns patches Pi and Pj (note that hji = hij⊤, so H is symmetric):

$$H_{ij} = \begin{cases} h_{ij} & \text{if } (i,j) \in E^P, \\ 0_{3\times 3} & \text{if } (i,j) \notin E^P. \end{cases} \tag{3.1}$$

We explain in more detail in Section 4.5 the procedure by which we align pairs of patches, if such an alignment is at all possible.

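Prior to computing the top eigenvectors of the matrix H, as introduced originally in [49], we choose to use the following normalization (similar to 2D-ASAP in [21]). Let D be a 3N × 3N diagonal matrix,⁵ whose entries are given by $D_{3i-2,3i-2} = D_{3i-1,3i-1} = D_{3i,3i} = \deg(i)$, for i = 1,…,N. We define the matrix

$$\mathcal{H} = D^{-1}H, \tag{3.2}$$

which is similar to the symmetric matrix $D^{-1/2}HD^{-1/2}$ through

$$\mathcal{H} = D^{-1/2}\left(D^{-1/2}HD^{-1/2}\right)D^{1/2}.$$

Therefore, ℋ has 3N real eigenvalues $\lambda_1^{\mathcal{H}} \ge \lambda_2^{\mathcal{H}} \ge \cdots \ge \lambda_{3N}^{\mathcal{H}}$ with 3N corresponding linearly independent eigenvectors $v_1^{\mathcal{H}}, \dots, v_{3N}^{\mathcal{H}}$, which are orthogonal with respect to the inner product $\langle x, y\rangle_D = x^\top D y$ and satisfy $\mathcal{H} v_i^{\mathcal{H}} = \lambda_i^{\mathcal{H}} v_i^{\mathcal{H}}$. As shown in the next paragraphs, in the noise-free case, $\lambda_1^{\mathcal{H}} = \lambda_2^{\mathcal{H}} = \lambda_3^{\mathcal{H}} = 1$, and furthermore, if the patch graph is connected, then $\lambda_4^{\mathcal{H}} < 1$. We define the estimated orthogonal transformations ĥ1,…,ĥN ∈ O(3) using the top three eigenvectors $v_1^{\mathcal{H}}, v_2^{\mathcal{H}}, v_3^{\mathcal{H}}$, following the approach used in [50].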

Let us now show that, in the noise-free case, the top three eigenvectors of ℋ perfectly recover the unknown group elements. We denote by hi the 3 × 3 matrix corresponding to the ith submatrix in the 3N × 3 matrix $[v_1^{\mathcal{H}}\; v_2^{\mathcal{H}}\; v_3^{\mathcal{H}}]$. In the noise-free case, hi is an orthogonal matrix and represents the solution that aligns patch Pi in the global coordinate system, up to a global orthogonal transformation. To see this, we first let h denote the 3N × 3 matrix formed by concatenating the true orthogonal transformation matrices h1,…, hN. Note that when the patch graph GP is complete, H is a rank 3 matrix since H = hh⊤, and its top three eigenvectors are given by the columns of h, because $h^\top h = \sum_{i=1}^N h_i^\top h_i = NI_3$ implies

$$Hh = hh^\top h = Nh. \tag{3.3}$$

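In the general case when GP is a sparse connected graph, note that, denoting by $(\mathcal{H}h)_i$ the ith 3 × 3 block of $\mathcal{H}h$,

$$(\mathcal{H}h)_i = \frac{1}{\deg(i)}\sum_{j:\,(i,j)\in E^P} h_{ij}h_j = \frac{1}{\deg(i)}\sum_{j:\,(i,j)\in E^P} h_i h_j^\top h_j = h_i, \qquad i = 1,\dots,N, \tag{3.4}$$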

and thus the three columns of h are eigenvectors of ℋ associated with the same eigenvalue λ = 1, whose multiplicity is therefore at least 3. It remains to show that this is the largest eigenvalue of ℋ and that, when GP is connected, its multiplicity is exactly 3. We recall that the adjacency matrix of GP is AP, and denote by 𝒜P the 3N × 3N matrix built by replacing each entry of value 1 in AP by the identity matrix I3, i.e., 𝒜P = AP ⊗ I3, where ⊗ denotes the tensor (Kronecker) product of two matrices. As a consequence, the eigenvalues of 𝒜P are the products of the eigenvalues of AP and I3 (i.e., those of AP, each repeated three times), and the corresponding eigenvectors of 𝒜P are the tensor products of the eigenvectors of AP and I3. Furthermore, if we let Δ denote the N × N diagonal matrix with Δii = deg(i), for i = 1,…,N, so that D = Δ ⊗ I3, it holds that

$$D^{-1}\mathcal{A}^P = (\Delta \otimes I_3)^{-1}(A^P \otimes I_3) = \Delta^{-1}A^P \otimes I_3, \tag{3.5}$$

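and thus the eigenvalues of D⁻¹𝒜P are the same as the eigenvalues of Δ⁻¹AP, each with multiplicity 3. In addition, if Υ denotes the 3N × 3N block-diagonal matrix with diagonal blocks hi, i = 1,…,N, then, since in the noise-free case H = Υ𝒜PΥ⊤ (the (i, j) block of the right-hand side is $A^P_{ij}h_ih_j^\top$) and D commutes with Υ, the normalized alignment matrix ℋ can be written as

$$\mathcal{H} = D^{-1}\Upsilon\mathcal{A}^P\Upsilon^\top = \Upsilon\left(D^{-1}\mathcal{A}^P\right)\Upsilon^{-1}, \tag{3.6}$$

and thus ℋ and D⁻¹𝒜P have the same eigenvalues, which are also the eigenvalues of Δ⁻¹AP, each with multiplicity 3. Whenever it is clear from the context, we will from now on omit the remark about the multiplicity 3. Since the normalized discrete graph Laplacian ℒ is defined as

$$\mathcal{L} = I - \Delta^{-1}A^P, \tag{3.7}$$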

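it follows that, in the noise-free case, the eigenvalues of I − ℋ are the same as the eigenvalues of ℒ. These eigenvalues are all non-negative, since ℒ is similar to the positive semidefinite matrix $I - \Delta^{-1/2}A^P\Delta^{-1/2}$, whose non-negativity follows from the identity

$$x^\top\left(I - \Delta^{-1/2}A^P\Delta^{-1/2}\right)x = \sum_{(i,j)\in E^P}\left(\frac{x_i}{\sqrt{\deg(i)}} - \frac{x_j}{\sqrt{\deg(j)}}\right)^2 \ge 0, \qquad x \in \mathbb{R}^N,$$

where each edge of GP is counted once.

In other words,

$$\lambda_{3i-2}^{\mathcal{H}} = \lambda_{3i-1}^{\mathcal{H}} = \lambda_{3i}^{\mathcal{H}} = 1 - \lambda_i^{\mathcal{L}} \le 1, \qquad i = 1,\dots,N, \tag{3.8}$$

where the eigenvalues of ℒ are ordered in increasing order, i.e., $0 = \lambda_1^{\mathcal{L}} \le \lambda_2^{\mathcal{L}} \le \cdots \le \lambda_N^{\mathcal{L}}$. If the patch graph GP is connected, then the eigenvalue $\lambda_1^{\mathcal{L}} = 0$ is simple (thus $\lambda_2^{\mathcal{L}} > 0$) and its corresponding eigenvector is the all-ones vector 1 = (1, 1,…, 1)⊤. Therefore, the largest eigenvalue of ℋ equals 1 and has multiplicity 3, i.e., $\lambda_1^{\mathcal{H}} = \lambda_2^{\mathcal{H}} = \lambda_3^{\mathcal{H}} = 1$ and $\lambda_4^{\mathcal{H}} < 1$. This concludes our proof that, in the noise-free case, the top three eigenvectors of ℋ perfectly recover the true solution h1,…, hN ∈ O(3), up to a global orthogonal transformation.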
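The argument above can be checked numerically. The sketch below is only a sanity check under illustrative assumptions (a cycle-plus-chord patch graph and random orthogonal hi, none of which come from the paper's experiments): it builds the noise-free H, and verifies that the top three eigenvalues of ℋ equal 1, that the fourth is smaller, and that the spectrum of I − ℋ is the spectrum of ℒ with every eigenvalue repeated three times.

```python
import numpy as np

rng = np.random.default_rng(0)


def random_orthogonal(rng):
    # Polar factor of a Gaussian matrix: orthogonal, determinant +1 or -1
    U, _, Vt = np.linalg.svd(rng.standard_normal((3, 3)))
    return U @ Vt


# A small connected patch graph: a cycle on N nodes plus one chord.
N = 8
A = np.zeros((N, N))
for i in range(N):
    A[i, (i + 1) % N] = A[(i + 1) % N, i] = 1
A[0, 4] = A[4, 0] = 1
deg = A.sum(axis=1)

# Noise-free alignment matrix H with blocks h_ij = h_i h_j^T on the edges.
h = [random_orthogonal(rng) for _ in range(N)]
H = np.zeros((3 * N, 3 * N))
for i in range(N):
    for j in range(N):
        if A[i, j]:
            H[3*i:3*i+3, 3*j:3*j+3] = h[i] @ h[j].T

# D^{-1}H and I - Δ^{-1}A are similar to the symmetric matrices below,
# so their real spectra can be computed with eigvalsh.
d = np.repeat(deg, 3)
eig_H = np.linalg.eigvalsh(H / np.sqrt(np.outer(d, d)))[::-1]       # decreasing
eig_L = np.linalg.eigvalsh(np.eye(N) - A / np.sqrt(np.outer(deg, deg)))  # increasing

print(eig_H[:4])                                     # three ones, then a value < 1
print(np.allclose(1 - eig_H, np.repeat(eig_L, 3)))   # each Laplacian eigenvalue 3 times
```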

However, when the distance measurements are noisy and the pairwise alignments between patches are inaccurate, an estimated transformation h̃i (the ith 3 × 3 block of the 3N × 3 matrix formed by the top three eigenvectors of ℋ) may not coincide with hi, and in fact may not even be an orthogonal transformation. For that reason, we estimate hi by the orthogonal matrix closest to h̃i in the Frobenius matrix norm⁶

$$\hat h_i = \operatorname*{argmin}_{X \in O(3)} \|X - \tilde h_i\|_F. \tag{3.9}$$

We compute this closest matrix using the well-known procedure (e.g., [3]) ĥi = UiVi⊤, where h̃i = UiΣiVi⊤ is the singular value decomposition of h̃i; see also [24, 39]. Note that the orthogonal transformations of the patches are estimated only up to a global orthogonal transformation (i.e., a global rotation and reflection with respect to the original embedding). Also, the only difference between this step and the angular synchronization algorithm in [49] is the normalization of the matrix prior to the computation of the top eigenvectors. The usefulness of this normalization was first demonstrated in 2D-ASAP, in the synchronization process over ℤ2 and SO(2). In very recent work, the authors of [7] prove performance guarantees for the above synchronization algorithm in terms of the eigenvalues of I − ℋ (the normalized graph connection Laplacian) and the second eigenvalue of ℒ (the normalized classical graph Laplacian).
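The whole step can be sketched in a few lines of NumPy. This is an illustration, not the reference 3D-ASAP implementation: it assumes the pairwise measurements are stored in a dictionary keyed by patch-graph edges (each edge listed once), obtains the top eigenvectors of ℋ = D⁻¹H through the similar symmetric matrix D^{-1/2}HD^{-1/2}, and rounds each 3 × 3 block with the SVD. The function names `closest_orthogonal` and `synchronize_o3` are hypothetical.

```python
import numpy as np


def closest_orthogonal(M):
    """Closest orthogonal matrix to M in Frobenius norm: U V^T from M = U S V^T."""
    U, _, Vt = np.linalg.svd(M)
    return U @ Vt


def synchronize_o3(N, ratios):
    """Eigenvector synchronization over O(3).

    ratios: dict mapping each patch-graph edge (i, j), listed once, to the
    measured 3x3 matrix h_ij ~ h_i h_j^T. Returns estimates of h_1..h_N,
    up to a common orthogonal transformation.
    """
    H = np.zeros((3 * N, 3 * N))
    deg = np.zeros(N)
    for (i, j), hij in ratios.items():
        H[3*i:3*i+3, 3*j:3*j+3] = hij
        H[3*j:3*j+3, 3*i:3*i+3] = hij.T       # h_ji = h_ij^T keeps H symmetric
        deg[i] += 1
        deg[j] += 1
    d = np.repeat(deg, 3)
    # D^{-1}H is similar to S = D^{-1/2} H D^{-1/2}: if S u = lam u,
    # then v = D^{-1/2} u satisfies D^{-1}H v = lam v.
    S = H / np.sqrt(np.outer(d, d))
    _, U = np.linalg.eigh(S)                   # eigenvalues in increasing order
    V = U[:, -3:] / np.sqrt(d)[:, None]        # top three eigenvectors of D^{-1}H
    # Round each 3x3 block to the nearest orthogonal matrix.
    return [closest_orthogonal(V[3*i:3*i+3]) for i in range(N)]


# Noise-free check on a cycle with two chords.
rng = np.random.default_rng(1)
N = 10
h = [closest_orthogonal(rng.standard_normal((3, 3))) for _ in range(N)]
edges = [(i, (i + 1) % N) for i in range(N)] + [(0, 5), (2, 7)]
ratios = {(i, j): h[i] @ h[j].T for i, j in edges}
h_hat = synchronize_o3(N, ratios)
err = max(np.abs(h_hat[i] @ h_hat[j].T - h[i] @ h[j].T).max()
          for i in range(N) for j in range(N))
print(err)
```

The usage lines compare the ratios ĥiĥj⊤ with hihj⊤ rather than ĥi with hi, since the ratios are exactly what is left invariant by the global orthogonal ambiguity. For large N, a sparse matrix and a sparse eigensolver (for instance `scipy.sparse.linalg.eigsh`) would be the natural replacement for the dense `eigh` call.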

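We use the mean-squared error (MSE) to measure the accuracy of this step of the algorithm in estimating the orthogonal transformations. To that end, we look for an optimal orthogonal transformation Ô ∈ O(3) that minimizes the sum of squared distances between the estimated orthogonal transformations and the true ones:

$$\hat O = \operatorname*{argmin}_{O \in O(3)} \sum_{i=1}^N \|h_i - \hat h_i O\|_F^2, \qquad \mathrm{MSE} = \frac{1}{N}\sum_{i=1}^N \|h_i - \hat h_i \hat O\|_F^2. \tag{3.10}$$

In other words, Ô is the optimal solution to the registration problem between two sets of orthogonal transformations in the least-squares sense. Following the analysis of [50], we make use of properties of the trace such as Tr(AB) = Tr(BA) and Tr(A) = Tr(A⊤), and notice that, since hi and ĥi are orthogonal,

$$\sum_{i=1}^N \|h_i - \hat h_i O\|_F^2 = \sum_{i=1}^N \operatorname{Tr}\left[(h_i - \hat h_i O)^\top (h_i - \hat h_i O)\right] = 6N - 2\operatorname{Tr}\Big(O\sum_{i=1}^N h_i^\top \hat h_i\Big). \tag{3.11}$$

If we let Q denote the 3 × 3 matrix

$$Q = \frac{1}{N}\sum_{i=1}^N h_i^\top \hat h_i, \tag{3.12}$$

it follows from (3.11) that the MSE is given by

$$\mathrm{MSE} = \min_{O \in O(3)} \big(6 - 2\operatorname{Tr}(OQ)\big). \tag{3.13}$$

In [3] it is proved that Tr(OQ) ≤ Tr(VU⊤Q) for all O ∈ O(3), where Q = UΣV⊤ is the singular value decomposition of Q. Therefore, the MSE is minimized by the orthogonal matrix Ô = VU⊤ and is given by

$$\mathrm{MSE} = 6 - 2\operatorname{Tr}(VU^\top U\Sigma V^\top) = 6 - 2(\sigma_1 + \sigma_2 + \sigma_3), \tag{3.14}$$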

where σ1, σ2, σ3 are the singular values of Q. Therefore, whenever Q is an orthogonal matrix, i.e., σ1 = σ2 = σ3 = 1, the MSE vanishes. Indeed, the numerical experiments in Table 2 confirm that for noise-free data, the MSE is very close to zero. To illustrate the success of the eigenvector method in estimating the reflections, we also compute τ, the percentage of patches whose reflection was incorrectly estimated. Finally, the last two columns in Table 2 show the recovery errors when, instead of synchronizing over O(3), we first synchronize over ℤ2 and then over SO(3).
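The closed form for the MSE is straightforward to evaluate. The sketch below assumes the registration is written as a minimization of Σ‖hi − ĥiO‖²F over O ∈ O(3) (the global ambiguity acts on the right, because the measured ratios hihj⊤ are unchanged by hi ↦ hiO); the function names `o3_mse` and `polar` are hypothetical. It also checks that 6 − 2(σ1 + σ2 + σ3) agrees with the direct definition on perturbed estimates.

```python
import numpy as np


def o3_mse(h_true, h_est):
    """MSE after optimal registration of the estimated O(3) elements.

    Q = (1/N) sum_i h_i^T hhat_i; with Q = U S V^T the optimal registration
    is O_hat = V U^T, and MSE = 6 - 2 (s1 + s2 + s3).
    """
    N = len(h_true)
    Q = sum(hi.T @ hh for hi, hh in zip(h_true, h_est)) / N
    U, sigma, Vt = np.linalg.svd(Q)
    O_hat = Vt.T @ U.T
    return 6.0 - 2.0 * sigma.sum(), O_hat


def polar(M):
    # Closest orthogonal matrix to M in Frobenius norm
    U, _, Vt = np.linalg.svd(M)
    return U @ Vt


rng = np.random.default_rng(3)
h = [polar(rng.standard_normal((3, 3))) for _ in range(50)]
O0 = polar(rng.standard_normal((3, 3)))

# Exact recovery up to a global orthogonal transformation: MSE is (numerically) zero.
mse_exact, _ = o3_mse(h, [hi @ O0 for hi in h])

# Perturbed estimates: the closed form matches the direct definition.
h_noisy = [polar(hi @ O0 + 0.2 * rng.standard_normal((3, 3))) for hi in h]
mse_noisy, O_hat = o3_mse(h, h_noisy)
direct = np.mean([np.linalg.norm(hi - hh @ O_hat, "fro") ** 2
                  for hi, hh in zip(h, h_noisy)])
print(mse_exact, mse_noisy, direct)
```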

Table 2.

The errors in estimating the reflections and rotations when aligning the N = 200 patches resulting from the UNITCUBE graph on n = 212 vertices, at various levels of noise η. We use τ to denote the percentage of patches whose reflection was incorrectly estimated.

| η (%) | O(3): τ (%) | O(3): MSE | ℤ2 and SO(3): τ (%) | ℤ2 and SO(3): MSE |
|---|---|---|---|---|
| 0 | 0 | 6e−4 | 0 | 7e−4 |
| 10 | 0 | 0.01 | 0 | 0.01 |
| 20 | 0 | 0.05 | 0 | 0.05 |
| 30 | 5.8 | 0.35 | 5.3 | 0.32 |
| 40 | 4 | 0.36 | 5 | 0.40 |
| 50 | 7.4 | 0.65 | 9 | 0.68 |

3.2 Step 2: Synchronization over ℝ3 to estimate translations

The final step of the 3D-ASAP algorithm is computing the global translations of all patches and recovering the true coordinates. For each patch Pk, we denote by Gk = (Vk, Ek)7 the graph associated to patch Pk, where Vk is the set of nodes in Pk, and Ek is the set of edges induced by Vk in the measurement graph G =(V, E).

We denote by $p_i^{(k)} = (x_i^{(k)}, y_i^{(k)}, z_i^{(k)})^\top$ the known local frame coordinates of node i ∈ Vk in the embedding of patch Pk (see Fig. 6).

Fig. 6.

An embedding of a patch Pk in its local coordinate system (frame) after it was appropriately reflected and rotated. In the noise-free case, the coordinates $p_i^{(k)}$ agree with the global positioning pi = (xi, yi, zi)⊤ up to some translation t(k) (the same for all i in Vk).

At this stage of the algorithm, each patch Pk has been properly reflected and rotated so that the local frame coordinates are consistent with the global coordinates, up to a translation t(k) ∈ ℝ3. In the noise-free case, we should therefore have

$$p_i = p_i^{(k)} + t^{(k)}, \qquad i \in V_k,\; k = 1,\dots,N. \tag{3.15}$$

We can estimate the global coordinates p1,…, pn as the least-squares solution to the overdetermined system of linear equations (3.15), while ignoring the by-product translations t(1),…, t(N). In practice, we write a linear system for the displacement vectors pi − pj for which the translations have been eliminated. Indeed, from (3.15) it follows that each edge (i, j) ∈ Ek contributes a linear equation of the form8

$$p_i - p_j = p_i^{(k)} - p_j^{(k)}, \qquad (i,j) \in E_k,\; k = 1,\dots,N. \tag{3.16}$$

In terms of the x, y and z global coordinates of nodes i and j, (3.16) is equivalent to

$$x_i - x_j = x_i^{(k)} - x_j^{(k)}, \qquad (i,j) \in E_k,\; k = 1,\dots,N, \tag{3.17}$$

and similarly for the y and z equations. We solve these three linear systems separately, and recover the coordinates x1,…, xn, y1,…, yn and z1,…, zn. Let T be the least-squares matrix associated with the overdetermined linear system in (3.17), x be the n × 1 vector representing the x-coordinates of all nodes, and bx be the vector with entries given by the right-hand side of (3.17). Using this notation, the system of equations given by (3.17) can be written as

$$Tx = b_x, \tag{3.18}$$

and similarly for the y and z coordinates. Note that the matrix T is sparse with only two non-zero entries per row and that the all-ones vector 1=(1, 1,…, 1) ⊤ is in the null space of T, i.e., T1=0, so we can find the coordinates only up to a global translation.

To avoid building a very large least-squares matrix, we combine the information provided by the same edges across different patches in only one equation, as opposed to having one equation per patch. In 2D-ASAP [21], this was achieved by adding up all equations of the form (3.17) corresponding to the same edge (i, j) from different patches, into a single equation, i.e.,

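$$\sum_{k:\,(i,j)\in E_k}(x_i - x_j) = \sum_{k:\,(i,j)\in E_k}\left(x_i^{(k)} - x_j^{(k)}\right), \tag{3.19}$$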

and similarly for the y- and z-coordinates. For very noisy distance measurements, the displacements will also be corrupted by noise, and the motivation for (3.19) was that adding up such noisy values averages out the noise. However, as the noise level increases, some of the embedded patches will be highly inaccurate and will thus generate outliers in the list of displacements above. To make this step of the algorithm more robust to outliers, instead of averaging over all displacements, we select the median value of the displacements and use it to build the least-squares matrix

$$x_i - x_j = \operatorname*{median}_{k:\,(i,j)\in E_k}\left(x_i^{(k)} - x_j^{(k)}\right). \tag{3.20}$$

We denote the resulting m × n matrix by T̃, and its m × 1 right-hand-side vector by b̃x. Note that T̃ has only two non-zero entries per row,⁹ T̃e,i = 1 and T̃e,j = −1, where e is the row index corresponding to the edge (i, j). The least-squares solution p̂ = (p̂1,…, p̂n) to

$$\tilde T x = \tilde b_x, \qquad \tilde T y = \tilde b_y, \qquad \tilde T z = \tilde b_z \tag{3.21}$$

is our estimate for the coordinates p = (p1,…, pn), up to a global rigid transformation.
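A minimal sketch of the translation step, assuming each patch is supplied as a dictionary of its already reflected and rotated local coordinates plus the list of its edges (this input format and the name `estimate_coordinates` are hypothetical). It takes the coordinate-wise median of the displacements of each edge, builds T̃ densely, and solves the x, y and z systems together as one least-squares problem with three right-hand sides. Since T̃1 = 0, `np.linalg.lstsq` returns the minimum-norm solution, which for a connected measurement graph has zero-mean coordinates and so fixes the free global translation. Anchors are not handled.

```python
import numpy as np
from collections import defaultdict


def estimate_coordinates(n, patches):
    """Least-squares coordinates from per-patch displacements (median heuristic).

    patches: list of (coords, edges), where coords maps node -> local position
    (already reflected and rotated) and edges lists the pairs (i, j) in E_k.
    """
    disp = defaultdict(list)
    for coords, edges in patches:
        for i, j in edges:
            a, b = min(i, j), max(i, j)
            disp[(a, b)].append(np.asarray(coords[a]) - np.asarray(coords[b]))
    keys = sorted(disp)
    T = np.zeros((len(keys), n))        # the m x n matrix T~ (dense in this sketch)
    rhs = np.zeros((len(keys), 3))      # columns: b~_x, b~_y, b~_z
    for e, (i, j) in enumerate(keys):
        T[e, i], T[e, j] = 1.0, -1.0
        # median over all patches containing edge (i, j), coordinate by coordinate
        rhs[e] = np.median(np.array(disp[(i, j)]), axis=0)
    # T~ 1 = 0, so lstsq returns the minimum-norm (zero-mean) solution
    p_hat, *_ = np.linalg.lstsq(T, rhs, rcond=None)
    return p_hat


rng = np.random.default_rng(2)
n = 6
p = rng.standard_normal((n, 3))
groups = [[0, 1, 2, 3], [2, 3, 4, 5], [0, 1, 4, 5]]
patches = []
for g in groups:
    t = rng.standard_normal(3)                 # unknown per-patch translation
    coords = {i: p[i] - t for i in g}          # noise-free local coordinates
    edges = [(i, j) for a, i in enumerate(g) for j in g[a + 1:]]
    patches.append((coords, edges))
p_hat = estimate_coordinates(n, patches)
print(np.allclose(p_hat, p - p.mean(axis=0)))
```

For large graphs, a sparse T̃ together with an iterative solver such as `scipy.sparse.linalg.lsqr` would be the more economical choice.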

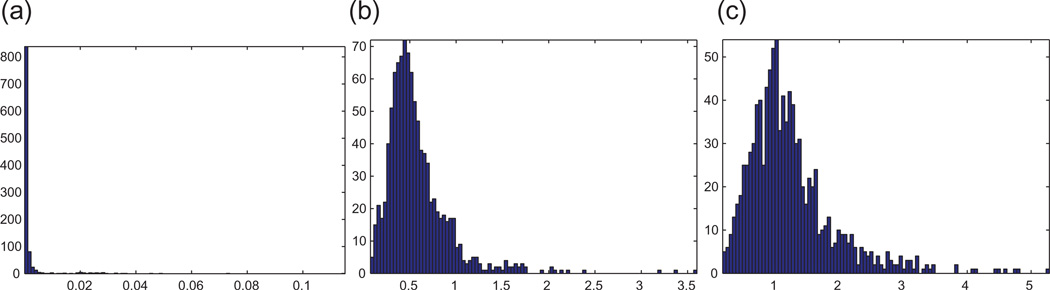

Whenever the ground-truth solution p is available, we can compare our estimate p̂ with p. To that end, we remove the global reflection, rotation and translation from p̂ by computing the best Procrustes alignment between p and p̂, i.e., p̃ = Op̂ + t, where O is an orthogonal matrix (a rotation, possibly combined with a reflection) and t is a translation vector, chosen to minimize the distance between the original configuration p and p̃, as measured by the least-squares criterion $\sum_{i=1}^n \|p_i - \tilde p_i\|^2$. Figure 7 shows the histogram of errors in the coordinates, where the error associated with node i is given by ∥pi − p̂i∥ after alignment (i.e., ∥pi − p̃i∥).
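The alignment can be computed with the standard orthogonal Procrustes solution, sketched below under the assumption that configurations are stored as n × 3 arrays with one point per row (the name `procrustes_align` is hypothetical). No determinant correction is applied, so reflections are allowed, as in the text; the mean of the returned per-node errors is the average coordinate error.

```python
import numpy as np


def procrustes_align(p, p_hat):
    """Align p_hat to p via p_tilde_i = O p_hat_i + t, with O orthogonal (reflections allowed).

    Rows of p and p_hat are points. Returns p_tilde and the per-node errors ||p_i - p_tilde_i||.
    """
    mu, mu_hat = p.mean(axis=0), p_hat.mean(axis=0)
    U, _, Vt = np.linalg.svd((p - mu).T @ (p_hat - mu_hat))
    O = U @ Vt                  # maximizes sum_i (p_i - mu)^T O (p_hat_i - mu_hat)
    t = mu - O @ mu_hat
    p_tilde = p_hat @ O.T + t
    return p_tilde, np.linalg.norm(p - p_tilde, axis=1)


rng = np.random.default_rng(4)
p = rng.standard_normal((20, 3))
U0, _, Vt0 = np.linalg.svd(rng.standard_normal((3, 3)))
p_hat = p @ (U0 @ Vt0).T + np.array([1.0, -2.0, 0.5])   # rigidly moved copy of p
p_tilde, errors = procrustes_align(p, p_hat)
print(errors.mean())   # average coordinate error, zero up to round-off here
```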

Fig. 7.

Histograms of coordinate errors ∥pi − p̂i∥ for all atoms in the 1d3z molecule, for different levels of noise. In all figures, the x-axis is measured in angstroms. Note the change of scale for (a), and the fact that the largest error shown there is 0.12. We use ERRc to denote the average coordinate error of all atoms. (a) η = 0%, ERRc = 2e−3. (b) η = 30%, ERRc = 0.57. (c) η = 50%, ERRc = 1.23.

We remark that in 3D-ASAP anchor information can be incorporated similarly to the 2D-ASAP algorithm [21]; however, we do not elaborate on this here, since there are no anchors in the molecule problem.

4. Extracting, embedding and aligning patches

This section describes how to break up the measurement graph into patches, and how to embed and pairwise align the resulting patches. In Section 4.1, we recall a recent result of [52] on uniquely d-localizable graphs, which can be accurately localized by SDP-based methods. We thus lay the groundwork for the notion of WUL graphs, which we introduce with the purpose of being able to localize the resulting patches even when the distance measurements are noisy. Section 4.2 discusses the issue of finding ‘pseudo-anchor’ nodes, which are needed when extracting WUL subgraphs. In Section 4.3, we discuss several SDP relaxations to the graph localization problem, which we use to embed the WUL patches. In Section 4.4, we remark on several additional constraints specific to the molecule problem, which are currently not incorporated in 3D-ASAP. Finally, Section 4.5 explains the procedure for aligning a pair of overlapping patches.

4.1 Extracting WUL subgraphs

We first recall some of the notation introduced earlier, which is needed throughout this section and in Section 7 on synchronization over ℤ2 with anchors. We consider a cloud of points in ℝ3 with k anchors denoted by 𝒜, and n atoms denoted by 𝒮. An anchor is a node whose location ai ∈ ℝ3 is readily available, i = 1,…, k, and an atom is a node whose location pj is to be determined, j = 1,…, n. We denote by dij the Euclidean distance between a pair of nodes i, j ∈ 𝒜 ∪ 𝒮. In most applications, not all pairwise distance measurements are available; therefore, we denote by E(𝒮, 𝒮) and E(𝒮, 𝒜) the sets of edges corresponding to the measured atom–atom and atom–anchor distances. We represent the available distance measurements in an undirected graph G = (V, E) with vertex set V = 𝒜 ∪ 𝒮 of size |V| = n + k, and edge set of size |E| = m. An edge of the graph corresponds to a distance constraint, that is, (i, j) ∈ E iff the distance between nodes i and j is available and equals dij = dji, where i, j ∈ 𝒜 ∪ 𝒮. We denote the partial distance measurements matrix by D = {dij : (i, j) ∈ E(𝒮, 𝒮) ∪ E(𝒮, 𝒜)}. A solution p together with the anchor coordinates a comprise a localization or realization q = (p, a) of G. A framework in ℝd is the ensemble (G, q), i.e., the graph G together with the realization q, which assigns a point qi in ℝd to each vertex i of the graph.

Given a partial set of noise-free distances and the anchor set a, the graph realization problem can be formulated as the following system

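$$\begin{aligned} \|p_i - p_j\|^2 &= d_{ij}^2, && (i,j) \in E(\mathcal{S}, \mathcal{S}),\\ \|a_i - p_j\|^2 &= d_{ij}^2, && (i,j) \in E(\mathcal{S}, \mathcal{A}),\\ p_j &\in \mathbb{R}^d, && j = 1,\dots,n. \end{aligned} \tag{4.1}$$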

Unless the above system has enough constraints (i.e., the graph G has sufficiently many edges), G is not globally rigid and there could be multiple solutions. However, if the graph G is known to be (generically) globally rigid in ℝ3, there are at least four anchors (i.e., k ≥ 4), and G admits a generic realization,¹⁰ then (4.1) admits a unique solution. Owing to recent results on the characterization of generic global rigidity, there now exists an efficient randomized algorithm that verifies whether a given graph is generically globally rigid in ℝd [27]. However, this efficient algorithm does not translate into an efficient method for actually computing a realization of G: knowing that a graph is generically globally rigid in ℝd still leaves the graph realization problem intractable, as shown in [5]. Motivated by this gap between deciding whether a graph is generically globally rigid and computing its realization (if it exists), So and Ye [52] introduced the following notion of unique d-localizability. An instance (G, q) of the graph localization problem is said to be uniquely d-localizable if

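- the system (4.1) has a unique solution p̃ = (p̃1;…; p̃n) ∈ ℝ^{nd}, and

- for any l > d, p̃ = ((p̃1; 0),…, (p̃n; 0)) ∈ ℝ^{nl} is the unique solution to the following system:

$$\begin{aligned} \|p_i - p_j\|^2 &= d_{ij}^2, && (i,j) \in E(\mathcal{S}, \mathcal{S}),\\ \|(a_i; 0) - p_j\|^2 &= d_{ij}^2, && (i,j) \in E(\mathcal{S}, \mathcal{A}),\\ p_j &\in \mathbb{R}^l, && j = 1,\dots,n, \end{aligned} \tag{4.2}$$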

where (v; 0) denotes the concatenation of a vector v of size d with the all-zeros vector 0 of size l − d. The second condition states that the problem cannot have a non-trivial localization in some higher-dimensional space ℝ^l (i.e., a localization different from the one obtained by setting pj = (p̃j; 0) for j = 1,…, n), where anchor points are trivially augmented to (ai; 0), for i = 1,…, k. A crucial observation should now be made: unlike global rigidity, which is a generic property of the graph G, the notion of unique localizability depends not only on the underlying graph G but also on the particular realization q, i.e., it depends on the framework (G, q).

We now introduce the notion of a WUL graph, which is essential for the preprocessing step of the 3D-ASAP algorithm, where we break the original graph into overlapping patches. A graph G is weakly uniquely d-localizable (WUL) if there exists at least one realization q ∈ ℝ^{(n+k)d} (which we call a certificate realization) such that the framework (G, q) is UL. If a framework (G, q) is UL, then G is a WUL graph; however, the converse is not necessarily true, since unique localizability is not a generic property. Furthermore, note that while a WUL graph may not be UL, it is guaranteed to be globally rigid, since global rigidity is a generic property.

Let us make clear the distinction between the related notions of rigidity, unique localizability and strong localizability introduced in [52]. Loosely speaking, a graph is strongly localizable if it is UL and remains so even under small perturbations. Formally stated, problem (4.1) is strongly localizable if the dual of its SDP relaxation has an optimal dual slack matrix of rank n. As shown in [52], strong localizability implies unique localizability, but the converse is not true. By the above observation, strong localizability also implies weak unique localizability.

The advantage of working with UL graphs becomes clear in light of the following result by [52], which states that the problem of deciding whether a given graph realization problem is UL, as well as the problem of determining the node positions of such a UL instance, can be solved efficiently by considering the following SDP

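$$\begin{aligned} \text{maximize}\quad & 0 \\ \text{subject to}\quad & (0; e_i - e_j)^\top Z\, (0; e_i - e_j) = d_{ij}^2, && (i,j) \in E(\mathcal{S}, \mathcal{S}),\\ & (a_i; -e_j)^\top Z\, (a_i; -e_j) = d_{ij}^2, && (i,j) \in E(\mathcal{S}, \mathcal{A}),\\ & Z = \begin{pmatrix} I_d & X^\top \\ X & Y \end{pmatrix} \succeq 0, \end{aligned} \tag{4.3}$$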

where ei denotes the all-zeros vector with a 1 in the ith entry and where Z ⪰ 0 means that Z is a positive semidefinite matrix. The SDP method relaxes the constraint Y = XX⊤ to Y ⪰ XX⊤, i.e., Y − XX⊤ ⪰ 0, which, by the Schur complement, is equivalent to the last condition in (4.3). The following predictor for UL graphs, introduced in [52], established for the first time that the graph realization problem is UL if and only if the relaxation solution computed by an interior-point algorithm (which generates max-rank feasible solutions) has rank d and Y = XX⊤.
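For completeness, the two facts behind the relaxation can be spelled out. Assuming Z has the block form with I_d in the upper-left corner and the rows of X equal to the atom positions pj⊤ (an assumption of this note), the Schur complement with respect to the positive definite block I_d, and a direct expansion of the atom–atom constraint, give

```latex
\begin{pmatrix} I_d & X^\top \\ X & Y \end{pmatrix} \succeq 0
\;\iff\; Y - X I_d^{-1} X^\top = Y - XX^\top \succeq 0,
\qquad
(0;\, e_i - e_j)^\top Z\, (0;\, e_i - e_j) = Y_{ii} + Y_{jj} - 2Y_{ij}.
```

When Y = XX⊤, the last expression equals pi⊤pi + pj⊤pj − 2pi⊤pj = ‖pi − pj‖², so the SDP constraints coincide with the original distance constraints; the relaxation only enlarges the feasible set to Y ⪰ XX⊤.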

Theorem 4.1 ([52, Theorem 2]) Suppose G is a connected graph. Then the following statements are equivalent:

(a) Problem (4.1) is UL.

(b) The max-rank solution matrix Z of (4.3) has rank d.

(c) The solution matrix Z represented by (b) satisfies Y = XX⊤.

Algorithm 1 summarizes our approach for extracting a WUL subgraph of a given graph. The algorithm has to cope with two main difficulties. The first difficulty is that only noisy distance measurements are available, yet the SDP (4.3) requires noise-free distances. This difficulty is bypassed by choosing a random realization, for which noise-free distances are computed. This realization serves as the sought-after certificate of WUL and is not related to the actual locations of the atoms. The second difficulty is that the number of anchor points could be smaller than four. A necessary (but not sufficient) condition for the statements in Theorem 4.1 to hold true is the existence of at least four anchor nodes. While this may seem a very restrictive condition (since in many real-life applications anchor information is rarely available), there is an easy way to get around it, provided the graph contains a clique (complete subgraph) of size at least 4. As discussed in Section 4.2, a patch of size at least 10 is very likely to contain such a clique, as confirmed by our numerical simulations. However, note that in our simulations for detecting pseudo-anchors, we placed the nodes at random within a disc of radius ρ, while in many real applications the positions of the nodes are not necessarily random, as is often the case for certain three-dimensional biological data sets where the atoms lie along a one-dimensional curve. Once such a clique has been found, one may use cMDS to embed it and use the resulting coordinates as anchors. We call such nodes pseudo-anchors.

Algorithm 1.

Finding a WUL subgraph of a graph with four anchors or pseudo-anchors

| Require: Simple graph G =(V, E) with n atoms, k anchors, and ε a small positive constant (e.g., 10−4). |

| 1: Generate a random realization q1,…, qn in ℝ3 and compute the distances dij = ∥qi − qj∥ for (i, j) ∈ E. |

| 2: If k < 4, find a complete subgraph of G on 4 vertices (i.e., K4) and compute an embedding of it (using classical MDS) with distances dij computed in step 1. Denote the set of pseudo-anchors by 𝒜. |

| 3: Solve the SDP relaxation problem formulated in (4.3) using the anchor set 𝒜 and the distances dij computed in step 1 above. |

| 4: Denote by w the vector of diagonal elements of the matrix Y − XX⊤. |

| 5: Find the subset of nodes V0 ⊆ V \ 𝒜 such that wi < ε. |

| 6: Denote G0 =(V0, E0) the weakly uniquely localizable subgraph of G. |

Note that Step 1 of the algorithm should be used only in the case of noisy distances. For noise-free data, this step may be skipped as the diagonal elements of the matrix Y − XX⊤ can be readily used to extract the UL subgraph.
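Given the blocks X and Y of an SDP solution (obtained from any SDP solver; the solver call is deliberately omitted), Steps 4 and 5 reduce to thresholding the diagonal of Y − XX⊤. A minimal NumPy sketch, with the hypothetical name `wul_subset`, rows of X indexing the non-anchor nodes, and the default ε = 10⁻⁴ from the Require line:

```python
import numpy as np


def wul_subset(X, Y, eps=1e-4):
    """Steps 4-5 of Algorithm 1: indices of the non-anchor nodes with w_i < eps.

    X (n x 3, one row per non-anchor node) and Y (n x n) are assumed to be the
    blocks of the SDP solution Z.
    """
    w = np.diag(Y) - np.sum(X ** 2, axis=1)   # diagonal of Y - X X^T
    return np.flatnonzero(w < eps)


# Toy input: Y = X X^T except for a slack of 0.5 on node 2.
rng = np.random.default_rng(5)
X = rng.standard_normal((5, 3))
Y = X @ X.T + np.diag([0.0, 0.0, 0.5, 0.0, 0.0])
print(wul_subset(X, Y))
```

Computing only the diagonal via row norms avoids forming the full n × n product XX⊤.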

Our approach is to extract a WUL subgraph from the 4-connected components of each patch, since 4-connectivity is a necessary condition for global rigidity [17, 31], and as mentioned earlier, WUL implies global rigidity. Then, we apply Algorithm 1 on these components to extract the WUL subgraphs. Ultimately, we would like to extract subgraphs that are UL since they can be embedded accurately using SDP. However, UL is a property that depends on the specific realization, not just the underlying graph. Since the realization is unknown (after all, our goal is in fact to find it), we have to resort to WUL, which is a slightly weaker notion of UL but stronger than global rigidity. We have observed in our simulations that this approach significantly improves the accuracy of the localization compared with embedding patches that are globally rigid but not necessarily WUL. The explanation for this improved performance might be the following: if the randomized realization in Algorithm 1 (or what remains of it after removing some of the nodes) is ‘faithful’, meaning close enough to the true realization, then the WUL subgraph is perhaps generically UL, and hence its localization using the SDP in (4.3) under the original distance constraints can be computed accurately, as predicted by Theorem 4.1.

We also consider a slight variation of Algorithm 1, where we replace Step 3 with the SDP relaxation introduced in the FULL-SDP algorithm of [14]. We refer to this approach as Algorithm 2. Algorithm 2 is mainly motivated by computational considerations, as the running time of the FULL-SDP algorithm is significantly smaller than that of our CVX-based SDP implementation [29, 30] of problem (4.3).

Figure 8 and Table 3 show the reconstruction errors of the patches (in terms of ANE, an error measure introduced in Section 8) in the following scenarios. In the first scenario, we directly embed each 4-connected component (using either FULL-SDP or SNL-SDP, as detailed below), without any prior preprocessing. In the second, respectively third, scenario we first extract a WUL subgraph from each 4-connected component using Algorithm 1, respectively Algorithm 2, and then embed the resulting subgraphs. Note that the subgraph embeddings are computed using FULL-SDP, respectively SNL-SDP, for noise-free, respectively noisy data. Figure 8 contains numerical results from the UNITCUBE graph with noise-free data, in the three scenarios presented above. As expected, the FULL-SDP embedding in scenario 1 gives the highest reconstruction error,11 at least one order of magnitude larger when compared with Algorithms 1 and 2. Surprisingly, Algorithm 2 produced more accurate reconstructions than Algorithm 1, despite its lower running time. These numerical computations suggest12 that Theorem 4.1 remains valid when the formulation in problem (4.3) is replaced by the one considered in the FULL-SDP algorithm [14].

Fig. 8.

Histogram of reconstruction errors (measured in ANE) for the noise-free UNITCUBE graph with n = 212 vertices, sensing radius ρ = 0.3 and average degree deg = 17. $\overline{\mathrm{ANE}}$ denotes the average error over all N = 197 patches. Note that the x-axis shows the ANE on a logarithmic scale. Scenario 1: directly embedding the 4-connected components. Scenario 2: embedding the WUL subgraphs extracted using Algorithm 1. Scenario 3: embedding the WUL subgraphs extracted using Algorithm 2. Note that for the subgraph embeddings we use FULL-SDP. (a) Scenario 1: $\overline{\mathrm{ANE}}$ = 8.4e−4. (b) Scenario 2: $\overline{\mathrm{ANE}}$ = 2.3e−5. (c) Scenario 3: $\overline{\mathrm{ANE}}$ = 7.2e−6.

Table 3.

Average reconstruction errors (measured in ANE) for the UNITCUBE graph with n=212 vertices, sensing radius (ρ) 0.26 and average degree (deg) 12. Note that we consider only patches of size greater than or equal to 7, and there are 192 such patches. Scenario 1: directly embedding the 4-connected components. Scenario 2: embedding the WUL subgraphs extracted using Algorithm 1. Scenario 3: embedding the WUL subgraphs extracted using Algorithm 2. Note that for the subgraph embeddings we use FULL-SDP for noise-free data, and SNL-SDP for noisy data

| η (%) | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| 0 | 5.3e−02 | 4.9e−03 | 1.3e−03 |
| 10 | 8.8e−02 | 5.2e−02 | 5.3e−02 |
| 20 | 1.5e−01 | 1.1e−01 | 1.1e−01 |
| 30 | 2.3e−01 | 2.0e−01 | 2.0e−01 |

The results detailed in Fig. 8, while showing improvements of the second and third scenarios over the first one, may not entirely convince the reader of the usefulness of our proposed randomized algorithm, since in the first scenario a direct embedding of the patches using FULL-SDP already gives a very good reconstruction, i.e., an average ANE of 8.4e−4. We regard 4-connectivity as a significant constraint that very likely renders a random geometric star graph globally rigid, thus diminishing the marginal improvements of the WUL extraction algorithm. To test this, we run experiments similar to those reported in Fig. 8, but this time on the 1-hop neighborhood of each node in the UNITCUBE graph, without further extracting the 4-connected components. In addition, we sparsify the graph by reducing the sensing radius from ρ = 0.3 to 0.26. Table 3 shows the reconstruction errors at various levels of noise. Note that in the noise-free case, Scenarios 2 and 3 yield results that are an order of magnitude better than that of Scenario 1, which returns a rather poor average ANE of 5.3e−02. However, in the noisy case, these improvements are considerably smaller.

Table 4 shows the total number of nodes removed from the patches by Algorithms 1 and 2, the number of 1-hop neighborhoods that are already WUL, and the running times. Indeed, for the sparser UNITCUBE graph with ρ = 0.26, the number of patches that are already WUL is roughly half of that for the denser graph with ρ = 0.30.

Table 4.

Comparison of the two algorithms for extracting WUL subgraphs, for the UNITCUBE graphs with sensing radius ρ = 0.30 and 0.26, and noise level η = 0%. The WUL patches are those patches from which the subgraph extraction algorithms did not remove any nodes.

| | Algorithm 1 (ρ = 0.30, 197 patches) | Algorithm 2 (ρ = 0.30, 197 patches) | Algorithm 1 (ρ = 0.26, 192 patches) | Algorithm 2 (ρ = 0.26, 192 patches) |
|---|---|---|---|---|
| Total number of nodes removed | 31 | 26 | 258 | 285 |
| Number of WUL patches | 188 | 191 | 104 | 101 |
| Running time (s) | 887 | 48 | 632 | 26 |

Finally, we remark on one of the consequences of our approach for breaking up the measurement graph. It is possible for a node not to be contained in any of the patches, even if it attaches in a globally rigid way to the rest of the measurement graph. An easy example is a star graph with four neighbors, no two of which are connected by an edge, as illustrated by the graph in Fig. 9. However, we expect such pathological examples to be very unlikely in the case of random geometric graphs.

Fig. 9.

An example of a graph with a node that attaches globally rigidly to the rest of the graph, but is not contained in any patch, and thus it will be discarded by 3D-ASAP.

4.2 Finding pseudo-anchors

To satisfy the conditions of Theorem 4.1, at least d + 1 anchors are necessary for embedding a patch; hence, for the molecule problem we need k ≥ 4 such anchors in each patch. Since anchors are not usually available, one may ask whether it is still possible to find a set of nodes that can be treated as anchors. If one were able to locate a clique of size at least d + 1 inside a patch graph, then using cMDS it would be possible to obtain accurate coordinates for the d + 1 nodes and treat them as anchors. Whenever this is possible, we call such a set of nodes pseudo-anchors. Intuitively, the geometric graph assumption suggests that if the patch graph is dense enough, it very likely contains a complete subgraph on d + 1 nodes. While a probabilistic analysis of random geometric graphs with forbidden K_{d+1} subgraphs is beyond the scope of this paper, we provide an intuitive connection with the problem of packing spheres inside a larger sphere, as well as numerical simulations that support the idea that a patch of size at least ≈10 is very likely to contain four such pseudo-anchors.
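Once a clique of size d + 1 = 4 has been located, its coordinates follow from classical MDS applied to its complete distance matrix. A minimal sketch (function names hypothetical), recovering the four points up to a rigid motion; with noisy distances the resulting pseudo-anchor coordinates are only approximate:

```python
import numpy as np


def classical_mds(dist, d=3):
    """Classical MDS: coordinates in R^d from a complete matrix of pairwise distances."""
    m = dist.shape[0]
    J = np.eye(m) - np.ones((m, m)) / m            # centering matrix
    G = -0.5 * J @ (dist ** 2) @ J                 # Gram matrix of the centered points
    vals, vecs = np.linalg.eigh(G)                 # eigenvalues in increasing order
    top = np.argsort(vals)[::-1][:d]               # d largest eigenpairs
    return vecs[:, top] * np.sqrt(np.maximum(vals[top], 0.0))


def pairwise_distances(P):
    return np.linalg.norm(P[:, None, :] - P[None, :, :], axis=-1)


# Four mutually adjacent nodes (a K4) with exact distances.
rng = np.random.default_rng(6)
true_pos = rng.standard_normal((4, 3))
emb = classical_mds(pairwise_distances(true_pos))
print(np.allclose(pairwise_distances(emb), pairwise_distances(true_pos)))
```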

To find pseudo-anchors for a given patch graph Gi, one needs to locate a complete subgraph (clique) containing at least d + 1 vertices. Since any patch Gi contains a center node i that is connected to every other node in the patch, it suffices to find a clique of size at least three in the 1-hop neighborhood of the center node, i.e., to find a triangle in Gi \ {i}. Of course, if a graph is very dense (i.e., has high average degree), then it is forced to contain such a triangle. In this direction, we recall one of the first results in extremal graph theory (Mantel 1907), which states that any graph on s vertices with more than ⌊s²/4⌋ edges contains a triangle, the complete bipartite graph with |V1| = |V2| = s/2 (for even s) being the unique extremal triangle-free graph, with s²/4 edges. However, this quadratic bound, which holds for general graphs, is far from satisfactory for random geometric graphs.
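The reduction to a triangle search can be sketched directly on adjacency sets. The function name `find_pseudo_anchors` and the dictionary-of-sets representation (with integer node labels) are assumptions of this sketch: it returns the center together with the first triangle found among its neighbors, or `None` when the neighborhood is triangle-free.

```python
def find_pseudo_anchors(adj, center):
    """Return (center, a, b, c) forming a K4 if the neighborhood of `center`
    contains a triangle, else None.

    adj maps each node (integer label) to the set of its neighbors. Since
    `center` is adjacent to every node of its patch, any triangle among its
    neighbors extends to a 4-clique together with the center.
    """
    nbrs = adj[center]
    for a in nbrs:
        for b in adj[a] & nbrs:
            if b <= a:
                continue                       # visit each pair once (a < b)
            for c in adj[a] & adj[b] & nbrs:
                if c > b:                      # and each triangle once (a < b < c)
                    return (center, a, b, c)
    return None


# Center 0 has neighbors 1-4, of which 1, 2, 3 form a triangle.
patch = {0: {1, 2, 3, 4}, 1: {0, 2, 3}, 2: {0, 1, 3}, 3: {0, 1, 2}, 4: {0}}
print(find_pseudo_anchors(patch, 0))   # (0, 1, 2, 3)

# A star with four pairwise non-adjacent neighbors, as in Fig. 9: no pseudo-anchors.
star = {0: {1, 2, 3, 4}, 1: {0}, 2: {0}, 3: {0}, 4: {0}}
print(find_pseudo_anchors(star, 0))    # None
```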

Recall that we are using the geometric graph model, where two vertices are adjacent if and only if they are less than distance ρ apart. At a local level, one can think of the geometric graph model as placing an imaginary ball of radius ρ centered at node i, connecting i to all nodes within this ball, and connecting two neighbors j, k of i if and only if j and k are less than ρ units apart. Ignoring the center node i, the question becomes how many nodes must be placed in a ball of radius ρ to guarantee that at least d of them have pairwise distances all less than ρ. In other words, given a geometric graph H inscribed in a sphere of radius ρ, what is the smallest number of nodes of H that forces the existence of a Kd?

The astute reader might immediately be led to think that the problem above can be formulated as a sphere packing problem. Denote by x1, x2,…, xm the set of m nodes (ignoring the center node) contained in a sphere of radius ρ. We would like to know the smallest m such that at least d = 3 nodes are pairwise adjacent, i.e., their pairwise distances are all less than ρ.

To any node xi associate a smaller sphere Si of radius ρ/2. Two nodes xi, xj are adjacent, meaning less than distance ρ apart, if and only if their corresponding spheres Si and Sj overlap. This line of thought leads one to ask how many non-overlapping small spheres can be packed into a larger sphere. One detail not to be overlooked is that the radius of the larger sphere should be 3ρ/2, and not ρ, since a node xi at distance ρ from the center of the sphere has its corresponding sphere Si contained in a sphere of radius ρ + ρ/2 = 3ρ/2. We have thus reduced the problem of determining the minimum size of a patch that would guarantee the existence of four pseudo-anchors to the problem of determining the smallest number of spheres of radius ρ/2 that can be ‘packed’ in a sphere of radius 3ρ/2 such that at least three of the smaller spheres pairwise overlap. Rescaling the radii such that 3ρ/2 = 1 (hence ρ/2 = 1/3), we ask the equivalent problem: how many spheres of radius 1/3 can be packed inside a sphere of radius 1 such that at least three spheres pairwise overlap?

A related and slightly simpler problem is that of finding the densest packing of m equal spheres of radius r in a sphere of radius 1, such that no two of the small spheres overlap. This problem has recently been considered in more depth, and analytical solutions have been obtained for several values of m. If r = 1/3 (as in our case), then the answer is m = 13, and this constitutes a lower bound for our problem.

However, the arrangements of spheres that prevent the existence of three pairwise overlapping spheres are far from random, which motivated us to conduct the following experiment. For a given m, we generate m randomly located spheres of radius 1/3 inside the unit sphere, and count the number of times at least three spheres pairwise overlap. We ran this experiment 15,000 times for each of m = 5, 6, 7 and 8, and obtained success rates of 69%, 87%, 96% and 99%, respectively, i.e., the percentage of realizations for which three spheres of radius 1/3 pairwise overlap. The simulation results indicate that for m = 9, the existence of three pairwise overlapping spheres is very highly likely. In other words, for a patch of size 10 including the center node, there are very likely to exist at least four nodes that are pairwise adjacent, i.e., the four pseudo-anchors we are looking for.
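The experiment can be approximated with a short Monte Carlo sketch. The sampling scheme is our assumption, since the text does not specify it: each small sphere's center is drawn uniformly from the ball of radius 2/3, so that the whole sphere of radius 1/3 lies inside the unit sphere, and two spheres overlap when their centers are closer than 2/3. Because of this assumption, the rates it produces need not match the percentages reported above; the function names are hypothetical.

```python
import numpy as np
from itertools import combinations


def sample_in_ball(rng, m, radius):
    """m points distributed uniformly in the 3D ball of the given radius."""
    v = rng.normal(size=(m, 3))
    v /= np.linalg.norm(v, axis=1, keepdims=True)      # uniform directions
    r = radius * rng.random(m) ** (1.0 / 3.0)           # radial CDF ~ r^3
    return v * r[:, None]


def has_overlapping_triple(centers, small_r):
    """True if some three spheres of radius small_r pairwise overlap."""
    dist = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=-1)
    overlap = dist < 2.0 * small_r
    for i, j, k in combinations(range(len(centers)), 3):
        if overlap[i, j] and overlap[i, k] and overlap[j, k]:
            return True
    return False


def success_rate(m, trials=15000, small_r=1.0 / 3.0, seed=0):
    """Fraction of trials in which m random small spheres contain an overlapping triple."""
    rng = np.random.default_rng(seed)
    hits = 0
    for _ in range(trials):
        centers = sample_in_ball(rng, m, 1.0 - small_r)  # keep spheres inside the unit sphere
        hits += has_overlapping_triple(centers, small_r)
    return hits / trials
```

Under these assumptions, `success_rate(9)` estimates the probability that a patch of 10 nodes (including the center) contains four pseudo-anchors.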

4.3 Embedding patches

After extracting patches, i.e., WUL subgraphs of the 1-hop neighborhoods, it still remains to localize each patch in its own frame. Under the assumptions of the geometric graph model, it is likely that 1-hop neighbors of the central node will also be interconnected, rendering a relatively high density of edges for the patches. Indeed, as indicated by Fig. 4 (right panel), most patches have at least half of the edges present. For noise-free distances, we embed the patches using the FULL-SDP algorithm [14], while for noisy distances we use the SNL-SDP algorithm of [53]. To improve the overall localization result, the SDP solution is used as a starting point for a gradient-descent method.
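The gradient-descent refinement step can be sketched as follows. The text does not say which function the refinement minimizes, so we assume, for illustration, the squared-distance error Σ_{(i,j)∈E} (‖pi − pj‖² − dij²)² over the measured edges of the patch, together with a simple backtracking step size; the function names are hypothetical.

```python
import numpy as np


def squared_distance_error(P, edges, d):
    """Sum over edges (i, j) of (||p_i - p_j||^2 - d_ij^2)^2."""
    diff = P[edges[:, 0]] - P[edges[:, 1]]
    r = np.sum(diff ** 2, axis=1) - d ** 2
    return float(np.sum(r ** 2))


def squared_distance_gradient(P, edges, d):
    """Gradient of squared_distance_error with respect to the n x 3 coordinates P."""
    diff = P[edges[:, 0]] - P[edges[:, 1]]
    r = np.sum(diff ** 2, axis=1) - d ** 2
    g = 4.0 * r[:, None] * diff            # derivative of each edge term w.r.t. p_i
    grad = np.zeros_like(P)
    np.add.at(grad, edges[:, 0], g)        # accumulate over edges sharing a node
    np.add.at(grad, edges[:, 1], -g)       # opposite sign for p_j
    return grad


def refine_patch(P0, edges, d, step=1e-2, iters=500):
    """Gradient descent with backtracking, started from an SDP solution P0."""
    P = np.array(P0, dtype=float)
    f = squared_distance_error(P, edges, d)
    for _ in range(iters):
        grad = squared_distance_gradient(P, edges, d)
        while step > 1e-12:
            trial = P - step * grad
            f_trial = squared_distance_error(trial, edges, d)
            if f_trial < f:                # accept, and try a larger step next time
                P, f = trial, f_trial
                step *= 1.5
                break
            step *= 0.5                    # reject, and shrink the step
        else:
            break                          # no decrease found: stop iterating
    return P
```

Accepting only steps that decrease the error keeps the refinement from ever making the SDP starting point worse, at the cost of extra function evaluations.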

The remaining part of this subsection is a brief survey of recent SDP relaxations for the graph localization problem [9–11, 14, 62]. A solution p1,…, pn ∈ ℝ3 can be computed by minimizing the following error function

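$$\min_{p_1,\ldots,p_n \in \mathbb{R}^3} \sum_{(i,j) \in E} \left( \|p_i - p_j\|^2 - d_{ij}^2 \right)^2. \qquad (4.4)$$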

While the above objective function is not convex over the constraint set, it can be relaxed into an SDP [10]. Although SDPs can generally be solved (up to a given accuracy) in polynomial time, it was pointed out in [11] that the objective function (4.4) leads to a rather expensive SDP, because it involves fourth-order polynomials of the coordinates. Additionally, this approach is rather sensitive to noise, because large errors are amplified by the objective function in (4.4), compared with the objective function in (4.5) discussed below.

Instead of using the objective function in (4.4), [11] considers the SDP relaxation of the following penalty function

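$$\min_{p_1,\ldots,p_n \in \mathbb{R}^3} \sum_{(i,j) \in E} \left| \|p_i - p_j\|^2 - d_{ij}^2 \right|. \qquad (4.5)$$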
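To see why (4.4) amplifies large errors relative to (4.5), write r_ij = ‖pi − pj‖² − dij² for the residual of edge (i, j): (4.4) charges r_ij², whereas (4.5) charges |r_ij|. The sketch below evaluates both objectives (not their SDP relaxations) at the true positions of a toy configuration in which one of six measured squared distances carries a large error; the configuration and the error values are made up for illustration.

```python
import numpy as np
from itertools import combinations


def residuals(P, edges, d):
    """r_ij = ||p_i - p_j||^2 - d_ij^2 for every measured edge."""
    diff = P[edges[:, 0]] - P[edges[:, 1]]
    return np.sum(diff ** 2, axis=1) - d ** 2


def objective_44(P, edges, d):
    return float(np.sum(residuals(P, edges, d) ** 2))


def objective_45(P, edges, d):
    return float(np.sum(np.abs(residuals(P, edges, d))))


# True positions: the origin and the three unit vectors of R^3.
P = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
edges = np.array(list(combinations(range(4), 2)))
true_sq = np.sum((P[edges[:, 0]] - P[edges[:, 1]]) ** 2, axis=1)
# Small errors on five squared distances, one large error (2.0) on the last edge.
err = np.array([0.1, -0.1, 0.1, -0.1, 0.1, 2.0])
d = np.sqrt(true_sq + err)

print(objective_44(P, edges, d))  # ≈ 4.05 = 5 * 0.01 + 4.0, outlier ≈ 99% of the total
print(objective_45(P, edges, d))  # ≈ 2.5  = 5 * 0.1 + 2.0, outlier = 80% of the total
```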

In fact, [11] also allows for possible non-equal weighting of the summands in (4.5) and for possible anchor points. The SDP relaxation of (4.5) is faster to solve than the relaxation of (4.4) and it is usually more robust to noise. Constraining the solution to be in ℝ3 is non-convex, and its relaxation by the SDP often leads to solutions that belong to a higher dimensional Euclidean space, and thus need to be further projected to ℝ3. This projection often results in large errors for the estimation of the coordinates. A regularization term for the objective function of the SDP was suggested in [11] to assist it in finding solutions of lower dimensionality and preventing nodes from crowding together towards the center of the configuration.
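The projection step can be illustrated as follows, assuming the SDP returns a centered Gram matrix X ≈ PPᵀ of the patch coordinates, possibly of rank larger than three. Keeping the three largest eigenpairs gives the best rank-3 approximation of X in the Frobenius norm; this generic truncation, and the function name, are our assumptions and may differ from the procedure used in [11].

```python
import numpy as np


def project_gram_to_r3(X, dim=3):
    """n x dim coordinates whose Gram matrix best approximates X (top eigenpairs)."""
    X = np.asarray(X, dtype=float)
    X = (X + X.T) / 2.0                     # symmetrize against round-off
    w, V = np.linalg.eigh(X)                # eigenvalues in ascending order
    top = np.argsort(w)[::-1][:dim]         # indices of the dim largest eigenvalues
    lam = np.clip(w[top], 0.0, None)        # guard against tiny negative eigenvalues
    return V[:, top] * np.sqrt(lam)
```

When X has rank at most three the coordinates are recovered exactly up to a rigid motion; otherwise the discarded eigenvalues measure how far the SDP solution lies from ℝ3, which is the source of the projection errors mentioned above.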

4.4 Additional information specific to the molecule problem

In this section, we discuss several additional constraints specific to the molecule problem, which are currently not being exploited by 3D-ASAP. While our algorithm can benefit from any existing molecular fragments and their known reflection, there is still information that it does not take advantage of, and which can further improve its performance. Note that many of the remarks below can be incorporated in the pre-processing step of embedding the patches, described in the previous section.

The most important piece of information missing from our 3D-ASAP formulation is the distinction between the ‘good’ edges (bond lengths) and the ‘bad’ edges (noisy NOEs). The current implementations of the FULL-SDP and SNL-SDP algorithms do not incorporate such hard distance constraints.

Another important piece of information that we currently ignore is given by residual dipolar coupling (RDC) measurements, which provide noisy angle information (cos²(θ)) with respect to a global orientation [8].

Another approach is to consider an energy-based formulation that captures the interaction between atoms in a readily computable fashion, such as the Lennard–Jones potential. One may then use this information to better localize the patches, and prefer patches that have lower energy.
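As an illustration of such an energy criterion, the sketch below scores candidate embeddings of the same patch by a pairwise Lennard–Jones energy, V(r) = 4ε[(σ/r)¹² − (σ/r)⁶], and orders them from lowest to highest. Using a single pair of parameters ε and σ for all atom pairs is a simplifying assumption (force fields use atom-type-specific values), and the function names are hypothetical.

```python
import numpy as np
from itertools import combinations


def lennard_jones_energy(P, epsilon=1.0, sigma=1.0):
    """Sum of 4*eps*((sigma/r)^12 - (sigma/r)^6) over all atom pairs of a patch."""
    P = np.asarray(P, dtype=float)
    energy = 0.0
    for i, j in combinations(range(len(P)), 2):
        r = np.linalg.norm(P[i] - P[j])
        sr6 = (sigma / r) ** 6
        energy += 4.0 * epsilon * (sr6 * sr6 - sr6)
    return float(energy)


def rank_by_energy(candidates, epsilon=1.0, sigma=1.0):
    """Order candidate embeddings of the same patch from lowest to highest energy."""
    return sorted(candidates, key=lambda P: lennard_jones_energy(P, epsilon, sigma))
```

The pair energy is minimal, equal to −ε, at r = 2^(1/6)σ and grows steeply for r < σ, so embeddings that crowd atoms together are pushed to the end of the ranking.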

The minimum distance constraint, also referred to as the ‘hard sphere’ constraint, comes from the fact that any two atoms cannot get closer than a certain distance κ ≈ 1 Å. Note that such lower bounds on distances can be easily incorporated into the SDP formulation.
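To see why such lower bounds fit the SDP framework, consider an anchor-free relaxation in which the unknown is the Gram matrix X = PPᵀ of the coordinates (an assumption about the formulation; the anchored relaxations of [10, 11] use an augmented matrix, but the same reasoning applies). Each squared distance is linear in X, so the hard sphere constraint for a pair of atoms i, j becomes a linear inequality:

$$\|p_i - p_j\|^2 = X_{ii} + X_{jj} - 2X_{ij} = \left\langle (e_i - e_j)(e_i - e_j)^\top, X \right\rangle \ge \kappa^2, \qquad X \succeq 0,$$

where e_i is the i-th standard basis vector of ℝⁿ and ⟨A, X⟩ = tr(AᵀX). Adding such inequalities keeps the problem an SDP.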

It is also possible to use the information carried by the non-edges of the measurement graph. Specifically, the distances corresponding to the missing edges cannot be smaller than the sensing radius ρ. A remark is in order, however: under the current noise model, it is possible for true distances smaller than the sensing radius not to be part of the set of available measurements and, vice versa, for true distances larger than the sensing radius to become part of the distance set. Since this constraint is therefore not as certain as the hard sphere constraint, we recommend using the latter.

Finally, one can envisage that a significant amount of other information can be reduced to distance constraints and incorporated into the approach described here for the calculation of structures and complexes. Such developments could significantly speed up these calculations if they incorporate larger molecular fragments based on modeling, as well as chemical shift data and similar information, as is done in computationally intensive experimental-energy methods such as HADDOCK [56].

4.5 Aligning patches

Given two patches Pk and Pl that have at least four nodes in common, the registration process finds the optimal 3D rigid motion of Pl that aligns the common points (as shown in Fig. 10). A closed form solution to the registration problem in any dimension was given in [34], where the best rigid transformation between two sets of points is obtained by various matrix manipulations and eigenvalue/eigenvector decomposition.
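A closed-form registration can be sketched with the SVD-based orthogonal Procrustes (Kabsch/Umeyama-style) solution. This is a swapped-in technique that may differ in form from the eigenvector construction of [34], and the function name is hypothetical. Since each patch is only determined up to an element of Euc(3), the sketch deliberately does not force det R = +1, so reflections are allowed and reported.

```python
import numpy as np


def align_patches(A, B):
    """Find R in O(3) and t minimizing sum_i ||R a_i + t - b_i||^2.

    A: k x 3 coordinates of the k >= 4 common nodes in the frame of P_l.
    B: k x 3 coordinates of the same nodes in the frame of P_k.
    Returns (R, t, reflected), where reflected is True when det R = -1.
    """
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    a_mean, b_mean = A.mean(axis=0), B.mean(axis=0)
    N = (A - a_mean).T @ (B - b_mean)          # 3 x 3 cross-covariance
    U, _, Vt = np.linalg.svd(N)
    R = Vt.T @ U.T                             # maximizes tr(R N) over O(3)
    t = b_mean - R @ a_mean                    # optimal translation given R
    return R, t, bool(np.linalg.det(R) < 0)
```

The aligned coordinates of P_l are then `A @ R.T + t`; the pair (R, t) is the measured ratio of the two group elements in Euc(3).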

Fig. 10.

Optimal alignment of two patches that overlap in four nodes. The alignment provides a measurement for the ratio of the two group elements in Euc(3). In this example we see that a reflection was required to properly align the patches.

Since alignment requires at least four overlapping nodes, the K4 patches that are fully contained in larger patches are initially discarded. Other patches may also be discarded if they do not intersect any other patch in at least four nodes. The nodes belonging to such patches but not to any other patch would not be localized by ASAP.
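The discarding rules above amount to a small set computation. In the hypothetical sketch below, patches are given as Python sets of node ids; the function applies both rules, builds the edges of the patch graph (pairs of patches sharing at least four nodes) and reports which nodes remain covered, i.e., can still be localized.

```python
from itertools import combinations


def build_patch_graph(patches, min_overlap=4):
    """Return (kept patch ids, patch-graph edges, covered nodes).

    patches: dict mapping a patch id to the set of node ids in that patch.
    """
    ids = list(patches)
    # Rule 1: discard K4 patches (4 nodes) fully contained in a larger patch.
    kept = [k for k in ids
            if not (len(patches[k]) == 4
                    and any(patches[k] < patches[other] for other in ids if other != k))]
    # Two patches are linked if they share at least min_overlap nodes.
    edges = [(k, m) for k, m in combinations(kept, 2)
             if len(patches[k] & patches[m]) >= min_overlap]
    # Rule 2: discard patches that do not overlap any other patch enough.
    linked = {k for edge in edges for k in edge}
    kept = [k for k in kept if k in linked]
    # Nodes outside every remaining patch cannot be localized.
    covered = set().union(*(patches[k] for k in kept))
    return kept, edges, covered
```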

As expected, in the case of the geometric graph model, the overlap is often small, especially for small values of ρ. It is therefore crucial to have robust alignment methods even when the overlap size is small. We refer the reader to Section 6 of [21] for other methods of aligning patches with fewer common nodes in ℝ2, namely the combinatorial score method and the link method, both of which can be adjusted to the three-dimensional case. The combinatorial score method makes use of the underlying assumption of the geometric graph model. Specifically, we exploit the information in the non-edges, which correspond to distances larger than the sensing radius ρ, and use this information for estimating both the relative reflection and rotation for a pair of patches that overlap in just three nodes (or more). The link method is useful whenever two patches have a small overlap, but there exist many cross edges in the measurement graph that connect the two patches. Suppose that the two patches Pk and Pl overlap in at least one vertex, and call a link edge an edge (u, v) ∈ E that connects a vertex u in patch Pk (but not in Pl) with a vertex v in patch Pl (but not in Pk). Such link edges can be incorporated as additional information (besides the common nodes) into the registration problem that finds the best alignment between a pair of patches. The right plot in Fig. 5 shows a histogram of the intersection sizes between patches in the BRIDGE-DONUT graph that overlap in at least four nodes.
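Identifying link edges is itself a simple set computation. The sketch below (hypothetical function name, edges given as node pairs) returns each link edge oriented from Pk to Pl; how these edges then enter the registration problem is not shown.

```python
def link_edges(edges, Pk, Pl):
    """Edges (u, v) with u in Pk but not Pl, and v in Pl but not Pk."""
    only_k, only_l = Pk - Pl, Pl - Pk
    links = []
    for u, v in edges:
        if u in only_k and v in only_l:
            links.append((u, v))
        elif v in only_k and u in only_l:
            links.append((v, u))   # reorient so the first endpoint lies in Pk
    return links
```

For example, with Pk = {1, 2, 3} and Pl = {3, 4, 5}, the edge (3, 4) is not a link edge, because node 3 is shared by both patches.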

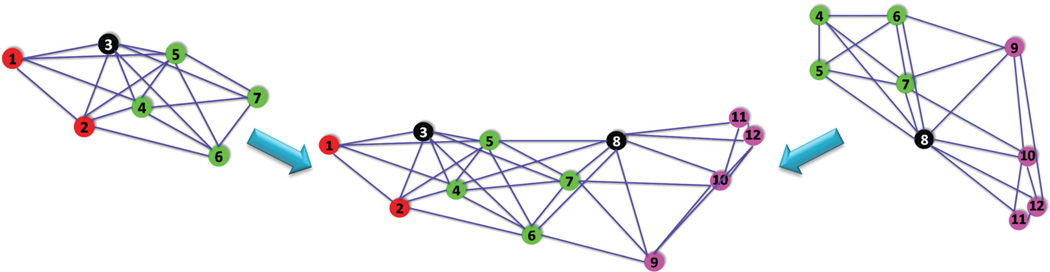

5. Spectral-Partitioning-ASAP (3D-SP-ASAP)

In this section, we introduce 3D-Spectral-Partitioning-ASAP (3D-SP-ASAP), a variation of the 3D-ASAP algorithm, which uses spectral partitioning as a preprocessing step for the localization process.

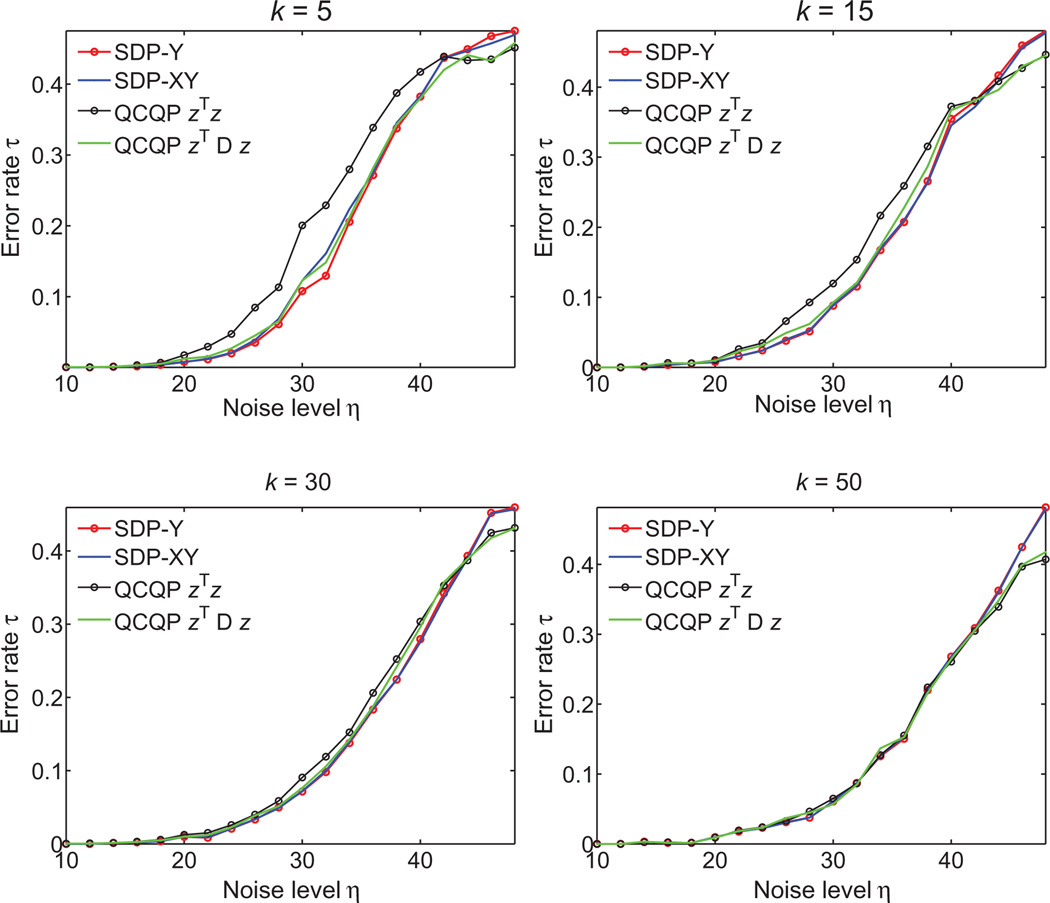

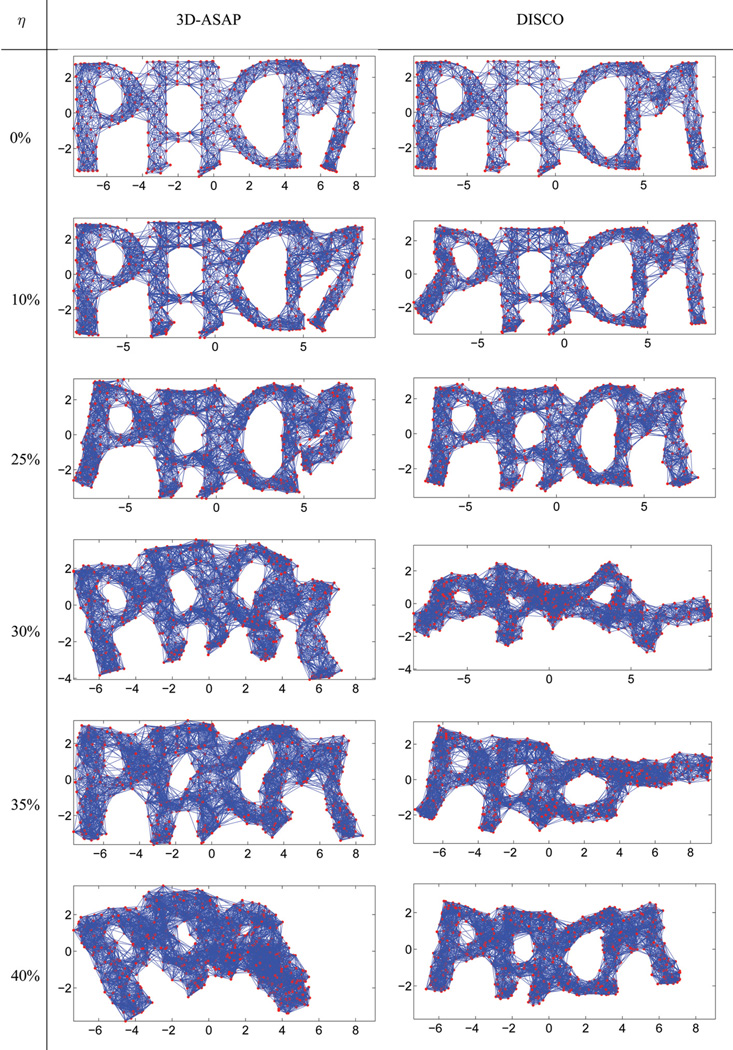

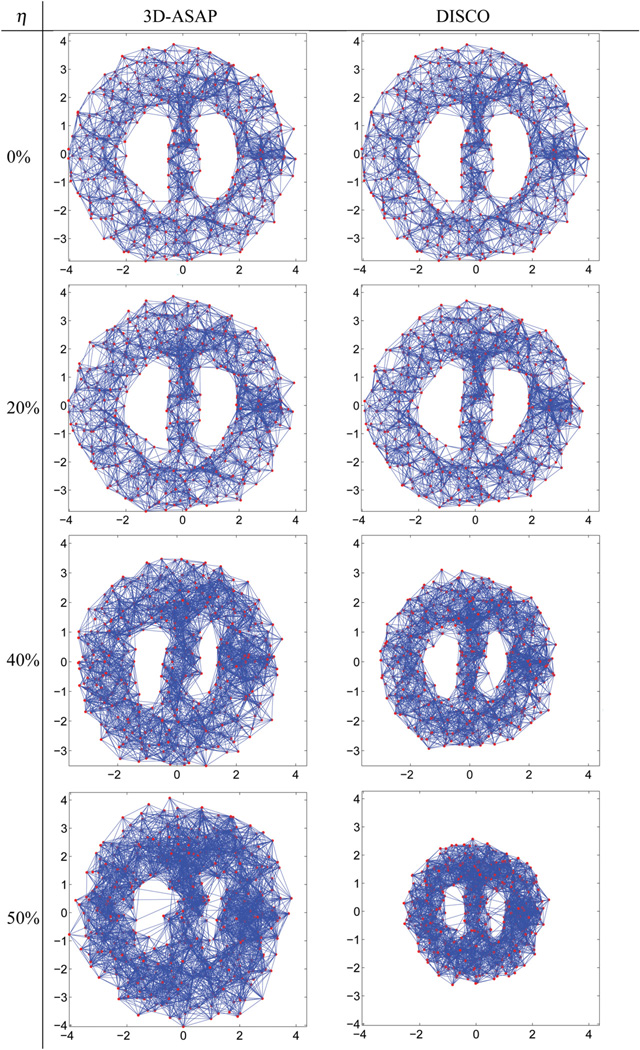

3D-SP-ASAP combines ideas from both DISCO [42] and ASAP. The philosophy behind DISCO is to recursively divide large problems into smaller problems, which can ultimately be solved by the traditional SDP-based localization methods. If the number of atoms in the current group is not too large, DISCO solves for the atom positions via SDP and refines the coordinates using gradient descent; otherwise, it breaks the current group of atoms into smaller subgroups, solves each subgroup recursively, aligns and combines them, and finally improves the coordinates by applying gradient descent. The main question that arises is how to divide a given problem into smaller subproblems. DISCO chooses to divide a large group of nodes into exactly two subproblems, solves each problem recursively and combines the two solutions. In other words, it builds a binary tree of problems, where the leaves are problems small enough to be embedded by SDP.

However, not all available information is being used when considering only a single spanning tree of the graph of patches. The 3D-ASAP approach fuses information from different spanning trees via the eigenvector computation. However, compared with the number of patches used in DISCO, 3D-ASAP generates many more patches, since the number of patches in 3D-ASAP is linear in the size of the network. This can be considered a disadvantage, since localizing all the patches is often the most time-consuming step of the algorithm. 3D-SP-ASAP tries to reduce the number of patches to be localized while still exploiting the full connectivity of the patch graph.
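The recursive divide step described above can be sketched with spectral bisection. This is a generic illustration, not the exact partitioning rule of DISCO or 3D-SP-ASAP: we assume nodes are split by the sign of the Fiedler vector (the eigenvector of the second smallest eigenvalue of the graph Laplacian), the function names are hypothetical, and the groups returned are disjoint, whereas a divide-and-conquer localization also needs nodes shared between pieces in order to align them.

```python
import numpy as np


def spectral_bisection(A):
    """Split the nodes of a graph by the sign of its Fiedler vector.

    A: symmetric n x n adjacency (or weight) matrix. Returns two index arrays.
    """
    A = np.asarray(A, dtype=float)
    L = np.diag(A.sum(axis=1)) - A          # combinatorial graph Laplacian
    _, V = np.linalg.eigh(L)                # eigenvalues in ascending order
    fiedler = V[:, 1]                       # eigenvector of 2nd smallest eigenvalue
    return np.where(fiedler < 0)[0], np.where(fiedler >= 0)[0]


def recursive_partition(A, max_size, nodes=None):
    """DISCO-style recursive bisection until every group has <= max_size nodes."""
    A = np.asarray(A, dtype=float)
    if nodes is None:
        nodes = np.arange(A.shape[0])
    if len(nodes) <= max(max_size, 1):
        return [nodes.tolist()]
    left, right = spectral_bisection(A[np.ix_(nodes, nodes)])
    if len(left) == 0 or len(right) == 0:   # no useful split: stop here
        return [nodes.tolist()]
    return (recursive_partition(A, max_size, nodes[left])
            + recursive_partition(A, max_size, nodes[right]))
```

On two dense clusters joined by a single edge, the first bisection separates the clusters, which is the kind of split that keeps each subproblem well connected.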