Abstract

Face processing has been studied for decades. However, most of the empirical investigations have been conducted using static face images as stimuli. Little is known about whether static face processing findings can be generalized to real world contexts, in which faces are constantly moving. The present study investigates the nature of face processing (holistic vs. part-based) in elastic moving faces. Specifically, we focus on whether elastic moving faces, as compared to static ones, can facilitate holistic or part-based face processing. Using the composite paradigm, participants were asked to remember either an elastic moving face (i.e., a face that blinks and chews) or a static face, and then tested with a static composite face. The composite effect was (1) significantly smaller in the dynamic condition than in the static condition, (2) consistently found with different face encoding times (Experiments 1–3), and (3) present for the recognition of both upper and lower face parts (Experiment 4). These results suggest that elastic facial motion facilitates part-based processing, rather than holistic processing. Thus, while previous work with static faces has emphasized an important role for holistic processing, the current work highlights an important role for featural processing with moving faces.

Keywords: elastic facial movement, holistic processing, part-based processing, composite face paradigm

Object motion provides enriched information about the world. Movement facilitates the processing of multi-dimensional profiles of objects as well as their temporal and spatial relationships. One of the most salient moving objects we encounter every day is the human face. Faces perform various movements, which can be categorized into two types: elastic and rigid. Elastic facial movement refers to the transient structural transformation of the facial skeletal-musculature. For example, a simple smile involves more than 17 facial muscle movements. Rigid facial movement refers to transient changes in face orientation while the facial structure remains unchanged (e.g., head turning and nodding). In many circumstances, both types of movements take place concurrently.

Research has revealed that facial movements can facilitate face recognition (e.g., Butcher, Lander, Fang, & Costen, 2011; Knight & Johnston, 1997), a phenomenon that we will henceforth refer to as the facial motion beneficial effect. However, the underlying mechanisms of this effect remain unclear. In the present study, we focused on the influence of elastic facial movement on the manner by which faces are processed, specifically investigating its effect on holistic and part-based processing in face recognition.

Facial Motion Beneficial Effect

It is well established in the literature that learning moving faces leads to superior recognition performance compared to static faces (Knight & Johnston, 1997; Lander & Bruce, 2003; 2004; Lander, Bruce, & Hill, 2001; Lander, Christie, & Bruce, 1999; Lander & Chuang, 2005; Lander, Chuang, & Wickham, 2006; Lander & Davies, 2007; O’Toole et al., 2011; O’Toole, Roark, & Abdi, 2002; Otsuka et al., 2009; Pike, Kemp, Towell, & Phillips, 1997; Roark, Barrett, Spence, Abdi, & O’Toole, 2003; Thornton & Kourtzi, 2002). For example, Schiff, Banka, and de Bordes Galdi (1986) reported that moving faces in videos were more accurately identified than static faces. This facial motion beneficial effect for face recognition remained even when learned dynamic faces were compared to learned static images of multiple profiles of a face (Bulf & Turati, 2010; Lander & Bruce, 2003; Otsuka et al., 2009; Pilz, Thornton, & Bülthoff, 2005). Furthermore, evidence from previous studies indicates that this motion beneficial effect is a result of the dynamic nature of the moving faces (Lander & Bruce, 2000; 2004; Lander et al., 1999; Lander & Davies, 2007). Lander and Bruce (2004), for example, compared the recognition accuracy of learned moving faces at different video playback speeds. They found that the highest face recognition accuracy was for those learned at a natural speed, which implies that faces moving at their natural speed are better encoded and subsequently recognized. Taken together, these studies provide strong evidence to suggest that the dynamic information in moving faces facilitates improved face recognition.

Despite consistent findings in support of the facial motion beneficial effect, the cause of the effect remains unclear. Some researchers have suggested that the beneficial effect may stem from the fact that facial movement optimizes facial information processing, which in turn leads to better recognition of dynamic faces than static ones (Bulf & Turati, 2010; O’Toole et al., 2002; Otsuka, Hill, Kanazawa, Yamaguchi, & Spehar, 2012; Otsuka et al., 2009; Pike et al., 1997; Roark et al., 2003). However, which particular aspect of facial information processing is optimized has not been specified.

It has been widely recognized that face information is processed in at least two qualitatively different manners: holistic and part-based processing (Calder, Rhodes, Johnson, & Haxby, 2011; Mondloch, Pathman, Maurer, Le Grand, & de Schonen, 2007; Moscovitch, Winocur, & Behrmann, 1997; Tanaka & Farah, 1993; Young, Hellawell, & Hay, 1987). Holistic face processing refers to the tendency to integrate facial information as a unified whole or gestalt, whereas part-based processing focuses on the processing of facial features (e.g., eyes, nose, and mouth) in isolation. According to existing studies, adults, who are face-processing experts, predominantly employ the holistic method (McKone, 2008; Richler, Cheung, & Gauthier, 2011; Richler, Mack, Gauthier, & Palmeri, 2009). For example, Richler et al. (2011a) found that holistic face processing predicts face recognition ability in adults. Wang, Li, Fang, Tian, and Liu (2012) have also recently confirmed this association by providing evidence for a correlation between individual differences in holistic processing and face recognition accuracy. Holistic face processing additionally has been found to play an important role in superior recognition of own race faces compared to other race faces (Harrison et al., 2011; Hayward, Crookes, Favelle, & Rhodes, 2011; Michel, Corneille, & Rossion, 2007; Michel, Rossion, Han, Chung, & Caldara, 2006), with own race face processing employing significantly more holistic processing. In addition, face recognition deficits, which are commonly observed in prosopagnosia and autism populations, can be explained by impairments in holistic face processing (Ramon, Busigny, & Rossion, 2010; Teunisse & de Gelder, 2003). Taken together, the existing evidence suggests that expert-level face processing in adults depends on holistic face processing as opposed to part-based processing.

It should be noted that all of these findings have been obtained with the use of static faces as stimuli. However, in everyday life, most of the faces we encounter are moving faces. It is primarily with dynamically moving faces, not static ones, that we typically perform various tasks such as detection, discrimination, and recognition. It is entirely unclear whether findings with static faces can be generalized to dynamic moving faces. More specifically, it is not clear how, or even whether, facial movement influences holistic or part-based processing of faces.

Facial Movement’s Influence On Holistic versus Part-Based Processing

Only a few studies have investigated how facial movement influences the manner in which faces are processed, or more specifically, whether facial motion leads to greater holistic processing or part-based processing. One set of studies has used the face inversion paradigm as an indirect method to address this question. It is believed that inversion disproportionately disrupts holistic face processing but not part-based processing (Freire, Lee, & Symons, 2000; Yin, 1969). Thornton, Mullins, and Banahan (2011) examined face gender identification in both static and moving faces. They found that participants needed significantly more time to judge the gender of inverted moving faces than that of upright moving faces, whereas gender identification for static faces was unaffected by face inversion. Based on this finding, the researchers concluded that facial movement facilitates holistic processing. However, Hill and Johnston (2001) found participants to be more accurate in judging the gender of moving faces in both the upright and inverted conditions. Furthermore, Knappmeyer, Thornton, and Bülthoff (2003) showed that identity judgment based on facial movement was also not influenced by inversion. Recent studies have further challenged the traditional view that inversion disrupts holistic face processing more than part-based processing: inversion has been shown to impair not only holistic processing but also part-based processing (e.g., Yovel & Kanwisher, 2004). This finding raises considerable doubts about the appropriateness of using inversion to address the holistic versus part-based processing question.

The face composite effect has come to be regarded as a more direct and reliable measure of holistic face processing (Cheung, Richler, Phillips, & Gauthier, 2011; Harrison et al., 2011; McKone, 2008; Richler et al., 2009, 2011a; Young et al., 1987; see Tanaka & Gordon, 2011, for a review). This effect refers to the phenomenon in which identification of a certain face part (upper or lower face) is involuntarily affected by the other face part. For example, when a face is made up of two face parts, which belong to two different persons, the identification of one face part is impeded by the other face part when the two parts are fully aligned. Researchers have regarded this interference as evidence of holistic processing, demonstrating the automatic integration of the whole face to form a face gestalt. This argument has been further supported by the fact that the misalignment of two face parts or face inversion has the ability to reduce or eliminate this interference due to the destruction of the face gestalt (McKone, 2008; Mondloch & Maurer, 2008; Richler et al., 2011b; Young et al., 1987).

Using the face composite effect paradigm, Xiao, Quinn, Ge, and Lee (2012) recently examined the influence of rigid facial movement on holistic versus part-based processing. In their study, participants saw a sequence of images of a face from different orientations and were asked to remember the face. Then, they were shown a static composite face, either aligned or misaligned, and asked to identify whether the upper face part of the composite face belonged to the same person they saw in the sequential face images. The major dynamic versus static manipulation was centered on how the images of a face from different orientations were displayed.

In the dynamic condition, the face images from different orientations were shown sequentially in a natural order and at a natural speed, which could be perceived as the face turning from one side to another. In the static condition, the face images from different orientations were displayed at the same speed but in a randomized order (Experiment 1) or in the same natural order but with long intervals between images (Experiments 2 and 3). Thus, in the static condition, participants could not perceive coherent facial movements, although they saw exactly the same images of a face from different orientations.

Xiao et al. (2012) observed the typical face composite effect in the static condition in each of the three experiments: participants recognized the upper face part better when it was misaligned with the lower foil face part than when it was aligned. However, in the dynamic condition of each of the three experiments, the face composite effect disappeared. This finding suggests that rigid facial movement during face familiarization promotes part-based face processing rather than holistic face processing, which consequently reduces the holistic interference from the irrelevant face part. Xiao et al. (2012) further suggested that the predictable rigid facial motion allowed observers to readily attend to a certain face part during the familiarization stage, thereby enabling them to withstand interference from an irrelevant face part during the testing stage.

However, this explanation might not be applicable to elastic facial movement, as the two types of facial movements present completely different movement patterns. Previous studies have suggested that the facial information embedded in rigid (e.g., head rotation and nodding) and elastic (e.g., facial expression, oral speech, and gaze) facial movement is different (Lander & Bruce, 2003; O’Toole et al., 2002; Roark et al., 2003). Rigid facial movements provide multiple viewpoints of a face, and display coherent face orientation changes (i.e., head turning and nodding). Thus, rigid motion by definition does not contain any changes related to face structure or features. This coherent, predictable nature of movement makes it possible to maintain attention to certain facial features across different viewpoints. However, elastic facial movements, by definition, involve changes in facial features and the structural relationships among them. One possibility is that the changing nature of facial features during elastic motion makes it difficult for observers to sustain their attention. Additionally, elastic facial motions are often idiosyncratic and sometimes provide characteristic information unique to a face’s owner (i.e., dynamic identity). As a result, observers may resort to using a more holistic method to process the dynamic face (holistic processing hypothesis). Alternatively, it is possible that the changes in face parts during elastic motion draw more attention to facial features, which leads to increased part-based processing (part-based processing hypothesis). The present study was designed to examine these two hypotheses by addressing a specific issue: how elastic facial movement influences the manner by which faces are processed.

The Present Study

Here, we examined the effect of elastic facial movement on face processing by using the composite face paradigm. In Experiments 1 through 4, participants were first familiarized with a dynamic or a static target face. The dynamic target face display consisted of an individual facing the participants while blinking and chewing silently. We chose silent chewing as the source of elastic motion because it does not include expressive, semantic, or attention cues, making it ideal for studying the pure effect of facial movement. The static target face was a single image of the same face. Following the familiarization phase, a composite static face appeared. It consisted of upper and lower face parts from two different faces, which were either aligned to form a face gestalt or misaligned. The participants’ task was to judge whether the upper or lower parts of the composite faces belonged to the target face.

Similar to existing studies with static faces (e.g., Mondloch & Maurer, 2008; Young et al., 1987), we used response accuracy differences between recognizing the aligned and misaligned composite faces (i.e., the composite effect) to index the manner in which facial elastic motion affected face processing. If the target face was processed holistically, worse performance should be observed for the aligned composite face than for the misaligned one. In other words, a composite effect should be observed. However, if participants processed the target face in a part-based manner, then the face composite effect should not be observed. That is, we should observe equal accuracy in recognizing face parts in the aligned and misaligned conditions.

By comparing the composite effect in the dynamic condition with that in the static one, we can infer how elastic facial movement influences the manner in which face information is processed. A larger composite effect in the dynamic condition compared to the static condition would indicate that elastic facial movement facilitates participants’ processing of the target face more holistically. On the other hand, a smaller composite effect in the dynamic condition compared to the static condition would suggest that elastic facial movement facilitates part-based processing.
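The logic of this comparison can be sketched in a few lines of Python. This is an illustrative sketch only: the function names and the accuracy values are hypothetical and are not taken from the study's data or analysis scripts.

```python
def composite_effect(acc_misaligned: float, acc_aligned: float) -> float:
    """Composite effect: accuracy for misaligned minus accuracy for aligned test faces."""
    return acc_misaligned - acc_aligned


def motion_effect(ce_dynamic: float, ce_static: float) -> str:
    """Label the direction of the dynamic-versus-static difference in composite effect."""
    if ce_dynamic > ce_static:
        return "more holistic"
    if ce_dynamic < ce_static:
        return "more part-based"
    return "no difference"


# Hypothetical accuracies for illustration only (not data from the study).
ce_dynamic = composite_effect(acc_misaligned=0.90, acc_aligned=0.80)
ce_static = composite_effect(acc_misaligned=0.90, acc_aligned=0.70)
print(motion_effect(ce_dynamic, ce_static))
```

In practice the inference rests on a statistical test of the interaction between target face type and alignment, not on a direct comparison of two numbers as in this sketch.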

In Experiments 1 through 3, we asked participants to learn and recognize the upper face parts of the target composite faces. In Experiment 4, we asked participants to recognize the lower face parts to examine whether the results of Experiments 1 through 3 could be generalized to lower face identification.

Experiments 1–3

Experiments 1–3 were designed to examine how elastic facial movement influences face recognition by using the face composite paradigm (Maurer, Le Grand, & Mondloch, 2002; McKone, 2008; Mondloch & Maurer, 2008; Mondloch et al., 2007; Richler et al., 2009, 2011a; Richler, Tanaka, Brown, & Gauthier, 2008; Young et al., 1987). Specifically, we compared the composite effect in the dynamic condition against that in the static condition to understand whether elastic facial movements encourage either holistic or part-based face processing. In addition, the influence of elastic facial movement was investigated with three different stimulus presentation durations in Experiments 1, 2, and 3, respectively, so as to ascertain whether the impact of facial motion is influenced by encoding time. If encoding time affects the motion effect, then we should observe effects of different sizes across the three experiments. In particular, the longer the duration, the greater the motion effect should be.

Method

Participants

Twenty-four Chinese participants (7 males) participated in Experiment 1, and another 48 participants took part in Experiment 2 (N = 24, 7 males) and Experiment 3 (N = 24, 8 males). All participants had normal or corrected to normal vision, and they had not met any of the models whose faces would be used in the experiment. Participants took part in the experiment after giving their informed consent. They participated in only one of the three experiments.

Materials and procedure

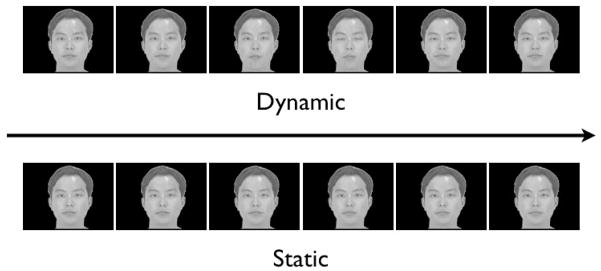

In Experiment 1, participants saw and were asked to remember a target face. In the dynamic condition, front-facing faces that were silently blinking and chewing were presented to participants (Figure 1). The faces were those of 20 Chinese models (10 male and 10 female), who were required to pose with neutral expressions and avoid any head movements. Following the presentation of the target face for 600 ms, a 500 ms visual mask was presented at the center of the screen. A static composite test face appeared immediately after the offset of the visual mask. The composite test face comprised upper and lower face parts, which came from two different faces. There were two types of test trials: target face test trials and foil face test trials. In the target face test trials, the upper part of the composite face was from the target face, whereas the lower part was from a different person whom the participants had not seen before. For half of the target face test trials, the upper and the lower face parts were aligned to form a whole face (i.e., the aligned condition), whereas in the other half of the trials, the two were misaligned at about the midpoint of the face (i.e., the misaligned condition, see Figure 2).

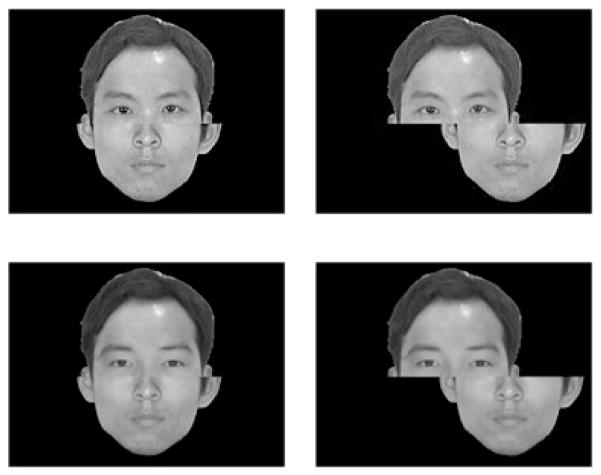

Figure 1.

Illustrations of the dynamic and static target face conditions.

Figure 2.

Examples of composite faces. The upper panel faces are target aligned and misaligned faces; the bottom panel faces are foil aligned and misaligned faces.

In the foil face test trials, the upper foil test face came from another face image different from the target face. The foil face and target face images were identical except that the target face’s eyes and nose were replaced with those from additional faces. These additional faces were themselves never seen by participants in the present study. The image editing software Photoshop was used to ensure that the foil faces with eye and nose replacements looked natural. The resultant foil faces were perceived as belonging to entirely different persons from the target faces although they shared the same face contour.

When the target or foil test face was presented, participants were asked to indicate, by pressing keys, whether the upper face part belonged to the same person as the target face. The correct response was “same person” in the target test trials and “different person” in the foil test trials.

For the static condition, the procedure was exactly the same as that in the dynamic condition, except that the target faces were static face pictures rather than moving face videos. These face pictures were extracted from the face videos. For each model’s face video, six static face pictures (2 closed mouth images, 2 open mouth images, and 2 images at the middle point between open and closed mouth) were extracted and were randomly presented in the static trials. All of the face videos and pictures were sized to 640 × 480 pixels and were presented on 17-inch monitors with a resolution of 1024 × 768 pixels.

For Experiments 2 and 3, the procedure and stimuli were identical to those in Experiment 1 except for the target face presentation duration. The target faces were shown for 1200 ms in Experiment 2 and 1800 ms in Experiment 3.

To avoid potential interference between the dynamic and static trials, 20 models’ faces were equally split into two sets (5 male and 5 female for each set), which were used in either the dynamic or static condition respectively. For half of the participants, faces in Set 1 were only shown in the dynamic trials and those in Set 2 were only shown in the static trials, and vice versa for the other half of the participants.

All of the participants went through 160 experimental trials, which were equally divided into 2 (target face type: dynamic and static) × 2 (composite face alignment: aligned and misaligned) × 2 (composite face type: target and foil) = 8 conditions. All the trials were presented in a random order.
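As a minimal sketch of this design, the 160 trials can be generated by crossing the three two-level factors and repeating each of the 8 resulting conditions 20 times before shuffling. The names below (`build_trials`, `seed`) are hypothetical, and the assignment of individual models to trials is omitted because the text does not specify it.

```python
import itertools
import random
from collections import Counter

TARGET_TYPES = ("dynamic", "static")
ALIGNMENTS = ("aligned", "misaligned")
TEST_TYPES = ("target", "foil")
N_TRIALS = 160


def build_trials(seed=None):
    """Return a shuffled list of (target_type, alignment, test_type) trials."""
    cells = list(itertools.product(TARGET_TYPES, ALIGNMENTS, TEST_TYPES))
    per_cell = N_TRIALS // len(cells)  # 160 / 8 = 20 trials per condition
    trials = [cell for cell in cells for _ in range(per_cell)]
    random.Random(seed).shuffle(trials)  # fully randomized presentation order
    return trials


trials = build_trials(seed=0)
counts = Counter(trials)
print(len(trials), set(counts.values()))  # prints: 160 {20}
```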

A set of 4 practice trials was administered before the experimental trials so as to familiarize participants with the experimental procedure. The faces used in these practice trials were not shown in the experimental trials.

Results and Discussion

The measure used in the present study was participants’ response accuracy. Following previous studies (e.g., Mondloch & Maurer, 2008; Young et al., 1987), we analyzed only accuracy data from the target composite face trials, because these trials are regarded as more reliable for examining whether faces are processed holistically or in a part-based manner (Richler et al., 2011b).

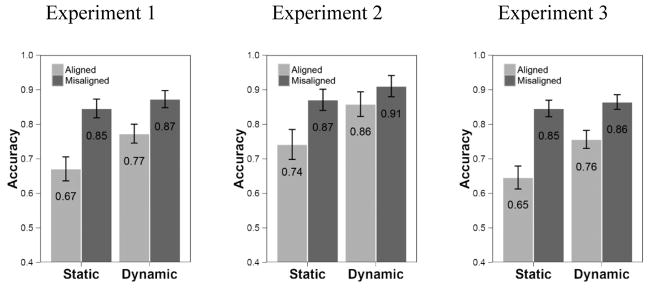

Figure 3 shows the mean accuracy in Experiments 1, 2, and 3. For Experiment 1, a 2 (target face type) × 2 (composite face alignment) repeated measures ANOVA was conducted on response accuracy. Consistent with previous findings, test faces were more accurately recognized in the dynamic condition than in the static one (Mdynamic = .82, Mstatic = .76, F[1, 23] = 7.01, ηp2 = .23, p = .014). Additionally, the misaligned test composite faces were better recognized than the aligned faces (Mmisaligned = .86, Maligned = .72, F[1, 23] = 22.32, ηp2 = .49, p < .001). This finding suggests that the test faces were processed in a holistic manner regardless of condition.

Figure 3.

Mean accuracy of target face recognition in Experiments 1–3. Error bars represent unit standard error.

Next, we explored whether the degree of holistic and part-based face processing differed between the dynamic and static conditions by examining whether the composite effect was the same in the two conditions. A significant interaction was found between target face type and alignment (F[1, 23] = 5.26, ηp2 = .19, p = .031), indicating that the size of the composite effect differed between the dynamic and static conditions. We calculated the face composite effect in the dynamic and static conditions by subtracting response accuracy for aligned faces from that for misaligned faces. As shown in Figure 3, dynamic faces led to a smaller composite effect than static faces (Mdynamic = .10, Mstatic = .17). Furthermore, simple effects analyses showed that moving faces led to better recognition of aligned composite faces than static faces did (p < .050). However, no difference was found in recognition of misaligned composite faces between the dynamic and static conditions (p > .050). Thus, elastic facial movement decreased the amount of holistic interference caused by aligned face parts, suggesting that elastic facial movement led participants to process facial information in a more part-based manner.
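As a quick consistency check on the effect sizes reported for Experiment 1, partial eta squared can be recovered from each F ratio and its degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The sketch below is illustrative: the function name is hypothetical and the code is not part of the original analysis.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F ratios and effect sizes as reported for Experiment 1, each with (1, 23) df.
reported = {
    "target face type": (7.01, 0.23),
    "alignment": (22.32, 0.49),
    "interaction": (5.26, 0.19),
}

for effect, (f_value, reported_eta) in reported.items():
    eta = partial_eta_squared(f_value, df_effect=1, df_error=23)
    print(f"{effect}: computed {eta:.2f}, reported {reported_eta:.2f}")
```

The computed values agree with the reported ones to two decimal places.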

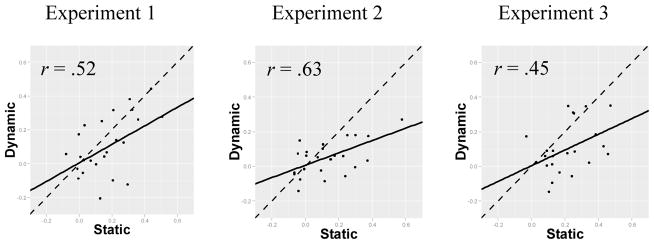

It should be noted that the part-based processing promoted by elastic facial movement does not necessarily mean that motion provided additional information to the observer or that it improved recognition. In fact, there was no difference in recognition of the misaligned composite faces between the two conditions. Instead, facial motion seems to facilitate more part-based processing. This argument is further supported by an individual differences analysis of the correlation between participants’ composite effect sizes in the dynamic and static conditions. The Pearson correlation between the two was significant and positive (r = .52, p = .009, see Figure 4). This correlation suggests that elastic facial movement did not completely change face processing from holistic to part-based in an all-or-none fashion. Otherwise, we should not have observed any correlation between the dynamic and static conditions in composite effect size. Rather, elastic movement shifted the manner by which participants processed faces from more holistic to more part-based.
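The individual differences analysis can be sketched as follows. This is a minimal illustration, assuming one composite effect score (misaligned minus aligned accuracy) per participant in each condition. The per-participant values are hypothetical, and the helper names are not taken from the original analysis.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def t_from_r(r, n):
    """t statistic (df = n - 2) for testing a correlation against zero."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


# Hypothetical composite effects, one pair per participant (illustration only).
ce_static = [0.25, 0.10, 0.20, 0.05, 0.30, 0.15]
ce_dynamic = [0.15, 0.05, 0.10, 0.00, 0.20, 0.12]

r = pearson_r(ce_static, ce_dynamic)
print(f"hypothetical data: r = {r:.2f}")

# Reported Experiment 1 correlation: r = .52 with 24 participants.
print(f"reported r = .52, n = 24: t(22) = {t_from_r(0.52, 24):.2f}")
```

A positive correlation of this kind indicates that participants with a larger composite effect for static faces also tend to show a larger one for dynamic faces, which is the pattern the graded (not all-or-none) account predicts.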

Figure 4.

The relation of the composite effect size between the dynamic and static conditions. The solid lines represent the regression lines. The dashed line is a theoretical line representing perfect correlation between the size of the composite effect in the dynamic and static conditions.

For Experiment 2, we found the same results as those in Experiment 1. The two-way ANOVA showed significant main effects of both target face type and test face alignment. Participants’ responses were more accurate when they learned dynamic faces than static ones (Mdynamic = .88, Mstatic = .81, F[1, 23] = 23.37, ηp2 = .50, p < .001). The alignment of the upper and lower parts of the composite faces affected participants’ recognition of the upper part (Mmisaligned = .89, Maligned = .80, F[1, 23] = 14.70, ηp2 = .39, p < .001). More importantly, the interaction showed that the size of the composite effect in the dynamic condition was significantly smaller than in the static condition, F(1, 23) = 8.87, ηp2 = .28, p = .006, which again indicates that face processing was different when learning dynamic faces compared to static ones. Simple effects analyses revealed that for misaligned faces, no significant difference in recognition between dynamic and static conditions was found (p > .050). However, for aligned composite faces, face recognition was significantly better in the dynamic condition than in the static condition (p < .050), suggesting that when participants learned the elastic moving faces as compared to the static ones their processing became more part-based. In addition, this processing difference was supported by a significant correlation between the sizes of participants’ composite effects in the dynamic and static conditions (Figure 4, r = .63, p = .001). This latter result once again indicates that elastic facial movement boosted part-based processing when learning dynamic faces as compared to static ones, rather than completely changing the manner of face processing from holistic to part-based.

For Experiment 3, we conducted the same analyses as in Experiments 1 and 2. Both the ANOVA and correlation analysis revealed the same results as those from the previous experiments. As shown in Figure 3, elastic facial movement led to better recognition (Mdynamic = .81, Mstatic = .75, F[1, 23] = 9.17, ηp2 = .29, p = .006). Misaligned composite faces were better recognized than aligned ones (Mmisaligned = .86, Maligned = .70, F[1, 23] = 44.73, ηp2 = .66, p < .001). The interaction between the two factors was also significant (F[1, 23] = 6.69, ηp2 = .23, p = .017), indicating that the size of the composite effect differed between the dynamic and static conditions. The simple effects analyses once again replicated the results from Experiments 1 and 2: elastic facial movement facilitated upper face part recognition for aligned faces (p < .050), but not for misaligned faces (p > .050). The significant correlation between the composite effect in the dynamic and the static conditions (r = .45, p = .031) demonstrated that elastic facial movement increased the degree to which part-based face processing was employed, but did not entirely shift face processing from holistic to part-based.

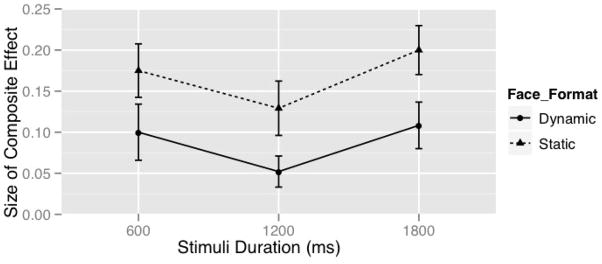

Results from Experiments 1 through 3 consistently revealed an elastic motion effect: elastic moving faces led to a smaller composite effect than static faces. The next analysis focused on whether this elastic motion effect was affected by the face presentation duration used in Experiments 1, 2, and 3, respectively. We conducted a 2 (target face type) × 2 (composite face alignment) × 3 (presentation duration) three-way mixed ANOVA on the accuracy data from the three experiments. No significant effects involving the presentation duration factor were found (ps > .10, Figure 5). Thus, the elastic motion effect was robust across the different durations of face presentation.

Figure 5.

The size of the composite effect for the dynamic and static conditions in Experiments 1 through 3. The error bars represent unit standard error.

In sum, the three experiments consistently showed that elastic facial movement led to a smaller composite effect. This elastic motion effect suggests that elastic facial movement may promote part-based face processing. However, an alternative explanation is that this effect derives from the particular movement pattern of the chewing and blinking faces, because this facial movement occurs primarily in the mouth area, that is, the lower face part. In contrast, the blinking in the upper face part is relatively infrequent. The dynamic contrast between the two face parts could lead the upper face part to be perceived as relatively more stable than the lower chewing face part, thereby allowing the upper face part information to be more easily accessed. Thus, participants might have more easily processed the upper part of the face than the lower one, resulting in better performance in the dynamic than the static condition. This argument suggests that the motion effect might apply only to situations where recognition of the upper face part is required.

In Experiment 4, we aimed to address this potential issue by asking participants to recognize the lower instead of upper face part. If the smaller composite effect in the dynamic condition was due to general part-based processing facilitation, we should continue observing this effect in the lower face part recognition task. Alternatively, if the initial results were due to the particular unbalanced moving pattern in the chewing faces, then we should observe a comparable composite effect in the dynamic and static conditions.

Experiment 4

Method

Participants

Nineteen undergraduate students (8 males) with normal or corrected to normal vision participated in the current experiment. None of them had participated in the previous experiments or knew the models in the experiments.

Materials and procedure

The stimuli in the present experiment were identical to those in the previous ones except for the composite faces. The upper part of the composite faces was always a different person from the person in the target face. The lower part of the target composite faces was always the same person as the person in the target face. The lower parts of the foil composite faces had the same face contour as those of the target composite faces, except their inner face features (i.e., nose and mouth) were replaced with those from other models’ face feature images, which were never shown in the present study.

The procedure was identical to that in Experiment 2 (1200 ms presentation duration), with the only exception being the task requirement. In the present experiment, participants were asked to identify whether the lower face part of the composite faces was the same person as in the target faces.

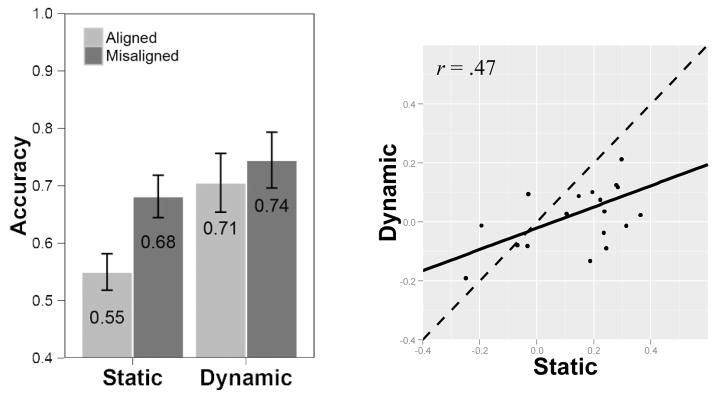

Results and Discussion

As shown in Figure 6, the present results were similar to those in Experiments 1–3, which was confirmed by a 2 (target face type) × 2 (composite face alignment) ANOVA. Learning dynamic faces led to overall better recognition (M = .72) as compared to learning static ones (M = .62, F[1, 18] = 18.52, ηp2 = .51, p < .001). The beneficial effect of facial movement was thus replicated when participants identified lower composite face parts. Additionally, recognition was better in the misaligned condition (M = .71) than in the aligned one (M = .63, F[1, 18] = 9.70, ηp2 = .35, p = .006). More importantly, the interaction was significant, F(1, 18) = 7.14, ηp2 = .28, p = .016, with a smaller composite effect in the dynamic condition (M = .04) than in the static condition (M = .13). Thus, despite the fact that we changed the task demand and asked participants to recognize the lower face parts, the same elastic motion effect was observed.

Figure 6.

Mean accuracy of target composite face recognition in Experiment 4 (left). Error bars represent unit standard error. The relation of the composite effect size between the dynamic and the static conditions (right). The solid line indicates the regression line, and the dashed line is a theoretical line representing perfect correlation between the size of the composite effect in the dynamic and static conditions.
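As a quick consistency check on the effect sizes reported for Experiment 4, partial eta squared (written ηp2 in the text) can be recovered from an F ratio and its degrees of freedom with the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The sketch below applies this identity to the three reported F values. The function name `partial_eta_squared` is ours and is not part of the original analysis.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and effect sizes as reported in the text for Experiment 4 (df = 1, 18).
reported = {
    "target face type": (18.52, 0.51),
    "composite face alignment": (9.70, 0.35),
    "interaction": (7.14, 0.28),
}

for effect, (f_value, eta_reported) in reported.items():
    eta = partial_eta_squared(f_value, df_effect=1, df_error=18)
    print(f"{effect}: recovered {eta:.2f}, reported {eta_reported:.2f}")
```

Each recovered value rounds to the effect size given in the text, so the reported F ratios and effect sizes are mutually consistent.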

The individual differences analysis also showed that the composite effects in the dynamic and the static conditions were significantly correlated (r = .47, p = .043). This correlation is consistent with those in the previous experiments, suggesting that facial movement changed only the degree to which participants used part-based relative to holistic processing.
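The significance of a Pearson correlation can be checked by converting r to a t statistic with n − 2 degrees of freedom, t = r√(n − 2) / √(1 − r²). The sketch below applies this standard conversion to the reported r = .47. It assumes that all 19 participants of Experiment 4 entered the correlation, which the text does not state explicitly. The critical value of 2.110 (two-tailed, α = .05, df = 17) comes from a standard t table and is our addition, as is the function name `t_from_r`.

```python
import math


def t_from_r(r: float, n: int) -> float:
    """Convert a Pearson correlation based on n pairs to a t statistic (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


# Assumption: n = 19, i.e., every participant of Experiment 4 was included.
t_value = t_from_r(0.47, 19)

# Two-tailed critical t for alpha = .05 and df = 17, from a standard t table.
T_CRITICAL_DF17 = 2.110

print(f"t(17) = {t_value:.2f}, exceeds critical value: {t_value > T_CRITICAL_DF17}")
```

Under this assumption the t statistic lies just above the critical value, which agrees with the reported p value being slightly below .05.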

To confirm the similarity between findings in the current and previous experiments, we directly compared the composite effect in the present experiment (lower face part recognition) with that in Experiment 2 (upper face part recognition), given that both experiments used the same 1200 ms stimulus presentation time. A 2 (task) × 2 (target face type) mixed ANOVA was conducted on the size of the composite effect. Each participant’s composite effect score was calculated by subtracting his or her recognition accuracy in the aligned trials from that in the misaligned trials. As expected, the main effect of target face type was significant, with a larger composite effect in the static than in the dynamic condition (F[1, 41] = 15.92, ηp2 = .28, p < .001). However, neither the main effect of task nor the interaction reached significance (ps > .724). This finding suggests that neither the size of the composite effect nor the motion effect that facilitates part-based processing is affected by the demand of the recognition task.
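The composite effect score described above is a simple difference score, which can be sketched as follows. The accuracy values are hypothetical and serve only to illustrate the computation; they are not data from the study. The names `composite_effect`, `hypothetical`, and `mean_effect` are ours.

```python
from statistics import mean


def composite_effect(aligned_acc: float, misaligned_acc: float) -> float:
    """Composite effect score: misaligned accuracy minus aligned accuracy."""
    return misaligned_acc - aligned_acc


# Hypothetical accuracies for three participants (illustrative only, not study data).
# Each tuple is (aligned accuracy, misaligned accuracy).
hypothetical = {
    "dynamic": [(0.70, 0.75), (0.68, 0.70), (0.72, 0.80)],
    "static": [(0.55, 0.70), (0.60, 0.70), (0.50, 0.66)],
}

# Mean composite effect per target face type.
mean_effect = {
    condition: mean(composite_effect(aligned, misaligned) for aligned, misaligned in pairs)
    for condition, pairs in hypothetical.items()
}

for condition, value in mean_effect.items():
    print(f"{condition}: mean composite effect = {value:.3f}")
```

A larger score indicates that misalignment helped recognition more, that is, stronger holistic interference in the aligned condition. In the mixed ANOVA, these per-participant scores would be the dependent variable, with task varying between participants and target face type within participants.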

In the lower face part recognition task, the present experiment successfully replicated the finding that elastically moving faces led to a smaller composite effect than static faces. The similar findings within the upper and the lower face recognition tasks indicate that the effect of facial movement is not limited to recognition of particular face parts, reflecting elastic movement’s relatively pervasive influence on the manner in which faces are processed.

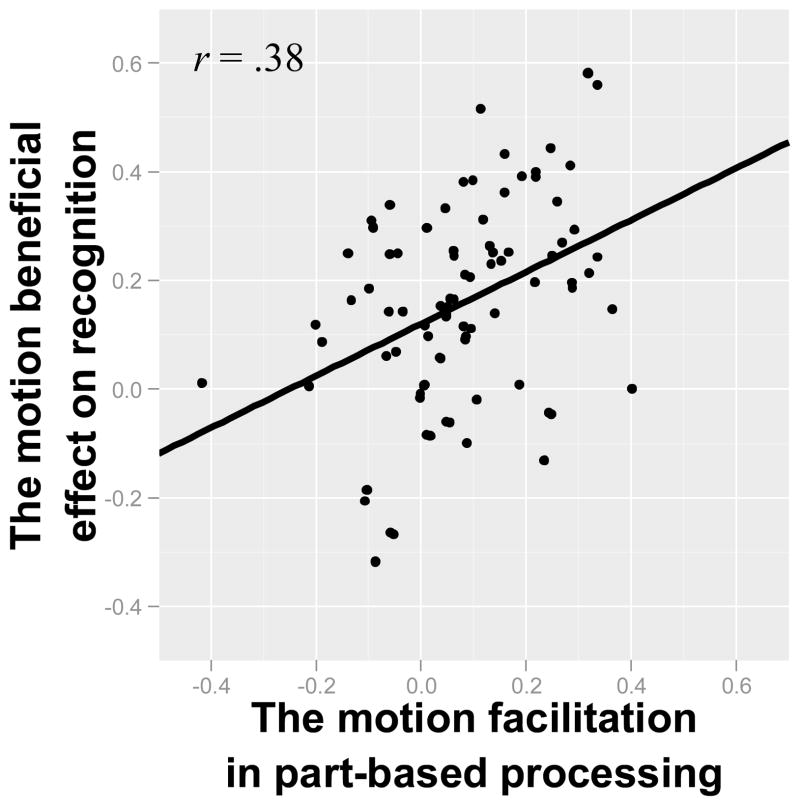

In sum, we have found evidence that the elastic facial motion effect is relatively robust in both upper and lower face part recognition. Thus, results from Experiments 1 through 4 together suggest that elastic facial movement leads to more part-based face processing. In spite of the consistency of results across Experiments 1 through 4, one crucial question remains: is the elastic motion effect in face recognition related to participants’ change in face processing manner from holistic to part-based? We tested this possibility by examining the correlation between the degree of change in processing manner and the degree to which the recognition advantage was facilitated by facial movement. We calculated the degree of change in processing manner by subtracting the composite effect in the dynamic condition from that in the static condition (i.e., the size of the motion facilitation effect). The recognition advantage was determined by subtracting recognition accuracy in the static condition from that in the dynamic condition. A Pearson correlation was performed to examine the relationship between these two measurements based on the data from Experiments 1 through 4. Data from 5 participants were not included in the analysis because their scores on one or the other measure were greater than 2 standard deviations from the mean. The degree to which part-based processing was used was found to be positively correlated with improved recognition for the facial movement condition (see Figure 7, r = .38, p < .001). Although causation cannot be inferred based on this correlational result, it does suggest a potential link between elastic facial movement’s facilitation of part-based processing and improved recognition performance.

Figure 7.

The relation between the size of part-based processing facilitated by elastic motion and the size of motion facilitation on recognition. The solid line represents the regression line.
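The correlational analysis across Experiments 1 through 4 can be sketched as below, under stated assumptions. The text does not specify whether the 2 standard deviation criterion used the sample or the population standard deviation, or whether it was applied to the pooled data or within each experiment. This sketch assumes the sample standard deviation applied to the pooled data. All function and variable names are ours, and the values in the usage example are hypothetical.

```python
from statistics import mean, stdev


def within_two_sd(values):
    """Flag each value that lies within 2 sample SDs of the mean (assumed criterion)."""
    m, s = mean(values), stdev(values)
    return [abs(v - m) <= 2 * s for v in values]


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences with nonzero variance."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def motion_link(static_ce, dynamic_ce, static_acc, dynamic_acc):
    """Correlate the change in processing manner with the recognition advantage."""
    # Change in processing manner: static composite effect minus dynamic composite effect.
    processing_change = [s - d for s, d in zip(static_ce, dynamic_ce)]
    # Recognition advantage: dynamic accuracy minus static accuracy.
    recognition_gain = [d - s for s, d in zip(static_acc, dynamic_acc)]
    # Keep only participants who are within 2 SDs on both measures.
    keep_x = within_two_sd(processing_change)
    keep_y = within_two_sd(recognition_gain)
    kept = [
        (x, y)
        for x, y, ok_x, ok_y in zip(processing_change, recognition_gain, keep_x, keep_y)
        if ok_x and ok_y
    ]
    xs, ys = zip(*kept)
    return pearson_r(xs, ys), len(kept)


# Hypothetical values for four participants (illustrative only, not study data).
r, n_kept = motion_link(
    static_ce=[0.10, 0.20, 0.15, 0.05],
    dynamic_ce=[0.05, 0.05, 0.05, 0.05],
    static_acc=[0.60, 0.60, 0.60, 0.60],
    dynamic_acc=[0.65, 0.75, 0.70, 0.60],
)
print(f"r = {r:.2f} based on {n_kept} participants")
```

Excluding a participant when either measure is extreme mirrors the description that scores "on one or the other measure" led to exclusion.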

General Discussion

In the present study, we examined the effect of elastic facial movement on the manner by which faces are processed. Specifically, we tested whether facial motion facilitates either holistic or part-based face processing. We obtained four major findings. First, facial motion improves face recognition, replicating a robust effect in the literature (Butcher et al., 2011; Hill & Johnston, 2001; Knight & Johnston, 1997; Lander & Bruce, 2003, 2004; Lander & Chuang, 2005; Otsuka et al., 2009). Second and more importantly, we found that elastic facial movement leads to a significantly smaller composite effect, suggesting that elastic facial movement facilitates part-based, not holistic, face processing. Third, the effect is found in three experiments that varied in exposure time. This finding suggests that the part-based processing facilitation effect of facial motion is relatively robust and apparently unaffected by how much time participants have to encode the target face. Fourth, the facial movement effect is also found regardless of task demand (i.e., recognizing either the upper or lower part of the face).

In the present study, the face composite effect was used to obtain direct evidence indicating that elastic facial movement promotes part-based face processing. In addition, analyses of individual differences showed that elastic facial movement increases the degree to which participants use part-based processing in extracting face identity information. That is, elastic facial movement does not completely change participants’ face processing strategy from a holistic to a part-based manner in an all or none fashion.

The current study, to our knowledge, is the first to provide direct evidence for an influence of elastic facial movement on the manner in which faces are processed. Our finding that elastic facial movement promotes part-based face processing is in line with results from previous studies that have used indirect measures. For example, Hill and Johnston (2001) showed that facial motion could facilitate gender judgment in both upright and inverted faces, thereby suggesting that the effect of facial motion is preserved with face inversion. Also, findings from Knappmeyer et al. (2003) indicated that there was no difference in the amount of motion facilitation when making identity judgments for upright and inverted faces. Given that inverting faces has been thought to mainly disrupt holistic face processing, the finding that facial movement facilitates recognition of inverted faces to the same extent as upright faces suggests that facial movement exerts its effect mainly on part-based, and not holistic, processing.

Why would elastic facial movement facilitate part-based rather than holistic processing? There exist at least two non-mutually exclusive possibilities. First, the effect may be a result of the attention that elastic facial movement draws to individual face parts (Lander, Hill, Kamachi, & Vatikiotis-Bateson, 2007). As shown in previous studies, although holistic processing is regarded as dominant in face perception, part-based processing becomes dominant when faces possess distinguishing features (e.g., big nose, hairstyle; Moscovitch et al., 1997; Sergent, 1985; Young, Ellis, Flude, McWeeny, & Hay, 1986). Elastic movement is a product of the motion of several facial parts, and it is possible that the motion of these facial features causes them to appear more salient than those that remain static, so that they attract attention. Participants’ attention is likely drawn to these face parts involuntarily, rather than being allocated across the whole face area. This behavior, in turn, would result in a smaller composite effect.

The second possibility is that facilitation of part-based processing is driven by the changing face structure, which is also a product of elastic movements. When a face is engaged in elastic motion, the spatial relations between its features change. Holistic face processing relies heavily on the spatial structure of a face; shifting spatial relationships make it difficult to process the face as a whole. Consequently, face processing must rely on part-based processing to interpret facial information. One of these possibilities, or a combination of both, likely led participants to rely on part-based face processing more in the dynamic condition than in the static condition.

In line with the present findings, Xiao et al. (2012) recently provided evidence for the idea that rigid facial movements also facilitate part-based face processing, by showing a smaller composite effect in the dynamic face condition relative to the static face condition. Rigid facial movement differs from elastic facial movement in that it does not include any changes in facial structure. It mainly consists of face viewpoint changes, for example, nodding and head turning. The authors suggested that this rigid motion effect was a product of coherent face viewpoint changes, which provided a relatively stable viewing condition for observers to attend to face parts rather than the whole face. Consequently, promotion of this part-based processing led to a smaller composite effect.

The present findings taken together with those of Xiao et al. (2012) have important implications for our understanding of the exact nature of face processing in the real world. The faces we encounter in the real world are constantly moving. However, most of our current knowledge about face processing is obtained from studies using static faces as stimuli. For example, the dominance of holistic face processing is a principal theory that was discovered and supported through these static face studies. The present findings suggest, however, that individuals rely on less, not more, holistic processing when viewing ecologically valid moving faces. This conclusion implies that natural face processing may not be done primarily in a holistic manner, and that face processing in natural situations might not look like what we have learned from static face studies. If this is indeed the case, we must seriously reassess the vast existing evidence derived from studies using static faces.

What does this motion facilitation process signify for the processing of natural faces? One possibility that can be inferred from the present results is that facial movement might lead to processing of facial information that is most crucial to the task at hand. The current experiments asked participants to recognize a face part, and participants explicitly knew this. Thus, facial movements promoted the processing of part-based face information in order to adapt to this situation. As some recent studies have argued, holistic face processing is thought to reflect a rigid perceptual mechanism (Richler et al., 2011b). However, the current findings show that this rigidity can be weakened by the introduction of facial movement, which optimizes face processing and allows adaptation to the task requirement. In both the current study and in Xiao et al. (2012), the task was face part recognition, whereas in the real world we encounter various face-related tasks all the time, such as identifying gender, age, or emotional status. It would therefore be worthwhile to examine the facial motion effect in various tasks. If facial motion can flexibly influence performance according to task requirements, different effects would be predicted.

It should be noted that, in the present study, we only tested recognition of static composite faces; however, face recognition in the real world almost always involves moving faces. Ideally, one should use a 2 (static vs. dynamic familiarization faces) × 2 (static vs. dynamic test faces) design to assess fully the role of motion in part-based or holistic face processing. However, at present, it is technically difficult to produce dynamic composite faces because composite faces require matching of the two face parts. For static faces, this matching can be achieved based solely on image properties, such as skin color, face feature position, and so forth. For dynamic faces, this matching has to be done based both on image properties and movement patterns. Matching movement patterns requires that the two face parts move synchronously so as to be perceived as a single face. If there is a mismatch in the moving pattern, the aligned composite faces would no longer be perceived as a whole. It is this moving pattern matching issue that challenges the current technology. Nevertheless, the present design has one advantage. All existing studies of the face composite effect have used static test faces. By using static faces as the test stimuli, we were able to compare the present findings with the existing ones. In particular, the present study must be able to replicate the robust face composite effect in the static condition to ensure that the motion effect observed was not due to specifics of our face stimuli. However, when technology improves in the future, the 2 × 2 design mentioned above should be used.

Previous face processing studies have suggested that holistic and part-based processing can occur both at the encoding and retrieval stages (Richler et al., 2008; Wenger & Ingvalson, 2003). In the current study, the fact that the smaller composite effect in the elastic motion condition remained stable despite variations in the duration of face presentation (Experiments 1–3) suggests that it likely occurs at the face information retrieval stage rather than at the encoding stage. In Experiments 1–3, faces were presented for 600, 1200, and 1800 ms, respectively; observers’ encoding time was therefore limited to these presentation durations. No differences in the motion effect were observed among any of these encoding times, suggesting that facial motion might not influence the encoding process. Otherwise, we should have found different motion effects for these experiments. The data are thus consistent with the idea that elastic motion might facilitate face information retrieval in a part-based manner. However, it should be noted that this retrieval assumption has not been directly examined, and it would be worthwhile to test it in a systematic investigation with paradigms designed to separate encoding from retrieval.

Because this work and that of Xiao et al. (2012) used only the composite effect as a measurement of part-based or holistic processing, it would be premature to conclude that elastic facial movement facilitates part-based face processing under all circumstances. Future studies should use other direct methods to further examine this relationship, for example, the part-whole paradigm (Tanaka & Farah, 1993). The part-whole effect occurs when recognition of a face part (e.g., eyes, nose or mouth) is more difficult when it is isolated than when it is presented as part of a face. Although both the part-whole effect and the composite effect index holistic face processing, recent studies have found that the two are not exactly the same, implying that they might reflect different aspects of face processing (e.g., Wang et al., 2012). More importantly, it would be worthwhile to test the difference between the two effects to determine whether the present findings could be replicated with the part-whole paradigm. Furthermore, because the part-whole paradigm focuses on a specific facial feature (e.g., eyes, nose or mouth), it would be ideal for examining the effect of facial motion on a particular facial feature, which would, in turn, contribute to a more comprehensive understanding of the role of facial movement in face processing.

Acknowledgments

This research is supported by grants from the Natural Sciences and Engineering Research Council of Canada, the National Institutes of Health (R01 HD046526), the Natural Science Foundation of China (60910006, 31028010, 31070908), the Zhejiang Provincial Natural Science Foundation of China (Y2100970), and the Zhejiang QianJiang Talent Foundation (QJC1002010 1013371-M).

Contributor Information

Naiqi G. Xiao, University of Toronto and Zhejiang Sci-Tech University

Paul C. Quinn, University of Delaware

Liezhong Ge, Zhejiang Sci-Tech University

Kang Lee, University of Toronto and University of California, San Diego

References

- Bulf H, Turati C. The role of rigid motion in newborns’ face recognition. Visual Cognition. 2010;18:504–512. doi: 10.1080/13506280903272037.

- Butcher N, Lander K, Fang H, Costen N. The effect of motion at encoding and retrieval for same- and other-race face recognition. British Journal of Psychology. 2011;102:931–942. doi: 10.1111/j.2044-8295.2011.02060.x.

- Calder A, Rhodes G, Johnson MH, Haxby JV, editors. The Oxford handbook of face perception. New York: Oxford University Press; 2011.

- Cheung OS, Richler JJ, Phillips WS, Gauthier I. Does temporal integration of face parts reflect holistic processing? Psychonomic Bulletin & Review. 2011;18:476–483. doi: 10.3758/s13423-011-0051-7.

- Freire A, Lee K, Symons LA. The face-inversion effect as a deficit in the encoding of configural information: direct evidence. Perception. 2000;29:159–170. doi: 10.1068/p3012.

- Harrison SA, Richler JJ, Mack ML, Palmeri TJ, Hayward WG, Gauthier I. The complete design lets you see the whole picture: Differences in holistic processing contribute to face-inversion and other-race effects. Journal of Vision. 2011;11:625. doi: 10.1167/11.11.625.

- Hayward WG, Crookes K, Favelle S, Rhodes G. Why are face composites difficult to recognize? Journal of Vision. 2011;11:668. doi: 10.1167/11.11.668.

- Hill H, Johnston A. Categorizing sex and identity from the biological motion of faces. Current Biology. 2001;11:880–885. doi: 10.1016/S0960-9822(01)00243-3.

- Knappmeyer B, Thornton IM, Bülthoff HH. The use of facial motion and facial form during the processing of identity. Vision Research. 2003;43:1921–1936. doi: 10.1016/S0042-6989(03)00236-0.

- Knight B, Johnston A. The role of movement in face recognition. Visual Cognition. 1997;4:265–273. doi: 10.1080/713756764.

- Lander K, Bruce V. Recognizing famous faces: Exploring the benefits of facial motion. Ecological Psychology. 2000;12:259–272. doi: 10.1207/S15326969ECO1204_01.

- Lander K, Bruce V. The role of motion in learning new faces. Visual Cognition. 2003;10:897–912. doi: 10.1080/13506280344000149.

- Lander K, Bruce V. Repetition priming from moving faces. Memory and Cognition. 2004;32:640–647. doi: 10.3758/BF03195855.

- Lander K, Chuang L. Why are moving faces easier to recognize? Visual Cognition. 2005;12:429–442. doi: 10.1080/13506280444000382.

- Lander K, Davies R. Exploring the role of characteristic motion when learning new faces. The Quarterly Journal of Experimental Psychology. 2007;60:519–526. doi: 10.1080/17470210601117559.

- Lander K, Bruce V, Hill H. Evaluating the effectiveness of pixelation and blurring on masking the identity of familiar faces. Applied Cognitive Psychology. 2001;15:101–116. doi: 10.1002/1099-0720(200101/02)15:1<101::AID-ACP697.

- Lander K, Christie F, Bruce V. The role of movement in the recognition of famous faces. Memory and Cognition. 1999;27:974–985. doi: 10.3758/BF03201228.

- Lander K, Chuang L, Wickham L. Recognizing face identity from natural and morphed smiles. The Quarterly Journal of Experimental Psychology. 2006;59:801–808. doi: 10.1080/17470210600576136.

- Lander K, Hill H, Kamachi M, Vatikiotis-Bateson E. It’s not what you say but the way you say it: Matching faces and voices. Journal of Experimental Psychology: Human Perception and Performance. 2007;33:905–914. doi: 10.1037/0096-1523.33.4.905.

- Maurer D, Le Grand R, Mondloch CJ. The many faces of configural processing. Trends in Cognitive Sciences. 2002;6:255–260. doi: 10.1016/S1364-6613(02)01903-4.

- McKone E. Configural processing and face viewpoint. Journal of Experimental Psychology: Human Perception and Performance. 2008;34:310–327. doi: 10.1037/0096-1523.34.2.310.

- Michel C, Corneille O, Rossion B. Race categorization modulates holistic face encoding. Cognitive Science. 2007;31:911–924. doi: 10.1080/03640210701530805.

- Michel C, Rossion B, Han J, Chung CS, Caldara R. Holistic processing is finely tuned for faces of one’s own race. Psychological Science. 2006;17:608–615. doi: 10.1111/j.1467-9280.2006.01752.x.

- Mondloch CJ, Maurer D. The effect of face orientation on holistic processing. Perception. 2008;37(8):1175–1186. doi: 10.1068/p6048.

- Mondloch CJ, Pathman T, Maurer D, Le Grand R, de Schonen S. The composite face effect in six-year-old children: Evidence of adult-like holistic face processing. Visual Cognition. 2007;15:564–577. doi: 10.1080/13506280600859383.

- Moscovitch M, Winocur G, Behrmann M. What is special about face recognition? Nineteen experiments on a person with visual object agnosia and dyslexia but normal face recognition. Journal of Cognitive Neuroscience. 1997;9:555–604. doi: 10.1162/jocn.1997.9.5.555.

- O’Toole AJ, Phillips PJ, Weimer S, Roark DA, Ayyad J, Barwick R, Dunlop J. Recognizing people from dynamic and static faces and bodies: dissecting identity with a fusion approach. Vision Research. 2011;51:74–83. doi: 10.1016/j.visres.2010.09.035.

- O’Toole AJ, Roark DA, Abdi H. Recognizing moving faces: A psychological and neural synthesis. Trends in Cognitive Sciences. 2002;6:261–266. doi: 10.1016/S1364-6613(02)01908-3.

- Otsuka Y, Hill H, Kanazawa S, Yamaguchi MK, Spehar B. Perception of Mooney faces by young infants: the role of local feature visibility, contrast polarity, and motion. Journal of Experimental Child Psychology. 2012;111:164–179. doi: 10.1016/j.jecp.2010.10.014.

- Otsuka Y, Konishi Y, Kanazawa S, Yamaguchi MK, Abdi H, O’Toole AJ. Recognition of moving and static faces by young infants. Child Development. 2009;80:1259–1271. doi: 10.1111/j.1467-8624.2009.01330.x.

- Pike GE, Kemp RI, Towell NA, Phillips KC. Recognizing moving faces: The relative contribution of motion and perspective view information. Visual Cognition. 1997;4:409–437. doi: 10.1080/713756769.

- Pilz KS, Thornton IM, Bülthoff HH. A search advantage for faces learned in motion. Experimental Brain Research. 2005;171:436–447. doi: 10.1007/s00221-005-0283-8.

- Ramon M, Busigny T, Rossion B. Impaired holistic processing of unfamiliar individual faces in acquired prosopagnosia. Neuropsychologia. 2010;48:933–944. doi: 10.1016/j.neuropsychologia.2009.11.014.

- Richler JJ, Cheung OS, Gauthier I. Holistic processing predicts face recognition. Psychological Science. 2011a;22:464–471. doi: 10.1177/0956797611401753.

- Richler JJ, Mack ML, Gauthier I, Palmeri TJ. Holistic processing of faces happens at a glance. Vision Research. 2009;49:2856–2861. doi: 10.1016/j.visres.2009.08.025.

- Richler JJ, Tanaka JW, Brown DD, Gauthier I. Why does selective attention to parts fail in face processing? Journal of Experimental Psychology: Learning, Memory, and Cognition. 2008;34:1356–1368. doi: 10.1037/a0013080.

- Richler JJ, Wong YK, Gauthier I. Perceptual expertise as a shift from strategic interference to automatic holistic processing. Current Directions in Psychological Science. 2011b;20:129–134. doi: 10.1177/0963721411402472.

- Roark DA, Barrett SE, Spence MJ, Abdi H, O’Toole AJ. Psychological and neural perspectives on the role of motion in face recognition. Behavioral and Cognitive Neuroscience Reviews. 2003;2:15–46. doi: 10.1177/1534582303002001002.

- Schiff W, Banka L, de Bordes Galdi G. Recognizing people seen in events via dynamic “mug shots.” The American Journal of Psychology. 1986;99:219–231. Retrieved from http://www.jstor.org/stable/1422276.

- Sergent J. Influence of task and input factors on hemispheric involvement in face processing. Journal of Experimental Psychology: Human Perception and Performance. 1985;11:846–861. doi: 10.1037/0096-1523.11.6.846.

- Tanaka JW, Gordon I. Features, configuration and holistic face processing. In: Calder A, Rhodes G, Johnson M, Haxby J, editors. The Oxford handbook of face perception. New York: Oxford University Press; 2011. pp. 177–194.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. The Quarterly Journal of Experimental Psychology A: Human Experimental Psychology. 1993;46:225–245. doi: 10.1080/14640749308401045.

- Teunisse JP, de Gelder B. Face processing in adolescents with autistic disorder: The inversion and composite effects. Brain and Cognition. 2003;52:285–294. doi: 10.1016/s0278-2626(03)00042-3.

- Thornton IM, Kourtzi Z. A matching advantage for dynamic human faces. Perception. 2002;31:113–132. doi: 10.1068/p3300.

- Thornton IM, Mullins E, Banahan K. Motion can amplify the face-inversion effect. Psihologija. 2011;44:5–22. doi: 10.2298/PSI1101005T.

- Wang R, Li J, Fang H, Tian M, Liu J. Individual differences in holistic processing predict face recognition ability. Psychological Science. 2012;23:169–177. doi: 10.1177/0956797611420575.

- Wenger MJ, Ingvalson EM. Preserving informational separability and violating decisional separability in facial perception and recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2003;29:1106–1118. doi: 10.1037/0278-7393.29.6.1106.

- Xiao NG, Quinn PC, Ge L, Lee K. Rigid facial motion influences featural, but not holistic, face processing. Vision Research. 2012;57:26–34. doi: 10.1016/j.visres.2012.01.015.

- Yin RK. Looking at upside-down faces. Journal of Experimental Psychology. 1969;81:141–145. doi: 10.1037/h0027474.

- Young AW, Hellawell D, Hay DC. Configurational information in face perception. Perception. 1987;16:747–759. doi: 10.1068/p160747.

- Young AW, Ellis AW, Flude BM, McWeeny KH, Hay DC. Face–name interference. Journal of Experimental Psychology: Human Perception and Performance. 1986;12:466–475. doi: 10.1037/0096-1523.12.4.466.

- Yovel G, Kanwisher N. Face perception: Domain specific, not process specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018.