Abstract

Previous network analyses of the phonological lexicon (Vitevitch, 2008) observed a web-like structure that exhibited assortative mixing by degree: words with dense phonological neighborhoods tend to have as neighbors words that also have dense phonological neighborhoods, and words with sparse phonological neighborhoods tend to have as neighbors words that also have sparse phonological neighborhoods. Given the role that assortative mixing by degree plays in network resilience, we examined instances of real and simulated lexical retrieval failures in computer simulations, analysis of a slips-of-the-ear corpus, and three psycholinguistic experiments for evidence of this network characteristic in human behavior. The results of the various analyses support the hypothesis that the structure of words in the mental lexicon influences lexical processing. The implications of network science for current models of spoken word recognition, language processing, and cognitive psychology more generally are discussed.

Keywords: Network science, Spoken word recognition, Mental lexicon

1. Introduction

Network science draws on work from mathematics, sociology, computer science, physics, and a number of other fields to examine complex systems, using nodes (or vertices) to represent individual entities and connections (or edges) to represent relationships between entities, thereby forming a web-like structure, or network, of the entire system. This approach has been used to examine complex systems in economic, biological, social, and technological domains (Barabási, 2009). More relevant to the cognitive sciences, this approach has also increased our understanding of connectivity in the brain (Sporns, 2010), the categorization of psychological disorders (Cramer, Waldorp, van der Maas, & Borsboom, 2010), and the cognitive processes and representations involved in human navigation (Iyengar, Madhavan, Zweig, & Natarajan, 2012), semantic memory (Griffiths, Steyvers, & Firl, 2007; Hills, Maouene, Maouene, Sheya, & Smith, 2009; Steyvers & Tenenbaum, 2005), and human collective behavior (Goldstone, Roberts, & Gureckis, 2008).

Cognitive science has appealed to networks in the past, including artificial neural networks (Rosenblatt, 1958), networks of semantic memory (Quillian, 1967), and network-like models of language (e.g., linguistic nections: Lamb, 1970; Node Structure Theory: MacKay, 1992). What separates network science from these previous network approaches is that network science is equal parts theory and methodology: “…networks offer both a theoretical framework for understanding the world and a methodology for using this framework to collect data, test hypotheses, and draw conclusions” (Neal, 2013, p. 5). Regarding methodology, network science offers a wide array of statistical and computational tools to analyze individual agents in a complex system (often referred to as the micro-level), characteristics of the overall structure of a system (often referred to as the macro-level), and various levels in between (often referred to as the meso-level). See Appendix A for definitions of various network measures that can be used to assess the different levels of a system.

One could argue, without oversimplifying the case, that psycholinguistic research has predominantly engaged in micro-level analyses to identify specific lexical characteristics—such as word frequency, age of acquisition, word length, phonotactic probability, etc.—that affect the speed and accuracy with which a word can be retrieved from the lexicon. Indeed, Cutler (1981) provided a long list of lexical characteristics that were known to affect the retrieval of a word; this list has only grown since then (e.g., Vitevitch, 2002a; Vitevitch, 2007). Although much has been learned about the micro-structure of words in the mental lexicon, what was not known then—and is still missing in mainstream approaches—is an understanding of how the macro-structure and meso-structure of the lexicon influence lexical processing. Stated more brusquely: studying the individual pieces does not help us understand how they all fit together, nor how the entire system works as a whole (for a similar critique of reductionism in other fields, see Barabási, 2012).

To better understand the complex cognitive system known as the mental lexicon, we used the mathematical tools of network science to examine how the mental lexicon might be structured at the macro-level, and how the macro-structure of the lexicon might influence lexical processing. To be sure, psycholinguists have hinted at the influence that the structure of the lexicon might have on lexical processing. Consider Forster's (1978, p. 3) statement that “[a] structured information-retrieval system permits speakers to recognize words in their language effortlessly and easily,” as well as the verbal model of lexical retrieval that he proposed.

Also consider this statement by Luce and Pisoni (1998, p. 1): “…similarity relations among the sound patterns of spoken words represent one of the earliest stages at which the structural organization of the lexicon comes into play.” What was missing, however, were the right tools to measure the structure of the lexicon. Statements by Barabási suggest that the tools of network science are ideally suited to examine the micro-, meso- and macro-structure of the mental lexicon, as well as other complex systems:

All systems perceived to be complex, from the cell to the Internet and from social to economic systems, consist of an extraordinarily large number of components that interact via intricate networks. To be sure, we were aware of these networks before. Yet, only recently have we acquired the data and tools to probe their topology, helping us realize that the underlying connectivity has such a strong impact on a system’s behavior that no approach to complex systems can succeed unless it exploits the network topology. (Barabási, 2009, p. 413)

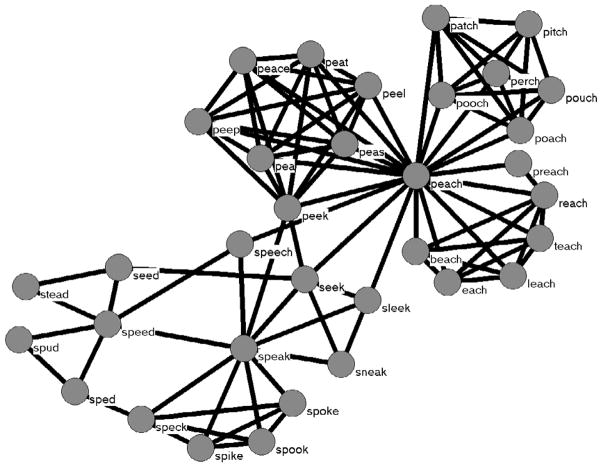

Vitevitch (2008) applied the tools of network science to the mental lexicon by creating a network with approximately 20,000 English words as nodes, and with connections between words that were phonologically similar (using the one-phoneme metric of Luce & Pisoni, 1998). Fig. 1 shows a small portion of this network (see Hills et al. (2009) and Steyvers and Tenenbaum (2005) for lexical networks based on semantic rather than phonological relationships among words).

Fig. 1.

A sample of words from the phonological network analyzed in Vitevitch (2008). The word “speech” and its phonological neighbors (i.e., words that differ by the addition, deletion or substitution of a phoneme) are shown. The phonological neighbors of those neighbors (i.e., the 2-hop neighborhood of “speech”) are also shown.
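To make the construction concrete, the following minimal Python sketch links any two word-forms that differ by the addition, deletion, or substitution of a single phoneme, and collects a word's 2-hop neighborhood as in Fig. 1. It is an illustration under stated assumptions, not the procedure of Vitevitch (2008): the transcriptions are hypothetical strings with one character per phoneme, the toy lexicon is invented, and the function names are our own. A word's degree (its neighborhood density) is simply the size of its neighbor set.

```python
from itertools import combinations


def one_phoneme_apart(a, b):
    """True if b differs from a by adding, deleting, or substituting exactly one phoneme."""
    if a == b:
        return False
    if len(a) == len(b):
        # Substitution: exactly one position differs.
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    shorter, longer = (a, b) if len(a) < len(b) else (b, a)
    # Addition/deletion: dropping one phoneme from the longer form gives the shorter one.
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))


def build_network(lexicon):
    """Map each word to the set of its phonological neighbors."""
    adj = {w: set() for w in lexicon}
    for a, b in combinations(lexicon, 2):
        if one_phoneme_apart(a, b):
            adj[a].add(b)
            adj[b].add(a)
    return adj


def two_hop_neighborhood(adj, word):
    """Neighbors of word plus the neighbors of those neighbors (word itself excluded)."""
    hop1 = adj[word]
    hop2 = set().union(*(adj[n] for n in hop1))
    return (hop1 | hop2) - {word}


# Hypothetical toy lexicon: one character per phoneme, not a real transcription scheme.
LEXICON = ["kat", "bat", "kab", "at", "kats", "dOg"]
net = build_network(LEXICON)
degree = {w: len(nbrs) for w, nbrs in net.items()}  # degree = neighborhood density
```

Because every pair of words is compared, the run time of this sketch grows quadratically with the size of the lexicon.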

Network analyses of the English phonological network revealed several noteworthy characteristics about the macro-structure of the mental lexicon. Vitevitch (2008) found that the phonological network had: (1) a large highly interconnected component, as well as many islands (words that were related to each other—such as faction, fiction, and fission—but not to other words in the large component) and many hermits, or words with no neighbors (known as isolates in the network science literature); the largest component exhibited (2) small-world characteristics (“short” average path length and, relative to a random graph, a high clustering coefficient; Watts & Strogatz, 1998), (3) assortative mixing by degree (a word with many neighbors tends to have neighbors that also have many neighbors; Newman, 2002), and (4) a degree distribution that deviated from a power-law.
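The component-level description above can be sketched as follows: a breadth-first search partitions the network into connected components; the largest is the giant component, other multi-word components are islands, and single-word components are hermits (isolates). The adjacency sets below are a hypothetical toy network, with invented one-character-per-phoneme transcriptions standing in for faction, fiction, and fission.

```python
from collections import deque


def connected_components(adj):
    """Components (sets of nodes) of an undirected graph {node: set(neighbors)}, found by BFS."""
    seen, components = set(), []
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        component, queue = {start}, deque([start])
        while queue:
            node = queue.popleft()
            for nb in adj[node]:
                if nb not in seen:
                    seen.add(nb)
                    component.add(nb)
                    queue.append(nb)
        components.append(component)
    return components


def giant_islands_hermits(adj):
    """Split a lexical network into its largest component, islands, and hermits."""
    comps = sorted(connected_components(adj), key=len, reverse=True)
    giant = comps[0] if comps else set()
    islands = [c for c in comps[1:] if len(c) > 1]
    hermits = {next(iter(c)) for c in comps if len(c) == 1}
    return giant, islands, hermits


# Hypothetical toy network (transcriptions are illustrative only).
toy = {
    "kat": {"bat", "at", "kats"}, "bat": {"kat", "at"}, "at": {"kat", "bat"}, "kats": {"kat"},
    "fAkSn": {"fIkSn"}, "fIkSn": {"fAkSn", "fISn"}, "fISn": {"fIkSn"},
    "dOg": set(),
}
giant, islands, hermits = giant_islands_hermits(toy)
giant_share = len(giant) / len(toy)  # proportion of all words in the giant component
```

In this toy network the giant component holds half of the words, which is the kind of proportion that distinguishes the phonological networks discussed next from networks in other domains.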

Arbesman, Strogatz, and Vitevitch (2010b) found the same constellation of structural features in phonological networks of Spanish, Mandarin, Hawaiian, and Basque, and elaborated on the significance of these characteristics. For example, the giant component of the phonological networks contained, in some cases, less than 50% of the nodes, whereas networks observed in other domains often have giant components that contain 80–90% of the nodes. Arbesman et al. (2010b) also noted that assortative mixing by degree is found in networks in other domains. However, typical values of assortative mixing by degree in social networks range from .1 to .3, whereas values in the phonological networks examined by Arbesman et al. were as high as .7.

Finally, most of the languages examined by Arbesman et al. exhibited degree distributions fit by truncated power-laws (although the degree distribution for Mandarin was better fit by an exponential function). Networks with degree distributions that follow a power-law are known as scale-free networks. Scale-free networks have attracted attention because of certain structural and dynamic properties, such as remaining relatively intact in the face of random failures in the system, yet being vulnerable when attacks are targeted at well-connected nodes (Albert & Barabási, 2002; Albert, Jeong, & Barabási, 2000). See Amaral, Scala, Barthélémy, and Stanley (2000) for the implications for the dynamic properties of networks whose degree distributions deviate from a power-law in certain ways.

It is important to note that Kello and Beltz (2009) demonstrated that certain characteristics they observed in the networks of several real languages (English, Dutch, German, Russian, and Spanish) were unlikely to arise simply because connections between nodes were based on substring relations (like the one-phoneme metric used to connect nodes in the networks examined in Vitevitch (2008) and Arbesman, Strogatz, and Vitevitch (2010a, 2010b)). Kello and Beltz used methods like those of Mandelbrot (1953) and Miller (1957) to create variable-length random letter strings, and found that the degree distribution of a network composed of such overlapping items differed radically from the degree distributions of networks containing words from real languages.
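As a rough illustration of the random-typing idea associated above with Mandelbrot (1953) and Miller (1957), the sketch below generates distinct variable-length strings. After each letter, the word ends with probability `p_space`; otherwise another letter is drawn uniformly from `alphabet`. The alphabet, `p_space`, and seed are arbitrary assumptions, not the settings used by Kello and Beltz (2009).

```python
import random


def random_typing_lexicon(n_words, alphabet="abcde", p_space=0.2, seed=0):
    """Generate n_words distinct strings by 'random typing' (parameters are illustrative)."""
    rng = random.Random(seed)
    lexicon = set()
    while len(lexicon) < n_words:
        word = rng.choice(alphabet)  # every word has at least one letter
        while rng.random() >= p_space:  # keep typing until the space bar is 'hit'
            word += rng.choice(alphabet)
        lexicon.add(word)
    return sorted(lexicon)


random_lexicon = random_typing_lexicon(50, seed=1)
```

Linking such strings with the same one-symbol edit metric used for real words would produce the kind of comparison network whose degree distribution Kello and Beltz contrasted with those of real languages.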

Similarly, Gruenenfelder and Pisoni (2009) created three pseudolexicons of randomly generated “words.” Only the pseudolexicon containing items that closely resembled real words in natural languages—items that varied in length, with the proportion of items at each length matching the proportion found in English, and with phonotactic-like constraints on the ordering of consonants and vowels—produced a network structure that began to resemble the network structure observed in natural languages. Thus, networks of word-forms from natural languages capture important information at the micro-, meso-, and macro-levels about the structural relations among those word-forms, above and beyond what might be expected from a network formed from nodes that simply share overlapping features.

One of the fundamental assumptions of network science is that the structure of a network influences the dynamics of that system (Watts & Strogatz, 1998). A certain process might operate very efficiently on a network with a certain structure. However, in a network with the same number of nodes and the same number of connections—but with those nodes connected in a slightly different way—the same process might be woefully inefficient. If the structure of a network influences the dynamics of the system, then the structure among phonological word-forms in the mental lexicon observed by Vitevitch (2008) should influence certain language-related processes. Indeed, psycholinguistic evidence demonstrates that three network features at the micro-level of the network—degree, clustering coefficient, and closeness centrality of individual words—influence a number of language-related processes.

Degree refers to the number of connections incident to a given node. In the context of a phonological network like that of Vitevitch (2008), degree corresponds to the number of word-forms that sound similar to a given word. Many psycholinguistic studies have shown that degree—better known in the psycholinguistic literature as phonological neighborhood density—influences spoken word recognition (Luce & Pisoni, 1998), spoken word production (Vitevitch, 2002b), word-learning (Charles-Luce & Luce, 1990; Storkel, 2004), and phonological short-term memory (Roodenrys, Hulme, Lethbridge, Hinton, & Nimmo, 2002). Our discussion of degree is not meant to suggest that our use of network science to examine the mental lexicon led us to discover something new (i.e., the already well-known effects of phonological neighborhood density on processing). Rather, we discuss degree and neighborhood density to show an interesting point of convergence between conventional psycholinguistics and network science. This conceptual convergence inspired us to examine how other network science measures of the structure of the mental lexicon might influence various language-related processes.

One of those other network science measures is the clustering coefficient, which—in the context of a phonological network like that in Vitevitch (2008)—measures the extent to which the neighbors of a given node are also neighbors of each other. We initially explored the influence of the clustering coefficient on processing because it provides a measure of the “internal structure” of a phonological neighborhood. When the clustering coefficient is low, few of the neighbors of a target node are neighbors of each other. When the clustering coefficient is high, many neighbors of a target node are also neighbors of each other. Given the well-known and widely replicated effects of degree/neighborhood density, we reasoned that we would likely be able to observe influences of the internal structure of a phonological neighborhood on processing. Indeed, the results of several studies—using a variety of conventional psycholinguistic and memory tasks, as well as computer simulations—demonstrated that clustering coefficient influences language-related processes like spoken word recognition, word production, retrieval from long-term memory, and redintegration in short-term memory (Chan & Vitevitch, 2009, 2010; Vitevitch, Chan, & Roodenrys, 2012; Vitevitch, Ercal, & Adagarla, 2011).
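The local clustering coefficient described above is the number of links among a node's neighbors divided by the number of possible links among them, k(k - 1)/2 for a node of degree k. A minimal Python sketch follows; the toy neighborhood and its transcriptions are hypothetical, and assigning 0 to nodes with fewer than two neighbors is a common convention rather than something specified in the text.

```python
from itertools import combinations


def local_clustering(adj, node):
    """Fraction of pairs of node's neighbors that are also neighbors of each other."""
    nbrs = adj[node]
    k = len(nbrs)
    if k < 2:
        return 0.0  # convention: clustering is 0 when no neighbor pairs exist
    linked_pairs = sum(1 for u, v in combinations(nbrs, 2) if v in adj[u])
    return linked_pairs / (k * (k - 1) / 2)


# Hypothetical toy neighborhood: of kat's three neighbors, only bat and at are linked.
neighborhood = {
    "kat": {"bat", "at", "kab"},
    "bat": {"kat", "at"},
    "at": {"kat", "bat"},
    "kab": {"kat"},
}
```

Here kat has a degree of 3 but a clustering coefficient of only 1/3, which illustrates why degree and clustering coefficient can vary independently.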

Although degree/neighborhood density and clustering coefficient are conceptually similar, it is important to note that they are, by definition, distinct concepts. Furthermore, Vitevitch et al. (2012) demonstrated that degree and clustering coefficient are not correlated in the phonological network of English. Moreover, Vitevitch et al. (2011) observed in a computer simulation independent effects of degree/neighborhood density and of clustering coefficient on the diffusion of activation in their network representation of the lexicon.

Using a game called word-morph, in which participants were given a word and asked to transform it into a different, specified word by changing one letter at a time, Iyengar et al. (2012) demonstrated the influence of another network science measure—closeness centrality—on a language-related process. Closeness centrality is one type of centrality measure (others being degree centrality, betweenness centrality, reach centrality, and eigenvector centrality) that attempts to capture in some way which nodes are “important” in the network. Closeness centrality assesses how far away other nodes in the network are from a given node.1 A node with high closeness centrality is very close to many other nodes in the network, requiring that, on average, only a few links be traversed to reach another node. A node with low closeness centrality is, on average, far away from other nodes in the network, requiring the traversal of many links to reach another node.
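Closeness centrality as described above can be computed with a breadth-first search from the node of interest. In the sketch below, closeness is the number of other reachable nodes divided by the sum of their shortest-path distances. For a connected network this equals the usual definition, (n - 1) divided by the summed distances. Ignoring nodes in other components is an assumption made here for simplicity. The five-node chain is a hypothetical example.

```python
from collections import deque


def bfs_distances(adj, source):
    """Shortest-path (hop) distances from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nb in adj[node]:
            if nb not in dist:
                dist[nb] = dist[node] + 1
                queue.append(nb)
    return dist


def closeness_centrality(adj, node):
    """(number of other reachable nodes) / (sum of their distances); 0 for an isolate."""
    dist = bfs_distances(adj, node)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total > 0 else 0.0


# Hypothetical chain a - b - c - d - e: the middle node is closest to all others.
chain = {"a": {"b"}, "b": {"a", "c"}, "c": {"b", "d"}, "d": {"c", "e"}, "e": {"d"}}
scores = {n: closeness_centrality(chain, n) for n in chain}
```

The middle node c reaches the other four nodes in a total of 6 steps (closeness 4/6), whereas the end node a needs 10 (closeness 4/10).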

Iyengar et al. (2012) found that words with high closeness centrality in a network of the orthographic lexicon allowed participants in the word-morph game to quickly transform one word into another. For example, asked to “morph” the word bay into the word egg participants might have changed bay into bad–bid–aid–add–ado–ago–ego and finally into egg. Similarly, when asked to “morph” the word ass into the word ear participants might have changed ass into ask–ark–arm–aim–aid–bid–bad–bar and finally into ear. Once participants in this task identified certain “landmark” words in the lexicon—words that had high closeness centrality, like the word aid in the examples above—the task of navigating from one word to another became trivial, enabling the participants to solve subsequent word-morph puzzles very quickly. The time it took to find a solution dropped from 10–18 min in the first 10 games, to about 2 min after playing 15 games, to about 30 s after playing 28 games, because participants would “morph” the start-word (e.g., bay or ass) into one of the landmark words that were high in closeness centrality (e.g., aid), then morph the landmark-word into the desired end-word (e.g., egg or ear). Although this task is a contrived word-game rather than a conventional psycholinguistic task that assesses on-line lexical processing, the results of Iyengar et al. (2012) nevertheless illustrate further how the tools of network science can be used to provide insights about cognitive processes and representations.
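The landmark strategy can be mimicked using only the words in the examples above. The sketch assumes, as those examples suggest, that each move substitutes exactly one letter, and that the listed words are the only ones available; the actual game drew on a much larger orthographic lexicon. Breadth-first search finds a shortest chain, and routing through the landmark aid reproduces the chains given in the text. The function names are hypothetical.

```python
from collections import deque
from itertools import combinations


def morph_graph(words):
    """Link equal-length words that differ by exactly one letter substitution."""
    adj = {w: set() for w in words}
    for a, b in combinations(words, 2):
        if len(a) == len(b) and sum(x != y for x, y in zip(a, b)) == 1:
            adj[a].add(b)
            adj[b].add(a)
    return adj


def shortest_morph(adj, start, end):
    """Breadth-first search for a shortest chain of one-letter changes; None if unreachable."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        word = queue.popleft()
        if word == end:
            path = []
            while word is not None:
                path.append(word)
                word = parent[word]
            return path[::-1]
        for nb in sorted(adj[word]):  # sorted for reproducible tie-breaking
            if nb not in parent:
                parent[nb] = word
                queue.append(nb)
    return None


def via_landmark(adj, start, landmark, end):
    """Morph start into a landmark word, then morph the landmark into end."""
    first = shortest_morph(adj, start, landmark)
    second = shortest_morph(adj, landmark, end)
    if first is None or second is None:
        return None
    return first + second[1:]


WORDS = ["bay", "bad", "bid", "aid", "add", "ado", "ago", "ego", "egg",
         "ass", "ask", "ark", "arm", "aim", "bar", "ear"]
graph = morph_graph(WORDS)
```

In this small network, aid also has the highest closeness centrality, so both puzzles are solved in the minimum number of moves by passing through it.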

Our review of previous studies that examined how degree, clustering coefficient, and closeness centrality influence various language-related processes clearly shows that the tools of network science can be used to provide novel insights about cognitive processes and representations. Note, however, that these metrics assess the micro-level of a system, providing information about individual nodes rather than the system as a whole. Therefore, one could argue that these applications of network science to psycholinguistics are also guilty of the charge we leveled against mainstream psycholinguistic research: the myopic focus of mainstream psycholinguistics on the trees (i.e., individual characteristics of words) has prevented mainstream psycholinguistics from seeing the forest (i.e., the mental lexicon as a system). To illustrate further how the tools of network science can be used to provide novel insights about cognitive processes and representations (and to acquit ourselves of this crime of perspective), we examined in the present study how the macro-level measure from network science known as assortative mixing by degree might influence language-related processing.

To define assortative mixing by degree, we will consider each component of this term in turn. Mixing describes a preference for how nodes in a network tend to connect to each other. This preference can be based on a variety of characteristics. For example, in a social network, mixing may occur based on age, gender, race, etc. Assortative mixing (a.k.a. homophily) means that “like goes with like.” Again using the example of a social network, people of similar age tend to connect to each other. There are, of course, instances in which younger people are friends with older people, but overall there is a general tendency in the network for people of similar ages to be connected. Assortative mixing can be contrasted with disassortative mixing, which means that dissimilar entities will tend to be connected. For example, in a network of a heterosexual dating website, males and females would be connected, but not males and males, nor females and females. It is also possible that no mixing preferences are exhibited in a network.

In addition to gender or age, degree, or the number of edges incident on a vertex, is another way in which nodes in a network may exhibit a preference for mixing. Therefore, assortative mixing by degree refers to the tendency in a network for a highly connected node to be connected to other highly connected nodes (Newman, 2002). In other words, when looking at the network overall, there is a positive correlation between the degree of a node and the degree of its neighbors. Assortative mixing by degree is often observed in social networks (Newman, 2002). Note that a negative correlation between the degree of a node and the degree of its neighbors is also possible, and is known as disassortative mixing by degree. Disassortative mixing by degree is often found in networks representing technological systems, like the World Wide Web (Newman, 2002). Networks with a correlation of zero between the degree of a node and the degree of its neighbors are also possible, and also have been observed (Newman, 2002).
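Following Newman (2002), assortative mixing by degree can be quantified as the Pearson correlation between the degrees of the nodes at either end of each edge. The minimal sketch below counts every edge in both directions so that the coefficient is symmetric. Using degree rather than Newman's "remaining" degree (degree minus one) does not change the value, because a correlation is unaffected when every value is shifted by one. The two toy graphs are hypothetical: a complete graph on four nodes plus a separate pair (perfectly assortative, r = 1) and a star (perfectly disassortative, r = -1).

```python
def degree_assortativity(adj):
    """Pearson correlation between the degrees at the two ends of every edge.

    Each undirected edge is counted in both directions. The graph needs at least one
    edge and some variation in degree; otherwise the correlation is undefined.
    """
    xs, ys = [], []
    for u, nbrs in adj.items():
        for v in nbrs:
            xs.append(len(adj[u]))
            ys.append(len(adj[v]))
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


# Hub-to-hub and leaf-to-leaf only: every node links to nodes of its own degree.
k4 = {a: {b for b in "abcd" if b != a} for a in "abcd"}
assortative = {**k4, "x": {"y"}, "y": {"x"}}
# One hub linked only to low-degree leaves.
star = {"hub": {"l1", "l2", "l3", "l4"}, **{leaf: {"hub"} for leaf in ["l1", "l2", "l3", "l4"]}}
```

Real networks fall between these extremes; the values of .1 to .3 for social networks and up to .7 for phonological networks cited above are values of this coefficient.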

The relatively high values of assortative mixing by degree in the phonological networks examined by Arbesman et al. (2010b)—values as high as .7 compared to values ranging from .1 to .3 in social networks—demand additional investigation. One possibility is that the values observed by Arbesman et al. are just a statistical quirk or a mathematical curiosity. Indeed, there are many interesting relationships and correlations that have been observed among words or in language more generally. Consider Menzerath’s law, which states that the larger a particular unit is, the smaller its constituents will be, such that a long word will be composed of small or simple syllables (such as CVs or Vs), whereas a short word will be composed of a large or more complex syllable (such as CCCVCCC as in the monosyllabic word, strengths). The relationship between unit and constituent size known as Menzerath’s law is not only observed in language, but has also been observed in music and genomes (Ferrer-i-Cancho, Forns, Hernandez-Fernandez, Bel-Enguix, & Baixeries, 2012).

Consider also Martin’s Law (the relationship between the number of definitions of a word and the generality of those definitions), as well as the relationship between phoneme inventory and entropy, and the relationship between polysemy and word length. Finally, consider the numerous observations by Zipf (1935), including the correlation between word-length and word frequency (long words tend to occur less often in the language; see also Baayen, 1991, 2001, 2010), and the observation that high frequency words tend to have many phonological neighbors (e.g., Frauenfelder, Baayen, Hellwig, & Schreuder, 1993; Landauer & Streeter, 1973). Given the plethora of relationships observed among words and in language more generally (and sometimes even in other domains), the observation of assortative mixing by degree in Arbesman et al. (2010b) could simply be another statistical curiosity.

We grant that it is interesting to discover and document the presence of such relationships in and across languages. We further grant that much has been learned by studying how the variables that contribute to those relationships influence processing. Consider all of the work investigating the influence of word frequency on various language and memory processes (dating back at least to Lester, 1922), studies of word-length on processing (e.g., Vitevitch, Stamer, & Sereno, 2008), all of the work on orthographic and phonological neighborhood density on processing (e.g., Laxon, Coltheart, & Keating, 1988; Pisoni, Nusbaum, Luce, & Slowiaczek, 1985), and the work on neighborhood frequency on processing (e.g., Grainger, O’Regan, Jacobs, & Segui, 1989). What appears to be lacking are studies that demonstrate that relationships between variables—such as the observation that high frequency words tend to have many phonological neighbors (e.g., Frauenfelder et al., 1993; Landauer & Streeter, 1973)—directly influence processing. From the perspective of cognitive psychology, it is important to demonstrate that statistical relationships between variables are more than interesting mathematical quirks. That is, one must demonstrate that such statistical relationships influence cognitive processing in some way. In the work that follows, we will examine how the macro-level measure of a network known as assortative mixing by degree might influence certain aspects of language-related processing.

Note that there have been many studies on Menzerath’s law, Martin’s law, and other relationships among words in the language, such as the general relationships observed about word frequency (e.g., Baayen, 1991, 2001, 2010; Zipf, 1935), but most of the previous studies of these statistical relationships attempted to determine the origin of the global pattern observed in the language. To be clear, the goal of the present work is not to determine the origin of assortative mixing by degree in the phonological lexicon, or to propose a model that could generate such a macro-level pattern in the language (for such work see the stochastic model described in Baayen (1991)). Instead, we take the observations of Arbesman et al. (2010b) as a given: assortative mixing by degree exists in the mental lexicon. The goal of the present research is to determine if this statistical relationship observed at the macro-level of the lexicon influences cognitive processing in some way.

Furthermore, given the work of Keller (2005) and others, we caution against the practice of “inverse inference,” that is, inferring from an observed pattern in the data back to the model that might have generated it. Keller (2005) criticized the once-common practice in network science of observing a power-law degree distribution (N.B. Zipfian distributions of word frequency follow a power law) and then inferring that the network was generated by a particular mechanism (i.e., growth with preferential attachment in the case of scale-free networks). To illustrate the problems of inversely inferring the mechanism that produced the observed distribution, Keller discussed the work of Herbert Simon and a number of others showing that such power-law distributions can be produced by a large number of algorithms/mechanisms. Given that there are often many ways to produce a particular pattern in the data, and often no way to discern which of those mechanisms is actually responsible for the observed data, the field of network science has essentially abandoned the practice of inferring the generating mechanism when a scale-free degree distribution is observed.

Again, regardless of how assortative mixing by degree came to be in the lexicon, the goal of the present research is to determine if this statistical relationship observed at the macro-level of the lexicon influences cognitive processing in some way. We begin by considering how the pattern of mixing influences processing in other domains. Mathematical simulations suggest that the overall pattern of mixing exhibited in a network has implications for the ability of the system to maintain processing in the face of damage to the network, a concept somewhat reminiscent of graceful degradation in cognitive science. Newman (2002) found that network connectivity (i.e., the existence of paths between pairs of nodes) was easier to disrupt (by a factor of five to ten) by removing nodes with high-degree in networks with disassortative mixing than in networks with assortative mixing by degree. In other words, networks with assortative mixing by degree are better able to maintain processing pathways than networks with disassortative mixing by degree in the face of targeted attacks to the system.
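The attack simulations described above can be sketched in a few lines: remove a fraction of the nodes, either at random or in order of decreasing degree, and measure the size of the largest component that remains. This is a simplified sketch, not the procedure of Newman (2002), Albert et al. (2000), or Arbesman et al. (2010b). In particular, the targeted attack ranks nodes once by their initial degree rather than recomputing degree after each removal, and the star graph is a hypothetical example.

```python
import random
from collections import deque


def largest_component_size(adj, removed=frozenset()):
    """Size of the largest connected component after deleting the nodes in `removed`."""
    seen, best = set(removed), 0  # removed nodes are treated as already visited
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        size, queue = 0, deque([start])
        while queue:
            node = queue.popleft()
            size += 1
            for nb in adj[node]:
                if nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        best = max(best, size)
    return best


def attack(adj, fraction, targeted, seed=0):
    """Remove a fraction of nodes (highest-degree first, or at random) and return the
    size of the largest remaining component."""
    n_remove = round(fraction * len(adj))
    if targeted:
        order = sorted(adj, key=lambda v: len(adj[v]), reverse=True)
    else:
        order = list(adj)
        random.Random(seed).shuffle(order)
    return largest_component_size(adj, set(order[:n_remove]))


# Hypothetical star: removing the single hub shatters the network.
STAR = {"hub": {f"l{i}" for i in range(1, 6)}, **{f"l{i}": {"hub"} for i in range(1, 6)}}
```

Plotting the largest component size against the fraction removed, for random and targeted attacks, gives the kind of resilience comparison discussed in the following paragraph.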

More directly related to language, Arbesman et al. (2010b) examined network resilience in response to simulated attacks on nodes in a network of English words. Recall that English and a variety of other languages exhibited relatively high values of assortative mixing by degree (.5–.8 in the language networks examined by Arbesman et al., whereas .1–.3 is typically observed in social networks). Arbesman et al. observed similar and high levels of resilience in connectivity in the language network when either a random attack or an attack targeting highly connected nodes was carried out. This pattern of network resiliency differs from that typically seen in other networks, which tend to be resilient to random attacks on the network, but eventually succumb to failure when highly connected nodes are targeted for removal (e.g., Albert et al., 2000; Newman, 2002). Given the high levels of assortative mixing by degree, and high levels of resiliency to both random and targeted attacks observed in the language networks examined by Arbesman et al. (2010b), it is not unreasonable to suggest that the assortative mixing by degree found in the mental lexicon may contribute to the resilience of some language-related processes.

While it is unclear what the equivalent of a targeted attack on a phonological network might be in real life, or how a word (i.e., node) could be permanently removed from the network, there are instances, as in the tip-of-the-tongue phenomenon (e.g., Brown & McNeill, 1966) and in certain forms of aphasia, in which words are temporarily “unavailable” during lexical retrieval. Given the influence that assortative mixing by degree has in maintaining network integrity, and the large values of assortative mixing by degree observed in several languages, we reasoned that the influence of assortative mixing by degree on lexical processing would be most easily observed when lexical retrieval fails.

Examining the influence of assortative mixing by degree during failed lexical retrieval complements previous studies that examined the influence of other network characteristics on processing in a number of ways. First, previous studies focused on micro-level measures of the network (e.g., degree, clustering coefficient, closeness centrality of individual nodes), whereas the present study focuses on the macro-level characteristic of mixing. Second, previous studies focused predominantly on quickly retrieving (or navigating to) a desired lexical item, whereas in the present study we focus on instances of failed lexical retrieval (a topic that is, as described below, relatively less examined in psycholinguistics). Finally, previous studies and network analyses have, in many cases, simply reported statistical measures of the network or the language in general. In the present case, we, like a few notable exceptions in the previous literature, are examining how an observed structure in the mental lexicon might influence cognitive processing (see Borge-Holthoefer and Arenas (2010) for the importance of tying measures of language networks to cognitive processing).

Investigations of various types of lexical retrieval failures in the area of speech production—such as slips of the tongue, malapropisms, and tip-of-the-tongue experiences—played an important role in increasing our understanding of the process of speech production. Arguably, without the pioneering work of Fromkin (1971), Fay and Cutler (1977), Brown and McNeill (1966), and others, including work examining on-line processing with reaction-time-based tasks (Levelt, Roelofs, & Meyer, 1999), our understanding of the process of speech production would have been significantly impeded. Although investigations of speech production errors continue to play a crucial role in increasing our understanding of speech production, comparatively less work has investigated errors during speech perception. Like speech production errors, perceptual errors of speech—such as mondegreens and slips of the ear—can be examined scientifically rather than anecdotally (see the pioneering work summarized in Bond (1999)). As in the case of models of speech production, perceptual speech errors have the potential to inform models of speech perception and spoken word recognition.

Although models of spoken word recognition have existed for several decades, and some models have undergone significant revisions in that time, none of the widely-accepted models of spoken word recognition have been used to predict what will happen when lexical retrieval fails (NAM: Luce & Pisoni, 1998; PARSYN: Luce, Goldinger, Auer, & Vitevitch, 2000; Shortlist: Norris, 1994; Norris & McQueen, 2008; Cohort: Gaskell & Marslen-Wilson, 1997; Marslen-Wilson, 1987; TRACE: McClelland & Elman, 1986). Given the basic assumptions of these models (multiple word-forms that resemble the acoustic–phonetic input are activated and then compete with each other for recognition), how the system recovers from failed lexical retrieval may have seemed too obvious to require comment: one of the other partially-activated competitors will be retrieved if the desired target word cannot, for some reason, be retrieved.

Alternatively, many models of spoken word recognition are designed such that the desired target word wins the competition for recognition (e.g., Shortlist: Norris, 1994; Norris & McQueen, 2008; Cohort: Gaskell & Marslen-Wilson, 1997; Marslen-Wilson, 1987; TRACE: McClelland & Elman, 1986). That is, models of spoken word recognition are not designed to make errors.² A similar state of affairs is found in models of spoken word production: one class of models addresses chronometric aspects of speech production but neither produces errors nor makes predictions about them (e.g., Levelt, Roelofs, & Meyer, 1999), whereas another class addresses speech production errors but makes no predictions about chronometric aspects of speech production (e.g., Dell, 1986, 1988).

Regardless of the reason for this silence, the lack of attention to recognition errors is unfortunate because, as Norman (1981, p. 13) reminds us, “By examining errors, we are forced to demonstrate that our theoretical ideas can have some relevance to real behavior.” To demonstrate that the theoretical idea we put forward (that the macro-level structure of the mental lexicon influences language-related processes) has some relevance to real behavior, we searched simulated and real instances of failed lexical retrieval for a “cognitive footprint” (Waller & Zimbelman, 2003), or indirect evidence, of assortative mixing by degree in the mental lexicon. If the macro-level structure of the lexicon observed by Vitevitch (2008), a positive correlation between the degree of a given word and the degree of its neighbors, has consequences for human behavior, then when lexical retrieval fails we would expect a positive correlation between the degree of the target word and the degree of the “erroneously” retrieved word. In the present study we used computer simulations, a corpus analysis of actual speech perception errors, and several laboratory-based tasks that captured relevant aspects of failed lexical retrieval to look for such a cognitive footprint of assortative mixing by degree.

The focus of the present study, demonstrating that the macro-structure of the phonological lexicon (as measured by assortative mixing by degree) influences cognitive processing, also contrasts with many previous studies that observed statistical relationships among words and in language more generally. Consider the correlation between the frequency with which a word occurs and the number of phonological neighbors it has: high-frequency words tend to have many phonological neighbors, whereas low-frequency words tend to have few (e.g., Frauenfelder et al., 1993; Landauer & Streeter, 1973). That relationship holds between two different lexical characteristics of the same word, whereas we examined a relationship between the same lexical characteristic (i.e., degree/neighborhood density) in two different words (albeit words that are phonological neighbors of each other). More importantly, the present study examined how this relationship might influence cognitive processing. Few (if any) studies have demonstrated an influence on cognitive processing of global patterns observed in language, such as the various relationships observed by Zipf (1935), which makes the present study a significant contribution to the field of cognitive psychology rather than simply a quantitative observation about the constituents of language.

2. Simulation 1 using jTRACE

Lewandowsky (1993) described several rewards (as well as some hazards) of using computer simulations to explore new ideas in cognitive psychology. Among the benefits is that simulations provide a low-cost way to examine novel predictions about human behavior. As a preliminary, low-cost test of the hypothesis that assortative mixing by degree influences some aspect of lexical processing, we conducted a simulation using jTRACE (Strauss, Harris, & Magnuson, 2007), an updated implementation of the TRACE model of spoken word recognition (McClelland & Elman, 1986). jTRACE/TRACE shares many features with (but also differs in important ways from) other widely accepted models of spoken word recognition (e.g., Shortlist, NAM, and Cohort).

Because the design characteristics of jTRACE make actual retrieval errors rare, if not impossible, we “simulated” a failure in lexical retrieval by presenting the model with a target item and taking the next most active lexical item in the set of competitors as the retrieval error. We recognize that treating the next most active competitor in jTRACE as the item that would be erroneously retrieved if lexical retrieval failed may be a less than ideal way of simulating failed lexical retrieval in the model. However, in the absence of a computational model of speech perception errors (the word recognition equivalent of speech production error models like those of Dell, 1986, 1988), we found this method acceptable as a preliminary test of our hypothesis. The outcome of this preliminary test could help us determine whether further investigation of psycholinguistic behavior in human participants was warranted.

Note that our decision to use jTRACE should not be seen as an endorsement of the TRACE model (McClelland & Elman, 1986). Indeed, our previous work demonstrated that jTRACE/TRACE (and Shortlist) could not account for the influence of clustering coefficient on spoken word recognition (Chan & Vitevitch, 2009). Rather, our decision to use this computer model in our simulation was based solely on practical concerns: it was the only computer model of spoken word recognition that was available in a ready-to-use format, with an easy-to-use interface, and it would enable us to test our hypothesis in a low-cost setting. Therefore, we wish to set aside a number of theoretical issues that the psycholinguistics literature has (hotly) debated over the years—the existence of feedback connections between levels of representation, the required number of levels of representation, the nature of those representations, etc.—and instead focus on the advantages of using computational models to explore novel hypotheses, as suggested by Lewandowsky (1993).

If the macro-level structure of the phonological network observed by Vitevitch (2008; see also Arbesman et al., 2010b) influences lexical processing, then we should see evidence of this influence in the output of a model of spoken word recognition that captures certain important aspects of human behavior. Specifically, we should observe a positive correlation between the degree/neighborhood density of the target word and the degree/neighborhood density of the erroneously retrieved word (i.e., the next most active lexical item in jTRACE).

2.1. Method

2.1.1. Stimuli

We used the 28 words with high clustering coefficient and the 28 words with low clustering coefficient from the jTRACE simulation in Chan and Vitevitch (2009). These items were monosyllabic words, each containing three phonemes, drawn from initial_lexicon (one of the lexicons available for use in jTRACE); the two sets were comparable in neighborhood density, as reported in Chan and Vitevitch (2009). Because jTRACE previously failed to produce processing differences between these words in an examination of clustering coefficient (Chan & Vitevitch, 2009), we reasoned that they would pose a significant challenge for the model in the present simulation investigating the influence of assortative mixing by degree on processing. If effects of assortative mixing by degree on processing are observed in the present simulation, our confidence that similar results might be observed in human behavior increases.

2.1.2. Procedure

The default parameter settings of the jTRACE computer model (Strauss et al., 2007) were used, including initial_lexicon as the lexicon. Importantly, the network structure of the words in initial_lexicon exhibits the same characteristics as those observed by Vitevitch (2008) for a larger sample of English words; this is not surprising, because initial_lexicon was designed to reflect key characteristics of the English language. As in Chan and Vitevitch (2009), we allowed the target words to reach maximal activation. We then identified the next most active item from the set of lexical competitors, assuming that this second-most active item would be the item retrieved from the lexicon if lexical retrieval of the target word failed. The target words and the next most active items (i.e., the “perceptual errors”) are listed in Appendix B.
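The selection rule described above can be sketched as follows. This is a minimal sketch, not jTRACE code: it assumes the activation of every lexical item at the moment of inspection has already been exported into a mapping from item to activation, and both that input format and the function name `simulated_retrieval_error` are hypothetical. Excluding the target before taking the maximum also returns the highest-activated incorrect item whenever the target is not itself the top-ranked item.

```python
from typing import Mapping


def simulated_retrieval_error(target: str, activations: Mapping[str, float]) -> str:
    """Return the most active lexical item other than the target.

    `activations` maps each lexical item to its activation at the chosen
    time slice (a hypothetical export format, not jTRACE's own API).
    """
    # Drop the target so that only competitors remain.
    competitors = {item: act for item, act in activations.items() if item != target}
    if not competitors:
        raise ValueError("no competitors other than the target")
    # The highest-activated competitor stands in for the erroneously
    # retrieved word; ties go to the first item encountered.
    return max(competitors, key=lambda item: competitors[item])


# Usage with made-up activation values (one character per jTRACE phoneme):
acts = {"b^t": 0.71, "p^t": 0.42, "b^d": 0.38, "^t": 0.05}
print(simulated_retrieval_error("b^t", acts))  # p^t
```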

2.2. Results and discussion

If the macro-structure of the mental lexicon (as assessed by the network science measure of assortative mixing by degree) influences lexical processing, then we should see evidence of this influence in the output of jTRACE. Specifically, we should observe a positive correlation between the degree/neighborhood density of the target word and the degree/neighborhood density of the erroneously retrieved word (i.e., the next most active lexical item in jTRACE). As described by Newman (2002, p. 1), “…the Pearson correlation coefficient of the degrees at either ends of an edge….” is the statistic used to assess mixing by degree in the network science literature. We found a significant, positive relationship (r(56) = +.46, p < .001) between the degree of the target items (mean = 5.28 neighbors) and the degree of the next most active lexical item in jTRACE (mean = 4.68 neighbors).
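Newman's statistic can be computed directly from an edge list. The sketch below is one straightforward plain-Python way to do so (the function name and the edge-list input are illustrative assumptions, not the authors' code): each undirected edge contributes both of its orientations, so the result is the Pearson correlation of the degrees at either end of an edge. Newman states the coefficient in terms of remaining degree (degree minus one), but because a Pearson correlation is unchanged by subtracting a constant, using raw degree gives the same value.

```python
import math
from typing import Dict, Iterable, List, Tuple


def degree_assortativity(edges: Iterable[Tuple[str, str]]) -> float:
    """Pearson correlation of the degrees at either end of each edge.

    Assumes each undirected edge is listed once and there are no self-loops.
    """
    edge_list = list(edges)
    if not edge_list:
        raise ValueError("empty network")
    degree: Dict[str, int] = {}
    for u, v in edge_list:
        degree[u] = degree.get(u, 0) + 1
        degree[v] = degree.get(v, 0) + 1

    # Count each edge in both directions so the coefficient is symmetric.
    xs: List[int] = []
    ys: List[int] = []
    for u, v in edge_list:
        xs += [degree[u], degree[v]]
        ys += [degree[v], degree[u]]

    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("undefined when every edge joins nodes of one degree")
    return cov / math.sqrt(var_x * var_y)


# A star (one hub, three leaves) is maximally disassortative.
star = [("hub", "a"), ("hub", "b"), ("hub", "c")]
print(degree_assortativity(star))  # -1.0
```

A positive value indicates assortative mixing (high-degree words linked to high-degree words), a negative value disassortative mixing.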

Note that some of the words (n = 10) that were the next most active lexical item in jTRACE differed from the target word by more than a single phoneme. Given the relatively small number of words in initial_lexicon compared to the lexicon of the average human language user, it is not surprising that such words are included in the set of lexical competitors (i.e., these words are the closest matches in the initial_lexicon, but not necessarily the closest matches in a real lexicon). Such words would not be directly connected to the target word in the network model constructed in Vitevitch (2008). Therefore, to make our test of the assortative mixing by degree hypothesis comparable to the network analysis of Vitevitch (2008) we again correlated the degree of the target item to the degree of the next most active lexical item in jTRACE, but restricted the analysis to next-most-active items that differed from the target word by the addition, deletion, or substitution of a single phoneme (i.e., there is a link between these words in the phonological network in Vitevitch, 2008). We again found a significant, positive relationship (r(46) = +.44, p < .01) between the degree of the target items (mean = 5.63 neighbors) and the degree of the next most active lexical item in jTRACE (mean = 5.34 neighbors).
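The restriction described above requires a test of whether two forms differ by exactly one added, deleted, or substituted phoneme. A minimal sketch follows, assuming each phoneme is written as a single character (as in the jTRACE phoneme inventory); `one_phoneme_apart` and `restrict_to_neighbors` are hypothetical helper names.

```python
from typing import List, Sequence, Tuple


def one_phoneme_apart(a: Sequence[str], b: Sequence[str]) -> bool:
    """True if b differs from a by one added, deleted, or substituted phoneme."""
    x, y = tuple(a), tuple(b)
    if len(x) == len(y):
        # Substitution: exactly one position differs (identical forms give 0).
        return sum(p != q for p, q in zip(x, y)) == 1
    if abs(len(x) - len(y)) != 1:
        return False
    longer, shorter = (x, y) if len(x) > len(y) else (y, x)
    # Addition/deletion: removing one phoneme from the longer form
    # must yield the shorter form.
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))


def restrict_to_neighbors(pairs: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Keep only (target, error) pairs that are linked in the phonological network."""
    return [(t, e) for t, e in pairs if one_phoneme_apart(t, e)]


pairs = [("k^t", "b^t"), ("k^t", "^t"), ("k^t", "dig")]
print(restrict_to_neighbors(pairs))  # [('k^t', 'b^t'), ('k^t', '^t')]
```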

Thus, the output of a computer simulation that captures certain important aspects of human psycholinguistic behavior does indeed show a “cognitive footprint” of the network feature of assortative mixing by degree. If the assortative mixing by degree observed in the network analysis of Vitevitch (2008) were simply a spurious mathematical phenomenon, or resulted only from the way in which the network was constructed, and had no consequences for psycholinguistic behavior, then there should be no evidence of this relationship among word-forms in the output of a computer simulation of psycholinguistic behavior. Observing evidence of assortative mixing by degree when lexical retrieval “fails” suggests that the structure of the phonological lexicon, as revealed by the tools of network science, influences lexical processing.

3. Simulation 2 using jTRACE and lexicons with different types of mixing by degree

Another advantage of computer simulations is that they can be used in “computational experiments” to explore questions that are difficult—for ethical or practical reasons—to examine in the real world (Plunkett & Elman, 1997; see also Vitevitch & Storkel, 2012). In the present simulation we further explored how the structure of the lexicon might influence (failed) lexical retrieval by manipulating mixing by degree in the lexicon. That is, we created a lexicon that exhibited assortative mixing by degree, a lexicon that exhibited disassortative mixing by degree (where words with high degree tend to connect to words with low degree, and vice versa; a negative correlation in degree), and a lexicon that exhibited no mixing by degree (zero correlation in degree, indicating that it is equally likely that a word with high degree connects to a word with low degree or to a word with high degree, and vice versa).

It would be difficult to carry out this manipulation in a controlled laboratory setting with human language-users using a conventional psycholinguistic task because assortative mixing by degree appears to be a general characteristic of phonological word-forms in real languages (Arbesman et al., 2010b). That means that an artificial vocabulary would need to be constructed with the characteristics that we wish to examine, and participants would need to be trained on this new artificial vocabulary before we could test for the influence of these characteristics on processing; a difficult and time-consuming endeavor, indeed.

We again assumed that the most active lexical competitor in jTRACE would be retrieved if lexical retrieval of the target item failed. If the macro-level structure of the lexicon indeed influences processing, then we expect to find a cognitive footprint of this influence in the output of the computational model. That is, the correlation between the degree of the target word and the degree of the next most active lexical item in the set of competitors should match the direction of the correlation between the degree of the target word and the degree of the neighbors in each lexicon that we constructed. Specifically, we should see a positive correlation in the output of the model for the lexicon that exhibits assortative mixing by degree, a negative correlation in the output of the model for the lexicon that exhibits disassortative mixing by degree, and zero correlation in the output of the model for the lexicon that does not exhibit any mixing by degree.

3.1. Method

3.1.1. Stimuli

Our focus in the present simulations was on the macro-level structure of the lexicon, so we simply used strings of phonemes to create items that—for the sake of convenience—we refer to as words (N.B. any resemblance of these specially created words to real words in English is strictly coincidental). All of the words in the specially created lexicons were three phonemes long, and used the 14 phoneme symbols available in jTRACE (^, a, b, d, g, i, l, k, p, r, s, S, t, u). A word was considered a neighbor of another word if a single phoneme could be substituted into the word to form another word that appeared in the lexicon. (Additions and deletions of phonemes were not included in the assessment of phonological neighbors in order to keep all of the words the same length.)
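Under the substitution-only definition above, the neighbors of each word in a lexicon of three-phoneme strings can be found by trying every symbol from the 14-symbol inventory at every position. The sketch below does this for a toy lexicon; the example words and the function name are made up for illustration and are not items from Appendix C.

```python
from typing import Dict, Iterable, Set

# The 14 jTRACE phoneme symbols listed in the text, one character each.
PHONEMES = "^abdgilkprsStu"


def substitution_neighbors(lexicon: Iterable[str]) -> Dict[str, Set[str]]:
    """Map each word to the words formed by substituting exactly one phoneme."""
    words = set(lexicon)
    neighbors: Dict[str, Set[str]] = {w: set() for w in words}
    for w in words:
        for i, current in enumerate(w):
            for p in PHONEMES:
                if p == current:
                    continue  # substituting a phoneme with itself is not a change
                candidate = w[:i] + p + w[i + 1:]
                if candidate in words:
                    neighbors[w].add(candidate)
    return neighbors


toy = ["bat", "pat", "bit", "gus"]
degree = {w: len(n) for w, n in substitution_neighbors(toy).items()}
print(degree["bat"], degree["gus"])  # 2 0
```

A word's degree in these lexicons is simply the size of its neighbor set.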

Three separate lexicons were created that varied in the type of mixing by degree. The lexicons exhibiting assortative and disassortative mixing by degree each contained 56 words, and the lexicon exhibiting no mixing by degree contained 48 words. The small (and unequal) number of words in each lexicon results from the limited number of phonemes available in the model, the requirement that all words be the same length, and the need to create neighbors (by substituting one phoneme in the target word to form a new word) that would exhibit the desired type of mixing by degree. See Appendix C for the words that constituted the three lexicons. Correlational analyses of the degree of each word and the degree of its neighbors confirmed that each lexicon exhibited the desired type of mixing by degree: r = +1.0 (p < .0001) for the lexicon with assortative mixing by degree, r = −1.0 (p < .0001) for the lexicon with disassortative mixing by degree, and r = 0 (p = 1) for the lexicon with no mixing by degree.

Although the relationship between the degree of the target and neighbors (i.e., mixing by degree) differed among the three lexicons, the three lexicons contained words that were comparable in degree/neighborhood density (F(2,157) = 2.01, p = .14). In the case of the lexicon that exhibited assortative mixing by degree, the mean number of neighbors per word was 1.7 (sd = .46). For the lexicon that exhibited disassortative mixing by degree, the mean number of neighbors per word was 1.5 (sd = .87). For the lexicon that exhibited no mixing by degree, the mean number of neighbors per word was 1.5 (sd = .51).
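The comparison of mean degree across the three lexicons is a one-way analysis of variance over per-word degree values. A plain-Python sketch of the F statistic and its degrees of freedom follows (the function name is illustrative); with groups of 56, 56, and 48 words it yields the (2, 157) degrees of freedom reported above. The p-value would come from the F distribution, for example via `scipy.stats.f.sf(F, df_between, df_within)`, which this sketch does not compute.

```python
from typing import Sequence, Tuple


def one_way_anova(*groups: Sequence[float]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n
    # Between-group variability: distance of each group mean from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group variability: spread of values around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


print(one_way_anova([1, 2, 3], [2, 3, 4], [3, 4, 5]))  # (3.0, 2, 6)
```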

We recognize that the small number of words and the few neighbors that each word has in the current lexicons might be considered a “simplified” lexicon, especially when compared to the size of the lexicon used in Simulation 1 or to the lexicon of a typical human. However, it is important to keep in mind that “[m]odels are not intended to capture fully the processes they attempt to elucidate. Rather, they are explorations of ideas about the nature of cognitive processes. In these explorations, simplification is essential—through simplification, the implications of the central ideas become more transparent” (McClelland, 2009, p. 11). The “simplified” lexicons used in the present simulation provided us with the opportunity to explore the idea of how the macro-level structure of a network—as measured by mixing by degree—might influence lexical processing.

3.1.2. Procedure

The default parameter settings of the jTRACE computer model (Strauss et al., 2007) were used in the present simulations, with the exception of using the specially created lexicons that varied in mixing by degree. Each word in each lexicon was presented as input. After 180 time slices the identity of the second-most active item in the set of lexical competitors was determined. We assumed that the second-most active item would be the item retrieved from the lexicon if lexical retrieval failed.

3.2. Results and discussion

As in Simulation 1, we correlated the degree of the target word with the degree of the next most active word in the set of competitors to determine whether the output of the model contained a “cognitive footprint” of the type of mixing by degree exhibited by the lexicon. If the macro-level structure of the lexicon influences (failed) lexical retrieval, then this correlation should be positive for the lexicon that exhibits assortative mixing by degree, negative for the lexicon that exhibits disassortative mixing by degree, and zero for the lexicon that does not exhibit mixing by degree.

For the lexicon that exhibited assortative mixing by degree, the correlation between the degree of the target word and the degree of the next most active lexical item in the set of competitors was r(54) = +.91 (p < .0001). For the lexicon that exhibited disassortative mixing by degree, the correlation between the degree of the target word and the degree of the next most active lexical item in the set of competitors was r(54) = −.75 (p < .0001). Note that in three instances in the disassortative lexicon (indicated in the appendix) the target word itself was the second-most active item. In those instances, we used the (incorrect) item with the highest activation as the item that would be retrieved if lexical retrieval failed. For the lexicon that did not exhibit mixing by degree, the correlation between the degree of the target word and the degree of the next most active lexical item in the set of competitors was r(46) = 0.0 (p = 1).

The results of Simulations 1 and 2 indicate that the type of mixing by degree exhibited by the phonological lexicon influences certain aspects of lexical processing in the model. Because jTRACE, the computer model used in these simulations, captures certain relevant aspects of human spoken word recognition, we were encouraged to look for a cognitive footprint of assortative mixing by degree in human behavior. If assortative mixing by degree is not simply a mathematical artifact of the network analysis performed by Vitevitch (2008), and the type of mixing observed in the English phonological lexicon influences processing, then we should be able to observe evidence of assortative mixing by degree when lexical retrieval “fails” in humans. We first analyzed a corpus of speech perception errors (i.e., actual, attested failures of lexical retrieval) for a cognitive footprint of assortative mixing by degree. We then used several psycholinguistic tasks to create laboratory situations that captured certain relevant aspects of “failed” lexical retrieval, to look further for a cognitive footprint of assortative mixing by degree in human behavior.

4. Slip of the ear analysis

Although analyses of naturally occurring speech errors laid the foundation for understanding the process of speech production (Fromkin, 1971), our understanding of the process of word recognition has been guided primarily by evidence obtained from experiments employing reaction-time methodologies (e.g., priming, lexical decision, and naming tasks) rather than from naturally occurring errors. Speech perception errors do exist, and are perhaps better known as slips of the ear. A slip of the ear is a misperception of a correctly produced utterance. Slips of the ear should not be confused with slips of the tongue (i.e., speech production errors), where the speaker intends to say one thing but erroneously produces something else. In slips of the ear, the utterance is produced correctly (i.e., as intended), but the perceiver “hears” something else. An example (from Bond, 1999) of a slip of the ear is when a speaker says, “Stir this!” but the listener perceives the utterance as “Store this!”

As described in Bond (1999), the misperceptions that typically occur in slips of the ear involve misperceiving a vowel or consonant in a word (resulting in the erroneous perception of another word), the addition or deletion of a syllable in a word, or the mis-segmentation of words in a phrase (resulting in the perception of more or fewer words than were produced). Although many factors are likely to contribute to slips of the ear, certain errors, like hearing cat instead of dog, have not been observed, suggesting that semantic information does not strongly contribute to the initial stages of speech perception or to misperceptions; this contrasts with speech production errors, in which such substitutions have been observed (Fromkin, 1971).

Analyses of slips of the ear often provide evidence that is consistent with and complements the findings obtained from laboratory-based experiments (see the work of Bond, 1999; Felty, Buchwald, Gruenenfelder, & Pisoni, 2013; and others). For example, Vitevitch (2002b) found that the target words in 88 slips of the ear (taken from the appendix of Bond, 1999) had denser phonological neighborhoods and higher neighborhood frequency than randomly selected words comparable in length and word class. This result, in accord with predictions derived from the neighborhood activation model and tested with several conventional psycholinguistic tasks in the laboratory (Luce & Pisoni, 1998), suggests that words with dense neighborhoods and high neighborhood frequency are more difficult to perceive than words with sparse neighborhoods and low neighborhood frequency (hence their disproportionate appearance in slips-of-the-ear corpora). In the present analysis we re-examined the same 88 slips of the ear from Vitevitch (2002b), this time for a cognitive footprint of assortative mixing by degree in human performance.

4.1. Methods

The items examined in Vitevitch (2002b) came from the Bond (1999) corpus and consisted of misperceptions made by adults that were classified by Bond as misperceptions of vowels or of consonants. This led to the exclusion of “complex errors” and parsing errors that resulted in “extensive mismatch between utterance and perception.” Also excluded were proper nouns, foreign words or phrases, acronyms or auditory spellings, and domain-specific technical terms.

4.2. Results and discussion

In the present analysis we examined the neighborhood density (i.e., degree) of the target words (mean = 12.3 neighbors, sd = 7.8) and the neighborhood density of the erroneously perceived words (mean = 13.1 neighbors, sd = 8.2). When the neighborhood density/degree of the 88 spoken words was correlated with the neighborhood density/degree of the corresponding misperceived words, a significant, positive correlation was observed (r(88) = +.68, p < .0001). This result suggests that naturally occurring instances of failed lexical retrieval contain a cognitive footprint of the network structure known as assortative mixing by degree that was observed in a network analysis of the English phonological lexicon (Vitevitch, 2008), indicating further that the structure of the mental lexicon influences certain language-related processes.
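The correlation reported above pairs each spoken word's degree with that of the corresponding misperceived word. A plain-Python sketch of that computation follows, together with the t statistic conventionally used to test a Pearson correlation, t = r·√((n − 2)/(1 − r²)) on n − 2 degrees of freedom. The function name and the five example pairs are illustrative, not data from the corpus; the p-value itself would come from a t distribution (e.g., `scipy.stats.t.sf`), which is not computed here.

```python
import math
from typing import Sequence, Tuple


def pearson_with_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int, float]:
    """Return (r, df, t) for paired values, with df = n - 2."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired observations")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    r = sxy / math.sqrt(sxx * syy)
    df = n - 2
    # t is unbounded when the correlation is perfect.
    t = math.copysign(math.inf, r) if abs(r) >= 1 else r * math.sqrt(df / (1 - r * r))
    return r, df, t


# Hypothetical degrees for five target/misperception pairs:
target_deg = [1, 2, 3, 4, 5]
error_deg = [2, 1, 4, 3, 5]
r, df, t = pearson_with_t(target_deg, error_deg)
print(round(r, 2), df, round(t, 2))  # 0.8 3 2.31
```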

Observing that assortative mixing by degree influences human behavior is neither a trivial result nor a foregone conclusion, given the observation of assortative mixing by degree in the phonological network examined by Vitevitch (2008). One need only look at the other studies reported in Vitevitch (2002b), where the slips of the ear used in the present analysis were first analyzed (see also Bond, 1999), for evidence that human behavior does not always conform to the predictions of psychological theory or to statistical distributions in the language. In addition to the findings regarding neighborhood density and neighborhood frequency summarized above in Section 4, Vitevitch (2002b) found that the slip-of-the-ear tokens were higher in frequency of occurrence than words in general. This observation was unexpected: models of spoken word recognition indicate that words with low frequency of occurrence are difficult to perceive, which leads to the prediction that slips of the ear should involve words low, rather than high, in frequency of occurrence.

Furthermore, Zipf (1935) observed that a language contains many words that are relatively low in frequency of occurrence and few words that are relatively high in frequency of occurrence. Given this distribution, the principles of statistical sampling would also predict that slips of the ear should most likely involve low-frequency words, rather than the high-frequency words observed in Vitevitch (2002b). We note that the perceptual identification and lexical decision experiments reported in Vitevitch (2002b) provided evidence that this counter-intuitive word-frequency finding might have been due to the more rapid production of high-frequency words compared to low-frequency words that typically occurs in naturalistic settings (see also Wright, 1979). We further note that this finding illustrates that human behavior does not always conform to psychological theory or to statistical distributions in the language, which makes the present observation of an influence of assortative mixing by degree on slips of the ear a significant finding and important evidence that the structure of the mental lexicon influences certain language-related processes.

The combination of naturalistic observation and laboratory-based methods in Vitevitch (2002b) required to resolve the counter-intuitive finding of word frequency in slips of the ear emphasized to us the importance of using multiple methods to examine a research question. Like all research methodologies, naturalistic observations such as naturally occurring speech errors have certain strengths and weaknesses (see discussion in Bond (1999)). Rather than rely solely on this source of human behavioral data to examine how assortative mixing by degree might influence lexical retrieval processes, we conducted three psycholinguistic experiments. Given the role that assortative mixing by degree plays in network resilience, we used psycholinguistic tasks that captured certain relevant aspects of “failed” lexical retrieval. Like all laboratory-based tasks, the psycholinguistic tasks that we used are somewhat contrived, and performance in each of them is likely to be influenced by several factors. Nevertheless, these tasks may allow us to glimpse a cognitive footprint of the network structure of the mental lexicon. While each of these tasks individually provides limited information, together the results of these tasks offer a more complete view of how the structure of the mental lexicon might influence processing.

5. Experiment 1: Perceptual identification task

In the perceptual identification task, participants hear a word mixed with noise and type or say the word that was heard. Pisoni (1996) provided a concise review of the perceptual identification task and its use. He noted that differences in signal-to-noise ratios, speaking rate, response format (open- versus closed-set), the types of noise used (e.g., white, pink, envelope-shaped, etc.), and a number of other factors influence the responses made in this task. In addition, responses in this task are thought to reflect both bottom-up processing of the acoustic–phonetic input, as well as top-down processing strategies that may be employed in this “off-line” task (meaning that a response deadline is not typically imposed on participants). We acknowledge these concerns regarding the perceptual identification task, but also recognize certain benefits of this task that make it well-suited to our present need as a proxy of failed lexical retrieval. Indeed, we are not the only ones to recognize that the perceptual identification task captures certain relevant aspects of failed lexical retrieval; Felty et al. (2013) used this task to make a corpus of speech perception errors collected under controlled laboratory conditions.

In the version of the perceptual identification task used in the present experiment, participants heard real English words embedded in white noise at a signal-to-noise ratio expected to yield a reasonable number of erroneous responses (S/N = +5 dB). Although overall response accuracy is typically assessed in this task, we instead analyzed the incorrect responses, which are, by definition, instances of failed lexical retrieval. Lower signal-to-noise ratios would increase the number of erroneous responses, but the additional noise would also likely discourage participants and perhaps lead to idiosyncratic, task-specific strategies that would influence performance. Higher signal-to-noise ratios would likely lead to performance approaching “ceiling,” leaving few errors to examine. We therefore chose a signal-to-noise ratio that would provide a reasonable number of “genuine” erroneous responses.

Recall that degree in network science terminology is equivalent to phonological neighborhood density in psycholinguistics. Phonological neighborhood density is well known to be correlated with a number of other factors that also influence spoken word recognition, such as word frequency and phonotactic probability (Frauenfelder et al., 1993; Landauer & Streeter, 1973). Recall also that Vitevitch (2002b) observed an over-representation of words with high neighborhood density in a corpus of speech perception errors. To take advantage of the control afforded by laboratory conditions, minimizing the potential influence of other factors known to affect spoken word recognition, and to examine a broader range of words from the mental lexicon, we selected equal numbers of words with relatively high and relatively low neighborhood density (Luce & Pisoni, 1998) that were similar on several other lexical characteristics (see Section 5.1.2 for details). We then correlated the neighborhood density of each erroneous response with the neighborhood density of the target word that had been embedded in noise. If the macro-level characteristic of the mental lexicon known as assortative mixing by degree influences processing, then we should observe in the perceptual identification task a positive correlation between the neighborhood density of the erroneous response and the neighborhood density of the target word.

5.1. Method

5.1.1. Participants

Twelve native English-speaking students enrolled at the University of Kansas gave their written consent to participate in the present experiment. None of the participants reported a history of speech or hearing disorders, nor participated in the other experiments reported here.

5.1.2. Materials

One hundred monosyllabic English words, each containing three phonemes in a consonant–vowel–consonant syllable structure, were used as stimuli in this experiment (they are listed in Appendix D). A male native speaker of American English (the first author) produced all of the stimuli at a normal speaking rate and loudness into a high-quality microphone in an IAC sound-attenuated booth. Isolated words were recorded digitally at a sampling rate of 44.1 kHz, and the pronunciation of each word was verified for correctness. Each stimulus word was edited into an individual sound file using SoundEdit 16 (Macromedia, Inc.). The amplitude of each sound file was then increased to its maximum, without distorting the sound or changing the pitch of the word, using the Normalization function in SoundEdit 16. The same program was used to degrade the stimuli by adding white noise equal in duration to the sound file. The white noise was 5 dB lower in amplitude than the mean amplitude of the sound files; thus, the resulting stimuli were presented at a +5 dB signal-to-noise ratio (S/N).
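The noise-mixing step can be illustrated with a short sketch. The stimuli themselves were prepared in SoundEdit 16; the Python below is not that procedure but a hypothetical stand-in that assumes the S/N is defined on root-mean-square (RMS) amplitude, so that the noise RMS sits 5 dB below the signal RMS. A synthetic tone stands in for a recorded word, and the helper names `rms` and `add_white_noise` are illustrative.

```python
import math
import random


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def add_white_noise(signal, snr_db, seed=None):
    """Mix Gaussian white noise, equal in duration to `signal`, at `snr_db`.

    Assumption: S/N is defined on RMS amplitude, i.e.
    snr_db = 20 * log10(rms(signal) / rms(noise)).
    Returns (mixture, noise). No clipping protection is applied.
    """
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    # Scale the noise so its RMS sits snr_db decibels below the signal's RMS.
    target_rms = rms(signal) / (10 ** (snr_db / 20.0))
    scale = target_rms / rms(noise)
    noise = [n * scale for n in noise]
    mixture = [s + n for s, n in zip(signal, noise)]
    return mixture, noise


# Hypothetical usage: a 440 Hz tone sampled at 44.1 kHz stands in for a word.
sample_rate = 44100
tone = [0.5 * math.sin(2 * math.pi * 440 * t / sample_rate)
        for t in range(sample_rate // 2)]
mixture, noise = add_white_noise(tone, snr_db=5.0, seed=1)
print(round(20 * math.log10(rms(tone) / rms(noise)), 6))  # 5.0
```

Defining the ratio on RMS amplitude is one common convention; a peak-based definition would yield a different noise level.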

Neighborhood density refers to the number of words that sound similar to the stimulus word, based on the addition, deletion, or substitution of a single phoneme in that word (Luce & Pisoni, 1998). A word like cat, which has many neighbors (e.g., at, bat, mat, rat, scat, pat, sat, vat, cab, cad, calf, cash, cap, can, cot, kit, cut, coat), is said to have a dense phonological neighborhood, whereas a word like dog, which has few neighbors (e.g., dig, dug, dot, fog), is said to have a sparse phonological neighborhood (N.B., each word has additional neighbors; only a few are listed for illustrative purposes). Half of the stimuli had dense phonological neighborhoods (mean = 27.7 neighbors, sd = 1.6), and the remaining stimuli had sparse phonological neighborhoods (mean = 14.9 neighbors, sd = 1.5; F(1,98) = 1648.62, p < .0001). Although the stimuli differed in neighborhood density, they were comparable on a number of other characteristics, as described below.
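The one-phoneme neighbor rule can be made concrete with a minimal sketch. It assumes each word is stored as a tuple of phoneme symbols; the ARPAbet-style transcriptions and the nine-word toy lexicon are hypothetical, so the counts it produces are far smaller than the densities reported for the actual stimuli, which were computed over a full lexicon.

```python
def is_neighbor(a, b):
    """True if phoneme sequences a and b differ by exactly one phoneme
    substitution, addition, or deletion (the one-phoneme metric)."""
    if a == b:
        return False
    if len(a) == len(b):
        # Substitution: exactly one position differs.
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    shorter, longer = (a, b) if len(a) < len(b) else (b, a)
    # Addition/deletion: removing one phoneme from the longer form yields the shorter.
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))


def neighborhood_density(word, lexicon):
    """Number of lexicon entries that are one-phoneme neighbors of word (its degree)."""
    return sum(1 for other in lexicon if is_neighbor(word, other))


# Hypothetical toy lexicon in ARPAbet-style transcriptions (tuples of phonemes).
LEXICON = {
    "cat": ("K", "AE", "T"), "at": ("AE", "T"), "bat": ("B", "AE", "T"),
    "scat": ("S", "K", "AE", "T"), "cab": ("K", "AE", "B"), "cot": ("K", "AA", "T"),
    "dog": ("D", "AO", "G"), "dig": ("D", "IH", "G"), "fog": ("F", "AO", "G"),
}
forms = list(LEXICON.values())
print(neighborhood_density(LEXICON["cat"], forms))  # 5
print(neighborhood_density(LEXICON["dog"], forms))  # 2
```

Here cat gains neighbors by deletion (at), addition (scat), and substitution (bat, cab, cot), which mirrors the three operations in the definition above.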

Subjective familiarity

Subjective familiarity was measured on a seven-point scale (Nusbaum, Pisoni, & Davis, 1984). Words with dense neighborhoods had a mean familiarity value of 6.87 (sd = .22) and words with sparse neighborhoods had a mean familiarity value of 6.82 (sd = .28, F(1,98) = 1.50, p = .22). The mean familiarity value for the words in the two groups indicates that all of the words were highly familiar.

Frequency of occurrence in the language

Average log word frequency (log10 of the raw values from Kučera and Francis (1967)) was 1.03 (sd = .58) for the words with dense neighborhoods, and 1.00 (sd = .58) for the words with sparse neighborhoods (F(1,98) = .08, p = .77).

Neighborhood frequency

Neighborhood frequency is the mean word frequency of the neighbors of the target word. Words with dense neighborhoods had a mean log neighborhood frequency value of 2.03 (sd = .24), and words with sparse neighborhoods had a mean log neighborhood frequency value of 1.94 (sd = .25; F(1,98) = 2.99, p = .09).

Phonotactic probability

Phonotactic probability was measured by how often a given segment occurs in a given position in a word (positional segment frequency) and by the segment-to-segment co-occurrence probability (biphone frequency; as in Vitevitch & Luce, 2005). The mean positional segment frequency was .147 (sd = .02) for words with dense neighborhoods and .140 (sd = .02) for words with sparse neighborhoods (F(1,98) = 2.11, p = .15). The mean biphone frequency was .007 (sd = .003) for words with dense neighborhoods and .007 (sd = .003) for words with sparse neighborhoods (F(1,98) = .009, p = .93). These values were obtained from the web-based calculator described in Vitevitch and Luce (2004).
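The two phonotactic measures can be sketched as follows. This is a simplified illustration, not the web-based calculator of Vitevitch and Luce (2004): it uses unweighted type counts from a hypothetical toy lexicon, does not reproduce the calculator's lexicon or any frequency weighting, and assumes that the per-word value is the sum of the per-position probabilities.

```python
from collections import Counter


def build_tables(lexicon):
    """Count segments by position and adjacent segment pairs (biphones) by position."""
    seg, seg_total = Counter(), Counter()
    bi, bi_total = Counter(), Counter()
    for word in lexicon:
        for i, s in enumerate(word):
            seg[(i, s)] += 1
            seg_total[i] += 1  # words that have any segment at position i
        for i in range(len(word) - 1):
            bi[(i, word[i], word[i + 1])] += 1
            bi_total[i] += 1   # words that have any biphone starting at position i
    return seg, seg_total, bi, bi_total


def phonotactic_probability(word, tables):
    """Return (summed positional segment probability, summed biphone probability)."""
    seg, seg_total, bi, bi_total = tables
    # Positions absent from the lexicon contribute nothing (avoids division by zero).
    seg_sum = sum(seg[(i, s)] / seg_total[i]
                  for i, s in enumerate(word) if seg_total[i])
    bi_sum = sum(bi[(i, word[i], word[i + 1])] / bi_total[i]
                 for i in range(len(word) - 1) if bi_total[i])
    return seg_sum, bi_sum


# Hypothetical three-word lexicon of CVC forms.
toy = [("K", "AE", "T"), ("B", "AE", "T"), ("K", "AE", "B")]
tables = build_tables(toy)
print([round(v, 3) for v in phonotactic_probability(("K", "AE", "T"), tables)])  # [2.333, 1.333]
```

With a realistic lexicon of thousands of words, each per-position probability becomes small, which is why reported values are well below those of this toy example.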

5.1.3. Procedure

Participants were tested individually. Each participant was seated in front of an iMac computer running PsyScope 1.2.2 (Cohen, MacWhinney, Flatt, & Provost, 1993), which controlled stimulus presentation and response collection. On each trial, the word “READY” appeared on the computer screen for 500 ms. Participants then heard a randomly selected stimulus word embedded in white noise through Beyerdynamic DT 100 headphones at a comfortable listening level. Each stimulus was presented only once. Participants were instructed to use the computer keyboard to type their response (or their best guess) for each word they heard, and to type “?” if they were absolutely unable to identify the word. Participants could take as much time as they needed to respond. They could see their responses on the screen as they typed and could correct them before pressing the RETURN key, which initiated the next trial. The experiment lasted about 15 min. Prior to the experiment, each participant received five practice trials to become familiar with the task; these practice trials were not included in the data analyses.

5.2. Results and discussion

A response was scored as correct if its phonological transcription matched the phonological transcription of the stimulus. Misspellings and typographical errors were also scored as correct under the following conditions: (1) two adjacent letters in the word were transposed; (2) a single letter was omitted, provided that the response did not form another English word; or (3) a single letter was added, provided that the added letter was within one key of the target letter on the keyboard. Of the 1200 responses, 328 (27%) were correct, and 14 (1%) were “?” responses indicating that the participant was absolutely unable to identify the word; these “?” responses could not, of course, be analyzed further.
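The three typographical-error rules can be expressed as a small scoring function. This sketch is one possible reading of the rules, not the authors' scoring procedure: it works on spellings only (the phonological comparison is assumed to happen separately), it treats the “target letter” in rule (3) as a letter of the target word immediately before or after the inserted letter, and it approximates “within one key” with a staggered QWERTY grid whose row offsets are rough assumptions. The word list passed to rule (2) is hypothetical.

```python
QWERTY_ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
ROW_OFFSETS = [0.0, 0.25, 0.75]  # assumed horizontal stagger of each row, in key widths

# Map each letter to (x position in key widths, row index).
KEY_XY = {ch: (c + ROW_OFFSETS[r], r)
          for r, row in enumerate(QWERTY_ROWS) for c, ch in enumerate(row)}


def within_one_key(a, b):
    """True if a and b are the same key or physically adjacent keys (approximation)."""
    if a not in KEY_XY or b not in KEY_XY:
        return False
    (x1, y1), (x2, y2) = KEY_XY[a], KEY_XY[b]
    if y1 == y2:
        return abs(x1 - x2) <= 1.0
    return abs(y1 - y2) == 1 and abs(x1 - x2) < 1.0


def typo_scored_correct(response, target, english_words):
    """Apply the three typographical-error rules to a typed response (spelling only)."""
    response, target = response.lower(), target.lower()
    if response == target:
        return True
    n = len(target)
    # Rule 1: exactly one pair of adjacent letters transposed.
    if len(response) == n:
        diffs = [i for i in range(n) if response[i] != target[i]]
        if (len(diffs) == 2 and diffs[1] == diffs[0] + 1
                and response[diffs[0]] == target[diffs[1]]
                and response[diffs[1]] == target[diffs[0]]):
            return True
    # Rule 2: one letter omitted, only if the response is not itself an English word.
    if len(response) == n - 1 and response not in english_words:
        if any(target[:i] + target[i + 1:] == response for i in range(n)):
            return True
    # Rule 3: one extra letter, typed within one key of an adjacent target letter.
    if len(response) == n + 1:
        for i, extra in enumerate(response):
            if response[:i] + response[i + 1:] == target:
                nearby = [target[j] for j in (i - 1, i) if 0 <= j < n]
                if any(within_one_key(extra, ch) for ch in nearby):
                    return True
    return False


# Hypothetical usage with a tiny word list.
WORDS = {"cat", "at", "bat"}
print(typo_scored_correct("cta", "cat", WORDS))   # True  (transposition)
print(typo_scored_correct("at", "cat", WORDS))    # False (omission forms a word)
print(typo_scored_correct("catr", "cat", WORDS))  # True  (r is next to t)
```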

Of the remaining 858 incorrect responses (72% of the 1200 responses), 398 (46% of the incorrect responses) were phonological neighbors of the target words under the one-phoneme metric used in Luce and Pisoni (1998), and 460 (54% of the incorrect responses) were not. Given the way in which assortative mixing by degree was calculated in the network analysis of Vitevitch (2008), we analyzed further only the incorrect responses that were phonological neighbors of the target words. We recognize that the remaining incorrect responses might be useful for developing an account of all types of perceptual errors, for developing an algorithm that would predict the likelihood of a misperception, and so on. Such questions are interesting, but they are not the focus of the present investigation and do not directly address the question at hand: can we find in human language behavior a cognitive footprint of assortative mixing by degree? Only the incorrect responses that are phonological neighbors of the target words allow us to address that question directly. Furthermore, the same restriction was used in the analysis of actual speech perception errors and in the jTRACE simulations, so applying it in each analysis allows us to compare results equitably across research methods.

To look for a trace of assortative mixing by degree in the perceptual identification responses, we correlated the neighborhood density of each target word (n = 87, mean = 21.06, sd = 6.61) with the mean neighborhood density of the incorrect responses to that target that were its phonological neighbors (n = 87, mean = 22.36, sd = 6.22). Because all of the participants correctly identified 13 of the words, n in these analyses is 87 rather than 100. We observed a positive and statistically significant correlation between the degree/neighborhood density of the targets and the mean degree/neighborhood density of the incorrect responses that were neighbors of the targets (r(85) = +.22, p < .01).
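The correlational analysis can be sketched as below, assuming the incorrect responses have already been restricted to one-phoneme neighbors of their targets and that a density (degree) value is available for every word. The function names and the example data are hypothetical; a target that drew no neighbor errors simply drops out, as the 13 always-identified words did here, and the degrees of freedom are n − 2 (85 for n = 87).

```python
import math
from collections import defaultdict


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def assortativity_by_target(neighbor_errors, density):
    """Correlate each target's density with the mean density of its neighbor errors.

    neighbor_errors: (target, response) pairs, already restricted to incorrect
    responses that are one-phoneme neighbors of the target.
    density: word -> neighborhood density (degree).
    Returns (r, number of targets contributing).
    """
    by_target = defaultdict(list)
    for target, response in neighbor_errors:
        by_target[target].append(density[response])
    targets = sorted(by_target)
    xs = [density[t] for t in targets]
    ys = [sum(by_target[t]) / len(by_target[t]) for t in targets]
    return pearson_r(xs, ys), len(targets)


# Hypothetical data: three targets and the neighbor errors they elicited.
dens = {"a": 10, "b": 20, "c": 30, "x": 12, "y": 18, "z": 33, "w": 28}
errs = [("a", "x"), ("b", "y"), ("b", "w"), ("c", "z")]
r, n = assortativity_by_target(errs, dens)
print(n, f"r({n - 2}) = {r:+.2f}")
```

Averaging the errors within each target before correlating keeps one data point per target, matching the description of the analysis above.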

The magnitude of the correlation in the present experiment is smaller than that observed in the network analysis reported in Vitevitch (2008), and in the analysis of slips of the ear in Section 4.2 above. This should not be surprising given that we selected an equal number of words with dense phonological neighborhoods (i.e., high degree) and with sparse phonological neighborhoods (i.e., low degree) to use as stimuli in the perceptual identification experiment. Recall that the slips of the ear analysis showed that misperceptions typically occur for words with dense phonological neighborhoods (i.e., high degree), so inclusion of items with sparse phonological neighborhoods/low degree may have reduced the overall magnitude of the present correlation.

Furthermore, although the perceptual identification task captures certain important aspects of what happens when lexical retrieval fails, it is unlikely that this (or any) laboratory-based task provides a perfect analog of the cognitive processes used in the real world, and this imperfect correspondence may have further reduced the magnitude of the present correlation. This, too, should not be surprising, because “…the laboratory setting is not necessarily representative of the social world within which people act. As a consequence, we cannot use laboratory findings to estimate the likelihood that a certain class of responses will occur in naturalistic situations…. Experiments are not conducted to yield such an estimate.” (Berkowitz & Donnerstein, 1982, p. 255). Rather, the present experiment (like most experiments) was conducted to demonstrate that changes in variable X can lead to changes in variable Y.

The result observed in the present experiment indicates that when listeners misperceived a target word with a dense phonological neighborhood (mixed with noise) as one of its neighbors, they tended to respond with a word that also had a dense phonological neighborhood. Likewise, when listeners misperceived a target word with a sparse phonological neighborhood (mixed with noise) as one of its neighbors, they tended to respond with a word that also had a sparse phonological neighborhood. This result provides evidence of a cognitive footprint of the assortative mixing by degree observed in the network analysis of the mental lexicon by Vitevitch (2008), and observed in the present report in a computer simulation and in an analysis of a speech error corpus. It further suggests that the structure of the mental lexicon influences cognitive processing, and that the tools of network science can provide novel insights into those cognitive processes and representations.

6. Experiment 2: Similar sounding word task

The perceptual identification task used in Experiment 1 captured certain relevant aspects of failed lexical retrieval (see also Felty et al., 2013), allowing us to examine whether the macro-structure observed among word-forms in the phonological lexicon of English (i.e., assortative mixing by degree) might influence human language processing. However, we also recognize that the noise used in the perceptual identification task might mask different phonemes to different degrees, and might induce task-specific strategies in addition to those typically used during spoken word recognition. Therefore, in the present experiment we used a task that did not employ degraded stimuli. In Experiment 2 of Luce and Large (2001), participants heard a nonword and were asked to say the first real English word that came to mind that sounded like that nonword. For example, a participant might have heard /fin/ and responded with the real word feet. We reasoned that a modified version of the task, using real English words instead of nonwords as stimuli, might capture certain relevant aspects of failed lexical retrieval and allow us to observe an influence of assortative mixing by degree on processing. We recognize that asking participants to respond with a word other than the one they heard might seem unnatural, but we believe this task is no more unnatural than other tasks used in psycholinguistics (e.g., the semantic associate task; Nelson, McEvoy, & Schreiber, 1998).

Participants in the present experiment were presented with the same English words used in Experiment 1, this time without noise, and were asked to respond with the first English word that came to mind that sounded like the word they heard. As in the jTRACE simulation, the word that participants produced in this task was treated as the word that would be retrieved if lexical retrieval failed. As in Experiment 1, we correlated the neighborhood density/degree of each target word (i.e., the word that was presented) with the mean neighborhood density/degree of the responses that were its phonological neighbors.

6.1. Method

6.1.1. Participants

Fourteen native English-speaking students enrolled at the University of Kansas gave their written consent to participate in the present experiment. None of the participants reported a history of speech or hearing disorders.

6.1.2. Materials

The stimuli used in Experiment 1 were also used in the present experiment, except that the words were not mixed with white noise.

6.1.3. Procedure