Abstract

For much of the past 30 years, investigations of auditory perception and language have been enhanced or even driven by the use of functional neuroimaging techniques that specialize in localization of central responses. Beginning with investigations using positron emission tomography (PET) and gradually shifting primarily to usage of functional magnetic resonance imaging (fMRI), auditory neuroimaging has greatly advanced our understanding of the organization and response properties of brain regions critical to the perception of and communication with the acoustic world in which we live. As the complexity of the questions being addressed has increased, the techniques, experiments and analyses applied have also become more nuanced and specialized. A brief review of the history of these investigations sets the stage for an overview and analysis of how these neuroimaging modalities are becoming ever more effective tools for understanding the auditory brain. We conclude with a brief discussion of open methodological issues as well as potential clinical applications for auditory neuroimaging.

Keywords: fMRI, PET, humans, auditory cortex, auditory pathway

Introduction

The study of audition in the brain has long been conducted successfully in experimental animals, permitting us to achieve substantial knowledge regarding many aspects of auditory sensation and perception including sound localization (Jenkins et al., 1984), sound discrimination (Rauschecker et al., 1995), and responses to unique acoustic stimuli such as species-specific vocalizations (Brainard et al., 2000). In addition to providing fundamental knowledge of how we perceive the acoustic world in which we are immersed, many of these studies have been driven by an underlying desire to understand how humans produce, perceive and comprehend transmission of information via speech, with the underlying neural representation of language being of comparable interest. It is toward this more human-centric end that specific response properties (e.g., best frequency, rate-level curves) of neurons within the structures along the auditory pathway have been investigated, seeking to identify the organizational principles (e.g., tonotopy) that guide connections carrying information from the peripheral cochlea to auditory sensory and association cortices, and beyond to integrative regions of the brain. Identification of such organizational principles through a “bottom-up” approach provides insight as to the combinations of features that must be present for a given complex stimulus to cease being perceived merely as a “sound”, and instead begin to elicit responses from regions that are primarily responsible for detection of species-specific vocalizations and, in humans, particular to “speech”.

The desire to characterize the organizational principles of structures in the auditory pathway arguably dictates that some form of spatial (as in localization) information must be obtained in conjunction with temporal response information. Construction of organizational maps of properties in the central nervous system continues to be most commonly performed using individual or multiple electrode penetrations. Such work is challenging, time consuming, and has fundamentally been impractical in humans until recent advances in the use of (invasive) intra-cranial recordings, leaving the larger goal of addressing speech processing untenable through these historically-significant techniques.

The desire to non-invasively evaluate human auditory pathway function on a spatial map (i.e., property-to-place) basis was only made possible by the advent of functional neuroimaging methods. Historically, in vivo evaluation of higher auditory function in the human brain was almost exclusively conducted in neurosurgical cases (e.g., epilepsy) in which psychophysical evaluation and electrocorticographic approaches could be combined to yield localization of preference for particular stimulus properties—e.g., Penfield (1947). Going farther back in time, our understanding of the neural basis of auditory and language function came primarily from post-mortem correlation of previously observed behavioral deficits and brain lesions, such as in the identification of Broca’s (Broca, 1861) and Wernicke’s (Wernicke, 1874) areas.

In the 1960s, electroencephalography (EEG), applied for the purpose of measuring evoked potentials, became the first neuroimaging technique to be directed at the study of human auditory function (Bickford et al., 1964; Cody et al., 1964). Evoked potentials initially served a valuable role in the assessment of auditory neurological health and development (Hecox et al., 1974; Salamy et al., 1976), and continue to be used as such today. However, evoked potentials have historically provided only limited information about the structure and organizing principles of the central auditory pathway, except in cases where generators for the various response waveforms (e.g., N100) have previously been identified (Jewett, 1970; Melcher et al., 1996). As such, while valuable for understanding how and when (and, therefore, at which stage of the auditory pathway) differentiation of stimuli takes place (e.g., Cohn, 1971), evoked potentials have not proven useful for non-invasive elucidation of auditory processing at a resolution below the level of a nucleus or a prominent, damaged, cell population (in contrast to fMRI, see Baumann et al., 2011).

With the advent of source localization techniques in the late 1970s, it first became practical to use EEG (and its companion, magnetoencephalography, MEG) to evaluate the local organization and response properties within the central auditory pathway. Such advancements permitted some of the earliest investigations into the organizing principles of the human auditory pathway, particularly auditory cortex, including mapping of tonotopic organization of primary and secondary cortices (Cansino et al., 1994; Romani et al., 1982), amplitopic organization (Pantev et al., 1989), and pitch-based organization (Pantev et al., 1995). Such measurements continue to provide critical information about auditory function on a regional basis, given the extremely high temporal resolution and fine-grain sensitivity of EEG and MEG, but the assumptions inherent in the solution of the inverse problem for source localization continue to limit the ability of such techniques to precisely characterize organizational principles.

Beginning in the 1980s, investigation of the function and organization of the central auditory pathway has benefitted from concurrent collection of dynamic (temporal) information with spatial information. In the past thirty years, auditory neuroscience has been greatly advanced by the development and application of positron emission tomography (PET), functional magnetic resonance imaging (fMRI) and near infrared spectroscopy (NIRS) along with other forms of non-invasive optical imaging. Among these techniques, the bulk of studies to date that have enhanced our understanding of the operation and organization of the human central auditory pathway have relied on PET and fMRI.

PET and fMRI have contributed significantly to the neurosciences through their ability to obtain functional (dynamic, time-varying) information simultaneously with localization (within the brain) of signal sources. In each of these techniques, images generated while the subject is in one cognitive state may be directly compared, on a location-by-location basis, with images generated while the subject is in a second, different state. Changes in image intensity with differences in cognitive state reflect changes in the underlying physiologic state of the brain tissue, and thereby provide information about activity at the level of the neuronal population.

Based on their measurement of close proxies to underlying neuronal behavior during both sensation and cognitive processing, PET and fMRI have frequently been used to study a wide range of auditory and linguistic phenomena, ranging from “basic” acoustic perception (e.g., Harms et al., 2002; Hart et al., 2003) to language comprehension (e.g., Saur et al., 2008; Scott et al., 2000). Both PET and fMRI rely on the assumption that (local) changes in vascular behavior are reflective of changes in neuronal behavior (Roy et al., 1890). While this relationship is not yet fully characterized, activity in neurons is closely linked to glucose consumption, oxygen consumption, and blood flow—changes that are readily exploited through detection of the accumulation or passage of tracers (PET) or measurement of local oxygenation levels (fMRI). Therefore, both techniques provide us with indirect, yet informative, measures of changes in neuronal metabolic activity. Such measures permit us to identify areas of the brain in which an alteration of state (i.e., excitation or inhibition) has occurred, and facilitate our construction of the network of interconnections associated with the auditory pathway.

Based on their key role in the past several decades, the mechanisms of PET and fMRI are described below along with a brief summary of their specific application to auditory neuroimaging in the past and as expected in the future.

Positron Emission Tomography (PET) for Auditory Neuroscience

PET is a general name for techniques that rely on the introduction of radioactive compounds into body tissue (including brain) that may be used to assess local metabolism. The majority of PET neuroimaging relies on positron-emitting isotopes of oxygen or fluorine that have been incorporated into compounds that will either be taken up by brain tissues as a result of metabolic demand or will pass through a region of the brain and remain in the vascular compartment, permitting evaluation of blood flow and volume. PET imaging can be conducted with temporal resolution ranging from several seconds to several minutes (limited by the integration time required for the camera to detect a sufficient number of emissions), and effective spatial resolution on the order of 6 mm, a function of radius and the density of detectors in the camera ring (e.g., Malison et al., 1995).

Basis of PET Neuroimaging

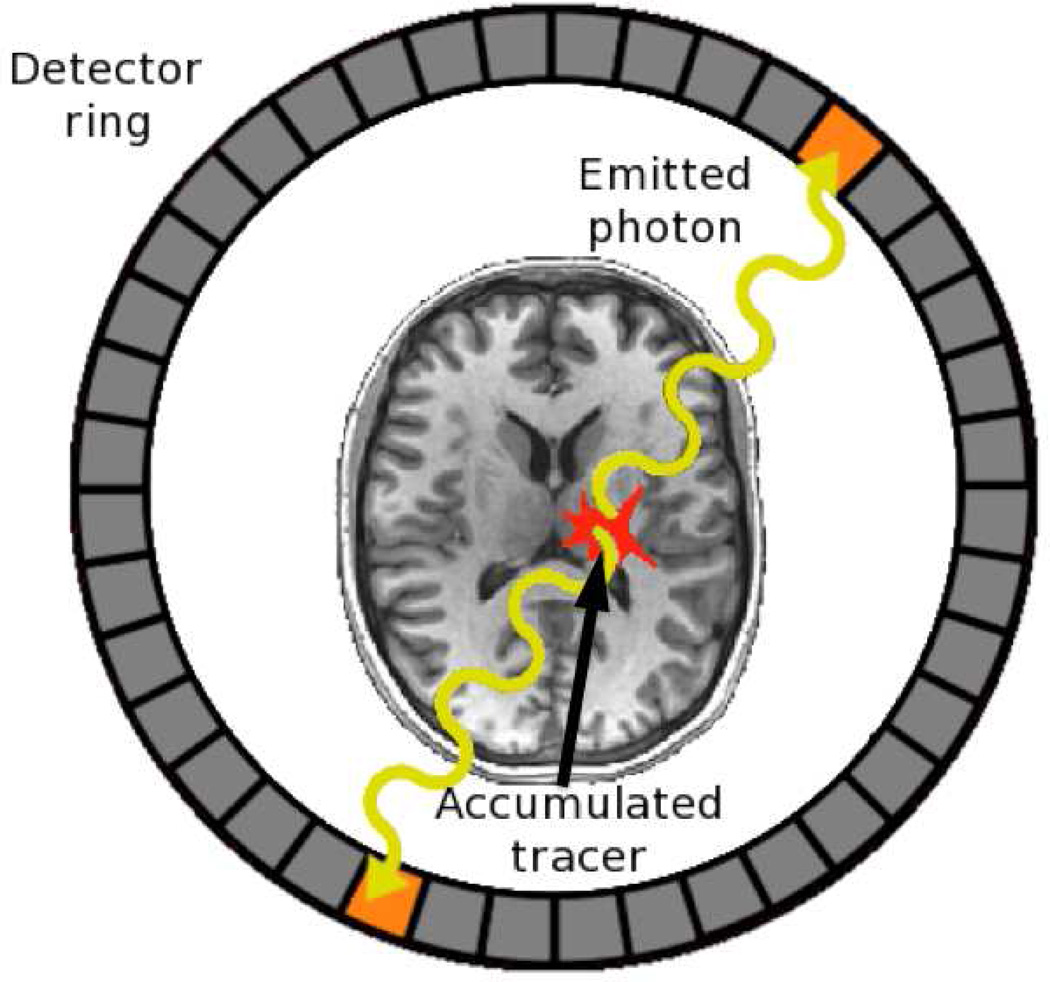

Construction of PET images is achieved using electronic detection of photons that arrive coincidentally at two locations on a ring of scintillating detectors located around the patient. These photons are produced by annihilation of positrons (emitted by the radiopharmaceutical) with electrons located in the surrounding tissue (see Figure 1). Observation of a pair of photons with near-coincidental arrival times on the detector ring indicates that a positron annihilation occurred somewhere along the line between the two detectors. Accumulation of many such coincidental arrivals permits construction of lines of response between each possible pair of detectors, from which traditional filtered back-projection techniques may be used to identify sources—regions of high concentration of the positron-emitting tracer. Note that the time required to collect sufficient coincidental arrivals of photons is dependent on the dose of the radioactive compound, and rapid image formation may not always be desirable from a clinical perspective. This trade-off (ionizing radiation dose vs. image formation time) is central to PET experimental design.

Figure 1.

Coincidence detection in positron emission tomography (PET). Accumulated radioactive tracer within a patient releases positrons that annihilate with nearby electrons, resulting in the emission of two high-energy photons (gamma rays) that intersect with a detector ring surrounding the patient, with the detections being coincident in time. The two detectors form a line along which the positron annihilation must have occurred. The intersection of many such detected coincidence lines, observed over the course of a scan, serves to pinpoint areas of relatively high concentration of the radioactive label. Two- or three-dimensional images indicating where the labeled compound (e.g., ¹⁵O-labeled water) accumulated in the brain may then be constructed by back-projection.
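The coincidence-line idea can be illustrated with a small sketch. The code below is a toy, two-dimensional, unfiltered back-projection of a single point source, not the filtered back-projection used in practice; the ring size, detector count, grid size, and all function names are assumptions chosen for illustration, and attenuation, scatter, and random coincidences are ignored.

```python
import math

def ring_hits(x0, y0, theta, radius):
    """Two points where the line through (x0, y0) at angle theta meets the ring."""
    dx, dy = math.cos(theta), math.sin(theta)
    # Solve |(x0, y0) + t * (dx, dy)|^2 = radius^2 for t.
    b = x0 * dx + y0 * dy
    c = x0 * x0 + y0 * y0 - radius * radius
    root = math.sqrt(b * b - c)
    return [(x0 + t * dx, y0 + t * dy) for t in (-b - root, -b + root)]

def snap_to_detector(point, n_detectors, radius):
    """Replace a hit position by the centre of the nearest detector on the ring."""
    angle = math.atan2(point[1], point[0])
    k = round(angle / (2 * math.pi / n_detectors)) % n_detectors
    phi = 2 * math.pi * k / n_detectors
    return (radius * math.cos(phi), radius * math.sin(phi))

def back_project(source, n_events=180, n_detectors=720, radius=1.0, grid=41):
    """Accumulate lines of response on a grid x grid image of [-radius, radius]^2."""
    image = [[0] * grid for _ in range(grid)]
    cell = 2 * radius / (grid - 1)
    steps = 4 * grid
    for i in range(n_events):
        theta = math.pi * i / n_events  # evenly spaced emission directions
        a, b = (snap_to_detector(p, n_detectors, radius)
                for p in ring_hits(source[0], source[1], theta, radius))
        # Walk along the line of response; count each crossed pixel once per event.
        touched = set()
        for s in range(steps + 1):
            x = a[0] + (b[0] - a[0]) * s / steps
            y = a[1] + (b[1] - a[1]) * s / steps
            touched.add((round((y + radius) / cell), round((x + radius) / cell)))
        for row, col in touched:
            image[row][col] += 1
    return image

def brightest_pixel(image, radius=1.0):
    """(x, y) coordinates of the pixel crossed by the most lines of response."""
    grid = len(image)
    cell = 2 * radius / (grid - 1)
    row, col = max(((r, c) for r in range(grid) for c in range(grid)),
                   key=lambda rc: image[rc[0]][rc[1]])
    return (col * cell - radius, row * cell - radius)
```

Calling `brightest_pixel(back_project((0.3, -0.2)))` recovers the simulated source position, because every line of response crosses the pixel containing the source while any other pixel is crossed by only a subset of lines.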

The majority of PET studies focused on cognitive function use ¹⁵O-labeled water. This tracer has a short half-life (~2 minutes), is readily taken into any biological tissue, and readily crosses the blood-brain barrier. Typically, H₂¹⁵O is injected into the blood stream of the subject (generally via the arm) and rapidly diffuses in the blood supply. When a region of the brain exhibits increased neuronal activity, the local increase in blood flow produces greater delivery of H₂¹⁵O, and the local (extra-vascular) concentration of ¹⁵O also increases. By collecting a sequence of 8–16 images, each requiring approximately one minute for formation and separated by 7–10 minutes (permitting accumulated tracer to decay nearly completely between injections), multiple experimental conditions may be evaluated in the same subject within a single imaging session. Direct (often subtractive) comparison of tracer accumulations across conditions is then used to identify regions of the brain that have exhibited increased or decreased neuronal activity as a result of variations in experimental condition. On a group basis, data from multiple subjects may be averaged together after appropriate registration procedures have been effected, allowing group-level conclusions to be drawn for generalization to a greater population.
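To make the "decay nearly completely" statement concrete, the following sketch computes the fraction of tracer activity remaining after a given wait, using simple exponential decay with the approximately 2-minute half-life quoted above. The function name is hypothetical, and biological clearance of the tracer is ignored, so this covers physical decay only.

```python
def remaining_fraction(elapsed_min, half_life_min=2.0):
    """Fraction of radioactivity left after elapsed_min minutes of physical decay."""
    return 0.5 ** (elapsed_min / half_life_min)

for wait_min in (7, 10):
    print(f"after {wait_min} min: {remaining_fraction(wait_min):.1%} remains")
```

Under these assumptions, roughly 9% of the activity remains after 7 minutes and roughly 3% after 10 minutes, which is why successive injections can be treated as nearly independent measurements.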

While of tremendous value for assessment of metabolic changes, PET has several specific limitations that preclude its use as a primary workhorse for neuroimaging. First, as noted above, a key limitation for PET imaging is that it requires administration of ionizing radiation. Although the typical PET dose is small (roughly equivalent to one year’s exposure to natural background radiation), this amount is sufficient to rule out its use as a longitudinal tool, and further limits its application to children and other potentially sensitive groups (e.g., women who are or may become pregnant). Second, PET is costly due to the need for synthesis of radionuclides in close proximity to the imaging site, or the production of many times the necessary volume when synthesis must take place at an appreciable distance (by travel time) from the imager. It is useful to note that these two drawbacks serve to limit most group-level PET studies to sizes of 10 subjects or fewer, sometimes constraining the ability to generalize results to larger populations.

Specific Issues for Application of PET to Auditory Neuroscience

While much of neuroimaging has shifted toward functional MRI over the past two decades, PET continues to offer several technical advantages over fMRI that preserve its value as a tool in the investigation of the auditory system. First, as a passive imaging technique, PET is quiet, producing no background noise that can interfere with perception of an auditory stimulus. Second, again due to the passive nature of the PET detection process, the environment is electromagnetically compatible with a variety of auditory implants and assist devices (e.g., hearing aids). This makes PET ideal as a tool for the study of individuals for whom augmentation is essential for hearing. Third, the PET environment is generally “subject-friendly”, being relatively non-confining (the detector ring is relatively short, often “head-only”) and offering frequent and extensive interaction between experimenter and subject due to the short duration of most PET acquisitions (as noted above, typically one or two minutes).

Functional Magnetic Resonance Imaging (fMRI)

During the past two decades, a shift has taken place in functional imaging, largely away from PET to fMRI. Not only is the core technology associated with fMRI more widely available than PET, but with no associated radiation burden, the former has become an attractive alternative for large-scale cognitive studies in healthy volunteers. The first demonstration of the potential use of the MRI signal to localize brain function dates back to 1991 when Belliveau and colleagues showed differences in gadolinium concentration before and after visual stimulation in occipital cortex (Belliveau et al., 1991). Within the following year, original papers (Bandettini et al., 1992; Kwong et al., 1992; Ogawa et al., 1992) demonstrated how local changes in blood oxygenation levels, the blood-oxygenation level-dependent (BOLD) effect, could be used as an intrinsic marker of neuronal activity in humans, giving rise to BOLD-fMRI as we know it today; see Bandettini (2012) for a further historical review on fMRI.

The specific signal fluctuations observed in fMRI experiments reflect changes in the oxygenation of hemoglobin. When oxygenated (HbO), the hemoglobin molecule does not appreciably alter the magnetic field experienced by protons in tissues in the immediate vicinity. However, when reduced (HbR) by the extraction of oxygen into the tissue, the hemoglobin molecule becomes paramagnetic, reducing homogeneity of local magnetic fields. A reduction in the homogeneity of the local magnetic field reduces the coherence of the signals produced by the tissue’s protons, resulting in a lower net signal being observed. Therefore, as the relative concentrations of HbO and HbR ([HbO] and [HbR], respectively) vary, the signal obtained from a given region will also vary (Ogawa et al., 1990).

Basis of fMRI Neuroimaging

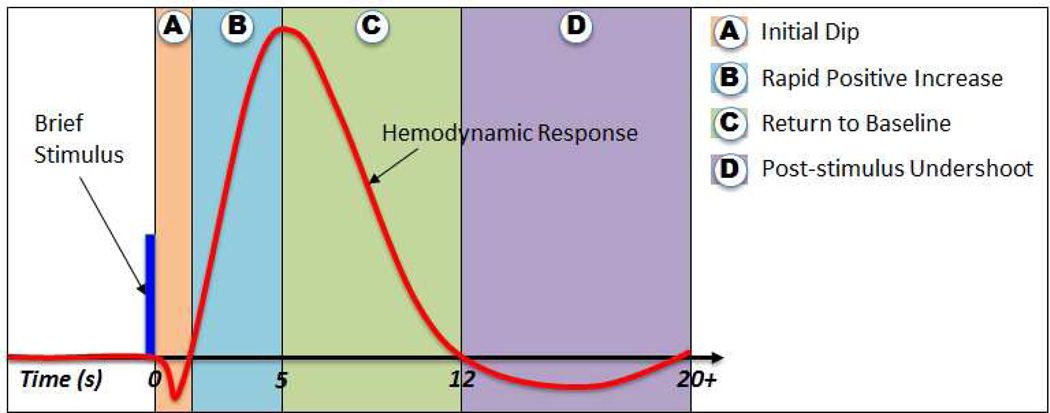

The signal fluctuations associated with changes in blood oxygenation may be characterized in a linear systems sense by the hemodynamic response that is measured by BOLD-fMRI (see Figure 2). This response reflects metabolic activity among neurons, glia and the local vasculature. Factors contributing to this measure include (but are not limited to) neuronal and glial physiology, rate of oxygen consumption, and regional blood flow and volume. These factors are integrated by the magnetic resonance imaging process over the millions of cells contained within each voxel—see Bandettini (2009) and Logothetis (2008) for reviews of the large literature on how neuronal activity is related to observed fMRI signals.

Figure 2.

Stereotypical example of a blood-oxygenation level-dependent (BOLD) hemodynamic response observed using functional magnetic resonance imaging (fMRI) subsequent to a brief (impulse-like) stimulation. Four response components are depicted following this stimulation. (A) The “initial dip” in which oxygen consumption temporarily outstrips delivery of oxygenated hemoglobin to the neural tissue. (B) A delayed, yet rapid increase of the signal level subsequent to increased local blood volume and blood flow, resulting in greater delivery of oxygenated hemoglobin than is required to offset oxygen consumption. The delay of this rise is typically observed to be 1–2 seconds, with the peak being achieved 4–7 seconds after stimulus onset. (C) Gradual return of the signal level to “baseline” (that observed prior to presentation of the stimulus) associated with decreases in blood volume and blood flow. (D) The post-stimulus undershoot during which the observed signal levels are depressed relative to those observed prior to stimulus presentation. This drop in signal level is associated with a temporal decoupling of the blood flow recovery relative to blood volume, producing decreased oxygen delivery. See Mandeville et al. (1998) for further information about the underlying physiology.

A brief, consensus, view is here presented to explain the use of fMRI to assess neuronal behavior. The initial increase in metabolic demand associated with neuronal activity drives down [HbO]:[HbR] through increased extraction of oxygen (Frostig et al., 1990), resulting in a localized signal drop referred to as the “initial dip” (Hennig et al., 1994; Hu et al., 1997). This initial dip (Figure 2A) is transient and of low amplitude, making it both difficult to measure and a less generally useful feature to use for detection of activation in fMRI studies (Sheth et al., 2005; Zarahn, 2001). Subsequent to the initial dip, capillaries proximal to the active neurons dilate and increase the local blood volume (Hillman et al., 2007; Malonek et al., 1997) and subsequently bring about additional local blood flow (Malonek et al., 1996). With the increased blood flow, delivery of oxygen exceeds the actual demand from the local active tissue, increasing the local [HbO]:[HbR]. The increase in this ratio is why increased metabolic demand results in an increase in local field homogeneity and an increased fMRI signal. Note that this hemodynamic response is sluggish relative to the neuronal behavior, and the peak of a response to an input occurs 4–7 seconds after onset of the driving stimulus or cognitive state (Figure 2B), with this delay varying with position in the brain, with typical delays in auditory cortex at the short end of this range (Saad et al., 2001). After a sustained “peak”, the return of neuronal activity to baseline produces first a decrease in local blood volume (presumably due to capillary contraction) followed by a decrease in local blood flow (Malonek et al., 1997; Mandeville et al., 1998). This two-step sequence leads to a drop of [HbO]:[HbR] to (Figure 2C) and then below the “baseline” level that existed prior to the increase in neuronal activity. As a result, there is a prolonged “undershoot” period in the fMRI signal time-course (Figure 2D).
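The linear-systems view of this response can be sketched numerically. The code below uses a double-gamma function, a commonly used approximation of the hemodynamic response; its shape parameters (6 and 16) and undershoot ratio (1/6) are assumptions taken from common practice rather than from this review, and the model reproduces only the peak, return to baseline, and undershoot (Figure 2B to 2D), not the initial dip. All function names are hypothetical.

```python
import math

def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density evaluated at time t (seconds)."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (math.gamma(shape) * scale ** shape)

def hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma response: a positive lobe minus a later, smaller undershoot lobe."""
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)

def sample_hrf(duration_s=32.0, dt=0.1):
    """Sample the response to an impulse-like stimulus every dt seconds."""
    return [hrf(i * dt) for i in range(int(round(duration_s / dt)) + 1)]

def convolve(stimulus, kernel):
    """Predicted signal for a stimulus time series under a linear, time-invariant model."""
    out = [0.0] * len(stimulus)
    for i, s in enumerate(stimulus):
        if s == 0:
            continue
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += s * k
    return out

dt = 0.1
kernel = sample_hrf(dt=dt)
peak_time = kernel.index(max(kernel)) * dt
trough_time = kernel.index(min(kernel)) * dt
print(f"peak at {peak_time:.1f} s, undershoot minimum at {trough_time:.1f} s")
```

With these assumed parameters the modeled peak falls within the 4–7 second window described above and is followed by a negative undershoot. Convolving `kernel` with a longer stimulus vector (for example, a block of ones) gives the response predicted for a sustained stimulus under the same linearity assumption.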

Given that BOLD-fMRI is thus clearly illustrating a response that is epi-phenomenological relative to the neuronal activity, it is useful to identify how the response should be interpreted. As noted above, each voxel in an fMRI dataset contains millions of neurons (Logothetis, 2008) and the gross behavior observed is likely to be a population-level effect, rather than necessarily the consequence of a small subset. Such an interpretation is consistent with the observation that fMRI signals are better predicted by the local field potential (LFP) than by multi-unit activity or post-synaptic potentials (Goense et al., 2008; Logothetis, 2003; Logothetis et al., 2001). Therefore, BOLD activity likely primarily reflects inputs to, and processing within, the region in which a signal change is detected, rather than providing a direct measure of the number or rate of (spike) outputs from that region (Logothetis, 2002; Viswanathan et al., 2007). As a result, while we refer to an increase in fMRI signal levels as “activation” we must recognize that this is not necessarily a reflection of increased spike activity in that area. Critically, it precludes the ability to make direct assignment of neural computation to any given area based solely on increased fMRI signal levels.

While the BOLD effect (as described above) is typically observed as a positive deviation from the baseline MR signal level associated with increases in neuronal activity, researchers have also reported negative signal changes (Gonzalez-Castillo et al., 2012; Logothetis, 2002; Saad et al., 2001; Shmuel et al., 2006; Shmuel et al., 2002; Tootell et al., 1998). As generally observed, these negative BOLD responses are similar to positive responses in being time-locked to stimulus or task onset, and having a comparable (though inverted) time course (Shmuel et al., 2006; Shmuel et al., 2002). Moreover, such responses are often observed spatially adjacent to positive BOLD responses (Shmuel et al., 2006; Wade et al., 2010)—see also Boas et al. (2008). While the physiological basis of such responses remains unclear, experimental evidence suggests that they are a consequence of reductions in local neuronal activity, including inhibition (Shmuel et al., 2006; Wade et al., 2010). Vascular mechanisms such as shunting of blood from neighboring regions have been proposed, but such mechanisms are unlikely to be sustained over the many seconds for which these responses persist. However, given the complex relationship among local changes in metabolism and vascular response, such behaviors are physiologically possible (Buxton et al., 1997; Uludag et al., 2009). Regardless of source, when inverted BOLD responses are observed in contrasts between active states, it does not make sense to refer to a “negative BOLD” signal, as the contrast obtained (even in analysis of the default mode network) is most probably between two levels of metabolic activity that are still elevated relative to a true “resting” (i.e., null activity) condition.

Current Advancements in fMRI

In the two decades since its inception, fMRI has developed at a dramatically fast pace due to radical improvements in hardware (e.g., increased field strength, coil arrays), acquisition techniques (e.g., parallel imaging), signal processing (e.g., more effective noise compensation), informatics (e.g., memory and processing capabilities), analytical tools (e.g., multi-variate pattern analysis and machine learning approaches) and experimental designs (e.g., resting state fMRI, simultaneous fMRI-EEG).

In terms of hardware, users of fMRI have enjoyed a dramatic increase in field strength from early sub-1T systems in the 1980s to today’s cutting-edge research magnets that exceed 10T. Increments in field strength provide increases in signal-to-noise ratio and shift the source of contributed signal from intra- to extravascular sources. Both of these field strength dependencies translate into better sensitivity to neuronal behavior, higher spatial resolution and better reproducibility for fMRI. Early fMRI research (1990s) was conducted primarily using 1.5T systems, with roughly 4 mm isotropic voxels, already representing a marked improvement in spatial resolution over PET and proving sufficient for imaging (gross) responses from subcortical and brainstem nuclei (e.g., Guimaraes et al., 1998). Today the core of fMRI experimentation is conducted in 3T systems installed around the globe, and typically facilitating 2.5–3 mm isotropic spatial resolutions. Additionally, a small body of approximately 35 human 7T scanners (Ugurbil, 2012) is already demonstrating the great potential of ultra-high field for fMRI experimentation and perhaps the clinic. These systems provide enough resolution to begin to resolve hemodynamic responses on a column-specific (Yacoub et al., 2008) and layer-specific (Olman et al., 2012) basis within the cortex. One caveat associated with imaging at higher field strength is that signal drop-out artifacts associated with air-tissue interfaces (e.g., sinuses or the mastoid cavity) are exacerbated at higher field strengths. Obtaining data using finer spatial resolution (i.e., greater in-plane matrix sizes, thinner slices) reduces these effects by minimizing the acquired volume encompassing these boundaries, and spin-echo (as opposed to gradient-echo) techniques reduce such artifacts by minimizing effects of static field inhomogeneities, but at a cost—particularly at lower field strengths—in signal-to-noise ratio.
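The practical meaning of these resolution figures is easier to see as volumes. The sketch below compares the volume of an isotropic cube for each edge length mentioned in the text; treating the approximately 6 mm effective resolution of PET as if it were a voxel edge is a simplifying assumption made only for comparison, and the function name is hypothetical.

```python
def voxel_volume_mm3(edge_mm):
    """Volume of an isotropic voxel with the given edge length."""
    return edge_mm ** 3

edges_mm = {
    "PET (~6 mm effective resolution)": 6.0,
    "1.5T fMRI (~4 mm)": 4.0,
    "3T fMRI (3 mm)": 3.0,
    "3T fMRI (2.5 mm)": 2.5,
}
for label, edge in edges_mm.items():
    print(f"{label}: {voxel_volume_mm3(edge):.1f} mm^3")
```

Because volume scales with the cube of the edge length, moving from 4 mm to 2.5 mm voxels reduces the sampled tissue volume per voxel by about a factor of four.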

A second hardware advance worth noting here is the development of procedures for using arrays of coils (e.g., Bodurka et al., 2004) in conjunction with advanced acquisition acceleration techniques that simultaneously acquire data from multiple slices (Feinberg et al., 2010; Moeller et al., 2010). Such advances can halve volume acquisition durations, critical to controlling the duration of acoustic noise associated with acquisition (see below) and for increasing the number of measurements that may be obtained in a given experiment, thus reducing the temporal signal-to-noise ratio necessary for detection of subtle responses (Murphy et al., 2007).

The hardware advancements noted above are now being matched by a move toward “big data” approaches to analysis and data sharing that may open fMRI to even more rapid advancement in the study of neuroscience than has taken place in its first twenty years. It is presently feasible, on high-end research systems, to acquire whole-brain fMRI scans with spatial resolutions in the vicinity of 1 mm³ and repetition times of 1 second or less. As a result, a single two-hour duration scanning session can produce up to 2 GB of raw data (DICOM images). Recent developments in informatics and drastic reduction in computer equipment costs permit neuroscientists to transfer, store, process and visualize these data in a relatively efficient manner. Interestingly, all these technical advances have recently been met by a substantial push for availability and sharing of public fMRI datasets. One such example is the Human Connectome Project (http://www.humanconnectome.org) financed by the NIH, which recently released to the public an initial dataset of 68 subjects (from a projected target of 1200 subjects) that includes whole-brain resting fMRI scans acquired with a repetition time of 720 ms and an isotropic spatial resolution of 2 mm. The driving force for data sharing is the possibility to rapidly achieve a significant jump in sample size from a few dozen subjects (common practice for individual-site studies) to several hundreds. In this manner neuroscientists will be able to benefit from powerful data mining techniques and “big data” approaches successfully applied today in other disciplines such as sociology or economics (Cukier et al., 2013).

Specific Issues for Application of fMRI to Auditory Neuroscience

While fMRI has become the tool of choice for many neuroscientists when trying to understand the neuronal correlates of complex auditory processing in the human brain, it still poses significant challenges in the application to auditory neuroscience due to a key confound that has proven difficult to eliminate: the acoustic noise associated with image acquisition. Typical echo-planar imaging acquisition procedures used in fMRI result in one or more magnetic gradient fields (those used to encode position in the object as a specific temporal frequency component) effecting an alternating positive/negative amplitude at a fixed temporal frequency (generally 750–1500 Hz). Such a “switched” gradient produces acoustic frequency contractions and expansions of the bore of the MRI, resulting in acoustic noise (referred to hereafter as a “ping” due to the strong harmonic structure of the resulting acoustic spectrum) of appreciable intensity (generally at least 70 dB SPL, sometimes as high as 115 dB SPL or greater) at frequencies to which the human auditory system is markedly sensitive (Foster et al., 2000; Ravicz et al., 2000).

Early in the history of fMRI, it was observed that this scanner noise confound was meaningful: it not only altered the perception of an acoustic stimulus, but could also alter subject behavior and observed patterns of activation (e.g., Tomasi et al., 2005) through production of its own associated hemodynamic response (Bandettini et al., 1998; Tamer et al., 2009). Therefore, the consequence of the acoustic noise generated by the image acquisition process is that many experimental paradigms result not in measurement of how the central auditory and associated networks are responding to the presented stimulus, but rather yield a measurement of how these networks are responding to the combination of the stimulus and noise (Edmister et al., 1999). Given that the majority of fMRI analyses remain based on a general linear model approach, the observed non-linear combination of responses in the auditory cortex (Talavage et al., 2004a) can limit the ability to resolve the lesser of the two responses (possibly precluding its statistical detection), leading to inaccurate assessment of network extent (i.e., area recruitment) and the relative strength of response to the intended stimulus (i.e., neural demand), possibly contributing to misattribution of functionality, such as highlighted in Barker et al. (2012). Addressing the issue of scanner noise has since been an area of marked interest in the auditory neuroscience research community. In spite of nearly 20 years of effort, this research has yet to produce wholly satisfactory results. Reductions of the consequences of this noise are typically achieved by one of two means: (1) altering the acoustic properties of the confounding noise as experienced by the subject, or (2) altering the means by which the noise interacts with the system being observed (e.g., the brain).

Acoustic properties of the noise generated by the imaging process are most commonly altered through increased attenuation of the sound pressure levels, either near the source (i.e., within the magnet housing) or at the receiver (e.g., ear defenders, ear plugs, active noise cancellation systems). These mostly passive approaches can significantly reduce the auditory perception of the scanner noise, but the vibrations induced in the bore of the scanner are not experienced solely at the ear, and bone conduction places an upper bound on the extent to which such measures can ultimately work. Maximal benefits are generally observed to be on the order of the gap between air and bone conduction, approximately 35–45 dB when using both earplugs and ear defenders, though dependent on frequency (Ravicz et al., 2001). Achieving attenuation beyond this level, while feasible, is largely impractical by passive means. Key issues related to overcoming interaction with the residual noise level tend to be effective delivery of the stimulus to the ear canal (via probe tubes through earplugs or else direct in-ear stimulation) and the level of threshold shift induced by the presence of the acoustic imaging noise, with the latter being reported as greater than 25 dB under relatively typical imaging conditions (e.g., Talavage et al., 2004b).
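The ceiling imposed by bone conduction can be illustrated with a short calculation. The Python sketch below is a simplification rather than a validated acoustic model: it assumes that the air-conducted and bone-conducted paths add incoherently (in power), it ignores the frequency dependence noted above, and the function name and the 40 dB default (a midpoint of the 35–45 dB range) are choices made for this example only.

```python
import math


def level_at_cochlea(source_db_spl, ear_attenuation_db, bone_gap_db=40.0):
    """Approximate scanner noise level reaching the cochlea, in dB SPL.

    Assumption: the air path (reduced by ear protection) and the bone path
    (reduced by the air/bone conduction gap) sum incoherently in power.
    """
    air_path = source_db_spl - ear_attenuation_db
    bone_path = source_db_spl - bone_gap_db
    total_power = 10.0 ** (air_path / 10.0) + 10.0 ** (bone_path / 10.0)
    return 10.0 * math.log10(total_power)


if __name__ == "__main__":
    source = 115.0  # upper end of the scanner noise levels quoted in the text
    for attenuation in (20.0, 40.0, 60.0, 80.0):
        level = level_at_cochlea(source, attenuation)
        print(f"{attenuation:4.0f} dB at the ear -> {level:6.2f} dB SPL at the cochlea")
```

Under these assumptions, adding ear protection beyond the conduction gap changes the result by only a fraction of a decibel, because the bone-conducted path then dominates.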

Active attenuation has been demonstrated (Hall et al., 2009; Mechefske et al., 2001) to be feasible, but to date there have not been appreciable improvements in the patterns of activity observed in the cortex (Blackman et al., 2011). This result is somewhat unexpected, but could arise from the fact that the active noise cancellation (resulting in ~35 dB additional attenuation at the ear) does not eliminate the presence of (sub-threshold) acoustic energy, which could still contribute to (partial) masking effects due to out-of-phase addition of residual noise signals over the spectrum of the presented stimulus (Vogten, 1978)—Hall et al. (2009) found masked detection thresholds improved by 20 dB, but were not necessarily equivalent to “out-of-the-magnet” values. Ultimately, while active attenuation can be used in small areas (e.g., the ear canal), the larger problem of bone conduction through the remainder of the body—exposed to the acoustic noise of imaging throughout the scanner bore—is likely impractical to solve. Therefore, the bone conduction limit may cap the maximal benefit that can be achieved through attenuation, as even enhanced attenuation of the “heard” noise may not overcome the probable (lesser) attenuation of the “experienced” noise.

A more desirable acoustic approach has been to alter the temporal structure of the image acquisition process to reduce or prevent the perception of acoustic frequency content. Initially this was achieved by slowing down, or making irregular, the temporal switching of the readout gradients, but such alterations are generally associated with image distortions or reduced temporal signal-to-noise ratios, both of which can markedly impact the ability to detect subtle changes in auditory cortex. That said, such approaches are likely to provide the best possible solutions, and more recent advances in “quiet” imaging sequences are encouraging. The sequence of Schmitter et al. (2008), evaluated in Peelle et al. (2010), reduces the scanning noise by more than 20 dB through generation of a different k-space trajectory that is “smooth” and also has a switching frequency lower than in typical EPI, resulting in acoustic energy in a less-bothersome frequency range. A documented benefit is that a quieter sequence requires less effort on the part of the listener, though this may be counter-productive in fMRI, as reduced effort likely results in lesser activation (Peelle et al., 2010). However, non-rectilinear acquisitions in k-space require regridding of acquired samples to a rectilinear grid for application of the traditional FFT relationship for MRI. Such manipulations degrade the spatial resolution of the data, hurting localization and identification of distinct foci of activity. In the case of approaches such as that of Schmitter et al. (2008), continuous gradients result in larger voxel dimensions along the readout direction (i.e., non-isotropic resolution) and markedly lower sensitivity near sources of rapidly changing magnetic susceptibility (e.g., air-tissue interfaces, such as the mastoid cavity or sinuses).

Recent advances in the application of non-Fourier sampling for MRI acquisition (e.g., compressed sensing) suggest that pseudo-random patterns of acquisition could be sufficient for rapid acquisition of fMRI data, provided that substantially increased image reconstruction times are acceptable, as is usually the case in a research, but not clinical, setting. It is likely that trajectories for the acquisition of the k-space data could be generated to produce an acoustic stimulus that is more white in spectral structure, resulting in a reduced stimulus percept and likely a lesser noise-induced response in the auditory pathway.

While the future of auditory fMRI will likely follow a path of reducing acoustic frequency energy through alterations to both the noise-generating hardware and the subsequent readout process, the present relies heavily on several acquisition paradigms that are intended to minimize the interaction between hemodynamic responses induced by the desired stimulus and those induced by the confounding noise. These include “sparse sampling” and “gapped” paradigms, both of which are intended to provide periods of time in which a stimulus may be perceived “in quiet”.

Sparse sampling designs (Eden et al., 1999; Edmister et al., 1999; Hall et al., 1999) generally follow the model of “pings – stimulus presentation in quiet – pings” to achieve delivery of the desired acoustic stimulus free from (dominant) confounds while maintaining a reasonable rate of both stimulus delivery and data acquisition. “Gapped” designs (e.g., Schmithorst et al., 2004) skip one or more readout periods while otherwise continually collecting data, with the stimulus presented in this (quiet) gap. Both designs—though particularly “gapped” approaches—can lead to variable tissue magnetization across volume acquisitions due to inconsistent signal recovery. This issue may be addressed by periodic presentation, at an integer factor of the inter-acquisition repetition time, of a radio frequency tip (without the subsequent readout) within the silent period—e.g., the interleaved silent steady-state approach of Schwarzbauer et al. (2006).

In general, acoustically optimal acquisitions would use volume acquisitions that are temporally short enough that the response induced by the acoustic noise of the initial slice acquisition has not yet become statistically different from zero by the time the final slice acquisition occurs, and would space these volume acquisitions sufficiently far apart that the response induced by the acoustic noise of the final slice acquisition has decayed near to or, ideally, below baseline (i.e., at or after the boundary between C and D in Figure 2) before the initial slice acquisition of the next volume. Given that such an acquisition would result in extremely low sampling frequencies (comparable to PET), and would require overly long experiments to permit comparison of multiple conditions, very few studies are so conducted. Rather, experimenters attempt a cost-benefit analysis and generally select approaches that result in clear perception of the stimulus, and correspondingly robust responses, even if one or both of these elements is not free from all scanner noise-related confound. Even with such a trade-off, this approach does reduce the temporal sampling rate, though this loss is partially, or even largely, offset by larger amplitude responses due to the more pristine nature of the presentation (Edmister et al., 1999).
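The timing argument above can be explored numerically. The following Python sketch makes strong simplifying assumptions that are not taken from the studies cited: the noise-induced response is modeled as a linear convolution of a boxcar (the clustered volume acquisition) with a generic double-gamma hemodynamic response, and the shape parameters, the 2 s acquisition time, and all function names are hypothetical. Because auditory cortex responses are reported to combine non-linearly, the output should be read only as a qualitative guide.

```python
import math


def hrf(t, peak_shape=6.0, undershoot_shape=16.0, undershoot_ratio=1.0 / 6.0):
    """Double-gamma impulse response at time t (seconds); an assumed shape."""
    if t <= 0.0:
        return 0.0
    peak = t ** (peak_shape - 1.0) * math.exp(-t) / math.gamma(peak_shape)
    undershoot = (
        t ** (undershoot_shape - 1.0) * math.exp(-t) / math.gamma(undershoot_shape)
    )
    return peak - undershoot_ratio * undershoot


def noise_response(t, acquisition_s, dt=0.05):
    """Response at time t to scanner noise lasting acquisition_s seconds from t = 0.

    Assumes linearity: a boxcar of noise convolved with the impulse response,
    integrated with the midpoint rule using step dt.
    """
    n_steps = int(round(acquisition_s / dt))
    return dt * sum(hrf(t - (i + 0.5) * dt) for i in range(n_steps))


def carry_over(tr_s, acquisition_s):
    """Noise-induced response still present when the next volume begins."""
    return noise_response(tr_s, acquisition_s)


if __name__ == "__main__":
    acquisition_s = 2.0  # hypothetical clustered volume acquisition time
    within = noise_response(acquisition_s, acquisition_s)
    print(f"response reached by the end of the same volume: {within:.4f}")
    for tr_s in (4.0, 8.0, 12.0, 20.0, 30.0):
        value = carry_over(tr_s, acquisition_s)
        print(f"TR = {tr_s:4.0f} s -> carry-over into next volume {value:+.4f}")
```

In this toy model a short acquisition finishes before its own noise response has risen appreciably, whereas the carry-over into the next volume only approaches zero for repetition times of tens of seconds, which mirrors the low sampling rates described above.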

The trade-offs specifically required for sparse sampling approaches are in terms of duration of stimulus presentation (limited by the duration of the inter-acquisition period), effective sampling of the response curve (i.e., number of samples acquired during the hemodynamic response), and the resulting interaction of the responses to the stimulus and the noise (now offset in time). In a recent study, Perrachione et al. (2013) sought to systematically identify the most efficient combination of repetition time, inter-stimulus interval and response model for sparse sampling strategies. Using a combination of simulation and experimental data, they came to the following recommendations: (1) maintain a high rate of stimulus presentation to maximize effect size; (2) use short to intermediate repetition times to maximize number of samples and statistical power; and (3) use physiologically informed models that appropriately account for the sluggishness of the hemodynamic response onset (e.g., see Figure 2B).

Partly out of concern for the trade-offs noted above, and partly to take advantage of technological advances that accelerate the volume acquisition process (e.g., multi-band readouts), it may be attractive to perform sparse sampling designs having extremely short repetition times (on the order of 100 milliseconds or less) and inter-stimulus intervals (appreciably shorter than the duration of the overall hemodynamic response of Figure 2). However, one must keep in mind that such designs may not provide the desired benefit, as the gain from the reduction in the number of “pings” and the increase in the number of acquisitions following stimulation may well be offset by increased acoustic masking, the increased history of “ping” delivery (e.g., Olulade et al., 2011), and the overlap of multiple hemodynamic responses, which can potentially interact in a non-linear manner, including saturation (Talavage et al., 2004a). Therefore, such paradigms, and even “continuous” approaches (i.e., distributed volume acquisition; Edmister et al., 1999), are attractive, but should be used with caution until the community has determined the noise level at which the linearity of auditory cortex hemodynamic responses is sufficient for effective analysis of more complex paradigms.

Contributions of PET and fMRI to Auditory Neuroscience

Over the past thirty years, a vast PET and fMRI literature in the domain of auditory neuroscience has developed. Here we will briefly summarize how PET and fMRI have contributed to our understanding of two particular topics within auditory neuroscience, one related to sensory perception (tonotopy) and one related to cognitive function (speech perception). These two historical overviews demonstrate the benefit of the application of neuroimaging in humans, and, further, illustrate a variety of powerful experimental designs that may be applied to a wide range of potential auditory-related experiments.

Investigations of the Tonotopic Organization of Auditory Cortex

Arising from mechanical properties of the cochlea, tonotopy—a linear arrangement of neurons according to best frequency—is a fundamental organizing principle of the auditory pathway. Multiple tonotopic maps have long been observed in the auditory cortex of experimental animals, with each map typically corresponding to a cytoarchitecturally distinct area (Morel et al., 1993; Reale et al., 1980). Prior to the advent of neuroimaging, tonotopy was largely inferred to exist in humans, but had not been documented beyond neuroanatomical analysis suggesting “in register” projections that ultimately reached Heschl’s gyrus (site of primary auditory cortex).

Due to the traditional assumption that auditory perception and processing may be in part based on features, including those that are frequency-specific, it has been of great interest to the neuroimaging community to investigate the tonotopic organization of human auditory cortex. Romani et al. (1982) were the first to demonstrate, using MEG, that four distinct frequencies resulted in depths (relative to the scalp) of generators on Heschl’s gyrus that co-varied with the presented frequency, indicating that, at minimum, a first order tonotopic organization was present. Similar work was subsequently performed by Lauter et al. (1985), using PET to image correlates of two probe frequencies. This work provided the first direct localization of responses on Heschl’s gyrus, agreeing with the historical literature in the observation that higher frequencies are represented more medially and posteriorly along the gyrus than lower frequencies.

In spite of the hostile acoustic environment, investigation of tonotopic organization was a relatively common pursuit in early fMRI. Pair-wise analyses were conducted by Wessinger et al. (1997) and Bilecen et al. (1998), with results on Heschl’s gyrus similar to those observed in PET (e.g., Lockwood et al., 1999). Larger scale analyses, using more probes or seeking to image a broader expanse of cortex, were subsequently conducted with fMRI (Formisano et al., 2003; Talavage et al., 2000; Yang et al., 2000) and identified potential progressions of frequency sensitivity outside of primary auditory cortex, though such findings were not always robust (Langers et al., 2007; Schönwiesner et al., 2002). Nonetheless, the observed non-primary locations for responses were consistent with findings from MEG (Cansino et al., 1994; Pantev et al., 1995) that first demonstrated the potential presence of multiple tonotopic maps in human auditory cortex.

The next step for the investigation of tonotopic organization using neuroimaging came from fMRI studies in visual neuroscience, in which novel experimental phase-encoding paradigms were used to reveal multiple retinotopic maps in the human visual cortex; for a review of such paradigms, see Engel (2012). The retinotopic maps were found to be separable based on reversals of horizontal or vertical position gradients, with these reversals generally occurring near cytoarchitectonic boundaries (Sereno et al., 1995). Such reversals of tonotopic maps had previously been observed in experimental animals, where maps in adjacent cytoarchitectonic regions were frequently found to exhibit a “mirror” organization, abutting at high- or low-frequency ends (e.g., Morel et al., 1993). Building on this knowledge, band-filtered noise sweeps were presented in phase-encoding paradigms by Talavage et al. (2004b) to map peak frequency selectivity as a function of position on the cortical surface. This work ultimately suggested as many as seven tonotopic mappings in the auditory cortex that could be consistent with underlying cytoarchitectonic subdivisions (Galaburda et al., 1980; Rivier et al., 1997).
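The logic of a phase-encoding analysis can be sketched in a few lines of Python. This is a minimal illustration and not the analysis pipeline of any study cited here: it assumes a stimulus that sweeps upward on a logarithmic frequency axis once per cycle, a fixed hemodynamic delay that is known in advance, and a noiseless sinusoidal voxel response. The sweep range, timing values, and function names are all hypothetical.

```python
import cmath
import math


def response_lag(ts, tr_s, cycle_s):
    """Phase lag in [0, 2*pi) of the time series component at the sweep rate."""
    mean = sum(ts) / len(ts)
    omega = 2.0 * math.pi / cycle_s
    # Single-frequency Fourier coefficient at the stimulus cycle frequency.
    coeff = sum(
        (x - mean) * cmath.exp(-1j * omega * k * tr_s) for k, x in enumerate(ts)
    )
    return (-cmath.phase(coeff)) % (2.0 * math.pi)


def preferred_frequency(ts, tr_s, cycle_s, f_low_hz, f_high_hz, delay_s=0.0):
    """Map the response lag to a frequency on an upward logarithmic sweep."""
    omega = 2.0 * math.pi / cycle_s
    lag = (response_lag(ts, tr_s, cycle_s) - omega * delay_s) % (2.0 * math.pi)
    fraction = lag / (2.0 * math.pi)  # position within the sweep cycle
    return f_low_hz * (f_high_hz / f_low_hz) ** fraction


if __name__ == "__main__":
    tr_s, cycle_s, n_cycles = 2.0, 64.0, 8  # hypothetical timing
    delay_s = 5.0                           # assumed hemodynamic delay
    peak_time_s = 16.0 + delay_s            # voxel driven a quarter of the way into each sweep
    n_volumes = int(n_cycles * cycle_s / tr_s)
    voxel = [
        math.cos(2.0 * math.pi * (k * tr_s - peak_time_s) / cycle_s)
        for k in range(n_volumes)
    ]
    estimate = preferred_frequency(voxel, tr_s, cycle_s, 125.0, 8000.0, delay_s)
    print(f"estimated preferred frequency: {estimate:.1f} Hz")
```

Repeating this estimate at every voxel yields a map of preferred frequency across the cortical surface; reversals in the direction of the frequency gradient are the features used to separate adjacent maps. In practice the hemodynamic delay is not known exactly, which is one reason such designs often combine sweeps in opposite directions.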

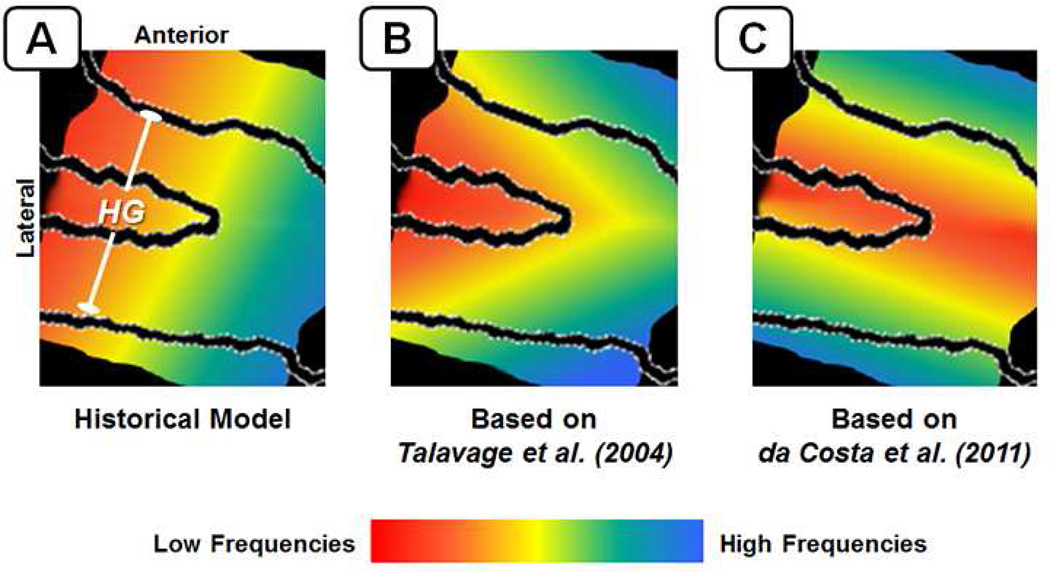

A key gain in our understanding of the frequency-selective organization of auditory cortex arising from these neuroimaging studies was a break from the classical model that the low-to-high frequency gradient lay along the length of the superior aspect of Heschl’s gyrus (Figure 3A). The study by Talavage et al. (2004b) reported mirrored tonotopic maps, sharing a lower-frequency sensitive area on the superior aspect of the gyrus, but running obliquely such that the corresponding higher-frequency areas were observed on the anterior and posterior faces (Figure 3B), as previously observed in Talavage et al. (2000). Working at 7T, Da Costa et al. (2011) used a comparable paradigm with discrete tones rather than continuous sweeps to highlight that the alignment of the primary auditory areas was more likely to be normal to the long axis of Heschl’s gyrus (Figure 3C). Studies by Woods et al. (2010), Humphries et al. (2010) and Langers and van Dijk (2012) have applied event-related stimulus presentation paradigms with uncorrelated input sequences of tones to further delineate boundaries between cortical areas, revealing a more complex structure to auditory cortical frequency selectivity than could have been envisioned without neuroimaging, and critically one that is readily reconciled with observations in the brains of experimental primates (e.g., Baumann et al., 2013).

Figure 3.

Diagrams to illustrate change in understanding of the organization of frequency selectivity (i.e., tonotopy) in primary auditory areas, as brought about by the application of functional neuroimaging. (A) The historical literature proposed a low-to-high gradient of frequency selectivity running along the superior aspect of Heschl’s gyrus, from antero-lateral to postero-medial. (B) Early continuous mapping of frequency selectivity suggested two “mirrored” maps on Heschl’s gyrus, sharing a low-frequency selective area on the superior aspect, with high-frequency selective areas on the antero-medial and postero-medial faces of the gyrus (Talavage et al., 2000; Talavage et al., 2004b). (C) High-field (7T) mappings using advanced event-related fMRI experimentation reveal a more complex arrangement in the entirety of auditory cortex, with the finding that frequency selective gradients in the primary auditory areas run normal to the long axis of Heschl’s gyrus (Da Costa et al., 2011).

Investigations of Speech Processing in Auditory Cortex

A recent review by Price (2012) presents an in-depth history of the contributions of both PET and fMRI to our current understanding of how the brain processes spoken and written language. Here, we will only briefly summarize some milestones of this research endeavor, and how PET and fMRI have contributed to redefining early classical models of speech perception such as those of Geschwind (1970) and Luria (1972), which effectively argued for a single stream of speech processing and for the anatomical divorce of speech production from speech perception along an anterior-posterior dimension.

Important early contributions from PET and fMRI were to highlight the distributed and parallel nature of speech processing in the human brain (fMRI: Benson et al., 1996; Binder et al., 1997; PET: Petersen et al., 1988; Wise et al., 1991). Such work highlighted that classical models that leaned toward single streams of processing were not fully capturing the complex structure of processing in the brain. Beyond the mere number of processing streams involved in processing complex sounds, neuroimaging findings also further challenged strict lateralization models of speech processing, highlighting the bilateral nature of activation for different properties of speech stimuli across several populations of subjects (Belin et al., 1998; Binder et al., 1996; Scott et al., 2003; Szaflarski et al., 2002; Zatorre et al., 1992).

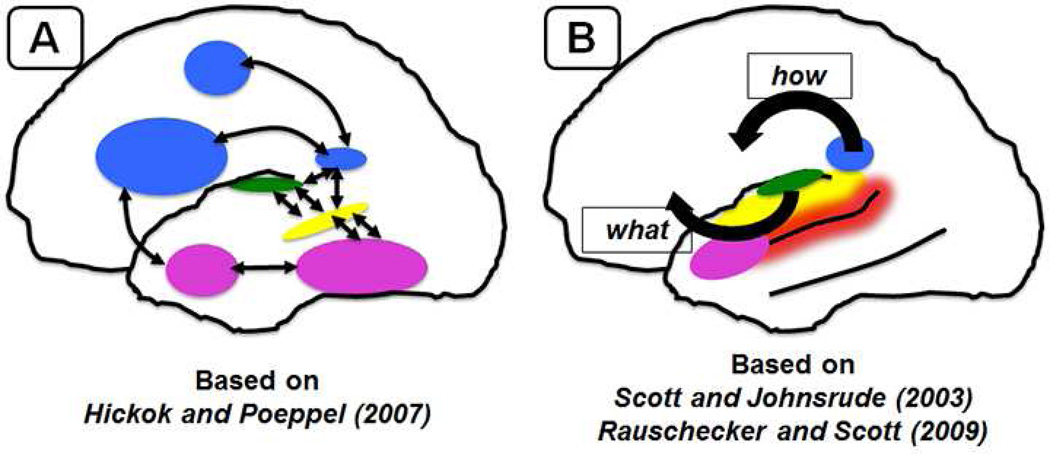

More recent work using fMRI has permitted neuroscientists to further clarify the parallel streams involved in processing speech into the powerful dual-stream speech-processing model (Hickok et al., 2004; Scott et al., 2004), which today constitutes one of the best accepted models for how we understand spoken language. Building on the earlier observations that speech comprehension is highly parallelized and distributed, the dual-stream model holds that speech processing results from the combination of parallel ventral and dorsal streams of processing that are initiated in early auditory cortex and proceed along two well differentiated routes. The ventral stream of processing is proposed to be involved in mapping sound to meaning. According to Hickok and Poeppel (2000; 2004; 2007), this stream starts in the posterior part of the superior temporal gyrus (STG) and then projects ventro-laterally into the inferior posterior temporal cortex (see Figure 4A). In contrast, the sound to meaning pathway (or “what” pathway; see Figure 4B) is proposed by others to project anteriorly along the STG and superior temporal sulcus (STS), and to terminate in the more anterior STS (Rauschecker et al., 2009; Scott et al., 2003). Different experiments have tried to link each of these regions with specific processing roles ranging from phonologic and phonemic to semantic processing, but no general consensus about the specific roles of each of these regions has yet been reached, beyond a consistent demonstration of left anterior STS responses to intelligible speech (Evans et al., 2013; Okada et al., 2010; Scott et al., 2000) and a clear role for the STG in the processing of acoustic phonetic information (Davis et al., 2003; Jacquemot et al., 2003). There is less controversy about the second, dorsal, stream, which is posited to be involved in mapping of sound into articulatory representations. This second stream projects from the superior temporal gyrus dorso-posteriorly and includes portions of the posterior Sylvian fissure and also frontal regions. This stream provides neurobiological support for aspects of the long-disputed motor theory of speech perception (Liberman et al., 2000), which argues that speech comprehension heavily relies upon articulatory/gestural representations of speech.

Figure 4.

Models of perception of speech, as informed by results of functional neuroimaging. (A) Model based on Hickok and Poeppel (2007). Green: primary auditory/early auditory cortex – spectrotemporal processing, bilaterally. Yellow: middle to posterior portions of the superior temporal sulcus (STS) bilaterally – phonological processing and representation. Blue: dorsal pathway – maps sensory or phonological representations onto motor representations. Runs from posterior medial planum temporale to motor and premotor fields. Left lateralized. Pink: ventral pathway – maps sensory or phonological representations onto lexical and conceptual representations. Runs ventrally from the mid/posterior STS back towards the posterior middle temporal gyrus and posterior inferior temporal lobe, then forward towards the anterior middle temporal gyrus and the anterior inferior temporal lobe. (B) The What/How Model, based on Scott and Johnsrude (2003) and Rauschecker and Scott (2009). Green: primary auditory cortex – complex context specific processing of sound. Yellow: left superior temporal gyrus – auditory association cortex, processes acoustic-phonetic properties of stimuli. Red: left superior temporal sulcus – associated with processing higher order information in the speech signal – consonant vowel combinations, words. Pink: left anterior STS – associated with responses to intelligibility in speech at the order of words and sentences, and insensitive to acoustic properties of the sound. Blue: posterior medial planum/posterior STG – associated with a ‘how’ stream of processing, in which sounds are represented with respect to their associated motor representations. Bilateral or unilateral, depending on task.

Beyond the fundamental reformation of our understanding of how the normal brain processes speech, PET and fMRI continue to provide novel information about speech perception in the context of disordered systems. In the clinical realm, it has now become feasible to use fMRI to conduct auditory studies that focus on single-subject assessment for the purpose of non-invasive pre-surgical mapping of eloquent cortex (Bookheimer, 2007). The goal here is not to understand how the brain processes sounds, but rather to delineate areas of the brain near the target of the surgery that, if resected or damaged, may leave the subject unable to communicate following the surgery. A further realm of future interest for our understanding of language processing is the study of individuals who are deafened or who use hearing augmentation. Work using PET and fMRI in patients using cochlear implants has revealed substantial plastic capacity in the cortex of congenitally-deaf individuals (Giraud et al., 2001; Lazard et al., 2013). Of comparable interest will be subjects whose brains have adapted to altered forms of language input such as American Sign Language (Karns et al., 2012; Malaia et al., 2012; Neville et al., 1998), or degraded acoustic stimuli, such as those produced by vocoders that are intended to mimic cochlear implant stimulation (e.g., Giraud et al., 2004).

The Future of Hemodynamic Imaging in Auditory Neuroscience

Within the auditory realm, both PET and fMRI retain the potential to serve critical scientific and clinical roles. In spite of the general shift of cognitive neuroscience studies toward fMRI, PET offers several technical advantages that preserve its value as a tool for the investigation of the auditory system. First, PET is a quiet imaging technique, offering significant advantage over fMRI for the presentation of auditory stimuli without any (even intermittent) background noise. Second, because the PET imaging environment is electromagnetically passive, it can easily accommodate high-fidelity sound delivery. This aspect of PET also makes it compatible with the metallic and magnetic components contained in cochlear and brainstem implants and many hearing aids, permitting neuroimaging of individuals wearing and using these devices (Giraud et al., 2000) though fMRI has potential for predictive evaluation of possible implant users (Lazard et al., 2012). Additionally, PET offers considerable potential for studying distributions and effects of neurochemically-specific processes in vivo, as specific neuroreceptors and neurotransmitters (and associated agonists and antagonists) may be targeted for imaging—for examples, see Reith et al. (1994) and Busatto et al. (1995). At this time, however, fMRI appears as though it will serve in the future as the primary workhorse for the hemodynamic-based study of auditory function. For this to be most useful, a number of advances (described above) are in progress related to the acoustic environment and our understanding of how to probe the brain’s behavior to elucidate its underlying function.

One area of research that both benefits from and tests the sensitivity limits of fMRI is the application of machine learning strategies for “brain decoding”; see Norman et al. (2006) and Pereira et al. (2009) for reviews on this topic. In brain decoding experiments the primary goal is not to identify which regions of the brain are responsive to a given stimulus or task, but to test whether spatio-temporal patterns of BOLD response from individual brain regions contain sufficient information to infer specific details about the stimulus/task presented to or observed by the subject at a given moment in time. The majority of early fMRI decoding work focused on the visual system (Kriegeskorte et al., 2008; Polimeni et al., 2010) because its functional organization is relatively well understood and the MRI signal from the back of the head has high contrast-to-noise ratio and relatively few imaging distortions. However, fMRI brain decoding is not limited to the visual system, and successful decoding results have also been reported for auditory stimuli. For example, Formisano et al. (2008) were able to decode “what” was being spoken (which one out of three possible Dutch vowels) and “who” was speaking (which one out of three different native Dutch speakers) using a support vector machine technique trained on BOLD response patterns within portions of the superior temporal gyrus and superior temporal sulcus. Further, in a recent cross-modality study, Meyer et al. (2010) found that the subjective experience of sound (that associated with visual stimuli that imply sound but are silent) was sufficient to differentiate between various animals, musical instruments and objects presented visually, using only BOLD responses from auditory cortex as input to a multivariate pattern analysis. These studies exemplify the power of brain decoding techniques when applied to high quality/high resolution fMRI data, and how these techniques may help us better understand how and where specific conceptualizations (sounds, speaker identity, etc.) are encoded in the human brain.
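The core logic of a decoding analysis, training a classifier on multi-voxel patterns and testing it on held-out trials, can be sketched as follows. This Python example is illustrative only: it substitutes a simple nearest-centroid classifier for the support vector machine used in the work cited above, it operates on synthetic data rather than BOLD measurements, and the function names, numbers of voxels and trials, and noise level are hypothetical.

```python
import random


def make_patterns(n_classes=3, n_trials=10, n_voxels=20, noise_sd=1.0, seed=0):
    """Synthetic trials: each class has a fixed voxel pattern plus Gaussian noise."""
    rng = random.Random(seed)
    prototypes = [
        [rng.gauss(0.0, 1.0) for _ in range(n_voxels)] for _ in range(n_classes)
    ]
    data = []
    for label, prototype in enumerate(prototypes):
        for _ in range(n_trials):
            data.append(([p + rng.gauss(0.0, noise_sd) for p in prototype], label))
    return data


def centroid(patterns):
    """Voxel-wise mean of a list of patterns."""
    return [sum(column) / len(patterns) for column in zip(*patterns)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def leave_one_out_accuracy(data):
    """Classify each trial using centroids computed from all other trials."""
    labels = sorted({label for _, label in data})
    correct = 0
    for i, (test_pattern, test_label) in enumerate(data):
        training = data[:i] + data[i + 1:]  # the held-out trial never informs the centroids
        centroids = {
            lab: centroid([p for p, l in training if l == lab]) for lab in labels
        }
        predicted = min(
            labels, key=lambda lab: squared_distance(test_pattern, centroids[lab])
        )
        if predicted == test_label:
            correct += 1
    return correct / len(data)


if __name__ == "__main__":
    accuracy = leave_one_out_accuracy(make_patterns())
    print(f"leave-one-out accuracy: {accuracy:.2f} (chance is about 0.33)")
```

Accuracy reliably above chance on held-out trials is the evidence that the patterns carry information about the stimulus class; keeping test trials out of the training step is what makes that inference valid.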

A second area of fMRI research that has grown rapidly in recent years is that of resting-state functional connectivity. Biswal et al. (1995) demonstrated that low frequency BOLD signal fluctuations (< 0.1 Hz) recorded while subjects lay still in the scanner, in the absence of any task or stimulation, exhibit patterns of spatial correlation similar to activation maps obtained with tasks. While the original observation focused on spatial co-fluctuation of left and right primary motor cortex, many studies have demonstrated co-fluctuations of other cortical regions, including those in auditory cortex. These networks, commonly referred to as “resting-state” networks or “intrinsic connectivity” networks, have proven to be highly reliable across subjects (Damoiseaux et al., 2006) and to show great correspondence with activation-based dynamics encountered in task-based experiments (Smith et al., 2009). Resting state fMRI is an attractive research approach for auditory neuroscience given its simplicity (no need for stimulus delivery or response recording systems), minimal subject compliance requirements (remaining still), and its suitability for public data sharing; see van den Heuvel et al. (2010) and Van Dijk et al. (2010) for in-depth reviews on this topic. Evidencing its potential clinical applicability in spite of a lack of consensus regarding analysis and interpretation of resting state data, several studies have demonstrated correlations between disruption of resting state connectivity and some major developmental and neurological conditions, including dyslexia (Rogers et al., 2007; van der Mark et al., 2011). Further, such analysis lends itself well to “naturalistic” conditions that are otherwise difficult to assess using traditional contrast-based fMRI (e.g., narratives; Wilson et al., 2008).
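The seed-based form of the correlation analysis that underlies resting-state connectivity can be sketched in Python as follows. The example uses synthetic time series and omits the preprocessing (motion correction, temporal filtering, nuisance regression) that real analyses require; the region labels, the 0.05 Hz shared fluctuation, the noise levels, and the function names are assumptions made for illustration.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate_regions(n_volumes=200, tr_s=2.0, slow_hz=0.05, noise_sd=0.5, seed=1):
    """Two regions share a slow (< 0.1 Hz) fluctuation; a control region does not."""
    rng = random.Random(seed)
    shared = [math.sin(2.0 * math.pi * slow_hz * k * tr_s) for k in range(n_volumes)]
    left = [s + rng.gauss(0.0, noise_sd) for s in shared]
    right = [s + rng.gauss(0.0, noise_sd) for s in shared]
    control = [rng.gauss(0.0, 1.0) for _ in range(n_volumes)]
    return left, right, control


if __name__ == "__main__":
    left, right, control = simulate_regions()
    print(f"seed (left) vs. homologous right region: r = {pearson(left, right):+.2f}")
    print(f"seed (left) vs. unrelated control region: r = {pearson(left, control):+.2f}")
```

Computing this correlation between one seed time series and every other voxel produces a connectivity map, with high values marking regions whose spontaneous fluctuations track the seed.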

Finally, we close with a brief note highlighting a new advance in imaging technology that makes it possible to use both PET and fMRI in the same scan session on the same subject. Newly developed PET/MR systems now provide a means to more directly assess metabolic activity (in the context of cellular or neurochemical processes) in conjunction with enhanced localization and measurement of gross neurophysiological behavior (in the context of hemodynamics) (Heiss, 2009; Stegger et al., 2012). As we move forward with a desire to understand the chemical (e.g., neurotransmitter, neuropeptide) basis of what we have now been observing for over 30 years in the brain, the future holds great promise for an enhanced understanding of how the various cells and structures in the auditory pathway interact to produce complex individual and communal behavior.

Highlights

- Neuroimaging has enhanced our understanding of sensory and speech processing
- Our understanding of neural organizational principles is enhanced by neuroimaging
- Examination of the auditory pathway with fMRI is challenging, yet effective
- Auditory neuroimaging has potential for contribution to clinical applications

References

- Bandettini PA. Seven topics in functional magnetic resonance imaging. Journal of integrative neuroscience. 2009;8:371–403. doi: 10.1142/s0219635209002186.

- Bandettini PA. Twenty years of functional MRI: the science and the stories. NeuroImage. 2012;62:575–588. doi: 10.1016/j.neuroimage.2012.04.026.

- Bandettini PA, Wong EC, Hinks RS, Tikofsky RS, Hyde JS. Time course EPI of human brain function during task activation. Magnetic Resonance in Medicine. 1992;25:390–397. doi: 10.1002/mrm.1910250220.

- Bandettini PA, Jesmanowicz A, Van Kylen J, Birn RM, Hyde JS. Functional MRI of brain activation induced by scanner acoustic noise. Magnetic Resonance in Medicine. 1998;39:410–416. doi: 10.1002/mrm.1910390311.

- Barker D, Plack CJ, Hall DA. Reexamining the evidence for a pitch-sensitive region: a human fMRI study using iterated ripple noise. Cereb Cortex. 2012;22:745–753. doi: 10.1093/cercor/bhr065.

- Baumann S, Petkov CI, Griffiths TD. A unified framework for the organization of the primate auditory cortex. Front Syst Neurosci. 2013;7:11. doi: 10.3389/fnsys.2013.00011.

- Baumann S, Griffiths TD, Sun L, Petkov CI, Thiele A, Rees A. Orthogonal representation of sound dimensions in the primate midbrain. Nature neuroscience. 2011;14:423–425. doi: 10.1038/nn.2771.

- Belin P, Zilbovicius M, Crozier S, Thivard L, Fontaine A, Masure MC, Samson Y. Lateralization of speech and auditory temporal processing. J Cogn Neurosci. 1998;10:536–540. doi: 10.1162/089892998562834.

- Belliveau JW, Kennedy DN Jr, McKinstry RC, Buchbinder BR, Weisskoff RM, Cohen MS, Vevea JM, Brady TJ, Rosen BR. Functional mapping of the human visual cortex by magnetic resonance imaging. Science. 1991;254:716–719. doi: 10.1126/science.1948051.

- Benson RR, Logan WJ, Cosgrove GR, Cole AJ, Jiang H, LeSueur LL, Buchbinder BR, Rosen BR, Caviness VS Jr. Functional MRI localization of language in a 9-year-old child. The Canadian Journal of Neurological Sciences. 1996;23:213–219. doi: 10.1017/s0317167100038543.

- Bickford RG, Jacobson JL, Cody DT. Nature of Average Evoked Potentials to Sound and Other Stimuli in Man. Annals of the New York Academy of Sciences. 1964;112:204–223. doi: 10.1111/j.1749-6632.1964.tb26749.x.

- Bilecen D, Scheffler K, Schmid N, Tschopp K, Seelig J. Tonotopic organization of the human auditory cortex as detected by BOLD-FMRI. Hearing research. 1998;126:19–27. doi: 10.1016/s0378-5955(98)00139-7.

- Binder JR, Frost JA, Hammeke TA, Cox RW, Rao SM, Prieto T. Human brain language areas identified by functional magnetic resonance imaging. Journal of Neuroscience. 1997;17:353–362. doi: 10.1523/JNEUROSCI.17-01-00353.1997.

- Binder JR, Swanson SJ, Hammeke TA, Morris GL, Mueller WM, Fischer M, Benbadis S, Frost JA, Rao SM, Haughton VM. Determination of language dominance using functional MRI: a comparison with the Wada test. Neurology. 1996;46:978–984. doi: 10.1212/wnl.46.4.978.

- Biswal B, Yetkin FZ, Haughton VM, Hyde JS. Functional connectivity in the motor cortex of resting human brain using echo-planar MRI. Magnetic Resonance in Medicine. 1995;34:537–541. doi: 10.1002/mrm.1910340409.

- Blackman GA, Hall DA. Reducing the effects of background noise during auditory functional magnetic resonance imaging of speech processing: qualitative and quantitative comparisons between two image acquisition schemes and noise cancellation. Journal of Speech, Language, and Hearing Research. 2011;54:693–704. doi: 10.1044/1092-4388(2010/10-0143).

- Boas DA, Jones SR, Devor A, Huppert TJ, Dale AM. A vascular anatomical network model of the spatio-temporal response to brain activation. NeuroImage. 2008;40:1116–1129. doi: 10.1016/j.neuroimage.2007.12.061.

- Bodurka J, Ledden PJ, van Gelderen P, Chu R, de Zwart JA, Morris D, Duyn JH. Scalable multichannel MRI data acquisition system. Magnetic Resonance in Medicine. 2004;51:165–171. doi: 10.1002/mrm.10693.

- Bookheimer S. Pre-surgical language mapping with functional magnetic resonance imaging. Neuropsychology review. 2007;17:145–155. doi: 10.1007/s11065-007-9026-x.

- Brainard MS, Doupe AJ. Interruption of a basal ganglia-forebrain circuit prevents plasticity of learned vocalizations. Nature. 2000;404:762–766. doi: 10.1038/35008083.

- Broca P. Remarks on the seat of the faculty of articulate language, followed by an observation of aphemia. Some papers on the cerebral cortex. 1861:199–220.

- Busatto GF, Pilowsky LS. Neuroreceptor mapping with in-vivo imaging techniques: principles and applications. British Journal of Hospital Medicine. 1995;53:309–313.

- Buxton RB, Frank LR. A model for the coupling between cerebral blood flow and oxygen metabolism during neural stimulation. Journal of Cerebral Blood Flow and Metabolism. 1997;17:64–72. doi: 10.1097/00004647-199701000-00009.

- Cansino S, Williamson SJ, Karron D. Tonotopic organization of human auditory association cortex. Brain research. 1994;663:38–50. doi: 10.1016/0006-8993(94)90460-x.

- Cody DT, Jacobson JL, Walker JC, Bickford RG. Averaged Evoked Myogenic and Cortical Potentials to Sound in Man. The Annals of otology, rhinology, and laryngology. 1964;73:763–777. doi: 10.1177/000348946407300315.

- Cohn R. Differential cerebral dominance to noise and verbal stimuli. Transactions of the American Neurological Association. 1971;96:127–131.

- Cukier K, Mayer-Schoenberger V. The Rise of Big Data: How It's Changing the Way We Think About the World. Foreign Affairs. 2013;92:28–40.

- Da Costa S, van der Zwaag W, Marques JP, Frackowiak RS, Clarke S, Saenz M. Human primary auditory cortex follows the shape of Heschl's gyrus. Journal of Neuroscience. 2011;31:14067–14075. doi: 10.1523/JNEUROSCI.2000-11.2011.

- Damoiseaux JS, Rombouts SA, Barkhof F, Scheltens P, Stam CJ, Smith SM, Beckmann CF. Consistent resting-state networks across healthy subjects. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:13848–13853. doi: 10.1073/pnas.0601417103.

- Davis MH, Johnsrude IS. Hierarchical processing in spoken language comprehension. Journal of Neuroscience. 2003;23:3423–3431. doi: 10.1523/JNEUROSCI.23-08-03423.2003.

- Eden GF, Joseph JE, Brown HE, Brown CP, Zeffiro TA. Utilizing hemodynamic delay and dispersion to detect fMRI signal change without auditory interference: the behavior interleaved gradients technique. Magnetic Resonance in Medicine. 1999;41:13–20. doi: 10.1002/(sici)1522-2594(199901)41:1<13::aid-mrm4>3.0.co;2-t.

- Edmister WB, Talavage TM, Ledden PJ, Weisskoff RM. Improved auditory cortex imaging using clustered volume acquisitions. Human brain mapping. 1999;7:89–97. doi: 10.1002/(SICI)1097-0193(1999)7:2<89::AID-HBM2>3.0.CO;2-N.

- Engel SA. The development and use of phase-encoded functional MRI designs. NeuroImage. 2012;62:1195–1200. doi: 10.1016/j.neuroimage.2011.09.059.

- Evans S, Kyong JS, Rosen S, Golestani N, Warren JE, McGettigan C, Mourao-Miranda J, Wise RJ, Scott SK. The Pathways for Intelligible Speech: Multivariate and Univariate Perspectives. Cereb Cortex. 2013. doi: 10.1093/cercor/bht083.

- Feinberg DA, Moeller S, Smith SM, Auerbach E, Ramanna S, Gunther M, Glasser MF, Miller KL, Ugurbil K, Yacoub E. Multiplexed echo planar imaging for sub-second whole brain FMRI and fast diffusion imaging. PloS one. 2010;5:e15710. doi: 10.1371/journal.pone.0015710.

- Formisano E, De Martino F, Bonte M, Goebel R. “Who” is saying “what”? Brain-based decoding of human voice and speech. Science. 2008;322:970–973. doi: 10.1126/science.1164318.

- Formisano E, Kim DS, Di Salle F, van de Moortele PF, Ugurbil K, Goebel R. Mirror-symmetric tonotopic maps in human primary auditory cortex. Neuron. 2003;40:859–869. doi: 10.1016/s0896-6273(03)00669-x.

- Foster JR, Hall DA, Summerfield AQ, Palmer AR, Bowtell RW. Sound-level measurements and calculations of safe noise dosage during EPI at 3 T. Journal of Magnetic Resonance Imaging. 2000;12:157–163. doi: 10.1002/1522-2586(200007)12:1<157::aid-jmri17>3.0.co;2-m.

- Frostig RD, Lieke EE, Ts'o DY, Grinvald A. Cortical functional architecture and local coupling between neuronal activity and the microcirculation revealed by in vivo high-resolution optical imaging of intrinsic signals. Proceedings of the National Academy of Sciences of the United States of America. 1990;87:6082–6086. doi: 10.1073/pnas.87.16.6082.

- Galaburda A, Sanides F. Cytoarchitectonic organization of the human auditory cortex. The Journal of comparative neurology. 1980;190:597–610. doi: 10.1002/cne.901900312.

- Geschwind N. The organization of language and the brain. Science. 1970;170:940–944. doi: 10.1126/science.170.3961.940.

- Giraud AL, Truy E, Frackowiak R. Imaging plasticity in cochlear implant patients. Audiology & neuro-otology. 2001;6:381–393. doi: 10.1159/000046847.

- Giraud AL, Truy E, Frackowiak RS, Gregoire MC, Pujol JF, Collet L. Differential recruitment of the speech processing system in healthy subjects and rehabilitated cochlear implant patients. Brain. 2000;123(Pt 7):1391–1402. doi: 10.1093/brain/123.7.1391.

- Giraud AL, Kell C, Thierfelder C, Sterzer P, Russ MO, Preibisch C, Kleinschmidt A. Contributions of sensory input, auditory search and verbal comprehension to cortical activity during speech processing. Cereb Cortex. 2004;14:247–255. doi: 10.1093/cercor/bhg124.

- Goense JB, Logothetis NK. Neurophysiology of the BOLD fMRI signal in awake monkeys. Current Biology. 2008;18:631–640. doi: 10.1016/j.cub.2008.03.054.

- Gonzalez-Castillo J, Saad ZS, Handwerker DA, Inati SJ, Brenowitz N, Bandettini PA. Whole-brain, time-locked activation with simple tasks revealed using massive averaging and model-free analysis. Proceedings of the National Academy of Sciences of the United States of America. 2012;109:5487–5492. doi: 10.1073/pnas.1121049109.

- Guimaraes AR, Melcher JR, Talavage TM, Baker JR, Ledden P, Rosen BR, Kiang NY, Fullerton BC, Weisskoff RM. Imaging subcortical auditory activity in humans. Human brain mapping. 1998;6:33–41. doi: 10.1002/(SICI)1097-0193(1998)6:1<33::AID-HBM3>3.0.CO;2-M.

- Hall DA, Chambers J, Akeroyd MA, Foster JR, Coxon R, Palmer AR. Acoustic, psychophysical, and neuroimaging measurements of the effectiveness of active cancellation during auditory functional magnetic resonance imaging. Journal of the Acoustical Society of America. 2009;125:347–359. doi: 10.1121/1.3021437.

- Hall DA, Haggard MP, Akeroyd MA, Palmer AR, Summerfield AQ, Elliott MR, Gurney EM, Bowtell RW. “Sparse” temporal sampling in auditory fMRI. Human brain mapping. 1999;7:213–223. doi: 10.1002/(SICI)1097-0193(1999)7:3<213::AID-HBM5>3.0.CO;2-N.

- Harms MP, Melcher JR. Sound repetition rate in the human auditory pathway: representations in the waveshape and amplitude of fMRI activation. Journal of neurophysiology. 2002;88:1433–1450. doi: 10.1152/jn.2002.88.3.1433.

- Hart HC, Palmer AR, Hall DA. Amplitude and frequency-modulated stimuli activate common regions of human auditory cortex. Cereb Cortex. 2003;13:773–781. doi: 10.1093/cercor/13.7.773.

- Hecox K, Galambos R. Brain stem auditory evoked responses in human infants and adults. Arch Otolaryngol. 1974;99:30–33. doi: 10.1001/archotol.1974.00780030034006.

- Heiss WD. The potential of PET/MR for brain imaging. European journal of nuclear medicine and molecular imaging. 2009;36(Suppl 1):S105–S112. doi: 10.1007/s00259-008-0962-3.

- Hennig J, Ernst T, Speck O, Deuschl G, Feifel E. Detection of brain activation using oxygenation sensitive functional spectroscopy. Magnetic Resonance in Medicine. 1994;31:85–90. doi: 10.1002/mrm.1910310115.

- Hickok G, Poeppel D. Towards a functional neuroanatomy of speech perception. Trends Cogn Sci. 2000;4:131–138. doi: 10.1016/s1364-6613(00)01463-7.

- Hickok G, Poeppel D. Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nature reviews. Neuroscience. 2007;8:393–402. doi: 10.1038/nrn2113.

- Hillman EM, Devor A, Bouchard MB, Dunn AK, Krauss GW, Skoch J, Bacskai BJ, Dale AM, Boas DA. Depth-resolved optical imaging and microscopy of vascular compartment dynamics during somatosensory stimulation. NeuroImage. 2007;35:89–104. doi: 10.1016/j.neuroimage.2006.11.032.

- Hu X, Le TH, Ugurbil K. Evaluation of the early response in fMRI in individual subjects using short stimulus duration. Magnetic Resonance in Medicine. 1997;37:877–884. doi: 10.1002/mrm.1910370612.

- Humphries C, Liebenthal E, Binder JR. Tonotopic organization of human auditory cortex. NeuroImage. 2010;50:1202–1211. doi: 10.1016/j.neuroimage.2010.01.046.

- Jacquemot C, Pallier C, LeBihan D, Dehaene S, Dupoux E. Phonological grammar shapes the auditory cortex: a functional magnetic resonance imaging study. Journal of Neuroscience. 2003;23:9541–9546. doi: 10.1523/JNEUROSCI.23-29-09541.2003.