Abstract

Objective

Improving patient safety was a strong motivation behind duty hour regulations implemented by ACGME on July 1, 2003. We investigated whether rates of Patient Safety Indicators (PSIs) changed following these reforms.

Research Design

Observational study of patients admitted to VA (N=826,047) and Medicare (N=13,367,273) acute-care hospitals from 7/1/2000–6/30/2005. We examined changes in patient safety events in more vs. less teaching-intensive hospitals before (2000–2003) and after (2003–2005) duty hour reform, using conditional logistic regression, adjusting for patient age, gender, comorbidities, secular trends, baseline severity, and hospital site.

Measures

Ten PSIs were aggregated into 3 composite measures based on factor analyses: “Continuity of Care,” “Technical Care,” and “Other” composites.

Results

“Continuity of Care” composite rates showed no significant changes post-reform in hospitals of different teaching intensity in either the VA or Medicare. In the VA, there were no significant changes post-reform for the “Technical Care” composite. In Medicare, the odds of a “Technical Care” PSI event in more vs. less teaching-intensive hospitals in post-reform year 1 were 1.12 (95% CI, 1.01–1.25); there were no significant relative changes in post-reform year 2. “Other” composite rates increased in the VA in post-reform year 2 in more vs. less teaching-intensive hospitals (OR, 1.63; 95% CI, 1.10–2.41), but not in Medicare in either post-reform year.

Conclusions

Duty hour reform had no systematic impact on PSI rates. In the few cases where there were statistically significant increases in the relative odds of developing a PSI, the magnitude of the absolute increase was too small to be clinically meaningful.

Keywords: Patient safety, hospital quality, resident duty hour reform, administrative data

INTRODUCTION

Concerns about patient safety were a major reason why duty hour regulations were implemented by the Accreditation Council for Graduate Medical Education (ACGME) on July 1, 2003.1 Despite reservations that duty hour rules might adversely affect patient outcomes, evidence to date has not demonstrated adverse effects.2–5 Two recent studies examining changes in mortality following ACGME reform found some evidence of decreased mortality in teaching hospitals, relative to non-teaching hospitals, among specific subgroups of high-risk patients,2,6 whereas a third study found no effects on mortality among Medicare patients following reform.3 These findings suggest that duty hour reforms either had no effect or a modest favorable effect on mortality.

There is very little evidence to date on the impact of changes in resident work hours on patient outcomes other than mortality. While duty hour reform might reduce mortality by decreasing resident fatigue, the benefits of decreased fatigue might be offset by disruptions in continuity of care due to additional physician handoffs.2–5 Indeed, a recent study evaluating the effects of resident work hour limits in New York found increased rates of two procedure-related patient safety events (accidental puncture or laceration and postoperative thromboembolism) and no change in the rates of three others (foreign body left during procedure, iatrogenic pneumothorax, and postoperative wound dehiscence).7 Another study found that reducing resident hours in intensive care units resulted in fewer serious medical errors.8 However, neither study was based on a national sample, and the second was conducted in intensive care units with a nurse-to-patient ratio of 1:1 or 1:2, where discontinuity of care from reduced resident hours is less likely to be problematic.

To develop a more comprehensive understanding of the effects of duty hour reform on patient outcomes, we investigated the association between ACGME duty hour rules and patient safety, as measured by the Agency for Healthcare Research and Quality (AHRQ) Patient Safety Indicators (PSIs). The sample included patients hospitalized within the Veterans Health Administration (VA), the single largest provider of residency training in the U.S., and Medicare patients hospitalized in short-term, acute-care U.S. nonfederal hospitals. We compared trends in risk-adjusted PSIs among more versus less teaching-intensive hospitals within each setting to examine whether PSI rates changed differentially among these groups post-reform. We hypothesized that rates of PSIs related to continuity of care would worsen as a result of more frequent handoffs and increased need for cross-coverage under duty hour regulation.4,9,10 In contrast, we hypothesized that technical skill-based PSIs would improve in teaching hospitals because better-rested residents would perform better on activities requiring manual dexterity11,12 or finely tuned cognitive activity.13 These hypotheses are consistent with two recent surveys of residents reporting that fatigue-related errors decreased after duty hour reform;14,15 the study by Myers et al. (2006) also reported that errors related to continuity of care increased.14

METHODS

The PSIs

The AHRQ PSIs served as the outcome measures. Several recent studies have used the PSIs to identify significant gaps and variations in safety of care,16–19 although this is the first study to use them to examine the effects of duty hour reform nationwide. The PSIs were specifically designed to capture potentially preventable events that compromise patient safety in the acute-care setting, such as complications following surgeries, procedures, or medical care.16 The AHRQ PSI software uses secondary diagnoses, procedures, and other information contained in hospital discharge records to flag hospitalizations with selected, potentially safety-related events. The 20 hospital-level PSIs are calculated as rates, defined with both a numerator (complication of interest) and denominator (population at risk) (Appendix 1, http://links.lww.com/A1281).

PSI Composite Measures

Because rates of individual PSIs were generally low, we conducted a principal components factor analysis in both the VA and Medicare datasets to reduce the number of PSIs to a smaller set of empirically derived but conceptually coherent composite measures. We selected only those PSIs relevant to the VA and Medicare populations; thus, we excluded the four obstetric PSIs. We also focused our analysis on PSIs that represented iatrogenic complications of care. This excluded the two mortality-based PSIs, death in low-mortality DRGs and failure to rescue, because they measure how well hospitals treat complications rather than how well they prevent them.

We also excluded two PSIs with extremely low frequencies and questionable validity given current diagnosis codes: complications of anesthesia and transfusion reaction. AHRQ has recommended removing complications of anesthesia from its “approved” list of PSIs because of concerns about the variability of External Cause of Injury (E) codes across hospitals and states, and has also proposed specific ICD-9-CM coding changes that would help restrict transfusion reaction to the most preventable events.20,21

Finally, we omitted postoperative hip fracture and decubitus ulcer because the literature indicates that over 70% may be present on admission (POA).22 At the time of this study, POA codes were not present in either the Medicare or VA datasets. Thus, our final set of PSIs consisted primarily of surgical and procedural indicators.

We extracted three factors, consistent across the VA and Medicare, that were linked to specific domains of care. The PSIs loading most heavily on the first factor likely reflect continuity of care in the perioperative setting: postoperative physiologic or metabolic derangement, postoperative respiratory failure, and postoperative sepsis. These PSIs formed our “Continuity of Care” composite. PSIs reflecting technical skill-based care, including foreign body left in during procedure, postoperative hemorrhage or hematoma, postoperative wound dehiscence, and accidental puncture or laceration, loaded on a second factor. These PSIs constituted a “Technical Care” composite. Iatrogenic pneumothorax, selected infections due to medical care, and postoperative pulmonary embolism/deep vein thrombosis (PE/DVT) loaded most strongly on a third factor. This factor comprised a mix of surgical and medical PSIs, the first two of which are frequently related to insertion or management of central venous catheters. From these PSIs, we created the “Other” composite. We allocated PSIs to composites primarily based on their factor loadings. For example, iatrogenic pneumothorax, which could be perceived as a technical skill-based PSI, was placed in the “Other” composite because it loaded most strongly on that factor. Although factor loadings were generally consistent between the VA and Medicare, there were slight discrepancies for two PSIs, postoperative PE/DVT and wound dehiscence, which we placed into composites based on our underlying conceptual framework (Appendix 2, http://links.lww.com/A1281).
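The primary allocation rule described above (assign each PSI to the factor on which it loads most strongly) can be sketched as follows. This is a minimal illustration, not the authors' code: the item names and loading values are hypothetical placeholders rather than the estimated loadings, and the sketch does not reproduce the conceptual reassignment applied to postoperative PE/DVT and wound dehiscence.

```python
def allocate_by_loading(loadings):
    """Assign each item to the factor with the largest absolute loading.

    `loadings` maps an item name to a list of loadings, one per factor.
    Returns a dict mapping item name -> 0-based factor index.
    Ties go to the lowest factor index (max() keeps the first maximum).
    """
    return {
        item: max(range(len(row)), key=lambda k: abs(row[k]))
        for item, row in loadings.items()
    }


def group_by_factor(allocation, n_factors):
    """Invert an allocation into one list of items per factor."""
    groups = [[] for _ in range(n_factors)]
    for item, factor in allocation.items():
        groups[factor].append(item)
    return groups


if __name__ == "__main__":
    # Hypothetical loadings for three made-up items; NOT the study's estimates.
    toy_loadings = {
        "psi_a": [0.71, 0.10, 0.05],
        "psi_b": [0.12, 0.64, 0.20],
        "psi_c": [0.08, 0.35, 0.58],  # nonzero on factor 2, strongest on factor 3
    }
    allocation = allocate_by_loading(toy_loadings)
    print(allocation)
    print(group_by_factor(allocation, 3))
```

Using the absolute loading means a strongly negative loading also counts as membership; whether that is appropriate depends on how the factors are signed.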

Study Sample

The initial VA and Medicare samples consisted of all admissions from July 1, 2000 through June 30, 2005 to acute-care VA hospitals and short-term general nonfederal hospitals, respectively, with data for all 5 years, as described in previous work.3,6 Additional exclusions specific to each sample are discussed below.

VA Patients

Because VA inpatient data include both acute and non-acute care, we applied a previously developed methodology to distinguish acute from non-acute care.23 This resulted in 1,018,040 patients with 2,231,472 admissions from 132 hospitals. We further excluded admissions to hospitals outside the U.S. (n=41,928), transfers from non-VA hospitals (n=31,049), admissions spanning July 1, 2003 (n=6,809), admissions with dates of death earlier than their discharge dates (n=20), and admissions for patients older than 90 years (n=11,398) because the proportion of such patients treated aggressively may change over time in ways that cannot be observed well with administrative data. These exclusions yielded data from 985,664 patients with 2,140,268 admissions from 131 hospitals. We then ran the PSI software (version 3.0)24 on these admissions and mapped individual PSIs into the three composites.

An index admission was defined as the first admission between July 1, 2000 and June 30, 2005 for which there was no prior admission eligible for the same PSI composite within 5 years. This ensured that each patient was represented only once within each analysis, although a patient could appear in more than one composite analysis. Because separate analyses (not shown here) demonstrated that PSIs were more likely to occur in patients’ subsequent admissions, selecting the first admission for each patient reduced the possibility of selecting cases with a higher PSI rate post-reform for reasons other than duty hour reform.3
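The index-admission rule can be sketched as below. This is a minimal illustration under stated assumptions: each record is a hypothetical (patient_id, composite, admission_date) tuple for an admission at risk for that composite, any pre-window history is included in the input, and "within 5 years" is approximated as 5 × 365 days. The function name and record layout are ours, not the authors'.

```python
from datetime import date, timedelta

WINDOW_START = date(2000, 7, 1)
WINDOW_END = date(2005, 6, 30)
LOOKBACK = timedelta(days=5 * 365)  # assumption: approximates "within 5 years"


def index_admissions(records):
    """Return {(patient_id, composite): admission_date} for each index admission.

    `records` is an iterable of (patient_id, composite, admission_date) tuples,
    one per admission at risk for that composite, including pre-window history.
    """
    dates_by_key = {}
    for patient_id, composite, admitted in records:
        dates_by_key.setdefault((patient_id, composite), []).append(admitted)

    index = {}
    for key, dates in dates_by_key.items():
        dates.sort()
        for i, admitted in enumerate(dates):
            if not (WINDOW_START <= admitted <= WINDOW_END):
                continue  # outside the study window
            # Dates are sorted, so only the immediately preceding eligible
            # admission needs to be checked against the lookback.
            if i == 0 or admitted - dates[i - 1] > LOOKBACK:
                index[key] = admitted
                break  # keep only the first qualifying admission
    return index


if __name__ == "__main__":
    # Hypothetical records for illustration only.
    records = [
        ("p1", "technical", date(2002, 5, 1)),
        ("p1", "technical", date(2001, 3, 1)),
        ("p1", "other", date(2002, 5, 1)),
        ("p2", "technical", date(1999, 1, 1)),  # prior eligible admission < 5 years earlier
        ("p2", "technical", date(2003, 9, 1)),
    ]
    print(index_admissions(records))
```

Keying on (patient, composite) rather than on patient alone reflects the text: a patient appears once per composite analysis but may appear in several composites.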

Using index admissions, the sample decreased to 826,047 patients with 883,664 admissions from 131 hospitals. Because a single admission could be at risk for more than one composite, this resulted in 206,772 admissions at risk for Continuity of Care PSIs, 789,257 at risk for Technical Care PSIs, and 806,459 at risk for PSIs in the “Other” composite.

Medicare Patients

From the initial sample of 21,401,849 patients with 60,096,553 admissions from 3,361 acute-care hospitals within the 50 states, we excluded admissions with hospitalizations spanning July 1, 2003 (n=191,671), admissions with dates of death earlier than their discharge dates (n=3,974), admissions for patients younger than 66 years (n=11,801,284) to allow for a 180-day lookback, and admissions for patients older than 90 years (n=3,433,617). This resulted in a sample of 16,923,128 patients with 44,666,007 admissions from 3,361 hospitals. As in the VA, we ran the PSI software,24 mapped the resulting PSIs into the composites, and selected the first composite admission in the past 5 years for each patient, yielding a final sample of 13,207,281 patients with 14,494,565 admissions at 3,361 hospitals. This resulted in 4,877,164 admissions at risk for Continuity of Care PSIs, 12,270,897 at risk for Technical Care PSIs, and 12,605,512 at risk for PSIs in the “Other” composite.
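The exclusion counts reported for the VA and Medicare samples can be checked with simple arithmetic. The sketch below assumes, as the reported totals imply, that each exclusion count is subtracted sequentially from the remaining admissions; the helper name is ours.

```python
def remaining(start, exclusions):
    """Subtract (label, n) exclusions in order from a starting admission count.

    Returns the final count and a list of (label, count remaining after that step).
    """
    count = start
    steps = []
    for label, n in exclusions:
        count -= n
        steps.append((label, count))
    return count, steps


va_final, va_steps = remaining(2_231_472, [
    ("hospitals outside the U.S.", 41_928),
    ("transfers from non-VA hospitals", 31_049),
    ("admissions spanning July 1, 2003", 6_809),
    ("death date earlier than discharge date", 20),
    ("age > 90 years", 11_398),
])

medicare_final, medicare_steps = remaining(60_096_553, [
    ("admissions spanning July 1, 2003", 191_671),
    ("death date earlier than discharge date", 3_974),
    ("age < 66 years (180-day lookback)", 11_801_284),
    ("age > 90 years", 3_433_617),
])

if __name__ == "__main__":
    for label, count in va_steps + medicare_steps:
        print(f"{label:45s} {count:>12,}")
```

Both chains reproduce the admission totals stated in the text (2,140,268 VA and 44,666,007 Medicare admissions).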

Risk Adjustment

Risk adjustment was performed according to the Elixhauser method,25 including all of the original 29 comorbidities except fluid and electrolyte disorders and coagulopathy.3,6 We performed a 180-day lookback to obtain more information on comorbidities present before the index hospitalization. To better capture baseline severity, we aggregated paired diagnosis-related groups (DRGs), those with and without complications or comorbidities (to avoid adjusting for iatrogenic events), into 5 risk groups according to the rates of the relevant PSI composite within each aggregated DRG in the year before the study period. Risk adjustment also included age and gender.
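The baseline-severity step can be sketched as two operations: summing counts across paired DRGs, then ranking the aggregated DRGs by their baseline-year PSI composite rate and cutting them into 5 groups. The paper does not state the cutpoints, so the equal-number-of-DRGs split below is an assumption, and the DRG labels in the example are hypothetical.

```python
def aggregate_pairs(counts, pair_of):
    """Sum counts over paired DRGs.

    `pair_of` maps each DRG (e.g., with and without CC) to its aggregated key;
    DRGs without an entry are kept as their own key.
    """
    aggregated = {}
    for drg, n in counts.items():
        key = pair_of.get(drg, drg)
        aggregated[key] = aggregated.get(key, 0) + n
    return aggregated


def drg_risk_groups(events, at_risk, n_groups=5):
    """Rank aggregated DRGs by baseline PSI composite rate and split them into
    `n_groups` groups with roughly equal numbers of DRGs (1 = lowest rate).

    Assumption: equal-count groups; the original cutpoints are not reported.
    """
    rates = {drg: events.get(drg, 0) / n for drg, n in at_risk.items() if n > 0}
    ranked = sorted(rates, key=rates.get)
    return {drg: rank * n_groups // len(ranked) + 1 for rank, drg in enumerate(ranked)}


if __name__ == "__main__":
    pair_of = {"A_with_cc": "A", "A_without_cc": "A"}  # hypothetical DRG labels
    at_risk = aggregate_pairs({"A_with_cc": 300, "A_without_cc": 700, "B": 500}, pair_of)
    events = aggregate_pairs({"A_with_cc": 9, "A_without_cc": 6, "B": 2}, pair_of)
    print(drg_risk_groups(events, at_risk, n_groups=2))
```

Pairing the with-CC and without-CC versions before ranking keeps a complication coded during the stay from moving a patient into a higher-severity group.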

Data Sources

Data on patient characteristics were obtained from the VA Patient Treatment File and the Medicare Provider Analysis and Treatment File, which include information on principal and secondary diagnoses, age, gender, and discharge status.3,6 VA Support Service Center Occupancy Rate Reports provided data on number of beds per facility, and the number of residents at each hospital was obtained from the VA Office of Academic Affiliations. For Medicare, the number of residents and hospitals’ average daily census were taken from Medicare Cost Reports.

Teaching Intensity: Resident-to-Bed Ratio

The primary measure of teaching intensity was the resident-to-bed ratio, calculated as the number of interns plus residents divided by the mean number of operational beds. The resident-to-bed ratio has been used to differentiate hospitals of varying degrees of teaching intensity;26 its validity as a marker of teaching intensity was demonstrated in our previous work.3,6 Teaching hospitals were defined as those with resident-to-bed ratios greater than 0; major and very major teaching hospitals were those with ratios greater than 0.25 up to 0.60 and greater than 0.60, respectively. We used the resident-to-bed ratio as a continuous variable because it provides more power than dividing hospitals into arbitrary categories.27 We held the resident-to-bed ratio fixed at its pre-reform year 1 level so that any response by hospitals to duty hour reform in the form of changing the number of residents would not confound estimation of the net effects of the reform. Resident-to-bed ratios varied little over time; for example, the mean change from pre-reform year 3 to pre-reform year 2 was –0.001 in the VA and 0.001 in Medicare. The pre-reform period included pre-year 3 (07/01/2000–06/30/2001), pre-year 2 (07/01/2001–06/30/2002), and pre-year 1 (07/01/2002–06/30/2003). The post-reform period included post-year 1 (07/01/2003–06/30/2004) and post-year 2 (07/01/2004–06/30/2005).
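A minimal sketch of the teaching-intensity categories and study-period assignment described above. The category boundaries and date ranges follow the text (the >0 to 0.25 "minor" band is taken from Table 2); assigning an admission to an academic year by its admission date is our assumption, and the function names are hypothetical.

```python
from datetime import date
from typing import Optional

REFORM_DATE = date(2003, 7, 1)
# Academic years start on July 1; keys are the calendar year in which each starts.
PERIOD_LABELS = {2000: "pre-3", 2001: "pre-2", 2002: "pre-1", 2003: "post-1", 2004: "post-2"}


def resident_to_bed_ratio(interns_plus_residents: float, mean_operational_beds: float) -> float:
    """Interns plus residents divided by mean operational beds."""
    return interns_plus_residents / mean_operational_beds


def teaching_category(rb: float) -> str:
    """Map a resident-to-bed ratio to the categories used in Table 2."""
    if rb <= 0:
        return "non-teaching"
    if rb <= 0.25:
        return "minor"
    if rb <= 0.60:
        return "major"
    return "very major"


def study_period(admitted: date, discharged: date) -> Optional[str]:
    """Return the study-year label, or None if the stay spans July 1, 2003
    (excluded) or the admission falls outside July 1, 2000 to June 30, 2005.
    Assumption: the academic year is assigned by admission date."""
    if admitted < REFORM_DATE <= discharged:
        return None
    start_year = admitted.year if admitted.month >= 7 else admitted.year - 1
    return PERIOD_LABELS.get(start_year)


if __name__ == "__main__":
    rb = resident_to_bed_ratio(150, 300)  # hypothetical hospital counts
    print(rb, teaching_category(rb))
    print(study_period(date(2003, 6, 28), date(2003, 7, 2)))  # spans reform date
    print(study_period(date(2004, 2, 10), date(2004, 2, 14)))
```

In the analysis the ratio would be computed once from pre-reform year 1 counts and reused for every year, matching the decision to hold it fixed.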

Statistical Analysis

We used a multiple time series research design,28 also known as difference-in-differences, to examine whether the change in duty hour rules was associated with a change in the underlying trend in patient outcomes in teaching hospitals, an approach that reduces potential biases from unmeasured variables.29 This research design compares each hospital with itself, before and after reform, contrasting the changes in hospitals with more residents to the changes in hospitals with fewer or no residents, making adjustments for observed differences in patient risk factors. It also adjusts for changes in outcomes over time (trends) that were common to all hospitals. Thus, temporally stable differences between hospitals and time trends that affect all hospitals cannot be mistaken for an effect of the reform, nor can changes in patient case-mix that are adequately reflected in patient characteristics used in the models.3,6

The dependent variables were the three PSI composites: “Continuity of Care,” “Technical Care,” and “Other.” We used conditional logistic regression, stratified on hospital site, to adjust for patient age, gender, comorbidities, secular trends common to all patients (e.g., due to general changes in technology) represented by year indicators, and baseline severity (the 5 aggregated paired DRG risk groups from pre-reform year 4). Conditional logistic regression has the advantage of allowing hospitals with very few admissions with PSI events to be included in the model, whereas a standard fixed effects model cannot include such hospitals. We used pre-reform year 1 as the reference group to standardize the comparison because the pre-reform trends in more vs. less teaching-intensive hospitals differed in several of the subgroups. The degree of change in PSIs associated with the change in duty hour rules was measured by the coefficients of the resident-to-bed ratio interacted with indicator variables for post-reform years 1 and 2. These coefficients, presented as odds ratios (ORs), measure the degree to which the PSI composite rates changed differently in more vs. less teaching-intensive hospitals from pre-reform year 1 to each of the post-reform years. Because a resident-to-bed ratio of 1 indicates a hospital with a large number of residents, the implicit scaling of these coefficients describes the effects of reform on a very major teaching hospital. We expected duty hour reform to have the greatest impact on hospitals with high resident-to-bed ratios.
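One way to write the model described above, in our own notation rather than the authors', for patient i admitted to hospital h in academic year t. The hospital intercept α_h is eliminated by the stratified (conditional) likelihood, which is also why no separate main effect of the hospital-level resident-to-bed ratio RB_h appears.

```latex
\begin{aligned}
\operatorname{logit} \Pr(Y_{iht} = 1)
  &= \alpha_h
   + \sum_{s \neq \text{Pre-1}} \tau_s \, D_s(t)
   + \mathbf{x}_i^{\top} \boldsymbol{\beta}
   + \delta_1 \, RB_h \, D_{\text{Post-1}}(t)
   + \delta_2 \, RB_h \, D_{\text{Post-2}}(t), \\
\mathrm{OR}_k &= e^{\delta_k}, \qquad
\mathrm{OR}_k(r) = e^{r \delta_k} = \mathrm{OR}_k^{\,r}, \qquad k \in \{1, 2\}.
\end{aligned}
```

Here D_s(t) equals 1 when year t is study year s (pre-reform year 1 is the omitted reference), x_i holds age, gender, comorbidities, and the DRG risk group, and RB_h is held at its pre-reform year 1 value. OR_k is the quantity reported for a hospital with RB_h = 1 versus RB_h = 0, and OR_k(r) is the implied contrast for a ratio of r.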

We examined the two post-reform years separately to allow for the possibility that some hospitals implemented work hour changes gradually. We tested the stability of the results in both the VA and Medicare by excluding patients admitted to hospitals in New York State, because of the earlier passage of the Libby Zion laws there, and by excluding patients admitted from nursing homes, because such patients may not have been treated aggressively. P-values < 0.05 were considered statistically significant. All analyses were conducted with SAS software, version 9.1.30

RESULTS

Compared with Medicare patients, VA patients were younger, more than twice as likely to be male, and had fewer comorbidities on average (Table 1). Unadjusted rates of the “Continuity of Care” composite were the highest of the three composites and were consistently higher in the VA than in Medicare. Approximately 85% of VA hospitals were teaching hospitals (Table 2), with more than 61% classified as either major or very major teaching hospitals (resident-to-bed ratio >0.25). In contrast, 69% of hospitals in Medicare were non-teaching hospitals, and only about 9% were classified as either major or very major teaching hospitals.

Table 1.

Description of Patient Population in Sample

| Years Pre and Post Duty Hour Reform of 7/1/03 | VA Pre-3 | VA Pre-2 | VA Pre-1 | VA Post-1 | VA Post-2 | Medicare Pre-3 | Medicare Pre-2 | Medicare Pre-1 | Medicare Post-1 | Medicare Post-2 |
|---|---|---|---|---|---|---|---|---|---|---|
| Academic year | 2000–1 | 2001–2 | 2002–3 | 2003–4 | 2004–5 | 2000–1 | 2001–2 | 2002–3 | 2003–4 | 2004–5 |
| Number of admissions | 171,459 | 174,940 | 174,839 | 179,123 | 183,303 | 3,096,855 | 3,128,109 | 3,064,472 | 3,107,292 | 3,043,495 |
| Age (mean) | 63.15 | 63.33 | 63.19 | 63.32 | 63.31 | 76.19 | 76.20 | 76.16 | 76.15 | 76.08 |
| % Male | 96.57 | 96.48 | 96.32 | 96.14 | 95.96 | 44.11 | 44.01 | 44.16 | 44.12 | 44.26 |
| Mean # of comorbidities | 1.40 | 1.43 | 1.46 | 1.54 | 1.58 | 1.60 | 1.63 | 1.66 | 1.71 | 1.75 |
| % with 0 comorbidities | 26.67 | 26.18 | 25.43 | 23.01 | 21.94 | 19.88 | 18.50 | 17.25 | 15.87 | 14.70 |
| % with 1 | 30.96 | 30.15 | 29.62 | 29.53 | 29.26 | 33.26 | 33.07 | 32.83 | 32.32 | 31.89 |
| % with 2 | 25.01 | 25.58 | 25.98 | 26.89 | 27.32 | 26.17 | 27.07 | 27.78 | 28.59 | 29.22 |
| % with 3 or more | 17.35 | 18.09 | 18.98 | 20.57 | 21.49 | 20.69 | 21.36 | 22.13 | 23.22 | 24.20 |
| **Composite rates** | | | | | | | | | | |
| Continuity of Care: PSI events (numerator) | 550 | 586 | 572 | 670 | 702 | 11,290 | 11,996 | 11,648 | 12,471 | 12,379 |
| Continuity of Care: total eligible admissions (denominator) | 36,822 | 39,011 | 41,015 | 43,451 | 46,473 | 948,120 | 972,587 | 977,807 | 991,037 | 987,613 |
| Continuity of Care: rate (%) | 1.49 | 1.50 | 1.39 | 1.54 | 1.51 | 1.19 | 1.23 | 1.19 | 1.26 | 1.25 |
| Technical Care: PSI events (numerator) | 680 | 729 | 796 | 842 | 779 | 12,323 | 12,986 | 12,673 | 12,411 | 12,339 |
| Technical Care: total eligible admissions (denominator) | 151,677 | 156,046 | 156,640 | 160,538 | 164,356 | 2,488,166 | 2,494,605 | 2,430,102 | 2,456,062 | 2,401,962 |
| Technical Care: rate (%) | 0.45 | 0.47 | 0.51 | 0.52 | 0.47 | 0.50 | 0.52 | 0.52 | 0.51 | 0.51 |
| Other: PSI events (numerator) | 673 | 685 | 658 | 755 | 688 | 14,281 | 14,377 | 14,025 | 14,426 | 13,759 |
| Other: total eligible admissions (denominator) | 158,835 | 160,569 | 159,490 | 162,345 | 165,220 | 2,614,605 | 2,582,706 | 2,490,048 | 2,496,722 | 2,421,431 |
| Other: rate (%) | 0.42 | 0.43 | 0.41 | 0.47 | 0.42 | 0.55 | 0.56 | 0.56 | 0.58 | 0.57 |

The PSI composites are composed of individual PSIs as follows:

Continuity of Care: Postoperative physiologic or metabolic derangement, postoperative respiratory failure, and postoperative sepsis;

Technical Care: Foreign body left in during procedure, postoperative hemorrhage or hematoma, postoperative wound dehiscence, and accidental puncture or laceration;

Other: Iatrogenic pneumothorax, selected infections due to medical care, and postoperative pulmonary embolism or deep vein thrombosis (PE/DVT).

Table 2.

Hospital Characteristics by Teaching Status in VA and Medicare

| | Non-teaching (0): VA | Non-teaching (0): Medicare | Minor Teaching (>0–0.25): VA | Minor Teaching (>0–0.25): Medicare | Major Teaching (>0.25–0.6): VA | Major Teaching (>0.25–0.6): Medicare | Very Major Teaching (>0.6): VA | Very Major Teaching (>0.6): Medicare | Totals: VA | Totals: Medicare |
|---|---|---|---|---|---|---|---|---|---|---|
| N facilities (% of VA or Medicare) | 19 (14.50%) | 2,315 (68.88%) | 32 (24.43%) | 739 (21.99%) | 40 (30.53%) | 198 (5.89%) | 40 (30.53%) | 109 (3.24%) | 131 (99.99%) | 3,361 (100.00%) |
| N admissions (% of VA or Medicare) | 35,114 (3.97%) | 7,070,554 (48.78%) | 137,544 (15.57%) | 5,181,925 (35.75%) | 364,011 (41.19%) | 1,472,271 (10.16%) | 346,995 (39.27%) | 769,815 (5.31%) | 883,664 (100.00%) | 14,494,565 (100.00%) |

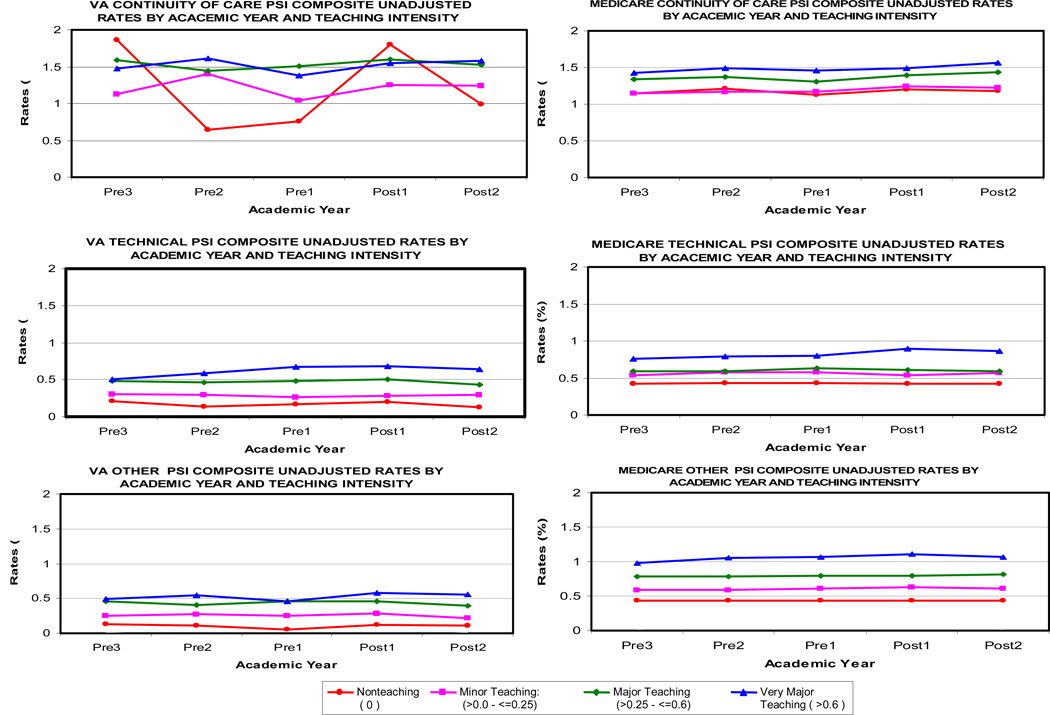

There were some differences in the trends of unadjusted PSI rates in hospitals of different teaching intensity from the first pre-reform year to the last pre-reform year, but in nearly all of the graphs, the rate of change from the last pre-reform year to post-reform year 2 did not vary across the different teaching intensity groups (Figure 1). Within the VA, the unadjusted data suggest a relative increase in the rate of the “Continuity of Care” composite at non-teaching hospitals between the last pre-reform year and the first post-reform year. Within Medicare, the unadjusted data suggest a relative increase in the rate of the “Technical Care” composite at very major teaching hospitals during this same time period.

Figure 1.

Changes Over Time in Unadjusted PSI Composite Rates in More vs. Less Teaching-Intensive Hospitals

Changes in risk-adjusted PSI composite rates are presented in Table 3. In the VA, there was no evidence of relative increases or decreases in the odds of PSI events in more vs. less teaching-intensive hospitals in either post-reform year, for either the “Continuity of Care” or “Technical Care” composites. Although the odds ratio for the “Continuity of Care” composite exceeded 1.0 in both post-reform years, the change in the odds of these PSI events in more vs. less teaching hospitals was still not significant when a single parameter was used to estimate the pooled post-reform effect. The odds of the “Other” composite increased in more teaching-intensive hospitals, relative to less teaching-intensive hospitals, from the last pre-reform year to post-reform year 2 (OR, 1.63; 95% CI, 1.10–2.41). Although a 63% increase in the odds of PSIs in this composite seems large, it implies a very small change in the absolute probability of these PSI events based on the composite’s baseline rate of 0.46%. Results for all three composites were qualitatively similar when we excluded patients admitted to hospitals in New York State or patients admitted from nursing homes. Finally, to ensure that the composites were not masking some variation in the individual PSIs, we repeated the regression analyses used for the composites with the individual PSIs. Trends for the individual PSIs were generally similar to overall trends in the VA (data not shown).

Table 3.

Adjusted Odds of PSI Composite Events After Duty Hour Reform in More vs. Less Teaching-Intensive Hospitals

| | Continuity of Care Composite: VA OR (95% CI) | Continuity of Care Composite: Medicare OR (95% CI) | Technical Care Composite: VA OR (95% CI) | Technical Care Composite: Medicare OR (95% CI) | Other Composite: VA OR (95% CI) | Other Composite: Medicare OR (95% CI) |
|---|---|---|---|---|---|---|
| Resident-to-bed ratio × post-reform year 1 | 1.08 (0.69–1.70), P = 0.73 | 0.95 (0.85–1.07), P = 0.41 | 1.02 (0.72–1.44), P = 0.90 | 1.12 (1.01–1.25), P = 0.03 | 1.30 (0.88–1.92), P = 0.18 | 0.99 (0.90–1.10), P = 0.89 |
| Resident-to-bed ratio × post-reform year 2 | 1.21 (0.78–1.86), P = 0.40 | 1.04 (0.93–1.16), P = 0.52 | 0.95 (0.67–1.34), P = 0.75 | 1.09 (0.98–1.21), P = 0.13 | 1.63 (1.10–2.41), P = 0.01 | 1.03 (0.93–1.13), P = 0.61 |
| Number of cases | 206,772 | 4,877,164 | 789,257 | 12,270,897 | 806,459 | 12,605,512 |

An odds ratio in this table describes the difference in the pre- to post-reform change in risk between a hospital with a resident-to-bed ratio of 1 and a hospital with a resident-to-bed ratio of 0; CI, confidence interval.

The PSI composites are composed of individual PSIs as follows:

Continuity of Care: Postoperative physiologic or metabolic derangement, postoperative respiratory failure, and postoperative sepsis;

Technical Care: Foreign body left in during procedure, postoperative hemorrhage or hematoma, postoperative wound dehiscence, and accidental puncture or laceration;

Other: Iatrogenic pneumothorax, selected infections due to medical care, and postoperative pulmonary embolism or deep vein thrombosis (PE/DVT).

The interaction terms (resident-to-bed ratio in post-reform year 1 and resident-to-bed ratio in post-reform year 2) measure whether there is any relative change in the odds of PSIs in more vs. less teaching-intensive hospitals.
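Because the interaction enters the model linearly on the log-odds scale, the odds ratios in Table 3 (which contrast a resident-to-bed ratio of 1 with a ratio of 0) can be rescaled to other ratios by exponentiation. A minimal sketch, assuming that log-linear form; the helper names are hypothetical.

```python
def or_at_ratio(or_at_one: float, rb: float) -> float:
    """Odds ratio for a hospital with resident-to-bed ratio `rb` versus rb = 0,
    given the reported OR at rb = 1.

    Assumes log(OR) is linear in rb, i.e. OR(rb) = exp(rb * delta) = OR(1) ** rb.
    """
    return or_at_one ** rb


def or_between(or_at_one: float, rb_a: float, rb_b: float) -> float:
    """Odds ratio contrasting two hospitals with ratios rb_a and rb_b."""
    return or_at_one ** (rb_a - rb_b)


if __name__ == "__main__":
    # VA "Other" composite, post-reform year 2: OR 1.63 (95% CI 1.10-2.41).
    # For rb > 0 the CI bounds rescale the same way, since rb * (delta +/- 1.96 SE)
    # is the interval for rb * delta.
    for rb in (0.25, 0.5, 1.0):
        point = or_at_ratio(1.63, rb)
        lower, upper = or_at_ratio(1.10, rb), or_at_ratio(2.41, rb)
        print(f"rb={rb:.2f}: OR {point:.2f} (95% CI {lower:.2f}-{upper:.2f})")
```

Under this reading, a hospital at the major/very major boundary (ratio 0.6) would carry a correspondingly smaller relative change than the tabulated ratio-of-1 contrast.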

In Medicare, as in the VA, there was no evidence of relative changes in the odds of “Continuity of Care” PSI composite events in more vs. less teaching-intensive hospitals in either post-reform year. However, unlike the VA results, there was a relative increase in the odds of “Technical Care” PSI composite events in more vs. less teaching-intensive hospitals between the last pre-reform year and post-reform year 1 (OR, 1.12; 95% CI, 1.01–1.25) but not in post-reform year 2 (OR, 1.09; 95% CI, 0.98–1.21). The absolute change associated with this odds ratio was again very small. In contrast to the VA results for the “Other” composite, there was no significant change in PSI rates in more vs. less teaching-intensive hospitals between the pre-reform period and either post-reform year in Medicare. These results were stable when the additional exclusion criteria were applied.
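The statement that these odds ratios imply very small absolute changes can be checked by converting an odds ratio back to a probability at a baseline rate. A minimal sketch: the VA baseline of 0.46% is the figure quoted in the text, while using Table 1's Medicare pre-reform year 1 “Technical Care” rate (0.52%) as the Medicare baseline is our assumption. Both calculations apply the reported OR, which corresponds to a resident-to-bed ratio of 1.

```python
def prob_after_odds_ratio(p0: float, odds_ratio: float) -> float:
    """Probability obtained by multiplying the baseline odds p0 / (1 - p0) by odds_ratio."""
    odds = odds_ratio * p0 / (1.0 - p0)
    return odds / (1.0 + odds)


def absolute_change(p0: float, odds_ratio: float) -> float:
    """Risk difference implied by applying odds_ratio at baseline probability p0."""
    return prob_after_odds_ratio(p0, odds_ratio) - p0


if __name__ == "__main__":
    cases = {
        "VA 'Other', post-reform year 2": (0.0046, 1.63),
        "Medicare 'Technical Care', post-reform year 1": (0.0052, 1.12),  # baseline assumed
    }
    for label, (p0, odds_ratio) in cases.items():
        p1 = prob_after_odds_ratio(p0, odds_ratio)
        print(f"{label}: {100 * p0:.2f}% -> {100 * p1:.2f}% "
              f"(+{100 * absolute_change(p0, odds_ratio):.2f} percentage points)")
```

Under these assumptions, the VA estimate corresponds to roughly 0.3 additional events per 100 at-risk admissions and the Medicare estimate to roughly 0.06, for a hospital with a resident-to-bed ratio of 1.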

DISCUSSION

We found no systematic effect of resident duty hour reform on potentially preventable safety-related events as measured by the AHRQ PSIs. Although we hypothesized that rates of PSIs related to continuity of care would worsen because of more handoffs and increased reliance on cross-coverage arrangements, this did not appear to be the case among either VA or Medicare patients. We had also hypothesized that rates of technical skill-based PSIs would improve because of reduced resident fatigue. However, there were no differences in the rate of change in this composite between more vs. less teaching-intensive hospitals in the VA. While we did see an increase in post-reform year 1 in the odds of the “Technical Care” composite among Medicare patients in more vs. less teaching-intensive hospitals, this increase was small in magnitude and was no longer significant in post-reform year 2.

However, we saw higher rates of events in our “Other” PSI composite in more teaching-intensive hospitals, relative to less teaching-intensive hospitals, in post-reform year 2 in the VA. Because the absolute difference in risk was small and limited to the VA, this finding should be interpreted cautiously and in the context of our previous work, which suggested no systematic changes in mortality.3,6

There may be several explanations for the lack of any systematic change in the rates of PSIs. First, our original conceptual framework linking specific PSIs with broad domains of care may have been incorrect. Second, interventions intended to reduce physician work hours may have had unanticipated negative effects on nursing care, especially within the VA system, perhaps by reducing the availability of physicians for interdisciplinary communication or by placing additional ongoing burdens on nurses. Third, although residents may get more sleep, increased handoffs could have offsetting negative effects. Fourth, the duty hour reform still allowed 30 hours of continuous work, leaving residents prone to acute sleep deprivation. Finally, compliance may not have been high,31 although the data on this are limited.

Our study is the first national study to examine the association between duty hour reform and patient safety and to compare the degree of change across national samples of Medicare and VA patients. Other studies have found beneficial effects of reduced resident work hours primarily from direct observation of residents or self-report from frontline providers.5,14,15 Our study addresses some of the methodological limitations of other studies by comparing findings across federal and non-federal hospitals, including data for three years pre-reform and two years post-reform, using indicators of patient safety developed specifically to capture potential safety-related events, and applying a difference-in-differences approach to reduce the likelihood of confounding.

Despite the strengths of this study, there were limitations. We did not have clinical data for risk adjustment, limiting our analyses to administrative data, which lack clinical detail and are subject to variability in coding practices across providers.16,17 However, our difference-in-differences analysis essentially treated each hospital as its own control, factoring out inter-hospital differences in coding that were consistent over time. Nonetheless, a potential limitation of all difference-in-differences studies is unmeasured confounding due to contemporaneous interventions that may have differentially affected teaching or non-teaching hospitals. Another limitation was related to power. Despite using all available data for both the VA and Medicare and aggregating individual PSIs into composite measures because of their low prevalence,16 our confidence intervals were still relatively wide, particularly in the VA.

We were also limited in our ability to measure patient safety using administrative data. Although the PSIs are standardized; demonstrate face, content, and predictive validity;16,32,33 and have been applied to numerous data sets,22,34,35 their criterion validity has not yet been established. It is possible that the PSIs are not sensitive enough to detect changes over time. The few published studies examining the criterion validity of the PSIs have been limited by small sample sizes or lack of a true gold standard.34–39 A recent study examining the criterion validity of five of the surgical PSIs in the VA found moderate sensitivities (19%–56%) and positive predictive values (PPVs) (22%–74%).40 Postoperative respiratory failure and postoperative wound dehiscence had the highest PPVs (74% and 72%, respectively) of all PSIs examined. Two ongoing studies41,42 are examining the criterion validity of the PSIs; one recently reported PPVs ranging from 40% for postoperative sepsis to 90% for accidental puncture or laceration.43 POA codes, which were added to Medicare data last year but have not yet been added to VA data, should improve PPVs in future applications.
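Sensitivity and PPV, the two criterion-validity statistics cited above, are simple ratios from a comparison of PSI flags against a reference standard such as chart review. The counts below are hypothetical and serve only to illustrate why excluding conditions present on admission (which would otherwise be false positives) raises the PPV while leaving sensitivity unchanged.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Share of reference-standard events that the indicator flags."""
    return true_pos / (true_pos + false_neg)


def ppv(true_pos: int, false_pos: int) -> float:
    """Positive predictive value: share of flagged cases that are confirmed events."""
    return true_pos / (true_pos + false_pos)


if __name__ == "__main__":
    # Hypothetical validation counts, not data from any cited study.
    tp, fp, fn = 40, 60, 60
    print(f"sensitivity={sensitivity(tp, fn):.2f}, PPV={ppv(tp, fp):.2f}")
    # Suppose POA coding shows 30 of the false positives were present on admission.
    print(f"PPV after removing POA cases={ppv(tp, fp - 30):.2f}")
```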

These validation results, along with the National Quality Forum's recent endorsement of four PSIs (accidental puncture or laceration, iatrogenic pneumothorax, foreign body left in during procedure, and postoperative wound dehiscence),44 suggest that some of the PSIs, such as those in our “Technical Care” composite, may be ready for use in examining the effects of policy reforms over time. Poulose et al. (2005) also used the PSIs to evaluate an earlier effort to reduce resident work hours and found worsening trends in accidental puncture or laceration and postoperative pulmonary embolism or deep vein thrombosis (PE/DVT) after work hour limits were implemented in New York State.7 Our findings on the national impact of work hour reform are more reassuring.

At present, however, both AHRQ and the user community still regard the PSIs principally as screening tools that flag potential safety-related events rather than as definitive measures.45,46 We likewise view the PSIs as indicators of potential safety-related events,32,40–43,47,48 although their reliance on readily available administrative data makes them attractive relative to other measures of hospital safety performance; no easily obtainable, objective alternative measures currently exist.49

In conclusion, our study showed that implementation of the ACGME duty hour rules did not have an overall systematic impact on potential safety-related events in more vs. less teaching-intensive hospitals. These findings do not suggest, however, that implementation of duty hour reform was a mistake. Rather, they highlight the importance of understanding more comprehensively which implementation approaches have worked best and the mechanisms by which outcomes improved in some programs and worsened in others. To improve safety, further study is needed to determine which interventions best minimize the negative effects of physician handoffs while maximizing the benefits of reduced fatigue. Data on the contribution of different system-level approaches to duty hours, such as night floats, shift work, mandatory naps, or greater use of hospitalists and physician extenders, will help inform future resident work hour reform efforts.50 Nonetheless, how best to regulate resident duty hours will likely remain contentious until reforms can be shown to improve outcomes of care rather than merely maintain the status quo.

ACKNOWLEDGMENTS

We would like to acknowledge the assistance of Marlena Shin, J.D., M.P.H., for her administrative help with the manuscript.

This work was supported by VA grant HSR&D IIR 04.202.1 and NHLBI R01 HL082637, with additional support from National Science Foundation grant SES-06-0646002.

Appendix 1. Accepted Hospital-Level Indicator Definitions

| Indicator | Definition and Numerator | Denominator |
|---|---|---|
| PSI 5. Foreign body left in during procedure | Discharges with ICD-9-CM codes for foreign body left in during procedure in any secondary diagnosis field. | All medical and surgical discharges, 18 years and older or MDC 14 (pregnancy, childbirth, and puerperium), defined by specific DRGs. Exclude patients with ICD-9-CM codes for foreign body left in during procedure in the principal diagnosis field. |
| PSI 6. Iatrogenic pneumothorax | Discharges with ICD-9-CM code of 512.1 in any secondary diagnosis field. | All medical and surgical discharges 18 years and older defined by specific DRGs. Exclude cases: |
| PSI 7. Selected Infections due to medical care | Discharges with ICD-9-CM code of 999.3 or 996.62 in any secondary diagnosis field. | All medical and surgical discharges, 18 years and older or MDC 14 (pregnancy, childbirth, and puerperium), defined by specific DRGs. Exclude cases: |
| PSI 9. Postoperative hemorrhage or hematoma | Discharges among cases meeting the inclusion and exclusion rules for the denominator with the following: AND | All surgical discharges 18 years and older defined by specific DRGs and an ICD-9-CM code for an operating room procedure. Exclude cases: Note: If day of procedure is not available in the input data file, the rate may be slightly lower than if the information was available. |
| PSI 10. Postoperative physiologic and metabolic derangements | Discharges with ICD-9-CM codes for physiologic and metabolic derangements in any secondary diagnosis field. Discharges with acute renal failure (subgroup of physiologic and metabolic derangements) must be accompanied by a procedure code for dialysis (39.95, 54.98). | All elective* surgical discharges 18 years and older defined by specific DRGs and an ICD-9-CM code for an operating room procedure. *Defined by admit type. Exclude cases: Note: If day of procedure is not available in the input data file, the rate may be slightly lower than if the information was available. |
| PSI 11. Postoperative Respiratory Failure | Discharges among cases meeting the inclusion and exclusion rules for the denominator with ICD-9-CM codes for acute respiratory failure (518.81) in any secondary diagnosis field (after 1999, include 518.84) OR discharges among cases meeting the inclusion and exclusion rules for the denominator with ICD-9-CM codes for reintubation procedure as follows: | All elective* surgical discharges 18 years and older defined by specific DRGs and an ICD-9-CM code for an operating room procedure. *Defined by admit type. Exclude cases: Note: If day of procedure is not available in the input data file, the rate may be slightly lower than if the information was available. |
| PSI 12. Postoperative Pulmonary Embolism or Deep Vein Thrombosis | Discharges with ICD-9-CM codes for deep vein thrombosis or pulmonary embolism in any secondary diagnosis field. | All surgical discharges 18 years and older defined by specific DRGs and an ICD-9-CM code for an operating room procedure. Exclude cases: Note: If day of procedure is not available in the input data file, the rate may be slightly lower than if the information was available. |
| PSI 13. Postoperative Sepsis | Discharges with ICD-9-CM code for sepsis in any secondary diagnosis field. | All elective* surgical discharges 18 years and older defined by specific DRGs and an ICD-9-CM code for an operating room procedure. *Defined by admit type. Exclude cases: |
| PSI 14. Postoperative Wound Dehiscence | Discharges with ICD-9-CM code for reclosure of postoperative disruption of abdominal wall (54.61) in any procedure field. | All abdominopelvic surgical discharges. Exclude cases: Note: If day of procedure is not available in the input data file, the rate may be slightly lower than if the information was available. |
| PSI 15. Accidental Puncture or Laceration | Discharges 18 years and older with ICD-9-CM code denoting technical difficulty (e.g., accidental cut, puncture, perforation, or laceration) in any secondary diagnosis field. | All medical and surgical discharges defined by specific DRGs. Exclude cases: |
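As an illustration of how a numerator/denominator definition of this kind is applied to discharge records, the Python sketch below flags PSI 6 (iatrogenic pneumothorax, ICD-9-CM 512.1 in a secondary diagnosis field). It is deliberately simplified and is not the AHRQ PSI software: the `Discharge` record type and its fields are hypothetical, the DRG-based denominator is reduced to an age check, and the exclusion criteria are not applied.

```python
from dataclasses import dataclass, field
from typing import Iterable, List

# ICD-9-CM code for iatrogenic pneumothorax, per the PSI 6 row of Appendix 1.
IATROGENIC_PNEUMOTHORAX = "512.1"


@dataclass
class Discharge:
    """Hypothetical minimal discharge record; field names are illustrative only."""
    age: int
    principal_dx: str
    secondary_dx: List[str] = field(default_factory=list)


def in_denominator(d: Discharge) -> bool:
    # Simplification: adults only. The DRG-based case definition and the
    # exclusion criteria of the full PSI 6 specification are NOT applied.
    return d.age >= 18


def in_numerator(d: Discharge) -> bool:
    # Flag only when 512.1 appears in a secondary (not principal) diagnosis field.
    return in_denominator(d) and IATROGENIC_PNEUMOTHORAX in d.secondary_dx


def rate_per_1000(discharges: Iterable[Discharge]) -> float:
    """Observed PSI rate per 1,000 eligible discharges (0.0 if none are eligible)."""
    eligible = [d for d in discharges if in_denominator(d)]
    if not eligible:
        return 0.0
    flagged = sum(in_numerator(d) for d in eligible)
    return 1000 * flagged / len(eligible)


records = [
    Discharge(age=65, principal_dx="486", secondary_dx=["512.1"]),    # flagged
    Discharge(age=70, principal_dx="410.71"),                         # not flagged
    Discharge(age=16, principal_dx="540.9", secondary_dx=["512.1"]),  # under 18: not eligible
    Discharge(age=55, principal_dx="512.1"),  # principal field only: eligible here, not flagged
]
print(rate_per_1000(records))
```

With one flagged case among three eligible discharges, the sketch reports a rate of about 333 per 1,000; real PSI rates are far lower and are computed with the full specification.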

Appendix 2. Rotated Factor Patterns in VA and Medicare

VA

| Indicator | Factor 1 | Factor 2 | Factor 3 |
|---|---|---|---|
| PSI 5. Foreign body left in during procedure | 0.00042 | **0.26170** | −0.12134 |
| PSI 6. Iatrogenic pneumothorax | −0.11037 | 0.14807 | **0.73234** |
| PSI 7. Selected Infections due to medical care | 0.13517 | −0.12344 | **0.60410** |
| PSI 9. Postoperative hemorrhage or hematoma | 0.02681 | **0.54391** | −0.21219 |
| PSI 10. Postoperative physiologic and metabolic derangements | **0.62290** | −0.04453 | −0.05685 |
| PSI 11. Postoperative Respiratory Failure | **0.62729** | 0.18649 | 0.00287 |
| PSI 12. Postoperative Pulmonary Embolism or Deep Vein Thrombosis* | 0.04485 | 0.35622 | **0.16845** |
| PSI 13. Postoperative Sepsis | **0.65834** | −0.03810 | 0.10460 |
| PSI 14. Postoperative Wound Dehiscence | 0.03703 | **0.49093** | 0.03387 |
| PSI 15. Accidental Puncture or Laceration | −0.02481 | **0.49038** | 0.10018 |

Medicare†

| Indicator | Factor 1 | Factor 2 | Factor 3 |
|---|---|---|---|
| PSI 5. Foreign body left in during procedure | −0.01101 | −0.09891 | **0.35161** |
| PSI 6. Iatrogenic pneumothorax | −0.05089 | **0.54867** | −0.08350 |
| PSI 7. Selected Infections due to medical care | 0.22889 | **0.45531** | −0.18405 |
| PSI 9. Postoperative hemorrhage or hematoma | 0.03209 | −0.04326 | **0.66217** |
| PSI 10. Postoperative physiologic and metabolic derangements | **0.41455** | −0.39006 | −0.01884 |
| PSI 11. Postoperative Respiratory Failure | **0.68395** | 0.05248 | 0.07537 |
| PSI 12. Postoperative Pulmonary Embolism or Deep Vein Thrombosis | 0.12413 | **0.30393** | 0.20270 |
| PSI 13. Postoperative Sepsis | **0.70279** | 0.10007 | −0.02723 |
| PSI 14. Postoperative Wound Dehiscence‡ | −0.01480 | 0.45618 | **0.05202** |
| PSI 15. Accidental Puncture or Laceration | 0.00068 | 0.19099 | **0.60529** |

Bolded type indicates the composite to which each PSI was assigned.

*Although this PSI loaded more heavily on Factor 2 than on Factor 3, we included it in Factor 3 because it did not fit well with the concept underlying Factor 2 and because it loaded more heavily on Factor 2 in Medicare data, which corresponds to Factor 3 in VA data.

†Factors 2 and 3 are reversed in Medicare data, relative to VA data.

‡Although this PSI loaded more heavily on Factor 2 than on Factor 3, we included it in Factor 3 because it did not fit well with the concept underlying Factor 2 and because it loaded more heavily on Factor 2 in VA data, which corresponds to Factor 3 in Medicare data.
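The assignment rule described in these notes can be sketched in Python using the VA loadings from the table above. Two assumptions are ours: that each PSI is first placed on the factor with the largest absolute loading (a common convention; the exact rule is not stated here), and that the judgment-based reassignment of PSI 12 is represented as an explicit override. The names `VA_LOADINGS`, `OVERRIDES`, `dominant_factor`, and `assign` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# VA rotated factor loadings from Appendix 2: (Factor 1, Factor 2, Factor 3).
VA_LOADINGS: Dict[str, Tuple[float, float, float]] = {
    "PSI 5": (0.00042, 0.26170, -0.12134),
    "PSI 6": (-0.11037, 0.14807, 0.73234),
    "PSI 7": (0.13517, -0.12344, 0.60410),
    "PSI 9": (0.02681, 0.54391, -0.21219),
    "PSI 10": (0.62290, -0.04453, -0.05685),
    "PSI 11": (0.62729, 0.18649, 0.00287),
    "PSI 12": (0.04485, 0.35622, 0.16845),
    "PSI 13": (0.65834, -0.03810, 0.10460),
    "PSI 14": (0.03703, 0.49093, 0.03387),
    "PSI 15": (-0.02481, 0.49038, 0.10018),
}

# Judgment-based reassignment described in the footnote for VA PSI 12.
OVERRIDES: Dict[str, int] = {"PSI 12": 3}


def dominant_factor(loadings: Sequence[float]) -> int:
    """1-based index of the factor with the largest absolute loading."""
    return 1 + max(range(len(loadings)), key=lambda i: abs(loadings[i]))


def assign(loadings_by_psi: Dict[str, Tuple[float, float, float]],
           overrides: Dict[str, int]) -> Dict[int, List[str]]:
    """Group PSIs by factor, applying overrides where given."""
    groups: Dict[int, List[str]] = {}
    for psi, loadings in loadings_by_psi.items():
        factor = overrides.get(psi, dominant_factor(loadings))
        groups.setdefault(factor, []).append(psi)
    return groups


groups = assign(VA_LOADINGS, OVERRIDES)
for factor in sorted(groups):
    print(f"Factor {factor}: {', '.join(groups[factor])}")
```

This prints Factor 1 with PSIs 10, 11, and 13; Factor 2 with PSIs 5, 9, 14, and 15; and Factor 3 with PSIs 6, 7, and 12. Without the override, PSI 12 would land on Factor 2, which is exactly the case the footnote addresses.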

REFERENCES

- 1. Resident duty hours language: final requirements. Accreditation Council for Graduate Medical Education web site. Available at: http://acgme.org. Accessed April 8, 2008.

- 2. Shetty KD, Bhattacharya J. Changes in hospital mortality associated with residency work-hour regulations. Ann Intern Med. 2007;147(2):73–80. doi: 10.7326/0003-4819-147-2-200707170-00161.

- 3. Volpp KG, Rosen AK, Rosenbaum PR, et al. Mortality among hospitalized Medicare beneficiaries in the first 2 years following ACGME resident duty hour reform. JAMA. 2007;298(9):975–983. doi: 10.1001/jama.298.9.975.

- 4. Fletcher KE, Davis SQ, Underwood W, et al. Systematic review: effects of resident work hours on patient safety. Ann Intern Med. 2004;141(11):851–857. doi: 10.7326/0003-4819-141-11-200412070-00009.

- 5. Jagsi R, Weinstein DF, Shapiro J, et al. The Accreditation Council for Graduate Medical Education’s limits on residents’ work hours and patient safety. A study of resident experiences and perceptions before and after hours reductions. Arch Intern Med. 2008;168(5):493–500. doi: 10.1001/archinternmed.2007.129.

- 6. Volpp KG, Rosen AK, Rosenbaum PR, et al. Mortality among patients in VA hospitals in the first 2 years following ACGME resident duty hour reform. JAMA. 2007;298(9):984–992. doi: 10.1001/jama.298.9.984.

- 7. Poulose BK, Ray WA, Arbogast PG, et al. Resident work hour limits and patient safety. Ann Surg. 2005;241(6):847–860. doi: 10.1097/01.sla.0000164075.18748.38.

- 8. Landrigan CP, Rothschild JM, Cronin JW, et al. Effect of reducing interns’ work hours on serious medical errors in intensive care units. N Engl J Med. 2004;351(18):1838–1848. doi: 10.1056/NEJMoa041406.

- 9. Petersen LA, Brennan TA, O’Neil AC, et al. Does housestaff discontinuity of care increase the risk for preventable adverse events? Ann Intern Med. 1994;121(11):866–872. doi: 10.7326/0003-4819-121-11-199412010-00008.

- 10. Laine C, Goldman L, Soukup JR, et al. The impact of a regulation restricting medical house staff working hours on the quality of patient care. JAMA. 1993;269(3):374–378.

- 11. Grantcharov TP, Bardram L, Funch-Jensen P, et al. Laparoscopic performance after one night on call in a surgical department: prospective study. BMJ. 2001;323(7323):1222–1223. doi: 10.1136/bmj.323.7323.1222.

- 12. Eastridge BJ, Hamilton EC, O’Keefe GE, et al. Effect of sleep deprivation on the performance of simulated laparoscopic surgical skill. Am J Surg. 2003;186(2):169–174. doi: 10.1016/s0002-9610(03)00183-1.

- 13. Buysse DJ, Barzansky B, Dinges D, et al. Sleep, fatigue, and medical training: setting an agenda for optimal learning and patient care. Sleep. 2003;26(2):218–225. doi: 10.1093/sleep/26.2.218.

- 14. Myers JS, Bellini LM, Morris JB, et al. Internal medicine and general surgery residents’ attitudes about the ACGME duty hours regulations: a multicenter study. Acad Med. 2006;81(12):1052–1058. doi: 10.1097/01.ACM.0000246687.03462.59.

- 15. Jagsi R, Shapiro J, Weissman JS, et al. The educational impact of ACGME limits on resident and fellow duty hours: a pre-post survey study. Acad Med. 2006;81(12):1059–1068. doi: 10.1097/01.ACM.0000246685.96372.5e.

- 16. Romano PS, Geppert JJ, Davies S, et al. A national profile of patient safety in U.S. hospitals. Health Aff. 2003;22(2):154–166. doi: 10.1377/hlthaff.22.2.154.

- 17. Zhan C, Miller MR. Administrative data based patient safety research: a critical review. Qual Saf Health Care. 2003;12(Suppl 2):ii58–ii63. doi: 10.1136/qhc.12.suppl_2.ii58.

- 18. Miller MR, Elixhauser A, Zhan C, et al. Patient Safety Indicators: using administrative data to identify potential patient safety concerns. Health Serv Res. 2001;36(6 Pt 2):110–132.

- 19. Weiner BJ, Alexander L, Baker S, et al. Quality improvement implementation and hospital performance on Patient Safety Indicators. Med Care Res Rev. 2006;63(1):29–57. doi: 10.1177/1077558705283122.

- 20. Romano PS. Selecting indicators for patient safety at the health systems level in OECD countries. Health Care Quality Indicators Patient Safety Subgroup Meeting/Health Care Quality Indicators Expert Meeting; October 24–26, 2007. Available at: http://www.oecd.org/dataoecd/44/29/39495326.pdf. Accessed November 21, 2008.

- 21. Classifications of Diseases and Functioning & Disability: ICD-9-CM Coordination and Maintenance Committee in 2008. Department of Health and Human Services, Centers for Disease Control and Prevention website. Available at: http://www.cdc.gov/nchs/about/otheract/icd9/maint/classifications_of_diseases_and1.htm. Accessed November 21, 2008.

- 22. Houchens R, Elixhauser A, Romano P. How often are potential "Patient Safety Events" present on admission? Jt Comm J Qual Patient Saf. 2008;34(3):154–163. doi: 10.1016/s1553-7250(08)34018-5.

- 23. Rivard P, Elwy AR, Loveland S, et al. Applying Patient Safety Indicators (PSIs) across healthcare systems: achieving data comparability. In: Henriksen K, Battles JB, Marks E, Lewin DI, editors. Advances in patient safety: from research to implementation. Vol. 2. Rockville, MD: Agency for Healthcare Research and Quality (AHRQ) and Department of Defense (DoD); 2005. pp. 7–25.

- 24. Agency for Healthcare Research and Quality. Patient Safety Indicators Software, Version 3.0. AHRQ website. Rockville, MD: 2006. Available at: http://www.qualityindicators.ahrq.gov/software.htm. Accessed April 8, 2008.

- 25. Elixhauser A, Steiner C, Harris DR, et al. Comorbidity measures for use with administrative data. Med Care. 1998;36(1):8–27. doi: 10.1097/00005650-199801000-00004.

- 26. Allison JJ, Kiefe CI, Weissman NW, et al. Relationship of hospital teaching status with quality of care and mortality for Medicare patients with acute MI. JAMA. 2000;284(10):1256–1262. doi: 10.1001/jama.284.10.1256.

- 27. Cox D. Note on grouping. J Am Stat Assoc. 1957;52(280):543–547.

- 28. Campbell DT, Stanley JC. Experimental and quasi-experimental designs for research. Dallas, TX: Houghton-Mifflin; 2002. p. 181.

- 29. Rosenbaum PR. Stability in the absence of treatment. J Am Stat Assoc. 2001;96(453):210–219.

- 30. SAS/STAT Software. Version 9.1. Cary, N.C.: SAS Institute, Inc.; 2003.

- 31. Landrigan CP, Czeisler CA, Barger LK, et al. Effective implementation of work-hour limits and systematic improvements. Jt Comm J Qual Patient Saf. 2007;33(11 Suppl):19–29. doi: 10.1016/s1553-7250(07)33110-3.

- 32. Rosen AK, Rivard P, Zhao S, et al. Evaluating the Patient Safety Indicators: how well do they perform on Veterans Health Administration data? Med Care. 2005;43(9):873–884. doi: 10.1097/01.mlr.0000173561.79742.fb.

- 33. Zhan C, Miller MR. Excess length of stay, charges, and mortality attributable to medical injuries during hospitalization. JAMA. 2003;290(14):1868–1874. doi: 10.1001/jama.290.14.1868.

- 34. Gallagher BK, Cen L, Hannan EL. Validation of AHRQ's Patient Safety Indicator for accidental puncture or laceration. In: Henriksen K, Battles JB, Marks E, Lewin DI, editors. Advances in Patient Safety: From Research to Implementation. Vol. 2. Rockville, MD: Agency for Healthcare Research and Quality and Department of Defense; 2005b. pp. 27–38.

- 35. Zhan C, Battles J, Chiang Y, et al. The validity of ICD-9-CM codes in identifying postoperative deep vein thrombosis and pulmonary embolism. Jt Comm J Qual Patient Saf. 2007;33(6):326–331. doi: 10.1016/s1553-7250(07)33037-7.

- 36. Gallagher BK, Cen L, Hannan EL. Readmission for selected infections due to medical care: expanding the definition of a Patient Safety Indicator. In: Henriksen K, Battles JB, Marks E, Lewin DI, editors. Advances in Patient Safety: From Research to Implementation. Vol. 2. Rockville, MD: Agency for Healthcare Research and Quality and Department of Defense; 2005a. pp. 39–50.

- 37. Polancich S, Restrepo E, Prosser J. Cautious use of administrative data for decubitus ulcer outcome reporting. Am J Med Qual. 2006;21(4):262–268. doi: 10.1177/1062860606288244.

- 38. Shufelt JL, Hannan EL, Gallagher BK. The postoperative hemorrhage and hematoma Patient Safety Indicator and its risk factors. Am J Med Qual. 2005;20(4):210–218. doi: 10.1177/1062860605276941.

- 39. Weller WE, Gallagher BK, Cen L, et al. Readmissions for venous thromboembolism: expanding the definition of Patient Safety Indicators. Jt Comm J Qual Patient Saf. 2004;30(9):497–504. doi: 10.1016/s1549-3741(04)30058-4.

- 40. Romano P, Mull H, Rivard P, et al. Validity of selected AHRQ Patient Safety Indicators based on VA National Surgical Quality Improvement Program data. Health Serv Res. 2008. In press. doi: 10.1111/j.1475-6773.2008.00905.x.

- 41. Rosen A. Principal Investigator. Validating the Patient Safety Indicators in the VA: a multi-faceted approach. Bedford VA Medical Center: VA Health Services Research and Development. SDR 07-002.

- 42. Romano P, Geppert J. Principal Investigators. AHRQ Patient Safety Indicator validation pilot. Battelle: AHRQ; Contract No. 290-04-0004.

- 43. Zrelak P, Romano P, Geppert J, et al. Positive predictive value of AHRQ Patient Safety Indicators in a national sample of hospitals. Washington D.C.: AcademyHealth Annual Research Meeting; 2008.

- 44. AHRQ. The AHRQ Quality Indicators in 2007. AHRQ Quality Indicators eNewsletter. 2007b. Available at: http://qualityindicators.ahrq.gov/newsletter/2007-February-AHRQ-QI-Newsletter.htm. Accessed May 8, 2008.

- 45. AHRQ. Guide to Patient Safety Indicators Version 3.1. Rockville, MD: Agency for Healthcare Research and Quality; 2007a. Revised March 2007.

- 46. Remus D, Fraser I. Guidance for using the AHRQ quality indicators for hospital-level public reporting or payment. Rockville, MD: U.S. Department of Health and Human Services, Agency for Healthcare Research and Quality; 2004.

- 47. Rosen AK, Zhao S, Rivard P, et al. Tracking rates of patient safety indicators over time: lessons from the Veterans Administration. Med Care. 2006;44(9):850–861. doi: 10.1097/01.mlr.0000220686.82472.9c.

- 48. Rivard PE, Rosen AK, Carroll JS. Enhancing patient safety through organizational learning: are patient safety indicators a step in the right direction? Health Serv Res. 2006;41(4 Pt 2):1633–1653. doi: 10.1111/j.1475-6773.2006.00569.x.

- 49. Thomas EJ, Petersen LA. Measuring errors and adverse events in health care. J Gen Intern Med. 2003;18(1):61–67. doi: 10.1046/j.1525-1497.2003.20147.x.

- 50. Volpp KG, Landrigan C. Starting from scratch: designing physician work hour reform from first principles. JAMA. 2008;300(10):1197–1199. doi: 10.1001/jama.300.10.1197.