Abstract

Correlated neuronal activity is a natural consequence of network connectivity and shared inputs to pairs of neurons, but the task-dependent modulation of correlations in relation to behavior also hints at a functional role. Correlations influence the gain of postsynaptic neurons, the amount of information encoded in the population activity and decoded by readout neurons, and synaptic plasticity. Further, they affect the power and spatial reach of extracellular signals like the local-field potential. A theory of correlated neuronal activity accounting for recurrent connectivity as well as fluctuating external sources is currently lacking. In particular, it is unclear how the recently found mechanism of active decorrelation by negative feedback on the population level affects the network response to externally applied correlated stimuli. Here, we present such an extension of the theory of correlations in stochastic binary networks. We show that (1) for homogeneous external input, the structure of correlations is mainly determined by the local recurrent connectivity, (2) homogeneous external inputs provide an additive, unspecific contribution to the correlations, (3) inhibitory feedback effectively decorrelates neuronal activity, even if neurons receive identical external inputs, and (4) identical synaptic input statistics to excitatory and to inhibitory cells increase intrinsically generated fluctuations and pairwise correlations. We further demonstrate how the accuracy of mean-field predictions can be improved by self-consistently including correlations. As a byproduct, we show that the cancellation of correlations between the summed inputs to pairs of neurons does not originate from the fast tracking of external input, but from the suppression of fluctuations on the population level by the local network.
This suppression is a necessary constraint, but not sufficient to determine the structure of correlations; specifically, the structure observed at finite network size differs from the prediction based on perfect tracking, even though perfect tracking implies suppression of population fluctuations.

Author Summary

The co-occurrence of action potentials of pairs of neurons within short time intervals has been known for a long time. Such synchronous events can appear time-locked to the behavior of an animal, and theoretical considerations also argue for a functional role of synchrony. Early theoretical work tried to explain correlated activity by neurons transmitting common fluctuations due to shared inputs. This, however, overestimates correlations. Recently, the recurrent connectivity of cortical networks was shown to be responsible for the observed low baseline correlations. Two different explanations were given: One argues that excitatory and inhibitory population activities closely follow the external inputs to the network, so that their effects on a pair of cells mutually cancel. Another explanation relies on negative recurrent feedback to suppress fluctuations in the population activity, equivalent to small correlations. In a biological neuronal network one expects both external inputs and recurrence to affect correlated activity. The present work extends the theoretical framework of correlations to include both contributions and explains their qualitative differences. Moreover, the study shows that the arguments of fast tracking and recurrent feedback are not equivalent: only the latter correctly predicts the cell-type specific correlations.

Introduction

The spatio-temporal structure and magnitude of correlations in cortical neural activity have been subject of research for a variety of reasons: the experimentally observed task-dependent modulation of correlations points at a potential functional role. In the motor cortex of behaving monkeys, for example, synchronous action potentials appear at behaviorally relevant time points [1]. The degree of synchrony is modulated by task performance, and the precise timing of synchronous events follows a change of the behavioral protocol after a phase of re-learning. In primary visual cortex, saccades (eye movements) are followed by brief periods of synchronized neural firing [2], [3]. Further, correlations and fluctuations depend on the attentive state of the animal [4], with higher correlations and slow fluctuations observed during quiet wakefulness, and faster, uncorrelated fluctuations in the active state [5]. It is still unclear whether the observed modulation of correlations is in fact employed by the brain, or whether it is merely an epiphenomenon. Theoretical studies have suggested a number of interpretations and mechanisms of how correlated firing could be exploited: Correlations in afferent spike-train ensembles may provide a gating mechanism by modulating the gain of postsynaptic cells (for a review, see [6]). Synchrony in afferent spikes (or, more generally, synchrony in spike arrival) can enhance the reliability of postsynaptic responses and, hence, may serve as a mechanism for a reliable activation and propagation of precise spatio-temporal spike patterns [7], [8], [9], [10]. Further, it has been argued that synchronous firing could be employed to combine elementary representations into larger percepts [11], [12], [7], [13], [14]. 
While correlated firing may constitute the substrate for some en- and decoding schemes, it can be highly disadvantageous for others: The number of response patterns which can be triggered by a given afferent spike-train ensemble becomes maximal if these spike trains are uncorrelated [15]. In addition, correlations in the ensemble impair the ability of readout neurons to decode information reliably in the presence of noise (see e.g. [16], [15], [17]). Recent studies have indeed shown that biological neural networks implement a number of mechanisms which can efficiently decorrelate neural activity, such as the nonlinearity of spike generation [18], synaptic-transmission variability and failure [19], [20], short-term synaptic depression [20], heterogeneity in network connectivity [21] and neuron properties [22] and the recurrent network dynamics [23], [24], [17]. To study the significance of experimentally observed task-dependent correlations, it is essential to provide adequate null hypotheses: Which level and structure of correlations is to be expected in the absence of any task-related stimulus or behavior? Even in the simplest network models without time varying input, correlations in the neural activity emerge as a consequence of shared input [25], [26], [27] and recurrent connectivity [24], [28], [17], [29], [30]. Irrespective of the functional aspect, the spatio-temporal structure and magnitude of correlations between spike trains or membrane potentials carry valuable information about the properties of the underlying network generating these signals [26], [28], [31], [29], [30] and could therefore help constraining models of cortical networks. Further, the quantification of spike-train correlations is a prerequisite to understand how correlation sensitive synaptic plasticity rules, such as spike-timing dependent plasticity [32], interact with the recurrent network dynamics [33]. 
Finally, knowledge of the expected level of correlations between synaptic inputs is crucial for the correct interpretation of extracellular signals like the local-field potential (LFP) [34].

Previous theoretical studies on correlations in local cortical networks provide analytical expressions for the magnitude [27], [24], [17] and the temporal shape [35], [36], [29], [30] of average pairwise correlations, capture the influence of the connectivity on correlations [37], [38], [28], [31], [29], [39], and connect oscillatory network states emerging from delayed negative feedback [40] to the shape of correlation functions [30]. In particular we have shown recently that negative feedback loops, abundant in cortical networks, constitute an efficient decorrelation mechanism and therefore allow neurons to fire nearly independently despite substantial shared presynaptic input [17] (see also [37], [24], [41]). We further pointed out that in networks of excitatory (E) and inhibitory (I) neurons, the correlations between neurons of different cell type (EE, EI, II) differ in both magnitude and temporal shape, even if excitatory and inhibitory neurons have identical properties and input statistics [17], [30]. It remains unclear, however, how this cell-type specificity of correlations is affected by the connectivity of the network.

The majority of previous theoretical studies on cortical circuits is restricted to local networks driven by external sources representing thalamo-cortical or cortico-cortical inputs (e.g. [42], [43], [44]). Most of these studies emphasize the role of the local network connectivity (e.g. [45]). Despite the fact that inputs from remote (external) areas constitute a substantial fraction of all excitatory inputs (about  [7], see also [46], [47]), their spatio-temporal structure is often abstracted by assuming that neurons in the local network are independently driven by external sources. A priori, this assumption can hardly be justified: neurons belonging to the local cortical network receive, at least to some extent, inputs from identical or overlapping remote areas, for example due to patchy (clustered) horizontal connectivity [48], [49]. Hence, shared-input correlations are likely to play a role not only for local but also for external inputs. Coherent activation of neurons in remote presynaptic areas constitutes another source of correlated external input, in particular for sensory areas [50], [5], [51], [4]. So far, it is largely unknown how correlated external input affects the dynamics of local cortical networks and alters correlations in their neural activity.

In this article, we investigate how the magnitude and the cell-type specificity of correlations depend on i) the connectivity in local cortical networks of finite size and ii) the level of correlations in external inputs. Existing theories of correlations in cortical networks are not sufficient to address these questions as they either do not incorporate correlated external input [35], [17], [29], [28], [31] or assume infinitely large networks [24]. Lindner et al. [37] studied the responses of finite populations of spiking neurons receiving correlated external input, but described inhibitory feedback by a global compound process.

Our work builds on the existing theory of correlations in stochastic binary networks [35], a well-established model in the neuroscientific community [42], [24]. This model has the advantage of requiring for its analytical treatment elementary mathematical methods only. We employ the same network structure used in the work by Renart et al. [24] which relates the mechanism of recurrent decorrelation to the fast tracking of external signals (see [52] for a recent review). This choice enables us to reconsider the explanation of decorrelation by negative feedback [17], originally shown for networks of leaky integrate-and-fire neurons, and to compare it to the findings of Renart et al. In fact, the motivation for the choice of the model arose from the review process of [17], during which both the reviewers and the editors encouraged us to elucidate the relation of our work to the one of Renart et al. in a separate subsequent manuscript. The present work delivers this comparison.

We show here that the results presented in [17] for the leaky integrate-and-fire model are in qualitative agreement with those in networks of binary neurons. The formal relationship between spiking models and the binary neuron model is established in [53]. In particular, for weak correlations it can be shown that both models map to the Ornstein-Uhlenbeck process with one important difference: The location of the effective white noise for spiking neurons is additive in the output, while for binary neurons the effective noise is low-pass filtered, or equivalently additive on the input side of the neuron.

The remainder of the manuscript is organized as follows: In “Methods”, we develop the theory of correlations in recurrent random networks of excitatory and inhibitory cells driven by fluctuating input from an external population of finite size. We account for the fluctuations in the synaptic input to each cell, which effectively linearize the hard threshold of the neurons [54], [24]. We further include the resulting finite-size correlations into the established mean-field description [42], [54] to increase the accuracy of the theory. In “Results”, we first show in “Correlations are driven by intrinsic and external fluctuations” that correlations in recurrent networks are not only caused by the externally imposed correlated input, but also by intrinsically generated fluctuations of the local populations. We demonstrate that the external drive causes an overall shift of the correlations, but that their relative magnitude is mainly determined by the intrinsically generated fluctuations. In “Cancellation of input correlations”, we revisit the earlier reported phenomenon of the suppression of correlations between input currents to pairs of cells [24] and show that it is a direct consequence of the suppression of fluctuations on the population level [17]. In “Limit of infinite network size” we consider the strong coupling limit of the theory, where the network size goes to infinity, to recover earlier results for inhomogeneous connectivity [24] and to extend these results to homogeneous connectivity. Subsequently, in “Influence of connectivity on the correlation structure”, we investigate to what extent the reported structure of correlations is a generic feature of balanced networks and isolate parameters of the connectivity determining this structure. 
Finally, in “Discussion”, we summarize our results and their implications for the interpretation of experimental data, discuss the limitations of the theory, and provide an outlook of how the improved theory may serve as a further building block to understand processing of correlated activity.

Methods

Networks of binary neurons

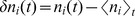

We denote the activity of neuron  as

as  . The state

. The state  of a binary neuron is either

of a binary neuron is either  or

or  , where

, where  indicates activity,

indicates activity,  inactivity [35], [55], [24]. The state of the network of

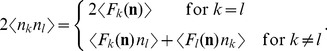

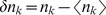

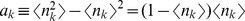

inactivity [35], [55], [24]. The state of the network of  such neurons is described by a binary vector

such neurons is described by a binary vector  . We denote the mean activity as

. We denote the mean activity as  , the (zero time lag) covariance of the activities of a pair

, the (zero time lag) covariance of the activities of a pair  of neurons is defined as

of neurons is defined as  , where

, where  is the deviation of neuron

is the deviation of neuron  's activity from expectation and the average

's activity from expectation and the average  is over time and realizations of the stochastic activity.

is over time and realizations of the stochastic activity.

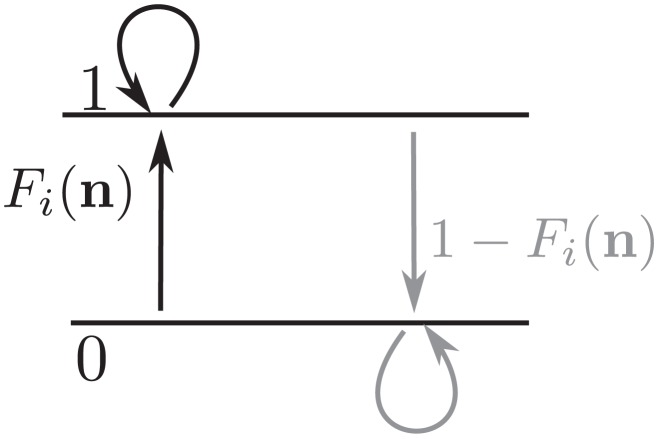

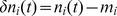

The neuron model shows stochastic transitions (at random points in time) between the two states $0$ and $1$ controlled by transition probabilities, as illustrated in Figure 1. Using asynchronous update [56], in each infinitesimal interval $[t, t + \delta t)$ each neuron in the network has the probability $\delta t / \tau$ to be chosen for update [57], where $\tau$ is the time constant of the neuronal dynamics. An equivalent implementation draws the time points of update independently for all neurons. For a particular neuron, the sequence of update points has exponentially distributed intervals with mean duration $\tau$, i.e. update times form a Poisson process with rate $\tau^{-1}$. We employ the latter implementation in the globally time-driven [58] spiking simulator NEST [59], and use a discrete time resolution $\delta t$ for the intervals. The stochastic update constitutes a source of noise in the system. Given the $i$-th neuron is selected for update, the probability to end in the up-state ($n_i = 1$) is determined by the gain function $F_i(\mathbf{n})$ which possibly depends on the activity $\mathbf{n}$ of all other neurons. The probability to end in the down state ($n_i = 0$) is $1 - F_i(\mathbf{n})$. This model has been considered earlier [60], [35], [55], and here we follow the notation introduced in the latter work.
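The asynchronous update described above can be illustrated with a minimal event-driven sketch. It is not the NEST implementation used in this work: it draws the update times in continuous time rather than on a discrete grid. The function name `simulate`, the callable `gain`, and all parameter values are assumptions made for illustration only.

```python
import random


def simulate(gain, n_neurons, tau=10.0, t_max=20000.0, seed=1):
    """Simulate asynchronously updated binary neurons.

    gain(i, state) must return the probability that neuron i ends in the
    up-state (1) when it is updated, given the current network state.
    Returns the time-averaged activity of every neuron.
    """
    rng = random.Random(seed)
    state = [0] * n_neurons
    time_up = [0.0] * n_neurons  # time each neuron has spent in state 1
    t = 0.0
    while True:
        # N independent Poisson update processes of rate 1/tau superimpose to
        # one process of rate N/tau; each event hits a uniformly chosen neuron.
        dt = rng.expovariate(n_neurons / tau)
        last = t + dt >= t_max
        if last:
            dt = t_max - t  # truncate the final interval at t_max
        for k in range(n_neurons):
            time_up[k] += state[k] * dt
        if last:
            break
        t += dt
        i = rng.randrange(n_neurons)
        state[i] = 1 if rng.random() < gain(i, state) else 0
    return [x / t_max for x in time_up]


# Hypothetical example: three uncoupled neurons with constant gain 0.3.
mean_activity = simulate(lambda i, state: 0.3, n_neurons=3)
```

For a constant gain the time-averaged activity of each neuron should approach the gain value, which gives a quick sanity check of the update scheme before a state-dependent gain function is plugged in.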

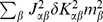

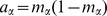

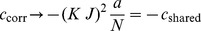

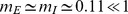

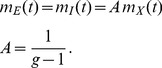

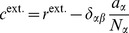

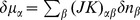

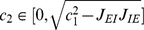

Figure 1. State transitions of a binary neuron.

Each neuron is updated at random time points, intervals are i.i.d. exponential with mean duration $\tau$, so the rate of updates per neuron $i$ is $\tau^{-1}$. The probability of neuron $i$ to end in the up-state ($n_i = 1$) is determined by the gain function $F_i(\mathbf{n})$ which potentially depends on the states $\mathbf{n}$ of all neurons in the network. The up-transitions are indicated by black arrows. The probability for the down state ($n_i = 0$) is given by the complementary probability $1 - F_i(\mathbf{n})$, indicated by gray arrows.

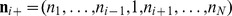

The stochastic system is completely characterized by the joint probability distribution $p(\mathbf{n})$ in all $N$ binary variables $\mathbf{n}$. An example is the recurrent random network considered here (Figure 2). Knowing the joint probability distribution, arbitrary moments can be calculated, among them pairwise correlations. Here we are only concerned with the stationary state of the network. A stationary solution of $p(\mathbf{n})$ implies that for each state a balance condition holds, so that the incoming and outgoing probability fluxes sum up to zero. The occupation probability of the state is then constant. We denote as $\mathbf{n}_{i+} = (n_1, \ldots, n_{i-1}, 1, n_{i+1}, \ldots, n_N)$ the state, where the $i$-th neuron is active ($n_i = 1$), and $\mathbf{n}_{i-}$ where neuron $i$ is inactive ($n_i = 0$). Since in each infinitesimal time interval at most one neuron can change state, for each given state $\mathbf{n}$ there are $N$ possible transitions (each corresponding to one of the $N$ neurons changing state). The sum of the probability fluxes into the state and out of the state must compensate to zero [61], so

$$0 = \sum_{i=1}^{N} (2 n_i - 1) \left[ p(\mathbf{n}_{i-})\, F_i(\mathbf{n}_{i-}) - p(\mathbf{n}_{i+}) \left( 1 - F_i(\mathbf{n}_{i+}) \right) \right] \qquad \forall\, \mathbf{n} \in \{0,1\}^N. \tag{1}$$

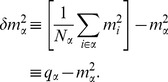

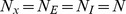

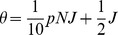

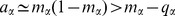

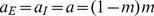

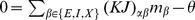

Figure 2. Recurrent local network of two populations of excitatory ($E$) and inhibitory ($I$) neurons driven by a common external population ($X$).

The external population $X$ delivers stochastic activity to the local network. The local network is a recurrent Erdös-Rényi random network with homogeneous synaptic weights $J_{\alpha\beta}$ coupling neurons in population $\beta$ to neurons in population $\alpha$, for $\alpha, \beta \in \{E, I\}$ and same parameters for all neurons. There are $N$ neurons in both the excitatory and the inhibitory population. The connection probability is $p$, and each neuron in population $\alpha$ receives the same number $K = pN$ of excitatory and inhibitory synapses. The size $N_X$ of the external population determines the amount of shared input received by each pair of cells in the local network. The neurons are modeled as binary units with a hard threshold $\theta$.

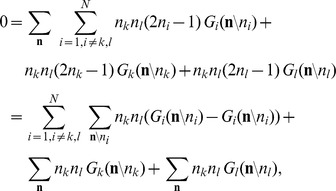

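From this equation we derive expressions for the first $\langle n_k \rangle$ and second moments $\langle n_k n_l \rangle$ by multiplying with $n_k n_l$ and summing over all possible states $\mathbf{n}$, which leads to

$$0 = \sum_{\mathbf{n}} n_k n_l \sum_{i=1}^{N} (2 n_i - 1) \underbrace{\left[ p(\mathbf{n}_{i-})\, F_i(\mathbf{n}_{i-}) - p(\mathbf{n}_{i+}) \left( 1 - F_i(\mathbf{n}_{i+}) \right) \right]}_{\equiv\, G_i(\mathbf{n} \setminus n_i)}.$$

Note that the term denoted $G_i(\mathbf{n} \setminus n_i)$ does not depend on the state of neuron $i$. We use the notation $\mathbf{n} \setminus n_i$ for the state of the network excluding neuron $i$, i.e. $\mathbf{n} \setminus n_i = (n_1, \ldots, n_{i-1}, n_{i+1}, \ldots, n_N)$. Separating the terms in the sum over $i$ into those with $i \neq k, l$ and the two terms with $i = k$ and $i = l$, we obtain

$$0 = \sum_{i \neq k,l}\; \sum_{\mathbf{n} \setminus n_i} n_k n_l\, G_i(\mathbf{n} \setminus n_i) \sum_{n_i = 0}^{1} (2 n_i - 1) + \sum_{\mathbf{n}} n_k n_l (2 n_k - 1)\, G_k(\mathbf{n} \setminus n_k) + \sum_{\mathbf{n}} n_k n_l (2 n_l - 1)\, G_l(\mathbf{n} \setminus n_l),$$

where we obtained the first term by explicitly summing over state $n_i \in \{0, 1\}$ (i.e. using $\sum_{\mathbf{n}} = \sum_{\mathbf{n} \setminus n_i} \sum_{n_i = 0}^{1}$ and evaluating the sum $\sum_{n_i = 0}^{1} (2 n_i - 1) = 0$). This first sum obviously vanishes. The remaining terms are of identical form with the roles of $k$ and $l$ interchanged. We hence only consider the first of them and obtain the other by symmetry. For $k \neq l$ the first term simplifies to

$$\sum_{\mathbf{n}} n_k n_l (2 n_k - 1)\, G_k(\mathbf{n} \setminus n_k) = \sum_{\mathbf{n} \setminus n_k} n_l \left[ p(\mathbf{n}_{k-})\, F_k(\mathbf{n}_{k-}) + p(\mathbf{n}_{k+})\, F_k(\mathbf{n}_{k+}) - p(\mathbf{n}_{k+}) \right] = \langle F_k(\mathbf{n})\, n_l \rangle - \langle n_k n_l \rangle,$$

where we denote as $\langle f(\mathbf{n}) \rangle$ the average of a function $f(\mathbf{n})$ with respect to the distribution $p(\mathbf{n})$. For $k = l$ the same steps with $n_k n_l = n_k^2 = n_k$ give $\langle F_k(\mathbf{n}) \rangle - \langle n_k \rangle$. Taken together with the mirror term $k \leftrightarrow l$, we arrive at two conditions, one for the first ($k = l$, $n_k^2 = n_k$) and one for the second ($k \neq l$) moment

$$\langle n_k \rangle = \langle F_k(\mathbf{n}) \rangle, \qquad 2 \langle n_k n_l \rangle = \langle F_k(\mathbf{n})\, n_l \rangle + \langle F_l(\mathbf{n})\, n_k \rangle \quad (k \neq l). \tag{2}$$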

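Considering the covariance $c_{kl} = \langle \delta n_k\, \delta n_l \rangle$ with centralized variables $\delta n_k = n_k - m_k$, for $k \neq l$ one arrives at

$$2 c_{kl} = \langle F_k(\mathbf{n})\, \delta n_l \rangle + \langle F_l(\mathbf{n})\, \delta n_k \rangle. \tag{3}$$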

This equation is identical to eq. 3.9 in [35], to eqs. 3.12 and 3.13 in [55], and to eqs. (19)–(22) in [24, supplement].
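The moment relations (2) and (3) can be checked exactly on a network small enough to enumerate all states. The sketch below is an illustration under stated assumptions, not part of the original derivation: the three-neuron weight matrix `J`, the threshold `THETA`, and the smooth logistic gain are hypothetical choices (a smooth gain keeps the chain ergodic). It iterates the map "pick one neuron uniformly, redraw its state", whose fixed point satisfies the balance condition (1), because all neurons are updated at the same rate.

```python
import itertools
import math

# Hypothetical 3-neuron example; J[i][j] is the weight from neuron j to neuron i.
J = [[0.0, 0.5, -1.0],
     [0.5, 0.0, -1.0],
     [0.8, 0.8, 0.0]]
THETA = 0.2
N = len(J)
STATES = list(itertools.product((0, 1), repeat=N))


def gain(i, n):
    """Smooth (logistic) gain F_i(n), an assumption made for this sketch."""
    h = sum(J[i][j] * n[j] for j in range(N))
    return 1.0 / (1.0 + math.exp(-2.0 * (h - THETA)))


def stationary_distribution(sweeps=2000):
    """Iterate the update map until the state distribution is stationary."""
    p = {s: 1.0 / len(STATES) for s in STATES}
    for _ in range(sweeps):
        new_p = dict.fromkeys(STATES, 0.0)
        for s, ps in p.items():
            for i in range(N):
                f = gain(i, s)
                new_p[s[:i] + (1,) + s[i + 1:]] += ps * f / N
                new_p[s[:i] + (0,) + s[i + 1:]] += ps * (1.0 - f) / N
        p = new_p
    return p


def expect(p, func):
    """Average of func(n) with respect to the distribution p(n)."""
    return sum(ps * func(s) for s, ps in p.items())


p = stationary_distribution()
k, l = 0, 2  # an arbitrary pair with k != l
m_k = expect(p, lambda n: n[k])
m_l = expect(p, lambda n: n[l])

# Residuals of the first and second line of (2), and of (3).
first_moment_gap = m_k - expect(p, lambda n: gain(k, n))
second_moment_gap = (2.0 * expect(p, lambda n: n[k] * n[l])
                     - expect(p, lambda n: gain(k, n) * n[l])
                     - expect(p, lambda n: gain(l, n) * n[k]))
c_kl = expect(p, lambda n: (n[k] - m_k) * (n[l] - m_l))
covariance_gap = (2.0 * c_kl
                  - expect(p, lambda n: gain(k, n) * (n[l] - m_l))
                  - expect(p, lambda n: gain(l, n) * (n[k] - m_k)))
```

All three residuals should vanish up to floating-point error, independent of the particular weights chosen, since (2) and (3) hold for any gain function.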

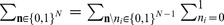

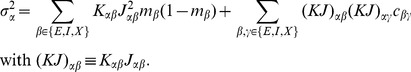

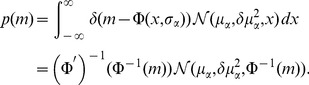

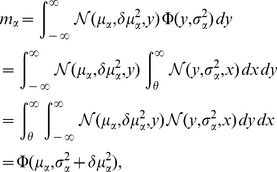

Mean-field solution

Starting from (1) for the general case  , a similar calculation as the one resulting in (2) for  leads to

where we used  , valid for binary variables. As in [24] we now assume a particular form for the gain function and for the coupling between neurons by specifying

$$F_i(\mathbf{n}) = H(h_i - \theta), \qquad h_i = \sum_{j=1}^{N} J_{ij}\, n_j,$$

where $J_{ij}$ is the incoming synaptic weight from neuron $j$ to neuron $i$, $H$ is the Heaviside function, and $\theta$ is the threshold of the activation function. For positive $\theta$ the neuron gets activated only if sufficient excitatory input is present and for negative $\theta$ the neuron is intrinsically active even in the absence of excitatory input. We denote by $h_i = \sum_j J_{ij} n_j$ the summed synaptic input to the neuron, sometimes also called the “field”. Because $n_i^2 = n_i$, the variance $a_i = \langle n_i^2 \rangle - \langle n_i \rangle^2$ of a binary variable is $a_i = m_i (1 - m_i)$. We now aim to solve (2) for the case $k = l$, i.e. the equation $m_k = \langle F_k(\mathbf{n}) \rangle$. In general, the right hand side depends on the fluctuations of all neurons projecting to neuron $k$. An exact solution is therefore complicated. However, for sufficiently irregular activity in the network we assume the neurons to be approximately independent. Further assume that in a network of homogeneous populations $\alpha$ (same parameters $\tau$, $\theta$ and same statistics of the incoming connections for all neurons, i.e. same number $K_{\alpha\beta}$ and strength $J_{\alpha\beta}$ of incoming connections from neurons in a given population $\beta$) the mean activity of an individual neuron can be represented by the population mean $m_\alpha$. The mean input to a neuron $i$ in population $\alpha$ then is

$$\mu_\alpha = \langle h_i \rangle = \sum_{j} J_{ij}\, \langle n_j \rangle = \sum_{\beta} K_{\alpha\beta}\, J_{\alpha\beta}\, m_\beta. \tag{4}$$

We assumed in the last step identical synaptic amplitudes $J_{\alpha\beta}$ for a synapse from a neuron in population $\beta$ to a neuron in population $\alpha$. So the input to each neuron has the same mean $\mu_\alpha$. As a first approximation, if the mean activity in the network is not saturated, i.e. neither $m_\alpha \simeq 0$ nor $m_\alpha \simeq 1$, mapping this activity back by the inverse gain function to the input, $\mu_\alpha$ must be close to the threshold value, so

$$\sum_{\beta} K_{\alpha\beta}\, J_{\alpha\beta}\, m_\beta \simeq \theta. \tag{5}$$

This relation may be solved for $m_E$ and $m_I$ to obtain a coarse estimate of the activity in the network [42], [54]. In mean-field approximation we assume that the fluctuations of the fields of individual neurons $h_i$ around their mean are mutually independent, so that the fluctuations $\delta h_\alpha$ of $h_\alpha$ are, in turn, caused by a sum of independent random variables and hence the variances add up to the variance $\sigma_\alpha^2$ of the field

$$\sigma_\alpha^2 = \langle \delta h_\alpha^2 \rangle = \sum_{\beta} K_{\alpha\beta}\, J_{\alpha\beta}^2\, a_\beta, \qquad a_\beta = m_\beta (1 - m_\beta). \tag{6}$$

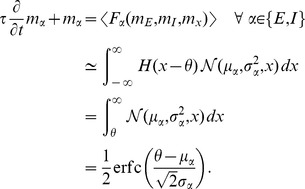

As $h_\alpha$ is a sum of typically thousands of synaptic inputs, it approaches a Gaussian distribution $\mathcal{N}(\mu_\alpha, \sigma_\alpha^2)$ with mean $\mu_\alpha$ and variance $\sigma_\alpha^2$. In this approximation the mean activity in the network is the solution of

$$m_\alpha = \langle H(h_\alpha - \theta) \rangle \simeq \int_{-\infty}^{\infty} H(x - \theta)\, \mathcal{N}(\mu_\alpha, \sigma_\alpha^2, x)\, dx = \frac{1}{2}\, \mathrm{erfc}\!\left( \frac{\theta - \mu_\alpha}{\sqrt{2}\, \sigma_\alpha} \right). \tag{7}$$

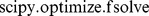

This equation needs to be solved self-consistently for $m_\alpha$ by numerical or graphical methods in order to obtain the stationary activity, because $\mu_\alpha$ and $\sigma_\alpha$ depend on $m_\alpha$ themselves. We here employ the algorithms `hybrd` and `hybrj` from the MINPACK package, implemented in scipy (version 0.9.0) [62] as the function `scipy.optimize.fsolve`.
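To make the self-consistency loop of (4), (6), and (7) concrete, the following sketch solves the scalar case in which excitatory and inhibitory cells receive identical input statistics, so that $m_E = m_I = m$. It deliberately swaps the MINPACK root finder for a plain bisection to stay free of dependencies. All parameter values (`K`, `J`, `G`, `THETA`, `M_X`) are hypothetical and not taken from this work.

```python
import math

# Hypothetical parameters, not taken from the text.
K = 1000      # inputs per neuron from each of the populations E, I, X
J = 0.03      # excitatory weight; the inhibitory weight is -G * J
G = 6.0       # relative strength of inhibition
THETA = 1.0   # threshold
M_X = 0.1     # fixed mean activity of the external population


def field_statistics(m):
    """Mean and standard deviation of the field, following (4) and (6)."""
    mu = K * J * (m - G * m + M_X)
    var = K * J ** 2 * ((1.0 + G ** 2) * m * (1.0 - m) + M_X * (1.0 - M_X))
    return mu, math.sqrt(var)


def gaussian_gain(m):
    """Right hand side of (7) evaluated at population activity m."""
    mu, sigma = field_statistics(m)
    return 0.5 * math.erfc((THETA - mu) / (math.sqrt(2.0) * sigma))


def solve_mean_activity(iterations=60):
    """Bisection on gaussian_gain(m) - m over the interval [0, 1]."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if gaussian_gain(mid) - mid > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


m = solve_mean_activity()
# Coarse estimate from the balance condition (5) for comparison.
balance_estimate = (THETA / (K * J) - M_X) / (1.0 - G)
```

Comparing `m` with `balance_estimate` shows how far the coarse estimate (5) is from the solution of (7) once the field fluctuations are taken into account. For the coupled two-population case a multidimensional root finder such as `scipy.optimize.fsolve` is the natural replacement for the bisection.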

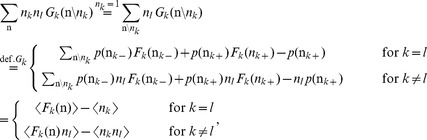

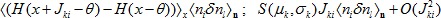

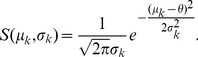

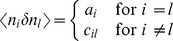

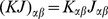

Linearized equation for correlations and susceptibility

In general, the term  in (3) couples moments of arbitrary order, resulting in a moment hierarchy [55]. Here we only determine an approximate solution. Since the single synaptic amplitudes  are small, we linearize the effect of a single synaptic input. We apply the linearization to the two terms of the form  on the right hand side of (3). In the recurrent network, the activity of each neuron in the vector  may be correlated to the activity of any other neuron  . Therefore, the input  sensed by neuron  not only depends on  directly, but also indirectly through the correlations of  with any of the other neurons  that project to neuron  . We need to take this dependence into account in the linearization. Considering the effect of one particular input  explicitly one gets

|

The first term  already contains two factors  and  , so it takes into account second order moments. Performing the expansion for the next input would yield terms corresponding to correlations of higher order, which are neglected here. This amounts to the assumption that the remaining fluctuations in  are independent of  and  , and we again approximate them by a Gaussian random variable  with mean  and variance  , so  . Here we used the smallness of the synaptic weight  and replaced the difference by the derivative  , which has the form of a susceptibility. Using the explicit expression for the Gaussian integral (7), the susceptibility is exactly

|

(8) |
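As a worked sketch of this step, assume that the Gaussian integral (7) over the Heaviside gain takes the standard complementary-error-function form with threshold θ, mean input μ, and standard deviation σ. These symbols are assumptions here, since (7) is not reproduced in this section. Differentiating with respect to μ then gives a Gaussian density evaluated at the threshold:

```latex
\begin{align}
  m(\mu,\sigma) &= \frac{1}{2}\,\mathrm{erfc}\!\left(\frac{\theta-\mu}{\sqrt{2}\,\sigma}\right), \\
  S(\mu,\sigma) &= \frac{\partial m}{\partial \mu}
    = \frac{1}{2}\cdot\left(-\frac{2}{\sqrt{\pi}}\,e^{-u^{2}}\right)\cdot\left(-\frac{1}{\sqrt{2}\,\sigma}\right)
    \quad\text{with } u=\frac{\theta-\mu}{\sqrt{2}\,\sigma} \\
  &= \frac{1}{\sqrt{2\pi}\,\sigma}\,\exp\!\left(-\frac{(\mu-\theta)^{2}}{2\sigma^{2}}\right).
\end{align}
```

Under this assumption the susceptibility is largest when the mean input sits at the threshold and decreases as the input variance grows.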

The same expansion holds for the remaining inputs to cell  . With  , the equation for the pairwise correlations (3) in linear approximation takes the form

|

(9) |

corresponding to eq. (6.8) in [35] and eqs. (31)–(33) in [24, supplement]. Note, however, that the linearization used in [35] relies on the smoothness of the gain function due to additional local noise, whereas here and in [24, supplement] a Heaviside gain function is used and only the existence of noise generated by the network itself justifies the linearization. If the input to each neuron is homogeneous, i.e.  and  for all neurons  in population  , a structurally similar equation connects the correlations  averaged over disjoint pairs of neurons belonging to two (possibly identical) populations  ,  with the population averaged variances

|

(10) |

In deriving the last expression, we replaced variances of individual neurons and correlations between individual pairs by their respective population averages and counted the number of connections. This equation corresponds to eqs. (9.14)–(9.16) in [35] (which lack, however, the external population  , and note the typo in the first term in line 2 of eq. (9.16), which should read

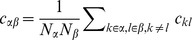

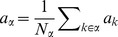

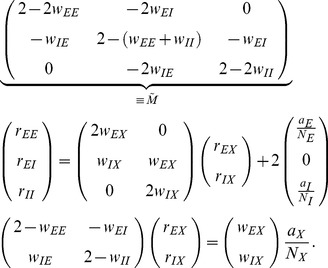

, and note the typo in the first term in line 2 of eq. (9.16), which should read  ) and eqs. (36) in [24, supplement]. Written in matrix form (10) takes the form (24) stated in the results sections of the present article, where we defined

) and eqs. (36) in [24, supplement]. Written in matrix form (10) takes the form (24) stated in the results sections of the present article, where we defined

|

(11) |

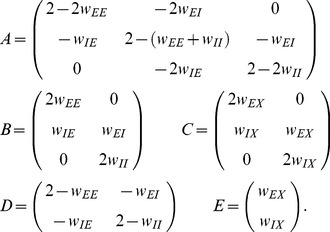

The explicit solution of the system of equations in the second line of (24) is

|

(12) |

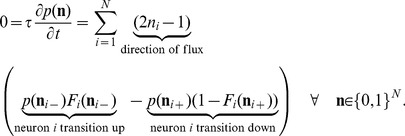

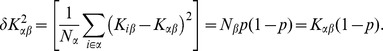

Mean-field theory including finite-size correlations

The mean-field solution presented in “Mean-field solution” assumes that correlations among the neurons in the network are negligible. This assumption enters the expression (6) for the variance of the input to a neuron. Having determined the actual magnitude of the correlations in (24), we are now able to state a more accurate approximation in which we take these correlations into account, modifying the expression for the variance of the field

|

(13) |

This correction suggests an iterative scheme: Initially we solve the mean-field equation (7) assuming  (hence

(hence  given by (6)). In each step of the iteration we then calculate the correlations by (24), compute the mean-field solution of (7) and the susceptibility

given by (6)). In each step of the iteration we then calculate the correlations by (24), compute the mean-field solution of (7) and the susceptibility  (8), taking into account the correlations (13) determined in the previous step. These steps are iterated until the solution (

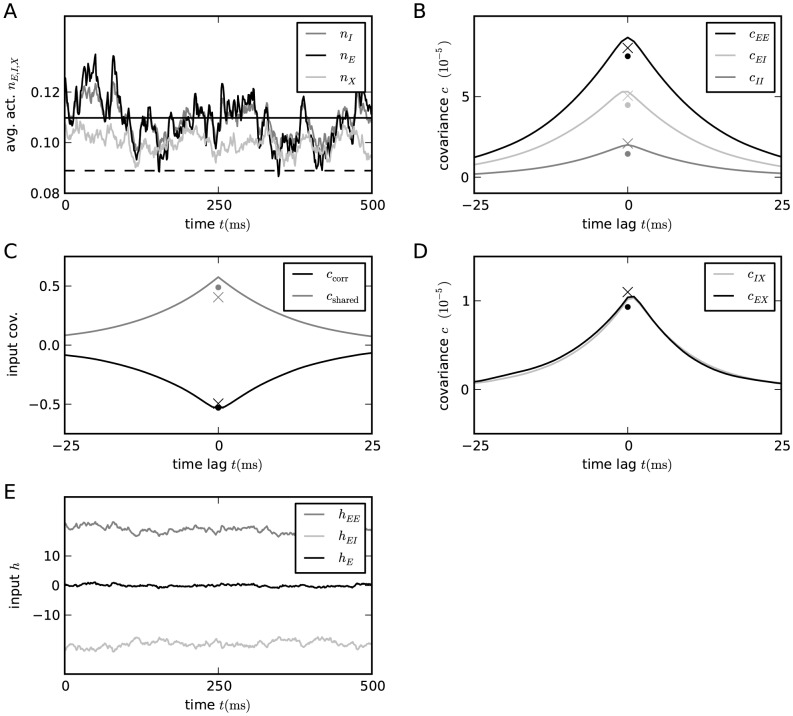

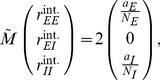

(8), taking into account the correlations (13) determined in the previous step. These steps are iterated until the solution ( ) converges. We use this approach to determine the correlation structure in Figure 3, where we iterated until the solution became invariant up to a residual absolute difference of

) converges. We use this approach to determine the correlation structure in Figure 3, where we iterated until the solution became invariant up to a residual absolute difference of  . A comparison of the distribution of the total synaptic input

. A comparison of the distribution of the total synaptic input  at the end of the iteration with a Gaussian distribution with parameters

at the end of the iteration with a Gaussian distribution with parameters  and

and  is shown in Figure 3D.

is shown in Figure 3D.

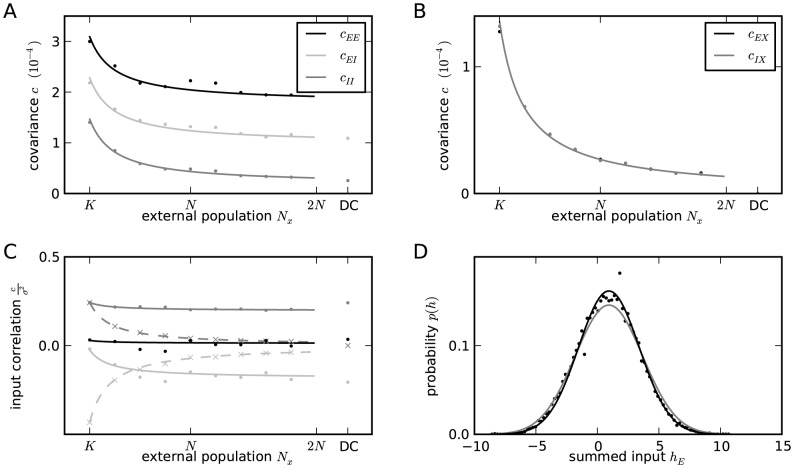

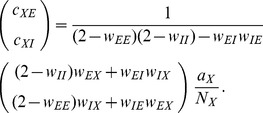

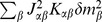

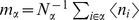

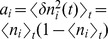

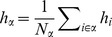

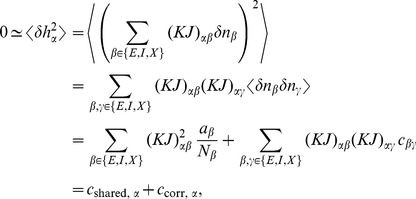

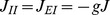

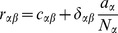

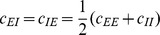

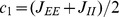

Figure 3. Correlations in a network of three populations as illustrated in Figure 2 in dependence of the size  of the external population.

Each neuron in population  receives  randomly drawn excitatory inputs with weight  ,  randomly drawn inhibitory inputs of weight  and  external inputs of weight  (homogeneous random network with fixed in-degree, connection probability  ). A Correlations averaged over pairs of neurons within the local network (22). Dots indicate results of direct simulation over  averaged over  pairs of neurons. Curves show the analytical result (24). The point “DC” shows the correlation structure emerging if the drive from the external population is replaced by a constant value  , which provides the same mean input as the original external drive. B Correlations between neurons within the local network and the external population averaged over pairs of neurons (same labeling as in A). C Correlation between the inputs to a pair of cells in the network decomposed into the contributions due to shared inputs  (gray, eq. 25) and due to correlations  in the presynaptic activity (light gray, eq. 26). Dashed curves and St. Andrew's Crosses show the contribution due to external inputs, solid curves and dots show the contribution from local inputs. The sum of all components is shown by black dots and curve. Curves are theoretical results based on (24), (25), and (26), symbols are obtained from simulation. D Probability distribution of the fluctuating input  to a single neuron in the excitatory population. Dots show the histogram obtained from simulation binned over the interval  with a bin size of  . The gray curve is the prediction of a Gaussian distribution obtained from mean-field theory neglecting correlations, with mean and variance given by (4) and (6), respectively. The black curve takes correlations in the afferent signals into account and has a variance given by (13). Other parameters: simulation resolution  , synaptic delay  , activity measurement in intervals of  . Threshold of the neurons  , time constant of inter-update intervals  . The average activity in the network is  .

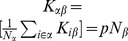

Influence of inhomogeneity of in-degrees

In the previous sections we assumed the number of incoming connections to be the same for all neurons. Studying a random network in its original Erdős-Rényi [63] sense, the number of synaptic inputs  to a neuron  from population  is a binomially distributed random number. As a consequence, the time-averaged activity differs among neurons. Since each neuron  samples a random subset of inputs from a given population  , we can assume that the realization of  is independent of the realization of the time-averaged activity of the inputs from population  . So these two contributions to the variability of the mean input  add up. The number of incoming connections to a neuron in population  follows a binomial distribution

where  is the connection probability and  the size of the sending population. The mean value is as before  , where we denote the expectation value with respect to the realization of the connectivity as  . The variance of the in-degree is hence

|
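For reference, the first two moments of a binomial in-degree can be checked numerically. The sketch assumes that the in-degree is Binomial(N, p), with connection probability p and sending population size N, so that the mean is pN and the variance is pN(1 − p). The function name and the values of `N` and `p` are hypothetical.

```python
from math import comb


def binomial_moments(n, p):
    """Return mean and variance of Binomial(n, p), computed directly from its pmf."""
    pmf = [comb(n, k) * p**k * (1.0 - p)**(n - k) for k in range(n + 1)]
    mean = sum(k * w for k, w in enumerate(pmf))
    var = sum((k - mean)**2 * w for k, w in enumerate(pmf))
    return mean, var


# Hypothetical sending population size and connection probability.
N, p = 200, 0.1
mean, var = binomial_moments(N, p)

print("mean in-degree:", mean, "expected p*N =", p * N)
print("variance of in-degree:", var, "expected p*N*(1-p) =", p * N * (1.0 - p))
```

In the sparse limit p → 0 the variance approaches the mean, which is the Poisson case.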

In the following we adapt the results from [54], [24] to the present notation. The contribution of the variability of the number of synapses to the variance of the mean input is  . The contribution from the distribution of the mean activities can be expressed by the variance of the mean activity defined as

|

The  independently drawn inputs hence contribute  , as the variances of the  terms add up. So together we have [54, eq. 5.5–5.6]

Using  we obtain

|

(14) |

The latter expression differs from [54, eq. 5.7] only in the term  that is absent in the work of van Vreeswijk and Sompolinsky, because they assumed the number of synapses to be Poisson distributed in the limit of sparse connectivity [54, Appendix, (A.6)] (also note that their  corresponds to our  ). The expression (14) is identical to [24, supplement, eq. (25)].

Since the variance of a binary signal with time-averaged activity  is  , the population-averaged variance is hence

| (15) |

So the sum of  such (uncorrelated) signals contributes to the fluctuation of the input as

| (16) |

The contribution due to the variability of the number of synapses  can be neglected in the limit of large networks [24]. With the time-averaged activity of a single cell with mean input  and variance  given by (7)  the distribution of activity in the population is

|

(17) |

The mean activity of the whole population is

|

(18) |

because the penultimate line is a convolution of two Gaussian distributions, so the means and variances add up. The second moment of the population activity is
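The statement that means and variances add under the Gaussian convolution can be checked numerically. The sketch below assumes that the single-cell activity has the complementary-error-function form of a Gaussian integral over a Heaviside gain and that the mean input is Gaussian distributed across the population with mean `mu_bar` and standard deviation `delta_mu`. These names and all numerical values are hypothetical.

```python
from math import erfc, exp, pi, sqrt


def rate(mu, sigma, theta):
    """Assumed single-cell activity: probability that Gaussian input exceeds theta."""
    return 0.5 * erfc((theta - mu) / (sqrt(2.0) * sigma))


def gauss(x, mean, sd):
    """Gaussian probability density."""
    return exp(-0.5 * ((x - mean) / sd) ** 2) / (sqrt(2.0 * pi) * sd)


def population_mean_numeric(mu_bar, delta_mu, sigma, theta, n=20001, width=10.0):
    """Average rate(mu) over a Gaussian spread of mean inputs (trapezoidal rule)."""
    lo = mu_bar - width * delta_mu
    h = 2.0 * width * delta_mu / (n - 1)
    total = 0.0
    for i in range(n):
        x = lo + i * h
        weight = 0.5 if i in (0, n - 1) else 1.0
        total += weight * gauss(x, mu_bar, delta_mu) * rate(x, sigma, theta)
    return total * h


def population_mean_closed(mu_bar, delta_mu, sigma, theta):
    """Closed form: the two Gaussian variances add."""
    return rate(mu_bar, sqrt(sigma**2 + delta_mu**2), theta)


numeric = population_mean_numeric(-0.5, 0.8, 1.2, 1.0)
closed = population_mean_closed(-0.5, 0.8, 1.2, 1.0)
print(numeric, closed)
```

The two values agree because the average over the population is the probability that the sum of two independent Gaussian variables exceeds the threshold.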

| (19) |

These expressions are identical to [24, supplement, eqs. (26), (27)]. The system of equations (4), (14), (16), (18), and (19) can be solved self-consistently. We use the algorithms hybrd and hybrj of the MINPACK package, implemented in scipy (version 0.9.0) [62] as the function scipy.optimize.fsolve. This yields the self-consistent solutions for  and  and hence the distribution of time averaged activity (17) can be obtained, shown in Figure 4F.

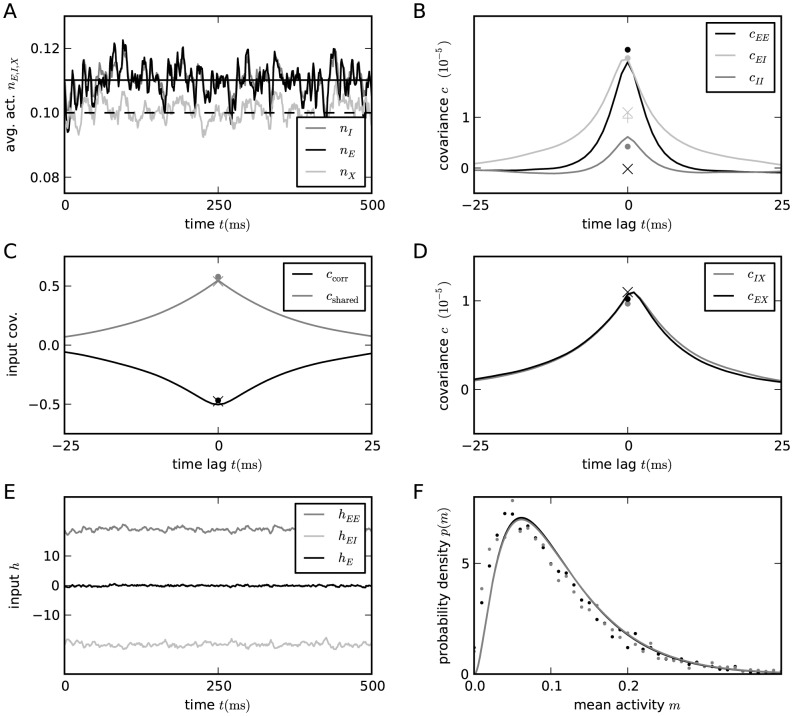

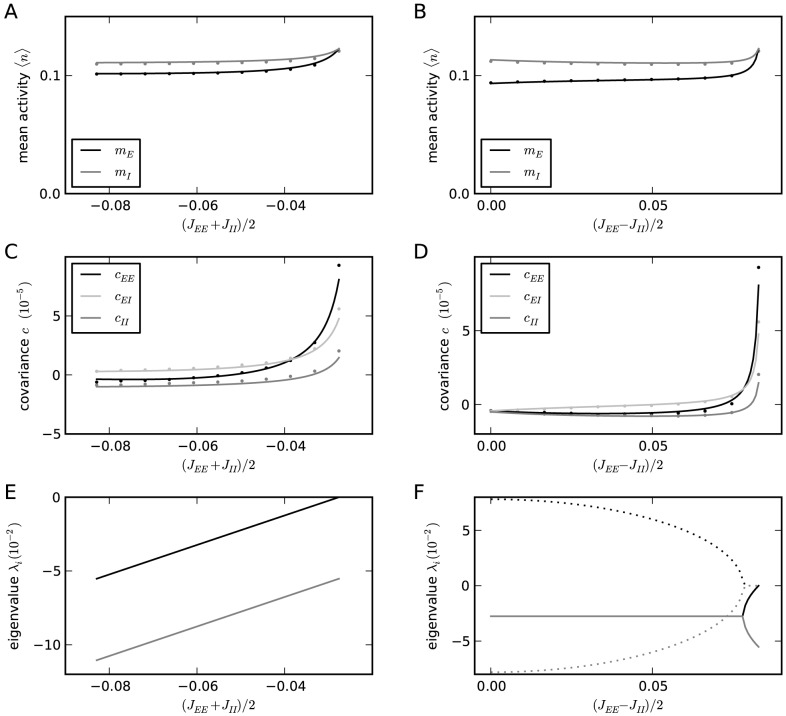

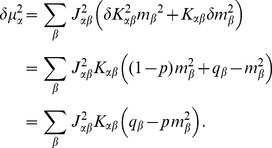

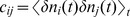

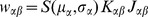

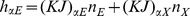

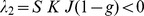

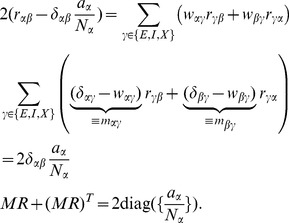

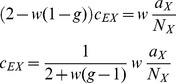

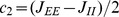

Figure 4. Activity in a network of  binary neurons as described in [24, their Fig. 2], with  ,  ,  ,  ,  ,  .

Number  of synaptic inputs binomially distributed as  , with connection probability  . A Population averaged activity (black  , gray  , light gray  ). Analytical prediction (5) for the mean activities  (dashed horizontal line) and numerical solution of mean field equation (7) (solid horizontal line). B Cross correlation between excitatory neurons (black curve), between inhibitory neurons (gray curve), and between excitatory and inhibitory neurons (light gray curve) obtained from simulation. St. Andrew's Crosses show the theoretical prediction from [24, supplement, eqs. 38,39] (prediction yields  , so only one cross is visible). Dots show the theoretical prediction (24). The plus symbol shows the prediction for the correlation  when terms proportional to  and  are set to zero. C Correlation between the input currents to a pair of excitatory neurons. Contribution due to pairwise correlations  (black curve) and due to shared input  (gray curve). Symbols show the theoretical predictions based on [24] (crosses) and based on (24) (dots). D Similar to B, but showing the correlations between external neurons and neurons in the excitatory and inhibitory population. E Fluctuating input  averaged over the excitatory population (black), separated into contributions from excitatory synapses  (gray) and from inhibitory synapses  (light gray). F Distribution of time averaged activity obtained by direct simulation (symbols) and analytical prediction (17) using the numerically evaluated self-consistent solution for the first  and second moments  ,  (19). Duration of simulation  , mean activity  , other parameters as in Figure 3.

Results

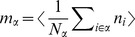

Our aim is to investigate the effect of recurrence and external input on the magnitude and structure of cross-correlations between the activities in a recurrent random network, as defined in “Networks of binary neurons”. We employ the established recurrent neuronal network model of binary neurons in the balanced regime [42]. The binary dynamics has the advantage of being more easily amenable to analytical treatment than spiking dynamics, and a method to calculate the pairwise correlations exists [35]. The choice of binary dynamics moreover renders our results directly comparable to the recent findings on decorrelation in such networks [24]. Our model consists of three populations of neurons, one excitatory and one inhibitory population which together represent the local network, and an external population providing additional excitatory drive to the local network, as illustrated in Figure 2. The external population may either be conceived as representing input into the local circuit from remote areas or as representing sensory input. The external population contains  neurons, which are pairwise uncorrelated and have a stochastic activity with mean  . Each neuron in population  within the local network draws  connections randomly from the finite pool of  external neurons.  therefore determines the number of shared afferents received by each pair of cells from the external population with on average  common synapses. In the extreme case  all neurons receive exactly the same input, whereas for large  the fraction of shared external input approaches  . The common fluctuating input received from the finite-sized external population hence provides a signal imposing pairwise correlations, the amount of which is controlled by the parameter  .
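The dependence of shared input on the pool size can be illustrated with a small simulation. It assumes, as a simplification, that each of two neurons independently draws a fixed number of distinct afferents uniformly from the external pool. The expected overlap is then the product of the two in-degrees divided by the pool size. The function name and all numbers are hypothetical.

```python
import random


def mean_shared_inputs(n_ext, k_a, k_b, trials=2000, seed=1):
    """Average number of common afferents of two neurons drawing from one pool."""
    rng = random.Random(seed)
    pool = range(n_ext)
    total = 0
    for _ in range(trials):
        a = set(rng.sample(pool, k_a))   # afferents of the first neuron
        b = set(rng.sample(pool, k_b))   # afferents of the second neuron
        total += len(a & b)
    return total / trials


k = 100
for n_ext in (100, 1000, 10000):
    simulated = mean_shared_inputs(n_ext, k, k)
    expected = k * k / n_ext
    print(f"pool size {n_ext}: simulated {simulated:.2f}, expected {expected:.2f}")
```

When the pool is as small as the in-degree, every afferent is shared. As the pool grows, the shared fraction falls off inversely with the pool size.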

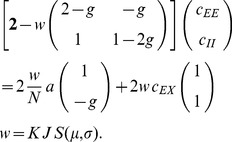

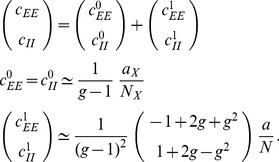

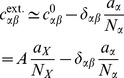

Correlations are driven by intrinsic and external fluctuations

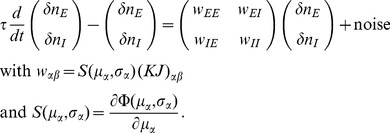

To explain the correlation structure observed in a network with external inputs (Figure 2), we extend the existing theory of pairwise correlations [35] to include the effect of externally imposed correlations. The global behavior of the network can be studied with the help of the mean-field equation (7) for the population-averaged mean activity

| (20) |

where the fluctuations of the input  to a neuron in population  are to good approximation Gaussian with the moments

| (21) |

To determine the average activities in the network, the mean-field equation (20) needs to be solved self-consistently, as the right-hand side depends on the mean activities  through (21), as explained in “Mean-field theory including finite-size correlations”. Here  denotes the number of connections from population  to  , and  their average synaptic amplitude. Once the mean activity in the network has been found, we can determine the structure of correlations. For simplicity we focus on the zero time lag correlation,  , where  is the deflection of neuron  's activity from baseline and  is the variance of neuron  's activity. Starting from the master equation for the network of binary neurons, in “Methods” for completeness and consistency in notation we re-derive the self-consistent equation that connects the cross covariances  averaged over pairs of neurons from population  and  and the variances  averaged over neurons from population

| (22) |

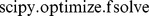

The obtained inhomogeneous system of linear equations (24) reads [35]

| (23) |

Here  measures the effective linearized coupling strength from population  to population  . It depends on the number of connections  from population  to  , their average synaptic amplitude  and the susceptibility  of neurons in population  . The susceptibility  given by (8) quantifies the influence of fluctuation in the input to a neuron in population  on the output.  depends on the working point  of the neurons in population  . The autocorrelations  ,  and  are the inhomogeneity in the system of equations, so they drive the correlations, as pointed out earlier [35]. This is in line with the linear theories [17], [30] for leaky integrate-and-fire model neurons, where cross-correlations are proportional to the auto-correlations; the system of equations (23) is identical to [35, eqs. (9.14)–(9.16)]. Note that this description holds for finite-sized networks. With the symmetry  , (23) can be written in matrix form as

|

(24) |

The explicit forms of the matrices  are given in (11). This system of linear equations can be solved by elementary methods. From the structure of the equations it follows that the correlations between the external input and the activity in the network,  and  , are independent of the other correlations in the network. They are solely determined by the solution of the system of equations in the second line of (24), driven by the fluctuations of the external drive  . The correlations among the neurons within the network are given by the solution of the first system in (24). They are hence driven by two terms: the fluctuations of the neurons within the network, proportional to  and  , and the correlations between the external population and the neurons in the network,  and  .

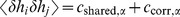

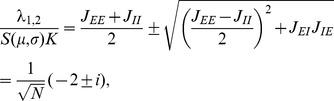

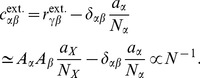

The second line of (24) shows that all correlations depend on the size  of the external population. Since the number  of randomly drawn afferents per neuron from this population is constant, the mean number of shared inputs to a pair of neurons is  . In the extreme case  on the left of Figure 3 all neurons receive exactly identical input. If the recurrent connectivity were absent, we would hence have perfectly correlated activity within the local network; the covariance between two neurons would be equal to their variance  , in this particular network  . Figure 3A shows that the covariance in the recurrent network is much smaller, on the order of  . The reason is the recently reported mechanism of decorrelation [24], explained by the negative feedback in inhibition-dominated networks [17]. Increasing the size of the external population decreases the amount of shared input, as shown in Figure 3C. In the limit where the external drive is replaced by a constant value (visualized as point “DC”), the external drive consequently does not contribute to correlations in the network. Figure 3A shows that the relative position of the three curves does not change with  . The overall offset, however, changes. This can be understood by inspecting the analytical result (24): The solution of this system of linear equations is a superposition of two contributions. One is due to the externally imposed fluctuations, proportional to  , the other is due to fluctuations generated within the local network, proportional to  and  . Varying the size of the external population only changes the external contribution, causing the variation in the offset, while the internal contribution, causing the splitting between the three curves, remains constant. In the extreme case  ( ), we still observe a similar structure. The slightly larger splitting is due to the reduced variance  in the single neuron input, which consequently increases the susceptibility  (8).

Figure 3D shows the probability distribution of the input to a neuron in population […]. The histogram is well approximated by a Gaussian. If correlations among the afferents are neglected, the first two moments of this Gaussian are given by (21); this approximation deviates from the result of direct simulation. Taking the correlations among the afferents into account changes the variance of the input according to (13) and yields a better estimate of the input statistics, as shown in Figure 3D. This improved estimate can be accounted for in the solution of the mean-field equation (20), which in turn affects the correlations via the susceptibility. Iterating this procedure until convergence, as explained in “Mean-field theory including finite-size correlations”, yields the semi-analytical results presented in Figure 3.
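How afferent correlations enter the input variance, and how the changed variance feeds back into the mean activity, can be sketched for a deliberately simplified case. The following is a minimal sketch and not the procedure of this paper: it assumes a single homogeneous population of binary neurons with a Heaviside gain function and Gaussian input. The names `K`, `J`, `theta`, and `c` and all numerical values are hypothetical. The variance formula is the generic expression for a sum of `K` equally weighted, pairwise correlated binary variables and only stands in for (13).

```python
import math

def input_variance(K, J, m, c=0.0):
    """Variance of the summed input from K binary afferents with weight J.

    m is the mean activity, so each afferent has variance a = m * (1 - m);
    c is the (assumed homogeneous) covariance between two distinct afferents.
    """
    a = m * (1.0 - m)
    return K * J**2 * a + K * (K - 1) * J**2 * c

def gain(m, K, J, theta, c=0.0):
    """Probability that a Gaussian input exceeds the threshold theta."""
    mu = K * J * m
    sigma = math.sqrt(max(input_variance(K, J, m, c), 1e-12))
    return 0.5 * math.erfc((theta - mu) / (math.sqrt(2.0) * sigma))

def solve_mean_activity(K, J, theta, c=0.0, tol=1e-14):
    """Find m with gain(m) = m by bisection (assumes one sign change on (0, 1))."""
    lo, hi = 1e-9, 1.0 - 1e-9
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if gain(mid, K, J, theta, c) - mid > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical inhibitory network: K inputs of weight J, negative threshold.
K, J, theta = 50, -0.2, -3.0
m_independent = solve_mean_activity(K, J, theta, c=0.0)
m_correlated = solve_mean_activity(K, J, theta, c=0.002)
print(m_independent, m_correlated)
```

In this sketch the covariance `c` is held fixed. In a self-consistent scheme it would itself be recomputed from the current mean-field solution in each iteration until both quantities stop changing.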

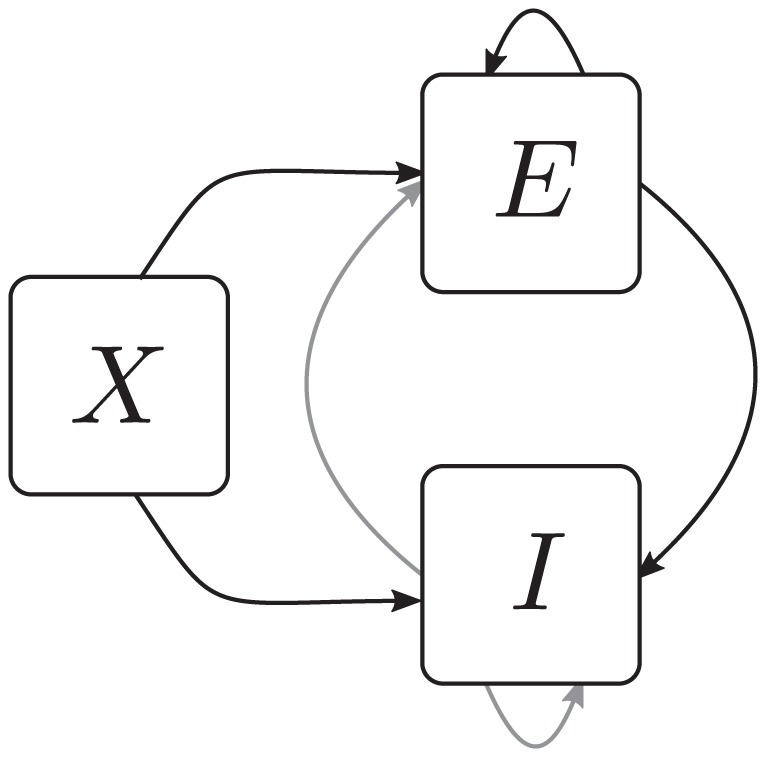

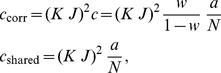

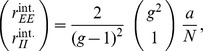

Cancellation of input correlations

For strongly coupled networks in the limit of large network size, previous work [24], [52] derived a balance equation for the correlations between pairs of neurons. The resulting expressions for the correlations are approximate at finite network size and become exact for infinitely large networks. The authors show that the resulting structure of correlations amounts to a suppression of the correlations between the input currents to a pair of cells, and that the population-averaged activity closely follows the fluctuations imposed by the external drive, a property known as fast tracking [42]. Here we revisit these three observations (the correlation structure, the input correlation, and fast tracking) from a different viewpoint, providing an explanation based on the suppression of population rate fluctuations by negative feedback [17].

Figure 4A shows the population activities in a network of three populations for fixed numbers of neurons […] and otherwise identical parameters as in [24, their Fig. 2]. Moreover, we distributed the number of incoming connections per neuron according to a binomial distribution as in the original publication. The deflections of the excitatory and the inhibitory population partly resemble those of the external drive to the network, but partly the fluctuations are independent. Our theoretical result for the correlation structure (24) is in line with this observation: the fluctuations in the network are not only driven by external input (proportional to […]), but also by the fluctuations generated within the local populations (proportional to […] and […]), so the tracking cannot be perfect in finite-sized networks.

We now consider the fluctuations in the input averaged over all neurons belonging to a particular population, […]. We can decompose this input into contributions from excitatory (local and external) and from inhibitory cells, […] and […], respectively, where we used the shorthand […]. As shown in Figure 4E, the contributions of excitation and inhibition cancel each other, so that the total input fluctuates close to the threshold ([…]) of the neurons: the network is in the balanced state [42]. Moreover, this cancellation holds not only for the mean value but also for fast fluctuations, which are consequently reduced in the sum compared to the individual components (Figure 4E).

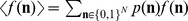

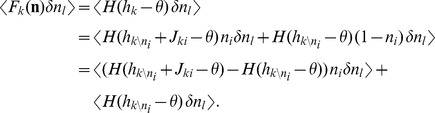

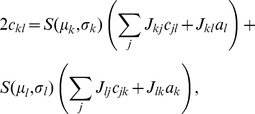

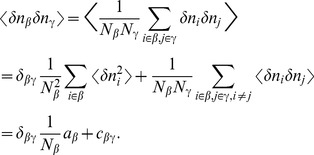

We next show that this suppression of fluctuations directly implies a relation for the correlation between the inputs to a pair of individual neurons. There are two distinct contributions to this correlation, one due to common inputs shared by the pair of neurons (both neurons assumed to belong to population […])

| (25) |

and one due to the correlations between afferents

| (26) |

Figure 4C shows these two contributions to be of opposite sign but approximately the same magnitude, as already shown in [24, supplement] and in [17]. Figure 3C shows a further decomposition of the input correlation into contributions due to the external sources and due to connections from within the local network. The sum of all components is much smaller than each individual component. This cancellation is equivalent to small fluctuations in the population-averaged input, because

| (27) |

where in the second step we used the general relation between the covariance among two population-averaged signals, the population-averaged variance, and the pairwise averaged covariances, which reads [17, cf. eq. (1)]

| (28) |

We have therefore shown that the cancellation of the contribution of shared input with the contribution due to the correlations among cells is equivalent to a suppression of the fluctuations in the population-averaged input signal to the population […].
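The link between pairwise covariances and the variance of a population average can be checked numerically in a simplified setting. The sketch below is an illustration under stated assumptions, not a reproduction of (27) or (28): it assumes `n` units with identical variance `a` and identical pairwise covariance `c`, and the helper names and numbers are hypothetical. For this homogeneous case the variance of the average is `a / n + (n - 1) / n * c`, which follows directly from summing all entries of the covariance matrix.

```python
def population_average_variance(cov):
    """Variance of the average (1/N) * sum_i x_i, given the covariance matrix of the x_i."""
    n = len(cov)
    return sum(sum(row) for row in cov) / n**2

def homogeneous_covariance(n, a, c):
    """Covariance matrix with variance a on the diagonal and covariance c elsewhere."""
    return [[a if i == j else c for j in range(n)] for i in range(n)]

def closed_form(n, a, c):
    """Closed form of the same quantity: a / N + (N - 1) / N * c."""
    return a / n + (n - 1) / n * c

n, a = 100, 0.25
for c in (0.01, 0.0, -a / n):
    direct = population_average_variance(homogeneous_covariance(n, a, c))
    print(c, direct, closed_form(n, a, c))
```

For uncorrelated units the variance of the average is `a / n`. A negative pairwise covariance of order `-a / n` reduces it further, and it vanishes exactly at `c = -a / (n - 1)`, the lower bound for a homogeneous covariance. Small population fluctuations and weakly negative average pairwise covariances are therefore two views of the same constraint.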

This suppression of fluctuations in the population-averaged input is a consequence of the overall negative feedback in these networks [17]: a fluctuation of the population-averaged input causes a response in network activity which is coupled back with a negative sign, counteracting its own cause and hence suppressing the fluctuation. Expression (27) is an algebraic identity, so the correlations between the total inputs to a pair of cells must be suppressed as well. Qualitatively, this property can be understood by inspecting the mean-field equation (7) for the population-averaged activities, where we linearized the gain function around the stationary mean-field solution to obtain

| (29) |

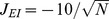

Here the noise term qualitatively describes the fluctuations caused by the stochastic update process and the external drive (see [53] for the appropriate treatment of the noise). After transformation into the coordinate system of eigenvectors (with associated eigenvalues) of the effective connectivity matrix, each component fulfills the differential equation

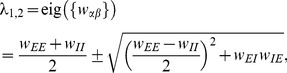

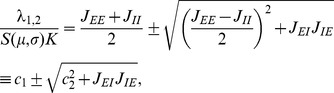

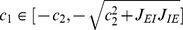

For stability the eigenvalues must satisfy […]. In the example of the […] network shown in Figure 4 we have the two eigenvalues

| (30) |

which in the case of identical susceptibility for all populations can be expressed in terms of the synaptic weights

| (31) |

where in the second line we inserted the numerical values of Figure 4. The fluctuations  are hence suppressed so the contributions

are hence suppressed so the contributions  to the fluctuations on the input side are small. This explains why fluctuations of

to the fluctuations on the input side are small. This explains why fluctuations of  are small in networks stabilized by negative feedback. This argument also shows why the suppression of input-correlations does not rely on a balance between excitation and inhibition; it is as well observed in purely inhibitory networks of leaky integrate-and-fire neurons [17, cf. text following eq. (21) therein] and of binary neurons [52, eq. (30)], where the overall negative feedback suppresses population fluctuations

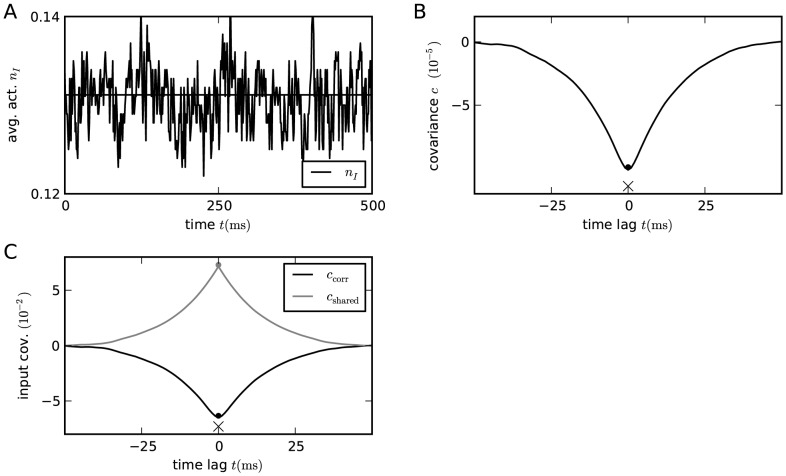

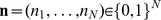

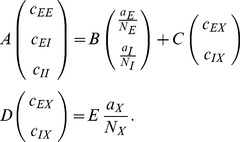

are small in networks stabilized by negative feedback. This argument also shows why the suppression of input-correlations does not rely on a balance between excitation and inhibition; it is as well observed in purely inhibitory networks of leaky integrate-and-fire neurons [17, cf. text following eq. (21) therein] and of binary neurons [52, eq. (30)], where the overall negative feedback suppresses population fluctuations  in exactly the same manner, as the only appearing eigenvalue in this case is negative. Figure 5 shows the correlations in a purely inhibitory network without any external fluctuating drive. In this network the neurons are autonomously active due to a negative threshold

in exactly the same manner, as the only appearing eigenvalue in this case is negative. Figure 5 shows the correlations in a purely inhibitory network without any external fluctuating drive. In this network the neurons are autonomously active due to a negative threshold  , which, by the cancellation argument

, which, by the cancellation argument  , was chosen to obtain a mean activity of about

, was chosen to obtain a mean activity of about  . Pairwise correlations in the finite-sized network follow from (23) to be negative,

. Pairwise correlations in the finite-sized network follow from (23) to be negative,

| (32) |

and approach […] in the limit of strong coupling, as also shown in [52, eq. (30)]. The contributions to the input correlation follow from (25) and (26) as

| (33) |

so that for strong negative feedback […] the contribution due to correlations approaches […]. In this limit the two contributions cancel each other as in the inhibition-dominated network with excitation and inhibition. Note, however, that the presence of externally imposed fluctuations is not required for the mechanism of cancellation by negative feedback. The negative feedback also suppresses purely network-generated fluctuations. For finite coupling we have […], so the total currents are always positively correlated.
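The suppression of a fluctuation mode by negative feedback, as argued above for the eigenvalues of the effective connectivity, can be made quantitative in a toy setting. The following sketch assumes a single linear mode obeying `tau * dx/dt = -(1 - lam) * x + xi(t)`, driven by Gaussian white noise `xi`. The white-noise assumption, the function names, and the parameter values are illustrative choices and do not replace the treatment of the noise in [53]. Under this assumption the stationary variance is `noise_intensity / (2 * tau * (1 - lam))`, so a more negative eigenvalue `lam` means smaller fluctuations.

```python
def stationary_variance(lam, tau=1.0, noise_intensity=1.0):
    """Stationary variance of tau * dx/dt = -(1 - lam) * x + xi(t).

    xi is assumed to be Gaussian white noise with
    <xi(t) xi(s)> = noise_intensity * delta(t - s); requires lam < 1.
    """
    if lam >= 1.0:
        raise ValueError("mode is unstable for lam >= 1")
    return noise_intensity / (2.0 * tau * (1.0 - lam))

def suppression_factor(lam):
    """Variance relative to the uncoupled case lam = 0."""
    return stationary_variance(lam) / stationary_variance(0.0)

# Positive feedback amplifies, negative feedback suppresses fluctuations.
for lam in (0.5, 0.0, -1.0, -9.0):
    print(lam, suppression_factor(lam))
```

The factor `1 / (1 - lam)` does not depend on where the noise comes from, which is consistent with the observation that purely network-generated fluctuations are suppressed in the same way as externally imposed ones.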

Figure 5. Suppression of correlations by purely inhibitory feedback in the absence of external fluctuations.

Activity in a network of […] binary inhibitory neurons with synaptic amplitudes […]. Each neuron receives […] randomly drawn inputs (fixed in-degree) with […]. A Population-averaged activity. Numerical solution of the mean-field equation (7) (solid horizontal line). B Cross-covariance between inhibitory neurons. Theoretical result (32) shown as dot. St. Andrew's cross indicates the leading order term […]. C Correlation between the input currents to a pair of neurons. The black curve is the contribution due to pairwise correlations […], the gray curve is the contribution of shared input […]. The symbols show the theoretical expectations (33) based on the leading order (crosses) and based on the full solution (32) (dots). Threshold of neurons […].

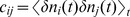

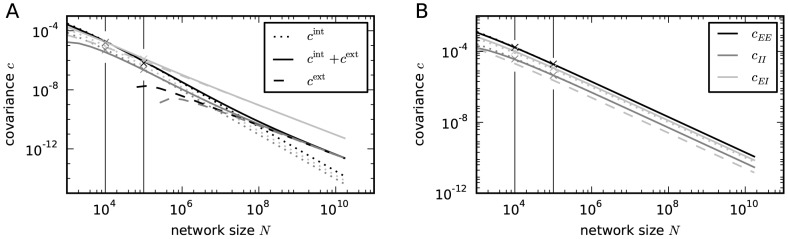

An interesting special case is a network with homogeneous connectivity, as studied in “Correlations are driven by intrinsic and external fluctuations”, where […] and […], shown in Figure 6. In this symmetric case there is only one negative eigenvalue […]. The other eigenvalue vanishes, so fluctuations are only mildly suppressed in direction […]. However, on the input side of the neurons, these fluctuations are not seen, since their contribution to the input field vanishes by virtue of the vanishing eigenvalue. Another consequence of the vanishing eigenvalue is that the system can freely fluctuate along the eigendirection […]. Consequently, the tracking of the external signal is much weaker in this case, as evidenced in Figure 6A.
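The eigenvalue structure of this symmetric case can be verified with a small numerical example. The sketch below assumes a two-population effective connectivity whose entries depend only on the sending population, so both rows are identical. The values `w_e` and `w_i` and the helper names are hypothetical and are not the parameters of Figure 6. Such a matrix has rank one: one eigenvalue equals `w_e + w_i` and the other is zero.

```python
import cmath

def matvec(w, v):
    """Multiply a 2x2 matrix (list of rows) with a 2-vector."""
    return [w[0][0] * v[0] + w[0][1] * v[1],
            w[1][0] * v[0] + w[1][1] * v[1]]

def eigenvalues_2x2(w):
    """Eigenvalues of a real 2x2 matrix from its trace and determinant."""
    tr = w[0][0] + w[1][1]
    det = w[0][0] * w[1][1] - w[0][1] * w[1][0]
    disc = cmath.sqrt(tr * tr - 4.0 * det)
    return (tr + disc) / 2.0, (tr - disc) / 2.0

# Hypothetical effective weights that depend only on the sending population.
w_e, w_i = 1.0, -4.0
w = [[w_e, w_i],
     [w_e, w_i]]

lam_plus, lam_minus = eigenvalues_2x2(w)
null_direction = [w_i, -w_e]  # eigenvector belonging to the zero eigenvalue
print(lam_plus, lam_minus, matvec(w, null_direction))
```

Activity fluctuations along `null_direction` are mapped to zero by the connectivity, so they do not appear in the input to any neuron and are not counteracted by feedback. The nonzero eigenvalue is negative only if inhibition dominates, that is, if `w_e + w_i < 0`.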

Figure 6. Activity in a network of […] binary neurons with synaptic amplitudes […], […] depending exclusively on the type of the sending neuron ([…] or […]).