Abstract

Lensfree on-chip imaging and sensing platforms provide compact and cost-effective designs for various telemedicine and lab-on-a-chip applications. In this work, we demonstrate computational solutions for some of the challenges associated with (i) the use of broadband, partially-coherent illumination sources for on-chip holographic imaging, and (ii) multicolor detection for lensfree fluorescent on-chip microscopy. Specifically, we introduce spectral demultiplexing approaches that aim to digitally narrow the spectral content of broadband illumination sources (such as wide-band light emitting diodes or even sunlight) to improve spatial resolution in holographic on-chip microscopy. We also demonstrate the application of such spectral demultiplexing approaches for wide-field imaging of multicolor fluorescent objects on a chip. These computational approaches can be used to replace e.g., thin-film interference filters, gratings or other optical components used for spectral multiplexing/demultiplexing, which can form a desirable solution for cost-effective and compact wide-field microscopy and sensing needs on a chip.

Introduction

The optical microscope has been a very important tool for various fields, including engineering, medicine and sciences. Over the last decades, various breakthrough advances pushed the limits of optical microscopy in terms of resolution, contrast, signal-to-noise ratio (SNR), imaging speed, etc. opening up new applications for light microscopy. However, most of these advanced optical microscopy techniques are limited to advanced research and clinical settings due to their relatively high cost, complexity and weight. Computational microscopy tools1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18, on the other hand, offer more accessible optical imaging platforms by replacing costly and complex optical hardware with image reconstruction algorithms. Lensfree on-chip imaging and sensing platforms form an important example of this research theme. Besides reducing the cost and optical complexity, these lensfree microscopy techniques also aim to provide field-portability as well as compatibility with microfluidic devices by enabling wide field-of-view and high-throughput imaging without sacrificing resolution, making them highly desirable for various telemedicine19,20,21,22 and lab-on-a-chip5,23,24,25,26 applications.

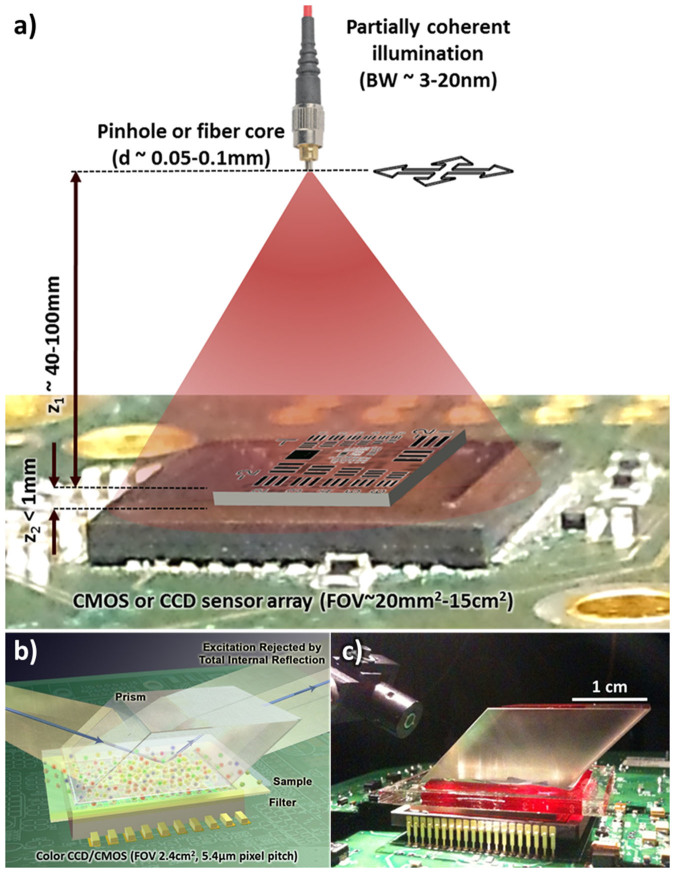

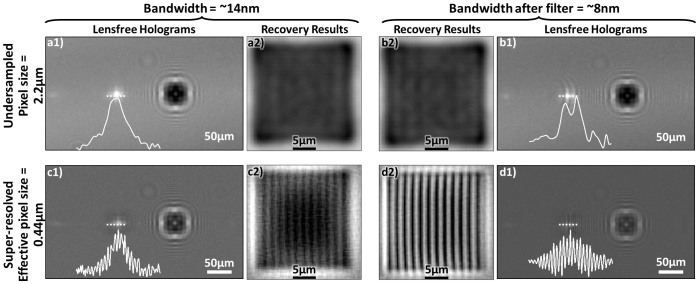

Despite their design simplicity, on-chip imaging approaches also present some challenges that need to be addressed to improve the imaging performance, including the spatial resolution1,27,28. The first one of these challenges is a result of placing the objects very close to the detector array (i.e., on a chip) as illustrated in Fig. 1.a. This short vertical distance (e.g., z2 < 1 mm) is necessary to maintain a decent SNR for both lensfree holographic and fluorescent imaging on a chip and also allows all of the active area of the detector array to serve as the imaging field of view (FOV), typically achieving a FOV of for example 15–30 mm2 and >1–20 cm2 using CMOS and CCD image sensors, respectively. However, this small z2 choice of on-chip microscopy tools also causes the pixel size of the detector to be a potential limiting factor toward achieving a high spatial resolution without the use of any lenses. As a result, on-chip holograms of objects suffer from under-sampling and aliasing artifacts as illustrated in Fig. 2. Under the assumption that the illumination is sufficiently narrow-band (e.g., ~3–5 nm), these spatial under-sampling artifacts can be well addressed by implementing pixel super resolution29,30,31 methods on lensfree geometries based on e.g., source29,31,32 or object shifting24,33. Another challenge with on-chip imaging is due to the absence of lenses; i.e., one needs to reconstruct diffracted fields of the objects, which constitute (i) partially-coherent fields as in the case of holography and (ii) incoherent fields as in the case of fluorescent objects. Depending on the imaging geometry and coherence properties of the diffracted object fields, iterative phase retrieval34,35,36 techniques or compressive decoding37 algorithms38,39 can be used to digitally reconstruct the images of the objects from their intensity measurements. 
These previous reconstruction approaches1,22,26,40 assumed a single wavelength of operation, and the effective bandwidth of the illumination source or the fluorescent emitters was approximated to be narrow, i.e., quasi-monochromatic. This assumption, while valid in most cases, could introduce image artifacts and resolution limitations in on-chip imaging; for example in holographic on-chip microscopy a large bandwidth for the source (e.g., 20–30 nm) would result in a reduced temporal coherence length, which would also reduce the effective numerical aperture that can be synthesized after a pixel super-resolution technique is applied.
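The idea behind pixel super resolution can be illustrated with a shift-and-add sketch. This is a deliberately simple stand-in and not the method of the cited references: it assumes the sub-pixel shifts are known exactly and fall on an integer grid that is `factor` times finer than the sensor grid. The function names, the 64 × 64 test pattern and the shift set are all hypothetical.

```python
import numpy as np

def capture(hr, factor, sy, sx):
    """Simulate one low-resolution frame.

    The scene is shifted by (sy, sx) fine-grid pixels, then each sensor pixel
    integrates a factor x factor patch of the fine grid.
    """
    shifted = np.roll(hr, (-sy, -sx), axis=(0, 1))
    h, w = shifted.shape
    return shifted.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def shift_and_add(frames, shifts, factor):
    """Interleave shifted low-resolution frames onto a grid `factor` times finer."""
    lr_h, lr_w = frames[0].shape
    sr = np.zeros((lr_h * factor, lr_w * factor))
    for frame, (sy, sx) in zip(frames, shifts):
        sr[sy::factor, sx::factor] = frame
    return sr

factor = 4
cols = np.arange(64)
# Hypothetical fringe pattern with 12 cycles across the field; a single
# 16-pixel-wide low-resolution frame cannot sample it without aliasing.
fine = (1.0 + np.cos(2.0 * np.pi * 12.0 * cols / 64.0))[None, :] * np.ones((64, 1))

shifts = [(sy, sx) for sy in range(factor) for sx in range(factor)]
frames = [capture(fine, factor, sy, sx) for sy, sx in shifts]
sr = shift_and_add(frames, shifts, factor)
print(frames[0].shape, sr.shape)
```

The interleaved result equals the fine-grid pattern averaged over one physical pixel area, so the fringes are sampled without aliasing but keep the contrast loss caused by the finite pixel size.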

Figure 1. Lensfree holographic and fluorescent computational microscopy set-ups.

(a) A schematic of the partially coherent, on-chip holographic microscopy setup. (b) A schematic and (c) a photograph of the lensfree, on-chip multicolor fluorescence microscopy platform.

Figure 2. Effects of pixel size and illumination bandwidth on lensfree image reconstruction quality.

(a and b) Effects of under-sampling and (a and c) lack of temporal coherence for lensfree holographic on-chip microscopy. (c and d) The use of pixel super resolution to overcome spatial under-sampling. The cross-sections along the first diffraction orders together with the recovery results demonstrate the effects of spatial sampling rate and spectral bandwidth on image quality.

Compared to highly coherent laser sources, in our on-chip holographic imaging geometry partially coherent light sources such as light emitting diodes (LEDs)41 are preferred to mitigate speckle and multiple reflection interference noise terms. Therefore, despite their limited temporal coherence, LEDs are highly desired for field-portable pixel-super resolution microscopy24,31,32,42. In addition to significantly reduced speckle and multiple reflection interference noise, such broader band sources also improve the light efficiency and allow shorter image acquisition times as well as longer object-to-sensor distances. Therefore, computational demultiplexing methods are highly desirable toward digitally relaxing the temporal coherence limitation of broadband sources in on-chip holographic imaging. Another area where such computational spectral demultiplexing approaches can be particularly useful is lensfree on-chip fluorescent imaging, where the incoherent fields of various fluorescent emitters of different colors can be resolved despite their spatial overlap at the sensor plane.

Toward this end, here we demonstrate a set of computational spectral demultiplexing approaches for broad-band holographic and multicolor fluorescent on-chip imaging. The first of these techniques will focus on holographic on-chip microscopy, where we report a polychromatic phase retrieval approach to demultiplex the superimposed spectral components of the object holograms recorded with a broadband LED illumination. Based on a similar idea, we also demonstrate a field-portable holographic microscope performing multispectral holographic on-chip imaging with sunlight43 illumination over a wide field-of-view of e.g., 15–30 mm2. In this second approach, the superimposed holograms are digitally recovered with the knowledge of the spectral response curves of both the pixels of the CMOS sensor chip and the illumination spectra through the use of a compressive decoding method, which provides a decent fit since this inverse problem is underdetermined and the multispectral spatial information of our objects is naturally redundant. Finally, we report an on-chip multicolor fluorescent microscopy method with a wide imaging field-of-view of e.g., ~2.4 cm2. In this fluorescent imaging setup, the incoherent signatures from the objects are detected by a color (i.e., RGB) sensor-array that is placed at < 0.5 mm away from the sample plane. Diffraction correction and spectral demultiplexing of these multicolor lensfree fluorescent signatures are simultaneously performed through a sparse signal recovery algorithm with the knowledge of the system point spread function (PSF) at different wavelengths and the sensor's spectral response curve. These computational demultiplexing approaches can in general be useful to replace various color filters, gratings or other optical components, providing a desirable solution for cost-effective and compact on-chip holographic and fluorescent microscopy even in field-conditions.

Results

When the illumination source for lensfree holographic on-chip microscopy is not monochromatic, as in the case of broadband LEDs, the recorded lensfree hologram ($I$) can be represented as an incoherent superposition of weighted monochromatic holograms ($I_{\lambda_i}$), such that:

$$I = \sum_i c_i \, I_{\lambda_i}$$

where $I_{\lambda_i} = \left| R_{\lambda_i} + O_{\lambda_i} \right|^2$, $R_{\lambda_i}$ is the reference wave and $O_{\lambda_i}$ is the object wave for each wavelength. The overall contribution ($c_i$) of each wavelength within the broad illumination spectrum is determined by (i) the specific spectrum of the illumination source and (ii) the spectral responsivity of the sensor chip. This weighted summation of these monochromatic holograms causes a spatial smearing effect in the measured broadband hologram, decreasing the fringe visibility and causing some of the high resolution information embedded within these fringes to be buried under the background noise as illustrated in Fig. 2. Another way to explain this effect is that the temporal coherence length ($L_c$) shrinks as the source bandwidth ($\Delta\lambda$) broadens, i.e.,

$$L_c \propto \frac{\lambda^2}{n \, \Delta\lambda}$$

where n is the refractive index of the medium and $\lambda$ is the centre wavelength of the source; the constant of proportionality is of order unity and is set by the spectral line shape. This reduced temporal coherence length of a broadband source limits the maximum spatial resolution and causes reconstruction artifacts, which become more pronounced especially for sub-micron spatial features of an object. To address this important challenge, here we use a polychromatic phase retrieval (PolyPR) approach to digitally relax the temporal coherence limitation and reduce the spatial artefacts due to monochromatic treatment of the holograms created with broadband illumination.
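The loss of fringe visibility described above can be reproduced numerically. The following is a minimal sketch under stated assumptions, not a model of the actual experiment: a weak point scatterer, a Gaussian source spectrum, a flat sensor responsivity, a 530 nm centre wavelength and a 500 μm object-to-sensor distance are all hypothetical choices, and the coherence length is evaluated without its order-unity prefactor.

```python
import numpy as np

def coherence_length_um(center_nm, bandwidth_nm, n=1.0):
    """Order-of-magnitude coherence length lambda^2 / (n * dlambda), in micrometres."""
    return center_nm ** 2 / (n * bandwidth_nm) * 1e-3

def broadband_fringes(r_um, z_um, center_nm, fwhm_nm, n_bands=41):
    """Incoherent, weighted sum of monochromatic in-line fringe patterns.

    Each band contributes 1 + cos(pi * r^2 / (lambda_i * z)) with weight c_i
    taken from a Gaussian spectrum (flat sensor responsivity assumed).
    """
    sigma = fwhm_nm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    lams_nm = np.linspace(center_nm - 2.0 * fwhm_nm, center_nm + 2.0 * fwhm_nm, n_bands)
    c = np.exp(-0.5 * ((lams_nm - center_nm) / sigma) ** 2)
    c /= c.sum()
    lams_um = lams_nm * 1e-3
    phase = np.pi * r_um[None, :] ** 2 / (lams_um[:, None] * z_um)
    return (c[:, None] * (1.0 + np.cos(phase))).sum(axis=0)

def visibility(signal):
    """Fringe visibility (max - min) / (max + min) over the sampled window."""
    return (signal.max() - signal.min()) / (signal.max() + signal.min())

# Outer fringes, 150-160 um from the hologram centre, carry the fine detail.
r = np.linspace(150.0, 160.0, 4000)
narrow = broadband_fringes(r, z_um=500.0, center_nm=530.0, fwhm_nm=3.0)
wide = broadband_fringes(r, z_um=500.0, center_nm=530.0, fwhm_nm=15.0)

print("coherence length (um):", coherence_length_um(530.0, 3.0), coherence_length_um(530.0, 15.0))
print("outer-fringe visibility:", visibility(narrow), visibility(wide))
```

In this toy model the outer fringes, which encode the highest spatial frequencies, are largely preserved for the 3 nm source and almost completely washed out for the 15 nm source.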

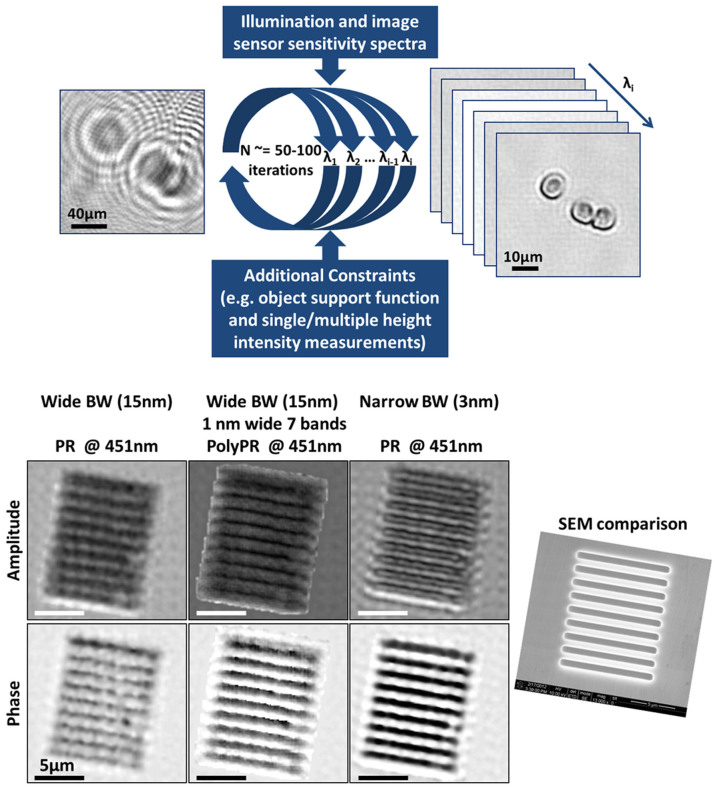

To demonstrate how this polychromatic phase recovery approach can significantly improve the reconstruction quality under wide-band LED illumination, we designed a holographic on-chip imaging experiment (as in Fig. 1a) where the spatial resolution is limited by the temporal coherence of the source. To this end, the lensfree holograms of a 1.5 μm period phase grating illuminated with a 15 nm bandwidth LED are recorded with a CMOS sensor-array (1.12 μm pixel pitch) and are used to create a single, pixel super-resolved hologram24,31,32. The resulting hologram is then reconstructed using (i) the regular phase recovery approach22 under the assumption that the source is quasi-monochromatic, and (ii) the PolyPR algorithm, which is detailed in Fig. 3 (top), Supp. Fig. 2 and the Methods section. The reconstructed images for each case are compared in Fig. 3 (bottom), where the PolyPR approach shows significant improvements over the regular phase retrieval results, especially for the phase images.

Figure 3. Polychromatic Phase Retrieval (PolyPR).

PolyPR improves the spatial resolution by relaxing the limitations due to broadband illumination and lack of sufficient temporal coherence. (Top) A block diagram explaining the PolyPR method. (Bottom) Regular and polychromatic phase retrieval results for a 1.5 μm-period grating, which is illuminated with wide (15 nm) and narrow (3 nm) bandwidth (BW) LEDs.

We should emphasize that the recovery of a spectral image cube from a single broadband measurement is in general an underdetermined problem. As a result, despite being superior to the broadband illumination followed by regular PR case, PolyPR based results cannot match the performance of the narrowband illumination under regular PR case. Also, PolyPR requires more iterations to converge compared to regular PR (e.g., ~100 vs. 15), which may make some of the edge artefacts of object support-based phase retrieval technique more pronounced. This, however, can be mitigated by using different constraints such as multi-height intensity measurements, which do not need any object support information for phase retrieval42.

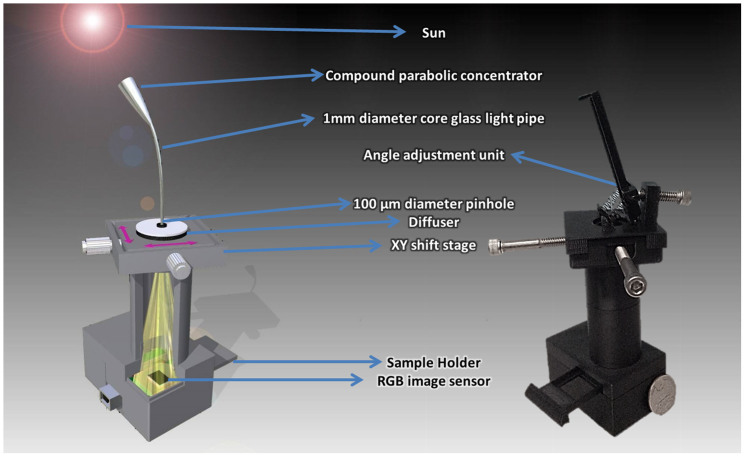

After these initial imaging results obtained with a broadband LED, we tested our technique by using the sun as our illumination source. Sunlight had been frequently used as a source in optics experiments until other light sources such as incandescent light bulbs were invented. Providing an easy-to-access and rather broad spectral content, the sunlight still presents a rich source11 that could potentially be useful in multispectral imaging to extract the wavelength-dependent features of microscopic objects of interest. In this context, to investigate the use of sunlight as an illumination source for our on-chip holography set-up, we created a portable holographic microscopy platform working with sunlight (see Fig. 4 and the Methods section for details). In this hand-held platform, sunlight based lensfree holograms are separated into multiple narrowband holograms by using a compressive sampling/sensing based algorithm, which is summarized in Fig. 5 (top) and is further detailed in the Methods section. The experimental performance of this platform is demonstrated by imaging a US Air Force Resolution Target. Our spectral demultiplexing approach clearly improved the quality of the recovered images (see Fig. 5, bottom), where we can reach a spatial resolution of ~2.3 μm over a field of view of ~15 mm2 using our field-portable holographic sunlight microscope (Fig. 4).

Figure 4. A field-portable, on-chip platform is designed to perform holographic imaging with sunlight.

(left) A detailed schematic and (right) a photo of our portable, on-chip platform for holographic imaging using sunlight.

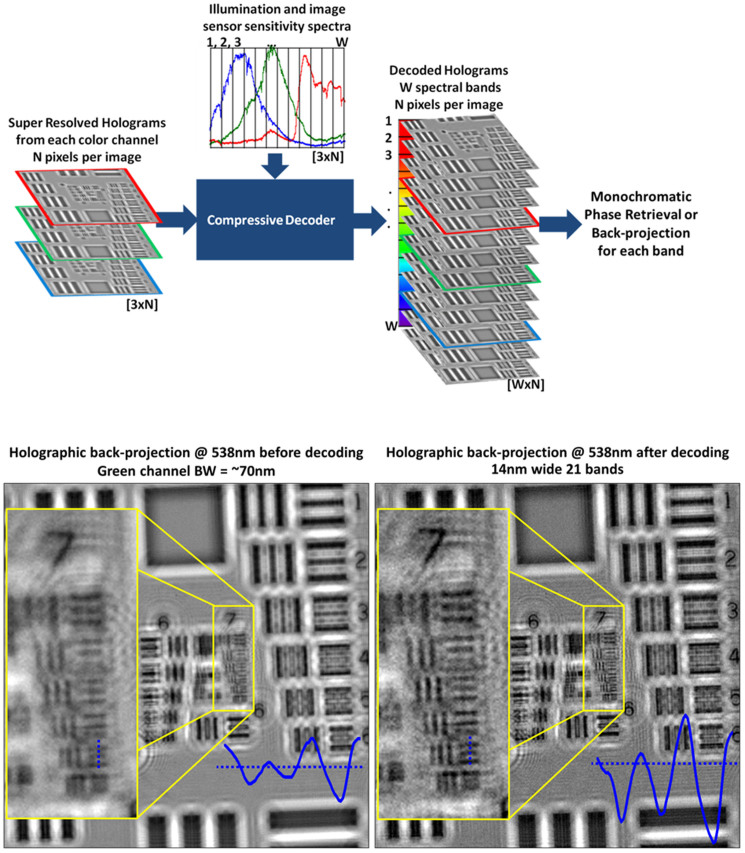

Figure 5. Spectral demultiplexing of lensfree sunlight holograms using compressive decoding.

(Top) A block diagram explaining our decoding method used to demultiplex the lensfree sunlight holograms into narrower spectral bands. (Bottom) Back-projection results are reported for lensfree holograms of a resolution test chart, before and after the spectral demultiplexing step. The inset images clearly show the improvement, (bottom, right inset) where a spatial resolution of ~2.3 μm is demonstrated.

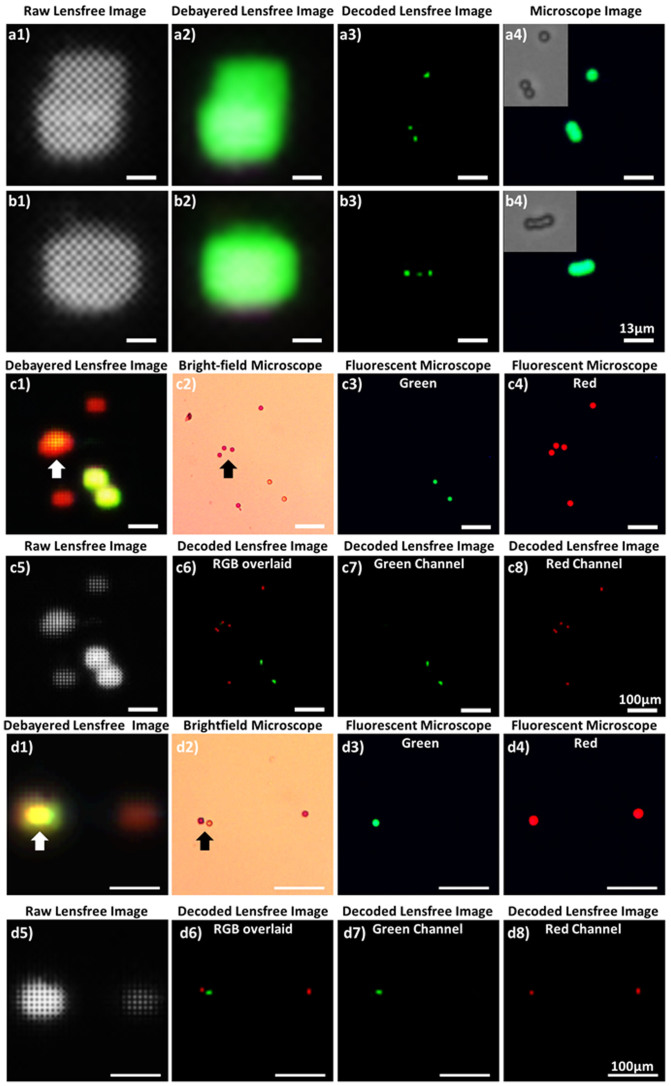

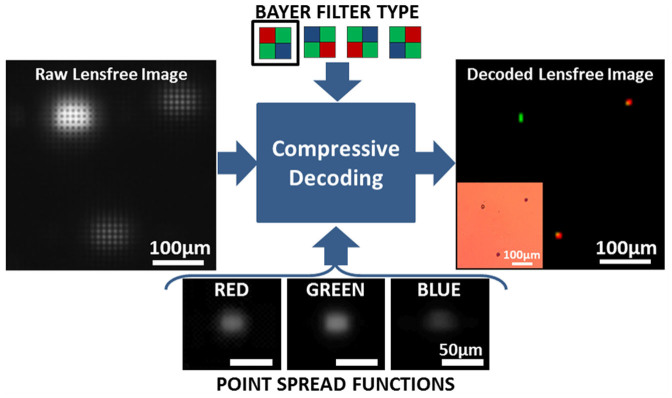

In addition to their use for lensfree holographic on-chip imaging, as discussed in our Introduction, computational spectral demultiplexing methods can also play a critical role in improving the performance of multicolor on-chip fluorescent microscopy. Performing fluorescence imaging without lenses by using an on-chip geometry provides a very large field-of-view, easily reaching e.g., >10–20 cm2 25,26. To further increase the multiplexing capabilities and the specificity of on-chip fluorescent imaging, simultaneous multicolor detection is highly desirable. For this purpose, we created a lensfree fluorescent on-chip microscopy platform, as shown in Figs. 1.b and c, to image multicolor fluorescent emitters simultaneously. We also developed a decoding method (summarized in Fig. 6, with further details given in the Methods section) that simultaneously recovers the position and the color of the fluorescent emitters. The performance of this on-chip fluorescent imaging approach is tested with 4 μm green (excitation/emission: 505/515 nm) fluorescent beads and 10 μm red (excitation/emission: 580/605 nm) and green (excitation/emission: 505/515 nm) fluorescent beads as shown in Fig. 7. The raw fluorescent signatures are modulated with the Bayer pattern and exhibit severe spatial overlap due to the lensfree nature of our on-chip imaging platform (see e.g., Fig. 7.a1, b1, c5 and d5). These multicolor fluorescent objects and their spatial distribution can be successfully recovered as illustrated in Fig. 7, which is cross-validated using fluorescent and bright field microscope images of the same objects. This simultaneous multicolor reconstruction feature further boosts the throughput and the multiplexing capability of our wide-field on-chip imaging platform, which can be useful for various applications including, for example, imaging and screening of rare cells using large volume micro-fluidic chips.

Figure 6. Block diagram explaining our decoding method for lensfree multicolor fluorescence imaging.

Lensfree, simultaneous multicolor fluorescence imaging on a chip based on sparse signal recovery is illustrated.

Figure 7. Lensfree simultaneous multicolor fluorescent imaging on a chip.

The performance of the decoding method summarized in Fig. 6 is demonstrated with (a and b) 4 μm green fluorescent beads and (c and d) 10 μm red and green fluorescent beads. (a1, b1, c5 and d5) Raw, lensfree fluorescence signatures of these microbeads are cropped from a large field-of-view of ~2.42 cm2. These raw images are decoded to retrieve color and spatial distribution information. (a3, b3, c6, c7, c8, d6, d7, and d8) Decoding results are verified with (a4 and b4 insets) bright-field and (c3, c4, d3, and d4) fluorescent microscope images of the same objects. (a2, b2, c1, and d1) The debayered lensfree images illustrate the limitations of the color sensor for lensfree fluorescent imaging without our demultiplexing method. The arrows are highlighting two regions, where our method effectively resolves the color as well as spatial overlap of lensfree fluorescent signatures.

Discussion

Some of the limitations of our on-chip holographic imaging platforms due to a broadband illumination source could be mitigated or at least partially reduced by modifications of the optical setup such as having a shorter illumination wavelength (λ), a closer object-to-sensor distance (z2), or using spectral filters to narrow the illumination bandwidth. However, these physical modifications to the on-chip holographic imaging set-up also have their own short-comings. First, most image sensors exhibit reduced sensitivities at shorter wavelengths. Second, reducing the object-to-sensor distance causes severe under-sampling of the holographic signatures, and might also yield insufficient lateral shifts for pixel super resolution techniques3. Another potential issue for the use of smaller object-to-sensor distances is reduced thermal isolation from the sensor-array, which might create limitations for imaging of e.g., live biological objects. Therefore, digital narrowing/demultiplexing of the bandwidth of the source is a rather convenient, cost-effective and photon-efficient solution that can be used to partially overcome the resolution losses due to the limited temporal coherence of broadband or polychromatic sources in lensfree on-chip holographic microscopy. To demonstrate this idea, we used a polychromatic phase retrieval method (see the Methods section) to digitally separate the superimposed spectral characteristics of the object holograms. As shown in the Results section, this method partially relaxes the temporal coherence limitation without hardware restrictions and enables the use of an illumination source with a wider spectral bandwidth. These permit us to work with shorter image acquisition times (i.e., higher frame rates) as well as longer object-to-sensor distances.

Several other computational methods toward coherent imaging with broadband X-ray and UV sources have been developed. For instance, Parsons et al. treated broadband intensity measurements as coherent but noisy images44. Furthermore, similar polychromatic phase retrieval methods were also reported earlier for lensfree imaging using broadband X-ray sources, which demonstrated a 60-fold reduction in the integration time45 and more than an order of magnitude increase in usable flux46. Our imaging method is different from these earlier works in several ways. First, the wavelength of operation in our platform is in the visible part of the spectrum and our on-chip imaging geometry provides unit fringe magnification for the inline holograms of the objects, which gives our approach a significantly wider field-of-view, easily reaching e.g., 20–30 mm2 or >1 cm2 using CMOS or CCD image sensors, respectively. Furthermore, in our reconstruction approach we do not assume spectrally invariant objects, and recover a multispectral image cube for each object of interest without making small angle or far-field assumptions. Finally, each wave propagation step in our reconstruction algorithm is performed by using the angular spectrum method calculated separately for each λi, instead of rescaling and interpolating the holograms recorded at a central wavelength as implemented in Refs. 45 and 46. Also, our approach does not rely on multiple intensity measurements at different wavelengths for phase retrieval as reported by Witte et al. toward extreme UV lensfree imaging47.

Reconstructing a spectral image cube from a single intensity measurement is in general a challenging problem, as a result of which PolyPR requires significantly more iterations to converge compared to regular PR techniques (e.g., ~100 vs. 15). We also investigated how thresholding and widening/narrowing the spectral bands affect the recovery performance of PolyPR and concluded that using narrow bands selected after a threshold is applied to the intensity of the spectrum provides satisfactory imaging performance while also significantly reducing the computational load as illustrated in Supp. Fig. 1.

Although spectral demultiplexing and phase retrieval address different issues for our on-chip holograms, PolyPR is a combined method that performs both of these tasks within a single recovery process, and it is suited to moderately wide illumination bandwidths. However, when the source bandwidth is considerably wider, as in the case of sunlight, it is preferable to perform these processes separately. To this end, instead of the PolyPR approach used for wide-band LED illumination, we used a two-step recovery method for lensfree holograms recorded with sunlight illumination. Before any diffraction compensation (i.e., the holographic back-projection or phase retrieval), we performed spectral demultiplexing, where we estimated the weights of monochromatic holograms from super-resolved, polychromatic holograms at three color channels (i.e., red, green and blue). Göröcs et al. performed a matrix based cross-talk removal for color holograms acquired with red, green and blue lasers48; however, our problem is significantly more difficult since we are dealing with sunlight, which is much more complex and broad in its spectral content. We approached this underdetermined problem with a compressive decoding method by using the knowledge of the combined spectrum of the sunlight and sensor sensitivity (i.e., overall pixel responsivity) since multispectral spatial data of physical objects are naturally redundant16,49.

Another dimension of this work includes simultaneous multicolor fluorescent microscopy on a chip. To this end, we demonstrated a multicolor fluorescent imaging platform using a custom-designed compressive decoding algorithm to recover both the color and location information of fluorescent emitters from their lensfree images. In this recovery process, object sparsity is particularly effective as an additional constraint since the object distribution (in both space and color domains) is already sparse without the need for any additional domain representation or transformation. However, this process can be easily extended to various imaging applications, since almost all of the natural and manmade objects of interest can be sparsely represented in an appropriate basis37 with some prior knowledge about the objects. Therefore, this recovery approach can be applied to other fluorescent objects without a technical limitation.

As a proof of concept, we reported in this manuscript the imaging results for two different colors; however, in principle this method can be extended to fluorescent emitters having arbitrary emission spectra within the visible range by using the red, green and blue PSFs. On the other hand, due to free-space diffraction, the detection SNR is one of the main limitations for extending the multi-color imaging performance of lensfree fluorescent imaging on a chip. One can in general mitigate this SNR limitation by increasing the excitation power level, which could provide a solution when combined with high optical density color filters installed between the sample and the sensor-chip. Note also that most thin-film interference based excitation filters or emission spike filters would not work as desired within an on-chip imaging configuration since the fluorescent emission is not collimated in our lensfree design, which would reduce the effective optical density of these filters. Therefore, to achieve better SNR and contrast in multi-color lensfree fluorescent on-chip imaging systems, absorption filters that are customized by coating the sensor-chip cover-glass or active area can be utilized to improve the multi-color reconstruction performance of the presented approach and to resolve more complicated multi-color fluorescent samples. Another alternative could be to use multi-color quantum-dots for fluorescent labeling of the specimen such that a deep UV source can be used for simultaneous excitation of all the colors in the specimen while the sensor-chip remains blind to the excitation wavelength, avoiding the use of color filters and also simplifying the design of the multi-color lensfree on-chip imager.

Methods

Spectral demultiplexing of lensfree on-chip holograms recorded with broadband LED illumination

Our polychromatic phase retrieval algorithm (see Fig. 3, top and Supp. Fig. 2) iteratively converges to the complex multispectral field distribution by using a pixel super-resolved polychromatic intensity measurement (i.e., the pixel super-resolved lensfree hologram) along with the source and detector spectral characteristics that are known a priori. It starts with the pixel super-resolved hologram of the objects29, which can be considered as a weighted superposition of various monochromatic in-line holograms as detailed earlier. This initial pixel super-resolved hologram is scaled with the wavelength weighting factor (ci) and the square root of each scaled hologram is propagated (separately for each λi of interest) to the object plane, to calculate the initial estimate. Then, the iterative process is started by enforcing an additional constraint, which can vary depending on the nature of the object such as phase only, amplitude only, or spatially confined (defining an object-support)50. Since our objects in this work are both sparse and spatially confined, we preferred to use at this step of the algorithm an object-support constraint. For this, we use the initial propagation of the hologram intensity at the central illumination wavelength to estimate the rough physical boundaries of the object by thresholding the resulting field amplitude at the object plane to create a binary object mask. After this object-support constraint is enforced, each spatially filtered field is forward propagated toward the detector plane, where intensities of each hologram at different wavelengths (λi) are appropriately weighted and summed up, and this summation is compared to the measured super-resolved hologram intensity by calculating the mean square error between the two. At this stage, the phase of the complex field for each λi is kept and its amplitude is updated for each wavelength with the ratio of the square root of the calculated and detected intensities. 
Next, the resulting complex field is back propagated to the object plane, completing one iteration, performed separately for each λi (see Fig. 3 and Supp. Fig. 2). These successive iterations continue up to e.g., 100 cycles or simply until the change of the mean square error between the measured and calculated hologram intensities is smaller than a certain threshold value, whichever comes first. Propagations in both directions are numerically implemented by using the angular spectrum method with wavelength specific transfer functions, calculated for each λi51,52.
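The iteration described above can be sketched as follows. This is an illustrative outline only, under assumptions that go beyond the text: the function names, the background-relative threshold used to build the support mask, the choice to reset the field outside the support to a uniform background, and all numerical values in the usage lines are hypothetical.

```python
import numpy as np

def angular_spectrum(field, wavelength, z, dx, n=1.0):
    """Propagate a complex field by z (negative z back-propagates); one length unit throughout."""
    ny, nx = field.shape
    fxx, fyy = np.meshgrid(np.fft.fftfreq(nx, d=dx), np.fft.fftfreq(ny, d=dx))
    arg = (n / wavelength) ** 2 - fxx ** 2 - fyy ** 2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * z), 0.0)  # evanescent components dropped
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def poly_pr(i_meas, wavelengths, weights, z, dx, n_iter=100, tol=1e-10, mask_level=0.1):
    """Sketch of polychromatic phase retrieval with an object-support constraint."""
    i_meas = np.maximum(np.asarray(i_meas, dtype=float), 0.0)
    c = np.asarray(weights, dtype=float)
    c = c / c.sum()
    background = np.sqrt(i_meas.mean())
    # Initial estimate: square root of the c_i-scaled hologram, back-propagated per wavelength.
    fields = [angular_spectrum(np.sqrt(ci * i_meas), lam, -z, dx)
              for lam, ci in zip(wavelengths, c)]
    # Binary object mask from the back-projection at the central wavelength (assumed threshold rule).
    central = wavelengths[len(wavelengths) // 2]
    rough = angular_spectrum(np.sqrt(i_meas), central, -z, dx)
    support = np.abs(np.abs(rough) - background) > mask_level * background
    errors = []
    for _ in range(n_iter):
        # Object plane: keep the field inside the support, reset the rest to background.
        constrained = [np.where(support, u, np.sqrt(ci) * background)
                       for u, ci in zip(fields, c)]
        at_sensor = [angular_spectrum(u, lam, z, dx)
                     for u, lam in zip(constrained, wavelengths)]
        i_calc = sum(np.abs(u) ** 2 for u in at_sensor)  # fields already carry sqrt(c_i)
        errors.append(float(np.mean((i_calc - i_meas) ** 2)))
        if len(errors) > 1 and abs(errors[-2] - errors[-1]) < tol:
            fields = constrained
            break
        # Sensor plane: keep each phase, rescale the amplitudes to match the measurement.
        scale = np.sqrt(i_meas / np.maximum(i_calc, 1e-12))
        fields = [angular_spectrum(u * scale, lam, -z, dx)
                  for u, lam in zip(at_sensor, wavelengths)]
    return fields, support, errors

# Hypothetical test object and three spectral bands (lengths in micrometres).
obj = np.ones((64, 64), dtype=complex)
obj[28:36, 28:36] = 0.6 * np.exp(0.5j)
bands_um, c = [0.520, 0.530, 0.540], [0.25, 0.5, 0.25]
hologram = sum(ci * np.abs(angular_spectrum(obj, lam, 100.0, 0.5)) ** 2
               for lam, ci in zip(bands_um, c))
fields, support, errors = poly_pr(hologram, bands_um, c, z=100.0, dx=0.5, n_iter=20)
print(len(fields), support.sum(), errors[0], errors[-1])
```

Storing each field with its weight already applied means the summed sensor-plane intensities can be compared directly with the measured hologram, and the amplitude update then matches the measurement exactly while leaving every phase untouched.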

Spectral demultiplexing of lensfree on-chip holograms recorded with sunlight illumination

In our compact and light-weight partially-coherent holographic imaging design that is illustrated in Fig. 4, the objects are placed at ~0.5 mm away from the active region of a color (RGB) sensor array and are uniformly illuminated by the sunlight, which is first collected by a simple light collection unit adapted from conventional solar cell technologies. After trying some static collection approaches such as wide angle lenses or compound parabolic condensers, we decided to use a dynamic, angle scanning collection approach (Fig. 4), where we utilized a flexible light pipe (1 mm diameter glass core) with a compound parabolic condenser at the collection end to maximize the light collection efficiency. A plastic diffuser is placed after the light pipe to prevent the system from imaging the sky as a pinhole camera would. After the sunlight passes through this diffuser, it is spatially filtered by a 0.1 mm diameter aperture, which is positioned at ~7.5 cm away from the objects, giving us a sufficiently large spatial coherence diameter at the sample plane. This 0.1 mm pinhole is controlled using a simple screw-based x-y translation stage, which permits capturing slightly shifted in-line holograms of the object. These lensfree images captured under sunlight are digitally combined using a pixel super-resolution algorithm31 to form a finely sampled in-line hologram per color channel (i.e., at red, green and blue channels). These pixel-super-resolved holograms at each color channel are then processed with the knowledge of the spectral response curves of each pixel of the sensor array as well as the spectral content of the sunlight to digitally remove the cross-talk between color channels and retrieve the multispectral spatial features of the objects. Specifically, we minimized the squared ℓ2 norm of the mismatch between the measurement and the estimation, regularized with the total variation of the multispectral object38. 
Then the demultiplexed holograms are individually processed either by using iterative monochromatic phase retrieval or a simple back-projection if the twin image noise is weak. This recovery process is summarized in Fig. 5 (top).
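As an illustration of the simple back-projection step, the sketch below propagates a sampled field with the angular spectrum method, a standard way to implement such a back-projection. It is a minimal sketch under stated assumptions, not the code used in this work: the function name and the wavelength and pixel-pitch values in the usage note are hypothetical, and only the ~0.5 mm object-to-sensor distance comes from the text.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pixel_pitch, z):
    """Propagate a sampled complex field by a distance z (negative z back-propagates).

    All lengths share one unit (e.g., meters). Evanescent components are dropped.
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pixel_pitch)   # spatial frequencies along x
    fy = np.fft.fftfreq(ny, d=pixel_pitch)   # spatial frequencies along y
    FX, FY = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Free-space transfer function; zero where the wave would be evanescent
    H = np.where(arg > 0, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * H)
```

For example, a demultiplexed hologram `holo` for a band centered at an assumed 530 nm, sampled at an assumed 1 μm effective pitch after pixel super-resolution, could be back-projected to the object plane with `angular_spectrum_propagate(holo, 0.53e-6, 1.0e-6, -0.5e-3)`.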

Spectral demultiplexing for lensfree multicolor fluorescent imaging on a chip

In our on-chip fluorescent imaging setup (Fig. 1b and c), the excitation light (center wavelength of 480 nm with 15 nm bandwidth) is delivered to the object chamber through the side facet of a prism such that, after excitation, a significant portion of the pump beam is rejected through total-internal-reflection (TIR) occurring between the sample holder and the air interface at the bottom. The object chamber is placed on top of an absorption filter (used to reject the scattered excitation photons), which makes the object-to-detector distance around 80–100 μm. In this on-chip fluorescent imaging design, we used a color sensor (Kodak, KAF-8300) with an active area (i.e., object field-of-view) of 2.42 cm² and a pixel pitch of 5.4 μm. We first experimentally characterized the red, green and blue point spread functions (PSFs) of this lensfree imaging system with small fluorescent micro-particles (~2 μm in diameter). The measured fluorescent intensities from multiple isolated particles were interpolated, aligned, and averaged. These intensity profiles depend on the structure and optical design of the sensor pixel, the filter response and the distance between the object and the detector planes. The PSFs for each color channel and the Bayer pattern arrangement of the sensor chip are used to decode the color and the spatial distribution of the fluorescent emitters from a single raw image, using an optimization method similar to the one reported in Ref. 40. However, in the current implementation, the forward model is modified to take into account (i) different PSFs for different colors (red, green and blue), (ii) under-sampling due to the large pixel size, and (iii) the Bayer pattern type, in other words, the specific spatial distribution of the color filters on the sensor-array pixels. Figure 6 summarizes this spectral demultiplexing recovery process for our lensfree multicolor fluorescent imaging platform.
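The three ingredients of the modified forward model can be sketched as follows. This is a simplified illustration rather than the model used in this work: it assumes an RGGB Bayer tile, circular convolution, PSFs already sampled on the fine object grid, and no spectral cross-talk between emitter colors and filter colors. The function names and the `factor` parameter are hypothetical.

```python
import numpy as np

def bayer_masks(h, w):
    """Boolean masks (3, h, w) for red, green, blue sites of an assumed RGGB tile."""
    masks = np.zeros((3, h, w), dtype=bool)
    masks[0, 0::2, 0::2] = True   # red sites
    masks[1, 0::2, 1::2] = True   # green sites (two per 2x2 tile)
    masks[1, 1::2, 0::2] = True
    masks[2, 1::2, 1::2] = True   # blue sites
    return masks

def circ_conv(img, psf):
    """Circular 2-D convolution via FFT; psf is centered in an array of img's shape."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * otf))

def forward(obj, psfs, factor):
    """Map a fine-grid color object (3, H, W) to one raw Bayer frame.

    (i) each color is blurred by its own PSF, (ii) the result is binned by
    `factor` to mimic the large sensor pixels, and (iii) each sensor pixel
    keeps only the color selected by its Bayer filter.
    """
    _, H, W = obj.shape
    h, w = H // factor, W // factor
    masks = bayer_masks(h, w)
    raw = np.zeros((h, w))
    for c in range(3):
        blurred = circ_conv(obj[c], psfs[c])
        binned = blurred[:h * factor, :w * factor]
        binned = binned.reshape(h, factor, w, factor).sum(axis=(1, 3))
        raw[masks[c]] = binned[masks[c]]
    return raw
```

A decoder would then search for the object whose simulated raw frame best matches the measured one; that inversion step is not shown here.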

Author Contributions

I.S. and A.F.C. conducted the experiments. I.S. developed the computational methods and processed the resulting data. U.S. contributed to the portable sunlight microscope design. I.S. and A.O. planned and executed the research, and wrote the manuscript. A.O. supervised the project.

Supplementary Material

Supplementary Figures

Acknowledgments

The Ozcan Research Group gratefully acknowledges the support of the Presidential Early Career Award for Scientists and Engineers (PECASE), Army Research Office (ARO) Life Sciences Division, ARO Young Investigator Award, National Science Foundation (NSF) CAREER Award, NSF CBET Biophotonics Program, NSF EFRI Award, Office of Naval Research (ONR) Young Investigator Award and National Institutes of Health (NIH) Director's New Innovator Award DP2OD006427 from the Office of the Director, National Institutes of Health.

Footnotes

A.O. is the co-founder of a start-up company (Holomic LLC) which aims to commercialize computational imaging and sensing technologies licensed from UCLA.

References

- Greenbaum A. et al. Imaging without lenses: achievements and remaining challenges of wide-field on-chip microscopy. Nat. Methods 9, 889–895 (2012).

- Isikman S. O. et al. Lensfree On-Chip Microscopy and Tomography for Biomedical Applications. IEEE J. Sel. Top. Quantum Electron. 18, 1059–1072 (2012).

- Gorocs Z. & Ozcan A. On-Chip Biomedical Imaging. IEEE Rev. Biomed. Eng. 6, 29–46 (2013).

- Zhu H., Isikman S. O., Mudanyali O., Greenbaum A. & Ozcan A. Optical imaging techniques for point-of-care diagnostics. Lab Chip 13, 51–67 (2012).

- Su T.-W., Xue L. & Ozcan A. High-throughput lensfree 3D tracking of human sperms reveals rare statistics of helical trajectories. Proc. Natl. Acad. Sci. 109, 16018–16022 (2012).

- Su T.-W., Erlinger A., Tseng D. & Ozcan A. Compact and Light-Weight Automated Semen Analysis Platform Using Lensfree on-Chip Microscopy. Anal. Chem. 82, 8307–8312 (2010).

- Su T.-W. et al. Sperm Trajectories Form Chiral Ribbons. Sci. Rep. 3, 1664; 10.1038/srep01664 (2013).

- Merola F. et al. Digital holography as a method for 3D imaging and estimating the biovolume of motile cells. Lab Chip 13, 4512–4516 (2013).

- Rosen J. & Brooker G. Non-scanning motionless fluorescence three-dimensional holographic microscopy. Nat. Photonics 2, 190–195 (2008).

- Kim M. K. Incoherent digital holographic adaptive optics. Appl. Opt. 52, A117–A130 (2013).

- Bhaduri B., Tangella K. & Popescu G. Fourier phase microscopy with white light. Biomed. Opt. Express 4, 1434–1441 (2013).

- August Y., Vachman C., Rivenson Y. & Stern A. Compressive hyperspectral imaging by random separable projections in both the spatial and the spectral domains. Appl. Opt. 52, D46–D54 (2013).

- Rivenson Y., Stern A. & Rosen J. Reconstruction guarantees for compressive tomographic holography. Opt. Lett. 38, 2509–2511 (2013).

- Rivenson Y., Katz B., Kelner R. & Rosen J. Single-channel in-line multi-modal digital hologram recorder. in Front. Opt. 2013 (Kang, I.) FW6A.4 (Optical Society of America, 2013). 10.1364/FIO.2013.FW6A.4.

- Bianco V. et al. [Looking beyond Smoke and Flames by Lensless Infrared Digital Holography] Fringe. (Springer Berlin Heidelberg, 2014).

- Brady D. J. Optical Imaging and Spectroscopy. (John Wiley & Sons, 2009).

- Brady D. J., Choi K., Marks D. L., Horisaki R. & Lim S. Compressive Holography. Opt. Express 17, 13040–13049 (2009).

- Choi K. et al. Compressive holography of diffuse objects. Appl. Opt. 49, H1–H10 (2010).

- Zhu H. et al. Cost-effective and rapid blood analysis on a cell-phone. Lab Chip 13, 1282–1288 (2013).

- Tseng D. et al. Lensfree microscopy on a cellphone. Lab Chip 10, 1787–1792 (2010).

- Isikman S. O. et al. Field-portable lensfree tomographic microscope. Lab Chip 11, 2222–2230 (2011).

- Mudanyali O. et al. Compact, light-weight and cost-effective microscope based on lensless incoherent holography for telemedicine applications. Lab Chip 10, 1417–1428 (2010).

- Wei Q. et al. On-Chip Cytometry using Plasmonic Nanoparticle Enhanced Lensfree Holography. Sci. Rep. 3, 1699 (2013).

- Isikman S. O., Bishara W., Zhu H. & Ozcan A. Optofluidic Tomography on a Chip. Appl. Phys. Lett. 98, 161109–161109–3 (2011).

- Arpali S. A., Arpali C., Coskun A. F., Chiang H.-H. & Ozcan A. High-throughput screening of large volumes of whole blood using structured illumination and fluorescent on-chip imaging. Lab Chip 12, 4968–4971 (2012).

- Coskun A. F., Sencan I., Su T.-W. & Ozcan A. Lensfree Fluorescent On-Chip Imaging of Transgenic Caenorhabditis elegans Over an Ultra-Wide Field-of-View. PLoS ONE 6, e15955 (2011).

- Gopinathan U., Pedrini G. & Osten W. Coherence effects in digital in-line holographic microscopy. J. Opt. Soc. Am. A 25, 2459–2466 (2008).

- Xia P. et al. Improvement of color reproduction in color digital holography by using spectral estimation technique. Appl. Opt. 50, H177–182 (2011).

- Bishara W., Su T.-W., Coskun A. F. & Ozcan A. Lensfree on-chip microscopy over a wide field-of-view using pixel super-resolution. Opt. Express 18, 11181–11191 (2010).

- Hardie R. A Fast Image Super-Resolution Algorithm Using an Adaptive Wiener Filter. IEEE Trans. Image Process. 16, 2953–2964 (2007).

- Greenbaum A. et al. Increased space-bandwidth product in pixel super-resolved lensfree on-chip microscopy. Sci. Rep. 3, 1717 (2013).

- Bishara W. et al. Holographic pixel super-resolution in portable lensless on-chip microscopy using a fiber-optic array. Lab Chip 11, 1276–1279 (2011).

- Bishara W., Isikman S. O. & Ozcan A. Lensfree Optofluidic Microscopy and Tomography. Ann. Biomed. Eng. 40, 251–262 (2012).

- Seo S. et al. High-Throughput Lens-Free Blood Analysis on a Chip. Anal. Chem. 82, 4621–4627 (2010).

- Fienup J. R. Phase retrieval algorithms: a comparison. Appl. Opt. 21, 2758–2769 (1982).

- Fienup J. R. Phase retrieval for undersampled broadband images. J. Opt. Soc. Am. A 16, 1831–1837 (1999).

- Donoho D. L. Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306 (2006).

- Bioucas-Dias J. M. & Figueiredo M. A. T. A New TwIST: Two-Step Iterative Shrinkage/Thresholding Algorithms for Image Restoration. IEEE Trans. Image Process. 16, 2992–3004 (2007).

- Kim S.-J., Koh K., Lustig M., Boyd S. & Gorinevsky D. An Interior-Point Method for Large-Scale l1-Regularized Least Squares. IEEE J. Sel. Top. Signal Process. 1, 606–617 (2007).

- Coskun A. F., Sencan I., Su T.-W. & Ozcan A. Lensless wide-field fluorescent imaging on a chip using compressive decoding of sparse objects. Opt. Express 18, 10510–10523 (2010).

- Repetto L., Piano E. & Pontiggia C. Lensless digital holographic microscope with light-emitting diode illumination. Opt. Lett. 29, 1132–1134 (2004).

- Greenbaum A., Akbari N., Feizi A., Luo W. & Ozcan A. Field-Portable Pixel Super-Resolution Colour Microscope. PLoS ONE 8, e76475 (2013).

- Agarwal G. S., Gbur G. & Wolf E. Coherence properties of sunlight. Opt. Lett. 29, 459–461 (2004).

- Parsons A. D. et al. Ultra-broadband support determination for extreme ultraviolet coherent diffractive imaging from a high harmonic source. J. Opt. 15, 094009 (2013).

- Abbey B. et al. Lensless imaging using broadband X-ray sources. Nat. Photonics 5, 420–424 (2011).

- Chen B. et al. Multiple wavelength diffractive imaging. Phys. Rev. A 79, 023809 (2009).

- Witte S., Tenner V. T., Noom D. W. E. & Eikema K. S. E. Ultra-broadband extreme-ultraviolet lensless imaging of extended complex structures. arXiv:1302.6064v1 [physics.optics] (2013).

- Göröcs Z., Kiss M., Tóth V., Orzó L. & Tokés S. Multicolor digital holographic microscope (DHM) for biological purposes. in Proc SPIE 7568 Imaging Manip. Anal. Biomol. Cells Tissues VIII 7568, 75681P–75681P–10 (2010).

- Zhao Y. et al. Hyperspectral imagery super-resolution by sparse representation and spectral regularization. EURASIP J. Adv. Signal Process. 2011, 1–10 (2011).

- Greenbaum A., Sikora U. & Ozcan A. Field-portable wide-field microscopy of dense samples using multi-height pixel super-resolution based lensfree imaging. Lab Chip 12, 1242–1245 (2012).

- Goodman J. Introduction to Fourier Optics. (Roberts and Company Publishers, 2004).

- Carpenter D. J. & Pask C. The Angular Spectrum Approach to Diffraction of Partially Coherent Light. Opt. Acta Int. J. Opt. 24, 939 (1977).
