Abstract

Sensitivity to time, including the time of reward, guides the behaviour of all organisms. Recent research suggests that all major reward structures of the brain process the time of reward occurrence, including midbrain dopamine neurons, striatum, frontal cortex and amygdala. Neuronal reward responses in dopamine neurons, striatum and frontal cortex show temporal discounting of reward value. The prediction error signal of dopamine neurons includes the predicted time of rewards. Neurons in the striatum, frontal cortex and amygdala show responses to reward delivery and activities anticipating rewards that are sensitive to the predicted time of reward and the instantaneous reward probability. Together these data suggest that internal timing processes have several well-characterized effects on neuronal reward processing.

Keywords: temporal discounting, reward expectation, dopamine, striatum, frontal cortex, amygdala

1. Introduction

Many behavioural processes are affected by rewards. Unlike sensory stimuli, time intervals cannot be directly perceived via specific receptors but must be constructed by the brain. Humans and animals estimate time intervals with great accuracy [1,2], although the variability of these estimates increases in proportion to the interval being timed [3–5]. The ability to estimate short time intervals plays an important role in everyday behaviour. Timing is essential for predicting events and planning actions; a good example is the temporal accuracy required to hit a ball in a game of tennis. Although we focus here on reward, all reinforcement processes, including aversive learning and reactions, are subject to timing processes. The term ‘timing’ refers not only to the duration of an event but also to the moment at which the event is likely to occur (temporal prediction). Both duration estimation and temporal prediction require a metrical representation of time in which the occurrence of consecutive events is measured on a continuous scale. Recent studies indicate that temporal processing may not be centralized in a single brain structure but may instead be distributed across different specialized areas [6–8]. Temporal prediction can be induced by stimuli or events and also by the passage of time itself [9]. This effect of elapsed time is captured by the ‘hazard function’, defined as the conditional probability that an event will occur given that it has not yet occurred [10]. Increasing hazard functions are familiar from everyday life: the longer we wait at the bus stop, the more we expect the bus to appear at the next moment.
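The hazard function can be made concrete with a short Python sketch. It is an illustration only: the bus example, the ten one-minute bins and the geometric comparison distribution are assumptions, not data from this review. For a discrete distribution of waiting times, the hazard in bin k is the probability of the event in that bin divided by the probability that the event has not occurred before that bin.

```python
import numpy as np

def discrete_hazard(p):
    """Hazard h[k] = P(T = k | T >= k) for a discrete distribution p over time bins."""
    p = np.asarray(p, dtype=float)
    p = p / p.sum()
    # Survival S[k] = P(T >= k): reverse cumulative sum of the bin probabilities.
    survival = np.cumsum(p[::-1])[::-1]
    return p / survival

# Hypothetical bus: arrives in one of ten one-minute bins, each equally likely.
uniform = np.full(10, 0.1)
print(np.round(discrete_hazard(uniform), 3))

# Memoryless comparison: geometric waiting time with per-minute probability q,
# truncated to ten bins (the last bin absorbs the remaining tail so p sums to 1).
q = 0.2
geom = q * (1 - q) ** np.arange(10)
geom[-1] = (1 - q) ** 9
print(np.round(discrete_hazard(geom), 3))
```

With a uniform arrival time the hazard rises from 0.1 in the first bin to 1 in the last, matching the bus-stop intuition; the memoryless geometric distribution instead gives a constant hazard of q in every bin except the final one, which absorbs the truncated tail.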

The time of reward has several important influences on reward processing. The economic value of a reward decreases with increasing delay. This temporal discounting may lead to a preference for smaller, sooner rewards over larger, later rewards. Temporal discounting has been demonstrated in humans and animals in many psychological and behavioural economics studies [1,3–5,11,12], although it is still debated whether it is best described by hyperbolic, exponential or combined models. Related to value discounting, behavioural conditioning requires optimal stimulus–reward intervals and becomes less efficient with shorter or longer intervals [13,14]. Furthermore, time intervals and rates of reward are of crucial importance for conditioning [5]. This review discusses several neuronal processes involved in these functions. The processing of temporal information about rewards seems to rely most crucially on dopamine neurons, striatum, frontal cortex and amygdala, although each region may have a functionally distinct role.
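The difference between the competing discounting models can be illustrated with a small Python sketch. The reward amounts (5 versus 10 units), the 10-unit gap between them and the discount rates k are hypothetical values chosen for illustration, not parameters estimated in the studies cited above.

```python
import math

def exponential_value(amount, delay, k):
    """Exponentially discounted value: a constant proportional loss per unit delay."""
    return amount * math.exp(-k * delay)

def hyperbolic_value(amount, delay, k):
    """Hyperbolically discounted value: steep loss at short delays, shallow later."""
    return amount / (1.0 + k * delay)

def choose(discount, k, front_delay):
    """Choose between a hypothetical 5 units after front_delay and
    10 units after front_delay + 10 (arbitrary time units)."""
    sooner = discount(5.0, front_delay, k)
    later = discount(10.0, front_delay + 10.0, k)
    return "sooner" if sooner > later else "later"

for front in (0.0, 20.0):
    print(front, choose(hyperbolic_value, 0.2, front), choose(exponential_value, 0.1, front))
```

Under these assumed parameters, the hyperbolic chooser prefers the smaller reward when it is immediate but switches to the larger reward when both options are pushed 20 units into the future, whereas the exponential chooser's preference does not change, because adding a common delay rescales both exponential values by the same factor. Such preference reversals are one of the observations used to compare the models.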

2. Dopamine neurons

Several studies suggest an involvement of the dopamine system in the timing of events, including rewards. Administration of amphetamine, an indirect dopamine agonist that enhances extracellular dopamine concentrations, results in the overestimation of time delays, whereas the dopamine receptor antagonist haloperidol leads to underestimation of delays [15,16]. Individuals with higher time sensitivity are also more sensitive to stimulant-induced euphoria, suggesting overlapping dopaminergic mechanisms for internal clock and reward functions [16–19].

Midbrain dopamine neurons code prediction errors in reward value [20], defined as the difference between current and predicted reward value. According to temporal difference (TD) reinforcement models, current reward value at each moment is defined as the present reward plus the discounted sum of future rewards [21]. The dopamine prediction error response shows three features that closely reflect the characteristics of TD reinforcement models, which makes it a candidate for an effective teaching signal for reward.
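One common way to write these TD quantities is shown below. Indexing conventions differ between papers and the notation here is illustrative; γ (0 < γ ≤ 1) is the discount factor, r(t) the reward at time t and V(t) the prediction of the discounted sum of rewards that follow t.

```latex
V(t) = \mathbb{E}\Big[\sum_{k=1}^{\infty} \gamma^{\,k-1}\, r(t+k)\Big]
\qquad
\delta(t) = \underbrace{r(t) + \gamma\, V(t)}_{\text{current reward value}}
\;-\; \underbrace{V(t-1)}_{\text{predicted reward value}}
```

When predictions are accurate, V(t−1) equals the expected value of r(t) + γV(t) and δ(t) averages zero; positive and negative values of δ(t) correspond to outcomes that are better or worse than predicted.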

First, the dopamine response occurs to any event that elicits a reward-prediction error as defined above, irrespective of the event being a primary, unconditioned reward (US) or a conditioned stimulus (CS) predicting a primary reward [22]. The response transfer from the primary reward to the reward-predicting stimulus together with the coding of reward-prediction errors at both events are hallmarks of TD reinforcement models [21].
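The transfer of the prediction error from the reward to the CS can be reproduced with a minimal TD(0) sketch in Python. It assumes a complete serial compound (‘tapped delay line’) stimulus representation, a common simplification in TD models, and uses hypothetical values for trial length, CS and reward times, discount factor and learning rate. It is an illustration of the TD principle, not a model fitted to dopamine data. The error is computed as δ(t) = r(t) + γV(t) − V(t−1).

```python
import numpy as np

# Hypothetical task parameters (time steps are arbitrary units).
T, CS_T, REW_T = 30, 5, 15      # trial length, CS onset, reward time
GAMMA, ALPHA = 0.98, 0.2        # discount factor and learning rate
N_FEATURES = T - CS_T           # one CSC unit per time step since CS onset

def csc(t):
    """Complete serial compound: one-hot code of time since CS onset (zero before CS)."""
    x = np.zeros(N_FEATURES)
    k = t - CS_T
    if 0 <= k < N_FEATURES:
        x[k] = 1.0
    return x

def run_trial(w, learn=True):
    """Run one trial; return delta(t) = r(t) + gamma*V(t) - V(t-1) for every step."""
    delta = np.zeros(T)
    x_prev = csc(0)
    for t in range(1, T):
        x_t = csc(t)
        r = 1.0 if t == REW_T else 0.0
        delta[t] = r + GAMMA * (w @ x_t) - (w @ x_prev)
        if learn:
            # Credit the unit that made the prediction V(t-1); the pre-CS state
            # has no active unit, so the CS itself stays unpredicted.
            w += ALPHA * delta[t] * x_prev
        x_prev = x_t
    return delta

w = np.zeros(N_FEATURES)
first = run_trial(w)            # naive trial: error only at the reward
for _ in range(2000):
    last = run_trial(w)
print("before learning: CS %.2f, reward %.2f" % (first[CS_T], first[REW_T]))
print("after learning:  CS %.2f, reward %.2f" % (last[CS_T], last[REW_T]))
```

On the first trial the error occurs only at the reward. After training it has moved to the CS, where it equals γ raised to the CS–reward interval because the CS itself arrives unpredictably, and the fully predicted reward evokes almost no error.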

Second, the dopamine response reflects temporal discounting, both at the time of the conditioned, reward-predicting stimulus and at the time of the reward itself [23,24]. The dopamine activation to a CS predicting reward after a longer delay is weaker than the response to another CS predicting the same physical amount of reward after a shorter delay. This characteristic probably reflects the reduction in subjective value with longer delays that is seen in intertemporal behavioural choices. Thus, in temporal discounting, dopamine neurons code the decreasing subjective reward value rather than the unchanged objective, physical value. More specifically, in TD terms, the dopamine response to the predictive stimulus reflects a prediction error in subjective value: the difference between the current reward value (current reward plus discounted sum of future rewards) and the constant average reward prediction before the specific stimulus. Because the current value is lower with longer delays, this difference, and hence the response, becomes smaller. By contrast, the dopamine activation following the reward itself increases with increasing reward delays after the CS. This characteristic most probably reflects the larger prediction error at the time of the reward with longer delays: the difference between the constant received reward and the lower, temporally discounted value predicted before the reward increases. This interpretation attributes the effects of temporal discounting to changes in subjective value that are induced by a timing mechanism acting on the reward system.
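The interpretation in the preceding paragraph can be expressed in a few lines of Python. This is a sketch under stated assumptions: the hyperbolic discount function, the discount rate k, the reward amount, the delays and the pre-stimulus baseline (set to zero) are all hypothetical, and the predicted value at the time of reward is simply taken to be the discounted value signalled by the CS.

```python
def discounted_value(amount, delay, k):
    """Hyperbolic discounting (an assumed form): subjective value falls with delay."""
    return amount / (1.0 + k * delay)

def dopamine_like_errors(delay, amount=1.0, k=0.5, baseline=0.0):
    """Return (cs_error, reward_error) under the interpretation in the text.

    cs_error: discounted value signalled by the CS minus a constant
              pre-stimulus prediction (the hypothetical baseline).
    reward_error: received reward minus the discounted prediction.
    """
    predicted = discounted_value(amount, delay, k)
    cs_error = predicted - baseline
    reward_error = amount - predicted
    return cs_error, reward_error

for d in (2.0, 4.0, 8.0, 16.0):   # hypothetical delays in seconds
    cs, rew = dopamine_like_errors(d)
    print(f"delay {d:4.0f} s: CS error {cs:.2f}, reward error {rew:.2f}")
```

The two errors move in opposite directions as the delay grows, which is the qualitative pattern described for the CS and reward responses [23,24]; the absolute numbers carry no meaning.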

Third, the dopamine response is sensitive to temporal aspects of reward prediction: it shows reward-prediction error responses to surprising changes in reward timing. A positive response (activation) occurs when a reward occurs at a different time than predicted, and a negative response (depression) occurs when the predicted reward fails to occur at the predicted time and has not already occurred earlier (figure 1; [26]). These characteristics make the dopamine response a teaching signal for time-sensitive reward predictions, in line with basic properties of TD models whose teaching signal is time sensitive. Although earlier TD implementations did not fully replicate the time-sensitive dopamine responses [20,27], later models using appropriate prediction resets achieved good matches with the neuronal data [28]. Reward responses of dopamine neurons are also highly sensitive to the duration of the stimulus–reward interval, and this temporal precision declines as interval duration increases [23], consistent with the time-scale invariance of behavioural timing [1–5]. Thus, dopamine neurons code errors in the prediction of both the occurrence and the time of reward.
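The pattern for a delayed reward can be checked with a TD sketch in Python that skips training and instead assumes learning has already converged, so that each complete-serial-compound unit predicts the discounted reward. All numbers (time steps, discount factor, size of the delay) are hypothetical, and the sketch deliberately omits the prediction resets used in later models [28].

```python
import numpy as np

# Hypothetical timing (arbitrary time steps) and discount factor.
GAMMA = 0.98
CS_T, HABITUAL_T, T = 5, 15, 30
D = HABITUAL_T - CS_T            # learned CS-reward interval

# Assumed converged CSC weights: the unit k steps after CS onset predicts the
# discounted reward gamma**(D - 1 - k); units at or beyond the habitual time predict 0.
w = np.array([GAMMA ** (D - 1 - k) if k < D else 0.0 for k in range(T - CS_T)])

def value(t):
    """Value prediction V(t) read out from the CSC unit active at time t."""
    k = t - CS_T
    return w[k] if 0 <= k < len(w) else 0.0

def probe(reward_t):
    """TD errors delta(t) = r(t) + gamma*V(t) - V(t-1) on a probe trial (no learning)."""
    delta = np.zeros(T)
    for t in range(1, T):
        r = 1.0 if t == reward_t else 0.0
        delta[t] = r + GAMMA * value(t) - value(t - 1)
    return delta

on_time = probe(HABITUAL_T)
delayed = probe(HABITUAL_T + 4)
print("on time: habitual %.2f" % on_time[HABITUAL_T])
print("delayed: habitual %.2f, actual %.2f" % (delayed[HABITUAL_T], delayed[HABITUAL_T + 4]))
```

For an on-time reward the error is zero at the habitual time. When the reward is delayed, the sketch gives a depression (−1) at the habitual time and an activation (+1) at the actual reward time, as described for figure 1. Because the sketch has no prediction reset, it would also produce a depression at the habitual time after an early reward, so it should not be read as a complete model of the data.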

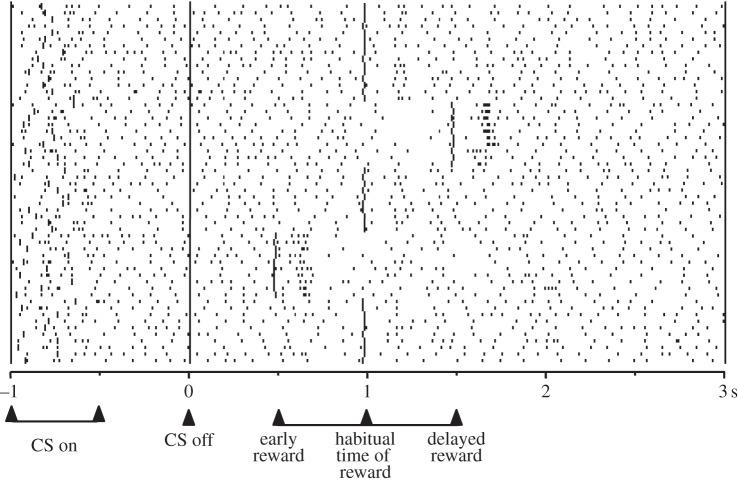

Figure 1.

Responses of dopamine neurons to errors in the temporal prediction of reward. The activity of a dopamine neuron is depressed at the habitual time of reward when the reward is delayed, and increased when the reward is delivered earlier (early reward) or later (delayed reward) than predicted (habitual time of reward). CS, conditioned stimulus (adapted from [26]).

Taken together, these findings show that dopamine neurons are highly sensitive to time intervals. This temporal sensitivity is evident in the responses to prediction errors at temporally distinct events [22], in the influence of temporal delays on subjective value responses [23,24], and in the responses to variations in temporal intervals [23,26]. The temporal sensitivity of dopamine responses is likely an important component of their potential role as a teaching signal for time-sensitive behavioural processes, rather than indicating that these neurons constitute a ‘clock in the brain’, which may reside elsewhere. Alternatively, the temporal sensitivity of dopamine responses may derive from a mechanism within these neurons that is dedicated to their teaching function without requiring access to a central brain clock.

3. Striatum

Several studies have demonstrated the involvement of the striatum in functions extending beyond a role in movement. Many striatal neurons process reward information. The striatal responses to reward-predicting cues undergo temporal discounting in close relationship to behavioural discounting. Cue responses in striatal neurons decrease with increasing delays to reward in parallel with decreasing preferences measured in binary behavioural choices [29]. Whereas the decreasing responses in caudate neurons reflect the differences in discounted reward value between the two choice options, the decreases in ventral striatal neurons reflect the sums of discounted choice values.
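The distinction between difference and sum signals can be illustrated with a toy calculation in Python. It assumes hyperbolic discounting with a hypothetical rate k and hypothetical reward amounts and delays; it only computes the two quantities that the text attributes to caudate and ventral striatal neurons, not any recorded activity.

```python
def hyperbolic(amount, delay, k=0.25):
    """Hyperbolically discounted value (assumed discount form and rate k)."""
    return amount / (1.0 + k * delay)

def striatal_like_signals(later_delay, sooner=(1.0, 0.0), later_amount=2.0):
    """Return (difference, sum) of discounted values for a two-option choice.

    sooner: hypothetical (amount, delay) of the smaller, sooner option.
    later_amount: hypothetical amount of the larger option, delivered after later_delay.
    """
    v_sooner = hyperbolic(*sooner)
    v_later = hyperbolic(later_amount, later_delay)
    return v_later - v_sooner, v_later + v_sooner

for d in (0, 4, 8, 16):
    diff, total = striatal_like_signals(d)
    print(f"delay {d:2d}: difference {diff:+.2f}, sum {total:.2f}")
```

Both signals decline as the later reward is delayed, but the difference crosses zero at the indifference point (here at a delay of 4 units), whereas the sum stays positive; this is one way the two codes can be told apart.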

In addition to temporal discounting, the activity of striatal neurons explicitly reflects the timing of rewards. Striatal neurons in behaving monkeys show sustained activations during the expectation of external signals of behavioural significance and during the delay period preceding reward delivery, both in arm movement tasks and in oculomotor tasks [30–32]. Most of these sustained prereward activations are prolonged when reward delivery is delayed and terminate only after the reward, suggesting a relationship to reward occurrence. Interestingly, in some striatal neurons the activation begins later when reward delivery is predictably delayed (figure 2), extending the relationship to the time of the reward [33]. Orbitofrontal neurons show similar sustained reward anticipatory activity whose onset is displaced by temporal shifts of reward and then ramps up to the expected time of reward [34].

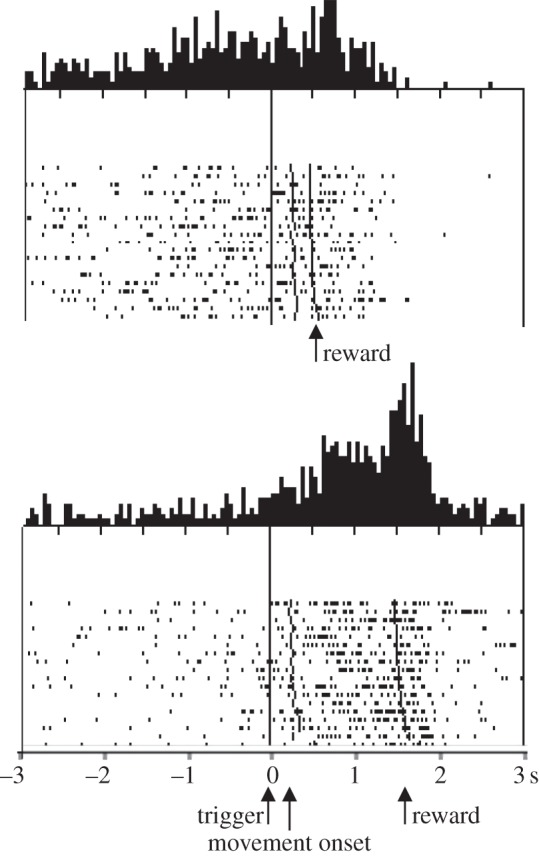

Figure 2.

Influence of delayed reward delivery on the onset of expectation-related neuronal activation. Introducing a 1 s delay in reward delivery prolongs the activation and delays its onset (adapted from [33]).

Through the experience in a particular behavioural task, a stimulus gains predictive information for the occurrence of the subsequent task components. In this way, the reception of a known stimulus would evoke a representation of the predicted event. The observation that striatal neurons are activated before the occurrence of predictable environmental events may suggest that these neurons have access to central representations of these events. These representations would include both the occurrence and the time of reward. The described sustained activity may develop when an environmental stimulus evokes the representation of a specific subsequent event. The influence of predicted reward delay on the onset of reward expectation-related activity suggests that such a stimulus may provide a temporal guide for neurons engaged in the performance of the task.

4. Frontal and parietal cortex

Responses to reward-predicting stimuli decrease in a number of cortical structures with increasing delays, reflecting temporal discounting of reward value. Premotor cortical neurons in monkeys show lower activations following visual instructions for delayed behavioural responses and rewards [35]. Reversal of cue–delay associations leads to reversal of neuronal responses, suggesting a relationship to delay rather than to visual stimulus properties. About one-third of task-related neurons in monkey dorsolateral prefrontal cortex show delay-related reductions of responses to chosen cue targets in choice trials [36]. Similar temporal discounting is observed in cue responses of neurons in the orbitofrontal cortex of monkeys [37]. The same neurons also code reward magnitude, suggesting that temporal discounting reflects reduced subjective reward value rather than simple decreases in motivation. By contrast, other orbitofrontal neurons show reduced movement-related activity with increasing delays [38]. As these activities do not covary with reward value, they seem to reflect the decreased motivation induced by delayed reward. Cue responses in lateral parietal cortex decrease with increasing delays to reward in choices between fixed earlier and psychometrically varied delayed rewards [39]. Taken together, reward-related neuronal responses undergo temporal discounting in a number of cortical structures.

Neurons in frontal, parietal and temporal cortex show anticipatory activity preceding predictable visual or somatosensory stimuli or planned movements. Some of these activations reflect the timing of these events, as they vary with the hazard function of their occurrence (the conditional probability of event occurrence given that the event has not yet occurred). The responses may reflect the temporal evolution of attention to a forthcoming visual stimulus in extrastriate visual cortex V4 [40], the time of a forthcoming vibratory somatosensory stimulus in prefrontal cortex [41] and the planned time of an eye movement in parietal cortex [42]. Indeed, motor preparation is intrinsically linked to the time of the movement to be executed. Because they are sensitive to timing, these responses must have access to some form of internal clock. However, it is unclear whether a central, singular clock exists in the brain that provides timing information to these diverse brain structures, whether these structures constitute parts of a clock distributed across several brain structures, or whether separate clocks in these structures are specific to local functions such as attention and somatosensory, visual and movement processing. Such distributed clocks would need to be synchronized with each other through interactions, without requiring a central clock.

5. Amygdala

The amygdala is a major component of the brain's reward system. Lesions of this nucleus disrupt behavioural reward processes in humans and animals [43–47]. Amygdala neurons respond to reward-predicting stimuli and reward delivery [48–53] and show activations preceding behavioural responses and reward delivery [49,54].

Populations of amygdala neurons show decreased reward responses with increasing instantaneous reward probability (figure 3; [55,56]). The less likely the reward is to occur at a given moment, the higher is the neuronal response, suggesting a relationship to temporal reward ‘surprise’. Humans and animals learn to predict the outcomes of their behaviour. Learning depends on the discrepancy or ‘error’ between received and predicted outcomes. It proceeds when there is a ‘surprise’ in the outcome, that is, when outcomes that are not fully predicted occur. The discrepancy between reward occurrence and reward prediction is termed an ‘error in reward prediction’. The surprise responses observed in the amygdala may reflect the coding of positive temporal reward-prediction errors, and thus may play a role in learning.
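The idea of temporal reward ‘surprise’ can be sketched in Python as one minus the instantaneous reward probability at the moment the reward arrives. The three conditions follow the caption of figure 3, but the Poisson approximation for pseudorandom rewards, its rate, the bin width, and the idealized probabilities of 0 for unpredicted and 1 for fully predicted rewards are all assumptions made for illustration.

```python
import math

def instantaneous_reward_probability(condition, bin_s=0.5, rate_hz=0.5):
    """Probability that reward arrives in the current time bin, given it has not yet.

    'unpredicted':  reward without any preceding stimulus, idealized as 0.
    'pseudorandom': rewards approximated as Poisson at rate_hz -> 1 - exp(-rate * bin).
    'fixed':        reward at a fully learned, fixed time, idealized as 1 in that bin.
    """
    if condition == "unpredicted":
        return 0.0
    if condition == "pseudorandom":
        return 1.0 - math.exp(-rate_hz * bin_s)
    if condition == "fixed":
        return 1.0
    raise ValueError(f"unknown condition: {condition}")

def surprise(condition):
    """Toy surprise signal: how unexpected the reward is at the moment it arrives."""
    return 1.0 - instantaneous_reward_probability(condition)

for c in ("unpredicted", "pseudorandom", "fixed"):
    print(f"{c:12s} p = {instantaneous_reward_probability(c):.2f}, surprise = {surprise(c):.2f}")
```

The conditions come out in the order unpredicted > pseudorandom > fixed, the same order of decreasing response with increasing instantaneous reward probability described for figure 3; real amygdala responses are graded and noisy, and treating the fixed reward as fully predicted is an idealization.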

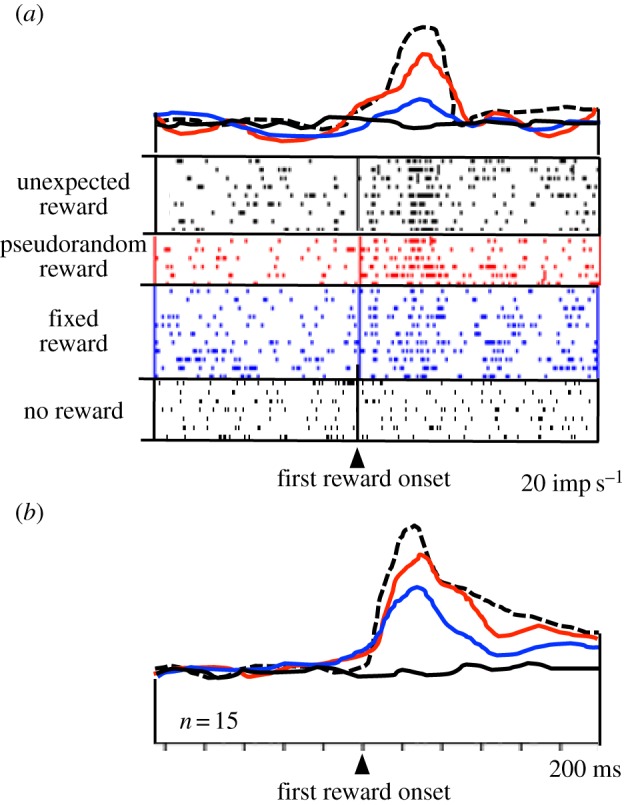

Figure 3.

Neuronal responses in primate amygdala reflecting reward surprise. Responses decrease with increasing reward prediction (defined by increasing instantaneous reward probability). Dotted line: responses to unpredicted reward delivered without any preceding stimulus. Second line from top (red online): reward responses during a stimulus that predicts the occurrence but not the time of a pseudorandomly occurring reward. Third line from top (blue online): responses to a fully predicted, fixed reward delivered 2 s after stimulus presentation. Bottom line (black online): activity at 2 s following a non-rewarded stimulus. (a) Single neuron. (b) Averaged population responses (n = 15 neurons) (adapted from [55]). (Online version in colour.)

In contrast to the ‘surprise neurons’, another population of amygdala neurons shows increased reward responses with increasing instantaneous reward probability (figure 4; [55]). The more likely the reward is to occur at any given moment, the higher the neuronal response. Thus, the modulations vary positively with the temporal predictability of reward. These activations might function to maintain established, temporally specific reward predictions after reinforcement learning and thus help to avoid extinction. This response is similar to the attentional modulation seen in monkey visual cortex V4, which parallels the hazard function of visual stimulus occurrence during individual trials [40]. These data demonstrate that such temporal modulations are not restricted to attentional processes but also occur with reward.

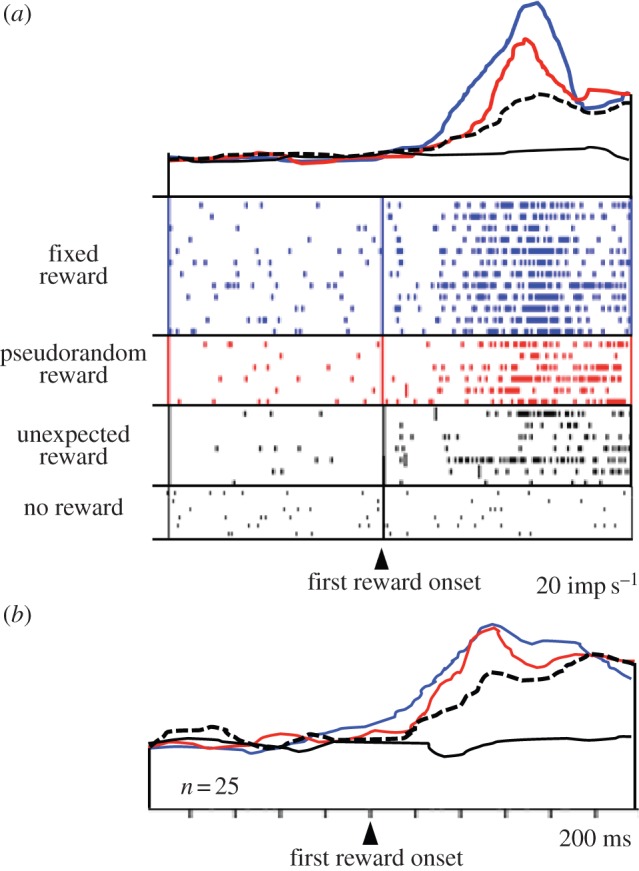

Figure 4.

Increase of reward responses of amygdala neurons with increasing reward prediction (increasing instantaneous reward probability). Same code as in figure 3. (a) Single neuron. (b) Averaged population responses (n = 25 neurons) (adapted from [55]). (Online version in colour.)

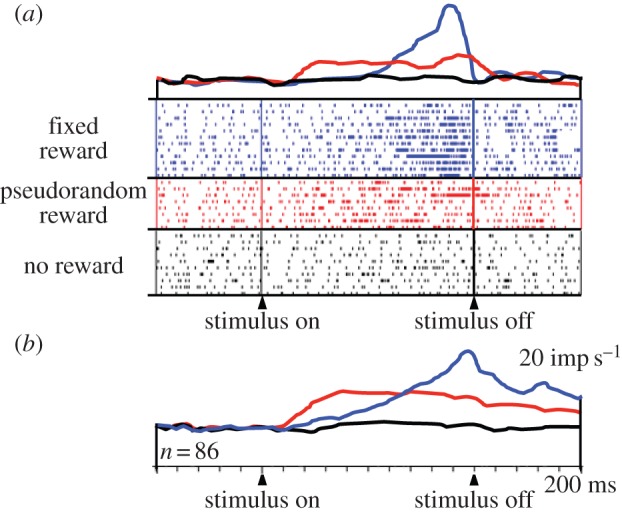

Distinct from responses to reward delivery, a population of amygdala neurons shows activations preceding the predictable delivery of reward (figure 5; [55]). Again, reward predictability defined by instantaneous reward probability has a remarkable influence on the temporal profiles of these prereward activations. Whereas prereward activations preceding a single reward at a fixed time start late and ramp up to high peaks, the activations with a flat instantaneous reward rate start earlier and maintain a modest plateau until reward probability drops with stimulus offset. The absence of ramping with a flat reward rate during the stimulus reflects the flat reward expectation derived from the renewal process used to deliver multiple rewards. It corresponds, inversely, to the ‘rate of occurrence of failure for repairable systems’ in reliability engineering [10,57], rather than to the hazard rate that models unique, non-repeating events. Thus, the temporal profiles of neuronal activity reflect the timing of predicted rewards.
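The contrast between ramping and plateau activity can be sketched in Python by comparing two assumed reward-timing models. The first is a single reward expected at 2 s whose timing uncertainty grows with the interval (a Gaussian with a hypothetical coefficient of variation, in the spirit of scalar timing [3–5]); the second approximates pseudorandom rewards as a Poisson renewal process with a hypothetical rate. Neither model is taken from [55], and the bin width and parameters are illustrative.

```python
import math

def normal_cdf(x, mu, sigma):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def single_reward_hazard(times, reward_s=2.0, cv=0.15, bin_s=0.1):
    """Per-bin hazard of one reward expected at reward_s, with timing uncertainty
    that scales with the interval (sigma = cv * reward_s, an assumed form)."""
    sigma = cv * reward_s
    hazard = []
    for t in times:
        survival = 1.0 - normal_cdf(t, reward_s, sigma)
        p_bin = normal_cdf(t + bin_s, reward_s, sigma) - normal_cdf(t, reward_s, sigma)
        hazard.append(p_bin / survival if survival > 1e-12 else 1.0)
    return hazard

def renewal_rate(times, rate_hz=0.5, bin_s=0.1):
    """Poisson renewal process: the probability of a reward in each bin is constant."""
    return [1.0 - math.exp(-rate_hz * bin_s) for _ in times]

times = [round(0.1 * i, 1) for i in range(20)]   # bins from 0.0 to 1.9 s
ramp = single_reward_hazard(times)
flat = renewal_rate(times)
for t, h, f in zip(times[::4], ramp[::4], flat[::4]):
    print(f"t = {t:.1f} s: single-reward hazard {h:.3f}, renewal rate {f:.3f}")
```

The single-reward hazard starts near zero and rises steeply as 2 s approaches, whereas the per-bin reward probability of the renewal process is constant. This mirrors the late-onset ramp versus early-onset plateau of the prereward activity, although the mapping from probability to firing rate is left unspecified here.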

Figure 5.

Increase of prereward activity of amygdala neurons with instantaneous reward probability. Top line (blue online): activity preceding reward delivered at a fixed time at the end of a specific stimulus. Middle line (red online): activity preceding reward delivered at pseudorandom times during another stimulus. Bottom line (black online): no changes in activity during presentation of a non-rewarded stimulus. (a) Single neuron. (b) Averaged population responses (n = 86 neurons) (adapted from [55]). (Online version in colour.)

Taken together, figures 3–5 show that the activities of amygdala neurons are not only modulated by the actual or anticipated occurrence of reward but also by the predicted timing and instantaneous rate of reward. These modulations affect both the reward responses and the anticipatory activities. Thus, amygdala neurons have access to an internal clock that processes the time of future reward occurrence. The temporal influence on amygdala neurons might derive from dopamine projections [58] involved in interval timing [59,60].

By being sensitive to reward timing, amygdala neurons process a fundamental characteristic of reward function, and thus may play a wider role in reward than hitherto assumed. The observed neuronal reward signals sensitive to the expected time of reward may be involved in a wide range of behavioural functions, including allocation of behavioural resources to specifically timed rewards, planning of sequential steps of goal-directed acts, choices between temporally distinct rewards, and assignment of credit to specifically timed rewards during novel reward learning, value updating and economic decision making, as conceptualized by animal learning and economic decision theories.

Funding statement

Our work was supported by the Wellcome Trust, the Behavioural and Clinical Neuroscience Institute Cambridge and the European Research Council.

References

1. Church RM, Deluty MZ. 1977. Bisection of temporal intervals. J. Exp. Psychol. Anim. Behav. Process. 3, 216–228. (doi:10.1037/0097-7403.3.3.216)

2. Roberts S. 1981. Isolation of an internal clock. J. Exp. Psychol. Anim. Behav. Process. 7, 242–268. (doi:10.1037/0097-7403.7.3.242)

3. Gibbon J. 1977. Scalar expectancy theory and Weber's Law in animal timing. Psychol. Rev. 84, 279–325. (doi:10.1037/0033-295X.84.3.279)

4. Rakitin BC, Gibbon J, Penney TB, Malapani C, Hinton SC, Meck WH. 1998. Scalar expectancy theory and peak-interval timing in humans. J. Exp. Psychol. Anim. Behav. Process. 24, 15–33. (doi:10.1037/0097-7403.24.1.15)

5. Gallistel CR, Gibbon J. 2000. Time, rate and conditioning. Psychol. Rev. 107, 289–344. (doi:10.1037/0033-295X.107.2.289)

6. Reutimann J, Yakovlev V, Fusi S, Senn W. 2004. Climbing neuronal activity as an event-based cortical representation of time. J. Neurosci. 24, 3295–3303. (doi:10.1523/JNEUROSCI.4098-03.2004)

7. Lewis PA, Miall RC. 2006. Remembering the time: a continuous clock. Trends Cogn. Sci. 10, 401–406. (doi:10.1016/j.tics.2006.07.006)

8. Karmarkar UR, Buonomano DV. 2007. Timing in the absence of clocks: encoding time in neural network states. Neuron 53, 427–433. (doi:10.1016/j.neuron.2007.01.006)

9. Coull J, Nobre A. 2008. Dissociating explicit timing from temporal expectation with fMRI. Curr. Opin. Neurobiol. 18, 137–144. (doi:10.1016/j.conb.2008.07.011)

10. Luce RD. 1986. Response times: their role in inferring elementary mental organization. New York, NY: Oxford University Press.

11. Ainslie GW. 1974. Impulse control in pigeons. J. Exp. Anal. Behav. 21, 485–489. (doi:10.1901/jeab.1974.21-485)

12. Rodriguez ML, Logue AW. 1988. Adjusting delay to reinforcement: comparing choice in pigeons and humans. J. Exp. Psychol. Anim. Behav. Process. 14, 105–117. (doi:10.1037/0097-7403.14.1.105)

13. Mackintosh NJ. 1974. The psychology of animal learning. London, UK: Academic Press.

14. Wagner AR. 1981. SOP: a model of automatic memory processing in animal behavior. In Information processing in animals: memory mechanisms (eds Spear NE, Miller RR), pp. 5–47. Hillsdale, NJ: Erlbaum.

15. Hinton SC, Meck WH. 1997. How time flies: functional and neural mechanisms of interval timing. In Time and behavior: psychological and neurobiological analyses (eds Bradshaw CM, Szabadi E), pp. 409–557. New York, NY: Elsevier.

16. Lake JI, Meck WH. 2013. Differential effects of amphetamine and haloperidol on temporal reproduction: dopaminergic regulation of attention and clock speed. Neuropsychologia 51, 284–292. (doi:10.1016/j.neuropsychologia.2012.09.014)

17. Meck WH. 1988. Internal clock and reward pathways share physiologically similar information-processing stages. In Quantitative analyses of behavior: biological determinants of reinforcement, vol. 7 (eds Commons ML, Church RM, Stellar JR, Wagner AR), pp. 121–138. Hillsdale, NJ: Erlbaum.

18. MacDonald CJ, Meck WH. 2004. Systems-level integration of interval timing and reaction time. Neurosci. Biobehav. Rev. 28, 747–769. (doi:10.1016/j.neubiorev.2004.09.007)

19. MacDonald CJ, Meck WH. 2006. Interaction of raclopride and preparatory interval effects on simple reaction time performance. Behav. Brain Res. 175, 62–74. (doi:10.1016/j.bbr.2006.08.004)

20. Schultz W, Dayan P, Montague PR. 1997. A neural substrate of prediction and reward. Science 275, 1593–1599. (doi:10.1126/science.275.5306.1593)

21. Sutton RS, Barto AG. 1998. Reinforcement learning. Cambridge, MA: MIT Press.

22. Ljungberg T, Apicella P, Schultz W. 1992. Responses of monkey dopamine neurons during learning of behavioral reactions. J. Neurophysiol. 67, 145–163.

23. Fiorillo CD, Newsome WT, Schultz W. 2008. The temporal precision of reward prediction in dopamine neurons. Nat. Neurosci. 11, 966–973. (doi:10.1038/nn.2159)

24. Kobayashi S, Schultz W. 2008. Influence of reward delays on responses of dopamine neurons. J. Neurosci. 28, 7837–7846. (doi:10.1523/JNEUROSCI.1600-08.2008)

25. Samuelson PA. 1937. Some aspects of the pure theory of capital. Q. J. Econ. 51, 469–496. (doi:10.2307/1884837)

26. Hollerman JR, Schultz W. 1998. Dopamine neurons report an error in the temporal prediction of reward during learning. Nat. Neurosci. 1, 304–309. (doi:10.1038/1124)

27. Montague PR, Dayan P, Sejnowski TJ. 1996. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J. Neurosci. 16, 1936–1947.

28. Suri R, Schultz W. 1999. A neural network with dopamine-like reinforcement signal that learns a spatial delayed response task. Neuroscience 91, 871–890. (doi:10.1016/S0306-4522(98)00697-6)

29. Cai X, Kim S, Lee D. 2011. Heterogeneous coding of temporally discounted values in the dorsal and ventral striatum during intertemporal choice. Neuron 69, 170–182. (doi:10.1016/j.neuron.2010.11.041)

30. Hikosaka O, Sakamoto M, Usui S. 1989. Functional properties of monkey caudate neurons. III. Activities related to expectation of target and reward. J. Neurophysiol. 61, 814–832.

31. Schultz W. 1989. Neurophysiology of basal ganglia. In Handbook of experimental pharmacology (ed. Calne DB), pp. 1–45. Berlin, Germany: Springer.

32. Apicella P, Scarnati E, Ljungberg T, Schultz W. 1992. Neuronal activity in monkey striatum related to the expectation of predictable environmental events. J. Neurophysiol. 68, 945–960.

33. Schultz W, Apicella P, Scarnati E, Ljungberg T. 1992. Neuronal activity in monkey ventral striatum related to the expectation of reward. J. Neurosci. 12, 4595–4610.

34. Tremblay L, Schultz W. 2000. Modifications of reward expectation-related neuronal activity during learning in primate orbitofrontal cortex. J. Neurophysiol. 83, 1877–1885.

35. Roesch MR, Olson CR. 2005. Neuronal activity dependent on anticipated and elapsed delay in macaque prefrontal cortex, frontal and supplementary eye fields, and premotor cortex. J. Neurophysiol. 94, 1469–1497. (doi:10.1152/jn.00064.2005)

36. Kim S, Hwang J, Lee D. 2008. Prefrontal coding of temporally discounted values during intertemporal choice. Neuron 59, 161–172. (doi:10.1016/j.neuron.2008.05.010)

37. Roesch MR, Olson CR. 2005. Neuronal activity in orbitofrontal cortex reflects the value of time. J. Neurophysiol. 94, 2457–2471. (doi:10.1152/jn.00373.2005)

38. Roesch MR, Taylor AR, Schoenbaum G. 2006. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron 51, 509–520. (doi:10.1016/j.neuron.2006.06.027)

39. Louie K, Glimcher PW. 2010. Separating value from choice: delay discounting activity in the lateral intraparietal area. J. Neurosci. 30, 5498–5507. (doi:10.1523/JNEUROSCI.5742-09.2010)

40. Ghose GM, Maunsell JHR. 2002. Attentional modulation in visual cortex depends on task timing. Nature 419, 616–620. (doi:10.1038/nature01057)

41. Brody CD, Hernandez A, Zainos A, Romo R. 2003. Timing and neuronal encoding of somatosensory parametric working memory in macaque prefrontal cortex. Cereb. Cortex 13, 1196–1207. (doi:10.1093/cercor/bhg100)

42. Janssen P, Shadlen MN. 2005. A representation of the hazard rate of elapsed time in macaque area LIP. Nat. Neurosci. 8, 234–241. (doi:10.1038/nn1386)

43. Bechara A, Damasio H, Damasio AR, Lee GP. 1999. Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. J. Neurosci. 19, 5473–5481.

44. Hampton AN, Adolphs R, Tyszka JM, O'Doherty JP. 2007. Contributions of the amygdala to reward expectancy and choice signals in human prefrontal cortex. Neuron 55, 545–555. (doi:10.1016/j.neuron.2007.07.022)

45. Everitt BJ, Morris KA, O'Brien A, Robbins TW. 1991. The basolateral amygdala-ventral striatal system and conditioned place preference: further evidence of limbic-striatal interactions underlying reward-related processes. Neuroscience 42, 1–18. (doi:10.1016/0306-4522(91)90145-E)

46. Gaffan D, Murray EA, Fabre-Thorpe M. 1993. Interaction of the amygdala with the frontal lobe in reward memory. Eur. J. Neurosci. 5, 968–975. (doi:10.1111/j.1460-9568.1993.tb00948.x)

47. Parkinson JA, Crofts HS, McGuigan M, Tomic DL, Everitt BJ, Roberts AC. 2001. The role of primate amygdala in conditioned reinforcement. J. Neurosci. 21, 7770–7780.

48. Sanghera MK, Rolls ET, Roper-Hall A. 1979. Visual responses of neurons in the dorsolateral amygdala of the alert monkey. Exp. Neurol. 63, 610–626. (doi:10.1016/0014-4886(79)90175-4)

49. Schoenbaum G, Chiba AA, Gallagher M. 1999. Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. J. Neurosci. 19, 1876–1884.

50. Sugase-Miyamoto Y, Richmond BJ. 2005. Neuronal signals in the monkey basolateral amygdala during reward schedules. J. Neurosci. 25, 11071–11083. (doi:10.1523/JNEUROSCI.1796-05.2005)

51. Paton JJ, Belova MA, Morrison SE, Salzman CD. 2006. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature 439, 865–870. (doi:10.1038/nature04490)

52. Bermudez MA, Schultz W. 2010. Responses of amygdala neurons to positive reward-predicting stimuli depend on background reward (contingency) rather than stimulus–reward pairing (contiguity). J. Neurophysiol. 103, 1158–1170. (doi:10.1152/jn.00933.2009)

53. Bermudez MA, Schultz W. 2010. Reward magnitude coding in primate amygdala neurons. J. Neurophysiol. 104, 3424–3432. (doi:10.1152/jn.00540.2010)

54. Carelli RM, Williams JG, Hollander JA. 2003. Basolateral amygdala neurons encode cocaine self-administration and cocaine-associated cues. J. Neurosci. 23, 8204–8211.

55. Bermudez MA, Göbel C, Schultz W. 2012. Sensitivity to temporal reward structure in amygdala neurons. Curr. Biol. 22, 1839–1844. (doi:10.1016/j.cub.2012.07.062)

56. Belova MA, Paton JJ, Morrison SE, Salzman CD. 2007. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron 55, 970–984. (doi:10.1016/j.neuron.2007.08.004)

57. Doob JL. 1948. Renewal theory from the point of view of the theory of probability. Trans. Am. Math. Soc. 63, 422–438. (doi:10.1090/S0002-9947-1948-0025098-8)

58. Sadikot AF, Parent A. 1990. The monoaminergic innervation of the amygdala in the squirrel monkey: an immunohistological study. Neuroscience 36, 431–447. (doi:10.1016/0306-4522(90)90439-B)

59. Artieda J, Pastor MA, Lacruz F, Obeso JA. 1992. Temporal discrimination is abnormal in Parkinson's disease. Brain 115, 199–210. (doi:10.1093/brain/115.1.199)

60. Buhusi CV, Meck WH. 2005. What makes us tick? Functional and neural mechanisms of interval timing. Nat. Rev. Neurosci. 6, 755–765. (doi:10.1038/nrn1764)