Significance

Picking up visual information from our environment in a timely manner is the starting point of adaptive visual-motor behavior. Humans and other animals with foveated visual systems extract visual information through a cycle of brief fixations interspersed with gaze shifts. Object identification typically requires foveal analysis (limited to a small region of central vision). In addition, the next fixation location needs to be selected using peripheral vision. How does the brain coordinate these two tasks on the short time scale of individual fixations? We show that the uptake of information for foveal analysis and peripheral selection occurs in parallel and independently. These results provide important theoretical constraints on models of eye movement control in a variety of visual-motor domains.

Keywords: eye movement control, perceptual decision-making, attention, classification image

Abstract

Human vision is an active process in which information is sampled during brief periods of stable fixation in between gaze shifts. Foveal analysis serves to identify the currently fixated object and has to be coordinated with a peripheral selection process of the next fixation location. Models of visual search and scene perception typically focus on the latter, without considering foveal processing requirements. We developed a dual-task noise classification technique that enables identification of the information uptake for foveal analysis and peripheral selection within a single fixation. Human observers had to use foveal vision to extract visual feature information (orientation) from different locations for a psychophysical comparison. The selection of to-be-fixated locations was guided by a different feature (luminance contrast). We inserted noise in both visual features and identified the uptake of information by looking at correlations between the noise at different points in time and behavior. Our data show that foveal analysis and peripheral selection proceeded completely in parallel. Peripheral processing stopped some time before the onset of an eye movement, but foveal analysis continued during this period. Variations in the difficulty of foveal processing did not influence the uptake of peripheral information and the efficacy of peripheral selection, suggesting that foveal analysis and peripheral selection operated independently. These results provide important theoretical constraints on how to model target selection in conjunction with foveal object identification: in parallel and independently.

Almost all human visually guided behavior relies on the selective uptake of information, due to sensory and cognitive limitations. On the sensory side, the sampling of visual input by the retinal mosaic of photoreceptors becomes increasingly sparse and irregular away from central vision (1). In addition, fewer cortical neurons are devoted to the analysis of peripheral visual information (cortical magnification) (2, 3). Humans and other animals with so-called foveated visual systems have evolved gaze-shifting mechanisms to overcome these limitations. Saccadic eye movements serve to rapidly and efficiently deploy gaze to objects and regions of interest in the visual field. Sampling the environment appropriately with gaze is the starting point of adaptive visual-motor behavior (4, 5).

Studies have shown that saccadic eye movements are guided by analysis of information in the visual periphery up to 80–100 ms before saccade execution (6–8). However, active vision typically requires humans not only to analyze information in the visual periphery to decide where to fixate next (peripheral selection), but also to analyze the information at the current fixation location (foveal analysis). Not much is known about how foveal analysis and peripheral selection are coordinated and how they interact. In this regard, we need to know (i) whether and to what extent foveal analysis and peripheral selection are constrained by a common bottleneck or limited capacity resource, and (ii) how time within a fixation is allocated to these two tasks.

Capacity limitations are ubiquitous in human visual processing. There is a long-standing debate on the extent to which visual attention may be focused on different locations in the visual field (9–11). If foveal analysis and peripheral selection both require a spatial attentional “spotlight,” the coordination of these two tasks will be constrained by the way in which this spotlight can be configured. For example, the size of the spotlight may vary with the processing difficulty of foveal information, as in tunnel vision (12, 13). Similarly, in both reading (14) and scene-viewing (15), a reduction in the perceptual span has been reported with higher foveal load. A high foveal processing load can also prevent distraction from irrelevant visual information in the periphery (16). These findings suggest that there may be interactions between foveal analysis and peripheral selection (17, 18), in that the gain on peripheral information processing may vary according to the foveal processing load.

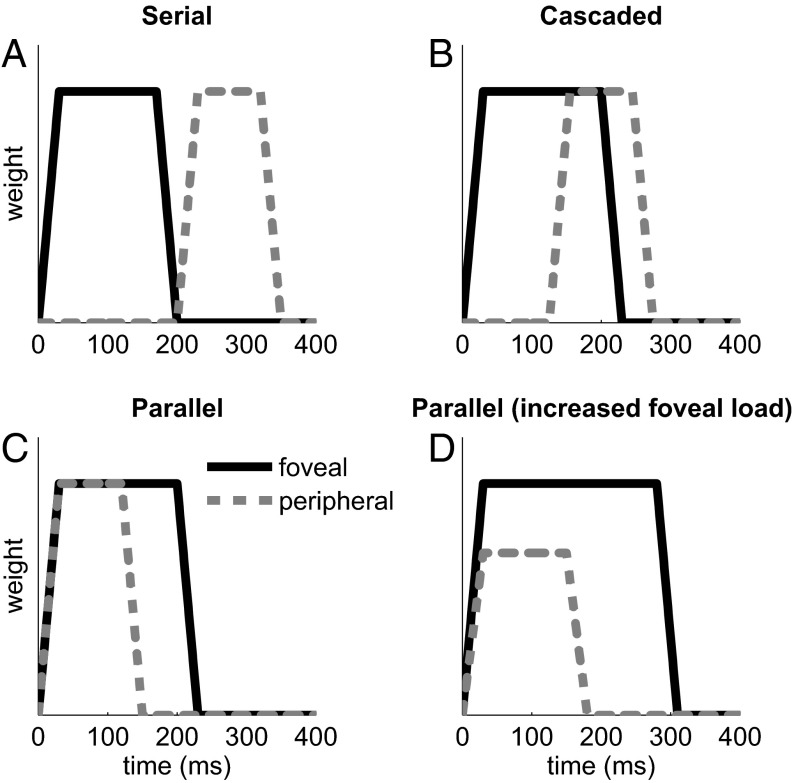

A useful way to think of the coordination between foveal analysis and peripheral selection is to picture the temporal profile of information extraction over the course of a fixation period. Fig. 1 shows some schematic profiles, or integration windows, for foveal and peripheral information (shown in black and gray, respectively). Fig. 1 A–C chart the progression in the extent to which the extraction of peripheral visual information is contingent upon the completion of foveal analysis: from completely contingent (Fig. 1A, serial), through partly contingent (Fig. 1B, cascaded), to completely parallel (Fig. 1C). This temporal relation between foveal analysis and peripheral selection is a core assumption of models of eye movement control in reading (14, 19–21) and other visual-motor domains (22, 23). Finally, Fig. 1D illustrates a hypothetical tradeoff between increased foveal load and reduced peripheral gain. In this example, the foveal integration window is extended to reflect the higher processing load. The duration of the peripheral window is also extended, but by a smaller amount, and its amplitude is reduced. Note that the accuracy of peripheral selection will be determined by both the amplitude and the duration of the integration window.

Fig. 1.

Hypothetical temporal weighting functions for foveal analysis and peripheral selection. (A) Strict serial model: peripheral information is analyzed only once foveal processing is complete. (B) A weaker version of the serial model in which peripheral information is processed once some criterion amount of foveal analysis is complete. (C) Parallel model in which foveal analysis and peripheral selection start together. In A–C, the time window for peripheral selection is shorter than that for foveal analysis, reflecting the primary importance of the latter. (D) Manipulation of foveal load. As foveal processing difficulty is increased, more time is taken to analyze the foveal information. The time window for peripheral selection extends as well, but by a smaller amount. In addition, the gain of peripheral processing is lower, resulting in attenuation of the amplitude of the weighting function.

A potentially powerful way to identify the coordination between foveal analysis and peripheral selection is then to estimate these underlying integration windows directly, under conditions of variable foveal processing load. Identifying these windows is far from trivial; it involves determining what information is being processed, from where, and at what point in time during an individual fixation. We have developed a dual-task noise classification approach (24–26) that allows us to identify what information is used by the observer for what “task” over the brief time scale of a single fixation. Using this method, we show that the uptake of information for foveal analysis and peripheral selection proceeds independently and in parallel.

Results

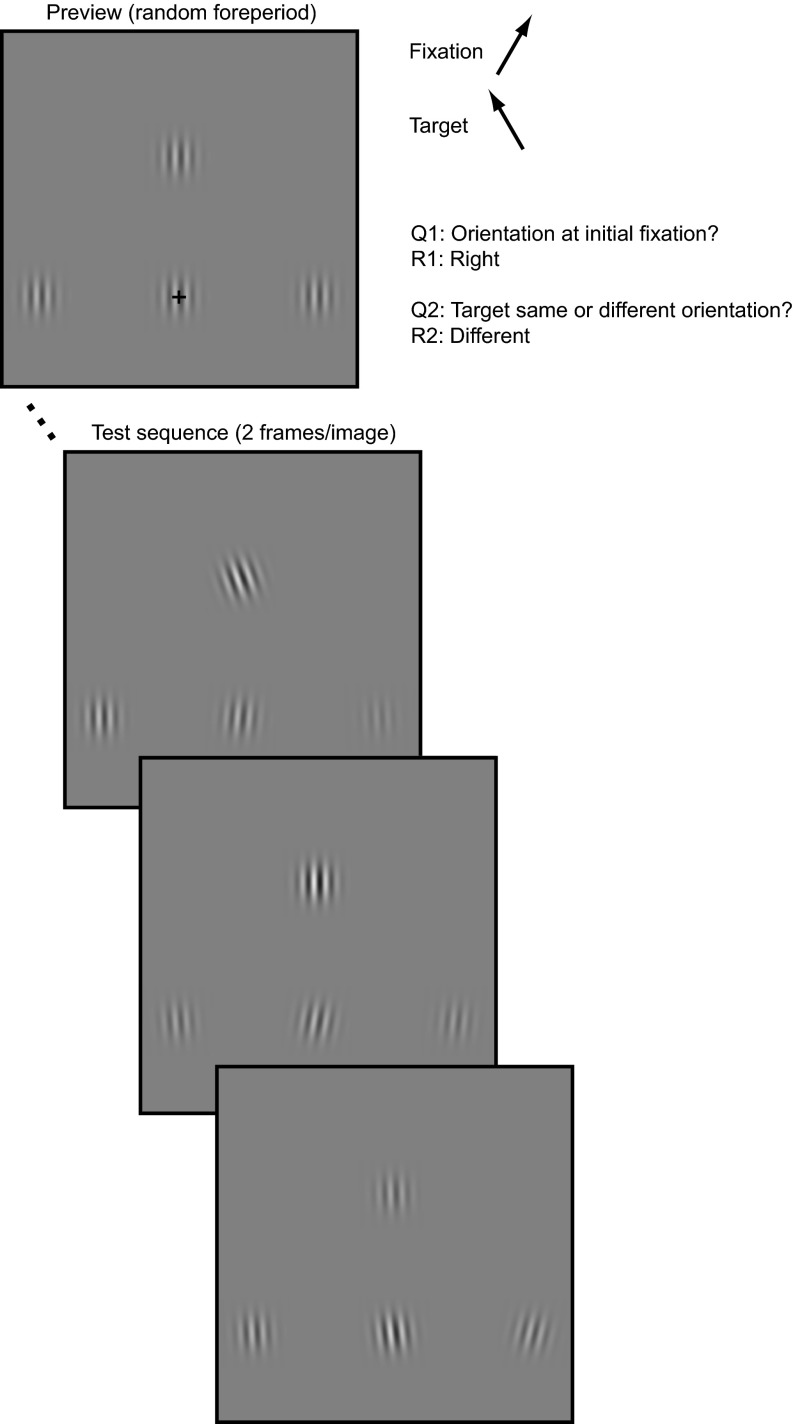

Our experimental paradigm is illustrated in Fig. 2; it aims to mimic the demands of active visual sampling. The observer has to analyze information at multiple locations using foveal vision (foveal analysis), use peripheral information to guide a saccade (peripheral selection), and use the collected information to make some overall perceptual decision about the state of the visual world. Critically, foveal analysis and peripheral selection are based on different visual dimensions (tilt and contrast, respectively). We insert temporal noise in both visual dimensions and relate this noise to the behavioral outcomes.

Fig. 2.

Illustration of the paradigm. Trials start with a preview of variable duration. The preview is replaced by a sequence of test images, with each image shown for two video frames (∼24 ms per image), for a total duration of ∼750 ms, or 32 images at an 85-Hz monitor refresh rate. The peripheral target is signaled by its higher average luminance contrast (straight up in this figure). The mean target and nontarget contrasts are equidistant from the preview contrast. The fixation pattern remains at the preview contrast. The contrast of all patterns is perturbed independently with zero-mean Gaussian noise (σ = 0.15). The fixation pattern can be tilted clockwise or anticlockwise from vertical (clockwise in this example). The target pattern can be tilted in the same or a different direction (anticlockwise in this example). The direction of tilt of the remaining patterns is determined randomly and independently, so that their tilt conveys no information about the likely target orientation (i.e., the orientations of the peripheral patterns are uncorrelated). The orientation of all four patterns is also perturbed independently with zero-mean Gaussian noise (σ = 6°). The mean pattern tilt was either 1° or 2° (as in this figure).

Eight human observers took part in a comparative tilt judgment task. An initially fixated pattern, which we refer to as the foveal target, was tilted away from vertical by a small amount (1° or 2°) in either a clockwise or anticlockwise direction. Three peripheral patterns were presented, one of which (the peripheral target) had a slightly higher luminance contrast than the other two (nontargets). All three patterns were independently tilted either clockwise or anticlockwise. Observers had limited time to compare the tilt of the foveal and peripheral targets. The tilt and contrast of all four patterns were independently perturbed with zero-mean Gaussian noise, refreshed every two video frames (∼24 ms). The tilt offset was sufficiently small that this was a foveal task: to perform it, observers had to (i) analyze the tilt of the foveal target; (ii) select the peripheral target from the nontargets on the basis of luminance contrast; (iii) fixate the peripheral target and analyze its orientation; and (iv) respond whether the foveal and peripheral targets were tilted in the same or different directions. They also reported the tilt direction of the foveal target.
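To make the stimulus statistics concrete, here is a minimal Python sketch of how one trial's noisy sequences could be generated under the parameters stated in Fig. 2 (32 samples of two 85-Hz frames each, tilt noise σ = 6°, contrast noise σ = 0.15). The preview contrast and the target/nontarget contrast offset are not given in this section, so `preview_contrast` and `contrast_delta` are hypothetical parameters, as are all function names; this is an illustration, not the stimulus code used in the study.

```python
import random
from dataclasses import dataclass

FRAMES_PER_SAMPLE = 2   # each noise sample lasts two video frames
REFRESH_HZ = 85.0       # monitor refresh rate
N_SAMPLES = 32          # noise samples per test sequence
TILT_SD_DEG = 6.0       # SD of the orientation noise (degrees)
CONTRAST_SD = 0.15      # SD of the contrast noise

SAMPLE_MS = 1000.0 * FRAMES_PER_SAMPLE / REFRESH_HZ  # ~23.5 ms per sample


@dataclass
class NoisySequence:
    tilts: list[float]      # per-sample orientation in degrees (+ = clockwise)
    contrasts: list[float]  # per-sample luminance contrast


def make_sequence(mean_tilt, mean_contrast, rng):
    """Perturb tilt and contrast independently with zero-mean Gaussian noise."""
    tilts = [mean_tilt + rng.gauss(0.0, TILT_SD_DEG) for _ in range(N_SAMPLES)]
    # Assumption: no clipping of out-of-range contrast values is modeled here.
    contrasts = [mean_contrast + rng.gauss(0.0, CONTRAST_SD) for _ in range(N_SAMPLES)]
    return NoisySequence(tilts, contrasts)


def make_trial(tilt_mag, preview_contrast, contrast_delta, rng):
    """Hypothetical trial generator: one foveal and three peripheral patterns.

    The foveal pattern stays at the preview contrast; the peripheral target and
    nontargets sit equidistant above and below it. Each pattern's tilt
    direction is drawn independently.
    """
    signs = [rng.choice((-1, 1)) for _ in range(4)]
    target_idx = rng.randrange(3)
    foveal = make_sequence(signs[0] * tilt_mag, preview_contrast, rng)
    peripheral = []
    for i in range(3):
        offset = contrast_delta if i == target_idx else -contrast_delta
        peripheral.append(
            make_sequence(signs[i + 1] * tilt_mag, preview_contrast + offset, rng))
    same_direction = signs[0] == signs[target_idx + 1]  # correct same/different answer
    return foveal, peripheral, target_idx, same_direction
```

For example, `make_trial(2.0, 0.3, 0.05, random.Random(1))` returns one low-load trial; the 0.3 and 0.05 contrast values are placeholders.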

Though this was a challenging task, the overall same/different judgment was performed above chance by 7/8 observers (based on the 95% binomial confidence intervals). Averaged across observers, the overall accuracy was 60%. Accuracy of foveal target tilt discrimination was well above chance for all observers, with a mean of 77% correct. The peripheral target was correctly fixated with the first saccade on 63% of the trials (note that chance performance here corresponds to 33%; all observers were above chance on this measure). Fig. S1 summarizes a number of performance measures along with the individual observer data.
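The above-chance criterion can be sketched as checking whether the lower bound of a 95% binomial confidence interval exceeds the chance level (0.5 for the same/different judgment, 1/3 for picking the target among three peripheral patterns). The section does not say which binomial interval was used; the Wilson score interval below is a swapped-in choice, and the trial counts in the example are hypothetical.

```python
from math import sqrt


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z = 1.96 for ~95%)."""
    p = successes / n
    denom = 1.0 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


def above_chance(successes, n, chance):
    """True if the whole interval lies above the chance level."""
    lower, _ = wilson_interval(successes, n)
    return lower > chance
```

With a hypothetical 1,000 trials, 60% correct clears the 0.5 chance level, whereas 52% does not.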

Temporal Integration Windows for Foveal Analysis and Peripheral Selection.

To identify the foveal integration window, we focus on the tilt discrimination of the foveal target. To identify the peripheral integration window for saccade target selection, we focus on which of the three peripheral patterns was selected for the first saccade. The logic of the noise classification approach is straightforward: If a noise sample at a particular point in time was processed and used to drive behavior, it should be predictive of behavior. By assessing to what extent the noise at various points in time is predictive of behavior, we obtain an estimate of the underlying temporal weighting function that observers use to perform a particular task (27). We performed this analysis aligned on the onset of the test sequence (display aligned) and aligned on the onset of the first movement (saccade aligned).

We start with foveal analysis. Suppose that, for example, the true direction of tilt of the foveal target on a given trial is +1° (clockwise). Suppose the observer is particularly sensitive to the first two samples presented after the onset of the test sequence (corresponding to the first ∼50 ms of the sequence). Due to random sampling, the orientations presented during this interval are −3° and 0°. The mean orientation over this interval is negative, and the observer signals that the tilt of the foveal target was anticlockwise. This response would be classed as an error in our analysis. As a first step, then, we averaged the orientation noise traces of the foveal target separately for correct and error decisions to infer the temporal interval used by the observer to make a decision. Before averaging, we subtracted the true mean orientation from the sequence of tilt values, so that we were left with the noise samples only, and 0° corresponds to the “true” mean tilt. Noise samples that tilted the pattern further in its nominal (mean) orientation were given a positive sign (e.g., more clockwise for a clockwise pattern); samples that tilted the pattern in the opposite direction from the nominal orientation were given a negative sign. Trials with different levels of mean tilt (1° or 2°) were pooled together in this analysis.
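The sign convention and the correct/error averaging just described can be sketched in a few lines of Python. The tuple layout and function names are hypothetical; the sketch only shows how signed noise traces are formed and averaged per class.

```python
from statistics import mean


def signed_noise(tilts, true_tilt):
    """Remove the nominal tilt and sign the residual noise.

    Positive values tilt the pattern further in its nominal direction;
    negative values tilt it back toward (or past) vertical.
    """
    direction = 1.0 if true_tilt > 0 else -1.0
    return [direction * (t - true_tilt) for t in tilts]


def foveal_classification_image(trials):
    """trials: iterable of (tilts, true_tilt, correct) tuples.

    Returns the mean signed noise trace for correct and for error trials.
    Trials with different mean tilts (1 or 2 deg) can be pooled because the
    nominal tilt has already been subtracted.
    """
    correct, error = [], []
    for tilts, true_tilt, is_correct in trials:
        (correct if is_correct else error).append(signed_noise(tilts, true_tilt))

    def column_means(rows):
        return [mean(column) for column in zip(*rows)]

    return column_means(correct), column_means(error)
```

In the worked example above (true tilt +1°, presented orientations −3° and 0°), `signed_noise([-3.0, 0.0], 1.0)` gives `[-4.0, -1.0]`: both samples push the pattern away from its true direction.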

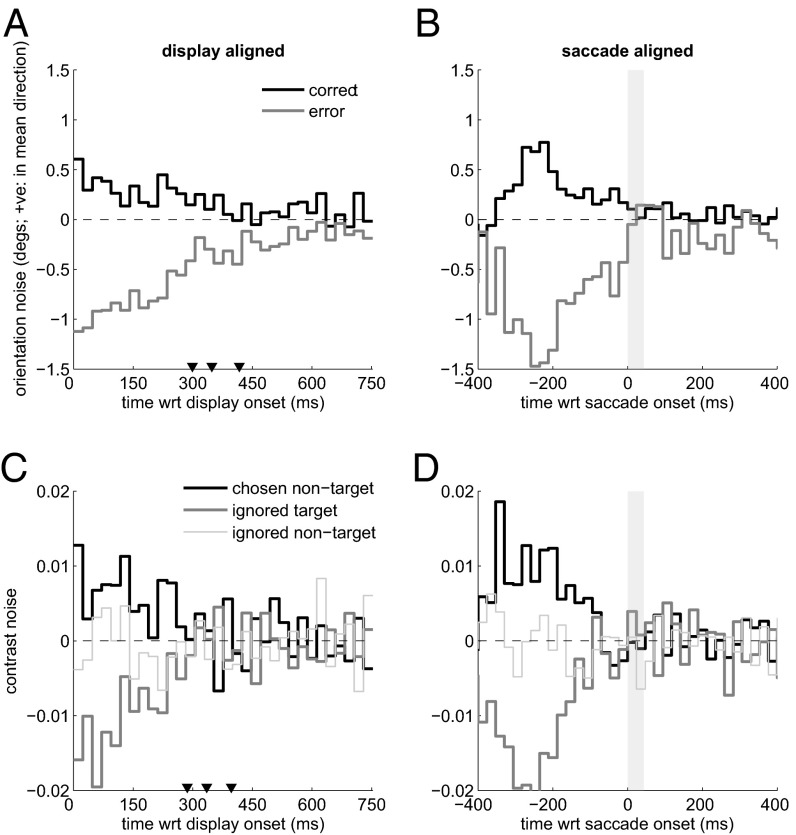

Fig. 3 A and B shows these classification images for a single observer. Fig. 3 A and C shows the display-aligned traces for the whole trial duration; Fig. 3 B and D shows the traces aligned to saccade onset. Where the two curves differ, we have evidence that the noise in the stimulus influenced the decision. During this interval, noise samples that tilted the pattern away from its true direction were more likely to induce an error, and noise samples that tilted the pattern even further in the true direction were more likely to induce a correct decision. The foveal nature of this discrimination is readily apparent in these traces, particularly when aligned on eye movement onset. Before movement onset, the orientation noise clearly influenced the perceptual tilt judgment, but after the movement, the noise had a much less pronounced effect on the decisions. Aligned on display onset, the traces converge rather gradually due to the variability in the duration of the initial fixation.

Fig. 3.

Raw temporal classification images for foveal identification (orientation noise) (A and B) and peripheral selection (C and D) for one observer. Note that display onset refers to the start of the noisy test image sequence; it is not the same as stimulus onset, due to the preview during which all patterns were already present (Fig. 2). The three triangles in the display-aligned panels (A and C) correspond to the 25th, 50th (median), and 75th percentiles of the fixation duration distribution. The gray-shaded box in the saccade-aligned plots (B and D) corresponds to the mean saccade duration for this observer.

Next consider peripheral selection. The analysis here is more complex, because there were three patterns to choose from. However, the logic is very similar. Consider an observer who uses the information presented between 100 and 150 ms after onset of the test sequence. If during this interval one of the nontargets happens to have a particularly high luminance contrast, the observer may be more likely to select that pattern for the next fixation (7). In other words, the sequence of contrast values in the periphery should be predictive of the observed fixation behavior. For this analysis, we only considered erroneous saccades directed to a nontarget. Fig. S2 shows that little insight is gained from correct saccade trials in the identification of the integration window for peripheral selection.

Fig. 3 C and D shows the average noise traces from all three peripheral locations preceding these saccades, for the same observer. Of critical interest is the comparison between the ignored target and the chosen nontarget. As expected, errors in peripheral selection occurred when the nontarget happened to be relatively high in contrast and/or the target was relatively low in contrast. Again, where the two curves differ, we have evidence that noise in the stimulus influenced the decisions. Of course, in this instance, noise that occurred after saccade onset cannot, by definition, influence saccade target selection.
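The peripheral counterpart can be sketched the same way. The tuple layout and names are hypothetical, and the contrast noise is assumed to have had each location's nominal contrast subtracted already; only erroneous first saccades contribute.

```python
from statistics import mean


def peripheral_classification_image(trials):
    """trials: iterable of (noise, target_idx, chosen_idx) tuples, where noise is
    a list of three contrast-noise traces (one per peripheral location).

    Only erroneous first saccades (chosen_idx != target_idx) are used. Returns
    the mean traces for the chosen nontarget, the ignored target, and the
    other nontarget.
    """
    chosen, ignored, other = [], [], []
    for noise, target_idx, chosen_idx in trials:
        if chosen_idx == target_idx:
            continue  # correct saccades are excluded from this analysis
        (other_idx,) = {0, 1, 2} - {target_idx, chosen_idx}
        chosen.append(noise[chosen_idx])
        ignored.append(noise[target_idx])
        other.append(noise[other_idx])

    def column_means(rows):
        return [mean(column) for column in zip(*rows)]

    return column_means(chosen), column_means(ignored), column_means(other)
```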

To compare the temporal processing windows underlying foveal analysis and peripheral selection more directly, we calculated the area under the receiver operating characteristic (ROC) curve at each point in time, given two distributions of noise samples: correct vs. error for foveal analysis; chosen nontarget vs. ignored target for peripheral selection (the thick black and gray lines in Fig. 3). This measure quantifies the separation between two distributions as the probability with which a pair of noise samples can be accurately assigned to the two stimulus or response classes by comparison with a criterion value (28). When two distributions lie completely on top of each other, this classification cannot be made above chance level. As the distributions separate, classification becomes more accurate. This measure allows for direct comparison between foveal analysis and peripheral selection on a meaningful scale (probability) that incorporates the variability of the noise samples within each stimulus or response class (not included in Fig. 3).

The area under the ROC curve may be computed nonparametrically, so that we do not have to make any assumptions about the distributions of noise values (29). Though the distributions that generated the external noise were Gaussian, there is no guarantee that they will still be Gaussian once conditionalized on stimulus or response class. For example, extreme noise values drawn from the tails of the distribution are more likely to generate errors in tilt discrimination than noise values closer to the mean. Our analysis here is similar to that used in single-cell neurophysiology to quantify the extent to which single neurons can distinguish between two stimuli (where distributions of firing rates are frequently nonnormal) (30–32).
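Because the ROC area equals the probability that a randomly drawn sample from one class exceeds a randomly drawn sample from the other (ties counted as one half), it can be estimated without any distributional assumption. A minimal sketch, where `pos` holds the correct-trial (or chosen-nontarget) noise values at one time point and `neg` the error-trial (or ignored-target) values; the names are illustrative.

```python
def auc_pairwise(pos, neg):
    """Nonparametric ROC area: P(pos > neg) + 0.5 * P(pos == neg) over all pairs."""
    score = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                score += 1.0
            elif p == n:
                score += 0.5
    return score / (len(pos) * len(neg))
```

Identical distributions give 0.5 (chance) and completely separated ones give 1.0. The double loop is quadratic in the number of trials, which is acceptable for per-observer data.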

Materials and Methods, ROC Analyses explains in detail how the analysis was performed. In brief, we iterated a criterion from a small value (where all noise values from both distributions lie to the right of the criterion) to a large value (where all of the noise values lie to the left of the criterion). For each criterion value, we computed the proportion of noise values from the error (foveal analysis) and ignored target (peripheral selection) distributions that were greater than the criterion value. In addition, we computed the proportion of noise values from the correct (foveal analysis) and chosen nontarget (peripheral selection) distributions that were greater than the criterion. Plotting one proportion (correct or chosen nontarget) against the other (error or ignored target) traces out the ROC curve. This curve was then numerically integrated to find the classification accuracy.
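The criterion sweep can be written down directly. This sketch places a criterion at every observed value, records the proportion of each distribution exceeding it, and integrates the resulting ROC curve with the trapezoidal rule. Applying it separately at each noise sample yields a time course of classification accuracy. The names `roc_area` and `roc_time_course` are ours, and this is not the analysis code from Materials and Methods. With the trapezoidal rule, the result coincides with the pairwise probability estimate, including half-credit for ties.

```python
def roc_area(pos, neg):
    """Area under the empirical ROC curve obtained by sweeping a criterion.

    pos: correct (foveal) or chosen-nontarget (peripheral) noise values.
    neg: error (foveal) or ignored-target (peripheral) noise values.
    """
    # Below the smallest value, every sample exceeds the criterion: point (1, 1).
    points = [(1.0, 1.0)]
    for criterion in sorted(set(pos) | set(neg)):
        p_neg = sum(x > criterion for x in neg) / len(neg)
        p_pos = sum(x > criterion for x in pos) / len(pos)
        points.append((p_neg, p_pos))
    # The last criterion is the largest value, so the curve ends at (0, 0).
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x0 - x1) * (y0 + y1) / 2.0  # trapezoid between successive points
    return area


def roc_time_course(pos_traces, neg_traces):
    """Apply roc_area at each time point; traces are lists of equal-length lists."""
    return [roc_area(list(p), list(n))
            for p, n in zip(zip(*pos_traces), zip(*neg_traces))]
```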

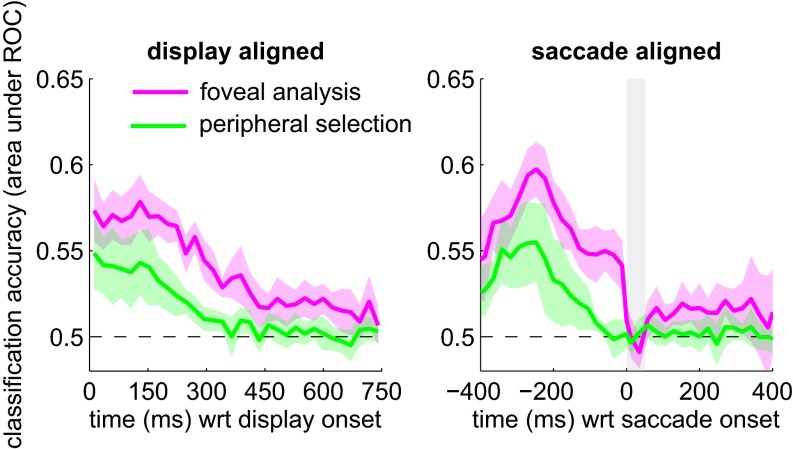

Fig. 4 shows this accuracy measure as a function of time, averaged across all eight observers. The temporal integration window now corresponds to the region where the classification accuracy is greater than chance. The uptake of information for foveal analysis and peripheral selection occurred largely in parallel. In particular, foveal orientation and peripheral contrast signals were monitored right from the onset of the test sequence; classification accuracy for both foveal analysis and peripheral selection is clearly above chance at the beginning of the test sequence. Aligned on movement onset, foveal information was processed right up to the onset of the first saccade and suppressed during the saccade (indicated by the gray shaded box). The uptake of peripheral information ceased some 60–80 ms before the saccade, which is compatible with other estimates of a so-called “saccadic dead time” (6–8, 33). This dead time corresponds to the period before movement onset during which new visual information can no longer modify the upcoming movement. It is the “point of no return” in saccade programming. Our data clearly show that foveal processing continued during this dead time. A final feature is that the function for foveal analysis recovers from saccadic suppression and is raised above the baseline almost straight away after movement offset. In other words, observers continued to process some orientation information from the previously fixated location, even though this location was now in the periphery.
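One way to read off an integration window from group data is to compute, at each time point, the mean ROC area across observers with a 95% confidence interval, and then flag the time points whose lower bound exceeds chance (0.5). The sketch below assumes a t-based interval across observers; the critical value 2.3646 holds only for eight observers (7 df). This section does not state how the intervals in Fig. 4 were computed, so treat the sketch as an illustration of the idea rather than the published procedure.

```python
from math import sqrt
from statistics import mean, stdev

T_CRIT_7DF = 2.3646  # two-sided 95% critical value of Student's t with 7 df (n = 8)


def group_window(curves, chance=0.5, t_crit=T_CRIT_7DF):
    """curves: one ROC-area time course per observer (all the same length).

    Returns a list of (mean, lower, upper) per time point and the indices of
    the time points whose lower confidence bound lies above chance.
    """
    n = len(curves)
    summary, above = [], []
    for i, values in enumerate(zip(*curves)):
        m = mean(values)
        half_width = t_crit * stdev(values) / sqrt(n)
        summary.append((m, m - half_width, m + half_width))
        if m - half_width > chance:
            above.append(i)
    return summary, above
```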

Fig. 4.

Noise classification accuracy for foveal identification and peripheral selection, averaged across eight observers. In the saccade-aligned panel (Right), the average movement duration is shown by the vertical shaded box. The shaded region around the functions corresponds to the 95% confidence interval across the subject pool. Note that in the saccade-aligned plot, fewer trials contribute to the extreme time points (i.e., long before movement onset and long after movement offset). To align the noise samples on movement onset, we assigned the sample during which the movement started to time 0. This relatively crude alignment means that the “true” onset of the saccade relative to the start of the noise sample is accurate to within the duration of an individual noise frame (i.e., ∼24 ms). Given the large amount of data collected for each observer, the average starting point of the movement will lie near the midpoint of the noise frame.
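The saccade-aligned analysis re-indexes each trial's noise trace so that the sample containing movement onset becomes lag 0. It then averages across trials lag by lag, keeping count of how many trials contribute (fewer do at the extreme lags). A minimal sketch, assuming that saccade onset is measured in milliseconds from the start of the test sequence and that one noise sample lasts two frames at 85 Hz; the function names are hypothetical.

```python
from collections import defaultdict

SAMPLE_MS = 2 * 1000.0 / 85.0  # two video frames at 85 Hz (~23.5 ms)


def saccade_aligned(trace, onset_ms, pre=10, post=10):
    """Map lag -> noise value, with lag 0 = the sample during which the saccade started.

    Lags that fall outside the recorded trace are simply omitted.
    """
    onset_idx = int(onset_ms // SAMPLE_MS)
    aligned = {}
    for lag in range(-pre, post + 1):
        j = onset_idx + lag
        if 0 <= j < len(trace):
            aligned[lag] = trace[j]
    return aligned


def average_aligned(aligned_trials):
    """Average saccade-aligned traces lag by lag; returns {lag: (mean, n_trials)}."""
    sums, counts = defaultdict(float), defaultdict(int)
    for aligned in aligned_trials:
        for lag, value in aligned.items():
            sums[lag] += value
            counts[lag] += 1
    return {lag: (sums[lag] / counts[lag], counts[lag]) for lag in sorted(sums)}
```

Because onset is floored to the containing sample, the true onset can lie anywhere within that ∼24 ms sample, which is the alignment uncertainty noted in the caption.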

On the whole, classification accuracy is lower for peripheral selection than for foveal analysis. This finding implies that the peripheral contrast noise was less predictive of the upcoming saccadic decision, compared with the predictive value of the foveal orientation noise for tilt discrimination. Observers may simply be less sensitive to the peripheral contrast information (34). Reduced contrast sensitivity in the periphery will diminish the influence of the external noise on behavior and thereby reduce its predictive value. Alternatively, it is possible that different amounts of internal noise are added by the sensory apparatus to the foveal orientation and peripheral contrast signals (35, 36). Internal noise dilutes the influence of external noise and thereby reduces its predictive value. We cannot say whether any such differences in internal noise depend on the location in the visual field (fovea vs. periphery) or on the specific feature dimensions involved (contrast vs. orientation).
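The dilution argument can be illustrated with a toy observer. In this model, the decision variable is the nominal signal plus one external noise sample plus internal noise, and we ask how well the external sample alone separates correct from error trials. The observer model, its parameters, and the fixed random seed are all assumptions made for illustration. The ROC area is computed nonparametrically by counting, for each correct-trial value, how many error-trial values lie below it.

```python
import random
from bisect import bisect_left, bisect_right


def rank_auc(pos, neg):
    """Nonparametric ROC area, P(pos > neg) + 0.5 * P(tie), via a sorted list."""
    neg_sorted = sorted(neg)
    score = 0.0
    for p in pos:
        below = bisect_left(neg_sorted, p)
        ties = bisect_right(neg_sorted, p) - below
        score += below + 0.5 * ties
    return score / (len(pos) * len(neg))


def simulate_auc(internal_sd, n_trials=4000, signal=1.0, external_sd=6.0, seed=0):
    """Toy observer: respond correctly when signal + external + internal > 0."""
    rng = random.Random(seed)
    correct, error = [], []
    for _ in range(n_trials):
        external = rng.gauss(0.0, external_sd)  # signed as in the foveal analysis
        internal = rng.gauss(0.0, internal_sd)
        (correct if signal + external + internal > 0 else error).append(external)
    return rank_auc(correct, error)
```

With little internal noise, the external sample almost fully determines the response and its ROC area approaches 1. With large internal noise, the same external noise becomes far less predictive, even though the observer is exposed to exactly the same stimulus fluctuations.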

Interaction Between Foveal Analysis and Peripheral Selection.

Having demonstrated the temporal uptake of information for foveal analysis and peripheral selection, we are now in a position to address the interaction between the two tasks. Foveal tilt discrimination difficulty was varied at two levels, determined by the mean offset from vertical. We refer to these conditions as high load (1°) and low load (2°). As illustrated in Fig. 1 C and D, we may expect this variation in load to influence the uptake of information from the fovea and the periphery. Such changes in the uptake of information may be identified using our noise classification approach. In particular, we might expect changes in the width and/or amplitude of the temporal integration windows for foveal analysis and peripheral selection, with variations in foveal load.

First we consider the behavioral results under the two levels of foveal processing difficulty. Table 1 summarizes the relevant behavioral performance measures. When foveal load was low, foveal tilt discrimination accuracy improved from 0.70 to 0.85 (∼20% relative to the high-load condition). There was a very small effect on the duration of the first fixation, with observers moving away from the foveal target slightly earlier when the processing load was low (statistically, the effect is negligible) (37). The accuracy of peripheral selection was completely unaffected by the difficulty of foveal processing. In summary, making foveal processing easier clearly benefited tilt identification but did not affect eye movement behavior: both fixation duration and target selection accuracy were essentially constant across the variation in foveal load.

Table 1.

The effect of foveal processing load on behavior (averaged across eight observers)

| Performance measure | High load (1°) | Low load (2°) | 95% CI lower* | 95% CI upper* | BF10† |
|---|---|---|---|---|---|
| Foveal target tilt (proportion correct) | 0.70 | 0.85 | −0.16 | −0.14 | 2.24 × 10⁵ |
| First fixation duration (ms) | 421 | 414 | −1 | 14 | 1.49 |
| Saccade target accuracy (proportion correct) | 0.63 | 0.62 | −3 × 10⁻³ | 0.02 | 1.07 |

*95% confidence intervals on the difference between the two load conditions (high load minus low load).

†Bayes factor in favor of the “alternative” hypothesis that there is a difference between the two load conditions, with a JZS prior on effect size (scale factor r = 0.5).

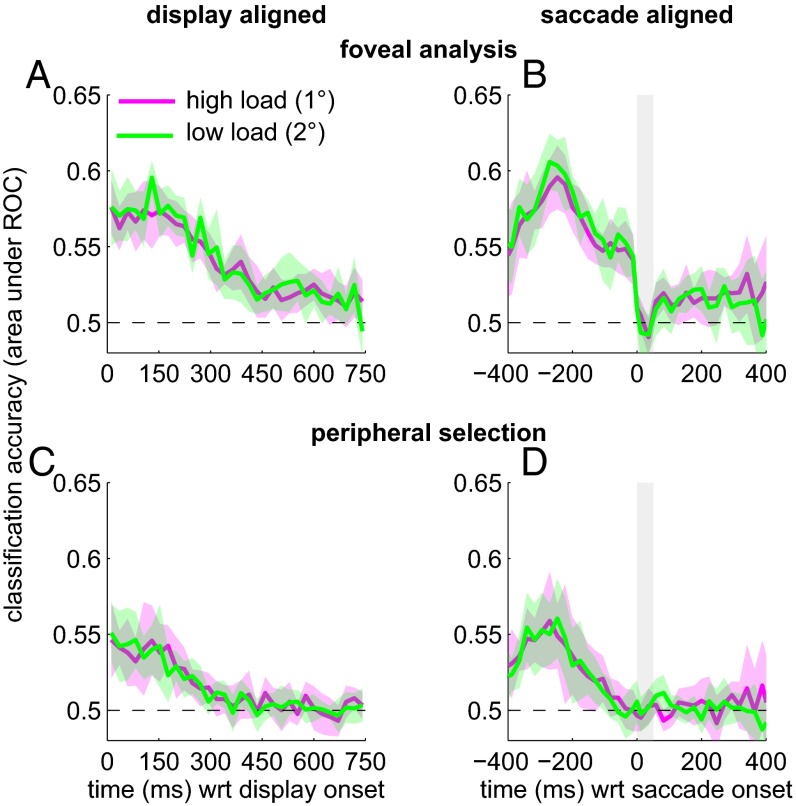

Next, we examined the underlying temporal integration windows by performing the noise classification analysis separately for the two levels of processing load. Fig. 5 shows the classification accuracy for each level of foveal load. These curves largely overlap (and fall within each other’s confidence intervals), and there is no evidence for systematic and reliable differences. These data suggest that observers did not adjust their uptake of information in response to variations in the quality of foveal evidence. The lighter foveal-processing load did not affect the amplitude, shape, or width of the temporal processing windows for foveal analysis and peripheral selection. Fig. S3 shows that this result held regardless of whether the foveal load was varied randomly between trials or systematically between blocks.

Fig. 5.

Noise classification accuracy for foveal identification (A and B) and peripheral selection (C and D), averaged across eight observers. Each panel contains a separate function for the two levels of foveal processing load. In the saccade-aligned plots (B and D), the saccade duration is shown by the shaded vertical box. The shaded regions around the functions show the 95% confidence intervals.

These results suggest a degree of independence between foveal analysis and peripheral selection. To test independence between these processes more thoroughly, we performed (part of) a classic dual-task analysis. That is, we examined to what extent performance on one task (foveal analysis in this case) suffered from the addition of another (peripheral selection). The logic goes as follows. If both tasks share a bottleneck or limited capacity resource, having to perform the peripheral selection task concurrently with foveal analysis may be expected to impair the latter. If the two tasks proceed independently, foveal analysis of tilt would be just as accurate with or without the peripheral selection demand. In that case, performance on the foveal analysis task in isolation should allow us to predict perceptual discrimination performance in the main, comparative task.

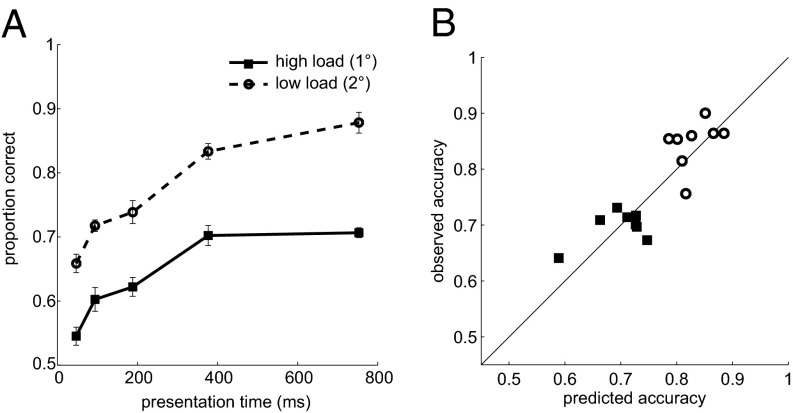

One issue to consider in this regard is time. We have shown that the uptake of information from the fovea occurred throughout the entire fixation duration. Given the dynamic nature of the external noise, the accuracy of foveal tilt discrimination will depend on the duration of the fixation/integration epoch. For any one individual observer, we therefore need to be able to predict the accuracy of foveal discrimination at a time scale that is relevant to that observer. For this reason we measured foveal orientation discrimination accuracy as a function of time in a separate experiment (Materials and Methods, Single Pattern Tilt Judgments).

Fig. 6A shows accuracy for the two levels of foveal processing difficulty, averaged across observers. Performance clearly improves with presentation time, up to a plateau. Overall accuracy was higher for the lower foveal load, and the two curves appear to be separated by an approximately constant offset. We constructed these functions for each individual observer. We then plugged the observed mean first fixation duration from the main experiment into the function corresponding to a particular observer and foveal load. We simply found the predicted probability correct for a given fixation duration through linear interpolation between two data points. Fig. 6B shows the correspondence between predicted tilt accuracy based on single-task performance and tilt discrimination in the main task with a concurrent peripheral selection demand.
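The prediction step amounts to reading the single-task accuracy function at the observer's mean first-fixation duration by linear interpolation between the two neighboring measured durations. A minimal sketch; the viewing durations and accuracies in the example are made-up placeholders, and clamping outside the measured range is our assumption.

```python
from bisect import bisect_left


def predict_accuracy(durations_ms, accuracies, fixation_ms):
    """Linearly interpolate single-task accuracy at a given fixation duration.

    durations_ms must be sorted ascending; values outside the measured range
    are clamped to the nearest endpoint (an assumption, not from the source).
    """
    if fixation_ms <= durations_ms[0]:
        return accuracies[0]
    if fixation_ms >= durations_ms[-1]:
        return accuracies[-1]
    i = bisect_left(durations_ms, fixation_ms)
    x0, x1 = durations_ms[i - 1], durations_ms[i]
    y0, y1 = accuracies[i - 1], accuracies[i]
    return y0 + (y1 - y0) * (fixation_ms - x0) / (x1 - x0)
```

For instance, `predict_accuracy([200, 400, 600], [0.60, 0.70, 0.80], 421)` reads a hypothetical observer's curve at a 421 ms mean fixation.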

Fig. 6.

Comparison of foveal tilt discrimination under single- and dual-task conditions. (A) Single-task performance. Observers viewed a single pattern at fixation, which fluctuated in orientation and contrast in exactly the same way as the foveal target in the main experiment. After a variable interval, the pattern was extinguished, and the observer generated a vertical, upward saccade to a Gaussian noise patch. Accuracy is averaged across observers; the error bars are within-subject SEMs. (B) Dual-task tilt performance (i.e., with a concurrent peripheral selection demand) as a function of single-task performance. Single-task accuracy was found by plugging the mean first fixation duration into the functions that relate tilt discrimination accuracy to viewing time (as in A).

The addition of a peripheral selection demand, if relying on a common bottleneck or limited capacity resource, should have lowered tilt discrimination in the main task. As a result, we would have expected the data points to lie below the diagonal; clearly, that is not the pattern we found. Instead, the data points scatter around the identity line, with no obvious systematic offset. Averaged across the two levels of foveal difficulty (to ensure independence between observation pairs), the correlation is r(8) = 0.7, P = 0.03 (one-tailed). A Bayesian t test on the difference between the averaged observed and predicted scores (38) resulted in a Bayes factor of 0.54 (effectively no evidence against the null) (37). Taken together, then, the behavioral performance data, temporal integration windows, and the dual-task analysis all strongly support the conclusion that peripheral selection was performed at no cost to the analysis of the currently fixated item.
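For readers who want to reproduce this kind of Bayes factor, the sketch below computes BF10 for a one-sample (or paired-difference) t test with a JZS prior. This prior is a Cauchy prior with scale r on the standardized effect size, written as a normal prior whose variance g follows an inverse-gamma(1/2, r²/2) distribution. The integral over g is computed numerically on a log grid with the trapezoidal rule. The default r = 0.5 follows the Table 1 footnote. Whether the test reported here used the same scale, and the grid settings, are our assumptions, and this is not the software used in the study.

```python
import math


def jzs_bf10(t, n, r=0.5, log_g_min=-20.0, log_g_max=20.0, steps=4000):
    """JZS Bayes factor (H1 over H0) for a one-sample or paired t statistic.

    t: observed t value; n: number of observations (or pairs); r: Cauchy scale.
    """
    nu = n - 1
    # Likelihood of t under H0 (delta = 0), up to a constant shared with H1.
    null_like = (1.0 + t * t / nu) ** (-(nu + 1) / 2.0)
    h = (log_g_max - log_g_min) / steps
    total = 0.0
    for k in range(steps + 1):
        g = math.exp(log_g_min + k * h)
        # Inverse-gamma(1/2, r^2/2) prior density on g.
        prior = r / math.sqrt(2.0 * math.pi) * g ** -1.5 * math.exp(-r * r / (2.0 * g))
        # Likelihood of t given g, with delta ~ Normal(0, g) integrated out.
        alt_like = (1.0 + n * g) ** -0.5 * (
            1.0 + t * t / ((1.0 + n * g) * nu)) ** (-(nu + 1) / 2.0)
        weight = 0.5 if k in (0, steps) else 1.0  # trapezoidal end points
        total += weight * alt_like * prior * g    # dg = g d(log g)
    return total * h / null_like
```

The t statistic behind the reported value of 0.54 is not given in this section, so no worked value is shown. As a sanity check, BF10 is always below 1 at t = 0 and grows with |t|.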

Discussion

The visual environment is explored by discretely sampling it through active gaze shifts. During a period of stable fixation, the information at the current point of gaze is analyzed in fine detail using foveal vision. At the same time, decisions about where to fixate next need to be made. We have developed a dual-task noise classification methodology that enabled us to identify what information was processed, from which spatial location, and at what point in time during an individual fixation. Using this methodology, we found that foveal analysis and peripheral selection largely proceed in parallel and independently of each other. Foveal analysis spans essentially the entire fixation duration and continues all of the way up to movement onset. The extraction of information from the periphery for saccade target selection stops some time earlier. During this dead time, information continues to be processed from the fovea. Variation in the difficulty of foveal processing did not affect the uptake of information from the fovea or the periphery. In the following sections, we examine these claims about parallelism and independence in more detail.

Foveal Analysis and Peripheral Selection Occur in Parallel.

One interpretation of our results is that, at least for some period, attention is split between multiple locations: attention is needed to extract orientation information from the central pattern and contrast information from the peripheral patterns (10). The noise classification analysis suggests that the extraction of this information proceeds in parallel. However, it could be argued that our methodology cannot distinguish between parallel processing and rapid, random serial shifting of attention (9, 39, 40). The temporal integration windows are estimated over many trials. On any given trial, attention could rapidly shift between the four patterns in the display. Given some variability in the order and speed with which individual items are attended, each location is likely to have been sampled at any particular point in time on at least some trials. Taken across many trials, it would then appear that information was extracted from the fovea and periphery at the same point in time.
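The serial-shifting argument can be illustrated with a toy simulation. It assumes a single spotlight that, after each noise sample, jumps with a hypothetical probability `p_shift` to one of the other three items. On every simulated trial only one item is attended at a time. Averaged across trials, however, every item is attended at every sample on roughly a quarter of the trials, which would look like parallel uptake in trial-averaged integration windows. The function and parameter names are illustrative, not taken from the study.

```python
import random

N_ITEMS = 4      # foveal target plus three peripheral patterns
N_SAMPLES = 32   # noise samples in the test period (M = 32)


def simulate_serial_attention(n_trials, p_shift=0.5, seed=0):
    """Fraction of trials on which a single, serially shifting spotlight was on
    each item at each noise sample (an N_ITEMS x N_SAMPLES nested list)."""
    rng = random.Random(seed)
    counts = [[0] * N_SAMPLES for _ in range(N_ITEMS)]
    for _ in range(n_trials):
        item = rng.randrange(N_ITEMS)          # random starting item
        for j in range(N_SAMPLES):
            counts[item][j] += 1               # exactly one item per sample
            if rng.random() < p_shift:         # jump to one of the other items
                other = rng.randrange(N_ITEMS - 1)
                item = other if other < item else other + 1
    return [[c / n_trials for c in row] for row in counts]
```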

The strongest evidence against rapid serial shifting comes from our dual-task analysis. In the single-pattern tilt judgments there were no peripheral patterns to shift attention to; attention would therefore, presumably, have remained on the foveal target at all times. In the dual-task condition, however, attention would have moved between the foveal target and all three peripheral items. For the same fixation duration, the proportion of time for which the foveal target was attended should then be drastically reduced in the dual-task condition, and tilt discrimination should have been superior in the single-task condition. On the contrary, the analysis in Fig. 6 shows that foveal target tilt discrimination was essentially the same in the single- and dual-task conditions. Based on these considerations, the most parsimonious explanation of the temporal integration windows identified in the present study is that foveal analysis and peripheral selection started together and proceeded in parallel.
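The size of the expected cost can be made concrete under one simplifying assumption: an ideal observer integrating independent noise samples, whose sensitivity d' grows with the square root of the number of samples it attends. If the foveal target received only a fraction of the fixation, d' would shrink by the square root of that fraction. The sketch converts d' to proportion correct for an unbiased binary (clockwise vs. anticlockwise) judgment, PC = Φ(d'/2). The single-task d' of 2.0 in the example is hypothetical.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def predicted_accuracy(dprime_single: float, attended_fraction: float) -> float:
    """Proportion correct when the foveal target is attended for only
    `attended_fraction` of the time, assuming d' scales with the square root
    of the number of independent samples integrated."""
    dprime = dprime_single * math.sqrt(attended_fraction)
    return normal_cdf(dprime / 2.0)


print(round(predicted_accuracy(2.0, 1.0), 3))    # single task: 0.841
print(round(predicted_accuracy(2.0, 0.25), 3))   # equal time-sharing over 4 items: 0.691
```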

However, evidence against a rapidly shifting attentional focus does not necessarily provide evidence that attention was divided. Attention is typically thought of as a unitary mechanism: all visual features that fall within the spotlight are enhanced. Indeed, one of the proposed primary functions of attention is to bind different features to the same object in the focus of attention (40). Some aspects of our data (SI Text, Peripheral Processing Is Nonunitary and Fig. S4) argue directly against such a unitary mechanism. Limited processing of tilt at the future fixation position occurs once peripheral information about contrast has been processed and the saccade target has been (or is being) selected. If attention had already “visited” peripheral locations for the purpose of deciding which pattern had the higher contrast, why would information about orientation processed along the way not contribute to subsequent judgments of peripheral target tilt?

Indeed, we see no need to invoke an attentional spotlight to account for the highly selective uptake of foveal and peripheral information. To perform the task, observers may adaptively choose which upstream sensory channels to monitor. For instance, for foveal tilt discrimination, observers might monitor central visual mechanisms with off-vertical orientation preferences. Tilt judgments may involve accumulation of a decision variable that tracks the difference between the responses of neurons with clockwise and anticlockwise orientation preferences (41, 42). The sign of this integrated difference variable may then determine the tilt judgment (43–45). For peripheral selection, observers could monitor peripheral mechanisms tuned to vertical orientations whose responses scale with pattern contrast. The eye movement decision may be based on integrating and comparing the responses of mechanisms that represent different locations in the visual field, with the saccade target being the location that produced the maximum integrated response (46, 47).
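The two decision rules just described can be sketched as follows. This is a toy illustration, not a fitted model. The linear channel responses, the optional Gaussian internal noise, the convention that positive orientations are clockwise, and all names are assumptions. The `n_integrated` argument mimics a peripheral integration window that closes before movement onset.

```python
import random


def foveal_tilt_decision(orientation_samples, gain=1.0, internal_sd=0.0, seed=0):
    """Tilt response from the sign of the integrated difference between a
    clockwise- and an anticlockwise-preferring channel.

    Positive orientation samples are taken to be clockwise of vertical. The
    channels respond linearly around a common baseline, which cancels in the
    difference and is therefore omitted.
    """
    rng = random.Random(seed)
    integrated_difference = 0.0
    for theta in orientation_samples:
        r_cw = gain * theta + rng.gauss(0.0, internal_sd)
        r_acw = -gain * theta + rng.gauss(0.0, internal_sd)
        integrated_difference += r_cw - r_acw
    return "clockwise" if integrated_difference > 0 else "anticlockwise"


def select_saccade_target(contrast_samples, n_integrated):
    """Index of the location whose integrated response is largest.

    Each entry of `contrast_samples` holds the per-sample responses at one
    location; only the first `n_integrated` samples contribute.
    """
    totals = [sum(samples[:n_integrated]) for samples in contrast_samples]
    return max(range(len(totals)), key=totals.__getitem__)
```

Because the two rules read out different channels, neither needs to wait for the other. In this sketch they share no variable at all.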

Foveal Analysis and Peripheral Selection Occur Independently.

The absence of a dual-task cost in conditions with variable foveal load is striking, given previous demonstrations of tunnel vision and altered peripheral processing with changes in foveal load (12–14, 16). These previous findings are consistent with a reduction in peripheral gain under conditions of high foveal load. Why did we see no evidence at all for such a gain change?

One possible reason is that the task-relevant visual features were rather basic and, perhaps more importantly, different for foveal analysis and peripheral selection. Concurrent, unspeeded visual discrimination tasks in the fovea and periphery are performed without interference when the two tasks involve different feature dimensions (e.g., color and luminance) (48), or when the peripheral task involves discrimination between well-learned, biologically relevant categories (e.g., animal vs. nonanimal) (49). Dual-task costs are observed when the discriminations in the fovea and periphery involve the same dimension or less well-practiced feature combinations (e.g., “T” vs. “+” discrimination) (49). It is possible, then, that peripheral information can be analyzed at no cost to foveal processing when sufficiently specialized detectors are involved, so that little or no binding across feature channels is needed.

The limitations of peripheral visual processing, in conjunction with the clutter of natural visual scenes, are such that eye guidance by complex combinations of features may not always be possible (50–53). Indeed, the primary reason to fixate a region in the visual field is to extract more complex and detailed information from that location with the high-resolution fovea. For example, in reading, low spatial frequency information about word boundaries is used to select the target for the next saccade (54). However, low spatial frequency information about the coarse outline of words is effectively useless for identifying those words; word identification relies on high spatial frequency shape information and on combining elementary features (55). The analysis of this information requires the fovea.

Another possible reason for the independence observed in the present study is that foveal analysis and peripheral selection must be one of the most extensively practiced dual tasks that humans (and other foveated animals) are confronted with. We shift gaze about three times every second during our waking hours (56), although not all of these shifts are visually guided. Maintaining vigilance and awareness of the peripheral visual field is clearly important for our survival [e.g., navigation and locomotion (57); detecting predators or other kinds of potential hazards (58)]. Combining foveal object identification with some basic peripheral feature processing to enable rapid orienting responses may just be a particular dual task that the brain has adapted—over the course of evolution or within an individual’s lifetime—to perform without interference (59).

Conclusions

Much of the neural and behavioral work on attention and eye movement control has little to say about the foveal component of active gaze behavior. Most studies and models are concerned with the system’s response to peripheral visual information, typically with minimal foveal-processing demands. The majority of models of visual search and scene perception focus exclusively on the selection of fixation locations (60–63; see ref. 50 for a review). Some models of search are based on template matching and assume that a target template is applied across the visual field independently and in parallel (61, 63), but to our knowledge this assumption has not yet been tested experimentally. In terms of the underlying neurophysiology, many studies have charted the competition between neurons representing potential peripheral target locations, again in the absence of any foveal processing demand (64). The lack of consideration given to foveal processing demands is striking, given that the primary reason to shift gaze to a location is to extract the information there at greater resolution. Any model of eye movement control needs to solve the same problem, regardless of the domain of application: How are foveal analysis and peripheral selection coordinated? Our study provides a default starting position on the issue: foveal analysis and peripheral selection occur in parallel and independently.

Materials and Methods

Observers.

One group of four observers experienced the two levels of foveal processing load randomly intermixed. Another group of four observers experienced the two load conditions in separate blocks. We found that this variation in presentation mode did not affect behavior (Fig. S3), which is why we present the data from all eight observers together. Five observers were female; ages ranged from 21 to 33 y. All observers had normal or corrected-to-normal vision and were paid £7.50/h. The study was approved by the local Faculty Ethics Committee and complied with the principles of the Declaration of Helsinki. Observers provided written informed consent prior to participation and again at the end of the study, after having been debriefed. Each observer was given one initial session on the comparative task as practice. The study then started with one session in which time vs. accuracy curves were measured for the two levels of foveal load (without a peripheral selection demand), followed by 15 sessions of the comparative task and one final time vs. accuracy measurement. In total, each observer completed 18 sessions of ∼1 h each, on different days. In the comparative tilt judgment task, observers performed four blocks of 96 trials per session, for a total of 5,760 trials across the 15 sessions.

Stimuli and Equipment.

Stimuli were generated using custom-written software in MATLAB (MathWorks, Inc.) with Psychtoolbox 3.08 (65–67) and presented on a ViewSonic G225f 21-inch CRT monitor running at 85 Hz with a spatial resolution of 1,024 × 768 pixels. To create fine steps in luminance contrast, the graphics card output was enhanced to 14 bits using a Bits++ digital video processor (Cambridge Research Systems, Ltd.). The range between the minimum and maximum luminance (determined by the maximum-contrast pattern, i.e., the target contrast plus twice the SD of the contrast noise distribution) was sampled in 255 steps using a linearized look-up table. One additional gray level was used for the fixation point and calibration targets. The screen was set to midgray (47 cd⋅m⁻²).
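The look-up-table construction can be sketched as follows. The mean luminance, target contrast, noise SD, 255 levels, and 14-bit depth come from the text, and 255 steps are read here as 255 table entries. The display model is entirely an assumption: a simple power law with a hypothetical gamma of 2.2 and a hypothetical peak luminance of 100 cd⋅m⁻², with no black-level offset. A real linearization would invert the measured luminance response of the monitor instead.

```python
MEAN_LUM = 47.0                   # cd/m^2, midgray background (from the text)
MAX_CONTRAST = 0.475 + 2 * 0.15   # target contrast + 2 SD of contrast noise
N_LEVELS = 255                    # stimulus entries in the look-up table
DAC_MAX = 2 ** 14 - 1             # 14-bit output of the video processor


def luminance_levels(mean_lum=MEAN_LUM, max_contrast=MAX_CONTRAST, n=N_LEVELS):
    """Luminances evenly spaced between the darkest and brightest values of the
    maximum-contrast pattern (Michelson contrast around the mean luminance)."""
    lo = mean_lum * (1.0 - max_contrast)
    hi = mean_lum * (1.0 + max_contrast)
    step = (hi - lo) / (n - 1)
    return [lo + k * step for k in range(n)]


def to_dac(lum, screen_max_lum=100.0, gamma=2.2):
    """Invert a hypothetical power-law display model L = L_max * v**gamma."""
    v = (lum / screen_max_lum) ** (1.0 / gamma)
    return round(v * DAC_MAX)


lut = [to_dac(L) for L in luminance_levels()]
```

With these values the table spans 10.575 to 83.425 cd⋅m⁻² around the 47 cd⋅m⁻² background.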

Eye movements were recorded at 1,000 Hz using the EyeLink 2k system (SR Research Ltd.). Saccades were identified offline using velocity and acceleration criteria of 30°⋅s⁻¹ and 8,000°⋅s⁻², respectively. The eye tracker was calibrated using a grid of nine points at the start of each block of trials. The calibration target was a plus sign (+), with each arm measuring 0.6° × 0.1°. Each trial started with presentation of a central fixation point (identical to the calibration target). The stimuli were presented automatically as soon as the observer’s fixation remained within 1.5° of the center for 500 ms.
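The following is a simplified sketch of threshold-based saccade detection on 1,000-Hz gaze data, using the two criteria above. Several details are assumptions and not necessarily the rule applied in this study or by the EyeLink parser:

* Velocity and acceleration are taken from plain backward differences.
* Onset requires both thresholds to be exceeded.
* Offset is the first later sample at which speed drops below the velocity threshold.

Raw gaze data would normally be smoothed before differentiating.

```python
import math


def detect_first_saccade(x, y, fs=1000.0, vel_thr=30.0, acc_thr=8000.0):
    """Return (onset, offset) sample indices of the first saccade, or None.

    x, y: gaze position in degrees sampled at fs Hz. Onset is the first sample
    at which speed exceeds vel_thr (deg/s) and acceleration exceeds acc_thr
    (deg/s^2); offset is the first later sample with speed below vel_thr.
    """
    speed = [0.0] + [math.hypot(x[i] - x[i - 1], y[i] - y[i - 1]) * fs
                     for i in range(1, len(x))]
    accel = [0.0] + [(speed[i] - speed[i - 1]) * fs for i in range(1, len(speed))]
    onset = next((i for i in range(1, len(speed))
                  if speed[i] > vel_thr and accel[i] > acc_thr), None)
    if onset is None:
        return None
    offset = onset
    while offset < len(speed) and speed[offset] >= vel_thr:
        offset += 1
    return onset, offset
```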

Comparative Tilt Judgment Task.

Displays consisted of four Gabor patterns with a spatial frequency of 2 cycles per degree. The circular SD of the Gaussian envelope was 0.5°. The patterns were in sine phase. One pattern replaced the initial fixation point in the center of the screen. The three remaining patterns fell on an imaginary circle around fixation, at an eccentricity of 8°. Adjacent peripheral patterns were always separated by 90° of polar angle, but the display could appear in one of four configurations: top (Fig. 2), left, bottom, and right. This variation in configuration was included to discourage observers from developing stimulus-independent saccade biases (e.g., always making a saccade straight up). All four configurations appeared equally often within a block of 96 trials.
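A sine-phase Gabor with the stated parameters can be rendered as below. The parameters taken from the text are 2 cycles per degree, an envelope SD of 0.5°, and a 47 cd⋅m⁻² background. The patch size (3°), the pixels per degree (40), the function name, and the sign convention are assumptions. The convention is that positive `theta_deg` rotates the bars clockwise, with the y axis pointing up. The output is in luminance units and would still need a look-up table to become display values.

```python
import numpy as np


def gabor(theta_deg, contrast, mean_lum=47.0, sf_cpd=2.0, sigma_deg=0.5,
          size_deg=3.0, ppd=40.0):
    """Luminance image of a sine-phase Gabor; theta_deg = 0 gives vertical bars."""
    n = int(round(size_deg * ppd)) | 1               # odd size: a pixel at center
    coords = (np.arange(n) - n // 2) / ppd           # pixel positions in degrees
    xx, yy = np.meshgrid(coords, -coords)            # rows run top to bottom, y up
    th = np.deg2rad(theta_deg)
    x_rot = xx * np.cos(th) - yy * np.sin(th)        # positive theta: clockwise
    envelope = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma_deg ** 2))
    carrier = np.sin(2.0 * np.pi * sf_cpd * x_rot)   # sine phase: 0 at center
    return mean_lum * (1.0 + contrast * envelope * carrier)
```

In sine phase the center pixel sits at the mean luminance, and the pattern is point-symmetric in sign. The luminance-weighted mean therefore stays at the background level regardless of contrast.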

During the preview, all patterns were stationary in both contrast and (vertical) orientation. The Michelson contrast of the underlying sinusoid was set to 0.4. The preview duration was approximately distributed according to a shifted and truncated exponential (minimum: 235 ms; mean: 490 ms; maximum: 1 s). The preview was followed by a ∼750-ms test period. The mean contrast of the foveal target remained at 0.4. The mean peripheral target contrast was 0.475, and that of the peripheral nontargets was 0.325. These contrast values were perturbed with Gaussian noise with an SD of 0.15. On half the trials the foveal target was tilted clockwise; on the other half it was tilted anticlockwise. On half the trials the peripheral target was tilted in the same direction as the foveal target, and on the other half in the opposite direction. The tilt directions of the two peripheral nontarget patterns were chosen randomly and independently from trial to trial. The orientation of all four patterns was perturbed with Gaussian noise with an SD of 6°. The noise perturbations in both contrast and orientation were applied independently to all four patterns every two video frames, so a single noise sample or frame lasted ∼24 ms (two frames at 85 Hz ≈ 23.5 ms).
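The trial structure can be sketched as follows. The mean contrasts, noise SDs, two-frame update, and 32-sample test period come from the text. Several choices are assumptions:

* A single hypothetical tilt offset, `tilt_offset_deg`, is applied to all patterns.
* Tilt directions are drawn at random rather than counterbalanced.
* The preview scale parameter, 355 ms, was chosen so the truncated mean comes out near 490 ms.

All names are hypothetical.

```python
import numpy as np

REFRESH_HZ = 85.0
FRAMES_PER_SAMPLE = 2
M = 32                                                # noise samples per test period
MEAN_CONTRAST = np.array([0.4, 0.475, 0.325, 0.325])  # fovea, target, 2 nontargets
CONTRAST_SD = 0.15
ORIENT_SD_DEG = 6.0
SAMPLE_MS = FRAMES_PER_SAMPLE * 1000.0 / REFRESH_HZ   # ~23.5 ms per noise sample


def make_trial(rng, tilt_offset_deg):
    """Signed mean tilts (4,) and contrast/orientation noise sequences (4, M).

    Row order: foveal target, peripheral target, two peripheral nontargets.
    Positive tilts are clockwise.
    """
    fovea = rng.choice([-1, 1])
    target = fovea if rng.random() < 0.5 else -fovea    # same or opposite tilt
    nontargets = rng.choice([-1, 1], size=2)             # independent, random
    mean_tilt = np.array([fovea, target, *nontargets], dtype=float) * tilt_offset_deg
    # Independent noise for every pattern and every two-frame sample.
    contrast = MEAN_CONTRAST[:, None] + rng.normal(0.0, CONTRAST_SD, size=(4, M))
    orientation = mean_tilt[:, None] + rng.normal(0.0, ORIENT_SD_DEG, size=(4, M))
    return mean_tilt, contrast, orientation


def sample_preview_durations(rng, n, minimum=235.0, maximum=1000.0, scale=355.0):
    """Shifted exponential (ms) truncated at `maximum`, by rejection sampling."""
    out = np.empty(0)
    while out.size < n:
        d = minimum + rng.exponential(scale, size=n)
        out = np.concatenate([out, d[d <= maximum]])
    return out[:n]
```

Thirty-two samples of ∼23.5 ms give ∼753 ms, consistent with the ∼750-ms test period.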


Observers were simply told about the perceptual judgments they had to make. We did not give any specific eye movement instructions. The constraints of the task were such that observers had to move their eyes to the peripheral target to identify its orientation and compare it to the initially viewed foveal target. The duration of the test period was sufficiently short that observers had to be selective in where to direct their first eye movement; there was not enough time to inspect each pattern in the display with foveal vision and estimate its tilt direction accurately (Fig. 6A).

Single Pattern Tilt Judgments.

Observers performed two separate sessions in which they judged the tilt of a single pattern. In this task, observers were presented with a vertically oriented, stationary Gabor at fixation with a luminance contrast of 0.4; the peripheral patterns were not shown. After the random foreperiod, the fixation point was removed and the pattern was tilted away from vertical by 1° or 2° (randomly intermixed or blocked in the two different subject groups). As in the main experiment, the patterns were perturbed with temporal orientation and contrast noise. After a variable delay (4, 8, 16, 32, or 64 frames, corresponding to ∼47, 94, 188, 376, and 753 ms), a peripheral pattern appeared straight up from the fixated pattern (i.e., in the 90° location in Fig. 2). This peripheral pattern was a Gaussian-windowed, high-contrast patch of noise, created by adding zero-mean Gaussian luminance noise to the background luminance (as a proportion of the maximum screen luminance, the mean luminance of the noise patch was 0.5 and the SD was 0.16). The circular SD of the window function was the same as that used for the Gabor patches. At the same time, the fixated pattern disappeared, signaling the observer to make a vertical upward saccade to fixate the noise patch.
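The delay durations follow directly from the 85-Hz refresh rate. A short check, with a hypothetical helper name:

```python
REFRESH_HZ = 85.0  # monitor refresh rate from the equipment description


def frames_to_ms(n_frames, refresh_hz=REFRESH_HZ):
    """Duration in milliseconds of n_frames video frames."""
    return n_frames * 1000.0 / refresh_hz


print([round(frames_to_ms(f)) for f in [4, 8, 16, 32, 64]])  # [47, 94, 188, 376, 753]
```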

The requirement to make a saccade was included to keep the motor demands as close as possible to the main comparative tilt judgment task, without invoking a peripheral selection demand. The noise patch also served as a postsaccadic foveal mask that was broadband in orientation and spatial frequency. Upon successful completion of the saccade, the observer indicated the orientation of the fixated pattern with an unspeeded manual button press. Note that the saccade target always appeared in the same location, and that the “go” signal was the offset of the fixation stimulus. As such, this task required no or minimal peripheral processing. In each session, observers performed five blocks of 100 trials (10 repetitions of each presentation time for the two levels of foveal processing load).

Data Analysis.

Eye movement data.

We were predominantly interested in the first saccade that brought the eyes from the foveal target to one of the three peripheral items. We only included trials in which that saccade was generated after the onset of the test sequence and started within 2° of the foveal target. Provided the saccade had a minimum amplitude of 4°, it was assigned to the nearest peripheral pattern. After filtering the data set in this way, the total number of included trials ranged between 5,299 and 5,760 across observers.
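The inclusion and assignment rules can be written as a small filter. Positions are assumed to be in degrees relative to the foveal target at (0, 0), and the onset time is assumed to be relative to test-sequence onset. The function name and argument layout are hypothetical.

```python
import math


def assign_first_saccade(onset_ms, start_xy, end_xy, peripheral_xy,
                         max_start_dev=2.0, min_amplitude=4.0):
    """Index of the peripheral pattern the first saccade is assigned to, or
    None if the trial is excluded."""
    if onset_ms <= 0:                                # must follow test onset
        return None
    if math.hypot(*start_xy) > max_start_dev:        # must start near the fovea
        return None
    if math.dist(start_xy, end_xy) < min_amplitude:  # minimum amplitude
        return None
    dists = [math.dist(end_xy, p) for p in peripheral_xy]
    return min(range(len(dists)), key=dists.__getitem__)  # nearest pattern
```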

Classification images.

Foveal analysis.

Trials were sorted according to the accuracy of the tilt judgment of the foveal target. For each trial, we subtracted the “true” mean orientation from each vector of M tilt samples (M = 32). The resulting noise values were signed so that positive values indicated samples tilted in the direction of the nominal (true) tilt, and negative values indicated samples tilted away from the nominal direction. We then averaged the noise samples across the trials in each response class. Trials from the two foveal load conditions were pooled in this analysis. A standard classification image would correspond to the difference between the correct and error traces in Fig. 3 (26). Instead, we performed an ROC analysis over time, so that the comparison takes the variability around the average noise traces into account and yields a result in meaningful units that can be compared directly across tasks (see below).
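The signing and averaging steps can be sketched in NumPy as follows, with hypothetical names. It assumes orientation samples in degrees and a nonzero signed nominal tilt per trial. `np.nanmean` ignores NaN cells, so the same function also works on movement-aligned data. Subtracting the error trace from the correct trace would give a conventional classification image.

```python
import numpy as np


def foveal_noise_traces(orientation, true_tilt, correct):
    """Average signed orientation noise for correct and error trials.

    orientation: (N, M) orientation samples (deg) of the foveal target.
    true_tilt: (N,) nominal signed tilt of each trial (deg, nonzero).
    correct: (N,) booleans, accuracy of the foveal tilt judgment.
    Returns (correct_trace, error_trace), each of length M.
    """
    orientation = np.asarray(orientation, dtype=float)
    true_tilt = np.asarray(true_tilt, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    noise = orientation - true_tilt[:, None]
    # Positive = tilted further in the direction of the nominal tilt.
    signed = noise * np.sign(true_tilt)[:, None]
    return np.nanmean(signed[correct], axis=0), np.nanmean(signed[~correct], axis=0)
```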

To align the data on movement onset, we found the noise sample during which the movement was initiated. To find this sample, we divided the fixation duration (millisecond resolution) by the duration of a single noise frame and rounded the result up to the nearest integer. The vector of M noise values was then “copied” into a larger vector of 2M − 1 elements, with time 0 (movement onset) assigned to element m = M in this expanded representation. Empty cells (long before and long after movement onset) were set to “Not-a-Number” (NaN) in MATLAB and were not included in the calculation of the average noise traces and ROCs.
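The alignment step can be sketched as follows, with hypothetical names. The sketch makes two assumptions:

* `fixation_ms` is the time from test-sequence onset to movement onset.
* One noise sample lasts two frames at 85 Hz.

Indexing is 0-based, so element m = M of the text is index M − 1 here.

```python
import math

import numpy as np

FRAME_MS = 2 * 1000.0 / 85.0   # one noise sample = two video frames at 85 Hz


def align_on_movement_onset(noise, fixation_ms, sample_ms=FRAME_MS):
    """Place M noise values in a (2M - 1)-vector with movement onset at index M - 1."""
    m = len(noise)
    onset_sample = math.ceil(fixation_ms / sample_ms)   # 1-based sample number
    if not 1 <= onset_sample <= m:
        raise ValueError("movement onset falls outside the noise sequence")
    aligned = np.full(2 * m - 1, np.nan)                # empty cells stay NaN
    start = m - onset_sample                            # slot for the first sample
    aligned[start:start + m] = noise
    return aligned
```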

Peripheral selection.

Only trials with an inaccurate first saccade directed to a nontarget were included in this analysis. For each of these trials we have three vectors of M contrast samples, corresponding to the ignored peripheral target, the chosen nontarget, and the ignored nontarget. We subtracted the relevant mean contrast from each of these three vectors before averaging across trials. The alignment on movement onset occurred in exactly the same way as in the analysis of the orientation noise.
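The following is a corresponding sketch for the peripheral contrast traces, again with hypothetical names. It assumes the three (N × M) contrast arrays have already been restricted to trials with an erroneous first saccade. It subtracts the nominal mean contrasts given in the stimulus description: 0.475 for the target and 0.325 for the nontargets.

```python
import numpy as np

TARGET_MEAN, NONTARGET_MEAN = 0.475, 0.325   # nominal mean contrasts


def peripheral_noise_traces(ignored_target, chosen_nontarget, ignored_nontarget):
    """Mean contrast-noise traces (length M) for the three peripheral items."""
    return (np.nanmean(np.asarray(ignored_target) - TARGET_MEAN, axis=0),
            np.nanmean(np.asarray(chosen_nontarget) - NONTARGET_MEAN, axis=0),
            np.nanmean(np.asarray(ignored_nontarget) - NONTARGET_MEAN, axis=0))
```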

ROC analyses.

The first steps of this analysis are identical to those in the classification image analyses described above, up to the final averaging step. For the uptake of peripheral contrast information we did not include the “ignored nontarget” noise values: as illustrated in Fig. 3, these traces hovered around zero and did not appear to contribute to selection of the next fixation point. A convenient way to represent the data at this stage is as two matrices. For instance, for display-aligned foveal analysis, we have one N1 × M matrix for error trials and one N2 × M matrix for correct trials. We refer to these matrices as X1 and X2, respectively. In the case of peripheral selection, the matrices contain the contrast noise values for the ignored target and chosen nontarget, respectively. Typical values for N1 and N2 were well into the hundreds (and frequently into the thousands).

Consider each time sample j in turn, where j = 1, …, M in the display-aligned analyses and j = 1, …, 2M − 1 in the saccade-aligned analyses. There are two vectors of noise values, $g_1 = X_1^{\cdot j}$ and $g_2 = X_2^{\cdot j}$, where the dots in the superscript indicate that we take the values from all rows in the matrix. Only the real-valued (i.e., non-NaN) samples from X are taken in the construction of g. A nonparametric ROC curve is created by evaluating the proportion of noise samples that are greater than a criterion value (28, 29, 68). The criterion value is changed from some small value (where both distributions fall to the right of the criterion) to some relatively large value (where both distributions fall to the left of the criterion). The extreme values of the criterion fix the start and endpoints of the ROC curve at (1, 1) and (0, 0).

In between these extreme values, the curve is evaluated at a further 20 criterion values, linearly spaced between the minimum and maximum values across the two distributions. For each criterion value, we evaluated the proportion of noise values in vectors g1 and g2 that were greater than the criterion. The corresponding point on the ROC curve is (p1k, p2k), for k = 1, …, 22. The area under the ROC curve was then computed by simple numerical integration. Though this nonparametric, numerical procedure is relatively brute-force, we have verified that these area estimates were stable and no longer dependent on the number of criterion values chosen. Note that in standard signal-detection theoretic terms, we treat distribution g2 (correct orientation noise samples, chosen nontarget contrast samples) as the “signal” and distribution g1 (error orientation noise samples, ignored target samples) as “noise.”
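The ROC procedure just described can be sketched in NumPy, with hypothetical function names. It uses 20 criteria, linearly spaced between the pooled minimum and maximum. It adds the fixed end points (1, 1) and (0, 0) and integrates with the trapezoid rule. g2 is treated as "signal" and g1 as "noise", and NaN cells are dropped column by column. This is an illustration of the described steps, not the authors' MATLAB code.

```python
import numpy as np


def roc_auc(g1, g2, n_criteria=20):
    """Area under the nonparametric ROC curve; g1 = 'noise', g2 = 'signal'."""
    g1 = np.asarray(g1, dtype=float)
    g2 = np.asarray(g2, dtype=float)
    g1, g2 = g1[~np.isnan(g1)], g2[~np.isnan(g2)]
    pooled = np.concatenate([g1, g2])
    criteria = np.linspace(pooled.min(), pooled.max(), n_criteria)
    p1 = np.array([np.mean(g1 > c) for c in criteria])
    p2 = np.array([np.mean(g2 > c) for c in criteria])
    # Add the fixed end points and order the points from (0, 0) to (1, 1),
    # i.e., from the highest to the lowest criterion.
    x = np.concatenate(([1.0], p1, [0.0]))[::-1]
    y = np.concatenate(([1.0], p2, [0.0]))[::-1]
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))


def auc_over_time(X1, X2, n_criteria=20):
    """ROC area for every time sample (column) of the N1 x T and N2 x T matrices."""
    X1 = np.asarray(X1, dtype=float)
    X2 = np.asarray(X2, dtype=float)
    return np.array([roc_auc(X1[:, j], X2[:, j], n_criteria)
                     for j in range(X1.shape[1])])
```

Identical distributions give an area of exactly 0.5, and complete separation in favor of g2 gives 1.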

Acknowledgments

Support for this work was provided by UK Engineering and Physical Sciences Research Council Advanced Research Fellowship EP/E054323/1 and Grant EP/I032622/1 (to C.J.H.L.) and National Science Foundation Grant 0819592 (to M.P.E.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1313553111/-/DCSupplemental.

References

- 1.Curcio CA, Sloan KR, Kalina RE, Hendrickson AE. Human photoreceptor topography. J Comp Neurol. 1990;292(4):497–523. doi: 10.1002/cne.902920402. [DOI] [PubMed] [Google Scholar]

- 2.Daniel PM, Whitteridge D. The representation of the visual field on the cerebral cortex in monkeys. J Physiol. 1961;159(2):203–221. doi: 10.1113/jphysiol.1961.sp006803. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Rovamo J, Virsu V. An estimation and application of the human cortical magnification factor. Exp Brain Res. 1979;37(3):495–510. doi: 10.1007/BF00236819. [DOI] [PubMed] [Google Scholar]

- 4.Ballard DH, Hayhoe MM, Pook PK, Rao RPN. Deictic codes for the embodiment of cognition. Behav Brain Sci. 1997;20(4):723–742, discussion 743–767. doi: 10.1017/s0140525x97001611. [DOI] [PubMed] [Google Scholar]

- 5.Botvinick M, Plaut DC. Doing without schema hierarchies: A recurrent connectionist approach to normal and impaired routine sequential action. Psychol Rev. 2004;111(2):395–429. doi: 10.1037/0033-295X.111.2.395. [DOI] [PubMed] [Google Scholar]

- 6.Becker W, Jürgens R. An analysis of the saccadic system by means of double step stimuli. Vision Res. 1979;19(9):967–983. doi: 10.1016/0042-6989(79)90222-0. [DOI] [PubMed] [Google Scholar]

- 7.Caspi A, Beutter BR, Eckstein MP. The time course of visual information accrual guiding eye movement decisions. Proc Natl Acad Sci USA. 2004;101(35):13086–13090. doi: 10.1073/pnas.0305329101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Ludwig CJH, Mildinhall JW, Gilchrist ID. A population coding account for systematic variation in saccadic dead time. J Neurophysiol. 2007;97(1):795–805. doi: 10.1152/jn.00652.2006. [DOI] [PubMed] [Google Scholar]

- 9.Eriksen CW, Yeh YY. Allocation of attention in the visual field. J Exp Psychol Hum Percept Perform. 1985;11(5):583–597. doi: 10.1037//0096-1523.11.5.583. [DOI] [PubMed] [Google Scholar]

- 10.Müller MM, Malinowski P, Gruber T, Hillyard SA. Sustained division of the attentional spotlight. Nature. 2003;424(6946):309–312. doi: 10.1038/nature01812. [DOI] [PubMed] [Google Scholar]

- 11.Jans B, Peters JC, De Weerd P. Visual spatial attention to multiple locations at once: The jury is still out. Psychol Rev. 2010;117(2):637–684. doi: 10.1037/a0019082. [DOI] [PubMed] [Google Scholar]

- 12.Mackworth NH. Visual noise causes tunnel vision. Psychon Sci. 1965;3(2):67–68. [Google Scholar]

- 13.Ikeda M, Takeuchi T. Influence of foveal load on the functional visual field. Percept Psychophys. 1975;18(4):255–260. [Google Scholar]

- 14.Henderson JM, Ferreira F. Effects of foveal processing difficulty on the perceptual span in reading: Implications for attention and eye movement control. J Exp Psychol Learn Mem Cogn. 1990;16(3):417–429. doi: 10.1037//0278-7393.16.3.417. [DOI] [PubMed] [Google Scholar]

- 15.Henderson JM, Pollatsek A, Rayner K. Effects of foveal priming and extrafoveal preview on object identification. J Exp Psychol Hum Percept Perform. 1987;13(3):449–463. doi: 10.1037//0096-1523.13.3.449. [DOI] [PubMed] [Google Scholar]

- 16.Lavie N, Hirst A, de Fockert JW, Viding E. Load theory of selective attention and cognitive control. J Exp Psychol Gen. 2004;133(3):339–354. doi: 10.1037/0096-3445.133.3.339. [DOI] [PubMed] [Google Scholar]

- 17.Hooge ITC, Erkelens CJ. Peripheral vision and oculomotor control during visual search. Vision Res. 1999;39(8):1567–1575. doi: 10.1016/s0042-6989(98)00213-2. [DOI] [PubMed] [Google Scholar]

- 18.Shen J, et al. Saccadic selectivity during visual search: The influence of central processing difficulty. In: Hyona J, Radach R, Deubel H, editors. The Mind’s Eye: Cognitive and Applied Aspects of Eye Movement Research. Amsterdam: Elsevier; 2003. pp. 65–88. [Google Scholar]

- 19.Morrison RE. Manipulation of stimulus onset delay in reading: Evidence for parallel programming of saccades. J Exp Psychol Hum Percept Perform. 1984;10(5):667–682. doi: 10.1037//0096-1523.10.5.667. [DOI] [PubMed] [Google Scholar]

- 20.Reichle ED, Pollatsek A, Fisher DL, Rayner K. Toward a model of eye movement control in reading. Psychol Rev. 1998;105(1):125–157. doi: 10.1037/0033-295x.105.1.125. [DOI] [PubMed] [Google Scholar]

- 21.Engbert R, Nuthmann A, Richter EM, Kliegl R. SWIFT: A dynamical model of saccade generation during reading. Psychol Rev. 2005;112(4):777–813. doi: 10.1037/0033-295X.112.4.777. [DOI] [PubMed] [Google Scholar]

- 22.Salvucci DD. An integrated model of eye movements and visual encoding. Cogn Syst Res. 2001;1(4):201–220. [Google Scholar]

- 23.Reichle ED, Pollatsek A, Rayner K. Using E-Z Reader to simulate eye movements in nonreading tasks: A unified framework for understanding the eye-mind link. Psychol Rev. 2012;119(1):155–185. doi: 10.1037/a0026473. [DOI] [PubMed] [Google Scholar]

- 24.Ahumada AJ., Jr Classification image weights and internal noise level estimation. J Vis. 2002;2(1):121–131. doi: 10.1167/2.1.8. [DOI] [PubMed] [Google Scholar]

- 25.Victor JD. Analyzing receptive fields, classification images and functional images: Challenges with opportunities for synergy. Nat Neurosci. 2005;8(12):1651–1656. doi: 10.1038/nn1607. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Murray RF. Classification images: A review. J Vis. 2011;11(5):2. doi: 10.1167/11.5.2. [DOI] [PubMed] [Google Scholar]

- 27.Ludwig CJH, Gilchrist ID, McSorley E, Baddeley RJ. The temporal impulse response underlying saccadic decisions. J Neurosci. 2005;25(43):9907–9912. doi: 10.1523/JNEUROSCI.2197-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Green DM, Swets JA. Signal Detection Theory and Psychophysics. New York: Wiley; 1966. [Google Scholar]

- 29.DeLong ER, DeLong DM, Clarke-Pearson DL. Comparing the areas under two or more correlated receiver operating characteristic curves: A nonparametric approach. Biometrics. 1988;44(3):837–845. [PubMed] [Google Scholar]

- 30.Tolhurst DJ, Movshon JA, Dean AF. The statistical reliability of signals in single neurons in cat and monkey visual cortex. Vision Res. 1983;23(8):775–785. doi: 10.1016/0042-6989(83)90200-6. [DOI] [PubMed] [Google Scholar]

- 31.Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: A comparison of neuronal and psychophysical performance. J Neurosci. 1992;12(12):4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Thompson KG, Hanes DP, Bichot NP, Schall JD. Perceptual and motor processing stages identified in the activity of macaque frontal eye field neurons during visual search. J Neurophysiol. 1996;76(6):4040–4055. doi: 10.1152/jn.1996.76.6.4040.

- 33. Hooge ITC, Beintema JA, van den Berg AV. Visual search of heading direction. Exp Brain Res. 1999;129(4):615–628. doi: 10.1007/s002210050931.

- 34. Pointer JS, Hess RF. The contrast sensitivity gradient across the human visual field: With emphasis on the low spatial frequency range. Vision Res. 1989;29(9):1133–1151. doi: 10.1016/0042-6989(89)90061-8.

- 35. Abbey CK, Eckstein MP. Classification images for detection, contrast discrimination, and identification tasks with a common ideal observer. J Vis. 2006;6(4):335–355. doi: 10.1167/6.4.4.

- 36. Ludwig CJH, Eckstein MP, Beutter BR. Limited flexibility in the filter underlying saccadic targeting. Vision Res. 2007;47(2):280–288. doi: 10.1016/j.visres.2006.09.009.

- 37. Kass RE, Raftery AE. Bayes factors. J Am Stat Assoc. 1995;90(430):773–795.

- 38. Rouder JN, Speckman PL, Sun D, Morey RD, Iverson G. Bayesian t tests for accepting and rejecting the null hypothesis. Psychon Bull Rev. 2009;16(2):225–237. doi: 10.3758/PBR.16.2.225.

- 39. Posner MI. Orienting of attention. Q J Exp Psychol. 1980;32(1):3–25. doi: 10.1080/00335558008248231.

- 40. Treisman AM, Gelade G. A feature-integration theory of attention. Cognit Psychol. 1980;12(1):97–136. doi: 10.1016/0010-0285(80)90005-5.

- 41. Gold JI, Shadlen MN. Neural computations that underlie decisions about sensory stimuli. Trends Cogn Sci. 2001;5(1):10–16. doi: 10.1016/s1364-6613(00)01567-9.

- 42. Smith PL, Ratcliff R. Psychology and neurobiology of simple decisions. Trends Neurosci. 2004;27(3):161–168. doi: 10.1016/j.tins.2004.01.006.

- 43. Ratcliff R. Theory of memory retrieval. Psychol Rev. 1978;85(2):59–108.

- 44. Ratcliff R. Modeling response signal and response time data. Cognit Psychol. 2006;53(3):195–237. doi: 10.1016/j.cogpsych.2005.10.002.

- 45. Ludwig CJ, Davies JR. Estimating the growth of internal evidence guiding perceptual decisions. Cognit Psychol. 2011;63(2):61–92. doi: 10.1016/j.cogpsych.2011.05.002.

- 46. Beutter BR, Eckstein MP, Stone LS. Saccadic and perceptual performance in visual search tasks. I. Contrast detection and discrimination. J Opt Soc Am A Opt Image Sci Vis. 2003;20(7):1341–1355. doi: 10.1364/josaa.20.001341.

- 47. Ludwig CJH. Temporal integration of sensory evidence for saccade target selection. Vision Res. 2009;49(23):2764–2773. doi: 10.1016/j.visres.2009.08.012.

- 48. Morrone MC, Denti V, Spinelli D. Color and luminance contrasts attract independent attention. Curr Biol. 2002;12(13):1134–1137. doi: 10.1016/s0960-9822(02)00921-1.

- 49. VanRullen R, Reddy L, Koch C. Visual search and dual tasks reveal two distinct attentional resources. J Cogn Neurosci. 2004;16(1):4–14. doi: 10.1162/089892904322755502.

- 50. Eckstein MP. Visual search: A retrospective. J Vis. 2011;11(5):14. doi: 10.1167/11.5.14.

- 51. Pelli DG, Palomares M, Majaj NJ. Crowding is unlike ordinary masking: Distinguishing feature integration from detection. J Vis. 2004;4(12):1136–1169. doi: 10.1167/4.12.12.

- 52. Levi DM. Crowding—an essential bottleneck for object recognition: A mini-review. Vision Res. 2008;48(5):635–654. doi: 10.1016/j.visres.2007.12.009.

- 53. Zelinsky GJ, Peng Y, Berg AC, Samaras D. Modeling guidance and recognition in categorical search: Bridging human and computer object detection. J Vis. 2013;13(3):30. doi: 10.1167/13.3.30.

- 54. Rayner K. Eye movements in reading and information processing: 20 years of research. Psychol Bull. 1998;124(3):372–422. doi: 10.1037/0033-2909.124.3.372.

- 55. Pelli DG, Burns CW, Farell B, Moore-Page DC. Feature detection and letter identification. Vision Res. 2006;46(28):4646–4674. doi: 10.1016/j.visres.2006.04.023.

- 56. Findlay JM, Gilchrist ID. Active Vision: The Psychology of Looking and Seeing. New York: Oxford Univ Press; 2003.

- 57. Marigold DS, Weerdesteyn V, Patla AE, Duysens J. Keep looking ahead? Re-direction of visual fixation does not always occur during an unpredictable obstacle avoidance task. Exp Brain Res. 2007;176(1):32–42. doi: 10.1007/s00221-006-0598-0.

- 58. Thorpe SJ, Gegenfurtner KR, Fabre-Thorpe M, Bülthoff HH. Detection of animals in natural images using far peripheral vision. Eur J Neurosci. 2001;14(5):869–876. doi: 10.1046/j.0953-816x.2001.01717.x.

- 59. Hazeltine E, Teague D, Ivry RB. Simultaneous dual-task performance reveals parallel response selection after practice. J Exp Psychol Hum Percept Perform. 2002;28(3):527–545.

- 60. Itti L, Koch C. A saliency-based search mechanism for overt and covert shifts of visual attention. Vision Res. 2000;40(10–12):1489–1506. doi: 10.1016/s0042-6989(99)00163-7.

- 61. Najemnik J, Geisler WS. Optimal eye movement strategies in visual search. Nature. 2005;434(7031):387–391. doi: 10.1038/nature03390.

- 62. Torralba A, Oliva A, Castelhano MS, Henderson JM. Contextual guidance of eye movements and attention in real-world scenes: The role of global features in object search. Psychol Rev. 2006;113(4):766–786. doi: 10.1037/0033-295X.113.4.766.

- 63. Zelinsky GJ. A theory of eye movements during target acquisition. Psychol Rev. 2008;115(4):787–835. doi: 10.1037/a0013118.

- 64. Schall JD, Thompson KG. Neural selection and control of visually guided eye movements. Annu Rev Neurosci. 1999;22:241–259. doi: 10.1146/annurev.neuro.22.1.241.

- 65. Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10(4):433–436.

- 66. Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10(4):437–442.

- 67. Kleiner M, Brainard D, Pelli D. What's new in Psychtoolbox-3? Perception. 2007:36S.

- 68. Macmillan N, Creelman C. Detection Theory: A User's Guide. 2nd Ed. London: Psychology Press; 2004.
