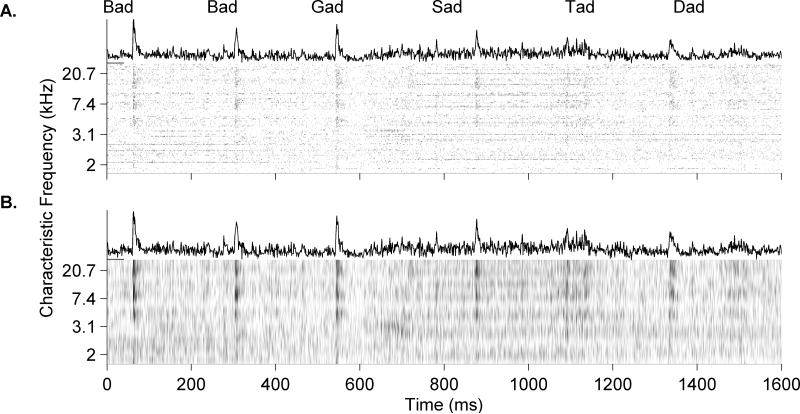

Figure 13. The classifier can use awake neural data to locate and identify speech sounds in sequences.

A. Raw neural recordings from 123 sites in passively listening awake rats. Spontaneous firing rates were significantly higher in awake recordings than in anesthetized recordings (64.2 ± 1.8 Hz vs. 23.4 ± 1 Hz; unpaired t-test, p < 0.001). B. After spatial smoothing with the same Gaussian filter used for the anesthetized recordings, evoked activity was averaged, and the classifier located and identified each speech sound in the sequence.
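The Gaussian spatial smoothing step in panel B can be illustrated with a minimal sketch. The caption gives neither the filter width nor the site layout. This sketch therefore assumes that each recording site has 2-D coordinates on the cortical surface and that smoothing is a Gaussian-weighted average over neighbouring sites with a hypothetical width `sigma`. The function name `gaussian_spatial_smooth` is illustrative only and is not the authors' implementation.

```python
import numpy as np


def gaussian_spatial_smooth(responses, coords, sigma):
    """Smooth per-site responses by Gaussian-weighted averaging over sites.

    Hypothetical sketch, not the published method.
    responses: array (n_sites, n_timebins), e.g. a PSTH per recording site
    coords:    array (n_sites, 2), assumed site positions on the cortex
    sigma:     assumed Gaussian width, in the same units as coords
    """
    responses = np.asarray(responses, dtype=float)
    coords = np.asarray(coords, dtype=float)
    # Pairwise squared distances between all recording sites
    diff = coords[:, None, :] - coords[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)
    # Gaussian weights, normalised so each site's weights sum to 1
    w = np.exp(-d2 / (2.0 * sigma ** 2))
    w /= w.sum(axis=1, keepdims=True)
    # Each smoothed site is a weighted average of every site's response
    return w @ responses


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    coords = rng.uniform(0.0, 2.0, size=(123, 2))       # made-up layout
    responses = rng.poisson(5, size=(123, 40))           # made-up spike counts
    smoothed = gaussian_spatial_smooth(responses, coords, sigma=0.25)
    print(smoothed.shape)  # (123, 40)
```

Normalising the weights for each site keeps the smoothed responses on the same firing-rate scale as the raw data. As `sigma` shrinks toward zero, the output approaches the unsmoothed responses.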