Abstract

Organisms use the process of selective attention to optimally allocate their computational resources to the instantaneously most relevant subsets of a visual scene, ensuring that they can parse the scene in real time. Many models of bottom-up attentional selection assume that elementary image features, like intensity, color and orientation, attract attention. Gestalt psychologists, however, argue that humans perceive whole objects before they analyze individual features. This is supported by recent psychophysical studies showing that objects predict eye fixations better than features. In this report we present a neurally inspired algorithm of object-based, bottom-up attention. The model rivals the performance of state-of-the-art feature-based algorithms that are not biologically plausible (and outperforms biologically plausible feature-based algorithms) in its ability to predict perceptual saliency (eye fixations and subjective interest points) in natural scenes. The model achieves this by computing saliency as a function of proto-objects that establish the perceptual organization of the scene. All computational mechanisms of the algorithm have direct neural correlates, and our results provide evidence for the interface theory of attention.

I. Introduction

The brain receives an overwhelming amount of sensory information from the retina, estimated at up to 100 Mbps per optic nerve [Koch, McLean, Berry, Sterling, Balasubramanian, and Freed, 2004, Strong, Koberle, de Ruyter van Steveninck, and Bialek, 1998]. Parallel processing of the entire visual field in real time is likely impossible for even the most sophisticated brains because of the high computational complexity of the task [Broadbent, 1958, Tsotsos, 1991]. Yet organisms can efficiently process this information to parse complex scenes in real time. This ability relies on selective attention, a mechanism through which the brain filters sensory information and selects only a small subset of it for further processing. The visual field can thus be subdivided into sub-units which are then processed sequentially in a series of computationally efficient tasks [Itti and Koch, 2001a], as opposed to processing the whole scene simultaneously. Two different mechanisms work together to implement this sensory bottleneck. The first, top-down attention, is controlled by the organism itself and biases attention based on the organism’s internal state and goals. The second, bottom-up attention, arises because different parts of a visual scene have different instantaneous saliency values: some stimuli are intrinsically conspicuous and therefore attract attention.¹

Most theories and computational models of attention surmise that it is a feature-driven process [Itti et al., 1998, Koch and Ullman, 1985, Treisman and Gelade, 1980, Walther, Itti, Riesenhuber, Poggio, and Koch, 2002]. However, there is a growing body of evidence, both psychophysical [Cave and Bichot, 1999, Duncan, 1984, Egly, Driver, and Rafal, 1994, Einhauser, Spain, and Perona, 2008, He and Nakayama, 1995, Ho and Yeh, 2009, Kimchi et al., 2007, Matsukura and Vecera, 2006, Scholl, 2001] and neurophysiological [Ito and Gilbert, 1999, Qiu, Sugihara, and von der Heydt, 2007, Roelfsema, Lamme, and Spekreijse, 1998, Wannig, Stanisor, and Roelfsema, 2011], showing that attention depends not only on image features but also on the structural organization of the scene into perceptual objects.

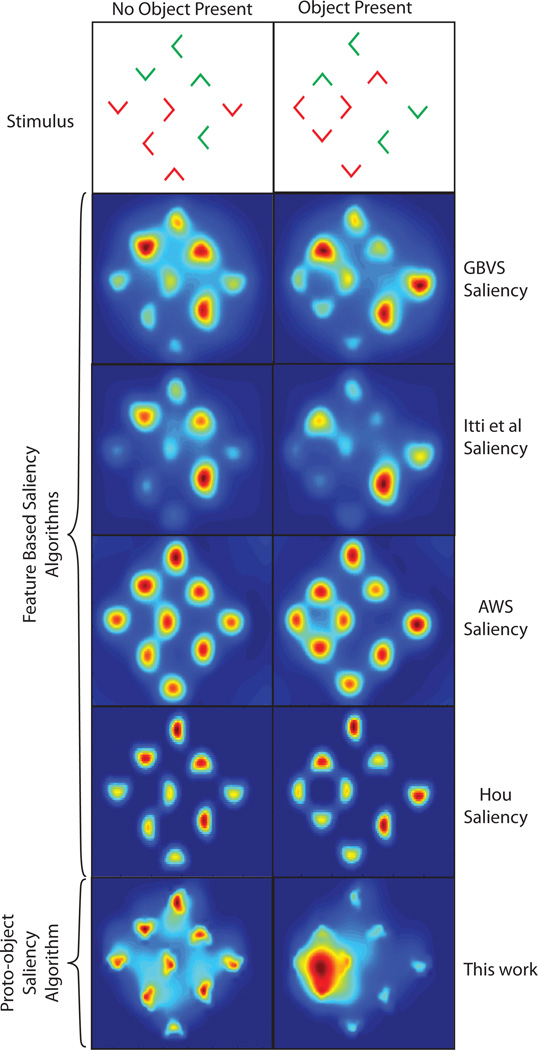

In the Kimchi et al. [2007] experiment, a display of 9 red and green L-shaped elements was used to show that objects can automatically attract attention in a stimulus-driven fashion. Subjects were tasked with reporting the color of a tagged target element in the display. In a subset of the trials the elements were arranged, using Gestalt factors, to form an object (see figure 1) which was irrelevant to the task. Reaction times were fastest when the target formed part of the object, slowest when the target was outside the object, and intermediate when no object was present. These results suggest that attention is pre-allocated to the location of the object, giving rise to a benefit when the target forms part of the object and a cost when the target is outside it. Consequently, a model of salience should identify the object as the most salient location in the visual field. However, as shown in figure 1, feature-based algorithms such as those by Itti et al. [1998], Harel et al. [2007], Garcia-Diaz et al. [2012a], Garcia-Diaz et al. [2012b] and Hou and Zhang [2008] are unable to do this. Instead, image features (the L-shaped elements) are identified as the most salient regions in both the no-object and object cases.

Fig. 1.

Top row: Stimuli used by Kimchi et al. [2007]. L-shaped elements were arranged to form a no-object (left) or object (right) condition. It was found that, in the object-present case, attention is automatically drawn to the location of the object. Second row: results of the Graph Based Visual Saliency (GBVS) algorithm [Harel et al., 2007] in predicting the locations of highest saliency. Third row: results of the Itti et al. [1998] algorithm in predicting the locations of highest salience. Fourth row: results of the Adaptive Whitening Saliency (AWS) model [Garcia-Diaz et al., 2012a,b]. Fifth row: results of the algorithm by Hou and Zhang [2008]. All feature-based algorithms fail to identify the object in the second display. Bottom row: results of the proto-object saliency algorithm described in this work. The algorithm uses Gestalt cues to perform perceptual scene organization. The formation of the object is clearly identified by the algorithm. In all figures, red is the highest salience and blue the lowest.

In the work that follows, we present a biologically plausible model of object-based visual salience. The model uses the concept of border ownership cells, which have been found in monkey visual cortex [Zhou, Friedman, and von der Heydt, 2000], to provide components of the perceptual organization of a scene. Hypothetical grouping cells use Gestalt principles [Kanizsa, 1979, Koffka, 1935] to integrate the global contour information of figures into tentative proto-objects. Local image saliency is then computed as a function of grouping cell activity. As shown in figure 1, these mechanisms allow the model to correctly assign the location of highest salience to the object in the stimuli used by Kimchi et al. [2007]. If no object is present, individual elements are awarded the highest saliency. In the remainder of this paper, the model is used to investigate whether visual saliency is better explained by image features or by (proto-)objects, and whether the bottom-up bias in subjective interest [Elazary and Itti, 2008, Masciocchi, Mihalas, Parkhurst, and Niebur, 2009] is object or feature based. Our results strongly support the “interface theory” of attention [Qiu et al., 2007], which states that figure-ground mechanisms provide structure for selective attention. This work has implications not only for understanding the visual processes of the brain but also for designing the next generation of machine vision search and object recognition algorithms.

II. Related Work

Early theories of visual attention were built on the Feature Integration Theory (FIT) proposed by Treisman and Gelade [1980]. FIT is a two-stage hypothesis designed to explain the differences between feature and conjunction search. It proposes that feature search, where objects are defined by a unique feature, occurs rapidly and in parallel across the visual field. Conjunction search, where an object is defined by a combination of non-unique features, occurs serially and requires attention. In the first, pre-attentive, stage of FIT, the features which constitute an object are computed rapidly and in parallel in different feature maps. This allows for the rapid identification of a target defined by a unique feature. However, if an object is defined by a combination of non-unique features, then attention is needed to bind the features into a single object and the search must be performed serially. The situation is more complex than described in this early view (see, e.g., Wolfe, 2000), but it suffices as a starting point to understand the basic principles.

How independent, parallel feature maps give rise to the deployment of bottom-up attention can be explained by a saliency [Koch and Ullman, 1985] or master [Treisman, 1988] map, which guides visual search towards conspicuous targets [Wolfe, 1994, 2007]. These maps represent visual saliency by integrating the conspicuity of individual features into a single, scalar-valued 2D retinotopic map. The activity of the map provides a ranking of the salient locations in the visual field, with the most active region describing the next location to be attended. Several structures in the pulvinar [Robinson and Petersen, 1992], posterior parietal cortex [Bisley and Goldberg, 2003, Constantinidis and Steinmetz, 2005, Kusunoki, Gottlieb, and Goldberg, 2000], superior colliculus [Basso and Wurtz, 1998, McPeek and Keller, 2002, Posner and Petersen, 1990, White and Munoz, 2010], frontal eye fields [Thompson and Bichot, 2005, Zenon, Filali, Duhamel, and Olivier, 2010] and visual cortex [Koene and Zhaoping, 2007, Mazer and Gallant, 2003, Zhaoping, 2008] have been suggested as physiological substrates of a saliency map.

Numerous computational models [Itti and Koch, 2001b, Itti et al., 1998, Milanese, Gil, and Pun, 1995, Niebur and Koch, 1996, Walther et al., 2002] were developed to explain the neural mechanisms responsible for computing a saliency map. The Itti et al. [1998] model, based on the conceptual framework proposed by Koch and Ullman [1985], is arguably the most influential of all saliency models. The model works as follows. An input image is decomposed into various feature channels (color, intensity and orientation in this model, plus temporal change in the closely related Niebur and Koch [1996] model; other channels can be added easily). Within each channel, a center-surround mechanism and a normalization operator work together to award unique, conspicuous features high activity and common features low activity. The results of each channel are then normalized to remove modality-specific activation differences. In the last stage, the results of each channel are linearly summed to form the saliency map. This model, which uses biologically plausible computational mechanisms, is able to reproduce human search performance for images featuring pop-out [Itti et al., 1998], and it predicts human eye fixations significantly better than chance [Parkhurst, Law, and Niebur, 2002]. More recent saliency algorithms have improved on the normalization method [Itti and Koch, 2001a, Parkhurst, 2002], changed the way in which features are processed to compute saliency [Garcia-Diaz et al., 2012a,b, Harel et al., 2007, Hou and Zhang, 2008], incorporated learning [Zhang, Tong, Marks, Shan, and Cottrell, 2008] or added feature channels; however, all of these models incorporate the ideas of feature contrast and feature uniqueness, as introduced by Koch and Ullman [1985], to compute saliency.

In contrast to FIT and feature based attention, Gestalt psychologists argue that the whole of an object is perceived before its individual features are registered. This is achieved by grouping features into perceptual objects using principles like proximity, similarity, closure, good continuation, common fate and good form [Kanizsa, 1979, Koffka, 1935]. This view is backed by an increasing amount of evidence, both psychophysical [Cave and Bichot, 1999, Duncan, 1984, Egly et al., 1994, Einhauser et al., 2008, He and Nakayama, 1995, Ho and Yeh, 2009, Matsukura and Vecera, 2006, Scholl, 2001] and neurophysiological [Ito and Gilbert, 1999, Qiu et al., 2007, Roelfsema et al., 1998, Wannig et al., 2011]. These results show that attention does not only depend on image features but also on the structural organization of the scene into perceptual objects.

One theory of object based attention is the integrated competition hypothesis [Duncan, 1984] which states that attention is allocated through objects in the visual field competing for limited resources across all sensorimotor systems. When an object in one system gains dominance, its processing is supported across all systems while the representation of other objects is suppressed. Sun and Fisher [2003] and Sun, Fisher, Wang, and Gomes [2008] utilized this theory in their design of an object based saliency map which could reproduce human fixation behavior for a number of artificial scenes. Although it is based on a biologically motivated theory, their model does not use biologically plausible computational mechanisms; instead, machine vision techniques are used. Consequently the model does not provide insight into the biological mechanisms which can account for object based attention.

An alternative hypothesis for object based attention is coherence theory [Rensink, 2000]. It uses the notion of proto-objects, which are pre-attentive structures with limited spatial and temporal coherence. They are rapidly computed in parallel across the visual field and updated whenever the retina receives a new stimulus. Focused attention acts to stabilize a small number of proto-objects generating the percept of an object with both spatial and temporal coherence. Because of temporal coherence, any changes to the retina at the object’s location are treated as changes to the existing object and not the appearance of a new one. During this stage the object is said to be in a coherence field. Once attention is released from the object, it dissolves back into its dynamic proto-object representation. In coherence theory, proto-objects serve the dual purpose of being the “highest-level output of low-level vision as well as the lowest-level operand on which high-level processes (such as attention) can act” [Rensink, 2000]. Consequently proto-objects must not only provide a representation of the visual saliency of a scene but also a mechanism through which top down attention can act.

Walther and Koch [2006] used the concept of proto-objects to develop a model of object based attention. Their model uses the Itti et al. [1998] feature based saliency algorithm to compute the most salient location in the visual field. The shape of the proto-object at that location is then calculated by the spreading of activation in a 4-connected neighborhood of above threshold activity in the map with the highest saliency contribution at that location. They demonstrated that proto-object based saliency could improve the performance of the biologically motivated HMAX [Riesenhuber and Poggio, 1999] image recognition algorithm. However, there are two drawbacks associated with this model. First, the model does not extend the Itti et al. [1998] algorithm to account for how the arrangement of features into potential objects can affect the saliency of the visual scene. As a result, this model cannot explain the results obtained by Kimchi et al. [2007] (see figure 1). Second, although the computational mechanisms in the model can theoretically be found in the brain, it is unclear if the spreading of brightness or color signals, as used in the algorithm to extract proto-objects, actually occurs in the visual cortex [Roe, Lu, and Hung, 2005, Rossi and Paradiso, 1999, von der Heydt, Friedman, and Zhou, 2003].
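The spreading of activation used to extract a proto-object shape can be illustrated with a breadth-first flood fill. The sketch below is ours, not Walther and Koch's code: the function name `spread_proto_object`, the fixed `threshold` argument and the toy map are hypothetical. It only shows the core idea of collecting the 4-connected region of above-threshold activity that contains the attended location.

```python
from collections import deque

import numpy as np


def spread_proto_object(feature_map, seed, threshold):
    """Boolean mask of the 4-connected region of above-threshold activity that
    contains `seed` (row, col); empty if the seed itself is below threshold."""
    fmap = np.asarray(feature_map, dtype=float)
    mask = np.zeros(fmap.shape, dtype=bool)
    if fmap[seed] <= threshold:
        return mask
    mask[seed] = True
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):   # 4-connected neighbours
            rr, cc = r + dr, c + dc
            if (0 <= rr < fmap.shape[0] and 0 <= cc < fmap.shape[1]
                    and not mask[rr, cc] and fmap[rr, cc] > threshold):
                mask[rr, cc] = True
                queue.append((rr, cc))
    return mask


fmap = np.array([[0.9, 0.8, 0.1, 0.7],
                 [0.1, 0.6, 0.1, 0.9],
                 [0.1, 0.1, 0.1, 0.8]])
mask = spread_proto_object(fmap, seed=(0, 0), threshold=0.5)
print(mask.astype(int))   # the separate region in the last column is not reached
```

Because the fill follows raw feature activity, neighbouring above-threshold pixels are merged regardless of how they are arranged into figures, which is the limitation discussed above.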

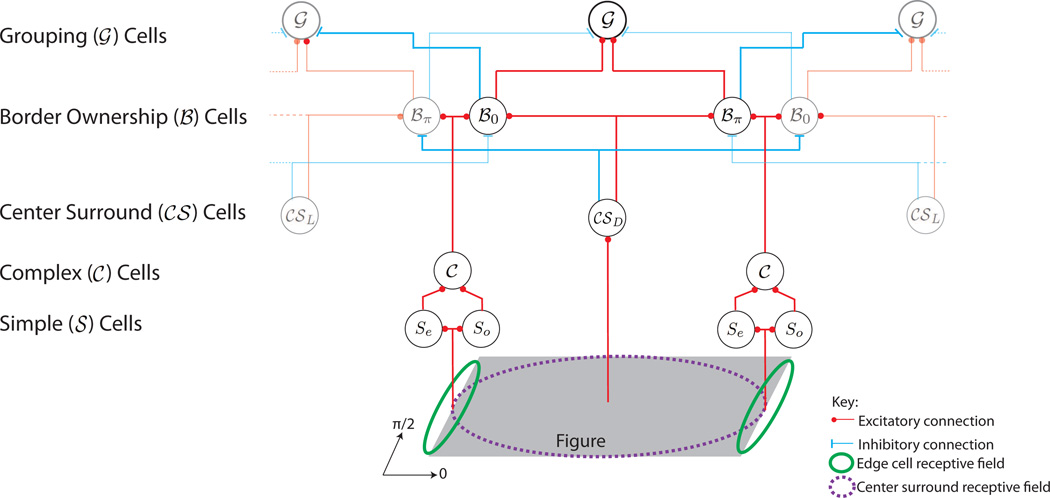

In the following, we present a neurally plausible proto-object based model of visual attention. Perceptual organization of the scene through figure-ground segregation is achieved through border ownership assignment: the one-sided assignment of a border to a region perceived as figure. Neurons coding border ownership have been discovered in early visual cortex, predominantly area V2 [Zhou et al., 2000], see figure 2. Li [1998] suggests that border-ownership signals originate from the lateral propagation of edge signals through primary visual cortex, but such a mechanism seems unlikely because the signals would have to travel along slow intra-areal connections. This is not compatible with the observed time course of border-ownership responses, which appear as early as 20 ms after the edge signals of a visual stimulus arise. An alternative hypothesis, supported by recent neurophysiological evidence [Zhang and von der Heydt, 2010], is that “grouping (𝒢) cells” communicate with border-ownership (ℬ) neurons via (fast) white matter projections [Craft, Schütze, Niebur, and von der Heydt, 2007]. The grouping neurons integrate object features into tentative proto-objects without needing to recognize the object [Craft et al., 2007, Mihalaş, Dong, von der Heydt, and Niebur, 2011]. The high conduction velocity of the myelinated fibers connecting the border ownership neurons and the grouping cells accounts for the fast development of border ownership responses. The proto-object saliency model draws inspiration from the neuronal model of Craft et al. [2007], which uses a recurrently connected network of ℬ and 𝒢 cells to model border ownership assignment for a number of synthetic images.

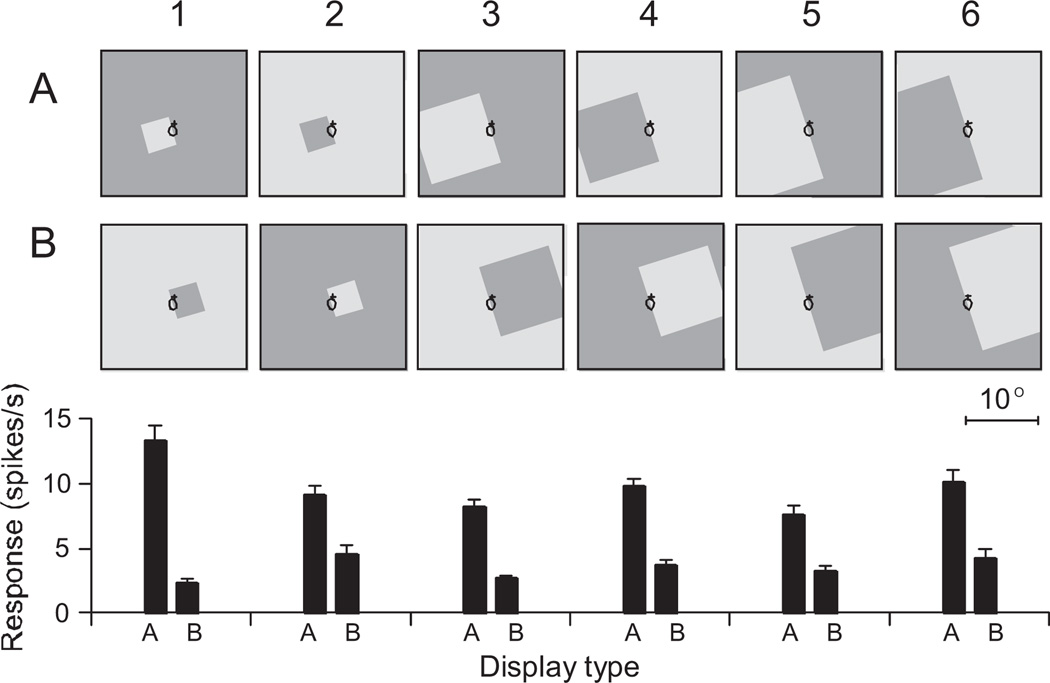

Fig. 2.

Response of a border ownership cell in monkey V2 to stimuli of varying sizes. Border ownership cells only respond when their receptive field falls over a contrast edge and their response is modulated by which side of their receptive field the figure appears on. Rows A and B show the stimuli which are, for a given trial, identical within the receptive field (black ellipse) of the border ownership selective cell. Bar graphs below Row B show the mean firing rate of the cell to the stimuli. For all sizes and both contrast polarities, the cell’s preferential response occurred when the square was located to the left of the receptive field. Reproduced with permission from Zhou et al. [2000].

III. Model

The core of our model is a grouping mechanism which estimates the location and spatial scale of proto-objects within the input image. This mechanism, described in section III-A, provides saliency information through the perceptual organization of a scene into figure and ground. In section III-B the grouping mechanism is extended to operate across multiple feature channels and to incorporate competition between proto-objects of similar size and feature composition.

Objects in a visual scene can partially occlude each other. Our convention is that, in the occlusion zone, we always refer to the object that is closest to the observer as the “figure,” and to the one behind it as the “background.” To achieve scale invariance, the algorithm successively downsamples the input image, β(x, y), in steps of √2 to form an image pyramid spanning 5 octaves. The kth level of the pyramid is denoted using the superscript k. Unless explicitly stated, any operation applied to the pyramid is applied independently to each level. Each layer of the network represents neural activity which propagates from one layer to the next in a feed-forward fashion; the model does not have any recurrent connections. This was done to ensure the computational efficiency of the model. However, if computation time is not an issue, recurrent connections can be added to obtain more accurate border-ownership and grouping assignment (see Craft et al. [2007] and Mihalaş et al. [2011] for examples of such circuits). Receptive fields of neurons are described by correlation kernels, and correlation is used to calculate the neural response to an input (see below for details). The model was implemented in MATLAB (MathWorks, Natick, MA, USA).
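As a worked example of the pyramid bookkeeping, the sketch below lists the level shapes for a 480 × 640 input. Five octaves in steps of √2 amount to 10 downsampling steps, i.e. 11 levels if the input itself is counted. Treating the input as level 0 and rounding shapes to the nearest pixel are our assumptions, and `pyramid_shapes` is a hypothetical helper.

```python
def pyramid_shapes(height, width, octaves=5, steps_per_octave=2):
    """Shapes of a pyramid that shrinks by 2**(1/steps_per_octave) (= sqrt(2))
    per level. Level 0 is assumed to be the input image itself."""
    shapes = []
    for k in range(octaves * steps_per_octave + 1):
        factor = 2 ** (k / steps_per_octave)   # sqrt(2)**k
        shapes.append((max(1, round(height / factor)), max(1, round(width / factor))))
    return shapes


shapes = pyramid_shapes(480, 640)
for k, shape in enumerate(shapes):
    print(k, shape)
```

Under this indexing assumption, level k = 8 (used later as the common scale for cross-scale addition) is 16 times smaller than the input in each dimension.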

A. A feed forward model of grouping

This model is responsible for estimating the location and spatial scale of proto-objects within the input image. The first stage of processing extracts object edges using 2D Gabor filters [Kulikowski, Marcelja, and Bishop, 1982], which approximate the receptive fields of simple cells in the primary visual cortex [Jones and Palmer, 1987, Marcelja, 1980]. Both even, ge,θ(x, y), and odd, go,θ(x, y), filter kernels are used, where:

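$$g_{e,\theta}(x,y) = \exp\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right)\cos(\omega x'), \qquad g_{o,\theta}(x,y) = \exp\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right)\sin(\omega x') \tag{1}$$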

where θ ∈ {0, π/4, π/2, 3π/4} radians, γ is the spatial aspect ratio, σ is the standard deviation of the Gaussian envelope, ω is the spatial frequency of the filter, and x′ and y′ are coordinates in the rotated reference frame defined by

$$x' = x\cos\theta + y\sin\theta, \qquad y' = -x\sin\theta + y\cos\theta \tag{2}$$

Simple cell responses are computed according to

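$$\mathcal{E}^k_{e,\theta}(x,y) = \beta^k(x,y) * g_{e,\theta}(x,y), \qquad \mathcal{E}^k_{o,\theta}(x,y) = \beta^k(x,y) * g_{o,\theta}(x,y) \tag{3}$$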

where ℰe,θ and ℰo,θ are the even and odd edge pyramids at angle θ, and * is the correlation operator defined as

$$(f * h)(x,y) = \sum_{u}\sum_{v} f(x+u,\, y+v)\, h(u,v) \tag{4}$$

Using an energy representation [Adelson and Bergen, 1985, Morrone and Burr, 1988], contrast invariant complex cell responses are calculated from a simple cell response pair as

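$$\mathcal{C}^k_\theta(x,y) = \sqrt{\mathcal{E}^k_{e,\theta}(x,y)^2 + \mathcal{E}^k_{o,\theta}(x,y)^2} \tag{5}$$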

where 𝒞θ is the complex cell’s response at angle θ.
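The edge-extraction stage (equations 1 to 5) can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' MATLAB implementation: it assumes the standard Gabor form with the Table I values (γ = 0.5, σ = 2.24, ω = 1.57), kernels truncated at 3σ, zero padding at the image border, and hypothetical helper names. It checks that the energy response peaks at a step edge and is unchanged when the edge's contrast polarity is reversed.

```python
import numpy as np


def gabor_pair(theta, gamma=0.5, sigma=2.24, omega=1.57):
    """Even and odd Gabor kernels (eqs. 1-2), truncated at 3 sigma (assumption)."""
    half = int(np.ceil(3 * sigma))
    x, y = np.meshgrid(np.arange(-half, half + 1), np.arange(-half, half + 1))
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot**2 + gamma**2 * y_rot**2) / (2 * sigma**2))
    return envelope * np.cos(omega * x_rot), envelope * np.sin(omega * x_rot)


def correlate2d(image, kernel):
    """Eq. (4): out(x, y) = sum_{u,v} image(x+u, y+v) kernel(u, v), for an
    odd-sized centred kernel, with zero padding and same-size output."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros(image.shape)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out


def complex_cell(image, theta):
    """Eqs. (3) and (5): simple-cell pair followed by the energy model."""
    g_even, g_odd = gabor_pair(theta)
    e_even, e_odd = correlate2d(image, g_even), correlate2d(image, g_odd)
    return np.sqrt(e_even**2 + e_odd**2)


# Zero-mean vertical step edge between columns 15 and 16 of a 32 x 32 image.
step = np.where(np.arange(32) >= 16, 0.5, -0.5) * np.ones((32, 1))
c_pos = complex_cell(step, theta=0.0)
c_neg = complex_cell(-step, theta=0.0)   # same edge, reversed contrast polarity
print(c_pos[16, 16], c_pos[16, 8])
```

Since correlation is linear, reversing polarity flips the sign of both simple-cell responses, and the sum of squares in equation (5) removes that sign.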

To infer whether the edges in 𝒞θ belong to figure or ground, knowledge of objects in the scene is required. This context information is retrieved from a center-surround mechanism, as commonly implemented in the retina, lateral geniculate nucleus and cortex [Reid, 2008]. Center-surround receptive fields have a central region (the center) surrounded by an antagonistic region (the surround) which inhibits the center. Our model uses center-surround mechanisms of both polarities, with ON-center receptive fields identifying light objects on dark backgrounds and OFF-center receptive fields detecting dark objects on light backgrounds. This is implemented as

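$$\mathcal{CS}^k_D = \left\lfloor \beta^k * \mathcal{CS}_{\mathrm{off}} \right\rfloor, \qquad \mathcal{CS}^k_L = \left\lfloor \beta^k * \mathcal{CS}_{\mathrm{on}} \right\rfloor \tag{6}$$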

where ⌊·⌋ is a half-wave rectification, and 𝒞𝒮D and 𝒞𝒮L are the dark and light object pyramids. 𝒞𝒮off and 𝒞𝒮on are the OFF-center and ON-center center surround mechanisms generated using a difference of Gaussians as follows,

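$$\mathcal{CS}_{\mathrm{on}}(x,y) = \frac{1}{2\pi\sigma_i^2}\exp\left(-\frac{x^2+y^2}{2\sigma_i^2}\right) - \frac{1}{2\pi\sigma_o^2}\exp\left(-\frac{x^2+y^2}{2\sigma_o^2}\right), \qquad \mathcal{CS}_{\mathrm{off}}(x,y) = -\mathcal{CS}_{\mathrm{on}}(x,y) \tag{7}$$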

In these equations, σi is the standard deviation of the center (inner) Gaussian and σo is the standard deviation of the surround (outer) Gaussian.
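A minimal sketch of equations (6) and (7) follows. It assumes a difference of two normalized Gaussians with the Table I values σi = 0.9 and σo = 2.7 and a kernel truncated at 3σo; the helper names are hypothetical. For a small light disk on a dark background, the ON-center map 𝒞𝒮L peaks at the disk's center, while the rectified OFF-center map 𝒞𝒮D is silent there and responds only in the surrounding dark ring.

```python
import numpy as np


def correlate2d(image, kernel):
    """Same-size correlation with zero padding (eq. 4); kernel must be odd-sized."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros(image.shape)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out


def dog_on(sigma_i=0.9, sigma_o=2.7):
    """ON-center difference of Gaussians (normalized Gaussians assumed)."""
    half = int(np.ceil(3 * sigma_o))
    x, y = np.meshgrid(np.arange(-half, half + 1), np.arange(-half, half + 1))
    r2 = x**2 + y**2
    center = np.exp(-r2 / (2 * sigma_i**2)) / (2 * np.pi * sigma_i**2)
    surround = np.exp(-r2 / (2 * sigma_o**2)) / (2 * np.pi * sigma_o**2)
    return center - surround


def center_surround(image):
    """Eq. (6): half-wave rectified OFF (dark) and ON (light) object maps."""
    on = dog_on()
    cs_dark = np.maximum(correlate2d(image, -on), 0.0)   # OFF-center kernel = -ON
    cs_light = np.maximum(correlate2d(image, on), 0.0)
    return cs_dark, cs_light


yy, xx = np.mgrid[:41, :41]
disk = ((yy - 20) ** 2 + (xx - 20) ** 2 <= 4).astype(float)   # light disk, radius 2 px
cs_dark, cs_light = center_surround(disk)
print(cs_light[20, 20], cs_dark[20, 20])
```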

Next, for a given angle θ, antagonistic pairs of border ownership responses, ℬθ and ℬθ + π, are created by modulating 𝒞 cell responses with the activity from the 𝒞𝒮 pyramids. ℬ cells can code borders for light objects on dark backgrounds or dark objects on light backgrounds. This is achieved by computing ℬ cell activity independently for each contrast case and then summing to give a border ownership response independent of figure-ground contrast polarity. This mimics the behavior of the border ownership cell shown in figure 2.

ℬθ,L, the border ownership activity for a light object on a dark background is given by

| (8) |

and ℬθ,𝒟, the border ownership activity for a dark object on a light background is given by

| (9) |

where υθ is the kernel responsible for mapping object activity in the 𝒞𝒮 pyramids back to the objects’ edges (see figure 4 for details), wopp is the synaptic weight of the inhibitory signal from the opposite-polarity 𝒞𝒮 pyramid, and the term 2⁻ʲ normalizes the υθ operator such that the influence across spatial scales is constant. υθ is generated using the von Mises distribution as follows

| (10) |

R0 is the zero crossing radius of the center surround masks, and θ is the desired angle of the mask. The factor rotates the mask to ensure it is correctly aligned with the edge cells. I0 is the modified Bessel function of the first kind. υθ is then normalized according to

| (11) |

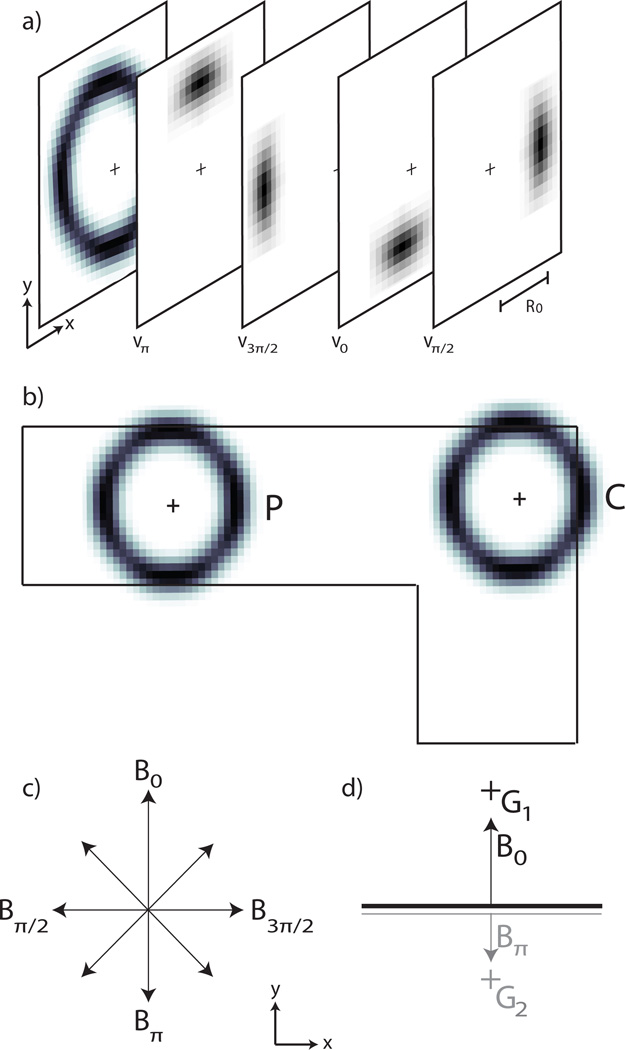

Fig. 4.

a) The annular receptive field of the grouping cells (adapted from Craft et al., 2007). In the model this is realized by using eight individual kernels (υθ in the text) whose combined activity produces the desired annular receptive field. Each kernel is generated using equation (10) and the kernels υ0, υπ/2, υπ and υ3π/2 are shown in the figure. Identical kernels are also used as the connection pattern to map the activity of the 𝒞𝒮 cells to the ℬ cells during border ownership assignment. See equation (8). b) The annular receptive field of the grouping cells biases the 𝒢 cells to have a preference for continuity (C) and proximity (P) (adapted from Craft et al., 2007). c) Conventions for the display of ℬ cell activity at a given pixel. The length of the arrows indicates the magnitude of each cell’s activity. d) Border ownership is assigned to a given pixel by selecting, from the pool of all potential ℬ cells (shown in (c)), the pair of cells with the greatest activity difference. Within that pair, the cell with the greater activity will own the border (in the case shown, ℬ0). The winning border will then excite its corresponding grouping cell, 𝒢1. This is done by mapping the activity of ℬ0 to 𝒢1 using υ0. 𝒢1 also receives a small inhibitory signal from ℬπ (not shown here). 𝒢2 denotes the 𝒢 cell which corresponds to ℬπ. Black (grey) lines indicate high (low) activity.

The border ownership responses coding for light and dark objects are then combined to give the contrast polarity invariant response

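$$\mathcal{B}^k_\theta(x,y) = \mathcal{B}^k_{\theta,L}(x,y) + \mathcal{B}^k_{\theta,\mathcal{D}}(x,y) \tag{12}$$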

The sign of the difference ℬθ(x, y) − ℬθ + π (x, y) determines the direction of border ownership at pixel (x, y) and orientation θ. Its magnitude gives a confidence measure for the strength of ownership which is also used for determining the local border orientation, see eqs 13 and 14.

In the above, the activity of a ℬ cell is facilitated by 𝒞𝒮 activity on its preferred side and suppressed by 𝒞𝒮 activity on its non-preferred side. This is motivated by neurophysiological results which show that, when an edge is placed in the classical receptive field of a border ownership neuron, image fragments placed within the cell’s extra-classical field can enhance the cell’s activity if the fragment is placed on its preferred side, and suppress it if the fragment is placed on the non-preferred side [Zhang and von der Heydt, 2010]. Furthermore, modulating the ℬ cell responses with the 𝒞𝒮 activity summed across spatial scales ensures that the ℬ cell response is invariant to spatial scale, a behavior exhibited by the ℬ cells’ biological counterpart (see figure 2) [Zhou et al., 2000]. Finally, by biasing the ℬ cell activity with the 𝒞𝒮 activity at lower spatial scales, the model is made robust in its border ownership assignment when small concavities occur in larger convex objects.

At each pixel, multiple border ownership cells exist, coding for each direction of ownership at multiple orientations. However, a pixel can only belong to a single border. The winning border ownership response (ℬ̂) is selected from the pool of all border ownership responses according to

| (13) |

where

| (14) |

In words, the orientation of ℬ̂ is assigned according to the pair of ℬ cell responses with the highest difference, and the direction of ownership is assigned to the ℬ cell in that pair with the greater response.
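The selection rule described in words above, together with the polarity combination of equation (12), can be sketched as follows. The text does not specify these details, so the following are our assumptions: the eight ℬ maps are stacked so that index d holds direction d·π/4 (indices d and d + 4 form an antagonistic pair), ties go to the first cell of the pair, and the winner keeps its own activity while every other cell at that pixel is set to zero. The function name is hypothetical.

```python
import numpy as np


def winning_border(b_light, b_dark):
    """b_light, b_dark: arrays of shape (8, H, W); index d codes ownership
    direction d*pi/4. Returns the winning direction index per pixel, its
    confidence |B_theta - B_theta+pi|, and a B-hat stack in which only the
    winning cell keeps its activity."""
    b = b_light + b_dark                                 # eq. (12): polarity invariant
    diff = b[:4] - b[4:]                                 # B_theta - B_{theta+pi}, theta < pi
    orient = np.argmax(np.abs(diff), axis=0)             # pair with the largest difference
    best = np.take_along_axis(diff, orient[None], axis=0)[0]
    direction = np.where(best >= 0, orient, orient + 4)  # larger cell of the pair owns the border
    rows, cols = np.indices(orient.shape)
    b_hat = np.zeros_like(b)
    b_hat[direction, rows, cols] = b[direction, rows, cols]
    return direction, np.abs(best), b_hat


b_light = np.zeros((8, 1, 2))
b_dark = np.zeros((8, 1, 2))
# Pixel 0: the pi/4 pair differs most (0.9 vs 0.2), so direction 1 (pi/4) wins.
b_light[1, 0, 0], b_light[5, 0, 0] = 0.9, 0.2
b_dark[2, 0, 0], b_dark[6, 0, 0] = 0.3, 0.8
# Pixel 1: the pi/2 pair differs most and its 3*pi/2 cell is stronger, so direction 6 wins.
b_dark[2, 0, 1], b_dark[6, 0, 1] = 0.1, 1.5
direction, confidence, b_hat = winning_border(b_light, b_dark)
print(direction)   # [[1 6]]
```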

The final stage of the algorithm calculates grouping (𝒢) cell responses by integrating the winning ℬ̂ cell activity in an annular fashion, see figure 4. This biases 𝒢 cells to show preference for objects whose borders exhibit the Gestalt principles of continuity and proximity (fig. 4b). 𝒢 cell activity is defined according to

| (15) |

where if and zero otherwise. wb is the synaptic weight of the inhibitory signal from the ℬ cell coding for the opposite (non-preferred) direction of ownership.

The 𝒢 pyramid from eq. 15 is the output of the grouping algorithm.
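The effect of the annular integration can be illustrated with a deliberately simplified stand-in for the grouping stage. Instead of the von Mises kernels υθ, each winning ℬ̂ cell here excites a single grouping cell located R0 pixels away in its direction of ownership, and the inhibitory term weighted by wb is omitted. These simplifications, the direction indexing (index d codes d·π/4, with rows increasing downwards) and the function name are our assumptions. For the border of a square whose half-width equals R0 and whose edges are all owned by the interior, the grouping activity peaks at the square's center.

```python
import numpy as np


def group_activity(direction, activity, radius):
    """Simplified G-cell map: each winning B cell at (row, col) with ownership
    direction index d (angle d*pi/4, rows increasing downwards) excites the
    G cell `radius` pixels away in that direction. Hypothetical stand-in for
    eq. (15): single-tap kernels instead of von Mises crescents, and w_b = 0."""
    h, w = activity.shape
    g = np.zeros((h, w))
    for r, c in zip(*np.nonzero(activity)):
        phi = direction[r, c] * np.pi / 4
        rr = r + int(round(radius * np.sin(phi)))
        cc = c + int(round(radius * np.cos(phi)))
        if 0 <= rr < h and 0 <= cc < w:
            g[rr, cc] += activity[r, c]
    return g


# Border of a square with half-width r0 centred at (10, 10); every edge is owned
# by the interior (corners are left out to keep ownership unambiguous).
size, r0, mid = 21, 4, 10
near, far = mid - r0, mid + r0
direction = np.zeros((size, size), dtype=int)
activity = np.zeros((size, size))
for k in range(near + 1, far):
    direction[k, near], activity[k, near] = 0, 1.0   # left edge, owned rightwards
    direction[k, far], activity[k, far] = 4, 1.0     # right edge, owned leftwards
    direction[near, k], activity[near, k] = 2, 1.0   # top edge, owned downwards
    direction[far, k], activity[far, k] = 6, 1.0     # bottom edge, owned upwards
g = group_activity(direction, activity, r0)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(g), g.shape))
print(peak, g[peak])   # (10, 10) 4.0
```

Only the center receives votes from all four edges, which is the preference for closed, compact contours that the annular receptive field is meant to express.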

B. Proto-object based saliency

In this section the basic model of section III-A is extended to account for multiple feature channels and to incorporate competition between proto-objects of similar size and feature composition. Note that saliency is obtained based on the primitives generated for the proto-object computation and that the basic mechanisms are shared.

The extended model, shown in figure 5, accepts an input image which is decomposed into 9 feature channels: 1 intensity channel, 4 color-opponency channels and 4 orientation channels. A normalization operator added to the grouping mechanism allows for competition between proto-objects of similar size and feature composition. The effect of this operator is that the grouping activity of maps with few proto-objects is promoted and the grouping activity of maps with multiple proto-objects is suppressed. This operator, 𝒩1(·), which is very similar to that used by Itti et al. [1998], works as follows. The two center-surround pyramids, 𝒞𝒮D and 𝒞𝒮L, are simultaneously normalized so that all values in the maps are in the range [0, M]. If, before normalization, the maximum of 𝒞𝒮D was twice the maximum of 𝒞𝒮L, then after normalization the maximum of 𝒞𝒮D will be M and the maximum of 𝒞𝒮L will be M/2. Next, the average of all local maxima, m̄, is computed across both maps. In the final stage of normalization, each center-surround map is multiplied by (M − m̄)². 𝒩1(·) is applied simultaneously to 𝒞𝒮D and 𝒞𝒮L to preserve the local ordering of activity in the maps. The effects of the normalization propagate forward through the grouping mechanism: maps with high 𝒞𝒮 activity will have high border ownership activity, which results in high grouping activity. Conversely, maps with low 𝒞𝒮 activity will have weak grouping activity.
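A minimal sketch of 𝒩1(·) following the steps above, with M = 1. Two details are our assumptions: a local maximum is taken to be a positive pixel that is at least as large as its eight neighbours, and, as the text states, m̄ averages all local maxima of both maps (the operator of Itti et al. [1998] instead averages the local maxima other than the global maximum). In the usage example, a pair of maps with one dominant proto-object keeps substantial activity, while a pair with five equally strong proto-objects is suppressed entirely.

```python
import numpy as np


def local_maxima(a):
    """Values of positive pixels that are >= all 8 neighbours (assumed definition)."""
    a = np.asarray(a, dtype=float)
    h, w = a.shape
    padded = np.pad(a, 1, mode="constant", constant_values=-np.inf)
    is_max = a > 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if (dr, dc) != (0, 0):
                is_max &= a >= padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w]
    return a[is_max]


def normalize_n1(cs_dark, cs_light, M=1.0):
    """Joint N1 normalization of the dark and light center-surround maps."""
    peak = max(cs_dark.max(), cs_light.max())
    if peak <= 0:
        return cs_dark.astype(float), cs_light.astype(float)
    dark, light = cs_dark * (M / peak), cs_light * (M / peak)   # common scaling to [0, M]
    maxima = np.concatenate([local_maxima(dark), local_maxima(light)])
    m_bar = maxima.mean()                                       # average over both maps
    factor = (M - m_bar) ** 2
    return dark * factor, light * factor


dark = np.zeros((20, 20))
light = np.zeros((20, 20))
dark[5, 5] = 1.0                                    # one strong dark proto-object
for r, c in [(2, 15), (15, 2), (15, 15), (10, 10)]:
    light[r, c] = 0.1                               # a few weak light ones
kept_dark, kept_light = normalize_n1(dark, light)

crowded = np.zeros((20, 20))
for r, c in [(2, 2), (2, 17), (10, 10), (17, 2), (17, 17)]:
    crowded[r, c] = 1.0                             # five equally strong proto-objects
flat_dark, flat_light = normalize_n1(crowded, np.zeros((20, 20)))
print(kept_dark.max(), flat_dark.max())
```

In the first pair m̄ = (1 + 4 × 0.1)/5 = 0.28, so both maps are scaled by 0.72² ≈ 0.52; in the crowded pair m̄ = 1 and the factor is zero.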

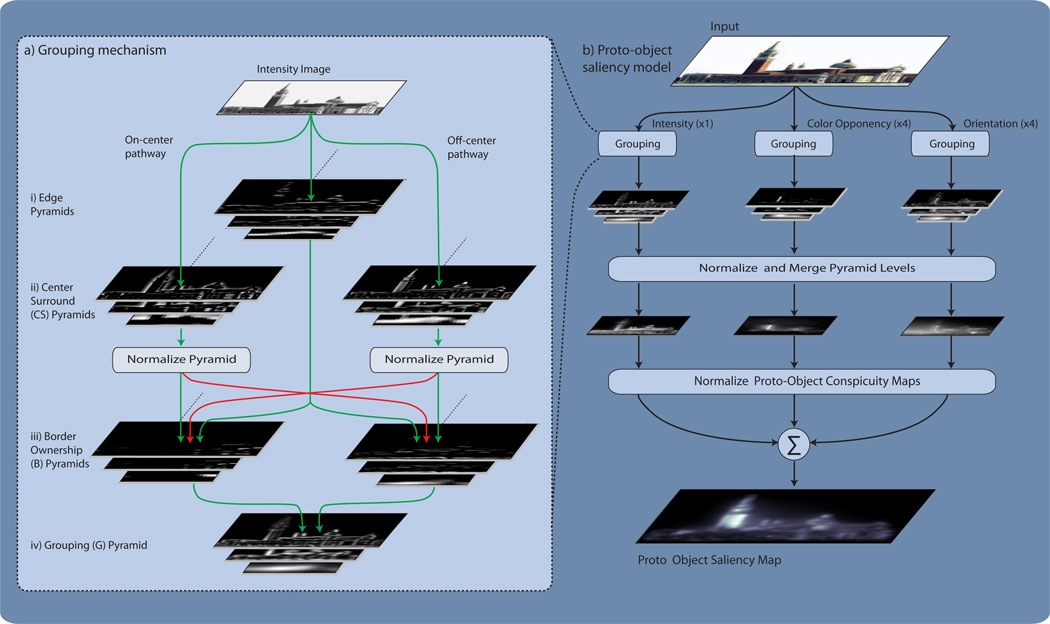

Fig. 5.

a) Overview of the feed-forward grouping mechanism acting on the Intensity channel. Image pyramids are used to provide scale invariance. The first stage of processing extracts object edges for angles ranging between 0 and 3π/4 radians in π/4 increments. Only the extracted edges orientated at 0 radians are shown. Next, bright and dark objects are extracted from the intensity input image using ON-center and OFF-center center-surround mechanisms. The center-surround (𝒞𝒮) pyramids are then normalized before being combined with the edge information to assign border ownership to object edges. Border ownership activity is then integrated in an annular fashion to generate a grouping pyramid. b) The proto-object saliency algorithm. An input image is separated into Intensity, Color Opponency and Orientation channels. The activity of each channel is then passed to the grouping mechanism. The grouping mechanism for both the intensity and color-opponency channels is identical to that shown in (a). However, to ensure that the orientation channels respond only to proto-objects at a given angle, the grouping mechanism for the orientation channels differs slightly. In these channels the ON-center center-surround mechanism has been replaced by an even Gabor filter (with a positive center lobe) and the OFF-center center-surround mechanism has been replaced by an even Gabor filter (with a negative center lobe). These filters are tuned to the orientation of the channel, and the width of their center lobes matches the zero-crossing diameter of the center-surround mechanisms used in the intensity and color-opponency channels. Like the center-surround mechanisms they replace, the output of the Gabor filters provides an estimation of the location of light objects on dark backgrounds and of dark objects on light backgrounds; however, their response is also modulated by the orientation of proto-objects in the visual scene.
The outputs of the Gabor filters are then normalized and the algorithm continues as in (a). The resulting grouping pyramids are then normalized and collapsed to form channel specific conspicuity maps. The conspicuity maps are then normalized, enhancing the activity of channels with unique proto-objects and suppressing the activity of channels with multiple proto-objects. The normalized conspicuity maps are then linearly combined to form the proto-object saliency map.

The intensity channel, ℐ, is generated according to

$$\mathcal{I} = \frac{r + g + b}{3} \tag{16}$$

where r, g and b are the red, green and blue channels of the RGB input image [Itti et al., 1998].

The four color-opponency channels, red-green (ℛ𝒢), green-red (𝒢ℛ), blue-yellow (ℬ𝒴) and yellow-blue (𝒴ℬ), are generated by decoupling hue from intensity through normalizing each of the r, g, b color channels by intensity. However, because hue variations are not perceivable at very low luminance, the normalization is only applied to pixels whose intensity value is greater than 10% of the global intensity maximum of the image. Pixels which do not meet this requirement are set to zero. This ensures that hue variations at very low luminance do not contribute towards object saliency. The normalized r, g, b values are then used to create four broadly tuned color channels, red (ℛ), green (𝒢), blue (ℬ) and yellow (𝒴) [Itti et al., 1998], according to

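$$\mathcal{R} = r - \frac{g + b}{2}, \quad \mathcal{G} = g - \frac{r + b}{2}, \quad \mathcal{B} = b - \frac{r + g}{2}, \quad \mathcal{Y} = \frac{r + g}{2} - \frac{|r - g|}{2} - b \tag{17}$$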

and the opponency signals ℛ𝒢, 𝒢ℛ, ℬ𝒴 and 𝒴ℬ are then created as follows:

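$$\mathcal{RG} = \mathcal{R} - \mathcal{G}, \quad \mathcal{GR} = \mathcal{G} - \mathcal{R}, \quad \mathcal{BY} = \mathcal{B} - \mathcal{Y}, \quad \mathcal{YB} = \mathcal{Y} - \mathcal{B} \tag{18}$$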
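The channel construction in eqs. (16)–(18) can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the function name `intensity_and_opponency` is hypothetical, the input is assumed to be a float RGB array of shape (H, W, 3), and clipping negative values of ℛ, 𝒢, ℬ and 𝒴 to zero is borrowed from Itti et al. [1998] as an assumption, since this section does not state it.

```python
import numpy as np

def intensity_and_opponency(rgb, threshold=0.1):
    """Hypothetical sketch of eqs. (16)-(18) for a float array of shape (H, W, 3)."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    intensity = (r + g + b) / 3.0  # eq. (16)

    # Normalize r, g, b by intensity only where intensity exceeds 10% of the
    # global maximum; all other pixels are set to zero.
    mask = intensity > threshold * intensity.max()
    denom = np.where(mask, intensity, 1.0)  # placeholder avoids division by zero
    rn, gn, bn = (np.where(mask, c / denom, 0.0) for c in (r, g, b))

    # Broadly tuned color channels, eq. (17); clipping negatives to zero is
    # an assumption taken from Itti et al. (1998).
    R = np.maximum(rn - (gn + bn) / 2, 0.0)
    G = np.maximum(gn - (rn + bn) / 2, 0.0)
    B = np.maximum(bn - (rn + gn) / 2, 0.0)
    Y = np.maximum((rn + gn) / 2 - np.abs(rn - gn) / 2 - bn, 0.0)

    # Opponency signals as plain differences, eq. (18).
    opponency = {"RG": R - G, "GR": G - R, "BY": B - Y, "YB": Y - B}
    return intensity, opponency
```

For a uniformly pure-red image, ℐ is 1/3 everywhere and the normalized red value is 3, so ℛ𝒢 = 3 and 𝒢ℛ = −3; pixels darker than 10% of the image maximum contribute no opponency signal at all.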

The four orientation channels, 𝒪α with α ∈ {0, π/4, π/2, 3π/4} radians, are created using ℐ as the input to the grouping algorithm. Orientation selectivity is obtained by replacing the center-surround mechanisms in the grouping algorithm with even Gabor filters oriented at α (see footnote 2). Specifically,

| (19) |

where x′ and y′ are the coordinates in the coordinate frame rotated by α, defined according to

$$x' = x\cos\alpha + y\sin\alpha, \qquad y' = -x\sin\alpha + y\cos\alpha \tag{20}$$

The spatial frequency, ω1, of the Gabor filters is set so that the width of the central lobe of the filters matches the zero crossing diameter of the original center surround mechanisms. The result of this is that 𝒞𝒮D still codes for dark objects on light backgrounds and 𝒞𝒮L still codes for light objects on dark backgrounds; however the activity in these maps is modulated by the orientation of the proto-objects.
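The matching rule for ω1 has a simple closed form. Because the Gaussian envelope of an even Gabor filter is strictly positive, the filter's zero crossings along x′ are those of cos(ω x′), at x′ = ±π/(2ω). The central lobe is therefore π/ω wide, and matching it to a zero-crossing diameter d gives ω = π/d (for example, d = 4 pixels gives ω = π/4 ≈ 0.785). The sketch below builds such a kernel under an assumed parameterization (Gaussian envelope with width σ and aspect ratio γ, cosine carrier along x′, and the standard rotation x′ = x cos α + y sin α, y′ = −x sin α + y cos α); the function names and this exact form are hypothetical, since eq. (19) is not reproduced here.

```python
import numpy as np

def even_gabor(size, alpha, sigma, gamma, omega, positive_center=True):
    """Hypothetical even (cosine-phase) Gabor kernel oriented at alpha radians.

    Assumed form: exp(-(x'^2 + gamma^2 y'^2) / (2 sigma^2)) * cos(omega x').
    `size` should be odd so that the kernel has a central pixel.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_r = x * np.cos(alpha) + y * np.sin(alpha)   # rotated coordinates
    y_r = -x * np.sin(alpha) + y * np.cos(alpha)
    envelope = np.exp(-(x_r**2 + gamma**2 * y_r**2) / (2.0 * sigma**2))
    kernel = envelope * np.cos(omega * x_r)
    # A negative center lobe (dark objects on light backgrounds) is the sign flip.
    return kernel if positive_center else -kernel

def omega_for_lobe_width(diameter):
    """Spatial frequency whose central cosine lobe spans `diameter` pixels."""
    return np.pi / diameter
```

With ω = π/2 the central lobe spans two pixels, so the kernel is 1 at its center and (numerically) zero one pixel away along x′.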

Each of the above feature channels is processed independently by the grouping mechanism to form feature specific grouping pyramids, 𝒢i where i is the channel type. These grouping pyramids are then collapsed to form proto-object conspicuity maps — ℐ̄ for intensity, 𝒞̄ for color-opponency and 𝒪̄ for orientation. This is achieved through a second normalization, 𝒩2(·), and a cross scale addition ⊕ of the pyramid levels. 𝒩2(·) is identical to 𝒩1(·) except that it operates on a single map. ⊕ is achieved by scaling each map to scale k = 8 and then performing a pixel-wise addition.
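The cross-scale addition ⊕ can be sketched as resampling every pyramid level to the size of the level at scale k = 8 and summing pixel-wise. In this minimal sketch the pyramid is assumed to be a dict mapping scale index to a 2D NumPy array, nearest-neighbour resampling is an assumption (the interpolation method is not specified), and `normalize` is a placeholder for 𝒩2(·), whose definition lies outside this section; all names are hypothetical.

```python
import numpy as np

def resize_nearest(m, shape):
    """Nearest-neighbour resampling of a 2D map to `shape` (assumed method)."""
    rows = (np.arange(shape[0]) * m.shape[0] / shape[0]).astype(int)
    cols = (np.arange(shape[1]) * m.shape[1] / shape[1]).astype(int)
    return m[np.ix_(rows, cols)]

def cross_scale_add(pyramid, target_scale=8, normalize=lambda m: m):
    """Collapse a pyramid {scale k: 2D map} into one map at `target_scale`.

    Each level is passed through `normalize` (a stand-in for N2), resampled
    to the size of the target level, and the results are summed pixel-wise.
    """
    target_shape = pyramid[target_scale].shape
    total = np.zeros(target_shape)
    for level in pyramid.values():
        total += resize_nearest(normalize(level), target_shape)
    return total
```

Finer levels are subsampled and coarser levels are upsampled by pixel replication, so every level contributes one value per pixel of the target map.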

For the intensity channel, ℐ̄ is generated according to

| (21) |

𝒞̄ is generated according to

| (22) |

and the orientation conspicuity map is generated according to

| (23) |

The conspicuity maps are then normalized and linearly combined to form the proto-object saliency map 𝒮:

| (24) |

The parameters used in the proto-object saliency algorithm are shown in Table I.

TABLE I.

Model Parameters

| Parameter | Value |
|---|---|
| γ | 0.5000 |
| σ | 2.2400 |
| ω | 1.5700 |
| σi | 0.9000 |
| σo | 2.7000 |
| wb | 1.0000 |
| R0 | 2.0000 |
| wopp | 1.0000 |
| σl | 3.2000 |
| γl | 0.8000 |
| ωl | 0.7854 |
| M | 10.0000 |

IV. Results

Saliency models are often designed to predict either eye fixations or salient objects. Models do not generalize well across these categories [A.Borji, Sihite, and Itti, 2012], so model performance should be compared only between models of the same class. Models designed to predict salient objects first detect the most salient location in the visual scene and then segment the entire extent of the object at that location [A.Borji et al., 2012]. Our model of proto-object saliency works differently: it calculates saliency as a function of proto-objects (as opposed to image features), and the resulting saliency map is used to predict eye fixations. We therefore test our model against four algorithms that are also designed to predict eye fixations. The first is the widely used Itti et al. [1998] algorithm. Although more recent models are better at predicting eye fixations [A.Borji et al., 2012, Borji, Sihite, and Itti, 2012], the Itti et al. [1998] model is biologically plausible and shares many computational mechanisms with ours (the input color scheme, the normalization operator and the method used to combine component feature maps into the final saliency map). Comparing the two is therefore useful, since any improvement in the performance of our model can be attributed to the figure-ground organization in the proto-object saliency map. The primary goal of our work is to evaluate the influence of the proto-object representation on saliency computations, rather than to design a model optimized for eye-fixation prediction without regard to biological relevance. Nevertheless, it is interesting to compare the eye-fixation prediction performance of our model with that of the best saliency models optimized for this purpose.
We therefore compare our model with three other methods: the Graph Based Visual Saliency (GBVS) model [Harel et al., 2007], the Adaptive Whitening Saliency (AWS) model [Garcia-Diaz et al., 2012a,b] and the Hou model [Hou and Zhang, 2008]. These algorithms are less biologically plausible than the Itti et al. [1998] algorithm and ours; however, they offer state-of-the-art performance among feature based saliency algorithms [A.Borji et al., 2012]. The AWS and Hou models were ranked first and second in their ability to predict eye fixations across a variety of image categories [Borji et al., 2012].

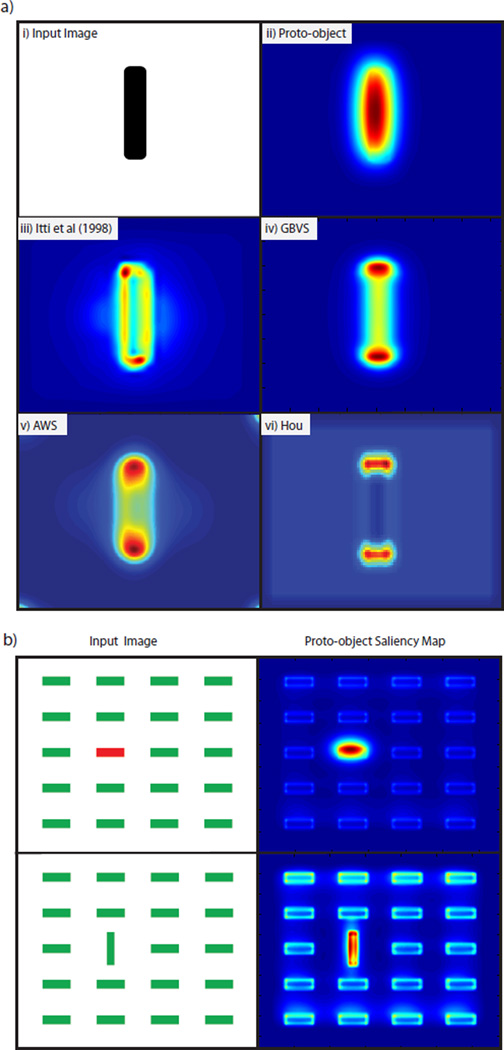

Figure 6(a) shows the response of these algorithms to a vertical bar. The highest activation in the proto-object saliency map corresponds to the center of the bar, while in the four feature based algorithms the peaks of saliency tend to concentrate at the edges of the object. Figure 6(b) shows a simple application of the proto-object saliency map: like all the other algorithms (data not shown), it detects pop-out stimuli.

Fig. 6.

a-i) Image of a vertical bar. a-ii) Proto-object saliency map of the bar. a-iii) Itti et al. [1998] saliency map of the bar. a-iv) GBVS saliency map of the bar. a-v) AWS saliency map of the bar. a-vi) Hou saliency map of the bar. b) Proto-object saliency maps for two feature search tasks. In both cases the item defined by the unique feature receives the highest saliency. In all panels, red indicates high activity and blue the lowest activity.

To quantify how well the algorithms predict perceptual saliency, two experiments were performed. The first measures the ability of the algorithms to predict attention, using eye fixations as an overt measure of where subjects direct their covert attention. This approach, first used by Parkhurst et al. [2002] to quantify the performance of the Itti et al. [1998] algorithm, is based on the premotor theory of attention [Rizzolatti, Riggio, Dascola, and Umiltà, 1987], which posits that the same neural circuits drive both attention and eye fixations. Numerous psychological [Hafed and Clark, 2002, Hoffman and Subramaniam, 1995, Kowler, Anderson, Dosher, and Blaser, 1995, Sheliga, Riggio, and Rizzolatti, 1994, 1995], physiological [Kustov and Robinson, 1996, Moore and Fallah, 2001, 2004, Moore, Armstrong, and Fallah, 2003] and brain imaging [Beauchamp, Petit, Ellmore, Ingeholm, and Haxby, 2001, Corbetta, Akbudak, Conturo, Snyder, Ollinger, Drury, Linenweber, Petersen, Raichle, Van Essen, and Shulman, 1998, Nobre, Gitelman, Dias, and Mesulam, 2000] studies provide strong evidence for this link. Recent experiments [Elazary and Itti, 2008, Masciocchi et al., 2009] have found that subjective interest points are also biased by bottom up factors. The second experiment investigates whether this bias is better explained by proto-objects or by features.

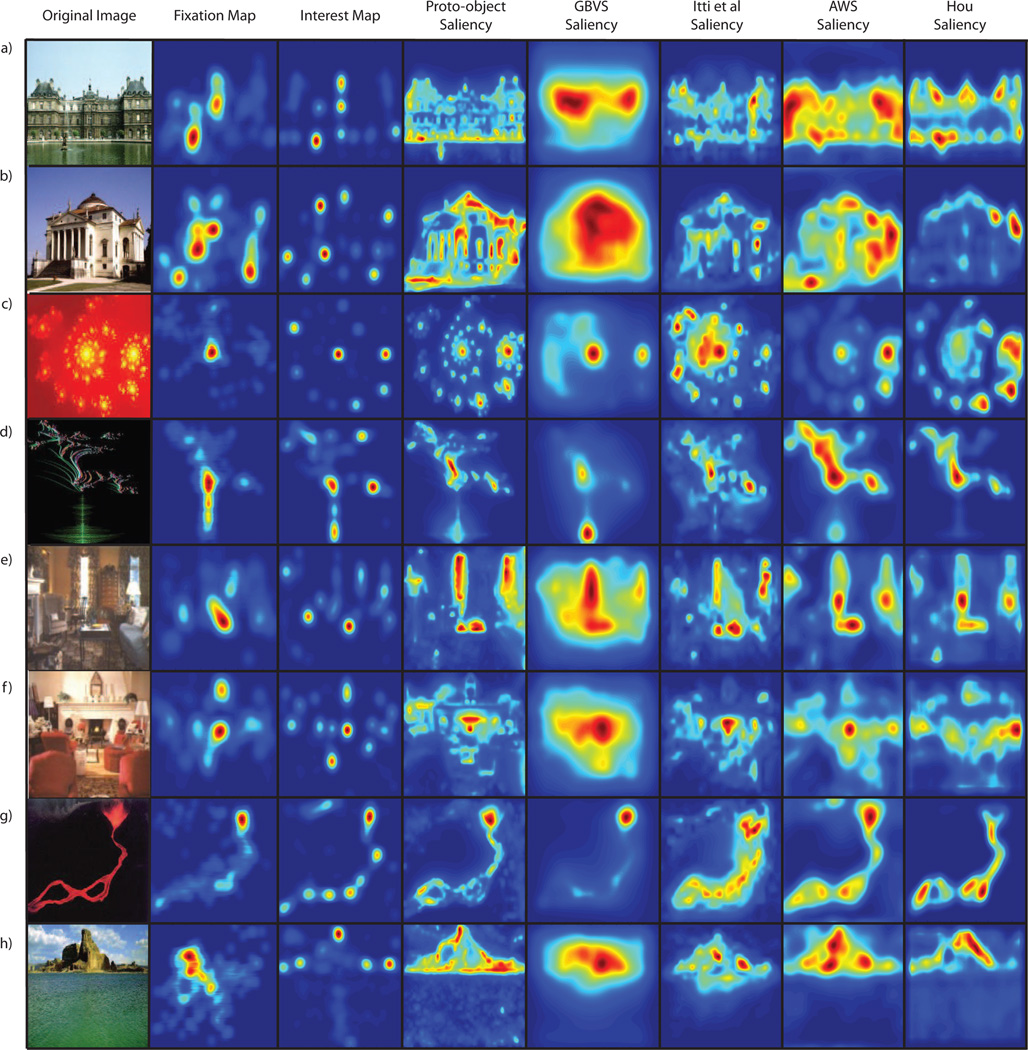

Both experiments used the image database of Masciocchi et al. [2009], which consists of 100 images, 25 in each of four categories (buildings, home interiors, fractals and landscapes). Both fixation and interest data are available for every image. Eye fixation data were collected from 21 subjects during a free viewing task. The images were presented in random order, and every trial began with the subject fixating a cross at the center of the screen. Each image subtended approximately 30.4° × 24.2° of visual angle and was displayed for 5 seconds while the subject's eye movements were recorded with an eye tracker. Eye movements that travelled less than 1° in 25 ms and lasted longer than 100 ms were counted as a single fixation. On average, participants made 12.89 ± 3.11 fixations per trial (i.e., within 5 seconds). Interest point data were collected from 802 subjects in an online experiment. Each subject was given a set of 15 images randomly selected from the data set and was asked to click on the 5 most interesting points in each image. The experiment was self-paced. For full details of how the fixation and interest data were collected, see Masciocchi et al. [2009]. Examples of the images and their corresponding saliency maps are shown in Figure 7.

Fig. 7.

Examples of images and their associated eye-fixation maps, interest maps and saliency maps calculated using the three algorithms discussed in the text. Two images are shown for each image category: buildings (a,b), fractals (c,d), home interiors (e,f) and landscapes (g,h). The eye fixation (interest) map for each image was generated by combining the fixation (interest) points across all subjects and then convolving the combined points with a 2D Gaussian whose standard deviation, 27 pixels, equals the standard deviation of the eye tracker error.
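A fixation (interest) map of this kind can be sketched by summing one isotropic Gaussian per recorded point, which is equivalent to convolving an impulse image with the Gaussian while avoiding truncation at the image border. The function name `fixation_map` and the (x, y) pixel-coordinate convention are assumptions; σ = 27 pixels follows the caption, and leaving the Gaussian unnormalized is a choice that does not change threshold-based comparisons.

```python
import numpy as np

def fixation_map(points, shape, sigma=27.0):
    """Sum of unnormalized isotropic Gaussians centred on (x, y) pixel points.

    `shape` is (height, width); `points` is an iterable of (x, y) pairs
    pooled across subjects for one image.
    """
    yy, xx = np.mgrid[0:shape[0], 0:shape[1]]
    fmap = np.zeros(shape, dtype=float)
    for x, y in points:
        fmap += np.exp(-((xx - x) ** 2 + (yy - y) ** 2) / (2.0 * sigma**2))
    return fmap
```

An interest map is built the same way from the clicked points.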

To measure each saliency map's performance, two popular metrics are used: the area under the Receiver Operating Characteristic curve [Green and Swets, 1966] and the Kullback Leibler divergence [Itti and Baldi, 2005, 2006]. In their original forms, these metrics are highly sensitive to edge effects caused by the way image borders are handled during the filtering stages of the algorithms [Zhang et al., 2008]. These edge effects inadvertently introduce different amounts of center bias into each algorithm. This gives the algorithms varying (false) abilities to explain the center bias (see footnote 3) found in human fixation and interest data, which arises from priming, from the center of the screen being the optimal viewing point [Tatler, 2007, Vitu, Kapoula, Lancelin, and Lavigne, 2004] and from photographers' tendency to place the subject at the center of the image [Parkhurst and Niebur, 2003, Reinagel and Zador, 1999, Schumann, Einhauser-Treyer, Vockeroth, Bartl, Schneider, and Konig, 2008, Tatler, 2007, Tatler, Baddeley, and Gilchrist, 2005, Tseng, Carmi, Cameron, Munoz, and Itti, 2009]. To provide a fair comparison, the metrics are modified to use only saliency values sampled at human fixation (interest) points. This diminishes the influence of edge effects, because human eye fixations and interest points are less likely to lie near image edges. Furthermore, because only saliency values sampled at fixation (interest) locations are used, the center bias affects all aspects of the metrics equally.

The modified Receiver Operating Characteristic (ROC) measures how sensitively the saliency map predicts fixation (interest) points, in terms of true and false positives. To compute an ROC curve, the saliency map is treated as a binary classifier: pixels below a threshold are classified as not fixated and pixels above it as fixated. Varying the threshold, with eye fixations as ground truth, traces out the ROC curve. The area under the curve measures how well the algorithm performs: an area of 1 means perfect prediction, an area of 0.5 means chance, and values below 0.5 indicate anti-correlation. Following Tatler et al. [2005], the eye fixation (interest) points for the image under consideration are used to sample the saliency map when computing true positives, and the set of all fixation (interest) points pooled across all other images (drawn with no repeats) is used to sample the saliency map when computing false positives. The ROC scores are then normalized by the ROC score describing the ability of human fixation (interest) points to predict other human fixation (interest) points. To compute this, the test subjects were randomly partitioned into two equally sized groups. As in Masciocchi et al. [2009], the fixation points from one group were convolved with a 2D Gaussian (standard deviation 27 pixels, equal to the standard deviation of the eye tracker error) to generate a fixation map, and the average ROC score of this map's ability to predict the remaining fixation points was calculated. This process was repeated 10 times, and the average was used for the normalization. The modified ROC metric is the most reliable metric for quantifying a saliency map's ability to predict eye fixations [Borji et al., 2012].
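The shuffled ROC score described above can be sketched without an explicit threshold sweep. The area under the ROC curve equals the probability that a randomly chosen positive sample (saliency at this image's fixations) exceeds a randomly chosen negative sample (saliency at fixations pooled from the other images), with ties counted as one half; this is the standard Mann-Whitney identity. The function names and the (row, column) indexing convention below are hypothetical.

```python
import numpy as np

def roc_auc(pos, neg):
    """Area under the ROC curve as P(pos > neg) + 0.5 * P(pos == neg)."""
    pos = np.asarray(pos, dtype=float)[:, None]
    neg = np.asarray(neg, dtype=float)[None, :]
    return float(np.mean((pos > neg) + 0.5 * (pos == neg)))

def shuffled_auc(saliency, fixations, other_fixations):
    """Positives: saliency at this image's fixations.
    Negatives: saliency at fixations pooled from all other images.
    Both fixation arguments are integer (row, col) pairs."""
    fix = np.asarray(fixations)
    oth = np.asarray(other_fixations)
    pos = saliency[fix[:, 0], fix[:, 1]]
    neg = saliency[oth[:, 0], oth[:, 1]]
    return roc_auc(pos, neg)
```

Under this sketch, the normalized score would be the model's value divided by a hypothetical `human_auc`, the average split-half score obtained by scoring one group's fixation map against the other group's fixations.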

For the modified Kullback Leibler divergence (KLD) metric, the method described in Zhang et al. [2008] is used. For a given image, the KL divergence is computed between the histogram of saliency values sampled at the fixation (interest) points of that image and the histogram of saliency values sampled at the same points but from the saliency map of a different image. This is repeated 99 times, once for each alternative saliency map in the data set, and the average is the KLD score for that image. If the saliency map performs better than chance, the histogram of saliency values at fixated (interesting) locations should be shifted toward higher values than the histogram of values at the same locations sampled from a different saliency map, which results in a high KL divergence between the two distributions. If the saliency map performs at chance, the KL divergence between the two histograms is low. The average KLD score across all images is then normalized by the KLD score describing the ability of human fixation (interest) points to predict other human fixation (interest) points, calculated in the same way as the average ROC score described above.
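A minimal sketch of this KLD score follows. It assumes saliency maps scaled to [0, 1], 10 histogram bins, a small constant added to every bin so that empty bins do not produce log(0), and the direction KL(fixated ∥ other map); Zhang et al. [2008] may differ in these details, and the function names are hypothetical.

```python
import numpy as np

def kl_divergence_hist(p_samples, q_samples, bins=10, eps=1e-12):
    """KL(P || Q) between histograms of two sets of saliency samples in [0, 1].

    Samples outside [0, 1] are ignored by the fixed bin edges.
    """
    edges = np.linspace(0.0, 1.0, bins + 1)
    p, _ = np.histogram(p_samples, bins=edges)
    q, _ = np.histogram(q_samples, bins=edges)
    p = p / p.sum() + eps  # eps keeps empty bins from producing log(0)
    q = q / q.sum() + eps
    return float(np.sum(p * np.log(p / q)))

def kld_score(saliency_maps, fixations, i, bins=10):
    """Average KL divergence for image i against every other image's map,
    sampling all maps at image i's (row, col) fixation points."""
    fix = np.asarray(fixations)
    rows, cols = fix[:, 0], fix[:, 1]
    own = saliency_maps[i][rows, cols]
    scores = [
        kl_divergence_hist(own, other[rows, cols], bins)
        for j, other in enumerate(saliency_maps)
        if j != i
    ]
    return float(np.mean(scores))
```

With 100 images, `kld_score` averages over the 99 alternative maps, matching the procedure described above.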

A. Experiment 1: Predicting Fixation Points

Table II shows the ability of the five algorithms to predict human fixation points as measured by the ROC and KLD metrics, and Table III shows the statistical significance of the differences. Significance was calculated using a paired t-test between the proto-object model's score and the score of the competing algorithm. The AWS algorithm is significantly better (p < 10⁻⁵) than the proto-object saliency algorithm as judged by the ROC metric, but there is no significant difference between the proto-object and AWS algorithms as judged by the KLD metric. There is also no significant difference between the proto-object and Hou algorithms on either metric, and the proto-object saliency algorithm is significantly better at predicting fixation points than the Itti et al. [1998] and GBVS algorithms (p < 10⁻² for the difference between the KLD scores of the proto-object and GBVS algorithms, p < 10⁻⁷ in all other cases).
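The paired t-test pairs each item's score under the proto-object model with the same item's score under a competing algorithm. A standard-library sketch of the test statistic follows; pairing the scores by image is an assumption, and the function name is hypothetical. If SciPy is available, `scipy.stats.ttest_rel(a, b)` returns the same statistic together with a two-sided p-value.

```python
import math
import statistics

def paired_t_statistic(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for matched score lists.

    Requires at least two pairs whose differences are not all identical.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("score lists must be paired (same length)")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    # t = mean(d) / (sd(d) / sqrt(n)), using the sample standard deviation.
    t = statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

For example, differences of 1, 2, 3 and 4 give t = √15 ≈ 3.87 with 3 degrees of freedom.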

TABLE II.

Average Ability of the Saliency Maps to Predict Human Eye Fixations and Subjective Interest Points Across all Images. Also See Table AI in the Appendix for the Results for the Noisy Stimuli

| Algorithm | Fixation points: Area ROC | Fixation points: KLD | Interest points: Area ROC | Interest points: KLD |
|---|---|---|---|---|
| Proto-object saliency | 0.9208 | 1.3048 | 0.7874 | 0.4851 |
| Feature saliency (Itti et al) | 0.8707 | 0.7197 | 0.7473 | 0.2696 |
| Feature saliency (GBVS) | 0.8668 | 1.1056 | 0.7111 | 0.3387 |
| Feature saliency (AWS) | 0.9483 | 1.2239 | 0.8100 | 0.4678 |
| Feature saliency (Hou) | 0.9213 | 1.1796 | 0.7828 | 0.4143 |

TABLE III.

Significance of the Ability of Proto-Object Saliency to Predict Eye Fixations and Subjective Interest Points Compared to the Ability of the Feature Based Methods. Also See Table AII in the Appendix for the Significance of the Noisy Results

| Algorithm | Metric | Fixation points: significant? | Fixation points: p-value | Interest points: significant? | Interest points: p-value |
|---|---|---|---|---|---|
| Feature saliency (Itti et al) | ROC | ✓ | < 10⁻⁹ | ✓ | < 10⁻⁸ |
| Feature saliency (Itti et al) | KLD | ✓ | < 10⁻⁹ | ✓ | < 10⁻¹² |
| Feature saliency (GBVS) | ROC | ✓ | < 10⁻⁷ | ✓ | < 10⁻¹⁶ |
| Feature saliency (GBVS) | KLD | ✓ | < 10⁻² | ✓ | < 10⁻⁷ |
| Feature saliency (AWS) | ROC | ✓ | < 10⁻⁵ | ✓ | < 10⁻⁵ |
| Feature saliency (AWS) | KLD | ✗ | – | ✗ | – |
| Feature saliency (Hou) | ROC | ✗ | – | ✗ | – |
| Feature saliency (Hou) | KLD | ✗ | – | ✓ | < 10⁻² |

B. Experiment 2: Predicting Interest Points

Table II shows the ability of the five algorithms to predict human interest points as measured by the ROC and KLD metrics, and Table III shows the statistical significance of the differences. The AWS algorithm is significantly better (p < 10⁻⁵) than the proto-object saliency algorithm as judged by the ROC metric, but there is no significant difference between the proto-object and AWS algorithms as judged by the KLD metric. There is no significant difference between the proto-object and Hou algorithms as measured by the ROC metric; however, the proto-object algorithm is significantly better (p < 10⁻²) at predicting human interest points as judged by the KLD metric. The proto-object saliency map scores significantly higher (p < 10⁻⁷) than both the Itti et al. [1998] and GBVS algorithms in its ability to predict subjective interest points.

V. Discussion

A. Proto-object saliency

In agreement with Borji et al. [2012], our results show that the AWS and Hou algorithms are the top performing feature based saliency models. However, contrary to the results of Borji et al. [2012], we find that the Itti et al. [1998] and GBVS algorithms perform approximately equally well. The results also show that the AWS algorithm outperforms the proto-object algorithm (significantly according to the ROC metric, not significantly according to the KLD metric), but that the proto-object algorithm performs as well as (if not better than) the Hou algorithm and significantly outperforms both the GBVS and Itti et al. [1998] algorithms. When contrasting these results, note that the Itti et al. [1998] and proto-object models were designed to be as biologically plausible as possible. In contrast, the AWS, Hou and GBVS algorithms, although motivated by biology, take a higher level approach and do not attempt to model specific visual functions.

The AWS algorithm performs so well for two main reasons. Firstly, the Hou, GBVS, Itti and proto-object algorithms all use a limited, fixed feature space. As a result, each algorithm’s performance depends on how well the features used in the algorithms match those found in the images being tested. In contrast, the feature space of the AWS algorithm is adapted to the statistical structure of each image. As a result the AWS algorithm uses the optimal features for each image being tested, as opposed to the other algorithms which use a fixed, non-optimal feature space for all images in the data set.

A second factor that can explain the AWS algorithm's performance is that, although this was not an explicit design goal, the AWS algorithm includes basic aspects of object based attention. Figures 2 and 3 of Garcia-Diaz et al. [2012b] show that the AWS algorithm exhibits early stages of figure-ground segregation [Garcia-Diaz et al., 2012a,b]. These results can be attributed to the whitening used by the AWS algorithm. Whitening is one property of center-surround mechanisms [Doi and Lewicki, 2005, Graham, Chandler, and Field, 2004]. The whitening stages of the AWS algorithm are therefore similar to filtering with center-surround mechanisms, and the output of the AWS whitening stage approaches that of the 𝒞𝒮D and 𝒞𝒮L center-surround cells in the proto-object algorithm. If the normalization used in the AWS algorithm correctly enhances the figure and suppresses the ground in this output, the AWS saliency map will include a basic representation of proto-objects. Moreover, because of the adaptive nature of the AWS algorithm, these proto-objects may be at better-suited scales than the fixed proto-object sizes used in our algorithm. Furthermore, unlike the proto-object algorithm, the AWS algorithm has no competition between the figure-ground responses created during whitening, so its saliency map is blurrier than that of the proto-object algorithm, as can be seen in Figure 7. Blurrier saliency maps tend to outperform sharper ones [A.Borji et al., 2012].

Fig. 3.

Grouping network architecture. Input to the system is the grey parallelogram at the bottom of the figure. For simplicity, only cells and connections at a single scale are shown. High (low) contrast connections and cells indicate high (low) activation levels. Red (blue) lines represent excitatory (inhibitory) connections. Green ellipses indicate simple (𝒮) cell receptive fields. Simple cells are activated by figure edges, and the outputs of the even (Se) and odd (So) cells are combined to form complex (𝒞) cells. The complex cells directly excite the Border Ownership (ℬ) neurons. Note that in this example ℬ0 neurons represent right borders and ℬπ neurons represent left borders; in general, border-ownership selective neurons always come in two populations whose preferred sides of figure differ by 180 degrees. Also note that the mechanism rendering ℬ neurons insensitive to contrast is not shown in this figure (see eq. 12 and preceding equations). An estimate of the objects in the scene is extracted using 𝒞𝒮 neurons, which have large center-surround receptive fields. 𝒞𝒮L neurons have on-center receptive fields and extract bright objects from dark backgrounds; 𝒞𝒮D neurons have off-center receptive fields and extract dark objects from light backgrounds (the subscripts stand for “light” and “dark”). Thus, at each location, 𝒞𝒮D and 𝒞𝒮L neurons have receptive fields of identical shape but opposite polarity. For clarity, only the receptive field of the most responsive 𝒞𝒮 neuron at a given location is shown (purple dashed ellipse). The ℬ cell responses to 𝒞 cells are modulated by the 𝒞𝒮 cells: excitation from the 𝒞𝒮 cell coding for a figure on the ℬ cell's preferred side enhances ℬ cell activity, whilst inhibition from the 𝒞𝒮 cell coding for an object on the non-preferred side suppresses it. This is the start of global scene context integration, as objects with well defined contours strongly bias the ℬ cells to code for their borders while inhibiting ℬ cells coding for ground. ℬ cell activity is then integrated in an annular manner to give the grouping activity represented by the 𝒢 cells.

It should be noted that although the AWS algorithm contains basic notions of figure-ground organization, we still classify it as a feature based algorithm. The figure-ground representation in the AWS algorithm is tightly coupled to the spatial location of features in the image, and the algorithm has no mechanism, such as the grouping cells in the proto-object algorithm, that processes the arrangement of features in a scene into tentative proto-objects. Consequently, in scenarios where features and objects are decoupled, such as the Kimchi et al. [2007] experiment shown in Figure 1, the AWS algorithm cannot assign the highest saliency to the object in the scene. Of the models considered here, our proto-object algorithm is the only one able to explain the Kimchi et al. [2007] results.

The proto-object based model shares many computational mechanisms with the Itti et al. [1998] algorithm. Our results show that by incorporating perceptual scene organization into such a model, it is possible to match the performance of sophisticated, non-biologically plausible models such as the Hou model, which uses predictive coding techniques to calculate saliency. Note also that in the proto-object model the activation in the saliency map is localized to the figures in the images, while the background receives very low saliency values. In the feature based algorithms, by contrast, saliency is not localized to the figures, which is especially evident in Figures 7(c) and (d). Since blurrier saliency maps tend to outperform sharper ones [A.Borji et al., 2012], this localization does not give the proto-object model an advantage under the metrics used here.

The figure-ground organization in the proto-object saliency algorithm results from the 𝒢 cells, which integrate object features into proto-objects using large annular receptive fields (see Figure 4). These receptive fields bias the grouping cell activity, and consequently the salient locations, to fall on the centroids and medial axes of the proto-objects [Ardila, Mihalas, von der Heydt, and Niebur, 2012]. An example is shown in Figure 6(a-ii), where the highest activation in the saliency map corresponds to the center of the bar. In the feature based algorithms, by contrast, the peaks of saliency tend to concentrate at the edges of objects, as shown in Figures 6(a-iii) through (a-vi). The proto-object saliency results are consistent with those of Einhauser et al. [2008] and Nuthmann and Henderson [2010], who showed that object centers are a better predictor of human fixations than object features.

In the Einhauser et al. [2008] study, subjects were presented with 99 images. The test subjects were asked to perform artistic evaluation, analysis of content and search on the data set whilst their eye fixations were recorded. Immediately after an image was presented, the subjects were asked to list objects which they saw. A hand-segmented object based saliency map was then created where the saliency of objects within the map was proportional to the recall frequency of the objects across all test subjects. From this, Einhauser et al. [2008] concluded that saliency only has an indirect effect on attention by acting through recognized objects. Consequently, they suggest that saliency is not just a result of preprocessing steps to higher vision but instead incorporates cognitive functions such as object recognition.

In a complementary experiment, Nuthmann and Henderson [2010] presented participants with 135 color images of real-world scenes. The pictures were divided into blocks of 45 images and while viewing each block, the subject was asked to either take a memory test, perform a search task, or evaluate aesthetic preferences. Eye fixations were recorded and it was found that the Preferred Viewing Location (PVL) of an object is always towards its center. In a second experiment the PVL of “saliency proto-objects”, generated using the Walther and Koch [2006] algorithm, was investigated. A PVL existed for proto-objects which overlapped real objects; however, no PVL was found for saliency proto-objects which did not overlap real objects. Thus, when the influence of real objects is removed from saliency proto-objects, little evidence for a PVL remains. Consequently, Nuthmann and Henderson [2010] argue that saliency proto-objects are not selected for fixation and attention. Instead they hypothesize that a scene is parsed into constituent objects which are prioritized according to cognitive relevance.

At first glance it may appear as if the findings of Nuthmann and Henderson [2010] are contradictory to the work presented in this paper; however, a distinction must be made between the Walther and Koch [2006] proto-objects and the proto-objects of this work. Both are close to the notion of proto-objects as defined by Rensink [2000], but their implementations and interpretations are fundamentally different. The Walther and Koch [2006] model uses the Itti et al. [1998] feature based saliency algorithm to compute the most salient location in the visual field. The shape of the proto-object at that location is then calculated by a spreading of activation around the most conspicuous feature at that location. The proto-objects are purely a function of individual features: there is no notion of object in their proto-objects. In contrast, the proto-objects in this paper are represented by grouping cells whose activity is dependent on not only the individual features of an object but also on Gestalt principles of perceptual organization. The grouping cells provide a handle for selective attention by not only providing the spatial location of potential objects within a scene but also by acting as pointers to the features which constitute an object, akin to how a symbolic pointer in a computer program can point to a structure composed of many individual elements. When cast in the saliency framework of section III, the normalized grouping cell activity provides a measure of how unique an object is and consequently a measure of its saliency. This is in line with recent neurophysiological results which show that border ownership is computed independently of (top down) attention [Qiu et al., 2007]. This suggests that saliency is a function of proto-objects and not the other way around, as implicitly assumed in the Walther and Koch [2006] implementation.
In line with Rensink [2000]’s proto-object definition, the grouping cells are the highest output level of low level visual processes and provide a purely feed forward measure of object based attention. However, again following Rensink, grouping cells can also act as the lowest level of top down processes. In fact, by using a similar network of border ownership and grouping cells, Mihalaş et al. [2011] have shown that grouping cells can explain many psychophysical results of top down attention (this is discussed in more detail below). Using this distinction, the experiments of Nuthmann and Henderson [2010] do not exclude proto-objects as a mechanism through which object based attention can be explained. Instead, their results bolster the growing literature [Cave and Bichot, 1999, Duncan, 1984, Egly et al., 1994, He and Nakayama, 1995, Ho and Yeh, 2009, Ito and Gilbert, 1999, Matsukura and Vecera, 2006, Qiu et al., 2007, Roelfsema et al., 1998, Scholl, 2001, Wannig et al., 2011] that supports the idea that attention is object and not feature based.

Both Einhauser et al. [2008], and Nuthmann and Henderson [2010] posit that their results can only be explained through higher order neural mechanisms, such as object recognition, which are used to guide object based attention. While there is no denying that attention has a strong top down component, our results provide an alternative explanation, namely that object based saliency, acting through proto-objects, can also direct attention. A recent study by Monosov, Sheinberg, and Thompson [2010] shows that, in a visual search task, attention is applied before object recognition is performed. This agrees with a proto-object based theory of attention, as neurophysiological results show that border ownership signals emerge 50–70 ms after stimulus presentation [Qiu et al., 2007, Zhou et al., 2000], while object recognition is a slower process occurring 120–150 ms after stimulus presentation [Johnson and Olshausen, 2003].

B. Object based bias for interest

Experiments by Masciocchi et al. [2009] and Elazary and Itti [2008] show that subjective interest points are biased by a bottom up component. Using the same image database as this paper (see section IV for details), Masciocchi et al. [2009] investigated the correlation between subjective interest points, eye fixations and salient locations generated using the Itti et al. [1998] saliency algorithm. Interestingly, Masciocchi et al. [2009] found that participants agreed on which things were “interesting” even though interest point selection was entirely subjective and performed independently by each participant. Furthermore, positive correlations were found between interest points and both eye fixations and salient locations. This suggests that the selection of interesting locations is in part driven by bottom up factors and that interest points can serve as an indicator of bottom up attention [Masciocchi et al., 2009]. In the Elazary and Itti [2008] experiment, participants were not explicitly asked to label interesting locations; instead, interest was defined as the property that makes participants label one object over another in the LabelMe database (available at http://labelme.csail.mit.edu). The Itti et al. [1998] saliency algorithm was then applied to the database and its ability to predict interesting objects was analyzed. Elazary and Itti [2008] found that saliency was a significant predictor of which objects participants chose to label: in 76% of the images, the saliency algorithm finds at least one labeled object within the three most salient locations of the image. This indicates that interesting objects within a scene depend not only on higher cognitive processes but also on low level visual properties [Elazary and Itti, 2008].

Both Masciocchi et al. [2009] and Elazary and Itti [2008] surmise that the segmentation of a scene into objects is an important factor in interest point selection. Our Experiment 2 investigates this by testing whether the bottom up bias of subjective interest is better explained by proto-objects or by features. The results (see section IV) show that proto-object based saliency matches or outperforms feature based saliency algorithms (except for the AWS algorithm) in its ability to predict interest points. This indicates that the bottom up component of interest depends not only on saliency but also on the perceptual organization of a scene into tentative objects.
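How well a saliency map predicts interest points is commonly scored with ROC analysis [Green and Swets, 1966]. The following minimal Python sketch is an illustration, not the evaluation code behind section IV. It computes the area under the ROC curve from saliency values sampled at interest points (positives) and at control locations (negatives), using the Mann–Whitney formulation: the probability that a randomly chosen positive outscores a randomly chosen negative, with ties counted as one half. The function names and the choice of control locations are assumptions.

```python
from typing import List, Sequence, Tuple

Grid = List[List[float]]
Point = Tuple[int, int]


def sample(sal: Grid, points: Sequence[Point]) -> List[float]:
    """Saliency values at the given (row, col) locations."""
    return [sal[r][c] for r, c in points]


def roc_auc(pos: Sequence[float], neg: Sequence[float]) -> float:
    """Area under the ROC curve via the Mann-Whitney statistic.
    O(len(pos) * len(neg)), which is adequate for a few hundred points."""
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative value")
    score = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                score += 1.0   # positive ranked above negative
            elif p == n:
                score += 0.5   # ties count as half
    return score / (len(pos) * len(neg))
```

A value of 0.5 corresponds to chance and 1.0 means every interest point is more salient than every control location. Drawing the negatives from interest points recorded on other images is one commonly used way to reduce the influence of center bias on the score.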

C. The interface theory of attention

The work presented in this paper uses a feed forward network of ℬ and 𝒢 cells to compute figure ground organization and saliency. In a complementary study, Mihalaş et al. [2011] demonstrated that a recurrent model, using a similar network of ℬ and 𝒢 cells, could perform top-down object based attention. When a broadly tuned, spatial top-down signal was applied to the grouping neurons representing a given proto-object, attention backpropagated through the network, enhancing the local features (ℬ cells) of the object: top-down attention auto-localized and auto-zoomed to fit the contours of the proto-object [Mihalaş et al., 2011]. Using this network, Mihalaş et al. [2011] were able to reproduce the psychophysical phenomena described by Egly et al. [1994] and Kimchi et al. [2007].
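The feedback idea described above can be caricatured in a few lines of Python. This is a toy, single-step sketch, not the recurrent dynamics of Mihalaş et al. [2011]: each grouping unit (𝒢) sums the border-ownership units (ℬ) on its contour, a top-down input multiplicatively raises the activity of the selected grouping unit, and each ℬ unit is then scaled by the extra activity of the grouping units it feeds. The dictionary layout, the function name `attend` and the `gain` parameter are hypothetical.

```python
from typing import Dict, List


def attend(b: Dict[str, float], groups: Dict[str, List[str]],
           top_down: Dict[str, float], gain: float = 0.5) -> Dict[str, float]:
    """One feedforward pass (B -> G), a top-down boost on G,
    and one feedback pass (G -> B). Toy model, assumed for illustration."""
    # Feedforward: each grouping unit integrates the border units on its contour.
    g = {name: sum(b[k] for k in members) for name, members in groups.items()}
    # Top-down input multiplicatively boosts the selected grouping units.
    g_att = {name: act * (1.0 + top_down.get(name, 0.0)) for name, act in g.items()}
    # Feedback: a border unit is enhanced by the extra activity of every
    # grouping unit it projects to; units of unattended objects are unchanged.
    out: Dict[str, float] = {}
    for k, val in b.items():
        extra = sum(g_att[n] - g[n] for n, members in groups.items() if k in members)
        out[k] = val * (1.0 + gain * extra)
    return out
```

Only the ℬ units on the contour of the attended proto-object are enhanced, which loosely mirrors the auto-localization described in the text.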

Together these two studies provide support for the “interface” theory of attention [Craft et al., 2007, Qiu et al., 2007, Zhou et al., 2000], in which the neuronal network that creates figure-ground organization provides an interface for bottom-up and top-down selection processes. This is a natural fit with coherence theory [Rensink, 2000], where the 𝒢 cells provide a handle or interface to the proto-objects for both bottom up and top down processing. In the interface theory, the magnitude of attentional enhancement depends not on where in the cortical hierarchy the attentional processing is performed but on how involved the local circuits are in processing contextual scene information [Kastner and McMains, 2007].

D. Grouping cell receptive fields and local features

The large annular receptive fields of the 𝒢 cells are an important aspect of the grouping algorithm; they bias grouping activity toward figures exhibiting the Gestalt principles of continuity and proximity. Although there is no direct electrophysiological evidence yet that cells with such receptive fields exist, psychophysical evidence points to special integration mechanisms for concentric circular patterns [Sigman, Cecchi, Gilbert, and Magnasco, 2001, Wilson, Wilkinson, and Asaad, 1997].

Furthermore, there is neurophysiological evidence for neurons selective for concentric gratings [Gallant, Connor, Rakshit, Lewis, and Van Essen, 1996]. Alternatively, the 𝒢 cells need not have complete annular receptive fields [Craft et al., 2007]; instead, their responses could be computed through intermediate cells tuned to curved contour segments or combinations of such segments (as was done in our computations). Cells exhibiting such properties have been shown to exist in extrastriate cortex [Brincat and Connor, 2004, Pasupathy and Connor, 2001, Yau, Pasupathy, Brincat, and Connor, 2013].
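A grouping response with an annular receptive field can be sketched in Python as follows. Under our assumptions, each border unit has a position, a unit vector pointing toward the side it assigns to the figure, and an activity; a grouping unit centered at (cx, cy) weights each border unit by a Gaussian ring of the given radius and by how well its ownership vector points at the center. This sketch integrates border units directly over the annulus rather than through intermediate curvature-tuned cells, and the names `BorderUnit`, `grouping_response` and `circle`, as well as the Gaussian ring profile, are illustrative choices rather than the model's exact kernel.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class BorderUnit:
    x: float
    y: float
    ox: float        # unit vector toward the side assigned as figure
    oy: float
    activity: float


def grouping_response(units: Iterable[BorderUnit], cx: float, cy: float,
                      radius: float, sigma: float = 1.0) -> float:
    """Sum of border activity on a Gaussian ring around (cx, cy), counting
    only the component of each ownership vector that points at the center."""
    total = 0.0
    for u in units:
        dx, dy = cx - u.x, cy - u.y
        d = math.hypot(dx, dy)
        if d == 0.0:
            continue
        ring = math.exp(-((d - radius) ** 2) / (2.0 * sigma ** 2))
        alignment = max(0.0, (u.ox * dx + u.oy * dy) / d)
        total += u.activity * ring * alignment
    return total


def circle(n: int, r: float, inward: bool = True) -> List[BorderUnit]:
    """Hypothetical test contour: n unit-activity border units on a circle."""
    s = -1.0 if inward else 1.0
    units = []
    for i in range(n):
        t = 2.0 * math.pi * i / n
        units.append(BorderUnit(r * math.cos(t), r * math.sin(t),
                                s * math.cos(t), s * math.sin(t), 1.0))
    return units
```

A closed contour whose border units all assign ownership to the interior drives the centered grouping unit maximally, while the same contour with outward ownership drives it not at all, which is the bias toward compact, closed figures that the annular receptive field is meant to capture.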

In this work, grouping is assigned through the Gestalt principles of proximity and continuity. Although excluded from this iteration of the model, other Gestalt principles, such as symmetry, are also important. Indeed, a saliency map which uses local mirror symmetry has been found to be a strong predictor of eye fixations [Kootstra, de Boer, and Schomaker, 2011]. Furthermore, in this model, border ownership assignment is purely a result of (estimated) 𝒢 cell activity and local orientation features. Additional features such as T-junctions [Craft et al., 2007] and stereoscopic cues [Qiu and von der Heydt, 2005] are also important for correct border ownership assignment. For a more accurate description of perceptual scene organization, future iterations of the model should include such mechanisms.
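The statement that border ownership follows from estimated 𝒢 activity and local orientation can be made concrete with a small Python sketch. It assumes a pair of border-ownership units at an edge location, one preferring the figure on each side of the edge, each driven by the local oriented edge energy and multiplicatively modulated by grouping activity sampled a fixed distance away along the edge normal; the side with the larger response is taken as the owner. The callable `g`, the helper `bump`, the `offset` and `k` parameters and the multiplicative form are all assumptions for illustration.

```python
import math
from typing import Callable, Tuple

GMap = Callable[[float, float], float]  # grouping activity at (x, y)


def border_ownership(g: GMap, x: float, y: float, theta: float, edge: float,
                     offset: float = 2.0, k: float = 1.0) -> Tuple[float, float]:
    """Responses of the two ownership units at an edge of orientation theta.
    Side 'a' lies along the normal (-sin theta, cos theta), side 'b' opposite."""
    nx, ny = -math.sin(theta), math.cos(theta)
    g_a = g(x + offset * nx, y + offset * ny)
    g_b = g(x - offset * nx, y - offset * ny)
    # Local orientation evidence gates both units; grouping activity biases them.
    return edge * (1.0 + k * g_a), edge * (1.0 + k * g_b)


def owner_side(g: GMap, x: float, y: float, theta: float, edge: float) -> str:
    b_a, b_b = border_ownership(g, x, y, theta, edge)
    if b_a == b_b:
        return "ambiguous"
    return "a" if b_a > b_b else "b"


def bump(cx: float, cy: float, sigma: float = 3.0) -> GMap:
    """Hypothetical grouping map: a Gaussian blob of activity centered on a figure."""
    return lambda x, y: math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
```

In this sketch an edge with no local orientation energy receives no ownership signal at all, and grouping activity alone can only bias, not create, a border ownership response.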

VI. Conclusion

A biologically plausible model of proto-object saliency has been presented. The model is constructed out of basic computational mechanisms with known biological correlates, yet it is able to match the performance of state-of-the-art, non-bio-inspired algorithms. The performance of the algorithm strengthens the growing body of evidence suggesting that attention is object based. In addition, the model was used to investigate whether the bottom up bias in subjective interest is object or feature based. To the authors’ knowledge, this is the first experiment of this kind. The results support the idea that the bias is object based. Lastly, the model supports the “interface” hypothesis, which states that attention is a result of how involved the local circuitry of perceptual organization is in processing the visual scene.

Highlights.

Our model shows proto-objects are important in predicting perceptual saliency

Border ownership cells (discovered in monkey V2) help provide perceptual scene organization

Border ownership responses are integrated with Gestalt factors to estimate proto-object locations

The model provides support for the interface theory of attention

ACKNOWLEDGMENTS

We acknowledge support by the Office of Naval Research under MURI grant N000141010278 and by NIH under R01EY016281-02.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

The dichotomy between top-down and bottom-up attention has recently been challenged by Awh, Belopolsky, and Theeuwes [2012]. These authors argue that the traditional definition of top-down attention, which includes all signals internal to the organism, conflates the effects of current selection goals with selection history. Although selection history can influence an organism’s goals through associative learning and memory, it also includes effects which countermand goal driven selection. Awh et al. [2012] thus propose that the notion of top-down attention should be changed so that current goals and selection history are distinct categories. Attention would thus consist of three processes: current goals, selection history and physical salience (bottom up attention). Our work is only concerned with the last of these factors.

This is only done in the orientation channels. The intensity and color opponency channels use the regular center surround operators as described in Section III-A.

The Itti et al. [1998], AWS, Hou and proto-object saliency algorithms were not designed to account for center bias. However, by more heavily connecting graph nodes at the center of the saliency map, the GBVS algorithm explicitly incorporates a center bias. To ensure a fair evaluation of the algorithms, the GBVS algorithm was configured to have uniform connections across the saliency map, eliminating the center bias.

References

- Borji A, Sihite DN, Itti L. Salient Object Detection: A Benchmark. Proc. European Conference on Computer Vision (ECCV); 2012 Oct.

- Adelson EH, Bergen JR. Spatiotemporal energy models for the perception of motion. J Opt Soc Am A. 1985 Feb;2:284–299. doi: 10.1364/josaa.2.000284.

- Ardila D, Mihalas S, von der Heydt R, Niebur E. Medial axis generation in a model of perceptual organization. 46th Annual Conference on Information Sciences and Systems. IEEE Press; 2012. pp. 1–4.

- Awh E, Belopolsky A, Theeuwes J. Top-down versus bottom-up attentional control: a failed theoretical dichotomy. Trends in Cognitive Sciences. 2012;16(8):437–443. doi: 10.1016/j.tics.2012.06.010.

- Basso MA, Wurtz RH. Modulation of neuronal activity in superior colliculus by changes in target probability. J. Neurosci. 1998 Sep;18:7519–7534. doi: 10.1523/JNEUROSCI.18-18-07519.1998.

- Beauchamp MS, Petit L, Ellmore TM, Ingeholm J, Haxby JV. A parametric fMRI study of overt and covert shifts of visuospatial attention. NeuroImage. 2001;14(2):310–321. doi: 10.1006/nimg.2001.0788.

- Bisley JW, Goldberg ME. Neuronal activity in the lateral intraparietal area and spatial attention. Science. 2003 Jan;299:81–86. doi: 10.1126/science.1077395.

- Borji A, Sihite DN, Itti L. Quantitative analysis of human-model agreement in visual saliency modeling: a comparative study. IEEE Transactions on Image Processing. 2012. doi: 10.1109/TIP.2012.2210727.

- Brincat SL, Connor CE. Underlying principles of visual shape selectivity in posterior inferotemporal cortex. Nature Neurosci. 2004;7:880–886. doi: 10.1038/nn1278.

- Broadbent DE. Perception and Communication. London: Pergamon; 1958.

- Cave KR, Bichot NP. Visuospatial attention: beyond a spotlight model. Psychon Bull Rev. 1999 Jun;6:204–223. doi: 10.3758/bf03212327.

- Constantinidis C, Steinmetz MA. Posterior parietal cortex automatically encodes the location of salient stimuli. J. Neurosci. 2005 Jan;25:233–238. doi: 10.1523/JNEUROSCI.3379-04.2005.

- Corbetta M, Akbudak E, Conturo TE, Snyder AZ, Ollinger JM, Drury HA, Linenweber MR, Petersen SE, Raichle ME, Van Essen DC, Shulman GL. A common network of functional areas for attention and eye movements. Neuron. 1998 Oct;21:761–773. doi: 10.1016/s0896-6273(00)80593-0.

- Craft E, Schütze H, Niebur E, von der Heydt R. A neural model of figure-ground organization. J. Neurophysiol. 2007;97(6):4310–4326. doi: 10.1152/jn.00203.2007.

- Doi E, Lewicki MS. Relations between the statistical regularities of natural images and the response properties of the early visual system. Japanese Cognitive Science Society. Kyoto University; 2005 Jul. pp. 1–8.

- Duncan J. Selective attention and the organization of visual information. J Exp Psychol Gen. 1984 Dec;113:501–517. doi: 10.1037//0096-3445.113.4.501.

- Egly R, Driver J, Rafal RD. Shifting visual attention between objects and locations: evidence from normal and parietal lesion subjects. J Exp Psychol Gen. 1994 Jun;123:161–177. doi: 10.1037//0096-3445.123.2.161.

- Einhauser W, Spain M, Perona P. Objects predict fixations better than early saliency. J. Vis. 2008;8(14):1–26. doi: 10.1167/8.14.18.

- Elazary L, Itti L. Interesting objects are visually salient. J. Vis. 2008;8:1–15. doi: 10.1167/8.3.3.

- Gallant JL, Connor CE, Rakshit S, Lewis JW, Van Essen DC. Neural responses to polar, hyperbolic, and Cartesian gratings in area V4 of the macaque monkey. J. Neurophysiol. 1996;76(4):2718–2739. doi: 10.1152/jn.1996.76.4.2718.

- Garcia-Diaz A, Fdez-Vidal XR, Pardo XM, Dosil R. Saliency from hierarchical adaptation through decorrelation and variance normalization. Image and Vision Computing. 2012a;30(1):51–64.

- Garcia-Diaz A, Leborán V, Fdez-Vidal XR, Pardo XM. On the relationship between optical variability, visual saliency, and eye fixations: A computational approach. Journal of Vision. 2012b;12(6). doi: 10.1167/12.6.17.

- Graham DJ, Chandler DM, Field DJ. Decorrelation and response equalization with center-surround receptive fields. Journal of Vision. 2004;4(8):276.

- Green DM, Swets JA. Signal detection theory and psychophysics. New York: John Wiley; 1966.

- Hafed ZM, Clark JJ. Microsaccades as an overt measure of covert attention shifts. Vis. Res. 2002;42(22):2533–2545. doi: 10.1016/s0042-6989(02)00263-8.

- Harel J, Koch C, Perona P. Graph-based visual saliency. In: Schölkopf B, Platt J, Hoffman T, editors. NIPS. Cambridge, MA: MIT Press; 2007. pp. 545–552.

- He ZJ, Nakayama K. Visual attention to surfaces in three-dimensional space. Proc. Natl. Acad. Sci. U.S.A. 1995 Nov;92:11155–11159. doi: 10.1073/pnas.92.24.11155.

- Ho MC, Yeh SL. Effects of instantaneous object input and past experience on object-based attention. Acta Psychol (Amst). 2009 Sep;132:31–39. doi: 10.1016/j.actpsy.2009.02.004.

- Hoffman JE, Subramaniam B. The role of visual attention in saccadic eye movements. Percep. Psychophys. 1995;57(6):787–795. doi: 10.3758/bf03206794.

- Hou X, Zhang L. Dynamic visual attention: searching for coding length increments. NIPS. 2008:681–688.

- Ito M, Gilbert CD. Attention modulates contextual influences in the primary visual cortex of alert monkeys. Neuron. 1999 Mar;22:593–604. doi: 10.1016/s0896-6273(00)80713-8.

- Itti L, Baldi P. A principled approach to detecting surprising events in video. IEEE Proc. CVPR. Vol. 1. Washington, DC; 2005 Sep. pp. 631–637.

- Itti L, Baldi P. Bayesian surprise attracts human attention. NIPS. Vol. 18. Cambridge, MA: MIT Press; 2006. pp. 1–8.