Abstract

Nonparametric varying coefficient models are useful for studying the time-dependent effects of variables. Many procedures have been developed for estimation and variable selection in such models. However, existing work has focused on the case when the number of variables is fixed or smaller than the sample size. In this paper, we consider the problem of variable selection and estimation in varying coefficient models in sparse, high-dimensional settings when the number of variables can be larger than the sample size. We apply the group Lasso and basis function expansion to simultaneously select the important variables and estimate the nonzero varying coefficient functions. Under appropriate conditions, we show that the group Lasso selects a model of the right order of dimensionality, selects all variables whose coefficient functions have norms greater than a certain threshold level, and is estimation consistent. However, the group Lasso is in general not selection consistent and tends to select variables that are not important in the model. In order to improve the selection results, we apply the adaptive group Lasso. We show that, under suitable conditions, the adaptive group Lasso has the oracle selection property in the sense that it correctly selects important variables with probability converging to one. In contrast, the group Lasso does not possess such an oracle property. Both approaches are evaluated using simulation and demonstrated on a data example.

Keywords: Basis expansion, group Lasso, high-dimensional data, non-parametric coefficient function, selection consistency, sparsity

1. Introduction

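Consider a linear varying coefficient model with $p_n$ variables,

$$y_i(t) = \sum_{k=1}^{p_n} x_{ik}(t)\,\beta_k(t) + \varepsilon_i(t), \qquad i = 1, \ldots, n, \tag{1.1}$$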

where yi(t) is the response variable for the ith subject at time point t ∈ T, T is the time interval on which the measurements are taken, εi(t) is the error term, xik(t) is a covariate with a time-varying effect, and βk(t) is the corresponding smooth coefficient function. Such a model is useful for investigating the time-dependent effects of covariates on responses measured repeatedly. One well-known example is longitudinal data analysis (Hoover et al. (1998)), where the response for the ith experimental subject in the study is observed on ni occasions, and the observations at times {tij : j = 1, . . . , ni} are correlated. Another important example is the functional response model (Rice (2004)), where the response yi(t) is a smooth real function, although in practice only yi(tij), j = 1, . . . , ni, are observed. In both examples, the response yi(t) is a random process and the covariate xi(t) = (xi1(t), . . . , xip(t))′ is a p-dimensional vector of random processes. In this paper, we investigate the selection of the important covariates and the estimation of their corresponding coefficient functions in high-dimensional settings, in particular the case $p_n > n$, under the assumption that the number of important covariates is “small” relative to the sample size. We propose penalized methods for variable selection and estimation in (1.1) based on basis expansion of the coefficient functions, and show that, under appropriate conditions, the proposed methods can select the important variables with high probability and estimate the coefficient functions efficiently.

Many methods have been developed for variable selection and estimation in varying coefficient models (1.1). See, for example, Fan and Zhang (2000) and Wu and Chiang (2000) for the local polynomial smoothing method; Wang and Xia (2008) for the local polynomial method with a Lasso penalty; Huang, Wu, and Zhou (2004) and Qu and Li (2006) for basis expansion and the spline method; Chiang, Rice, and Wu (2001) for the smoothing spline method; and Wang, Li, and Huang (2008) for basis function approximation with the SCAD penalty (Fan and Li (2001); Fan and Lv (2010)). In addition to these methods, much progress has been made in understanding properties of the resulting estimators such as selection consistency, rates of convergence, and asymptotic distribution. However, in all these studies, the number of variables p is fixed or less than the sample size n. To the best of our knowledge, there has been no work on variable selection and estimation in varying coefficient models in sparse, high-dimensional situations where p is larger than n.

There has been much work on the selection and estimation of groups of variables. For example, Yuan and Lin (2006) proposed the group Lasso, group Lars, and group nonnegative garrote methods. Kim, Kim, and Kim (2006) considered the group Lasso in the context of generalized linear models. Zhao, Rocha, and Yu (2008) proposed a composite absolute penalty for group selection that can be considered a generalization of the group Lasso. Huang et al. (2007) considered the group bridge approach which can be used for simultaneous group and within group variable selection. However, there has been no investigation of these methods in the context of high-dimensional varying coefficient models.

In this paper, we apply the group Lasso and basis expansion to simultaneously select the important variables and estimate the coefficient functions in (1.1). With basis expansion, each coefficient function is approximated by a linear combination of a set of basis functions. Thus the selection of important variables and estimation of the corresponding coefficient functions amounts to the selection and estimation of groups of coefficients in the linear expansions. It is natural to apply the group Lasso, since it takes into account the group structure in the approximation model. We show that, under appropriate conditions, the group Lasso selects a model of the right order of dimensionality, selects all variables with coefficient function norms greater than a certain threshold level, and is estimation consistent. In order to achieve selection consistency, we apply the adaptive group Lasso. We show that the adaptive group Lasso can correctly select important variables with probability converging to one based on an initial consistent estimator. In particular, we use the group Lasso to obtain the initial estimator for the adaptive group Lasso. This approach follows the idea of the adaptive Lasso (Zou (2006)). An important aspect of our results is that p can be much larger than n.

The rest of the paper is organized as follows. In Section 2, we describe the procedure for selection and estimation using the group Lasso and the adaptive group Lasso with basis expansion. In Section 3, we state the results on the estimation consistency of the group Lasso, and in Section 4 the results on the selection consistency of the adaptive group Lasso, in high-dimensional settings. In Section 5, simulations and a data example are used to illustrate the proposed methods. A summary and discussion, as well as the proofs, follow the numerical studies.

2. Basis Expansion and Penalized Estimation

Suppose that the coefficient function βk can be approximated by a linear combination of basis functions,

$$\beta_k(t) \approx \sum_{l=1}^{d_k} \gamma_{kl} B_{kl}(t), \qquad t \in T,\; k = 1, \ldots, p, \tag{2.1}$$

where Bkl(t), t ∈ T, l = 1, . . . , dk, are basis functions and dk is the number of basis functions, which is allowed to increase with the sample size n.

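Let Gk denote all functions that have the form $\sum_{l=1}^{d_k}\gamma_{kl}B_{kl}(t)$ for a given basis system {Bkl, l = 1, . . . , dk}. For gk ∈ Gk, define the approximation error by

$$\rho_k(t) = \beta_k(t) - g_k(t), \qquad t \in T.$$

Let $\mathrm{dist}(\beta_k, G_k) = \inf_{g_k \in G_k}\sup_{t \in T}|\beta_k(t) - g_k(t)|$ be the L∞ distance between βk and Gk, and take $\rho = \max_{1 \le k \le p}\mathrm{dist}(\beta_k, G_k)$.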
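To make the basis expansion (2.1) and the approximation error concrete, the following sketch evaluates a cubic B-spline basis by the Cox-de Boor recursion and computes an upper bound on dist(β, G) from a least-squares fit on a fine grid. The interval [0, 1], the equally spaced interior knots, the test function β(t) = sin(2πt), and the helper names are assumptions for illustration only. A least-squares fit is not the L∞-optimal approximation, so the computed quantity only bounds the distance from above.

```python
import numpy as np


def bspline_basis(t, n_basis, degree=3, lo=0.0, hi=1.0):
    """Evaluate n_basis B-spline basis functions of the given degree at t.

    Assumes equally spaced interior knots on [lo, hi] and uses the
    Cox-de Boor recursion. Returns an array of shape (len(t), n_basis).
    """
    t = np.atleast_1d(np.asarray(t, dtype=float))
    n_interior = n_basis - degree - 1
    if n_interior < 0:
        raise ValueError("n_basis must be at least degree + 1")
    interior = np.linspace(lo, hi, n_interior + 2)[1:-1]
    knots = np.concatenate([np.full(degree + 1, lo), interior, np.full(degree + 1, hi)])
    # Degree 0: indicators of the half-open knot intervals [knots[i], knots[i+1]).
    nondegenerate = knots[:-1] < knots[1:]
    B = np.zeros((t.size, knots.size - 1))
    for i in np.nonzero(nondegenerate)[0]:
        B[:, i] = (t >= knots[i]) & (t < knots[i + 1])
    # Close the last non-empty interval on the right so that t = hi is covered.
    B[t == hi, np.nonzero(nondegenerate)[0][-1]] = 1.0
    # Raise the degree one step at a time.
    for p in range(1, degree + 1):
        B_next = np.zeros((t.size, knots.size - p - 1))
        for i in range(knots.size - p - 1):
            left = knots[i + p] - knots[i]
            right = knots[i + p + 1] - knots[i + 1]
            if left > 0:
                B_next[:, i] += (t - knots[i]) / left * B[:, i]
            if right > 0:
                B_next[:, i] += (knots[i + p + 1] - t) / right * B[:, i + 1]
        B = B_next
    return B


def sup_approx_error(beta, n_basis, grid_size=2001):
    """Upper bound on dist(beta, G): sup-norm error of a least-squares spline fit on [0, 1]."""
    grid = np.linspace(0.0, 1.0, grid_size)
    B = bspline_basis(grid, n_basis)
    gamma, *_ = np.linalg.lstsq(B, beta(grid), rcond=None)
    return float(np.max(np.abs(beta(grid) - B @ gamma)))


beta = lambda t: np.sin(2 * np.pi * t)        # hypothetical smooth coefficient function
errors = [sup_approx_error(beta, d) for d in (5, 10, 20)]
```

For a smooth β, the bound shrinks rapidly as the number of basis functions grows. This is the behaviour that lets dk increase with n while ρ becomes negligible.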

By the definition of ρk and (2.1), model (1.1) can be written as

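$$y_i(t_{ij}) = \sum_{k=1}^{p}\sum_{l=1}^{d_k} x_{ik}(t_{ij})\gamma_{kl}B_{kl}(t_{ij}) + \sum_{k=1}^{p} x_{ik}(t_{ij})\rho_k(t_{ij}) + \varepsilon_i(t_{ij}) \tag{2.2}$$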

for i = 1, . . . , n and j = 1, . . . , ni. In low-dimensional settings, we can minimize the least squares criterion

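$$\sum_{i=1}^{n}\sum_{j=1}^{n_i}\Big[y_i(t_{ij}) - \sum_{k=1}^{p}\sum_{l=1}^{d_k} x_{ik}(t_{ij})\gamma_{kl}B_{kl}(t_{ij})\Big]^2 \tag{2.3}$$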

with respect to the γkl's. The least squares estimator of βk is $\hat\beta_k(t) = \sum_{l=1}^{d_k}\hat\gamma_{kl}B_{kl}(t)$, where the $\hat\gamma_{kl}$ are the minimizers of (2.3).

When the number of variables p, or the total number of basis coefficients $\sum_{k=1}^{p} d_k$, is larger than the sample size, however, the least squares method is not applicable, since (2.3) has no unique minimizer. In such cases, regularized methods are needed. We apply the group Lasso (Yuan and Lin (2006)),

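$$\frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n_i}\Big[y_i(t_{ij}) - \sum_{k=1}^{p}\sum_{l=1}^{d_k} x_{ik}(t_{ij})\gamma_{kl}B_{kl}(t_{ij})\Big]^2 + \lambda\sum_{k=1}^{p}\|\gamma_k\|_{R_k}, \tag{2.4}$$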

where λ is the penalty parameter, γk = (γk1, . . . , γkdk)′ is the dk-dimensional coefficient vector corresponding to the kth variable, and $\|\gamma_k\|_{R_k} = (\gamma_k' R_k \gamma_k)^{1/2}$. Here Rk = (rij)dk×dk is the kernel matrix whose (i, j)th element is

$$r_{ij} = \int_T B_{ki}(t)\,B_{kj}(t)\,dt; \tag{2.5}$$

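it is a symmetric positive definite matrix by Lemma A.1 in Huang, Wu, and Zhou (2004). Consequently, $\gamma_k' R_k \gamma_k = \int_T \{\sum_{l=1}^{d_k}\gamma_{kl}B_{kl}(t)\}^2\,dt$, so the penalty on each group is the L2 norm of the corresponding approximating coefficient function.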
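The kernel matrix (2.5), and the identity between (γk′Rkγk)^{1/2} and the L2 norm of Σl γkl Bkl, can be checked numerically. This sketch uses piecewise-linear B-splines (hat functions) on [0, 1] instead of cubic splines, only because their Gram matrix has a simple closed form (h/6 times a tridiagonal matrix). The helper names `hat_basis`, `trapezoid_weights`, and `kernel_matrix` are hypothetical, and the integrals are approximated by the trapezoidal rule.

```python
import numpy as np


def hat_basis(t, knots):
    """Piecewise-linear B-splines: column l is 1 at knots[l] and 0 at the other knots."""
    t = np.asarray(t, dtype=float)
    eye = np.eye(len(knots))
    return np.column_stack([np.interp(t, knots, eye[l]) for l in range(len(knots))])


def trapezoid_weights(grid):
    """Trapezoidal-rule weights for a sorted grid."""
    h = np.diff(grid)
    w = np.zeros_like(grid)
    w[:-1] += h / 2
    w[1:] += h / 2
    return w


def kernel_matrix(basis, grid):
    """Approximate R_k, whose (i, j) entry is the integral of B_i(t) B_j(t) over T."""
    B = basis(grid)                                   # (len(grid), d_k)
    return B.T @ (trapezoid_weights(grid)[:, None] * B)


knots = np.linspace(0.0, 1.0, 6)                      # d_k = 6 hat functions, spacing h = 0.2
grid = np.linspace(0.0, 1.0, 10001)
R = kernel_matrix(lambda s: hat_basis(s, knots), grid)
L_R = np.linalg.cholesky(R)                           # raises LinAlgError unless R is positive definite

# ||gamma||_R versus the exact L2 norm of the piecewise-linear g = sum_l gamma_l B_l,
# using int_a^b g^2 dt = (b - a)(u^2 + u v + v^2) / 3 on each knot interval.
gamma = np.array([1.0, -2.0, 0.5, 0.0, 3.0, 1.0])
r_norm = np.sqrt(gamma @ R @ gamma)
u, v = gamma[:-1], gamma[1:]
l2_exact = np.sqrt(np.sum(np.diff(knots) * (u**2 + u * v + v**2) / 3))
```

The successful Cholesky factorization also gives a factor of the kind used later to write Rk = dkQ′kQk.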

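To express the criterion function (2.4) in matrix form, let Y = (y1(t11), . . . , y1(t1n1), . . . , yn(tn1), . . . , yn(tnnn))′ be the vector of all $N = \sum_{i=1}^n n_i$ responses, with Xk = (x1k(t11), . . . , x1k(t1n1), . . . , xnk(tn1), . . . , xnk(tnnn))′ the corresponding observations of the kth covariate, and define $B_k(t) = (B_{k1}(t), \ldots, B_{kd_k}(t))'$ and $\gamma = (\gamma_1', \ldots, \gamma_p')'$. Set U = (U11, . . . , U1n1, . . . , Un1, . . . , Unnn)′ with $U_{ij} = (x_{i1}(t_{ij})B_1(t_{ij})', \ldots, x_{ip}(t_{ij})B_p(t_{ij})')'$ for i = 1, . . . , n, j = 1, . . . , ni. Then the group Lasso penalized criterion (2.4) can be rewritten as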

$$\frac{1}{2}\|Y - U\gamma\|_2^2 + \lambda\sum_{k=1}^{p}\|\gamma_k\|_{R_k}. \tag{2.6}$$

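The group Lasso estimator is $\hat\beta_k(t) = \sum_{l=1}^{d_k}\hat\gamma_{kl}B_{kl}(t)$, k = 1, . . . , p, where $\hat\gamma = (\hat\gamma_1', \ldots, \hat\gamma_p')'$ minimizes (2.6).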
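The following minimal sketch assembles the stacked design matrix U from irregularly timed longitudinal data. As in the numerical studies, it assumes that every covariate uses the same basis, so d1 = . . . = dp. The function name `design_matrix`, the hat-function basis on [0, 30], and the randomly generated times and covariates are illustrative assumptions. The final check confirms that, row by row, Uγ reproduces Σk xik(tij) Σl γkl Bkl(tij).

```python
import numpy as np


def hat_basis(t, knots):
    """Piecewise-linear B-splines: column l is 1 at knots[l] and 0 at the other knots."""
    t = np.asarray(t, dtype=float)
    eye = np.eye(len(knots))
    return np.column_stack([np.interp(t, knots, eye[l]) for l in range(len(knots))])


def design_matrix(times, covariates, basis):
    """Stack the rows U_ij = (x_i1(t_ij) B(t_ij)', ..., x_ip(t_ij) B(t_ij)')'.

    times[i] has shape (n_i,), covariates[i] has shape (n_i, p), and basis maps
    an array of times to a (len, d) matrix. Returns U with shape (N, p * d).
    """
    t = np.concatenate(times)                     # all observation times, length N
    X = np.vstack(covariates)                     # (N, p): x_ik(t_ij), subjects stacked
    B = basis(t)                                  # (N, d): B_l(t_ij)
    N, p = X.shape
    # Group k occupies columns k*d, ..., (k+1)*d - 1 (C-order reshape).
    return (X[:, :, None] * B[:, None, :]).reshape(N, p * B.shape[1])


rng = np.random.default_rng(0)
knots = np.linspace(0.0, 30.0, 5)                 # d = 5 hat functions on [0, 30] (hypothetical)
d = len(knots)
n, p = 4, 3
times = [np.sort(rng.uniform(0.0, 30.0, size=rng.integers(3, 8))) for _ in range(n)]
covariates = [rng.normal(size=(ti.size, p)) for ti in times]
U = design_matrix(times, covariates, lambda s: hat_basis(s, knots))

# Direct evaluation of sum_k x_ik(t_ij) * g_k(t_ij) with g_k = sum_l gamma_kl B_l.
gamma = rng.normal(size=p * d)
X_all = np.vstack(covariates)
B_all = hat_basis(np.concatenate(times), knots)
direct = sum(X_all[:, k] * (B_all @ gamma[k * d:(k + 1) * d]) for k in range(p))
```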

Let w = (w1, . . . , wp)′ be a given vector of weights, where 0 ≤ wk ≤ ∞, 1 ≤ k ≤ p. Then a weighted group Lasso criterion is

$$\frac{1}{2}\|Y - U\gamma\|_2^2 + \lambda\sum_{k=1}^{p} w_k\|\gamma_k\|_{R_k}. \tag{2.7}$$

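The weighted group Lasso estimator is $\hat\beta^w_k(t) = \sum_{l=1}^{d_k}\hat\gamma^w_{kl}B_{kl}(t)$, where $\hat\gamma^w$ is the minimizer of (2.7). When the weights depend on the data through an initial estimator, such as the group Lasso estimator $\hat\beta$, we call the resulting $\hat\beta^w$ an adaptive group Lasso estimator.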

3. Theoretical Results

In this section, we describe the asymptotic properties of the group Lasso and the adaptive group Lasso estimators defined in (2.6) and (2.7) of Section 2 when p can be larger than n, but the number of important covariates is relatively small.

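In (1.1), without loss of generality, suppose that the first qn variables are important, and let A0 = {qn + 1, . . . , pn}. Here we write qn and pn to indicate that q and p are allowed to diverge with n. Thus none of the variables in A0 is important. Let |A| denote the cardinality of a set A and $d_A = \sum_{k \in A} d_k$. For any set $A \subseteq \{1, \ldots, p_n\}$, define $U_A = (U_k, k \in A)$, where $U_k$ is the block of columns of U corresponding to the kth covariate.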

Here UA is an N × dA submatrix of the design matrix U. Take $\|f\|_2 = (\int_T f^2(t)\,dt)^{1/2}$ whenever the integral exists.

We rely on the following conditions.

(C1) There exist constants q* > 0, $c_* > 0$ and $c^* > 0$, with $0 < c_* \le c^* < \infty$, such that

(C2) There is a small constant η1 ≥ 0 such that $\sum_{k \in A_0}\|\beta_k\|_2 \le \eta_1$.

(C3) The random errors εi(t), i = 1, . . . , n, are independent and identically distributed as ε(t), where E[ε(t)] = 0 and $E[\varepsilon(t)^2] \le \sigma^2 < \infty$ for t ∈ T; moreover, the tail probabilities satisfy $P(|\varepsilon(t)| > x) \le K\exp(-Cx^2)$ for t ∈ T, x > 0, and some constants C and K.

(C4) There exists a positive constant M such that $|x_{ik}(t)| \le M$ for all t ∈ T and i = 1, . . . , n, k = 1, . . . , pn.

Condition (C1) is the sparse Riesz condition for varying coefficient models; it controls the range of the eigenvalues of the submatrices of U. This condition was formulated for the linear regression model in Zhang and Huang (2008). If the covariate matrix X satisfies (C1), then the matrix U also satisfies (C1); see Lemma A.1 in Huang, Wu, and Zhou (2004). Condition (C2) assumes that the varying coefficients of the unimportant variables are small in the L2 sense, but they need not be exactly zero. If η1 = 0, (C2) becomes βk(t) ≡ 0 for all k ∈ A0; this can be called the narrow-sense sparsity condition (NSC) (Wei and Huang (2008)). Under the NSC, the problem of variable selection is equivalent to distinguishing nonzero coefficient functions from zero coefficient functions. Under (C2), it is no longer sensible to select the set of all nonzero coefficient functions; the goal is instead to select the set of important variables with large coefficient functions. From the standpoint of statistical modeling and interpretation, (C2) is mathematically weaker and more realistic than the NSC. Condition (C3) assumes that the error term is a mean-zero stochastic process with a uniformly bounded variance function and sub-Gaussian tails. Condition (C4) assumes that all the covariates are uniformly bounded, which is satisfied in many practical situations.

3.1. Estimation consistency of group Lasso

For the matrix Rk at (2.5), by the Cholesky decomposition there exists a matrix Qk such that Rk = dkQ′kQk.

Let Qkb be the smallest eigenvalue of the matrix Qk, Qb = mink Qkb, da = maxk dk, db = mink dk, d = da/db, $N = \sum_{i=1}^{n} n_i$ and $m_n = \sum_{k=1}^{p_n} d_k$. Thus N is the total number of observations and mn is the total number of approximation coefficients in the basis expansions. Note that, for k = 1, . . . , pn, dk can increase with n to give a more accurate approximation. For example, as in nonparametric regression, we can choose dk = O(n^τ) for some constant 0 < τ < 1/2. With the constants of (C1), let

| (3.1) |

and consider the constraint

| (3.2) |

where . Note that when q* is fixed, .

Let $\hat A = \{k : \|\hat\beta_k\|_2 > 0\}$ represent the set of indices of the variables selected by the group Lasso. The cardinality of $\hat A$ is

$$\hat q = |\hat A| = \#\{k : \|\hat\beta_k\|_2 > 0\}. \tag{3.3}$$

This describes the dimension of the selected model; if q̂ = O(qn), then the size of the selected model is of the same order as the underlying model. To measure the important variables missing in the selected model, take

| (3.4) |

Theorem 1. Assume (C1)–(C4) and that η1 ≤ ρ. Let q̂ and ξ2 be defined as in (3.3) and (3.4), respectively, for the model selected by the group Lasso from (2.6). Let M1 and M2 be defined as in (3.1). If the constraint (3.2) is satisfied, then, with probability converging to 1 and ,

(i) $\hat q \le M_1 q_n$;

.

Part (i) of Theorem 1 shows that the group Lasso selects a model whose dimension is of the same order as that of the underlying model, regardless of the large number of unimportant variables. Part (ii) implies that, with high probability, all the variables whose coefficient functions have L2 norms above a certain threshold are selected in the model.

Let be the mean of conditional on X. It is useful to consider the decomposition where and contribute to the variance and bias terms, respectively. Let , where β = β(t) = (β1(t), . . . , βpn(t))′.

Theorem 2 (Convergence of group Lasso). Let be fixed and 1 ≤ qn ≤ n ≤ pn → ∞. Suppose the conditions in Theorem 1 hold. Then, with probability converging to one,

Consequently, with probability converging to one,

This theorem gives the rate of convergence of $\hat\beta(t)$ as determined by four terms: the stochastic error and the bias due to penalization (the first and second terms), and the basis approximation error (the third and fourth terms). Under the conditions of Theorems 1 and 2, the group Lasso is therefore estimation consistent.

Immediately from Theorem 2, we have the following corollary.

Corollary 1. Let λ = Op(ρ(N log mn)1/2). Suppose the conditions in Theorem 2 hold. Then Theorem 1 holds and, with probability converging to one, and . Consequently, with probability converging to one,

This corollary follows by substituting the given value of λ into the results of Theorem 2 and using Lemma A.1 in Huang, Wu, and Zhou (2004). Note that can be interpreted as the best approximation in the estimation space Gk(t) to βk(t); under appropriate conditions, the bias term is asymptotically negligible compared with the variance term . Consider, for example, the extreme case in which every βk(t), k = 1, . . . , pn, is a constant function independent of t. Then (1.1) simplifies to the high-dimensional linear regression problem $y_i(t_{ij}) = \sum_{k=1}^{p_n} x_{ik}(t_{ij})\beta_k + \varepsilon_i(t_{ij})$. Thus by choosing an appropriate λ, which is consistent with the result obtained in Zhang and Huang (2008). If we use B-spline basis functions to approximate β(t), then by Theorem 6.27 in Schumaker (1981), for k = 1, . . . , pn, if βk(t) has bounded second derivatives and lim supn da/db < ∞, then , thus .

Corollary 2. Suppose that B-spline basis functions are used for the approximation, that for k = 1, . . . , pn the coefficient functions βk(t) have bounded second derivatives, that lim supn da/db < ∞, and that the conditions in Corollary 1 hold. Then

For the conditions given in Corollary 2, the number of covariates pn can be as large as , which can be much larger than n.

4. Selection Consistency of Adaptive Group Lasso

As just shown, the group Lasso has desirable selection and estimation properties: it selects a model whose dimension is of the same order as that of the underlying model. However, there is still room for improvement. To improve selection accuracy and reduce the estimation bias of the group Lasso, we consider the adaptive group Lasso given an initial consistent estimator $\tilde\beta$. Take weights

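$$w_k = \begin{cases} \|\tilde\beta_k\|_2^{-1}, & \text{if } \|\tilde\beta_k\|_2 > 0,\\ \infty, & \text{if } \|\tilde\beta_k\|_2 = 0,\end{cases} \qquad k = 1, \ldots, p_n, \tag{4.1}$$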

so wk is proportional to the inverse of the norm of $\tilde\beta_k$. Here we define 0·∞ = 0, so the variables not included in the initial estimator are not included in the adaptive group Lasso. Given a zero-consistent initial estimator (Huang, Ma, and Zhang (2008)), the adaptive group Lasso penalty level λk = λwk goes to zero when $\|\tilde\beta_k\|_2$ is large, which satisfies the conditions given in Lv and Fan (2009) for a penalty function having the oracle selection property.
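The weights (4.1) and the convention 0·∞ = 0 can be coded directly, as in the illustrative sketch below. The array of initial norms stands for the values ‖β̃k‖2 from a hypothetical initial fit, such as the group Lasso, and the helper names are not from the paper. Groups with infinite weight can be dropped before the adaptive fit, which is how the initial estimator reduces the dimension of the problem.

```python
import numpy as np


def adaptive_weights(initial_norms):
    """w_k = 1 / ||beta_tilde_k||_2, with w_k = inf when the initial norm is zero."""
    norms = np.asarray(initial_norms, dtype=float)
    w = np.full(norms.shape, np.inf)
    nonzero = norms > 0
    w[nonzero] = 1.0 / norms[nonzero]
    return w


def weighted_penalty(group_norms, weights, lam):
    """lam * sum_k w_k ||gamma_k||, using 0 * inf = 0 for groups that are exactly zero."""
    group_norms = np.asarray(group_norms, dtype=float)
    weights = np.asarray(weights, dtype=float)
    terms = np.zeros_like(group_norms)
    active = group_norms > 0
    # A nonzero group with infinite weight yields an infinite penalty, i.e. it is excluded.
    terms[active] = weights[active] * group_norms[active]
    return lam * terms.sum()


initial = np.array([2.0, 0.5, 0.0, 0.0, 1.0])    # hypothetical initial norms ||beta_tilde_k||_2
w = adaptive_weights(initial)                     # [0.5, 2.0, inf, inf, 1.0]
candidates = np.flatnonzero(np.isfinite(w))       # groups still eligible for selection
pen = weighted_penalty([1.0, 1.0, 0.0, 0.0, 2.0], w, lam=0.1)
```

Computing the products only on the nonzero groups avoids evaluating 0·∞, which NumPy would otherwise turn into NaN.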

Consider the following additional conditions.

(C5) The initial estimator $\tilde\beta$ is zero-consistent with rate rn if

and there exists a constant ξb > 0 such that as n → ∞, where .

(C6) If sn = pn − qn is the number of unimportant variables,

(C7) All the eigenvalues of are bounded away from zero and infinity.

Theorem 3. Suppose that (C3), (C5)–(C7) are satisfied. Under NSC,

Theorem 3 shows that the adaptive group Lasso is selection consistent if an initial consistent estimator is available. Condition (C5) is critical, and is very difficult to establish. It assumes that we can consistently differentiate between important and unimportant variables. For fixed pn and dk, the ordinary least squares estimator can be used as the initial estimator. However, when pn > n, the least squares estimator is no longer feasible. Theorem 1 and Theorem 2 show that, under certain conditions, the group Lasso estimator is zero-consistent with rate

Thus, if we use the group Lasso estimator as the initial estimator for the adaptive group Lasso, we obtain the selection consistency property of Theorem 3. In addition, this initial estimator reduces the dimensionality of the problem. Condition (C6) restricts the numbers of important variables and basis functions, the penalty parameter, and the smallest important coefficient function (in the L2 sense). When d and θb are fixed constants and the ni, i = 1, . . . , n, are bounded, (C6) can be simplified to

which can be satisfied by choosing an appropriate λ and initial estimator. Condition (C7) assumes that the eigenvalues of are finite and bounded away from zero; this is reasonable since the number of important variables is small in a sparse model.

Using the group Lasso result as the initial estimator for the adaptive group Lasso, we then have the following theorem.

Theorem 4. Suppose the conditions of Theorem 1 hold, and θb > tb for some constant tb > 0. Let for some 0 < α < 1/2. Then with probability converging to one,

For k = 1, . . . , pn, if all βk(t) are constant functions, qn and the number of observations ni for the ith subject are fixed, then the result of Theorem 4 is consistent with the well-known result for low-dimensional linear regression problem, . Moreover, similar to Corollary 2, if B-spline basis functions are used to approximate the regression coefficient functions, then we have the following.

Corollary 3. Consider B-spline basis approximation and choose da = O(n^{1/5}). Suppose that, for k = 1, . . . , pn, the coefficient function βk(t) has a bounded second derivative, that lim supn da/db < ∞, and that the conditions in Theorem 4 hold. If qn and ni are fixed, then with probability converging to one, .

5. Numerical Studies

In this section, we derive a group coordinate descent algorithm to compute the group Lasso and adaptive group Lasso estimates in varying coefficient models. For the adaptive group Lasso, we use the group Lasso as the initial estimator. We compare the results from the group Lasso and the adaptive group Lasso with the results from the group SCAD (Antoniadis and Fan (2001); Wang, Li, and Huang (2008)).

5.1. The group coordinate descent algorithm

The group coordinate descent algorithm is a natural extension of standard coordinate descent; see, for example, Fu (1998) and Friedman et al. (2007). Meier, van de Geer, and Bühlmann (2008) also used group coordinate descent for selecting groups of variables in high-dimensional logistic regression.

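Let $\tilde\gamma_k = d_k^{1/2}Q_k\gamma_k$ and $\tilde U_k = d_k^{-1/2}U_kQ_k^{-1}$ for k = 1, . . . , pn, where $U_k$ is the N × dk block of columns of U corresponding to the kth covariate. Because $R_k = d_kQ_k'Q_k$, we have $\tilde U_k\tilde\gamma_k = U_k\gamma_k$ and $\|\tilde\gamma_k\|_2 = \|\gamma_k\|_{R_k}$, so (2.6) can be rewritten as

$$L(\tilde\gamma) = \frac{1}{2}\Big\|Y - \sum_{k=1}^{p_n}\tilde U_k\tilde\gamma_k\Big\|_2^2 + \lambda\sum_{k=1}^{p_n}\|\tilde\gamma_k\|_2. \tag{5.1}$$

The group Lasso estimates of (2.6) can then be obtained as $\hat\gamma_k = d_k^{-1/2}Q_k^{-1}\hat{\tilde\gamma}_k$ for k = 1, . . . , pn, where $\hat{\tilde\gamma}$ minimizes (5.1).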
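The reparametrization that turns the Rk-weighted penalty into a plain Euclidean group penalty can be verified directly. In this sketch, a hypothetical positive definite matrix stands in for Rk and a random block stands in for Uk. `np.linalg.cholesky` returns a lower-triangular L with Rk = LL′, so Qk = L′/√dk satisfies Rk = dkQ′kQk as in Section 3.1.

```python
import numpy as np

rng = np.random.default_rng(1)
d_k, N = 4, 30
A = rng.normal(size=(d_k, d_k))
R_k = A @ A.T + d_k * np.eye(d_k)      # hypothetical symmetric positive definite kernel matrix
U_k = rng.normal(size=(N, d_k))        # hypothetical N x d_k block of U
gamma_k = rng.normal(size=d_k)

# Cholesky gives R_k = L L' (L lower triangular), so Q_k = L' / sqrt(d_k) gives R_k = d_k Q_k' Q_k.
L = np.linalg.cholesky(R_k)
Q_k = L.T / np.sqrt(d_k)

# Reparametrized coefficients and design block.
gamma_tilde = np.sqrt(d_k) * Q_k @ gamma_k
U_tilde = np.linalg.solve(Q_k.T, U_k.T).T / np.sqrt(d_k)    # U_k Q_k^{-1} / sqrt(d_k)

# Back-transformation applied after the reparametrized problem has been solved.
gamma_back = np.linalg.solve(Q_k, gamma_tilde) / np.sqrt(d_k)
```

Using `solve` instead of forming Qk^{-1} explicitly is the usual numerically safer choice. Because Qk is triangular, a dedicated triangular solver would also work.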

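Denote by $L(\tilde\gamma)$ the objective function in (5.1). Suppose we have estimates $\tilde\gamma_j$ for j ≠ k and wish to partially optimize with respect to $\tilde\gamma_k$. The gradient at $\tilde\gamma_k$ exists only if $\tilde\gamma_k \neq 0$, and then

$$\frac{\partial L}{\partial\tilde\gamma_k} = -\tilde U_k'\big(Y - \tilde Y^{(-k)}\big) + \tilde U_k'\tilde U_k\tilde\gamma_k + \lambda\frac{\tilde\gamma_k}{\|\tilde\gamma_k\|_2},$$

where $\tilde Y^{(-k)} = \sum_{j \neq k}\tilde U_j\tilde\gamma_j$ is the fitted value excluding the contribution from $\tilde\gamma_k$. With $\tilde U_k'\tilde U_k = I_{d_k}$ for k = 1, . . . , pn, simple calculus shows that the group coordinate-wise update has the form

$$\tilde\gamma_k \leftarrow \Big(1 - \frac{\lambda}{\|z_k\|_2}\Big)_+ z_k,$$

where $z_k = \tilde U_k'(Y - \tilde Y^{(-k)})$ and $(x)_+ = \max(x, 0)$. Then, for fixed λ, the above estimator can be computed with the following iterative algorithm.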

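1. Center and standardize Y and Ũ so that Y and the columns of Ũ have mean zero and $\tilde U_j'\tilde U_j = I_{d_j}$ for j = 1, . . . , pn.

2. Initialize $\tilde\gamma^{(0)} = 0$ and let m = 0, r = Y.

3. Calculate $z_k = \tilde U_k' r + \tilde\gamma_k^{(m)}$.

4. Update $\tilde\gamma_k^{(m+1)} = (1 - \lambda/\|z_k\|_2)_+ z_k$.

5. Update $r \leftarrow r - \tilde U_k(\tilde\gamma_k^{(m+1)} - \tilde\gamma_k^{(m)})$ and m = m + 1.

6. Repeat Steps 3–5, cycling through k = 1, . . . , pn, until convergence or until a fixed maximum number of iterations has been reached. The $\tilde\gamma$ at convergence is the group Lasso estimate of (5.1).

7. Transform back to the scale of the original Y and Ũ (undoing the centering and standardization), and compute $\hat\gamma_k = d_k^{-1/2}Q_k^{-1}\hat{\tilde\gamma}_k$ and $\hat\beta_k(t) = \sum_{l=1}^{d_k}\hat\gamma_{kl}B_{kl}(t)$.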
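The following minimal Python sketch implements the iterative algorithm above for a fixed λ. It assumes that the centering and standardization step has already been carried out, so that Y is centered and each block Ũk is centered with orthonormal columns (produced here by a QR factorization). The criterion is taken to be (1/2)‖Y − Σk Ũkγ̃k‖² + λΣk wk‖γ̃k‖2. The function names, the stopping rule based on the largest coefficient change, and the simulated data are assumptions. Optional weights wk turn the same loop into the adaptive group Lasso by replacing λ with λwk, and an infinite weight keeps a group at zero.

```python
import numpy as np


def group_soft_threshold(z, thresh):
    """Return (1 - thresh / ||z||_2)_+ z."""
    norm = np.linalg.norm(z)
    if norm <= thresh:
        return np.zeros_like(z)
    return (1.0 - thresh / norm) * z


def group_lasso_cd(Y, U_groups, lam, weights=None, max_iter=1000, tol=1e-10):
    """Group coordinate descent for 0.5*||Y - sum_k U_k g_k||^2 + lam*sum_k w_k*||g_k||_2.

    Assumes Y is centered and every U_k has orthonormal columns (U_k' U_k = I).
    """
    p = len(U_groups)
    w = np.ones(p) if weights is None else np.asarray(weights, dtype=float)
    g = [np.zeros(Uk.shape[1]) for Uk in U_groups]
    r = np.array(Y, dtype=float)              # residual Y - sum_k U_k g_k, with all g_k = 0
    for _ in range(max_iter):
        max_change = 0.0
        for k, Uk in enumerate(U_groups):
            if np.isinf(w[k]):                # infinite weight: group stays at zero
                continue
            z = Uk.T @ r + g[k]               # equals U_k'(Y - Y^(-k)) because U_k'U_k = I
            new = group_soft_threshold(z, lam * w[k])
            delta = new - g[k]
            r -= Uk @ delta                   # keep the residual in sync with the update
            g[k] = new
            max_change = max(max_change, float(np.max(np.abs(delta))))
        if max_change < tol:
            break
    return g


# Toy data: p groups of d centered, orthonormalized columns; groups 0 and 1 are active.
rng = np.random.default_rng(3)
N, p, d = 120, 25, 4
U_groups = []
for _ in range(p):
    block = rng.normal(size=(N, d))
    U_groups.append(np.linalg.qr(block - block.mean(axis=0))[0])
Y = 4.0 * U_groups[0] @ np.ones(d) - 3.0 * U_groups[1] @ np.ones(d) + 0.5 * rng.normal(size=N)
Y -= Y.mean()

lam = 2.5
g_hat = group_lasso_cd(Y, U_groups, lam)
selected = [k for k, gk in enumerate(g_hat) if np.linalg.norm(gk) > 0]
r_hat = Y - sum(Uk @ gk for Uk, gk in zip(U_groups, g_hat))
```

At convergence, the fit satisfies the group optimality conditions. For a nonzero group, Ũk′r = λγ̃k/‖γ̃k‖2. For a zero group, ‖Ũk′r‖2 ≤ λ. Each inner step costs O(Ndk), which matches the linear growth in pn noted below.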

The group coordinate descent algorithm is simple but efficient: every update cycle requires only O(Npn) operations, so the computational burden increases linearly with pn. If the number of iterations is smaller than pn, the solution is reached with even less computation than is required to solve a linear regression problem by QR decomposition.

For the adaptive group Lasso, we can use the same coordinate descent algorithm after substituting λwk for λ in (2.6) and (5.1). We use the same set of cubic B-spline basis functions for each βk; that is, d1 = . . . = dpn ≡ d0 and $m_n = p_n d_0$. In our application, we apply the BIC criterion (Schwarz (1978)) to select (λ, d0) for the group Lasso and the corresponding tuning parameters for the adaptive group Lasso. The BIC criterion is

where RSS is the residual sum of squares and df is the number of selected variables for a given (λ, d0). We choose d0 from the ten values 5, 6, . . . , 14; for each value of d0, we choose λ from a sequence of 100 values, from λmax down to 0.001λmax, with $\lambda_{\max} = \max_{1 \le k \le p_n}\|\tilde U_k'Y\|_2$, where Ũk is the N × d0 submatrix of the design matrix Ũ corresponding to the covariate Xk. This λmax is the smallest penalty value that forces all the estimated coefficients to be zero.
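A small sketch of the tuning grid follows, assuming a centered Y, centered blocks with orthonormal columns, and the criterion (1/2)‖Y − Σk Ũkγ̃k‖² + λΣk‖γ̃k‖2. Under that assumed normalization, γ̃ = 0 is optimal exactly when ‖Ũk′Y‖2 ≤ λ for every k, which gives λmax = maxk ‖Ũk′Y‖2. The geometric (log-scale) spacing of the 100 values is an assumption, since only the endpoints are stated. The function name `lambda_grid` and the random data are hypothetical.

```python
import numpy as np


def lambda_grid(Y, U_groups, n_lambda=100, ratio=1e-3):
    """Decreasing, log-spaced grid from lambda_max down to ratio * lambda_max."""
    # With orthonormal blocks, gamma = 0 is optimal iff ||U_k' Y||_2 <= lambda for all k.
    lam_max = max(np.linalg.norm(Uk.T @ Y) for Uk in U_groups)
    return lam_max * np.logspace(0.0, np.log10(ratio), n_lambda)


rng = np.random.default_rng(5)
N, p, d0 = 80, 10, 5
U_groups = []
for _ in range(p):
    block = rng.normal(size=(N, d0))
    U_groups.append(np.linalg.qr(block - block.mean(axis=0))[0])   # centered, orthonormal
Y = rng.normal(size=N)
Y -= Y.mean()

grid = lambda_grid(Y, U_groups)
lam_max = grid[0]
d0_values = list(range(5, 15))                                      # d0 = 5, 6, ..., 14
```

In a full search, each pair (d0, λ) would be fitted in turn, with warm starts along the decreasing λ sequence, and scored by the BIC.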

5.2. Monte Carlo simulation

We used simulation to assess the performance of the proposed procedures. Because our main interest is in the case when pn is large, we focused on the case pn > n. We consider the model

The time points tij for each subject are scheduled to be {1, . . . , 30}. Each scheduled time point may be skipped, so the number of observed time points ni differs across subjects. This generating model is similar to the one in Wang, Li, and Huang (2008).

The first six variables, xi1, . . . , xi6, i = 1, . . . , 100, are the truly relevant variables and were simulated as follows: xi1(t) was uniform on [t/10, 2 + t/10] at any given time point t; xij(t), j = 2, . . . , 5, conditional on xi1(t), were i.i.d. normal with mean zero and variance (1 + xi1(t))/(2 + xi1(t)); and xi6, independent of xij, j = 1, . . . , 5, was normal with mean 3 exp(t/30) and variance 1. For k = 7, . . . , 500, each xik(t), independent of the others, followed a multivariate normal distribution with covariance structure cov(xik(t), xik(s)) = 4 exp(−|t − s|). The random error εi(t) was Z(t) + E(t), where Z(t) had the same distribution as xik, k = 7, . . . , 500, and the E(t) were independent measurement errors from N(0, 2^2) at each time point t. The coefficient functions were

The observation time points tij for each individual were generated from the scheduled time points {1, . . . , 30}: each scheduled time point had a probability of 60% of being skipped, and the actual observation time tij was obtained by adding a random perturbation from uniform [−0.5, 0.5] to each non-skipped scheduled time.
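The time-point and covariate design can be simulated as follows, under these assumptions:

- xi1, xi2 to xi5, and xi6 are drawn independently at each observed time point, since only their distributions at a given t are specified.
- The Gaussian covariates xik, k ≥ 7, and Z(t) are generated with a Cholesky factor of 4exp(−|t − s|), plus a tiny diagonal jitter for numerical stability.
- The responses yi(tij) are omitted, because they would require the coefficient functions β1, . . . , β6.

The helper names and the seed are illustrative choices.

```python
import numpy as np


def observation_times(rng, n_sched=30, p_skip=0.6, jitter=0.5):
    """Keep each scheduled time 1..n_sched with probability 1 - p_skip and
    perturb each kept time by a Uniform[-jitter, jitter) draw."""
    sched = np.arange(1, n_sched + 1, dtype=float)
    kept = sched[rng.random(n_sched) >= p_skip]
    return kept + rng.uniform(-jitter, jitter, size=kept.size)


def exp_cov(t, scale=4.0):
    """Covariance scale * exp(-|t - s|) evaluated at the observed times."""
    return scale * np.exp(-np.abs(t[:, None] - t[None, :]))


def simulate_subject(rng, p=500):
    t = observation_times(rng)
    m = t.size
    x = np.empty((m, p))
    x[:, 0] = rng.uniform(t / 10, 2 + t / 10)                      # x_1(t) ~ U[t/10, 2 + t/10]
    sd = np.sqrt((1 + x[:, 0]) / (2 + x[:, 0]))
    x[:, 1:5] = rng.normal(size=(m, 4)) * sd[:, None]               # x_2..x_5 given x_1
    x[:, 5] = rng.normal(3 * np.exp(t / 30), 1.0)                   # x_6(t) ~ N(3 exp(t/30), 1)
    # x_7..x_p and Z(t): Gaussian vectors with covariance 4 exp(-|t - s|) over the subject's times.
    chol = np.linalg.cholesky(exp_cov(t) + 1e-10 * np.eye(m))
    x[:, 6:] = chol @ rng.normal(size=(m, p - 6))
    eps = chol @ rng.normal(size=m) + rng.normal(0.0, 2.0, size=m)  # Z(t) + E(t), E ~ N(0, 2^2)
    return t, x, eps


rng = np.random.default_rng(4)
data = [simulate_subject(rng) for _ in range(100)]                  # n = 100 subjects
```

With a 60% skip probability, each subject has about 12 observation times on average, so ni varies across subjects as described.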

We consider the cases n = 50, 100, and 200 with pn = 500 to examine how the proposed methods perform as the sample size increases. The penalty parameters were selected using BIC. The results for the group Lasso, the adaptive group Lasso, and the group SCAD methods, based on 200 replications, are given in Tables 1 and 2. The columns in Table 1 are the average number of selected variables (NG), the model error (ER), the percentage of occasions on which the correct variables were included in the selected model (IN%), and the percentage of occasions on which exactly the correct variables were selected (CS%), with standard errors in parentheses. Table 2 summarizes the mean square errors for the six important coefficient functions βk, k = 1, . . . , 6, with standard errors in parentheses.

Table 1.

Simulation study.

Results for the high-dimensional cases, p = 500.

| n | Method | NG | ER | IN% | CS% |
|---|---|---|---|---|---|
| 200 | adaptive group Lasso | 6.13 (0.38) | 15.18 (3.33) | 99 (0.01) | 93 (0.26) |
| 200 | group Lasso | 6.20 (0.64) | 21.07 (5.88) | 99 (0.01) | 82 (0.38) |
| 200 | group SCAD | 6.12 (0.37) | 14.29 (2.37) | 100 (0.00) | 95 (0.22) |
| 100 | adaptive group Lasso | 6.21 (0.72) | 15.20 (3.58) | 87 (0.34) | 84 (0.36) |
| 100 | group Lasso | 6.34 (1.25) | 26.81 (4.44) | 87 (0.34) | 69 (0.51) |
| 100 | group SCAD | 6.25 (0.78) | 15.37 (2.52) | 90 (0.10) | 83 (0.37) |
| 50 | adaptive group Lasso | 7.04 (1.43) | 15.98 (3.86) | 72 (0.48) | 68 (0.52) |
| 50 | group Lasso | 10.29 (5.64) | 27.09 (4.96) | 72 (0.48) | 53 (0.57) |
| 50 | group SCAD | 6.99 (1.38) | 15.74 (3.12) | 78 (0.42) | 70 (0.49) |

NG, number of selected variables; ER, model error; IN%, percentage of occasions on which the correct variables were included in the selected model; CS%, percentage of occasions on which exactly correct variables were selected, averaged over 200 replications. Enclosed in parentheses are the corresponding standard errors.

Table 2.

Simulation study.

| n | Method | β1 | β2 | β3 | β4 | β5 | β6 |
|---|---|---|---|---|---|---|---|
| 200 | adaptive group Lasso | 6.49 (2.71) | 4.46 (1.21) | 2.28 (1.10) | 1.36 (0.95) | 1.49 (0.98) | 7.72 (3.58) |
| 200 | group Lasso | 16.41 (9.09) | 9.72 (4.10) | 8.31 (3.08) | 4.98 (2.39) | 5.14 (3.92) | 10.81 (11.91) |
| 200 | group SCAD | 6.46 (2.70) | 4.44 (1.20) | 2.27 (1.09) | 1.34 (0.93) | 1.45 (0.94) | 7.59 (3.31) |
| 100 | adaptive group Lasso | 8.19 (3.82) | 7.65 (2.17) | 3.97 (1.44) | 2.02 (1.30) | 2.14 (1.35) | 9.10 (5.27) |
| 100 | group Lasso | 29.99 (15.95) | 18.04 (5.15) | 11.12 (3.96) | 8.51 (3.85) | 9.69 (5.09) | 22.03 (18.55) |
| 100 | group SCAD | 8.20 (3.84) | 7.65 (2.17) | 3.95 (1.39) | 2.01 (1.28) | 2.12 (1.30) | 9.70 (6.05) |
| 50 | adaptive group Lasso | 9.08 (3.88) | 8.20 (3.84) | 4.49 (1.76) | 3.41 (1.73) | 3.86 (1.75) | 9.72 (5.56) |
| 50 | group Lasso | 34.39 (20.25) | 26.05 (17.44) | 14.15 (4.92) | 12.98 (4.80) | 12.64 (6.66) | 33.49 (22.18) |
| 50 | group SCAD | 9.05 (3.86) | 8.22 (3.91) | 4.51 (1.77) | 3.38 (1.64) | 3.82 (1.72) | 9.73 (5.64) |

Mean square errors for the important coefficient functions based on 200 replications. Enclosed in parentheses are the corresponding standard errors.

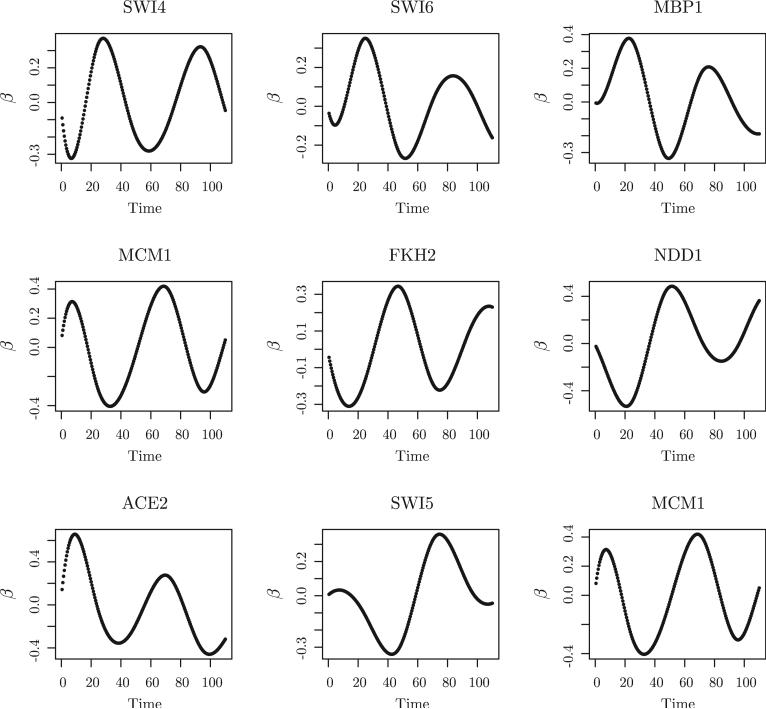

Several observations can be made from Tables 1 and 2. The model selected by the adaptive group Lasso was similar to the one selected by the group SCAD, and better than the one selected by the group Lasso in terms of model error, the percentage of occasions on which the true variables were selected, and the mean square errors for the important coefficient functions. The group Lasso included the correct variables with high probability. For smaller sample sizes, the performance of all three methods was worse. This is expected, since variable selection in models with a small number of observations is more difficult. To examine the time-varying coefficient functions estimated by the adaptive group Lasso, we plot them along with the true functions in Figure 1; the estimates come from one run of the adaptive group Lasso with n = 200. The estimators of the time-varying coefficient functions βk(t), k = 3, 4, 5, fit the true coefficient functions well, which is consistent with the mean square errors reported in Table 2.

Figure 1.

Adaptive group Lasso method. The estimated coefficient functions (dashed lines) and the true coefficient functions (solid lines) in one run with n = 200.

These simulation results show that the adaptive group Lasso has good selection and estimation performance, even when p is larger than n. They also suggest that the adaptive group Lasso can improve on the selection and estimation results of the group Lasso.

5.3. Identification of yeast cell cycle transcription factors

We apply our procedures to investigate the transcription factors (TFs) involved in the yeast cell cycle, which is helpful for understanding the regulation of yeast cell cycle genes. The cell cycle is an ordered set of events, culminating in cell growth and division into two daughter cells. Stages of the cell cycle are commonly divided into G1-S-G2-M. The G1 stage stands for “GAP 1”. The S stage stands for “Synthesis”; it is the stage when DNA replication occurs. The G2 stage stands for “GAP 2”. The M stage stands for “mitosis”, when nuclear (chromosomes separate) and cytoplasmic (cytokinesis) division occur. Coordinated expression of genes whose products contribute to stage-specific functions is a key feature of the cell cycle (Simon et al. (2001), Morgan (1997), Nasmyth (1996)). TFs have been identified that play critical roles in gene expression regulation. To understand how the cell cycle is regulated and how the cell cycle regulates other biological processes, such as DNA replication and amino acid biosynthesis, it is useful to identify the cell-cycle-regulated transcription factors.

We apply the group Lasso and the adaptive group Lasso to identify the key transcription factors that play critical roles in cell cycle regulation, using a set of gene expression measurements taken at equally spaced sampling time points. The data set comes from Spellman et al. (1998), who measured the genome-wide mRNA levels of 6178 yeast ORFs simultaneously over approximately two cell cycle periods in a yeast culture synchronized by α factor, relative to a reference mRNA from an asynchronous yeast culture. The yeast cells were measured at 7-min intervals for 119 min, giving a total of 18 time points after synchronization. Using a model-based approach, Luan and Li (2003) identified 297 cell-cycle-regulated genes based on the α factor synchronization experiments. In our study, we consider the 240 of these 297 cell-cycle-regulated genes that have no missing values. Let yi(tj) denote the log-expression level of gene i at time point tj during the cell cycle process, for i = 1, . . . , 240 and j = 1, . . . , 18. We then use the chromatin immunoprecipitation (ChIP-chip) data of Lee et al. (2002) to derive the binding probabilities xik of these 240 cell-cycle-regulated genes for a total of 96 transcription factors, each with at least one nonzero binding probability among the 240 genes. We assume the following varying coefficient model to link the binding probabilities to the gene expression levels:

where βk(tj) represents the effect of the kth TF on gene expression at time tj during the cell cycle process. Our goal is to identify the TFs that might be related to the cell cycle regulated gene expression.

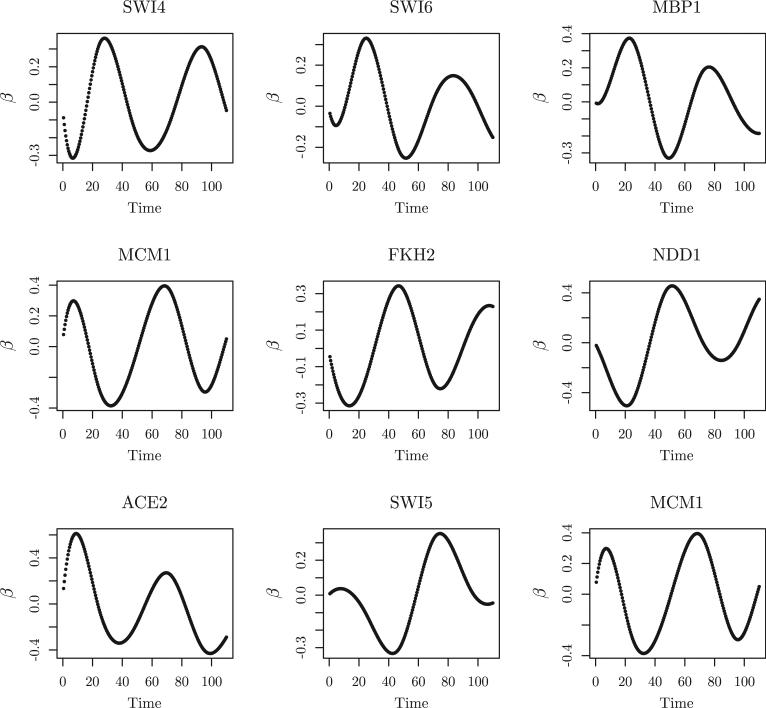

We used BIC to select the tuning parameters in the group Lasso and the adaptive group Lasso. The selected tuning parameters were d0 = 7, with λ = 0.89 for the group Lasso and λ = 1.07 for the adaptive group Lasso. The group Lasso identified a total of 67 TFs related to yeast cell-cycle processes, including 19 of the 21 known and experimentally verified cell-cycle-related TFs. The other two TFs, LEU3 and MET31, were not selected by the group Lasso. Using the group Lasso result as the initial estimator, the adaptive group Lasso identified a total of 54 TFs, including the same 19 of the 21 known and experimentally verified cell-cycle-related TFs. In addition, all of the identified TFs showed some estimated periodic transcriptional effects on the cell-cycle-regulated gene expression; examples include MBP1, SWI4, SWI6, MCM1, FKH1, FKH2, NDD1, SWI5, and ACE2 (Simon et al. (2001)). The transcriptional effects of these 9 TFs, as estimated by the group Lasso and the adaptive group Lasso, are shown in Figures 2 and 3, respectively. MBP1, SWI4, and SWI6 control late G1 genes. MCM1, together with FKH1 or FKH2, recruits the NDD1 protein in late G2, and thus controls the transcription of G2/M genes. MCM1 is also involved in the transcription of some M/G1 genes. SWI5 and ACE2 regulate genes at the end of M and early G1 (Simon et al. (2001)).

Figure 2.

Application to yeast cell cycle gene expression data. The results are from the group Lasso.

Figure 3.

Application to yeast cell cycle gene expression data. The results are from the adaptive group Lasso.

Moreover, the key TFs identified by both the group Lasso and the adaptive group Lasso include many cooperative or synergistic pairs of TFs involved in the yeast cell cycle process that have been reported in the literature (Banerjee and Zhang (2003), Tsai, Lu, and Li (2005)). Of the 67 TFs identified by the group Lasso, 27 belong to the cooperative TF pairs identified by Banerjee and Zhang (2003), covering 23 of the 31 significant cooperative TF pairs. Of the 54 TFs identified by the adaptive group Lasso, 25 belong to these cooperative pairs, covering 21 of the 31 significant cooperative TF pairs. The results are summarized in Table 3.

For this data set, binding data are available for only 96 TFs, so the sample size is larger than the number of variables. To assess the performance of the proposed methods when p > n, we artificially added 200 variables to the data set: for each gene, the values of the 200 additional variables were drawn at random, without replacement, from the whole binding data set. We repeated this process 100 times. We first looked at the results for the 21 known TFs, since they are the only 'truth' we know about this data set. On average, the group Lasso identified 17.0 of the 21 known TFs (standard deviation 0.12) and the adaptive group Lasso identified 14.2 (standard deviation 0.42). The group Lasso tended to have a much higher false positive rate. The sets of TFs selected after the 200 artificial variables were added overlapped substantially with those selected from the original data. This suggests that the proposed methods perform well even when the model contains many noise variables.
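The augmentation experiment can be written out as a short loop. This is a minimal sketch under stated assumptions: `X` stands for the 240 × 96 matrix of binding probabilities, "without replacement" is read as drawing 200 distinct entries of the pooled matrix separately for each gene, and `select_fn` is a hypothetical placeholder for either fitting procedure (it would close over the expression data y) that returns the indices of the selected columns. Both function names are illustrative.

```python
import numpy as np


def add_noise_variables(X, n_extra=200, rng=None):
    """Append n_extra artificial columns to X (genes x TFs).

    For each gene (row), n_extra entries are drawn at random, without
    replacement, from the pooled entries of the whole binding matrix X.
    """
    rng = np.random.default_rng(rng)
    pool = X.ravel()
    extra = np.vstack([rng.choice(pool, size=n_extra, replace=False)
                       for _ in range(X.shape[0])])
    return np.hstack([X, extra])


def replicate_known_tf_recovery(X, select_fn, known, n_rep=100, n_extra=200, seed=0):
    """Mean and standard deviation, over n_rep augmented data sets, of the
    number of known TFs (column indices in `known`) picked by select_fn.

    select_fn is a hypothetical placeholder: it takes an augmented matrix
    and returns the indices of the selected columns.
    """
    rng = np.random.default_rng(seed)
    hits = []
    for _ in range(n_rep):
        X_aug = add_noise_variables(X, n_extra, rng)
        hits.append(len(set(select_fn(X_aug)) & set(known)))
    return float(np.mean(hits)), float(np.std(hits, ddof=1))
```

Since the artificial columns are resampled binding values unrelated to the expression profiles, any of them that gets selected counts as a false positive, which is what makes the comparison of false positive rates between the two methods possible.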

Finally, to compare the group Lasso and the adaptive group Lasso with a lasso-penalized linear regression, we fitted a linear regression with lasso penalty, using the binding probabilities as predictors and the gene expression at each time point as the response. Only one TF was found to be significantly related to cell cycle regulation. This result is not surprising, since the effects of the TFs on gene expression levels are time-dependent. Overall, our procedures can effectively identify the important TFs that affect gene expression over time.

6. Concluding Remarks

In this article, we studied the estimation and selection properties of the group Lasso and the adaptive group Lasso in varying coefficient models with high-dimensional data. For the group Lasso, we considered the sparsity of the selected model, the bias, and the convergence rate of the estimator, as given in Theorems 1 and 2. An interesting aspect of our study is that we allow many small nonzero coefficient functions, as long as the sum of their norms is below a certain level. Our simulation results indicate that the group Lasso tends to select some unimportant variables. An effective remedy is to use the adaptive group Lasso. Compared with the group Lasso, the adaptive group Lasso has the oracle selection property, and its estimator has a faster convergence rate. In addition, its computational cost is the same as that of the group Lasso. The adaptive group Lasso with the group Lasso as the initial estimator is therefore an effective way to analyze varying coefficient problems in sparse, high-dimensional settings.

In this paper, we have focused on the group Lasso and the adaptive group Lasso in the context of linear varying coefficient models. These methods can be applied in a similar way to other nonlinear and nonparametric regression models, but more work is needed.

Table 3.

Yeast cell cycle study. Identified cooperative pairs of TFs involved in the cell cycle process.

| Adaptive group Lasso | Group Lasso |
|---|---|
| MBP1-SWI6 | MBP1-SWI6 |
| MCM1-NDD1 | MCM1-NDD1 |
| FKH2-MCM1 | FKH2-MCM1 |
| FKH2-NDD1 | FKH2-NDD1 |
| SWI4-SWI6 | SWI4-SWI6 |
| FHL1-GAT3 | FHL1-GAT3 |
| NRG1-YAP6 | NRG1-YAP6 |
| GAT3-MSN4 | GAT3-MSN4 |
| REB1-SKN7 | REB1-SKN7 |
| ACE2-REB1 | ACE2-REB1 |
| | GAL4-RGM1 |
| GCN4-SUM1 | GCN4-SUM1 |
| FKH1-FKH2 | FKH1-FKH2 |
| CIN5-NRG1 | CIN5-NRG1 |
| SMP1-SWI5 | SMP1-SWI5 |
| FKH1-NDD1 | FKH1-NDD1 |
| ACE2-SWI5 | ACE2-SWI5 |
| CIN5-YAP6 | CIN5-YAP6 |
| STB1-SWI4 | STB1-SWI4 |
| ARG81-GCN4 | ARG81-GCN4 |
| NDD1-STB1 | NDD1-STB1 |
| | DAL81-STP1 |
| NRG1-PHD1 | NRG1-PHD1 |
| No. of pairs: 21 | No. of pairs: 23 |
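The two columns of Table 3 can also be compared as sets, which makes the relation between the two selections explicit. A minimal sketch, with the pairs transcribed from Table 3 as printed:

```python
# Cooperative TF pairs from Table 3 (the pair FHL1-GAT3 appears in both columns).
adaptive = {
    "MBP1-SWI6", "MCM1-NDD1", "FKH2-MCM1", "FKH2-NDD1", "SWI4-SWI6",
    "FHL1-GAT3", "NRG1-YAP6", "GAT3-MSN4", "REB1-SKN7", "ACE2-REB1",
    "GCN4-SUM1", "FKH1-FKH2", "CIN5-NRG1", "SMP1-SWI5", "FKH1-NDD1",
    "ACE2-SWI5", "CIN5-YAP6", "STB1-SWI4", "ARG81-GCN4", "NDD1-STB1",
    "NRG1-PHD1",
}
group = {
    "MBP1-SWI6", "MCM1-NDD1", "FKH2-MCM1", "FKH2-NDD1", "SWI4-SWI6",
    "FHL1-GAT3", "NRG1-YAP6", "GAT3-MSN4", "REB1-SKN7", "ACE2-REB1",
    "GAL4-RGM1", "GCN4-SUM1", "FKH1-FKH2", "CIN5-NRG1", "SMP1-SWI5",
    "FKH1-NDD1", "ACE2-SWI5", "CIN5-YAP6", "STB1-SWI4", "ARG81-GCN4",
    "NDD1-STB1", "DAL81-STP1", "NRG1-PHD1",
}

print(len(adaptive), len(group))      # 21 23
print(adaptive <= group)              # True: every adaptive pair is also a group Lasso pair
print(sorted(group - adaptive))       # ['DAL81-STP1', 'GAL4-RGM1']
```

Every pair recovered by the adaptive group Lasso is also recovered by the group Lasso, and the two extra group Lasso pairs are consistent with its tendency to select more variables.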

Acknowledgements

The authors wish to thank the Editor, an associate editor and three referees for their helpful comments. The research of Jian Huang is supported in part by NIH grant R01CA120988 and NSF grant DMS 0805670. The research of Hongzhe Li is supported in part by NIH grant R01CA127334.

Appendix: Proofs

This section provides the proofs of the results in Sections 3 and 4. For simplicity, we often drop the subscript n from certain quantities; for example, we write p for pn and q for qn. Let ỹ = E(y) = X(t)β(t), , then . We write , and find the rates of convergence of .

For any two sequences {an, bn, n = 1, 2, . . .}, we write an ≍ bn if there are constants 0 < c1 < c2 < ∞ such that c1 ≤ an/bn ≤ c2 for all n sufficiently large, and write an ≍p bn if this inequality holds with probability converging to one.

Lemma A.1. .

Proof of Lemma A.1. By the properties of basis functions and (C2), there exists for such that . Thus with for and for k ∈ A0, such that g*(t) = B(t)γ*.

By the definition of ỹ and Lemma A.3 in Huang, Wu, and Zhou (2004), we have

Thus . By Lemma 1 in Zhang and Huang (2008),

By (C4),

It follows that

By Lemma A.1 in Huang, Wu, and Zhou (2004),

Since and η1 ≤ ρ,

This completes the proof of Lemma A.1.

Proof of Theorem 1. The proof of Theorem 1 is similar to the proof of the rate consistency of the group Lasso in Wei and Huang (2008). The only difference is in Step 3 of their proof of Theorem 1, where we need to consider the approximation error of the regression coefficient functions by basis expansion. Thus we omit the other details of the proof here.

From (2.6) and the definition of , we know

| (A.1) |

If , then (2.6) can be rewritten as

| (A.2) |

and the estimator of (A.1) can be approximated by where is the estimator of (A.2).

By the definition of , we have NSC on the regression coefficient , namely, . From Lemma A.3 in Huang, Wu, and Zhou (2004), the matrix U* satisfies (C1). Compared with the sufficient conditions for the group Lasso problem given in Wei and Huang (2008), the only change is in the error terms in our (A.2). From (2.2), we have

Define

for i = 1, . . . , n, j = 1, . . . , ni. Let δn = (δn(11), . . . , δn(nnn))′, εn = (εn(11), . . . , εn(nnn))′ and ρn = (ρn(11), . . . , ρn(nnn))′. By (C4), we have for some constant C1 > 0. Define

| (A.3) |

where , and bAk is a dAk-dimensional unit vector. For a sufficiently large constant C2 > 0, also define, as Borel sets in Rn×mn × Rn,

where m0 ≥ 0. As in the proof of Theorem 1 of Wei and Huang (2008), . By the triangle and Cauchy-Schwarz inequalities,

In the proof of Theorem 1 of Wei and Huang (2008), it is shown that . Since , we have for all m ≥ 0 and p sufficiently large that . Then P((U*, εn) → 1. By the definition of λn,p, and we have

| (A.4) |

where u is defined as in the proof of Theorem 1 of Wei and Huang (2008). Since ε1(t), . . . , εn(t) are iid with Eεi(tij) = 0, by (C3) and the proof of Theorem 1 of Wei and Huang (2008), we have and

| (A.5) |

From Lemma A.1 in Huang, Wu, and Zhou (2004) and (A.5), we have,

| (A.6) |

By the definition of ξ2 and the triangle inequality,

From Lemma A.1, (A.6) and dk ≥ 1, we have

This completes the proof of Theorem 1.

Proof of Theorem 2. From the proof of Theorem 1 and Theorem 2 of Wei and Huang (2008),

By Lemma A.1 in Huang, Wu, and Zhou (2004), we know that, . Then

By Lemma A.1, we know that . Thus

This completes the proof of Theorem 2.

Proof of Theorems 3 and 4. Theorems 3 and 4 can be obtained directly from Theorems 3 and 4 of Wei and Huang (2008) and Lemma A.1 in Huang, Wu, and Zhou (2004); we omit the proofs here.

References

- Antoniadis A, Fan J. Regularization of wavelet approximations (with discussion). J. Amer. Statist. Assoc. 2001;96:939–967.

- Banerjee N, Zhang MQ. Identifying cooperativity among transcription factors controlling the cell cycle in yeast. Nucleic Acids Res. 2003;31:7024–7031. doi: 10.1093/nar/gkg894.

- Chiang CT, Rice JA, Wu CO. Smoothing spline estimation for varying coefficient models with repeatedly measured dependent variables. J. Amer. Statist. Assoc. 2001;96:605–619.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Amer. Statist. Assoc. 2001;96:1348–1360.

- Fan J, Lv J. A selective overview of variable selection in high dimensional feature space (invited review article). Statist. Sinica. 2010;20:101–148.

- Fan J, Zhang JT. Two-step estimation of functional linear models with applications to longitudinal data. J. Roy. Statist. Soc. Ser. B. 2000;62:303–322.

- Friedman J, Hastie T, Hoefling H, Tibshirani R. Pathwise coordinate optimization. Ann. Appl. Statist. 2007;1:302–332.

- Fu WJ. Penalized regressions: the bridge versus the LASSO. J. Comp. Graph. Statist. 1998;7:397–416.

- Hoover DR, Rice JA, Wu CO, Yang L. Nonparametric smoothing estimates of time-varying coefficient models with longitudinal data. Biometrika. 1998;85:809–822.

- Huang J, Ma S, Xie HL, Zhang CH. A group bridge approach for variable selection. Biometrika. 2009;96:339–355. doi: 10.1093/biomet/asp020.

- Huang J, Ma S, Zhang C-H. Adaptive Lasso for high-dimensional regression models. Statist. Sinica. 2008;18:1603–1618.

- Huang JZ, Wu CO, Zhou L. Polynomial spline estimation and inference for varying coefficient models with longitudinal data. Statist. Sinica. 2004;14:763–788.

- Kim Y, Kim J, Kim Y. The blockwise sparse regression. Statist. Sinica. 2006;16:375–390.

- Lee TI, Rinaldi NJ, Robert F, Odom DT, Bar-Joseph Z, Gerber GK, Hannett NM, Harbison CT, Thompson CM, Simon I, et al. Transcriptional regulatory networks in Saccharomyces cerevisiae. Science. 2002;298:799–804. doi: 10.1126/science.1075090.

- Luan Y, Li H. Clustering of time-course gene expression data using a mixed-effects model with B-splines. Bioinformatics. 2003;19:474–482. doi: 10.1093/bioinformatics/btg014.

- Lv J, Fan Y. A unified approach to model selection and sparse recovery using regularized least squares. Ann. Statist. 2009;37:3498–3528.

- Meier L, van de Geer S, Bühlmann P. The group lasso for logistic regression. J. Roy. Statist. Soc. Ser. B. 2008;70:53–71.

- Morgan DO. Cyclin-dependent kinases: engines, clocks, and microprocessors. Annu. Rev. Cell Dev. Biol. 1997;13:261–291. doi: 10.1146/annurev.cellbio.13.1.261.

- Nasmyth K. At the heart of the budding yeast cell cycle. Trends Genet. 1996;12:405–412. doi: 10.1016/0168-9525(96)10041-x.

- Qu A, Li R. Quadratic inference functions for varying coefficient models with longitudinal data. Biometrics. 2006;62:379–391. doi: 10.1111/j.1541-0420.2005.00490.x.

- Rice JA. Functional and longitudinal data analysis: perspectives on smoothing. Statist. Sinica. 2004;14:631–647.

- Schumaker L. Spline Functions: Basic Theory. Wiley; New York: 1981.

- Schwarz G. Estimating the dimension of a model. Ann. Statist. 1978;6:461–464.

- Simon I, Barnett J, Hannett N, Harbison CT, Rinaldi NJ, Volkert TL, Wyrick JJ, Zeitlinger J, Gifford DK, Jaakkola TS, Young RA. Serial regulation of transcriptional regulators in the yeast cell cycle. Cell. 2001;106:697–708. doi: 10.1016/s0092-8674(01)00494-9.

- Spellman PT, Sherlock G, Zhang MQ, Iyer VR, Anders K, Eisen MB, Brown PO, Botstein D, Futcher B. Comprehensive identification of cell cycle-regulated genes of the yeast Saccharomyces cerevisiae by microarray hybridization. Mol. Biol. Cell. 1998;9:3273–3297. doi: 10.1091/mbc.9.12.3273.

- Tsai HK, Lu SHH, Li WH. Statistical methods for identifying yeast cell cycle transcription factors. Proc. Nat. Acad. Sci. 2005;102:13532–13537. doi: 10.1073/pnas.0505874102.

- Wang H, Xia Y. Shrinkage estimation of the varying coefficient model. J. Amer. Statist. Assoc. 2008;104:747–757.

- Wang L, Li H, Huang JZ. Variable selection in nonparametric varying-coefficient models for analysis of repeated measurements. J. Amer. Statist. Assoc. 2008;103:1556–1569. doi: 10.1198/016214508000000788.

- Wei FR, Huang J. Group selection in high-dimensional linear regression. Technical Report No. 387, Department of Statistics and Actuarial Science, The University of Iowa; 2008.

- Wu CO, Chiang CT. Kernel smoothing on varying coefficient models with longitudinal dependent variable. Statist. Sinica. 2000;10:433–456.

- Yuan M, Lin Y. Model selection and estimation in regression with grouped variables. J. Roy. Statist. Soc. Ser. B. 2006;68:49–67.

- Zhang CH, Huang J. Model-selection consistency of the LASSO in high-dimensional linear regression. Ann. Statist. 2008;36:1567–1594.

- Zhao P, Rocha G, Yu B. Grouped and hierarchical model selection through composite absolute penalties. Ann. Statist. 2008 (to appear).

- Zou H. The adaptive Lasso and its oracle properties. J. Amer. Statist. Assoc. 2006;101:1418–1429.