Abstract

In the digital era of radiology, the picture archiving and communication system (PACS) plays a pivotal role in storing and retrieving images. Integration of PACS with other health care information systems, e.g., the health information system, radiology information system, and electronic medical record, has greatly improved access to patient data at any time and from anywhere in the enterprise. In such an integrated setting, seamless operation depends critically on maintaining data integrity and continuous access for all users. Any hardware or software failure could interrupt the workflow or the flow of data and, consequently, put patient care at serious risk. Thus, any large-scale PACS now requires a disaster recovery plan to keep its data sources secure. This paper presents our experience with designing and implementing a disaster recovery and business continuity plan. The selected architecture, with two servers at each site (local and disaster recovery (DR) site), provides four different scenarios to continue running and maintain end user service. The implemented DR at University Hospitals Health System now permits continuous access to the PACS application and its contained images for radiologists, other clinicians, and patients alike.

Keywords: Disaster Recovery, Business Continuity, PACS, Implementation, Design, Health System

Introduction

In the digital era of radiology, the picture archiving and communication system (PACS) plays a pivotal role in storing and retrieving images. All components supporting digital systems, including the local area network, database servers, and digital archives, require a disaster recovery (DR) plan in order to minimize the duration of disruption in the event of loss of information technology (IT) infrastructure. The current adoption of full-scale electronic medical records (EMR) by health care practices has accentuated our dependence on the continuous function of the underlying IT.

Modern radiology departments cannot operate if they lose their IT infrastructure for any reason, and these departments can be affected by natural disasters [1–3] and by minor or major technical failures. In 2001, a group of IT industry analysts reported the most important causes of downtime in order of frequency: planned downtime, application failure, operator error, and operating system failure were the most frequent, with hardware failure, power outage, and natural disasters near the end of the list [4]. Furthermore, the Health Insurance Portability and Accountability Act (HIPAA) security rule identifies contingency planning as a standard under administrative safeguards [5]. Identifying the operational and financial impact of any disaster on the radiology practice can provide a key basis for investment in DR strategies.

The DR solution for PACS is simply defined as any configuration of the system that provides extended, uninterrupted access to images under various stresses. Fundamental to DR is having multiple, redundant, and synchronized copies of data in at least one separate physical location. Various issues must be addressed when designing an appropriate DR solution, including the availability of (1) a suitable hardware platform, (2) a suitable secondary version of the operating system, (3) established network connectivity to the remote site, and (4) an appropriate secondary version of the database system, as well as (5) recovery of the database from off-site backup and (6) recovery of image data from off-site backup [8]. The choice of the best technology for DR is determined largely by two factors: the time frame that is tolerable for recovering the data and the maximum acceptable data loss [6]. Other important considerations are on-site versus off-site storage, degrees of redundancy, online versus off-line storage of backup, and access to removable storage media for disaster recovery [7]. The ultimate selection of the best solution can also depend on the size of the hospital, the funding available for the project, and the business continuity policies of the operational section.
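The two deciding factors named above are commonly called the recovery time objective (RTO) and the recovery point objective (RPO). As a minimal sketch of how candidate designs might be screened against them, the Python below uses hypothetical candidate names and purely illustrative figures; none of the numbers are measurements from our system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrCandidate:
    """A candidate DR design with rough, illustrative estimates (hypothetical)."""
    name: str
    recovery_time_h: float  # estimated hours until service is restored
    data_loss_h: float      # estimated hours of data that could be lost


def meets_objectives(candidate: DrCandidate, rto_h: float, rpo_h: float) -> bool:
    """True when the candidate recovers fast enough and loses little enough data."""
    return candidate.recovery_time_h <= rto_h and candidate.data_loss_h <= rpo_h


# Illustrative numbers only; they are assumptions, not measured values.
candidates = [
    DrCandidate("off-site tape backup", recovery_time_h=72.0, data_loss_h=24.0),
    DrCandidate("replicated disk at a second site", recovery_time_h=0.5, data_loss_h=0.0),
]

# Screen the candidates against an assumed RTO of 1 h and RPO of 15 min.
acceptable = [c.name for c in candidates if meets_objectives(c, rto_h=1.0, rpo_h=0.25)]
print(acceptable)
```

Tightening either objective narrows the list, which is one way to see why the tolerable recovery time and data loss tend to drive the choice of technology.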

In the literature, there are several reports discussing the best digital image storage designs [7–12]. However, there is a paucity of relevant information on the best DR strategy for radiology departments. In this paper, we present our institutional experience with designing and implementing robust technology in support of a PACS disaster recovery plan for our health system, and we evaluate its effects on unplanned outages and on the scheduled downtime required for software upgrades.

Problem Statement

University Hospitals (UH) represents a health system anchored by a tertiary and quaternary medical center composed of four hospitals and their 12 satellite outpatient centers, with additional ownership of six community hospitals in the surrounding geography. Our PACS installation started in 2003 at the core medical center and expanded throughout the enterprise in successive years until uniform coverage was complete. UH now provides more than 700,000 radiology examinations over 25 sites annually on its PACS [13]. Imaging studies are distributed and reported from multiple central locations, employing an architecture that allows viewing the images anywhere, anytime, and by anyone. UH Health System (UHHS) had an initial PACS-DR solution with two linked servers in one location that operated in a failover support mode. If one server failed, all the services and the application shifted to the other, with data still accessible from the single short-term storage. However, this left UH with a residual problem of gaps in redundancy:

- The initial solution did not protect against a complete physical disaster in which both servers in the same location were lost.

- There was only one copy of the master database; although it was backed up to tape nightly and stored offsite, a rebuild at this level could take days.

- The archived image data was located at one site, with a tape copy offsite, but a rebuild following a disaster could take months.

The goal of implementing an enterprise PACS with improved disaster recovery was to improve workflow under stress and to support future growth within the UH Health System. Furthermore, with data replication, it will promote continuous access to diagnostic studies for radiologists, which will improve the continuum of patient care and business continuity.

Cluster Definition and Failover Design

A cluster represents multiple computers and their interconnections forming a single, highly available system, and clustering refers to the use of two or more systems working together to achieve a common goal. Clusters can protect important applications against downtime, and load can be distributed among several computers so that, if one portion of the system fails, the service remains available on another. When part of the system unexpectedly fails or is intentionally taken down, clustering ensures that the processes and services being run switch automatically to another machine in the cluster, a process known as "failover." This happens without interruption or the need for immediate administrative intervention, providing a high availability solution that maintains critical data availability [15]. A multi-layered and clustered configuration can have additional backup, with two separate clusters in distinct locations for local failover and the further capability of remote failover between the sites. In the following section, we briefly review the different DR options evaluated at UH and how they would operate in failover when either a single server or the entire primary data center becomes unavailable.
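The failover behavior described above can be illustrated with a minimal Python sketch. The node names and the `select_node` helper are hypothetical, and the logic is a simplification for illustration rather than how any particular cluster product decides placement.

```python
def select_node(health: dict[str, bool], current: str) -> str:
    """Return the node that should run the service.

    Stay on the current node while it is healthy; otherwise move to the
    first healthy node in the cluster (dicts keep insertion order).
    """
    if health.get(current, False):
        return current
    for node, healthy in health.items():
        if healthy:
            return node
    raise RuntimeError("no healthy node left in the cluster")


cluster = {"node-a": True, "node-b": True}
active = select_node(cluster, "node-a")   # service stays on node-a

cluster["node-a"] = False                 # node-a fails or is taken down
active = select_node(cluster, active)     # service moves to node-b
print(active)
```

The same rule covers both unplanned failure and planned maintenance: marking a node as unavailable is enough to move the service elsewhere.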

Analysis of Options

Option A: Metrocluster without Local Failover

There is one server in each separate location. A quorum server serves as a tie-breaker that the system uses to decide where to run services in case of hardware failure, and redundant short-term image storage arrays (enterprise virtual arrays (EVA)) are placed at both locations. There is no ability to fail over at the local site.

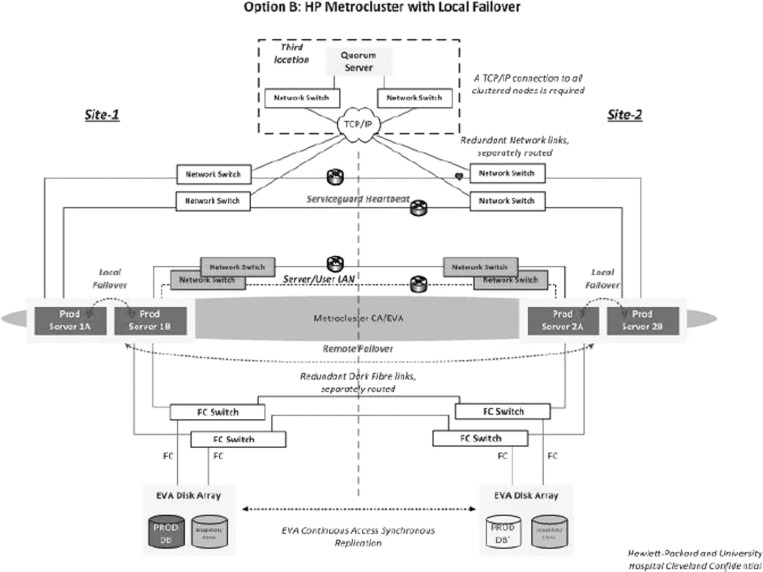
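The tie-breaker role of the quorum server can be sketched as follows. This is a toy model under the assumption that the first site to ask is granted the right to run services; the `QuorumArbiter` class and site names are hypothetical and do not represent the vendor's implementation.

```python
import threading
from typing import Optional


class QuorumArbiter:
    """Toy tie-breaker: the first site to ask is allowed to run the services."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._owner: Optional[str] = None

    def request(self, site: str) -> bool:
        """Grant ownership if it is free; report whether `site` is the owner."""
        with self._lock:
            if self._owner is None:
                self._owner = site
            return self._owner == site

    def release(self, site: str) -> None:
        """Give up ownership, e.g. before a planned move to the other site."""
        with self._lock:
            if self._owner == site:
                self._owner = None


arbiter = QuorumArbiter()
# The link between the sites is lost and both ask to run the services.
print(arbiter.request("site-1"))  # first request wins
print(arbiter.request("site-2"))  # second request is refused
```

Placing the arbiter at a third location matters because it must stay reachable when either of the two data sites is lost.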

Option B: Metrocluster with Local Failover

There are two servers at each location, with EVAs at both sites and a quorum server at a third site. Each site can fail over locally and run on one server without involving the opposite site. If one site is completely down, the full system can run at the other site. This option provides four different scenarios to continue running and maintain end user service.

Option C: Service Cluster with Local Failover

There are two servers at the local site and one server at the DR site, with no quorum server between the two sites. This option has the ability to automatically fail over and run on one server locally without involving the secondary site. There is the option to manually fail over to the DR site if the primary site is completely down.
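The three options can be compared side by side with a short Python sketch. The `DrOption` class and its method names are hypothetical, and tying the response to a site loss to the presence of a quorum server is a simplifying assumption drawn from the descriptions above, not a rule of the underlying products.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrOption:
    """Simplified model of one evaluated option (hypothetical names)."""
    name: str
    primary_servers: int
    dr_servers: int
    quorum_server: bool

    def on_primary_server_failure(self) -> str:
        """What happens when one server at the primary site fails."""
        if self.primary_servers >= 2:
            return "local failover"
        return "failover to the other site"

    def on_primary_site_failure(self) -> str:
        """What happens when the whole primary site is lost."""
        if self.quorum_server:
            return "run on the other site"
        return "manual failover to the DR site"


OPTIONS = [
    DrOption("A: Metrocluster without local failover", 1, 1, True),
    DrOption("B: Metrocluster with local failover", 2, 2, True),
    DrOption("C: Service cluster with local failover", 2, 1, False),
]

for option in OPTIONS:
    print(option.name, "|", option.on_primary_server_failure(),
          "|", option.on_primary_site_failure())
```

Only option B combines local failover at both sites with a full second site, which is the trade-off weighed against its higher cost.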

Choosing an Option

Considerable planning went into choosing and purchasing the best DR solution to meet our goal of an exceptionally solid technical base to support our essential operations. Our Radiology Informatics Committee chose to recommend a “Metrocluster” with local failover design (option B above) given its more extensive backup and functions. Figure 1 is an overview of the design, which is set up with automated failover between clustered servers at a site but requires manual failover between sites. This architecture, with two servers at each site (local and DR site), had the greatest potential to provide seamless operation in case of failure; however, option B was clearly more costly than the other two options. The proposal then entered our governance process, with review by our Executive IT Steering Committee, which principally weighed the benefits of critical business continuity against the costs. UH then approved the project in order to achieve enhanced quality, maintain work efficiency under stress, and improve the patient care experience.

Fig. 1.

An overview of the selected DR plan at UHHS

Project

Team

The DR planning process included several important steps: identifying the systems currently in use, determining the critical recovery time frames and recovery strategy, and the documentation of responsibilities and planning for the training and maintenance procedures [14]. At the first step of designing the DR solution at UH, we created a multi-disciplinary committee consisting of UH radiology PACS team members, IT networking experts, Sectra PACS vendor representatives, and Hewlett–Packard (HP) hardware designers.

Components

After selecting the best DR option for UH and obtaining the necessary institutional approvals, the hardware and connections for the new DR solution were implemented and continuous access (CA) was installed. Our redundant systems included two archives, each at a different data center. The primary archive provides read-write redundancy, with dual gateway nodes to ingest the data for archival. Therefore, if one gateway node is not available, we can fail over to the other node and continue writing and retrieving. The secondary archive stores a copy of the data but is read-only, so it can be used for retrieval in the event that the primary archive is completely unavailable. This is in addition to a separate duplicate PACS-DR system with CA synching for redundant data storage between the primary and DR EVAs. These DR systems are located at separate sites from the primary production environment. The hardware and software of the Metrocluster failover design are summarized in Tables 1 and 2, and component details follow:

Network: We employed a medical grade network with a 10 Gbps backbone, a design sufficient to support the bandwidth for all images running on the network architecture. This provides connectivity between sites, pathway redundancy, and efficient transfer for sharing of all digital images.

Dual environments: Both the primary production and DR systems run on a robust server cluster configuration (Itanium) with the addition of a local short-term storage (EVA 8400) providing further redundancy. This design included two core Itanium servers that run in a clustered configuration located at a separate site. The primary production and DR server clusters coordinate with smaller image servers located at some larger remote sites to provide faster access to near-term and local images.

Redundant archive: Our long-term archive, available 24/7, is built on the HP Medical Archive Solution (MAS), which includes a primary production redundant read/write system located at the DR site and a read-only redundant system located at the central site. The capacity has already grown to over 120 TB (redundant). Real-time, on-demand retrieval is available and takes 5–60 s for full exams, depending on the complexity of the modality.

Workstations: Upgrade of all workstations to Windows 7, 64-bit following the upgrade to SECTRA IDS7 version 12.5 allowed the equipment to run the advanced application more efficiently. Downtime for hardware and application upgrades was eliminated by improving the performance and minimizing crashes at the workstation level.

Continuous synching: We implemented continuously available synching between the redundant PACS systems, using the two additional Itanium servers and a second EVA 8400 unit, with replication of the live data from the production site to the DR site. The production and DR sites are then monitored with a quorum server located at a third site. This configuration eliminates or dramatically minimizes any downtime for the application.

Image storage: At UH, we use the “disk to disk” method, in which information from our local server disk is sent to our archive disks. There are two basic strategies for storing exams in remote locations. One is the disk to disk method, which is the replication of exam data to other disk storage devices in a secondary location. Because of significant price decreases in disk-based storage devices, many disk-only DR solutions have penetrated the PACS market and become accessible to us. The other is the “removable media” method, which used to be the least expensive but requires computer processing to move exams from disk to removable media and from removable media back to disk [16].
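The archive behavior described under Components (dual gateway nodes on the read-write primary archive, with a read-only secondary archive as a fallback for retrieval) can be sketched in Python. The function name, node labels, and exception are hypothetical and serve only to illustrate the routing rule.

```python
class ArchiveUnavailable(Exception):
    """Raised when no archive component can serve the request."""


def route_request(operation: str, gateways: dict[str, bool], secondary_up: bool) -> str:
    """Pick the archive component that should serve a read or write."""
    if operation not in ("read", "write"):
        raise ValueError(f"unknown operation: {operation!r}")
    # Either gateway node of the primary archive can read and write.
    for node, up in gateways.items():
        if up:
            return node
    # The secondary archive holds a copy but accepts reads only.
    if operation == "read" and secondary_up:
        return "secondary-archive"
    raise ArchiveUnavailable(f"cannot serve {operation} request")


gateways = {"gateway-1": False, "gateway-2": True}
print(route_request("write", gateways, secondary_up=True))   # gateway-2
gateways["gateway-2"] = False
print(route_request("read", gateways, secondary_up=True))    # secondary-archive
```

In this model, losing one gateway node affects neither reads nor writes, while losing the whole primary archive still leaves images retrievable but pauses archival of new data.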

Table 1.

UH PACS with advanced DR hardware configuration

| Component | Configuration |
|---|---|
| Database and application servers, production | RX6600, 4 dual-core Intel Itanium 2 (1.6 GHz) with 64 GB RAM, quantity of 2; remote image servers (1 at each location): RX2660 with single dual-core Intel Itanium 2, quantity of 14 |
| Database and application servers, DR site | RX7640, 4 dual-core Intel Itanium 2 (1.4 GHz) with 64 GB RAM, quantity of 2 |
| Other equipment | Quorum server: RX2660 with single dual-core Intel Itanium 2 |
| Long-term archive (medical images) | HP MAS: Bycast software on SuSE Linux OS, 120 TB redundant |
| Short-term storage | Mirrored EVA 8400 storage arrays running Command View replication, 24 TB each, quantity of 2 |
| Web servers | Dell featuring 4 Xeon quad-core 2.27 GHz processors each, quantity of 28, located at production site, DR site, and remote sites |

Table 2.

UH PACS with advanced DR software configuration

| Component | Configuration |
|---|---|
| Application and database | SECTRA PACS 12.5 consisting of IMS4S (Image Server), WISE database, and SECTRA Healthcare Database packages running in an HP Metrocluster environment on Oracle 9i with HPUX B11.31 |
| Application interfaces and web servers | SECTRA SHS (SECTRA Healthcare Server): web server and Health Level 7 interfaces running on Windows Server 2003 and 2008 |
| Storage | Short-term storage on HP EVAs with Command View CA replication between production and DR environment. Long-term storage (medical image archive) on HP MAS (Medical Archive Solution) running on SuSE Linux |

Implementation

All the components were compiled, configured, tested, and, when appropriate, placed into production. Beginning in 2010, applications were upgraded and improvements in hardware and networking began. The majority of the work necessary to create our robust DR design was then completed in 2011. We installed new Itanium servers into production, and the application was upgraded to IDS7 (version 12.5), with workstations upgraded to 64-bit Windows 7 with 12 GB RAM to support IDS7 requirements. The archive, HP MAS, was expanded, and new short-term spinning-disk online storage units (EVA) were installed. It should be noted that during this period, three new major sites (including a new hospital) launched with our radiology services. The Itanium servers in both production and DR modes proved to be robust and stable. They provide the power necessary to process 700,000 exams annually, with real-time interfaces to the radiology information system and two EMR systems, and in combination have the capacity to handle 1 million exams annually. This configuration has made the system easily expandable to handle not only more sites but also increased volumes, with little effort or concern for performance.

Once we completed the design, configuration, and implementation of these complex systems, a thorough testing phase began. The testing covered all elements needed to complete a successful validation and verification of the DR system and Metrocluster functionality. The overall strategy was to divide the testing into phases for two reasons. First, we wanted to keep the hours of consecutive downtime to a minimum by focusing on the validation and acceptance of the Metrocluster functionality before expanding the scope. Second, we wanted to thoroughly test individual cases for the different failover scenarios to prove that the cluster components performed as designed. Multiple scenarios and predicted stresses served as the tests in the first phase. The second test phase focused on a full failover and concentrated on proving the functionality of the whole system, running the core services on the secondary site and exercising common end user scenarios. The final part of the testing was to return to the primary site after running on the secondary site for a couple of days, while demonstrating no loss of function or data.

Our biggest challenges were resources, coordination of work efforts for redundant sites, and trying to accomplish all tasks without affecting patient care. Strong collaboration between SECTRA, HP, IT, and radiology contributed to the success of the project: many hours were spent on project management, communication, and task assignments. This implementation provided not only patient care improvements but also synergy between the facilities, with integrated work teams established to standardize the exams, procedures, and policies.

UH—Latest Metrics

We had 3.8 million studies on our previous system, performed between 2004 and 2011. On the new DR system, we have now expanded to 4.8 million total studies. Recently, we performed a mock DR with three distinct downtimes. We ran the system on the DR site for 3 days to ensure that there were no long-term errors or detectable impact. Then, we moved it back to the production environment in less than 5 min without loss of data. We have also already tested the system for an upgrade. In one of the DR tests, which included a database upgrade, data and systems were recovered within 30 min. An earlier test (including a database upgrade) using the previous DR system took 36 h. In a very recent unplanned event, a bad memory module on our primary servers needed to be replaced; we failed over to a single server, which resulted in no downtime. The memory module was temporarily replaced in less than 12 h. At that point, we failed back to run on both servers, resulting in less than 1 min of interruption to users. However, because of the insufficient memory capacity of the replacement module, we had a planned downtime in which we failed over to our DR servers, resulting in 8 min of downtime. When we failed back to the primary servers after running on DR for 48 h, we experienced 6 min of downtime. The previous DR system did not provide us with a sustainable solution for downtimes greater than 8 h or for those happening during peak hours. At present, our policy is to test the system once per quarter to ensure we can fail over to the DR site. Failover after hardware failure at one site now typically takes 10 min on the new DR system, whereas previously we would have been down until the hardware was repaired.
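The figures quoted above can be put side by side with a few lines of Python. The variable names are ours, and the 1-min entry is an upper bound because the text reports that interruption only as "less than 1 min."

```python
# Figures quoted in the text, in minutes.
previous_recovery_min = 36 * 60   # earlier DR test with database upgrade
new_recovery_min = 30             # same kind of test on the new DR system
speedup = previous_recovery_min / new_recovery_min

# Memory-module episode: interruption to users at each step, in minutes.
episode_min = {
    "fail over to single server": 0,
    "fail back to both servers (upper bound)": 1,
    "planned failover to DR servers": 8,
    "fail back to primary servers": 6,
}
total_episode_min = sum(episode_min.values())

print(f"recovery is {speedup:.0f}x faster")             # 72x
print(f"episode downtime <= {total_episode_min} min")   # 15 min
```

Over the whole episode, which spanned a hardware fault, a temporary repair, and 48 h of running on DR, users saw at most about 15 min of interruption in total.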

Discussion

We report on the design and implementation of the DR solution at UH, performed by a multi-disciplinary committee consisting of UH radiology PACS team members, IT networking experts, and SECTRA PACS vendor representatives. Based on the size of the institution and the volume of exams being performed in the medical (hospitals) and health (ambulatory) centers, the committee reviewed designs with different levels of redundancy and continuity before choosing a Metrocluster failover design as the proper DR solution. We were able to show that our new DR system enabled far shorter recovery times and markedly reduced the downtime needed to support application or even database upgrades. In a study conducted in 2000, Avrin et al. [8] reported a database recovery time of 2 h and 30 min for a data set of 198,215 studies. In our recent DR testing, it took merely 30 min to recover vastly more (4.8 million) studies.
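As a rough way to read the comparison with Avrin et al. [8], the recovery times and data set sizes can be normalized in Python. This is simple arithmetic on the quoted figures; the two tests used different recovery methods and hardware generations, so the per-study ratio is only an indicative number, not a like-for-like benchmark.

```python
avrin_minutes = 2 * 60 + 30      # 2 h 30 min reported by Avrin et al. [8]
avrin_studies = 198_215
uh_minutes = 30                  # recovery time in our recent DR testing
uh_studies = 4_800_000

time_ratio = avrin_minutes / uh_minutes        # how much shorter the recovery was
size_ratio = uh_studies / avrin_studies        # how much larger the data set was
per_study_ratio = time_ratio * size_ratio      # combined, per-study normalization

print(f"{time_ratio:.0f}x shorter, {size_ratio:.1f}x more studies, "
      f"about {per_study_ratio:.0f}x per study")
```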

“If it isn't documented, it wasn't done” is a maxim that every manager has probably heard and used many times throughout his or her career, and we might expand this to “if it can't be recovered, it wasn't documented.” Documentation is important when dealing with different types of loss such as property, general liability, business interruption, and catastrophe [17]. Also, in today's environment, with the enormous amount of information available in different fields, recovering the documented information has become a much harder problem for IT specialists. These problems show themselves more vividly when it comes to clinical information, which must be kept confidential yet quick to access. Electronic health records, above and beyond core clinical systems, now create new data management challenges for healthcare IT executives, since they generate increasing amounts of data that must be accessed in real time across distinct sites of care, where downtime is not an option. Another significant driver for having a proper DR solution is the increased enforcement of HIPAA security requirements that mandate data backup, DR, and emergency-mode operations planning. Perhaps because of the earlier lack of enforcement, some healthcare organizations chose to provide only the most basic DR protocols, but the Health Information Technology for Economic and Clinical Health (HITECH) Act of 2009 changed that by increasing penalties and oversight, mandating breach notifications, and extending obligations to business associates [18].

DR planning for healthcare institutions benefits from new tools and technologies. The latest disaster recovery trends include data center outsourcing (co-location data centers), cloud disaster recovery, and automation of the disaster recovery process (e.g., virtual servers) [19]. Another new technology that permits rapid recovery is “active archiving,” a method of tiered storage that gives the user access to data across a virtualized file system. Data migrates between multiple storage systems and media types, including solid-state drives, hard disk drives, magnetic tape, and optical disk [20]. All these technologies make it possible to access data continuously during a disaster. The UH DR solution incorporates some of these features, such as a partially tiered archive and two data centers. Furthermore, our architecture consists of a primary archive with redundant gateway nodes for read and write capability and a secondary, read-only archive (backup). Areas for potential improvement include the use of cloud technology and automatic failover to the DR site, which is possible but is currently a manual process initiated at the site's request.

Our experience is affected by other modifications (including software, hardware, and multiple upgrades) made concomitantly with the advanced DR implementation. Thus, the improvement seen in our system might be attributable at least in part to these other changes. The study comparisons were constrained by a relative paucity of automatic audit data from the original configuration. Finally, our DR system is a relatively new launch, and we will learn more through ongoing evaluation and assessment of the system.

Conclusion

We succeeded in establishing an advanced system with more robust duplication, much better uptime, and shorter scheduled upgrades, confirming that advanced DR technology contributes to a more stable and more widely available clinical tool. This implementation has reduced unplanned downtime and dramatically decreased the scheduled downtime required for software upgrades.

Acknowledgments

The authors would like to acknowledge the valuable suggestions made by Marcus Torngrip, PACS support specialist at UH.

References

- 1. Ehara S. In a radiology department during the earthquake, tsunami, and nuclear power plant accident. AJR Am J Roentgenol. 2011;197(4):W549–W550. doi: 10.2214/AJR.11.7004.

- 2. Engel A, Soudack M, Ofer A, et al. Coping with war mass casualties in a hospital under fire: the radiology experience. AJR Am J Roentgenol. 2009;193(5):1212–1221. doi: 10.2214/AJR.09.2375.

- 3. Brake D, Nasralla CA, Hagman JE, Samarah JK. Impact of a major tornado on the radiology department of a large teaching hospital. Kans Med. 1994;95(7):167–169.

- 4. Hanning S. Recovering from disaster. Implementing disaster recovery plans following terrorism. 2001; SANS Security Essentials. GSEC Practical Assignment Version 1.2e

- 5. http://training-hipaa.net/compliance/Security_Contingency_Planning.htm. Last access on 1/07/2012

- 6. Peglar R. Beefing up your storage networks: a solution for disaster recovery. Radiol Manage. 2003;25(1):18–21.

- 7. Wallack S. Digital image storage. Veterinary Radiology and Ultrasound. 2008;49:S37–S41. doi: 10.1111/j.1740-8261.2007.00332.x.

- 8. Avrin DE, Andriole KP, Yin L, Gould R, Arenson RL. Simulation of disaster recovery of a picture archiving and communications system using off-site hierarchal storage management. J Digit Imaging. 2000;13(2 Suppl 1):168–170. doi: 10.1007/BF03167652.

- 9. Frost MM, Jr, Honeyman JC, Staab EV. Image archival technologies. Radiographics. 1992;12(2):339–343. doi: 10.1148/radiographics.12.2.1561423.

- 10. Dumery B. Digital image archiving: challenges and choices. Radiol Manage. 2002;24(3):30–38.

- 11. Smith EM. Storage options for the healthcare enterprise. Radiol Manage. 2003;25(6):26–30.

- 12. Armbrust LJ. PACS and image storage. Vet Clin North Am Small Anim Pract. 2009;39(4):711–718. doi: 10.1016/j.cvsm.2009.04.004.

- 13. Mansoori B, Erhard KK, Sunshine JL. Picture Archiving and Communication System (PACS) implementation, integration and benefits in an integrated health system. Acad Radiol. 2012;19(2):229–235. doi: 10.1016/j.acra.2011.11.009.

- 14. Disaster recovery and business continuity. Template. ISO 27000. Sarbanes-Oxley, HIPAA, PCI DSS, COBIT, and ITIL Compliant. Prepared by. Janco. Available at: www.laintermodal.com/SamplePages/DisasterRecoveryPlanSample.pdf. Last access on 2/22/2012

- 15. Failover Clustering. Available at: http://networksandservers.blogspot.com/2011/04/failover-clustering-i.html. Last access on 2/22/2012

- 16. Heckman K, Schultz TJ. PACS Architecture. In: Dreyer KJ, Hirschorn DS, Mehta A, Thrall JH, editors. PACS a guide to the digital revolution. New York: Springer; 2006. pp. 249–269.

- 17. Boisvert S. Disaster recovery: Mitigating loss through documentation. J Healthc Risk Manag. 2011;31(2):15–17. doi: 10.1002/jhrm.20083.

- 18. Ranajee N. Best practices in healthcare disaster recovery planning: The push to adopt EHRs is creating new data management challenges for healthcare IT executives. Health Manag Technol. 2012;33(5):22–24.

- 19. Dorion P. Hot IT Disaster Recovery Trends in 2011. Available at: http://searchdisasterrecovery.techtarget.com/news/1524540/Hot-IT-disaster-recovery-trends-for-2011. Last access on 7/19/2012.

- 20. Rector M. Improving disaster recovery outcomes. Healthcare data must be protected to conform to HIPAA requirements, which active archive supports through the expanded role of tape. Health Manag Technol. 2012;33(3):16–17.