Abstract

The purpose of this study was to investigate radiologist and trainee-preferred sources for solving imaging questions. The institutional review board determined this study to be exempt from informed consent requirements. Web-based surveys were distributed to radiology staff and trainees at 16 academic institutions. Surveys queried ownership and use of tablet computers and habits of utilization of various electronic and hardcopy resources for general reference. For investigating specific cases, respondents identified a single primary resource. Comparisons were performed using Fisher’s exact test. For staff, use of Google and online journals was nearly universal for general imaging questions (93 [103/111] and 94 % [104/111], respectively). For trainees, Google and resident-generated study materials were commonly utilized for such questions (82 [111/135] and 74 % [100/135], respectively). For specific imaging questions, online journals and PubMed were rarely chosen as a primary resource; the most common primary resources were STATdx for trainees and Google for staff (44 [55/126] and 52 % [51/99], respectively). Use of hard copy journals was nearly absent among trainees. Sixty percent of trainees (78/130) own a tablet computer versus 41 % of staff (46/111; p = 0.005), and 71 % (55/78) of those trainees reported at least weekly use of radiology-specific tablet applications, compared to 48 % (22/46) of staff (p < 0.001). Staff radiologists rely heavily on Google for both general and specific imaging queries, while residents utilize customized, radiology-focused products and apps. Interestingly, residents note continued use of hard copy books but have replaced hard copy journals with online resources.

Keywords: Survey, Peer-reviewed, References, Google, Mobile

Introduction

Prior to the widespread adoption of electronic media, most radiology learning and practice-based resources existed as hard copy books and journals. Historically, radiologists have answered specific imaging questions by consulting more experienced colleagues or by referencing peer-reviewed sources, such as books or journals. By contrast, mobile devices have facilitated ubiquitous use of the internet and integration of digital imaging and communication into practices. A vast number of new electronic resources are now available for impromptu reference. Several recent studies have shown internet tools such as Google Scholar to be equivalent or superior to PubMed in identifying research references [1, 2], but another study cast doubt on whether graduate students were able to find necessary curriculum materials with an internet search alone [3]. An improved understanding of the types and formats of resources typically used in radiology training programs and practices to answer specific imaging questions would help optimize production of and access to reliable, peer-reviewed content.

The purpose of this study was to investigate radiologist and trainee-preferred sources for solving imaging questions.

Materials and Methods

Study Design

Two similar surveys were developed by the authors to assess reference resource utilization within various radiology departments at teaching institutions. The target audience of these surveys was both staff radiologists and trainees. Given the limited risk, this study met criteria for institutional review board (IRB) exemption from informed consent. Responses were voluntary and incentivized with one of three nominal offers: a guaranteed $5 gift card, a chance to win a tablet computer, or the option of taking the second, slightly shorter survey as the incentive. The short-version survey differed by excluding several sets of questions that are unrelated to the purposes of this study and are not reported here. Potentially identifying information such as name, email address, or mailing address was required for the tablet computer drawing and for delivery of the gift card, but respondents were otherwise guaranteed anonymity of their survey answers in data analysis and publication. Because multiple responses from a single individual, or otherwise unauthorized responses from a common institutional IP address, were possible, identifying information was reviewed to remove the possibility of a recipient receiving multiple rewards. When an individual submitted multiple or duplicate surveys, the first response was analyzed and the remainder excluded. Results related to incentive choice are published elsewhere [4]. Following IRB review, links to the online surveys were electronically disseminated via a commercially available web-based site (http://surveymonkey.com) to all residents and staff at the authors’ two academic institutions, who were asked to choose one of the three incentives. The survey links were also distributed to 14 additional US radiology residency program directors with a request to distribute them to all radiology residents, fellows, and staff. 
These additional institutions were chosen randomly from the Association of American Medical Colleges Electronic Residency Application Service list of both match-participating and nonparticipating radiology residency programs. The extent of the survey distribution was limited by incentive budgetary constraints. The survey was open for responses for approximately 3 weeks from March 26 to April 17, 2012. The first three authors were involved in collecting and maintaining survey results and performing statistical analyses, after the first author had deidentified incentive contact information.
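The duplicate-handling rule described above (keep each individual's first response, exclude the rest) can be illustrated with a minimal Python sketch. This is not the authors' procedure, which is described only as a review of identifying information; the `Response` record, its field names, and the matching on a normalized contact string are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Response:
    """Hypothetical survey record; field names are illustrative only."""
    contact: str         # identifying info, e.g. an email address
    submitted: datetime  # submission timestamp
    survey: str          # e.g. "long" or "short"


def keep_first_response(responses):
    """Return one response per contact, keeping the earliest submission."""
    first_by_contact = {}
    for response in sorted(responses, key=lambda r: r.submitted):
        # Assumption: contacts match after trimming and lowercasing.
        key = response.contact.strip().lower()
        # setdefault stores only the first (earliest) response per key.
        first_by_contact.setdefault(key, response)
    return list(first_by_contact.values())
```

A rule like this can only be applied to responses that carry identifying information.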

Survey Respondent Demographics and Outcomes

Demographic questions included level of training and program size, type (community vs. university), and geographic location. Participants were asked to indicate (1) which resources from a provided list they use commonly for general reference and (2) from a similar list, which resource they use as their single most preferred source for answering specific imaging questions. In analysis, resources were divided into “peer-reviewed” and “non-peer-reviewed” resources. Participants were also asked about ownership of tablet computers and how often they used radiology-specific tablet applications (“apps”) (never, rarely, 1-2× per month, 1-2× per week, and daily). All survey questions included lists of optional answers, formulated by the authors after an informal survey of colleagues, and also included a free-response box. Except for demographic questions, the order of the options presented was randomized. For general resource questions including lists of resources, respondents could choose multiple answers unless otherwise specified. “Trainees” were defined as residents and fellows combined, “junior residents” were those in their first 2 years of radiology training, and “senior residents” were those in their last 2 years of residency. “Junior staff” were institutionally employed attendings and consultants with less than 10 years of staff experience, and “senior staff” were those with more than 10 years of experience.

Statistical Analysis

All statistical analyses were performed using JMP version 9.0 (SAS Institute, Cary, NC). Categorical data were displayed as relative frequencies (percent) and compared using Fisher’s exact test with p < 0.05 considered significant.
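For readers who want to reproduce this kind of comparison without JMP, the following is a minimal pure-Python sketch of a two-sided Fisher’s exact test for a 2×2 table. It is an illustration only, not the authors’ code: the function name `fisher_exact_two_sided` is hypothetical, and the two-sided rule used here (summing the probabilities of all tables no more likely than the observed one) is one common convention that may differ in detail from JMP's implementation. The example counts are taken from the Wikipedia row of Table 2.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]].

    Rows are groups; columns are "uses resource" / "does not use resource".
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = row1 + row2
    denom = comb(total, col1)

    def prob(x):
        # Hypergeometric probability of x users in group 1, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_observed = prob(a)
    # Sum every table that is no more likely than the observed one;
    # the small relative tolerance guards against floating-point ties.
    p_value = sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-7)
    )
    return min(1.0, p_value)


# Wikipedia use for general reference: junior residents 46/70 vs.
# senior trainees 28/64 (Table 2 reports p = 0.015 for this row).
p_wikipedia = fisher_exact_two_sided(46, 70 - 46, 28, 64 - 28)
print(f"Wikipedia, junior vs. senior trainees: p = {p_wikipedia:.3f}")
```

Using exact integer binomial coefficients avoids the rounding problems that factorial-based floating-point formulas can introduce at these sample sizes.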

Results

Responses

In total, 251 responses from 134 trainees and 117 staff were received (Table 1). Eight individuals entered responses for both of the incentivized surveys based on contact information provided. The first response survey was included and the second was excluded from analysis. Forty-six percent of these responses (116/251) were collected from the authors’ two academic medical centers, accounting for 42.5 (57/134) and 50.4 % (59/117) of all trainee and staff responses, respectively. The response rates at these two centers were 72.9 (51/70) and 28.6 % (6/21) for radiology trainees, and 36.0 (54/150) and 22.7 % (5/22) from radiology staff, overall. Ten of 14 (71.4 %) of the additional institutions had >1 response, determined by comparing responses to geographic location and institutional size to the list of institutions queried; however, absolute response rates for these institutions are unknown as the number of distributed surveys is not known.

Table 1.

Respondent demographics

| | Trainees | Staff |
|---|---|---|
| Training/experience level | | |
| PGY*-2 | 37 | |
| PGY-3 | 33 | |
| PGY-4 | 28 | |
| PGY-5 | 19 | |
| PGY-6 | 17 | |
| Total trainees | 134 | |
| New staff (<3 years) | | 6 |
| Established staff (3–10 years) | | 21 |
| Senior staff (>10 years) | | 88 |
| Retired/Emeritus staff | | 2 |
| Total staff | | 117 |
| Program size (# of residents per year) | | |
| 1–3 | 3 | |
| 4–7 | 32 | |
| 8–12 | 39 | |
| 13+ | 60 | |
| Practice size (# of radiologists) | | |
| <10 | | 2 |
| 11–20 | | 5 |
| 21–50 | | 21 |
| 51–100 | | 7 |
| 101+ | | 80 |
| Program type | | |
| University-based | 116 | 105 |
| Community-based | 18 | 10 |
| Program location | | |
| Northeast | 9 | 9 |
| Northwest | 0 | 0 |
| South/southeast | 35 | 22 |
| West/midwest | 90 | 83 |
| Survey completion rate | 93.2 % (125/134) | 91.5 % (107/117) |

*PGY post-graduate year

General Resources

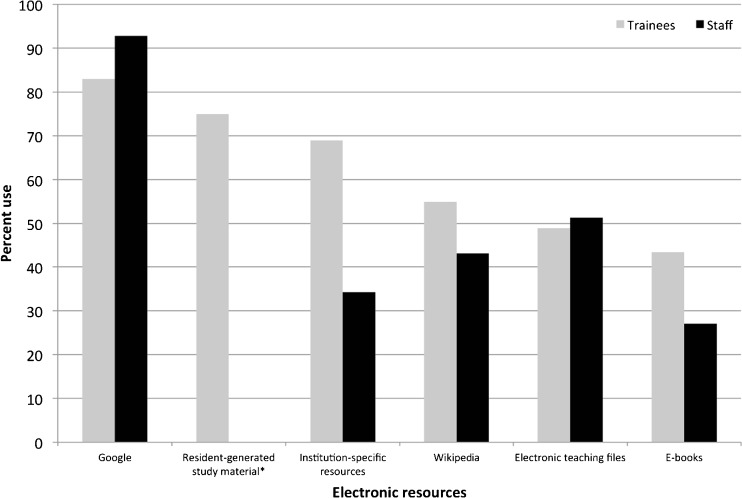

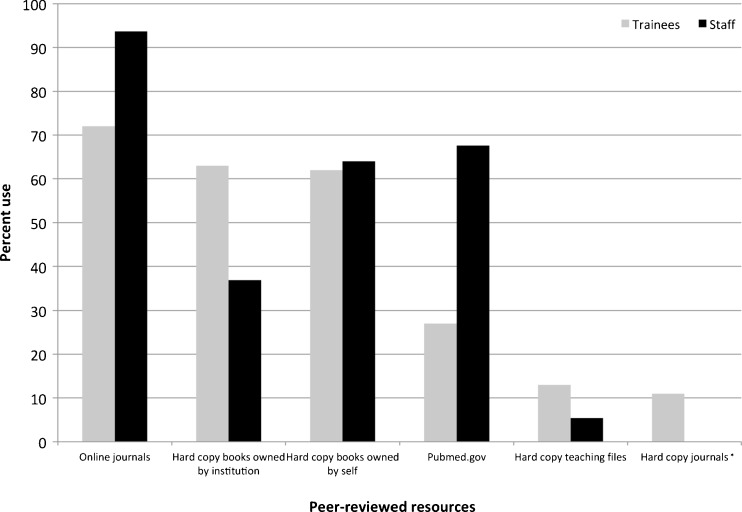

Of resources used routinely for general radiology reference, radiology staff utilize online radiology journals (93.7 % [104/111]) and Google (92.8 % [103/111]) at the highest rates (Figs. 1 and 2). For trainees, Google and resident-generated study materials were commonly utilized for such questions (82 [111/135] and 74 % [100/135], respectively). Junior residents were more likely to report using Wikipedia for general reference compared to senior residents and fellows (66 [46/70] vs. 44 % [28/64], p = 0.015), and senior residents were more likely to use resident-generated study material (88 [56/64] vs. 63 % [44/70], p = 0.001) (Table 2). There were no significant differences between preferences of staff (Table 3). Free text responses concerning other online resources used included STATdx, radswiki.com, imaios.com, headneckbrainspine.com, Google Scholar, and SearchingRadiology.com. Other non-online free text responses included audio and video lectures.

Fig. 1.

Electronic resource use by radiology staff and trainees for general radiology reference

Fig. 2.

Peer-reviewed resource use by radiology staff and trainees for general radiology reference

Table 2.

Resource utilization among junior and senior radiology trainees for general imaging questions

| Reference source | Junior residents | Senior trainees | p value |
|---|---|---|---|
| Hard copy books owned by the program | 66 % (46/70) | 59 % (38/64) | 0.48 |
| Hard copy books owned by yourself | 60 % (42/70) | 64 % (41/64) | 0.72 |
| PDF books | 56 % (39/70) | 44 % (28/64) | 0.23 |
| E-books | 37 % (26/70) | 38 % (24/64) | 1.0 |
| Online radiology journals | 66 % (46/70) | 78 % (50/64) | 0.13 |
| Hard copy radiology journals | 13 % (9/70) | 9 % (6/64) | 0.59 |
| PubMed | 20 % (14/70) | 34 % (22/64) | 0.08 |
| Google or other online search engine | 83 % (58/70) | 83 % (53/64) | 1.0 |
| Resident-generated study material | 63 % (44/70) | 88 % (56/64) | **0.0013** |
| Program-specific resources (old case conferences, old department lectures, case files, etc.) | 70 % (49/70) | 67 % (43/64) | 0.85 |
| Hard copy film teaching files (ACR) | 14 % (10/70) | 11 % (7/64) | 0.61 |
| Electronic film teaching files | 41 % (29/70) | 58 % (37/64) | 0.08 |
| Wikipedia | 66 % (46/70) | 44 % (28/64) | **0.015** |

ACR American College of Radiology, PDF Adobe portable document format, and e-books electronic books. P values below the threshold of significance (p < 0.05) are indicated in bold text

Table 3.

Resource utilization among junior and senior radiology staff for general imaging questions

| Reference source | Junior staff | Senior staff | p value |
|---|---|---|---|
| Hard copy books owned by the program | 42 % (11/26) | 35 % (30/85) | 0.64 |
| Hard copy books owned by yourself | 65 % (17/26) | 64 % (54/85) | 1.0 |
| PDF books | 23 % (6/26) | 22 % (19/85) | 1.0 |
| E-books | 38 % (10/26) | 29 % (25/85) | 0.47 |
| Online radiology journals | 96 % (25/26) | 93 % (79/85) | 1.0 |
| Hard copy radiology journals | 54 % (14/26) | 53 % (45/85) | 1.0 |
| PubMed | 62 % (16/26) | 69 % (59/85) | 0.48 |
| Google or other online search engine | 96 % (25/26) | 92 % (78/85) | 0.68 |
| Resident-generated study material | 38 % (10/26) | 33 % (28/85) | 0.64 |
| Program-specific resources (old case conferences, old department lectures, case files, etc.) | 12 % (3/26) | 4 % (3/85) | 0.14 |
| Hard copy film teaching files (ACR) | 58 % (15/26) | 49 % (42/85) | 0.51 |
| Electronic film teaching files | 50 % (13/26) | 41 % (35/85) | 0.50 |
| Wikipedia | 0 % (0/26) | 0 % (0/85) | 1.0 |

ACR American College of Radiology, PDF Adobe portable document format, and e-books electronic books.

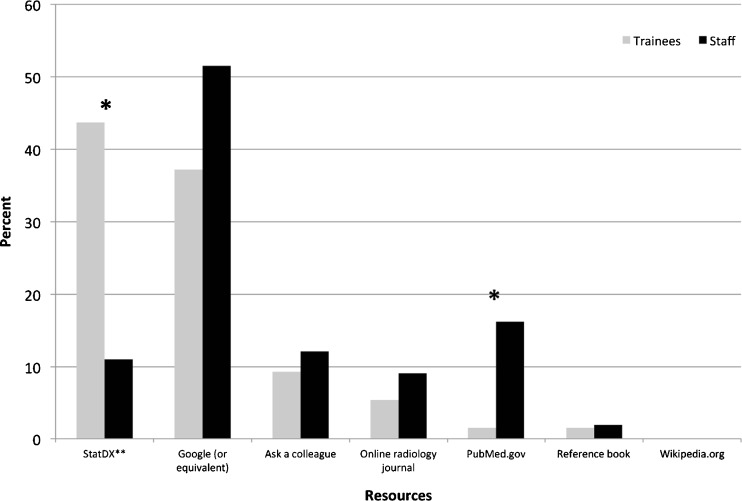

Resources for Specific Case Research

When asked to indicate a single preferred resource for answering specific radiology questions, leading choices among trainees were STATdx (43.7 % [55/126]) and Google (37.2 % [48/129]), whereas staff preferred Google (51.5 % [51/99]) and PubMed (16.2 % [16/99]). Trainees very rarely reported using PubMed, reference books, or specific online journals, and uncommonly chose consulting a colleague (9.3 % [12/129]) (Fig. 3). There was no significant difference in preferred resources between junior and senior staff, but junior residents were more likely than senior trainees to consult a colleague with specific questions (15 [10/67] vs. 3 % [2/62], p = 0.03) (Tables 4 and 5).

Fig. 3.

Preferred resource for answering specific radiology questions

Table 4.

Comparison between trainee preferred sources for investigating specific imaging questions

| Preferred imaging source | Junior residents | Senior residents | p value |
|---|---|---|---|
| Google it (or equivalent) | 31 % (21/67) | 44 % (27/62) | 0.20 |
| Search PubMed | 0 % (0/67) | 3 % (2/62) | 0.23 |
| Search STATdx | 46 % (31/67) | 44 % (27/62) | 0.86 |
| Pick up a reference book and search for it | 3 % (2/67) | 0 % (0/62) | 0.5 |
| Search for it on a specific online radiology journal | 4 % (3/67) | 6 % (4/62) | 1.0 |
| Ask a colleague or staff | 15 % (10/67) | 3 % (2/62) | **0.03** |
| Wikipedia | 0 % (0/67) | 0 % (0/62) | 1.0 |

P values below the threshold of significance (p < 0.05) are indicated in bold text

Table 5.

Comparison between junior (<10 years of experience) and senior (>10 years) staff preferred sources for investigating specific imaging questions

| Preferred imaging source | Junior staff | Senior staff | p value |
|---|---|---|---|
| Google it (or equivalent) | 44 % (8/18) | 54 % (43/80) | 0.60 |
| Search PubMed | 17 % (3/18) | 15 % (12/80) | 1.0 |
| Search STATdx | 17 % (3/18) | 8 % (6/80) | 0.36 |
| Pick up a reference book and search for it | 6 % (1/18) | 1 % (1/80) | 0.34 |
| Search for it on a specific online radiology journal | 6 % (1/18) | 10 % (8/80) | 1.0 |
| Ask a colleague or staff | 11 % (2/18) | 13 % (10/80) | 1.0 |
| Wikipedia | 0 % (0/18) | 0 % (0/80) | 1.0 |

Sixty percent of trainees (78/130) own a tablet computer versus 41.4 % of staff (46/111; p < 0.01), and 70.5 % (55/78) of those trainees reported at least weekly use of radiology-specific tablet apps, compared to 47.8 % (22/46) of staff (p < 0.01).

Discussion

The current study demonstrates that Google has emerged as the most utilized resource in radiology workflow, and that staff radiologists rely heavily on many online resources, including PubMed and online journals. Google and STATdx were the primary reference sources for answering specific, case-related questions. Use of hard copy radiology journals has nearly disappeared among trainees. These results are important because they suggest that the traditional “literature,” defined as peer-reviewed publications available in hard copy books and journals, is largely ignored by trainees seeking answers to specific imaging questions. Instead, STATdx and search engines such as Google provide gateway access to what is presumably both peer-reviewed and non-peer-reviewed material. Although electronic resources dominate trainee searches for specific imaging questions, a majority of trainees still use both electronic and hard copy books, as well as online radiology journals, for routine studying. Tablet use is also becoming widespread and may represent a major emerging medium for accessing radiology resources. These results indicate that radiologists at all levels of training are migrating to online and mobile resources at high rates.

Multiple previous studies have surveyed the use of resources among radiology residents. A 1991 study reported that residents spent most of their study time reading textbooks and hard copy journals and reviewing hard copy case files such as the American College of Radiology teaching file [5]. By 2007, a significant shift had occurred as internet use became more ubiquitous, and radiology residents reported high rates of electronic resource use for studying and referencing radiology, with up to 83 % citing the internet as a preferred learning resource at one training program [6]. In that same study, however, STATdx garnered only 3 % use by residents for researching specific imaging questions despite an institutional license [6]. In contrast to those findings, we find increasing popularity of Google, online radiology journals, and electronic books. Hard copy textbook use among residents has remained about 75 % in other studies as well, but two recent studies by Korbage et al. found that residents significantly shifted their study habits to electronic resources when provided with iPads [7, 8].

There are several limitations to this study. First, as a survey study, these data are subject to significant opinion and recall bias. Second, because a single large institution with a high response rate accounted for over a third of all trainee responses and nearly half of staff responses, results will be biased toward the opinions of this institution and should not necessarily be construed to represent a completely random sampling of US training programs. Budgetary constraints prevented us from obtaining a larger sample, and as such, some geographic regions and training/practice group sizes were not sampled (the Northwest US region, for example). Within this limitation, no significant regional or practice size differences were seen.

Although we removed duplicate surveys for those submitting both incentivized surveys, we cannot exclude the possibility that some individuals submitted surveys under false identities. Additionally, because identifying information was not required for the short-version survey, there exists the possibility that some may have taken this survey multiple times. We feel that the possibility of mistaken or fraudulent surveys is low, given no meaningful incentive for taking multiple non-reward surveys and due to aforementioned multiple reward safeguards.

Additional efforts are needed to bring peer-reviewed resources into the workflow of residents and to continue increasing the availability of reliable online content. Future studies could attempt to assess the efficacy and reliability of common learning methods and reference resources in order to ensure that both resident training and radiology problem-solving remain up to date.

Acknowledgments

Funding

Mayo Clinic internal funds were utilized for survey incentives.

Footnotes

Portions of this study were presented as an informal presentation at RSNA 2012 under the title: “Moving to the Digital Age of Radiology Education: A Survey of Learning Resources at an Academic Institution.”

References

1. Nourbakhsh E, Nugent R, Wang H, Cevik C, Nugent K. Medical literature searches: a comparison of PubMed and Google Scholar. Health Info Libr J. 2012;29:214–222. doi: 10.1111/j.1471-1842.2012.00992.x.
2. Jean-Francois G, Laetitia R, Stefan D. Is the coverage of Google Scholar enough to be used alone for systematic reviews. BMC Med Inform Decis Mak. 2013;13:7. doi: 10.1186/1472-6947-13-7.
3. Kingsley K, Galbraith GM, Herring M, Stowers E, Stewart T, Kingsley KV. Why not just Google it? An assessment of information literacy skills in a biomedical science curriculum. BMC Med Educ. 2011;11:17. doi: 10.1186/1472-6920-11-17.
4. Ziegenfuss JY, Niederhauser BD, Kallmes D, Beebe TJ. An assessment of incentive versus survey length trade-offs in a Web survey of radiologists. J Med Internet Res. 2013;15:e49. doi: 10.2196/jmir.2322.
5. Slone RM, Tart RP. Radiology residents’ work hours and study habits. Radiology. 1991;181:606–607. doi: 10.1148/radiology.181.2.1924814.
6. Kitchin DR, Applegate KE. Learning radiology: a survey investigating radiology resident use of textbooks, journals, and the internet. Acad Radiol. 2007;14:1113–1120. doi: 10.1016/j.acra.2007.06.002.
7. Korbage AC, Bedi HS. The iPad in radiology resident education. J Am Coll Radiol. 2012;9:759–760. doi: 10.1016/j.jacr.2012.05.006.
8. Korbage AC, Bedi HS. Mobile technology in radiology resident education. J Am Coll Radiol. 2012;9:426–429. doi: 10.1016/j.jacr.2012.02.008.