Abstract

Every act of information processing can in principle be decomposed into the component operations of information storage, transfer, and modification. Yet, while this is easily done for today's digital computers, the application of these concepts to neural information processing has been hampered by the lack of proper mathematical definitions of these operations on information. Recently, definitions were given for the dynamics of these information processing operations on a local scale in space and time in a distributed system, and the specific concept of local active information storage (LAIS) was successfully applied to the analysis and optimization of artificial neural systems. However, no attempt to measure the space-time dynamics of LAIS in neural data has been made to date. Here we measure LAIS on a local scale in time and space in voltage sensitive dye imaging data from area 18 of the cat. We show that storage reflects neural properties such as stimulus preferences and surprise upon unexpected stimulus change, and in area 18 reflects the abstract concept of an ongoing stimulus despite the locally random nature of this stimulus. We suggest that LAIS will be a useful quantity to test theories of cortical function, such as predictive coding.

Keywords: visual system, neural dynamics, predictive coding, local information dynamics, voltage sensitive dye imaging, distributed computation, complex systems, information storage

1. Introduction

It is commonplace to state that brains exist to “process information.” Curiously enough, however, it is much more difficult to quantify this putative processing of information exactly. In contrast, we have no difficulty quantifying information processing in a digital computer, e.g., in terms of the information stored on its hard disk, or the amount of information transferred per second from its hard disk to its random access memory, and then on to the CPU. Why, then, is it so difficult to perform a similar quantification for biological, and especially neural, information processing?

One answer to this question is the conceptual difference between a digital computer and a neural system: in a digital computer all components are laid out such that they only perform specific operations on information: a hard disk should store information, and not modify it, while the CPU should quickly modify the incoming information and then immediately forget about it, and system buses exist solely to transfer information. In contrast, in neural systems it is safe to assume that each element of the system (each neuron) simultaneously stores, transfers and modifies information in variable amounts, and the component processes are hard to separate quantitatively. Thus, while in digital computers the distinction between information storage, transfer and modification comes practically for free, in neural systems separating the components of distributed information processing requires thorough mathematical definitions of information storage, transfer and modification. Such definitions, let alone a conceptual understanding of what the terms mean in distributed information processing, were unavailable until very recently (Langton, 1990; Mitchell, 1998; Lizier, 2013).

These necessary mathematical definitions were recently derived building on Turing's old idea that every act of information processing can be decomposed into the component processes of information storage, transfer and modification (Turing, 1936)—very much in line with our everyday view of the subject. Later, Langton and others expanded Turing's concepts to describe the emergence of the capacity to perform arbitrary information processing algorithms, or “universal computation,” in complex systems, such as cellular automata (Langton, 1990; Mitchell et al., 1993), or neural systems. The definitions of information transfer and storage were then given by Schreiber (2000), Crutchfield and Feldman (2003), and Lizier et al. (2012b). However, the definition of information modification is still a matter of debate (Lizier et al., 2013).

Of these three component processes above—information transfer, storage, and modification—information storage in particular has been used with great success to analyze cerebro-vascular dynamics (Faes et al., 2013), information processing in swarms (Wang et al., 2012), and most importantly, to evolve (Prokopenko et al., 2006), and optimize (Dasgupta et al., 2013) artificial information processing systems. This suggests that the analysis of information storage could also be very useful for the analysis of neural systems.

Yet, while neuroscientists have given much attention to how information is stored structurally in the brain, e.g., via synaptic plasticity, the same attention has not been given to information storage in neural dynamics and its quantification. As an exception, Zipser et al. (1993) clearly contrasted two different ways of storing information: passive storage, where information is stored “in modified values of physiological parameters such as synaptic strength,” and active storage, where “information is preserved by maintaining neural activity throughout the time it must be remembered.” In the same paper, the authors go on to point out that there is evidence for the use of both storage strategies in higher animals, link the relatively short time scale of active storage (at most tens of seconds) with short-term or working memory, and therefore refer to it as “active information storage.”

Despite the importance of information storage for neural information processing, information theoretic measures of active information storage have not yet been used to quantify information processing in neural systems, and in particular not to measure spatiotemporal patterns of information storage dynamics. Therefore, the aim of this article is to introduce measures of information storage as analysis tools for the investigation of neural systems, and to demonstrate how cortical information storage in visual cortex unfolds in space and time. We will also demonstrate how neural activity may be misinformative about its own future and thereby generate “surprise.”

To this end, we first give a rigorous mathematical definition of information storage in dynamic activity in the form of local active information storage (LAIS). We then show how to apply this measure to voltage sensitive dye imaging data from cat visual cortex. In these data, we found sustained increases in dynamic information storage during visual stimulation, organized in clear spatiotemporal patterns of storage across the cortex, including stimulus-specific spatial patterns, and negative storage, or surprise, upon a change of the stimulus. Finally, we discuss the implications of the LAIS measure for neurophysiological theories of predictive coding [see Bastos et al. (2012), and references therein], which have been suggested to explain general operating principles of the cortex and other hierarchical neural systems.

2. Materials and methods

The use of the stored information for information processing inevitably requires its re-expression in neural activity and its interaction with ongoing neural activity and incoming information. Hence, information storage actively in use for information processing will inevitably be reflected in the dynamics of neural activity, and is therefore accessible in recordings of neural activity alone. To quantify this stored information that is present in neural time series we will now introduce a measure of information storage called local active information storage (Lizier et al., 2012b). In brief, this measure quantifies the amount of information in a sample from a neural time series that is predictable from its past—and thereby has been stored in this past. This is done by simply computing the local mutual information between the past of a neural signal and its next sample at each point in time, and for each channel of a recording. As the following material is necessarily formal, the reader may consider skipping ahead to section 2.2.3 at first reading to gain an intuitive understanding of mechanisms that serve active information storage.

2.1. Notation and information theoretic preliminaries

To avoid confusion, we first state how we formalize observations from neural systems mathematically. We define that a neural (sub-)system of interest $\mathcal{X}$ (e.g., a neuron, or brain area) produces an observed time series $\{x_1, \ldots, x_t, \ldots, x_N\}$, sampled at time intervals $\delta$. For simplicity, we choose our temporal units such that $\delta = 1$, and hence index our measurements by $t \in \{1, \ldots, N\} \subseteq \mathbb{N}$, i.e., we index in terms of samples. The full time series is understood as a realization of a random process $X$. This random process is nothing but a collection of random variables $X_t$, sorted by an integer index ($t$ in our case). Each random variable $X_t$, at a specific time $t$, is described by the set of all its $J$ possible outcomes $\mathcal{A}_{X_t} = \{a_1, \ldots, a_j, \ldots, a_J\}$, and their associated probabilities $p_t(x_t = a_j)$. The probabilities of a specific outcome $p_t(x_t = a)$ may change with $t$, i.e., when going from one random variable to the next. In this case, we indicate the specific random variable $X_t$ that the probability distribution belongs to, hence the subscript in $p_t(\cdot)$. For practical estimation of $p_t(\cdot)$, multiple time-series realizations or trials would then be required. For stationary processes, where $p_t(x_t = a)$ does not change with $t$, we simply write $p(x_t)$, and practical estimation may be done from a single time-series realization. In sum, in this notation the individual random variables $X_t$ produce realizations $x_t$, and the time-point index of a random variable $X_t$ is necessary when the random process is non-stationary. When using more than one system, the notation is generalized to multiple systems $\mathcal{X}, \mathcal{Y}, \mathcal{Z}, \ldots$.

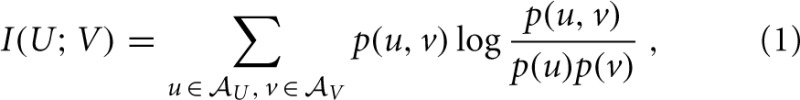

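As we will see below, active information storage is nothing but a specific mutual information between collections of random variables in the process in question. We therefore start by giving the definition of mutual information (MI) $I(U; V)$ as the amount of information held in common by two random variables $U$, $V$ on average (Cover and Thomas, 1991):

$$I(U; V) = \sum_{u \in \mathcal{A}_U} \sum_{v \in \mathcal{A}_V} p(u, v) \log \frac{p(u, v)}{p(u)\, p(v)} \tag{1}$$

$$I(U; V) = \sum_{u \in \mathcal{A}_U} \sum_{v \in \mathcal{A}_V} p(u, v) \log \frac{p(v \mid u)}{p(v)}, \tag{2}$$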

where the log can be taken to an arbitrary base, and choosing base 2 yields the mutual information in bits. Note that the mutual information $I(U; V)$ is symmetric in $U$ and $V$. As shown more explicitly in Equation (2), the MI $I(U; V)$ measures the amount of information provided (or the amount by which uncertainty is reduced) by an observation of a specific outcome $u$ of the variable $U$ about the occurrence of another specific outcome $v$ of $V$, on average over all possible values of $u$ and $v$. As originally pointed out by Fano (1961), the summands have a proper interpretation even without the weighted averaging, as the information that observation of a specific $u$ provides about the occurrence of a specific $v$. The pointwise or local mutual information is therefore defined as:

$$i(u; v) = \log \frac{p(v \mid u)}{p(v)} \tag{3}$$

It is important to distinguish the local mutual information $i(u; v)$ considered here from partial localization expressions, i.e., the partial mutual information or specific information $I(u; V)$, which are better known in neuroscience (DeWeese and Meister, 1999; Butts, 2003; Butts and Goldman, 2006). Partial MI expressions consider the information contained in specific values $u$ of one variable $U$ about the other (unknown) variable $V$. Crucially, there are two valid approaches to measuring partial mutual information, one which preserves the additivity of information and one which retains non-negativity (DeWeese and Meister, 1999). In contrast, the fully local mutual information $i(u; v)$ used here is uniquely defined, as shown by Fano (1961).
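To make Equations (2) and (3) concrete, the following minimal Python sketch computes both the average and the local mutual information from a hypothetical joint probability table of two binary variables. The function name `mutual_information` and the example probabilities are illustrative assumptions, not part of the original analysis.

```python
import math


def mutual_information(joint):
    """Return (I(U;V), {(u, v): i(u; v)}) in bits for a joint table {(u, v): p(u, v)}."""
    p_u, p_v = {}, {}
    for (u, v), p in joint.items():
        p_u[u] = p_u.get(u, 0.0) + p
        p_v[v] = p_v.get(v, 0.0) + p
    # Local MI, Equation (3): i(u; v) = log2 p(v | u) / p(v), for outcomes that occur
    local = {
        (u, v): math.log2((p / p_u[u]) / p_v[v])
        for (u, v), p in joint.items()
        if p > 0
    }
    # Average MI, Equation (2): the probability-weighted mean of the local values
    mi = sum(joint[uv] * i_uv for uv, i_uv in local.items())
    return mi, local


# Hypothetical joint distribution of two binary variables U and V
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
mi, local = mutual_information(joint)
print(round(mi, 3), {uv: round(i, 3) for uv, i in local.items()})
```

For this table, the mismatched pairs (0, 1) and (1, 0) have negative local MI of about −1.32 bits each. The probability-weighted average, about 0.278 bits, nevertheless stays non-negative.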

2.2. Local active information storage

Using the definition in Equation (3), we can immediately quantify how much of the information in the outcome $x_t$ of the random variable $X_t$ at time $t$ was predictable from the observed past state $\mathbf{x}^{k-}_{t-1}$ of the process at time $t-1$:

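$$a(x_t) = i(\mathbf{x}^{k-}_{t-1}; x_t) = \log_2 \frac{p_t(\mathbf{x}^{k-}_{t-1}, x_t)}{p_t(\mathbf{x}^{k-}_{t-1})\, p_t(x_t)} \tag{4}$$

$$a(x_t) = \log_2 \frac{p_t(x_t \mid \mathbf{x}^{k-}_{t-1})}{p_t(x_t)} \tag{5}$$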

This quantity was introduced by Lizier et al. (2012b) and called local active information storage (LAIS). Here, $\mathbf{x}^{k-}_{t-1}$ is an outcome of the collection of previous random variables $\mathbf{X}^{k-}_{t-1} = \{X_{t-1}, X_{t-t_1}, \ldots, X_{t-t_{k_{\max}}}\}$, called a state (see below). The corresponding expectation value over all possible observations of $x_t$ and $\mathbf{x}^{k-}_{t-1}$, $A(X_t) = I(\mathbf{X}^{k-}_{t-1}; X_t)$, is known simply as the active information storage. The naming of this measure aligns well with the concept of active storage in neuroscience introduced by Zipser et al. (1993), but is more general than capturing only sustained firing patterns. In the following subsections, we comment on practical issues involved in estimating the LAIS, and discuss its interpretation.

2.2.1. Interpretation and construction of the past state

As indicated above, the joint variable $\mathbf{x}^{k-}_{t-1}$ in Equation (4) is an outcome of the collection of previous random variables $\mathbf{X}^{k-}_{t-1} = \{X_{t-1}, X_{t-t_1}, \ldots, X_{t-t_{k_{\max}}}\}$. This collection should be constructed such that it captures the state of the underlying dynamical system $\mathcal{X}$, and can be viewed as a state-space reconstruction of this system. In this sense, $\mathbf{X}^{k-}_{t-1}$ must be chosen such that, given $\mathbf{X}^{k-}_{t-1}$, $X_t$ is conditionally independent of all $X_{t-t_l}$ with $t_l > t_{k_{\max}}$, i.e., of all variables that are observed earlier in the process $X$ than the variables in the state at $t-1$. The choice must be made carefully, since using too few variables $X_{t-t_l}$ from the history can result in an underestimation of $a(x_t)$, while using too many [given the amount of data used to estimate the probability density functions (PDFs) in Equation (4)] will artificially inflate it. Typically, the state can be captured via Takens delay embedding (Takens, 1981), using $d$ variables $X_{t-t_l}$ with the delays $t_l$ equally spaced by some $\tau \geq 1$, with $d$ and $\tau$ selected using the Ragwitz criterion (Ragwitz and Kantz, 2002), as recommended by Vicente et al. (2011) for the related transfer entropy measure (Schreiber, 2000). Alternatively, non-uniform embeddings may be used (e.g., see Faes et al., 2012).

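A uniform delay embedding as described above can be sketched as follows. This is a minimal NumPy illustration that assumes the delays start at $t-1$ and are spaced by $\tau$. The function `delay_embed` is hypothetical, and choosing $d$ and $\tau$ (e.g., via the Ragwitz criterion) is not shown.

```python
import numpy as np


def delay_embed(x, d, tau):
    """Return (states, targets) for a uniform delay embedding (hypothetical helper).

    states[i] holds the d past values x[t-1], x[t-1-tau], ..., x[t-1-(d-1)*tau];
    targets[i] holds the next value x[t], for every t at which the full past exists.
    """
    x = np.asarray(x, dtype=float)
    first_t = 1 + (d - 1) * tau            # earliest t with a complete past state
    ts = np.arange(first_t, len(x))
    lags = 1 + tau * np.arange(d)          # offsets 1, 1 + tau, ..., 1 + (d - 1) * tau
    states = x[ts[:, None] - lags[None, :]]
    targets = x[ts]
    return states, targets


states, targets = delay_embed(np.arange(10), d=3, tau=2)
print(states[0], targets[0])
```

For the toy input `np.arange(10)` with `d=3` and `tau=2`, the first state is (4, 2, 0) and its target is 5. Under this convention, the ten points spaced 2 samples apart used in section 2.4 would correspond to `d=10, tau=2`.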

If the process has infinite memory, and $k_{\max}$ does not exist, then the local active information storage is defined as the limit of Equation (4):

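$$a(x_t) = \lim_{k \to \infty} \log_2 \frac{p_t(\mathbf{x}^{k-}_{t-1}, x_t)}{p_t(\mathbf{x}^{k-}_{t-1})\, p_t(x_t)} \tag{6}$$

$$a(x_t) = \lim_{k \to \infty} \log_2 \frac{p_t(x_t \mid \mathbf{x}^{k-}_{t-1})}{p_t(x_t)} \tag{7}$$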

2.2.2. Relation to other measures and dynamic state updates

The average active information storage (AIS) is related to two measures introduced previously. On the one hand, a similar measure called “regularity” had been introduced by Porta et al. (2000). On the other hand, AIS is closely related to the excess entropy (Crutchfield and Feldman, 2003), as observed by Lizier et al. (2012b). The excess entropy $E(X_t) = I(\mathbf{X}^{k-}_{t-1}; \mathbf{X}^{k+}_t)$, with $\mathbf{X}^{k+}_t = \{X_t, X_{t+t_1}, \ldots, X_{t+t_{k_{\max}}}\}$ being a similar collection of future random variables from the process, measures the amount of information (on average) in the future outcomes $\mathbf{x}^{k+}_t$ of the process that is predictable from the observed past state $\mathbf{x}^{k-}_{t-1}$ at time $t-1$. As such, the excess entropy captures all of the information in the future of the process that is predictable from its past. By measuring only the subset of that information contained in the next outcome of the process, the AIS focuses on the dynamic state updates of the process.

From the point of view of dynamic state updates, the AIS is complementary to a well-known measure of the uncertainty of the next outcome of the process that cannot be resolved by its past state. Following Crutchfield and Feldman (2003), we refer to this quantity as the “entropy rate,” the conditional entropy of the next outcome given the past state: $H_\mu(X_t) = H(X_t \mid \mathbf{X}^{k-}_{t-1}) = \langle -\log_2 p_t(x_t \mid \mathbf{x}^{k-}_{t-1}) \rangle$. The complementarity of the entropy rate and AIS was shown by Lizier et al. (2012b): $H(X_t) = A(X_t) + H_\mu(X_t)$, where $H(X_t)$ is the Shannon entropy of the next measurement $X_t$. $H_\mu(X_t)$ is approximated by measures known as the Approximate Entropy (Pincus, 1991), Sample Entropy (Richman and Moorman, 2000), and Corrected Conditional Entropy (Porta et al., 1998), which have been well studied in neuroscience [see e.g., the work by Gómez and Hornero (2010); Vakorin et al. (2011), and references therein]. Many such studies refer to $H_\mu(X_t)$ as a measure of complexity; however, modern complex systems perspectives focus on complexity as being captured in how much structure can be resolved rather than how much cannot (Crutchfield and Feldman, 2003).
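The decomposition $H(X_t) = A(X_t) + H_\mu(X_t)$ can be checked numerically. The sketch below uses plug-in (frequency-count) estimates on a hypothetical binary Markov chain and pools over time, which assumes stationarity. Because all three plug-in estimates are derived from one empirical joint distribution, the identity holds exactly up to floating-point error. Function and variable names are illustrative.

```python
import math
import random
from collections import Counter


def plugin_storage_terms(x, k):
    """Plug-in estimates (bits) of H(X_t), A(X_t) and H_mu(X_t) for a discrete sequence x.

    Past states are the k immediately preceding values (tau = 1); counts are pooled
    over time, which assumes a stationary process.
    """
    pairs = [(tuple(x[t - k:t]), x[t]) for t in range(k, len(x))]
    n = len(pairs)
    c_joint = Counter(pairs)
    c_past = Counter(s for s, _ in pairs)
    c_next = Counter(v for _, v in pairs)

    def entropy(counts):
        return -sum(c / n * math.log2(c / n) for c in counts.values())

    h_next = entropy(c_next)
    # Entropy rate: H_mu = H(past, next) - H(past) = H(next | past)
    h_mu = entropy(c_joint) - entropy(c_past)
    # AIS as the average of the local values log2 p(next | past) / p(next)
    ais = sum(
        c / n * math.log2((c / c_past[s]) / (c_next[v] / n))
        for (s, v), c in c_joint.items()
    )
    return h_next, ais, h_mu


# Hypothetical binary Markov chain that repeats its last symbol with probability 0.9
rng = random.Random(0)
x = [0]
for _ in range(5000):
    x.append(x[-1] if rng.random() < 0.9 else 1 - x[-1])

h, a, h_mu = plugin_storage_terms(x, k=2)
print(f"H = {h:.3f}, A = {a:.3f}, H_mu = {h_mu:.3f}, A + H_mu = {a + h_mu:.3f}")
```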

Furthermore, given that the most appropriate measure of the complexity of a process is a matter of open debate (Prokopenko et al., 2009), we take the perspective that the complexity of a system is best approached as arising out of the interaction of the component operations of information processing: information storage, transfer and modification (Lizier, 2013). We focus on measuring these quantities since they are rigorously defined and well understood. Crucially, in comparison to the excess entropy discussed above, the focus of AIS on the information storage in use in dynamic state updates of the process makes it directly comparable with measures of information transfer and modification. Of particular importance here is the relationship of AIS to the transfer entropy (Schreiber, 2000). Together, the two measures reveal the sources of information that contribute to predicting the process's next outcome: either the past of the process itself (storage) or the past of other processes (transfer).

The formulation of the transfer entropy specifically prevents information storage in the past of the target process from being mistakenly considered as having been transferred (Lizier and Prokopenko, 2010; Lizier, 2013; Wibral et al., 2013). An interesting example is one where a periodic target process is in fact causally driven by another periodic process. After any initial entrainment period, our information processing view concludes that there is information storage in the target but no transfer from the driver (Lizier and Prokopenko, 2010). While a causal analysis would reach a different conclusion, our observational information processing perspective is simply focused on decomposing the apparent information sources of the process, regardless of the underlying causality (which in practice often cannot be determined anyway). In this view, a causal interaction can computationally subserve either information storage or transfer (as discussed further in the next section). Information transfer is necessarily linked to a causal interaction, but the reverse is not true. It has previously been demonstrated that the information processing perspective is more relevant to emergent information processing structure in complex systems, e.g., coherent information cascades, whereas causal interactions are more relevant to the micro-scale physical structure of a system, e.g., axons in a neural system (Lizier and Prokopenko, 2010).

2.2.3. Mechanisms producing active information storage

In contrast to passive storage in terms of modifications to system structure (e.g., synaptic gain changes), the mechanisms underlying active information storage are not immediately obvious. The mechanisms that subserve this task have been formally established, however, and can be grouped as follows:

Physical mechanisms in the system. This could incorporate some internal memory mechanism in the individual physical element giving rise to the process X (e.g., some decay function, or the stereotypical processes during the refractory period after a neural spike). More generally, it may involve network structures which offload or distribute the memory function onto edges or other nodes. In particular, Zipser et al. (1993) reported that networks with fixed, recurrent connections were sufficient to account for such active storage patterns, which is in line with earlier proposals. Furthermore, Lizier et al. (2012a) quantified the AIS contribution from self-loops, feedback and feedforward loops (as the only network structures contributing to active information storage).

Input-driven storage. This describes situations where the apparent memory in the process is caused by information storage structure which lies in another element which is driving that process, e.g., a periodically spiking neuron that may cause a downstream neuron to spike with the same period (Obst et al., 2013). As described in section 2.2.2 above, an observer of the process attributes these dynamics to information storage, regardless of the (unobserved) underlying causal mechanism.

Of these mechanisms of active information storage, two may seem counterintuitive at first: circular causal interactions in a loop motif, and a causal but repetitive influence from another part of the system. In both cases, we might think that there should be information transfer rather than active information storage. To see why these interactions serve storage rather than transfer, it may help to consider that all components of information processing, i.e., transfer, active storage and modification, ultimately have to rely on causal interactions in physical systems. Hence, the presence of a causal interaction cannot be linked in a one-to-one fashion to information transfer. Otherwise, no physical causes would be left for active information storage and information modification, and no consistent decomposition of information processing would be possible. Therefore, for the sake of mathematical consistency and, ultimately, usefulness, the notion of storage that is measurable in a part of the system, even when it can be related to external influences onto that part, is to be preferred. We acknowledge that information transfer has often been used as a proxy for a causal influence, dating back to suggestions by Wiener (1956) and Granger (1969). However, now that causal interventional measures and measures of information transfer can be clearly distinguished (Ay and Polani, 2008; Lizier and Prokopenko, 2010), it no longer seems warranted to map causal interactions to information transfer in a one-to-one manner.

2.2.4. Interpretation of LAIS values

Measurements of the LAIS tell us the extent to which observing the past state $\mathbf{x}^{k-}_{t-1}$ reduced our uncertainty about the specific next outcome $x_t$ that was observed. We can interpret this in terms of encoding the outcome $x_t$ in bits. Encoding $x_t$ using an optimal encoding scheme for the distribution $p_t(x_t)$ takes $-\log_2 p_t(x_t)$ bits. In contrast, encoding $x_t$ with knowledge of $\mathbf{x}^{k-}_{t-1}$, using an optimal encoding scheme for the distribution $p_t(x_t \mid \mathbf{x}^{k-}_{t-1})$, takes $-\log_2 p_t(x_t \mid \mathbf{x}^{k-}_{t-1})$ bits. The LAIS is the number of bits saved by the latter approach.
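As a worked example with hypothetical probabilities, suppose $p_t(x_t) = 1/4$ and $p_t(x_t \mid \mathbf{x}^{k-}_{t-1}) = 1/2$:

$$
\begin{aligned}
-\log_2 p_t(x_t) &= -\log_2 \tfrac{1}{4} = 2 \text{ bits},\\
-\log_2 p_t(x_t \mid \mathbf{x}^{k-}_{t-1}) &= -\log_2 \tfrac{1}{2} = 1 \text{ bit},\\
a(x_t) &= \log_2 \frac{1/2}{1/4} = 2 - 1 = 1 \text{ bit saved}.
\end{aligned}
$$

Had the past state instead lowered the conditional probability to $1/8$, encoding with the past would cost $3$ bits, and the LAIS would be $\log_2 \frac{1/8}{1/4} = -1$ bit.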

At first glance, we may assume that the LAIS is a positive quantity. Indeed, as a mutual information, the average AIS is always non-negative. However, the LAIS can be negative as well as positive. It is positive where $p_t(x_t \mid \mathbf{x}^{k-}_{t-1}) > p_t(x_t)$, i.e., where the observed past state $\mathbf{x}^{k-}_{t-1}$ made the following observation $x_t$ more likely to occur than we would have guessed without knowledge of the past state. In this case, we state that $\mathbf{x}^{k-}_{t-1}$ was informative. In contrast, the LAIS is negative where $p_t(x_t \mid \mathbf{x}^{k-}_{t-1}) < p_t(x_t)$, i.e., where the observed past state made the following observation $x_t$ less likely to occur than we would have guessed without knowledge of the past state (but it occurred nevertheless, making the cue given by $\mathbf{x}^{k-}_{t-1}$ misleading). In this case, we state that $\mathbf{x}^{k-}_{t-1}$ was misinformative about $x_t$. To better understand negative LAIS, also see the further discussion in Lizier et al. (2012a). It includes examples in cellular automata where the past state of a variable was misinformative about the next observation due to the strong influence of another, unobserved source variable at that time point.
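The sign behavior of the LAIS can be illustrated with a plug-in estimate on a hypothetical discrete sequence: a period-2 pattern that contains one unexpected repeat. The sketch below pools counts over time, which is a stationarity assumption, and uses a past state of length k = 1. `local_ais` is an illustrative helper, not the JIDT API.

```python
import math
from collections import Counter


def local_ais(x, k):
    """Plug-in LAIS (bits) for each t >= k of a discrete sequence; entry i belongs to t = i + k."""
    pairs = [(tuple(x[t - k:t]), x[t]) for t in range(k, len(x))]
    n = len(pairs)
    c_joint = Counter(pairs)
    c_past = Counter(s for s, _ in pairs)
    c_next = Counter(v for _, v in pairs)
    # a(x_t) = log2 p(x_t | past) / p(x_t), with probabilities pooled over all time points
    return [
        math.log2((c_joint[(s, v)] / c_past[s]) / (c_next[v] / n))
        for s, v in pairs
    ]


# Hypothetical sequence 0, 1, 0, 1, ... in which x[61] is changed from 1 to 0
x = [0, 1] * 50
x[61] = 0
lais = local_ais(x, k=1)
print(round(lais[0], 2), round(lais[60], 2), round(lais[61], 2))
```

Most samples have a positive LAIS of about 1 bit. The two transitions into the repeated zeros, at t = 61 and t = 62 (list indices 60 and 61), have a LAIS of about −3.7 bits. At these points the past state was misinformative, while the average over all samples stays positive.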

2.2.5. Choice of the overall time window for constructing probability densities from data

As already pointed out above, active information storage is tightly related to the predictability of a given brain area's output as seen by the receiving brain area. This predictability hinges on two abilities of the receiver: to see the past states in the output of a brain area (see previous section), and to interpret the past states in the received time series in order to predict the next value. In other words, the receiver needs to guess $p_t(x_t, \mathbf{x}^{k-}_{t-1})$ correctly in order to exploit the active information storage. If the guess of the receiving neuron or brain area $n$, i.e., $\hat{p}^{(n)}_t(x_t, \mathbf{x}^{k-}_{t-1})$, is incorrect, then only a fraction of the information storage can be used for successfully predicting future events. The losses could be quantified as the extra coding cost for the receiving area when assuming $\hat{p}^{(n)}_t(\cdot)$ instead of $p_t(\cdot)$. This loss is simply the Kullback–Leibler divergence $D_{KL}(p_t \,\|\, \hat{p}^{(n)}_t)$. This scenario views the receiving brain area mostly as an optimal encoder or compressor. In contrast, in the framework of predictive coding theories, the cost would arise because the receiving brain area could not predict the incoming signal well. It could therefore not inhibit that signal via feedback to the sending brain area (Rao and Ballard, 1999). In this scenario, imperfect predictions resulting from using $\hat{p}^{(n)}_t$ instead of $p_t$ would cause reduced inhibition and more frequent signaling of prediction errors by the sending system, leading to a metabolic cost.
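The extra coding cost mentioned above can be illustrated for a discrete distribution. Encoding samples from $p_t$ with a code optimized for a mismatched guess $\hat{p}$ costs, on average, the cross-entropy. Its excess over the entropy of $p_t$ equals $D_{KL}(p_t \,\|\, \hat{p})$. The sketch uses hypothetical three-outcome distributions, which are much simpler than the joint distributions of past states and next values that a receiving area would actually face.

```python
import math


def cross_entropy(p, q):
    """Average code length (bits) for symbols drawn from p, using a code optimal for q."""
    return -sum(pi * math.log2(q[s]) for s, pi in p.items() if pi > 0)


def kl_divergence(p, q):
    """Kullback-Leibler divergence D_KL(p || q) in bits."""
    return sum(pi * math.log2(pi / q[s]) for s, pi in p.items() if pi > 0)


# Hypothetical true distribution p_t and a receiver's mismatched guess p_hat
p_true = {"a": 0.5, "b": 0.25, "c": 0.25}
p_hat = {"a": 0.25, "b": 0.25, "c": 0.5}

optimal_cost = cross_entropy(p_true, p_true)    # equals the entropy of p_true
mismatched_cost = cross_entropy(p_true, p_hat)
extra_cost = mismatched_cost - optimal_cost
print(optimal_cost, mismatched_cost, extra_cost, kl_divergence(p_true, p_hat))
```

Here the receiver pays 0.25 extra bits per symbol (1.75 instead of 1.5 bits), which matches the KL divergence.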

To see the storage that the receiving brain area can exploit, the time interval used for the practical estimation of the probability density functions (PDFs) from neural recordings should best match the expected sampling strategy of the receiving brain area. For example, if we think that probabilities are evaluated over long time frames, then it might make sense to pool all available data in the experiment. Even a mis-estimation of the true probability densities $p_t(\cdot)$ (due to potential non-stationarities) will then better reflect the internal estimate $\hat{p}(x_t, \mathbf{x}^{k-}_{t-1})$, and thus the internally predictable information. However, if we think that probabilities are only estimated instantaneously by pooling over all available inputs to a brain area at any time point, then we should construct the necessary PDFs only from all simultaneously acquired data from all measurement channels, and not pool over time. The latter view could also be described as assuming that the brain area receiving the signals in question computes the PDF instantaneously by pooling over all its inputs, without keeping any longer-term memory of the observed probabilities. This construction of a PDF would be linked closely to an instantaneous physical ensemble approach, considering that all incoming channels are physically equivalent, but are only assessed at a single instant in time. In contrast, if we assume that learning of the relevant PDFs takes place on a lifelong timescale, then PDFs should be acquired from very long recordings of a freely behaving subject or animal in a natural environment, and the outcomes of a specific experiment should be interpreted using this “lifelong” PDF. Here we lean toward this latter approach and pool all available data to estimate the internally available $\hat{p}$.

Note that while we indeed pool over all the available data to obtain the distribution $\hat{p}$, the interpretation of the data in terms of active information storage is local per agent and time step. This is exactly the meaning of “local” in local active information storage as introduced by Lizier et al. (2012b). It is also akin to the relation between the local mutual information introduced by Fano (1961) and the corresponding global PDF. The local active information storage values are thus obtained by interpreting realizations for a single agent and a single time step in the light of a probability distribution that is obtained over a more global view of the system in space and time. This is also indicated by the use of $\hat{p}$ instead of $p_t$. Also see the discussion section for other potential choices of obtaining $\hat{p}$.

2.3. Acquisition of neural data

2.3.1. Animal preparation

Data were obtained from an anesthetized cat. The animal had been anesthetized and artificially ventilated with a mixture of O2 and N2O (30/70%) supplemented with Halothane (0.7%). All procedures followed the guidelines of the Society for Neuroscience and were in accordance with the German law for the protection of laboratory animals. They were permitted by the local authorities and overseen by a designated veterinarian.

2.3.2. Voltage sensitive dye imaging

For optical imaging, the visual cortex (area 18) was exposed and an imaging chamber was implanted over the craniotomy. The chamber was filled with silicone oil and sealed with a glass plate. A voltage sensitive dye (RH1691, Optical Imaging Ltd, Rehovot, Israel) was applied to the cortex for about 2 h, and excess dye was subsequently washed out. For imaging, we used a CMOS camera system (Imager 3001, Optical Imaging Ltd, Rehovot, Israel; camera: Photon Focus MV1 D1312, chip size 1312 × 1082 pixels). The camera was fitted with a lens system consisting of two 50 mm Nikon objectives, providing a field of view of 8.7 × 10.5 mm, and with an epifluorescence illumination system (excitation: 630 ± 10 nm, emission high pass 665 nm). In order to optimize the signal-to-noise ratio, raw camera signals were spatially binned to 32 × 32 camera pixels, allowing for a spatial resolution of 30 × 32 μm² per data pixel. Camera frames were collected at a rate of 150 Hz, resulting in a temporal resolution of 6.7 ms.

2.3.3. Visual stimulation

Stimulus presentation was triggered by the animal's heartbeat and lasted 2 s, and camera frames were collected during the entire stimulation period. We denote such a single stimulation period and the corresponding data acquisition as a trial. Each trial consisted of 1 s of stimulation with an isoluminant gray screen, followed by stimulation with fields of randomly positioned dots moving coherently in one of eight different directions at 16°/s (dot size: 0.23° visual angle; 384 dots distributed over an area of 30° (vertical) by 40° (horizontal) visual angle). Stimuli were presented in blocks of 16 trials. Each block consisted of eight trials using the stimuli described before and an additional eight trials that consisted only of the presentation of the isoluminant gray screen for 2 s (“blank trials”). Each motion direction condition was presented eight times in total (eight trials), resulting in the presentation of 64 stimulus trials and 64 blank trials in total. Of the presented set of eight stimulus types, seven were used for the final analysis, as the computation for one condition did not finish in time before the local compute clusters were taken down for service.

2.3.4. VSD data post-processing

After spatial binning of 32 × 32 camera pixels into one data pixel, VSD data were averaged over all presentations of blank trials and this average was subtracted from the raw data to remove the effects of dye-bleaching and heartbeat. Finally, the data were denoised using a median filter of 3 × 3 data pixels.
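The post-processing chain above (spatial binning, subtraction of the blank-trial average, 3 × 3 median filtering) can be sketched in NumPy as follows. This is a minimal illustration, not the pipeline actually used: the array layout (trials, time, rows, columns), the function names, and the choice to keep only complete ("valid") median windows are assumptions. The text reports that border rows and columns were removed after median filtering; how many are lost depends on the border handling, which here crops one data pixel at each image edge.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def bin_pixels(frames: np.ndarray, b: int = 32) -> np.ndarray:
    """Average non-overlapping b x b camera-pixel blocks into one data pixel.

    frames: array (..., H, W); rows/columns that do not fill a whole block are dropped.
    """
    h, w = frames.shape[-2] // b, frames.shape[-1] // b
    cropped = frames[..., : h * b, : w * b]
    # (..., h, b, w, b): average over the two within-block axes
    return cropped.reshape(*frames.shape[:-2], h, b, w, b).mean(axis=(-3, -1))


def preprocess(stim: np.ndarray, blank: np.ndarray, b: int = 32) -> np.ndarray:
    """stim: (n_stim_trials, T, H, W), blank: (n_blank_trials, T, H, W) raw frames."""
    stim_b = bin_pixels(stim, b)
    blank_mean = bin_pixels(blank, b).mean(axis=0)  # average over all blank trials
    corrected = stim_b - blank_mean                 # removes bleaching/heartbeat components
    # 3 x 3 spatial median filter using only complete windows, so image borders are cropped
    windows = sliding_window_view(corrected, (3, 3), axis=(-2, -1))
    return np.median(windows, axis=(-2, -1))
```

Because the blank average is subtracted per time point, any component that is identical in stimulus and blank trials (such as the bleaching trend) cancels exactly.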

2.4. Measurement of LAIS on VSD neural data

Estimation of LAIS was performed using the open source Java Information Dynamics Toolkit (JIDT) (Lizier, 2012), with a history parameter kmax of ten time points spaced 2 samples, i.e., 2/(150 Hz) = 13.3 ms, apart. The total history length thus covered 133 ms, or roughly one cycle of a neural theta oscillation, which seems a reasonable time horizon for a downstream neural population that ultimately must assess these states. To enable LAIS estimation from a sufficient number of samples, we considered the data pixels as homogeneous variables executing comparable state transitions, such that the pixels form a physical ensemble in terms of information storage dynamics. Pooling data over pixels thus enables an ensemble estimate of the PDFs in question. This approach seems justified as all pixels reported activity from a single brain area (area 18 of cat visual cortex, see below). Mutual information was estimated using a box kernel estimator (Kantz and Schreiber, 2003) with a kernel width of 0.5 standard deviations of the data.
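To show how delay embedding, ensemble pooling and the box kernel fit together, the following sketch computes local active information storage for a set of pixel time series whose PDFs are pooled. It is a brute-force NumPy illustration under stated assumptions, not JIDT's implementation: the past state at time n is taken as (x[n], x[n−τ], ..., x[n−(k−1)τ]) and predicts x[n+1], the pooled data are z-scored so that r = 0.5 corresponds to a kernel width of 0.5 standard deviations, and neighbour counts include the sample itself. The function names are hypothetical.

```python
import numpy as np


def embed(x: np.ndarray, k: int = 10, tau: int = 2):
    """Return (past states, next values) for one time series x.

    Assumed convention: the past state at time n is (x[n], x[n - tau], ...,
    x[n - (k - 1) * tau]) and the value to be predicted is x[n + 1].
    """
    span = (k - 1) * tau
    n = np.arange(span, len(x) - 1)
    past = np.stack([x[n - j * tau] for j in range(k)], axis=1)
    return past, x[n + 1]


def local_ais_box_kernel(series, k=10, tau=2, r=0.5):
    """Local AIS in bits for every embedded sample, PDFs pooled over all series."""
    pooled = np.concatenate([np.ravel(s) for s in series])
    mu, sd = pooled.mean(), pooled.std()
    # z-score with pooled statistics so that r is measured in standard deviations
    pasts, nexts = zip(*(embed((np.asarray(s) - mu) / sd, k, tau) for s in series))
    past, nxt = np.concatenate(pasts), np.concatenate(nexts)
    n_samples = len(nxt)
    lais = np.empty(n_samples)
    for i in range(n_samples):
        # box (max-norm) kernel: neighbours within r in every coordinate
        in_past = np.all(np.abs(past - past[i]) <= r, axis=1)
        in_next = np.abs(nxt - nxt[i]) <= r
        c_joint = np.count_nonzero(in_past & in_next)
        c_past = np.count_nonzero(in_past)
        c_next = np.count_nonzero(in_next)
        # a = log2[p(next, past) / (p(past) p(next))], with p estimated as count / N
        lais[i] = np.log2(n_samples * c_joint / (c_past * c_next))
    return lais
```

The loop needs O(N²) operations, so it is only practical for small ensembles; with the roughly 10⁷ pooled samples used in this study, a dedicated, optimized implementation is required.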

Here we assume that the neural system is at least capable of exploiting the statistics arising from the stimulation given throughout the experiment, and we therefore construct PDFs from all data (time points and pixels) for a given condition. Accordingly, we pool data over the full time course of each trial, from −1 to 1 s. After removal of the two rows/columns on each side of an image because of the applied median filter, each image of the VSD data had a spatial configuration of 67 × 137 data pixels. Each trial (of a total of eight trials per condition) yielded 288 LAIS values per pixel, based on an original data length of 298 samples and a history length (state dimension) of 10 samples. The product of final image size and LAIS samples resulted in 2.64 · 10⁶ data points per trial for the estimation of the PDF for each motion direction condition. Due to computational limitations, LAIS estimates were computed separately for two blocks of four trials each, resulting in 1.06 · 10⁷ data points entering each estimation in JIDT.
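The sample counts quoted above follow from simple arithmetic, reproduced here with the numbers given in the text (67 × 137 data pixels, 288 LAIS values per pixel and trial, four trials per estimation block).

```python
rows, cols = 67, 137          # data pixels remaining after median filtering
lais_per_pixel = 298 - 10     # samples per trial minus history length, as stated in the text
per_trial = rows * cols * lais_per_pixel
per_block = 4 * per_trial     # four trials enter each estimation
print(per_trial, per_block)   # 2643552 10574208
```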

2.5. Correlation analysis of LAIS and VSD data

For each of the seven analyzed motion direction conditions, VSD data and LAIS values were first organized separately into five-dimensional arrays with the dimensions blocks (1, 2), trials (1–4), time (−1 to 1 s), pixel rows (67), and pixel columns (137). For correlation analysis, these arrays were linearized and entered into a Spearman rank correlation analysis to obtain correlation coefficients ρ(VSD, LAIS) and their significance values.
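The correlation analysis can be sketched as follows. Spearman's ρ is the Pearson correlation of the ranks (tied values receive their average rank), computed here on the linearized arrays separately for the time intervals reported in Table 1. This NumPy-only sketch rests on assumptions: the VSD and LAIS arrays are taken to be aligned sample by sample in time, interval bounds are treated as inclusive, and significance testing (compared against the threshold 0.05/7 in Table 1) is omitted. The names `INTERVALS` and `interval_correlations` are hypothetical.

```python
import numpy as np


def rankdata(a: np.ndarray) -> np.ndarray:
    """Ranks 1..N, with ties replaced by their average rank."""
    order = np.argsort(a, kind="mergesort")
    ranks = np.empty(len(a))
    ranks[order] = np.arange(1, len(a) + 1)
    _, inverse, counts = np.unique(a, return_inverse=True, return_counts=True)
    # average the ranks that share the same value
    return (np.bincount(inverse, weights=ranks) / counts)[inverse]


def spearman(x: np.ndarray, y: np.ndarray) -> float:
    """Spearman rank correlation of two arrays after linearizing them."""
    rx, ry = rankdata(np.ravel(x)), rankdata(np.ravel(y))
    return float(np.corrcoef(rx, ry)[0, 1])


INTERVALS = {"full epoch": (-1.0, 1.0), "-1 to 0 s": (-1.0, 0.0),
             "0.04-0.14 s": (0.04, 0.14), "0.2-1 s": (0.2, 1.0)}


def interval_correlations(vsd, lais, times):
    """vsd, lais: (blocks, trials, time, rows, cols); times: (time,) in seconds."""
    out = {}
    for name, (t0, t1) in INTERVALS.items():
        sel = (times >= t0) & (times <= t1)
        out[name] = spearman(vsd[:, :, sel], lais[:, :, sel])
    return out
```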

3. Results

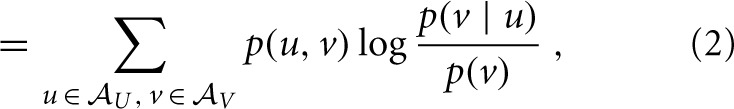

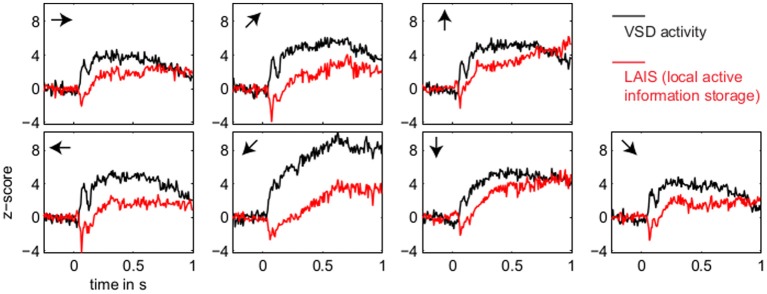

LAIS values exhibited a clear spatial and temporal pattern. The temporal pattern exhibited higher LAIS values during stimulation with a moving random dot pattern than under baseline stimulation with an isoluminant gray screen, with effects being largest in spatially clearly segregated regions (Figures 1–3). The spatial pattern of LAIS under stimulation was dependent on the motion direction of the drifting random dots in the stimulus (Figure 2).

Figure 1.

Local active information storage (LAIS) allows neural information processing to be traced in space and time. Spatio-temporal structure of LAIS in cat area 18: seven frames from the spatio-temporal LAIS data, taken at the times indicated below each frame. Stimulus onset was at time 0. Baseline activity (−74.5 ms) is close to zero and mostly uniform. At 40 ms after stimulus onset, LAIS is negative in a region that corresponds to the region that later exhibits high LAIS. From around 227 ms, increased LAIS sets in and lasts until the end of the data epoch (1 s), albeit with slow fluctuations (see Figure 3). Also see the post-stimulus time average in Figure 2.

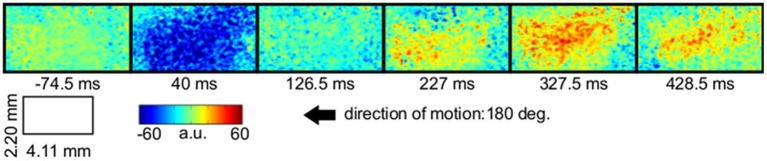

Figure 3.

Temporal evolution of VSD activity and local active information storage. Spatial averages over the 67 × 137 data pixels for VSD activity (black traces), and the LAIS (red traces) versus time. Motion directions are indicated by arrows for each panel. Note that LAIS for the vertical, the right, and the downward-right motion directions continues to rise toward the end of the stimulus interval, despite declining activity levels. Also note that the unexpected onset response at approximately 40 ms leads to negative active information storage. For an explanation see the Materials and Methods section.

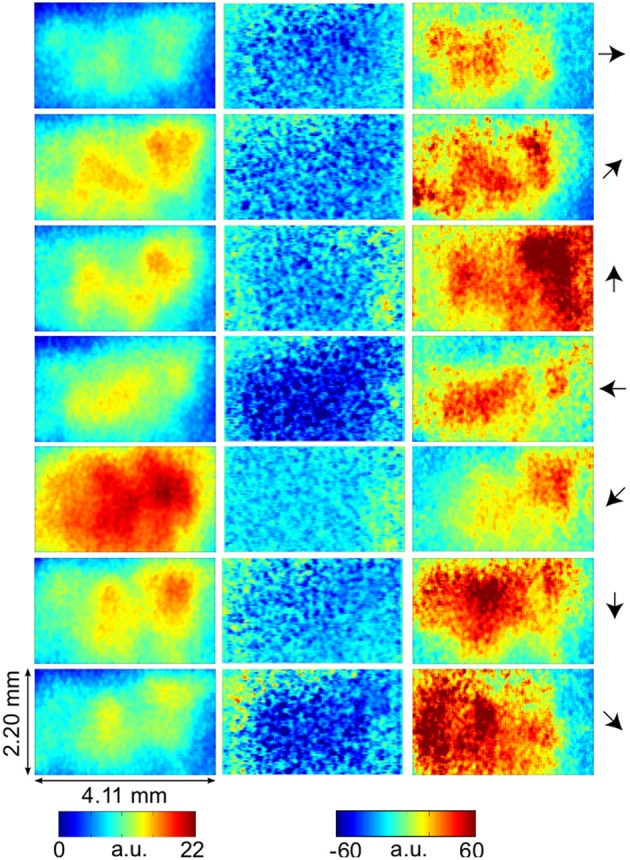

Figure 2.

VSD activity and local active information storage (LAIS) maps. Left column: VSD activity averaged over stimulation epochs and over time after the initial transient (0.2–1 s after stimulus onset). Middle column: LAIS map immediately after stimulus onset; negative values (blue) indicate surprise of the system. Right column: time-averaged LAIS maps from the stimulus period after the initial transient (0.2–1 s). Rows 1–7 present different stimulus motion directions: 0, 45, 90, 180, 225, 270, and 315° (indicated by arrows on the right; arrow colors match the time-trace colors in Figure 3). 67 × 137 data pixels per image; pixel dimension 30 × 32 μm². The left–right image direction corresponds to the anterior–posterior direction.

In contrast to this spatially highly selective elevation of LAIS values under stimulation, there was a sharp drop in LAIS values at approximately 40 ms after stimulus onset, with negative LAIS values measured at many pixels (Figure 1, 40 ms window; Figure 2, middle column; Figure 3, lower row). This indicates that the baseline activity was misinformative about the subsequent stimulus-related activity (an observer would expect the baseline activity to continue). This transient, stimulus-induced drop in LAIS was more evenly distributed throughout the imaging window than the elevated LAIS in the later stimulus period after 200 ms (Figure 2, middle column). The transient drop in LAIS had a recovery time of approximately 34 ms, which also gives an estimate of the dominant intrinsic storage duration of the neural processes.
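Why misinformative baseline activity produces negative values can be seen in a toy example. The sketch below uses a discrete plug-in estimator with history length k = 1 (not the kernel estimator used for the VSD data) on a hypothetical sequence in which a long constant "baseline" is followed by an abrupt change. At the transition, the past state makes the observed next value less likely than it is overall, so the local value is negative, while every other sample has positive local storage.

```python
import numpy as np
from collections import Counter


def local_ais_plugin(x, k=1):
    """Plug-in local AIS (bits) for a discrete sequence with history length k."""
    pairs = [(tuple(x[n - k + 1:n + 1]), x[n + 1]) for n in range(k - 1, len(x) - 1)]
    n_obs = len(pairs)
    c_joint = Counter(pairs)                 # counts of (past state, next value)
    c_past = Counter(p for p, _ in pairs)    # counts of past states
    c_next = Counter(v for _, v in pairs)    # counts of next values
    return np.array([np.log2(n_obs * c_joint[(p, v)] / (c_past[p] * c_next[v]))
                     for p, v in pairs])


# hypothetical "baseline" of 50 zeros followed by an abrupt "onset" of 50 ones
x = [0] * 50 + [1] * 50
a = local_ais_plugin(x, k=1)
print(a[49])   # transition 0 -> 1: log2(99/2500), about -4.66 bits
print(a[0])    # 0 -> 0 inside the baseline: log2(99/50), about 0.99 bits
```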

In all conditions we observed a weak positive correlation between local VSD activity values and LAIS values over time and space (Table 1). Looking at individual time intervals, we found stronger, negative correlation coefficients both for the baseline interval (−1 to 0 s) and for the initial interval after the onset of the moving dot stimulus (0.04–0.14 s). In contrast, we observed a strong positive correlation in the late stimulus interval (0.2–1 s). This means that the increased dynamic range observed in the VSD signals during stimulation with the moving stimuli led to an increased amount of predictable information, rather than to a decrease. This correlation also means that storage was generally higher in neural populations that were preferentially activated by the respective moving stimulus (also compare left and right columns in Figure 2 for each motion direction).

Table 1.

Correlation of LAIS and local VSD activity.

| Motion direction | Full epoch | −1 to 0 s | 0.04–0.14 s | 0.2–1 s |
|---|---|---|---|---|
| 0° | 0.05* | −0.33* | −0.09* | 0.45* |
| 45° | 0.09* | −0.50* | −0.20* | 0.65* |
| 90° | 0.12* | −0.30* | −0.13* | 0.48* |
| 180° | 0.07* | −0.27* | −0.22* | 0.44* |
| 225° | 0.07* | −0.58* | −0.22* | 0.71* |
| 270° | 0.17* | −0.39* | −0.33* | 0.68* |
| 315° | 0.03* | −0.37* | −0.17* | 0.40* |

Correlation coefficients are Spearman rank correlations.

\*p < 0.05/7.

4. Discussion

Our results demonstrate increased local active information storage in the primary visual cortex of the cat under sustained stimulation, compared to baseline. The LAIS increase was spatially clustered and stimulus-specific (Figure 2). The temporal pattern of LAIS consisted of an initial sharp drop in LAIS from 0.04 to 0.14 s after onset of the moving stimulus, followed by a sustained rise in LAIS up to the end of the stimulation epoch (Figure 3). The sharp drop at stimulus onset for many pixels is important because it indicates that the past activity of the pixels was surprising, or misinformative, about the next outcomes near that onset. This could potentially be used to detect changes of processing regimes directly from neural activity.

The subsequent sustained rise in LAIS is particularly notable because of the random spatial structure of each stimulus on a local scale; this random spatial structure translates into a random temporal stimulation sequence in the receptive field of each neuron because of the stimulus motion. The increased LAIS despite random stimulation of the neurons suggests that our observation is not due to input-driven storage, i.e., memory or storage contained already in the spatio-temporal stimulus features that drive the observed LAIS [as discussed in section 2.2.3 and by Obst et al. (2013)]. Nevertheless, as revealed by correlation analysis, storage was highest in regions preferentially activated by the stimulus, suggesting a representational nature of LAIS in these data with respect to the motion features of the stimulus. In sum, the changes of LAIS with stimulation onset, stimulation duration, and stimulus type clearly demonstrate that LAIS reflects neural processing, rather than mere physiological or instrumentation-dependent noise regularities. This leads us to believe that LAIS is a promising tool for the analysis of neural data in general, and of VSD data in particular.

4.1. Local active information storage and neural activity levels

Any increase in LAIS may in principle arise from two sources. First, richer dynamics with a larger amplitude range may increase LAIS by increasing the overall information content while maintaining the predictability of the time series (e.g., quantified as the inverse of the signal prediction error, or as the entropy-normalized LAIS). Alternatively, increased LAIS may be based on increased predictability under essentially unchanged dynamics. The significant positive correlation between LAIS and VSD activity after stimulus onset suggests that richer, but still predictable, VSD dynamics are at the core of the stimulus-dependent effects observed here. As a caveat, we note that the use of a kernel estimator for LAIS measurement, coupled with pooling of observations over the whole ensemble of pixels and time points, may also have introduced a slight bias in favor of a positive correlation between high VSD activity and LAIS, as it allows storage to be more easily measured in pixels with larger amplitude. The negative correlation observed in the baseline interval, however, demonstrates that this bias is not a dominant effect in our data: a dominant kernel-based bias would also assign higher storage values to high-amplitude data in the baseline interval, and would thereby produce a positive correlation in the baseline. This was not the case. The relatively low correlation coefficients across the complete time interval, which lie between 0.03 and 0.17 (Table 1), further suggest that LAIS does not tightly follow higher VSD signals. Therefore, LAIS extracts additional useful information about neural processing. This point is further supported by the stimulus-dependent changes, which appear more pronounced in the LAIS maps than in the VSD activity maps (compare left and right columns in Figure 2).

In future studies, the amplitude-bias problem introduced by the fixed-width kernel estimator should be readily overcome by using a Kraskov-type variable-width estimator; see the original work of Kraskov et al. (2004), and Lindner et al. (2011), Vicente et al. (2011), Wibral et al. (2011, 2013), and Lizier (2012) for implementation details of Kraskov-type estimators. Another possibility would be to condition the analysis on the activity level, as done, for example, for the transfer entropy measure by Stetter et al. (2012).
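As an illustration of a Kraskov-type estimator, the sketch below implements the local (per-sample) terms of algorithm 1 of Kraskov et al. (2004) for the mutual information between two continuous variables; applied to past states and next values, the same terms would give local AIS. The kernel width adapts to each sample: ε is the max-norm distance to the k-th nearest neighbour in the joint space, and neighbours strictly closer than ε are counted in each marginal space. This brute-force NumPy version is a sketch under assumptions (O(N²) memory, no tie handling or noise addition), not the implementation of any of the cited toolboxes, and its results are in nats.

```python
import numpy as np


def digamma_int(n: np.ndarray) -> np.ndarray:
    """Digamma for positive integers: psi(n) = -gamma + sum_{j=1}^{n-1} 1/j."""
    euler_gamma = 0.5772156649015329
    harmonic = np.concatenate([[0.0], np.cumsum(1.0 / np.arange(1, n.max()))])
    return -euler_gamma + harmonic[n - 1]


def local_mi_ksg(x: np.ndarray, y: np.ndarray, k: int = 4) -> np.ndarray:
    """Local MI (nats), KSG algorithm 1; x: (N, dx), y: (N, dy)."""
    n = len(x)
    dx = np.max(np.abs(x[:, None, :] - x[None, :, :]), axis=-1)  # max-norm distances
    dy = np.max(np.abs(y[:, None, :] - y[None, :, :]), axis=-1)
    dz = np.maximum(dx, dy)                    # joint-space max-norm distance
    np.fill_diagonal(dz, np.inf)               # exclude each point from its own neighbours
    eps = np.sort(dz, axis=1)[:, k - 1]        # distance to the k-th joint neighbour
    n_x = np.sum(dx < eps[:, None], axis=1) - 1  # strictly closer in x, minus self
    n_y = np.sum(dy < eps[:, None], axis=1) - 1  # strictly closer in y, minus self
    return (digamma_int(np.array([k]))[0] + digamma_int(np.array([n]))[0]
            - digamma_int(n_x + 1) - digamma_int(n_y + 1))
```

Averaging the local values gives the MI estimate; for two Gaussian variables with correlation 0.9, the true value is −½ ln(1 − 0.81) ≈ 0.83 nats.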

4.2. Timescales of LAIS

The recovery time of the stimulus-induced, transient drop in LAIS was 34 ms. A drop of this kind means that the activity before the drop (baseline activity) was not useful for predicting the activity during the drop (the onset response). This is expected, as the stimulus is presented in a way that is unpredictable for the neural system. However, the recovery time of approximately 34 ms yields an insight into the intrinsic storage timescales of the neural processes. We note that this timescale corresponds to the high beta frequency band, around 29 Hz (1/34 ms). Whether this finding is incidental or significant must be clarified in future studies.
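The text does not state exactly how the recovery time was determined. One simple, hypothetical criterion is sketched below: take the spatially averaged LAIS time course, find the first negative sample after stimulus onset, and measure the time until the average returns to non-negative values. With frames at 150 Hz, such a duration can only be resolved in steps of about 6.7 ms. The last line converts a 34 ms timescale into the corresponding frequency.

```python
import numpy as np


def negative_lais_episode(mean_lais, times, onset=0.0):
    """Return (start, duration) in s of the first run of negative averaged LAIS
    at or after `onset`, or None if the average never drops below zero.

    Hypothetical criterion: the episode ends at the first sample that is >= 0 again.
    """
    neg = np.flatnonzero((times >= onset) & (mean_lais < 0))
    if neg.size == 0:
        return None
    start = neg[0]
    recovered = np.flatnonzero(mean_lais[start:] >= 0)
    end = start + recovered[0] if recovered.size else len(times) - 1
    return times[start], times[end] - times[start]


print(round(1 / 0.034, 1))  # 29.4 (Hz), the frequency matching a 34 ms timescale
```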

4.3. On the interpretation of local active information storage measures in neuroscience

When working with measures from information theory, it is important to keep in mind that the basic definition of information as given by Shannon revolves around the probabilities of events and the possibility of encoding something using these events. To separate Shannon information content from information about something (new) in a more colloquial sense, one often speaks of potential or syntactic information when referring to Shannon information content, of semantic information when referring to human-interpretable information, and finally of pragmatic information for our everyday notion of information as in “news” [for details see, for example, the treatment of this topic by Deacon (2010)]. In the same way, LAIS does not directly describe information that the neural system stores about things in the outside world; rather, it quantifies how much of the future (Shannon) information in the activity can be predicted from its past.

In fact, information in the neural system about something in the outside world would have to be quantified by some kind of mutual information between aspects of the outside world and neural activity, while information in the classic sense of semantic information represented symbolically (e.g., in books, and other media) would be even more complicated: theoretically it should be quantified as a mutual information between the medium containing the symbols and activity in the neural system, while additionally satisfying the constraint that this mutual information should vanish when conditioning on the states of the world variables represented by the symbols.

While this lack of a more semantic interpretation of LAIS may seem disappointing at first, the quantification of the predictable amount of information makes this measure highly useful for understanding information processing at a more abstract level. This is important wherever we have not yet gained insights into what (if anything) may be explicitly represented by a neural system. Moreover, the focus on predictability provides a non-trivial link between LAIS and current theories of brain function, as pointed out below. Nevertheless, applying the concept in neuroscience may require taking the properties of the receiving neuron or brain area into account, to consider how much of the mathematical storage in a signal is accessible to neural information processing. To address this concern, we pooled over all available data in space and time here, as this seems to represent a way in which a receiving brain area could construct its (implicit) estimates of the underlying probability densities. However, other strategies are also possible and need to be explored in the future. As one example of another probability-density estimation strategy, we investigated constructing probability densities by pooling over all data pixels but separately for each point in time. This approach avoids any potential issues with non-stationarities, but obscures the “typical transitions” in the system over time to the point that no interpretable results were obtained (data not shown).

4.4. Local active information storage and predictive coding theories

Information storage in neural activity means that information from the past of a neural process predicts some non-zero fraction of the information in the future of this process. It is via this gain in predictability that information storage is tightly connected with predictive coding, an important family of theories of cortical function. Predictive coding theories propose that a neural system constantly generates predictions about the incoming sensory input (Rao and Ballard, 1999; Knill and Pouget, 2004; Friston, 2005; Bastos et al., 2012) to adapt internal behavior and processing accordingly. These predictions of incoming information must be implemented in neural activity, and they typically need to be maintained for a certain duration, as the system will typically not know when the predictive information will be needed. Hence, the neural activity subserving prediction must itself have a predictable character, i.e., non-zero information storage in activity. Analysis of active information storage may thereby enable us to test central assumptions of predictive coding theories rather directly. This is important because tests of predictive coding theories have so far mostly relied on the predictions being explicitly known and then violated, a condition not given for most brain areas beyond early sensory cortices, and for most situations beyond simple experimental designs. Here, quantifying the predictability of brain signals themselves via LAIS may open a second approach to testing these important theories. To this end, we may scan brain signals for negative LAIS, as negative LAIS values indicate that the past states of the neural signals in question were misinformative about the future, i.e., negative LAIS signals a breakdown of predictions. In our example dataset this was brought about by the sudden, unexpected onset of the stimulus.
However, the same analyses may be applied in situations that are not under external control, for example to analyze internally driven changes in information processing regimes.

In relation to predictive coding theories, it is also encouraging that the predictive information was found on timescales related to the beta band, because this frequency band has been implicated in the intra-cortical transfer of predictions (Bastos et al., 2012).

4.5. Sub-sampling and coarse graining, and non-locality of PDF estimation

When interpreting LAIS values it should be kept in mind that in neural recordings we typically do not observe the system fully or at the relevant scales—in contrast to artificial systems, such as cellular automata and robots, where the full system is accessible. More precisely, in neural data one of two types of sub-sampling is typically present—either coarse graining with local averaging of activity indices (as in VSD) or sub-sampling proper, where neural activity is recorded faithfully (e.g., via intracellular recordings) but with incomplete coverage of the full system. This sub-sampling may have non-trivial effects on the probability distributions of neural events [see for example Priesemann et al. (2009, 2013)]. Hence, LAIS values obtained under sub-sampling should be interpreted as relative rather than absolute measures and should only be compared to other experiments, or experimental conditions, when obtained under identical sampling conditions.

In addition, there is necessarily temporal subsampling in the form of finite data; we therefore note again the potential for bias in the actual MI values returned by the kernel estimation used here, particularly for large embedding dimensions and small kernel widths. Alternatives to kernel estimation are known to be more effective at bias compensation [e.g., Kraskov-Grassberger-Stögbauer estimation (Kraskov et al., 2004)]; our use of kernel estimation was motivated solely by practical computational reasons. Effects of temporal subsampling also mandate a focus on relative rather than absolute values within this experiment.

Even within the experiment, though, the bias may not be evenly distributed among the local MI values, which tend to exhibit larger bias for rare (low-probability) events. That said, our experiment did use a large amount of data (by pooling observations over pixels and time), which counteracts such concerns to a large degree, and many of the key results (e.g., Figure 3) involve averaging or correlating over many local values, which further ameliorates this. Techniques have been suggested to alleviate bias in local or pointwise MI, e.g., Turney and Pantel (2010); while none were applied here, we do not believe this alters the general conclusions of our experiment, for the aforementioned reasons. As a particular example, the surprise caused by stimulus onset is still clearly visible as negative LAIS, despite any propensity for such rare events to be biased strongly toward positive values.

4.6. On the locality of information values

As a concluding remark, we would like to point out again that various “levels of locality” have to be carefully chosen in the analysis of neural data. One important level is the spatial extent (ensemble of agents) and the time span over which data are pooled to obtain the PDF. However, even pooling over a large spatial extent, i.e., many agents, and a long time span may still allow us to interpret the information value of the data agent by agent and time step by time step, if agents $i$ are identical and samples at subsequent time points $t$ come from a stationary random process [see the book of Lizier (2013) for several examples]. This is because one may pool data to estimate a PDF as long as these data can be considered “replications,” i.e., as coming from the same random variable. Pooling data under these conditions does not bias the PDF estimate away from the ground truth for any agent or time step. Irrespective of how many data points are pooled this way, it is then still possible to interpret each data point $(x_{i,t}, \mathbf{x}^{k-}_{i,t-1})$ individually in terms of its LAIS, $a(x_{i,t}, \mathbf{x}^{k-}_{i,t-1})$. This locality of information values is identical to the local interpretation of the (Shannon) information terms $h(x_i) = -\log p(x_i)$ that together, as a weighted average over all possible outcomes $x_i$, yield the (Shannon) entropy $H(X) = \sum_i p(x_i)\, h(x_i)$ of a random variable $X$. As explained, for example, by MacKay (2003, chapter 4), each and every outcome $x_i$ of a random variable $X$ has its own meaningful Shannon information value $h(x_i)$, which may be very different from that of another outcome $x_j$, although repeated draws from this random variable can be considered stationary. It is this sense of “local” that gives local active information storage its name. In contrast, how locally in space and time we obtain the PDF matters more for the precision of the LAIS estimates.
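The distinction between local information values and their average can be checked numerically. The sketch below uses an arbitrary example distribution (chosen only for illustration) and shows that each outcome has its own information content h(x_i) = −log₂ p(x_i), while the entropy is the probability-weighted average of these local values.

```python
import numpy as np

p = np.array([0.5, 0.25, 0.125, 0.125])  # example distribution over four outcomes
h = -np.log2(p)                           # local information content h(x_i), in bits
H = np.sum(p * h)                         # entropy as the p-weighted average of h
print(h)   # [1. 2. 3. 3.]
print(H)   # 1.75
```

Rare outcomes carry more information than frequent ones, even though all of them are draws from the same stationary distribution; LAIS inherits exactly this per-sample reading.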

In the analysis of LAIS from neural data three issues will necessarily blur locality, and impair the precision of the LAIS estimate to some extent:

If a pool of identical agents $i$, all running identical stationary random processes $X_i$, is available, the only blurring of locality arises from the intrinsic temporal extent of the state variables. However, while the stored information may be encoded in a temporally non-local state $\mathbf{x}^{k-}_{t-1}$, this information is used to predict the next value $x_t$ of the process at a single point in time.

If agents are non-identical but their data are pooled nonetheless, then the overall empirical PDF obtained across these agents is no longer fully representative of each single agent, and the local information storage values per agent are biased due to the use of this non-optimal PDF. This effect may be present to some extent in our analysis, as we cannot guarantee that all parts of area 18 behave strictly identically.

If the random process in question is not stationary, then a PDF obtained by pooling samples across time is also not representative of what happens at single points in time, and again a bias in the LAIS values for each agent and time step arises. This bias is potentially more severe. Nevertheless, we pooled data across all available time samples here, as this seems closer to the strategy available to a neuron in a downstream brain area (also see section 2.2.5) when trying to estimate, or adapt to, its input distribution. This is because a neuron may more easily estimate approximate PDFs of its inputs across time than across all possible neurons in an upstream brain area, most of which it is not connected to.

5. Conclusion

Distributed information processing in neural systems can be decomposed into component processes of information transfer, storage and modification. Information storage can be quantified locally in space and time using an information theoretic measure termed local active information storage (LAIS). Here we present for the first time the application of this measure to neural data. We show that storage reflects neural properties such as stimulus preferences and surprise, and reflects the abstract concept of an ongoing stimulus despite the locally random nature of this stimulus. We suggest that LAIS will be a useful quantity to test theories of cortical function, such as predictive coding.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors thank Matthias Kaschube from the Frankfurt Institute for Advanced Studies (FIAS) for fruitful discussions on active information storage. Viola Priesemann received funding from the Federal Ministry of Education and Research (BMBF) Germany under grant number 01GQ0811 (Bernstein).

Funding

Michael Wibral and Viola Priesemann were supported by LOEWE Grant “Neuronale Koordination Forschungsschwerpunkt Frankfurt (NeFF).” Michael Wibral thanks the Commonwealth Scientific and Industrial Research Organisation (CSIRO) for supporting a visit in Sydney which contributed to this work. Sebastian Vögler was supported by the Bernstein Focus: Neurotechnology (BFNT) Frankfurt/M.

References

- Ay N., Polani D. (2008). Information flows in causal networks. Adv. Complex Syst. 11, 17 10.1142/S0219525908001465 [DOI] [Google Scholar]

- Bastos A. M., Usrey W. M., Adams R. A., Mangun G. R., Fries P., Friston K. J. (2012). Canonical microcircuits for predictive coding. Neuron 76, 695–711 10.1016/j.neuron.2012.10.038 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Butts D. A. (2003). How much information is associated with a particular stimulus? Network 14, 177–187 10.1088/0954-898X/14/2/301 [DOI] [PubMed] [Google Scholar]

- Butts D. A., Goldman M. S. (2006). Tuning curves, neuronal variability, and sensory coding. PLoS Biol. 4:e92 10.1371/journal.pbio.0040092 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cover T. M., Thomas J. A. (1991). Elements of Information Theory. New York, NY: Wiley-Interscience; 10.1002/0471200611 [DOI] [Google Scholar]

- Crutchfield J. P., Feldman D. P. (2003). Regularities unseen, randomness observed: levels of entropy convergence. Chaos 13, 25–54 10.1063/1.1530990 [DOI] [PubMed] [Google Scholar]

- Dasgupta S., Wörgötter F., Manoonpong P. (2013). Information dynamics based self-adaptive reservoir for delay temporal memory tasks. Evol. Syst. 4, 235–249 10.1007/s12530-013-9080-y [DOI] [Google Scholar]

- Deacon T. W. (2010). “What is missing from theories of information?” (chapter 8) in Information and the Nature of Reality, eds Davies P., Gregersen N. H. (Cambridge: Cambridge University Press; ), 146 [Google Scholar]

- DeWeese M. R., Meister M. (1999). How to measure the information gained from one symbol. Network 10, 325–340 10.1088/0954-898X/10/4/303 [DOI] [PubMed] [Google Scholar]

- Faes L., Nollo G., Porta A. (2012). Non-uniform multivariate embedding to assess the information transfer in cardiovascular and cardiorespiratory variability series. Comput. Biol. Med. 42, 290–297 10.1016/j.compbiomed.2011.02.007 [DOI] [PubMed] [Google Scholar]

- Faes L., Porta A., Rossato G., Adami A., Tonon D., Corica A., et al. (2013). Investigating the mechanisms of cardiovascular and cerebrovascular regulation in orthostatic syncope through an information decomposition strategy. Auton. Neurosci. 178, 76–82 10.1016/j.autneu.2013.02.013 [DOI] [PubMed] [Google Scholar]

- Fano R. (1961). Transmission of Information: A Statistical Theory of Communications. Cambridge, MA: The MIT Press [Google Scholar]

- Friston K. (2005). A theory of cortical responses. Philos. Trans. R. Soc. Lond. B Biol. Sci. 360, 815–836 10.1098/rstb.2005.1622 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gómez C., Hornero R. (2010). Entropy and complexity analyses in alzheimer's disease: an MEG study. Open Biomed. Eng. J. 4, 223 10.2174/1874120701004010223 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Granger C. W. J. (1969). Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37, 424–438 10.2307/1912791 [DOI] [Google Scholar]

- Kantz H., Schreiber T. (2003). Nonlinear Time Series Analysis. 2nd Edn Cambridge: Cambridge University Press; 10.1017/CBO9780511755798 [DOI] [Google Scholar]

- Knill D. C., Pouget A. (2004). The bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 27, 712–719 10.1016/j.tins.2004.10.007 [DOI] [PubMed] [Google Scholar]

- Kraskov A., Stoegbauer H., Grassberger P. (2004). Estimating mutual information. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 69, 066138 10.1103/PhysRevE.69.066138 [DOI] [PubMed] [Google Scholar]

- Langton C. G. (1990). Computation at the edge of chaos: phase transitions and emergent computation. Physica D 42, 12–37 10.1016/0167-2789(90)90064-V [DOI] [Google Scholar]

- Lindner M., Vicente R., Priesemann V., Wibral M. (2011). Trentool: a Matlab open source toolbox to analyse information flow in time series data with transfer entropy. BMC Neurosci. 12:1–22 10.1186/1471-2202-12-119 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lizier J. T. (2012). JIDT: an information-theoretic toolkit for studying the dynamics of complex systems. Available online at: http://code.google.com/p/information-dynamics-toolkit/

- Lizier J. T. (2013). The Local Information Dynamics of Distributed Computation in Complex Systems. Springer theses. Springer; 10.1007/978-3-642-32952-4_2 [DOI] [Google Scholar]

- Lizier J. T., Atay F. M., Jost J. (2012a). Information storage, loop motifs, and clustered structure in complex networks. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 86(2 Pt 2), 026110 10.1103/PhysRevE.86.026110 [DOI] [PubMed] [Google Scholar]

- Lizier J. T., Prokopenko M., Zomaya A. Y. (2012b). Local measures of information storage in complex distributed computation. Inform. Sci. 208, 39–54 10.1016/j.ins.2012.04.016 [DOI] [Google Scholar]

- Lizier J. T., Flecker B., Williams P. L. (2013). Towards a synergy-based approach to measuring information modification, in Proceedings of the 2013 IEEE Symposium on Artificial Life (ALIFE) (Singapore: ), 43–51 10.1109/ALIFE.2013.6602430 [DOI] [Google Scholar]

- Lizier J. T., Prokopenko M. (2010). Differentiating information transfer and causal effect. Eur. Phys. J. B 73, 605–615 10.1140/epjb/e2010-00034-5 [DOI] [Google Scholar]

- MacKay D. J. (2003). Information Theory, Inference and Learning Algorithms. Cambridge: Cambridge University Press [Google Scholar]

- Mitchell M. (1998). Computation in cellular automata: a selected review, in Non-Standard Computation, eds Gramß T., Bornholdt S., Groß M., Mitchell M., Pellizzari T. (Weinheim: Wiley-VCH Verlag GmbH & Co. KGaA; ), 95–140 [Google Scholar]

- Mitchell M., Hraber P., Crutchfield J. P. (1993). Revisiting the edge of chaos: evolving cellular automata to perform computations. Complex Systems 7, 89–130 [Google Scholar]

- Obst O., Boedecker J., Schmidt B., Asada M. (2013). On active information storage in input-driven systems. arXiv:1303.5526.

- Pincus S. M. (1991). Approximate entropy as a measure of system complexity. Proc. Natl. Acad. Sci. U.S.A. 88, 2297–2301. doi: 10.1073/pnas.88.6.2297

- Porta A., Baselli G., Liberati D., Montano N., Cogliati C., Gnecchi-Ruscone T., et al. (1998). Measuring regularity by means of a corrected conditional entropy in sympathetic outflow. Biol. Cybernet. 78, 71–78. doi: 10.1007/s004220050414

- Porta A., Guzzetti S., Montano N., Pagani M., Somers V., Malliani A., et al. (2000). Information domain analysis of cardiovascular variability signals: evaluation of regularity, synchronisation and co-ordination. Med. Biol. Eng. Comput. 38, 180–188. doi: 10.1007/BF02344774

- Priesemann V., Munk M. H. J., Wibral M. (2009). Subsampling effects in neuronal avalanche distributions recorded in vivo. BMC Neurosci. 10:40. doi: 10.1186/1471-2202-10-40

- Priesemann V., Valderrama M., Wibral M., Le Van Quyen M. (2013). Neuronal avalanches differ from wakefulness to deep sleep – evidence from intracranial depth recordings in humans. PLoS Comput. Biol. 9:e1002985. doi: 10.1371/journal.pcbi.1002985

- Prokopenko M., Boschetti F., Ryan A. J. (2009). An information-theoretic primer on complexity, self-organization, and emergence. Complexity 15, 11–28. doi: 10.1002/cplx.20249

- Prokopenko M., Gerasimov V., Tanev I. (2006). Evolving spatiotemporal coordination in a modular robotic system, in From Animals to Animats 9: Proceedings of the Ninth International Conference on the Simulation of Adaptive Behavior (SAB'06). Lecture Notes in Computer Science, Vol. 4095, eds Nolfi S., Baldassarre G., Calabretta R., Hallam J. C. T., Marocco D., Meyer J.-A., et al. (Berlin: Springer), 558–569.

- Ragwitz M., Kantz H. (2002). Markov models from data by simple nonlinear time series predictors in delay embedding spaces. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 65(5 Pt 2), 056201. doi: 10.1103/PhysRevE.65.056201

- Rao R. P., Ballard D. H. (1999). Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat. Neurosci. 2, 79–87. doi: 10.1038/4580

- Richman J. S., Moorman J. R. (2000). Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol. Heart Circ. Physiol. 278, H2039–H2049.

- Schreiber T. (2000). Measuring information transfer. Phys. Rev. Lett. 85, 461–464. doi: 10.1103/PhysRevLett.85.461

- Stetter O., Battaglia D., Soriano J., Geisel T. (2012). Model-free reconstruction of excitatory neuronal connectivity from calcium imaging signals. PLoS Comput. Biol. 8:e1002653. doi: 10.1371/journal.pcbi.1002653

- Takens F. (1981). Detecting strange attractors in turbulence (chapter 21), in Dynamical Systems and Turbulence, Warwick 1980. Lecture Notes in Mathematics, Vol. 898, eds Rand D., Young L.-S. (Berlin: Springer), 366–381.

- Turing A. M. (1936). On computable numbers, with an application to the Entscheidungsproblem. Proc. Lond. Math. Soc. 42, 230–265.

- Turney P. D., Pantel P. (2010). From frequency to meaning: vector space models of semantics. J. Artif. Intell. Res. 37, 141–188.

- Vakorin V. A., Mišić B., Krakovska O., McIntosh A. R. (2011). Empirical and theoretical aspects of generation and transfer of information in a neuromagnetic source network. Front. Syst. Neurosci. 5:96. doi: 10.3389/fnsys.2011.00096

- Vicente R., Wibral M., Lindner M., Pipa G. (2011). Transfer entropy – a model-free measure of effective connectivity for the neurosciences. J. Comput. Neurosci. 30, 45–67. doi: 10.1007/s10827-010-0262-3

- Wang X. R., Miller J. M., Lizier J. T., Prokopenko M., Rossi L. F. (2012). Quantifying and tracing information cascades in swarms. PLoS ONE 7:e40084. doi: 10.1371/journal.pone.0040084

- Wibral M., Pampu N., Priesemann V., Siebenhühner F., Seiwert H., Lindner M., et al. (2013). Measuring information-transfer delays. PLoS ONE 8:e55809. doi: 10.1371/journal.pone.0055809

- Wibral M., Rahm B., Rieder M., Lindner M., Vicente R., Kaiser J. (2011). Transfer entropy in magnetoencephalographic data: quantifying information flow in cortical and cerebellar networks. Prog. Biophys. Mol. Biol. 105, 80–97. doi: 10.1016/j.pbiomolbio.2010.11.006

- Wiener N. (1956). The theory of prediction, in Modern Mathematics for the Engineer, ed Beckenbach E. F. (New York, NY: McGraw-Hill).

- Zipser D., Kehoe B., Littlewort G., Fuster J. (1993). A spiking network model of short-term active memory. J. Neurosci. 13, 3406–3420.