Abstract

Computer-aided intraocular surgery requires precise, real-time knowledge of the vasculature during retinal procedures such as laser photocoagulation or vessel cannulation. Because vitreoretinal surgeons manipulate retinal structures on the back of the eye through ports in the sclera, voluntary and involuntary tool motion rotates the eye in its socket and moves the retina within the microscope view. The dynamic nature of the surgical workspace during intraocular surgery makes mapping, tracking, and localizing vasculature in real time a challenge. We present an approach that both maps and localizes retinal vessels by temporally fusing and registering individual-frame vessel detections. On video of porcine and human retina, we demonstrate real-time performance, rapid convergence, and robustness to variable illumination and tool occlusion.

I. Introduction

Vitreoretinal surgery is often regarded as particularly demanding due to the extraordinary precision required to manipulate the small, delicate retinal structures, the confounding influence of physiological tremor on the surgeon’s micromanipulation ability, and the challenging nature of the surgical access [1], [2]. Routine procedures such as membrane peeling require the surgeon to manipulate anatomy less than 10 μm thick [3–5], and laser photocoagulation operations benefit from accurate placement of laser burns [6], [7]. Promising new procedures such as vessel cannulation necessitate precise and exacting micromanipulation to inject anticoagulants into veins less than 100 μm in diameter [8], [9].

To address micromanipulation challenges in retinal surgical procedures, a variety of assistive robots have been proposed. Master/slave robots developed for eye surgery include the JPL Robot Assisted MicroSurgery (RAMS) system [10], the Japanese ocular robot of Ueta et al. [11], and the multi-arm stabilizing micromanipulator of Wei et al. [12]. Retinal surgery with the da Vinci master/slave robot has been investigated [13] and led to the design of a Hexapod micropositioner accessory for the da Vinci end-effector [14]. The Johns Hopkins SteadyHand Eye-Robot [15] shares control with an operator who applies force to the instrument while it is simultaneously held by the robot arm. A unique MEMS pneumatic actuator called the Microhand allows grasping and manipulation of the retina [16]. The Microbots of Dogangil et al. aim to deliver drugs directly to the retinal vasculature via magnetic navigation [17]. A lightweight micromanipulator developed in our lab, Micron, is fully handheld and has been used with vision-based control to aid retinal surgical procedures [18], [19].

While classic robot control can provide general behaviors such as motion scaling, velocity limiting, and force regulation, more specific and intelligent behaviors require knowledge of the anatomy. Vision-based control combines visual information of the anatomy with robotic control to enforce tip constraints, or virtual fixtures, which enact task-specific behaviors and provide guidance to the surgeon during the procedure [20], [21]. In retinal vessel cannulation, knowledge of the vessel location relative to the instrument tip can aid robotic behavior and more effectively help guide the robot during injections into the vessel [22], [23]. During retinal laser photocoagulation, placing burns on retinal vessels should be avoided as this can occlude the vein, possibly causing vitreous hemorrhage [7]. However, existing methods for vessel detection or retinal registration are not suited to real-time operation, preferring accuracy over speed for offline use, and do not handle constraints required for intraocular surgery, such as robustness to tool occlusion.

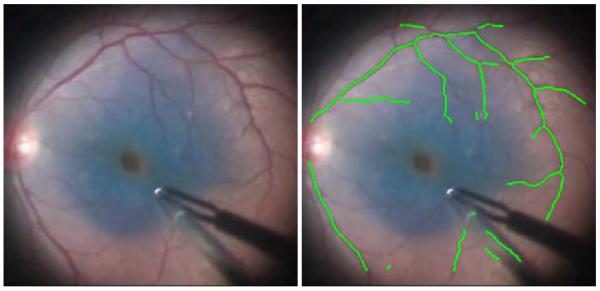

In this paper, we propose an approach to map and localize the vasculature of the retina in real time that is robust to tool occlusions and variable illumination conditions, for use in intraocular surgery. In Section II, related work in simultaneous localization and mapping (SLAM) and in retinal registration is described. Section III describes our approach of using the fast retinal vessel detection of [24] for feature extraction, an occupancy grid for mapping [25], and iterative closest point (ICP) [26] for localization. In Section IV, we evaluate our approach on videos of an in vitro eyeball phantom, ex vivo porcine retina, and in vivo human retina (see Fig. 1). Section V concludes with a discussion and future work.

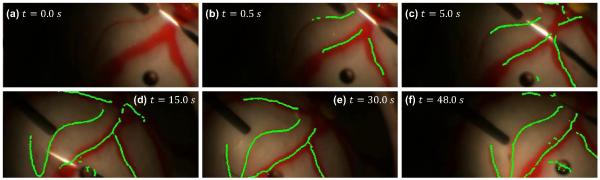

Fig. 1.

The proposed mapping and localization algorithm for retinal vasculature running in real time on recorded in vivo human retina during a retinal peeling with blue dye. Video source: http://youtu.be/CTnavOgDsXA

II. Related Work

A wealth of published work related to localization and mapping of retinal vessels exists and can be grouped into three general categories: vessel detection, retinal registration, and the more general robotic approach of simultaneous localization and mapping (SLAM).

A. Vessel Detection

Vessel detection is the process of extracting vasculature in retinal imagery and often includes calculating the center lines, width, and orientation of vessels. One set of methods uses local color and intensity information to classify the image on a per-pixel basis [27–30]. Another popular approach is to search across the image for vessel-like structures using matched filters at various locations, scales, and orientations [24], [31], [32]. Other algorithms use a bank of Gabor wavelets to do a pixel-wise classification of the image [33–35]. However, most focus on offline analysis of low-magnification, wide-area images such as fundus images where accuracy is prioritized over speed. With the exception of speed-focused algorithms such as [24], [30], [35] and other hardware-accelerated methods [36], [37], most algorithms require more than 1 s to run, which is insufficiently fast to benefit robotic control loops.

One notable exception is the rapid exploratory algorithm of Can et al. [24] that traces the vasculature, yielding a monotonically improving set of partial results suitable for real-time deployment at 30 Hz. Can et al. [24] achieve high-speed vessel detection through a very fast sparse initialization followed by a tracing algorithm. First, a fast search for vessel points along a coarse grid is performed to initialize a set of seed points on vessels. Each seed point, or detected candidate vessel, is then explored in both directions along the vessel with an approximate and discretized matched filter. At each iteration, the best fit for location, orientation, and width of the vessel center line is estimated through the evaluation of several matched filters. Using orientation estimates to initialize the next iteration, the network of vessels in the image is traced without having to evaluate areas lacking vessels. Because only a small fraction of the total number of pixels in the image is ever processed, most of the vessels can be detected very rapidly. However, the vessel detections of [24] are noisier and less complete than those of other, more computationally expensive methods.
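The sparse initialization step described above can be illustrated with a short sketch. This is not the implementation of [24]: it assumes vessels appear as dark ridges in a grayscale image stored as a list of rows, and the names `find_seed_points`, `grid_step`, `window`, and `min_contrast` are hypothetical. Only every `grid_step`-th row and column is scanned, so most pixels are never touched.

```python
def find_seed_points(gray, grid_step=8, window=3, min_contrast=20):
    """Scan a coarse grid of rows and columns for dark local minima.

    gray is a list of rows of intensities. Returns sorted (x, y) seeds.
    All parameter values are illustrative assumptions.
    """
    height, width = len(gray), len(gray[0])
    seeds = set()

    def scan(profile, to_xy):
        for i in range(window, len(profile) - window):
            value = profile[i]
            # A seed must be the darkest sample in its local window ...
            if value != min(profile[i - window:i + window + 1]):
                continue
            # ... and clearly darker than the background on both sides.
            left = profile[i - window] - value
            right = profile[i + window] - value
            if left >= min_contrast and right >= min_contrast:
                seeds.add(to_xy(i))

    # Horizontal grid lines.
    for y in range(0, height, grid_step):
        scan(gray[y], lambda i, y=y: (i, y))
    # Vertical grid lines.
    for x in range(0, width, grid_step):
        column = [gray[y][x] for y in range(height)]
        scan(column, lambda i, x=x: (x, i))
    return sorted(seeds)
```

In a full tracer, each seed would then be followed in both directions along the vessel; that tracing stage is omitted here.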

B. Retinal Image Registration

Numerous approaches to registering, mosaicking, and tracking exist to take a sequence of retinal imagery and calculate relative motion between images. In general, approaches match one or more of several features between images: key points, vasculature landmarks, or vasculature trees. Key point algorithms use image feature descriptors such as SIFT [38] to find and match unique points between retinal images [39–42]. Vasculature landmark matching algorithms find distinctive points based on vessel networks, such as vein crossings or bifurcations, and match custom descriptors across multiple images [43–45]. Other approaches augment or eschew key points and use the shape of extracted vessels to match vasculature trees [46], [47].

Methods that depend on local key point features [39], [40], [42], [48] often result in poor tracking at high magnification because of the lack of texture on the retina and the non-distinctive nature of individual points on the veins. With optimization, the algorithms of [43], [45] could be run in real time on modern hardware; however, they only use sparse retinal vessel landmarks, which are relatively few or non-existent at high magnifications. More importantly, they only perform localization and do not build a map of the vasculature. Also, all of these approaches suffer from interference caused by the instruments, which both occlude existing features in the image and create new, spurious features on the moving shaft. Our approach is most similar to Stewart et al. [44], which uses a robust, dual-bootstrap ICP algorithm to register vasculature landmarks (bifurcations and crossovers) and trees (centerlines of the vessels). Stewart et al. achieve very accurate results, but our algorithm is 100X faster and handles occlusions and dynamic lighting conditions while yielding a temporally consistent map.

C. Simultaneous Localization and Mapping (SLAM)

The problem this paper addresses is similar to a core problem addressed in robotics: simultaneous localization and mapping, or SLAM. In SLAM, a robot with imprecise, noisy localization (e.g., odometry) explores an unknown environment with local sensors (such as a laser range-finder) with the goal of building a global map and localizing itself relative to this map [49]. Using a probabilistic formulation, SLAM optimizes a joint probability over the map and the localization to simultaneously solve for the true positions of the robot and global environmental features. Early solutions such as the Extended Kalman Filter (EKF) scaled poorly and did not handle ambiguous landmark associations well [49]. Recent particle filter approaches such as FastSLAM are faster and more robust [50]. With the introduction of occupancy grids, which discretize the map and maintain a grid of probabilities representing whether each cell is occupied, SLAM algorithms scale more effectively [25].

Comparing SLAM to our problem, the task of building a temporally consistent map of vasculature and localizing the current observation of vessels to this map exhibits many similarities. However, most implementations of SLAM are tailored to space-carving sensors such as laser range-finders instead of over-head sensors and assume a reasonably good robotic motion model, both of which are poor assumptions in the problem of retinal localization and mapping.

III. Methods

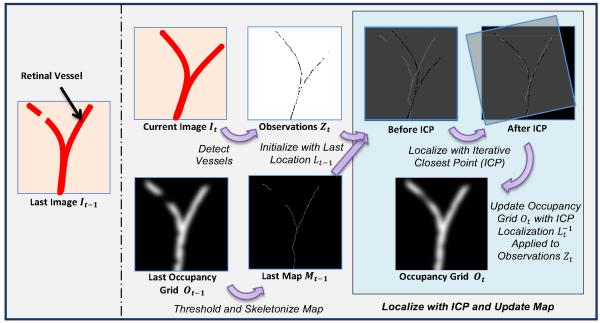

Our goal is to design an algorithm that maps and localizes retinal vessels by merging retinal vessel detection with retinal image registration and taking advantage of temporal information as seen in SLAM approaches. A fusion of methods is needed: fast retinal vessel detection algorithms are noisy, incomplete, and do not handle occlusions [24]; retinal image registration methods that do build vasculature maps are orders of magnitude slower than required for real-time robotic guidance [46], [47]; and SLAM algorithms are not designed or tuned for application in intraocular surgeries. Fig. 2 shows a new algorithm that incorporates aspects of [24], [44], [49] to perform 30 Hz vasculature mapping and localization of retinal video using rapidly detected vessels as features, an occupancy grid for mapping, and iterative closest point (ICP) for localization to robustly handle noise, tool occlusions, and variable illumination.

Fig. 2.

Block diagram detailing the steps in our proposed algorithm that maps and localizes retinal vessels during intraocular surgery. Retinal vessels are detected in each frame, localized to a skeletonized map of the occupancy grid with iterative closest point (ICP), and the map probabilities are updated.

A. Problem Definition

Given a series of input video frames I = [I0, I1, … , IT] over a discretized time period t ∈ [0, 1, … , T], the algorithm should output a global map in the form of N vasculature points M = [M0, M1, … , MN] and the corresponding camera viewpoint locations L = [L0, L1, … , LT] of the input video frames in the map. At time t, we parameterize the ith vasculature point as a 2D location Mi = [xi, yi]. Because typical retinal surgeries have high magnification (often the view is only a few mm² of a 25 mm diameter eye), we approximate the global map as a planar section of retina. Similar to many other approaches to retinal registration, we assume an affine camera and represent the viewpoint at time t as a 2D translation and rotation Lt = [xt, yt, θt] relative to the initial position at t = 0. As seen from our results, this 3-DOF motion model is sufficient even with fairly large fields of view of the retina. Observations of vessels in the camera at time t are denoted by Zt. The remainder of this section describes the steps that take these video inputs and return these mapping and localization outputs.
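As a concrete reading of the 3-DOF viewpoint Lt = [xt, yt, θt], the following minimal sketch applies a planar rotation and translation to a set of 2D points and computes the inverse viewpoint. The function names are hypothetical, and the convention that the viewpoint maps camera coordinates into map coordinates is an assumption made for illustration.

```python
import math

def apply_viewpoint(points, viewpoint):
    """Map 2D points from camera coordinates into map coordinates.

    viewpoint is (x, y, theta): a planar translation plus a rotation
    in radians, mirroring the 3-DOF model Lt = [xt, yt, θt].
    """
    x, y, theta = viewpoint
    c, s = math.cos(theta), math.sin(theta)
    return [(c * px - s * py + x, s * px + c * py + y) for px, py in points]

def invert_viewpoint(viewpoint):
    """Return the viewpoint that undoes `viewpoint` (map -> camera)."""
    x, y, theta = viewpoint
    c, s = math.cos(theta), math.sin(theta)
    # The inverse of p' = R p + t is p = R^T p' - R^T t.
    return (-(c * x + s * y), -(-s * x + c * y), -theta)
```

For example, rotating the point (1, 0) by 90 degrees and translating by (3, -1) gives (3, 0); applying the inverted viewpoint returns the original point.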

B. Feature Extraction

Finding features for matching at high magnification is difficult. Traditional key point detectors such as SIFT [38] or SURF [51] fail to find distinctive points on the textureless retina. Likewise, vasculature landmarks such as crossovers or bifurcations may not be present in sufficient numbers to function as good features at high magnification. We instead use many anonymous feature points extracted from vessel detection algorithms. These features cannot be matched individually with a local feature descriptor, but can instead be matched as a group based on the shape of the vessel network. We use the highly efficient but noisy vessel tracing algorithm of Can et al. [24] to detect vessels, which form the anonymous points (see Fig. 3).

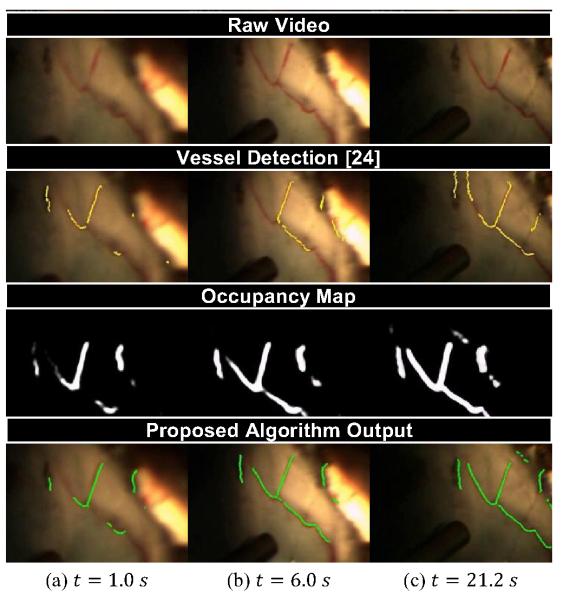

Fig. 3.

Snapshots of (a) the raw video, (b) feature extraction, (c) mapping, and (d) localization of the proposed algorithm at various times during 21 s video of porcine retina. Notice that our algorithm builds up the full vessel network even with incomplete frame-to-frame detections from [24].

To cull spurious detections on vessel-looking structures such as the tip of the instrument or light-pipe, each vessel point must pass a color test and a bloom proximity test. The color test rejects pixels that are too dark or insufficiently red, while the bloom proximity test rejects vessel points that are too close to large white specular blooms in the image. These two simple tests reject many false positives in the detection stage and yield the current observation Zt as 2D points.
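The two rejection tests can be sketched as follows. The thresholds (`min_brightness`, `min_red_ratio`, `white_level`, `min_distance`) and all function names are hypothetical, since the text does not give specific values. For brevity, this sketch treats every saturated pixel as part of a bloom and does not check that the bloom is large.

```python
def passes_color_test(rgb, min_brightness=40, min_red_ratio=1.2):
    """Reject pixels that are too dark or insufficiently red."""
    r, g, b = rgb
    if (r + g + b) / 3.0 < min_brightness:
        return False
    # Compare red to the mean of green and blue; +1 avoids division by zero.
    return r / ((g + b) / 2.0 + 1.0) >= min_red_ratio

def find_bloom_pixels(image, white_level=240):
    """Return (x, y) of saturated, near-white pixels (assumed blooms)."""
    return [(x, y)
            for y, row in enumerate(image)
            for x, (r, g, b) in enumerate(row)
            if min(r, g, b) >= white_level]

def passes_bloom_test(point, bloom_pixels, min_distance=5.0):
    """Reject points closer than min_distance to any bloom pixel."""
    px, py = point
    limit = min_distance ** 2
    return all((px - bx) ** 2 + (py - by) ** 2 >= limit
               for bx, by in bloom_pixels)

def filter_vessel_points(image, candidates):
    """Keep only candidate (x, y) points that pass both tests."""
    blooms = find_bloom_pixels(image)
    return [(x, y) for x, y in candidates
            if passes_color_test(image[y][x])
            and passes_bloom_test((x, y), blooms)]
```

Here `image` is a list of rows of `(r, g, b)` tuples and `candidates` are integer pixel coordinates from the vessel detector.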

C. Mapping

A global map that holds the current best estimate of all the observed vasculature is maintained using an occupancy grid Ot, which discretizes the map into pixel-sized cells (see Fig. 3). Each cell in the occupancy grid represents the probability that a vessel occupies that particular spatial location. At each time instance t, the current observations Zt are transformed to the map with the best estimate of the location and used to update the probabilities in the occupancy map. Because each observation increases the probability that a vessel exists at the detected point, a Gaussian is added around each detected vessel point. The occupancy grid has a maximum value to prevent unbounded accumulation of evidence. A global decay function decreases the probability of all grid cells, allowing vessels that have not been detected to vanish after some time. While it might be more robust to explicitly model deformation instead of relying on decay to let the map react to changes, tool/tissue interaction is non-rigid and difficult to model, especially in real time.
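A minimal sketch of this update rule is given below: a global multiplicative decay, followed by a Gaussian bump around each registered vessel point, clamped to a maximum value. The class name and the values of `decay`, `sigma`, `gain`, and `max_value` are hypothetical, and a multiplicative decay is one plausible choice rather than the specific decay function used in this work.

```python
import math

class OccupancyGrid:
    """Grid of vessel evidence with decay and a saturation limit."""

    def __init__(self, width, height, max_value=1.0,
                 decay=0.95, sigma=1.0, gain=0.3):
        self.width, self.height = width, height
        self.max_value, self.decay = max_value, decay
        self.sigma, self.gain = sigma, gain
        self.cells = [[0.0] * width for _ in range(height)]

    def update(self, points):
        """Decay every cell, then add a Gaussian around each (x, y)."""
        for row in self.cells:
            for x in range(self.width):
                row[x] *= self.decay
        radius = int(math.ceil(3 * self.sigma))
        for px, py in points:
            cx, cy = int(round(px)), int(round(py))
            for y in range(max(0, cy - radius),
                           min(self.height, cy + radius + 1)):
                for x in range(max(0, cx - radius),
                               min(self.width, cx + radius + 1)):
                    d2 = (x - px) ** 2 + (y - py) ** 2
                    bump = self.gain * math.exp(-d2 / (2 * self.sigma ** 2))
                    # Clamp so that evidence cannot grow without bound.
                    self.cells[y][x] = min(self.max_value,
                                           self.cells[y][x] + bump)
```

With these values, a point observed in every frame saturates its cell within a few updates, and a vessel that stops being observed fades by 5% per frame.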

The formulation of the occupancy map reasons about uncertainty over time, smoothing noise and handling occlusions and deformations. A final map containing the centerlines of the most probable vessels is constructed by skeletonizing the occupancy grid, which is approximated by thresholding, computing the distance transform, calculating the Laplacian, and thresholding again. This yields a map of 2D points Mt of vessels in the occupancy grid (see Fig. 3).
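The approximate skeletonization (threshold, distance transform, Laplacian, threshold) can be sketched in a few lines. This sketch swaps in a two-pass city-block distance transform and a 4-neighbor Laplacian for simplicity. The function name and both thresholds are hypothetical. Centerlines appear where the distance transform has a ridge, which is where its Laplacian is strongly negative.

```python
def skeleton_points(grid, occupancy_threshold=0.5, ridge_threshold=-1):
    """Approximate vessel centerlines from an occupancy grid.

    grid is a list of rows of occupancy values. Returns (x, y) points.
    """
    height, width = len(grid), len(grid[0])
    inf = width + height
    # Step 1: threshold the grid into vessel (inf) / background (0).
    dist = [[inf if grid[y][x] >= occupancy_threshold else 0
             for x in range(width)] for y in range(height)]
    # Step 2: two-pass city-block distance to the nearest background cell.
    for y in range(height):
        for x in range(width):
            if dist[y][x]:
                if y > 0:
                    dist[y][x] = min(dist[y][x], dist[y - 1][x] + 1)
                if x > 0:
                    dist[y][x] = min(dist[y][x], dist[y][x - 1] + 1)
    for y in reversed(range(height)):
        for x in reversed(range(width)):
            if dist[y][x]:
                if y < height - 1:
                    dist[y][x] = min(dist[y][x], dist[y + 1][x] + 1)
                if x < width - 1:
                    dist[y][x] = min(dist[y][x], dist[y][x + 1] + 1)
    # Steps 3 and 4: Laplacian of the distance map, then threshold.
    points = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            if dist[y][x] == 0:
                continue
            laplacian = (dist[y - 1][x] + dist[y + 1][x] + dist[y][x - 1]
                         + dist[y][x + 1] - 4 * dist[y][x])
            if laplacian <= ridge_threshold:
                points.append((x, y))
    return points
```

For a straight band an odd number of pixels wide, this returns the middle row of the band plus short diagonal branches toward the corners at each end; for an even width, the centerline is two pixels thick.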

D. Localization and Motion Model

To localize eyeball motion (which is mathematically identical to localizing camera motion), a 3-DOF planar motion model is chosen. The problem of localization is then to estimate the 2D translation and rotation Lt between the current observations Zt and the map Mt, both of which are represented by an unordered, anonymous set of 2D points. Iterative closest point (ICP) is used to find point correspondences and calculate the transformation. To guarantee real-time performance, Zt and Mt are randomly sampled to have a maximum of 500 points. To prevent spurious detections from causing large mismatches and adversely affecting the solution, candidate correspondences are only used to estimate the transform if their distance is under some threshold. Horn’s quaternion-based method [52] is used to estimate the rigid transform instead of an affine or similarity transform because scale and shear are negligible. Incomplete vessel detections at each frame are noisy, so the final ICP estimation of the localization is smoothed using a constant-velocity Kalman filter, yielding the localization Lt. The occupancy map is then updated with the newly registered vessel points Zt to close the loop on the algorithm.
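The localization loop can be illustrated with a small 2D ICP sketch that includes the random subsampling to at most 500 points and the distance gate on correspondences. Two simplifications are assumed here: the rigid transform is estimated with the planar closed-form least-squares solution rather than Horn's quaternion method [52], and nearest neighbors are found by brute force. The Kalman smoothing step is omitted. All function and parameter names are hypothetical.

```python
import math
import random

def estimate_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping paired src -> dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    s_cos = s_sin = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        s_cos += ax * bx + ay * by   # dot products
        s_sin += ax * by - ay * bx   # 2D cross products
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return (dx - (c * sx - s * sy), dy - (s * sx + c * sy), theta)

def transform(points, pose):
    tx, ty, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(observed, map_points, initial=(0.0, 0.0, 0.0), max_points=500,
           max_pair_distance=10.0, iterations=20, seed=0):
    """Estimate the pose (tx, ty, theta) aligning observed to the map."""
    rng = random.Random(seed)
    if len(observed) > max_points:
        observed = rng.sample(observed, max_points)
    if len(map_points) > max_points:
        map_points = rng.sample(map_points, max_points)
    gate = max_pair_distance ** 2
    pose = initial
    for _ in range(iterations):
        src, dst = [], []
        for original, (mx, my) in zip(observed, transform(observed, pose)):
            nearest = min(map_points,
                          key=lambda q: (q[0] - mx) ** 2 + (q[1] - my) ** 2)
            # Gate: ignore pairs that are too far apart to be trusted.
            if (nearest[0] - mx) ** 2 + (nearest[1] - my) ** 2 <= gate:
                src.append(original)
                dst.append(nearest)
        if len(src) < 2:
            break
        pose = estimate_rigid_2d(src, dst)
    return pose
```

A spatial index such as a k-d tree would replace the brute-force search in a real-time implementation; the gate plays the role of the distance threshold described above.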

IV. Evaluation

We have evaluated the proposed algorithm on a variety of videos of recorded in vitro eye phantom, ex vivo porcine retina, and in vivo human procedures.

A. Setup and Timing Performance

For ease of robotic testing in our lab, color video of an eyeball phantom or porcine retina is captured at 30 Hz with a resolution of 800×608 at a variety of high magnifications (10–25X). Each frame is converted to grayscale by selecting the green channel, a common practice in many vessel detection algorithms. For efficiency, the image is scaled to half-size. On a fast, modern computer (Intel i7-2600K), our algorithm implemented in C++ with OpenCV [53] runs at 30-40 Hz, including all vessel feature detection, occupancy grid mapping, and ICP localization run in a single thread. Fig. 1 shows the proposed algorithm output on an in vivo human procedure.
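The preprocessing described here (green-channel grayscale, then half-size scaling) can be sketched without any imaging library. The text does not state which resampling method is used; averaging each 2×2 block is an assumption, and the function name is hypothetical.

```python
def green_channel_half_size(frame):
    """Convert an RGB frame to a half-resolution green-channel image.

    frame is a list of rows of (r, g, b) tuples. Each output pixel is
    the mean green value of one 2x2 block of input pixels.
    """
    height, width = len(frame), len(frame[0])
    out = []
    for y in range(0, height - 1, 2):
        row = []
        for x in range(0, width - 1, 2):
            total = (frame[y][x][1] + frame[y][x + 1][1]
                     + frame[y + 1][x][1] + frame[y + 1][x + 1][1])
            row.append(total / 4.0)
        out.append(row)
    return out
```

An 800×608 frame would become a 400×304 grayscale image, which cuts the pixel count for later stages by a factor of four.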

B. Initialization and Convergence Results

Initialization is fast, requiring less than a second to start building the map and only a few seconds to build a full map. Fig. 4 shows the output of the algorithm running on a 48 s clip of a surgeon tracing a vein in an eyeball phantom. Within half a second, the map for visible areas is initialized. Because the light-pipe only illuminates portions of the eye at a time, the map is built as new vessels become visible. The proposed algorithm is able to handle the occlusion of the vessels by the instrument shaft and light-pipe. Notice the algorithm has been able to accurately map and correctly localize even though the entire view has moved, rotated, and been occluded by the tool under variable illumination.

Fig. 4.

Output of the proposed algorithm at various points during a 48 s video of an eye phantom filled with saline and illuminated with a light-pipe.

C. Intermediate Detection and Occupancy Grid Results

Fig. 3 shows the intermediate steps of the algorithm on ex vivo porcine retina in an eyeball phantom filled with saline. The view is through a vitrectomy lens and illumination is provided by the surgeon solely through a light-pipe. Incomplete vessel detections are merged over time into the occupancy grid to form a full and accurate map after a few seconds. Fig. 3(c) shows that the localization is still maintained after movement of the eyeball by the surgeon.

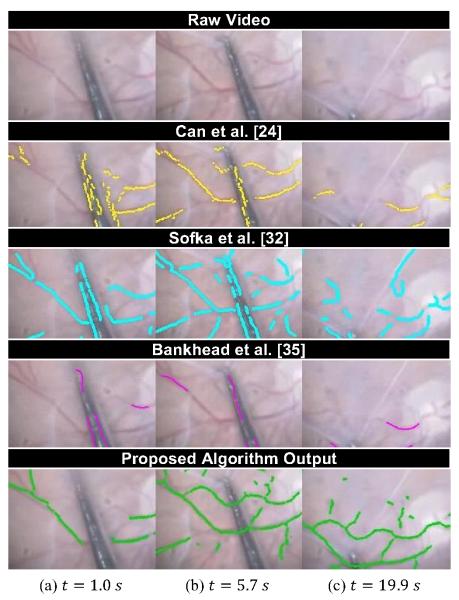

D. Comparison to Vessel Detection Algorithms

Fig. 5 demonstrates why current vessel tracing algorithms are insufficient for robotic guidance in real-time surgical environments. We compare to three existing vessel tracing algorithms on video of human retina in vivo taken during a membrane peel. Our proposed approach provides a more complete output than any of the other methods. In particular, as examination of Figs. 5(a) and 5(b) shows, our algorithm learns over time to ignore the tool shaft, exhibiting more robustness to occlusion and illumination. Overall, the temporal fusion of the proposed approach increases coverage and consistency.

Fig. 5.

Comparison of our approach (30 Hz) to the vessel detection algorithms of Can et al. [24] (80 Hz), Sofka et al. [32] (0.3 Hz), and Bankhead et al. [35] (8 Hz) on a 20 s video of human retina during an in vivo membrane peel. Source: http://youtu.be/_naooJFuxPI

V. Discussion

We have presented a new algorithm for retinal mapping and localization that operates in real time at 30 Hz. Designed to handle the dynamic environment of high-magnification, variable-illumination retinal surgery, our approach converges quickly and is robust to occlusion. In comparisons on retinal video, it has proven to be an effective method to temporally smooth vessel detections and build a comprehensive map of the vasculature. Shortcomings of the algorithm include some lag when smoothing jitter with the Kalman filter and loss of tracking in the case of large, sudden movements. Future improvements should include more robust vessel detection, ICP, or scan-matching approaches. More effective handling of uncertainty during localization and advanced motion models (such as spherical) would also be beneficial. Finally, future work includes quantitative comparisons to existing methods.

Acknowledgments

This work was supported in part by the National Institutes of Health (grant nos. R01EB000526, R21EY016359, and R01EB007969), the American Society for Laser Medicine and Surgery, the National Science Foundation (Graduate Research Fellowship), and the ARCS Foundation.

References

- [1]. Holtz F, Spaide RF. Medical Retina. Springer; Berlin: 2007.

- [2]. Singh SPN, Riviere CN. Physiological tremor amplitude during retinal microsurgery. Proc. IEEE Northeast Bioeng. Conf. 2002:171–172.

- [3]. Brooks HL. Macular hole surgery with and without internal limiting membrane peeling. Ophthalmology. 2000;vol. 107(no. 10):1939–1948. doi: 10.1016/s0161-6420(00)00331-6.

- [4]. Packer AJ. Manual of Retinal Surgery. Butterworth-Heinemann Medical; 2001.

- [5]. Rice TA. Internal limiting membrane removal in surgery for full-thickness macular holes. Am. J. Ophthalmol. 1999;vol. 129(no. 6):125–146. doi: 10.1016/s0002-9394(00)00358-5.

- [6]. Frank RN. Retinal laser photocoagulation: Benefits and risks. Vision Research. 1980;vol. 20(no. 12):1073–1081. doi: 10.1016/0042-6989(80)90044-9.

- [7]. Infeld DA, O’Shea JG. Diabetic retinopathy. Postgrad. Med. J. 1998;vol. 74(no. 869):129–133. doi: 10.1136/pgmj.74.869.129.

- [8]. Weiss JN, Bynoe LA. Injection of tissue plasminogen activator into a branch retinal vein in eyes with central retinal vein occlusion. Ophthalmology. 2001;vol. 108(no. 12):2249–2257. doi: 10.1016/s0161-6420(01)00875-2.

- [9]. Bynoe LA, Hutchins RK, Lazarus HS, Friedberg MA. Retinal endovascular surgery for central retinal vein occlusion: Initial experience of four surgeons. Retina. 2005;vol. 25(no. 5):625–632. doi: 10.1097/00006982-200507000-00014.

- [10]. Das H, Zak H, Johnson J, Crouch J, Frambach D. Evaluation of a telerobotic system to assist surgeons in microsurgery. Comput. Aided Surg. 1999;vol. 4(no. 1):15–25. doi: 10.1002/(SICI)1097-0150(1999)4:1<15::AID-IGS2>3.0.CO;2-0.

- [11]. Ueta T, Yamaguchi Y, Shirakawa Y, Nakano T, Ideta R, Noda Y, Morita A, Mochizuki R, Sugita N, Mitsuishi M. Robot-assisted vitreoretinal surgery: Development of a prototype and feasibility studies in an animal model. Ophthalmology. 2009;vol. 116(no. 8):1538–1543. doi: 10.1016/j.ophtha.2009.03.001.

- [12]. Wei W, Goldman RE, Fine HF, Chang S, Simaan N. Performance evaluation for multi-arm manipulation of hollow suspended organs. IEEE Trans. Robot. 2009;vol. 25(no. 1):147–157.

- [13]. Bourla DH, Hubschman JP, Culjat M, Tsirbas A, Gupta A, Schwartz SD. Feasibility study of intraocular robotic surgery with the da Vinci surgical system. Retina. 2008;vol. 28(no. 1):154–158. doi: 10.1097/IAE.0b013e318068de46.

- [14]. Mulgaonkar AP, Hubschman JP, Bourges JL, Jordan BL, Cham C, Wilson JT, Tsao TC, Culjat MO. A prototype surgical manipulator for robotic intraocular micro surgery. Stud. Health Technol. Inform. 2009;vol. 142(no. 1):215–217.

- [15]. Uneri A, Balicki M, Handa J, Gehlbach P, Taylor RH, Iordachita I. New Steady-Hand Eye Robot with micro-force sensing for vitreoretinal surgery. Proc. IEEE Int. Conf. Biomed. Robot. Biomechatron. 2010:814–819. doi: 10.1109/BIOROB.2010.5625991.

- [16]. Hubschman JP, Bourges JL, Choi W, Mozayan A, Tsirbas A, Kim CJ, Schwartz SD. The Microhand: A new concept of micro-forceps for ocular robotic surgery. Eye. 2009;vol. 24(no. 2):364–367. doi: 10.1038/eye.2009.47.

- [17]. Dogangil G, Ergeneman O, Abbott JJ, Pané S, Hall H, Muntwyler S, Nelson BJ. Toward targeted retinal drug delivery with wireless magnetic microrobots. Proc. IEEE Intl. Conf. Intell. Robot. Syst. 2008:1921–1926.

- [18]. MacLachlan RA, Becker BC, Cuevas Tabares J, Podnar GW, Lobes LA, Jr., Riviere CN. Micron: An actively stabilized handheld tool for microsurgery. IEEE Trans. Robot. 2012;vol. 28(no. 1):195–212. doi: 10.1109/TRO.2011.2169634.

- [19]. Becker BC, MacLachlan RA, Lobes LA, Jr., Hager G, Riviere C. Vision-based control of a handheld surgical micromanipulator with virtual fixtures. IEEE Trans. Robot. doi: 10.1109/TRO.2013.2239552. submitted.

- [20]. Rosenberg LB. Virtual fixtures: Perceptual tools for telerobotic manipulation. IEEE Virt. Reality Ann. Int. Symp. 1993:76–82.

- [21]. Bettini A, Marayong P, Lang S, Okamura AM, Hager GD. Vision-assisted control for manipulation using virtual fixtures. IEEE Trans. Robot. 2004 Dec.;vol. 20(no. 6):953–966.

- [22]. Lin HC, Mills K, Kazanzides P, Hager GD, Marayong P, Okamura AM, Karam R. Portability and applicability of virtual fixtures across medical and manufacturing tasks. Proc. IEEE Int. Conf. Robot. Autom. 2006:225–231.

- [23]. Becker BC, Voros S, Lobes LA, Jr., Handa JT, Hager GD, Riviere CN. Retinal vessel cannulation with an image-guided handheld robot. Proc. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2010:5420–5423. doi: 10.1109/IEMBS.2010.5626493.

- [24]. Can A, Shen H, Turner JN, Tanenbaum HL, Roysam B. Rapid automated tracing and feature extraction from retinal fundus images using direct exploratory algorithms. IEEE Trans. Inform. Technol. Biomed. 1999;vol. 3(no. 2):125–138. doi: 10.1109/4233.767088.

- [25]. Hahnel D, Burgard W, Fox D, Thrun S. An efficient FastSLAM algorithm for generating maps of large-scale cyclic environments from raw laser range measurements. Proc. IEEE Intl. Conf. Intell. Robot. Syst. 2003;vol. 1:206–211.

- [26]. Besl PJ, McKay ND. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992;vol. 14(no. 2):239–256.

- [27]. Chanwimaluang T. An efficient blood vessel detection algorithm for retinal images using local entropy thresholding. Proc. Int. Symp. Circuits Syst. 2003;vol. 5:21–24.

- [28]. Mojon D. Adaptive local thresholding by verification-based multithreshold probing with application to vessel detection in retinal images. IEEE Trans. Pattern Anal. Mach. Intell. 2003 Jan.;vol. 25(no. 1):131–137.

- [29]. Staal J, Abràmoff MD, Niemeijer M, Viergever MA, van Ginneken B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imag. 2004 Apr.;vol. 23(no. 4):501–9. doi: 10.1109/TMI.2004.825627.

- [30]. Alonso-Montes C, Vilariño DL, Dudek P, Penedo MG. Fast retinal vessel tree extraction: A pixel parallel approach. International Journal of Circuit Theory and Applications. 2008 Jul.;vol. 36(no. 5-6):641–651.

- [31]. Chaudhuri S, Chatterjee S, Katz N, Nelson M, Goldbaum M. Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans. Med. Imag. 1989 Jan.;vol. 8(no. 3):263–269. doi: 10.1109/42.34715.

- [32]. Sofka M, Stewart CV. Retinal vessel centerline extraction using multiscale matched filters, confidence and edge measures. IEEE Trans. Med. Imag. 2006 Dec.;vol. 25(no. 12):1531–1546. doi: 10.1109/tmi.2006.884190.

- [33]. Soares JVB, Leandro JJG, Cesar RM, Jelinek HF, Cree MJ. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans. Med. Imag. 2006 Sep.;vol. 25(no. 9):1214–1222. doi: 10.1109/tmi.2006.879967.

- [34]. Lupascu CA, Tegolo D, Trucco E. FABC: Retinal vessel segmentation using AdaBoost. IEEE Trans. Inform. Technol. Biomed. 2010 Sep.;vol. 14(no. 5):1267–1274. doi: 10.1109/TITB.2010.2052282.

- [35]. Bankhead P, Scholfield CN, McGeown JG, Curtis TM. Fast retinal vessel detection and measurement using wavelets and edge location refinement. PLOS ONE. 2012 Jan.;vol. 7(no. 3). doi: 10.1371/journal.pone.0032435.

- [36]. Perfetti R, Ricci E, Casali D, Costantini G. Cellular neural networks with virtual template expansion for retinal vessel segmentation. IEEE Transactions on Circuits and Systems II: Express Briefs. 2007 Feb.;vol. 54(no. 2):141–145.

- [37]. Nieto A, Brea VM, Vilarino DL. FPGA-accelerated retinal vessel-tree extraction. International Conference on Field Programmable Logic and Applications. 2009:485–488.

- [38]. Lowe DG. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004;vol. 60(no. 2):91–110.

- [39]. Cattin P, Bay H, Van Gool L, Székely G. Retina mosaicing using local features. Medical Image Computing and Computer-Assisted Intervention. 2006;vol. 4191:185–192. doi: 10.1007/11866763_23.

- [40]. Wang Y, Shen J, Liao W, Zhou L. Automatic fundus images mosaic based on SIFT feature. Journal of Image and Graphics. 2011;vol. 6(no. 4):533–537.

- [41]. Chen J, Smith R, Tian J, Laine AF. A novel registration method for retinal images based on local features. Proc. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2008;vol. 2008:2242–2245. doi: 10.1109/IEMBS.2008.4649642.

- [42]. Becker BC, Yang S, MacLachlan RA, Riviere CN. Towards vision-based control of a handheld micromanipulator for retinal cannulation in an eyeball phantom. Proc. IEEE Int. Conf. Biomed. Robot. Biomechatron. 2012:44–49. doi: 10.1109/BioRob.2012.6290813.

- [43]. Becker DE, Can A, Turner JN, Tanenbaum HL, Roysam B. Image processing algorithms for retinal montage synthesis, mapping, and real-time location determination. IEEE Trans. Biomed. Eng. 1998;vol. 45(no. 1):105–118. doi: 10.1109/10.650362.

- [44]. Can A, Stewart CV, Roysam B, Tanenbaum HL. A feature-based, robust, hierarchical algorithm for registering pairs of images of the curved human retina. IEEE Trans. Pattern Anal. Mach. Intell. 2002 Mar.;vol. 24(no. 3):347–364.

- [45]. Broehan AM, Rudolph T, Amstutz CA, Kowal JH. Realtime multimodal retinal image registration for a computer-assisted laser photocoagulation system. IEEE Trans. Biomed. Eng. 2011 Oct.;vol. 58(no. 10):2816–2824. doi: 10.1109/TBME.2011.2159860.

- [46]. Stewart CV, Tsai C-L, Roysam B. The dual-bootstrap iterative closest point algorithm with application to retinal image registration. IEEE Trans. Med. Imag. 2003 Nov.;vol. 22(no. 11):1379–1394. doi: 10.1109/TMI.2003.819276.

- [47]. Chanwimaluang T, Fan G, Fransen SR. Hybrid retinal image registration. IEEE Trans. Inform. Technol. Biomed. 2006 Jan.;vol. 10(no. 1):129–142. doi: 10.1109/titb.2005.856859.

- [48]. Chen J, Tian J, Lee N, Zheng J, Smith RT, Laine AF. A partial intensity invariant feature descriptor for multimodal retinal image registration. IEEE Trans. Biomed. Eng. 2010 Jul.;vol. 57(no. 7):1707–1718. doi: 10.1109/TBME.2010.2042169.

- [49]. Thrun S. Simultaneous Localization and Mapping. In: Jefferies M, Yeap W-K, editors. Robotics and Cognitive Approaches to Spatial Mapping. vol. 38. Springer; Berlin / Heidelberg: 2008. pp. 13–41.

- [50]. Montemerlo M, Thrun S, Koller D, Wegbreit B. FastSLAM: A factored solution to the simultaneous localization and mapping problem. Proc. Nat. Conf. Artificial Intell. 2002:593–598.

- [51]. Bay H, Ess A, Tuytelaars T, van Gool L. SURF: Speeded Up Robust Features. Comp. Vis. Img. Understand. 2008;vol. 110(no. 3):346–359.

- [52]. Horn BKP. Closed-form solution of absolute orientation using unit quaternions. J. Opt. Soc. Am. A. 1987;vol. 4(no. 4):629–642.

- [53]. OpenCV Library. [Online]. Available: http://opencv.willowgarage.com/