Abstract

Little is known about the network of brain regions activated prior to explicit awareness of emotionally salient social stimuli. We investigated this in a functional magnetic resonance imaging study using a technique that combined elements of binocular rivalry and motion flash suppression in order to prevent awareness of fearful faces and houses. We found increased left amygdala and fusiform gyrus activation for fearful faces compared to houses, despite suppression from awareness. Psychophysiological interaction analyses showed that amygdala activation was associated with task-specific (fearful faces greater than houses) modulation of an attention network, including bilateral pulvinar, bilateral insula, left frontal eye fields, left intraparietal sulcus and early visual cortex. Furthermore, we report an unexpected main effect of increased left parietal cortex activation associated with suppressed fearful faces compared to suppressed houses. This parietal finding is the first report of increased dorsal stream activation for a social object despite suppression, which suggests that information can reach parietal cortex for a class of emotionally salient social objects, even in the absence of awareness.

Keywords: motivated attention, vision, parietal, fMRI, PPI

INTRODUCTION

Emotional stimuli summon our attention more than neutral stimuli. Fearful faces, an emotive social stimulus, are particularly compelling and effectively capture our attention. This may be because fearful faces warn conspecifics of nearby threat, and the capacity to detect them may represent an evolved mechanism for automatically detecting stimuli important for survival (Ekman and Friesen, 1971; LeDoux, 1996; Ohman et al., 2000; Anderson and Phelps, 2001). Emotional stimuli have a privileged processing status, attributed to the automatic engagement of selective attention by emotionally salient objects (Vuilleumier and Schwartz, 2001; Vuilleumier, 2005).

Even when emotional stimuli are presented very briefly, or outside the focus of attention or awareness, they are processed more efficiently than non-emotional stimuli. One paradigm that has been used to investigate processing of emotional or arousing stimuli is the interocular technique of continuous flash suppression (CFS; Tsuchiya and Koch, 2005). Behaviorally, this technique can be used to measure differences in the detectability of different stimuli. Briefly, a target is presented to the participant’s non-dominant eye and a continuous flow of ‘noise’ images (e.g. Mondrian-like patterns) is presented to the dominant eye. CFS temporarily suppresses the target from conscious perception. The target eventually ‘breaks through’ to conscious perception, and the time to breakthrough can be compared across stimuli. Using CFS, it has been demonstrated that highly salient social stimuli such as fearful faces (vs neutral faces), upright faces (vs inverted faces) and faces with direct gaze (vs averted gaze) break through to awareness faster (Jiang et al., 2007; Yang et al., 2007; Stein et al., 2011). Thus, arousing or motivationally relevant stimuli are prioritized during visual processing, but how this occurs is not well understood.

At the neural level, fearful faces and other emotional stimuli engage the amygdala, which is particularly reactive to signals of impending threat or biological relevance, such as fearful faces (LeDoux, 1996; Davis and Whalen, 2001; Adolphs, 2002; Phelps and LeDoux, 2005). Whether fearful stimuli are presented subliminally or supraliminally, the amygdala is robustly activated in many neuroimaging studies (Whalen et al., 1998; Anderson et al., 2003; Glascher and Adolphs, 2003; Wager et al., 2003). The amygdala plays a role in guiding endogenous attention toward emotionally salient stimuli (Adolphs, 2008; Pessoa, 2010). For social perception, it is important for spontaneously attending to salient parts of the face, such as the eyes (Whalen et al., 1998; Whalen et al., 2004; Adolphs, 2005; Adolphs, 2010). Patients with bilateral amygdala damage do not show this automatic fixation toward the eye region of faces, nor do they show the enhanced perception of aversive stimuli seen in healthy observers (Anderson and Phelps, 2001; Tsuchiya et al., 2009). This is thought to be due to impaired bottom–up (e.g. stimulus-driven or feature-based) attention in patients with amygdala lesions (Kennedy and Adolphs, 2010).

One proposed route through which the amygdala may receive low-level visual information is a subcortical visual pathway, although its existence is controversial (Pessoa and Adolphs, 2010). We have previously demonstrated differential responses in regions associated with the subcortical visual pathway (including the amygdala, pulvinar and superior colliculus) for unperceived faces compared to chairs using binocular rivalry and motion suppression (Pasley et al., 2004). Additional evidence for the processing of emotional stimuli in the absence of awareness comes from patients with ‘blindsight’, who have sustained a primary visual cortex (V1) lesion that prevents conscious vision in the corresponding portion of the visual field. Despite these lesions, patients with blindsight retain residual abilities to detect visual stimuli, suggesting that this information can still be processed. Morris and colleagues (2001) used functional MRI (fMRI) to demonstrate increased amygdala activation in response to emotionally expressive faces in a blindsight patient, and such patients can also learn aversive associations with neutral stimuli presented in their blind hemifield (Anders et al., 2004). This suggests that information can reach the amygdala and influence behavior without conscious awareness.

Conscious awareness is likely a continuum rather than a dichotomous event, and other states exist between unawareness and awareness. For example, ‘implicit awareness’ refers to a state in which stimuli cannot be explicitly reported but have a measurable impact on subject performance. ‘Explicit awareness’ occurs when visual events can be explicitly reported by the subject (Kihlstrom et al., 1992; Mack and Rock, 1998). Selective attention can operate at any stage of this continuum: largely unconscious attentional mechanisms are thought to operate on stimuli, and visual awareness sometimes results from this attentional step (Crick and Koch, 1990). Thus, attention can select invisible objects. Although we do not manipulate attention in the current design, any processing differences between stimulus categories may reflect attentional mechanisms operating prior to awareness.

CFS in conjunction with fMRI provides a useful framework for examining neural responses to objects that are not explicitly perceived but are nevertheless processed. Prior studies used binocular rivalry and CFS techniques to understand neural responses to different object categories. Most early binocular rivalry neuroimaging studies examined neural responses to alternations in stimulus dominance (Tong et al., 1998; Polonsky et al., 2000). More recent neuroimaging studies used CFS and required participants to search for object stimuli and report when they detected them (Pessoa et al., 2005; Jiang and He, 2006). These experiments address specific questions about the threshold of awareness.

The current study used a CFS-like paradigm to examine neural responses to fearful faces compared to a class of neutral stimuli (houses). Unlike most other CFS studies, the current design does not involve an explicit search task and was not designed to compare brain activity when targets are seen vs unseen. Rather, the goal was to examine purely non-conscious processing of emotional stimuli. Participants performed a task that was orthogonal to the underlying fearful face vs house manipulation and were trained to respond if they saw anything other than the blue disk or the dynamically moving checkered grid. Using this approach, we were able to capture neural responses to suppressed stimuli in the absence of search strategies. This study follows up our previous work, which found significant subcortical activation in the amygdala, pulvinar and superior colliculus in response to suppressed fearful faces but not suppressed chairs (Pasley et al., 2004). The current study improves upon that work in several ways. First, use of a more visually complex suppressed control (houses instead of chairs) allowed us to examine responses in the fusiform gyrus and in an additional higher-level control region (parahippocampal place area, PPA). Second, we employed a language-based instead of an object-based orthogonal task, which allows detection of differences in the ventral visual pathway that could have been obscured by the complex visual object task used previously. Finally, whereas our previous work imaged only the ventral visual pathway and subcortical structures, the whole-brain coverage in the current design is crucial to understanding the network involved in non-conscious processing of emotional stimuli. 
Because amygdala responses in the absence of explicit awareness are thought to play a role in prioritizing selection mechanisms and ultimately influencing behavior, we additionally employed a psychophysiological interaction (PPI) analysis to characterize the network of activity associated with a fearful face-specific amygdala response.

MATERIALS AND METHODS

Subjects

Sixteen adults from Yale University with normal or corrected-to-normal vision were recruited to participate in the study. Four subjects were excluded from analysis. Three experienced failure of binocular suppression during fMRI scanning: one reported clear, conscious perception of faces and houses during the task, and two reported seeing intermittent eyes or ‘parts of faces’. The fourth excluded subject reported that he ‘guessed’ the content of the suppressed stimuli based on his knowledge of the laboratory’s research interests. All 12 remaining subjects (6 females, mean age = 22.9 years) reported complete unawareness of the face and house stimuli. All participants gave written informed consent in accordance with procedures approved by the Yale University Institutional Review Board and were paid for their participation.

Experimental procedure

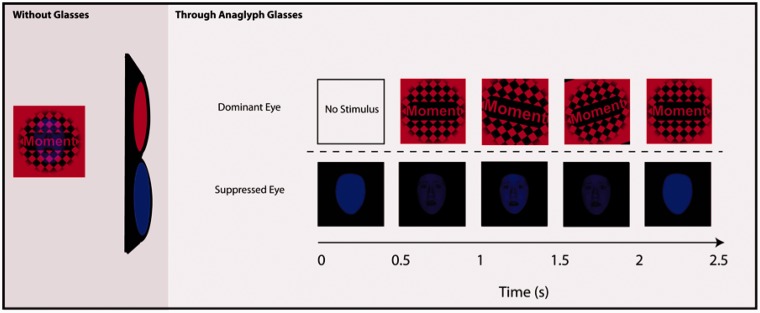

Participants wore custom red/blue anaglyph glasses, made to accommodate both left and right eye dominance. Eye dominance was determined using a variant of the Miles test (Miles, 1929, 1930). Because full suppression of the non-dominant stimulus was essential to the current investigation, eligibility for study inclusion was determined through individual behavioral pretesting of the rivalry effect. Only individuals reporting complete suppression and dominance in response to rivalrous stimuli were considered for fMRI scanning. Pretesting was completed in the context of unrelated behavioral testing for other laboratory experiments, and participants were unaware that their responses to the presented stimuli determined eligibility for the current investigation. The experimenter briefed participants on the binocular rivalry effect and explained that they would be asked to view and comment on a set of rivalrous stimuli. Participants were told that the stimuli would be used in future fMRI studies, and the importance of honest, thorough reporting was emphasized. Wearing anaglyph glasses, participants viewed rivalrous stimuli consisting of a dynamic red checkerboard with a centrally presented word in the dominant eye and blue abstract shapes in the non-dominant eye. Participants were introduced to the phenomenon of breakthrough to prepare them to report any experience of breakthrough during the test procedure.

Participants viewed 16 alternating blocks of suppressed faces and suppressed houses. Eight 10 s blocks of each rivalrous condition appeared across the duration of the experiment, separated in each instance by an equal-duration block of rest. Four 2.5 s trials were presented consecutively within each block.
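The block structure can be sketched as a small schedule generator. This is a hypothetical reconstruction: the text does not say which condition came first or whether a rest block followed the final stimulus block, so the sketch assumes a face-first order, strict alternation and a 10 s rest after every stimulus block. The function names are illustrative only.

```python
# Hypothetical reconstruction of the suppression-run block design.
BLOCK_S = 10.0           # duration of each stimulus block (s)
TRIAL_S = 2.5            # duration of each trial (s)
N_BLOCKS_PER_COND = 8    # eight face blocks and eight house blocks


def block_schedule(first="face"):
    """Return (condition, onset_s, duration_s) for each stimulus block.

    Assumes strict alternation and a rest block of equal length after
    every stimulus block.
    """
    order = ["face", "house"] if first == "face" else ["house", "face"]
    schedule = []
    onset = 0.0
    for i in range(2 * N_BLOCKS_PER_COND):
        schedule.append((order[i % 2], onset, BLOCK_S))
        onset += 2 * BLOCK_S  # stimulus block followed by an equal-length rest block
    return schedule


def trial_onsets(schedule):
    """Expand each block into its consecutive trials."""
    n_trials = int(BLOCK_S // TRIAL_S)  # 4 trials per 10 s block
    return [(cond, onset + k * TRIAL_S)
            for cond, onset, _ in schedule
            for k in range(n_trials)]


trials = trial_onsets(block_schedule())
print(len(trials))  # 64
```

Under these assumptions the run contains 64 trials (32 face, 32 house), which matches one presentation of each of the 32 face and 32 house images and two presentations of each of the 32 words described under Stimuli.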

During the test procedure, stimuli were back-projected onto a translucent screen mounted at the rear of the MRI gantry and were viewed through a periscope prism system on the head coil. Each trial began with a 500 ms monocular presentation of a blue disk to the non-dominant eye. To induce independent perception of the intended dominant and suppressed stimuli through the anaglyph glasses, checkerboard images were defined by red luminance and suppressed stimuli were defined by blue luminance. Following presentation of the blue disk, a red checkerboard with a centrally presented word appeared to the dominant eye and began moving sharply back and forth (Figure 1). Accompanying the checkerboard display, the blue disk displayed to the non-dominant eye gradually faded into the target presentation of a blue fearful face or blue house. The target stimulus faded back to a blue disk after ∼1.5 s. The participant’s task was to classify the first letter of each word as a consonant or vowel as soon as they were able to identify the letter. If participants saw anything other than the blue disk or checkerboard (such as objects or parts of objects) in any trial, they were trained to press a third key. A single catch trial at the end of the experiment (in which breakthrough from interocular suppression is mimicked by presenting stimuli to both eyes) was used as an additional probe to determine whether participants had perceived the subliminal stimuli presented before it. All subjects responded appropriately to the catch trial that simulated breakthrough. If it was determined via button press, catch trial or post-scan debriefing that a subject perceived objects or parts of objects, that subject’s data were not used in the analysis (see Subjects section for individual subject details). 
This method allowed us to obtain reports of participant awareness without biasing participants to look for the stimuli, and thus to achieve our goal of a long-duration scanning session in the absence of awareness.

Fig. 1.

Schematic of binocular rivalry stimulus presentation. Without glasses: Example view of stimulus as seen without anaglyph glasses. Through anaglyph glasses: Words were presented into the dominant eye through the red lens of anaglyph glasses while faces and houses were presented into the suppressed eye through the blue lens.

A functional localizer scan followed the main scan, in which participants made same or different identity judgments (i.e. subordinate-level discrimination) on unfamiliar faces or houses, presented in a blocked design. Two images of faces or houses were presented side-by-side on a black background for 3500 ms (followed by a 1000 ms interstimulus interval), with four 22.5 s blocks of each stimulus type separated by a 10 s rest period during which two asterisks were presented side-by-side on the screen. This localizer scan served to identify face- and house-selective regions (fusiform face area; FFA and parahippocampal place area; PPA) for functional region-of-interest (ROI) analyses.
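The localizer timing works out as follows, using only the values stated above and assuming each block is filled with back-to-back trials (the number of rest periods is not specified, so only stimulus time is totalled):

$$
\begin{aligned}
t_{\text{trial}} &= 3500\ \text{ms} + 1000\ \text{ms} = 4.5\ \text{s} \\
n_{\text{trials/block}} &= \frac{22.5\ \text{s}}{4.5\ \text{s}} = 5 \\
T_{\text{stim}} &= (4 + 4)\ \text{blocks} \times 22.5\ \text{s} = 180\ \text{s}
\end{aligned}
$$

That is, each localizer block would contain five face pairs or five house pairs.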

MRI acquisition

Scans were performed at Yale University on a 3 T Siemens Trio scanner equipped with a standard quadrature head coil (40 axial slices parallel to the AC–PC plane, whole-brain coverage, in-plane voxel size = 3.516 × 3.516 mm, slice thickness/gap = 3.5/0 mm, TR = 2320 ms, TE = 25 ms, flip angle = 60°, 127 volumes collected in one functional run). High-resolution T1-weighted 3D anatomical data were also acquired (MPRAGE, TR = 2530 ms, TE = 3.66 ms, TI = 1100 ms, flip angle = 7°, resulting in 1 mm³ voxels).
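For orientation, the functional acquisition parameters above imply the following run length, slab thickness and voxel volume (simple arithmetic on the stated values):

$$
\begin{aligned}
T_{\text{run}} &= 127 \times 2.32\ \text{s} = 294.64\ \text{s} \approx 4.9\ \text{min} \\
z_{\text{slab}} &= 40 \times (3.5 + 0)\ \text{mm} = 140\ \text{mm} \\
V_{\text{voxel}} &= 3.516 \times 3.516 \times 3.5\ \text{mm}^3 \approx 43.3\ \text{mm}^3
\end{aligned}
$$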

Stimuli

Visual stimuli were grayscale images of 32 fearful faces and 32 houses. Faces were drawn from the Ekman stimuli (Ekman and Friesen, 1976) and from face photographs obtained from a Yale theater group. Houses were from a locally collected set of photographs of homes in New Haven County, CT. All stimuli were 450 × 450 pixels. Each face and house image was presented once, with no repetition. Each block contained either four faces or four houses.

Face and house stimuli were matched for mean and standard deviations of luminance values [mean: t(64) = 1.34; s.d.: t(64) = 0.580, both P > 0.05, n.s.]. Spatial frequency for each stimulus was determined using a 2D discrete Fourier transform, shifting the zero-frequency component to center, and averaging frequencies within a stimulus. We then compared these values, but found no differences between face and house stimuli [face mean = 10 774 (s.d. 1003); house mean = 10 792 (s.d. 1346); t = 0.06, P > 0.05, n.s.].
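The image-matching checks above can be sketched with NumPy. This is a minimal illustration, not the authors’ script: the text does not specify how frequencies were averaged, so `mean_spectral_amplitude` assumes the mean amplitude over the whole shifted spectrum, and `independent_t` assumes a pooled-variance (Student) two-sample t-test. All function names are hypothetical.

```python
import numpy as np


def luminance_stats(img):
    """Mean and standard deviation of pixel luminance for one grayscale image."""
    img = np.asarray(img, dtype=float)
    return img.mean(), img.std(ddof=1)


def mean_spectral_amplitude(img):
    """2D DFT, zero frequency shifted to the centre, then mean |F| over all bins.

    Assumption: 'averaging frequencies' is read as averaging the amplitude
    spectrum over the whole image; the original measure may differ.
    """
    img = np.asarray(img, dtype=float)
    spectrum = np.fft.fftshift(np.fft.fft2(img))
    return np.abs(spectrum).mean() / img.size  # normalise by pixel count


def independent_t(a, b):
    """Student's two-sample t statistic (pooled variance) and its degrees of freedom."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    na, nb = a.size, b.size
    pooled_var = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    t = (a.mean() - b.mean()) / np.sqrt(pooled_var * (1 / na + 1 / nb))
    return t, na + nb - 2
```

In use, one would compute a per-image value (mean luminance, luminance s.d. or spectral amplitude) for every face and every house and pass the two lists to `independent_t`. Because `np.fft.fftshift` only reorders frequency bins, it does not change a whole-spectrum mean; centring matters only if the average is restricted to a radial band around the zero-frequency component.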

Words of low imageability and relatively low age of acquisition were chosen for the experiment from the UWA MRC Psycholinguistic Word database. The thirty-two words were: Might, Excuse, Lie, Age, Luck, Aim, Sense, Edge, Amount, Escape, Gain, Whole, Ideal, Moment, Act, Reason, Bother, Try, Extra, Object, Find, Answer, Clever, Usual, Wonder, Order, Issue, Bet, Area, Item, Normal and Repeat. Each word was presented one additional time over the course of the experiment, such that the suppressed face and suppressed house conditions contained the same number of repeated words. Each word was centered within the checkerboard and displayed in Arial font in mixed case, with the first letter capitalized and the remaining letters lowercase. Participants performed near ceiling on this task [96.8 ( ± 0.05)% accuracy overall], and there were no differences in accuracy between the face and house blocks.
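The consonant/vowel judgement can be scored with a few lines of Python. The helper names and response labels are hypothetical; the sketch derives the correct answer from each word’s first letter and shows that, by this count, the list contains equal numbers of vowel- and consonant-initial words.

```python
# Word list copied from the Stimuli section above.
WORDS = ["Might", "Excuse", "Lie", "Age", "Luck", "Aim", "Sense", "Edge",
         "Amount", "Escape", "Gain", "Whole", "Ideal", "Moment", "Act", "Reason",
         "Bother", "Try", "Extra", "Object", "Find", "Answer", "Clever", "Usual",
         "Wonder", "Order", "Issue", "Bet", "Area", "Item", "Normal", "Repeat"]

VOWELS = frozenset("AEIOU")  # no word in the list begins with "Y"


def correct_response(word: str) -> str:
    """Expected answer for the consonant/vowel judgement on the first letter."""
    return "vowel" if word[0].upper() in VOWELS else "consonant"


def accuracy(words, responses) -> float:
    """Proportion of trials on which the response matched the first letter."""
    hits = sum(correct_response(w) == r for w, r in zip(words, responses))
    return hits / len(words)


counts = {"vowel": 0, "consonant": 0}
for w in WORDS:
    counts[correct_response(w)] += 1
print(counts)  # {'vowel': 16, 'consonant': 16}
```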

Data analysis

Anatomical ROI

Functional data were processed using tools from the Oxford University Centre for Functional MRI of the Brain (FMRIB) Software Library (FSL 4.1, http://www.fmrib.ox.ac.uk/fsl/). Data were motion corrected, and the resulting movement parameters were entered as covariates in the statistical analysis. We used the fMRI Expert Analysis Tool (FEAT) version 5.2 to submit functional data to a mixed-model random-effects analysis. Data were spatially smoothed using a Gaussian kernel with a full-width at half-maximum (FWHM) of 5 mm, high-pass filtered (40 s cutoff) to remove low-frequency artifacts, pre-whitened using FILM to minimize temporal autocorrelation, and non-linearly registered to the Montreal Neurological Institute (MNI) template using FNIRT (http://www.fmrib.ox.ac.uk/fsl/fnirt). We included two regressors of interest (faces and houses), which were convolved with a double-gamma hemodynamic response function (HRF), and a voxel-wise general linear model was used to identify regions showing significant task-related activation for each condition. Based on our a priori hypothesis, ROI analyses were used to test for task-related differences in the amygdala. Normalized estimates from the main effects of suppressed faces and suppressed houses were extracted from the left amygdala, defined using the Harvard–Oxford subcortical atlas (distributed with FSL). Average values for each individual were entered into a t-test for significance testing.
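To illustrate how a block regressor is built from a double-gamma HRF, the sketch below convolves a boxcar with a difference of two gamma densities and samples the result once per TR. The shape parameters (peak 6, undershoot 16, undershoot ratio 1/6) are the widely used SPM-style canonical values and are an assumption here; FEAT’s own double-gamma implementation may differ in detail. Function names and the example onsets are hypothetical.

```python
import math

import numpy as np


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, set to zero for t <= 0."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = (t[pos] ** (shape - 1) * np.exp(-t[pos] / scale)
                / (math.gamma(shape) * scale ** shape))
    return out


def double_gamma_hrf(dt, duration=32.0, peak_shape=6.0, under_shape=16.0, ratio=1 / 6):
    """Assumed SPM-style double-gamma HRF sampled every dt seconds, normalised to unit sum."""
    t = np.arange(0.0, duration, dt)
    h = gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, under_shape)
    return h / h.sum()


def block_regressor(onsets, block_dur, n_vols, tr, dt=0.1):
    """Boxcar for the given blocks, convolved with the HRF and sampled at each volume."""
    n_fine = int(round(n_vols * tr / dt))
    box = np.zeros(n_fine)
    for onset in onsets:
        start = int(round(onset / dt))
        stop = int(round((onset + block_dur) / dt))
        box[start:stop] = 1.0
    conv = np.convolve(box, double_gamma_hrf(dt))[:n_fine]
    idx = np.round(np.arange(n_vols) * tr / dt).astype(int)  # start of each volume
    return conv[idx]
```

For example, `block_regressor([0.0, 40.0], 10.0, n_vols=127, tr=2.32)` returns one value per volume for two 10 s blocks at illustrative onsets, sampled at the 2.32 s TR. Normalising the HRF to unit sum makes the response to a sustained block plateau near 1.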

Functional ROIs

Data from the functional localizer were preprocessed and analyzed identically to experimental data, with face and house blocks included as regressors of interest. Two ROIs were defined in each subject using data from functional localizer scans. Both were 4 mm spherical ROIs drawn around the peak voxel from the appropriate contrast of interest. The fusiform ROI was selected based on the region surrounding the most selective voxel (voxel with highest t-statistic) within the fusiform gyrus responding more to faces than houses. The parahippocampal ROI was selected as the region surrounding the most selective voxel within the posterior parahippocampal/collateral sulcus region.
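Defining a spherical functional ROI around a peak voxel can be sketched with NumPy. Two points are assumptions rather than details given in the text: the 4 mm is treated as the sphere’s radius, and the example uses a 2 mm isotropic grid such as the standard MNI template. `peak_voxel` and `sphere_mask` are hypothetical helper names.

```python
import numpy as np


def peak_voxel(stat_map, mask):
    """Index of the highest statistic inside a boolean anatomical mask."""
    masked = np.where(mask, stat_map, -np.inf)  # ignore voxels outside the mask
    return np.unravel_index(np.argmax(masked), stat_map.shape)


def sphere_mask(shape, center, radius_mm, voxel_size_mm):
    """Boolean mask of voxels whose centres lie within radius_mm of center."""
    grids = np.indices(shape, dtype=float)
    dist2 = np.zeros(shape)
    for axis in range(3):
        # convert voxel offsets to millimetres along each axis
        dist2 += ((grids[axis] - center[axis]) * voxel_size_mm[axis]) ** 2
    return dist2 <= radius_mm ** 2
```

With 2 mm voxels, a 4 mm radius sphere contains 33 voxels: the peak plus all voxels whose centres lie within two voxel steps of it.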

Connectivity

In order to perform a PPI analysis incorporating the hemodynamic deconvolution procedure implemented in SPM, individual participants’ data were remodeled in SPM8 (Wellcome Trust Centre for Neuroimaging). For these subject-level analyses, images were realigned to the first image, coregistered to the structural image and normalized to MNI space. Images were then spatially smoothed with a 5 mm FWHM Gaussian kernel. Each block was convolved with an HRF to produce a predicted neural response, with additional regressors included for motion. Subject-specific amygdala seeds were defined as a 4 mm sphere surrounding the maximum within the amygdala ROI for the suppressed face greater than suppressed house contrast. The first eigenvariate of the seed time series was extracted to summarize its time course of activation. Neural activity was then estimated using a simple deconvolution model; the estimated neural activity was multiplied by the psychological variable (faces vs houses) and reconvolved with a canonical HRF to obtain an interaction term. Individual subjects’ data were then modeled using the seed time course, the psychological variable (i.e. stimulus type: suppressed faces vs suppressed houses) and the interaction term as regressors. Contrast images were created for the interaction term, reflecting correlations between the seed region and each voxel that differed depending on stimulus category. These single-subject contrast images were then entered into a second-level analysis to test for group effects. To control for multiple comparisons, we used threshold-free cluster enhancement (TFCE; Smith and Nichols, 2009), with statistical significance determined by permutation testing and the α-level set at P < 0.05.
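The core of a PPI model can be illustrated with a simplified, BOLD-level version. This sketch is an approximation and not the SPM procedure described above: it omits the deconvolution step and forms the interaction directly from the mean-centred seed time course and a psychological vector (coded here, as an assumption, +1 for face blocks, −1 for house blocks and 0 for rest). `simple_ppi` is a hypothetical name.

```python
import numpy as np


def simple_ppi(seed_ts, psych, target_ts):
    """Return the interaction (PPI) beta for one target time series.

    Simplified BOLD-level PPI: no deconvolution, so the interaction term is
    the product of the centred seed signal and the centred task vector.
    """
    seed = np.asarray(seed_ts, dtype=float)
    psy = np.asarray(psych, dtype=float)
    y = np.asarray(target_ts, dtype=float)
    seed_c = seed - seed.mean()
    psy_c = psy - psy.mean()
    # Columns: interaction, physiological (seed), psychological (task), intercept
    X = np.column_stack([seed_c * psy_c, seed_c, psy_c, np.ones_like(seed)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[0]
```

With this coding, a positive interaction beta means the target region covaries more strongly with the seed during face blocks than during house blocks, the direction of the effects reported in Table 1.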

Whole-brain general linear model

In addition to the ROI and PPI analyses, we also performed a whole-brain general linear model in order to assess whether unexpected regions were activated for one condition compared to another. As with the PPI analyses, multiple comparisons were controlled using a permutation method with the α-level set at P < 0.05 (corrected using TFCE for whole-brain significance).
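The TFCE transform itself can be illustrated on a one-dimensional statistic map. In this toy sketch each sample’s score accumulates extent^E × h^H × dh over thresholds h, using E = 0.5 and H = 2 (the values recommended by Smith and Nichols, 2009, for 3D images). It omits 3D connectivity and the permutation step, in which the maximum TFCE score from each relabelled data set builds the null distribution. `tfce_1d` is a hypothetical name.

```python
import numpy as np


def tfce_1d(stat, dh=0.1, E=0.5, H=2.0):
    """Threshold-free cluster enhancement for a 1D statistic map (toy version)."""
    stat = np.asarray(stat, dtype=float)
    out = np.zeros_like(stat)
    if stat.max() <= 0:
        return out
    n = stat.size
    for h in np.arange(dh, stat.max() + dh / 2, dh):
        above = stat >= h
        i = 0
        while i < n:
            if above[i]:
                # find the contiguous supra-threshold run starting at i
                j = i
                while j < n and above[j]:
                    j += 1
                extent = j - i
                out[i:j] += (extent ** E) * (h ** H) * dh
                i = j
            else:
                i += 1
    return out
```

The design choice TFCE makes visible here is that a sample inside a broad cluster scores higher than an isolated sample of the same height, without choosing a single cluster-forming threshold.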

RESULTS

ROI analysis

We defined an a priori left amygdala ROI, based on our previous finding of increased left amygdala response to unperceived faces (Pasley et al., 2004). Increased amygdala activation is associated with fearful face processing (Morris et al., 1996), and is thought to influence a rapid fear- or threat-related response. We examined differences in bilateral amygdala activation for the two suppressed conditions and found greater left amygdala activation for suppressed faces than suppressed houses (t = 2.5, P < 0.05; Figure 2A), consistent with our a priori hypothesis and previous work. There were no significant differences between the two suppressed conditions in the right amygdala.

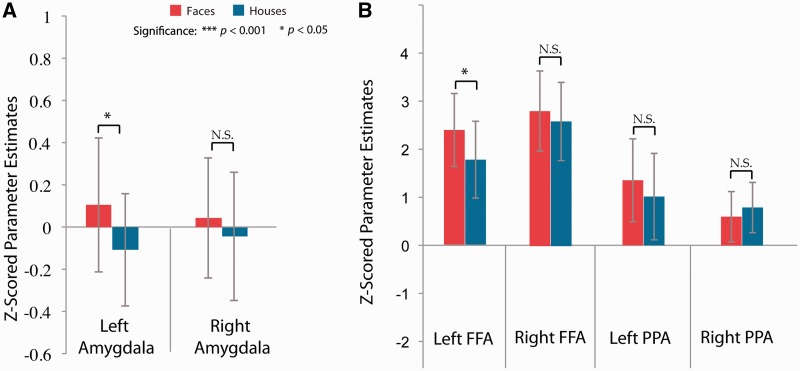

Fig. 2.

Effects of unconscious faces and houses in (A) amygdala and (B) FFA and PPA. (A) Amygdala regions determined by the Harvard–Oxford atlas. Significantly greater activation in left amygdala for suppressed faces than suppressed houses (P < 0.05). (B) FFA and PPA ROIs. ROIs were defined as a 4 mm sphere around the peak voxel for face- and house-selective regions, with peaks defined based on an independent localizer scan. Significant activation for suppressed fearful faces (compared to suppressed houses) was observed only in the left FFA. Correlations between amygdala, FFA and PPA regions (x-axis) and normalized estimates (y-axis) for two representative subjects, with face blocks represented in red and house blocks in blue.

Next, we examined activation in our functional ROIs, including the FFA and PPA, separately for each hemisphere (Figure 2B). We found significantly greater activation in left FFA for suppressed fearful faces compared to suppressed houses (t = 2.2, P < 0.05), whereas the same comparison in right FFA was not significant (t = 1.1, P = 0.299, n.s.). There was no significant difference in activation in left or right PPA for suppressed fearful faces compared to suppressed houses (left: t = 1.4, P = 0.204, n.s.; right: t = 1.2, P = 0.267, n.s.). In our previous study, amygdala activation associated with suppressed fearful faces was not accompanied by increases in fusiform cortex, in contrast to the current findings.
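The ROI comparisons above can be outlined as a paired t-test on per-subject estimates. The text says only that averages were entered into “a t-test”; a paired (within-subject) test is an assumption here, and with 12 subjects it gives 11 degrees of freedom. `paired_t` is a hypothetical helper.

```python
import numpy as np


def paired_t(face_est, house_est):
    """Paired t statistic and df for per-subject face vs house ROI estimates."""
    d = np.asarray(face_est, dtype=float) - np.asarray(house_est, dtype=float)
    n = d.size
    t = d.mean() / (d.std(ddof=1) / np.sqrt(n))  # mean difference / its standard error
    return t, n - 1
```

`scipy.stats.ttest_rel(face_est, house_est)` computes the same statistic and also returns a two-sided P value.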

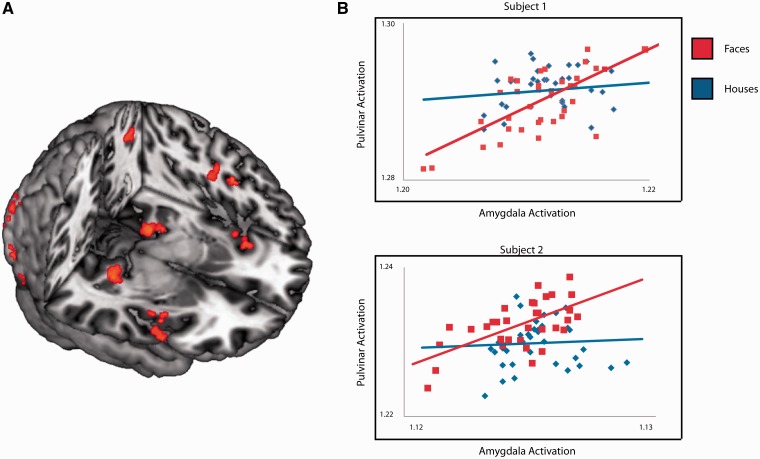

Functional connectivity analysis

The amygdala is thought to guide attention toward objects of biological relevance. Based on our previous finding of amygdala–pulvinar connectivity (Pasley et al., 2004), we anticipated that amygdala activation would be associated with increased connectivity to the pulvinar and potentially other regions not covered by our previous slice selection. To examine this hypothesis, we employed a PPI analysis (Friston et al., 1997). A PPI analysis identifies regions that covary with a given reference region in a condition-specific manner. The PPI analysis revealed increased connectivity between amygdala and multiple regions implicated in visual attention, including bilateral pulvinar, bilateral insula, left frontal eye fields, left inferior parietal and early visual cortex for non-conscious faces compared to houses (Table 1, Figure 3A and B).

Table 1.

Peaks of significant clusters identified in the PPI analysis

| Region | Hemisphere | MNI x (mm) | MNI y (mm) | MNI z (mm) | t-Value |
|---|---|---|---|---|---|
| Pulvinar | Right | 26 | −30 | −6 | 4.89 |
| Pulvinar | Left | −14 | −30 | −4 | 5.17 |
| Insula | Right | 44 | 6 | −2 | 4.07 |
| Insula | Left | −38 | 12 | −8 | 4.06 |
| Inferior parietal | Left | −32 | −54 | 48 | 4.16 |
| Early visual cortex | Bilateral | −12 | −92 | −16 | 7.93 |
| Frontal eye fields | Left | −44 | −4 | 32 | 4.09 |

Seed region was a 4 mm sphere around each individual’s left amygdala peak. Results are corrected for multiple comparisons using TFCE (see Materials and Methods section for details). Regions correspond with Figure 3A.

Fig. 3.

(A) Regions that interact with the amygdala in a task-dependent manner (suppressed face blocks greater than suppressed house blocks). (B) Correlations between amygdala (x-axis) and pulvinar (y-axis) for two representative subjects, with suppressed face blocks represented in red and suppressed house blocks in blue.

Whole-brain general linear model

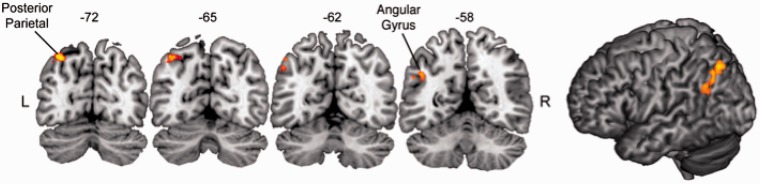

Perceptually suppressed fearful faces produced significantly greater activation compared to suppressed houses in left parietal cortex (Figure 4). Regions included the left angular gyrus (42, −56, 32; t-value: 4.8) and left posterior parietal cortex (30, −70, 50; t-value: 5.7). These two parietal regions were the only regions of significant activation. There were no regions of increased activation for suppressed houses greater than suppressed fearful faces.

Fig. 4.

Whole-brain analysis for perceptually suppressed faces. Voxels showing significant activation are plotted on coronal slices and a left lateral view of the MNI template brain. Perceptually suppressed faces led to increased fMRI response in the left posterior superior parietal sulcus and left angular gyrus, when compared to perceptually suppressed houses. These were the only regions of significant activation at the whole-brain level after correcting for multiple comparisons.

DISCUSSION

We hypothesized that the tendency for people to prioritize fearful faces over neutral, non-social stimuli corresponds to differences in amygdala responsivity. To test this, we suppressed fearful faces and houses while participants performed an orthogonal letter-classification task. This method allowed us to examine neural responses to two object categories without invoking a search strategy in participants (e.g. ‘Search for a face and report when this search is successful’). Because search is known to heighten perceptual awareness and activate object-selective cortices (Peelen et al., 2009), any corresponding activation would conflate the influences of stimulus-driven (bottom–up) attention and goal-directed (top–down) search. By explicitly avoiding a search paradigm, our results should better reflect stimulus-driven networks. We found increased left amygdala activation for suppressed fearful faces compared to suppressed houses, replicating our previous work (Pasley et al., 2004). The increased amygdala response was accompanied by significant fearful face-specific activation in object-selective cortex, with fearful faces increasing activation in left fusiform cortex. Whole-brain and PPI analyses revealed differential responses in regions involved in attention, including bilateral insula, pulvinar and early visual cortex, as well as a region of left inferior parietal cortex and left frontal eye fields. Together, these results suggest that the amygdala guides attention to emotionally salient objects, such as fearful faces, even in the absence of visual awareness.

These findings represent an advance on previous work, which typically focused on differentiating neural activation to faces below and above the threshold of implicit awareness. Implicit awareness refers to seeing that occurs when visual stimuli cannot be explicitly reported but have a measurable impact on subject performance. In contrast, explicit awareness occurs when subjects can explicitly report a visual event (Kihlstrom et al., 1992; Mack and Rock, 1998). This distinction is not necessarily dichotomous, however, and may represent a continuum of awareness. Participants included in the current analysis had no explicit awareness of the stimuli, and we therefore interpret the associated activation as reflecting processes prior to explicit awareness. Because fearful faces engaged both emotional (amygdala) and attentional (pulvinar, parietal) resources prior to explicit awareness, this activation may represent the mechanism by which motivationally salient stimuli are prioritized in attention and enhanced by amygdala activation. We therefore expect that, with longer presentation, fearful faces would reach awareness more quickly as a result of pre-conscious attention. Consistent with this expectation, a behavioral breakthrough-from-CFS study using the same face and house stimuli found that participants detected suppressed fearful faces much more quickly than suppressed houses (P < 0.001; see Supplementary Data for details).

We found unexpected activation in regions of left parietal cortex to suppressed faces vs houses. An important unresolved question is how this information reaches parietal cortex. We see three possibilities. One possibility is that information ‘leaks through’ suppression via the magnocellular pathway, which projects more heavily to dorsal visual regions involved in spatial processing than to ventrotemporal object recognition regions (Livingstone and Hubel, 1987). Under this hypothesis, information reaching parietal cortex may influence behavior by shifting attention to the regions of space where this information is ‘leaking through’. A second possible route by which information from the suppressed eye could reach parietal regions is the subcortical pathway. This phylogenetically older pathway consists of the superior colliculus, the pulvinar nucleus of the thalamus and the amygdala, and is thought to process crude visual information quickly in order to activate a rapid response to threatening stimuli (for review, see Johnson, 2005). A final path by which visual information from a suppressed stimulus may influence the allocation of neural resources, and consequently behavior, is via integrative functions in the pulvinar nucleus of the thalamus. The pulvinar is a retinotopically organized thalamic nucleus with robust bidirectional connections to multiple cortical and subcortical regions (Sherman and Guillery, 2002; Shipp, 2003) and rudimentary visual abilities (Fischer and Whitney, 2009). Pulvinar–amygdala connections are thought to underlie increased amygdala activation to fearful stimuli in the absence of awareness. This is supported by our previous functional imaging work (Pasley et al., 2004), as well as by observations of patients with lesions to either the amygdala (Vuilleumier et al., 2004) or the pulvinar (Ward et al., 2006), who show impaired processing of social stimuli. 
Transient inactivation of the pulvinar leads to a spatial neglect syndrome in macaque monkeys, while lesions of the pulvinar in humans can lead to inabilities to filter out salient distractors (Snow et al., 2008; Wilke et al., 2010). On the basis of the connectivity of the human pulvinar, it may serve as one nexus to integrate signals from multiple regions (including the amygdala and insula in the current study), in order to generate signals regarding the biological relevance of the stimulus.

Further evidence that emotional stimuli can be processed without awareness comes from patients with partial cortical blindness, or ‘blindsight’. Despite lacking awareness, blindsight patients are nevertheless influenced by, and act on, stimuli within their blind hemifield. In a particularly informative study, blindsight patients were trained to associate faces with neutral expressions with a threatening sound prior to an fMRI experiment. When the conditioned visual stimulus was presented to the blind hemifield of these patients during scanning, activation in left parietal cortex was enhanced compared with unconditioned faces (Anders et al., 2004). The locus of the left parietal activation in the study by Anders and coworkers is very similar to the left parietal activation observed in the current design for suppressed fearful faces compared with houses. In another study, emotionally expressive faces presented in the blind hemifield of a blindsight patient increased amygdala activation (Morris et al., 2001). Such enhanced processing is therefore thought to involve engagement of the amygdala.

In a study using an active search paradigm, healthy participants were cued to spatial locations before searching for a tilted face within an array (Mohanty et al., 2009). The cues could be spatially and/or emotionally informative or uninformative. Spatially informative cues enhanced activation in the intraparietal sulcus, frontal eye fields and fusiform gyrus, as well as in superior parietal cortex and supplementary motor areas. Negative emotional cues activated the amygdala, insula and fusiform gyrus, as well as the orbitofrontal cortex, subcallosal gyrus and posterior cingulate. The authors concluded that active search for threatening stimuli may benefit from amygdala input to the spatial attention network, contributing to a salience map that combines the spatial coordinates of an event with its motivational relevance. We observed a very similar network of activation, but our participants performed an orthogonal task that did not involve active search for the stimuli. Thus, this is the first report of amygdala guidance of attention using an interocular suppression technique while participants are not engaged in active search for the stimulus. These results suggest that emotionally relevant stimuli may inform such a salience map even when they are not explicitly perceived and when participants are not actively searching for a motivationally relevant target.

A notable difference between the current findings and our prior study (Pasley et al., 2004) is the activation in higher-level visual regions for suppressed fearful faces relative to houses. One potential role of amygdala activation is to prime the computations of the fusiform face area (FFA), increasing the likelihood that visual representations with affective value reach awareness (Duncan and Barrett, 2007). The current results are consistent with this role for the amygdala. In addition, our prior design used a complex visual object task that may have produced a ceiling effect, limiting the detectable activation differences between subliminal object images. Using a language-based orthogonal task in the current design may therefore have allowed us to better detect signal in higher-level visual cortex evoked by the undetected images. Increased fusiform activation in the absence of awareness is consistent with work by Jiang and He (2006), who examined activation in regions of the face network (FFA and superior temporal sulcus) while face stimuli were rendered invisible using CFS. Bilateral FFA activation was measurable, albeit much reduced compared with the fusiform response to visible faces. However, Jiang and He used an explicit face search task, so the corresponding activations could reflect a search template, as merely searching for faces can activate ventral visual cortex (Peelen et al., 2009). Because participants in the current study were not searching for faces, our results are more consistent with an account in which the amygdala primes the fusiform gyrus.

Although parietal activation has previously been found in the absence of awareness, this is the first report of parietal activation in response to emotional, social stimuli (fearful faces) compared with non-emotive, non-social stimuli (houses). We interpret these findings from two potential perspectives: (i) an increased parietal response due to the link between fear and action (e.g. to mitigate potential personal harm), similar to the way tools are related to action; and (ii) an increased parietal response reflecting increased demands on attentional resources or altered spatial attention. The first possibility stems from previous CFS studies testing the hypothesis that information processing in parietal cortex and other dorsal stream regions is biased toward manipulable objects. One fMRI study examined categorical activation differences for CFS-suppressed tools compared with suppressed neutral faces and found greater activation in dorsal stream regions for tools (Fang and He, 2005). This dorsal stream activation was attributed to the importance of tools for reaching and grasping. In a behavioral priming study using CFS, unperceived category-congruent primes facilitated object categorization for man-made tools but not for animals (Almeida et al., 2008). Again, these findings were interpreted as a category-specific processing advantage for objects associated with grasping or manipulation (and thus an increased reliance on the dorsal stream), although recent work suggests that this effect extends to any elongated or manipulable shape (Sakuraba et al., 2012). Thus, prior work has attributed parietal activation to the manipulable, action-related nature of the objects under study. However, we report similar activation for objects classically associated with the ventral stream, namely faces.
From an evolutionary perspective, fear is closely linked to action, so fearful faces may engage a pathway similar to that engaged by non-consciously perceived tools, with parietal activation reflecting the launch of a motor preparation plan. On this view, emotionally laden information might also reach parietal cortex in order to serve action preparation. Because we used only fearful faces in the current design, we cannot determine whether this effect is due to the emotional or the social nature of these stimuli. Other studies have found faster breakthrough from suppression for fearful faces than for happy or neutral faces (Yang et al., 2007), suggesting a fearful face advantage. However, we cannot rule out that the current fMRI findings would generalize to other emotional or salient facial expressions.

An alternative interpretation within an attentional framework is that the increased parietal activation reflects altered attention due to increased effort devoted to the vowel/consonant detection task. Emotional stimuli could receive increased processing and act as distractors, creating competition for resources and thus requiring increased effort and attention to complete the language-based task presented to the dominant eye.

A related attentional possibility is that parietal cortex activation reflects altered spatial attention. More specifically, this region may contain a comprehensive priority map for target selection that integrates bottom–up demands on attention with top–down goals. Determining the most relevant stimuli in complex settings likely relies on the coordination of a distributed network of cortical and limbic regions involved in various aspects of perception. Consistent with this idea, recent work has focused on systems-based perspectives, reflecting limbic modulation of non-conscious vision when the content is emotional (Pessoa and Engelmann, 2010; Tamietto and De Gelder, 2010). Several studies have demonstrated the influence of arousal on visual attention. Adults showed increased spatial attention to pictures of food relative to tools only after food and water deprivation (Mohanty et al., 2008). Arousing erotic images rendered invisible with CFS can attract or repel observers’ attention, depending on the observers’ gender and sexual orientation (Jiang et al., 2006). Learned associations also influence visual attention: advantages in overcoming CFS-induced suppression have been demonstrated for fearful vs neutral faces (Yang et al., 2007) and for Chinese vs Hebrew characters in Chinese observers (Jiang et al., 2007). In a study pairing biological reward with line gratings suppressed from awareness using CFS, individuals were more accurate at discriminating gratings previously paired with water rewards, even when these were ‘unseen’ (Seitz et al., 2009). Thus, results from several lines of research implicate emotion, arousal or biological relevance in stimulus prioritization during breakthrough from interocular suppression.

To summarize, we found that suppressed fearful faces were associated with increased activation in the left parietal cortex, left amygdala and left fusiform gyrus, and with increased task-dependent correlations between the left amygdala and the pulvinar, insula, frontal eye fields, intraparietal sulcus and early visual cortex. This suggests that these regions evaluate visual stimuli despite a lack of explicit awareness. We interpret these correlations as amygdala-dependent modulation of a network of regions that evaluate pre-attentive stimulus value in order to prioritize locations for future target selection. Contributions from these regions may then be integrated via thalamocortical connections, with an overall salience value computed in parietal cortex. Once this information is integrated, the pulvinar has the anatomical connections necessary to generate a signal that reorients attention through gaze shifts generated by the intraparietal cortex and frontal eye fields.
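The task-dependent correlations summarized above are psychophysiological interaction (PPI) effects (Friston et al., 1997). As a rough illustration of the general PPI logic, and not the pipeline used in this study, the sketch below regresses a target region's time course on a seed time course (standing in for the left amygdala), a psychological regressor coding fearful-face blocks as +1 and house blocks as -1, and their product. The coefficient on the product term estimates how much seed-target coupling changes between conditions over and above both main effects. The function names (`ols`, `ppi_beta`), the 20-scan block length and the synthetic data are hypothetical, and the sketch deliberately omits steps a real analysis would include, such as haemodynamic modelling, deconvolution of the seed signal to an estimated neural time course, and nuisance regressors. It uses plain Python to stay dependency-free; in practice a library least-squares solver would be used.

```python
import random


def ols(columns, y):
    """Ordinary least squares: solve (X'X) b = X'y by Gauss-Jordan elimination.

    `columns` is a list of regressors, each a list of floats (one value per scan).
    """
    k = len(columns)
    # Augmented normal-equation matrix [X'X | X'y].
    m = [
        [sum(a * b for a, b in zip(columns[i], columns[j])) for j in range(k)]
        + [sum(a * b for a, b in zip(columns[i], y))]
        for i in range(k)
    ]
    for c in range(k):
        # Partial pivoting: bring the largest remaining entry in column c to row c.
        p = max(range(c, k), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        # Eliminate column c from every other row.
        for r in range(k):
            if r != c:
                f = m[r][c] / m[c][c]
                m[r] = [a - f * b for a, b in zip(m[r], m[c])]
    return [m[i][k] / m[i][i] for i in range(k)]


def ppi_beta(seed, condition, target):
    """Return the PPI (interaction) coefficient for one target time course.

    Hypothetical, simplified sketch: no HRF convolution or seed deconvolution.
    seed:      seed-region time course (e.g. left amygdala)
    condition: psychological regressor, +1 = fearful-face block, -1 = house block
    target:    time course of a target region (e.g. pulvinar)
    """
    n = len(seed)
    mean_seed = sum(seed) / n
    physio = [s - mean_seed for s in seed]                    # mean-centred seed signal
    interaction = [s * c for s, c in zip(physio, condition)]  # PPI regressor
    intercept = [1.0] * n
    betas = ols([intercept, physio, condition, interaction], target)
    return betas[3]  # change in seed-target coupling between conditions


if __name__ == "__main__":
    random.seed(0)
    n_scans = 200
    seed = [random.gauss(0.0, 1.0) for _ in range(n_scans)]
    # Alternate 20-scan blocks: fearful faces (+1), houses (-1).
    condition = [1.0 if (t // 20) % 2 == 0 else -1.0 for t in range(n_scans)]
    # Synthetic target: coupling slope 1.0 during faces, 0.2 during houses.
    target = [s * (0.6 + 0.4 * c) for s, c in zip(seed, condition)]
    print(round(ppi_beta(seed, condition, target), 3))  # prints 0.4
```

Because the condition is coded as +1/-1, the interaction coefficient recovers half the difference between the two coupling slopes ((1.0 - 0.2) / 2 = 0.4), while a target coupled equally to the seed in both conditions would yield an interaction coefficient near zero.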

SUPPLEMENTARY DATA

Supplementary data are available at SCAN online.

Acknowledgements

We would like to thank Caitlin Connors and Carley Piatt for assistance in design and preliminary analyses. This work was supported by a National Institutes of Health grant to R.T.S. (5R01MH073084), and V.T. is funded by a National Science Foundation Graduate Fellowship.

REFERENCES

- Adolphs R. Neural mechanisms for recognizing emotion. Current Opinion in Neurobiology. 2002;12:169–78. doi: 10.1016/s0959-4388(02)00301-x.

- Adolphs R. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- Adolphs R. Fear, faces, and the human amygdala. Current Opinion in Neurobiology. 2008;18:166–72. doi: 10.1016/j.conb.2008.06.006.

- Adolphs R. What does the amygdala contribute to social cognition? Annals of the New York Academy of Sciences. 2010;1191:42–61. doi: 10.1111/j.1749-6632.2010.05445.x.

- Almeida J, Mahon BZ, Nakayama K, Caramazza A. Unconscious processing dissociates along categorical lines. Proceedings of the National Academy of Sciences. 2008;105(39):15214–8. doi: 10.1073/pnas.0805867105.

- Anders S, Birbaumer N, Sadowski B, et al. Parietal somatosensory association cortex mediates affective blindsight. Nature Neuroscience. 2004;7:339–40. doi: 10.1038/nn1213.

- Anderson AK, Christoff K, Panitz DA, De Rosa E, Gabrieli JDE. Neural correlates of the automatic processing of threat facial signals. Journal of Neuroscience. 2003;23:5627–33. doi: 10.1523/JNEUROSCI.23-13-05627.2003.

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305–9. doi: 10.1038/35077083.

- Crick F, Koch C. Towards a neurobiological theory of consciousness. Seminars in the Neurosciences. 1990;2:263–75.

- Davis M, Whalen PJ. The amygdala: vigilance and emotion. Molecular Psychiatry. 2001;6:13–34. doi: 10.1038/sj.mp.4000812.

- Duncan S, Barrett LF. Affect is a form of cognition: a neurobiological analysis. Cognition and Emotion. 2007;21:1184–211. doi: 10.1080/02699930701437931.

- Ekman P, Friesen WV. Constants across cultures in the face and emotion. Journal of Personality and Social Psychology. 1971;17:124–9. doi: 10.1037/h0030377.

- Ekman P, Friesen WV. Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Fang F, He S. Cortical responses to invisible objects in the human dorsal and ventral pathways. Nature Neuroscience. 2005;8(10):1380–5. doi: 10.1038/nn1537.

- Fischer J, Whitney D. Precise discrimination of object position in the human pulvinar. Human Brain Mapping. 2009;30(1):101–11. doi: 10.1002/hbm.20485.

- Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ. Psychophysiological and modulatory interactions in neuroimaging. Neuroimage. 1997;6:218–29. doi: 10.1006/nimg.1997.0291.

- Glascher J, Adolphs R. Processing of the arousal of subliminal and supraliminal stimuli by the human amygdala. Journal of Neuroscience. 2003;23(32):10274–82. doi: 10.1523/JNEUROSCI.23-32-10274.2003.

- Jiang Y, Costello P, Fang F, Huang M, He S. A gender- and sexual orientation-dependent spatial attention effect of invisible images. PNAS. 2006;103(45):17048–52. doi: 10.1073/pnas.0605678103.

- Jiang Y, Costello P, He S. Processing of invisible stimuli: advantage of upright faces and recognizable words in overcoming interocular suppression. Psychological Science. 2007;18(4):349–55. doi: 10.1111/j.1467-9280.2007.01902.x.

- Jiang Y, He S. Cortical responses to invisible faces: dissociating subsystems for facial-information processing. Current Biology. 2006;16:2023–9. doi: 10.1016/j.cub.2006.08.084.

- Johnson MH. Subcortical face processing. Nature Reviews Neuroscience. 2005;6:766–74. doi: 10.1038/nrn1766.

- Kennedy DP, Adolphs R. Impaired fixation to eyes following amygdala damage arises from abnormal bottom-up attention. Neuropsychologia. 2010;48(12):3392–8. doi: 10.1016/j.neuropsychologia.2010.06.025.

- Kihlstrom JF, Barnhardt TM, Tataryn DJ. Implicit perception. In: Bornstein RF, Pittman TS, editors. Perception Without Awareness: Cognitive, Clinical, and Social Perspectives. New York: Guilford; 1992. pp. 17–54.

- LeDoux JE. The Emotional Brain. New York: Simon and Schuster; 1996.

- Livingstone MS, Hubel DH. Psychophysical evidence for separate channels for the perception of form, color, movement, and depth. Journal of Neuroscience. 1987;7:3416–68. doi: 10.1523/JNEUROSCI.07-11-03416.1987.

- Mack A, Rock I. Inattentional Blindness. Cambridge, MA: MIT Press; 1998.

- Miles WR. Ocular dominance demonstrated by unconscious sighting. Journal of Experimental Psychology. 1929;12:113–26.

- Miles WR. Ocular dominance in human adults. Journal of General Psychology. 1930;3:412–29.

- Mohanty A, Egner T, Monti JM, Mesulam MM. Search for a threatening target triggers limbic guidance of spatial attention. Journal of Neuroscience. 2009;29(34):10563–72. doi: 10.1523/JNEUROSCI.1170-09.2009.

- Mohanty A, Gitelman DR, Small DM, Mesulam MM. The spatial attention network interacts with limbic and monoaminergic systems to modulate motivation-induced attention shifts. Cerebral Cortex. 2008;18:2604–13. doi: 10.1093/cercor/bhn021.

- Morris JS, de Gelder B, Weiskrantz L, Dolan RJ. Differential extrageniculostriate and amygdala responses to presentation of emotional faces in a cortically blind field. Brain. 2001;124:1241–52. doi: 10.1093/brain/124.6.1241.

- Morris JS, Frith CD, Perrett DI, et al. A differential neural response in the human amygdala to fearful and happy facial expressions. Nature. 1996;383:812–5. doi: 10.1038/383812a0.

- Ohman A, Flykt A, Lundqvist D. Unconscious emotion: evolutionary perspectives, psychophysiological data, and neuropsychological mechanisms. In: Lane R, Nadel L, editors. The Cognitive Neuroscience of Emotion. New York: Oxford University Press; 2000. pp. 296–327.

- Pasley BN, Mayes LC, Schultz RT. Subcortical discrimination of unperceived objects during binocular rivalry. Neuron. 2004;42:163–72. doi: 10.1016/s0896-6273(04)00155-2.

- Peelen MV, Fei-Fei L, Kastner S. Neural mechanisms of rapid natural scene categorization in human visual cortex. Nature. 2009;460(7251):94–7. doi: 10.1038/nature08103.

- Pessoa L. Emotion and cognition and the amygdala: from ‘what is it?’ to ‘what’s to be done?’. Neuropsychologia. 2010;48:3416–29. doi: 10.1016/j.neuropsychologia.2010.06.038.

- Pessoa L, Adolphs R. Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nature Reviews Neuroscience. 2010;11:773–83. doi: 10.1038/nrn2920.

- Pessoa L, Engelmann JB. Embedding reward signals into perception and cognition. Frontiers in Neuroscience. 2010;4(17):1–8. doi: 10.3389/fnins.2010.00017.

- Pessoa L, Japee S, Ungerleider LG. Visual awareness and the detection of fearful faces. Emotion. 2005;5:243–7. doi: 10.1037/1528-3542.5.2.243.

- Phelps EA, LeDoux JE. Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron. 2005;48:175–87. doi: 10.1016/j.neuron.2005.09.025.

- Polonsky A, Blake R, Braun J, Heeger DJ. Neuronal activity in human primary visual cortex correlates with perception during binocular rivalry. Nature Neuroscience. 2000;3:1153–9. doi: 10.1038/80676.

- Sakuraba S, Sakai S, Yamanaka M, Yokosawa K, Hirayama K. Does the human dorsal stream really process a category for tools? Journal of Neuroscience. 2012;32:3949–53. doi: 10.1523/JNEUROSCI.3973-11.2012.

- Seitz AR, Kim D, Watanabe T. Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron. 2009;61:700–7. doi: 10.1016/j.neuron.2009.01.016.

- Sherman SM, Guillery RW. The role of thalamus in the flow of information to cortex. Philosophical Transactions of the Royal Society of London Series B: Biological Sciences. 2002;357:1695–708. doi: 10.1098/rstb.2002.1161.

- Shipp S. The functional logic of cortico-pulvinar connections. Philosophical Transactions of the Royal Society of London Series B: Biological Sciences. 2003;358:1605–24. doi: 10.1098/rstb.2002.1213.

- Smith SM, Nichols TE. Threshold-free cluster enhancement: addressing problems of smoothing, threshold dependence and localisation in cluster inference. Neuroimage. 2009;44:83–98. doi: 10.1016/j.neuroimage.2008.03.061.

- Snow JC, Allen HA, Rafal RD, Humphreys GW. Impaired attentional selection following lesions to the human pulvinar: evidence for homology between human and monkey. PNAS. 2008;108(25):4054–9. doi: 10.1073/pnas.0810086106.

- Stein T, Senju A, Peelen MV, Sterzer P. Eye contact facilitates awareness of faces during interocular suppression. Cognition. 2011;119:307–11. doi: 10.1016/j.cognition.2011.01.008.

- Tamietto M, de Gelder B. Neural bases of the non-conscious perception of emotional signals. Nature Reviews Neuroscience. 2010;11:697–709. doi: 10.1038/nrn2889.

- Tong F, Nakayama K, Vaughan JT, Kanwisher N. Binocular rivalry and visual awareness in human extrastriate cortex. Neuron. 1998;21:753–9. doi: 10.1016/s0896-6273(00)80592-9.

- Tsuchiya N, Koch C. Continuous flash suppression reduces negative afterimages. Nature Neuroscience. 2005;8(8):1096–101. doi: 10.1038/nn1500.

- Tsuchiya N, Moradi F, Felsen C, Yamazaki M, Adolphs R. Intact rapid detection of fearful faces in the absence of the amygdala. Nature Neuroscience. 2009;12(10):1224–5. doi: 10.1038/nn.2380.

- Vuilleumier P. Staring fear in the face. Nature. 2005;433:22–3. doi: 10.1038/433022a.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience. 2004;7(11):1271–8. doi: 10.1038/nn1341.

- Vuilleumier P, Schwartz S. Beware and be aware: capture of spatial attention by fear-related stimuli in neglect. NeuroReport. 2001;12:1119–22. doi: 10.1097/00001756-200105080-00014.

- Wager TD, Phan KL, Liberzon I, Taylor SF. Valence, gender, and lateralization of functional brain anatomy in emotion: a meta-analysis of findings from neuroimaging. Neuroimage. 2003;19:513–31. doi: 10.1016/s1053-8119(03)00078-8.

- Ward R, Calder AJ, Parker M, Arend I. Emotion recognition following human pulvinar damage. Neuropsychologia. 2006;45(8):1973–8. doi: 10.1016/j.neuropsychologia.2006.09.017.

- Whalen PJ, Kagan J, Cook RG, et al. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. Journal of Neuroscience. 1998;18:411–8. doi: 10.1523/JNEUROSCI.18-01-00411.1998.

- Wilke M, Turchi J, Smith K, Mishkin M, Leopold DA. Pulvinar inactivation disrupts selection of movement plans. Journal of Neuroscience. 2010;30(25):8650–9. doi: 10.1523/JNEUROSCI.0953-10.2010.

- Yang E, Zald DH, Blake R. Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion. 2007;7(4):882–6. doi: 10.1037/1528-3542.7.4.882.
