Abstract

Emotion recognition problems are frequently reported in individuals with an autism spectrum disorder (ASD). However, this research area is characterized by inconsistent findings, and atypical emotion processing strategies may contribute to these contradictions. In addition, an attenuated saliency of the eyes region is often demonstrated in ASD during face identity processing. We wanted to compare reliance on mouth versus eyes information in children with and without ASD, using hybrid facial expressions. A group of six-to-eight-year-old boys with ASD and an age- and intelligence-matched typically developing (TD) group, all without intellectual disability, performed an emotion labeling task with hybrid facial expressions. Five static expressions were used: one neutral expression and four emotional expressions, namely, anger, fear, happiness, and sadness. Hybrid faces were created, consisting of an emotional face half (upper or lower face region) with the other face half showing a neutral expression. Results showed no emotion recognition problem in ASD. Moreover, we provided evidence for the existence of top- and bottom-emotions in children: correct identification of expressions mainly depends on information in the eyes (so-called top-emotions: sadness, anger, and fear) or in the mouth region (so-called bottom-emotions: happiness). No stronger reliance on mouth information was found in children with ASD.

1. Introduction

Facial expressions inform us about the feelings and state of mind of others and enable us to adjust our behavior and to react appropriately. Therefore, the ability to interpret facial expressions accurately and to derive socially relevant information from them is considered a fundamental requirement for typical reciprocal social interactions and communication [1].

Autism spectrum disorders (ASD) are pervasive developmental disorders characterized by quantitative and qualitative deficits in reciprocal social interactions and communication and by the presence of restricted and repetitive behavior patterns, interests, and activities [2]. Difficulties in recognizing, identifying, and understanding the meaning of emotions are often considered one of the hallmarks of the social problems in ASD. Different procedures have been used to examine emotion processing abilities in children and adults with ASD, with or without intellectual disability: sorting, (cross-modal) matching, and labeling tasks (for a literature review and a meta-analysis on this topic, see [3, 4], resp.). Each of these procedures has revealed problems with affect processing in individuals with ASD (e.g., [5–8]). Other studies, however, failed to find atypical emotion recognition skills in individuals with ASD (e.g., [9–13]). These inconsistencies (for an overview, see [3]) may be due to differences in sample and participant characteristics, task demands [14], and stimuli. Performance of individuals with ASD seems to be especially impaired for negative, more subtle, or more complex emotions (e.g., [7, 15, 16]) or for expressions embedded in a social context [17, 18]. It is also important to note that intact performance does not exclude the use of atypical emotion processing strategies, whether more cognition- or language-mediated or more feature-based. Such strategies may lead to impairments in some emotion recognition tasks but camouflage these deficits in others, and they are therefore often referred to as compensation mechanisms [3, 11, 19].

Interestingly, face identity research has provided evidence for atypical face processing strategies in individuals with ASD (for a review, see [20]). People with ASD show an attenuated reliance on the eyes region: compared to neurotypical individuals, they attend less to the upper face part, and the lower face instead seems to be more salient. This was found in face identification tasks with familiar [21] and unfamiliar faces (e.g., [22, 23]) and in eye-movement studies using neutral faces or faces embedded in a social context (e.g., [24, 25]). This atypical attention pattern, with an increased “mouth bias” in ASD, has been interpreted as a specific expertise for mouth regions [26], as a (language- and cognition-mediated) compensation strategy [27], or even as an active aversion to the quickly and unpredictably moving eyes [28]. However, not all studies found differences in gaze patterns between children with and without ASD (e.g., [29, 30]). Here too, the inconsistencies are thought to stem from differences in samples, tasks, and stimuli [3].

The above-mentioned studies concern facial identity processing. It is sometimes suggested that face identity recognition (which is based on invariant face properties) and face expression recognition (which is based on changeable aspects of a face) can be dissociated at a neural and theoretical level [31, 32]. According to this view, findings about face identity processing will not necessarily generalize to the domain of emotion perception (see also findings on patients with amygdalectomy [33] or acquired prosopagnosia [34]). The aim of the present study is to examine whether the atypical saliency of upper versus lower face area also holds for emotion perception in children with ASD.

Attending to a narrow range of specific features in the face (e.g., the mouth region) when judging facial affect could partly explain the difficulties that children with ASD experience in reading emotional expressions. Some evidence for increased reliance on mouth information and less sensitivity to the eyes has already been found ([16, 27, 35, 36], but also see, e.g., [37]). Such an atypical attention pattern would be expected to affect emotion recognition most strongly for emotions in which information from the eyes is most crucial. Research in typical individuals has provided evidence for a distinction between top-emotions (most salient information in the eyes region) and bottom-emotions (most salient information in the mouth region). Behavioral research in adults demonstrated that the recognition of sadness, anger, and fear mostly relies on information in the eyes region, whereas the recognition of happiness and disgust relies on information in the mouth region ([38], Experiment 1). More recently, eye-movement studies also demonstrated that the most characteristic face region (top or bottom) depends on the nature of the emotional expression [39–41].

Using an emotion labeling task with hybrid stimuli, we wanted to compare the reliance on eyes versus mouth information in children with and without ASD when recognizing emotional expressions. To this end, the original expressions of anger, fear, happiness, and sadness and neutral expressions were shown together with their hybrid counterparts. The hybrid facial expressions used here are computer-morphed emotional expressions in which the upper (or lower) face half is replaced by a neutral expression, such that emotional information is only available in the mouth (or eyes, resp.) region (see Figure 1 for an example). The generation of hybrid facial stimuli is a rather novel technique with some significant benefits in comparison to eye-movement studies. Whereas both methods aim at evaluating automatic and implicit emotion recognition strategies, the most salient advantage of hybrid stimuli is a practical one. No expensive eye-tracking setup is required, which makes the experimental design easier to implement and significantly reduces the burden on participants. The data obtained in an eye-movement experiment are clearly richer, yet much more difficult and less clear-cut to process. In addition to these pragmatic advantages, an important methodological difference is that hybrid expressions actively manipulate the available information. Full facial expressions allow participants to scan the whole picture freely. This is impossible with hybrid expressions, simply because not all emotional information is available. Clearly, both paradigms offer a slightly different perspective on the saliency of upper versus lower facial areas. Eye-movement studies indeed provide a stronger indication of spontaneous viewing strategies, but participants can adapt their viewing style, so that spontaneous and adapted strategies are impossible to disentangle.
Hybrid facial expressions, on the other hand, offer the opportunity to evaluate the reliance on a specific face half when recognizing emotions, by comparing performance on full expressions and their hybrid counterparts. Studies using face parts, such as the part-whole paradigm or composite face illusions, also actively intervene in the available information. However, the lack of naturalness is a major disadvantage of face parts: the processing of such nonfacial and artificial stimuli might be unnatural in itself and tell us little about (full) face perception.

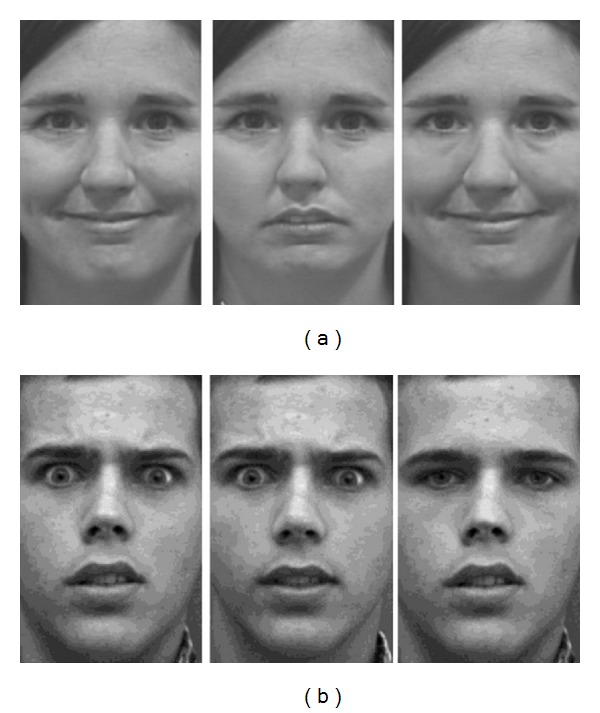

Figure 1.

Representative sample stimulus of bottom-emotion happiness (a) and top-emotion fear (b), from left to right: original expression, hybrid expression with neutral mouth, and hybrid expression with neutral eyes (the pictures used in our paper are part of the California Facial Expressions database, which is freely available online for research purposes. For more information regarding this database, we refer to its website: http://cseweb.ucsd.edu/users/gary/CAFE/readme.txt).

This study had two goals. First, we wanted to evaluate whether children with ASD would experience difficulties when labeling four basic emotional expressions and a neutral expression. Second, we aimed to compare eyes versus mouth reliance between children with and without ASD. If typically developing children mostly relied on information in the eyes region, they were expected to experience more difficulties recognizing hybrid expressions with neutral eyes, especially for emotions with more characteristic features in the eyes region (top-emotions), such as fear, anger, and sadness. An attenuated bias for the eyes region in the ASD group, or an increased reliance on the mouth area, would result in the reverse pattern: the ASD sample would then be most impaired when recognizing hybrid expressions with neutral mouths, especially for bottom-emotions with more salient features in the mouth area, such as happiness.

2. Methods

2.1. Participants

Two groups of six-to-eight-year-old boys without an intellectual disability (IQ ≥ 70) participated in this study: an experimental group and a matched comparison group. The experimental group consisted of 22 boys with ASD, recruited through the child psychiatry unit at the university hospital (n = 16) and through a special needs school for children with ASD (n = 6). All had received a formal clinical diagnosis of ASD according to DSM-IV-TR criteria [2], based on a comprehensive assessment by a multidisciplinary team (first subgroup) or by a medical specialist (second subgroup). Clinical diagnoses were confirmed within the research protocol using the Autism Diagnostic Interview-Revised (ADI-R; [42]) and by file review by a child psychiatrist experienced in ASD. Whereas all diagnoses were confirmed by file review, all but two participants scored above cut-off on both the reciprocal social interaction and the language/communication domains of the ADI-R. Four additional participants did not reach the cut-off on the restricted and repetitive behaviors domain of the ADI-R. In the ASD group, full-scale IQ was measured within the recent clinical diagnostic protocol using the Wechsler Intelligence Scale for Children (WISC-III-R; [43]) or the Snijders-Oomen Non-Verbal Intelligence Test (SON-R; [44]).

The comparison group comprised 22 typically developing boys (TD group), representative of the general population and recruited through two mainstream schools. Within the experimental protocol, full-scale IQ was estimated with an abbreviated version of the WISC-III-R [45] comprising four subtests (vocabulary, similarities, picture arrangement, and block design). The ASD and TD groups were matched groupwise on age and full-scale IQ (for detailed participant characteristics and group comparisons, see Table 1).

Table 1.

Demographic characteristics of both participant groups: means (M) and standard deviations (SD) for age (expressed in months), full-scale IQ, and ADI-R scores. Group differences in age or IQ were evaluated with independent samples t-tests (homoscedasticity assumption checked with F-test).

| | ASD group (n = 22): M (SD) | ASD group: range | Comparison group (n = 22): M (SD) | Comparison group: range | Two-sided t-test (t) | P value |
|---|---|---|---|---|---|---|
| Age (months) | 92.14 (10.54) | 75–106 | 95.36 (8.16) | 79–107 | 1.11 | .2483 |
| Full-scale IQ | 94.36 (11.93) | 78–119 | 98.50 (7.78) | 84–108 | 1.39 | .1816 |
| ADI-R scores | 44.50 (12.59) | 15–65 | N/A | N/A | N/A | N/A |
| ADI-R: communication | 15.68 (5.27) | 3–24 | | | | |
| ADI-R: social | 20.45 (5.63) | 8–28 | | | | |
| ADI-R: repetitive behaviors | 5.41 (2.65) | 1–9 | | | | |

2.2. Materials

Black-and-white photographs of twelve adult faces (seven males and five females) were selected from the California Facial Expressions database [46]. These stimuli were posed according to the Facial Action Coding System (FACS; [47]) and were normalized for the location of eyes and mouth. Hybrid facial expressions were created with Adobe Photoshop. In a hybrid expression, the upper (or lower) face part is replaced by the neutral upper (or lower) face of the same actor. For every emotion-identity combination, two types of hybrid expressions were made: one in which the upper part was replaced by a neutral expression (“hybrid with neutral eyes”) and one in which the lower part was replaced by a neutral expression (“hybrid with neutral mouth”). The clone sample tool was used as a smoothing tool to mask the line between both face halves (see Figure 1 for an example).
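The hybrids in this study were made by hand in Photoshop. Purely to make the construction concrete, the following is a minimal programmatic sketch of the same idea, assuming two photographs of one actor that are already aligned (as the normalized eye and mouth positions suggest). The function name `make_hybrid`, the list-of-rows image format, the `split_row` parameter, and the linear cross-fade used to soften the seam are all hypothetical choices, not the authors' procedure.

```python
from typing import List

# A grayscale image as a list of rows of pixel intensities (hypothetical format).
Image = List[List[float]]


def make_hybrid(emotional: Image, neutral: Image, split_row: int,
                neutral_part: str = "eyes", blend_rows: int = 4) -> Image:
    """Combine two aligned photographs of the same actor into a hybrid face.

    neutral_part="eyes":  upper half from the neutral face ("hybrid with neutral eyes").
    neutral_part="mouth": lower half from the neutral face ("hybrid with neutral mouth").
    Rows near split_row are linearly cross-faded (a stand-in for manual retouching).
    """
    if len(emotional) != len(neutral) or any(
        len(e) != len(n) for e, n in zip(emotional, neutral)
    ):
        raise ValueError("images must be aligned and have identical dimensions")
    if blend_rows < 1:
        raise ValueError("blend_rows must be at least 1")
    if neutral_part == "eyes":
        top, bottom = neutral, emotional
    elif neutral_part == "mouth":
        top, bottom = emotional, neutral
    else:
        raise ValueError("neutral_part must be 'eyes' or 'mouth'")

    hybrid: Image = []
    for row, (top_row, bottom_row) in enumerate(zip(top, bottom)):
        # Weight of the lower image: 0 above the seam, 1 below it, linear in between.
        w = (row - (split_row - blend_rows / 2)) / blend_rows
        w = min(1.0, max(0.0, w))
        hybrid.append([(1.0 - w) * t + w * b for t, b in zip(top_row, bottom_row)])
    return hybrid


# Usage with tiny synthetic "images" (10 rows x 3 columns).
emotional_face = [[200.0] * 3 for _ in range(10)]
neutral_face = [[50.0] * 3 for _ in range(10)]
neutral_eyes = make_hybrid(emotional_face, neutral_face, split_row=5, blend_rows=2)
neutral_mouth = make_hybrid(emotional_face, neutral_face, split_row=5,
                            neutral_part="mouth", blend_rows=2)
```

Rows well above the seam come entirely from one photograph and rows well below it from the other; only the few rows around `split_row` are mixed.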

Five different facial expressions were used: one neutral expression and four emotional expressions (happiness, sadness, anger, and fear). Based on a pilot study (with six TD children and eight TD adults), the three most recognizable of the four emotions were selected for every actor, such that in total all emotional expressions were presented equally often (i.e., each of the four emotions was selected for nine of the twelve actors and thus presented nine times as an original expression).

2.3. Procedure and Design

Approval by the Medical Ethical Committee of the University Hospital was obtained, and the participants' parents gave written informed consent before the start of the study. All participants were tested individually in a quiet room. Stimuli were presented within the E-prime environment on a portable computer with a 38 cm screen, at a viewing distance of approximately 76 cm. The experimental procedure followed a step-by-step protocol, including a phase in which participants were familiarized with the different response alternatives and their understanding of the emotion labels was checked by asking them for an example situation for each emotion. If participants could not provide a correct example situation, the experimenter presented a standard example, as outlined in the protocol. Children were instructed to label the facial expressions. All participants understood the task and the emotion labels. Each trial consisted of a face stimulus (presented on the left side of the screen) and the response alternatives (presented on the right side). Participants responded verbally or by pointing to the label on the screen, and the experimenter always entered the response. Between two trials, a blank screen with a fixation cross was presented for one second. Face stimuli were presented with unlimited presentation time.

In total, the experiment consisted of 120 trials, so that each identity was presented 10 times: 12 neutral facial expressions (1 per identity) + 36 original emotional expressions (3 emotions × 12 identities) + 72 hybrid emotional expressions (3 emotions × 12 identities × 2 hybrid types, namely, neutral mouth and neutral eyes). Trial order was randomized.
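The trial arithmetic can be checked with a small sketch that builds the full list. The study chose each actor's three emotions from pilot recognition rates; since those choices are not reported here, this sketch substitutes a hypothetical rotation (actor i drops emotion i mod 4), which also gives each emotion to nine actors. The names `build_trials`, `EMOTIONS`, and `VERSIONS` are illustrative only.

```python
import random
from itertools import product
from typing import List, Optional, Tuple

EMOTIONS = ["anger", "fear", "happiness", "sadness"]
VERSIONS = ["original", "hybrid_neutral_eyes", "hybrid_neutral_mouth"]
Trial = Tuple[int, str, str]  # (actor index, expression, version)


def build_trials(n_actors: int = 12, seed: Optional[int] = None) -> List[Trial]:
    trials: List[Trial] = []
    for actor in range(n_actors):
        # One neutral photograph per actor; it has no hybrid counterpart.
        trials.append((actor, "neutral", "original"))
        # Hypothetical rotation: actor i drops emotion i % 4, so with 12 actors
        # each emotion is kept for 9 actors (the study used pilot recognition rates).
        dropped = EMOTIONS[actor % len(EMOTIONS)]
        kept = [e for e in EMOTIONS if e != dropped]
        # Each kept emotion appears as the original plus two hybrid types.
        for emotion, version in product(kept, VERSIONS):
            trials.append((actor, emotion, version))
    random.Random(seed).shuffle(trials)  # randomized trial order
    return trials


trials = build_trials(seed=2024)
```

Each actor contributes 1 + 3 × 3 = 10 trials, giving 12 × 10 = 120 in total, of which 72 are hybrids.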

2.4. Data and Data Analysis

All analyses were performed with SAS. Split-plot ANOVAs were conducted on accuracy scores. Accuracy data for the original expressions were analyzed separately, with group (ASD-TD group) as between-subjects factor and expression (neutral-happiness-sadness-anger-fear) as within-subjects factor. In a second analysis, all versions, the hybrid and the original expressions, were analyzed, with the neutral expressions being omitted from these analyses because they had no hybrid counterpart. Again, a split-plot ANOVA with group (ASD-TD group) as between-subjects factor and emotional expression (happiness-sadness-anger-fear) and hybrid type (hybrid neutral mouth-hybrid neutral eyes-original emotional expression) as within-subjects factors was conducted for accuracy data. A significance level of P < .05 (two-sided) was adopted, and effects with a P value between .05 and .10 were indicated as marginally significant.
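As a bookkeeping check on the design described above (44 children in 2 groups), the degrees of freedom reported in the Results follow from the standard split-plot rules. For a within-subjects effect, df = (product of (levels − 1)), tested against that value × (N − groups). The sketch below is an illustrative helper, not the SAS procedure that was used. It assumes no sphericity correction, which matches the integer df reported, and it computes df only, not F or P values.

```python
from itertools import combinations
from typing import Dict, Tuple


def split_plot_df(n_subjects: int, n_groups: int,
                  within_levels: Dict[str, int]) -> Dict[str, Tuple[int, int]]:
    """(numerator df, denominator df) for each effect of a split-plot ANOVA
    with one between-subjects factor and fully crossed within-subjects factors,
    without sphericity corrections."""
    error_between = n_subjects - n_groups  # subjects within groups
    dfs = {"group": (n_groups - 1, error_between)}
    names = list(within_levels)
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            effect = 1
            for name in combo:
                effect *= within_levels[name] - 1
            # Within-subjects effects are tested against effect x subjects-within-groups.
            error = effect * error_between
            label = " x ".join(combo)
            dfs[label] = (effect, error)
            dfs["group x " + label] = ((n_groups - 1) * effect, error)
    return dfs


original_only = split_plot_df(44, 2, {"expression": 5})
full_design = split_plot_df(44, 2, {"emotion": 4, "hybrid type": 3})
```

For the first analysis this gives F(4,168) for expression and F(1,42) for group. For the second it gives F(3,126), F(2,84), and F(6,252), as reported.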

All analyses performed on the whole ASD group (n = 22) yielded results similar to those of analyses restricted to the ASD participants who scored above cut-off on the social and communication domains of the ADI-R (n = 20), or to those who scored above cut-off on all ADI-R domains (n = 16). Therefore, we report the results of the analyses on the whole group.

3. Results

3.1. Recognition of the Original Expressions and Relationship with Age, Full-Scale IQ, or ASD Severity

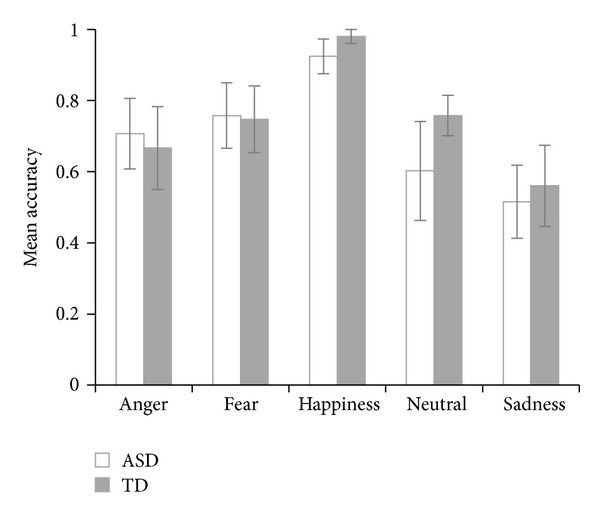

Results (see Table 2) indicated a main effect of expression (F(4,168) = 27.28, P < .0001, see Figure 2). Post hoc Tukey-Kramer tests (α = .05) revealed that sadness (M = .54) was recognized less accurately overall than all other expressions, and happiness (M = .95) more accurately overall than all other expressions. We found no evidence for a main effect of participant group (F(1,42) = 1.14, P = .29), and the participant group-by-expression interaction was not significant either (F(4,168) = 1.69, P = .15).

Table 2.

Accurate labeling of the expressions for both participant groups: mean accuracy and standard deviation (between brackets).

| Expression | ASD | TD |
|---|---|---|
| Anger | .71 (.22) | .67 (.26) |
| Fear | .76 (.21) | .75 (.21) |
| Happiness | .92 (.11) | .98 (.04) |
| Neutral | .60 (.31) | .76 (.13) |
| Sadness | .52 (.23) | .56 (.26) |

Figure 2.

Mean performances for both participant groups on the different original expressions (error bars represent 95% confidence intervals of the means).

No evidence for a correlation between emotion recognition and age, intelligence, or ASD traits (measured with the ADI-R) was found (all correlations with P values > .10).
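Both groups contain 22 children, so the overall means reported above (M = .54 for sadness, M = .95 for happiness) can be recovered from Table 2 as unweighted averages of the two group means. The sketch below shows only this descriptive step. The post hoc comparisons themselves were Tukey-Kramer tests, which need the raw data. `TABLE_2` and `pooled_means` are illustrative names.

```python
from typing import Dict, Tuple

# Group means copied from Table 2: expression -> (ASD, TD).
TABLE_2: Dict[str, Tuple[float, float]] = {
    "anger": (0.71, 0.67),
    "fear": (0.76, 0.75),
    "happiness": (0.92, 0.98),
    "neutral": (0.60, 0.76),
    "sadness": (0.52, 0.56),
}


def pooled_means(table: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Mean accuracy across both groups. With equal group sizes (22 and 22)
    this equals the unweighted average of the two group means."""
    return {expr: round(sum(m) / len(m), 3) for expr, m in table.items()}


overall = pooled_means(TABLE_2)
ranking = sorted(overall, key=overall.get)  # lowest to highest accuracy
```

With unequal group sizes, the group means would have to be weighted by n instead.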

3.2. Which Face Region Is Used to Recognize Emotional Expressions: Mouth or Eyes?

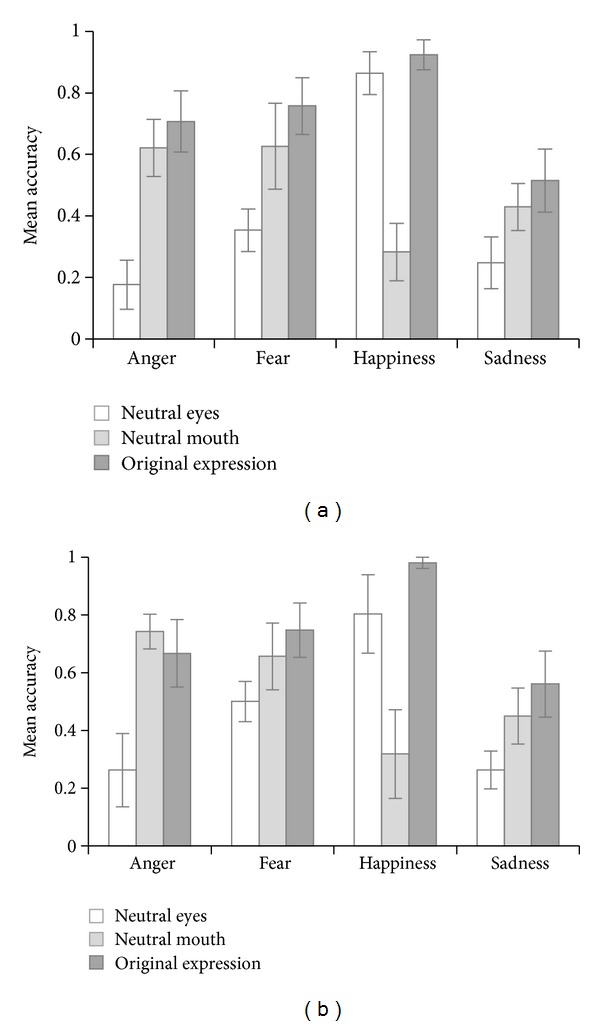

Again, sadness was recognized less accurately (across groups and hybrid types) than the other expressions, and happiness was the best recognized emotion (F(3,126) = 34.35, P < .0001; post hoc Tukey-Kramer tests). We also found evidence for a main effect of hybrid type (F(2,84) = 78.14, P < .0001): original stimuli were recognized most accurately, and hybrid expressions with neutral eyes least accurately. Interestingly, the interaction between emotion and hybrid type was highly significant (F(6,252) = 57.28, P < .0001), indicating that the most characteristic region (eyes or mouth) is emotion dependent. Post hoc Bonferroni-adjusted tests supported the distinction between top-emotions (anger, fear, and sadness) and bottom-emotions (happiness). Moreover, these tests indicated that adding the other, less characteristic face half did not significantly improve performance (i.e., there was no statistically significant difference between the recognition of the original expressions and of the hybrid expressions that retained the most salient face half).

The main effect of participant group was not significant (F(1,42) = 2.31, P = .14), nor were the interactions between participant group and emotion, or between participant group and hybrid type (all F < 1). Contrary to our expectations, the 3-way interaction among participant group, emotion, and hybrid type was not significant (F(6,252) = 1.59, P = .15, depicted in Figure 3 and Table 3). This suggests that both children with and without ASD show a similar distinction between expressions for which the mouth region contains the most salient information (bottom-emotions) and expressions for which the eyes region is the most informative (top-emotions).

Figure 3.

The effect of stimulus presentation (hybrid expression with neutral eyes, hybrid expression with neutral mouth, and original expression) on emotion recognition performance was similar in both the ASD group (a) and in the TD group (b). Happiness is a bottom-emotion; sadness, anger, and fear are top-emotions. Error bars represent 95% confidence intervals of the means.

Table 3.

Accurate labeling of the hybrid expressions for both participant groups: mean accuracy and standard deviation (between brackets).

| Emotion | Hybrid type | ASD | TD |
|---|---|---|---|
| Anger | Neutral eyes | .18 (.18) | .26 (.29) |
| | Neutral mouth | .62 (.21) | .74 (.13) |
| | Original | .71 (.22) | .67 (.26) |
| Fear | Neutral eyes | .35 (.16) | .50 (.16) |
| | Neutral mouth | .63 (.32) | .66 (.26) |
| | Original | .76 (.21) | .75 (.21) |
| Happiness | Neutral eyes | .86 (.16) | .80 (.31) |
| | Neutral mouth | .28 (.21) | .32 (.35) |
| | Original | .92 (.11) | .98 (.04) |
| Sadness | Neutral eyes | .25 (.19) | .26 (.15) |
| | Neutral mouth | .43 (.17) | .45 (.22) |
| | Original | .52 (.23) | .56 (.26) |
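The logic behind the top/bottom distinction can be read directly from Table 3. A hybrid with a neutral mouth keeps only the emotional eyes, and a hybrid with neutral eyes keeps only the emotional mouth, so whichever hybrid is recognized better points to the more informative face half. The sketch below applies this rule descriptively to the group means. It is a heuristic illustration, not the Bonferroni-adjusted tests reported above, and the names `TABLE_3`, `top_or_bottom`, and `hybrid_type_means` are illustrative.

```python
from typing import Dict, Tuple

# Mean accuracies from Table 3: emotion -> hybrid type -> (ASD, TD).
TABLE_3: Dict[str, Dict[str, Tuple[float, float]]] = {
    "anger": {"neutral_eyes": (0.18, 0.26), "neutral_mouth": (0.62, 0.74), "original": (0.71, 0.67)},
    "fear": {"neutral_eyes": (0.35, 0.50), "neutral_mouth": (0.63, 0.66), "original": (0.76, 0.75)},
    "happiness": {"neutral_eyes": (0.86, 0.80), "neutral_mouth": (0.28, 0.32), "original": (0.92, 0.98)},
    "sadness": {"neutral_eyes": (0.25, 0.26), "neutral_mouth": (0.43, 0.45), "original": (0.52, 0.56)},
}
ASD, TD = 0, 1


def top_or_bottom(table: Dict[str, Dict[str, Tuple[float, float]]], group: int) -> Dict[str, str]:
    """Label each emotion 'top' (eyes more informative) or 'bottom' (mouth)."""
    labels = {}
    for emotion, cells in table.items():
        eyes_only = cells["neutral_mouth"][group]   # emotional eyes, neutral mouth
        mouth_only = cells["neutral_eyes"][group]   # emotional mouth, neutral eyes
        labels[emotion] = "top" if eyes_only > mouth_only else "bottom"
    return labels


def hybrid_type_means(table: Dict[str, Dict[str, Tuple[float, float]]]) -> Dict[str, float]:
    """Mean accuracy per hybrid type, averaged over emotions and both groups."""
    types = ["neutral_eyes", "neutral_mouth", "original"]
    return {
        t: round(sum(sum(cells[t]) for cells in table.values()) / (2 * len(table)), 4)
        for t in types
    }


asd_labels = top_or_bottom(TABLE_3, ASD)
td_labels = top_or_bottom(TABLE_3, TD)
type_means = hybrid_type_means(TABLE_3)
```

Both groups yield the same labels (anger, fear, and sadness top; happiness bottom). The averaged means reproduce the ordering of the hybrid-type main effect: original first, then neutral mouth, then neutral eyes.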

4. Discussion

The goal of this research was to investigate (1) whether children with ASD were impaired in recognizing facial expressions of four basic emotions and a neutral one and (2) whether children with ASD tend to rely more on features in the lower part of the face (mouth region) when recognizing emotions. We evaluated this with a novel stimulus generation technique, namely, hybrid expressions, hereby actively manipulating the amount of emotional information available in the face stimulus. A computer-morphed facial expression was generated, with the upper (or lower) face half being replaced by a neutral expression, such that emotional information is only present in the other face half (lower or upper region, resp.).

No impairment in the recognition of angry, happy, sad, fearful, and neutral original expressions was found in children with ASD, compared to an age- and IQ-matched group of TD children. Both participant groups performed well above chance on these original expressions, which indicates that our stimulus selection was successful. Indeed, this study required expressions with a high recognition rate, so that correct identification was still feasible based on a reduced amount of information (i.e., for the hybrid expressions). Although some studies found children with ASD to be outperformed by TD children in basic emotion recognition tasks, it is now generally accepted that children with ASD (without intellectual disability) mostly succeed in structured emotion processing tasks using basic and easily recognizable stimuli [3]. A challenge for future applied research is therefore to develop experimental procedures that tap into a more profound understanding of the meaning of emotions. In addition, one should evaluate whether existing emotion training programs for children with ASD teach them more than merely labeling the basic affects correctly.

Interestingly, we found an interaction between emotion and hybrid type, providing evidence for the existence of top- and bottom-emotions in children. The mouth region holds the crucial information for correctly identifying happiness, whereas for anger, fear, and sadness, the most salient information is located in the eyes region. We thereby replicate the previously demonstrated distinction between top- and bottom-emotions (studies in adults: [38–40]) and extend it to children with and without a developmental disorder. This effect also suggests that the paradigm was sensitive enough to detect performance differences between hybrid types. Moreover, it indicates that hybrid stimuli offer a valuable method for a coarse evaluation of attentional bias, without the need for eye-movement registration.

In general, original expressions were recognized more accurately than hybrid faces with neutral eyes and hybrid faces with neutral mouth. However, this effect can mainly be attributed to the overrepresentation of top-emotions (three of the four emotions) in the stimulus selection. In addition, providing the most salient emotional information (the mouth or eyes region, for bottom- and top-emotions, resp.) is sufficient for successful identification of the expression, and adding more emotional cues (namely, the other face half) does not significantly increase performance, although a trend in that direction is visible in both groups. These findings could suggest that neither group of children integrated emotional information across both face parts, relying mostly on a more local analysis of the salient regions. Since our participants were young children (all younger than 10 years), they may indeed tend to use a more feature-based recognition strategy in daily life too. However, the age at which children shift from a primarily feature-based representation of faces to more holistic encoding remains controversial [48, 49]. Although findings are inconsistent, some studies suggest that children with ASD use a more feature-based face processing strategy (for a recent overview, see [20]), in line with neurocognitive theories attributing an atypical, more locally biased perceptual style to individuals with ASD (for two different views on this topic, see [50, 51]). Further research is necessary to explore the role of holistic processing in emotion recognition in individuals with ASD and its developmental trajectory.

This study did not provide evidence for the use of an atypical emotion recognition strategy in children with ASD: children with ASD did not rely more on information in the lower part of the face than TD children. These findings contrast with those of previous work that argued for a higher reliance on the mouth region in children with ASD ([16, 27, 35, 36]; but see, e.g., [37]). Several factors could explain these apparent inconsistencies. Stimulus-specific aspects probably play an important role. Previous studies attributed a crucial influence to stimulus complexity and, most importantly, to the embedding of the facial stimulus in a social context [3, 18]. It has also been suggested that the increased fixation on mouths is linked to speech perception: children with ASD, especially those without a language disorder, focused on the mouth region as a compensatory mechanism for their difficulties in processing eye information [52]. The use of dynamic and auditory material in such studies is consistent with the proposal that more naturalistic and ecologically valid paradigms should be developed in ASD research [27], whereas the present study used static photographs. In addition, most previous work did not distinguish between viewing patterns for different expressions, thereby disregarding that the significance of information in the eyes or mouth region could be strongly emotion dependent, as suggested by the difference between top- and bottom-emotions. Finally, compensatory mechanisms could also cause inconsistencies. Although one advantage of hybrid stimuli is that they offer insight into implicit processing strategies, more cognition- or language-mediated compensatory mechanisms cannot be fully excluded.
Several features of our design may have allowed the children to use such compensation strategies: the unlimited presentation time (which may have given them time to apply these strategies), the fact that the experimental group consisted of children without intellectual disability (deficits are more often found in younger children and in children with intellectual disability), the multiple-choice response format, the types of stimuli, and the use of basic emotions. One child, for example, commented while viewing an angry expression that “the eyebrows are turned downwards so this must be angry.” Note that it is nearly impossible to exclude the possibility that the ASD sample used a more time-consuming, less efficient processing strategy. One could argue that designs with a limited presentation time partially exclude this. However, such designs introduce a confound: it becomes very hard to distinguish between task difficulty and the use of time-consuming compensatory mechanisms. Whenever participants fail a task with shorter presentation times, it remains unclear whether this is due to the increased difficulty as such or to an atypical default processing style (i.e., a compensation strategy).

Employing an emotion labeling task with static emotional expressions, we found no evidence for an emotion recognition impairment in six-to-eight-year-olds with ASD. Furthermore, using hybrid stimuli, we demonstrated that the face region providing the most salient information is strongly emotion dependent, thereby extending research findings on top- and bottom-emotions to children with and without a developmental disorder. This factor should be taken into account in future research, and the distinction between top- and bottom-emotions and its impact on scanning patterns should be evaluated. In addition, we showed that coarse attention patterns can be evaluated with this innovative stimulus manipulation technique, which does not require eye-movement registration. Lastly, no evidence for a stronger mouth reliance in ASD was found in our task. Future research needs to investigate whether the same holds for more complex emotions and for emotions where information from both the upper and lower face halves is required for good recognition.

Acknowledgments

The authors are grateful to all participating children and their parents, as well as to the participating special needs schools. They would like to acknowledge the assistance in participant recruitment and testing by Eline Verbeke and want to thank Patricia Bijttebier, Wilfried Peeters, and Eline Verbeke for their careful reading and feedback on a previous version of this paper. This research was funded by a Grant of the Flemish government, awarded to Johan Wagemans (METH/02/08), and an Interdisciplinary Grant of KU Leuven, awarded to Jean Steyaert, Johan Wagemans, and Ilse Noens (IDO 08/013). Kris Evers and Inneke Kerkhof are joint first authors.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1. Nachson I. On the modularity of face recognition: the riddle of domain specificity. Journal of Clinical and Experimental Neuropsychology. 1995;17(2):256–275. doi: 10.1080/01688639508405122.

- 2. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. 4th edition. Washington, DC, USA: 2000.

- 3. Harms MB, Martin A, Wallace GL. Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychology Review. 2010;20(3):290–322. doi: 10.1007/s11065-010-9138-6.

- 4. Uljarevic M, Hamilton A. Recognition of emotions in autism: a formal meta-analysis. Journal of Autism and Developmental Disorders. 2013;43(7):1517–1526. doi: 10.1007/s10803-012-1695-5.

- 5. Tantam D, Monaghan L, Nicholson H, Stirling J. Autistic children’s ability to interpret faces: a research note. Journal of Child Psychology and Psychiatry and Allied Disciplines. 1989;30(4):623–630. doi: 10.1111/j.1469-7610.1989.tb00274.x.

- 6. Celani G, Battacchi MW, Arcidiacono L. The understanding of the emotional meaning of facial expressions in people with autism. Journal of Autism and Developmental Disorders. 1999;29(1):57–66. doi: 10.1023/a:1025970600181.

- 7. Law Smith MJ, Montagne B, Perrett DI, Gill M, Gallagher L. Detecting subtle facial emotion recognition deficits in high-functioning Autism using dynamic stimuli of varying intensities. Neuropsychologia. 2010;48(9):2777–2781. doi: 10.1016/j.neuropsychologia.2010.03.008.

- 8. Hobson RP. The autistic child’s appraisal of expressions of emotion: a further study. Journal of Child Psychology and Psychiatry and Allied Disciplines. 1986;27(5):671–680. doi: 10.1111/j.1469-7610.1986.tb00191.x.

- 9. Kuusikko S, Haapsamo H, Jansson-Verkasalo E, et al. Emotion recognition in children and adolescents with autism spectrum disorders. Journal of Autism and Developmental Disorders. 2009;39(6):938–945. doi: 10.1007/s10803-009-0700-0.

- 10. Prior M, Dahlstrom B, Squires T-L. Autistic children’s knowledge of thinking and feeling states in other people. Journal of Child Psychology and Psychiatry and Allied Disciplines. 1990;31(4):587–601. doi: 10.1111/j.1469-7610.1990.tb00799.x.

- 11. Teunisse J-P, De Gelder B. Face processing in adolescents with autistic disorder: the inversion and composite effects. Brain and Cognition. 2003;52(3):285–294. doi: 10.1016/s0278-2626(03)00042-3.

- 12. Tracy JL, Robins RW, Schriber RA, Solomon M. Is emotion recognition impaired in individuals with autism spectrum disorders? Journal of Autism and Developmental Disorders. 2011;41(1):102–109. doi: 10.1007/s10803-010-1030-y.

- 13. Wright B, Clarke N, Jordan J, et al. Emotion recognition in faces and the use of visual context in young people with high-functioning autism spectrum disorders. Autism. 2008;12(6):607–626. doi: 10.1177/1362361308097118.

- 14. Evers K, Noens I, Steyaert J, Wagemans J. Combining strengths and weaknesses in visual perception of children with an autism spectrum disorder: perceptual matching of facial expressions. Research in Autism Spectrum Disorders. 2011;5(4):1327–1342.

- 15. Wallace GL, Case LK, Harms MB, Silvers JA, Kenworthy L, Martin A. Diminished sensitivity to sad facial expressions in high functioning autism spectrum disorders is associated with symptomatology and adaptive functioning. Journal of Autism and Developmental Disorders. 2011;41(11):1475–1486. doi: 10.1007/s10803-010-1170-0.

- 16. Baron-Cohen S, Wheelwright S, Jolliffe T. Is there a “language of the eyes”? Evidence from normal adults, and adults with autism or Asperger Syndrome. Visual Cognition. 1997;4(3):311–331.

- 17. Da Fonseca D, Santos A, Bastard-Rosset D, Rondan C, Poinso F, Deruelle C. Can children with autistic spectrum disorders extract emotions out of contextual cues? Research in Autism Spectrum Disorders. 2009;3(1):50–56.

- 18. Speer L, Cook A, McMahon W, Clark E. Face processing in children with autism: effects of stimulus contents and type. Autism. 2007;11(3):265–277. doi: 10.1177/1362361307076925.

- 19. Behrmann M, Avidan G, Leonard GL, et al. Configural processing in autism and its relationship to face processing. Neuropsychologia. 2006;44(1):110–129. doi: 10.1016/j.neuropsychologia.2005.04.002.

- 20. Weigelt S, Koldewyn K, Kanwisher N. Face identity recognition in autism spectrum disorders: a review of behavioral studies. Neuroscience and Biobehavioral Reviews. 2012;36(3):1060–1084. doi: 10.1016/j.neubiorev.2011.12.008.

- 21. Langdell T. Recognition of faces: an approach to the study of autism. Journal of Child Psychology and Psychiatry and Allied Disciplines. 1978;19(3):255–268. doi: 10.1111/j.1469-7610.1978.tb00468.x.

- 22. Riby DM, Doherty-Sneddon G, Bruce V. The eyes or the mouth? Feature salience and unfamiliar face processing in Williams syndrome and autism. Quarterly Journal of Experimental Psychology. 2009;62(1):189–203. doi: 10.1080/17470210701855629.

- 23. Joseph RM, Ehrman K, McNally R, Keehn B. Affective response to eye contact and face recognition ability in children with ASD. Journal of the International Neuropsychological Society. 2008;14(6):947–955. doi: 10.1017/S1355617708081344.

- 24. Chawarska K, Shic F. Looking but not seeing: atypical visual scanning and recognition of faces in 2 and 4-year-old children with autism spectrum disorder. Journal of Autism and Developmental Disorders. 2009;39(12):1663–1672. doi: 10.1007/s10803-009-0803-7.

- 25. Jones W, Carr K, Klin A. Absence of preferential looking to the eyes of approaching adults predicts level of social disability in 2-year-old toddlers with autism spectrum disorder. Archives of General Psychiatry. 2008;65(8):946–954. doi: 10.1001/archpsyc.65.8.946.

- 26. Joseph RM, Tanaka J. Holistic and part-based face recognition in children with autism. Journal of Child Psychology and Psychiatry and Allied Disciplines. 2003;44(4):529–542. doi: 10.1111/1469-7610.00142.

- 27. Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59(9):809–816. doi: 10.1001/archpsyc.59.9.809.

- 28. Gepner B, Féron F. Autism: a world changing too fast for a mis-wired brain? Neuroscience and Biobehavioral Reviews. 2009;33(8):1227–1242. doi: 10.1016/j.neubiorev.2009.06.006.

- 29. Pelphrey K, Goldstein J, Reznick S, Piven J, Allison T, McCarthy G. The influence of observed gaze shift context and timing upon activity within the superior temporal sulcus. Journal of Cognitive Neuroscience. 2002:p. 69.

- 30. Van Der Geest JN, Kemner C, Camfferman G, Verbaten MN, van Engeland H. Looking at images with human figures: comparison between autistic and normal children. Journal of Autism and Developmental Disorders. 2002;32(2):69–75. doi: 10.1023/a:1014832420206.

- 31. Bruce V, Young A. Understanding face recognition. British Journal of Psychology. 1986;77:305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- 32. Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4(6):223–233. doi: 10.1016/s1364-6613(00)01482-0.

- 33. Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372(6507):669–672. doi: 10.1038/372669a0.

- 34. Bruyer R, Laterre C, Seron X, et al. A case of prosopagnosia with some preserved covert remembrance of familiar faces. Brain and Cognition. 1983;2(3):257–284. doi: 10.1016/0278-2626(83)90014-3.

- 35. Gross TF. The perception of four basic emotions in human and nonhuman faces by children with autism and other developmental disabilities. Journal of Abnormal Child Psychology. 2004;32(5):469–480. doi: 10.1023/b:jacp.0000037777.17698.01.

- 36. Rice K, Moriuchi JM, Jones W, Klin A. Parsing heterogeneity in autism spectrum disorders: visual scanning of dynamic social scenes in school-aged children. Journal of the American Academy of Child and Adolescent Psychiatry. 2012;51(3):238–248. doi: 10.1016/j.jaac.2011.12.017.

- 37. Rutherford MD, Towns AM. Scan path differences and similarities during emotion perception in those with and without autism spectrum disorders. Journal of Autism and Developmental Disorders. 2008;38(7):1371–1381. doi: 10.1007/s10803-007-0525-7.

- 38. Calder AJ, Burton AM, Miller P, Young AW, Akamatsu S. A principal component analysis of facial expressions. Vision Research. 2001;41(9):1179–1208. doi: 10.1016/s0042-6989(01)00002-5.

- 39. Eisenbarth H, Alpers GW. Happy mouth and sad eyes: scanning emotional facial expressions. Emotion. 2011;11(4):860–865. doi: 10.1037/a0022758.

- 40. Smith ML, Cottrell GW, Gosselin F, Schyns PG. Transmitting and decoding facial expressions. Psychological Science. 2005;16(3):184–189. doi: 10.1111/j.0956-7976.2005.00801.x.

- 41. Scheller E, Büchel C, Gamer M. Diagnostic features of emotional expressions are processed preferentially. PLoS ONE. 2012;7(7):e41792. doi: 10.1371/journal.pone.0041792.

- 42. Lord C, Risi S, Lambrecht L, et al. The Autism Diagnostic Observation Schedule-Generic: a standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders. 2000;30(3):205–223.

- 43. Wechsler D. Wechsler Intelligence Scale for Children. 3rd edition. London, UK: The Psychological Corporation; 1992.

- 44. Tellegen PJ, Laros JA, Winkel M. SON-R 2 1/2-7 Niet-Verbale Intelligentietest. Amsterdam, The Netherlands: Hogrefe; 2003.

- 45. Grégoire J. L’Evaluation clinique de l’intelligence de l’enfant: Théorie et pratique du WISC-III. Sprimont, Belgium: Mardaga; 2000.

- 46. Dailey MN, Cottrell GW, Reilly J. California Facial Expressions (CAFE). Computer Science and Engineering Department, UCSD, La Jolla, Calif, USA; 2001. http://www.cs.ucsd.edu/users/gary/CAFE/

- 47. Ekman P, Friesen WV. The Facial Action Coding System (FACS): A Technique for the Measurement of Facial Action. Palo Alto, Calif, USA: Consulting Psychologists Press; 1978.

- 48. Pellicano E, Rhodes G. Holistic processing of faces in preschool children and adults. Psychological Science. 2003;14(6):618–622. doi: 10.1046/j.0956-7976.2003.psci_1474.x.

- 49. Carey S, Diamond R. Are faces perceived as configurations? More by adults than by children? Visual Cognition. 1994;1(2/3):253–274.

- 50. Happé F, Frith U. The weak coherence account: detail-focused cognitive style in autism spectrum disorders. Journal of Autism and Developmental Disorders. 2006;36(1):5–25. doi: 10.1007/s10803-005-0039-0.

- 51. Mottron L, Dawson M, Soulières I, Hubert B, Burack J. Enhanced perceptual functioning in autism: an update, and eight principles of autistic perception. Journal of Autism and Developmental Disorders. 2006;36(1):27–43. doi: 10.1007/s10803-005-0040-7.

- 52. Norbury CF, Brock J, Cragg L, Einav S, Griffiths H, Nation K. Eye-movement patterns are associated with communicative competence in autistic spectrum disorders. Journal of Child Psychology and Psychiatry and Allied Disciplines. 2009;50(7):834–842. doi: 10.1111/j.1469-7610.2009.02073.x.