Abstract

The aim of this study was to assess the accuracy of clinician-entered data in imaging clinical decision support (CDS). We used CDS-guided CT angiography (CTA) for pulmonary embolus (PE) in the emergency department as a case example because it required clinician entry of d-dimer results which could be unambiguously compared with actual laboratory values. Of 1296 patients with CTA orders for suspected PE during 2011, 1175 (90.7%) had accurate d-dimer values entered. In 55 orders (4.2%), incorrectly entered data shielded clinicians from intrusive computer alerts, resulting in potential CTA overuse. Remaining data entry errors did not affect user workflow. We found no missed PEs in our cohort. The majority of data entered by clinicians into imaging CDS are accurate. A small proportion may be intentionally erroneous to avoid intrusive computer alerts. Quality improvement methods, including academic detailing and improved integration between electronic medical record and CDS to minimize redundant data entry, may be necessary to optimize adoption of evidence presented through CDS.

Keywords: Decision Support Systems, Clinical; Computerized Physician Order Entry System; Radiology; Electronic Health Records; Evidence-Based Practice

Background

The implementation of clinical decision support (CDS) is now federally mandated, and CDS has been described as an important tool for improving evidence-based practice.1 2 Although the integrity and relevance of evidence presented is generally dependent on the clinical information input into CDS, there is little evidence on accuracy of data entered into CDS systems by clinicians. A study examining the impact of a cancer CDS system found a data entry error rate of 4.2%,3 and another study reviewing an outpatient electronic medical record (EMR) found an exception rate of 6.4% for electronic CDS.4 Although CDS designed to improve the appropriateness of imaging has been reported to lower inappropriate utilization, improve quality, and reduce waste, questions remain regarding its data integrity. It is unclear whether providers modify the clinical information they enter into imaging CDS in order to avoid intrusive computer alerts and interactions.5 6 Inaccurate computerized provider order entry (CPOE) data could lead to erroneous CDS recommendations and inappropriate testing, which might, in turn, result in suboptimal care or even potential patient harm. Thus further assessment of the potential data entry errors in CDS systems and understanding the unintended consequences of such errors are critical to optimal design and implementation of EMRs for maintaining and improving quality of care and patient safety.

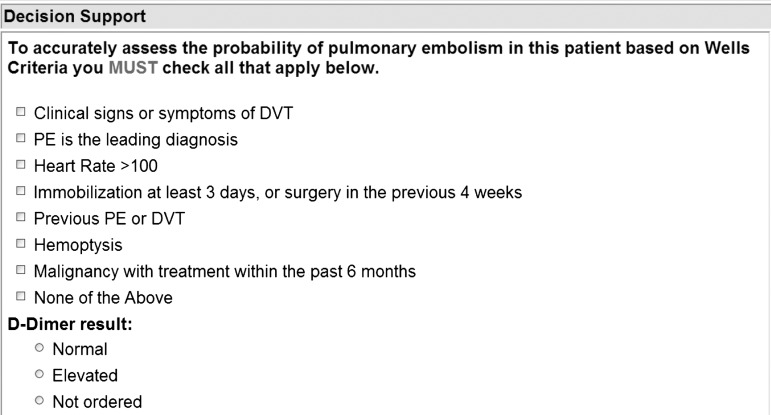

Testing the accuracy of CPOE data entered into CDS requires a robust, objective, unambiguous, and evidence-based CDS. While many evidence-based guidelines exist to serve as the basis for imaging CDS, most lack objective reference standards for assessing the accuracy of clinician data entry, since they are dependent on subjective findings from the history and physical examination. However, CDS to guide clinicians on the use of CT angiography (CTA) for the evaluation of patients with suspected pulmonary embolus (PE) in the emergency department (ED) is an exception. Well-supported evidence-based guidelines recommend the use of CTA in patients suspected of having PE with either an elevated d-dimer laboratory marker or a high pretest probability for PE based on a clinical score termed the Wells Criteria.7 Our institution deployed an evidence-based CDS in the ED for the evaluation of PE that requires clinicians to electronically enter either the d-dimer laboratory value or the Wells score before ordering a CTA for PE (figure 1). CDS provides evidence in real time if the clinical data entered do not support performing CTA based on the Wells Criteria. The clinician can then choose to continue (over-ride the CDS recommendation), delay, or cancel the test. A recent study demonstrated that implementation of this CDS decreased the use, and increased the yield, of CTAs for patients with suspected PE in the ED.5 However, the effectiveness of CDS may be limited by the extent to which it relies on clinician data entry, as erroneously entered data might lead to either CDS-recommended potentially inappropriate imaging or avoidance of potentially appropriate imaging. Additionally, it is unknown whether clinicians modify clinical information they enter into imaging CDS to avoid intrusive computer alerts and interactions.

Figure 1.

Clinician data entry screenshot for ordering CT angiography for pulmonary embolus.

Since the algorithm behind the recommendations on CTA evaluation of patients with suspected PE relies directly on the laboratory d-dimer result, these same d-dimer laboratory values can serve as a gold standard to help assess the accuracy of clinician data entry via CPOE. Previous literature has defined ‘gaming’, in the setting of electronic CDS, as the scenario of clinicians entering erroneous data for personal gain to either bypass interruptive decision support screens or avoid system capture of clinicians’ decisions to disregard evidence-based guidelines.4 6 8 9 We hypothesized that providers enter accurate information into imaging CDS, even if it results in intrusive alerts, and that inaccuracies leading to inappropriate recommendations are uncommon. More specifically, we compared clinician-entered d-dimer descriptor values with actual d-dimer laboratory results. We also evaluated CDS recommendations triggered in order to follow the potential ‘downstream’ effects of data erroneously entered into CDS on patient care.

Objective

To determine the accuracy and downstream effects of clinician data entry-dependent CDS designed to guide evidence-based use of CTA for ED patients with suspected PE.

Methods

Study setting and population

The study population included all patients for whom clinicians ordered CTAs for suspected PE between 1 January 2011 and 31 December 2011 in the ED of our 793-bed urban, academic, level 1 trauma center. The requirement to obtain informed consent was waived by the institutional review board for this Health Insurance Portability and Accountability Act (HIPAA)-compliant study. Our institution uses a web-based CPOE system for imaging (Percipio, Medicalis Corp, San Francisco, California, USA), which is integrated into the enterprise information technology infrastructure. Details of the implementation have been described previously.10

Clinical decision support system

ED clinicians use the CPOE system to place imaging requests. CDS launches on the basis of the type of examination ordered as well as the clinical data entered by the ED clinicians. In the case of CTA for PE, the requesting clinician is required to enter data specifically designed to automatically calculate a Wells score (including clinical signs and symptoms of deep venous thrombosis (DVT), alternative diagnosis less likely than PE, heart rate >100, immobilization of at least 3 days or surgery in previous 4 weeks, previously diagnosed PE or DVT, hemoptysis, and malignancy with treatment within 6 months), as well as the reported laboratory descriptor of the serum d-dimer (elevated, normal, or not ordered), which is taken from the laboratory report (figure 1).11 If a patient is found to have a high pretest risk of PE based on the Wells score, no CDS recommendations are made and the imaging request is allowed to proceed. If a patient is found to have a low pretest risk of PE based on the Wells score, the system references the clinician-entered d-dimer value. In the case of an elevated d-dimer value, no advice is provided and the order for the CTA proceeds. However, if no d-dimer was ordered in a low-risk patient (or the d-dimer result has not yet returned from the laboratory), CDS recommends ordering a d-dimer (or waiting for the result). Similarly, if a normal d-dimer value is entered for a low-risk patient, the CDS recommends not obtaining a CTA, as per the guidelines.
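The branching described above can be sketched in a few lines of Python. This is an illustrative sketch, not the vendor's implementation: the function names and field names are hypothetical, the point weights are those of the published Wells model (reference 11), and the cutoff of more than 4 points for a high pretest risk is an assumption, since the text does not state the threshold the CDS applies.

```python
from enum import Enum

# Point weights from the published Wells model; the keys are hypothetical field names.
WELLS_POINTS = {
    "dvt_signs": 3.0,
    "alternative_dx_less_likely": 3.0,
    "heart_rate_over_100": 1.5,
    "immobilization_or_surgery": 1.5,
    "previous_pe_or_dvt": 1.5,
    "hemoptysis": 1.0,
    "malignancy": 1.0,
}

# Assumption: a score above this value is treated as high pretest risk.
HIGH_RISK_CUTOFF = 4.0


class DDimer(Enum):
    ELEVATED = "elevated"
    NORMAL = "normal"
    NOT_ORDERED = "not ordered"


def wells_score(findings):
    """Sum the points for every finding the clinician marked as present."""
    return sum(pts for name, pts in WELLS_POINTS.items() if findings.get(name))


def cds_advice(findings, d_dimer):
    """Return None when the order proceeds without advice, else the advice text."""
    if wells_score(findings) > HIGH_RISK_CUTOFF:
        return None  # high pretest risk: no recommendation, order proceeds
    if d_dimer is DDimer.ELEVATED:
        return None  # low risk but elevated d-dimer: order proceeds
    if d_dimer is DDimer.NOT_ORDERED:
        return "Order a d-dimer (or wait for the result)"
    return "CTA not recommended"  # low risk with a normal d-dimer
```

Written this way, it is easy to see that for a low-risk patient only a "normal" entry produces the advice against CTA, while an "elevated" entry produces no advice at all.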

Data collection

We reviewed the EMR of all patients for whom CTA was ordered for suspected PE and compared clinician-entered descriptors regarding d-dimer results from the CPOE system with actual d-dimer results obtained directly from the laboratory results section of the EMR, which served as the gold standard for comparison purposes. The following variables were recorded for each patient: date and time of CTA study order, d-dimer laboratory level, date and time of d-dimer laboratory study, and clinician-entered d-dimer descriptor. We assessed the concordance between clinician data entry regarding, and actual laboratory assay results for, d-dimers. Medical records were manually searched by an ED attending physician for downstream effects (including potentially inappropriate imaging and missed opportunities for potentially appropriate imaging per the CDS guidelines, and missed diagnoses of PE) in cases of discordance. EMRs of cases with inappropriate CTA imaging and unavailable d-dimer values were reviewed to identify any patients with d-dimer results obtained at another institution before arrival at our ED; none were found.
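A minimal sketch of the per-order comparison described above follows. The record layout, function names, and the numeric d-dimer cutoff are all hypothetical; the actual cutoff depends on the laboratory assay and is not given in the text.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Hypothetical cutoff; the real value is assay specific and not stated in the text.
D_DIMER_CUTOFF = 500.0


@dataclass
class Order:
    cta_ordered_at: datetime
    entered: str                          # "elevated", "normal", or "not ordered"
    lab_value: Optional[float]            # None when no d-dimer was performed
    lab_resulted_at: Optional[datetime]   # None when no d-dimer was performed


def lab_category(order):
    """Map the laboratory value onto the categories used in the tables."""
    if order.lab_value is None:
        return "not performed"
    return "high" if order.lab_value >= D_DIMER_CUTOFF else "normal"


# Pairs of (clinician entry, laboratory category) that count as accurate entry.
CONCORDANT = {
    ("elevated", "high"),
    ("normal", "normal"),
    ("not ordered", "not performed"),
}


def is_concordant(order):
    return (order.entered, lab_category(order)) in CONCORDANT


def lab_available_at_order(order):
    """True when the d-dimer result had returned before the CTA was ordered."""
    return (
        order.lab_resulted_at is not None
        and order.lab_resulted_at <= order.cta_ordered_at
    )
```

Each discordant order flagged this way would then go to manual chart review for downstream effects.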

Results

Of 59 519 patients presenting to the ED during the study period, 1296 (2.2%) had CTAs ordered for suspected PE. Clinicians accurately entered d-dimer descriptors for 1175 (90.7%) of the patients who had CTAs ordered (table 1). We compared the timing of laboratory results and order entry and found that, of the 121 data entry errors (121 of 1296; 9.3%), 78 (64.5%) occurred after d-dimer results were available from the laboratory, while 43 (35.5%) occurred before the d-dimer results were available (table 2).

Table 1.

Computerized provider order entry compared with laboratory results for all CT angiography orders in the emergency department, 1 January to 31 December 2011

| Clinician-entered d-dimer | Laboratory d-dimer: High | Laboratory d-dimer: Normal | Laboratory d-dimer: Not performed | Total |
|---|---|---|---|---|
| Elevated | _346 (26.7%)_ | 2* (0.2%) | 13* (1.0%) | 361 (27.9%) |
| Normal | 0 | _11 (0.8%)_ | 6 (0.5%) | 17 (1.3%) |
| Not ordered | 60 (4.6%) | 40* (3.1%) | _818 (63.1%)_ | 918 (70.8%) |
| Total | 406 (31.3%) | 53 (4.1%) | 837 (64.6%) | 1296 |

Cells with accurate clinician data entry are in italics.

All other cells outside the Total row and column represent errors in clinician data entry.

*Cases of potential ‘gaming’.
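As a check on the arithmetic, the accuracy figures can be recomputed from the counts in table 1. The short script below is only a worked tally; the variable names are illustrative.

```python
# Rows: clinician-entered descriptor; columns: laboratory result (counts from table 1).
LAB = ("high", "normal", "not performed")
TABLE_1 = {
    "elevated":    (346, 2, 13),
    "normal":      (0, 11, 6),
    "not ordered": (60, 40, 818),
}
# The laboratory category that makes each clinician entry accurate.
MATCH = {"elevated": "high", "normal": "normal", "not ordered": "not performed"}

total = sum(sum(row) for row in TABLE_1.values())
accurate = sum(row[LAB.index(MATCH[entered])] for entered, row in TABLE_1.items())
errors = total - accurate

print(total, accurate, errors)  # 1296 1175 121
print(f"{accurate / total:.1%} accurate, {errors / total:.1%} discordant")
```

The diagonal cells sum to 1175 of 1296 orders (90.7%), leaving 121 discordant entries (9.3%).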

Table 2.

d-Dimer laboratory result availability at the time of CT angiography (CTA) order entry for discordant computerized provider order entry (CPOE), n=121

| Clinician-entered d-dimer in CPOE | Laboratory d-dimer high, available | Laboratory d-dimer high, unavailable | Laboratory d-dimer normal, available | Laboratory d-dimer normal, unavailable | Laboratory d-dimer not performed |
|---|---|---|---|---|---|
| Elevated | – | – | 2 | – | 13 |
| Normal | – | – | – | – | 6 |
| Not ordered | 37 | 23 | 39 | 1 | – |
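The split between errors made after and before the d-dimer result was available can be reproduced from table 2. In this illustrative tally, the 43 "before" errors are taken to combine results not yet available with d-dimers that were never performed; that grouping is an inference from the counts rather than something the text states.

```python
# Discordant orders keyed by (clinician entry, laboratory result, status at CTA order).
# A status of None marks d-dimers that were never performed.
TABLE_2 = {
    ("elevated", "normal", "available"): 2,
    ("elevated", "not performed", None): 13,
    ("normal", "not performed", None): 6,
    ("not ordered", "high", "available"): 37,
    ("not ordered", "high", "unavailable"): 23,
    ("not ordered", "normal", "available"): 39,
    ("not ordered", "normal", "unavailable"): 1,
}

n_errors = sum(TABLE_2.values())
after_result = sum(n for (_, _, status), n in TABLE_2.items() if status == "available")
before_result = n_errors - after_result

print(n_errors, after_result, before_result)  # 121 78 43
print(f"{after_result / n_errors:.1%} after, {before_result / n_errors:.1%} before")
```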

With respect to downstream effects, 40 (33.1%) of the 121 errors resulted in potentially inappropriate CTAs being performed, all of which were negative for PE. For another 21 (17%) patients, the clinician entered descriptors stating that the d-dimer was ‘elevated’ or ‘not ordered,’ but the clinician still appropriately canceled the CTA when the d-dimer level was low or unavailable (table 3).

Table 3.

Downstream effects of discordant clinician order entry on CT angiography (CTA) imaging for pulmonary embolus evaluation

| Clinician-entered d-dimer | Elevated | Elevated | Elevated | Normal | Normal | Normal | Not ordered | Not ordered | Not ordered | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| Laboratory d-dimer result | High | Nml | NA | High | Nml | NA | High | Nml | NA | |
| CTA results | | | | | | | | | | |
| Positive | – | 0 | 0 | 0 | – | 0 | 4 | 0 | – | 4 |
| Negative | – | 2* | 10* | 0 | – | 6* | 42 | 22* | – | 82 |
| Non-diagnostic | – | 0 | 0 | 0 | – | 0 | 2 | 0 | – | 2 |
| Canceled | – | 0 | 3† | 0 | – | 0 | 12‡ | 18† | – | 33 |
| Total | – | 2 | 13 | 0 | – | 6 | 60 | 40 | – | 121 |

Cells with concordance between clinician order entry and laboratory result are excluded (shown as –).

*Inappropriate CTAs.

†Appropriately canceled CTAs after clinical decision support (CDS).

‡Inappropriately canceled CTAs after CDS.

NA, Not Available; Nml, Normal.

Overall, 55 of the 121 errors (45% of errors; 4.2% of all CTA orders) represent imaging requests that may be perceived to have erroneous data entered to avoid CDS-triggered intrusive workflow disruptions and alerts to cancel CTA. In 15 of these cases, the clinician stated that the d-dimer result was elevated although the laboratory d-dimer was either normal and available before the imaging order was placed (n=2) or had not been performed (n=13) (tables 1 and 2). In the remaining 40 cases, the clinician stated that a d-dimer was not ordered despite a laboratory-returned d-dimer that was normal. Of these 55 imaging requests, 21 (38%) were eventually canceled by the clinician (before CTA was performed), and the other 34 (62%) were completed but demonstrated no PE.

Of the 121 inaccurate imaging requests, 12 (10%) involved patients who, per evidence-based guidelines, should have had a CTA based on their elevated d-dimer values but who did not because of the inaccurate data entered into the CDS. Eleven of these patients were ultimately determined to not have a PE, based on review of their medical records, alternative imaging studies, repeated history and physical examinations, and clinical judgment. One of the 12 patients had a PE diagnosed by ventilation-perfusion scan; however, this patient would not have been eligible to undergo CTA in the ED because of renal failure and the risk of contrast-induced nephropathy with CTA.
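The downstream counts reported in this section follow from the discordant cells of table 3. The tally below is a sketch for checking those figures; the grouping of cells into "potential gaming" follows the asterisks in table 1, and all names are illustrative.

```python
# Discordant cells of table 3, keyed by (clinician entry, laboratory result).
# Each value is (positive, negative, non-diagnostic, canceled).
TABLE_3 = {
    ("elevated", "normal"):        (0, 2, 0, 0),
    ("elevated", "not available"): (0, 10, 0, 3),
    ("normal", "high"):            (0, 0, 0, 0),
    ("normal", "not available"):   (0, 6, 0, 0),
    ("not ordered", "high"):       (4, 42, 2, 12),
    ("not ordered", "normal"):     (0, 22, 0, 18),
}
CANCELED = 3        # index of the canceled count in each tuple
ALL_CTA_ORDERS = 1296

# Cells in which the erroneous entry avoided the alert advising against CTA.
GAMING = [("elevated", "normal"), ("elevated", "not available"), ("not ordered", "normal")]
# Cells in which a CTA, if performed, was potentially inappropriate.
NOT_INDICATED = GAMING + [("normal", "not available")]

n_errors = sum(sum(cell) for cell in TABLE_3.values())
gaming = sum(sum(TABLE_3[k]) for k in GAMING)
gaming_canceled = sum(TABLE_3[k][CANCELED] for k in GAMING)
gaming_completed = gaming - gaming_canceled
inappropriate_cta = sum(sum(TABLE_3[k][:CANCELED]) for k in NOT_INDICATED)
inappropriately_canceled = TABLE_3[("not ordered", "high")][CANCELED]

print(n_errors, gaming, gaming_canceled, gaming_completed)  # 121 55 21 34
print(inappropriate_cta, inappropriately_canceled)          # 40 12
print(f"{gaming / n_errors:.0%} of errors, {gaming / ALL_CTA_ORDERS:.1%} of orders")
```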

Discussion

We found that, in the great majority of cases, clinical information entered by clinicians into imaging CDS when requesting CTA for evaluation of PE in the ED was accurate. Only a small portion of data entry errors resulted in potential overuse, and an even smaller portion resulted in potential underuse of CTA. In only a small portion of cases (4.2%) did erroneous data entered shield the clinician from intrusive computer alerts to cancel the CTA, behavior that has been referred to as ‘gaming.’ These cases of potential gaming (n=55) represent 45% of all data entry errors (n=121). However, our study design does not permit assessment of clinicians’ motivation while using CDS; further studies are needed to evaluate whether errors that could be perceived as ‘gaming’ were intentional or simply reflect random data entry errors.

Health policy initiatives such as the Health Information Technology for Economic and Clinical Health (HITECH) Act have goals to standardize and improve care, and Meaningful Use criteria have been designed to act as an electronic path by which to achieve these goals, with CDS described as an important goal in stage 2.1 2 12 Although our data confirm the accuracy of clinician-entered data for imaging CDS in the majority of cases, performance improvement opportunities remain. On the basis of our findings and the small number of studies identified in the literature, there may be an inherent limitation to clinician data entry accuracy of ∼90–95%.3 4 Potential ‘gaming’ behaviors, even if minor, need to be minimized to ensure that CDS recommendations are optimized for each patient. A previous study of pharmacy utilization demonstrated that clinicians using computerized order entry were undertaking such gaming to order certain medications without appropriate evidence-based indications.8 Another recent study reported similar inappropriate exception rates of 3% for computerized CDS that might similarly be classified as potential gaming.4 Standard quality assurance activities such as retrospective review of orders to assess accuracy of data entry, combined with academic detailing of providers displaying potential ‘gaming’ behavior, may be needed. When possible, minimizing redundant data entry with auto-population of CDS with required clinical data from EMR (such as laboratory d-dimer values for CTA evaluation of PE) may improve efficiency, accuracy, and safety.

Our study had several limitations. It was performed in one academic institution with an established history of CPOE use and systematic quality improvement efforts, thereby making generalizability uncertain. We chose to examine CTA for PE evaluation because of the available gold standard laboratory values; however, this specific scenario may not adequately represent other clinical situations that utilize CPOE and imaging CDS systems. Conducted in a large ED with multiple clinicians, this study potentially allows for the circumstance where one clinician orders a d-dimer laboratory test and a different clinician orders a CTA imaging test on the same patient. Since our study is only able to examine patients with CTA orders, it is potentially biased towards eliminating overuse. We did not assess the clinical validity of physicians’ over-ride of CDS recommendations, as accurately recreating the context of the clinical judgment to do so from the medical record is subjective and was not the focus of our study. Also, we did not assess whether the small number of erroneous data entries was limited to a few clinicians or more widespread, as the study included more than 100 ordering providers and only 121 errors were identified. Finally, our study design did not permit assessment of clinicians’ motivation while using CDS; further studies are needed to evaluate whether errors that could be perceived as ‘gaming’ were intentional or simply reflect random data entry errors.

Conclusions

We found the great majority (>90%) of provider clinical data entries in imaging CDS to be accurate. However, performance improvement opportunities still exist to eliminate data entry errors and optimize CDS patient-centric recommendations and alerts. In a small portion of orders, data entry errors can result in potential overuse and, less frequently, underuse of imaging. More robust integration could reduce redundant clinical data entry in EMR with embedded CDS to minimize errors. However, quality improvement strategies including retrospective sampling of clinical data entered into CDS and academic detailing when appropriate are probably needed to minimize the small portion of erroneous data entries that may be perceived to be motivated by the desire to avoid intrusive computer interactions and alerts.

Acknowledgments

We would like to thank Laura E Peterson, BSN, SM for her assistance in editing this manuscript, and Stacy D O'Connor, MD for data extraction.

Footnotes

Contributors: All authors participated in research design, execution, analysis and manuscript preparation.

Funding: This study was funded in part by Grant T15LM007092 from the National Library of Medicine and by Grant 1UC4EB012952-01 from the National Institute of Biomedical Imaging and Bioengineering. These funding sources had no bearing on study design, data collection, analysis, interpretation, or decision for journal submission.

Competing interests: The institution and RK have equity and royalty interests in Medicalis and some of its products. The computerized physician order entry and clinical decision support systems used at our hospital and in this study were developed by Medicalis.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

1. Blumenthal D. Launching HITECH. N Engl J Med 2010;362:382–5

2. Jha AK. Meaningful use of electronic health records. JAMA 2010;304:1709–10

3. Séroussi B, Blaszka-Jaulerry B, Zelek L, et al. Accuracy of clinical data entry when using a computerized decision support system: a case study with OncoDoc2. Stud Health Technol Inform 2012;180:472–6

4. Persell SD, Dolan NC, Friesema EM, et al. Frequency of inappropriate medical exceptions to quality measures. Ann Intern Med 2010;152:225–31

5. Raja AS, Ip IK, Prevedello LM, et al. Effect of computerized clinical decision support on the use and yield of CT pulmonary angiography in the emergency department. Radiology 2012;262:468–74

6. Raja AS, Walls RM, Schuur JD. Decreasing use of high-cost imaging: the danger of utilization-based performance measures. Ann Emerg Med 2010;56:597–9

7. Schoepf UJ, Goldhaber SZ, Costello P. Spiral computed tomography for acute pulmonary embolism. Circulation 2004;109:2160–7

8. Bates DW. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc 2003;10:523–30

9. Doran T, Fullwood C, Reeves D, et al. Exclusion of patients from pay-for-performance targets by English physicians. N Engl J Med 2008;359:274–84

10. Ip IK, Schneider LI, Hanson R, et al. Adoption and meaningful use of computerized physician order entry with an integrated clinical decision support system for radiology: ten-year analysis in an urban teaching hospital. J Am Coll Radiol 2012;9:129–36

11. Wells PS, Anderson DR, Rodger M, et al. Derivation of a simple clinical model to categorize patients probability of pulmonary embolism: increasing the models utility with the SimpliRED D-dimer. Thromb Haemost 2000;83:416–20

12. Medicare and Medicaid programs; electronic health record incentive program—stage 2. Final rule. Fed Regist 2012;77:53967–4162