Abstract

Objective

Increasing use of electronic health records (EHRs) provides new opportunities for public health surveillance. During the 2009 influenza A (H1N1) virus pandemic, we developed a new EHR-based influenza-like illness (ILI) surveillance system designed to be resource sparing, rapidly scalable, and flexible. Four weeks after the first pandemic case, ILI data from Indian Health Service (IHS) facilities were being analyzed.

Materials and methods

The system defines ILI as a patient visit containing either an influenza-specific International Classification of Diseases, Ninth Revision (ICD-9) code or one or more of 24 ILI-related ICD-9 codes plus a documented temperature ≥100°F. EHR-based data are uploaded nightly. To validate results, ILI visits identified by the new system were compared to ILI visits found by medical record review, and the new system's results were compared with those of the traditional US ILI Surveillance Network.

Results

The system monitored ILI activity at an average of 60% of the 269 IHS electronic health databases. EHR-based surveillance detected ILI visits with a sensitivity of 96.4% and a specificity of 97.8% based on chart review (N=2375) of visits at two facilities in September 2009. At the peak of the pandemic (week 41, October 17, 2009), the median time from an ILI visit to data transmission was 6 days, with a mode of 1 day.

Discussion

EHR-based ILI surveillance was accurate and timely, operated at the majority of IHS facilities nationwide, and provided useful information for decision makers. EHRs thus offer the opportunity to transform public health surveillance.

Keywords: Influenza, Human; Population Surveillance; Epidemiologic Methods; Electronic Health Records; Medical Informatics Applications; Data Collection

Introduction and background

As the USA moves toward universal adoption of electronic health records (EHRs), anticipated benefits range from improved patient care to more complete public health surveillance of reportable diseases. The US Department of Health and Human Services (DHHS) has outlined the EHR characteristics that qualify for Medicare and Medicaid incentive payments under the Health Information Technology for Economic and Clinical Health (HITECH) Act of 2009.1 The guidelines contain ‘meaningful use’ objectives to ensure that healthcare providers implement EHRs to achieve significant improvements in care, and EHRs are also a key component of the Affordable Care Act of 2010.2 Many of the ‘meaningful use’ objectives have public health ramifications, such as those that focus on use of EHRs for surveillance of reportable conditions, syndromic illnesses, and immunization coverage. The use of EHRs for surveillance, however, is relatively untested, and the demonstration of practical and timely applications is crucial to increasing EHR acceptance.3

The Indian Health Service (IHS), a DHHS agency, is responsible for providing healthcare to eligible American Indian and Alaska Native (AI/AN) people through IHS, Tribal, and Urban Indian health facilities (collectively referred to here as ‘IHS’). IHS facilities cared for approximately 60% of the US AI/AN population, or 1.5 million beneficiaries, in 2009. IHS facilities, from remote Alaskan villages to large urban hospitals, gather electronic clinical and administrative health data through an IHS-designed, EHR-based health information technology platform.

Influenza surveillance in the USA monitors the extent and timing of influenza activity, including morbidity, mortality, and circulating virus strains. Coverage may be insufficient, however, for subgroups such as the AI/AN population, whose influenza epidemiology differs from that of the general population.4–6 The current ‘gold standard’ for morbidity surveillance, the US Outpatient Influenza-like Illness Surveillance Network (ILINet), monitors outpatient influenza-like illness (ILI) using a network of voluntary sentinel providers,7 but does not collect race data.

Because American Indians and Alaska Natives have experienced disproportionate morbidity and mortality from influenza,4–6 the need for accurate and timely influenza surveillance in this population was critical during the 2009 influenza A (H1N1) virus (H1N1pdm09) pandemic. In response, the IHS Division of Epidemiology and Disease Prevention (DEDP) and the IHS Office of Information Technology (OIT), with technical assistance from the Centers for Disease Control and Prevention (CDC) and Food and Drug Administration (FDA), designed and developed a new EHR-based surveillance system, the IHS Influenza Awareness System (IIAS; referred to herein as the ‘system’ or ‘surveillance system’). The objective of the surveillance system, which was based only on routinely collected clinical data, was to provide accurate, timely, and geographically representative information on the burden, severity, and spread of ILI among AI/AN people. In addition, we wanted the system to be resource sparing, rapidly scalable, and flexible. On April 28, 2009, shortly after the detection of the first H1N1pdm09 case in California on April 15, DEDP and OIT staff started electronically searching local databases and developing surveillance software. By May 12, data from the surveillance system began arriving at DEDP/IHS.

Objective

To describe the characteristics and utility of this new EHR-based surveillance system and present an evaluation of its ILI surveillance component.

Materials and methods

Surveillance system design and function

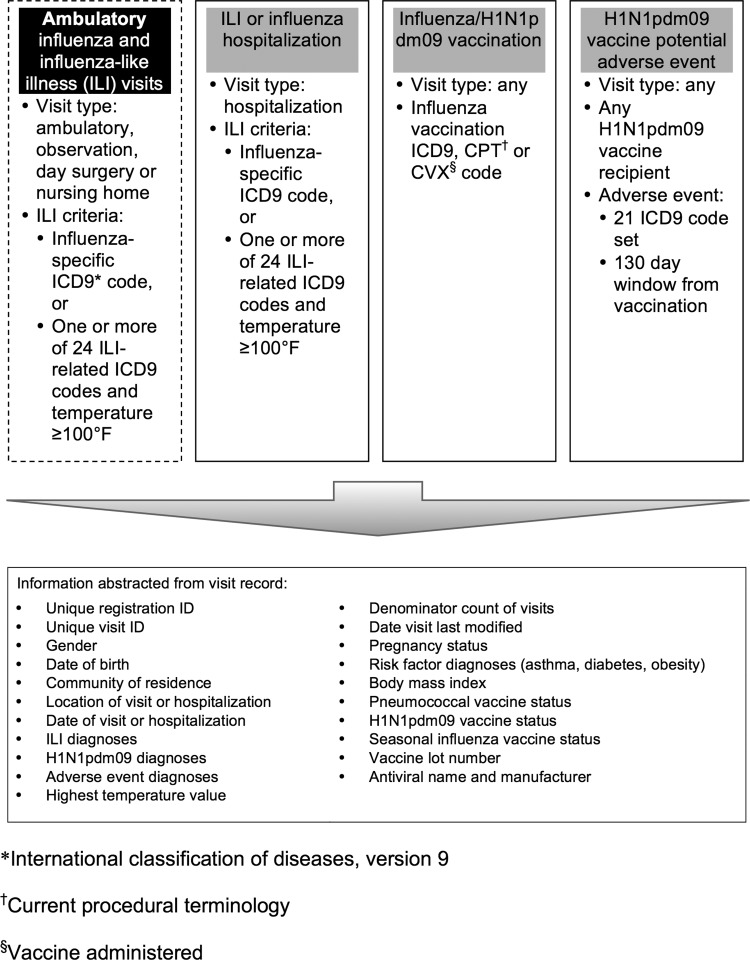

IHS designed IIAS to monitor ILI and influenza ambulatory visits and hospitalizations, seasonal and H1N1pdm09 influenza vaccine administration, potential adverse events related to seasonal and H1N1pdm09 influenza vaccines, and possible risk factors for severe influenza disease (including asthma, pregnancy, diabetes mellitus, and body mass index). Denominator data consist of total ambulatory visits and hospitalizations. The surveillance strategy uses algorithms based on International Classification of Diseases, Ninth Revision (ICD-9) codes, Current Procedural Terminology codes, and routinely collected clinical data. Automated surveillance software searches multi-facility health information databases daily, looking back 90 days. The system extracts demographic, diagnostic, risk factor, and vaccine information from visits meeting the search criteria (figure 1). Electronic visit information travels securely each day to DEDP, where automated statistical analyses create weekly reports containing daily tabulations of ILI visits, hospitalizations, and influenza vaccine administration counts, plus plots of weekly ILI visit proportions and ILI hospitalizations. Facilities have access to facility-specific reports, and aggregated regional and national reports are available publicly on the IHS web portal (http://www.ihs.gov/flu/).
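The weekly ILI visit proportion described above can be illustrated with a short sketch. This is not the IIAS software: the function names, the tuple layout of a visit, and the assumption that reporting weeks run Sunday through Saturday are illustrative choices, and only the 90-day lookback comes from the text.

```python
from collections import Counter
from datetime import date, timedelta

LOOKBACK_DAYS = 90  # retrospective search window stated in the text


def in_lookback(visit_date: date, run_date: date, days: int = LOOKBACK_DAYS) -> bool:
    """True if the visit falls within the `days` preceding (or on) the run date."""
    return timedelta(0) <= run_date - visit_date <= timedelta(days=days)


def week_start(d: date) -> date:
    """Sunday that begins the week containing d (assumes Sunday-Saturday weeks)."""
    # date.weekday() returns Monday=0 ... Sunday=6
    return d - timedelta(days=(d.weekday() + 1) % 7)


def weekly_ili_proportion(visits, run_date: date) -> dict:
    """visits: iterable of (visit_date, is_ili) pairs.

    Returns {week_start_date: percent of visits flagged as ILI}, using all
    visits in the lookback window as the denominator.
    """
    total, ili = Counter(), Counter()
    for visit_date, is_ili in visits:
        if not in_lookback(visit_date, run_date):
            continue  # outside the 90-day search window
        wk = week_start(visit_date)
        total[wk] += 1
        if is_ili:
            ili[wk] += 1
    return {wk: 100.0 * ili[wk] / total[wk] for wk in sorted(total)}
```

Because each nightly run re-reads the full lookback window, a visit whose codes or temperature are entered late is still picked up by a later run, which is one plausible reason for searching retrospectively rather than only the current day.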

Figure 1.

Search logic and data collection schema for the four modules of the Indian Health Service Influenza Awareness System.

The system identifies ILI visits using a subset of the ICD-9 codes used by a previously developed syndromic surveillance system (ESSENCE)8 and additional clinical data not available to ESSENCE, such as measured temperature. Our algorithm was developed by pilot testing different combinations of ICD-9 codes and measured patient temperatures at an IHS site in the southwestern USA, and then performing confirmatory algorithm testing in Alaska. The IIAS ICD-9 codes primarily represent acute respiratory tract infections and viral disease symptoms (24 codes), and influenza-specific illnesses (3 codes, table 1).

Table 1.

Frequency of Indian Health Service (IHS) Influenza Awareness System (IIAS) influenza-like illness algorithm ICD-9 codes found by chart review and electronic surveillance at two Alaska hospitals, September 2009

| ICD-9 code | Description | Chart review (N=144), n (%) | IIAS (N=196), n (%) | False positives (N=53), n |
|---|---|---|---|---|
| Influenza-specific codes (N=3) | | | | |
| 487.1 | Influenza with other respiratory manifestations | 24 (21.6) | 60 (38.5) | 36 |
| 487.0 | Influenza with pneumonia | 0 | 0 | 0 |
| 487.8 | Influenza with other manifestations | 0 | 0 | 0 |
| Influenza-like illness codes (N=24) | | | | |
| 786.2 | Cough | 32 (28.8) | 32 (20.5) | 0 |
| 780.60 | Fever, unspecified | 27 (24.3) | 36 (23.1) | 10 |
| 079.99 | Unspecified viral infection | 22 (19.8) | 24 (15.4) | 2 |
| 486 | Pneumonia, organism unspecified | 11 (9.9) | 13 (8.3) | 2 |
| 465.9 | Acute laryngopharyngitis, unspecified site | 9 (8.1) | 9 (5.8) | 0 |
| 382.9 | Unspecified otitis media | 9 (8.1) | 11 (7.1) | 2 |
| 462 | Acute pharyngitis | 4 (3.6) | 4 (2.6) | 0 |
| 466.19 | Acute bronchiolitis due to other infectious organisms | 2 (1.8) | 3 (1.9) | 1 |
| 490 | Bronchitis, not specified as acute or chronic | 2 (1.8) | 2 (1.3) | 0 |
| 460 | Acute nasopharyngitis (common cold) | 1 (0.9) | 1 (0.6) | 0 |
| 466.0 | Acute bronchitis | 1 (0.9) | 1 (0.6) | 0 |
| 382.00 | Acute suppurative otitis media without spontaneous rupture of ear drum | 0 | 0 | 0 |
| 461.8 | Other acute sinusitis | 0 | 0 | 0 |
| 461.9 | Acute sinusitis, unspecified | 0 | 0 | 0 |
| 463 | Acute tonsillitis | 0 | 0 | 0 |
| 464.00 | Acute laryngitis without mention of obstruction | 0 | 0 | 0 |
| 464.10 | Acute tracheitis without mention of obstruction | 0 | 0 | 0 |
| 464.20 | Acute laryngotracheitis without mention of obstruction | 0 | 0 | 0 |
| 465.0 | Acute laryngopharyngitis | 0 | 0 | 0 |
| 465.8 | Acute laryngopharyngitis, other multiple sites | 0 | 0 | 0 |
| 478.9 | Other and unspecified diseases of upper respiratory tract | 0 | 0 | 0 |
| 480.9 | Viral pneumonia, unspecified | 0 | 0 | 0 |
| 485 | Bronchopneumonia, organism unspecified | 0 | 0 | 0 |
| 780.6 | Fever and other physiologic disturbances of temperature regulation | 0 | 0 | 0 |

The system defines ILI as a patient visit to a participating IHS facility after March 20, 2009 that contained either an influenza-specific ICD-9 code or one or more of 24 ILI-related ICD-9 codes plus a documented temperature ≥100°F (figure 1).
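As a minimal sketch of this case definition (not the IIAS source code), the rule can be written as a single predicate. The code sets are taken from table 1; the `Visit` record, its field names, and the function name are hypothetical, and the sketch assumes temperatures are stored in degrees Fahrenheit.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Set

# Influenza-specific ICD-9 codes (table 1): sufficient on their own.
FLU_CODES = {"487.0", "487.1", "487.8"}

# The 24 ILI-related ICD-9 codes (table 1): require a documented fever as well.
ILI_CODES = {
    "786.2", "780.60", "079.99", "486", "465.9", "382.9", "462", "466.19",
    "490", "460", "466.0", "382.00", "461.8", "461.9", "463", "464.00",
    "464.10", "464.20", "465.0", "465.8", "478.9", "480.9", "485", "780.6",
}

FEVER_THRESHOLD_F = 100.0             # documented temperature >= 100 degrees F
SURVEILLANCE_START = date(2009, 3, 20)  # visits must fall after this date


@dataclass
class Visit:
    """Hypothetical visit record; field names are illustrative only."""
    visit_date: date
    icd9_codes: Set[str]
    max_temp_f: Optional[float]  # None when no temperature was recorded


def is_ili_visit(visit: Visit) -> bool:
    """Apply the 'influenza code OR (ILI code AND fever)' rule."""
    if visit.visit_date <= SURVEILLANCE_START:
        return False
    if visit.icd9_codes & FLU_CODES:
        return True  # influenza-specific code needs no temperature
    has_fever = visit.max_temp_f is not None and visit.max_temp_f >= FEVER_THRESHOLD_F
    return bool(visit.icd9_codes & ILI_CODES) and has_fever
```

Under this rule a visit coded 487.1 with no recorded temperature counts as ILI, whereas a visit coded 786.2 (cough) counts only when a temperature of at least 100°F is documented.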

Surveillance evaluation

Counts of participating facilities and geocoding of their locations (Google Earth 5, Google, Mountain View, California, USA and Manifold V.8.0, Manifold, San Mateo, California, USA) describe the extent of surveillance. We evaluated the system's timeliness by calculating the days between an ambulatory ILI visit and that visit's detection by IIAS using date fields in the IIAS export datasets, limiting our evaluation to facilities reporting at least weekly during weeks 41–51 (October 17 to December 26, 2009), which included the peak of reported ILI activity. Additionally, we restricted the analysis to a window of 30 days from the visit date because of outlier time values that skewed the distribution of visit detection times. To validate our case definition and determine the sensitivity and specificity of the system to detect ILI visits, we reviewed ambulatory patient charts at one urban and one rural Alaska hospital. At each site we chose 2 days in September 2009, the peak month of H1N1pdm09 activity in Alaska, to compare ILI chart documentation to the data collected by the surveillance system from the same visits. We applied the ILINet ILI case definition as our ‘gold standard’ during medical record review (temperature ≥100°F and either sore throat or cough in the absence of a known cause other than influenza).7 To evaluate the summary surveillance data produced by the system, we compared IIAS and ILINet data from IHS facilities that reported independently to both surveillance systems for more than 50% of the analysis weeks (weeks 14–52; April 4, 2009 to January 2, 2010). We correlated the weekly ILI visit percentages from the two systems using Spearman's correlation statistic and analyzed the cumulative proportion of ILI visits, dates of peak ILI activity, and the shape of the epidemic curve by facility. We used Stata V.10 for statistical analyses.
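The timeliness calculation can be sketched as follows. The analysis itself was run in Stata; this Python version is only an illustration, and the record layout and function names are assumptions. The 30-day restriction mirrors the window described above.

```python
from datetime import date
from statistics import mean, median, mode

MAX_DELAY_DAYS = 30  # analysis window from the visit date, as stated in the text


def detection_delays(records, max_days: int = MAX_DELAY_DAYS) -> list:
    """records: iterable of (visit_date, detection_date) pairs.

    Returns the delay in days for each visit, dropping values outside
    the 0..max_days window so that outliers do not skew the summary.
    """
    delays = [(detected - visited).days for visited, detected in records]
    return [d for d in delays if 0 <= d <= max_days]


def summarize(delays: list) -> dict:
    """Mean, median, and mode of the retained delays."""
    return {
        "n": len(delays),
        "mean": mean(delays),
        "median": median(delays),
        "mode": mode(delays),
    }
```

Reporting the median and mode alongside the mean is useful here because detection delays are right-skewed: most visits are detected quickly, while a few late entries pull the mean upward.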

Results

System function

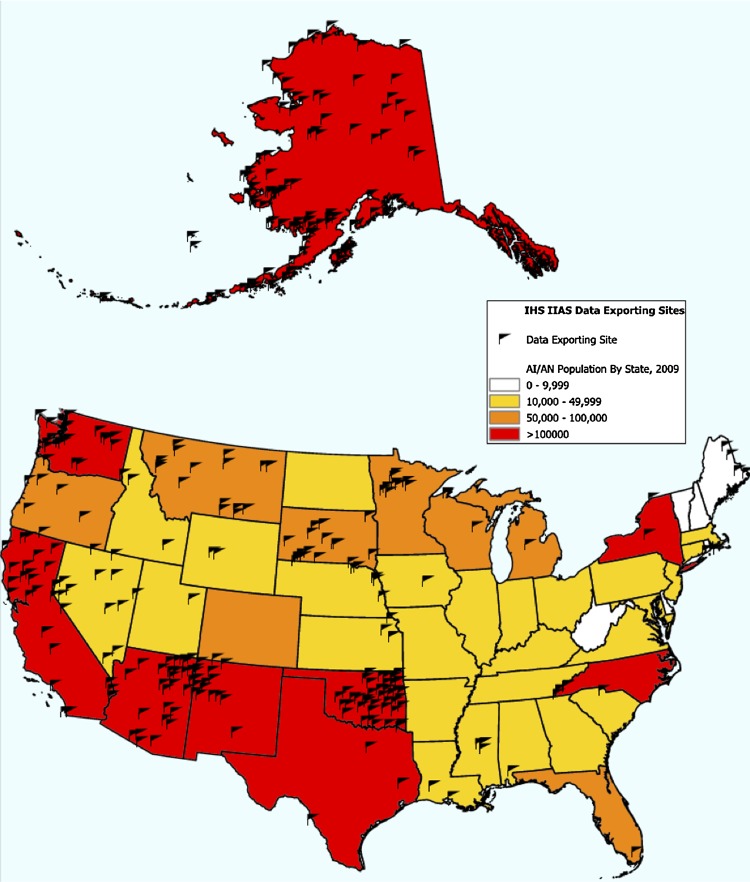

The number of facility databases that reported data to IIAS varied over time due to facility uptake and the release of multiple software updates. As of November 1, 2010, there were 269 IHS electronic health information databases, many of which included data from multiple clinical sites. Following the release of IIAS V.3 on October 7, 2010, an average of 60.3% (162/269) of IHS databases participated regularly in surveillance over the next 6 months. The locations of the 343 health facilities that ever participated in the surveillance system from May 1, 2009 to March 29, 2010 are shown in figure 2.

Figure 2.

Location of 343 health facilities that ever reported to the Indian Health Service Influenza Awareness System between May 1, 2009 and March 29, 2010 and the American Indian/Alaska Native Population by State.14

The surveillance ILI algorithm reviewed the database and transmitted pertinent visit information to DEDP daily or, for larger databases, as soon as the surveillance software finished searching the database, which took 1 to 3 days. For regularly reporting facilities during weeks 41–51 (October 17 to December 26, 2009), mean times from an ILI visit to IIAS detection and data transmission ranged from 8.2 to 12.1 days and median times from 6 to 12 days; the mode was 1 day for all facilities.

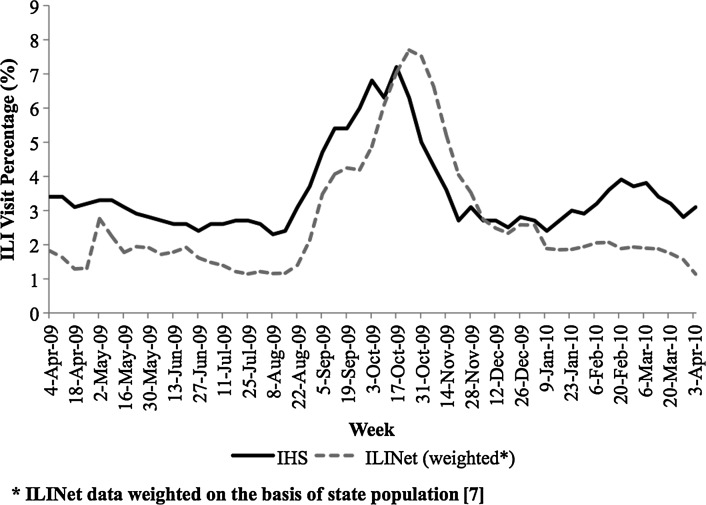

For the 2009–2010 influenza season, the proportion of ILI visits increased sharply in week 33 (beginning August 16, 2009) and peaked at 7.2% in week 41 (beginning October 11, 2009), as shown in figure 3. National ILINet data also showed an upswing in ILI visits, starting in week 34 (beginning August 23, 2009) and peaking at 7.7% in week 42 (beginning October 18, 2009), 1 week after the IIAS peak. Additionally, IIAS surveillance revealed a second peak of ILI visits in week 7 (beginning February 14, 2010) that ILINet surveillance did not show (figure 3).

Figure 3.

National influenza-like illness (ILI) activity reported by the Indian Health Service (IHS) Influenza Awareness System and the ILI Surveillance Network from week 13 of 2009 into 2010.

System validation

We reviewed 2375 ambulatory visits at two Alaska IHS hospitals and found 111 visits (4.7%) that met the ILINet ILI case definition. In the same sample IIAS detected 156 ILI visits (6.6%). The system had a sensitivity of 96.4%, a specificity of 97.8%, a positive predictive value of 68.6%, and a negative predictive value of 99.8% for detecting medical record-confirmed ILI visits. The 111 ILI visits found by medical record review contained 144 instances of ICD-9 codes that were part of the system's ILI detection algorithm. The most commonly found ILI codes (ICD-9 code, n, %) were cough (786.2, 32, 22.2%), fever (780.60, 27, 18.8%), influenza (487.1, 24, 16.7%), and unspecified viral infection (079.99, 22, 15.3%). Fifteen of the system's ILI surveillance ICD-9 codes did not appear in any of the medical record-confirmed ILI visits: 13 from the 24-code ILI set and two of the influenza-specific codes (table 1).

The system incorrectly identified 49 visits as having ILI when the patients did not meet the ‘gold standard’ definition on chart review (false positives). Thirty-three of these visits (67%) had influenza-specific ICD-9 codes but lacked chart documentation of a temperature ≥100°F. Sixteen of these visits (33%) had at least one ILI-related ICD-9 code and documented temperature ≥100°F but lacked chart documentation of cough or sore throat. The system incorrectly missed four visits that met the ‘gold standard’ for ILI (false negatives). Two of these visits (50%) had ICD-9 codes that were not part of the 24 ILI code set: 493.9 (asthma, unspecified) and 564 (constipation). Both had a temperature ≥100°F and either cough or sore throat that had been recorded locally but had not been transcribed into the electronic record. The other two false-negative ILI visits (50%) had ICD-9 codes contained in the 24-code set, but had missing or incorrect temperature information in the electronic record.
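The reported validity measures can be reproduced from the counts given in the text. The two-by-two cell values below are derived rather than quoted: true positives are the 111 chart-confirmed ILI visits minus the 4 false negatives (107), and true negatives are the remaining visits (2375 − 107 − 49 − 4 = 2215). The function name is illustrative.

```python
def validity_measures(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard screening-test measures from a 2x2 table, as percentages."""
    return {
        "sensitivity": 100.0 * tp / (tp + fn),  # detected among true ILI visits
        "specificity": 100.0 * tn / (tn + fp),  # cleared among non-ILI visits
        "ppv": 100.0 * tp / (tp + fp),          # true ILI among system detections
        "npv": 100.0 * tn / (tn + fn),          # true non-ILI among system negatives
    }


# Counts derived from the text (see lead-in for how each was obtained).
TOTAL_VISITS = 2375
CHART_ILI = 111
FALSE_POSITIVES = 49
FALSE_NEGATIVES = 4

TRUE_POSITIVES = CHART_ILI - FALSE_NEGATIVES  # 107
TRUE_NEGATIVES = TOTAL_VISITS - TRUE_POSITIVES - FALSE_POSITIVES - FALSE_NEGATIVES  # 2215

measures = validity_measures(TRUE_POSITIVES, FALSE_POSITIVES, FALSE_NEGATIVES, TRUE_NEGATIVES)
rounded = {name: round(value, 1) for name, value in measures.items()}
# rounded == {"sensitivity": 96.4, "specificity": 97.8, "ppv": 68.6, "npv": 99.8}
```

The derived cells are internally consistent with the rest of the paragraph: 107 true positives plus 49 false positives gives the 156 ILI visits detected by IIAS.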

In an additional comparison, we identified seven IHS facilities that reported regularly (>50% of weeks) to both IIAS and ILINet. These seven facilities were located in five widely dispersed states (Alabama, Arizona, New Mexico, Oklahoma, and Wyoming). The facilities reported a mean of 31–1718 weekly ambulatory visits to the systems. Spearman rank correlation of the weekly ILI visit percentage between IIAS and ILINet showed statistical significance (p<0.05) at five facilities and for the group of seven facilities (r=0.66, p<0.001) (table 2). The reporting week at which peak ILI activity (highest proportion of visits with ILI) occurred was offset by not more than 1 week at the five facilities with mean weekly visits >100 (table 2).
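For readers unfamiliar with the statistic, Spearman's correlation is the Pearson correlation of the ranks of two series, with tied values sharing their average rank. The sketch below is a plain-Python illustration, not the Stata procedure used for the analysis; the function names are assumptions, and no weekly data from the study are reproduced.

```python
from math import sqrt


def average_ranks(values) -> list:
    """1-based ranks; tied values receive the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the block of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y) -> float:
    """Spearman rank correlation between two weekly ILI percentage series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("series must have equal length of at least 2")
    return pearson(average_ranks(x), average_ranks(y))
```

A rank-based statistic suits this comparison because the two systems report different absolute ILI percentages at the same facility; Spearman's r asks only whether the weeks rise and fall together.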

Table 2.

Correlation of weekly ILI visit percentage between the Indian Health Service Influenza Awareness System and ILINet at seven Indian Health Service facilities that reported to both systems between April 4, 2009 and January 2, 2010

| Facility (state) | Mean weekly visits (IHS) | ILINet peak (%) | ILINet peak week | IHS peak (%) | IHS peak week | Peak offset (weeks)* | Spearman correlation (r)† | p Value |
|---|---|---|---|---|---|---|---|---|
| AL | 31 | 3.6 | Jan 2 | 18.2 | Nov 21 | 6 | 0.13 | 0.52 |
| AZ | 1352 | 10.6 | Sep 12 | 12.8 | Sep 12 | 0 | 0.77 | <0.001 |
| NM1 | 235 | 16.6 | Oct 17 | 6.2 | Oct 10 | 1 | 0.52 | 0.001 |
| NM2 | 305 | 19.4 | Oct 10 | 25.6 | Oct 3 | 1 | 0.86 | <0.001 |
| OK1 | 116 | 8.8 | Oct 17 | 9.1 | Oct 10 | 1 | 0.42 | 0.02 |
| OK2 | 98 | 33.3 | Oct 10 | 6.7 | Sep 12 | 4 | 0.37 | 0.03 |
| WY | 248 | 12.3 | Oct 24 | 13.2 | Oct 17 | 1 | 0.38 | 0.08 |
| All | 2723 | 9.1 | Oct 17 | 8.8 | Oct 10 | 1 | 0.66 | <0.001 |

*Difference, in weeks, of peak ILI percentage visit between the two reporting systems.

†The higher the correlation coefficient, the more similar the two systems in reporting.

IHS, Indian Health Service; ILI, influenza-like illness; ILINet, Influenza-like Illness Surveillance Network.
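The peak offset column in table 2 is the absolute difference, in weeks, between the two peak-week dates. The sketch below recomputes it from the table. The year of each date is not printed in the table, so the code assumes each date falls inside the stated analysis window (April 4, 2009 to January 2, 2010); the helper names are illustrative.

```python
from datetime import date

WINDOW_START = date(2009, 4, 4)
WINDOW_END = date(2010, 1, 2)

MONTHS = {"Jan": 1, "Feb": 2, "Mar": 3, "Apr": 4, "May": 5, "Jun": 6,
          "Jul": 7, "Aug": 8, "Sep": 9, "Oct": 10, "Nov": 11, "Dec": 12}

# (ILINet peak week, IHS peak week) as printed in table 2.
PEAK_WEEKS = {
    "AL": ("Jan 2", "Nov 21"),
    "AZ": ("Sep 12", "Sep 12"),
    "NM1": ("Oct 17", "Oct 10"),
    "NM2": ("Oct 10", "Oct 3"),
    "OK1": ("Oct 17", "Oct 10"),
    "OK2": ("Oct 10", "Sep 12"),
    "WY": ("Oct 24", "Oct 17"),
    "All": ("Oct 17", "Oct 10"),
}


def resolve(label: str) -> date:
    """Turn 'Oct 17' into a full date by picking the year inside the window."""
    month_name, day = label.split()
    for year in (WINDOW_START.year, WINDOW_END.year):
        candidate = date(year, MONTHS[month_name], int(day))
        if WINDOW_START <= candidate <= WINDOW_END:
            return candidate
    raise ValueError(f"{label!r} is outside the analysis window")


def peak_offset_weeks(ilinet_label: str, ihs_label: str) -> int:
    """Absolute difference between the two peak weeks, in whole weeks."""
    return abs((resolve(ilinet_label) - resolve(ihs_label)).days) // 7


offsets = {site: peak_offset_weeks(*weeks) for site, weeks in PEAK_WEEKS.items()}
```

Run against the table, this reproduces the printed offsets, including the 6-week gap at the Alabama facility, where the ILINet peak falls in the first reporting week of 2010.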

Discussion

The 2009 influenza A (H1N1) pandemic occurred during the early phase of EHR adoption in the USA, presenting an opportunity to meaningfully use electronic health data for nationwide surveillance. By leveraging previous work with other ILI surveillance systems such as ILINet and ESSENCE, this new surveillance system, IIAS, used EHR data and an innovative analytic process to accurately monitor ILI at IHS facilities. Our evaluation of IIAS provides further evidence of the utility of EHRs for public health disease surveillance. The inclusion of measured temperature in our ILI definition shows that electronic uploading of additional data, such as physical findings or laboratory results, as opposed to only diagnosis data, is feasible and may be an important feature of future electronic surveillance systems.

IIAS met its objectives: accuracy and timeliness in ILI detection, substantial geographic coverage of the AI/AN population, rapid scale-up, minimal resource consumption, and system flexibility. The system had high sensitivity and specificity for identifying ambulatory ILI visits, and its broad geographic coverage made it an integral part of the national IHS H1N1pdm09 response. The system enabled IHS facilities to report to their constituent tribes the magnitude of the H1N1pdm09 influenza burden in their communities; for many, IIAS was the only source of timely ILI data.9 In addition, data were available via the IHS web portal (http://www.ihs.gov/flu) and were used by state health departments and a CDC-led international group tracking the H1N1pdm09 pandemic in indigenous people worldwide.

EHRs make possible the rapid deployment of new surveillance systems without the need to dedicate local personnel to data collection. The presence of national IHS electronic health information facilitated the rapid design and implementation of this EHR-based system during the pandemic. Resources necessary to operate the system at IHS clinical facilities were minimal, in contrast to the relatively personnel-intense nature of surveillance systems that rely on individual reporters, such as the sentinel providers used in ILINet.

EHR-based disease surveillance is adaptable to changing needs. Our system can be modified centrally and software patches distributed nationwide. Revisions to the system during the pandemic expanded data collection from ILI to include influenza hospitalizations, vaccine administration, and potential vaccine adverse events. These modifications enabled the monitoring of vaccine safety with FDA and the study of risk factors for severe influenza disease with CDC.10 11 The system continues to operate, so as analysis, evaluation, and improvement of it proceed, IHS can modify and update the ILI case definition as needed.

Timely availability of data is another hallmark of EHR-based surveillance systems. Our system reviewed and exported health data every 1–3 days, depending on facility patient volume. The automatic data uploads used by IIAS meant that results were available very quickly; DEDP posted weekly surveillance summaries 3 days after the close of data collection for a given influenza week. A recent evaluation of ESSENCE, an electronic syndromic surveillance system designed to detect outbreaks of various disease syndromes, found a 1–3 day delay from patient visit to availability of surveillance data during the H1N1pdm09 pandemic. This delay, as well as inadequate sensitivity and poor positive predictive value, was found to limit the ability of ESSENCE to meet its primary objective of detecting outbreaks.12 Our system's primary goal was not outbreak detection but to monitor geographic and temporal trends in ILI disease burden in AI/AN people. The inclusion of both measured temperature and an ‘or’ statement in the ILI definition used in IIAS (either an influenza-specific ICD-9 code or one or more of 24 ILI-related ICD-9 codes plus a documented temperature ≥100°F) (figure 1) resulted in higher sensitivity (96.4% vs 71.4%) and positive predictive value (68.6% vs 31.8%) than ESSENCE. Finally, IIAS reported data more rapidly than ILINet (3 days vs 1 week following completion of data collection), providing information in a timely manner for public health decision-making.

Improved data validity and reliability are potential advantages of EHR-based surveillance. Our system accurately detected ILI visits when measured against the ILINet case definition. We used the ILINet case definition because both systems conduct syndromic ILI surveillance, and ILINet has functioned nationally for years with demonstrated correlation to laboratory-based influenza surveillance.13 Because ILINet uses a different approach from IIAS, we found differences in ILI reporting between the two systems. Differences in case definitions, the type of visits being observed (all ambulatory visits in our system versus individual provider, department, or facility visits in ILINet), and variability in the method of ILINet surveillance all contributed to the differences in ILI visits reported by the same facility to the two systems. ILINet sites that used systematic and comprehensive chart review (Charlotte Briggs, written personal communication, 2012) showed a high level of concordance with our electronic surveillance system, suggesting that the IHS EHR-based system is more complete and accurate than ILINet sites using less systematic methods. Our system offers the advantage of reporting uniformity across sites because every visit that meets ILI criteria is counted. Supplementing ILINet data with EHR-based surveillance system data that may better target at-risk populations can help to inform local public health action.

Comparison of national IIAS and ILINet data (figure 3) revealed interesting differences that might be explored in subsequent system analysis. First, IIAS data did not show the early May 2009 increase in ILI that ILINet data did. This difference may reflect different healthcare-seeking behavior among IHS patients and the patients monitored in ILINet, perhaps because IHS patients can seek care without regard to cost. Second, from week 31 (August 8, 2009) until the pandemic peak in mid-October 2009, and during its subsequent decline, the IIAS results led the ILINet results by approximately 1 to 2 weeks. Especially given the disparate impact of influenza-related disease on indigenous people, the apparent early detection of ILI activity by our system is worth considering during future outbreaks. Lastly, IIAS surveillance revealed a second peak of ILI visits in week 7 (February 14, 2010) that ILINet surveillance did not. We speculate that this peak may also reflect different healthcare-seeking behavior by IHS patients. Continued analysis and comparison of the results of the different ILI surveillance systems will remain important, especially as the USA increasingly implements the EHR.

The design, deployment, and function of IIAS faced several challenges. Within IHS several EHR platforms exist, requiring the adaptation of IIAS to these various software systems. IIAS deployment relied on local downloading and installation of software, which was the limiting factor in the system coverage. Its function relied on up-to-date and accurate health visit information (particularly ICD-9 codes) in electronic health datasets. The two false negative ILI visits with inaccurately transcribed temperature information underscore the reliance of the surveillance system on accurate data entry.

We recognize that our evaluation of IIAS had limitations. First, we did not have diagnostic influenza laboratory data to assess the predictive value of ILI for confirmed influenza disease. Second, the fact that IHS is a unique system in the USA that provides both clinical and public health services to a particular population may limit our findings’ generalizability to other clinical and public health systems.

Conclusion

Within 4 weeks of the first recognized US H1N1pdm09 cases, IHS created and implemented a nationwide EHR-based influenza surveillance system, which accurately monitored ILI in a vulnerable population. The use of electronic data from an existing health information system facilitated the rapid and widespread deployment of the new surveillance system, and its results were useful on both a national and local level. Our successful experience during the pandemic H1N1pdm09 influenza season demonstrates the potential capabilities for public health surveillance in the coming age of the EHR.

Acknowledgments

We thank Lenee Blanton of the US Centers for Disease Control and Prevention for ILINet data.

Footnotes

Contributors: JWK: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. JTR: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. JEC: substantial contributions to conception and design, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. LJL: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. AVG: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. SK: substantial contributions to acquisition of data; drafting the article and revising it critically for important intellectual content. MGB: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. AS: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. NLA: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. TC: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. 
RTB: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. TWH: substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; drafting the article and revising it critically for important intellectual content. In addition, all authors approve of the final version to be published.

Funding: All work was performed by the authors while they were employees of the US Department of Health and Human Services. No separate funding source was used.

Competing interests: None.

Ethics approval: Institutional Review Boards at both the US Centers for Disease Control and Prevention and the US Indian Health Service gave their approval of the publication of this manuscript.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Centers for Medicare & Medicaid Services. Medicare and Medicaid programs; electronic health record incentive program; final rule. Washington, DC: Department of Health and Human Services, 2010.

- 2. Better Health, Better Care, Lower Costs: Reforming Health Care Delivery. http://www.healthcare.gov/news/factsheets/2011/07/deliverysystem07272011a.html# (accessed 31 Aug 2012).

- 3. Lipton-Dibner W. Getting Doctors and Staff On Board With EHR. Medscape Business of Medicine 2009. http://www.medscape.com/viewarticle/707993 (accessed 31 Aug 2012).

- 4. Centers for Disease Control and Prevention. Deaths related to 2009 pandemic influenza A (H1N1) among American Indian/Alaska natives—12 states, 2009. MMWR Morb Mortal Wkly Rep 2009;58:1341–4.

- 5. Groom AV, Jim C, Laroque M, et al. Pandemic influenza preparedness and vulnerable populations in tribal communities. Am J Public Health 2009;99:S271–8.

- 6. Indian Health Service. Trends in Indian Health, 2002–2003 Edition. Washington, DC: US Department of Health and Human Services, 2009.

- 7. Centers for Disease Control and Prevention. Overview of Influenza Surveillance in the United States. 2010. http://www.cdc.gov/flu/weekly/fluactivitysurv.htm (accessed 31 Aug 2012).

- 8. Marsden-Haug N, Foster VB, Gould PL, et al. Code-based syndromic surveillance for influenza-like illness by International Classification of Diseases, ninth revision. Emerg Infect Dis 2007;13:207–16.

- 9. Wenger JD, Castrodale LJ, Bruden DL, et al. 2009 Pandemic influenza A H1N1 in Alaska: temporal and geographic characteristics of spread and increased risk of hospitalization among Alaska Native and Asian/Pacific Islander people. Clin Infect Dis 2011;52(Suppl 1):S189–97.

- 10. Penman-Aguilar A, Tucker MJ, Groom AV, et al. Validation of algorithm to identify American Indian/Alaska Native pregnant women at risk from pandemic H1N1 influenza. Am J Obstet Gynecol 2011;204:S46–53.

- 11. Salmon DA, Akhtar A, Mergler M, et al. Monitoring immunization safety for the pandemic 2009–2010 H1N1 influenza vaccination program. Pediatrics 2011;127(Suppl 1):S78–86.

- 12. Centers for Disease Control and Prevention. Assessment of ESSENCE performance for influenza-like illness surveillance after an influenza outbreak—U.S. Air Force Academy, Colorado, 2009. MMWR Morb Mortal Wkly Rep 2011;60:406–9.

- 13. Buffington J, Chapman LE, Schmeltz LM, et al. Do family physicians make good sentinels for influenza? Arch Fam Med 1993;2:859–64.

- 14. U.S. Census Bureau, Population Division. Estimates of the Resident Population by Race and Hispanic Origin for the United States and States: July 1, 2009 (SC-EST2009–04). http://www.census.gov/popest/data/state/asrh/2009/index.html (accessed 31 Aug 2012).