Abstract

Individuals with autism spectrum disorder (ASD) often have difficulty with social-emotional cues. This study examined the neural, behavioral, and autonomic correlates of emotional face processing in adolescents with ASD and typical development (TD) using eye-tracking and event-related potentials (ERPs) across two different paradigms. Scanning of faces was similar across groups in the first task, but the second task found that face-sensitive ERPs varied with emotional expressions only in TD. Further, ASD showed enhanced neural responding to non-social stimuli. In TD only, attention to eyes during eye-tracking related to faster face-sensitive ERPs in a separate task; in ASD, a significant positive association was found between autonomic activity and attention to mouths. Overall, ASD showed an atypical pattern of emotional face processing, with reduced neural differentiation between emotions and a reduced relationship between gaze behavior and neural processing of faces.

Keywords: Autism spectrum disorder, Eye-tracking, Event-related potentials, Pupillometry, Emotional face processing

Introduction

Individuals with autism spectrum disorder (ASD) share a range of characteristics, including impairments in social and emotional reciprocity, communicative difficulties, and restricted or repetitive behaviors. Because adept face processing is an essential component of successful social functioning, particularly with respect to interpreting another individual’s emotional state, a growing body of research has focused on understanding emotional face processing difficulties in individuals with ASD. This work has identified atypicalities in the behavioral, neural, and autonomic processing of emotionally-expressive faces (e.g., Ashwin et al. 2006; Bal et al. 2010; Celani et al. 1999; Dawson et al. 2004; de Wit et al. 2008; Hall et al. 2003; Pelphrey et al. 2002; Rutherford and Towns 2008).

Some behavioral studies have found impaired emotion recognition in adults with ASD, including slower and less accurate identification of emotions (e.g., Celani et al. 1999; Ashwin et al. 2006; Baron-Cohen et al. 1997; Sawyer et al. 2012). Work using eye-tracking methods has examined scanning of emotional faces in children and adults with ASD and found that these individuals spend less time on core features (i.e., eyes, nose, and mouth) than their typically-developing peers (e.g., de Wit et al. 2008; Pelphrey et al. 2002). A recent study by Sawyer et al. (2012) measured emotion recognition performance and scanning patterns in individuals with Asperger’s Syndrome (AS) and found that deficits in emotion recognition cannot be fully explained by differences in face scanning: individuals with AS did not differ from controls in time spent on the eye region but were still impaired at recognizing emotions.

While behavioral measures can provide important information about impairments in face processing, recent research has also begun exploring the timing of neural activity associated with these atypicalities using event-related potentials (ERPs). ERPs provide a non-invasive method for assessing the temporal dynamics of the brain from infancy through adulthood (for a review, see Nelson and McCleery 2008), and extensive work has used this method to examine emotional face processing across development. Two early-occurring face-sensitive components have been studied: the P1 (a positive peak approximately 100 ms after stimulus onset) and the N170 (a negative peak approximately 170 ms after stimulus onset) (e.g., Bentin et al. 1996; Cassia et al. 2006; Hileman et al. 2011; Itier and Taylor 2002, 2004; Rossion et al. 1999). In typically-developing populations, differential ERP responses to emotional faces have been found in adults, as well as in infants as young as 7 months of age, with several studies pointing to larger-amplitude face-sensitive components for fearful expressions than for other emotions (e.g., Batty and Taylor 2003; Dawson et al. 2004; Leppanen et al. 2007; Nelson and de Haan 1996; Rossignol et al. 2005).

A growing number of studies with children and adults with ASD have used electrophysiological measures to examine neural responding to faces in general (e.g., Dawson et al. 2002; Hileman et al. 2011; McPartland et al. 2004, 2011; Webb et al. 2006, 2009, 2010; for a review, see Jeste and Nelson 2009), but few have examined ERPs in response to emotionally-salient face stimuli in this population, and the results of these studies have been mixed. Dawson et al. (2004) presented preschoolers with ASD with fearful and neutral expressions while recording ERPs and found that while typically-developing participants showed heightened responses to fearful faces, children with ASD showed no such difference. A more recent study, however, found minimal differences between ASD and typically-developing participants. Wong et al. (2008) presented emotional faces to 6- to 10-year-old children with ASD and a group of typically-developing children and found no group differences (and no differences between emotions) in the P1 and N170, though source localization analyses did reveal differential activity in the ASD group compared to the typically-developing group.

One reason for the discrepancies found in previous ERP studies may be the heterogeneity of face scanning patterns in individuals with ASD. For example, while studies using fMRI have pointed to atypical neural responding to faces in ASD (e.g., Critchley et al. 2000; Ogai et al. 2003; Pierce et al. 2001), more recent work by Dalton et al. (2005) examining scanning patterns alongside neural activation shows that these differences can be explained in part by differential visual attention to faces. However, the previously discussed work of Sawyer et al. (2012) shows that differences in visual scanning alone cannot account for the impairments in emotion recognition found in their study. Differences in emotion processing in individuals with ASD have also been posited to relate to atypical regulation of the sympathetic nervous system, which can lead to physiological manifestations of heightened arousal, such as increased pupil dilation, heart rate, and skin conductance, and decreased respiratory sinus arrhythmia (e.g., Anderson and Colombo 2009; Bal et al. 2010; Hirstein et al. 2001; Schoen et al. 2008; Vaughn Van Hecke et al. 2009).

The present study used one eye-tracking task and one ERP task in a group of adolescents with ASD and a group of age- and IQ-matched typically-developing (TD) adolescents with three aims: (1) examine scanning patterns and autonomic activation, as indexed by pupil diameter, to emotional faces using eye-tracking, (2) examine early posterior ERP responses (i.e., P1 and N170) to emotional face stimuli and non-social control stimuli (i.e., houses), and (3) examine how behavioral, autonomic, and electrophysiological measures of emotional face processing relate to one another across tasks. The present work had four hypotheses: (1) ASD will show larger pupil diameter, a measure of sympathetic arousal, in response to emotional faces as compared to TD (e.g., Bal et al. 2010; Vaughn Van Hecke et al. 2009; Anderson and Colombo 2009); (2) TD will show the greatest N170 face-sensitive ERP component in response to fearful faces (e.g., Batty and Taylor 2003; Leppanen et al. 2007; Rossignol et al. 2005); ASD will show no differentiation between emotional faces (e.g., Dawson et al. 2004); (3) TD and ASD will both show a significant relationship between behavioral responses to faces in an eye-tracking task (i.e., visual scanning patterns) and N170 face-sensitive ERP efficiency in an electrophysiological task (e.g., McPartland et al. 2004); (4) ASD will show a relationship between autonomic activation (i.e., pupil diameter) and visual scanning patterns (e.g., Dalton et al. 2005). Because results from past work are mixed with regard to group differences in visual scanning patterns to faces (e.g., Pelphrey et al. 2002 find differences; Sawyer et al. 2012 find similarities), analyses of visual scanning patterns were exploratory.

Methods

Participants

The final sample consisted of two groups of adolescent males ranging in age from 13 to 21 years: 18 individuals with a diagnosis of ASD and 20 typically-developing (TD) participants. Participants were included in the final analysis if they had sufficient data from either the eye-tracking or the ERP paradigm. ERP data from four participants (one ASD and three TD) were excluded due to experimenter error. After data editing, one additional typically-developing participant was excluded due to a high level of ERP noise. Eye-tracking data from two participants (one ASD and one TD) were lost due to experimenter error. The ASD and TD groups did not differ significantly in age (TD group: M = 17.9, SD = 2.5, range: 14.0–21.6; ASD group: M = 17.0, SD = 2.2, range: 13.6–21.1; t(36) = 1.25, p = .22).

Participants with autism were recruited from the community in the greater (Boston) area, including participants who had already participated in studies at (Children’s Hospital Boston, Boston University, or Massachusetts Institute of Technology). Typically-developing participants were recruited from the Participant Recruitment Database of the (Laboratories of Cognitive Neuroscience at Children’s Hospital Boston). Project approval was obtained from the Institutional Review Board of (Children’s Hospital Boston).

All subjects were asked for their medical history during a phone screen at the time of enrollment; for participants over 18, this was self-report, and for those under 18, it was through parental report. The phone screen asked about any past and current medical and psychiatric diagnoses and use of medications. All participants in the autism group had received a prior clinical diagnosis of autism spectrum disorder. Upon enrollment, diagnoses of ASD were confirmed using the Autism Diagnostic Observation Schedule (ADOS; Lord et al. 2002) and the Social Communication Questionnaire (SCQ; Berument et al. 1999). Participants included in the ASD group had an ADOS score >7 (M = 12.4, SD = 4.2, range: 8–23) and an SCQ score >15 (M = 22.3, SD = 3.7, range: 16–28).

Eleven of the 18 participants with ASD reported taking medications associated with symptoms of ADHD, depression, psychosis, or some combination thereof. Because removing these participants would have left too small a sample, the present study was unable to isolate the impact of medications on the results (a limitation that is discussed in the “Discussion” section). Despite the large number of participants with ASD reporting medication use, only one reported co-morbid diagnoses, namely Obsessive-Compulsive Disorder (OCD) and Attention Deficit Hyperactivity Disorder (ADHD). Preliminary analyses revealed that removing this participant did not influence the results of the eye-tracking and ERP analyses, and the participant was therefore included in all subsequent analyses.

TD subjects who reported no psychiatric disorders or related medications on the enrollment questionnaire were enrolled and subsequently administered the SCQ to rule out an ASD diagnosis. All TD individuals had SCQ scores <5 (M = 1.9, SD = 1.1, range: 1–4). For participants in both groups, IQ was tested using the Kaufman Brief Intelligence Test, Second Edition (KBIT-2; Kaufman and Kaufman 2004). The ASD and TD groups did not differ significantly in IQ (TD group: M = 116.5, SD = 14.8, range: 81–135; ASD group: M = 111.2, SD = 18.1, range: 63–139; t(35) = .97, p = .34).

ASD and TD subjects in the present study were also enrolled in a larger multi-site and multi-modal study funded by the (Boston Autism Consortium), with fMRI conducted at (Massachusetts Institute of Technology) and MEG conducted at (Massachusetts General Hospital). The ERP task used in the present work was designed in parallel with the tasks and stimuli used in the fMRI and MEG studies. The eye-tracking task was added separately, based on work in our lab with younger children with ASD.

Stimuli

Eye-Tracking

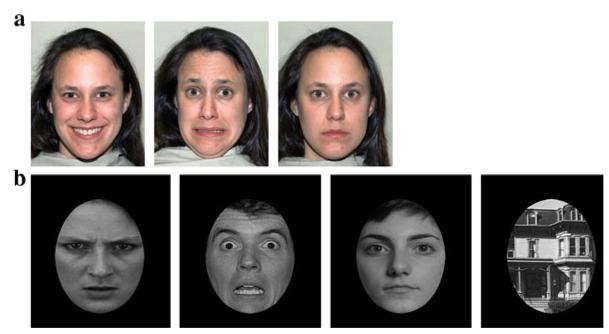

Stimuli for the eye-tracking task consisted of photographs of five female faces, each displaying happy, fearful, and neutral expressions (see Fig. 1a). These images were chosen from the NimStim library (Tottenham et al. 2009).

Fig. 1.

Examples of stimuli used in (a) the eye-tracking study and (b) the ERP experiment

Event-Related Potentials

Stimuli for the ERP task consisted of photographs of male and female faces conveying angry, fearful, or neutral expressions, as well as photographs of houses (see Fig. 1b). Faces were shown in a frontal orientation and cropped with a black ovoid mask to remove extraneous cues, such as hair, ears, and clothing. Houses were cropped in the same manner to standardize the stimuli. The face images were taken from the Pictures of Facial Affect (Ekman and Friesen 1976). Images of houses were taken from prior work by Kanwisher et al. (1997).

Apparatus

Eye-Tracking

Participants were seated on a chair in front of a 17″ TFT Tobii T60 monitor. Images were presented on the monitor using Clearview software running on a Dell laptop (Tobii Technology AB; www.tobii.com). During stimulus presentation, the Tobii monitor recorded gaze location and pupil diameter for both eyes based on the reflection of near-infrared light from the cornea and pupil. Gaze and pupil data were sampled at 60 Hz. Monitor specifications included an accuracy of 0.5° of visual angle and a tolerance of head movements within a range of 44 × 22 × 30 cm.

Event-Related Potentials

Electroencephalogram (EEG) was recorded continuously throughout the ERP task using a 128-channel HydroCel Geodesic Sensor Net, which was referenced on-line to vertex (Cz). The electrical signal was amplified with a .1–100 Hz bandpass filter, digitized at a 500 Hz sampling rate, and stored on a computer disk for further processing and analysis.

Procedure

For the eye-tracking and ERP tasks, participants were seated in a chair in front of a Tobii T60 monitor in an electrically- and sound-shielded testing room with dim lighting. The chair was positioned such that each participant’s eyes were approximately 60 cm from the monitor. All participants were run first through the eye-tracking task and then the ERP task.
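As a rough illustration of what the tracker's stated 0.5° accuracy means at the approximately 60 cm viewing distance, the standard visual-angle relation, size = 2 · d · tan(θ/2), can be evaluated directly. This is a back-of-the-envelope sketch rather than a calculation reported by the study; it assumes the eyes sit exactly 60 cm from the screen and ignores head movement.

```python
import math

def visual_angle_to_size(angle_deg: float, distance_cm: float) -> float:
    """On-screen extent (cm) subtended by a visual angle at a viewing distance."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

# Tobii T60 accuracy (0.5 deg) at the ~60 cm viewing distance described above
error_cm = visual_angle_to_size(0.5, 60.0)
print(f"{error_cm:.2f} cm")  # prints "0.52 cm"
```

Whether an error of about half a centimetre is small depends on the size of the eye and mouth AOIs, which are not reported here.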

Before beginning the eye-tracking experiment, participants completed a calibration procedure to ensure the eye-tracker was adequately tracking gaze. In this calibration procedure, participants were asked to follow a red dot as it appeared at 5 locations: each of the four corners of the monitor and the center of the screen. Following calibration, the Clearview program reported whether the eye-tracker had successfully detected gaze at the five locations. If calibration was successful, the experimental procedure began. If calibration was unsuccessful, the monitor and chair were adjusted and the calibration procedure was re-run until gaze was detected at all five locations.

Following calibration, participants were presented with 15 images of female faces. Each image in the stimulus set (which included five different female faces, each displaying happy, fearful, and neutral expressions) was presented once in a randomized order. Faces were shown for 5 s each, with a 2-s interstimulus interval in which the screen was blank white. Participants were instructed simply to scan the faces as they appeared on the screen.

Next, continuous EEG was recorded as participants viewed face and house stimuli. Each stimulus was shown on the screen for 500 ms, followed by a white screen for 100 ms. Each participant was randomly assigned to one of three versions of the test. Each version showed 46 angry faces, 46 fearful faces, 46 neutral faces, and 46 houses (184 photographs in total) in a semi-randomized order. Between blocks of randomly ordered stimuli, one photograph was shown twice in a row. To ensure that participants remained attentive throughout the test, they were instructed to press a button whenever the same photograph appeared twice in a row.
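A minimal sketch of how a trial sequence matching this description could be generated. The block size (23, giving eight blocks) and the rule of repeating the last photograph of each block are hypothetical, since the procedure does not report them; whether the repeats count toward the 46 trials per condition is also not stated, so here they are added on top. Stimulus identifiers are placeholder tuples, not the actual image files.

```python
import random

CONDITIONS = ("angry", "fearful", "neutral", "house")
TRIALS_PER_CONDITION = 46     # 46 x 4 = 184 photographs per test version
STIM_MS, BLANK_MS = 500, 100  # 500 ms image followed by a 100 ms blank screen

def build_trial_list(block_size=23, seed=None):
    """Return a list of (stimulus, is_repeat) tuples.

    HYPOTHETICAL: block_size and the choice of which photograph to repeat
    are assumptions; the study does not report them.
    """
    rng = random.Random(seed)
    # Placeholder stimulus identifiers: (condition, trial index)
    stimuli = [(cond, i) for cond in CONDITIONS for i in range(TRIALS_PER_CONDITION)]
    rng.shuffle(stimuli)
    trials = []
    for start in range(0, len(stimuli), block_size):
        block = stimuli[start:start + block_size]
        trials.extend((stim, False) for stim in block)
        # Show the block's last photograph again so it appears twice in a row;
        # this immediate repeat is the button-press target.
        trials.append((block[-1], True))
    return trials

trials = build_trial_list(seed=1)
n_targets = sum(is_repeat for _, is_repeat in trials)
duration_s = len(trials) * (STIM_MS + BLANK_MS) / 1000
print(len(trials), n_targets, duration_s)  # 192 8 115.2
```

Under these assumptions the test runs for just under two minutes; a different block size changes only the number of repeat targets.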

Data Analysis

Eye-Tracking

Gaze and pupil data were collected at a sampling rate of 60 Hz throughout the testing session. Before the eye-tracking data were exported from the Clearview program, specified areas of interest (AOIs) were drawn onto the stimuli, enabling the subsequent export and analysis of gaze data within these particular AOIs. For each face image, three AOIs were drawn: (1) overall face, (2) eye region, and (3) mouth region. The size of each AOI remained constant across the three emotions.

After the AOIs were drawn, each participant’s eye-tracking data were exported from Clearview. This export yielded a series of text files containing the x and y coordinates of gaze for each eye as well as pupil diameter throughout the test session. The Clearview software also identified the time samples in which gaze fell within one or more of the three specified AOIs. These exported data files were processed with a custom Python script (Python Programming Language; www.python.org), which summed gaze duration within each AOI and calculated average pupil diameter. Using gaze duration and pupil diameter from same-emotion trials, three sets of variables were calculated: (1) duration of gaze to the eyes and mouth (out of the 25 s total during which each emotion was presented); (2) proportion of time on the eyes and mouth, calculated relative to total time on the face; and (3) average pupil diameter while looking at the eyes, the mouth, and the face overall.
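The summary logic described above can be sketched as follows. The study's own script is not described beyond this paragraph, so this is a minimal sketch under assumptions: each exported sample is represented by a hypothetical `Sample` record holding the emotion shown, the set of AOIs containing the gaze point, and left/right pupil diameters; the two eyes are averaged; and samples with a missing pupil value are skipped. Because data were sampled at 60 Hz, each sample contributes 1/60 s of gaze time.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional

SAMPLE_RATE_HZ = 60  # Tobii T60 sampling rate reported above

@dataclass
class Sample:
    """One 60 Hz gaze sample (HYPOTHETICAL layout, not the Clearview export)."""
    emotion: str                    # "fearful", "happy", or "neutral"
    aois: frozenset                 # AOIs containing the gaze point, e.g. {"face", "eyes"}
    pupil_left_mm: Optional[float]
    pupil_right_mm: Optional[float]

def summarize(samples, emotion):
    """Per-AOI gaze duration, proportion of face time, and mean pupil diameter."""
    trials = [s for s in samples if s.emotion == emotion]
    face_s = sum(1 for s in trials if "face" in s.aois) / SAMPLE_RATE_HZ
    summary = {}
    for aoi in ("face", "eyes", "mouth"):
        hits = [s for s in trials if aoi in s.aois]
        duration_s = len(hits) / SAMPLE_RATE_HZ  # each sample lasts 1/60 s
        # Assumption: average the two eyes, skipping samples with a missing pupil.
        pupils = [
            (s.pupil_left_mm + s.pupil_right_mm) / 2
            for s in hits
            if s.pupil_left_mm is not None and s.pupil_right_mm is not None
        ]
        summary[aoi] = {
            "duration_s": duration_s,
            "proportion_of_face": duration_s / face_s if face_s else float("nan"),
            "mean_pupil_mm": mean(pupils) if pupils else float("nan"),
        }
    return summary

# Toy data: 1 s on the eyes, 0.5 s on the mouth, 0.5 s elsewhere on the face.
demo = (
    [Sample("happy", frozenset({"face", "eyes"}), 3.5, 3.5)] * 60
    + [Sample("happy", frozenset({"face", "mouth"}), 3.6, 3.6)] * 30
    + [Sample("happy", frozenset({"face"}), 3.4, None)] * 30
)
result = summarize(demo, "happy")
print(result["eyes"]["proportion_of_face"], result["mouth"]["duration_s"])  # 0.5 0.5
```

Computing durations as sample counts divided by the sampling rate, rather than summing 1/60 repeatedly, avoids accumulating floating-point error over long recordings.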

Event-Related Potentials

The data were analyzed offline with NetStation 4.2 EEG analysis software (Electrical Geodesics, Inc.). The continuous EEG was digitally filtered using a 30 Hz low-pass filter and then segmented into epochs ending 1,200 ms after stimulus onset, with a baseline period beginning 100 ms before stimulus onset. Each segment was then baseline-corrected to the mean amplitude of the 100 ms baseline period. Trials with eyeblinks (defined by a voltage exceeding ±140 μV) were excluded from further analysis. The remaining segments were visually examined by an experimenter to identify bad channels and other artifacts (e.g., eye movements, body movements, or high-frequency noise). A trial was excluded from further analysis if more than 10% of channels were marked bad for that trial.
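The baseline-correction and trial-rejection rules can be written out as a minimal sketch in plain Python. Assumptions: the 30 Hz low-pass filter and the manual inspection step are not reproduced (bad channels are passed in as a set, as if already marked by the experimenter), and the ±140 μV blink criterion is applied as a simple absolute-amplitude threshold on a caller-chosen list of eye channels. This is a simplification for illustration, not a reproduction of NetStation's artifact-detection tools. The segment is taken to run from 100 ms before to 1,200 ms after onset at the 500 Hz sampling rate.

```python
SFREQ_HZ = 500           # digitization rate reported above
BASELINE_MS = 100        # pre-stimulus baseline
POST_MS = 1200           # post-stimulus segment length
BLINK_UV = 140.0         # blink criterion: |voltage| > 140 microvolts
MAX_BAD_FRACTION = 0.10  # reject the trial if more than 10% of channels are bad

N_BASE = BASELINE_MS * SFREQ_HZ // 1000  # 50 samples
N_POST = POST_MS * SFREQ_HZ // 1000      # 600 samples

def baseline_correct(segment):
    """Subtract each channel's mean over the 100 ms pre-stimulus window.

    segment: list of channels, each a list of N_BASE + N_POST samples (uV).
    """
    corrected = []
    for channel in segment:
        base = sum(channel[:N_BASE]) / N_BASE
        corrected.append([v - base for v in channel])
    return corrected

def keep_trial(segment, eye_channels, bad_channels):
    """Apply the blink and bad-channel criteria to one baseline-corrected trial.

    eye_channels: indices used for blink detection (ASSUMPTION).
    bad_channels: set of channel indices marked bad on visual inspection.
    """
    if any(abs(v) > BLINK_UV for ch in eye_channels for v in segment[ch]):
        return False
    return len(bad_channels) / len(segment) <= MAX_BAD_FRACTION

# Toy trial: 128 channels sitting at 10 uV before onset and 15 uV after.
raw = [[10.0] * N_BASE + [15.0] * N_POST for _ in range(128)]
corrected = baseline_correct(raw)
print(corrected[0][0], corrected[0][-1])               # 0.0 5.0
print(keep_trial(corrected, [0, 1], set(range(12))))   # True  (12/128 = 9.4% bad)
print(keep_trial(corrected, [0, 1], set(range(13))))   # False (13/128 = 10.2% bad)
```

With a 128-channel net, the 10% rule means a trial survives with at most 12 bad channels.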

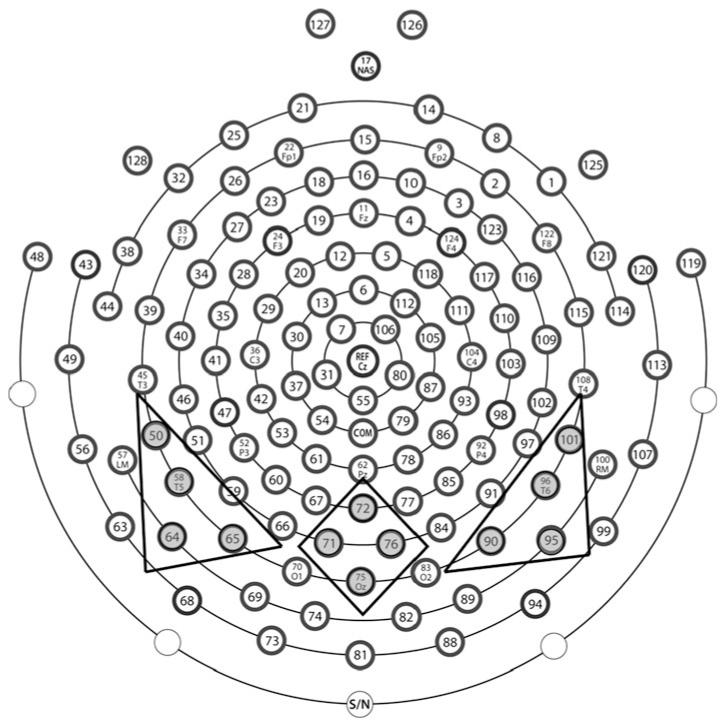

Average waveforms were generated for each individual participant within each experimental condition (angry, fearful, neutral, and house) and re-referenced to the average reference. Participants with fewer than 10 good trials per condition were excluded from further analysis. The mean number of total accepted trials did not differ between the ASD group (M = 115.53, SD = 28.18) and the TD group (M = 132.27, SD = 34.7); t(30) = −1.49, p = .15. Analyses focused on posterior electrodes, which were grouped into left (50, 58, 64, 65), middle (71, 72, 75, 76), and right (90, 95, 96, 101) regions of interest (see Fig. 2). Average peak amplitude and latency values for the P1 and N170 components were extracted for each individual participant for each stimulus condition at each of the three posterior scalp regions.
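The re-referencing and peak-extraction steps can be sketched as follows, using the electrode groupings listed above (HydroCel sensor numbers mapped to 0-based rows). Two assumptions are labeled in the code: the P1 and N170 search windows (80–140 ms and 130–200 ms here) are hypothetical, because the windows used in the study are not reported, and peaks are taken from the ROI-averaged waveform, which is one reading of "average peak amplitude" (averaging per-electrode peaks would be an alternative). The average reference is computed over all channels, and each averaged waveform is assumed to start 100 ms before onset at 500 Hz.

```python
SFREQ_HZ = 500
BASELINE_MS = 100  # waveform starts 100 ms before stimulus onset

# Electrode groupings from Fig. 2 (HydroCel sensor numbers, 1-based)
ROIS = {
    "left": (50, 58, 64, 65),
    "middle": (71, 72, 75, 76),
    "right": (90, 95, 96, 101),
}
# HYPOTHETICAL search windows (ms after onset) and peak direction per component
WINDOWS_MS = {"P1": (80, 140, max), "N170": (130, 200, min)}

def average_reference(erp):
    """Subtract the mean across all channels at each time point."""
    n_ch, n_t = len(erp), len(erp[0])
    means = [sum(erp[ch][t] for ch in range(n_ch)) / n_ch for t in range(n_t)]
    return [[erp[ch][t] - means[t] for t in range(n_t)] for ch in range(n_ch)]

def ms_to_index(ms):
    """Sample index of a post-onset time in ms."""
    return (ms + BASELINE_MS) * SFREQ_HZ // 1000

def roi_peaks(erp, roi):
    """Peak amplitude (uV) and latency (ms) of P1 and N170 in one ROI."""
    chans = [erp[n - 1] for n in ROIS[roi]]  # sensor n -> row n - 1
    wave = [sum(vals) / len(vals) for vals in zip(*chans)]
    out = {}
    for comp, (start, stop, pick) in WINDOWS_MS.items():
        idx = pick(range(ms_to_index(start), ms_to_index(stop) + 1), key=lambda i: wave[i])
        out[comp] = (wave[idx], idx * 1000 / SFREQ_HZ - BASELINE_MS)
    return out

# Toy averaged ERP: zeros except P1-like and N170-like peaks in the middle ROI.
erp = [[0.0] * 650 for _ in range(128)]
for n in ROIS["middle"]:
    erp[n - 1][ms_to_index(100)] = 8.0
    erp[n - 1][ms_to_index(170)] = -3.0
peaks = roi_peaks(average_reference(erp), "middle")
print(peaks)  # {'P1': (7.75, 100.0), 'N170': (-2.90625, 170.0)}
```

The toy amplitudes shrink slightly (8.0 to 7.75 μV) because re-referencing subtracts the all-channel mean, which the ROI channels themselves contribute to.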

Fig. 2.

Electrode groupings for 128-channel HydroCel Geodesic Sensor Net

Results

The behavioral, autonomic, and electrophysiological analyses were first conducted with all subjects and then conducted after removal of the two subjects with IQ below 85 (one ASD with IQ of 63 and one TD with IQ of 81). Only one finding changed as a result: Amplitude of the P1 in response to house stimuli (analysis detailed below) went from a significant interaction between region and group (p = .041) to a marginally significant trend (p = .068). All other findings remained consistent across the two analyses and these two subjects were therefore included in all reported results.

Single Task Analyses: Eye-Tracking

Scanning of Eyes and Mouth

To examine duration of looking to the eye and mouth regions across the five 5-s presentations of each emotion, a 3 (Emotion: fearful, happy, neutral) × 2 (Region: eyes, mouth) × 2 (Group: ASD, TD) repeated-measures ANOVA was conducted, with emotion and region as within-subjects factors and group as a between-subjects factor. This analysis revealed a main effect of region, with participants spending significantly more time on the eye region (M = 8.9 s, SD = 4.7) than on the mouth region (M = 2.4 s, SD = 2.2), F(1,34) = 63.77, p < .001. Further, an emotion × region interaction was observed, F(2,68) = 10.16, p < .001: for the mouth region, participants spent significantly more time on happy faces (M = 3.4 s, SD = 3.2) than on fearful (M = 2.4 s, SD = 2.4; t(35) = 2.80, p = .008, d = 0.35) and neutral faces (M = 1.5 s, SD = 1.5; t(35) = 5.46, p < .001, d = 0.76). Looking to the mouth was also significantly greater for fearful than for neutral faces, t(35) = 3.75, p = .001, d = 0.45. For the eye region, by contrast, participants looked longest at neutral faces (M = 9.4 s, SD = 5.4), followed by fearful (M = 8.8 s, SD = 4.9) and happy faces (M = 8.4 s, SD = 4.7), though only the neutral versus happy difference approached significance, t(35) = 1.99, p = .054, d = 0.20. No other main effects or interactions were significant.

A parallel set of analyses of the proportion of time spent on the eyes and mouth yielded the same pattern of results. Table 1 presents mean duration and proportion of time spent on the eye and mouth regions for each emotion for ASD and TD.

Table 1.

Mean eye-tracking response for ASD and TD (standard deviations in parentheses)

| Measure | ASD: Fearful | ASD: Happy | ASD: Neutral | TD: Fearful | TD: Happy | TD: Neutral |
|---|---|---|---|---|---|---|
| Duration (s) | | | | | | |
| Eye region | 7.5 (4.9) | 7.5 (4.5) | 8.9 (6.1) | 10.0 (4.7) | 9.3 (4.8) | 9.8 (4.8) |
| Mouth region | 2.2 (2.2) | 3.2 (3.8) | 1.1 (1.5) | 2.7 (2.5) | 3.7 (2.8) | 1.7 (1.4) |
| Proportion | | | | | | |
| Eye region | 0.59 (0.23) | 0.57 (0.18) | 0.63 (0.24) | 0.60 (0.16) | 0.56 (0.17) | 0.63 (0.17) |
| Mouth region | 0.14 (0.11) | 0.18 (0.17) | 0.07 (0.07) | 0.16 (0.12) | 0.20 (0.13) | 0.10 (0.08) |
| Pupil diameter (mm) | | | | | | |
| Eye region | 3.56 (0.85) | 3.55 (0.80) | 3.57 (0.86) | 3.41 (0.76) | 3.42 (0.74) | 3.46 (0.76) |
| Mouth region | 3.68 (0.81) | 3.60 (0.81) | 3.67 (0.78) | 3.49 (0.71) | 3.47 (0.72) | 3.48 (0.77) |

Duration is the total time each participant spent on the eye or mouth region, summed across the five 5-s presentations of each emotion (25 s total)

Pupil Diameter to Eyes and Mouth

To examine autonomic activation during viewing of emotional faces, a 3 (Emotion) × 2 (Region: eyes, mouth) × 2 (Group) repeated-measures ANOVA was conducted on pupil diameter, with emotion and region as within-subjects factors and group as a between-subjects factor. A main effect of region was found, F(1,29) = 23.92, p < .001, with larger pupil diameter while participants looked at the mouth region (M = 3.56 mm, SD = .75) than at the eye region (M = 3.49 mm, SD = .78). No other main effects or interactions were significant (see Table 1 for mean pupil diameter for each emotion for ASD and TD).

Eye-Tracking Summary

Analyses of total looking time, proportion of looking time, and pupil diameter to the eye and mouth regions revealed no differences between ASD and TD. All three analyses found differences between the eye and mouth regions. Participants looked more at the eye region than at the mouth region, and the distribution of looking between the two regions was modulated by the emotion presented. Pupil diameter was larger while participants looked at the mouth region than while they looked at the eye region.

Single Task Analyses: Event-Related Potentials

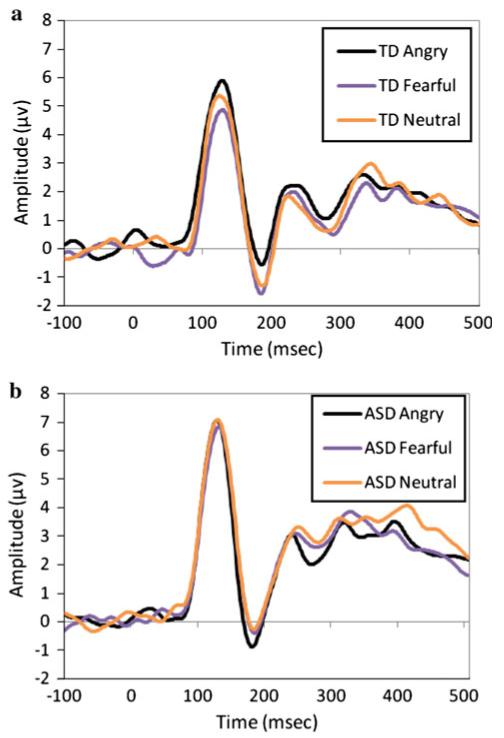

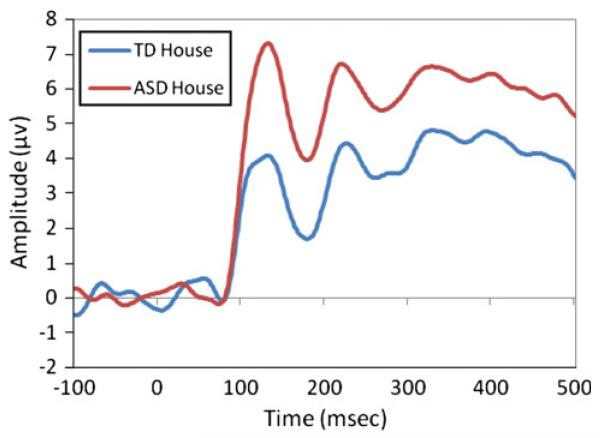

Figures 3 and 4 show grand averaged ERP waveforms for angry, fearful, and neutral faces (Fig. 3a, b) and for houses (Fig. 4) for TD and ASD. The present analyses examined peak amplitude of the P1 and N170 components for the three emotional faces, as well as for the house stimuli. Additional analyses of P1 and N170 latency revealed no significant effects or interactions with group and are not discussed further in the single-task ERP analyses.

Fig. 3.

Grand averaged waveform for P1 and N170 to angry, fearful, and neutral expressions in (a) TD participants and (b) ASD participants

Fig. 4.

Grand averaged waveform for P1 and N170 to house stimuli

Response to Emotional Faces: Amplitude

P1 To examine the peak amplitude of the P1 component, a 3 (Emotion: angry, fearful, neutral) × 3 (Region: left, middle, right) × 2 (Group: ASD, TD) repeated-measures ANOVA was run with emotion and region as within-subjects factors and group as the between-subjects factor. A main effect of region was found, F(2,60) = 11.60, p < .001, with a significantly larger P1 over the middle region (M = 8.95, SD = 4.49) than over the left region (M = 6.32, SD = 2.76; t(31) = 4.40, p < .001, d = .71) and the right region (M = 6.59, SD = 2.44; t(31) = 3.52, p = .001, d = .65). No other main effects or interactions were significant.

N170 Parallel to the previous ERP analysis, a 3 (Emotion) × 3 (Region) × 2 (Group) repeated-measures ANOVA was used to examine the peak negative amplitude of the N170 component. This analysis revealed a main effect of region, F(2,60) = 52.69, p < .001, driven by a significantly larger (more negative) N170 over the lateral regions (right: M = −3.80, SD = 3.23; left: M = −3.14, SD = 2.71) than over the middle region (M = 1.18, SD = 3.62; ts > 7.3, ps < .001, d > 1.35). The difference between the right and left regions was not significant (p = .15).

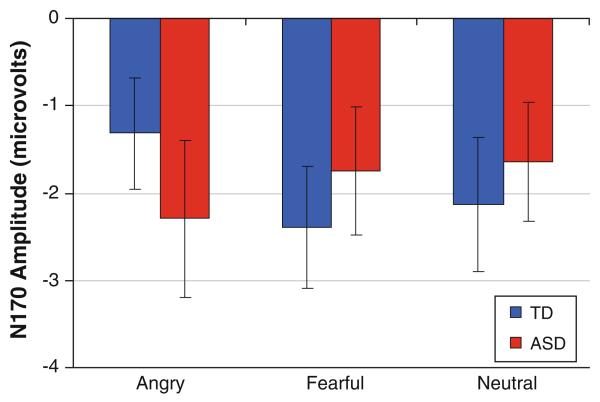

Additionally, an emotion × group interaction was found, F(2,60) = 3.35, p = .042 (Fig. 5). Post hoc paired comparisons revealed that ASD showed no differentiation in the N170 component between fearful (M = −1.75, SD = 3.05), angry (M = −2.29, SD = 3.71), and neutral faces (M = −1.64, SD = 2.80) (all ps > .18), whereas TD showed a significantly more negative N170 to fearful faces (M = −2.39, SD = 2.72) than to angry faces (M = −1.32, SD = 2.46; t(14) = 2.27, p = .04, d = .41), and a trend toward a more negative response to neutral faces (M = −2.13, SD = 2.96) than to angry faces (t(14) = 1.68, p = .11, d = .30). No other significant main effects or interactions were found.

Fig. 5.

Peak amplitude of the N170 in response to the three emotion faces for ASD and TD participants. A significant group by emotion interaction was found (p < .05) with ASD showing no difference between emotions in this component and TD showing a significantly larger response for fearful faces as compared to angry. Error bars represent ± SE

Response to Houses: Amplitude

P1 A 3 (Region: left, middle, right) × 2 (Group: ASD, TD) repeated-measures ANOVA, with region as the within-subjects factor and group as the between-subjects factor, examined P1 amplitude in response to houses and found a main effect of group, F(1,30) = 6.14, p = .019: ASD showed a significantly larger P1 to houses (M = 8.57, SD = 3.48) than TD (M = 5.91, SD = 2.42) (Fig. 4). A group × region interaction was also found, F(2,60) = 3.37, p = .041. Post hoc comparisons revealed that ASD showed a significantly larger P1 over the middle region (M = 9.85, SD = 5.04) than over the right region (M = 7.47, SD = 2.96; t(16) = 2.45, p = .026, d = .58), and a trend toward a larger P1 over the middle region than over the left region (M = 8.38, SD = 4.06; t(16) = 1.70, p = .11, d = .32). TD, in contrast, showed no significant differences in P1 amplitude across the right (M = 6.56, SD = 2.79), middle (M = 5.80, SD = 3.71), and left regions (M = 5.36, SD = 2.38), though there was a trend toward a larger P1 over the right region than over the left region, t(14) = 1.90, p = .08, d = .46.

N170 A parallel 3 (Region) × 2 (Group) repeated-measures ANOVA examined N170 amplitude in response to images of houses. A main effect of region was found, F(2,60) = 4.51, p = .015, with the most negative amplitude over the right region. A marginal main effect of group was also found, F(1,30) = 3.77, p = .062, such that TD participants showed a trend toward a more negative N170 amplitude to houses (M = .20, SD = 3.82) than ASD (M = 2.86, SD = 3.93).

ERP Summary

Analyses of the amplitude of the P1 and N170 components in response to emotional faces and house stimuli revealed a pattern of differences between ASD and TD. The N170 response to faces showed no differentiation between emotions in ASD, while TD showed a larger response to fearful faces as compared with angry faces. ASD did, however, show a greater P1 response to houses as compared to TD. There were no significant group differences in the P1 response to emotional faces or the N170 response to houses.

Cross-Task Analyses: Eye-Tracking and Event-Related Potentials

A final set of analyses was run to examine the relations between performance on the eye-tracking task and the ERP task. Four scanning measures and four ERP measures were used in these analyses. The face scanning measures were: (1) total time on eyes, (2) total time on mouth, (3) proportion of time on eyes, and (4) proportion of time on mouth; the ERP measures were: (1) P1 amplitude to faces; (2) P1 latency to faces; (3) N170 amplitude to faces; and (4) N170 latency to faces. Eye-tracking and ERP variables were collapsed across emotion to capture general behavioral and neural processing of faces. One additional variable, average pupil diameter to faces overall during the eye-tracking task, was also included to assess the relation between scanning measures, ERP responses, and autonomic activation. Pupil diameter to faces overall was used so that data from all eye-tracking participants could be included, as three participants had missing data in the eye and mouth analysis above.

Partial correlations, controlling for age and composite IQ, were run between each scanning measure and five variables: the four ERP variables and pupil diameter. A Bonferroni correction was applied to account for multiple comparisons: the conventional significance level of .05 was divided by 5, the number of comparisons for each scanning measure, so a threshold of p ≤ .01 was used for the present correlations.

In the ASD group, no significant correlations between eye-tracking scanning patterns and ERP measures were found; however, individuals with ASD showed a significant positive association between total time on the mouth and pupil diameter, r(12) = .68, p = .007, as well as between proportion of time on the mouth and pupil diameter, r(12) = .66, p = .01. For the TD group, a significant correlation was identified between scanning patterns and ERPs: a greater proportion of time spent on the eyes during the eye-tracking task was associated with a faster N170 response to faces, r(9) = −0.79, p = .004. A greater proportion of time spent on the mouth during the eye-tracking task was also associated with a slower N170 response to faces, r(9) = 0.67, p = .024, but this association did not survive correction for multiple comparisons (corrected threshold p = .01). Additionally, pupil diameter in TD participants was positively associated with P1 latency, such that greater pupil diameter in response to faces related to a slower P1 component, r(9) = 0.78, p = .005.

Discussion

The present study is the first to examine associations between early ERP responses to faces, measures of face scanning, and autonomic arousal to faces in ASD and TD adolescents. Eye-tracking measures revealed similar scanning of emotional faces in ASD and TD groups overall. For example, both groups looked more at the eye region overall and more at the mouth for happy than for fearful or neutral faces. While visual scanning of faces showed minimal group differences, ERP measures revealed a lack of neural differentiation between emotion types in the ASD group. Furthermore, while individual differences in looking time predicted ERP differences in the TD group, no such correlations were seen in the ASD group. Finally, increased pupil diameter (an index of sympathetic arousal) was associated with increased looking time to the mouth in ASD participants only. Overall, these findings suggest subtle differences in neural and behavioral emotion perception in high-functioning adolescents with ASD as compared to age- and IQ-matched typically-developing adolescents.

With regard to our first hypothesis of greater autonomic activation in ASD as compared to TD, there were no group differences in pupil diameter in response to emotional faces. However, in line with our fourth hypothesis of a relation between autonomic activity and scanning patterns, there was a significant positive relation in the ASD group between pupil diameter and attention to the mouth region (both absolute time and time as a proportion of total time on the face). Pupil diameter is controlled by both the sympathetic and parasympathetic nervous systems, with the sympathetic nervous system mediating responses to emotionally-salient stimuli (e.g., Bradley et al. 2008; Siegle et al. 2003) and the parasympathetic nervous system mediating processes related to increased cognitive load or attention (e.g., Porter et al. 2007; Siegle et al. 2004; for a recent review, see Laeng et al. 2012). Following recent work by Bradley et al. (2008), pupil diameter in the present work is likely mediated by sympathetic arousal. Bradley et al. (2008) presented typically-developing individuals with images of faces varying in emotion while monitoring pupil dilation, heart rate, and skin conductance. By measuring pupil responses alongside two other measures of autonomic activity, Bradley et al. (2008) provided strong support for the conclusion that pupil dilation in response to emotionally-salient faces is mediated by the sympathetic nervous system. It could then be posited that adolescents with ASD in the present study who find faces increasingly arousing have adopted differential scanning strategies, with increased attention to the mouth region. The significant association between sympathetic arousal and gaze patterns in individuals with ASD is consistent with recent work by Dalton et al. (2005, 2007), which found increased amygdala activation associated with increased fixations in the eye region.
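The mouth measures in this paragraph come in two forms: absolute looking time, and time as a proportion of total time on the face. The following is a minimal sketch of that distinction. It assumes a hypothetical per-trial list of fixations labeled by area of interest (AOI). The AOI names, the `Fixation` record, and the set of labels counted as "on the face" are illustrative and are not the study's coding scheme.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Fixation:
    aoi: str            # hypothetical AOI label, e.g. "eyes", "mouth", "off_face"
    duration_ms: float


# Assumed AOI scheme: these labels count as "on the face".
FACE_AOIS = {"eyes", "nose", "mouth", "other_face"}


def dwell_times(fixations):
    """Absolute looking time (ms) per AOI for one trial."""
    totals = defaultdict(float)
    for f in fixations:
        totals[f.aoi] += f.duration_ms
    return dict(totals)


def proportion_of_face_time(fixations, aoi):
    """Time on `aoi` divided by total time on the face (not by trial length)."""
    totals = dwell_times(fixations)
    face_total = sum(ms for label, ms in totals.items() if label in FACE_AOIS)
    if face_total == 0:
        return math.nan  # no looking at the face: proportion is undefined
    return totals.get(aoi, 0.0) / face_total


trial = [Fixation("eyes", 600.0), Fixation("mouth", 300.0),
         Fixation("off_face", 400.0), Fixation("nose", 100.0)]
mouth_ms = dwell_times(trial)["mouth"]               # absolute: 300 ms
mouth_prop = proportion_of_face_time(trial, "mouth")  # 300 / 1000 on-face ms = 0.3
```

Dividing by time on the face rather than by trial length keeps off-face looking from diluting the proportion. That choice follows the text's "proportion of time as a function of total time on the face".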

Our second hypothesis posited that TD participants would show greater N170 responses to fearful faces, while ASD participants would not show electrophysiological differences between the three emotions. TD participants showed significantly greater N170 responses to fearful faces as compared to angry faces, which is consistent with past ERP work in adults showing a larger N170 to fearful faces as compared to other emotions (e.g., Batty and Taylor 2003; Leppanen et al. 2007; Rossignol et al. 2005). However, individuals with ASD showed no differences in N170 amplitude between the three emotions. A potential concern with passive viewing tasks in ASD is that, because participants are not asked to look at a particular area of the face, neural differences in face processing could be explained by behavioral differences in scanning (e.g., Dalton et al. 2005). A supplementary analysis of the eye-tracking data therefore examined whether the groups differed in gaze behavior during the first 500 ms after the onset of an emotional face. No group differences were found in this shortened window (which matched the duration of ERP stimulus presentation), consistent with the lack of group differences in the eye-tracking analyses overall. In line with Sawyer et al. (2012), atypicalities in emotional face processing (an emotion recognition task in their work and the ERP task in ours) do not appear to be explained solely by differences in scanning of faces. The present study, however, used a different task to examine scanning behavior, so the possibility of differential viewing patterns during the ERP task cannot be entirely ruled out.
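The supplementary analysis restricts looking time to the first 500 ms, matching the ERP stimulus duration. One way to do this is sketched below. It assumes that each fixation carries onset and offset times relative to face onset; the `TimedFixation` record and AOI labels are hypothetical. Only the part of each fixation that overlaps the window is counted.

```python
from dataclasses import dataclass


@dataclass
class TimedFixation:
    aoi: str           # hypothetical AOI label
    onset_ms: float    # relative to face onset
    offset_ms: float


def dwell_in_window(fixations, aoi, start_ms=0.0, end_ms=500.0):
    """Looking time (ms) on `aoi` within [start_ms, end_ms].

    A fixation that straddles a window edge contributes only its overlapping part.
    """
    total = 0.0
    for f in fixations:
        if f.aoi != aoi:
            continue
        overlap = min(f.offset_ms, end_ms) - max(f.onset_ms, start_ms)
        if overlap > 0:
            total += overlap
    return total


fixations = [TimedFixation("eyes", 120.0, 380.0),
             TimedFixation("mouth", 400.0, 900.0),
             TimedFixation("eyes", 950.0, 1300.0)]
eyes_early = dwell_in_window(fixations, "eyes")    # 260 ms
mouth_early = dwell_in_window(fixations, "mouth")  # 100 ms (400 to 500 ms only)
```

Clipping fixations that straddle 500 ms, rather than dropping them, is a design choice. An analysis could instead assign each fixation wholly to the window in which it began.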

In response to images of houses, individuals with ASD showed a larger amplitude P1 component than TD participants, and the scalp distribution of this response differed between the two groups. Increased P1 responses have been attributed to enhanced early visual processing and attention (e.g., Heinze et al. 1990; Luck et al. 1990), and the present finding suggests that more resources are devoted to processing non-social stimuli in ASD, alongside a lack of differentiation between emotional faces. This is consistent with studies showing enhanced attention to and processing of non-social stimuli in ASD as compared to TD individuals (e.g., Ashwin et al. 2009; Kemner et al. 2008; Mottron et al. 2009; Webb et al. 2006), and with fMRI work showing enhanced processing of visuospatial stimuli in early visual areas in ASD (Manjaly et al. 2007; Soulieres et al. 2009). Webb et al. (2006), for example, recorded ERPs in 3- to 4-year-old children with ASD and typically-developing children and found that the ASD group showed slower neural responding to faces alongside larger amplitude responses to objects.
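P1 amplitude and N170 latency are typically scored as peaks within component-specific time windows: the most positive point for P1 and the most negative point for N170. An earlier N170 peak counts as a "faster" response. The following is a minimal peak-picking sketch on a synthetic waveform. The window bounds (80–140 ms and 130–200 ms), the 2 ms sampling, and the waveform itself are placeholders, not the study's parameters.

```python
import math


def peak_in_window(times_ms, waveform_uv, start_ms, end_ms, polarity):
    """Return (amplitude_uv, latency_ms) of the extreme sample within [start_ms, end_ms].

    polarity=+1 picks the most positive sample (e.g. P1);
    polarity=-1 picks the most negative sample (e.g. N170).
    """
    samples = [(t, v) for t, v in zip(times_ms, waveform_uv) if start_ms <= t <= end_ms]
    if not samples:
        raise ValueError("window contains no samples")
    pick = max if polarity > 0 else min
    latency, amplitude = pick(samples, key=lambda tv: tv[1])
    return amplitude, latency


def bump(t, center_ms, amp_uv, width_ms=12.0):
    """Gaussian-shaped deflection, used only to build a synthetic test waveform."""
    return amp_uv * math.exp(-((t - center_ms) ** 2) / (2 * width_ms ** 2))


# Synthetic averaged ERP sampled every 2 ms: positive peak near 110 ms, negative near 170 ms.
times = list(range(-100, 500, 2))
wave = [bump(t, 110, 5.0) + bump(t, 170, -6.0) for t in times]

p1_amp, p1_lat = peak_in_window(times, wave, 80, 140, polarity=+1)
n170_amp, n170_lat = peak_in_window(times, wave, 130, 200, polarity=-1)
# A smaller n170_lat would be read as a "faster" N170.
```

Simple peak picking is sensitive to noise in individual averages. Some studies instead use mean amplitude over a window, or a local-peak criterion; which one applies here is not stated in this section.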

Enhanced attention to non-social stimuli has also been found to distinguish between first-degree relatives of individuals with ASD and individuals with no familial risk of ASD (e.g., McCleery et al. 2009; Noland et al. 2010). McCleery et al. (2009) used ERPs to measure face and object processing in two groups of 10-month-old infants, one group with an older sibling diagnosed with ASD and another group with no familial risk. While low-risk infants showed faster responses to faces than objects, high-risk infants showed the opposite pattern, and object responses were faster overall for high-risk infants as compared to low-risk infants.

In line with our hypothesis regarding the relation between scanning patterns and ERP responses to faces, we found a significant association between visual scanning of faces and neural processing of faces in TD participants, but no such relationship in individuals with ASD. After accounting for age and IQ, the TD group showed a significant relationship between eye-tracking and ERP measures: the greater the proportion of time spent scanning the eye region, the faster the N170 response to faces, a marker of efficient face processing in past work (e.g., McPartland et al. 2004). The mouth region showed the opposite trend, with a greater proportion of time spent scanning the mouth associated with a slower N170 response to faces, although this association did not survive correction for multiple comparisons. These associations relate to a growing body of work highlighting the importance of information extracted from the eye region for successfully navigating the social world (see Itier and Batty 2009, for a review). Here, TD participants who devoted a greater proportion of their looking time to the eye region showed more efficient (faster) face processing.
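This section does not say how age and IQ were accounted for. One standard option, assumed here, is a partial correlation: eye-region looking is correlated with N170 latency after removing the linear effect of both covariates. The following is a minimal standard-library sketch using the recursive partial-correlation formula; all function names are hypothetical. Each controlled covariate costs one further degree of freedom, so with two covariates df = n − 2 − 2.

```python
import math
from statistics import fmean


def pearson_r(x, y):
    """Plain Pearson correlation of two equal-length sequences."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_from_rs(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def partial_r_two_covariates(x, y, z1, z2):
    """Correlation of x and y controlling for z1 and z2 (e.g. age and IQ)."""
    r = pearson_r
    # Step 1: partial z1 out of every pair involving x, y, or z2.
    r_xy_1 = partial_from_rs(r(x, y), r(x, z1), r(y, z1))
    r_x2_1 = partial_from_rs(r(x, z2), r(x, z1), r(z2, z1))
    r_y2_1 = partial_from_rs(r(y, z2), r(y, z1), r(z2, z1))
    # Step 2: partial z2 out of the first-order result.
    return partial_from_rs(r_xy_1, r_x2_1, r_y2_1)


# Hypothetical usage: partial_r_two_covariates(prop_eyes, n170_latency, age, iq), df = n - 4.
```

The recursive form gives the same value as correlating the residuals of x and y after regressing each on both covariates, but it needs only pairwise correlations.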

The present associations between neural responding to faces and behavioral measures of face processing in typically-developing individuals are consistent with past work: McPartland et al. (2004) showed that faster N170 latencies were marginally associated with better face recognition in typically-developing adolescents and adults, and Hileman et al. (2011) showed that greater (more negative) N170 amplitudes were associated with fewer atypical social behaviors in typically-developing children and adolescents. Past studies examining associations between ERP responses and behavioral measures of face recognition and social competence in individuals with ASD have yielded mixed results (Hileman et al. 2011; McPartland et al. 2004; Webb et al. 2009, 2010). The absence of associations between ERPs and visual scanning measures in adolescents with ASD, despite significant relationships between these variables in typically-developing participants, suggests that different mechanisms are responsible for individual differences in these measures in the ASD group. Future work should continue to delineate the influence of sympathetic arousal on individual variability in neural responding to faces in ASD (e.g., Dalton et al. 2005).

One important limitation of the present study is the use of different tasks and stimulus sets for eye-tracking and ERP. As described in the Methods, the ERP task was one of three neuroimaging methods used with the present participants (along with fMRI and MEG), and it was designed to parallel the other two paradigms, using the same set of stimuli. The eye-tracking task was based on prior work using passive viewing of emotional faces in young children with ASD, and its longer stimulus presentation was intended to allow more naturalistic viewing of faces. In addition, although ERP stimuli were presented only when participants were looking at the display monitor, no behavioral data were collected during this task that could address potential group differences in attention and engagement during the ERP session. Future studies should use an integrated eye-tracking and ERP system to ensure that looking behavior and attention during ERP recording cannot account for group differences.

A further limitation relates to medication use in the adolescents with ASD. As described in the Methods, 11 of 18 participants were on one or more medications, some typically prescribed for ADHD, some for depression, and some for psychosis. Because too few participants would remain after excluding these 11, the present study was unable to fully assess the impact of medication on the results. Future work should examine this potentially influential factor. Relatedly, the present study did not use a formal clinical interview or questionnaire to screen for comorbid psychopathology, relying instead on a self-report (or parent-report) measure. Only one participant with ASD reported a comorbid psychiatric disorder; however, since past work has found a high rate of comorbidity in ASD, future work should examine this further with additional screening measures.

In conclusion, ASD and typically-developing adolescents in the present eye-tracking task showed highly similar overall scanning of emotional faces, but different patterns of N170 activity in the ERP task, with no differentiation between the three emotional expressions in ASD. With regard to the overall similarities in eye-tracking alongside differences in ERPs, it is important to note that this high-functioning ASD group, who willingly participated in a multi-site study of face processing, might be inherently more socially adept than other individuals with ASD (see Webb et al. 2009 for discussion). Relatedly, these participants may have already received interventions aimed at increasing overt attention to the eyes and other social behaviors. The subtle differences in neural processing in individuals with ASD alongside behavioral similarities are therefore even more striking. Further, the groups showed different relationships. In TD, visual scanning strategies favoring the eyes were related to efficiency of the N170 face-processing component; in ASD, increased sympathetic arousal was related to greater attention to the mouth region. These differences point to different underlying mechanisms for face processing between groups, and future work should continue to explore these processes.

Acknowledgments

This research was made possible by a grant from the Autism Consortium for a multi-site, multi-modal study (in collaboration with Boston University, Massachusetts Institute of Technology, and Massachusetts General Hospital). We would like to thank our many collaborators across sites who contributed to study conception and design, including John Gabrieli, Robert Joseph, Nancy Kanwisher, Tal Kenet, Pawan Sinha, and Helen Tager-Flusberg. This work was conducted in accordance with the ethical standards of the APA and all the authors concur with the contents of the manuscript.

Contributor Information

Jennifer B. Wagner, Laboratories of Cognitive Neuroscience, Division of Developmental Medicine, Children’s Hospital Boston, 1 Autumn Street, 6th Floor, Boston, MA 02215, USA; Harvard Medical School, Boston, MA, USA

Suzanna B. Hirsch, Laboratories of Cognitive Neuroscience, Division of Developmental Medicine, Children’s Hospital Boston, 1 Autumn Street, 6th Floor, Boston, MA 02215, USA

Vanessa K. Vogel-Farley, Laboratories of Cognitive Neuroscience, Division of Developmental Medicine, Children’s Hospital Boston, 1 Autumn Street, 6th Floor, Boston, MA 02215, USA

Elizabeth Redcay, Department of Psychology, University of Maryland, College Park, MD, USA

Charles A. Nelson, Laboratories of Cognitive Neuroscience, Division of Developmental Medicine, Children’s Hospital Boston, 1 Autumn Street, 6th Floor, Boston, MA 02215, USA; Harvard Medical School, Boston, MA, USA

References

- Anderson CJ, Colombo J. Larger tonic pupil size in young children with autism spectrum disorder. Developmental Psychobiology. 2009;51:207–211. doi: 10.1002/dev.20352.

- Ashwin E, Ashwin C, Rhydderch D, Howells J, Baron-Cohen S. Eagle-eyed visual acuity: An experimental investigation of enhanced perception in autism. Biological Psychiatry. 2009;65:17–21. doi: 10.1016/j.biopsych.2008.06.012.

- Ashwin C, Chapman E, Colle L, Baron-Cohen S. Impaired recognition of negative basic emotions in autism: A test of the amygdala theory. Social Neuroscience. 2006;1:349–363. doi: 10.1080/17470910601040772.

- Bal E, Harden E, Lamb D, Vaughan Van Hecke A, Denver JW, Porges SW. Emotion recognition in children with autism spectrum disorders: Relations to eye gaze and autonomic state. Journal of Autism and Developmental Disorders. 2010;40:358–370. doi: 10.1007/s10803-009-0884-3.

- Baron-Cohen S, Wheelwright S, Jolliffe T. Is there a “language of the eyes”? Evidence from normal adults, and adults with autism or Asperger Syndrome. Visual Cognition. 1997;4:311–331.

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–620. doi: 10.1016/s0926-6410(03)00174-5.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

- Berument S, Rutter M, Lord C, Pickles A, Bailey A. Autism screening questionnaire: Diagnostic validity. British Journal of Psychiatry. 1999;175:444–451. doi: 10.1192/bjp.175.5.444.

- Bradley MM, Miccoli L, Escrig MA, Lang PJ. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology. 2008;45:602–607. doi: 10.1111/j.1469-8986.2008.00654.x.

- Cassia V, Kuefner D, Westerlund A, Nelson C. A behavioural and ERP investigation of 3-month-olds’ face preferences. Neuropsychologia. 2006;44:2113–2125. doi: 10.1016/j.neuropsychologia.2005.11.014.

- Celani G, Battacchi MW, Arcidiacono L. The understanding of emotional meaning of facial expressions in people with autism. Journal of Autism and Developmental Disorders. 1999;29:57–66. doi: 10.1023/a:1025970600181.

- Critchley HD, Daly EM, Bullmore ET, Williams SC, Van Amelsvoort T, Robertson DM, et al. The functional neuroanatomy of social behaviour: Changes in cerebral blood flow when people with autistic disorder process facial expressions. Brain: A Journal of Neurology. 2000;123:2203–2212. doi: 10.1093/brain/123.11.2203.

- Dalton KM, Nacewicz BM, Alexander AL, Davidson RJ. Gaze-fixation, brain activation, and amygdala volume in unaffected siblings of individuals with autism. Biological Psychiatry. 2007;61:512–520. doi: 10.1016/j.biopsych.2006.05.019.

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, et al. Gaze-fixation and the neural circuitry of face processing in autism. Nature Neuroscience. 2005;8:519–526. doi: 10.1038/nn1421.

- Dawson G, Carver L, Meltzoff AN, Panagiotides H, McPartland J, Webb SJ. Neural correlates of face and object recognition in young children with autism spectrum disorder, developmental delay, and typical development. Child Development. 2002;73:700–717. doi: 10.1111/1467-8624.00433.

- Dawson G, Webb SJ, Carver L, Panagiotides H, McPartland J. Young children with autism show atypical brain responses to fearful versus neutral facial expressions of emotion. Developmental Science. 2004;7:340–359. doi: 10.1111/j.1467-7687.2004.00352.x.

- de Wit TC, Falck-Ytter T, von Hofsten C. Young children with autism spectrum disorder look differently at positive versus negative emotional faces. Research in Autism Spectrum Disorders. 2008;2:651–659.

- Ekman P, Friesen WV. Pictures of facial affect. Consulting Psychologists Press; Palo Alto, CA: 1976.

- Hall GBC, Szechtman H, Nahmias C. Enhanced salience and emotion recognition in autism: A PET study. American Journal of Psychiatry. 2003;160:1439–1441. doi: 10.1176/appi.ajp.160.8.1439.

- Heinze HJ, Luck SJ, Mangun GR, Hillyard SA. Visual event-related potentials index focused attention within bilateral stimulus arrays, I: Evidence for early selection. Electroencephalography and Clinical Neurophysiology. 1990;75:511–527. doi: 10.1016/0013-4694(90)90138-a.

- Hileman CM, Henderson H, Mundy P, Newell L, Mark J. Developmental and individual differences on the P1 and N170 ERP components in children with and without autism. Developmental Neuropsychology. 2011;36:214–236. doi: 10.1080/87565641.2010.549870.

- Hirstein W, Iverson P, Ramachandran VS. Autonomic responses of autistic children to people and objects. Proceedings of the Royal Society of London B. 2001;268:1883–1888. doi: 10.1098/rspb.2001.1724.

- Itier RJ, Batty M. Neural bases of eye and gaze processing: The core of social cognition. Neuroscience and Biobehavioral Reviews. 2009;33:843–863. doi: 10.1016/j.neubiorev.2009.02.004.

- Itier RJ, Taylor MJ. Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: A repetition study using ERPs. Neuroimage. 2002;15:353–372. doi: 10.1006/nimg.2001.0982.

- Itier RJ, Taylor MJ. N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cerebral Cortex. 2004;14:132–142. doi: 10.1093/cercor/bhg111.

- Jeste SS, Nelson CA. Event related potentials in the understanding of autism spectrum disorders: An analytical review. Journal of Autism and Developmental Disorders. 2009;39:495–510. doi: 10.1007/s10803-008-0652-9.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kaufman AS, Kaufman NL. Kaufman Brief Intelligence Test. 2nd ed. Pearson, Inc.; Bloomington, MN: 2004.

- Kemner C, van Ewijk L, van Engeland H, Hooge I. Brief report: Eye movements during visual search tasks indicate enhanced stimulus discriminability in subjects with PDD. Journal of Autism and Developmental Disorders. 2008;38:553–557. doi: 10.1007/s10803-007-0406-0.

- Laeng B, Sirois S, Gredeback G. Pupillometry: A window to the preconscious? Perspectives on Psychological Science. 2012;7:18–27. doi: 10.1177/1745691611427305.

- Leppanen JM, Moulson MC, Vogel-Farley VK, Nelson CA. An ERP study of emotional face processing in the adult and infant brain. Child Development. 2007;78:232–245. doi: 10.1111/j.1467-8624.2007.00994.x.

- Lord C, Rutter M, DiLavore PC, Risi S. Autism Diagnostic Observation Schedule: ADOS: Manual. Western Psychological Services; Los Angeles, CA: 2002.

- Luck SJ, Heinze HJ, Mangun GR, Hillyard SA. Visual event-related potentials index focused attention within bilateral stimulus arrays, II: Functional dissociation of P1 and N1 components. Electroencephalography and Clinical Neurophysiology. 1990;75:528–542. doi: 10.1016/0013-4694(90)90139-b.

- Manjaly ZM, Bruning N, Neufang S, Stephan KE, Brieber S, Marshall JC, et al. Neurophysiological correlates of relatively enhanced local visual search in autistic adolescents. Neuroimage. 2007;35:283–291. doi: 10.1016/j.neuroimage.2006.11.036.

- McCleery JP, Akshoomoff N, Dobkins KR, Carver LJ. Atypical face versus object processing and hemispheric asymmetries in 10-month-old infants at risk for autism. Biological Psychiatry. 2009;66:950–957. doi: 10.1016/j.biopsych.2009.07.031.

- McPartland J, Dawson G, Webb SJ, Panagiotides H, Carver LJ. Event related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. Journal of Child Psychology and Psychiatry. 2004;45:1235–1245. doi: 10.1111/j.1469-7610.2004.00318.x.

- McPartland JC, Webb SJ, Keehn B, Dawson G. Patterns of visual attention to faces and objects in autism spectrum disorder. Journal of Autism and Developmental Disorders. 2011;41:148–157. doi: 10.1007/s10803-010-1033-8.

- Mottron L, Dawson M, Soulieres I. Enhanced perception in savant syndrome: Patterns, structure and creativity. Philosophical Transactions of the Royal Society B. 2009;364:1385–1391. doi: 10.1098/rstb.2008.0333.

- Nelson CA, de Haan M. Neural correlates of infants’ visual responsiveness to facial expressions of emotion. Developmental Psychobiology. 1996;29:577–595. doi: 10.1002/(SICI)1098-2302(199611)29:7<577::AID-DEV3>3.0.CO;2-R.

- Nelson CA, McCleery JP. Use of event-related potentials in the study of typical and atypical development. Journal of the American Academy of Child and Adolescent Psychiatry. 2008;47:1252–1261. doi: 10.1097/CHI.0b013e318185a6d8.

- Noland JS, Reznick JS, Stone WL, Walden T, Sheridan EH. Better working memory for non-social targets in infant siblings of children with Autism Spectrum Disorder. Developmental Science. 2010;13:244–251. doi: 10.1111/j.1467-7687.2009.00882.x.

- Ogai M, Matsumoto H, Suzuki K, Ozawa F, Fukudu R, Uchiyama I, et al. fMRI study of recognition of facial expressions in high-functioning autistic patients. NeuroReport. 2003;14:559–563. doi: 10.1097/00001756-200303240-00006.

- Pelphrey KA, Sasson NJ, Reznick J, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Pierce K, Muller RA, Ambrose J, Allen G, Courchesne E. Face processing occurs outside the fusiform ‘face area’ in autism: Evidence from functional MRI. Brain. 2001;124:2059–2073. doi: 10.1093/brain/124.10.2059.

- Porter G, Troscianko T, Gilchrist ID. Effort during visual search and counting: Insights from pupillometry. Quarterly Journal of Experimental Psychology. 2007;60:211–229. doi: 10.1080/17470210600673818.

- Rossignol M, Philippot P, Douilliez C, Crommelinck M, Campanella S. The perception of fearful and happy facial expression is modulated by anxiety: An event-related potential study. Neuroscience Letters. 2005;377:115–120. doi: 10.1016/j.neulet.2004.11.091.

- Rossion B, Delvenne JF, Debatisse D, Goffaux V, Bruyer R, Crommelinck M, et al. Spatio-temporal localization of the face inversion effect: An event related potentials study. Biological Psychology. 1999;50:173–189. doi: 10.1016/s0301-0511(99)00013-7.

- Rutherford MD, Towns AM. Scan path differences and similarities during emotion perception in those with and without autism spectrum disorders. Journal of Autism and Developmental Disorders. 2008;38:1371–1381. doi: 10.1007/s10803-007-0525-7.

- Sawyer ACP, Williamson P, Young RL. Can gaze avoidance explain why individuals with Asperger’s syndrome can’t recognize emotions from facial expressions? Journal of Autism and Developmental Disorders. 2012;42:606–618. doi: 10.1007/s10803-011-1283-0.

- Schoen SA, Miller LJ, Brett-Green B, Hepburn SL. Psychophysiology of children with autism spectrum disorder. Research in Autism Spectrum Disorders. 2008;2:417–429.

- Siegle GJ, Steinhauer SR, Carter CS, Ramel W, Thase ME. Do the seconds turn into hours? Relationships between sustained pupil dilation in response to emotional information and self-reported rumination. Cognitive Therapy and Research. 2003;27:365–383.

- Siegle GJ, Steinhauer SR, Thase ME. Pupillary assessment and computational modeling of the Stroop task in depression. International Journal of Psychophysiology. 2004;52:63–76. doi: 10.1016/j.ijpsycho.2003.12.010.

- Soulieres I, Dawson M, Samson F, Barbeau EB, Sahyoun C, Strangman GE, et al. Enhanced visual processing contributes to matrix reasoning in autism. Human Brain Mapping. 2009;30:4082–4107. doi: 10.1002/hbm.20831.

- Tottenham N, Tanaka J, Leon A, McCarry T, Nurse M, Hare T, et al. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- Vaughan Van Hecke A, Lebow J, Bal E, Lamb D, Harden E, Kramer A, et al. Electroencephalogram and heart rate regulation to familiar and unfamiliar people in children with autism spectrum disorders. Child Development. 2009;80:1118–1133. doi: 10.1111/j.1467-8624.2009.01320.x.

- Webb SJ, Dawson G, Bernier R, Panagiotides H. ERP evidence of atypical face processing in young children with autism. Journal of Autism and Developmental Disorders. 2006;36:881–890. doi: 10.1007/s10803-006-0126-x.

- Webb SJ, Jones EJH, Merkle K, Murias M, Greenson J, Richards T, et al. Response to familiar faces, newly familiar faces, and novel faces as assessed by ERPs is intact in adults with autism spectrum disorders. International Journal of Psychophysiology. 2010;77:106–117. doi: 10.1016/j.ijpsycho.2010.04.011.

- Webb SJ, Merkle K, Murias M, Richards T, Aylward E, Dawson G. ERP responses differentiate inverted but not upright face processing in adults with ASD. Social Cognitive and Affective Neuroscience. 2009. doi: 10.1093/scan/nsp002.

- Wong TKW, Fung PCW, Chua SE, McAlonan GM. Abnormal spatiotemporal processing of emotional facial expressions in childhood autism: Dipole source analysis of event-related potentials. European Journal of Neuroscience. 2008;28:407–416. doi: 10.1111/j.1460-9568.2008.06328.x.