Abstract

Properly coordinating cooperation is relevant for resolving public good problems, such as clean energy and environmental protection. However, little is known about how individuals can coordinate themselves for a certain level of cooperation in large populations of strangers. In a typical situation, a consensus-building process rarely succeeds, owing to a lack of face and standing. The evolution of cooperation in this type of situation is studied here using threshold public good games, in which cooperation prevails when it is initially sufficient and otherwise perishes. While punishment is a powerful tool for shaping human behaviours, institutional punishment is often too costly to start with only a few contributors, which is another coordination problem. Here, we show that whatever the initial conditions, reward funds based on voluntary contribution can evolve. The voluntary reward paves the way for effectively overcoming the coordination problem and efficiently transforms freeloaders into cooperators, even when the perceived risk of collective failure is small.

Keywords: public good game, evolution of cooperation, reward, punishment, coordination problem

1. Introduction

Public goods, such as clean energy and environmental protection, are the building blocks of sustainable human societies, and failures in these areas can have far-reaching effects. However, the private provision of public goods can pose a challenge, as cooperation and coordination often do not succeed (e.g. [1]). First, voluntary cooperation to provide public goods suffers from self-interested behaviour: exploiters can freeload on the efforts of others. Second, in collective actions, proper coordination among individuals is usually required to attain a cooperation equilibrium. Otherwise, freeloading leads individuals to the non-cooperation equilibrium, which is a social trap.

The coordination problem has been broadly studied in game theory and its ubiquity is indicated by a variety of names: coordination game, assurance game, stag-hunt game, volunteer's dilemma or start-up problem [2–4]. Evolutionary game models tackling sizeable groups are often built on public good games of cooperation and defection but have generally resulted in a system that has two equilibria (one with no cooperation and one with a certain level of cooperation) [5,6]. Thus, it is a challenge to develop a mechanism that allows populations to evolve towards the cooperation equilibrium, independent of the initial conditions. The situation is most stringent in cases where unanimous agreement is required for the public good, as the only desirable initial condition is a state in which almost all cooperate. Theoretical and empirical analyses have clarified that prior communication [7] or social exchange situations [8] can facilitate the selection of the cooperation equilibrium. Little is known, however, about how equilibrium selection can materialize from one-shot anonymous interactions in large populations, where such a consensus-building process is less likely to succeed. Previous studies showed that the higher the perceived risk of collective failure, the higher the chance of coordinating cooperative actions [9–11]. Recent research has shown that considering institutional punishment can further relax the initial conditions for establishing cooperation [12].

What happens if reward is considered instead of punishment? Reward is one of the most studied structural solutions for cooperation in sizeable groups and inspires cooperation [13,14]. While in real life there exists an array of subsidy systems for encouraging cooperative actions, here we turn to endogenous fundraising (see [15] for formal rewards). Early work revealed that replicator dynamics [16], whereby the more successful strategy spreads further, can lead to the dynamic maintenance of cooperation in public good games with reward funds [17]. This model considered three strategies: (i) a cooperator or (ii) defector in the standard public goods game, or (iii) a rewarder that contributes to both the public good and reward fund. Only those who contribute to the public good are invited to share the returns from the reward fund. Rewarders can spread even in a population of defectors, because defectors are excluded from the rewards. The fundraising itself, however, is voluntary and costly. Thus, this incentive scheme can easily be subverted by ‘second-order freeloading’ cooperators who contribute to the public good but not the rewards. In the next step, as contribution to the public good is also costly, cooperators will be displaced by ‘first-order freeloading’ defectors. This leads to a rock–scissors–paper type of cyclical replacement among the three strategies.

2. Model

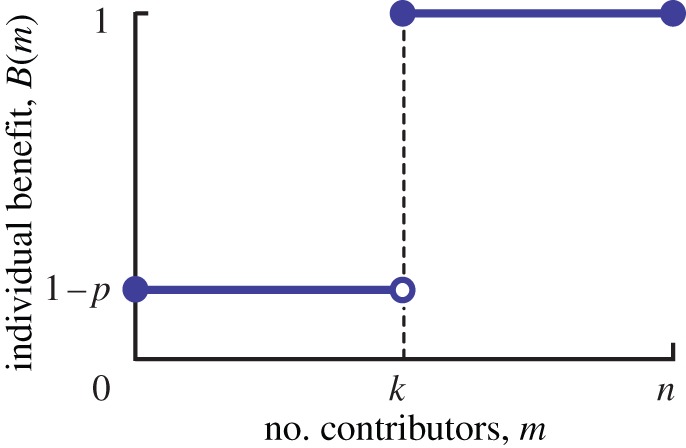

We extend public good games with reward funds [17] with a provision threshold [12], which can easily generate a coordination situation. We consider infinitely large, well-mixed populations from which n individuals with n > 2 are randomly sampled and form a gaming group. After one interaction, the group is dissolved. We assume the three strategies as before: both the rewarder and cooperator are willing to contribute with a personal cost c > 0; the defector contributes nothing and incurs no cost. All of the public benefit is provided only if the number of contributors m (0 ≤ m ≤ n) exceeds or equals a threshold value k (1 ≤ k ≤ n); otherwise, just part of the public benefit, discounted by a risk factor p (0 ≤ p ≤ 1), is provided. However, the resulting benefit goes to every player equally, whatever she/he contributes. The individual benefit is given by B(m) = 1, if m ≥ k; otherwise, B(m) = 1 − p (figure 1).

Figure 1.

Step returns in the public good. Each member receives benefits given by 1 if the number of contributors in the group m exceeds or equals threshold k (1 ≤ k ≤ n); otherwise, 1 − p. (Online version in colour.)
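The step return in figure 1 can be written as a one-line function. The following is a minimal sketch; the function name `benefit` is an illustrative choice and does not come from the original model description.

```python
def benefit(m: int, k: int, p: float) -> float:
    """Step return B(m): the full benefit 1 if at least k of the group
    members contribute, otherwise the benefit discounted by the risk p."""
    return 1.0 if m >= k else 1.0 - p


# Example with threshold k = 3 and risk factor p = 0.5
for m in range(6):
    print(m, benefit(m, k=3, p=0.5))
```

With k = 3 the return jumps from 1 − p to 1 as soon as the third contributor joins, which is the discontinuity that creates the coordination situation.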

Next, we consider a voluntary reward fund for the threshold public good game. Beforehand, only the rewarders are willing to contribute c′ > 0 to the fund; after the game, the integrated fund multiplied by interest rate r′ > 1 will be shared equally among the m contributors to the public good (i rewarders and m − i cooperators). The reward fund is thus a ‘club’ good, excluding the defectors. In summary, a rewarder earns B(m) − c + c′r′i/m − c′, a cooperator B(m) − c + c′r′i/m and a defector B(m). (The corresponding replicator equations are available in the electronic supplementary material.) If the reward fund is so beneficial that r′ ≥ n (i.e. its marginal return is non-negative, c′r′/n − c′ ≥ 0), it is sustainable against second-order freeloaders by itself. We therefore assume r′ < n.
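As an illustration of the three payoffs just stated, the sketch below evaluates them for a single group. The names `group_payoffs`, `c_r` (for c′) and `r_r` (for r′) are hypothetical labels introduced here, and the handling of a group with no contributors (no reward is paid out) is an assumption of the sketch.

```python
def benefit(m, k, p):
    """Step return B(m) of the threshold public good."""
    return 1.0 if m >= k else 1.0 - p


def group_payoffs(i, j, k, p, c, c_r, r_r):
    """Payoffs (rewarder, cooperator, defector) in a group that contains
    i rewarders and j cooperators, so m = i + j contributors in total."""
    m = i + j
    b = benefit(m, k, p)
    # The fund c_r * r_r * i is split equally among the m contributors.
    share = c_r * r_r * i / m if m > 0 else 0.0
    rewarder = b - c + share - c_r
    cooperator = b - c + share
    defector = b
    return rewarder, cooperator, defector


# Two rewarders and one cooperator, threshold k = 3, costs as in figure 2
print(group_payoffs(i=2, j=1, k=3, p=0.5, c=0.1, c_r=0.1, r_r=2.5))
```

In any group the cooperator earns exactly c′ more than the rewarder, which is the second-order freeloading incentive, and the defector earns more than both only when the reward share falls short of c.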

3. Results

First, we look at the evolutionary outcomes without rewarders. For a sufficiently large risk factor p, the step function B(m) (figure 1) can lead to bistability between no cooperation and a mixture of cooperation and defection when the threshold is intermediate (1 < k < n), and to the mixed state alone when k = 1 [10,18]. Pure coordination between no and 100% cooperation only occurs if k = n, a case where avoiding a collective failure requires homogeneous cooperation among all participants. For 1 < k ≤ n, the larger the risk p, the weaker the attraction towards the no-cooperation equilibrium and the stronger the attraction towards the cooperation equilibrium [10]. The critical risk factor p* required to bring about bistability takes its smallest value in the case of unanimous agreement.

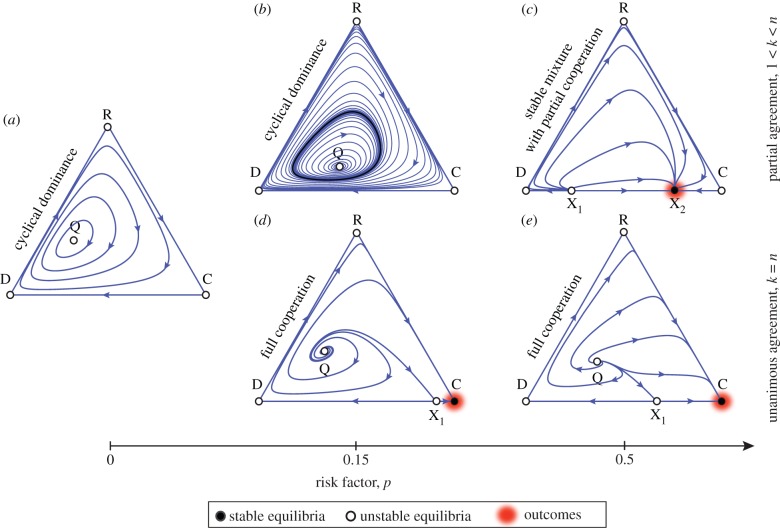

Evolutionary outcomes change dramatically with rewarders (figure 2; see the electronic supplementary material for details). The analytical investigation shows that if a certain level of rewards is considered, the replicator dynamics first lead the rewarders to invade a state where all individuals defect. Individuals are better off rewarding in mixed groups (defectors and rewarders) as long as the most promising return of the fund c′r′ is greater than the total cost c + c′. The non-rewarding cooperators then invade the population of the rewarders and propagate. This sequence occurs whatever the risk factor p and provision threshold k, and it leads to a state where all individuals cooperate.
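The invasion condition can be retraced from the payoffs defined in the Model section. The short derivation below is a sketch worked out here, not taken from the electronic supplementary material; P_R and P_D are shorthand introduced for the payoffs of a single rare rewarder and of the resident defectors. A lone rewarder sits in a group with i = m = 1, whereas the resident defectors almost always sit in groups with m = 0.

```latex
\begin{aligned}
P_R - P_D &= \bigl[B(1) - c + c'r' - c'\bigr] - B(0) \\
          &= \bigl[B(1) - B(0)\bigr] + c'r' - (c + c') \\
          &\ge c'r' - (c + c'),
\qquad
B(1) - B(0) =
\begin{cases}
p, & k = 1,\\
0, & k > 1.
\end{cases}
\end{aligned}
```

With the parameters of figure 2 (c = c′ = 0.1, r′ = 2.5), the lower bound is 0.25 − 0.2 = 0.05 > 0, so the rare rewarder does better than the defectors for any p and k.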

Figure 2.

Threshold public good games with reward funds. The simplex represents the state space. The three nodes, D: 100% defectors, C: 100% cooperators and R: 100% rewarders, are trivial equilibria. (a) Risk zero (p = 0). The unique interior equilibrium Q is surrounded by closed orbits, along which the three strategies dynamically coexist. Boundary orbits form a cycle connecting the three nodes. (b,c) Partial agreement (1 < k < n). For a small risk p, there can exist a stable closed orbit (bold, black line) (b). When p goes beyond a critical value p*, a mix among the three strategies is no longer sustainable, and only cooperators can stably coexist with defectors at point X2. All interior population states evolve to this state (c). (d,e) Unanimous agreement (k = n). When p increases beyond p*, all individuals end up with the all-cooperation equilibrium C. Parameters: n = 5, c = c′ = 0.1, r′ = 2.5, and for (b,c), k = 3. (Online version in colour.)

In the absence of bistability for the threshold public good game, the population state pulls back to states in which defectors are the majority. Thus, the population ends up with a rock–scissors–paper cycle, in which the dominant strategy rotates from defector to rewarder to cooperator and back to defector (figure 2a,b). Similar oscillatory dynamics for cooperation and rewards have been obtained in more complicated models with reputation systems [14]. In the presence of bistability, the resulting mixed state of defectors and cooperators is sustainable, even after the reward fund falls. Once it escapes the state of 100% defectors, the population evolves to the mixed state for 1 < k < n (figure 2c) and to the state of 100% cooperators for k = n (figure 2d,e). Therefore, it is through the rise and fall of the reward that the coordination problem is resolved.
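The rise and fall of the reward can be explored numerically. The following is a rough sketch, not the authors' code: it assumes the standard replicator equation (each share grows in proportion to its payoff minus the population average), multinomial sampling of the n − 1 co-players, and a simple Euler integration with renormalization. The names `expected_payoffs`, `simulate`, `c_r` (c′) and `r_r` (r′), the step size and the starting point are all illustrative choices.

```python
from math import factorial


def benefit(m, k, p):
    """Step return B(m) of the threshold public good."""
    return 1.0 if m >= k else 1.0 - p


def expected_payoffs(x, n, k, p, c, c_r, r_r):
    """Expected payoffs [rewarder, cooperator, defector] of a focal player
    whose n - 1 co-players are sampled from shares x = (x_R, x_C, x_D)."""
    x_r, x_c, x_d = x
    pay = [0.0, 0.0, 0.0]
    for a in range(n):              # rewarders among the co-players
        for b in range(n - a):      # cooperators among the co-players
            d = n - 1 - a - b       # defectors among the co-players
            prob = (factorial(n - 1)
                    / (factorial(a) * factorial(b) * factorial(d))
                    * x_r ** a * x_c ** b * x_d ** d)
            m = a + b + 1           # contributors when the focal player contributes
            pay[0] += prob * (benefit(m, k, p) - c + c_r * r_r * (a + 1) / m - c_r)
            pay[1] += prob * (benefit(m, k, p) - c + c_r * r_r * a / m)
            pay[2] += prob * benefit(a + b, k, p)
    return pay


def simulate(x0, n, k, p, c, c_r, r_r, dt=0.2, steps=10000):
    """Euler integration of the replicator equation on the simplex."""
    x = list(x0)
    for _ in range(steps):
        pay = expected_payoffs(x, n, k, p, c, c_r, r_r)
        avg = sum(xs * ps for xs, ps in zip(x, pay))
        x = [xs * (1.0 + dt * (ps - avg)) for xs, ps in zip(x, pay)]
        total = sum(x)
        x = [xs / total for xs in x]  # guard against numerical drift
    return x


# Unanimous agreement (k = n = 5), starting close to 100% defectors
final = simulate((0.01, 0.01, 0.98), n=5, k=5, p=0.5, c=0.1, c_r=0.1, r_r=2.5)
print(final)  # shares of rewarders, cooperators, defectors
```

Under these assumed settings, the trajectory in this sketch should first move towards the rewarders and then end near 100% cooperators; setting p = 0 instead is a way to look for the cyclic regime described for figure 2a.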

4. Discussion

Voluntary rewards can provide a powerful mechanism for overcoming coordination problems, without considering second-order punishment. This is an intriguing scenario that is not easily predicted using traditional models with voluntary punishment [16]. Furthermore, second-order freeloading has been an issue that needs to be defeated or suppressed [13,19,20]. The present model is in striking contrast to previous models: it can generate 100% cooperation precisely when second-order freeloading eliminates the voluntary rewarders.

There are three key steps for evolving to the cooperation equilibrium. First, the rewarders need to evolve among the defectors. This requires that the average fitness of the rewarders is higher than that of the defectors. This is the case when the returning reward offsets the costs to the public good and reward fund (c′r′ > c + c′). We note that the degree of risk factor p does not affect this result, because there is positive feedback between the increase in the number of rewarders and the jump in the return. Second, the rewarders are replaced with cooperators because, assuming mild rewards (r′ < n), switching to cooperators causes an increase in fitness (c′ − c′r′/n > 0). Finally, the resulting state needs to be stable, despite the fact that a single mutant defector has a higher net benefit in a group of cooperators. For a sufficiently large p, however, switching to defection leads to a loss where the average fitness of the defectors falls below that of cooperators, and thus the cooperation equilibrium is stabilized.
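The three steps can be checked with simple arithmetic. The sketch below is illustrative only: the function name `step_margins` and the variable names `c_r` (c′) and `r_r` (r′) are introduced here, and the third margin is an assumption derived from the payoff definitions (a rare defector among cooperators saves c and loses p only if its defection drops the group below the threshold, which happens only when k = n).

```python
def step_margins(n, k, p, c, c_r, r_r):
    """Fitness margins for the three steps; a step holds when its margin > 0."""
    # Step 1: a rare rewarder among defectors recovers both costs from the fund
    # (lower bound; for k = 1 the rewarder additionally gains p).
    invade_defectors = c_r * r_r - (c + c_r)
    # Step 2: in a group of n contributors, a rewarder who stops funding saves
    # c_r and forgoes only the own share c_r * r_r / n of the return.
    invade_rewarders = c_r - c_r * r_r / n
    # Step 3 (assumption of this sketch): a rare defector among cooperators
    # saves c but loses p if n - 1 contributors no longer meet the threshold.
    loss = p if k == n else 0.0
    resist_defectors = loss - c
    return invade_defectors, invade_rewarders, resist_defectors


# Parameters of figure 2 with unanimous agreement and p = 0.5
print(step_margins(n=5, k=5, p=0.5, c=0.1, c_r=0.1, r_r=2.5))
```

For k < n the third margin in this sketch is negative for any p, which is consistent with the population settling in a mixed state of cooperators and defectors rather than at 100% cooperation.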

Similar results for transforming defectors into cooperators in coordination games have been obtained by considering optional participation [21,22] or institutional punishment [12]. Optional participation can provide a simple but effective resolution for escaping the social trap [13,16]. In human societies, however, there are many issues that individuals cannot easily opt out of, such as nationality, religion, energy and environment. The present model focuses on such unavoidable situations, and thus players are forcibly admitted to games.

Although institutional punishment influences the establishment of a stable level of cooperation, in large groups it may face a coordination problem in itself [7,23]. Thus, it would be difficult for a single punisher to make an impact that activates a sanctioning system covering the whole group. What about punishing those who do not make any contribution to institutional punishment? This triggers an infinite regress: who pays for (higher-order) punishment? By contrast, a reward fund can rise in response to a single volunteer and then spread in a population of defectors.

We have shown that cooperation with a reward fund is a more powerful tool than institutional punishment. Voluntary rewarding is an efficient mechanism that enables the resolution of coordination problems with minimal risk.

Acknowledgements

We thank Karl Sigmund and Voltaire Cang.

Funding statement

T.S. was supported by the Foundational Questions in Evolutionary Biology Fund (grant no. RFP-12-21).

References

- 1. Barrett S, Dannenberg A. 2012. Climate negotiations under scientific uncertainty. Proc. Natl Acad. Sci. USA 109, 17 372–17 376 (doi:10.1073/pnas.1208417109)

- 2. Kollock P. 1998. Social dilemmas: the anatomy of cooperation. Annu. Rev. Sociol. 24, 183–214 (doi:10.1146/annurev.soc.24.1.183)

- 3. Archetti M, Scheuring I. 2012. Review: game theory of public goods in one-shot social dilemmas without assortment. J. Theor. Biol. 299, 9–20 (doi:10.1016/j.jtbi.2011.06.018)

- 4. Centola DM. 2013. Homophily, networks, and critical mass: solving the start-up problem in large group collective action. Ration. Soc. 25, 3–40 (doi:10.1177/1043463112473734)

- 5. Boza G, Számadó S. 2010. Beneficial laggards: multilevel selection, cooperative polymorphism and division of labour in threshold public good games. BMC Evol. Biol. 10, 336 (doi:10.1186/1471-2148-10-336)

- 6. Archetti M, Scheuring I. 2011. Coexistence of cooperation and defection in public goods games. Evolution 65, 1140–1148 (doi:10.1111/j.1558-5646.2010.01185.x)

- 7. Boyd R, Gintis H, Bowles S. 2010. Coordinated punishment of defectors sustains cooperation and can proliferate when rare. Science 328, 617–620 (doi:10.1126/science.1183665)

- 8. Hayashi N, Ostrom E, Walker J, Yamagishi T. 1999. Reciprocity, trust, and the sense of control: a cross-societal study. Ration. Soc. 11, 27–46 (doi:10.1177/104346399011001002)

- 9. Milinski M, Sommerfeld RD, Krambeck HJ, Reed FA, Marotzke J. 2008. The collective-risk social dilemma and the prevention of simulated dangerous climate change. Proc. Natl Acad. Sci. USA 105, 2291–2294 (doi:10.1073/pnas.0709546105)

- 10. Santos FC, Pacheco JM. 2011. Risk of collective failure provides an escape from the tragedy of the commons. Proc. Natl Acad. Sci. USA 108, 10 421–10 425 (doi:10.1073/pnas.1015648108)

- 11. Archetti M. 2009. Cooperation as a volunteer's dilemma and the strategy of conflict in public goods games. J. Evol. Biol. 22, 2192–2200 (doi:10.1111/j.1420-9101.2009.01835.x)

- 12. Vasconcelos VV, Santos FC, Pacheco JM. 2013. A bottom-up institutional approach to cooperative governance of risky commons. Nat. Clim. Change 3, 797–801 (doi:10.1038/nclimate1927)

- 13. Sasaki T, Brännström Å, Dieckmann U, Sigmund K. 2012. The take-it-or-leave-it option allows small penalties to overcome social dilemmas. Proc. Natl Acad. Sci. USA 109, 1165–1169 (doi:10.1073/pnas.1115219109)

- 14. Hauert C. 2010. Replicator dynamics of reward & reputation in public goods games. J. Theor. Biol. 267, 22–28 (doi:10.1016/j.jtbi.2010.08.009)

- 15. Chen X, Gross T, Dieckmann U. 2013. Shared rewarding overcomes defection traps in generalized volunteer's dilemmas. J. Theor. Biol. 335, 13–21 (doi:10.1016/j.jtbi.2013.06.014)

- 16. Sigmund K. 2010. The calculus of selfishness. Princeton, NJ: Princeton University Press

- 17. Sasaki T, Unemi T. 2011. Replicator dynamics in public goods games with reward funds. J. Theor. Biol. 287, 109–114 (doi:10.1016/j.jtbi.2011.07.026)

- 18. Bach LA, Helvik T, Christiansen FB. 2006. The evolution of n-player cooperation: threshold games and ESS bifurcations. J. Theor. Biol. 238, 426–434 (doi:10.1016/j.jtbi.2005.06.007)

- 19. Sigmund K, De Silva H, Traulsen A, Hauert C. 2010. Social learning promotes institutions for governing the commons. Nature 466, 861–863 (doi:10.1038/nature09203)

- 20. Perc M. 2012. Sustainable institutionalized punishment requires elimination of second-order free-riders. Sci. Rep. 2, 344 (doi:10.1038/srep00344)

- 21. Bchir MA, Willinger M. 2013. Does a membership fee foster successful public good provision? An experimental investigation of the provision of a step-level collective good. Public Choice 157, 25–39 (doi:10.1007/s11127-012-9929-9)

- 22. Wu T, Fu F, Zhang Y, Wang L. 2013. The increased risk of joint venture promotes social cooperation. PLoS ONE 8, e63801 (doi:10.1371/journal.pone.0063801)

- 23. Raihani NJ, Bshary R. 2011. The evolution of punishment in n-player games: a volunteer's dilemma. Evolution 65, 2725–2728 (doi:10.1111/j.1558-5646.2011.01383.x)