Summary

Many animals use cues from another animal’s gaze to help distinguish friend from foe [1–3]. In humans, the direction of someone’s gaze provides insight into their focus of interest and state of mind [4] and there is increasing evidence linking abnormal gaze behaviors to clinical conditions such as schizophrenia and autism [5–11]. This fundamental role of another’s gaze is buoyed by the discovery of specific brain areas dedicated to encoding directions of gaze in faces [12–14]. Surprisingly, however, very little is known about how others’ direction of gaze is interpreted. Here we apply a Bayesian framework that has been successfully applied to sensory and motor domains [15–19] to show that humans have a prior expectation that other people’s gaze is directed toward them. This expectation dominates perception when there is high uncertainty, such as at night or when the other person is wearing sunglasses. We presented participants with synthetic faces viewed under high and low levels of uncertainty and manipulated the faces by adding noise to the eyes. Then, we asked the participants to judge relative gaze directions. We found that all participants systematically perceived the noisy gaze as being directed more toward them. This suggests that the adult nervous system internally represents a prior for gaze and highlights the importance of experience in developing our interpretation of another’s gaze.

Highlights

► A novel application of a Bayesian framework to gaze perception

► We tend to perceive others’ gaze as directed toward us when not certain

► Head orientation influences perceived eye direction, even when only the eye region is visible

Results and Discussion

In order to apply a Bayesian framework to gaze, we used a discrimination task in which subjects viewed a sequence of two faces and were required to make a judgment about the relative gaze between the first and second face (Figure 1B). One face had a constant direction of gaze deviation (“test”), and the other had an offset added to this test gaze deviation (“comparator”). The five test values used were determined for each observer from a pilot experiment in which subjects categorized the faces’ gaze as looking straight at them, averted to their right, or averted to their left.

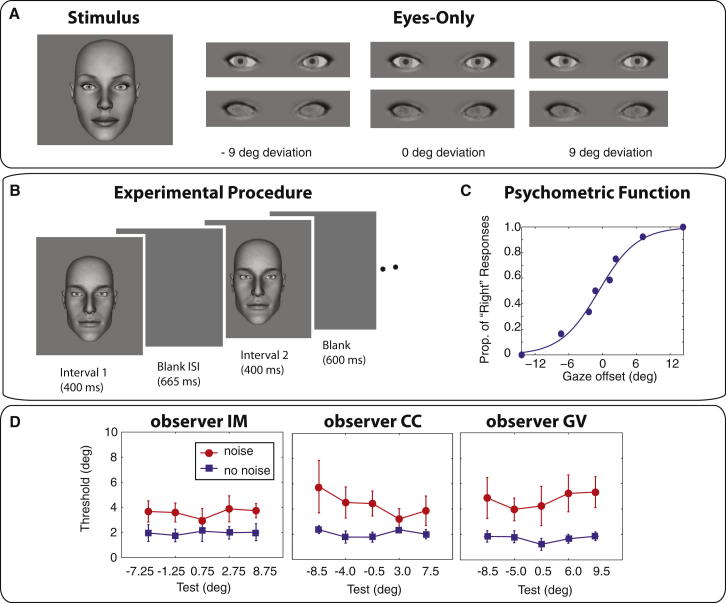

Figure 1.

Gaze Discrimination

(A) Sample face stimulus (left) and close-ups of the eyes-only stimuli in the noiseless and noisy conditions for three different gaze deviations.

(B) Experimental procedure showing one trial for experiment 1. The same identity’s face was presented twice, and the observers’ task was to indicate the direction of gaze in the second interval relative to the first.

(C) Sample psychometric function for stimuli measured around a test of +7.5°. A logistic function is fit to the proportion of trials in which gaze in the comparison stimulus was judged to be more rightward than the test. An estimate of discrimination threshold is derived from the slope of the function, and an estimate of bias is taken as the gaze offset producing 50% “right” responses.

(D) Discrimination thresholds measured at five (test) gaze deviations in the low uncertainty condition (neither face contains noise, blue curves) and the high uncertainty condition (both faces contain noise, red curves) for three observers. Error bars are ± 95% confidence intervals.

First, we estimated the variability of the sensory representation of gaze direction (likelihood functions) under two levels of uncertainty. Gaze discrimination thresholds were measured in a low uncertainty condition, in which the gaze of both faces was noiseless (a sample stimulus is shown in Figure 1A, left, and a close-up of the eyes as shown in the eyes-only condition is shown in Figure 1A, top right), and in a high uncertainty condition, in which fractal noise was added to the eye region of both faces (a close-up of the eyes is shown in Figure 1A, bottom right; see Experimental Procedures). At the end of each run, the data were compiled, and a logistic function was fitted to the proportion of “rightwards” responses as a function of the offset tested (Figure 1C). For all observers, thresholds in the low uncertainty condition were predictably low and remained essentially constant across all test values (Figure 1D, blue curves). Increasing uncertainty in the stimuli raised each observer’s thresholds consistently across the range of tests (Figure 1D, red curves).
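The logistic fit described above can be sketched as follows. This is a minimal illustration, not the authors' MATLAB code: the function names, the Newton-step fitting routine, and the response counts are assumptions made for the example. The source states only that a logistic function was fitted and that threshold was derived from its slope, so the sketch returns the slope-related scale parameter rather than committing to a particular threshold convention.

```python
import math

def logistic(x, pse, scale):
    """Probability of a 'rightward' response at comparator offset x (deg)."""
    return 1.0 / (1.0 + math.exp(-(x - pse) / scale))

def fit_logistic(offsets, n_right, n_trials, iters=50):
    """Maximum-likelihood logistic fit using Newton's method.

    Internally fits p = 1 / (1 + exp(-(b0 + b1 * x))) and converts the
    result to (pse, scale), where pse is the offset giving 50% 'right'
    responses (bias) and scale is inversely related to the slope.
    """
    b0, b1 = 0.0, 0.1  # assumed starting values
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, k, n in zip(offsets, n_right, n_trials):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = n * p * (1.0 - p)
            g0 += k - n * p          # gradient w.r.t. b0
            g1 += (k - n * p) * x    # gradient w.r.t. b1
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        if abs(det) < 1e-12:
            break
        # Newton step: solve the 2 x 2 system for the parameter update.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return -b0 / b1, 1.0 / b1

# Hypothetical counts of 'right' responses out of 12 trials per offset.
offsets = [-12, -6, -2, -1, 1, 2, 6, 12]
n_right = [0, 1, 3, 4, 5, 7, 10, 12]
n_trials = [12] * len(offsets)

pse, scale = fit_logistic(offsets, n_right, n_trials)
print(f"bias (PSE) = {pse:.2f} deg, scale = {scale:.2f} deg")
```

A larger scale corresponds to a shallower psychometric function and hence a higher discrimination threshold.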

In order to examine how our prior beliefs about gaze alter perception, experiment 2 used a mixed noise condition to measure bias. In this experiment, either the test or the comparator face had noise added to the eyes. According to Bayesian inference, increasing the uncertainty in one of the stimuli will increase the influence of the prior for that stimulus, effectively “pulling” the perceived direction of gaze toward the peak of the prior distribution [17] (Figure 2A). These two conditions were interleaved within a run, and Figure 2B shows the discrimination performance for naive observer G.V. in the same run (a) when the test had noise added to the eyes (high uncertainty) and the comparators were noiseless (Figure 2B, test noise) and (b) when the test was noiseless (low uncertainty) and the comparators had noise added to the eyes (Figure 2B, comp noise). In line with our predictions, the psychometric functions are shifted in opposite directions depending on whether noise was added to the test or to the comparator. For example, if a leftward test gaze deviation is seen as more direct because of increased uncertainty, the psychometric function will shift to the right so that the point of subjective equality approaches 0°. If the test is noiseless, it will be seen more accurately as leftward, but the comparator’s deviations will appear more direct, causing the psychometric function to shift to the left. Figures 2C–2E plot biases for the two conditions as a function of the test gaze deviations and reveal a systematic shift in the sign of the biases. Solid curves are the fits according to a Bayesian model with a Gaussian prior and incorporating the aforementioned likelihood functions (see Supplemental Information). The model fits the data very well and accounts for 90.4% (I.M.), 85.9% (C.C.), and 79.5% (G.V.) of the variance. Estimates of the priors’ peaks are +6.0° (I.M.), +1.6° (C.C.), and +6.7° (G.V.), all shifted slightly rightward of direct. 
The widths of the prior distributions are broad, with SDs of +9.7° (I.M.), +9.0° (C.C.), and +14.1° (G.V.), compared to the SDs of the likelihood functions (mean SDs across the baselines in noiseless conditions are +2.9° [I.M.], +3.0° [C.C.], and +2.5° [G.V.]; and +5.3° [I.M.], +6.5° [C.C.], and +7.0° [G.V.] in noise).
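To make the "pull" toward the prior concrete, the following sketch combines a Gaussian prior with a Gaussian likelihood and reads out the posterior mean. It is the textbook conjugate-Gaussian calculation, not the fitted model itself, which is specified in the Supplemental Information. The assumptions are that the percept equals the posterior mean and that the sensory measurement equals the physical gaze deviation; the function name is hypothetical. The prior and likelihood values are those reported above for observer G.V.

```python
def posterior_mean(measurement, like_sd, prior_mean, prior_sd):
    """Posterior mean for a Gaussian prior and a Gaussian likelihood."""
    # Weight on the sensory measurement; it shrinks as the likelihood broadens.
    w = prior_sd ** 2 / (prior_sd ** 2 + like_sd ** 2)
    return w * measurement + (1.0 - w) * prior_mean

# Values reported in the text for observer G.V. (degrees).
PRIOR_MEAN, PRIOR_SD = 6.7, 14.1
SD_NOISELESS, SD_NOISY = 2.5, 7.0

gaze = -5.0  # physical gaze deviation of the stimulus
clean = posterior_mean(gaze, SD_NOISELESS, PRIOR_MEAN, PRIOR_SD)
noisy = posterior_mean(gaze, SD_NOISY, PRIOR_MEAN, PRIOR_SD)

print(f"noiseless percept: {clean:.2f} deg")  # about -4.64
print(f"noisy percept:     {noisy:.2f} deg")  # about -2.69
```

Under these assumptions the same −5° stimulus is perceived closer to the peak of the prior when noise broadens the likelihood, which is the qualitative pattern that the mixed-noise condition is designed to expose.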

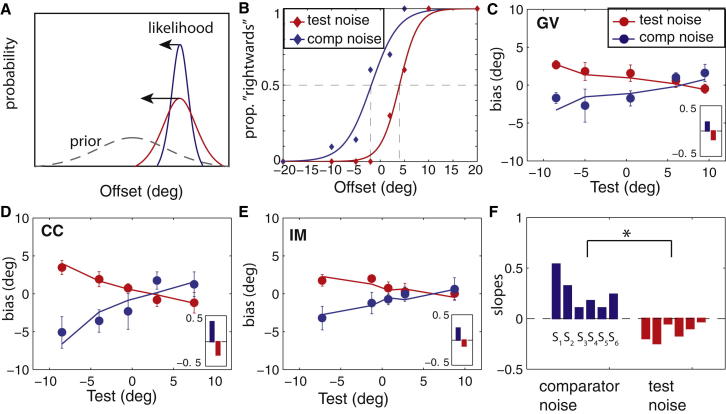

Figure 2.

Biases Measured When One Stimulus Increased in Uncertainty by Adding Noise to the Eyes

(A) Illustration of the Bayesian framework; the noiseless stimulus (blue) has a narrow likelihood function, whereas the noisy stimulus (red) has a broader likelihood function. The prior has a greater influence for the stimulus with high uncertainty.

(B) Sample psychometric functions for naive observer G.V. when the test (−5°) contained noise (test noise, red) or when the comparator contained noise (comp noise, blue).

(C) Biases measured at the same tests as in Figure 1 in the mixed condition for observer G.V. Curves are the Bayesian model fits for the two conditions. Lapse rates at the extremities of the psychometric functions were very low (2.2% averaged across the three observers) indicating that observers were reliably seeing the stimuli.

(D and E) Biases for observers C.C. (D) and I.M. (E). Error bars are ± 95% confidence intervals. Insets show slopes of the bias data in the two conditions.

(F) Slopes of the biases in the two mixed conditions estimated in an additional three observers (S1, S2, and S3) and in G.V. (S4), C.C. (S5), and I.M. (S6) with the use of the eyes-only stimulus. Intersection points are +6.5°, +0.2°, and −5.1° for the naive observers with the use of the face stimuli and +2.0° (G.V.), +6.6° (I.M.), and +1.2° (C.C.) with the eyes-only stimuli.

A signature of a prior for direct gaze when uncertainty is increased in one of the stimuli is that the slopes of the bias curves are oppositely signed depending on whether the increased uncertainty is in the test or in the comparator (Figures 2C–2E). The intersection point between the two oppositely signed curves corresponds to the peak of the prior, which was measured in an additional three observers who only performed the discrimination task at two extreme test gaze deviations (+9° and −9°) (Figure 2F, observers S1, S2, and S3). Additionally, observers G.V., C.C., and I.M. performed this reduced experiment with windowed eyes-only stimuli (Figure 1A) to minimize the influence of head orientation on gaze judgment (Figure 2F, observers S4, S5, and S6). Slopes of the linear fits through the data collected around these two extreme tests are significantly different for the two conditions (t[1,5] = 3.61, p < 0.01) and are plotted in Figure 2F. Note that the peaks of the priors for the three naive observers (S1, S2, and S3) are distributed more evenly around 0° (+6.5°, +0.2°, and −5.1°, respectively), not supporting a consistent rightward shift in the priors. Using the eyes-only stimuli, observers G.V., I.M., and C.C. displayed a similar shift in their biases despite the absence of head orientation information (slope estimates to end points of full face data are presented as insets in Figures 2C–2E).
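The logic of reading the prior's peak from the intersection of the two bias lines can be illustrated with a short simulation. This is a sketch under stated assumptions rather than the authors' analysis: percepts are taken to be posterior means of a Gaussian prior and Gaussian likelihoods, bias is defined as the comparator gaze at subjective equality minus the test gaze, and all parameter values and function names are hypothetical.

```python
def sensory_weight(like_sd, prior_sd):
    """Weight given to the sensory measurement under Gaussian assumptions."""
    return prior_sd ** 2 / (prior_sd ** 2 + like_sd ** 2)

def predicted_bias(test, prior_mean, w_test, w_comp):
    """Comparator gaze at subjective equality minus the test gaze (deg)."""
    # Percepts match when w_comp * (comp - mu) == w_test * (test - mu).
    pse = prior_mean + (w_test / w_comp) * (test - prior_mean)
    return pse - test

def line_through(x1, y1, x2, y2):
    """Slope and intercept of the line through two points."""
    slope = (y2 - y1) / (x2 - x1)
    return slope, y1 - slope * x1

def crossing_point(line_a, line_b):
    """x coordinate at which two (slope, intercept) lines intersect."""
    (m_a, b_a), (m_b, b_b) = line_a, line_b
    return (b_b - b_a) / (m_a - m_b)

# Illustrative (hypothetical) parameters, in degrees.
PRIOR_MEAN, PRIOR_SD = 2.0, 10.0
w_clean = sensory_weight(3.0, PRIOR_SD)
w_noisy = sensory_weight(6.0, PRIOR_SD)

tests = (-9.0, 9.0)  # the two extreme test deviations
test_noise = [predicted_bias(t, PRIOR_MEAN, w_noisy, w_clean) for t in tests]
comp_noise = [predicted_bias(t, PRIOR_MEAN, w_clean, w_noisy) for t in tests]

line_test = line_through(tests[0], test_noise[0], tests[1], test_noise[1])
line_comp = line_through(tests[0], comp_noise[0], tests[1], comp_noise[1])
crossing = crossing_point(line_test, line_comp)
print(f"slopes: {line_test[0]:.3f} (test noise), {line_comp[0]:.3f} (comp noise)")
print(f"crossing point: {crossing:.2f} deg")
```

In this idealized case both biases vanish when the test gaze coincides with the peak of the prior, so the two lines have opposite slopes and cross at that value.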

In a control experiment that examined whether the shift toward direct that we measured reflected a more generalized tendency to view objects as being centered (e.g., centering the iris in the sclera), three observers (I.M., D.C., and B.R.S.) performed the mixed discrimination task on a gray circle (target) viewed within a larger white circle (referent). The displacement of the target within the referent followed the same angular rotation as the eyes in our stimuli, and observers performed the task at two extreme tests (±9.5°), one of the targets being presented in noise. We found that the slopes of the linear fits to these two extreme deviations were not significantly different from zero (t[1,5] = 0.357, p > 0.3; average slopes of +0.09 and −0.07). These results support our interpretation of a prior for direct gaze that cannot be reduced to a more general tendency for anything to look “centered.” Note, however, that our data do hint at a weaker tendency to view objects as centered, which may reflect a natural tendency to view simple concentric stimuli as eyes or a more generic process of favoring symmetry.

Finally, we examined how the orientation of the head influenced observers’ perception of gaze [20–22]. In the previous stimuli, the head was always forward-facing (direct), such that, even in the eyes-only stimulus, the configuration of the eyes supported the interpretation that the (absent) head was direct. In this experiment, observers judged the deviation of gaze in stimuli where the head was either direct, oriented leftward (−30°), or oriented rightward (+30°). Stimuli were cropped around the eye region (examples shown in Figure 3A) to promote reliance on eye deviation.

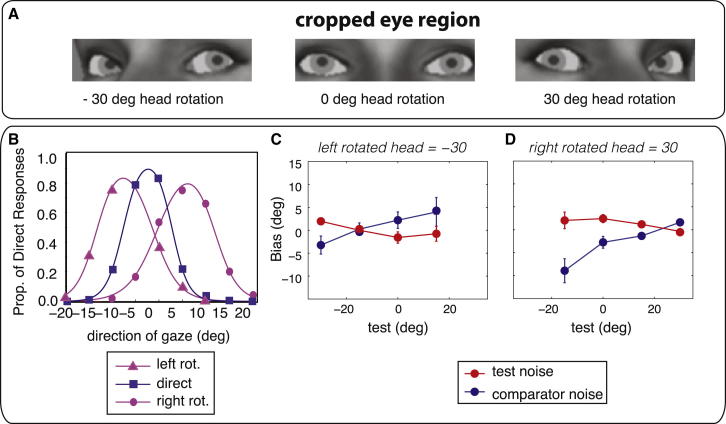

Figure 3.

Judgments of Gaze in Rotated Heads

(A) Sample stimuli used for categorization and discrimination, all with a physical 0° gaze deviation. The heads were cropped around the eye region and were either direct (middle) or rotated leftward (left) or rightward (right) by 30°. Notice that a physically direct gaze (i.e., looking straight ahead) does not appear directed toward the observer in the rotated heads.

(B) Average gaze categorization data and fits to the direct responses as a function of head orientation with the use of leftward-rotated (purple triangles), direct (blue squares), and rightward-rotated heads (purple circles).

(C and D) Average gaze discrimination data with rotated heads when the test contained noise (red circles) and when the test was noiseless (blue circles), (C) for leftward-rotated heads and (D) for rightward-rotated heads. Error bars are between-subjects SEs. When the heads were rotated leftward, the tests used were −30°, −15°, 0°, and 15°; when the heads were rotated rightward, the tests were −15°, 0°, 15°, and 30°.

In order to determine which gaze deviation observers judge as direct when the head is rotated, subjects used key presses to categorize the direction of gaze as looking straight at them, to their right, or to their left. Figure 3B plots the direct responses as a function of gaze deviation for the categorization experiment using the rotated cropped heads averaged across three observers (I.M., C.C., and naive observer D.C.). A shift in the peak of the direct responses toward the direction of the head orientation was clearly evident. The peak of the direct response (i.e., the gaze deviation that each observer judged to be directed at them) is −7.23° ± 0.43° for a leftward-facing head, −2.40° ± 0.66° for a direct-facing head, and +5.37° ± 1.53° for a rightward-facing head.

In order to examine whether the prior shifted with head orientation, biases were measured at four test gaze deviations with the use of the mixed discrimination condition for leftward-rotated heads (Figure 3C) and rightward-rotated heads (Figure 3D). The crossover points in the averaged data lie between the gaze deviation that observers judge to be direct when viewing a rotated head (roughly 6° in the same direction as the head) and the actual orientation of the head (30°). The fact that the peaks of the priors lie away from the head rotation is not simply consistent with a greater influence of head orientation on gaze judgments when noise is added to the eyes. If that were the case, the point at which there was no bias in the perceived direction of the noisy relative to the noiseless stimuli would occur when eye and head directions were congruent (i.e., a gaze deviation of 30°). This is clearly not the case in our data, which show a null close to 20° (crossover points are shown in Figures 3C and 3D).

The deviation of the null directions in Figures 3C and 3D away from the head orientation and toward direct indicates that some process must be pulling the perception of gaze toward direct, and we propose that this is a prior to see gaze as direct. However, the deviation of the null directions away from direct and toward the orientation of the head suggests that increased weight is attached to head orientation in estimating gaze direction when eye information is degraded (e.g., Perrett et al. [23] suggest that eye direction is more important than head orientation, but that, when eye direction is uncertain, then head angle can be used as a default). Thus, it appears that there are two processes at play when estimating gaze: a prior to see eye gaze as direct and a bias to rely more heavily on the head orientation in the presence of uncertain or missing eye information.

Here, we report that a fundamental component of social interactions, namely the ability to correctly identify the occurrence of eye contact, can be understood within a Bayesian framework. Why should we have a prior for direct gaze? From a social perspective, direct gaze often signals an intention to engage in communication [1, 24, 25], but it can also signal dominance or a threat [24]. In both cases, the gazer is signaling an imminent interaction. From an evolutionary perspective, it has been proposed [21] that, when one is unsure about a stimulus, assuming direct gaze is simply a safer strategy. Finally, from a developmental perspective, there is evidence that, from a very early age, babies engage more with direct gaze [26]. Whether this finding supports the existence of an innate prior or one that would be learned has important repercussions for clinical populations with deviant eye gaze behavior. Given that the prior may result from a buildup of our experience with gazing stimuli, it would be important to test its plasticity. For example, it has been proposed that professional athletes should spend time studying their opponents in order to optimize their a priori knowledge [16]. It may well be that a similar training regime with eye gaze may be relevant for improving performance in social communication.

Experimental Procedures

Apparatus

A Dell XPS computer running MATLAB was used for stimulus generation, experiment control, and recording subjects’ responses. Programs controlling the experiment incorporated elements of the PsychToolbox [27]. Stimuli were viewed at 57 cm and displayed on a calibrated Sony Trinitron 20SE monitor (1024 × 768 pixels, 75 Hz) driven by the computer’s built-in NVIDIA GeForce GTS 240 graphics card. Two of the authors and four naive observers served as subjects. Experiments received approval from the University of Sydney Human Research Ethics Committee. All observers gave written informed consent.

Stimuli

Grayscale synthetic neutral faces (Daz software), subtending, on average, 15.1 × 11.2 deg, were used. The deviation of each eye was independently controlled with MATLAB procedures, giving us precision down to the nearest pixel for horizontal eye rotations. For the noise conditions, fractal noise (1/f amplitude spectrum) was added to the eyes. The noise contrast was held constant at 6% rms, and all observers used a Michelson contrast of 7.5% between the pupil and sclera of the eyes, except C.C., who used a contrast of 10%. For each observer, the contrasts of the noise and eyes were the same across the different experiments. In eyes-only stimuli, the eye region of the same eight faces was presented by applying an elliptical raised cosine contrast envelope over each eye.
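The two contrast measures mentioned above can be illustrated with a short sketch. It is not the stimulus-generation code used in the study: the function names, luminance values, and patch size are hypothetical, rms contrast is assumed to mean the standard deviation of the noise expressed as a fraction of mean luminance, and the 1/f noise is built by summing cosines with random phases instead of filtering in the Fourier domain.

```python
import math
import random

def michelson_contrast(l_max, l_min):
    """Michelson contrast between two luminance values."""
    return (l_max - l_min) / (l_max + l_min)

def fractal_noise(size, rms_contrast, seed=0):
    """size x size patch of 1/f noise (zero mean), scaled to a target rms."""
    rng = random.Random(seed)
    patch = [[0.0] * size for _ in range(size)]
    half = size // 2
    for fy in range(-half, half + 1):
        for fx in range(0, half + 1):
            if fx == 0 and fy <= 0:
                continue  # skip the DC term and redundant conjugate terms
            amplitude = 1.0 / math.hypot(fx, fy)  # 1/f amplitude spectrum
            phase = rng.uniform(0.0, 2.0 * math.pi)
            for y in range(size):
                for x in range(size):
                    angle = 2.0 * math.pi * (fx * x + fy * y) / size + phase
                    patch[y][x] += amplitude * math.cos(angle)
    values = [v for row in patch for v in row]
    mean = sum(values) / len(values)
    rms = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    gain = rms_contrast / rms
    return [[(v - mean) * gain for v in row] for row in patch]

# Hypothetical sclera and pupil luminances giving a 7.5% Michelson contrast.
print(michelson_contrast(107.5, 92.5))

# 16 x 16 noise patch at 6% rms contrast, as contrast values around zero.
noise = fractal_noise(16, 0.06)
```

Each value in the returned patch is a signed contrast, so a luminance image would be obtained by multiplying by the mean luminance and adding it back.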

Procedure

All procedures used a method of constant stimuli; within a run, all gaze deviations were presented the same number of times in random order.
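A trial list for the method of constant stimuli can be sketched as below. The function name and the seed argument are hypothetical; the offsets and the 12 repetitions per level are those given for the noiseless discrimination condition in this article.

```python
import random

def constant_stimuli_order(levels, repeats, seed=None):
    """Return every level repeated `repeats` times, in random order."""
    rng = random.Random(seed)
    trials = [level for level in levels for _ in range(repeats)]
    rng.shuffle(trials)
    return trials

# Offsets (deg) used when no noise was added, each sampled 12 times per run.
offsets = [-12, -6, -2, -1, 1, 2, 6, 12]
run = constant_stimuli_order(offsets, repeats=12, seed=1)
print(len(run))  # 96 trials in one run
```

Fixing the seed makes a run reproducible; omitting it gives a fresh random order each time.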

Discrimination

Discrimination thresholds were measured with pairs of successively presented stimuli (666 ms interstimulus interval) in the noiseless, noisy, and mixed (stimuli in only one interval had noise) conditions. In a single trial, the same face with different gaze deviations was presented in both stimulus intervals (400 ms), and the observer’s task was to indicate whether the direction of gaze of the face in the second interval was averted to the left or to the right in comparison to the direction of gaze in the first interval (Figure 1B). The position of each face was randomly jittered along the vertical meridian (maximum ± 10 pixels). In order to measure discrimination thresholds, one interval contained a test gaze deviation, and the other contained the test with an offset added to it (comparator). The offset values were chosen from the set (−12°, −6°, −2°, −1°, 1°, 2°, 6°, and 12°) when no noise was added or from the set (−20°, −10°, −5°, −2°, 2°, 5°, 10°, and 20°) when noise was added. Each offset level was sampled 12 times in one run, and a logistic function was fit to the data to obtain an estimate of threshold and bias. Average thresholds and biases were calculated from the six separate estimates and used to determine 95% confidence intervals.
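One way to obtain a mean and 95% confidence interval from six per-run estimates is sketched below. The article does not state how the intervals were computed, so the t-based interval here is an assumption, and the bias values and function name are hypothetical.

```python
import math
import statistics

# Two-tailed 95% critical value of Student's t with 5 degrees of freedom.
T_CRIT_DF5 = 2.571

def mean_ci95(estimates):
    """Mean and t-based 95% confidence interval for six estimates."""
    if len(estimates) != 6:
        raise ValueError("this sketch assumes exactly six estimates")
    mean = statistics.mean(estimates)
    sem = statistics.stdev(estimates) / math.sqrt(len(estimates))
    half_width = T_CRIT_DF5 * sem
    return mean, mean - half_width, mean + half_width

# Hypothetical bias estimates (deg) from six separate runs.
biases = [1.2, 0.8, 1.5, 1.1, 0.9, 1.4]
mean, lower, upper = mean_ci95(biases)
print(f"bias = {mean:.2f} deg, 95% CI [{lower:.2f}, {upper:.2f}]")
```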

Categorization

Observers indicated whether the direction of gaze in the rotated faces (400 ms) was averted left, averted right, or direct with the use of key presses J, K, and L, respectively. Gaze deviations were selected from the set (−9°, −6°, −3°, −1°, 0°, 1°, 3°, 6°, and 9°) and each deviation was presented 12 times per run. Data from six runs were compiled, and separate logistic functions were fitted to the proportion of left and right responses as a function of the gaze deviations. A function for direct responses was calculated by subtracting the sum of the left and right responses from one (Figure 3B). These three functions were fitted as an ensemble with the Nelder-Mead simplex method [28] implemented via MATLAB’s fminsearch function.
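The relationship between the three response functions can be sketched as follows. The parameter values and function names are hypothetical, and a simple grid search over gaze deviation stands in for reading off the peak of the direct-response function; the sketch does not reproduce the ensemble fit performed with `fminsearch`.

```python
import math

def p_left(x, midpoint, scale):
    """Proportion of 'left' responses, decreasing with gaze deviation x."""
    return 1.0 / (1.0 + math.exp((x - midpoint) / scale))

def p_right(x, midpoint, scale):
    """Proportion of 'right' responses, increasing with gaze deviation x."""
    return 1.0 / (1.0 + math.exp(-(x - midpoint) / scale))

def p_direct(x, left_mid, right_mid, scale):
    """Direct responses: one minus the sum of left and right responses."""
    return 1.0 - (p_left(x, left_mid, scale) + p_right(x, right_mid, scale))

def peak_of_direct(left_mid, right_mid, scale, lo=-9.0, hi=9.0, step=0.01):
    """Gaze deviation (deg) at which the direct-response function peaks."""
    n_steps = int(round((hi - lo) / step))
    grid = [lo + i * step for i in range(n_steps + 1)]
    return max(grid, key=lambda x: p_direct(x, left_mid, right_mid, scale))

# Hypothetical category boundaries (deg) and a shared scale parameter.
peak = peak_of_direct(left_mid=-4.0, right_mid=3.0, scale=1.0)
print(f"peak of direct responses: {peak:.2f} deg")
```

With equal scale parameters the peak falls midway between the two category boundaries, so a shift of both boundaries with head orientation shifts the gaze deviation judged as direct.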

Acknowledgments

This work is supported by the Australian Research Council Discovery Project (DP120102589) to C.C. and A.C. C.C. is supported by an Australian Research Council Future Fellowship (FT110100150). A.C. is supported by the Medical Research Council, UK (MC_US_A060_5PQ50).

Supplemental Information

References

- 1. Kleinke C.L. Gaze and eye contact: a research review. Psychol. Bull. 1986;100:78–100.

- 2. Senju A., Johnson M.H. The eye contact effect: mechanisms and development. Trends Cogn. Sci. 2009;13:127–134. doi: 10.1016/j.tics.2008.11.009.

- 3. George N., Conty L. Facing the gaze of others. Neurophysiol. Clin. 2008;38:197–207. doi: 10.1016/j.neucli.2008.03.001.

- 4. Baron-Cohen S. MIT Press; London, England: 1995. Mindblindness: an essay on autism and theory of mind.

- 5. Senju A., Yaguchi K., Tojo Y., Hasegawa T. Eye contact does not facilitate detection in children with autism. Cognition. 2003;89:B43–B51. doi: 10.1016/s0010-0277(03)00081-7.

- 6. Campbell R., Lawrence K., Mandy W., Mitra C., Jeyakuma L., Skuse D. Meanings in motion and faces: developmental associations between the processing of intention from geometrical animations and gaze detection accuracy. Dev. Psychopathol. 2006;18:99–118. doi: 10.1017/S0954579406060068.

- 7. Webster S., Potter D.D. Brief report: eye direction detection improves with development in autism. J. Autism Dev. Disord. 2008;38:1184–1186. doi: 10.1007/s10803-008-0539-9.

- 8. Ristic J., Mottron L., Friesen C.K., Iarocci G., Burack J.A., Kingstone A. Eyes are special but not for everyone: the case of autism. Brain Res. Cogn. Brain Res. 2005;24:715–718. doi: 10.1016/j.cogbrainres.2005.02.007.

- 9. Langdon R., Corner T., McLaren J., Coltheart M., Ward P.B. Attentional orienting triggered by gaze in schizophrenia. Neuropsychologia. 2006;44:417–429. doi: 10.1016/j.neuropsychologia.2005.05.020.

- 10. Gamer M., Hecht H., Seipp N., Hiller W. Who is looking at me? The cone of gaze widens in social phobia. Cogn. Emotion. 2011;25:756–764. doi: 10.1080/02699931.2010.503117.

- 11. Leekam S., Baron-Cohen S., Perrett D., Milders M., Brown S. S. Eye-direction detection: a dissociation between geometric and joint attention skills in autism. Br. J. Dev. Psychol. 1997;15:77–95.

- 12. Perrett D.I., Smith P.A.J., Potter D.D., Mistlin A.J., Head A.S., Milner A.D., Jeeves M.A. Visual cells in the temporal cortex sensitive to face view and gaze direction. Proc. R. Soc. Lond. B Biol. Sci. 1985;223:293–317. doi: 10.1098/rspb.1985.0003.

- 13. Calder A.J., Beaver J.D., Winston J.S., Dolan R.J., Jenkins R., Eger E., Henson R.N.A. Separate coding of different gaze directions in the superior temporal sulcus and inferior parietal lobule. Curr. Biol. 2007;17:20–25. doi: 10.1016/j.cub.2006.10.052.

- 14. Pelphrey K.A., Morris J.P., McCarthy G. Neural basis of eye gaze processing deficits in autism. Brain. 2005;128:1038–1048. doi: 10.1093/brain/awh404.

- 15. Weiss Y., Simoncelli E.P., Adelson E.H. Motion illusions as optimal percepts. Nat. Neurosci. 2002;5:598–604. doi: 10.1038/nn0602-858.

- 16. Körding K.P., Wolpert D.M. Bayesian integration in sensorimotor learning. Nature. 2004;427:244–247. doi: 10.1038/nature02169.

- 17. Stocker A.A., Simoncelli E.P. Noise characteristics and prior expectations in human visual speed perception. Nat. Neurosci. 2006;9:578–585. doi: 10.1038/nn1669.

- 18. Girshick A.R., Landy M.S., Simoncelli E.P. Cardinal rules: visual orientation perception reflects knowledge of environmental statistics. Nat. Neurosci. 2011;14:926–932. doi: 10.1038/nn.2831.

- 19. Tomassini A., Morgan M.J., Solomon J.A. Orientation uncertainty reduces perceived obliquity. Vision Res. 2010;50:541–547. doi: 10.1016/j.visres.2009.12.005.

- 20. Anstis S.M., Mayhew J.W., Morley T. The perception of where a face or television “portrait” is looking. Am. J. Psychol. 1969;82:474–489.

- 21. Langton S.R.H., Honeyman H., Tessler E. The influence of head contour and nose angle on the perception of eye-gaze direction. Percept. Psychophys. 2004;66:752–771. doi: 10.3758/bf03194970.

- 22. Ricciardelli P., Driver J. Effects of head orientation on gaze perception: how positive congruency effects can be reversed. Q J Exp Psychol (Hove) 2008;61:491–504. doi: 10.1080/17470210701255457.

- 23. Perrett D.I., Hietanen J.K., Oram M.W., Benson P.J. Organization and functions of cells responsive to faces in the temporal cortex. Philos. Trans. R. Soc. Lond. B Biol. Sci. 1992;335:23–30. doi: 10.1098/rstb.1992.0003.

- 24. Emery N.J. The eyes have it: the neuroethology, function and evolution of social gaze. Neurosci. Biobehav. Rev. 2000;24:581–604. doi: 10.1016/s0149-7634(00)00025-7.

- 25. Stoyanova R.S., Ewbank M.P., Calder A.J. “You talkin’ to me?” Self-relevant auditory signals influence perception of gaze direction. Psychol. Sci. 2010;21:1765–1769. doi: 10.1177/0956797610388812.

- 26. Farroni T., Csibra G., Simion F., Johnson M.H. Eye contact detection in humans from birth. Proc. Natl. Acad. Sci. USA. 2002;99:9602–9605. doi: 10.1073/pnas.152159999.

- 27. Brainard D.H. The psychophysics toolbox. Spat. Vis. 1997;10:433–436.

- 28. Nelder J.A., Mead R. A simplex method for function minimization. Comput. J. 1965;7:308–313.
