Abstract

When people walk together in groups or crowds they must coordinate their walking speed and direction with their neighbors. This paper investigates how a pedestrian visually controls speed when following a leader on a straight path (one-dimensional following). To model the behavioral dynamics of following, participants in Experiment 1 walked behind a confederate who randomly increased or decreased his walking speed. The data were used to test six models of speed control that used the leader's speed, distance, or combinations of both to regulate the follower's acceleration. To test the optical information used to control speed, participants in Experiment 2 walked behind a virtual moving pole, whose visual angle and binocular disparity were independently manipulated. The results indicate the followers match the speed of the leader, and do so using a visual control law that primarily nulls the leader's optical expansion (change in visual angle), with little influence of change in disparity. This finding has direct applications to understanding the coordination among neighbors in human crowds.

Keywords: visual control, locomotion, pedestrian model, crowd dynamics

Introduction

Collective locomotion is observed throughout the animal kingdom in the form of flocks, herds, and schools. Similarly, humans often walk together in small groups and large crowds. These natural systems exhibit orderly and coherent patterns of motion that are believed to be self-organized (Couzin & Krause, 2003; Helbing, Molnár, Farkas, & Bolay, 2001; Vicsek & Zafeiris, 2012). On this account, global patterns of behavior emerge from the dynamics of local interactions between individuals. The principal challenge is to determine how individuals interact locally with their neighbors and the environment in order to guide behavior.

While many theoretical models of such local interactions have been proposed (Czirók & Vicsek, 2000; Helbing & Molnár, 1995; Reynolds, 1987), they are often only weakly constrained by behavioral evidence (Sumpter, Mann, & Perna, 2012). In some cases, observational or experimental data have been used to fit the parameters of a candidate model (Huth & Wissel, 1994; Johansson, Helbing, & Shukla, 2007; Lemercier et al., 2012; Moussaïd et al., 2009) or to test competing models (Fajen & Warren, 2007; Ondřej, Pettré, Olivier, & Donikian, 2010). Recently, there have been calls for developing pedestrian models that are not only empirically grounded, but also cognitively plausible, in that they incorporate the perceptual abilities of individuals (Goldstone & Gureckis, 2009; Moussaïd, Helbing, & Theraulaz, 2011; Ondřej et al., 2010; Warren & Fajen, 2004).

The steering dynamics model proposed by Fajen and Warren (2003, 2007; Warren & Fajen, 2008) is an empirically grounded, visually based model of human locomotor behavior. Based on a series of experiments on human walking in virtual reality, it consists of a set of ordinary differential equations that define attractors and repellers in the direction of locomotion (heading), and successfully describe how an individual steers to stationary goals, avoids stationary obstacles, intercepts moving targets, and avoids moving obstacles. These four components were intended as building blocks of a full pedestrian model that would characterize locomotion in complex settings and crowds. In a preliminary study, Bonneaud, Rio, Chevaillier, and Warren (2012) analyzed the trajectories of four pedestrians walking to a common goal, and, not surprisingly, found that they could not be reproduced by these four components alone. This finding justifies the pursuit of additional components to capture the complexity of collective behavior in human crowds.

A likely candidate is a velocity coupling, whereby individuals coordinate their speed and align their heading direction with their neighbors. Theoretical studies suggest these components may be fundamental in producing collective patterns of motion, for self-propelled particles that align their directions and travel at the same speed can form stable swarms in the absence of other forces (Vicsek, Czirók, Ben-Jacob, Cohen, & Shochet, 1995). The simplest case of speed and direction coordination, which is quite common in everyday locomotion, is following another pedestrian. In this article we investigate speed control for following in dyads, as a bridge to modeling the local coupling between neighbors in a crowd.

Following has been studied extensively in the context of vehicular traffic since the 1950s (for a review, see Brackstone & McDonald, 1999), including work on the optical information used by drivers (Anderson & Sauer, 2007; Lee & Jones, 1967; Van Winsum, 1999). While there are differences between pedestrian and vehicular locomotion (namely, greater speeds and a greater range of speeds in motor vehicles), this rich literature can be brought to bear on investigations of pedestrian following. Many of the models described in the next section and tested in the present experiments were directly inspired by research on car-following. Recently, pedestrian following has been studied in participants walking the perimeter of a circular arena (Jelić, Appert-Rolland, Lemercier, & Pettré, 2012; Lemercier et al., 2012). Here, our goal is to understand both the behavioral dynamics and visual control of one-dimensional following, when the leader and follower walk on a straight path, with the aim of deriving a speed control law that may generalize to pedestrian groups.

Speed control in following

A complete characterization of locomotor behavior includes two aspects: the behavioral dynamics, a physical description of the observed behavior in terms of physical variables (i.e., what agents are doing), and the control laws that characterize how the behavior is regulated by perceptual information (i.e., how agents do it). This dual modeling approach has two advantages. First, modeling the behavioral dynamics simplifies the problem of identifying a control law, because a physical description of the behavior constrains the possible optical variables that might govern it. Second, the physical description is more general, because the same behavior may be governed by different information in different contexts. Here we introduce several candidate behavioral models of following and several hypotheses about the information used for visual control.

Behavioral dynamics

The aim of behavioral dynamics is to formally model the behavior of the agent–environment system in terms of physical variables and how they change over time, which can be thought of as a behavioral strategy for a given task. There are a number of hypothetical strategies that could, in principle, be used to coordinate speed in following.

Speed

One simple strategy that has been proposed in car-following is for the follower to match the speed of the leader (Lee & Jones, 1967). The follower should accelerate if they are traveling slower than the leader and decelerate if they are traveling faster than the leader. Formally, the follower's acceleration is given by:

$$\ddot{x}_f = c\,(\dot{x}_l - \dot{x}_f) \tag{1}$$

where ẋl is the leader's speed, ẋf is the follower's speed, and c is a free parameter. This can be equivalently stated in terms of the relative speed Δẋ, which is the difference in speed between the leader and follower, or the speed of the leader in the follower's reference frame:

$$\ddot{x}_f = c\,\Delta\dot{x} \tag{2}$$

Thus, acceleration goes to zero as the follower's speed approaches the leader's speed; that is, as the relative speed between them goes to zero. One advantage of this speed-matching strategy is that it does not assume a fixed distance between leader and follower; this is an advantage both for the follower (who does not need to store a reference value) and for the model (which does not require an additional parameter).
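The speed-matching strategy can be sketched as a short simulation. This is a minimal illustration, not the fitting procedure used in the experiments: it assumes forward-Euler integration, a 60 Hz time step, and a made-up leader speed profile. The function name `simulate_speed_matching` is hypothetical, and the default gain c = 1.87 is the best-fit value listed in Table 1.

```python
def simulate_speed_matching(leader_speed, dt, c=1.87, v0=0.0):
    """Integrate follower acceleration = c * (leader speed - follower speed).

    leader_speed: sequence of leader speeds (m/s), one per time step.
    dt: time step in seconds (assumed here, e.g. 1/60 for 60 Hz data).
    c: gain parameter (default taken from Table 1).
    v0: initial follower speed (m/s).
    Returns (follower speeds, follower accelerations), one value per step.
    """
    v = v0
    speeds, accels = [], []
    for v_leader in leader_speed:
        a = c * (v_leader - v)   # acceleration from the current relative speed
        speeds.append(v)
        accels.append(a)
        v += a * dt              # forward-Euler update (an assumption of this sketch)
    return speeds, accels


# Illustrative leader profile: 2 s at 1.2 m/s, then a step up to 1.5 m/s for 4 s.
dt = 1.0 / 60.0
leader = [1.2] * 120 + [1.5] * 240
speeds, accels = simulate_speed_matching(leader, dt, v0=1.2)
```

In this sketch the follower's speed relaxes toward the leader's new speed without any reference distance being stored, which is the property of the strategy highlighted above.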

Distance

Another simple strategy is for the follower to maintain a fixed distance behind the leader, as proposed for car-following by Kometani and Sasaki (1958). When the distance between leader and follower is above a reference value, the follower should accelerate; when it is below that value, the follower should decelerate. Formally, the follower's acceleration ẍf at each time step is given by:

$$\ddot{x}_f = c\,(\Delta x - \Delta x_0) \tag{3}$$

where Δx is the current distance (difference in position) between the leader and follower, Δx0 is the fixed reference distance, and c is a free parameter. Thus, the follower's acceleration goes to zero as the current distance approaches the reference distance. From a modeling perspective, the reference distance might be chosen in several ways: it can be derived from observational data, such as the initial distance between leader and follower (we refer to this as the initial distance model), or it can be a free parameter that represents the “preferred” distance (the free parameter distance model).
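A small simulation may help show how the distance strategy behaves. The sketch below assumes the acceleration is c times the difference between the current and reference distance, a leader walking at constant speed, and a semi-implicit Euler update at 60 Hz. The function name and the starting values are hypothetical, and c = 3.49 is the best-fit value for the initial distance model in Table 1.

```python
def simulate_distance_model(gap0, dx0, leader_speed, dt, n_steps, c=3.49):
    """Simulate a follower whose acceleration is c * (gap - dx0).

    gap0: initial leader-follower distance (m).
    dx0: reference distance (m); either the initial distance or a fitted value.
    leader_speed: constant leader speed (m/s), an assumption of this sketch.
    Returns the list of gaps (m), one per time step.
    """
    x_leader, x_follower = gap0, 0.0
    v_follower = leader_speed        # follower starts at the leader's speed
    gaps = []
    for _ in range(n_steps):
        gap = x_leader - x_follower
        gaps.append(gap)
        a = c * (gap - dx0)          # accelerate if too far, decelerate if too close
        v_follower += a * dt         # semi-implicit Euler: update speed first
        x_follower += v_follower * dt
        x_leader += leader_speed * dt
    return gaps


# Follower starts 0.5 m beyond a 4 m reference distance; simulate 5 s at 60 Hz.
gaps = simulate_distance_model(4.5, 4.0, 1.2, 1.0 / 60.0, 300)
```

Under these assumptions the gap error behaves like an undamped spring: the follower closes the excess distance, overshoots the reference, and keeps oscillating around it, because nothing in the rule removes the relative speed built up along the way.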

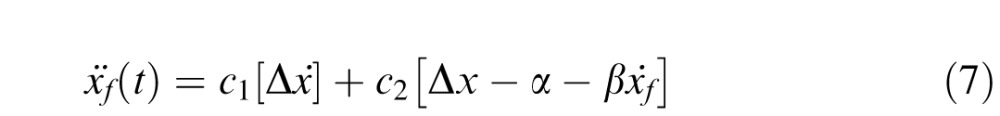

Velocity-based distance

A related strategy involves maintaining a distance that depends on the current velocity, rather than the constant distance described above. This strategy was proposed in the car-following literature by Pipes (1953) and Herman, Montroll, Potts, and Rothery (1959), and is the basis for the “one-car length for every 10 mph” rule of thumb taught in driving schools. It can be formalized by the expression:

$$\ddot{x}_f = c\,(\Delta x - \alpha - \beta\,\dot{x}_f) \tag{4}$$

where ẋf is the follower's speed, Δx is the current distance between leader and follower, and α, β, and c are free parameters. Acceleration goes to zero as the distance between leader and follower approaches the desired velocity-based distance determined by α and β.
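As a rough worked example, assume the velocity-based distance model has the form ẍf = c[Δx − α − βẋf], with α in meters and β in seconds (the units are an assumption; they are not stated here). Setting the acceleration to zero gives the distance at which the follower would settle. The walking speed of 1.2 m/s is an illustrative value, and α = 0.35 and β = 0.75 are the best-fit values listed in Table 1:

$$
\ddot{x}_f = 0 \;\Rightarrow\; \Delta x^{*} = \alpha + \beta\,\dot{x}_f = 0.35 + 0.75 \times 1.2 = 1.25\ \text{m}
$$

On this reading, a faster follower would hold a proportionally larger gap, in the spirit of the car-length rule of thumb.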

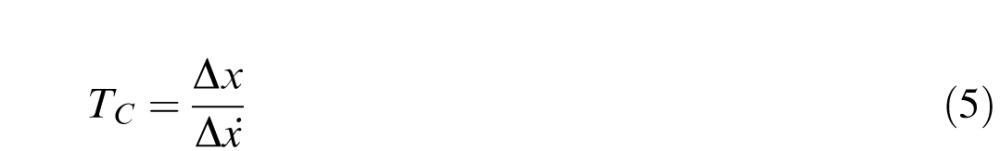

Time-to-contact

The notion of “time to contact” (or “time to collision”) has proven fruitful in research on the visual control of braking (e.g., Lee, 1976). Time to contact, TC, provides an estimate of the time before one collides with an object (or vice versa), given the distance to the object and one's speed, assuming a constant velocity. For following, it provides an estimate of the time before a follower collides with a leader, given the distance between them, Δx, and their relative speed, Δẋ:

$$TC = \frac{\Delta x}{\Delta\dot{x}} \tag{5}$$

A negative value of TC specifies that the follower will collide with the leader at some time in the future, and thus is gaining ground; a positive value implies that the leader is getting away from the follower and, if both maintain their current speeds, the two will not collide. Thus, followers might maintain a value of TC that is neither positive nor negative, to avoid either colliding with the leader or letting him get away. If Δx is zero then TC will be zero, but that means a collision has occurred. Alternatively, if Δẋ is zero then TC will be undefined, but this applies regardless of the value of Δx and is thus a reformulation of the speed-matching strategy, which aims to bring Δẋ to zero (Equation 2). Therefore, a time-to-contact strategy will not be considered further, but the next strategy is based upon its inverse, the “imminence” of collision.
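The sign convention can be made concrete with a short function. This sketch assumes TC is the distance divided by the relative speed, with relative speed defined as leader speed minus follower speed; the function name is hypothetical, and returning `None` for the undefined case is a choice made here for illustration.

```python
def time_to_contact(dx, rel_speed):
    """Return TC = dx / rel_speed, or None when it is undefined.

    dx: distance between leader and follower (m).
    rel_speed: leader speed minus follower speed (m/s).
    A negative result means the follower is closing on the leader;
    a positive result means the leader is pulling away.
    """
    if rel_speed == 0:
        return None  # speeds are matched, so TC is undefined at any distance
    return dx / rel_speed


closing = time_to_contact(2.0, -0.5)   # follower 0.5 m/s faster, 2 m behind
receding = time_to_contact(2.0, 0.5)   # leader 0.5 m/s faster
matched = time_to_contact(2.0, 0.0)    # matched speeds
```

The undefined case is reached whenever the speeds are matched, whatever the distance, which is why the strategy collapses into speed matching.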

Ratio

The Gazis-Herman-Rothery (GHR) model (Gazis, Herman, & Rothery, 1961) is “perhaps the most well-known model” of car-following, according to Brackstone and McDonald (1999). Such a model was used by Lemercier et al. (2012) in their study of pedestrians walking the perimeter of a circular arena. We tested a simplified version, based on the inverse of time-to-contact, that we call the ratio model:

$$\ddot{x}_f = c\,\dot{x}_f^{M}\,\frac{\Delta\dot{x}}{\Delta x^{L}} \tag{6}$$

where ẋf is the follower's speed, Δẋ and Δx are the relative speed and distance, respectively, between leader and follower, and c, M, and L are free parameters. Like the speed-matching strategy, acceleration will go to zero as the relative speed between leader and follower goes to zero, but it is modulated by both the follower's current speed and the relative distance between leader and follower.
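As an illustration, the ratio model can be written as a one-line function. The sketch assumes the GHR-style form c · ẋf^M · Δẋ / Δx^L, which is an interpretation of the description above rather than a confirmed transcription. The function name is hypothetical, and the defaults are the best-fit values from Table 1.

```python
def ratio_accel(v_follower, rel_speed, dx, c=2.09, M=0.004, L=0.16):
    """Follower acceleration for the ratio model (assumed GHR-style form).

    v_follower: follower speed (m/s), must be positive.
    rel_speed: leader speed minus follower speed (m/s).
    dx: distance between leader and follower (m), must be positive.
    """
    if v_follower <= 0 or dx <= 0:
        raise ValueError("speed and distance must be positive")
    return c * (v_follower ** M) * rel_speed / (dx ** L)


# With both exponents set to zero the rule reduces to plain speed matching.
plain = ratio_accel(1.2, 0.3, 2.0, c=2.0, M=0.0, L=0.0)
# With the fitted exponents, the speed and distance terms only rescale the gain.
fitted = ratio_accel(1.2, 0.3, 2.0)
```

Because the fitted exponents M and L are close to zero, the speed and distance factors stay near 1 over walking speeds and following distances, so in this sketch the model behaves much like speed matching with a slightly rescaled gain.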

Linear

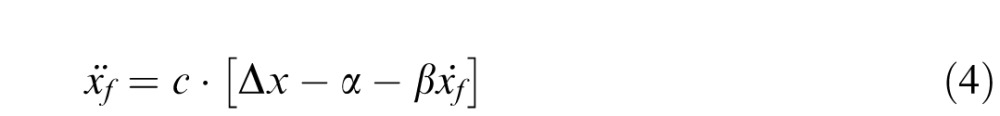

A linear combination of the speed and distance models was proposed for car-following by Helly (1959). Again we use a simplified version, which we call the linear model, given by:

$$\ddot{x}_f = c_1\,\Delta\dot{x} + c_2\,(\Delta x - \alpha - \beta\,\dot{x}_f) \tag{7}$$

where Δẋ and Δx are the relative speed and distance, respectively, between leader and follower, ẋf is the follower's speed, and c1, c2, α, and β are free parameters. In general, acceleration goes to zero when the relative speed is zero (i.e., speed is matched), and the difference between the current distance and the velocity-based reference distance is zero (i.e., distance is maintained).

Experiment 1 was designed to test these six dynamical models of following behavior.

Optical information

The behavioral models described above are written in terms of physical variables, like speed and distance. Of course, observers do not have direct access to these variables, but are coupled to the physical environment by optical information. Followers could potentially use a number of sources of information about the leader's distance, including motion parallax and declination angle; here we focus on visual angle and binocular disparity (Gray & Regan, 1997; Heuer, 1993; Regan & Beverley, 1979; Rushton & Wann, 1999), because they permit direct online control, without an intermediate computation of distance or speed. Moreover, there is evidence from the driving literature that visual angle is sufficient to regulate car-following (Anderson & Sauer, 2007).

Visual angle

The leader's visual angle, α, is a function of the distance between leader and follower, Δx, and the leader's size, w. Assuming the latter is fixed, visual angle depends only on the distance between leader and follower, so maintaining a constant distance behind the leader (the distance strategy) can be achieved by maintaining a constant visual angle of the leader. The change in visual angle, or optical expansion, α̇, is a function of both the relative speed and the distance between the leader and follower; nevertheless, a relative speed of zero (the speed-matching strategy) can be achieved at any distance by cancelling changes in the leader's visual angle (i.e., nulling optical expansion). Anderson and Sauer (2007) proposed that drivers use a weighted sum of these two variables to follow a lead vehicle, which is similar to the linear strategy (Equation 7).
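The geometry behind these statements can be sketched numerically. The code assumes the leader is a flat object of width w viewed head-on, so that α = 2·arctan(w / 2Δx); this standard formula and its time derivative are supplied here for illustration and are not taken from the text. The function names and the 0.5 m width are hypothetical.

```python
import math


def visual_angle(width, dx):
    """Visual angle (rad) of an object of the given width at distance dx."""
    return 2.0 * math.atan(width / (2.0 * dx))


def expansion_rate(width, dx, rel_speed):
    """Rate of change of visual angle (rad/s).

    rel_speed is leader speed minus follower speed, i.e. the rate of change
    of dx. Obtained by differentiating visual_angle with respect to time.
    """
    return -width * rel_speed / (dx ** 2 + width ** 2 / 4.0)


w = 0.5                                     # assumed leader width in meters
closing = expansion_rate(w, 2.0, -0.3)      # follower gaining: angle expands
matched = expansion_rate(w, 2.0, 0.0)       # matched speeds: no expansion
near = abs(expansion_rate(w, 1.0, -0.3))    # same closing speed, 1 m gap
far = abs(expansion_rate(w, 4.0, -0.3))     # same closing speed, 4 m gap
```

Under this geometry, the expansion rate is zero exactly when the relative speed is zero, whatever the distance, while the same relative speed produces a larger expansion rate at shorter distances.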

Binocular disparity and vergence

These behavioral strategies might also be based on binocular disparity and vergence. Absolute (or retinal) disparity refers to the difference between the left and right retinal locations of the projected images of a point in the scene, and is related to the distance of the point (Neri, Bridge, & Heeger, 2004). However, the absolute disparities of points in a static 3D scene depend on the vergence angle of the two eyes, so the retinal disparities vary with the fixation distance. Vergence itself provides information about the fixation distance up to several meters (Cutting & Vishton, 1995), and is used to scale perceived distance from the disparities. During following, we will assume that the follower fixates the leader directly in front of them. A change in the leader's distance produces a corresponding change in the leader's disparity, which elicits a rapid adjustment in vergence angle to refixate the leader (Busettini, Fitzgibbon, & Miles, 2001). Thus, vergence indicates leader distance, and the combined change in disparity and vergence specifies a change in leader distance (relative speed).

Alternatively, followers might use relative disparity, which is the difference between absolute disparities of two points at different distances. This requires a stationary visual surround, so that relative disparity is defined between the leader and the background (Regan, Erkelens, & Collewijn, 1986). However, during following, the depth difference between the leader and the background is necessarily large (or else the former would run into the latter); this creates a large disparity difference that exceeds Panum's fusional area, making relative disparity difficult to detect. It is thus likely that absolute disparity and vergence are more useful to control following than relative disparity. In sum, followers could maintain a constant distance by holding the disparity and vergence of the leader constant, and maintain relative speed at zero by nulling changes in disparity and vergence. For convenience, we will refer to this combination of absolute disparity and vergence as “disparity.”

There is some reason to suspect that disparity might be more effective in pedestrian following than car-following. It has been reported that optical expansion plays a greater role with large objects like cars (Anderson & Sauer, 2007), which subtend large visual angles, while disparity plays a greater role with small objects like cricket balls (Regan & Beverley, 1979). The human body is somewhere in between cars and cricket balls, and typically subtends intermediate visual angles during following, so either variable might dominate, or they could be combined. Rushton and Wann (1999) reported that subjects used both optical expansion and binocular disparity in a one-handed catching task, relying on whichever cue specified the earliest time of arrival.

When the leader speeds up relative to the follower, the leader's uncrossed disparity increases (eliciting a decrease in vergence angle) and its visual angle decreases (optical contraction), and vice versa when the leader slows down. These variables are thus normally coupled, but they can be dissociated in virtual reality. We manipulated disparity and visual angle independently by systematically expanding or shrinking a virtual lead object as it moved in depth relative to the walking participant. If followers rely on only one of these optical variables, they should speed up when it specifies an increase in leader speed (and vice versa), but they should be unaffected by changes in the other variable. On the other hand, if followers rely on both variables, their behavior should be sensitive to changes in both.

In sum, there are several candidate models of following behavior, and several hypotheses about the optical information used to control that behavior. Experiment 1 was designed to test the six following models, including (a) initial distance, (b) free parameter distance, (c) velocity-based distance, (d) speed, (e) ratio of speed and distance, and (f) linear combination of speed and distance. Experiment 2 was then designed to test whether the visual control law is predominantly based on (a) change in visual angle, (b) change in binocular disparity and vergence, or (c) some combination of the two.

Experiment 1: Behavioral dynamics of following

Experiment 1 investigated the behavioral dynamics of following, with the aim of deriving a physical model of following behavior. Data were collected from leader–follower dyads in which the leader was a confederate, and follower acceleration was simulated using six candidate models.

Methods

Participants

Ten undergraduate and graduate students, six female and four male, participated as followers in Experiment 1. None reported having any visual or motor impairment. They were paid for their participation. The study was conducted in accordance with the Declaration of Helsinki.

Apparatus

The experiment was conducted in the Virtual Environment Navigation Laboratory (VENLab) at Brown University. Leader and follower walked in a 12 × 12 m room, while wearing head-trackers affixed to bicycle helmets. Their head position and orientation were recorded at a sampling rate of 60 Hz by a hybrid inertial-ultrasonic tracking system (IS-900, Intersense, Billerica, MA). Note that virtual displays were not present in this experiment.

Procedure

A confederate acted as the “leader,” and the participant acted as the “follower.” The participant was instructed to follow the leader at a constant distance. At the beginning of each trial, they positioned themselves on marks on the floor with an initial distance of either 1 m or 4 m. To initiate each trial, an experimenter gave a verbal “go” command to both walkers, and the leader began walking at a self-selected comfortable speed in a straight line. After a random number of steps (two, three, or four steps), the leader would change speed (increase, decrease, or remain constant) for three steps, and then return to his initial speed. The leader read the number of steps and speed change for each trial from a set of index cards they carried with them. Conditions were randomized before the experiment and presented in that order.

Design

Experiment 1 had a 2 × 3 × 3 (initial distance × speed change × steps) factorial design, with three trials per condition, for a total of 54 trials per participant. All factors were within-subject.

Data analysis

The time series of the leader's and the follower's head position in three dimensions were recorded, but only data in the horizontal x,y plane were analyzed. Each time series was filtered, using a forward and backward fourth-order low-pass Butterworth filter with a cutoff frequency of 1 Hz, to reduce error due to the position tracker and attenuate anterior–posterior accelerations due to the step cycle. To eliminate edge effects from filtering at the end of the trial (endpoint error), the position time series were extended by 2 s using linear extrapolation based on the last 0.5 s of data (Howarth & Callaghan, 2009; Vint & Hinrichs, 1996). The extrapolated data were only used to extend the time series during filtering, and were not used for any subsequent analysis. The filtered position data were differentiated to produce a time series of speed, and differentiated again, to produce a time series of acceleration. Due to tracking errors, 78 trials (14%) were excluded from further analysis.
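Two of the preprocessing steps, endpoint extension and differentiation, can be sketched in plain Python. This is only an illustration under stated assumptions: the Butterworth filtering step that sits between them is omitted, the linear fit is an ordinary least-squares line through the last 0.5 s of samples, and differentiation uses central differences. The function names are hypothetical, and the actual implementation may have differed.

```python
def extend_linear(position, fs, extend_s=2.0, fit_s=0.5):
    """Append extend_s seconds of linearly extrapolated samples.

    position: list of positions (m) sampled at fs Hz.
    The line is fit by least squares to the last fit_s seconds of data.
    """
    n_fit = max(2, int(round(fit_s * fs)))
    tail = position[-n_fit:]
    n = len(tail)
    i_mean = (n - 1) / 2.0
    p_mean = sum(tail) / n
    sxx = sum((i - i_mean) ** 2 for i in range(n))
    sxy = sum((i - i_mean) * (p - p_mean) for i, p in enumerate(tail))
    slope = sxy / sxx                       # meters per sample
    intercept = p_mean - slope * i_mean
    n_ext = int(round(extend_s * fs))
    extension = [intercept + slope * (n - 1 + k) for k in range(1, n_ext + 1)]
    return list(position) + extension


def differentiate(series, fs):
    """Central-difference derivative (one-sided at the two endpoints)."""
    n = len(series)
    out = [0.0] * n
    out[0] = (series[1] - series[0]) * fs
    out[-1] = (series[-1] - series[-2]) * fs
    for i in range(1, n - 1):
        out[i] = (series[i + 1] - series[i - 1]) * fs / 2.0
    return out


fs = 60                                        # tracker sampling rate (Hz)
position = [0.02 * i for i in range(60)]       # 1 s of walking at 1.2 m/s
padded = extend_linear(position, fs)           # padding used only for filtering
speed = differentiate(position, fs)            # m/s
accel = differentiate(speed, fs)               # m/s^2
```

In a full pipeline, the padded series would be low-pass filtered and the padding discarded before differentiating, so that the filter's endpoint transient falls on the extrapolated samples rather than on the data.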

Model fitting

The first 1.5 s of each time series was truncated to eliminate the large initial acceleration associated with the stand-to-walk transition. Leader and follower accelerations were highly correlated during this transient, likely due to the simultaneous “go” command rather than the visual coupling. The overall pattern of results is similar with or without truncation.

To compare the six hypothetical models, each was fit to all time series of follower acceleration. Each trial was simulated by taking as inputs the time series of leader speed (for the speed, velocity-based distance, ratio, and linear models), the time series of leader position (for the initial distance, free parameter distance, velocity-based distance, ratio, and linear models), and/or the initial distance between leader and follower (for the initial distance, velocity-based distance, ratio, and linear models). Performance was evaluated on each trial by computing the correlation coefficient (Pearson's r) between the simulated follower acceleration produced by each model and the observed follower acceleration. The Broyden–Fletcher–Goldfarb–Shanno (Shanno, 1985) method for numerical optimization was used to find the set of parameter values that maximized the mean value of r for each model across all trials using a least-squares criterion. The same parameter values were used for all participants to avoid overfitting and to yield a model that generalizes to novel (untested) pedestrians. For statistical comparisons, mean r values for each participant were computed using Fisher's z′ transform to correct for nonnormality (Martin & Bateson, 1986); the mean z′ values were transformed back into the mean r values reported below. The root-mean-squared-error (RMSE) between the two time series was also analyzed.
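The averaging of correlation coefficients through Fisher's z′ transform can be sketched as follows. This is a minimal illustration using only the standard library. The function names are hypothetical, and the sketch covers the per-trial correlation and the averaging step, not the numerical optimization.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_r_fisher(r_values):
    """Average correlations in z' space, then transform back to r.

    z' = atanh(r); the mean z' is converted back with tanh.
    """
    z = [math.atanh(r) for r in r_values]
    return math.tanh(sum(z) / len(z))


trial_rs = [0.2, 0.8]
averaged = mean_r_fisher(trial_rs)          # differs from the arithmetic mean
arithmetic = sum(trial_rs) / len(trial_rs)
```

Averaging in z′ space gives more weight to high correlations than a plain arithmetic mean does, because the transform stretches values of r near ±1.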

Results

Human data

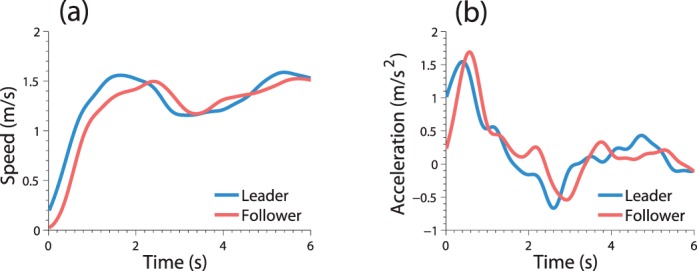

The untruncated time series of leader and follower speed, and leader and follower acceleration, are shown for a representative trial in Figure 1. Notice that in this trial the leader first accelerates quickly (to 1.5 m/s), then decelerates briefly (to 1.1 m/s), and finally returns to a roughly constant speed (1.5 m/s).

Figure 1.

(a) Time series of leader and follower speeds for a representative trial (participant 3, trial 54). (b) Time series of leader and follower accelerations for the same trial.

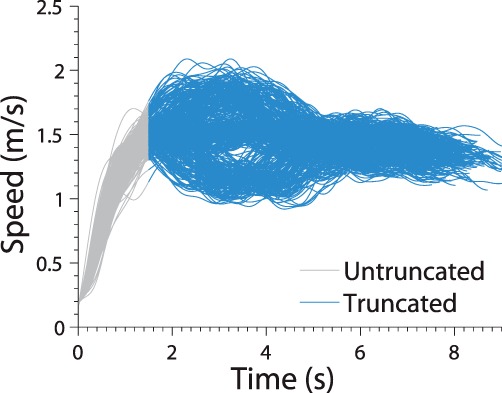

Figure 2 presents the time series of leader speed for every trial, with the analyzed portion in blue. Note that blue traces show three distinct clusters, which correspond to trials in which the leader increased, decreased, or remained at the same speed, indicating that the confederate successfully produced distinct patterns of velocity change.

Figure 2.

Time series of leader speed for all trials. Truncated time series (blue) begins 1.5 s after trial onset, and does not include initial acceleration from standstill (gray).

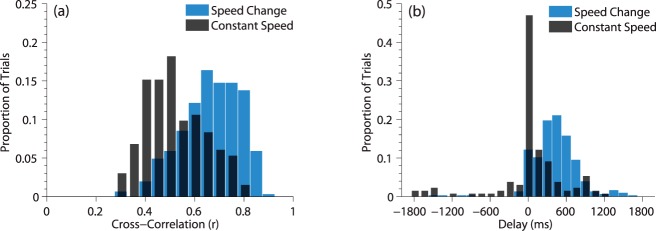

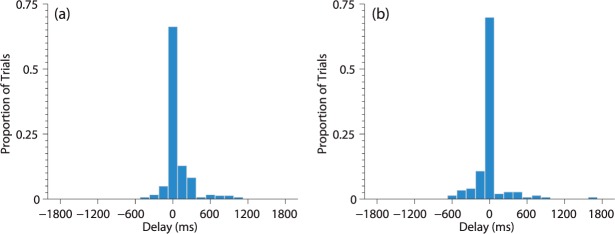

As a measure of the temporal coordination between follower and leader, we computed the cross-correlation between the two time series of acceleration for each trial, varying the time delay from −2000 ms to +2000 ms (positive delays imply that the follower time series lags behind the leader time series). The mean optimal delay in speed-up and slow-down trials did not differ, t(303) = 6.11, p > 0.05, so they were combined; histograms for the resulting speed change condition and the constant speed condition appear in Figure 3.

Figure 3.

Histograms of (a) cross-correlation values and (b) optimal delays between leader and follower data, for speed change (blue) and constant speed (grey) trials.

The cross-correlations were quite high in the speed change condition (mean r = 0.68, median r = 0.67) and slightly lower in the constant speed condition (mean r = 0.53, median r = 0.50), indicating a strong temporal coupling between follower and leader. The mean optimal delay in the speed change condition (M = 420 ms, Mdn = 417 ms, SD = 373 ms) was significantly greater than that in the constant speed condition (M = 25 ms, Mdn = 0 ms, SD = 530 ms), t(435) = 8.87, p < 0.001, which in turn was not significantly different from zero, t(131) = 0.557. By design, there was little variation in leader speed during constant speed trials, yielding lower correlations and poorer estimates of the delay. Therefore, we take the mean optimal delay of 420 ms in the speed change condition as an estimate of the follower's visual–motor delay. This value is similar to estimates from other locomotor tasks (e.g., Benguigui, Baurés, & Le Runigo, 2008; Cinelli & Warren, 2012; Le Runigo, Benguigui, & Bardy, 2010). In sum, the leader produced marked changes in speed, and the follower responded with closely coordinated speed changes after a short delay.
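The delay estimate can be illustrated with a lagged-correlation search. This sketch assumes the optimal delay is the lag, within ±2 s, that maximizes Pearson's r between the leader and follower acceleration series, with positive lags meaning the follower trails the leader. The function names and the synthetic test signal are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation; returns 0.0 if either series has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def optimal_delay(leader, follower, fs, max_delay_s=2.0):
    """Return (delay in ms, r) for the lag with the highest correlation."""
    n = len(leader)
    max_lag = int(round(max_delay_s * fs))
    best_lag, best_r = 0, -2.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:   # follower shifted later in time relative to leader
            a, b = leader[:n - lag], follower[lag:]
        else:          # follower shifted earlier in time
            a, b = leader[-lag:], follower[:n + lag]
        if len(a) < 3:
            continue
        r = pearson_r(a, b)
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag / fs * 1000.0, best_r


# Synthetic check: a smooth bump, and a copy of it delayed by 25 samples.
fs = 60
leader = [math.exp(-((i - 200) / 30.0) ** 2) for i in range(600)]
follower = [math.exp(-((i - 225) / 30.0) ** 2) for i in range(600)]
delay_ms, r_max = optimal_delay(leader, follower, fs)
```

With nearly constant signals the correlation changes little across lags, so the estimated delay is poorly constrained, consistent with the wider spread of delays in the constant speed condition.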

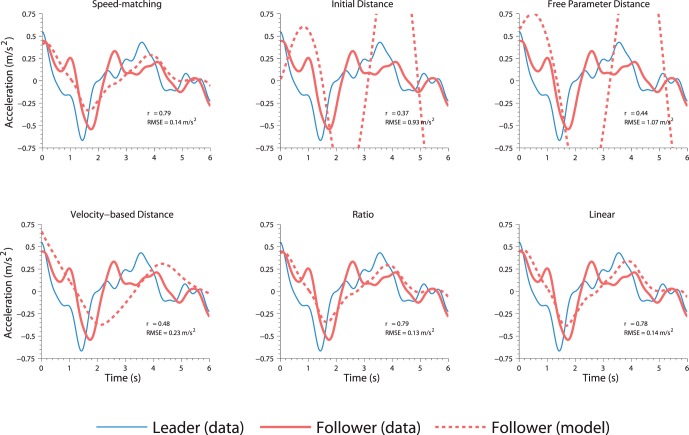

Model evaluation

Figure 4 presents a plot of the simulated and observed follower acceleration (both in red), together with the observed leader acceleration (in blue) for each of the six models for the same sample trial. The main results for each model are listed in Table 1.

Figure 4.

Time series of leader (blue) and follower (red) acceleration for a representative trial (participant 3, trial 54), compared with the predicted acceleration (dashed red) for each of the six models using the best-fit parameters. RMSE and r values indicate goodness of fit between each model and follower data.

Table 1.

Mean correlation coefficients (r), root mean squared error, and parameters for the six behavioral models, for key models with added damping and with delay, and for the optical expansion control law. The r values are from the inverse of Fisher's z′ transform. Duncan grouping indicates significance at p = 0.05; models with the same letter are not significantly different.

| Model | Mean r | Mean RMSE (m/s²) | Number of parameters | Parameter values | Duncan grouping |
| --- | --- | --- | --- | --- | --- |
| Speed-matching | 0.67 | 0.21 | 1 | c = 1.87 | a, d |
| Initial distance | 0.37 | 0.61 | 1 | c = 3.49 | b, e |
| Free parameter distance | 0.40 | 0.82 | 3 | c = 2.69; Δx0,1m = 1.32; Δx0,4m = 3.93 | b |
| Velocity-based distance | 0.52 | 0.80 | 3 | c = 2.44; α = 0.35; β = 0.75 | c |
| Ratio | 0.67 | 0.21 | 3 | c = 2.09; M = 0.004; L = 0.16 | a |
| Linear | 0.67 | 0.21 | 4 | c1 = 2.11; c2 = 0.02; α = 23.31; β = −16.91 | a |
| Speed + damping | 0.68 | 0.21 | 2 | c = 1.93; d = −0.15 | d |
| Distance + damping | 0.37 | 0.66 | 2 | c = 3.35; d = −0.07 | e |
| Speed + delay | 0.68 | 0.21 | 1 | c = 1.83 | d |
| Optical expansion | 0.62 | 0.23 | 1 | c = 13.00 | f |

Statistical tests were performed on the participant means of the z-transformed r values for each model, using the overall best fit parameters for that model. A one-way repeated measures analysis of variance showed significant differences between the models, F(5,45) = 156.96, p < 0.001. Post hoc comparisons for all pairwise combinations were conducted using Bonferroni adjusted alpha levels of 0.0033 (0.05/15). Results indicated that the mean r values for the speed (M = 0.672, SD = 0.111), ratio (M = 0.673, SD = 0.111), and linear (M = 0.673, SD = 0.110) models were not significantly different from one another, p > 0.05, and were all significantly greater than those for the initial distance (M = 0.372, SD = 0.080), free parameter distance (M = 0.398, SD = 0.069), and velocity-based distance (M = 0.518, SD = 0.081) models, p < 0.001. Mean r was significantly greater for the velocity-based distance model than the initial distance and free parameter distance models (p < 0.01), which did not differ from one another (p = 1.00). Thus the follower data were fit significantly more closely by models that contain a relative speed term than by the distance-based models.

We note that the correlations between the time series of speed are generally higher than those for acceleration, but reveal a similar pattern. When using the same best-fit parameter values, the mean r values are 0.87, 0.87, 0.87, 0.56, 0.47, and 0.45, for the speed, ratio, linear, initial distance, free parameter distance, and velocity-based distance models, respectively.

A similar pattern of results holds for statistical tests on RMSE in acceleration, again using the same parameters (see Table 1). A one-way repeated measures analysis of variance showed significant differences in mean RMSE between the models, F(5, 45) = 164.16, p < 0.001. Bonferroni-adjusted post hoc comparisons indicated that the mean RMSE values for the speed (M = 0.210 m/s², SD = 0.055 m/s²), ratio (M = 0.210 m/s², SD = 0.054 m/s²), and linear (M = 0.212 m/s², SD = 0.054 m/s²) models were not significantly different from one another, p = 1.00, but were all significantly lower than those for the initial distance (M = 0.611 m/s², SD = 0.092 m/s²), free parameter distance (M = 0.825 m/s², SD = 0.195 m/s²), and velocity-based distance (M = 0.798 m/s², SD = 0.086 m/s²) models, p < 0.001. Mean RMSE was significantly lower for the initial distance model than for the free parameter and velocity-based distance models (p < 0.01), which did not differ significantly from one another, p = 1.00.

In sum, by all of these measures, following behavior was best described by the simple speed-matching model; the ratio and linear models (which also contain a relative speed term) do not improve upon it, despite having more free parameters (Table 1).

Damping

Many models of human locomotor behavior (Fajen & Warren, 2003, 2007; Garcia, Kuo, Peattie, Wang, & Full, 2000) include a damping term, which reflects resistance to change and acts to reduce oscillations. However, damping is often absent from models of following, both in cars (Anderson & Sauer, 2007; Gazis, Herman, & Rothery, 1961; Helly, 1959; Lee & Jones, 1967) and in pedestrians (Lemercier et al., 2012).

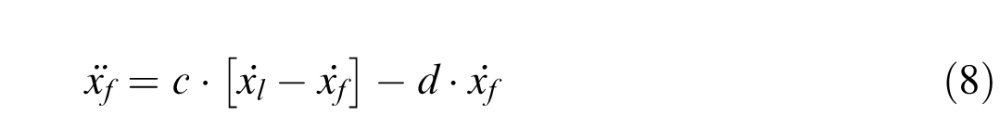

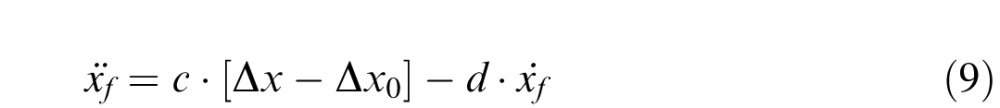

We tested whether adding damping to the speed and initial distance models would better match the human data by modifying Equations 1 and 3 to include a term inversely proportional to the follower's speed, with an additional free parameter d:

|

|

Mean r was slightly higher for the speed-matching model with damping (M = 0.678, SD = 0.106, c = 1.93, d = −0.15) than without (M = 0.672, SD = 0.111, c = 1.87), but this difference was not significant, t(9) = 2.14, p > 0.05 (paired sample t test). Likewise, mean r was slightly but not significantly greater for the initial distance model with damping (M = 0.374, SD = 0.081, c = 3.35, d = −0.068) than without (M = 0.372, SD = 0.080, c = 3.49), t(9) = 1.28, p > 0.05. Furthermore, the best fit for parameter d was very near zero. Taken together, these results indicate that adding a damping term does not improve performance over the simpler speed and initial distance models.

Visual–motor delay

By definition, following involves a unidirectional coupling, because the follower is outside the leader's field of view; thus, followers respond to leaders with a visual–motor delay of about 420 ms, but not vice versa. At first glance, this result suggests that an explicit delay term should be added to the speed-matching model (Equation 2). Such terms are found in many models of following (e.g., Chandler, Herman, & Montroll, 1958; Lemercier et al., 2012), but not all (e.g., Anderson & Sauer, 2007). At present, Equation 4 uses the difference in speed between leader and follower at time t to govern the follower's acceleration at the same instant t. But parameter c in Equations 1 and 2 modulates the follower's rate of response to a given speed difference, implicitly introducing delay into the model. To analyze the empirical adequacy of this solution, we computed the cross-correlation between time series of follower acceleration for the model and the data on each trial. As shown in Figure 5, for both speed change (M = 71 ms, Mdn = 0 ms, SD = 217 ms) and no speed change conditions (M = −0.45 ms, Mdn = 0 ms, SD = 247 ms), the optimal delays are sharply peaked around zero, indicating that the speed-matching model implicitly accounts for visual–motor delay.
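The cross-correlation analysis described above can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the function name `optimal_delay`, the lag search range, and the use of Pearson correlation at each lag are assumptions made for the example.

```python
import numpy as np

def optimal_delay(model_acc, data_acc, dt, max_lag_s=1.0):
    """Return (lag in seconds, r) at which the data best correlate with the model.

    A positive lag means the observed acceleration trails the model's prediction.
    Both series are assumed to have the same length and sampling interval dt.
    """
    model = np.asarray(model_acc, dtype=float)
    data = np.asarray(data_acc, dtype=float)
    n = len(model)
    max_lag = int(round(max_lag_s / dt))
    best_lag, best_r = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = model[:n - lag], data[lag:]   # data shifted later in time
        else:
            a, b = model[-lag:], data[:n + lag]  # data shifted earlier in time
        r = np.corrcoef(a, b)[0, 1]              # Pearson r at this lag
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag * dt, best_r
```

If the model already captured the follower's response timing, the returned lag would cluster near zero across trials, which is the pattern reported in Figure 5.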

Figure 5.

Histogram of optimal delays from the cross-correlation of follower data and follower model, for (a) speed change trials and (b) constant speed trials.

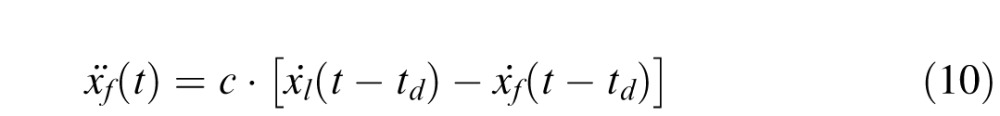

To determine whether performance would be improved with an explicit delay term, we added a constant visual–motor delay to the speed-matching model. We modified Equation 1 so that the follower's acceleration at time t is a function of the speed difference at an earlier time, t – td, where td = 420 ms:

$$\ddot{x}_f(t) = c\,\left[\dot{x}_l(t - t_d) - \dot{x}_f(t - t_d)\right] \tag{8}$$

As before, we fit Equation 8 using numerical optimization to maximize the mean value of r across all trials. A paired-sample t test revealed that this model (M = 0.633, SD = 0.101, c = 1.52, td = 301 ms) failed to perform as well as the simpler model without a delay term (M = 0.672, SD = 0.111, c = 1.87); t(9) = 2.49, p < 0.05. Thus, including an explicit visual–motor delay does not improve the performance of the speed-matching model, at least over the observed range of speed differences.
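To make the delayed model concrete, the sketch below integrates a speed-matching follower with an optional constant delay. It assumes the model form a_f(t) = c[v_l(t − t_d) − v_f(t − t_d)] described in the text; the function name, the forward Euler scheme, and the handling of samples before t_d has elapsed are illustrative choices, not details taken from the study.

```python
import numpy as np

def simulate_follower(leader_speed, dt, c, t_d=0.0, v0=None):
    """Euler-integrate a_f(t) = c * [v_l(t - t_d) - v_f(t - t_d)].

    leader_speed: sampled leader speed (m/s); dt: sampling interval (s);
    c: gain (1/s); t_d: delay (s); v0: initial follower speed (defaults to leader's).
    Returns follower speed and acceleration time series.
    """
    v_l = np.asarray(leader_speed, dtype=float)
    n = len(v_l)
    k = int(round(t_d / dt))              # delay expressed in samples
    v_f = np.empty(n)
    a_f = np.empty(n)
    v_f[0] = v_l[0] if v0 is None else v0
    for i in range(n):
        j = max(i - k, 0)                 # before t_d has elapsed, reuse the first sample
        a_f[i] = c * (v_l[j] - v_f[j])
        if i < n - 1:
            v_f[i + 1] = v_f[i] + a_f[i] * dt
    return v_f, a_f
```

With t_d = 0 this reduces to the simple speed-matching model, so the two variants can be compared on the same leader time series by changing a single argument.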

Discussion

The simple speed-matching model performs just as well as the more complicated ratio and linear models, and significantly better than any of the distance-based models. Moreover, adding a damping term or an explicit delay term does not improve its performance. Taken together, these results support the hypothesis that pedestrian following is best described by a simple physical model in which the follower matches the leader's speed, regardless of distance.

It is surprising that participants were not influenced by the leader's distance, despite being instructed to follow at a constant distance. Distance has been found to increase with velocity in car-following (Filzek & Breuer, 2001), and Lemercier et al. (2012) included a distance term when modeling pedestrians walking the perimeter of a circular arena, although they did not report the degree to which this improved performance over a simple speed-matching model. Here, we find no evidence of a preferred interpersonal distance; rather, followers match the leader's speed independent of distance over a range of 1–4 m. This principle is inconsistent with distance-based models of collective behavior, in which individuals are attracted to distant neighbors and repelled from nearby neighbors, yielding a preferred equilibrium distance (Huth & Wissel, 1994; Reynolds, 1987). In contrast, it is consistent with velocity-based models in which individuals match the speed and direction of their neighbors (Ondřej et al., 2010; Vicsek & Zafeiris, 2012). The advantage of such a strategy is that it yields reliable following and coherent swarms that are robust to variations in density.

We should point out several constraints in Experiment 1 that may limit the generality of this conclusion. First, trials were fairly short, in both distance (12 m) and duration (8 s). It is possible that a preferred distance might only be revealed over longer periods of following. Second, we tested a limited range of initial leader–follower distances (1 and 4 m); although this is fairly typical of pedestrian groups, distance may play a role in speed control at larger distances. Finally, walking speeds were in the range of 1–2 m/s and speed changes were limited to a range of ±0.5 m/s (Figure 2). It is possible that greater variation in speed might reveal a velocity-dependent distance effect. Nevertheless, we find that the speed-matching model performs well in conditions relevant to everyday locomotion, namely following another pedestrian within a few meters at typical walking speeds. This raises the question of the optical information that is used to achieve speed-matching, to which we turn in Experiment 2.

Experiment 2: Visual control of following

Experiment 2 was designed to investigate the optical information used to control walking speed in pedestrian following. Data were collected from participants following a moving object in virtual reality, while the visual angle and binocular disparity of the object were orthogonally manipulated. This allowed us to determine the optical information followers use to match the leader's speed, and to derive a visual control law for one-dimensional following.

It has been observed that when viewing a virtual environment in a head-mounted display (HMD), distances greater than a few meters tend to be underestimated by about 50% (Loomis & Knapp, 2003; Thompson et al., 2004). This underestimation may be attributed in part to the fact that while vergence varies normally in an HMD, accommodation is constant due to the fixed focal length of the HMD lens (the virtual image is typically at 1–2 m). This vergence/accommodation mismatch could result in an underscaling of distance from binocular disparity for distances beyond a few meters. However, it has been shown that after 5–10 min of walking with visual feedback in virtual reality, distance is rescaled and the underestimation is eliminated (Mohler, Creem-Regehr, & Thompson, 2006; Richardson & Waller, 2007). In the present experiment, the virtual “leader” appeared at a distance of only 3 m and participants were given 5 min of familiarization (including five practice trials in which the virtual “leader” moved at a constant speed) in the virtual environment prior to testing, which was sufficient to rescale any underestimation. The results should thus be unaffected by the fact that displays were viewed in an HMD.

Methods

Participants

Twelve undergraduate and graduate students, six male and six female, participated in Experiment 2. None reported having any visual or motor impairment. They were paid $8 for their participation, plus $5 to cover travel expenses. The study was conducted in accordance with the Declaration of Helsinki.

Apparatus

Experiment 2 was conducted in the Virtual Environment Navigation Laboratory (VENLab) at Brown University. Participants walked freely in a 12 × 12 m room while viewing a virtual environment through a head-mounted display (SR-80A, Rockwell Collins, Cedar Rapids, IA). The HMD provided stereoscopic viewing with a 63° × 53° (horizontal × vertical) field of view, resolution of 1280 × 1024 pixels in each eye and complete binocular overlap. Displays were generated on a Dell XPS workstation (Round Rock, TX) at a frame rate of 60 fps, using the Vizard software package (WorldViz, Santa Monica, CA). Head position and orientation were recorded as in Experiment 1. Head coordinates from the tracker were used to update the display with a latency of approximately 50 ms (three frames).

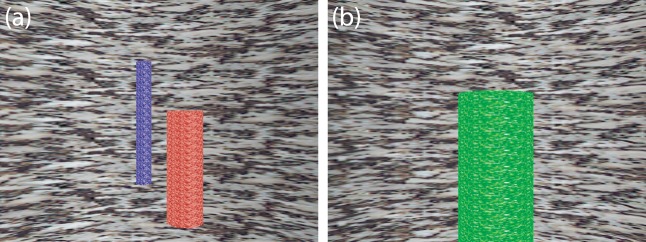

Displays

The virtual environment (see Figure 6) included a visual surround consisting of a distant, large vertical cylindrical surface (radius 500 m) mapped with a grayscale granite texture, but no ground plane or horizon. A blue home pole (radius 0.3 m, height 1.6 m) with a granite texture appeared at the center of the environment, and a red target pole (radius 0.3 m, height 1.6 m) appeared in front of the participant, at an initial distance of 3 m.

Figure 6.

(a) First-person view of the virtual display used in Experiment 2. The blue pole is the “home” pole, which participants walk to before turning to face the red “target” pole. (b) First-person view of the target pole during following. The target pole turns green and begins moving after a button press by the participant.

Procedure

To begin each trial, participants stood at the blue home pole, faced the red target pole, and pushed a button on a handheld mouse, which caused the target pole to turn green. A sound effect (“boing!”) provided feedback that the button press was successful. After 1 s, the green target pole began moving on a straight path away from the participant in depth. During the first 0.5 s of the trial, the pole's velocity increased linearly from 0 to 0.8 m/s. Its speed then remained constant for a variable amount of time (M = 2.5 s, SD = 1 s) until a “manipulation” changed the target speed specified by binocular disparity (the “disparity-specified speed”) or by visual angle (the “expansion-specified speed”) for 3 s (see Table 2).

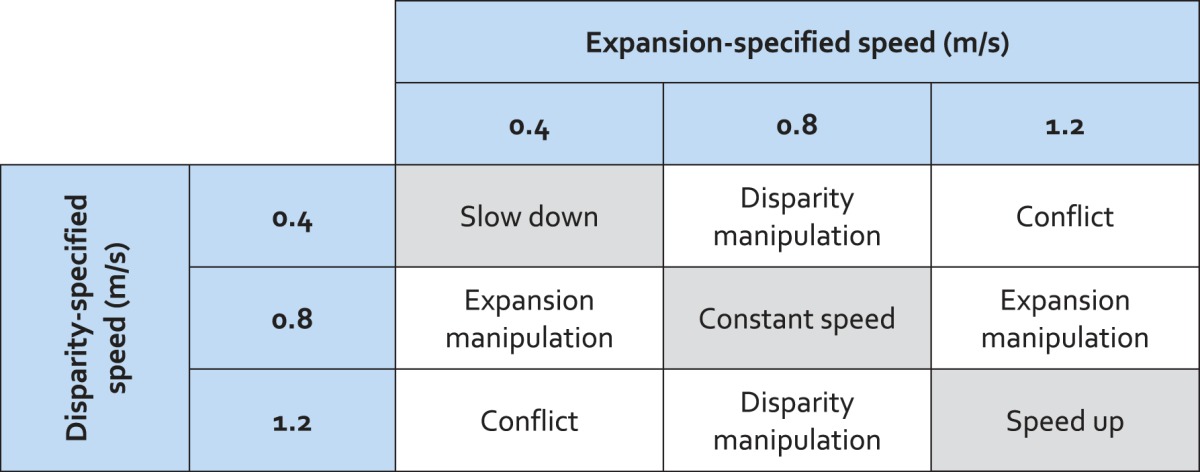

Table 2.

Matrix of visual manipulation conditions in Experiment 2. The target pole's initial speed is 0.8 m/s, so a speed of 0.4 m/s specifies a slow down while 1.2 m/s specifies a speed up. Shaded cells signify conditions in which both sources of information are congruent (specify the same speed); unshaded cells signify that the sources are incongruent (specify different speeds).

Binocular disparity was manipulated by instantaneously increasing the pole's speed (from 0.8 m/s to 1.2 m/s), decreasing it (from 0.8 to 0.4 m/s), or holding it constant (at 0.8 m/s); the pole remained at the new speed for 3 s, and then instantaneously returned to its original speed (0.8 m/s). Visual angle was manipulated by growing or shrinking the target pole so that its visual angle was consistent with a pole increasing or decreasing its speed for 3 s (from 0.8 to 0.4 or 1.2 m/s). This was accomplished by simulating an invisible “canonical” pole of the same size moving at the desired speed, and uniformly growing or shrinking the actual target pole so that its visual angle matched that of the canonical pole at every time step. Thus, a change in disparity-specified speed (−0.4, 0.0, or +0.4 m/s) was isolated by simultaneously decreasing the target's speed and size (or increasing them), while a change in expansion-specified speed (−0.4, 0.0, or +0.4 m/s) was isolated by increasing the target's size only (or decreasing it). The three levels of each optical variable were fully crossed, for a total of nine conditions (Table 2).
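The size manipulation can be illustrated with a short sketch. It assumes the standard visual angle relation α = 2·atan(w / 2d) and distances measured from the observer's eye; the function names and the numerical values in the usage note are hypothetical and are not taken from the experiment software.

```python
import math

def visual_angle(width, distance):
    """Visual angle (rad) subtended by an object of a given width at a given distance."""
    return 2.0 * math.atan(width / (2.0 * distance))

def scaled_width(width, actual_distance, canonical_distance):
    """Width the displayed pole needs so that, at actual_distance, it subtends the
    same visual angle as a pole of `width` at canonical_distance."""
    return width * actual_distance / canonical_distance
```

For example, if the displayed pole is 3.8 m away while the invisible canonical pole is 4.2 m away, `scaled_width(0.6, 3.8, 4.2)` shrinks the displayed pole so that its visual angle matches that of the farther canonical pole, while its disparity-specified distance remains 3.8 m.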

Participants were instructed to follow behind the pole as it moved across the room, “as if you were following a friend down the street.” No further instructions were provided, and in particular no instructions were given regarding distance or speed.

Design

Experiment 2 had a 3 × 3 (disparity change × expansion) factorial design, with eight trials per condition, for a total of 72 trials per participant. All variables were within-subject, and trials were presented in a random order.

Data Analysis

The time series of head position were processed as in Experiment 1, to yield a time series of leader and follower speed for each trial. The change in walking speed (ΔSpeed) during the 3 s visual manipulation on each trial was computed by subtracting the mean speed in the 1 s prior to the manipulation from the mean speed in the last 1 s of the manipulation. Thus, a positive value of ΔSpeed indicates that the participant sped up during the manipulation, while a negative value indicates that the participant slowed down. The mean of these ΔSpeed values was computed for each participant and each condition, and used as a measure of followers' behavioral response to each visual manipulation.
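A minimal sketch of the ΔSpeed measure follows, using the stated sign convention (positive values mean the participant sped up). The function name, argument names, and window boundaries are assumptions made for illustration.

```python
import numpy as np

def delta_speed(t, speed, onset, duration=3.0, window=1.0):
    """Mean speed in the last `window` s of the manipulation minus
    mean speed in the `window` s before manipulation onset.

    t: sample times (s); speed: follower speed (m/s); onset: manipulation start (s).
    """
    t = np.asarray(t, dtype=float)
    speed = np.asarray(speed, dtype=float)
    before = (t >= onset - window) & (t < onset)
    late = (t >= onset + duration - window) & (t < onset + duration)
    return speed[late].mean() - speed[before].mean()
```

Per-condition values would then be obtained by averaging this quantity over the trials of each participant in each cell of the design.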

Results and discussion

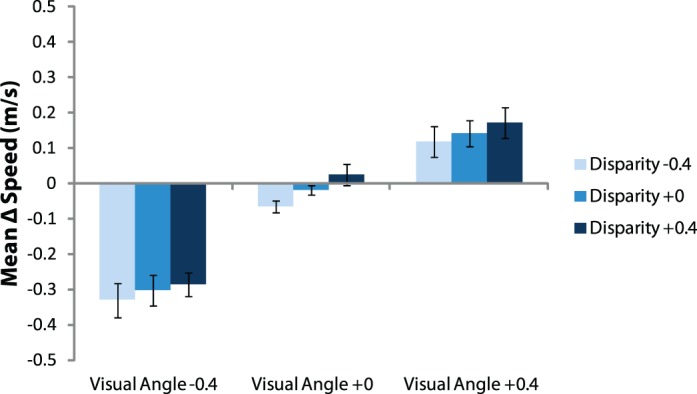

The observed changes in walking speed produced by visually specified changes in target speed of ±0.4 m/s are presented in Figure 7. On average, an expansion-specified speed decrease elicited a comparable decrease in walking speed (ΔSpeed = −0.30 m/s), a constant visual angle yielded no change in walking speed (ΔSpeed = −0.020 m/s), and an expansion-specified increase in target speed elicited a moderate increase in walking speed (ΔSpeed = 0.14 m/s). In contrast, disparity-specified changes in target speed of ±0.4 m/s elicited very little response. On average, all three disparity conditions produced a small decrease in walking speed, including a disparity-specified speed decrease (ΔSpeed = −0.031 m/s), constant disparity (ΔSpeed = −0.060 m/s), and, surprisingly, a disparity-specified speed increase (ΔSpeed = −0.093 m/s).

Figure 7.

Mean changes in speed for the nine visual manipulation conditions, averaged across trials and participants. Positive values specify an increase in walking speed as a result of the manipulation; negative values specify a decrease in speed. Error bars represent standard error of the mean.

This pattern of results (a large effect of expansion and a minimal effect of change in disparity) was observed in all nine conditions, regardless of whether the optical variables were congruent or in conflict (Figure 7). A two-way analysis of variance confirms a significant main effect of expansion, F(2, 144) = 221.58, p < 0.001, a marginally significant effect of disparity, F(2, 144) = 3.149, p = 0.046, and no interaction, F(4, 142) = 0.151, p > 0.05. Measures of effect size indicate that optical expansion (ω² = 0.690) explained a far greater proportion of the variance in follower speed than changes in disparity (ω² = 0.007).

We also noted an asymmetry in the follower's response to a decrease compared to an increase in leader speed, indicating a greater influence of optical expansion than optical contraction on walking speed. The magnitude of ΔSpeed for expansion (M = 0.31 m/s, SD = 0.13 m/s) was twice that for contraction (M = 0.14 m/s, SD = 0.13 m/s), t(100) = 6.30, p < 0.001. This may reflect a fundamental asymmetry in following behavior—for example, followers may prioritize deceleration to avoid collisions in response to optical expansion (emergency braking) over acceleration in response to optical contraction.

To further analyze the relative influence of these optical variables on walking speed, we performed a stepwise multiple linear regression. Both expansion (β = 0.85, p < 0.001) and disparity (β = 0.10, p = 0.015) were significant predictors of ΔSpeed (adjusted R² = 0.737), but the expansion weight was 8.5 times the disparity weight. A model that included expansion alone accounted for 73% of the variance (adjusted R² = 0.728); thus adding disparity to the model explained only an additional 1% of the variance. These results indicate that followers are sensitive to information from both optical expansion and binocular disparity, but rely primarily on expansion.

In sum, the results of Experiment 2 indicate that optical expansion, rather than change in binocular disparity and vergence, is the primary optical information used to control speed in human following. Walking speed varied significantly in response to an optical expansion and contraction, while corresponding changes in disparity elicited only marginal changes in speed.

General discussion

The results of Experiment 1 show that followers match the speed of the leader, rather than maintaining a constant distance or using a combination of speed and distance, at least over distances of 1 to 4 m. The results of Experiment 2 show that followers primarily rely on optical expansion to regulate their walking speed. Synthesizing these two results allows us to formulate a visual control law for speed control in pedestrian following that can account for the observed behavior.

Visual control law for following

The simplest speed control law of this form would be one in which the follower nulls the optical expansion of the leader; that is, the follower accelerates if the leader's visual angle is decreasing, decelerates if it is increasing, and maintains the current speed if visual angle is constant. Formally, this control law for following by optical expansion can be simply stated as:

$$\ddot{x}_f = -b\,\dot{\alpha} \tag{9}$$

where b is a constant and α̇ is the rate of optical expansion of the leader. It can be shown that this control law is mathematically related to the speed-matching model (Equation 2) for a leader of constant size; a derivation is provided in Appendix A.

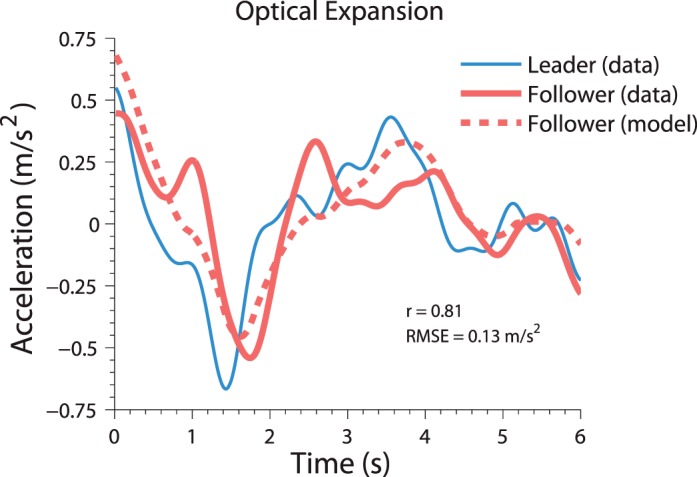

To test this control law, we fit Equation 9 to the data from Experiment 1 as before, using numerical optimization to maximize the mean value of z′-transformed r over all trials. The only input to the model was the rate of change of the leader's visual angle computed from the data, and the output was the follower's predicted acceleration. Figure 8 presents a simulation of the same sample trial as in Figure 3. A paired-sample t test on the mean z′-transformed r values showed that the speed-matching model (M = 0.672, SD = 0.111) provided a slightly better fit than the optical expansion control law (M = 0.624, SD = 0.086, b = 13.00), t(9) = 7.81, p < 0.001. This result suggests that followers rely primarily, but perhaps not entirely, on optical expansion to regulate their speed, consistent with the results of Experiment 2. Binocular disparity may provide additional information necessary to perceive relative speed.
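The behavior of an expansion-nulling follower can be explored with a small simulation. The sketch below assumes the control law a_f = −b·(dα/dt) with α = 2·atan(w / 2Δx), a forward Euler update, and a backward finite difference for the rate of expansion. The function name, the leader width w, and the initial conditions are hypothetical values chosen for illustration.

```python
import numpy as np

def simulate_expansion_follower(x_leader, dt, b, w, x0, v0):
    """Euler-integrate a_f = -b * d(alpha)/dt for a follower behind a leader.

    x_leader: sampled leader positions (m); b: gain; w: leader width (m);
    x0, v0: initial follower position (m) and speed (m/s).
    Returns follower position, speed, and acceleration time series.
    """
    x_l = np.asarray(x_leader, dtype=float)
    n = len(x_l)
    x_f, v_f, a_f = np.empty(n), np.empty(n), np.zeros(n)
    x_f[0], v_f[0] = x0, v0
    alpha_prev = 2.0 * np.arctan(w / (2.0 * (x_l[0] - x_f[0])))
    for i in range(1, n):
        # advance the follower using the acceleration from the previous step
        v_f[i] = v_f[i - 1] + a_f[i - 1] * dt
        x_f[i] = x_f[i - 1] + v_f[i - 1] * dt
        # visual angle of the leader at the new gap, and its rate of change
        alpha = 2.0 * np.arctan(w / (2.0 * (x_l[i] - x_f[i])))
        a_f[i] = -b * (alpha - alpha_prev) / dt
        alpha_prev = alpha
    return x_f, v_f, a_f
```

In this sketch a follower that starts slower than the leader sees the leader's visual angle shrink, accelerates, and settles at the leader's speed at a somewhat larger gap, which illustrates how nulling expansion yields speed matching without regulating distance.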

Figure 8.

Follower acceleration for the representative trial in Figure 2 (participant 3, trial 54), as observed and as predicted by the optical expansion model. RMSE and r values indicate goodness of fit.

Further questions

Armed with an understanding of speed control in one-dimensional following, we can pursue further questions about visually guided pedestrian interactions. The first question is whether the speed-matching model generalizes from following, with a unidirectional coupling, to dyads walking side-by-side, with a bidirectional coupling. Results from Page and Warren (2012, 2013) indicate that the answer is yes: the speed-matching model again best accounts for side-by-side walking, and offers a general description of the behavioral dynamics of coordinating walking speed with one's neighbors. This is somewhat surprising, because it shows that dyads do not actually prefer to walk side-by-side.

The second question involves generalizing from one dimension to the case of two-dimensional following. In this situation, the follower must now regulate not only speed, but also heading direction, essentially matching the leader's velocity. A simple approach would be to do so by controlling speed and heading independently, combining the speed-matching model (Equation 2) with a direction-matching or heading alignment model (Bonneaud & Warren, 2013; Vicsek et al., 1995), in which the difference in heading direction is nulled. One visual control law for heading alignment might be the constant bearing (CB) strategy, which provides a good description of how pedestrians intercept a moving target (Fajen & Warren, 2007): steer to null change in the target's bearing direction. When the pedestrian and the target move at approximately the same speed, the CB strategy yields parallel heading directions. In a preliminary analysis, Rhea, Cohen, and Warren (2009) found that the CB model reproduced the follower's path when the follower's speed was greater than or equal to the leader's speed. However, when the follower's speed was lower than the leader's, the model often generated a mirror image of the observed path, because this solution also maintains the leader at a constant bearing. We are currently pursuing this problem experimentally.

A third question is whether the following model can be scaled up from dyads to account for the collective behavior of pedestrian groups. It is possible that local speed-matching and heading alignment provide the basic coupling between neighbors that yields coherent crowd behaviors. For example, can speed-matching explain how individuals in a group adopt a common walking speed? In a preliminary analysis of groups of four pedestrians walking to a goal, Rio, Bonneaud, and Warren (2012) showed that the speed-matching model (Equation 2) predicts the acceleration of the two rear pedestrians based on the speed of the two front pedestrians. Further experiments are in progress to determine whether the speed-matching strategy extends to larger crowds.

Finally, a model for following based on speed-matching or optical expansion may have applications to pedestrian models for animation and simulation, assistive technology for mobility, and social and swarm robotics (Gockley, Forlizzi, & Simmons, 2007; Monteiro & Bicho, 2010).

Conclusion

Pedestrian following is an important locomotor behavior, both because it is common in our everyday experience and because it forms a basis for the more complex behavior of small groups and large crowds. Here we have characterized the behavioral dynamics of one-dimensional following using a speed-matching model, which can be implemented by a visual control law based on nulling optical expansion. This model provides a basis for understanding following in two dimensions and coordination among neighbors in a crowd. In addition to the elementary behaviors of steering, obstacle avoidance, and interception, new components for speed-matching and heading alignment are key steps toward a full model of pedestrian and crowd behavior (Bonneaud & Warren, 2013; Warren & Fajen, 2008).

Acknowledgments

This research was supported by National Institutes of Health Grant R01EY010923 awarded to William Warren and the Link Foundation Advanced Training and Simulation Fellowship awarded to Kevin Rio. The authors wish to thank Jonathan A. Cohen, Henry Harrison, and Nicholas Varone for their assistance with data collection and analysis.

Commercial relationships: none.

Corresponding author: Kevin W. Rio.

Email: kevin_rio@brown.edu.

Address: Department of Cognitive, Linguistic, and Psychological Sciences, Brown University, Providence, RI, USA.

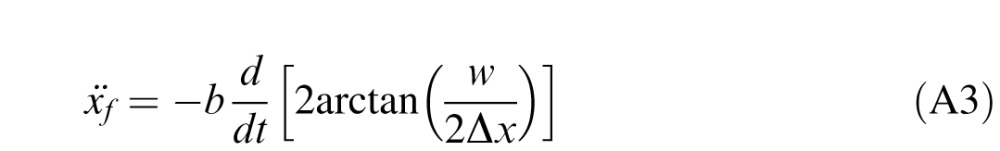

Appendix A

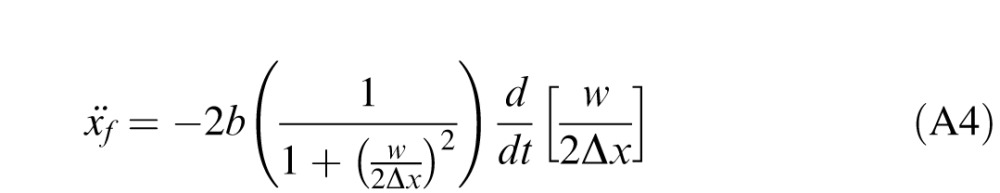

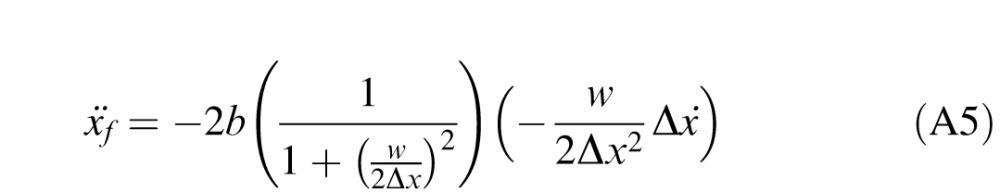

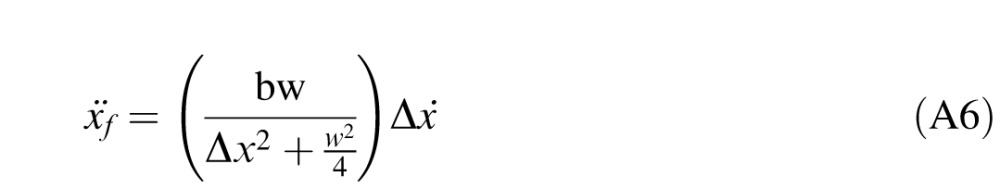

The visual angle control law (Equation 9) is:

$$\ddot{x}_f = -b\,\dot{\alpha} \tag{A1}$$

where α is the visual angle that the leader subtends at the follower's eye. We can rewrite visual angle in terms of real-world variables:

$$\alpha = 2\tan^{-1}\left(\frac{w}{2\,\Delta x}\right) \tag{A2}$$

where w is the width of the leader and Δx is the distance between leader and follower. Substituting this formula into Equation A1 yields:

$$\ddot{x}_f = -b\,\frac{d}{dt}\left[2\tan^{-1}\left(\frac{w}{2\,\Delta x}\right)\right] \tag{A3}$$

Taking the derivative in Equation A3, using the chain rule, yields:

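$$\ddot{x}_f = -2b \cdot \frac{1}{1 + \left(\frac{w}{2\,\Delta x}\right)^2} \cdot \frac{d}{dt}\left(\frac{w}{2\,\Delta x}\right) \tag{A4}$$

$$\ddot{x}_f = -2b \cdot \frac{1}{1 + \frac{w^2}{4\,\Delta x^2}} \cdot \left(-\frac{w}{2\,\Delta x^2}\right)\frac{d(\Delta x)}{dt} \tag{A5}$$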

Simplifying and combining terms in Equation A5 yields:

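$$\ddot{x}_f = \left(\frac{b\,w}{\Delta x^2 + w^2/4}\right)\left(\dot{x}_l - \dot{x}_f\right) \tag{A6}$$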

Thus, the expansion model (Equation A6) resembles the speed-matching model (Equation 2), except that the coefficient for the expansion model (in parentheses) is a nonlinear function of leader size w and distance Δx.
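The relation between the two models can be checked numerically. The sketch below assumes α = 2·atan(w / 2Δx), for which the chain rule gives a coefficient of b·w / (Δx² + w²/4) on the relative speed. The function names and the step size h are illustrative assumptions.

```python
import math

def alpha(w, gap):
    """Visual angle (rad) of a leader of width w at distance gap."""
    return 2.0 * math.atan(w / (2.0 * gap))

def expansion_gain(b, w, gap):
    """Distance-dependent coefficient multiplying relative speed in the expansion model."""
    return b * w / (gap ** 2 + w ** 2 / 4.0)

def accel_from_expansion(b, w, gap, rel_speed, h=1e-6):
    """-b * d(alpha)/dt by central finite difference, where d(gap)/dt = rel_speed."""
    alpha_dot = (alpha(w, gap + rel_speed * h) - alpha(w, gap - rel_speed * h)) / (2.0 * h)
    return -b * alpha_dot
```

Evaluating `expansion_gain` at gaps of 1 m and 4 m shows how strongly the effective gain falls off with distance, in contrast to the constant gain c of the speed-matching model.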

Contributor Information

Kevin W. Rio, Email: kevin_rio@brown.edu.

Christopher K. Rhea, Email: ckrhea@uncg.edu.

William H. Warren, Email: william_warren_jr@brown.edu.

References

- Anderson G. J., Sauer C. W. (2007). Optical information for car following: The driving by visual angle (DVA) model. Human Factors, 49, 878–896.

- Benguigui N., Baurés R., Le Runigo C. (2008). Visuomotor delay in interceptive actions. Behavioral and Brain Sciences, 31 (2), 200–201.

- Bonneaud S., Rio K., Chevaillier P., Warren W. H. (2012). Accounting for patterns of collective behavior in crowd locomotor dynamics for realistic simulations. In Pan Z., Cheok A. D., Muller W., Chang M., Zhang M. (Eds.), Lecture Notes in Computer Science: Transactions on Edutainment VII (pp. 1–11). Heidelberg, Germany: Springer.

- Bonneaud S., Warren W. H. (2013). Dynamics of pedestrian behavior and emergent crowd phenomena. Manuscript submitted for publication.

- Brackstone M., McDonald M. (1999). Car-following: A historical review. Transportation Research Part F, 2, 181–196.

- Busettini C., Fitzgibbon E. J., Miles F. A. (2001). Short-latency disparity vergence in humans. Journal of Neurophysiology, 85, 1129–1152.

- Chandler R. E., Herman R., Montroll E. W. (1958). Traffic dynamics: Studies in car following. Operations Research, 6 (2), 165–184.

- Cinelli M., Warren W. H. (2012). Do walkers follow their heads? Investigating the role of head rotation in locomotor control. Experimental Brain Research, 219, 175–290.

- Couzin I. D., Krause J. (2003). Self-organization and collective behavior in vertebrates. Advances in the Study of Behavior, 32, 1–75.

- Cutting J. E., Vishton P. M. (1995). Perceiving layout and knowing distances: The integration, relative potency, and contextual use of different information about depth. In Epstein W., Rogers S. (Eds.), Handbook of perception and cognition: Perception of space and motion (pp. 69–117). San Diego, CA: Academic Press.

- Czirók A., Vicsek T. (2000). Collective behavior of interacting self-propelled particles. Physica A: Statistical Mechanics and its Applications, 281 (1), 17–29.

- Fajen B. R., Warren W. H. (2003). Behavioral dynamics of steering, obstacle avoidance, and route selection. Journal of Experimental Psychology: Human Perception and Performance, 29 (2), 343–362.

- Fajen B. R., Warren W. H. (2007). Behavioral dynamics of intercepting a moving target. Experimental Brain Research, 180, 303–319.

- Filzek B., Breuer B. (2001). Distance behaviour on motorways with regard to active safety: A comparison between adaptive-cruise-control (ACC) and driver. Proceedings of the International Technical Conference on Enhanced Safety of Vehicles, 201, 1–8.

- Garcia M., Kuo A., Peattie A., Wang P., Full R. (2000). Damping and size: Insights and biological inspiration. Proceedings of the First International Symposium on Adaptive Motion of Animals and Machines, 1–7.

- Gazis D. C., Herman R., Rothery R. W. (1961). Nonlinear follow the leader models of traffic flow. Operations Research, 9, 545–567.

- Gockley R., Forlizzi J., Simmons R. (2007). Natural person-following behavior for social robots. Proceedings of the 2007 ACM/IEEE International Conference on Human-Robot Interaction (pp. 17–24). New York: Association for Computing Machinery.

- Goldstone R. L., Gureckis T. M. (2009). Collective behavior. Topics in Cognitive Science, 1 (3), 412–438.

- Gray R., Regan D. (1997). Accuracy of estimating time to collision using binocular and monocular information. Vision Research, 38, 499–512.

- Helbing D., Molnár P. (1995). Social force model for pedestrian dynamics. Physical Review E, 51 (5), 4282–4286.

- Helbing D., Molnár P., Farkas I. J., Bolay K. (2001). Self-organizing pedestrian movement. Environment and Planning B, 28 (3), 361–384.

- Helly W. (1959). Simulation of bottlenecks in single lane traffic flow. Proceedings of the Symposium on Theory of Traffic Flow (pp. 207–238). New York: Elsevier.

- Herman R., Montroll E., Potts R., Rothery R. (1959). Traffic dynamics: Analysis of stability in car following. Operations Research, 7, 86–106.

- Heuer H. (1993). Estimates of time to contact based on changing size and changing target vergence. Perception, 22, 549–563.

- Howarth S. J., Callaghan J. P. (2009). The rule of 1s for padding kinematic data prior to digital filtering: Influence of sampling and filter cutoff frequencies. Journal of Electromyography and Kinesiology, 19 (5), 875–881.

- Huth A., Wissel C. (1994). The simulation of fish schools in comparison with experimental data. Ecological Modelling, 75, 135–146.

- Jelić A., Appert-Rolland C., Lemercier S., Pettré J. (2012). Properties of pedestrians walking in line: Fundamental diagrams. Physical Review, 86 (4), 046111.

- Johansson A., Helbing D., Shukla P. K. (2007). Specification of the social force pedestrian model by evolutionary adjustment to video tracking data. Advances in Complex Systems, 10 (supp02), 271–288.

- Kometani E., Sasaki T. (1958). On the stability of traffic flow. Journal of the Operations Research Society of Japan, 2, 11–26.

- Lee D. N. (1976). A theory of visual control of braking based on information about time-to-collision. Perception, 5, 437–459.

- Lee J., Jones J. (1967). Traffic dynamics: Visual angle car following models. Traffic Engineering and Control, 348–350.

- Lemercier S., Jelic A., Kulpa R., Hua J., Fehrenbach J., Degond P. … Pettré, J. (2012). Realistic following behaviors for crowd simulation. Computer Graphics Forum, 31 (2), 489–498.

- Le Runigo C., Benguigui N., Bardy B. G. (2010). Visuo-motor delay, information-movement coupling, and expertise in ball sports. Journal of Sports Sciences, 28 (3), 327–337.

- Loomis J. M., Knapp J. M. (2003). Visual perception of egocentric distance in real and virtual environments. In Hettinger L. J., Haas M. W. (Eds.), Virtual and adaptive environments (pp. 21–46). Mahwah, NJ: Erlbaum.

- Martin P., Bateson P. (1986). Measuring behaviour. Cambridge, UK: Cambridge University Press.

- Mohler B. J., Creem-Regehr S. H., Thompson W. B. (2006). The influence of feedback on egocentric distance judgments in real and virtual environments. Proceedings of the 3rd symposium on applied perception in graphics and visualization (pp. 9–14). New York: Association for Computing Machinery.

- Monteiro S., Bicho E. (2010). Attractor dynamics approach to formation control: Theory and application. Autonomous Robots, 29 (3–4), 331–355.

- Moussaïd M., Helbing D., Garnier S., Johansson A., Combe M., Theraulaz G. (2009). Experimental study of the behavioral mechanisms underlying self-organization in human crowds. Proceedings of the Royal Society B, 276, 2755–2762.

- Moussaïd M., Helbing D., Theraulaz G. (2011). How simple rules determine pedestrian behavior and crowd disasters. Proceedings of the National Academy of Sciences, 108 (17), 6884–6888.

- Neri P., Bridge H., Heeger D. J. (2004). Stereoscopic processing of absolute and relative disparity in human visual cortex. Journal of Neurophysiology, 92 (3), 1880–1891.

- Ondřej J., Pettré J., Olivier A. H., Donikian S. (2010). A synthetic-vision based steering approach for crowd simulation. ACM Transactions on Graphics (TOG), 29 (4): 123:1–123:9.

- Page Z., Warren W. H. (2012). Visual control of speed in side-by-side walking. Journal of Vision, 12 (9), 4, http://www.journalofvision.org/content/12/9/188, doi:10.1167/12.9.188.

- Page Z., Warren W. H. (2013). Speed-matching strategy used to regulate speed in side-by-side walking. Journal of Vision, 13 (9): 4, http://www.journalofvision.org/content/13/9/950, doi:10.1167/13.9.950.

- Pipes L. A. (1953). An operation analysis of traffic dynamics. Journal of Applied Physics, 24, 274–281.

- Regan D., Beverley K. I. (1979). Binocular and monocular stimuli for motion in depth: Changing-disparity and changing-size feed the same motion in depth stage. Vision Research, 19, 1331–1342.

- Regan D., Erkelens C. J., Collewijn H. (1986). Necessary conditions for the perception of motion in depth. Investigative Ophthalmology and Visual Science, 27(4), 584–597, http://www.iovs.org/content/27/4/584.

- Reynolds C. W. (1987). Flocks, herds, and schools: A distributed behavioral model. Computer Graphics, 21(4), 25–34.

- Rhea C. K., Cohen J. A., Warren W. H. (2009). Follow the leader: Extending the locomotor dynamics model to crowd behavior. International Conference on Perception and Action, Minneapolis, MN, July 2009.

- Richardson A. R., Waller D. (2007). Interaction with an immersive virtual environment corrects users' distance estimates. Human Factors, 49(3), 507–517.

- Rio K., Bonneaud S., Warren W. H. (2012). Speed coordination in pedestrian groups: Linking individual locomotion with crowd behavior. Journal of Vision, 12(9), 4, http://www.journalofvision.org/content/12/9/190, doi:10.1167/12.9.190.

- Rushton S. K., Wann J. P. (1999). Weighted combination of size and disparity: A computational model for timing a ball catch. Nature Neuroscience, 2(2), 186–190.

- Shanno D. F. (1985). On Broyden-Fletcher-Goldfarb-Shanno method. Journal of Optimization Theory and Applications, 46(1), 87–94.

- Sumpter D. J., Mann R. P., Perna A. (2012). The modelling cycle for collective animal behaviour. Interface Focus, 2(6), 764–773.

- Thompson W. B., Willemsen P., Gooch A. A., Creem-Regehr S. H., Loomis J. M., Beall A. C. (2004). Does the quality of the computer graphics matter when judging distances in visually immersive environments? Presence, 13, 560–571.

- Van Winsum W. (1999). The human element in car following models. Transportation Research Part F: Traffic Psychology and Behaviour, 2(4), 207–211.

- Vicsek T., Czirók A., Ben-Jacob E., Cohen I., Shochet O. (1995). Novel type of phase transition in a system of self-driven particles. Physical Review Letters, 75(6), 1226–1229.

- Vicsek T., Zafeiris A. (2012). Collective motion. Physics Reports, 517, 71–140.

- Vint P. F., Hinrichs R. N. (1996). Endpoint error in smoothing and differentiating raw kinematic data: An evaluation of four popular methods. Journal of Biomechanics, 29(12), 1637–1642.

- Warren W. H., Fajen B. R. (2004). From optic flow to laws of control. In Vaina L., Beardsley S., Rushton S. K. (Eds.), Optic flow and beyond (pp. 307–337). Dordrecht, The Netherlands: Kluwer.

- Warren W. H., Fajen B. R. (2008). Behavioral dynamics of visually-guided locomotion. In Fuchs A., Jirsa V. (Eds.), Coordination: Neural, behavioral, and social dynamics. Heidelberg, Germany: Springer.