Abstract

Objective

The goal of this study was to examine the applicability of the Technology Acceptance Model (TAM) in explaining Human Immunodeficiency Virus (HIV) case managers’ acceptance of a prototype Continuity of Care Record (CCR) with context-specific links designed to meet their information needs.

Design

An online survey based on the constructs of the Technology Acceptance Model (TAM) was completed by 94 case managers who provide care to persons living with HIV (PLWH). Three methods were used to assess the consistency, reliability, and fit of the model factors: principal components factor analysis, Cronbach's alpha, and regression analysis.

Results

Principal components factor analysis yielded three factors (Perceived Ease of Use, Perceived Usefulness, and Barriers to Use) that explained 84.88% of the variance. Internal consistency reliability estimates ranged from .69 to .91. In a linear regression model, Perceived Ease of Use, Perceived Usefulness, and Barriers to Use scores explained 43.6% (p < .001) of the variance in Behavioral Intention to use a CCR with context-specific links.

Conclusion

Our study validated the use of the TAM in health information technology. Results from our study demonstrated that Perceived Ease of Use, Perceived Usefulness, and Barriers to Use are predictors of Behavioral Intention to use a CCR with context-specific links to web-based information resources.

Keywords: Technology Acceptance Model, Continuity of Care Record (CCR), Case Management

I. Introduction

Use of Health Information Technology (HIT) can play a critical role in supporting chronic care management, especially for PLWH. Application of information technology (IT) to health care services has the potential to streamline service delivery systems, improve the quality of care, and increase the cost-effectiveness of care [1–2]. HIT has been shown to contribute to the overall care of patients [3] by increasing efficiency [4–5] and improving patient safety and quality of care [6–7].

Nevertheless, implementation and use of technology do not guarantee improved outcomes. Poorly implemented IT can result in systems that are difficult to learn or use, create additional workload for system users, or lead to tragic errors [8]. Technology acceptance is important to consider when implementing a new system [9], since well-designed systems can reduce the risk of errors and ultimately improve outcomes [10].

Technology Acceptance Model (TAM)

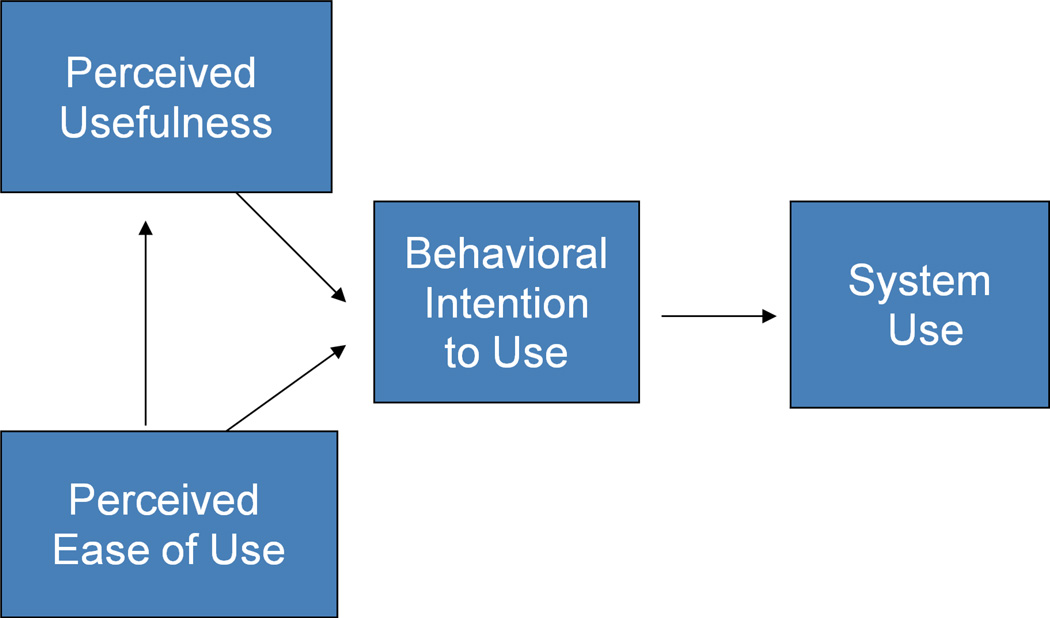

The Technology Acceptance Model (TAM) is an information systems theory based on principles adopted from social psychology, and it has been widely accepted as a parsimonious yet robust model that explains technology acceptance behavior. The TAM has two constructs that influence technology acceptance: perceived ease of use and perceived usefulness [11] (Figure 1). Perceived ease of use describes the degree to which an individual believes that using a particular system would require little physical and mental effort [12]. Perceived usefulness is the expected overall impact of system use on job performance. The model suggests that these two determinants largely shape a user's attitude toward a system, which in turn predicts behavioral intention to use the system.

Figure 1.

Technology Acceptance Model (TAM) (Davis, 1989)

Several researchers have replicated Davis’s original work to provide empirical evidence on the relationships between the constructs and system use [9, 13–15]. The TAM has been applied to many research settings; however, the majority of research has used software as the usage target and has used students or business professionals as study participants [16]. Since the TAM is not a model developed specifically for HIT, if used in its generic form, the model may not capture some of the unique features of computerized health care delivery [17].

This study aims to contribute to IT acceptance research by advancing the understanding of user technology acceptance and extending the theoretical validity of the TAM to a subset of healthcare professionals (case managers) who have not been included in past studies. The purpose of this study was to assess the applicability of the TAM in explaining HIV case managers' acceptance of a prototype CCR with context-specific links designed to meet their information needs. Four research questions were addressed:

What are the psychometric properties of the Technology Acceptance Survey?

What are the predictors of perceived ease of use, perceived usefulness, and behavioral intention to use a CCR with context-specific links to information resources?

Is there a relationship between the demographic factors and perceived ease of use, perceived usefulness, barriers to use and behavioral intention?

What percent of the variance in behavioral intention is explained by the Technology Acceptance survey factors?

II. Methods

A. Sample and Setting

Inclusion criteria were being an English-speaking case manager affiliated with an agency that provides services to members of an HIV Special Needs Managed Care Plan, willingness to provide informed consent, and a valid e-mail address to which the survey could be sent. Case managers coordinate community-based social, mental health, and medical services for vulnerable populations facing long-term challenges and needing extended care [18]. They form a multidisciplinary workforce with diverse educational backgrounds and work environments. Nursing and social work are among the major professions from which case managers are drawn [19], and Registered Nurses (RNs) make up the majority of case managers in the United States [20]. HIV case managers coordinate resources and referrals to community-based social services and medical care in order to facilitate continuity of care for PLWH [21]. Beyond medical needs, case managers spend considerable time addressing clients' social problems, including homelessness and substance abuse [22]. Case managers can also play a valuable role in promoting client adherence to highly active antiretroviral therapy (HAART), particularly since physicians do not always have adequate time to provide adherence counseling [18]. Tools such as CCRs have the potential to give case managers more information about their clients' health status, medications, and clinician visits.

A voluntary, convenience sample of 94 case managers employed at a COBRA (Consolidated Omnibus Budget Reconciliation Act of 1985) case management agency or a Designated AIDS Center (DAC) completed the survey. COBRA case management programs are designed for persons who have comprehensive service needs, require frequent contact with care providers and have had difficulty accessing medical care and supportive services [23]. DACs are State-certified, hospital-based programs that serve as the hubs for a continuum of hospital and community-based care for persons with HIV infection and AIDS [23].

B. Recruitment

After approval of the protocol by the Columbia University Institutional Review Board, case managers were recruited through direct contact by phone, e-mail and distribution of invitational flyers. In two case management agencies, recruitment was facilitated by a visit of an investigator who brought a laptop for data collection. Upon completion of the survey, participants were e-mailed to ask where to send compensation and were also asked to refer their colleagues to participate in the survey. In addition, participants were mailed a check as compensation with a study flyer to pass on to others who may have been interested in participating.


D. Procedures

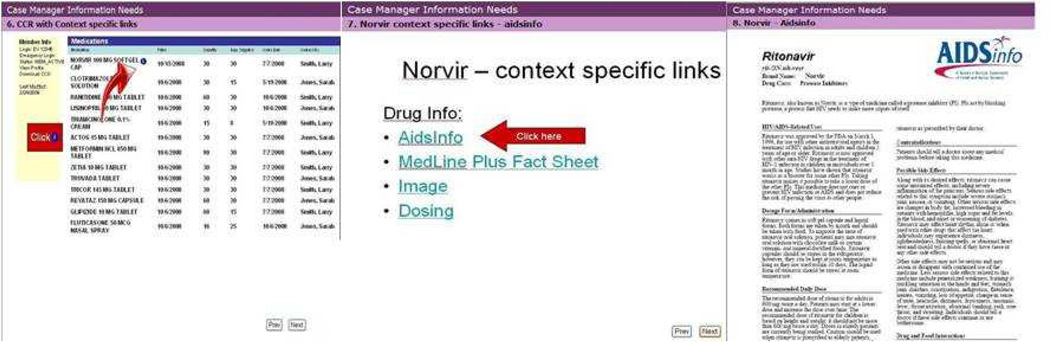

Data collection took place from March to June 2009. The survey was administered online, and participants were compensated $20 for their time. Case managers were asked to report demographic information, including age, gender, ethnicity, race, Internet use, and computer experience. They were asked to view a mock-up of a CCR with context-specific links (Figure 2) to external knowledge sources (e.g., a pharmacy information database, a laboratory manual) and to rate their intention to use such a system and their perceptions of its ease of use, usefulness, and barriers to use [11]. Survey items were based on constructs in the TAM and adapted from existing questionnaires [24–25]. Items were rated on a 5-point Likert scale (1 = strongly disagree, 5 = strongly agree).

Figure 2.

Mock-up of a Prototype CCR with Context-specific Links

This study occurred within the context of the development and implementation of a Continuity of Care Document (CCD) by NewYork-Presbyterian System SelectHealth. SelectHealth, an HIV Special Needs Plan in New York City, provides comprehensive health services to its members through a fully contracted network of providers. The SelectHealth CCD is implemented using the CCD standard [26] and integrates data from multiple sources, including pharmacy refill records, billing records, and laboratory results systems. The data included in the SelectHealth CCD are a subset of those specified in the ASTM CCR standard [27]. The existing system provides patients' laboratory results and medication refills but does not include information such as family history, social history, immunizations, vital signs, and procedures. The CCD is accessed by clinicians, case managers, and patients [28].

E. Data Analysis

Structured survey items were entered into the Statistical Package for the Social Sciences (SPSS), Version 16. The response rate was computed as the number of participants divided by the number of potential participants. Participant characteristics were summarized using descriptive statistics. The two negatively worded items were reverse coded so that a higher score was associated with more positive attitudes toward the system. The construct validity of the Technology Acceptance Survey was assessed using principal components analysis (PCA) with Varimax rotation to examine its factorial structure. Although the sample size exceeded the minimum criterion for the number of participants per questionnaire item for factor analysis [29], sampling adequacy was also assessed post hoc using the Kaiser-Meyer-Olkin (KMO) statistic. The overall KMO should exceed .60 to proceed with factor analysis [30].
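The response rate and the reverse coding described above are simple enough to sketch directly. The helper names below are hypothetical and the analysis itself was run in SPSS; the sketch assumes the 1–5 coding stated in the Procedures, under which reversing an item maps a score x to 6 − x, and it uses the counts reported in the Results (94 completed surveys out of 131 sent).

```python
SCALE_MIN, SCALE_MAX = 1, 5   # 1 = strongly disagree, 5 = strongly agree


def response_rate(completed, invited):
    """Percentage of invited case managers who completed the survey."""
    return 100.0 * completed / invited


def reverse_code(score):
    """Reverse a 5-point Likert score: 1<->5, 2<->4, 3 stays 3."""
    if not SCALE_MIN <= score <= SCALE_MAX:
        raise ValueError(f"score {score} outside {SCALE_MIN}-{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - score


rate = response_rate(94, 131)
print(f"response rate = {rate:.1f}%")   # response rate = 71.8%

raw = [5, 4, 3, 2, 1]                    # hypothetical answers to a negatively worded item
print([reverse_code(x) for x in raw])    # [1, 2, 3, 4, 5]
```

Reverse coding is its own inverse, so applying it twice returns the original score.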

After determining the dimensionality of each item set from the factor analyses, we required a loading greater than .40 before naming a factor and considered the conceptual fit with the other items loading on that factor [31]. Cronbach's alpha coefficients of .70 or greater were considered acceptable for internal consistency reliability [32].
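To make the reliability criterion concrete: Cronbach's alpha for k items is α = k/(k − 1) · (1 − Σσᵢ²/σ²_total), where σᵢ² are the item variances and σ²_total is the variance of the summed score. The minimal sketch below computes it on hypothetical toy data (not study data) and applies the >.40 loading rule to hypothetical loadings; the function names are illustrative and do not correspond to SPSS output.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """items[j][i] is respondent i's score on item j (same respondents for every item)."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    totals = [sum(item[i] for item in items) for i in range(n)]   # summed scale score
    sum_item_var = sum(pvariance(item) for item in items)
    # Population variances are used throughout; the n vs n-1 choice cancels in the ratio.
    return k / (k - 1) * (1 - sum_item_var / pvariance(totals))


def items_loading_on(loadings, cutoff=0.40):
    """Names of items whose absolute loading exceeds the cutoff."""
    return [name for name, value in loadings.items() if abs(value) > cutoff]


# Hypothetical toy data: two items answered by five respondents.
item_a = [1, 2, 3, 4, 5]
item_b = [2, 2, 3, 4, 4]
alpha = cronbach_alpha([item_a, item_b])
print(f"alpha = {alpha:.3f}, acceptable: {alpha >= 0.70}")   # alpha = 0.923, acceptable: True

# Hypothetical loadings of three items on one rotated component.
print(items_loading_on({"q1": 0.81, "q2": 0.38, "q3": -0.55}))  # ['q1', 'q3']
```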

Independent samples t-tests and one-way analyses of variance (ANOVA) were used to examine the relationships between the dependent variables (Perceived Ease of Use, Perceived Usefulness, Barriers to Use, and Behavioral Intention) and participant and agency characteristics. When a demographic category had only a single response, that response was excluded from the analysis. Linear regression was used to examine predictors of Perceived Ease of Use, Perceived Usefulness, Barriers to Use, and Behavioral Intention. The significance level for all analyses was set at α = .05.

III. Results

A total of 131 surveys were e-mailed to potential participants and 94 were completed, yielding a response rate of 71.8%. Of the eight sites that participated, four were COBRA case management agencies and four were DACs. Site response rates ranged from 20% to 100%. Case managers at COBRA case management agencies (77.5%) were more likely to participate than case managers at DACs (59.5%) (χ² = 19.89, p < .0001). The highest response rates (94.1% and 100%) were at agencies in which recruitment was facilitated by a visit from an investigator who brought a web-enabled laptop for data collection.

A. Demographics

The majority of respondents were female (80.9%) and between the ages of 20 and 40. The largest racial group was Black (41.5%), followed by White (34.1%); 38% of respondents identified their ethnicity as Hispanic. Respondents most often reported their job title as case manager (44.0%), followed by social worker (17.6%). Most respondents use the Internet at least once per day (79.8%), and all respondents use the Internet at least once per month. Nearly all respondents (94.7%) started using computers more than two years ago.

B. Factorial Structure

Psychometric analyses in the sample supported the factorial validity and internal consistency reliability (Cronbach's α) of the adapted scale (Table 1). Three factors explained a total of 84.9% of the variance: (a) Perceived Usefulness (3 items, α = .91), (b) Perceived Ease of Use (3 items, α = .89), and (c) Barriers to Use (2 items, α = .69). Factor scores were created to serve as dependent variables in the multivariate analysis. Behavioral Intention to Use was measured with a single item.

Table 1.

Mean Scores, Factor Loadings, and Variance Explained (N = 93)

| Item (1 = strongly disagree, 5 = strongly agree) | Mean (S.D.) | Perceived Usefulness (3 items, α = .91) | Perceived Ease of Use (3 items, α = .89) | Barriers to Use (2 items, α = .69) |
|---|---|---|---|---|
| 1. Using the CCR with context-specific links will enhance my effectiveness in my work. | 4.27 (0.78) | .90 | | |
| 2. Using the CCR with context-specific links in my work will increase my productivity. | 3.91 (0.89) | .88 | | |
| 3. Using the CCR with context-specific links would be helpful in my work. | 4.37 (0.69) | .83 | | |
| 4. I anticipate that I will find the CCR with context-specific links easy to use. | 3.96 (0.91) | | .86 | |
| 5. I anticipate that I will find it easy to get the CCR with context-specific links to do what I want to do. | 3.93 (0.77) | | .86 | |
| 6. My interaction with the CCR with context-specific links will be clear and understandable. | 3.95 (0.80) | | .82 | |
| 7. Interacting with the CCR with context-specific links will require a lot of effort on my part.* | 3.11 (1.11) | | | .93 |
| 8. Using the CCR with context-specific links will not necessarily improve my performance in my work.* | 3.32 (1.13) | | | .80 |
| Percentage Variance Explained | | 50.35 | 20.90 | 13.63 |

Note. *Reverse-coded item; mean scores reflect scores after reverse coding for the factor analysis.
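The 84.9% total is the sum of the three rotated components' shares in Table 1. Assuming, as is usual for PCA, that the analysis was run on the correlation matrix of the eight items, each standardized item contributes one unit of variance, so a component's percentage times 8/100 gives its sum of squared loadings. A short sketch of that arithmetic:

```python
# Percentage of variance explained by each rotated component (Table 1).
variance_pct = {
    "Perceived Usefulness": 50.35,
    "Perceived Ease of Use": 20.90,
    "Barriers to Use": 13.63,
}
N_ITEMS = 8  # assumption: standardized items, so the total variance equals 8

cumulative = 0.0
for factor, pct in variance_pct.items():
    cumulative += pct
    ss_loadings = pct / 100 * N_ITEMS   # implied sum of squared loadings for this component
    print(f"{factor:22s} {pct:6.2f}%  cumulative {cumulative:6.2f}%  SS loadings {ss_loadings:.2f}")
```

The final cumulative value, 84.88%, is the figure reported in the Abstract.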

C. Factors Related to Acceptance of a CCR with Context-specific Links

The scores for Perceived Ease of Use, Perceived Usefulness, and Behavioral Intention suggest that case managers perceived the system to be easy to use (M = 3.94, S.D. = 0.75) and useful (M = 4.17, S.D. = 0.75) and intended to use it (M = 4.14, S.D. = 0.79). The Barriers to Use score (M = 2.78, S.D. = 0.98) comprised two negatively worded items; therefore, lower scores indicate that participants found the system to have few barriers to use. The mean scores support the directionality of the model, in that high Perceived Ease of Use and Perceived Usefulness scores accompany a high intention to use the system. In contrast, Barriers to Use, which is not a factor in the original TAM, appears to have a negative effect on system use.
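The direction of the Barriers to Use score can be checked against Table 1. Because the mean of (6 − x) equals 6 minus the mean of x, undoing the reverse coding of items 7 and 8 should place the Barriers score near 6 − (3.11 + 3.32)/2. The sketch assumes the Barriers score is the unweighted mean of the two items on their original agreement scale, which the text implies but does not state; the result (2.785) agrees with the reported M = 2.78 to within the rounding of the item means.

```python
# Item means for items 7 and 8 as reported in Table 1, i.e. AFTER reverse coding.
reversed_item_means = [3.11, 3.32]
mean_reversed = sum(reversed_item_means) / len(reversed_item_means)

# mean(6 - x) = 6 - mean(x), so undoing the reversal (x -> 6 - x) moves the mean back to
# the original agreement scale, where a higher value means stronger agreement that a barrier exists.
barrier_mean_original = 6 - mean_reversed
print(f"{barrier_mean_original:.3f}")  # 2.785
```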

There were no significant relationships between Perceived Ease of Use or Perceived Usefulness and any of the demographic variables (Table 2). However, Behavioral Intention to use the CCR with context-specific links was significantly related to Internet use (F = 2.80, p < .05), ethnicity (t = 2.25, p < .05), and gender (t = 2.37, p < .05). Post hoc comparisons with a Bonferroni correction for multiple comparisons showed that case managers who use the Internet several times every day were more likely to intend to use the system than those who use the Internet only once a day or several times per week. Males were more likely than females, and non-Hispanics more likely than Hispanics, to intend to use the system.
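The paper does not state how many post hoc comparisons were made. Assuming all pairwise comparisons among the four Internet-use categories in Table 2, a Bonferroni correction divides α = .05 by six, or equivalently multiplies each raw p-value by six (capped at 1). A sketch with hypothetical p-values and a hypothetical helper name:

```python
from itertools import combinations

ALPHA = 0.05
internet_use_groups = [
    "several times every day",
    "once a day",
    "several times per week",
    "several times per month",
]
pairs = list(combinations(internet_use_groups, 2))   # every pairwise comparison
alpha_per_test = ALPHA / len(pairs)
print(len(pairs), f"{alpha_per_test:.4f}")  # 6 0.0083


def bonferroni_adjust(p_values, m=None):
    """Multiply each raw p-value by the number of comparisons m, capping at 1."""
    m = len(p_values) if m is None else m
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p-values, adjusted for the six comparisons above.
print(bonferroni_adjust([0.004, 0.03, 0.2], m=len(pairs)))
```

The two forms are equivalent: a raw p below α/m is exactly an adjusted p below α.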

Table 2.

Comparison of Factor Score Means by Participant Characteristics (N = 93)

| Demographics | Category (N) | Perceived Usefulness, Mean (S.D.) | Perceived Ease of Use, Mean (S.D.) | Barriers to Use, Mean (S.D.) | Behavioral Intention, Mean (S.D.) |
|---|---|---|---|---|---|
| Gender | Male (7) | 4.51 (0.54) | 4.04 (0.90) | 2.59 (1.11) | 4.53 (0.62)* |
| | Female (75) | 4.08 (0.77) | 3.92 (0.72) | 2.83 (0.96) | 4.04 (0.80) |
| Agency Type | DAC (25) | 3.97 (0.78) | 3.93 (0.62) | 2.50 (0.61)** | 4.08 (0.76) |
| | COBRA (68) | 3.95 (0.80) | 3.95 (0.80) | 2.89 (1.07) | 4.16 (0.80) |
| Ethnicity | Hispanic (33) | 4.11 (0.72) | 3.88 (0.80) | 2.94 (1.03) | 3.94 (0.70)* |
| | Non-Hispanic (55) | 4.21 (0.65) | 4.05 (0.61) | 2.77 (0.94) | 4.29 (0.71) |
| Age | 20–30 years old (32) | 4.10 (0.72) | 4.08 (0.59) | 2.86 (1.02) | 4.25 (0.76) |
| | 30–40 years old (31) | 4.14 (0.59) | 4.03 (0.60) | 2.84 (0.98) | 4.00 (0.73) |
| | 40–50 years old (15) | 4.24 (0.80) | 3.87 (0.59) | 2.83 (0.90) | 4.13 (0.52) |
| | 50–60 years old (13) | 4.51 (0.55) | 3.69 (1.10) | 2.58 (1.00) | 4.38 (0.77) |
| Race | Black or African American (32) | 4.33 (0.59) | 4.07 (0.65) | 2.98 (1.09) | 4.31 (0.64) |
| | White (27) | 4.19 (0.66) | 3.94 (0.62) | 2.46 (0.73) | 4.15 (0.77) |
| | Multi-Racial (6) | 4.11 (0.86) | 4.17 (0.46) | 3.08 (0.58) | 3.83 (0.75) |
| | Other (7) | 4.00 (0.69) | 3.33 (1.22) | 2.86 (1.03) | 3.71 (0.95) |
| | Decline to State (5) | 4.40 (0.55) | 4.13 (0.30) | 2.50 (1.00) | 4.80 (0.45) |
| Internet Use | Several times every day (75) | 4.27 (0.68) | 4.02 (0.72) | 2.69 (0.98)* | 4.24 (0.73)* |
| | Once a day (8) | 3.88 (0.25) | 3.79 (0.40) | 3.69 (0.59) | 3.50 (0.53) |
| | Several times per week (7) | 4.04 (0.89) | 3.76 (0.63) | 2.79 (0.70) | 4.29 (0.49) |
| | Several times per month (2) | 3.83 (0.24) | 3.67 (0.47) | 3.50 (0.71) | 4.00 (0.00) |
| Started Using Computers | In the past year (2) | 4.0 (0.0) | 4.0 (0.0) | 4.0 (0.0) | 4.0 (0.0) |
| | More than 2 years (88) | 4.21 (0.69) | 3.97 (0.70) | 2.76 (0.96) | 4.18 (0.74) |
| Job Title | Case Manager (39) | 4.35 (0.66) | 3.95 (0.83) | 2.90 (1.08) | 4.21 (0.73) |
| | Site Manager (3) | 4.22 (0.69) | 4.00 (0.0) | 2.83 (1.04) | 4.00 (0.0) |
| | Social Worker (16) | 3.81 (0.79) | 3.77 (0.64) | 2.44 (0.70) | 3.94 (0.77) |
| | Case Follow-up Worker (9) | 3.89 (0.41) | 3.74 (0.49) | 3.44 (0.73) | 3.78 (0.67) |
| | Assistant Case Manager (8) | 4.08 (0.53) | 3.88 (0.40) | 2.63 (0.59) | 4.13 (0.64) |
| | Other Case Management Title (14) | 4.50 (0.57) | 4.45 (0.46) | 2.75 (1.09) | 4.58 (0.65) |

\* Significant at the .05 level

\*\* Significant at the .01 level

Note: Categories with fewer than two responses were excluded from this analysis; total responses are below 93 in some instances because of missing data.

There was also a statistically significant relationship between Barriers to Use and agency type (t = 2.19, p < .01) and frequency of Internet use (F = 3.11, p < .05). Case managers at COBRA case management sites were more likely to perceive barriers to system use than case managers at DACs, and case managers who use the Internet several times every day were less likely to perceive barriers to system use than those who used the Internet only once a day or several times per week.
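The t statistics can be approximately recomputed from the rounded means, standard deviations, and group sizes in Table 2. The paper does not say which form of the independent samples t-test was used; SPSS reports both an equal-variances (pooled) row and an unequal-variances (Welch) row. As a consistency check only, not a description of the authors' procedure, the ethnicity result (t = 2.25) is reproduced by the pooled form and the agency-type result (t = 2.19) by Welch's form:

```python
from math import sqrt


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's t for two independent groups, pooling the variances."""
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2)
    return (m1 - m2) / sqrt(pooled_var * (1 / n1 + 1 / n2))


def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's t for two independent groups, without pooling the variances."""
    return (m1 - m2) / sqrt(s1 ** 2 / n1 + s2 ** 2 / n2)


# Behavioral Intention, non-Hispanic (n = 55) vs Hispanic (n = 33), Table 2.
t_ethnicity = pooled_t(4.29, 0.71, 55, 3.94, 0.70, 33)
# Barriers to Use, COBRA (n = 68) vs DAC (n = 25), Table 2.
t_agency = welch_t(2.89, 1.07, 68, 2.50, 0.61, 25)
print(f"{t_ethnicity:.2f} {t_agency:.2f}")  # 2.25 2.19
```

Because the inputs are rounded to two decimals, agreement to the second decimal is about as close as such a check can get.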

In the linear regression model, agency type and Internet use had a significant overall effect (p < .05) on Barriers to Use but explained only 7.2% of the variance (Table 3). Likewise, gender, ethnicity, and Internet use had a significant overall effect (p < .05) on Behavioral Intention but explained little of its variance (R² = .148; Table 4). Together, Perceived Ease of Use, Perceived Usefulness, and Barriers to Use explained 43.6% of the variance (R² = .436, p < .001) in Behavioral Intention to use a CCR with context-specific links (Table 5).
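R² is 1 − SS_res/SS_tot, the share of outcome variance reproduced by the fitted values, so R² = .436 means the three factor scores account for 43.6% of the variance in Behavioral Intention. The study fitted its models in SPSS; the plain-Python sketch below (ordinary least squares via the normal equations, with hypothetical function names and toy data rather than study data) shows the computation for three predictors and an intercept.

```python
def ols_fit(X, y):
    """Ordinary least squares with an intercept, solving (X'X) b = X'y.

    X is a list of predictor tuples and y a list of outcomes.
    Returns [intercept, b1, b2, ...].
    """
    rows = [[1.0] + [float(v) for v in x] for x in X]   # prepend the intercept column
    p = len(rows[0])
    # Augmented normal-equation matrix [X'X | X'y].
    aug = [
        [sum(row[i] * row[j] for row in rows) for j in range(p)]
        + [sum(row[i] * yi for row, yi in zip(rows, y))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot_row = max(range(col, p), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot_row] = aug[pivot_row], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[i][p] for i in range(p)]


def r_squared(X, y, b):
    """Proportion of the variance in y reproduced by the fitted values."""
    mean_y = sum(y) / len(y)
    fitted = [b[0] + sum(bj * xj for bj, xj in zip(b[1:], x)) for x in X]
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Hypothetical toy data (NOT study data): (ease of use, usefulness, barriers) -> intention.
X = [(4, 4, 2), (3, 4, 3), (5, 5, 1), (4, 3, 3), (2, 3, 4), (5, 4, 2), (3, 2, 4), (4, 5, 2)]
y = [4, 3, 5, 4, 3, 5, 3, 4]
b = ols_fit(X, y)
print("coefficients:", [round(v, 3) for v in b])
print("R^2 =", round(r_squared(X, y, b), 3))
```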

Table 3.

Summary of Regression Analysis for Predicting Perceived Barriers to Use the CCR with Context-Specific Links (N = 92)

| Variable | B | SE (B) | β |
|---|---|---|---|
| Agency Type | 0.29 | 0.22 | 0.13 |
| Internet Use | | | |
| Several times every day (reference) | 0.00 | - | - |
| Once a day | 0.91 | 0.35 | 0.27* |
| Several times per week | 0.09 | 0.37 | 0.02 |
| Several times per month | 0.72 | 0.67 | 0.11 |

Note: R² = 0.072; *p < .05.
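Tables 3 and 4 enter Internet use as a categorical predictor with "Several times every day" as the reference level (B = 0.00). A common way to do this, assumed here because the paper does not describe its coding, is to create one 0/1 indicator per non-reference level; each B is then the adjusted difference from the reference group. For example, B = 0.91 for "Once a day" in Table 3 indicates a Barriers to Use score about 0.91 points higher than for case managers who use the Internet several times every day, holding agency type constant. A hypothetical helper:

```python
INTERNET_USE_LEVELS = [
    "several times every day",   # reference level
    "once a day",
    "several times per week",
    "several times per month",
]
REFERENCE = INTERNET_USE_LEVELS[0]


def dummy_code(response):
    """Return one 0/1 indicator per non-reference level (hypothetical helper)."""
    if response not in INTERNET_USE_LEVELS:
        raise ValueError(f"unknown Internet-use level: {response!r}")
    return [int(response == level) for level in INTERNET_USE_LEVELS if level != REFERENCE]


print(dummy_code("several times every day"))  # [0, 0, 0]
print(dummy_code("once a day"))               # [1, 0, 0]
print(dummy_code("several times per month"))  # [0, 0, 1]
```

The reference group is the one whose indicators are all zero, which is why its B is fixed at 0.00 in the tables.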

Table 4.

Summary of Regression Analysis Examining Participant Characteristic Predictors of Behavioral Intention to Use the CCR with Context-Specific Links (N = 90)

| Variable | B | SE B | β |
|---|---|---|---|
| Gender | −0.43 | 0.18 | −0.23* |
| Ethnicity | 0.36 | 0.14 | 0.26* |
| Internet Use | | | |
| Several times every day (reference) | 0.00 | - | - |
| Once a day | −0.62 | 0.25 | −0.25* |
| Several times per week | 0.17 | 0.27 | 0.06 |
| Several times per month | −0.29 | 0.48 | −0.06 |

Note: R² = 0.148 (p < .05); *p < .05.

Table 5.

Summary of Regression Analysis for Technology Acceptance Survey Factor Scores Predictors of Behavioral Intention to Use the CCR with Context-Specific Links (N = 92)

| Variable | B | SE B | β |
|---|---|---|---|
| Perceived Ease of Use | 0.19 | 0.09 | 0.18 |
| Perceived Usefulness | 0.56 | 0.10 | 0.53* |
| Perceived Barriers to Use | −0.12 | 0.06 | −0.16 |

Note: R² = .436 (p < .001); *p < .05.
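The standardized coefficients in Table 5 relate to the unstandardized ones by β = B · s_x / s_y. Using the factor-score standard deviations reported in section C of the Results (0.75 for Perceived Ease of Use and Perceived Usefulness, 0.98 for Barriers to Use, 0.79 for Behavioral Intention), the sketch below recovers β = .18 and .53. For Barriers to Use it gives −.15 rather than the tabled −.16, a difference of the size expected when B and the standard deviations are each rounded to two decimals and possibly computed on slightly different samples (N = 92 in Table 5). The function name is hypothetical.

```python
def standardized_beta(b, sd_x, sd_y):
    """Convert an unstandardized slope B to a standardized beta."""
    return b * sd_x / sd_y


SD_INTENTION = 0.79  # S.D. of Behavioral Intention (Results, section C)
predictors = [
    ("Perceived Ease of Use", 0.19, 0.75),
    ("Perceived Usefulness", 0.56, 0.75),
    ("Barriers to Use", -0.12, 0.98),
]
for name, b, sd_x in predictors:
    print(f"{name:22s} beta = {standardized_beta(b, sd_x, SD_INTENTION):+.2f}")
```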

IV. Discussion

This study sought to validate the use of the TAM to understand acceptance of a CCR with context-specific links. The internal consistency reliability of the Technology Acceptance Survey factors ranged from .69 to .91. The Perceived Ease of Use and Perceived Usefulness factors both had alpha values above .70, suggesting strong internal consistency reliability. Cronbach's α was .69 for Barriers to Use, slightly below the study criterion. One possible explanation for the lower alpha of this scale is that it consists of only two items [33].
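The effect of scale length on alpha can be made concrete with the standardized-alpha (Spearman–Brown) relation α = k·r̄ / (1 + (k − 1)·r̄), where r̄ is the mean inter-item correlation. Assuming equal item variances, α = .69 with two items implies r̄ ≈ .527. Hypothetical additional items correlating with the existing ones at that same average level would raise alpha to about .77 with three items and .82 with four. A sketch with illustrative function names:

```python
def alpha_from_r(r_bar, k):
    """Standardized alpha for k items with mean inter-item correlation r_bar."""
    return k * r_bar / (1 + (k - 1) * r_bar)


def r_from_alpha(alpha, k):
    """Invert the formula above: the mean inter-item correlation implied by alpha."""
    return alpha / (k - (k - 1) * alpha)


r_bar = r_from_alpha(0.69, 2)          # Barriers to Use: two items, alpha = .69
print(f"implied mean inter-item r = {r_bar:.3f}")
for k in (2, 3, 4):
    print(k, f"{alpha_from_r(r_bar, k):.2f}")
```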

Barriers to Use is an additional factor which is not in the original TAM. Nonetheless, later versions of the TAM, namely the Technology Acceptance Model II (TAM2) [16] and the Unified Theory of Acceptance and Use of Technology (UTAUT) [34], take into account individual and environmental factors, which can include factors related to Barriers to Use. According to the TAM2 framework, social influence processes, those processes influenced by external pressures, include subjective norm, voluntariness, experience and image. More specifically, experience and voluntariness have a moderating effect on the relationship between subjective norm and behavioral intention to use a technology. Experience describes the skills required to use a technology, while voluntariness is the degree to which use of a technology is perceived to be optional. Likewise, the UTAUT accommodates the extra factor of Barriers to Use that can be explained by social influence and facilitating conditions, two of the key determinants of intention and usage in the model.

The significant relationship between Internet use and Behavioral Intention to use the system is expected, because people who use the Internet frequently are more likely to see the value in a system with links to Internet resources [35–36]. There was also a significant relationship between Barriers to Use scores and agency type: case managers working at COBRA case management agencies were more likely to perceive barriers to using the system than those at DAC sites. This is most likely related to the lack of web-enabled computers at every workstation in some COBRA sites.

Although we expected a significant relationship between computer experience and the dependent variables [37–38], none was found. This is probably due to low variability, since almost all (94.7%) of the respondents had more than two years of computer experience and were generally very comfortable using computers. In the linear regression analysis, Perceived Ease of Use, Perceived Usefulness, and Barriers to Use explained a substantial amount of the variance (R² = .436) in Behavioral Intention scores. This is consistent with the predictions of the TAM [9, 13–15] and with previous research studies [39–43].

This study adds to theory development related to technology acceptance. A number of studies have provided theoretical frameworks for IT acceptance [40, 44–47], and the TAM has been widely accepted as a parsimonious, yet robust model which explains technology adoption behavior. Testing the theoretical model in the current study enabled the researchers to further develop and validate the TAM [11].

The majority of TAM research has used software as the usage target and students or business professionals as study participants [12, 15–16]. Very little published research has explicitly applied technology acceptance models to healthcare [17]. Nonetheless, the healthcare-related studies of technology acceptance have examined concepts such as acceptance, usefulness, ease of use, and behavioral intention to use a system, and have produced results similar to those in industry [24, 39, 41–42, 48–49]. Additional concepts such as clinical impact [50], management support [51], psychological ownership [43], and computer experience [38] have also been shown to have a positive effect on intention to use technology. However, user characteristics such as age and gender, which we incorporated in our assessment of the TAM, have rarely been evaluated [52]. Our findings validate Davis's original model, which posits that perceived usefulness and perceived ease of use influence adoption of technology [11].

Limitations

First, selection bias is possible, since all participants were Internet users willing to complete an online survey and thus may be more likely to think favorably about technology. The demographics of the sample are nonetheless appropriate for representing end users, since most case managers are female [53]. Second, the case managers evaluated a mock-up of a CCR with context-specific links rather than a fully functional system, and they did not use the system at the point of care. Moreover, study participants did not complete tasks specifically designed for usability testing.

In addition, the current state of the art in model testing is structural equation modeling (SEM) rather than regression. Our sample size was insufficient for SEM, so we relied on regression analysis, which has frequently been used for model testing.

V. Conclusion

The TAM is a well-regarded theory of technology acceptance and use that has been widely researched outside of health care. This study contributes to its use in HIT research by validating the TAM in a new study population (HIV case managers) with a CCR, a system that has not previously been used to test the TAM. Moreover, our study builds on the TAM by suggesting that an additional factor, Barriers to Use, may be appropriate to consider in further model testing.

Acknowledgments

The authors thank Peter Gordon, MD and Eli Camhi, MSSW, Principal Investigators of the parent project (NewYork-Presbyterian Hospital/Select Health CCD Demonstration Project, H97HA08483), Martha Rodriguez for her assistance in subject recruitment, and the case managers who participated in the study. The study was supported by the National Institute of Nursing Research (P30NR010677) and the Health Resources and Services Administration Grant (D11HP07346).

Contributor Information

Rebecca Schnall, Columbia University, School of Nursing, 617 W. 168th Street, NY, NY 10032, Phone: (212) 342-6879, Fax: (212) 305-6937, Rb897@columbia.edu.

Suzanne Bakken, Columbia University, School of Nursing and Department of Biomedical Informatics, 617 W. 168th Street, NY, NY 10032.

References

- 1.Ford EW, Menachemi N, Phillips MT. Predicting the Adoption of Electronic Health Records by Physicians: When Will Health Care be Paperless? Journal of the American Medical Informatics Association. 2006;13(1):106–112. doi: 10.1197/jamia.M1913. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Hippisley-Cox J, et al. The electronic patient record in primary care--regression or progression? A cross sectional study. BMJ. 2003;326(7404):1439–1443. doi: 10.1136/bmj.326.7404.1439. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Tange HJ, et al. Medical narratives in electronic medical records. International Jounrnal of Medical Informatics. 1997;46(1):7–29. doi: 10.1016/s1386-5056(97)00048-8. [DOI] [PubMed] [Google Scholar]

- 4.Bjorvell C, Wredling R, Thorell-Ekstrand I. Long-term increase in quality of nursing documentation: effects of a comprehensive intervention. Scand J Caring Sci. 2002;16(1):34–42. doi: 10.1046/j.1471-6712.2002.00049.x. [DOI] [PubMed] [Google Scholar]

- 5.Moody LE, et al. Electronic health records documentation in nursing: nurses' perceptions, attitudes, and preferences. Computers Informatics Nursing. 2004;22(6):337–344. doi: 10.1097/00024665-200411000-00009. [DOI] [PubMed] [Google Scholar]

- 6.Cooper JD. Organization, management, implementation and value of ehr implementation in a solo pediatric practice. J Healthc Inf Manag. 2004;18(3):51–55. [PubMed] [Google Scholar]

- 7.Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: 2001. [Google Scholar]

- 8.Johnson TR, et al. Attitudes toward medical device use errors and the prevention of adverse events. Jt Comm J Qual Patient Saf. 2007;33(11):689–694. doi: 10.1016/s1553-7250(07)33079-1. [DOI] [PubMed] [Google Scholar]

- 9.Mathieson K. Predicting User Intentions: Comparing the Technology Acceptance Model with the Theory of Planned Behavior. Information Systems Research. 1991;2:173–191. [Google Scholar]

- 10.Norman DA. User Centered System Design: New Perspectives on Human-computer Interaction. Hillsdale, NJ: Lawrence Erlbaum Associates; 1986. [Google Scholar]

- 11.Davis F. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly. 1989;13(3):318–340. [Google Scholar]

- 12.Davis F. User acceptance of information technology: system characteristics, user perceptions, and behavioral impacts. International Journal of Man-Machine Studies. 1993;38(3):475–487. [Google Scholar]

- 13.Adams DA, Nelson RR, Todd PA. Perceived usefulness, ease of use, and usage of information technology: a replication. MIS Quarterly. 1992:227–247. [Google Scholar]

- 14.Hendrickson AR, Massey PD, Gronan TP. On the test-retest reliability of perceived usefulness and perceived ease of use scales. MIS Quarterly. 1993;17:227–230. [Google Scholar]

- 15.Segars AH, Grover V. Re-examining perceived ease of use and usefulness: A confirmatory factor analysis. MIS Quarterly. 2001:517–525. [Google Scholar]

- 16.Venkatesh V, Davis F. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Management Science. 2000;46(2):186–204. [Google Scholar]

- 17.Holden RJ, Karsh BT. The technology acceptance model: its past and its future in health care. J Biomed Inform. 2010;43(1):159–172. doi: 10.1016/j.jbi.2009.07.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Shelton RC, et al. Role of the HIV/AIDS case manager: analysis of a case management adherence training and coordination program in North Carolina. AIDS Patient Care and STDS. 2006;20(3):193–204. doi: 10.1089/apc.2006.20.193. [DOI] [PubMed] [Google Scholar]

- 19.Park EJ, Huber DL. Case management workforce in the United States. Journal of Nursing Scholarship. 2009;41(2):175–183. doi: 10.1111/j.1547-5069.2009.01269.x. [DOI] [PubMed] [Google Scholar]

- 20.Park EJ. A comparison of knowledge and activities in case management practice Doctoral Dissertation. Iowa City, IA: University of Iowa; 2006. [Google Scholar]

- 21.Merrill EB. HIV/AIDS case management: a learning experience for undergraduate nursing students. ABNF J. 1996;7(2):47–53. [PubMed] [Google Scholar]

- 22.Abramowitz S, Obten N, Cohen H. Measuring case management for families with HIV. Soc Work Health Care. 1998;27(3):29–41. doi: 10.1300/J010v27n03_02. [DOI] [PubMed] [Google Scholar]

- 23.NY State Department of Health. AIDS Institue Health Care Services. 2007 [cited 2009 May 7]; Available from: http://www.health.state.ny.us/diseases/aids/about/hlthcare.htm#dacs.

- 24. Dillon T, et al. Perceived ease of use and usefulness of bedside-computer systems. Computers in Nursing. 1998;16(3):151–156.

- 25. Hyun S, et al. Nurses' use and perceptions of usefulness of National Cancer Institute's tobacco-related Cancer Information Service (CIS) resources. AMIA Annu Symp Proc. 2008:985.

- 26. Health Level Seven Inc. Clinical Document Architecture. 2007–2009 [cited 2009 December 3]; Available from: http://www.hl7.org/implement/standards/cda.cfm.

- 27. ASTM International. ASTM E31.28 E-. Standard Specification for Continuity of Care Record (CCR). 2005 [cited 2009 December 14]; Available from: http://www.astm.org/COMMIT/E31_ConceptPaper.doc.

- 28. Schnall R, et al. Barriers to implementation of a Continuity of Care Record (CCR) in HIV/AIDS care. Stud Health Technol Inform. 2009;146:248–252.

- 29. Knapp TR, Brown JK. Ten measurement commandments that often should be broken. Res Nurs Health. 1995;18(5):465–469. doi: 10.1002/nur.4770180511.

- 30. Hutcheson G, Sofroniou N. The multivariate social scientist: introductory statistics using generalized linear models. Thousand Oaks, CA: Sage Publications; 1999.

- 31. Waltz C, Strickland O, Lenz E. Measurement in nursing and health research. New York, NY: Springer Publishing Company; 2004.

- 32. Nunnally JC. Psychometric Theory. 2nd ed. New York: McGraw-Hill; 1978.

- 33. Moss S, et al. Reliability and validity of the PAS-ADD Checklist for detecting psychiatric disorders in adults with intellectual disability. J Intellect Disabil Res. 1998;42(Pt 2):173–183. doi: 10.1046/j.1365-2788.1998.00116.x.

- 34. Venkatesh V, et al. User acceptance of information technology: toward a unified view. MIS Quarterly. 2003;27(3):425–478.

- 35. Cimino JJ, et al. Redesign of the Columbia University Infobutton Manager. AMIA Annual Symposium Proceedings. 2007:135–139.

- 36. Maviglia SM, et al. KnowledgeLink: impact of context-sensitive information retrieval on clinicians' information needs. Journal of the American Medical Informatics Association. 2006;13(1):67–73. doi: 10.1197/jamia.M1861.

- 37. Taylor S, Todd P. Assessing IT usage: the role of prior experience. MIS Quarterly. 1995;19(4):561–570.

- 38. Ammenwerth E, et al. Factors affecting and affected by user acceptance of computer-based nursing documentation: results of a two-year study. Journal of the American Medical Informatics Association. 2003;10(1):69–84. doi: 10.1197/jamia.M1118.

- 39. Chang P, et al. Development and comparison of user acceptance of advanced comprehensive triage PDA support system with a traditional terminal alternative system. AMIA Annu Symp Proc. 2003:140–144.

- 40. Davis F, Bagozzi R, Warshaw P. User acceptance of computer technology: a comparison of two theoretical models. Management Science. 1989;35(8):982–1003.

- 41. Hulse NC, Fiol GD, Rocha RA. Modeling end-users' acceptance of a knowledge authoring tool. Methods of Information in Medicine. 2006;45(5):528–535.

- 42. Mazzoleni M, et al. Assessing users' satisfaction through perception of usefulness and ease of use in the daily interaction with a hospital information system. Proceedings of AMIA Annual Fall Symposium. 1996:752–756.

- 43. Pare G, Sicotte C, Jacques H. The effects of creating psychological ownership on physicians' acceptance of clinical information systems. Journal of the American Medical Informatics Association. 2006;13(2):197–205. doi: 10.1197/jamia.M1930.

- 44. Ajzen I. From intentions to actions: a theory of planned behavior. In: Kuhl J, Beckmann J, editors. Action Control: From Cognition to Behavior. New York, NY: Springer-Verlag; 1985.

- 45. Ajzen I. The theory of planned behavior. Organizational Behavior and Human Decision Processes. 1991;50(2):179–211.

- 46. Moore GC. End user computing and office automation: a diffusion of innovations perspective. Infor. 1987;25(3):214–235.

- 47. Taylor S, Todd PA. Understanding information technology usage: a test of competing models. Information Systems Research. 1995;6(2):144–176.

- 48. Barker DJ, et al. Evaluating a spoken dialogue system for recording clinical observations during an endoscopic examination. Med Inform Internet Med. 2003;28(2):85–97. doi: 10.1080/14639230310001600452.

- 49. Mazzoleni M, et al. Spreading the clinical information system: which users are satisfied? Studies in Health Technology and Informatics. 1997;43(Pt A):162–166.

- 50. Seckman CA, Romano CA, Marden S. Evaluation of clinician response to wireless technology. Proc AMIA Symp. 2001:612–616.

- 51. Wu JH, et al. Testing the technology acceptance model for evaluating healthcare professionals' intention to use an adverse event reporting system. International Journal for Quality in Health Care. 2008;20(2):123–129. doi: 10.1093/intqhc/mzm074.

- 52. Palm J, et al. Determinants of user satisfaction with a Clinical Information System. AMIA Annu Symp Proc. 2006:614–618.

- 53. Tahan HA, Campagna V. Case management roles and functions across various settings and professional disciplines. Professional Case Management. 2010;15(5):245–277. doi: 10.1097/NCM.0b013e3181e94452.