Abstract

The impact of emotions on gaze-oriented attention was investigated in non-anxious participants. A neutral face cue with straight gaze was presented, which then averted its gaze to the side while either remaining neutral or expressing an emotion (fear/surprise in Exp.1 and anger/happiness in Exp.2). Localization of a subsequent target was faster at the gazed-at location (congruent condition) than at the non-gazed-at location (incongruent condition). This Gaze-Orienting Effect (GOE) was enhanced for fear, surprise, and anger compared to neutral expressions, which did not differ from happy expressions. In addition, Event-Related Potentials (ERPs) to the target showed a congruency effect on P1 for fear and surprise and a left-lateralized congruency effect on P1 for happy faces, suggesting that target visual processing was also influenced by attention to gaze and emotions. Finally, at cue presentation, an early postero-lateral component (Early Directing Attention Negativity, EDAN) and a later antero-lateral component (Anterior Directing Attention Negativity, ADAN) were observed, reflecting, respectively, the shift of attention to gazed-at locations and its holding there. These two components were not modulated by emotions. Together, these findings show that gaze and facial expression processing interact rather late and in a complex manner to modulate spatial attention.

Keywords: Gaze orienting, Attention, ERPs, Emotions

Gaze direction is a crucial non-verbal cue that we use to determine where, and to what, others are attending. It can also direct another person's attention toward an object, a phenomenon called joint attention, which helps us assess others' intentions and understand their behaviors (Baron-Cohen, 1995).

Joint attention is typically studied using a modified version of the Posner cuing paradigm (Posner, 1980), in which a central face cue with averted gaze is followed by a lateral target. Congruent trials, in which the target appears at the gazed-at location, are responded to faster than incongruent trials, in which the target appears on the side opposite to gaze. The response time difference between congruent and incongruent trials reflects the orienting of attention toward gaze direction (Friesen & Kingstone, 1998). This robust Gaze Orienting Effect (GOE) has been shown for letter discrimination, target detection, and localization tasks, for Stimulus Onset Asynchronies (SOAs) of up to 700 ms, and when the cue is non-predictive or even counter-predictive of the target location (for a review, see Frischen, Bayliss, & Tipper, 2007).

Facial expressions are also important in social attention, as they allow the observer to infer what an individual feels about an object. For example, a face with averted gaze expressing fear can signal a danger located outside the observer's focus of attention. When gaze is averted, fearful faces thus provide additional information compared to neutral faces. This additional cue should prompt faster orienting toward the looked-at object, speeding up its localization and identification.

Many studies have investigated whether emotions modulate attention orienting by gaze. A larger GOE for fearful than for neutral and/or happy faces has been reported and interpreted as reflecting the evolutionary advantage of orienting rapidly in the direction of a potential threat (Bayless, Glover, Taylor, & Itier, 2011; Fox, Mathews, Calder, & Yiend, 2007; Graham, Friesen, Fichtenholtz, & LaBar, 2010; Mathews, Fox, Yiend, & Calder, 2003; Pecchinenda, Pes, Ferlazzo, & Zoccolotti, 2008; Putman, Hermans, & Van Honk, 2006; Tipples, 2006). However, some studies failed to find such a modulation (Fichtenholtz, Hopfinger, Graham, Detwiler, & LaBar, 2007; Galfano et al., 2011; Hietanen & Leppänen, 2003; Holmes, Mogg, Garcia, & Bradley, 2010). The lack of GOE modulation by fearful faces could be due to the use of short SOAs (e.g., a 200 ms SOA in Galfano et al., 2011), as Graham et al. (2010) suggested that a minimum of 300 ms is needed for gaze and emotion to be fully integrated. It could also result from the use of a more difficult discrimination task rather than a localization task (e.g., Holmes et al., 2010). As the combination of gaze and emotion cues indicates where a danger might be in the environment, modulation of the GOE by fear might be seen more clearly with a localization task. Finally, this lack of GOE modulation by fear could stem from the use of static rather than dynamic facial expressions (e.g., Hietanen & Leppänen, 2003), since emotions are better processed when seen dynamically than statically (Sato & Yoshikawa, 2004). Additionally, some studies have shown that the GOE enhancement for fearful compared to neutral or happy faces depended on participants' anxiety level (Fox et al., 2007; Mathews et al., 2003; Putman et al., 2006), while others reported such a modulation even in non-anxious participants (Bayless et al., 2011; Neath, Nilsen, Gittsovich, & Itier, 2013).
Thus, it remains unclear whether modulation of attention orienting by gaze with fear is limited to high-anxious individuals or can be seen in the general population.

There are also inconsistent findings as to whether emotions other than fear modulate the GOE. Angry faces failed to enhance the GOE compared to neutral faces in most studies (Bayless et al., 2011; Fox et al., 2007; Hietanen & Leppänen, 2003). However, in these studies, fearful faces were always included. In one experiment in which fearful faces were not presented, angry faces actually enhanced the GOE compared with joyful and neutral expressions in high-anxious individuals (Holmes, Richards, & Green, 2006). Although these results need to be extended to a non-anxious population, they suggest that the modulation of the GOE by emotions may rely on the relative rather than absolute valence of an emotion. That is, in the context of fearful faces, angry faces might not be perceived as negative enough to trigger a GOE enhancement.

Surprise has seldom been investigated in the gaze-orienting literature but was recently shown to increase the GOE to the same extent as fear (Bayless et al., 2011; Neath et al., 2013). Fearful and surprised facial expressions share many facial features including eye widening (Gosselin & Simard, 1999), which contributes to their facilitation of gaze-oriented attention (Bayless et al., 2011). In addition, surprise’s valence is ambiguous (Fontaine, Scherer, Roesch, & Ellsworth, 2007) but is interpreted negatively in the context of negative emotions such as fearful faces (Neta & Whalen, 2010). Finally, surprise signals the presence of an unexpected event, which could prompt faster orienting to determine whether it is a danger. Overall, the literature concerning the impact of emotions on the GOE remains unclear, and a given facial expression may modulate the GOE differently depending on the other facial expressions it is presented with.

Event-Related Potentials (ERPs) can track brain activity occurring before a response is made and thus help uncover the temporal dynamics of spatial attention orienting by gaze and its modulation by emotion. However, few ERP studies have focused on gaze orienting. Some studies have investigated the ERP correlates of attention at target presentation and showed that the amplitude of early visual components, P1 and N1, was larger for targets preceded by congruent compared with incongruent gaze cues (Schuller & Rossion, 2001, 2004, 2005). These effects are thought to reflect the early facilitation of target visual processing, due to the enhancement of attention at the gazed-at location, and have also been reported for targets preceded by arrow cues (Eimer, 1997; Mangun & Hillyard, 1991).

Other studies have focused on ERPs elicited by the cue. In arrow cuing studies, two components were shown to index two different attention stages (Nobre, Sebestyen, & Miniussi, 2000). The Early Directing Attention Negativity (EDAN) indexes the initial orienting of attention in the cued direction and reflects the increase of activity in cortical regions devoted to the processing of the cued location (Simpson et al., 2006) or the selection of aspects of the cue relevant for the accomplishment of the task (Van Velzen & Eimer, 2003). The Anterior Directing Attention Negativity (ADAN) indexes the holding of attention at the cued location and reflects the engagement of the fronto-parietal attention network in the control and redirection of attention in space (Praamstra, Boutsen, & Humphreys, 2005). Only a few studies have investigated these components in gaze cuing paradigms. Using schematic faces, one study found no evidence for EDAN or ADAN with gaze cues, although they were both present with arrow cues (Hietanen, Leppänen, Nummenmaa, & Astikainen, 2008). Using face photographs, another study reported evidence for an ADAN but not an EDAN component (Holmes et al., 2010). It thus remains unclear whether these components can be found reliably in gaze cuing studies.

So far, few ERP studies have investigated the influence of emotion on spatial attention orienting by gaze, and all of them failed to show emotional modulations of the attention-related ERPs (Fichtenholtz et al., 2007; Fichtenholtz, Hopfinger, Graham, Detwiler, & LaBar, 2009; Galfano et al., 2011; Holmes et al., 2010). However, these experiments also reported no clear modulation of the GOE by emotion at the behavioral level. To the best of our knowledge, no ERP study using the gaze-orienting paradigm has yet reported emotional modulations of the target-related P1 and N1 components or of the cue-related EDAN and ADAN components together with behavioral modulations of the GOE.

In the present ERP study, we used a localization task and dynamic displays to investigate whether fearful, angry, happy, surprised, and neutral expressions modulate the GOE, and we tracked the neural correlates of these modulations in a non-anxious population using ERPs. Given that gaze cues are mainly used to orient attention toward a given location in the environment, we believed that the localization task, coupled with dynamic rather than static stimuli, would be one step closer to real-life situations and would reveal emotional modulations of the GOE not previously reported. To ensure a sufficient number of trials per condition while avoiding an overly long session, we ran two experiments, each including two emotions and neutral expressions. Fearful, surprised, and neutral expressions were compared in Experiment 1, while angry, happy, and neutral expressions were compared in Experiment 2. Happy and angry facial expressions are considered approach-related emotions, while fear and potentially surprise (in the context of fear) are avoidance-related emotions. This design allowed us to determine whether the emotional modulation of the GOE differed between emotions signaling approach and emotions signaling avoidance. Most importantly, it allowed us to test the idea that anger can enhance the GOE compared to neutral faces when fear is not included in the design. At the behavioral level, in accordance with previous studies (Bayless et al., 2011; Holmes et al., 2006; Neath et al., 2013), we predicted that (i) relative to neutral faces, the GOE would be larger for fearful and surprised faces and (ii) angry faces would enhance the GOE compared to neutral and happy faces.
Regarding ERP modulations, at target presentation, we expected to replicate the congruency effects on P1 and N1 components and predicted larger modulations of these effects for fearful, surprised, and angry expressions compared to neutral expressions, reflecting an enhancement of the early visual processing of the target for these emotions. For ERPs recorded to the face cue, we hypothesized that the task and the dynamic face photographs used would help reveal the presence of EDAN and ADAN attention-related components. Given that EDAN occurs between 200 and 300 ms after cue onset and that emotion and gaze cues seem to require more than 300 ms to be fully integrated, we predicted no modulation of EDAN by emotion. In contrast, since ADAN occurs between 300 and 500 ms during which the emotion and gaze cues are likely to be integrated, we anticipated it would show a larger modulation with fearful, surprised, and angry expressions compared to neutral and happy expressions.

METHODS

Participants

Twenty-eight right-handed participants (14 females) with normal or corrected-to-normal vision and no self-reported history of psychiatric or neurological illness were recruited and tested at the University of Waterloo. They received $40 or course credits for their participation. Ten participants were excluded (five different participants in each experiment) because visual inspection of their ERPs revealed no clear P1 component. This resulted in a final sample of 23 participants (12 females) in each experiment. Ages ranged from 19 to 27 years (Expt.1: mean = 21.4, SD = 2.3; Expt.2: mean = 21.5, SD = 2.5).

Participants were pre-screened based on their scores on the State-Trait Inventory for Cognitive and Somatic Anxiety (STICSA) test (Ree, French, MacLeod, & Locke, 2008), and only those whose trait anxiety scores were in the normal range, below the high anxiety score of 42, were tested (mean trait anxiety scores in Expt.1 = 30.74, SD = 6.76; Expt.2 = 31.57, SD = 7.08). Participants were also preselected on the Autism Quotient (AQ) test (Baron-Cohen, Wheelwright, Hill, Raste, & Plumb, 2001), as autistic traits have been shown to modulate the GOE (Bayliss, di Pellegrino, & Tipper, 2005). Only participants whose AQ scores were below the threshold score of 26 (Woodbury-Smith, Robinson, Wheelwright, & Baron-Cohen, 2005) were selected (mean AQ scores in Expt.1 = 16.09, SD = 3.82; Expt.2 = 15.61, SD = 4.06). Age, AQ, and STICSA scores did not differ significantly between the two experiments. The study was approved by the University of Waterloo Research Ethics Board, and all participants gave informed written consent.
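The two pre-screening cutoffs amount to a simple filter. The sketch below is illustrative only: the `Candidate` record, its field names, and the example scores are hypothetical, and it assumes both cutoffs are strict, as the wording "below" suggests.

```python
from dataclasses import dataclass

# Cutoffs taken from the text; both treated as strict ("below") thresholds.
STICSA_TRAIT_CUTOFF = 42  # high trait-anxiety score
AQ_CUTOFF = 26            # autistic-trait threshold


@dataclass
class Candidate:
    """Hypothetical pre-screening record for one volunteer."""
    pid: str
    sticsa_trait: float
    aq: float


def is_eligible(c: Candidate) -> bool:
    """True when both questionnaire scores fall below their cutoffs."""
    return c.sticsa_trait < STICSA_TRAIT_CUTOFF and c.aq < AQ_CUTOFF


if __name__ == "__main__":
    # Made-up scores, for illustration only.
    pool = [Candidate("a", 30, 16), Candidate("b", 45, 12), Candidate("c", 28, 27)]
    print([c.pid for c in pool if is_eligible(c)])  # ['a']
```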

Stimuli

Photographs of eight individuals (four men, four women) each with surprised, fearful, angry, happy, and neutral expressions were selected from the MacBrain Face Stimulus Set¹ (Tottenham et al., 2009). These faces were selected based on their high emotion recognition scores in the original validation set.

Eye gaze was manipulated using Photoshop (Version 11.0). For each image, the irises were cut and pasted into the corners of the eyes to produce leftward and rightward gaze in addition to the original straight gaze. An elliptical mask was applied to each picture so that hair, ears, and shoulders were not visible. The set of images was equated for contrast and luminance using the SHINE toolbox (Willenbockel et al., 2010). All face photographs subtended a visual angle of 8.02° horizontally and 12.35° vertically and were presented centrally on a white background.

Procedure

All participants completed the two experiments one week apart. Experiment 1 included fearful, surprised, and neutral faces, while Experiment 2 included happy, angry, and neutral faces. This design maximized the number of trials per condition while diminishing fatigue effects that would have arisen in one single, lengthy experiment. The order in which the two experiments were run was counterbalanced across participants.

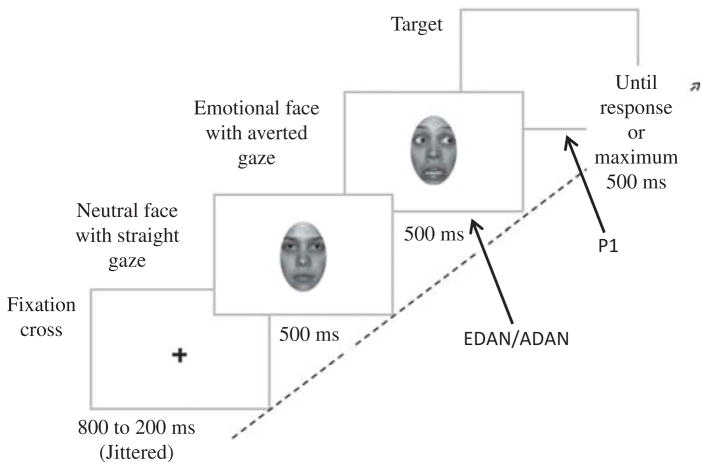

In each experiment, participants sat 67 cm from a computer monitor in a quiet, dimly lit, electrically shielded room, with their head stabilized by a chin rest. Each trial started with a central fixation cross (1.28° × 1.28° of visual angle) presented for a random duration of 800, 900, 1000, 1100, or 1200 ms. A neutral face with straight gaze was then shown for 500 ms, followed by the same face, either expressing an emotion or remaining neutral, with rightward, leftward, or straight gaze, also presented for 500 ms (Figure 1). This rapid serial presentation produced the perception of a face moving its eyes to the side and dynamically expressing an emotion (apparent motion). The target, a black asterisk (.85° × .85°), was then presented on either the right or the left, 7.68° from the center of the screen. It remained on the screen until the response or for a maximum of 500 ms (500 ms requested; 516 ms actual display duration).
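As a rough guide to the physical layout, the visual angles above can be converted to on-screen distances with the standard relations size = 2·d·tan(θ/2) for a stimulus centered on fixation and offset = d·tan(θ) for an eccentric point. This is a sketch under stated assumptions: a flat screen viewed perpendicularly at d = 67 cm, and a 7.68° eccentricity measured to the target's center. The centimeter values it prints are derived here and are not reported by the study.

```python
import math

VIEWING_DISTANCE_CM = 67.0  # viewing distance reported in the text


def size_cm(visual_angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Physical extent of a stimulus centered on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2.0)


def eccentricity_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """On-screen distance from fixation of a point at the given eccentricity."""
    return distance_cm * math.tan(math.radians(angle_deg))


if __name__ == "__main__":
    print(f"face width    ≈ {size_cm(8.02):.1f} cm")          # ≈ 9.4 cm
    print(f"face height   ≈ {size_cm(12.35):.1f} cm")         # ≈ 14.5 cm
    print(f"target offset ≈ {eccentricity_cm(7.68):.1f} cm")  # ≈ 9.0 cm
```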

Figure 1.

Procedure used, with the example of an incongruent trial (target appearing in the direction opposite to gaze cue) and a fearful expression, as used in Experiment 1. Arrows show at which stage the ERP components are measured (P1 at target presentation and EDAN/ADAN at cue presentation).

The experiments were programmed using Presentation® software (Version 0.70, www.neurobs.com). Each consisted of 11 blocks of 144 trials separated by self-paced breaks, resulting in 88 trials for each of the 18 conditions. A condition consisted of a combination of a gaze direction (straight, rightward, or leftward), a specific emotion, and a target position (left or right). There was an equal number of congruent, incongruent, and straight-gaze trials. Trial order was fully randomized within each block, with each of the eight face models appearing once per condition.
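The trial arithmetic above can be checked with a short generator: 3 gaze directions × 3 emotions × 2 target sides give 18 conditions, 8 face models per condition give 144 trials per block, and 11 blocks give 1584 trials, that is, 88 per condition and 528 each of congruent, incongruent, and straight-gaze trials. This is a hypothetical sketch, not the Presentation script used in the study; the function names are illustrative, and it assumes gaze direction and target side are coded in the same (observer's) left/right frame.

```python
import itertools
import random
from collections import Counter

# Factor levels from the text (Experiment 1 emotions; Experiment 2 would use
# ("happy", "angry", "neutral")).
GAZES = ("straight", "left", "right")
EMOTIONS = ("fear", "surprise", "neutral")
TARGET_SIDES = ("left", "right")
N_MODELS = 8   # face identities, each shown once per condition per block
N_BLOCKS = 11


def make_block(rng: random.Random):
    """Return one shuffled block of (model, gaze, emotion, target_side) trials."""
    conditions = list(itertools.product(GAZES, EMOTIONS, TARGET_SIDES))  # 18 conditions
    trials = [(model, g, e, s) for (g, e, s) in conditions for model in range(N_MODELS)]
    rng.shuffle(trials)  # full randomization within the block
    return trials


def trial_type(gaze: str, target_side: str) -> str:
    """Label a trial; assumes gaze and target side share the same left/right frame."""
    if gaze == "straight":
        return "straight"
    return "congruent" if gaze == target_side else "incongruent"


def make_session(seed: int = 0):
    """Concatenate N_BLOCKS independently shuffled blocks."""
    rng = random.Random(seed)
    return [trial for _ in range(N_BLOCKS) for trial in make_block(rng)]


if __name__ == "__main__":
    session = make_session()
    print(len(session))  # 1584
    print(set(Counter((g, e, s) for _, g, e, s in session).values()))  # {88}
    print(Counter(trial_type(g, s) for _, g, _, s in session))  # 528 of each type
```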

Throughout the experiments, subjects were instructed to maintain fixation at the central location. Twenty practice trials were run before starting each experiment and the participants were told that the direction of eye gaze was not predictive of target location. They were required to press the left key “C” on the keyboard with their left hand when the target was shown on the left and the right key “M” with their right hand when the target was presented on the right. They were asked to be as accurate and as fast as possible.

Electrophysiological recordings

The electroencephalogram (EEG) was recorded with an Active Two Biosemi system using a 66-channel elastic cap (extended 10/20 system) plus 3 pairs of extra electrodes, for a total of 72 recording sites. Two pairs of ocular sites monitored vertical and horizontal eye movements from the outer canthi and infraorbital ridges (IO1, IO2, LO1, LO2); one pair was situated over the mastoids (TP9/TP10). EEG was recorded at a sampling rate of 516 Hz. A common mode sense (CMS) active electrode and a driven right leg (DRL) passive electrode serving as ground were used during acquisition. Offline, an average reference was computed and used for the analysis.

For the target analysis, EEG was epoched from 100 ms before to 300 ms after target onset, with the 100 ms pre-target interval serving as baseline. For the cue analysis, EEG was epoched from 100 ms before to 500 ms after gaze-cue onset, with the 100 ms pre-cue interval serving as baseline. Data were band-pass filtered (0.01–30 Hz). For each subject, trials with amplitudes exceeding ±70 μV at any time point on any channel (eye-movement channels excluded) were first rejected before Independent Component Analysis (ICA) decomposition. This represented less than 10% of the total number of trials. ICA, as implemented in EEGLAB (Delorme & Makeig, 2004), was then computed from all trials. ICA components reflecting major artifacts, including ocular movements or electrode dysfunction, were removed for each participant. After ICA correction, further trials with extreme values (±50 μV) were rejected where needed. ERPs were then computed for each subject and each condition. Across subjects, the average number of trials per condition after artifact rejection was 82 ± 6 in Expt.1 and 83 ± 5 in Expt.2 for the P1 analysis, and between 140 and 160 for the EDAN and ADAN analyses.
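The threshold-based rejection steps can be sketched as follows. This is a simplified, hypothetical illustration in plain Python (epochs as channel × sample lists in μV); it does not reproduce EEGLAB or the ICA step, and it assumes that thresholds are applied to baseline-corrected epochs and that the ocular channels are simply left out of the check. The same `reject` helper would be called with a 70 μV limit before ICA and a 50 μV limit after it.

```python
from typing import List, Sequence

Epoch = List[List[float]]  # channels x samples, amplitudes in microvolts


def baseline_correct(epoch: Epoch, n_baseline: int) -> Epoch:
    """Subtract each channel's mean over its first n_baseline (pre-stimulus) samples."""
    out = []
    for channel in epoch:
        base = sum(channel[:n_baseline]) / n_baseline
        out.append([v - base for v in channel])
    return out


def exceeds(epoch: Epoch, limit_uv: float, channels: Sequence[int]) -> bool:
    """True if any sample on any checked channel lies outside +/- limit_uv."""
    return any(abs(v) > limit_uv for ch in channels for v in epoch[ch])


def reject(epochs: List[Epoch], limit_uv: float, channels: Sequence[int]):
    """Return (kept epochs, number rejected)."""
    kept = [ep for ep in epochs if not exceeds(ep, limit_uv, channels)]
    return kept, len(epochs) - len(kept)


if __name__ == "__main__":
    # Toy data: channel 0 is scalp EEG, channel 1 stands in for an ocular channel.
    clean = [[1.0, 2.0, 3.0], [120.0, 0.0, 0.0]]  # large value only on the ocular channel
    noisy = [[1.0, 90.0, 3.0], [0.0, 0.0, 0.0]]   # scalp sample beyond 70 uV
    kept, n_rejected = reject([clean, noisy], limit_uv=70.0, channels=[0])
    print(n_rejected)  # 1
```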

Data analysis

Behavior

Responses were scored as correct if the response key matched the side of target appearance and the Reaction Time (RT) was above 100 ms and below 1200 ms; all other responses were scored as incorrect. Mean RTs for correct responses were calculated for each facial expression and congruency condition, averaging across left and right target conditions. For each subject, only RTs within 2.5 standard deviations of the mean of each condition were kept in the mean RT calculation (Van Selst & Jolicoeur, 1994). On average, less than 7.5% of trials were excluded per condition in each experiment.
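The scoring and trimming rules above can be sketched as below. The helper names are hypothetical; the sketch assumes a single trimming pass around each condition's mean using the sample standard deviation, with RTs exactly 2.5 SD away retained, since the text does not specify whether trimming was iterated.

```python
from statistics import mean, stdev

RT_MIN_MS, RT_MAX_MS = 100, 1200  # valid-response window from the text
SD_CUTOFF = 2.5                   # trimming criterion from the text


def is_correct(response_side: str, target_side: str, rt_ms: float) -> bool:
    """Correct = key matches the target side and RT lies strictly inside the window."""
    return response_side == target_side and RT_MIN_MS < rt_ms < RT_MAX_MS


def trimmed_mean_rt(rts):
    """Mean of correct RTs after one pass of +/- 2.5 SD trimming around the condition mean.

    Returns (trimmed mean, number of RTs excluded). Requires at least two RTs.
    """
    m, s = mean(rts), stdev(rts)
    kept = [rt for rt in rts if abs(rt - m) <= SD_CUTOFF * s]
    return mean(kept), len(rts) - len(kept)


if __name__ == "__main__":
    # Made-up RTs: twenty fast responses and one slow outlier.
    print(trimmed_mean_rt([300.0] * 20 + [900.0]))  # (300.0, 1)
```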

It has been shown that a face gazing directly at the participant triggers slower responses than the same face looking to the side, especially when it displays a threatening facial expression (Fox et al., 2007; Georgiou et al., 2005; Mathews et al., 2003), which is consistent with the idea that different processes underlie the perception of direct and averted gaze (George, Driver, & Dolan, 2001). Because direct gaze seems to capture attention to a larger extent than averted gaze (Senju & Hasegawa, 2005), we followed previous gaze-orienting studies (Bayless et al., 2011; Fox et al., 2007; Mathews et al., 2003) and analyzed direct gaze trials separately from averted gaze trials.

For each experiment, error rates and RTs to averted gaze trials were analyzed separately using a mixed-model ANOVA² with Emotion (3: fearful, surprised, and neutral in Expt.1; happy, angry, and neutral in Expt.2) and Congruency (2: congruent, incongruent) as within-subject factors and Experiment Order as a between-subject factor. When the Emotion × Congruency interaction was significant, congruent and incongruent trials were further analyzed separately using the factor Emotion. RTs to straight-gaze trials were analyzed using an ANOVA with Emotion as a within-subject factor and Experiment Order as a between-subject factor.
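The GOE plotted in Figure 2(b) is the difference between mean incongruent and mean congruent RTs, so positive values indicate faster responses at the gazed-at location; whether this difference varies across emotions is what the Emotion × Congruency interaction captures. A minimal sketch, with a hypothetical helper name and made-up condition means:

```python
def gaze_orienting_effect(mean_rt):
    """GOE per emotion = mean RT (incongruent) - mean RT (congruent), in ms.

    mean_rt maps (emotion, congruency) -> a participant's mean correct RT.
    """
    emotions = sorted({emotion for emotion, _ in mean_rt})
    return {e: mean_rt[(e, "incongruent")] - mean_rt[(e, "congruent")] for e in emotions}


if __name__ == "__main__":
    # Made-up condition means for one participant, for illustration only.
    example = {
        ("fear", "congruent"): 290.0, ("fear", "incongruent"): 310.0,
        ("neutral", "congruent"): 300.0, ("neutral", "incongruent"): 312.0,
    }
    print(gaze_orienting_effect(example))  # {'fear': 20.0, 'neutral': 12.0}
```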

ERPs

ERPs to targets

The P1 peak was defined as the time point of maximum amplitude between 80 and 130 ms after target onset,³ selected automatically within this window for each subject and condition and then verified by visual inspection. Based on data inspection, PO7/PO8 and O1/O2 were selected as electrodes of interest. As P1 is maximal over the hemisphere contralateral to the stimulus, and to avoid unnecessarily complicated results, only electrodes contralateral to the target side were analyzed, as done previously (e.g., Fichtenholtz et al., 2007, 2009). P1 amplitude and latency were analyzed using a 2 (Electrode: PO/O) × 2 (Hemisphere: left, right) × 3 (Emotion: fear, surprise, neutral in Expt.1; happy, angry, neutral in Expt.2) × 2 (Congruency: congruent, incongruent) repeated-measures ANOVA. Planned analyses were also carried out for each emotion separately.
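The automatic part of the P1 peak selection can be sketched as a windowed maximum search. This is a hypothetical illustration: it assumes inclusive window bounds and, in case of ties, keeps the earliest sample; the visual verification step is not modeled. The electrode mapping follows the contralateral rule stated above.

```python
# Electrode names from the text; a target on one side is analyzed over the
# opposite (contralateral) hemisphere.
CONTRALATERAL_SITES = {"left": ("PO8", "O2"), "right": ("PO7", "O1")}


def p1_peak(times_ms, amplitudes_uv, window=(80.0, 130.0)):
    """Return (latency_ms, amplitude_uv) of the most positive sample in the window."""
    in_window = [(t, a) for t, a in zip(times_ms, amplitudes_uv) if window[0] <= t <= window[1]]
    if not in_window:
        raise ValueError("no samples inside the P1 search window")
    # max() keeps the first (earliest) sample among equal maxima.
    return max(in_window, key=lambda pair: pair[1])


if __name__ == "__main__":
    times = [0, 50, 90, 100, 110, 140]
    amps = [0.0, 5.0, 2.0, 4.0, 3.0, 9.0]  # toy waveform; 9.0 lies outside the window
    print(CONTRALATERAL_SITES["left"], p1_peak(times, amps))  # ('PO8', 'O2') (100, 4.0)
```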

ERPs to gaze cue

For this analysis, ERPs were time-locked to the gaze shift. Based on careful observation of the current data and previous reports (Hietanen et al., 2008; Holmes et al., 2010; Van Velzen & Eimer, 2003), the EDAN component was measured at posterior electrodes (averaged across P7 and PO7 over the left hemisphere and across P8 and PO8 over the right hemisphere) between 200 and 300 ms, while the ADAN component was measured at anterior electrode sites (averaged across F5, F7, FC5, and FT7 for the left hemisphere and F6, F8, FC6, and FT8 for the right hemisphere) between 300 and 500 ms.
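Measuring each component amounts to averaging the ERP over its time window and then across its electrode cluster. The sketch below is hypothetical (plain Python, inclusive window bounds, equal weighting of electrodes); because both steps are simple means over a common set of samples, the order of averaging does not change the result.

```python
# Electrode clusters and time windows as listed in the text.
CLUSTERS = {
    ("EDAN", "left"): ("P7", "PO7"),
    ("EDAN", "right"): ("P8", "PO8"),
    ("ADAN", "left"): ("F5", "F7", "FC5", "FT7"),
    ("ADAN", "right"): ("F6", "F8", "FC6", "FT8"),
}
WINDOWS_MS = {"EDAN": (200.0, 300.0), "ADAN": (300.0, 500.0)}


def cluster_mean_amplitude(erp, times_ms, component, hemisphere):
    """Mean amplitude over the component's window, averaged across its electrode cluster.

    erp maps electrode name -> amplitudes (uV) sampled at times_ms.
    """
    lo, hi = WINDOWS_MS[component]
    idx = [i for i, t in enumerate(times_ms) if lo <= t <= hi]
    if not idx:
        raise ValueError("no samples inside the measurement window")
    per_electrode = [sum(erp[name][i] for i in idx) / len(idx)
                     for name in CLUSTERS[(component, hemisphere)]]
    return sum(per_electrode) / len(per_electrode)


if __name__ == "__main__":
    times = [150, 200, 250, 300, 350]
    erp = {"P7": [9.0, 1.0, 2.0, 3.0, 9.0], "PO7": [9.0, 3.0, 4.0, 5.0, 9.0]}
    print(cluster_mean_amplitude(erp, times, "EDAN", "left"))  # 3.0
```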

Given that EDAN and ADAN are characterized by more negative amplitudes for contralateral than for ipsilateral gaze cues, we investigated, for each hemisphere, whether amplitudes were more negative for face cues with gaze directed toward the contralateral side than for gaze directed toward the ipsilateral side.⁴ For the left hemisphere, leftward gaze was the ipsilateral gaze condition and rightward gaze the contralateral gaze condition; the inverse was true for the right hemisphere.
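The hemisphere-by-gaze bookkeeping described above can be made explicit as follows. The function names are hypothetical; the sketch simply encodes the stated rule (same side = ipsilateral, opposite side = contralateral) and expresses the expected EDAN/ADAN pattern as a negative contralateral-minus-ipsilateral difference.

```python
def gaze_laterality(hemisphere: str, gaze: str) -> str:
    """Classify an averted gaze relative to a recording hemisphere."""
    if hemisphere not in ("left", "right") or gaze not in ("left", "right"):
        raise ValueError("hemisphere and gaze must be 'left' or 'right'")
    return "ipsilateral" if gaze == hemisphere else "contralateral"


def laterality_difference(mean_amp):
    """Contralateral minus ipsilateral amplitude for each hemisphere.

    mean_amp maps (hemisphere, gaze) -> mean amplitude (uV). Negative values
    correspond to the EDAN/ADAN pattern described in the text.
    """
    diffs = {}
    for hemi in ("left", "right"):
        contra_gaze = "right" if hemi == "left" else "left"
        diffs[hemi] = mean_amp[(hemi, contra_gaze)] - mean_amp[(hemi, hemi)]
    return diffs


if __name__ == "__main__":
    # Made-up amplitudes, for illustration only.
    amps = {("left", "left"): 1.0, ("left", "right"): 0.5,
            ("right", "right"): 2.0, ("right", "left"): 1.0}
    print(laterality_difference(amps))  # {'left': -0.5, 'right': -1.0}
```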

For both components, mean amplitudes for the ipsilateral and contralateral conditions were calculated for each of the three emotions and for each hemisphere. In each experiment, a 2 (Hemisphere) × 2 (Gaze laterality: contralateral, ipsilateral) × 3 (Emotion) repeated-measures ANOVA was computed.

For all analyses (including behavioral analyses), the significance level was set at α = .05, and the Greenhouse–Geisser correction for sphericity was applied when necessary. Multiple comparisons were adjusted using Bonferroni corrections.
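For reference, the Bonferroni correction can be expressed either as comparing each p value to α/m or, equivalently, as multiplying each p value by the number of comparisons m (capped at 1) and comparing it to α. The sketch below uses the second form; the p values are made up, and treating the three pairwise emotion comparisons as one family is an assumption, since the text does not define the comparison families.

```python
ALPHA = 0.05


def bonferroni_adjust(p_values):
    """Bonferroni-adjusted p values: each p multiplied by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


if __name__ == "__main__":
    # Made-up p values for three pairwise emotion comparisons.
    raw = [0.004, 0.02, 0.40]
    adjusted = bonferroni_adjust(raw)
    print([round(p, 3) for p in adjusted])  # [0.012, 0.06, 1.0]
    print([p < ALPHA for p in adjusted])    # [True, False, False]
```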

EXPERIMENT 1 RESULTS

Behavior

Averted gaze trials

Mean error rates for averted gaze trials are shown in Table 1(a). The error rate analysis yielded no main effect of Experiment Order (F = .37, p = .55) and no interaction involving Experiment Order. A main effect of Congruency was found (F (1, 21) = 15.19, MSE = 11.64, p < .01, η² = .42), with more errors in the incongruent (7.04%) than in the congruent condition (4.78%). However, there was no main effect of Emotion (F = 1.53, p = .23) and no interaction between Emotion and Congruency (F = 0.1, p = .99) on the error rate. Note that in the remainder of the paper, for clarity, we use the symbol η² but report partial eta squared values (as calculated by SPSS Statistics 21).
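Because the η² values in this paper are partial eta squared, each one can be recovered from its F ratio and degrees of freedom via η²p = F·df_effect / (F·df_effect + df_error), which follows from η²p = SS_effect / (SS_effect + SS_error). The short check below, offered as a sketch, reproduces two of the values reported for Experiment 1.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2).

    Follows from eta_p^2 = SS_effect / (SS_effect + SS_error) and
    F = (SS_effect / df1) / (SS_error / df2).
    """
    num = f_value * df_effect
    return num / (num + df_error)


if __name__ == "__main__":
    # F values reported for Experiment 1 (Congruency effects on errors and RTs).
    print(round(partial_eta_squared(15.19, 1, 21), 2))  # 0.42
    print(round(partial_eta_squared(44.19, 1, 22), 2))  # 0.67
```

The same formula applies to Greenhouse–Geisser-corrected degrees of freedom, since the correction multiplies both df terms by the same factor, which cancels; for example, F (1.31, 28.77) = 66.80 gives .75, matching the straight-gaze RT analysis.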

TABLE 1.

Mean error rates obtained in Experiment 1 for averted gaze trials (a) and straight-gaze trials (b)

| (a) Error (%) in Averted Gaze | Mean (SD) |
|---|---|
| Surprise congruent | 4.92 (5.73) |
| Surprise incongruent | 7.24 (5.38) |
| Neutral congruent | 5.04 (4.52) |
| Neutral incongruent | 7.26 (5.69) |
| Fear congruent | 4.40 (4.77) |
| Fear incongruent | 6.77 (5.90) |

| (b) Error (%) in Direct Gaze | Mean (SD) |
|---|---|
| Surprise straight gaze | 4.82 (4.87) |
| Neutral straight gaze | 6.47 (6.79) |
| Fear straight gaze | 5.95 (5.95) |

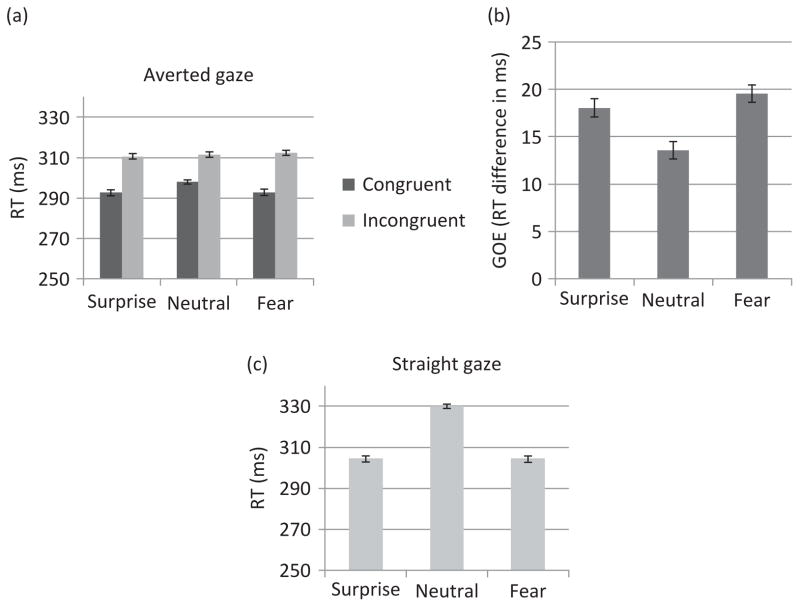

RT analysis of averted gaze trials yielded a main effect of Experiment Order (F (1, 21) = 7.28, MSE = 4074.24, p = .01, η² = .26), with overall faster RTs when Expt.1 was run after Expt.2 (mean = 287.65 ms) than when it was run first (mean = 316.99 ms). However, no interaction involving Experiment Order was significant; specifically, the order in which Expt.1 was run did not interact with Congruency or Emotion. In addition, there was a main effect of Emotion (F (2, 42) = 4.43, MSE = 24.67, p < .02, η² = .17), with faster RTs for surprised than for neutral faces (p < .01) and a trend toward faster RTs for fearful than for neutral faces (p = .08). Surprised and fearful faces did not differ significantly. There was also a main effect of Congruency (F (1, 22) = 44.19, MSE = 226.80, p < .01, η² = .67), reflecting faster RTs in the congruent than in the incongruent condition (Figure 2(a)). The Congruency by Emotion interaction was significant (F (2, 44) = 6.24, MSE = 17.81, p < .01, η² = .22), due to a larger congruency effect for fearful and surprised faces than for neutral faces (p < .01 and p = .02, respectively; Figure 2(b)). The GOE did not differ significantly between fearful and surprised faces. Analyzed separately, the congruent condition revealed a main effect of Emotion (F (2, 44) = 8.28, MSE = 25.25, p < .01, η² = .27), with faster RTs for surprised and fearful faces (which did not differ) than for neutral faces (p < .01 for each comparison). There was no Emotion effect in the incongruent condition.

Figure 2.

Behavioral results of Experiment 1 involving fearful, surprised, and neutral expressions. (a) Mean RTs to congruent and incongruent trials, (b) mean GOE (RT incongruent–RT congruent) for each emotion, and (c) mean RTs to straight-gaze trials. In all analyses, N = 23 and error bars represent SE.

Straight-gaze trials

Mean error rates for straight-gaze trials are shown in Table 1(b). The error analysis revealed no main effect of Experiment Order (F = .97, p = .34) and no interaction between Experiment Order and Emotion (F = 2.16, p = .13).

RT analysis of straight-gaze trials also revealed a main effect of Experiment Order (F (1, 21) = 8.59, MSE = 2376.88, p < .01, η² = .29), with faster RTs when Expt.1 was run second (294.87 ms) than when it was run first (329.31 ms). However, the Experiment Order by Emotion interaction was not significant. In addition, as shown in Figure 2(c), RTs to straight-gaze trials showed a main effect of Emotion (F (1.31, 28.77) = 66.80, MSE = 75.95, p < .01, η² = .75), with faster RTs for surprised and fearful faces than for neutral faces (p < .01 for both comparisons).

ERPs to targets

P1 amplitude

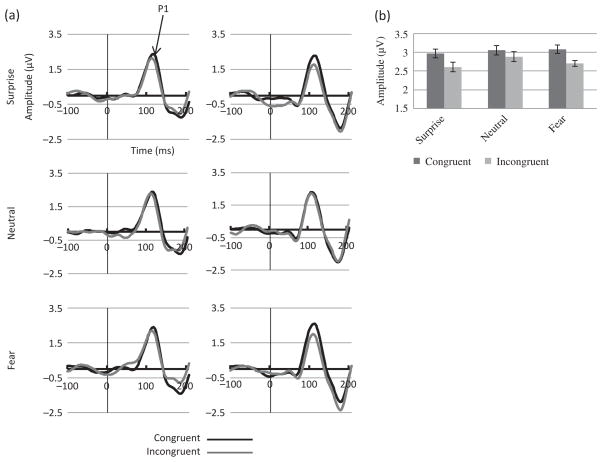

P1 amplitude analysis revealed a main effect of Electrode (F (1, 22) = 46.79, MSE = 5.96, p < .01, η² = .68), with larger amplitudes at O1/O2 than at PO7/PO8. A main effect of Congruency (F (1, 22) = 5.19, MSE = 1.17, p = .03, η² = .19) was due to larger amplitudes in the congruent than in the incongruent condition. However, as revealed by a significant Congruency by Electrode interaction (F (1, 22) = 7.51, MSE = .16, p = .01, η² = .25), this effect was significant at PO7/PO8 (F (1, 22) = 7.34, MSE = .86, p = .01, η² = .25; Figure 3(a) and (b)) but not at O1/O2.

Figure 3.

ERPs to the target in Experiment 1. (a) ERP waveforms showing P1 component at electrodes PO7 (left hemisphere, left panels) and PO8 (right hemisphere, right panels) for each emotion (Fear, Surprise, Neutral: N = 23) and (b) mean amplitudes for the congruent and incongruent conditions for each emotion.

Although no Congruency by Emotion interaction was found, planned analyses were performed for each emotion separately at PO7/PO8. The congruency effect was present for surprised (F (1, 22) = 4.48, MSE = .67, p = .05, η² = .17) and fearful faces (F (1, 22) = 6.40, MSE = .52, p = .02, η² = .23) but not for neutral faces (Figure 3(b)).

P1 latency

A main effect of Congruency (F (1, 22) = 6.24, MSE = 38.21, p = .02, η² = .22) was due to an overall later P1 peak in the congruent than in the incongruent condition. In addition, the Congruency by Hemisphere by Emotion interaction was significant (F (2, 44) = 4.34, MSE = 32.12, p = .02, η² = .17), but when the analysis was computed separately for each hemisphere, the Congruency by Emotion interaction was not significant in either hemisphere.

ERPs to gaze cues

Early directing attention negativity (EDAN)

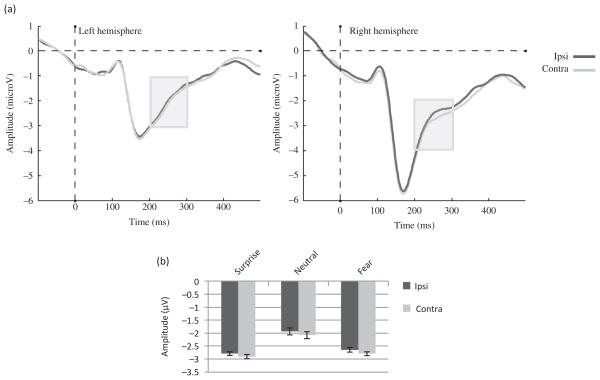

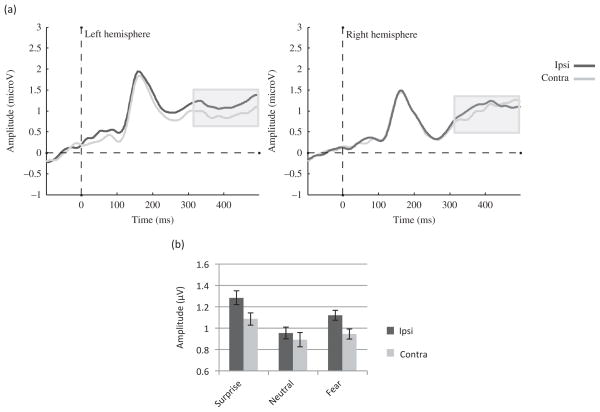

The analysis showed a trend toward a main effect of Hemisphere (F (1, 22) = 3.63, MSE = 9.99, p = .07, η² = .14), with larger amplitudes in the right than in the left hemisphere. There was also a main effect of Gaze laterality (F (1, 22) = 10.76, MSE = .12, p < .01, η² = .34), with more negative amplitudes for contralateral than for ipsilateral gaze (Figure 4(a) and (b)). In addition, there was a main effect of Emotion (F (1.17, 25.65) = 16.38, MSE = 1.15, p < .01, η² = .43): fearful and surprised faces yielded more negative amplitudes than neutral faces (p < .01 for both; Figure 4(b)). However, the Emotion by Gaze laterality interaction was not significant (F (2, 44) = .13, MSE = .07, p = .88, η² < .01).

Figure 4.

EDAN component to the face cue in Experiment 1. (a) Effect of Gaze laterality on the group ERP waveforms (averaged across emotions and electrodes). The gray zone marks the time limits of the analysis (200–300 ms) and (b) group amplitudes for contralateral and ipsilateral gaze directions for each emotion averaged across electrodes between 200 and 300 ms.

Anterior directing attention negativity (ADAN)

The analysis showed a main effect of Gaze laterality (F (1, 22) = 8.50, MSE = .17, p < .01, η² = .28), with less positive amplitudes for contralateral than for ipsilateral gaze (Figure 5(a) and (b)). There was also a main effect of Emotion (F (1.72, 37.91) = 7.47, MSE = .25, p < .01, η² = .25), with larger amplitudes for surprised faces than for fearful (p = .01) or neutral faces (p < .01), as shown in Figure 5(b). No significant Emotion by Gaze laterality interaction was found (F (2, 44) = .80, MSE = .15, p = .80, η² = .04). However, a Gaze laterality by Hemisphere interaction was present (F (1, 22) = 7.07, MSE = .08, p = .01, η² = .24), due to a Gaze laterality effect in the left hemisphere (F (1, 22) = 17.26, MSE = .11, p < .01, η² = .44) but not in the right hemisphere (F (1, 22) = .69, MSE = .14, p = .41, η² = .03).

Figure 5.

ADAN component to the face cue in Experiment 1. (a) Effect of Gaze laterality on the group ERP waveforms averaged across emotions and electrodes (F5, F7, FC5, and FT7 for the left hemisphere; F6, F8, FC6, and FT8 for the right hemisphere). The gray zone marks the time limits of the analysis (300–500 ms) and (b) Effect of Gaze laterality on the average amplitude across ADAN electrodes between 300 and 500 ms for each emotion.
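The Gaze laterality contrast compares amplitudes over the hemisphere opposite to the gaze direction (contralateral) with those over the hemisphere on the same side (ipsilateral). This section does not detail how electrodes and conditions were pooled. The sketch below is a minimal illustration under stated assumptions. It takes per-condition averaged waveforms and averages the 300–500 ms mean amplitude over the ADAN electrodes listed in the Figure 5 caption. It also collapses across hemispheres, although the analyses above kept Hemisphere as a factor. The data layout, function names, and toy constant waveforms are hypothetical and are not data from this study.

```python
from statistics import fmean

# ADAN electrode clusters listed in the Figure 5 caption.
LEFT_CLUSTER = ("F5", "F7", "FC5", "FT7")
RIGHT_CLUSTER = ("F6", "F8", "FC6", "FT8")
ADAN_WINDOW_MS = (300, 500)


def window_mean(times_ms, amplitudes, window):
    """Mean amplitude of the samples whose latency lies within [start, end] ms."""
    start, end = window
    return fmean([a for t, a in zip(times_ms, amplitudes) if start <= t <= end])


def contra_ipsi_amplitudes(erps, times_ms, window=ADAN_WINDOW_MS):
    """Return (contralateral, ipsilateral) mean amplitudes in the window.

    `erps` maps (gaze_direction, electrode) -> averaged waveform, where
    gaze_direction is "left" or "right". For left gaze, right-hemisphere
    electrodes are contralateral and left-hemisphere ones ipsilateral;
    the reverse holds for right gaze.
    """
    contra, ipsi = [], []
    for gaze in ("left", "right"):
        same_side = LEFT_CLUSTER if gaze == "left" else RIGHT_CLUSTER
        opposite_side = RIGHT_CLUSTER if gaze == "left" else LEFT_CLUSTER
        contra += [window_mean(times_ms, erps[(gaze, e)], window) for e in opposite_side]
        ipsi += [window_mean(times_ms, erps[(gaze, e)], window) for e in same_side]
    return fmean(contra), fmean(ipsi)


# Toy waveforms (not study data): 1 µV at contralateral sites, 2 µV at ipsilateral sites.
times = list(range(0, 601, 50))
toy = {}
for gaze in ("left", "right"):
    for electrode in LEFT_CLUSTER + RIGHT_CLUSTER:
        contralateral = (gaze == "left") == (electrode in RIGHT_CLUSTER)
        toy[(gaze, electrode)] = [1.0 if contralateral else 2.0] * len(times)

contra, ipsi = contra_ipsi_amplitudes(toy, times)
print(f"contralateral {contra:.2f} µV, ipsilateral {ipsi:.2f} µV")
```

A negative contralateral-minus-ipsilateral difference corresponds to the "less positive for contralateral gaze" pattern reported above. Passing the EDAN window (200–300 ms) together with the posterior EDAN electrodes, which are not listed in this section, would give the analogous EDAN measure.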

Summary

Participants were faster to respond to a gazed-at target than to a non-gazed-at target. This classic GOE was larger when the target was preceded by fearful or surprised faces than when it was preceded by neutral faces. At target presentation, P1 amplitude showed a congruency effect at PO7/PO8 sites. Planned comparisons revealed that this effect was restricted to targets preceded by fearful and surprised expressions. At cue presentation, we found evidence for a Gaze laterality effect, early at posterior sites (EDAN) and late at anterior sites (ADAN). Amplitudes were also larger for emotional than for neutral faces between 200 and 300 ms, and this effect was less pronounced between 300 and 500 ms. Finally, no Gaze laterality by Emotion interaction was found for EDAN or ADAN.

EXPERIMENT 2 RESULTS

Behavior

Averted gaze trials

Mean error rates are shown in Table 2(a). Errors showed neither a main effect of Experiment Order (F = 1.00, p = .76) nor any interaction involving Experiment Order. A main effect of Congruency was found (F (1, 22) = 7.92, MSE = 16.05, p = .01, η2 = .27), with more errors in the incongruent (5.90%) than in the congruent condition (3.98%). However, the error rate showed neither a main effect of Emotion (F = 2.73, p = .08) nor an interaction between Emotion and Congruency (F = 0.15, p = .96).

TABLE 2.

Mean error rates obtained in Experiment 2 for averted gaze trials (a) and straight-gaze trials (b)

| (a) Errors (%), averted-gaze trials | Mean (SD) |
|---|---|
| Happy congruent | 3.65 (2.49) |
| Happy incongruent | 5.48 (4.69) |
| Neutral congruent | 4.57 (3.61) |
| Neutral incongruent | 6.25 (5.00) |
| Angry congruent | 3.71 (2.97) |
| Angry incongruent | 5.95 (5.39) |

| (b) Errors (%), straight-gaze trials | Mean (SD) |
|---|---|
| Happy straight gaze | 3.71 (3.94) |
| Neutral straight gaze | 5.33 (5.07) |
| Angry straight gaze | 3.16 (3.19) |

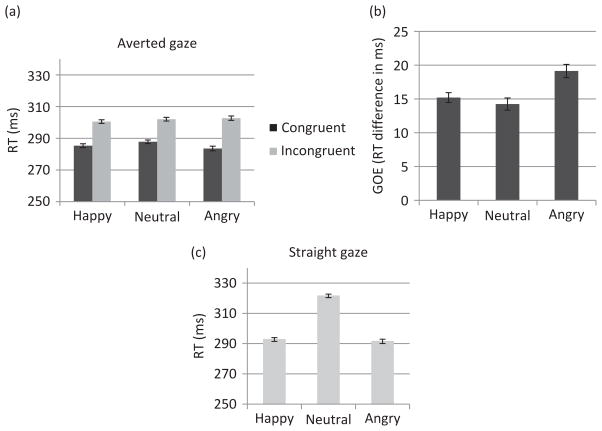

For RTs recorded on averted-gaze trials, neither the main effect of Experiment Order nor any interaction involving Order was significant. A main effect of Congruency (F (1, 21) = 43.24, MSE = 207.83, p < .01, η2 = .67) reflected faster RTs in the congruent than in the incongruent condition (Figure 6(a)). In addition, the Congruency by Emotion interaction was significant (F (2, 42) = 5.53, MSE = 13.29, p < .01, η2 = .21). The GOE was larger for angry faces than for neutral (p < .01) and happy (p = .02) faces, and neutral and happy faces did not differ significantly from each other (Figure 6(b)). Analyzed separately, the congruent condition showed a main effect of Emotion (F (2, 42) = 4.09, MSE = 24.49, p = .02, η2 = .16), with faster RTs for angry than for neutral faces (p = .01); no other comparisons were significant. There was no Emotion effect in the incongruent condition (Figure 6(a)).
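The Figure 6 caption defines the GOE as RT incongruent minus RT congruent. The per-participant sketch below assumes trial-level records and includes only correct responses in the RT means. That exclusion is a common convention that this section does not state explicitly. The record layout, function name, and toy trials are hypothetical. Averaging the per-participant values would give group-level GOEs comparable to those plotted in Figure 6(b).

```python
from collections import defaultdict
from statistics import fmean


def gaze_orienting_effect(trials):
    """Per-emotion GOE (ms) = mean RT incongruent - mean RT congruent.

    `trials` holds (emotion, congruency, rt_ms, correct) tuples for one
    participant. Error trials are excluded before averaging; a condition
    with no correct trials raises statistics.StatisticsError.
    """
    rts = defaultdict(list)
    for emotion, congruency, rt_ms, correct in trials:
        if correct:
            rts[(emotion, congruency)].append(rt_ms)
    emotions = sorted({emotion for emotion, _ in rts})
    return {
        emotion: fmean(rts[(emotion, "incongruent")]) - fmean(rts[(emotion, "congruent")])
        for emotion in emotions
    }


# Toy trials for one hypothetical participant (not study data).
toy_trials = [
    ("angry", "congruent", 300, True),
    ("angry", "congruent", 310, True),
    ("angry", "incongruent", 330, True),
    ("angry", "incongruent", 900, False),  # error trial: excluded
    ("neutral", "congruent", 320, True),
    ("neutral", "incongruent", 335, True),
]
print(gaze_orienting_effect(toy_trials))
```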

Figure 6.

Behavioral results of Experiment 2 involving happy, angry, and neutral expressions. (a) Mean RTs for congruent and incongruent trials, (b) mean GOE (RT incongruent–RT congruent) for each emotion, and (c) mean RTs for straight-gaze trials. In all analyses, N = 23 and error bars represent SE.

Straight-gaze trials

Mean error rates are shown in Table 2(b). Errors showed neither a main effect of Experiment Order (F = .05, p = .83) nor an interaction between Experiment Order and Emotion (F = .06, p = .95).

No effect of, or interaction with, Experiment Order was found in the RT analysis. As shown in Figure 6(c), RT analysis for straight-gaze trials revealed a main effect of Emotion (F (2, 42) = 93.96, MSE = 75.17, p < .01, η2 = .82). RTs were faster for angry and happy faces, which did not differ from each other, than for neutral faces (both p < .01).

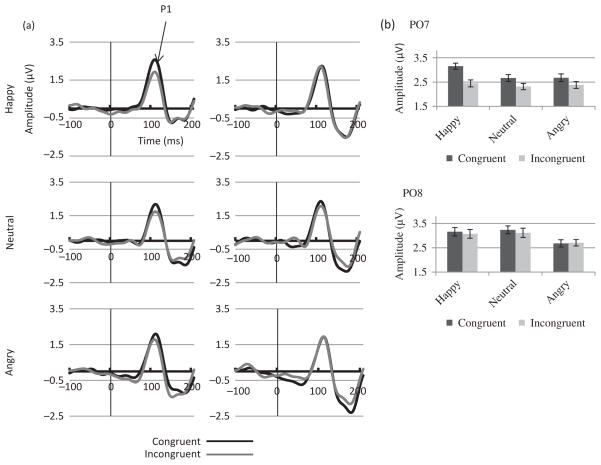

ERPs to the target

P1 amplitude

We found a main effect of Electrode (F (1, 22) = 22.46, MSE = 19.63, p < .05, η2 = .51), with larger amplitudes at O1/O2 than at PO7/PO8, and a main effect of Emotion (F (2, 44) = 3.33, MSE = 2.00, p < .05, η2 = .13), with overall larger amplitudes for targets preceded by happy than by angry faces (p < .01). Additionally, the expected congruency effect was found (F (1, 22) = 4.20, MSE = 1.73, p = .05, η2 = .16), with larger P1 amplitudes in the congruent than in the incongruent condition (Figure 7).

Figure 7.

ERPs to the target in Experiment 2. (a) ERP waveforms showing P1 component at electrodes PO7 (left hemisphere, left panels) and PO8 (right hemisphere, right panels) for each emotion (Happy, Angry, Neutral: N = 23) and (b) Mean P1 amplitudes for the incongruent and congruent conditions for each emotion: left hemisphere (PO7, upper panel) and right hemisphere (PO8, lower panel).

Planned analyses for each emotion revealed a main effect of Congruency for happy faces (F (1, 22) = 6.42, MSE = .92, p = .02, η2 = .23). They also revealed a Hemisphere by Congruency interaction (F (1, 22) = 4.72, MSE = 1.08, p = .04, η2 = .18): the congruency effect was significant in the left hemisphere (p = .02) but only a trend in the right hemisphere (p = .09). The congruency effect was not significant for neutral or angry faces (Figure 7(a) and (b)).

P1 latency

There was neither a main effect of Congruency (F (1, 22) = 1.41, MSE = 89.21, p = .25, η2 = .06) nor a Congruency by Emotion interaction (F (2, 44) = .59, MSE = 63.87, p = .56, η2 = .03) on P1 latency.
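P1 amplitude and latency are read off a peak. This section does not give the P1 search window, so the 80–160 ms bounds in the example below are a hypothetical placeholder. The footnote's N1 definition (the minimum between 115 and 205 ms after target onset) corresponds to the same function with `polarity=-1`. The function name and toy waveform are illustrative assumptions. The sketch simply picks the extreme sample and does not judge whether the peak is clear, which is the problem the footnote reports for N1.

```python
def find_peak(times_ms, amplitudes, window, polarity=1):
    """Return (latency_ms, amplitude) of the extreme sample inside `window`.

    polarity=1 selects the most positive sample (P1-style peak);
    polarity=-1 selects the most negative sample (N1-style peak).
    """
    start, end = window
    in_window = [(t, a) for t, a in zip(times_ms, amplitudes) if start <= t <= end]
    if not in_window:
        raise ValueError("no samples inside the analysis window")
    return max(in_window, key=lambda sample: polarity * sample[1])


# Toy target-locked waveform in µV (not study data), sampled every 50 ms.
times = [0, 50, 100, 150, 200, 250]
wave = [0.0, 1.0, 3.5, 2.0, -4.0, -1.0]

p1 = find_peak(times, wave, (80, 160))                # hypothetical P1 window
n1 = find_peak(times, wave, (115, 205), polarity=-1)  # N1 window from the footnote
print(p1, n1)
```

Applied separately to congruent and incongruent averages at PO7/PO8, the difference between the two peak amplitudes would give a P1 congruency effect of the kind analyzed above.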

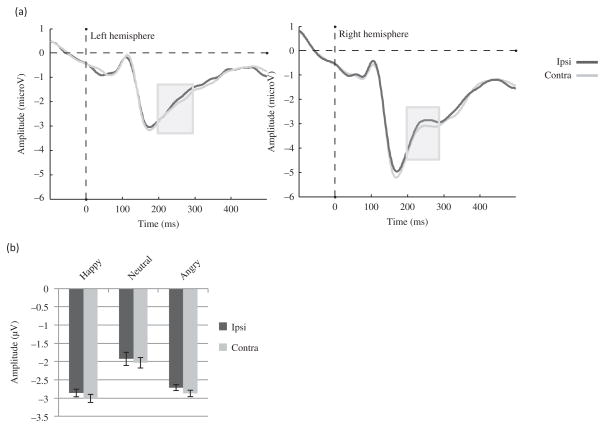

ERPs to the cue

Early directing attention negativity (EDAN)

A main effect of Hemisphere was found (F (1, 22) = 6.93, MSE = 11.18, p = .01, η2 = .24) with larger amplitudes in the right than in the left hemisphere. A main effect of Gaze laterality was also found (F (1, 22) = 9.25, MSE = .18, p < .01, η2 = .27), such that the amplitude was more negative for contralateral gaze when compared with ipsilateral gaze direction (Figure 8(a) and (b)). In addition, there was a main effect of Emotion (F (1.25, 27.42) = 12.87, MSE = 1.76, p < .01, η2 = .37), such that happy and angry faces led to more negative amplitudes than neutral faces as shown in Figure 8(b) (p < .01 for both). No significant interaction between Emotion and Gaze laterality was found (F (2, 44) = .28, MSE = .08, p = .63, η2 = .02).

Figure 8.

EDAN component to the face cue in Experiment 2. (a) Effect of Gaze laterality on the group ERP waveforms (averaged across emotions and across electrodes). The gray zone marks the time limits of the analysis (200–300 ms) and (b) group amplitudes for contralateral and ipsilateral gaze directions averaged across emotions and electrodes between 200 and 300 ms.

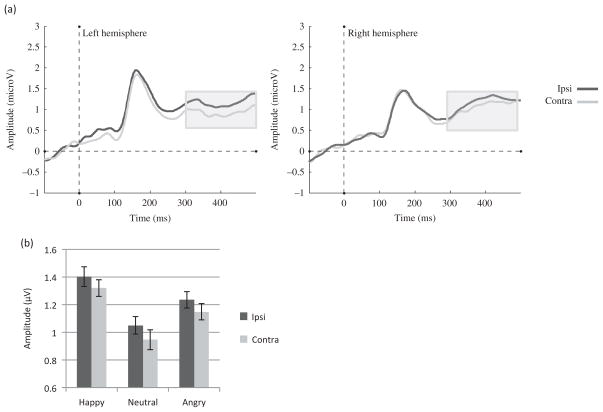

Anterior directing attention negativity (ADAN)

We found a main effect of Gaze laterality (F (1, 22) = 7.07, MSE = .13, p = .04, η2 = .17): amplitude was less positive for the contralateral than for the ipsilateral gaze direction, as shown in Figure 9(a) and (b). In addition, there was a main effect of Emotion (F (2, 44) = 6.19, MSE = .49, p < .01, η2 = .22). As seen in Figure 9(b), happy faces led to larger amplitudes than neutral faces (p < .01). No Emotion by Gaze laterality interaction was found (F (2, 44) = .03, MSE = .10, p = .97, η2 < .01).

Figure 9.

ADAN component to the face cue in Experiment 2. (a) Effect of Gaze laterality on the average ERP waveform (averaged across emotions and across electrodes) and (b) group amplitudes for contralateral and ipsilateral gaze directions averaged across emotions and electrodes between 300 and 500 ms.

Summary

The classic GOE was found and was larger for targets preceded by angry faces than for those preceded by neutral or happy faces. A congruency effect was found on P1 amplitude, and this effect showed a left-lateralized enhancement when the target was preceded by happy faces. At cue presentation, amplitudes were more negative when the gaze was directed toward the contralateral than toward the ipsilateral hemifield. This occurred at early latencies over posterior sites (EDAN) and at later latencies over anterior sites (ADAN). Larger amplitudes were also seen for emotional than for neutral faces between 200 and 500 ms, although the effect was weaker between 300 and 500 ms. However, no Gaze laterality by Emotion interaction was found for either of these two components.

DISCUSSION

Both RTs and scalp ERPs were recorded in two gaze cuing experiments involving facial expressions. We found that attention orienting was enhanced at gazed-at locations and that the size of this enhancement varied with the emotion expressed by the face cue. These behavioral and electrophysiological results are discussed in turn in the following sections.

GOE modulation by emotions

Our first goal was to establish the impact of anger, happiness, fear, and surprise on attention orienting in the general, non-anxious population. Using dynamic stimuli, a localization task and a 500 ms SOA, we observed faster RTs for the congruent compared to the incongruent conditions. This classic GOE (see Frischen et al., 2007 for a review) reflects the enhanced spatial attention allocation at the gazed-at location. Better accuracy was also found for congruent than for incongruent trials, as previously reported (e.g., Graham et al., 2010). Most importantly, this GOE was enlarged when the cue displayed a fearful or surprised rather than a neutral expression and when it displayed an angry rather than a happy or neutral expression. This GOE enhancement was driven by faster RTs for those emotions compared to neutral expressions in the congruent trials.

Numerous studies investigating the modulation of the GOE by emotional faces have focused on fear, given the intuitive advantage conferred by threat detection. Fast orienting of attention toward the direction of another’s gaze is expected regardless of facial expression. Orienting toward an object that elicits fear should be even faster, however, because the object being looked at is likely to be a threat. In accordance with this idea and with previous studies, we found an increased GOE for fearful compared to neutral faces (Fox et al., 2007; Graham et al., 2010; Mathews et al., 2003; Neath et al., 2013; Tipples, 2006). As outlined in the introduction, some studies failed to find such a GOE increase for fearful compared to neutral faces. These failures could be attributed to an SOA that was too short (e.g., Bayless et al., 2011; Galfano et al., 2011), a static rather than a dynamic cue (e.g., Hietanen & Leppänen, 2003), or a discrimination rather than a localization task (e.g., Mathews et al., 2003, in low-anxious participants; Holmes et al., 2010).

Surprised expressions have rarely been investigated in gaze cuing experiments. One study reported a larger GOE for surprised than for angry and happy expressions, but not than for neutral expressions (Bayless et al., 2011), possibly because of its short SOA (200 ms). In accordance with our results, a recent study reported a larger GOE for surprised than for neutral faces (Neath et al., 2013). Interestingly, the magnitude of the GOE was similar for surprise and fear, as also found previously (Bayless et al., 2011; Neath et al., 2013). Both fearful and surprised faces are characterized by a larger visible sclera, which may make gaze changes more salient and contribute to the increase in GOE (Bayless et al., 2011; Tipples, 2006). As with fear, rapid orienting toward an object looked at by a surprised face could also be highly beneficial for survival. Surprised faces signal the presence of a novel object but remain ambiguous regarding its valence. In the real world, surprise is transitory and is followed by another emotion (Fontaine et al., 2007). Given that this subsequent emotion is uncertain, it could be advantageous for the viewer to orient quickly toward the object eliciting surprise in order to determine whether it is dangerous. Alternatively, the GOE enhancement with surprise could be due to its negative valence in the context of fear. Indeed, surprise is perceived negatively when presented alongside negative emotions (Neta & Whalen, 2010). Relative rather than absolute valence might be important for the emotional modulation of the GOE, an idea that future studies will have to test.

Tiedens (2001) suggested that anger displays are used by expressers who wish to be recognized as legitimate leaders, since power is conferred on angry individuals. It could thus be advantageous to attend quickly to the same object as an angry individual, to learn what to avoid and thereby prevent conflict with the powerful expresser. Previous gaze-orienting studies failed to show an enhancement of the GOE with anger (e.g., Bayless et al., 2011; Fox et al., 2007; Hietanen & Leppänen, 2003). In some cases, this null result could be explained by an SOA that was too short (e.g., Bayless et al., 2011). In other studies (Bayless et al., 2011; Fox et al., 2007; Hietanen & Leppänen, 2003 [Exp.6]), fearful expressions were also present in the design. Localizing a conflict could be beneficial, but it might be less so than localizing a danger. As a result, the effect of anger on the GOE could have been masked in experiments that included fearful faces. One study that did not include fearful faces did report an increase of the GOE with anger compared with neutral and happy faces, but only in high-anxious participants (Holmes et al., 2006). The lack of effect in low-anxious individuals in that study might be due to its use of a discrimination task. In Exp.2, we used a localization task and a sufficiently long SOA, with no fearful faces in the design. Under these conditions, non-anxious participants showed a GOE enhancement for angry relative to happy and neutral faces. Thus, like fear and surprise, anger can also enhance spatial attention orienting. Future studies will need to determine whether this enhancement is due to the task, the absence of fearful faces in the design, the SOA, or all of these factors combined.

In contrast, happiness has not been found to modulate the GOE in any study, including the present one. From an evolutionary standpoint, there is no advantage in orienting rapidly toward an object eliciting joy, as such an object is unlikely to be crucial for the observer’s survival.

In addition to the evolutionary relevance and relative valence of an emotion, eye sclera size has been linked to the modulation of the GOE by emotions. Visible sclera is larger in fearful and surprised faces than in neutral faces (Bayless et al., 2011; Tipples, 2006). However, one study using faces similar to those used here found that sclera size was also larger for neutral than for happy and angry faces (Bayless et al., 2011). Because we found a larger GOE for angry than for neutral faces, sclera size is unlikely to be the critical factor in our results.

The extent of apparent motion also differs depending on the emotion expressed by the face cue. In the emotional conditions, neutral faces with straight gaze changed to emotional faces with averted gaze, inducing apparent movement of both the gaze and the rest of the face. Even when there was no gaze shift (straight-gaze condition), the rest of the face moved. In contrast, in the neutral expression condition, neutral faces remained neutral. They therefore showed less apparent motion than emotional faces in the averted-gaze condition and none in the straight-gaze condition. However, movement did not seem to be a critical factor in eliciting the GOE here, as happy expressions, which also contained movement, did not enhance the GOE compared to neutral faces. The straight-gaze condition is less clear: all emotions shortened RTs to targets compared to neutral faces, so it is impossible to tell whether emotional content or movement drives this speed-up. Future studies using face inversion, which preserves movement but disrupts emotional processing, could help shed more light on this issue.

Importantly, this GOE enhancement with fearful, surprised, and angry facial expressions was found in the general, non-anxious population. Previous studies showed that the GOE enhancement for negative emotions such as fear or anger depended on the anxiety or fearfulness of the participants (Fox et al., 2007; Holmes et al., 2006; Mathews et al., 2003; Putman et al., 2006; Tipples, 2006). In contrast, the present study shows a modulation of the GOE by fear, anger, and surprise in non-anxious participants. This replicates recent findings (Neath et al., 2013) and extends them, for the first time, to angry faces. Thus, the emotional modulation of the GOE can be found in the general population when dynamic stimuli and a localization task are used.

Finally, the GOE enhancement for the emotional faces reported here was due to faster RTs in the congruent condition rather than longer RTs in the incongruent condition, reflecting a facilitation of gaze-oriented attention for these emotions. Overall, these findings suggest that certain emotions boost gaze-oriented attention and that the degree to which an emotion influences spatial attention depends on its relative valence and evolutionary relevance.

ERPs to targets

Our second main goal was to find neural correlates of the modulation of gaze orienting by emotions using ERPs. P1, a component influenced by attention (Mangun, 1995), was investigated. In accordance with previous studies, P1 showed larger amplitudes for congruent than for incongruent trials (Hietanen et al., 2008; Schuller & Rossion, 2001, 2004, 2005), which reflected enhanced spatial attention allocation to gazed-at targets compared to non-gazed-at targets.

Planned comparisons revealed that this congruency effect was restricted to targets following surprised and fearful faces in Exp.1 and to right-sided targets following happy faces in Exp.2. This is the first gaze cuing study showing a modulation of the congruency effect on P1 amplitude by emotion. Previous studies using shorter SOAs failed to observe this effect, both on P1 amplitude and at the behavioral level (Fichtenholtz et al., 2007; Galfano et al., 2011). This suggests that emotional faces require longer SOAs to influence the spatial attention network and the processing of the target, in accordance with previous research (Graham et al., 2010). Further supporting the idea that the integration of gaze and emotion takes time, P1 was delayed in the congruent compared to the incongruent condition in Exp.1. In contrast, previous studies using only neutral faces reported a shorter P1 latency in the congruent than in the incongruent condition (Hietanen et al., 2008; Schuller & Rossion, 2001, 2004, 2005), and other studies did not analyze P1 latency (Fichtenholtz et al., 2007, 2009; Galfano et al., 2011; Holmes et al., 2010).

In the current study, a congruency effect on P1 was present for fearful and surprised faces but not for neutral faces. This suggests that spatial attention resources were preferentially allocated to targets following these emotional faces, likely because these expressions signal a potential threat to the observer. The lack of a congruency effect on P1 amplitude for targets following neutral faces contradicts previous findings (Hietanen et al., 2008; Schuller & Rossion, 2001, 2004, 2005). It nevertheless makes sense in this particular emotional context, as objects looked at by a neutral face are likely to be less important than objects looked at by a fearful or surprised face.

In addition, while we observed an enhancement of the GOE for angry relative to happy and neutral faces, the congruency effect on P1 was only enhanced for right targets preceded by happy faces (i.e., only in the left hemisphere). This might reflect the anticipation of a positive item, which has been linked to left hemispheric activation (Davidson & Irwin, 1999). Anticipation could also explain why anger did not modulate the congruency effect on P1 amplitude, as in this case the outcome is ambiguous (Carver & Harmon-Jones, 2009). Although not significant, there was a tendency for the P1 congruency effect to be localized to the right hemisphere for targets following fearful and surprised faces (Figure 3(a)), which is also consistent with the hypothesis of a lateralized P1 congruency effect linked to the anticipation of the outcome depending on the valence of the face cue.

Alternatively, this emotional modulation of the P1 congruency effect could reflect later stages of emotional processing of the cue interacting with the visual processing of the target. Indeed, it has been argued that emotions whose diagnostic feature lies in the bottom half of the face (such as the mouth for happiness) preferentially activate the left hemisphere. Conversely, emotions whose diagnostic feature lies in the top half of the face (such as the eyes for fear and surprise) preferentially activate the right hemisphere (Prodan, Orbelo, Testa, & Ross, 2001).

Overall, the attention effect on early visual processing of the target was enhanced for surprise and fear, and for happiness in the left hemisphere. This enhancement may reflect contamination by later processing stages of the preceding facial expression, or the anticipated valence of the target. Future studies will have to disentangle these hypotheses.

ERPs to the gaze cues

Our final goal was to establish the temporal stages involved in the emotional modulation of gaze-oriented attention during cue presentation. It has been suggested that the processes at play in gaze-oriented attention are similar to those involved in arrow-oriented attention (e.g., Brignani, Guzzon, Marzi, & Miniussi, 2009). In arrow cuing paradigms, the EDAN and ADAN components index two stages of attention: orienting toward the cued location and holding attention at that location, respectively. We therefore expected to observe both components. The present study is the first to report both EDAN and ADAN components in a gaze cuing paradigm. Hietanen et al. (2008) found EDAN and ADAN with arrow but not gaze cues, while Holmes et al. (2010) found no evidence for EDAN with gazing faces but did find an ADAN component. These discrepant results could be due to differences in experimental parameters. We used face photographs presented dynamically, whereas Hietanen et al. (2008) used static schematic face drawings. In addition, we used a target localization task rather than the discrimination task used by Holmes et al. (2010) or the detection task used by Hietanen et al. (2008). For neutral faces, the GOE has been shown regardless of the task. However, discrimination tasks yield smaller congruency effects than detection or localization tasks, owing to their higher cognitive demands (Friesen & Kingstone, 1998). Our choice of task was based on the idea that, in real life, we most often use eye gaze to localize the source of an emotion before discriminating it. Our results are consistent with studies suggesting that attention orienting by gaze and by arrows may recruit similar neural networks (e.g., Brignani et al., 2009). To be conclusive, however, future studies will need to compare EDAN and ADAN components to arrow and gaze cues directly, within the same paradigm. It is also important to note that the Gaze laterality effect was larger for ADAN than for EDAN. This suggests that although attention orienting starts around 200 ms after the gaze shift (EDAN), it is maximal between 300 and 500 ms of cue processing (ADAN).

As expected, EDAN was not modulated by emotion, given that it occurs between 200 and 300 ms after gaze cue onset, whereas emotion and gaze cues seem to require more than 300 ms to be fully integrated. In addition, in accordance with Holmes et al. (2010), we did not observe an emotional modulation of ADAN, suggesting that emotion does not modulate gaze-oriented attention before 500 ms after gaze onset.

Incidentally, we found that for faces with averted gaze, amplitudes at posterior sites were larger for emotional expressions than for neutral faces between 200 and 300 ms (EDAN). This effect is in line with the literature, which reports an enhancement of ERP components by all emotions, regardless of valence, at these latencies. This enhancement likely reflects general emotional arousal (see Vuilleumier & Pourtois, 2007, for a review). At anterior sites, between 300 and 500 ms (ADAN), amplitudes were also enhanced, albeit less strongly, for surprised and happy faces relative to neutral faces. This likely reflects more complex emotional appraisal (Vuilleumier & Pourtois, 2007).

Temporal dynamics of gaze-oriented attention and its modulation by emotion

Overall, we showed that in a gaze cuing paradigm, just as in arrow cuing studies, the EDAN and ADAN components can index the orienting of attention by gaze and the holding of attention at the gazed-at location. The first response to emotions was seen between 200 and 300 ms after face cue onset (EDAN), when attention orienting processes had just begun. Between 300 and 500 ms after cue onset (ADAN), emotion processing continued while attention orienting was fully expressed. Thus, both emotion and gaze-oriented attention processes occurred during cue presentation. However, the emotional effect was larger earlier on while attention orienting was maximal later on, suggesting a slight temporal offset between these processes.

The integration of gaze and emotion occurred even later. Emotional expressions began to influence gaze orienting only at target presentation. This is shown by the modulations of the congruency effect on P1 for targets preceded by happy, surprised, and fearful expressions. P1 occurred, on average, around 100 ms after target onset, and the target appeared 500 ms after the cue. Emotional modulation of attention therefore started around 600 ms after cue onset. However, the emotional modulation observed at this stage was weak and lateralized. It also differed from the emotional modulation of gaze-oriented attention observed at the behavioral level, where the GOE was larger for angry, fearful, and surprised faces than for neutral faces. The emotional modulation of gaze-oriented attention thus occurred between the P1 and the motor response. Given an average response time of about 300 ms, this corresponds to 600–800 ms after cue onset, and the modulation varied as a function of emotion. Compared to neutral faces, fear and surprise increased attention to the target (P1) and increased the behavioral GOE. In contrast, happiness increased attention to the target but did not increase the behavioral GOE, whereas anger did not modulate attention to the target but did increase the GOE. We thus conclude that fear and surprise modulate attention processes earlier than anger does. We also suggest that other processes occur between the target-triggered P1 and the behavioral response. These processes would account for the emotional modulation of the GOE with anger and its absence with happiness. Alternatively, emotions may start modulating attention processes before target onset, but in incremental steps that are individually too weak to be picked up by ERP components such as EDAN or ADAN. In this view, the behavioral response would result from the integration of these multiple neural processes occurring between cue presentation and the motor response.
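The 600–800 ms window follows from simple addition. It takes the 500 ms SOA stated in the GOE discussion, a P1 peak about 100 ms after target onset, and a mean response time of about 300 ms. All latencies are measured from cue onset:

```latex
\begin{aligned}
t_{\mathrm{P1}} &\approx \mathrm{SOA} + 100\ \mathrm{ms} = 500 + 100 = 600\ \mathrm{ms},\\
t_{\mathrm{response}} &\approx \mathrm{SOA} + \overline{\mathrm{RT}} \approx 500 + 300 = 800\ \mathrm{ms}.
\end{aligned}
```

Because both terms are approximate averages, the window should be read as a rough bracket rather than precise bounds.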

CONCLUSIONS

In the present ERP study involving a dynamic display of facial expressions and a target localization task, we showed that the gaze-orienting effect was enhanced for fearful and surprised faces compared to neutral faces and for angry faces compared to neutral and happy faces, in a sample of non-anxious individuals. We also presented evidence for an emotional modulation of the gaze-congruency effect on P1 ERP component recorded to the target. Finally, we were able to find ERP correlates of spatial attention orienting during gaze cue presentation (EDAN and ADAN components), although at these stages, attention was not yet modulated by the emotion of the face cue. Modulations of gaze-oriented attention by emotions arose later, starting weakly on P1 and being seen more clearly on the GOE. These effects were different depending on the emotion, with seemingly earlier modulations for fear and surprise than for anger. Together, these findings suggest that the modulation of spatial attention with emotion in gaze cuing paradigms is a rather late process, occurring between 600 and 800 ms after face cue onset. Future studies should extend this research to broaden our understanding of the mechanisms at play during integration of gaze and emotion cues.

Acknowledgments

This study was supported by the Canadian Institutes of Health Research (CIHR), the Canada Foundation for Innovation (CFI), the Ontario Research Fund (ORF), and the Canada Research Chair (CRC) program to RJI.

Footnotes

Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. Please contact Nim Tottenham at tott0006@tc.umn.edu for more information concerning the stimulus set.

An initial behavioral analysis revealed no effect of target side (left or right), so left and right targets were averaged together.

[…] factor and Experiment Order as a between-subject factor.

The N1 peak was defined as the point of minimum amplitude between 115 and 205 ms after target onset. However, N1 was generally broad, and a clear peak could not be identified in more than half of the participants. The N1 analysis was therefore dropped.

Note that, by definition, EDAN and ADAN are calculated for averted gaze trials only.

References

- Baron-Cohen S. Mindblindness: An essay on autism and theory of mind. Cambridge, MA: The MIT Press; 1995.

- Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The “Reading the Mind in the Eyes” test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology and Psychiatry. 2001;42(2):241–251.

- Bayless SJ, Glover M, Taylor MJ, Itier RJ. Is it in the eyes? Dissociating the role of emotion and perceptual features of emotionally expressive faces in modulating orienting to eye gaze. Visual Cognition. 2011;19(4):483–510. doi: 10.1080/13506285.2011.552895.

- Bayliss AP, di Pellegrino G, Tipper SP. Sex differences in eye gaze and symbolic cuing of attention. The Quarterly Journal of Experimental Psychology. 2005;58A(4):631–650. doi: 10.1080/02724980443000124.

- Brignani D, Guzzon D, Marzi CA, Miniussi C. Attentional orienting induced by arrows and eye-gaze compared with an endogenous cue. Neuropsychologia. 2009;47(2):370–381. doi: 10.1016/j.neuropsychologia.2008.09.011.

- Carver CS, Harmon-Jones E. Anger is an approach-related affect: Evidence and implications. Psychological Bulletin. 2009;135(2):183. doi: 10.1037/a0013965.

- Davidson RJ, Irwin W. The functional neuroanatomy of emotion and affective style. Trends in Cognitive Sciences. 1999;3(1):11–21. doi: 10.1016/s1364-6613(98)01265-0.

- Delorme A, Makeig S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods. 2004;134(1):9–21. doi: 10.1016/j.jneumeth.2003.10.009. [DOI] [PubMed] [Google Scholar]

- Eimer MM. Uninformative symbolic cues may bias visual-spatial attention: Behavioral and electrophysiological evidence. Biological Psychology. 1997;46(1):67–71. doi: 10.1016/s0301-0511(97)05254-x. [DOI] [PubMed] [Google Scholar]

- Fichtenholtz HM, Hopfinger JB, Graham R, Detwiler JM, LaBar KS. Happy and fearful emotion in cues and targets modulate event-related potential indices of gaze-directed attentional orienting. Social Cognitive and Affective Neuroscience. 2007;2(4):323–333. doi: 10.1093/scan/nsm026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fichtenholtz HM, Hopfinger JB, Graham R, Detwiler JM, LaBar KS. Event-related potentials reveal temporal staging of dynamic facial expression and gaze shift effects on attentional orienting. Social Neuroscience. 2009;4(4):317–331. doi: 10.1080/17470910902809487.

- Fontaine JRJ, Scherer KR, Roesch EB, Ellsworth PC. The world of emotions is not two-dimensional. Psychological Science. 2007;18(12):1050–1057. doi: 10.1111/j.1467-9280.2007.02024.x.

- Fox E, Mathews A, Calder AJ, Yiend J. Anxiety and sensitivity to gaze direction in emotionally expressive faces. Emotion. 2007;7(3):478–486. doi: 10.1037/1528-3542.7.3.478.

- Friesen CK, Kingstone A. The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin and Review. 1998;5(3):490–495.

- Frischen A, Bayliss AP, Tipper SP. Gaze cuing of attention: Visual attention, social cognition, and individual differences. Psychological Bulletin. 2007;133(4):694–724. doi: 10.1037/0033-2909.133.4.694.

- Galfano G, Sarlo M, Sassi F, Munafò M, Fuentes LJ, Umiltà C. Reorienting of spatial attention in gaze cuing is reflected in N2pc. Social Neuroscience. 2011;6(3):257–269. doi: 10.1080/17470919.2010.515722.

- George N, Driver J, Dolan RJ. Seen gaze-direction modulates fusiform activity and its coupling with other brain areas during face processing. NeuroImage. 2001;13(6):1102–1112. doi: 10.1006/nimg.2001.0769.

- Georgiou GA, Bleakley C, Hayward J, Russo R, Dutton K, Eltiti S, Fox E. Focusing on fear: Attentional disengagement from emotional faces. Visual Cognition. 2005;12(1):145–158. doi: 10.1080/13506280444000076.

- Gosselin P, Simard J. Children’s knowledge of facial expressions of emotions: Distinguishing fear and surprise. The Journal of Genetic Psychology. 1999;160:181–193.

- Graham R, Friesen CK, Fichtenholtz HM, LaBar KS. Modulation of reflexive orienting to gaze direction by facial expressions. Visual Cognition. 2010;18(3):331–368.

- Hietanen JK, Leppänen JM. Does facial expression affect attention orienting by gaze direction cues? Journal of Experimental Psychology: Human Perception and Performance. 2003;29(6):1228–1243. doi: 10.1037/0096-1523.29.6.1228.

- Hietanen JK, Leppänen JM, Nummenmaa L, Astikainen P. Visuospatial attention shifts by gaze and arrow cues: An ERP study. Brain Research. 2008;1215:123–136. doi: 10.1016/j.brainres.2008.03.091.

- Holmes A, Mogg K, Garcia LM, Bradley BP. Neural activity associated with attention orienting triggered by gaze cues: A study of lateralized ERPs. Social Neuroscience. 2010;5(3):285–295. doi: 10.1080/17470910903422819.

- Holmes A, Richards A, Green S. Anxiety and sensitivity to eye gaze in emotional faces. Brain and Cognition. 2006;60(3):282–294. doi: 10.1016/j.bandc.2005.05.002.

- Mangun GR. Neural mechanisms of visual selective attention. Psychophysiology. 1995;32(1):4–18. doi: 10.1111/j.1469-8986.1995.tb03400.x.

- Mangun GR, Hillyard SA. Modulations of sensory-evoked brain potentials indicate changes in perceptual processing during visual-spatial priming. Journal of Experimental Psychology: Human Perception and Performance. 1991;17(4):1057–1074. doi: 10.1037//0096-1523.17.4.1057.

- Mathews A, Fox E, Yiend J, Calder A. The face of fear: Effects of eye gaze and emotion on visual attention. Visual Cognition. 2003;10(7):823–835. doi: 10.1080/13506280344000095.

- Neath K, Nilsen ES, Gittsovich K, Itier RJ. Attention orienting by gaze and facial expressions across development. Emotion. 2013;13(3):397–408. doi: 10.1037/a0030463.

- Neta M, Whalen PJ. The primacy of negative interpretations when resolving the valence of ambiguous facial expressions. Psychological Science. 2010;21(7):901–907. doi: 10.1177/0956797610373934.

- Nobre AC, Sebestyen GN, Miniussi C. The dynamics of shifting visuospatial attention revealed by event-related potentials. Neuropsychologia. 2000;38(7):964–974. doi: 10.1016/s0028-3932(00)00015-4.

- Pecchinenda A, Pes M, Ferlazzo F, Zoccolotti P. The combined effect of gaze direction and facial expression on cuing spatial attention. Emotion. 2008;8(5):628–634. doi: 10.1037/a0013437.

- Posner MI. Orienting of attention. Quarterly Journal of Experimental Psychology. 1980;32(1):3–25. doi: 10.1080/00335558008248231.

- Praamstra P, Boutsen L, Humphreys GW. Frontoparietal control of spatial attention and motor intention in human EEG. Journal of Neurophysiology. 2005;94(1):764–774. doi: 10.1152/jn.01052.2004.

- Prodan CI, Orbelo DM, Testa JA, Ross ED. Hemispheric differences in recognizing upper and lower facial displays of emotion. Cognitive and Behavioral Neurology. 2001;14(4):206–212.

- Putman P, Hermans E, Van Honk J. Anxiety meets fear in perception of dynamic expressive gaze. Emotion. 2006;6(1):94–102. doi: 10.1037/1528-3542.6.1.94.

- Ree MJ, French D, MacLeod C, Locke V. Distinguishing cognitive and somatic dimensions of state and trait anxiety: Development and validation of the State-Trait Inventory for Cognitive and Somatic Anxiety (STICSA). Behavioural and Cognitive Psychotherapy. 2008;36(3):313.

- Sato W, Yoshikawa S. The dynamic aspects of emotional facial expressions. Cognition and Emotion. 2004;18(5):701–710.

- Schuller AM, Rossion B. Spatial attention triggered by eye gaze increases and speeds up early visual activity. NeuroReport. 2001;12(11):2381–2386. doi: 10.1097/00001756-200108080-00019.

- Schuller AM, Rossion B. Perception of static eye gaze direction facilitates subsequent early visual processing. Clinical Neurophysiology. 2004;115(5):1161–1168. doi: 10.1016/j.clinph.2003.12.022.

- Schuller AM, Rossion B. Spatial attention triggered by eye gaze enhances and speeds up visual processing in upper and lower visual fields beyond early striate visual processing. Clinical Neurophysiology. 2005;116(11):2565–2576. doi: 10.1016/j.clinph.2005.07.021.

- Senju A, Hasegawa T. Direct gaze captures visuospatial attention. Visual Cognition. 2005;12:127–144.

- Simpson GV, Dale CL, Luks TL, Miller WL, Ritter W, Foxe JJ. Rapid targeting followed by sustained deployment of visual spatial attention. NeuroReport. 2006;17(15):1595–1599. doi: 10.1097/01.wnr.0000236858.78339.52.

- Tiedens LZ. Anger and advancement versus sadness and subjugation: The effect of negative emotion expressions on social status conferral. Journal of Personality and Social Psychology. 2001;80(1):86–94.

- Tipples J. Fear and fearfulness potentiate automatic orienting to eye gaze. Cognition and Emotion. 2006;20(2):309–320.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Nelson C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168(3):242–249. doi: 10.1016/j.psychres.2008.05.006.

- Van Selst M, Jolicoeur P. A solution to the effect of sample size on outlier elimination. Quarterly Journal of Experimental Psychology. 1994;47A:631–650.

- Van Velzen J, Eimer M. Early posterior ERP components do not reflect the control of attentional shifts towards expected peripheral events. Psychophysiology. 2003;40:827–831. doi: 10.1111/1469-8986.00083.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia. 2007;45(1):174–194. doi: 10.1016/j.neuropsychologia.2006.06.003.

- Willenbockel V, Sadr J, Fiset D, Horne GO, Gosselin F, Tanaka JW. Controlling low-level image properties: The SHINE toolbox. Behavior Research Methods. 2010;42(3):671–684. doi: 10.3758/BRM.42.3.671.

- Woodbury-Smith MR, Robinson J, Wheelwright S, Baron-Cohen S. Screening adults for Asperger syndrome using the AQ: A preliminary study of its diagnostic validity in clinical practice. Journal of Autism and Developmental Disorders. 2005;35(3):331–335. doi: 10.1007/s10803-005-3300-7.