Abstract

Objective: This project aimed to develop an open‐access website providing adaptable resources to facilitate best practices for multisite research from initiation to closeout.

Methods: A web‐based assessment was sent to the leadership of the Clinical and Translational Science Award (CTSA) Community Engagement Key Functions Committee (n = 38) and the CTSA‐affiliated Primary Care Practice‐based Research Networks (PBRN, n = 55). Respondents rated the benefits of and barriers to multisite research and the utility of available resources, and indicated their level of interest in unavailable resources. Then, existing research resources were evaluated for relevance to multisite research, adaptability to other projects, and source credibility.

Results: Fifty‐five (59%) of invited participants completed the survey. Top perceived benefits of multisite research were the ability to conduct community‐relevant research through academic–community partnerships (34%) and accelerating translation of research into practice (31%). Top perceived barriers were lack of research infrastructure to support PBRNs and community partners (31%) and inadequate funding to support multisite collaborations (26%). Over 200 resources were evaluated, of which 120 unique resources were included in the website.

Conclusion: The PRIMER Research Toolkit (http://www.researchtoolkit.org) provides an array of peer‐reviewed resources to facilitate translational research for the conduct of multisite studies within PBRNs and community‐based organizations. Clin Trans Sci 2011; Volume 4: 259–265

Keywords: practice‐based research network, clinical research, resource

Introduction

Multisite research involves the collaboration of more than one entity—clinical practice, research network, or community organization—to conduct a study using the same overall research plan at different local, regional, or national sites. Multisite research is employed when a study requires a diverse set of participants (e.g., different ethnic or socioeconomic groups); geographical representation from different regions, states, or countries; or a large sample size that cannot be obtained from a single location. A majority of multisite research is practice based and occurs in highly specialized settings (i.e., academic medical centers). Increasingly, there are efforts underway to create more community‐relevant research projects that address important health priorities for the community‐based practices or community‐based organizations (CBO). Practice‐based and CBO research activities are similar in that they both involve the partnership of an academic investigator, and the research is conducted in a real‐world environment. The difference between the two types of research is that the setting of practice‐based research is the community‐based clinics and the setting of CBO research is the neighborhoods, churches, and other community organizations.

The creation of a national federation of Clinical and Translational Science Award (CTSA) institutions yields important opportunities for altering the pace and culture of research. Indeed, the United States is fortunate to have multiple networks of researchers and clinicians poised to accelerate the research enterprise and the challenging process of translation, that is, moving research results into daily practice. To date, however, the national research enterprise has functioned in a largely decentralized fashion, resulting in duplicative or undocumented processes and impeding the diffusion of established best practices for multisite research in community‐based settings. The absence of cohesive processes can have a cascading effect on participant recruitment and retention, external validity, and even inherent satisfaction among clinical researchers. To remedy this gap, many longstanding networks and other organizations have begun capturing and documenting proven strategies to streamline and standardize various aspects of the research process. 1–5

The Partnership‐driven Resources to Improve and Enhance Research (PRIMER) project, an administrative supplement of the CTSA Community Engagement Key Functions Committee (CE KFC), tapped into the collective expertise from two research network communities, the health maintenance organization (HMO) Research Network (HMORN) and the Practice‐Based Research Network (PBRN) community. Both groups have amassed decades of experience in population‐based clinical and health services research. The goal of the PRIMER project was to compile, organize, and disseminate a compendium of tangible resources to facilitate multisite research from initiation to closeout. Herein, we describe the assessment and subsequent process whereby the PRIMER Research Toolkit was launched on an open‐access website (http://www.ResearchToolkit.org).

Methods

Online survey

The purpose of the survey was to inform the development of the Research Toolkit by asking CTSA and PBRN researchers about critical research resources that were either valued by or of interest to them, with an emphasis on multisite research. A link to the web‐based survey was sent to the 93 CTSA‐affiliated research leaders who comprised the study‐sampling frame. These individuals were identified from two groups: members of the CTSA CE KFC and directors/research directors of PBRNs affiliated with a CTSA. The survey was approved by the institutional review board (IRB) of the Group Health Research Institute.

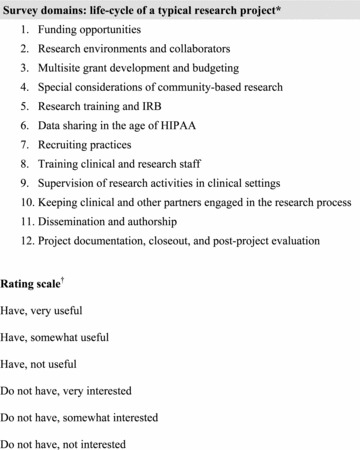

The primary content of the survey was organized around 12 topical areas associated with the life cycle of a typical research project ( Figure 1 ) and was based on the HMORN Collaboration Toolkit 4 and the PBRN Best Practices Self‐Assessment Checklist (AHRQ R01 HS016713–02). We created a list of potential tools, templates, policies, and procedures that could be helpful to each of the 12 life‐cycle areas. We asked respondents to select one of the following classifications for each potential resource: (1) have this, very useful; (2) have this, somewhat useful; (3) have this, not useful; (4) do not have this, very interested; (5) do not have this, somewhat interested; and (6) do not have this, not interested. For each of the 12 areas, we provided respondents with a text box in which to comment or suggest other resources relevant to the area. The survey also included questions about the respondents’ affiliations and research settings and asked the respondents to specify their view of the two greatest benefits of and barriers to multisite research involving community‐academic partners.

Figure 1.

Survey domains and rating scale. *Each domain drilled down to include three to six related subtopics. †Respondents were asked to rate each subtopic using one of these six scale values. HIPAA = Health Insurance Portability and Accountability Act; IRB = institutional review board.

From January to February 2009, we pilot tested the web‐based survey instrument to refine its content and flow with nine individuals from CTSA CE cores, PBRNs, the HMORN, and the National Center for Research Resources, none of whom qualified for the survey sample. Following these revisions, we emailed the survey link in March 2009 to two groups: 38 voting members of the CE KFC and 55 directors of PBRNs affiliated with funded CTSA programs. The latter were identified via contact directories compiled by the Community Academic Partnerships Project workgroup of the CE KFC and via additional investigative efforts by the study team. Nonresponders received email reminders 1 and 2 weeks after the initial invitation.

Survey response rates varied from 55% of those from CE KFCs to 62% of those from PBRNs, with an overall completion rate of 59%, representing 55 respondents in 34 different CTSAs (out of the 38 CTSAs funded at the time of the survey). We conducted descriptive data analyses.
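The reported response rates can be cross‐checked with simple arithmetic. The sketch below is illustrative only: the invited counts (38 and 55) and the subgroup rates (55% and 62%) come from the text, whereas the subgroup respondent counts are not reported and are inferred here by rounding.

```python
# Invited counts and subgroup response rates as reported in the text.
invited = {"CE KFC": 38, "PBRN": 55}
reported_rate = {"CE KFC": 0.55, "PBRN": 0.62}

# ASSUMPTION: subgroup respondent counts are not reported in the paper;
# they are inferred by rounding invited * reported rate.
inferred_respondents = {
    group: round(invited[group] * reported_rate[group]) for group in invited
}

total_invited = sum(invited.values())
total_respondents = sum(inferred_respondents.values())
overall_rate = total_respondents / total_invited

print(inferred_respondents)
print(f"{total_respondents} of {total_invited} = {overall_rate:.0%}")
```

Under these assumptions, the inferred subgroup counts (21 and 34) sum to the 55 respondents reported, and 55 of 93 rounds to the overall completion rate of 59%.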

Resource guide development

Using the survey data, we prioritized resource types for the development of the PRIMER Research Toolkit. The study team reviewed, compiled, adapted, and catalogued over 200 existing research tools and resources from a variety of sources for inclusion in ResearchToolkit.org. We systematically evaluated each resource for inclusion based on five criteria judged by team members: (1) alignment with PRIMER goals; (2) relevance to multisite research; (3) degree of adaptability to other projects; (4) credibility of source; and (5) nonduplication of other resources. Permission was obtained to include any copyrighted materials. Ultimately, 120 unique resources were included in version 1.0 of ResearchToolkit.org ( Figure 2 ). These are organized into six categories: Building Collaborations, Developing Proposals, Starting Up a Study, Conducting and Managing Projects, Disseminating and Closing Research, and Resources for Training. Individual resources appear in multiple categories when applicable across areas, resulting in 198 total resource citations in the toolkit. Each resource listing contains a brief description of the tool, a source acknowledgment or citation, and the option to link to or download the item. Content may be browsed by the six categories or searched by free text.

Figure 2.

Home page of ResearchToolkit.org.

Additional features of ResearchToolkit.org include links to other resource websites, useful web utilities, our peer‐reviewers’ “top picks,” background on the PRIMER project, help screens, contact information, and a link to an online user feedback form.

Results

Table 1 shows respondents’ perceived benefits of and barriers to multisite research. Subgroup analysis indicated no differences between CE KFC‐ and PBRN‐affiliated respondents, so only the total sample results are presented. The top two perceived benefits to multisite research were the ability to conduct community‐relevant research through bidirectional academic‐community partnerships (34%) and accelerating the translation of research into practice (31%). The top two perceived barriers to multisite research were lack of research infrastructure to support PBRNs and other community partners (31%) and inadequate funding opportunity announcements (FOAs) that support multisite collaborations (26%).

Table 1.

Benefits and barriers to multisite research.*

| Benefits | N | % |
|---|---|---|
| The ability to conduct community‐relevant research through bidirectional academic–community partnerships | 37 | 34 |
| Accelerating the translation of research into practice | 34 | 31 |
| The ability to collect data outside the primary setting | 10 | 9 |
| The ability to recruit the type or number of patients a study needs | 6 | 5 |
| Building trust between researchers and communities | 8 | 7 |
| Increased research capacity | 5 | 5 |
| Other | 5 | 5 |
| Tapping into expertise outside of the primary setting | 4 | 4 |

| Barriers | N | % |
|---|---|---|
| Lack of research infrastructure to support PBRNs and other partners | 34 | 31 |
| Inadequate funding opportunities | 29 | 26 |
| Differing agendas and expectations, or lack of trust | 13 | 12 |
| HIPAA or technical issues | 12 | 11 |
| Challenges in engaging partners in the research process, and maintaining engagement over time | 12 | 11 |
| Lack of information on potential community or academic partners | 3 | 3 |
| Training clinical and research staff on methods and project design | 3 | 3 |
| Other | 1 | 1 |

*Respondents were able to choose two items each from the benefits and barriers listing.

HIPAA = Health Insurance Portability and Accountability Act; PBRN = practice‐based research network.
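The percentages in Table 1 appear to be computed against the total number of possible selections rather than the number of respondents. As a hedged check, the sketch below assumes a denominator of 110 (55 respondents × 2 choices each); this denominator is an inference from the table footnote and is not stated explicitly in the source.

```python
RESPONDENTS = 55
CHOICES_PER_RESPONDENT = 2
# ASSUMPTION: percentages use all possible selections as the denominator.
DENOMINATOR = RESPONDENTS * CHOICES_PER_RESPONDENT  # 110

# (N, reported %) pairs copied from Table 1, in table order.
benefits = [(37, 34), (34, 31), (10, 9), (6, 5), (8, 7), (5, 5), (5, 5), (4, 4)]
barriers = [(34, 31), (29, 26), (13, 12), (12, 11), (12, 11), (3, 3), (3, 3), (1, 1)]


def recomputed_pct(n: int, denominator: int = DENOMINATOR) -> int:
    """Return n as a whole-number percentage of the denominator."""
    return round(100 * n / denominator)


def all_match(rows) -> bool:
    """True if every recomputed percentage equals the reported one."""
    return all(recomputed_pct(n) == reported for n, reported in rows)


print("benefits consistent:", all_match(benefits))
print("barriers consistent:", all_match(barriers))
```

Every reported percentage is reproduced with this denominator, which suggests that a figure such as 34% describes the share of all selections made, not the share of respondents who chose that item.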

Table 2 presents the respondent ratings for the presence and usefulness of various types of research tools, organized into 12 domains. Based on these ratings, we present highlights of an analysis that suggests priority areas for the Research Toolkit and the type and quantity of resources identified for each domain that were placed on the website. These are organized according to the logical life cycle of a research project.

Table 2.

PRIMER online survey results.

| Study phase/resources | Have resource | Somewhat useful | Very useful | Do not have resource | Somewhat interested | Very interested |
|---|---|---|---|---|---|---|
| Funding opportunities | | | | | | |
| Direct links to FOAs relevant to community research | 85% | 48% | 52% | 15% | 13% | 88% |
| List of links to non‐NIH agencies supporting community research (AHRQ, foundations, etc.) | 70% | 62% | 38% | 30% | 6% | 94% |
| Research environments and collaborators | | | | | | |
| Directory of potential research partners by research interest(s) and site | 25% | 69% | 15% | 75% | 50% | 40% |
| Summary tables of patient demographics, organizational characteristics, research capacity, etc., for research partners | 40% | 62% | 33% | 60% | 34% | 56% |
| Central calendar listing upcoming conferences for networking and sharing work | 25% | 38% | 62% | 75% | 44% | 46% |
| Multisite grant development and budgeting | | | | | | |
| Contact directory for grant and budget development contacts at your research partners’ organizations | 53% | 32% | 52% | 47% | 41% | 45% |
| Reimbursement guidelines for clinical practice providers and staff (based on role and study procedures) | 15% | 63% | 38% | 85% | 41% | 48% |
| Tools for grant and/or budget development when multiple institutions are collaborating | 33% | 56% | 39% | 67% | 39% | 58% |
| Special considerations of community‐based research | | | | | | |
| Recommended methods for effective participant recruitment | 59% | 38% | 59% | 41% | 14% | 64% |
| Guide to ethical participant recruitment within clinical practices | 56% | 37% | 60% | 44% | 46% | 42% |
| Resources to improve the readability of patient consent forms and other study materials | 57% | 65% | 32% | 43% | 35% | 57% |
| Cultural competency training resources for researchers | 54% | 76% | 21% | 46% | 40% | 56% |
| Research training & IRB | | | | | | |
| Human subjects and HIPAA training resources | 91% | 49% | 47% | 9% | 40% | 60% |
| Good clinical practices training resources | 70% | 52% | 42% | 30% | 57% | 29% |
| Research management training materials | 56% | 57% | 36% | 44% | 45% | 50% |
| Tools for tracking human subjects, HIPAA, and other types of training for clinic providers and staff | 63% | 50% | 44% | 37% | 53% | 42% |
| Tools for tracking IRB requirements for networks with multiple IRBs | 33% | 50% | 50% | 67% | 31% | 63% |
| Data sharing in the age of HIPAA | | | | | | |
| Strategies for maximizing flexibility and usability of data while ensuring data privacy and security | 38% | 37% | 58% | 62% | 13% | 81% |
| BAA template/sample | 49% | 43% | 48% | 51% | 25% | 67% |
| DUA template/sample | 57% | 43% | 50% | 43% | 14% | 81% |
| Guide to understanding and setting up BAAs | 24% | 36% | 64% | 76% | 34% | 51% |
| Guide to understanding and setting up DUAs | 33% | 56% | 38% | 67% | 21% | 67% |
| Recruiting practices | | | | | | |
| Resources for engaging practices early on (e.g., study design, methods development) | 55% | 46% | 54% | 45% | 13% | 83% |
| List of potential benefits to patients and practices for participation in studies | 53% | 59% | 30% | 47% | 21% | 75% |
| Strategies for approaching clinical practices about study participation | 60% | 48% | 48% | 40% | 33% | 62% |
| Tool for upfront delineation of project versus clinic staff research responsibilities | 31% | 47% | 47% | 69% | 21% | 74% |
| Training clinical and research staff | | | | | | |
| General training resources for study interviewers | 50% | 56% | 40% | 50% | 36% | 56% |
| General training resources for study chart abstractors | 43% | 50% | 45% | 57% | 31% | 58% |
| Tools/guidelines for clinical staff orientation to new studies | 39% | 53% | 47% | 61% | 23% | 63% |
| Overview of typical research coordinator role | 68% | 50% | 50% | 32% | 13% | 56% |
| General research coordinator training or orientation resources (checklists, requirements, etc.) | 56% | 57% | 43% | 44% | 18% | 64% |
| Supervision of research activities in clinical settings | | | | | | |
| Documented processes for monitoring data collected in practice settings | 54% | 48% | 48% | 46% | 22% | 78% |
| Processes for ensuring randomization protocols are followed | 51% | 42% | 58% | 49% | 32% | 56% |
| Checklists or other resources to ensure informed consent procedures are followed | 57% | 48% | 48% | 43% | 32% | 64% |
| Process for handling patients’ “expressions of concern” about study activities | 45% | 45% | 45% | 55% | 33% | 63% |
| Keeping clinical and other partners engaged in research process | | | | | | |
| List of research‐related training opportunities for clinical staff engaged in research | 36% | 28% | 61% | 64% | 25% | 56% |
| Effective communication strategies for studies involving multiple sites and/or clinics | 40% | 70% | 30% | 60% | 20% | 67% |
| Processes to ensure clinical practices contribute to the development of new research ideas and implementation methods | 39% | 53% | 47% | 61% | 23% | 73% |
| Dissemination and authorship | | | | | | |
| Guidelines for sharing study results with policy makers or health system administrators | 27% | 86% | 14% | 73% | 26% | 61% |
| Guidelines or other resources for sharing study results with patients and/or the larger community | 21% | 45% | 45% | 79% | 21% | 71% |
| Sample authorship policy for community‐engaged research | 22% | 45% | 36% | 78% | 26% | 64% |
| Project documentation, closeout, and postproject evaluation | | | | | | |
| Checklist of documentation to keep at project closeout | 36% | 61% | 39% | 64% | 61% | 39% |
| Checklist of activities needed to close out a project (e.g., IRB, financial) | 41% | 67% | 33% | 59% | 20% | 57% |
| Tools for postproject evaluations (e.g., exit interviews, process improvement) | 22% | 64% | 36% | 78% | 26% | 64% |

Proportions reported out of 55 respondents. Given low numbers, responses are not shown for (a) Have this, not useful, and (b) Do not have this, not interested. Therefore, responses in columns 3 and 4, as well as 6 and 7, may not add up to 100%.

AHRQ: Agency for Healthcare Research and Quality; BAA: Business associate agreement; DUA: Data use agreement; FOA: funding opportunity announcement; HIPAA: Health Insurance Portability and Accountability Act; IRB: institutional review board; NIH: National Institutes of Health.
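One plausible reading of Table 2 is that the usefulness columns are percentages among respondents who have a resource, and the interest columns are percentages among those who do not. The sketch below tabulates the six‐point survey scale under that reading. The function name, the numeric coding of the scale, and the example responses are all hypothetical; they are not taken from the study data.

```python
from collections import Counter

# Six-point scale as described in the survey methods; the numeric codes
# 1-6 follow the order in which the options are listed.
HAVE_VERY, HAVE_SOMEWHAT, HAVE_NOT_USEFUL = 1, 2, 3
LACK_VERY, LACK_SOMEWHAT, LACK_NOT_INTERESTED = 4, 5, 6


def summarize(responses):
    """Summarize one resource's ratings in the layout of Table 2.

    ASSUMPTION: usefulness is reported among those who have the resource,
    and interest among those who do not.
    """
    counts = Counter(responses)
    total = len(responses)
    have = counts[HAVE_VERY] + counts[HAVE_SOMEWHAT] + counts[HAVE_NOT_USEFUL]
    lack = counts[LACK_VERY] + counts[LACK_SOMEWHAT] + counts[LACK_NOT_INTERESTED]

    def pct(part, whole):
        # Return None when the subgroup is empty to avoid dividing by zero.
        return round(100 * part / whole) if whole else None

    return {
        "have_resource": pct(have, total),
        "somewhat_useful": pct(counts[HAVE_SOMEWHAT], have),
        "very_useful": pct(counts[HAVE_VERY], have),
        "do_not_have_resource": pct(lack, total),
        "somewhat_interested": pct(counts[LACK_SOMEWHAT], lack),
        "very_interested": pct(counts[LACK_VERY], lack),
    }


# Hypothetical ratings from 20 respondents for a single resource.
example = [1] * 5 + [2] * 4 + [3] * 1 + [4] * 6 + [5] * 3 + [6] * 1
summary = summarize(example)
print(summary)
```

In this made‐up example the two usefulness columns sum to 90% rather than 100% because the "have this, not useful" responses are omitted, which mirrors the caveat in the table footnote.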

Funding opportunities

Most survey participants reported having access to links to FOAs from the National Institutes of Health (NIH) (85%) or other agencies (70%), and the minority without access found these links highly desirable. Among those with access, opinions about the usefulness of these announcements were evenly split between very useful (52%) and somewhat useful (48%). On the PRIMER website, we added links to funding resources from federal agencies, foundations, health organizations, and professional societies.

Research environments and collaborators

The survey asked respondents to evaluate three resources related to environments and collaborators: (1) directories of potential research partners by research interest and site; (2) summary tables with characteristics about potential partner sites; and (3) central calendars of conferences to facilitate networking. The majority of respondents did not have these resources (ranging from 60% to 75%). We balanced the moderate level of interest in these tools against the challenge of compiling these directories, tables, and calendars in the toolkit. We identified websites maintained by federal agencies (e.g., NIH CTSA, Agency for Healthcare Research and Quality PBRN, Centers for Disease Control and Prevention, Health Resources and Services Administration) or professional and research associations (American Academy of Family Physicians, Society of General Internal Medicine, American Academy of Pediatrics) as resources for contact directories, network information, and event calendars.

Multisite grant development and budgeting

Most respondents did not have access to two of the three resources listed under this domain: reimbursement guidelines for clinical providers and staff (85% without) and tools to facilitate multisite budget development (67% without). Just under half (47%) lacked the third resource, a contact directory of budget and grant personnel at partner organizations. There was moderate interest in obtaining these resources. Because contact directories tend to be dynamic and difficult to maintain as personnel change and there was only moderate interest in these tools, we included this item in a list of recommendations rather than as part of the Research Toolkit. Reimbursement guidelines for practices are a comparatively rare resource, so we sought examples of this resource from respondents, acknowledging that reimbursement policies may vary widely across institutions. On the website, the HMORN Collaboration Toolkit 4 was given as an example for multisite budget development.

Special considerations of community‐based research

This survey domain included methods for effective participant recruitment, guides to ethical recruitment, tools to improve the readability of consent forms and other documents, and cultural competency training for researchers. Over half of the respondents had these resources and rated the recruitment guides as very useful and the readability and cultural competency training as somewhat useful. Those without these resources were moderately interested in obtaining these items, and therefore, we placed links to recruitment guides, a consent form readability tool, and cultural competency training modules on the website.

Research training and IRB resources

We evaluated access to five types of research training and IRB resources: human subjects protection (HSP) and Health Insurance Portability and Accountability Act (HIPAA) training, good clinical practice (GCP) training, research management training, tools for tracking training of clinic providers and staff, and tools for tracking IRB requirements at different sites in research networks. Almost all had HSP and HIPAA training (91%), most had GCP training (70%), and 56% had research management training resources. Tools for tracking training were more common (63%) than for tracking IRB requirements (33%). The vast majority of both groups found some utility in these tools, although PBRN representatives were more likely than the CE KFC participants to identify these as useful. Because of the necessity of these regulatory tools, we added templates from 12 resources on IRB, HIPAA, and data safety monitoring boards to the website.

Data sharing in the age of HIPAA

This domain included five subtopics: strategies for balancing data usability with privacy, existence of business associate agreement (BAA) templates, existence of data use agreement (DUA) templates, guidance for setting up BAAs, and guidance for setting up DUAs. Data usability strategies were uncommon (62% without this resource) but highly desired: 81% of those without them were very interested. About half had BAA and DUA templates (49% and 57%, respectively), but few had implementation guidance (76% and 67% without this resource), and these were highly desired by those without. Given the importance and complexity of data sharing, this particular component warranted a moderately high degree of our attention for inclusion within the Research Toolkit. The website includes DUA and BAA templates and guidance documents in the “Handling Data” section.

Recruiting practices

The four areas explored under this domain were (1) resources for engaging practices early on (e.g., study design, methods development); (2) lists of potential benefits to patients and practices for study participation; (3) strategies for approaching clinical practices about study participation; and (4) tools for upfront delineation of project versus clinic staff responsibilities. Over half of the respondents had the first three resources. We ascertained that the main resource for practice and community engagement may be in the form of a clinician and/or community advisory board. Interest in these tools was high. Outlining the patient and practice benefits for study participation is a key component for community‐engaged research; thus, we prioritized the inclusion of examples in the toolkit. Tools for upfront delineation of project versus clinic staff responsibilities were not common. The majority of those without these tools were very interested, and we sought examples of these tools from the survey respondents. Example tools for engaging practices and patients were added to the website; however, we were unable to find a dedicated tool for outlining project versus clinic staff responsibilities. Descriptions of the latter are often included within guides to collaboration and not as a separate resource.

Training clinical and research staff

This domain included five areas: (1) training resources for study interviewers; (2) training resources for study chart abstractors; (3) tools or guidelines for orienting clinical staff to new studies; (4) overview of the research coordinator role; and (5) general research coordinator training or orientation resources (e.g., checklists and requirements). Availability of these tools varied within CTSAs and PBRNs, ranging from 39% to 68%. Respondents most often rated the orientation and training checklists as useful; therefore, training resources from HMORN, American Academy of Family Physicians National Research Network, and Clinical Trials Network Best Practices were included on the PRIMER website.

Supervision of research activities in clinical settings

This domain included four subtopics: (1) documented processes for monitoring data collected in practice settings; (2) processes for ensuring randomization protocols; (3) checklists or other resources to ensure informed consent procedures; and (4) process for handling patient concerns about study activities. About half of the respondents had resources for supervising research activities (ranging from 45% to 57%). Interest in these tools depended on whether these components are required within a project; interest was highest for monitoring data collection (78%) and following informed consent procedures (64%). Templates for those two resources were prioritized and included in the toolkit.

Keeping clinical and other partners engaged in the research process

We explored three strategies for maintaining the engagement of nonacademic partners in the research process: (1) research‐related training opportunities; (2) communication strategies; and (3) processes for ensuring partners are able to contribute to research ideas and methods. Between 36% and 40% reported access to tools that support these strategies. Most of those without these resources indicated a strong interest, so we sought examples of these tools from those sites reporting access to them for inclusion in the toolkit. We found two resources that address engagement of clinic staff and communities for the website.

Dissemination and authorship

The three resources included under this domain were (1) guidelines for sharing study results with policy makers or health system administrators; (2) resources for sharing study results with patients and/or communities; and (3) a sample authorship policy for community‐engaged research. Overall, very few respondents had these tools (range 21–27%). Among those without these resources, interest was higher in tools to disseminate results to patients and the community (71%) than to policymakers or administrators (61%). Seven examples were selected for inclusion in the Research Toolkit.

Project documentation, closeout, and postproject evaluation

Three resources comprised this domain: (1) a checklist of documentation to keep at project closeout; (2) a checklist of activities needed to close out a project; and (3) tools for postproject evaluation (e.g., exit interviews or process improvements). Most respondents did not have any of these tools (range 59–78%). The checklists were deemed essential for document retention and closeout, and there was high interest in a postproject evaluation tool, so we found two resources to include in the toolkit.

Discussion

The online survey showed that CTSA community‐engaged researchers believe that multisite research helps to accelerate the translation of research into practice through the conduct of community‐relevant research. Key barriers to successful multisite collaboration are the lack of a stable research infrastructure and the shortage of grant funding opportunities. In addition, investigators conducting research in practice‐ and community‐based settings are enthusiastic overall about the use of research tools, perhaps reflecting their active engagement in multisite research with limited formal infrastructure support. Variation in reported utility or interest in the research tools likely reflects differences in research practices. For example, the level of interest in resources for orienting staff to new studies may be related to the level of involvement of the clinic staff within each study. PBRNs that rely on network staff to conduct studies may require less clinic staff orientation, whereas PBRNs that rely on clinic staff for study procedures may find orientation tools or guidelines essential. Respondents generally rated orientation and training checklists as useful. The predominance of low‐risk studies and lack of external auditing may indicate that training requirements are less robust.

The utility of having a protocol for handling patient concerns may depend on the level of patient involvement (i.e., not needed for chart review studies, but required for prospective studies requiring consent and follow‐up). Overall, identifying effective resources that maintain engagement of nonacademic partners appears to be a more elusive task. It may be more difficult to identify truly useful communication strategies for multisite research, so we depended on the six sites that reported having access to such a tool to provide resources for the toolkit. Dissemination and authorship resources were also scarce, which may reflect practice‐ and community‐based research being at an earlier stage of development.

After finalizing content and beta‐testing functionality, we launched http://www.ResearchToolkit.org in September 2009. Since the launch, limited, iterative improvements have been made based on investigator and user feedback. The strengths of this toolkit are inclusion of a variety of community‐engaged researchers in the survey sample, the number and scope of resources evaluated, the creation of a user‐friendly utility for supporting multisite, community‐based research, and its creation by expert on‐site researchers with input from the target audience. Limitations of the online survey are the change in CTSA representation since the project began (38 CTSAs were surveyed; now there are 59 funded CTSAs) and the moderate response rate from the PBRN and CE groups. Thus, the survey results might differ if we were able to repeat the survey with the full CTSA consortium. In addition, the number of existing tools or resources available for posting on the website could increase with the inclusion of a larger contingent of researchers. Limitations of the toolkit include the limited number of peer reviewers available to review and rank resources and the short‐term duration of project funding. To date, we have not conducted a postlaunch evaluation of the website. Risks to the long‐term success of this project include ongoing maintenance and “ownership” of the website content. To remain relevant, the Research Toolkit must attract users to the site, making it “top of mind” for those in need of resources.

The next steps include the following: (a) evaluating how well the website is meeting the research community’s needs, (b) enhancing the content and functionality of the website by including emergent products from the CTSA CE cores, and (c) adding web functionality that enables growth, visibility, and sustainability of the website. Through our work with our community partners in our respective CTSAs, we have observed the need to expand the resources that specifically meet the needs of community partners, such as educational modules on trust building or the ethics of dissemination.

The implications of the toolkit for creating greater efficiencies within the research process are yet to be determined. The challenges of dissemination, sustainability, and heterogeneity are described further in a companion paper. 6

Conclusion

Informed by a survey of CTSA PBRN and CE leadership, ResearchToolkit.org was created to provide researchers with a rich array of peer‐reviewed resources for the conduct of multisite studies such as those in PBRNs or community‐based organizations. By exploring the toolkit, researchers can learn about the resources that others have found most useful for planning, conducting, and disseminating collaborative, multisite research. Postdissemination feedback on the toolkit, such as suggestions for additional resources for future inclusion, is welcome to improve its usability. Given the heterogeneity of research networks, the question arises of whether “one size can fit most” for the resources contained in the current toolkit. Nevertheless, multisite research is proliferating, and the need to develop efficiencies will continue to grow. While there are many nuances to each research study, it behooves the scientific community to identify opportunities to accelerate the translation of findings into practice by accelerating the processes and functions that underlie the science.

Acknowledgments

We would like to thank Gail Christianer and Bill Tolbert for their work on this project. We also would like to thank the following institutions who contributed materials for ResearchToolkit.org: AAFP National Research Network, Clinical Trials Network Best Practices, Community Campus Partnerships for Health, HMO Research Network, Carver College of Medicine at the University of Iowa, North Texas Primary Care Practice‐based Research Network, Oregon Clinical and Translational Research Institute, University of California—San Francisco, and Yale Community Alliance for Research Engagement. We also thank Amanda McMillan for her editorial assistance.

This publication was supported by the National Center for Research Resources (part of NIH) through the Clinical and Translational Sciences Awards (CTSA) program; 3UL1RR025014‐02S1. The authors declare that there are no conflicts of interest.

References

- 1. About CTN Best Practices. Available at: http://www.ctnbestpractices.org/about-ctn-best-practices. Accessed April 13, 2011.

- 2. Stetler CB, Mittman BS, Francis J. Overview of the VA quality enhancement research initiative (QUERI) and QUERI theme articles: QUERI series. Implement Sci. 2008; 3: 8.

- 3. Centers for Disease Control and Prevention. Diffusion of effective behavioral interventions (DEBI). Available at: http://www.effectiveinterventions.org. Accessed April 13, 2011.

- 4. HMO Research Network. HMO research network collaboration toolkit. Available at: http://www.hmoresearchnetwork.org/resources/collab_toolkit.htm. Accessed April 13, 2011.

- 5. Agency for Healthcare Research and Quality. AHRQ PBRN resource center. Available at: http://pbrn.ahrq.gov/portal/server.pt?open=512&objID=969&parentname=CommunityPage&parentid=3&mode=2&in_hi_userid=8762&cached=true. Accessed April 13, 2011.

- 6. Greene SM, Baldwin LM, Dolor RJ, Thompson E, Neale AV. Streamlining Research by Using Existing Tools. Clinical and Translational Science. 2011; 4: 266–267.