Abstract

Case mix index (CMI) has become a standard indicator of hospital disease severity in the United States and internationally. However, CMI was designed to calculate hospital payments, not to track disease severity, and is highly dependent on documentation and coding accuracy. The authors evaluated whether CMI varied by characteristics affecting hospitals' disease severity (eg, trauma center or not). The authors also evaluated whether CMI was lower at public hospitals than private hospitals, given the diminished financial resources to support documentation enhancement at public hospitals. CMI data for a 14-year period from a large public database were analyzed longitudinally and cross-sectionally to define the impact of hospital variables on average CMI within and across hospital groups. Between 1996 and 2007, average CMI declined by 0.4% for public hospitals, while rising significantly for private for-profit (14%) and nonprofit (6%) hospitals. After the introduction of the Medicare Severity Diagnosis Related Group (MS-DRG) system in 2007, average CMI increased for all 3 hospital types but remained lowest in public vs. private for-profit or nonprofit hospitals (1.05 vs. 1.25 vs. 1.20; P<0.0001). By multivariate analysis, teaching hospitals, level 1 trauma centers, and larger hospitals had higher average CMI, consistent with a marker of disease severity, but only for private hospitals. Public hospitals had lower CMI across all subgroups. Although CMI had some characteristics of a disease severity marker, it was lower across all strata for public hospitals. Hence, caution is warranted when using CMI to adjust for disease severity across public vs. private hospitals. (Population Health Management 2014;17:28–34)

Introduction

In 1983, the Centers for Medicare and Medicaid Services (CMS) implemented the Inpatient Prospective Payment System, based on Diagnosis-Related Groups (DRGs).1,2 This system uses the International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) to determine individual diagnostic codes for each patient. Those ICD-9-CM codes are grouped together to determine the patient's DRG. Individual DRGs are assigned “relative weights,” and CMS payments are made in proportion to the relative weight of a patient's DRG assignment. For example, distinct ICD-9-CM codes, DRGs, and relative weights are assigned for a patient with documented evidence of pneumonia plus “acute congestive heart failure (CHF)” (ICD-9-CM 428.0, DRG 195, relative weight 0.7), “systolic CHF” (ICD-9-CM 428.2, DRG 194, relative weight 1.0), or “acute systolic CHF” (ICD-9-CM 428.21, DRG 193, relative weight 1.5).3 The care of the patient documented to have pneumonia plus acute systolic CHF would be reimbursed at roughly twice the rate of the patient documented to have acute CHF because the relative weight is roughly twice as large (1.5 vs. 0.7). DRG relative weights are adjusted annually by CMS based on the average length of stay and health care resource utilization that patients within individual DRGs experience when hospitalized. A facility's case mix index (CMI) is calculated as the sum of the relative weights of the facility's DRGs divided by the number of admissions for the period of time (often 1 year).3
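The CMI definition above reduces to a mean of DRG relative weights. The following is a minimal Python sketch of that arithmetic, using the three pneumonia-plus-CHF relative weights quoted in the text as hypothetical admissions. The function name `case_mix_index` and the one-admission-per-DRG facility are illustrative assumptions, not part of any CMS or OSHPD tool.

```python
def case_mix_index(relative_weights):
    """Return the CMI: sum of DRG relative weights divided by the number of admissions."""
    if not relative_weights:
        raise ValueError("at least one admission is required")
    return sum(relative_weights) / len(relative_weights)


# Relative weights quoted in the text for pneumonia plus CHF documented three ways.
weights = {"DRG 195": 0.7, "DRG 194": 1.0, "DRG 193": 1.5}

# Hypothetical facility with exactly one admission under each DRG.
cmi = case_mix_index(list(weights.values()))
print(round(cmi, 3))

# Payment scales with relative weight, so the ratio of weights is the ratio of payments.
ratio = weights["DRG 193"] / weights["DRG 195"]
print(round(ratio, 2))
```

Because the index is a simple average, any documentation change that moves admissions from a lower-weight DRG to a higher-weight DRG raises the facility's CMI without any change in the patients treated.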

Although designed as a basis for calculating hospital payments for patient care, and not as an indicator of severity of illness per se, it has become increasingly common practice to normalize a variety of publicly reported quality indicators and costs for disease severity by dividing the indicator or costs by the medical center's individual CMI, allowing comparisons across medical centers.4–8 However, CMI may be affected by the accuracy of physician documentation and the skill and experience of the coder who abstracts data from the medical record and assigns ICD-9-CM codes.9–11 Accordingly, a variety of interventions designed to enhance documentation and coding may result in increased hospital CMI despite providing similar care for the same type of patients with the same disease severity.11–16 Such interventions include hiring additional coding specialists with higher levels of training to code charts, hiring clinical documentation specialists whose primary function is to evaluate documentation prospectively in real time for hospitalized patients, and additional training for providers and coders. However, these interventions are expensive, and require substantial up-front investment. Thus, this study hypothesized that public hospitals would have lower CMI than private hospitals because public hospitals are not for profit, have less financial motivation to increase reimbursement, and may have less access to the up-front investment capital necessary to fund documentation and coding improvement projects.17,18
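The normalization described above is a single division of the indicator by the hospital's CMI. A small sketch follows; the cost figure is hypothetical, and the two CMI values are borrowed from the 2009 public and private for-profit means reported in the abstract purely for illustration.

```python
def cmi_adjusted(indicator, cmi):
    """Normalize a hospital-level indicator (e.g., cost per discharge) by the hospital's CMI."""
    if cmi <= 0:
        raise ValueError("CMI must be positive")
    return indicator / cmi


# Hypothetical example: two hospitals with the same raw cost per discharge.
raw_cost = 12000.0
adjusted_low_cmi = cmi_adjusted(raw_cost, 1.05)   # CMI equal to the 2009 public-hospital mean
adjusted_high_cmi = cmi_adjusted(raw_cost, 1.25)  # CMI equal to the 2009 private for-profit mean

# After adjustment, the hospital with the lower CMI appears more costly.
print(round(adjusted_low_cmi), round(adjusted_high_cmi))
```

If the lower CMI reflects less complete documentation rather than healthier patients, the adjusted figure penalizes that hospital for coding practice rather than for performance.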

To determine how CMI varied across a wide array of health care systems, this study used a publicly available database of all California hospitals to compare the impact of variables linked to disease severity, as well as variables distinct from disease severity, on a hospital's CMI.

Methods

Data abstraction

Aggregate CMI data from 1996 to 2009 for all medical facilities in California were obtained from the Office of Statewide Health Planning and Development (OSHPD) database.19 Thus, the unit of analysis for this study is CMI for each hospital in the state of California. The CMI for each hospital is based on data reported annually by each hospital to OSHPD. Hospitals report to OSHPD their CMI based on all inpatient admissions across all services (eg, internal medicine, pediatrics, psychiatry, general surgery, surgical subspecialties). OSHPD then posts the CMI by hospital separated by calendar years (rather than by CMS or federal fiscal years). The database does not contain patient-level data, and does not stratify CMI by subpopulations (eg, Medicare vs. non-Medicare, adult vs. pediatric).

A total of 591 medical facilities were identified. However, for the purpose of this analysis, only acute care inpatient facilities that had CMI values for the years 1996 and 2009 were included. In addition, hospitals that specifically admit only pediatric or psychiatric patients, long-term care facilities, and facilities that had closed were excluded because their CMIs are inherently distinct from acute care hospitals because of markedly different patient populations and/or payer mechanisms.

The remaining 364 acute care facilities were categorized by trauma level, ownership, bed number, and teaching affiliation. Trauma-level information was obtained from the California Emergency Medical Services Authority Web page.19 Trauma levels are defined in the state of California as 1 of 5 categories numbered 0–4. Trauma level 0 means no trauma capacity; trauma levels 1 to 4 indicate progressively lower trauma capability (1 is highest, 4 is lowest).20 Trauma level 1 hospitals are receiving centers for severe trauma, are required to have 24-hour in-house trauma and other specialty surgeons, and must admit a minimum number of patients per year with severe trauma to maintain certification.

All other hospital characteristics, such as ownership (public, private for-profit, private nonprofit), bed number, and teaching affiliation information were obtained from online searches of each individual hospital's Web site, as well as the American Hospital Association Data and Consumer Reports health Web site.21 A teaching hospital was defined as a hospital at which resident physicians who are enrolled in an Accreditation Council for Graduate Medical Education training program worked.

Statistical analysis

Hospital CMI was compared between the years 1996 and 2009 using the Student t test. Hospital CMI also was stratified by underlying hospital characteristics (eg, public vs. private, level 1 trauma vs. not). Analysis of variance (ANOVA) was used to compare mean CMI between public and privately owned hospitals, between teaching and nonteaching hospitals, and between trauma level 1 and other trauma-level hospitals separately, and pair-wise comparisons were conducted using paired or unpaired Student t test with Tukey correction for multiple comparisons. Regression was used to estimate difference in CMI per 100 hospital beds. Multiple regression was used to adjust each variable for the others in the model (public/private, trauma level, teaching status, bed numbers). To analyze trends over time, standard process control limits were calculated.22 Control limits are used to detect changes over time in a process and are calculated based on internal variance within the data set. Data points crossing the 99% upper control limit, or 2 of 3 points between the 95% confidence interval limit and the 99% upper control limit, are considered statistically significant changes from the baseline process.22 Comparisons were analyzed in KyPlot (KyensLab, Inc., Tokyo, Japan) or SPSS (IBM, Armonk, NY) (multivariate) using 2-tailed tests; a P value of <0.05 was considered significant.
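The two control-limit rules described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' procedure: the limits are taken as the baseline mean plus 1.96 and 2.576 sample standard deviations (normal approximation), the yearly CMI values are invented, and the names `upper_limits` and `signals` are hypothetical.

```python
from statistics import mean, stdev

Z95, Z99 = 1.96, 2.576  # assumed normal quantiles for the 95% and 99% limits


def upper_limits(baseline):
    """Return (95% limit, 99% limit) computed from the baseline mean and sample SD."""
    m, s = mean(baseline), stdev(baseline)
    return m + Z95 * s, m + Z99 * s


def signals(series, ucl95, ucl99):
    """Return indexes of points that signal a change under the two rules in the text."""
    flagged = set()
    for i, x in enumerate(series):
        # Rule 1: a single point above the 99% upper control limit.
        if x > ucl99:
            flagged.add(i)
        # Rule 2: the current point and at least one of the two preceding points
        # lie between the 95% and 99% limits (2 of 3 consecutive points).
        window = series[max(0, i - 2): i + 1]
        in_zone = [ucl95 < v <= ucl99 for v in window]
        if in_zone[-1] and sum(in_zone) >= 2:
            flagged.add(i)
    return sorted(flagged)


# Hypothetical yearly average CMI values (not taken from the OSHPD data).
baseline = [1.00, 1.02, 0.99, 1.01, 1.00, 1.02, 0.98, 1.01]
follow_up = [1.01, 1.035, 1.036, 1.06]

ucl95, ucl99 = upper_limits(baseline)
flagged_points = signals(follow_up, ucl95, ucl99)
print(flagged_points)
```

In this invented series, the third follow-up point is flagged by the 2-of-3 rule and the fourth by the 99% rule, which mirrors how a sustained upward drift can be detected before any single year crosses the outer limit.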

Results

Longitudinal case mix index changes over time

A total of 364 acute care hospitals were included in the analysis. The average CMI over time for the variables of interest is shown in Table 1.

Table 1.

Average (SD) Case Mix Index Over Time

| Variable | N | 1996 | 2009 | % Change | P value |
|---|---|---|---|---|---|
| All Hospitals | 364 | 1.04 | 1.18 | 13% | <0.0001 |
| Hospital ownership | | | | | |
| Public | 69 | 0.99 (0.21) | 1.05 (0.22) | 6% | <0.0001 |
| Private for-profit | 73 | 1.02 (0.25) | 1.25 (0.40) | 22% | <0.0001 |
| Private nonprofit | 219 | 1.07 (0.21) | 1.20 (0.23) | 13% | <0.0001 |
| Teaching affiliation | | | | | |
| Nonteaching | 280 | 1.01 (0.19) | 1.15 (0.27) | 14% | <0.0001 |
| Public Teaching | 23 | 1.12 (0.24) | 1.21 (0.26) | 8% | <0.0001 |
| Private Teaching | 59 | 1.15 (0.28) | 1.34 (0.29) | 17% | <0.0001 |
| Trauma levels | | | | | |
| 0 | 303 | 1.03 (0.21) | 1.17 (0.29) | 14% | <0.0001 |
| 4 | 7 | 0.90 (0.11) | 0.92 (0.11) | 2% | 0.646 |
| 3 | 8 | 1.20 (0.27) | 1.25 (0.18) | 4% | 0.182 |
| 2 | 31 | 1.08 (0.14) | 1.21 (0.19) | 13% | <0.0001 |
| 1 | 13 | 1.25 (0.23) | 1.45 (0.29) | 16% | 0.0007 |
| 1-public | 8 | 1.27 (0.28) | 1.42 (0.30) | 13% | 0.0018 |
| 1-private (nonprofit) | 5 | 1.22 (0.15) | 1.50 (0.29) | 23% | 0.030 |
| Hospital bed number | | | | | |
| 1–49 | 51 | 0.95 (0.21) | 1.10 (0.44) | 16% | 0.161 |
| 1–49, public | 18 | 0.90 (0.14) | 0.92 (0.14) | 2% | 0.406 |
| 1–49, private | 33 | 0.99 (0.23) | 1.15 (0.54) | 16% | 0.1862 |
| 50–99 | 42 | 0.96 (0.15) | 1.11 (0.22) | 16% | <0.0001 |
| 50–99, public | 7 | 0.93 (0.14) | 0.96 (0.14) | 3% | 0.664 |
| 50–99, private | 35 | 0.97 (0.15) | 1.12 (0.15) | 15% | <0.0001 |
| 100–249 | 155 | 1.02 (0.23) | 1.17 (0.26) | 15% | <0.0001 |
| 100–249, public | 22 | 0.94 (0.16) | 1.02 (0.16) | 9% | 0.002 |
| 100–249, private | 133 | 1.04 (0.24) | 1.20 (0.26) | 15% | <0.0001 |
| 250–350 | 54 | 1.11 (0.18) | 1.26 (0.19) | 14% | <0.0001 |
| 250–350, public | 7 | 1.08 (0.12) | 1.24 (0.16) | 15% | 0.003 |
| 250–350, private | 47 | 1.13 (0.18) | 1.26 (0.20) | 12% | <0.0001 |
| >350 | 60 | 1.16 (0.20) | 1.28 (0.25) | 10% | <0.0001 |
| >350, public | 15 | 1.16 (0.26) | 1.26 (0.30) | 8% | 0.005 |
| >350, private | 45 | 1.15 (0.18) | 1.31 (0.24) | 14% | <0.0001 |

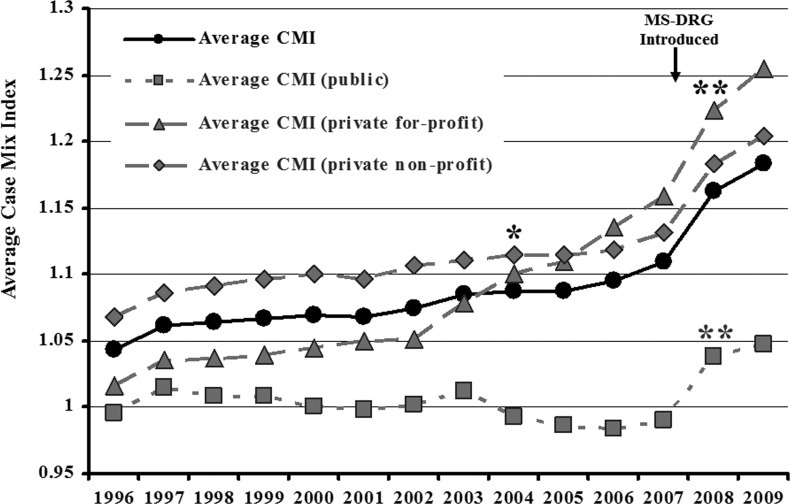

Across all hospitals in California, the average CMI increased 13% between 1996 and 2009 (Fig. 1). Using control limits to detect significant variance from baseline, all hospitals combined, private for-profit and private non-profit hospitals achieved a significant increase in their average CMI compared to baseline in 2004 (Fig. 1). In contrast, public hospitals did not achieve a significant increase in average CMI compared to baseline until 2008, after the implementation of the new MS-DRG system by CMS (Fig. 1). After CMS implementation of the MS-DRG system, private for-profit and private nonprofit hospitals again achieved a significant increase in their average CMIs (Fig. 1).

FIG. 1.

Average Case Mix Index (CMI) by Year (1996–2009). (A) All hospitals in the state of California reported to the Office of Statewide Health Planning and Development database. (B) Hospitals separated by ownership as public, private for-profit, and private nonprofit. *P<0.01 vs. baseline variation from 1996–2004 for all hospitals, private for-profit hospitals, and private nonprofit hospitals. **P<0.01 vs. baseline variation from 1996–2008 for public hospitals. MS-DRG, Medicare Severity Diagnosis Related Group.

To define factors affecting changing CMI, paired changes over time (comparing 1996 vs. 2009) were analyzed by hospital category (Table 1). Hospitals significantly increased their average CMI irrespective of ownership, teaching status, or bed size. However, the increase in average CMI at privately owned hospitals was nearly 3-fold greater than that at publicly owned hospitals.

The largest increase in average CMI occurred in level 1 trauma hospitals (Table 1). Of the level 1 trauma hospitals, public hospitals experienced a substantially smaller increase in average CMI than private non-profit hospitals (Table 1). Indeed, in 1996, the average CMI was 4% higher at public than private nonprofit level 1 trauma hospitals, but in 2009 the average CMI was 6% lower at public than private nonprofit level 1 trauma hospitals (Table 1).

By regression analysis, hospital ownership and size were statistically significantly associated with differences in CMI over time (Table 2). However, the adjusted multivariate analysis found that only hospital ownership was independently associated with changes in CMI over time. Specifically, private hospitals experienced more than twice as great a change in CMI over time vs. public.

Table 2.

Multivariate Analysis for Changes in Case Mix Index (CMI) Over Time

| Variable | Unadjusted change in mean CMI, 1996 vs. 2009 (P value) | Adjusted difference between the means, overall (P value) | Adjusted difference between the means, public only (P value) | Adjusted difference between the means, private only (P value) |
|---|---|---|---|---|
| Private vs. public | 0.15 vs. 0.07 (0.0003)* | 0.09 (<0.0001)* | | |
| Teaching vs. not | 0.16 vs. 0.12 (0.10) | 0.03 (0.30) | 0.02 (0.66) | 0.03 (0.38) |
| Trauma 1 vs. not | 0.21 vs. 0.13 (0.09) | 0.09 (0.11) | 0.04 (0.41) | 0.11 (0.19) |
| Increasing # of beds | 0.013/100 beds (0.03)* | 0.00003 (0.65) | 0.0001 (0.26) | 0.00001 (0.87) |

*P<0.05.

Cross-sectional analysis of case mix index in 2007 and 2009

In 2007, the Medicare Severity-DRG system (MS-DRG) was introduced by CMS to capture disease severity more accurately. Because the DRG system changed in 2007, it was potentially problematic to compare CMI across periods spanning the change. Therefore, the authors divided the data into 2 time periods: before and after 2007. Although private for-profit and nonprofit hospitals steadily increased their average CMI between 1996 and 2007 by 14% and 6%, respectively, public hospitals experienced a decline in their average CMI over the same time period (Fig. 1). In contrast, after 2007, private nonprofit and for-profit hospitals experienced average CMI increases as did public hospitals (Table 3).

Table 3.

Average (SD) Case Mix Index by Hospital Ownership and Teaching Status

| Hospital group | N (2006/2009) | 2006 | 2009 | Change |
|---|---|---|---|---|
| Public | 73/69 | 0.99 (0.21) | 1.05 (0.22) | 6% |
| Private for-profit | 73/73 | 1.14 (0.40) | 1.25 (0.40) | 10% |
| Private nonprofit | 223/220 | 1.12 (0.24) | 1.20 (0.23) | 7% |
| P value | | <0.0001* | <0.0001* | |
| Teaching, all | 86/82 | 1.22 (0.29) | 1.30 (0.29) | 7% |
| Teaching, public | 25/23 | 1.13 (0.26) | 1.21 (0.26) | 7% |
| Teaching, private | 59/59 | 1.26 (0.30) | 1.34 (0.29) | 6% |
| Nonteaching | 285/280 | 1.06 (0.26) | 1.15 (0.27) | 8% |
| P value | | 0.40 | 0.80 | |

*By analysis of variance.

In both 2006 and 2009, CMI varied significantly by hospital ownership (Table 3). In 2009, public hospitals in California had lower average CMI than either private for-profit or private nonprofit hospitals (Table 1). However, the average CMI of private for-profit and nonprofit hospitals did not differ significantly. In 2009, the teaching hospitals in the database had a significantly higher average CMI than the nonteaching hospitals (Table 3). Private for-profit and nonprofit teaching hospitals had similar average CMI (for-profit vs. nonprofit mean [SD]=1.30 [0.23] vs. 1.43 [0.43]). However, CMI differed across public vs. private teaching and nonteaching hospitals. The average CMI of nonteaching hospitals was lower than private teaching hospitals, and was similar to that of public teaching hospitals (Table 3).

When each hospital's CMI was compared to its trauma level, a statistically significant association was found (P=0.005 by ANOVA, Table 1). Pair-wise comparisons confirmed that hospitals with trauma level 1 had the highest average CMI, which was significantly higher than levels 0, 2, and 4 (P<0.05 for all comparisons).

Hospital bed number also affected CMI. Hospitals with higher bed numbers had higher average CMI (Table 1). By pair-wise comparisons, hospitals with 1–49 beds had significantly lower average CMI than those with 250–350 and with >350 beds (P<0.05 for both). Hospitals with >350 beds had significantly higher (P<0.05) average CMI than all groups except for hospitals with 250–350 beds.

Regression analysis was conducted to analyze impact of hospital factors on CMI in 2009. By both unadjusted analysis and adjusted multivariate analysis, all 4 factors (hospital ownership, teaching vs. not, trauma level 1 vs. not, and increasing hospital size) were associated with differences in CMI in 2009 (Table 4). Hospital size had similar impact in public and private hospitals. The CMI was not significantly different between public teaching vs. nonteaching hospitals (mean CMI in public teaching vs. nonteaching hospitals 1.16 vs. 1.12, P=0.49); in contrast, private teaching hospitals did have higher CMI than private nonteaching hospitals (P=0.005).
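The unadjusted "CMI per 100 beds" estimate is the slope of a single-predictor least-squares fit, rescaled from beds to hundreds of beds. The sketch below shows only that arithmetic; the bed counts and CMI values are hypothetical (not OSHPD data), the function name `ols_slope_intercept` is illustrative, and the adjusted estimates in the study came from a multiple regression in SPSS that this example does not reproduce.

```python
def ols_slope_intercept(x, y):
    """Ordinary least squares fit of y = intercept + slope * x for a single predictor."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


# Hypothetical hospitals: bed counts and 2009 CMI values (invented for illustration).
beds = [40, 90, 180, 300, 420]
cmi = [1.08, 1.11, 1.16, 1.24, 1.31]

slope_per_bed, intercept = ols_slope_intercept(beds, cmi)
slope_per_100_beds = slope_per_bed * 100  # rescale to the "per 100 beds" unit used in the tables
print(round(slope_per_100_beds, 3))
```

Reporting the slope per 100 beds rather than per bed only changes the unit; it keeps the coefficient on a scale comparable to the differences in mean CMI between hospital groups.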

Table 4.

Multivariate Analysis of Hospital Factors Affecting Case Mix Index in 2009

| Variable | Unadjusted means (P value) | Adjusted difference between the means, overall (P value) | Adjusted difference between the means, public only (P value) | Adjusted difference between the means, private only (P value) |
|---|---|---|---|---|
| Private vs. public | 1.21 vs. 1.05 (<0.0001)* | 0.09 (<0.0001)* | | |
| Teaching vs. not | 1.30 vs. 1.14 (<0.0001)* | 0.03 (0.30) | 0.02 (0.66) | 0.03 (0.38) |
| Trauma 1 vs. not | 1.45 vs. 1.17 (<0.0001)* | 0.09 (0.11) | 0.04 (0.41) | 0.11 (0.19) |
| Increasing # of beds | 0.06/100 beds (<0.0001)* | 0.00003 (0.65) | 0.0001 (0.26) | 0.00001 (0.87) |

*P<0.05.

Discussion

Originally designed as a basis for calculating hospital payments, CMI has become a commonly used disease severity index. Nevertheless, severity of illness can be readily dissociated from CMI, as illustrated by the diagnoses “unexplained cardiac arrest” (DRG 298) and “fatal” versus “non-fatal acute myocardial infarction” (DRG 285 vs. 282). It is intuitively obvious that cardiac arrest represents a very high severity of illness, and that fatal myocardial infarction is more severe than nonfatal myocardial infarction. Yet, because patients with cardiac arrest have a short average length of stay (1.1 days), and patients with fatal myocardial infarction have a shorter average length of stay than those with nonfatal infarction (1.4 vs. 2.2 days), the DRG for cardiac arrest is assigned a remarkably low relative weight (0.45), and the DRG for fatal myocardial infarction has a lower relative weight than nonfatal infarction (0.57 vs. 0.81).23 Comparison of these relative weights to that for uncomplicated appendectomy (DRG 343, relative weight 0.96) underscores the point that relative weights, and hence CMI (which is the average of relative weights), are higher for patients who cost more money to take care of, not necessarily for patients with more severe disease. Thus, this study hypothesized that CMI would correlate poorly with hospitals with higher disease acuity (eg, level 1 trauma centers). Furthermore, the accuracy of CMI is dependent on specific physician documentation and skilled coder abstraction of diagnoses from the medical record. Given their more limited access to capital than private hospitals, and the up-front cost associated with documentation and coding interventions to increase CMI, this study hypothesized that public hospitals would have lower CMI than private hospitals. Indeed, multivariate analysis confirms that when adjusting for other key hospital parameters, across all hospitals in the state of California, public hospitals had lower average CMI than private for-profit or nonprofit hospitals regardless of teaching status, hospital size, and trauma level.
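The dissociation between relative weight and severity can be made concrete with the weights quoted above. The sketch below averages them over two hypothetical admission lists (invented for illustration): a service treating only cardiac arrest and fatal myocardial infarction ends up with roughly half the CMI of one performing only uncomplicated appendectomies.

```python
from statistics import mean

# Relative weights quoted in the Discussion.
RELATIVE_WEIGHT = {
    "cardiac arrest, unexplained (DRG 298)": 0.45,
    "acute myocardial infarction, fatal (DRG 285)": 0.57,
    "acute myocardial infarction, nonfatal (DRG 282)": 0.81,
    "appendectomy, uncomplicated (DRG 343)": 0.96,
}

# Hypothetical admission lists for two small services.
high_acuity = (
    ["cardiac arrest, unexplained (DRG 298)"] * 3
    + ["acute myocardial infarction, fatal (DRG 285)"] * 2
)
low_acuity = ["appendectomy, uncomplicated (DRG 343)"] * 5

# CMI is the average relative weight across admissions.
cmi_high_acuity = mean(RELATIVE_WEIGHT[d] for d in high_acuity)
cmi_low_acuity = mean(RELATIVE_WEIGHT[d] for d in low_acuity)
print(round(cmi_high_acuity, 3), round(cmi_low_acuity, 3))
```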

Nevertheless, there were several lines of evidence that CMI does track with disease severity at a macro level, particularly since 2007 (when CMS introduced the new MS-DRG system). For example, the fact that level 1 trauma centers and hospitals with the greatest number of beds had the highest average CMI is consistent with the ability of CMI to accurately capture disease severity, at least at the extreme (ie, level 1 trauma vs. other, greatest number of beds vs. fewer). This study also hypothesized that teaching hospitals would have lower CMI because much of the documentation at teaching hospitals is performed by trainees who are poorly trained to document disease severity accurately. However, teaching hospitals in California had higher CMI than nonteaching hospitals, likely because teaching hospitals tend to serve as referral centers for patients with more advanced and complex diseases. Thus, higher CMI in teaching hospitals also is evidence of the ability of CMI to capture disease severity in the MS-DRG system. Nevertheless, on testing for interaction and by multivariate analysis, teaching hospitals only had higher average CMI if they were privately owned. These data suggest that hospitals with sufficient resources to hire specialists to improve their documentation and coding supervision and feedback are able to overcome limitations of trainee documentation.

Publicly funded teaching hospitals had low average CMI, had no increase in their CMI until after the introduction of the MS-DRG system in 2007, and subsequently had the smallest increase in average CMI after the MS-DRG introduction. The MS-DRG system was explicitly designed and implemented to improve the ability of DRG-based payments to correlate with severity of illness. The MS-DRG system divides DRGs into 3 groups: those diagnoses not accompanied by comorbidities, those accompanied by comorbidities (called “complication codes”), and those accompanied by major comorbidities (called “major complication codes”). Payments are substantially higher for DRGs with complication codes and major complication codes. This study's findings suggest that the MS-DRG system did enhance disease severity capture because CMI increased for hospitals irrespective of ownership (public, private for-profit, private non-profit) after the system was implemented.

Thus, it may be reasonable to use CMI as a disease severity indicator within hospitals or among hospitals of a similar ownership group. For example, Ahmed et al24 recently reported that implementing a new Acute Care for the Elderly unit resulted in shorter lengths of stay, when adjusted for CMI, for elderly patients at their hospital. Furthermore, the CMI of the elderly patients cared for increased after implementing the unit, indicating that sicker patients were being referred to the unit than had been cared for before the unit's existence. In this context, CMI was functioning appropriately to normalize for disease severity among their hospital's population. Nevertheless, a critical implication of the present study's findings is that CMI should not be used to compare across hospitals with varying ownership (public vs. private). By multivariate analysis, CMI varied by hospital ownership, both when analyzed longitudinally between 1996 and 2009, and when analyzed cross-sectionally in 2009. Furthermore, public hospital ownership blunted the impact of other hospital characteristics that were associated with higher CMI among private hospitals (eg, teaching status). Thus, public and private hospitals have fundamentally distinct accuracy at capturing disease severity in CMI. Specifically, CMI underestimates the true severity of illness of patients seen at public hospitals because there is a diminished motive to maximize financial reimbursement at public hospitals, and such hospitals lack the resources needed to implement coding and documentation improvement. Indeed, the authors recently demonstrated that an intensive educational program enabled a significant increase in CMI at a public hospital, demonstrating the impact that coding and documentation have on accurately capturing disease severity.25

Although CMS does not provide severity of illness (SOI) or risk of mortality (ROM) scores based on DRGs, other groups have developed means to estimate SOI and ROM based on ICD-9-CM codes.26 The SOI and ROM indexes are based on ICD-9-CM groupings, not specifically DRG assignments, and are derived by complex logistic regression analyses of large administrative databases. In contrast, MS-DRG assignments are based only on the principal diagnosis and the presence of usually no more than 1 additional diagnosis that is assigned by CMS to its annually published complication code or major complication code list.27 Nevertheless, data in the present study reveal that CMI based on MS-DRG assignments independently tracks with hospitals that care for patients with higher disease severity, including larger hospitals, level 1 trauma centers, and teaching hospitals.

There were several limitations of the current study. First, the OSHPD database analyzed contains only data from California hospitals, so generalizability to hospitals in other states in the United States and to hospitals in other countries is not clear. Confirmation of these findings in other large administrative data sets in other geographic areas both within and beyond the United States is warranted. Second, specific data on availability of financial capital at individual hospitals were not available, so this study was not able to assess for specific impact of capital on changes in CMI. Nevertheless, the OSHPD database has some substantial advantages over other administrative databases. For example, California is a very large geographic area and accounts for 10% of the population of the United States. Furthermore, the OSHPD database includes all hospitals in California, without selection of hospitals that are associated by specific academic, financial, or other networks. Finally, the hospital-based CMI data in the OSHPD database are derived from every admission to the hospitals in California, irrespective of such factors as age (young vs. old), inpatient service (eg, internal medicine vs. psychiatry), and funding source (Medicare vs. not), among others.

In summary, although it was designed as a basis for calculating reimbursement, CMI correlates with disease severity among hospitals of similar ownership and teaching status. However, CMI should not be used to adjust for disease severity across hospitals with varying ownership (public vs. private), either for comparison of quality metrics or performance, or for research purposes. CMI appears to underestimate disease severity at public/safety net hospitals, and public teaching hospitals, in particular. Thus, publicly reported data that are normalized by CMI unduly disadvantage public/safety net hospitals. Future research is warranted to define the underlying causes for lower CMI at public vs. private hospitals.

Acknowledgments

Statistical support was provided by the Los Angeles Biomedical Research Institute and National Institutes of Health Grant UL1TR000124 to the UCLA Clinical and Translational Sciences Institute.

Author Disclosure Statement

The authors declared no conflicts of interest with respect to the research, authorship, and/or publication of this article.

The authors received no financial support for the research, authorship, and/or publication of this article.

References

- 1. Iglehart JK. Medicare begins prospective payment of hospitals. N Engl J Med 1983;308:1428–1432.
- 2. Steinwald B, Dummit LA. Hospital case-mix change: Sicker patients or DRG creep? Health Aff (Millwood) 1989;8:35–47.
- 3. Dennis G Jr, Sherman BT, Hosack DA, et al. DAVID: Database for annotation, visualization, and integrated discovery. Genome Biol 2003;4:P3.
- 4. Barnes SL, Waterman M, Macintyre D, Coughenour J, Kessel J. Impact of standardized trauma documentation to the hospital's bottom line. Surgery 2010;148:793–797; discussion 797–798.
- 5. Kuster SP, Ruef C, Bollinger AK, et al. Correlation between case mix index and antibiotic use in hospitals. J Antimicrob Chemother 2008;62:837–842.
- 6. Tsan L, Davis C, Langberg R, Pierce JR. Quality indicators in the Department of Veterans Affairs nursing home care units: A preliminary assessment. Am J Med Qual 2007;22:344–350.
- 7. Rosko MD, Chilingerian JA. Estimating hospital inefficiency: Does case mix matter? J Med Syst 1999;23:57–71.
- 8. Imler SW. Provider profiling: Severity-adjusted versus severity-based outcomes. J Healthc Qual 1997;19:6–11.
- 9. Grogan EL, Speroff T, Deppen SA, et al. Improving documentation of patient acuity level using a progress note template. J Am Coll Surg 2004;199:468–475.
- 10. Horn SD, Bulkley G, Sharkey PD, Chambers AF, Horn RA, Schramm CJ. Interhospital differences in severity of illness. Problems for prospective payment based on diagnosis-related groups (DRGs). N Engl J Med 1985;313:20–24.
- 11. Ballentine NH. Coding and documentation: Medicare severity diagnosis-related groups and present-on-admission documentation. J Hosp Med 2009;4:124–130.
- 12. Barreto EA, Bryant G, Clarke CA, Cooley SS, Owen DE, Petronelli M. Strategies and tools for improving transcription and documentation. Healthc Financ Manage 2008;62:1–4.
- 13. Didier D, Pace M, Walker WV. Lessons learned with MS-DRGs: Getting physicians on board for success. Healthc Financ Manage 2008;62:38–42.
- 14. Documentation initiative increases case mix index. Hosp Case Manag 2008;16:156–158.
- 15. Courtright E, Diener I, Russo R. Clinical documentation improvement: It can make you look good! Case Manager 2004;15:46–49.
- 16. Rosenstein AH, O'Daniel M, White S, Taylor K. Medicare's value-based payment initiatives: Impact on and implications for improving physician documentation and coding. Am J Med Qual 2009;24:250–258.
- 17. Cousineau MR, Tranquada RE. Crisis & commitment: 150 years of service by Los Angeles county public hospitals. Am J Public Health 2007;97:606–615.
- 18. Dobson A, DaVanzo JE, El-Gamil AM, Berger G. How a new 'public plan' could affect hospitals' finances and private insurance premiums. Health Aff (Millwood) 2009;28:w1013–w1024.
- 19. Office of Statewide Health Planning and Development Healthcare Information Division. Case Mix Index. Available at: http://www.oshpd.ca.gov/HID/Products/PatDischargeData/CaseMixIndex/default.asp Accessed October 15, 2011.
- 20. California Emergency Medical Services Authority EMS Systems Division. Trauma. Available at: http://www.emsa.ca.gov/systems/trauma/default.asp Accessed October 15, 2011.
- 21. Prokocimer P, De Anda C, Fang E, Mehra P, Das A. Tedizolid phosphate vs linezolid for treatment of acute bacterial skin and skin structure infections: The ESTABLISH-1 randomized trial. JAMA 2013;309:559–569.
- 22. Carey RG, Lloyd RC. Measuring Quality Improvement in Healthcare. Milwaukee, WI: Quality Press; 2001.
- 23. Spellberg B. Acute bacterial skin and skin structure infection trials: The bad is the enemy of the good. Clin Infect Dis 2011;53:1308–1309; author reply 1309–1310.
- 24. Ahmed N, Taylor K, McDaniel Y, Dyer CB. The role of an acute care for the elderly unit in achieving hospital quality indicators while caring for frail hospitalized elders. Popul Health Manag 2012;15:236–240.
- 25. European Medicines Agency. Guideline on the evaluation of medicinal products indicated for treatment of bacterial infections. 2011. Available at: http://www.ema.europa.eu/docs/en_GB/document_library/Scientific_guideline/2009/09/WC500003417.pdf Accessed March 11, 2013.
- 26. Spellberg B. Solving the antibiotic crisis: A top down strategic rethink. Presented at: Facilitating Antibacterial Drug Development, Brooking's Institute, Engelberg Center for Health Care Reform, May 9, 2012, Washington, DC.
- 27. Fleiss JL. Statistical Methods for Rates and Proportions. 2nd ed. New York: John Wiley; 1981.