Abstract

Background

Current readmission models use administrative data supplemented with clinical information. However, the majority of these result in poor predictive performance (area under the curve (AUC)<0.70).

Objective

To develop an administrative claim-based algorithm to predict 30-day readmission using standardized billing codes and basic admission characteristics available before discharge.

Materials and Methods

The algorithm works by exploiting high-dimensional information in administrative claims data and automatically selecting empirical risk factors. We applied the algorithm to index admissions in two types of hospitalized patient: (1) medical patients and (2) patients with chronic pancreatitis (CP). We trained the models on 26 091 medical admissions and 3218 CP admissions from The Johns Hopkins Hospital (a tertiary research medical center) and tested them on 16 194 medical admissions and 706 CP admissions from Johns Hopkins Bayview Medical Center (a hospital that serves a more general patient population), and vice versa. Performance metrics included AUC, sensitivity, specificity, positive predictive values, negative predictive values, and F-measure.

Results

From a pool of up to 5665 International Classification of Diseases, 9th Revision, Clinical Modification (ICD-9-CM) diagnoses, 599 ICD-9-CM procedures, and 1815 Current Procedural Terminology codes observed, the algorithm learned a model consisting of 18 attributes from the medical patient cohort and five attributes from the CP cohort. Within-site and across-site validations had an AUC≥0.75 for the medical patient cohort and an AUC≥0.65 for the CP cohort.

Conclusions

We have created an algorithm that is widely applicable to various patient cohorts and portable across institutions. The algorithm performed similarly to state-of-the-art readmission models that require clinical data.

Keywords: readmission, administrative claims data, predictive modelling, sensitivity and specificity, algorithm, portability

Background and significance

Administrative claims data are records of transactions that occurred between patients and healthcare providers. They are often the electronic versions of bills healthcare providers submit to payers for outpatient visits and inpatient hospital stays. The core data elements include services, diagnosis, procedures, beneficiary demographics, and admission characteristics. Administrative data are a viable source for research1 because they are readily available, inexpensive, cover large populations, and can be available in near real time in some hospitals (depending on the source). Researchers have used administrative data for a wide range of applications, including predicting hospital readmissions,2–6 assessing quality and patient safety,7 8 improving risk adjustment for hospital mortality9 and morbidity,10 identifying cohorts,11–15 and evaluating effectiveness of treatments.16–19 These predictive models can be used to make decisions about reimbursement, compare quality across hospitals, and perform comparative effectiveness research.2 3

Hospitalizations account for almost 31% of healthcare expenditures.20 Yet it is estimated that 20% of Medicare patients discharged from a hospital return within 30 days, and 34% are rehospitalized within 90 days.21 Most readmissions are potentially preventable and are indicators of poor quality of care or poor coordination among healthcare providers.21–23 The Medicare Payment Advisory Commission initiatives24 aim to reduce hospital readmissions through payment policies that penalize for excessive readmission rates. A first step to reducing preventable hospital readmissions is to identify predictors of early readmission and assess risk in individual patients. Reducing readmissions will reduce unnecessary costs and increase the value of the healthcare institution.25

A recent systematic review2 summarized 26 unique readmission prediction models based on administrative data,3 electronic medical records,26 27 or a combination of the two.26 28 Of the 14 models using 30-day readmission as the outcome, most models have poor predictive performance. Among the four models3 26 27 29 with moderate discriminative power (area under the curve (AUC)>0.70), three3 27 29 used clinical details and one26 was based on small samples (700 training samples, 704 validation samples).

Objective

In this study, we developed an algorithm to predict 30-day readmission using billing codes and basic administrative information available before discharge. We evaluated the performance using both within-site cross-validation and across-site validation at The Johns Hopkins Hospital (JHH) (a tertiary referral center) and at Johns Hopkins Bayview Medical Center (BMC) (a community hospital). We used two distinct application settings, (1) predicting 30-day outcome in medical patients and (2) predicting 30-day outcome in patients with chronic pancreatitis (CP) (known to be at high risk of readmission).30 The resulting models demonstrated discriminative power and easy portability between the referral and community hospitals. The latter model demonstrated a disease-specific application of this modeling approach, which could be applied to a number of other clinical contexts.

Methods

Data source

The JHH's Casemix Information Management Department developed the Johns Hopkins Medicine (JHM) DataMart using the Microsoft SQL Server. It includes JHH casemix and billing data (starting from 1993) and BMC casemix and billing data (starting from 1995). The core data elements at JHH and BMC include admission date, discharge date, admission type, admission source, length of stay, primary and varying numbers of secondary International Classification of Diseases, 9th Revision, Clinical Modification (ICD-9-CM) diagnosis and procedure codes, revenue procedure codes mappable to Current Procedural Terminology (CPT) codes, and some demographic attributes.

For our study, we created two types of patient cohort from JHH and BMC (table 1): (1) medical patients discharged between January 2012 and April 2013 at JHH (n=26 091) and BMC (n=16 194); (2) CP patients discharged between January 2007 and April 2013 at JHH (n=3218) and BMC (n=706). Medical patients were directly identified by an attribute in DataMart that classifies an index admission into either the ‘medical’ or ‘surgical’ service. We identified CP patients as those with an ICD-9-CM diagnosis code 577.1 at any position. For both patient cohorts, we required patients to be at least 18 years old, discharged home, and not having died during the hospitalization. We defined a case of readmission as an admission within 30 days of the prior discharge date, in concordance with published research.2 27 28 Since planned readmissions, possibly scheduled at the time of previous admissions, are unavoidable, only risk factors discriminating unplanned readmissions from those not followed by 30-day readmissions are of interest. For medical patients, readmission cases that have diagnoses or procedures in chemo- and radio-therapy, treatment follow-up, rehabilitation, procedures not carried out, and planned surgical interventions were excluded from the study cohorts. Specific ICD-9-CM codes are provided in online supplementary table S1. The CP cohorts were more homogeneous, and therefore no exclusion was made. In the subsequent analyses, ICD-9-CM codes used to identify planned readmissions were excluded from consideration. The attributes embedded in our derived patient cohort include age, sex, ethnicity, marital status, length of stay, accumulated number of inpatient admissions in the past 5 years, admission date, discharge date, admission to the medical or surgical service, total number of diagnoses, total number of procedures, all individual ICD-9-CM diagnoses and procedures, and all CPT codes that occurred during an index inpatient admission. 
The JHM institutional review board approved the protocol (IRB#: NA_00082734).
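The 30-day readmission definition used for cohort labeling can be sketched as follows. This is a minimal illustration, not the study's DataMart query: the record keys (`patient_id`, `admit`, `discharge`) are hypothetical, the 30-day window is assumed to be inclusive, and the exclusion of planned readmissions and the age and discharge-disposition criteria are not shown.

```python
from datetime import date

def label_readmissions(admissions, window_days=30):
    """Flag each index admission that is followed by another admission of the
    same patient within `window_days` of its discharge date.

    `admissions` is a list of dicts with hypothetical keys 'patient_id',
    'admit', and 'discharge' (datetime.date values). Returns 0/1 labels in
    the same order as the input.
    """
    by_patient = {}
    for idx, adm in enumerate(admissions):
        by_patient.setdefault(adm["patient_id"], []).append(idx)

    labels = [0] * len(admissions)
    for indices in by_patient.values():
        # Order each patient's admissions chronologically.
        indices.sort(key=lambda i: admissions[i]["admit"])
        for current, following in zip(indices, indices[1:]):
            gap = (admissions[following]["admit"] - admissions[current]["discharge"]).days
            if 0 <= gap <= window_days:  # assumption: day 30 counts as a readmission
                labels[current] = 1
    return labels

example = [
    {"patient_id": "A", "admit": date(2012, 1, 1), "discharge": date(2012, 1, 5)},
    {"patient_id": "A", "admit": date(2012, 1, 20), "discharge": date(2012, 1, 22)},
    {"patient_id": "A", "admit": date(2012, 6, 1), "discharge": date(2012, 6, 3)},
    {"patient_id": "B", "admit": date(2012, 3, 1), "discharge": date(2012, 3, 4)},
]
labels = label_readmissions(example)
```

In this toy input only the first admission of patient A is followed by a readmission inside the window, so it is the only admission labeled 1.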

Table 1.

Characteristics of study cohorts

| Characteristic | ME, JHH | ME, BMC | CP, JHH | CP, BMC |
|---|---|---|---|---|
| Total number of admissions | 26 091 | 16 194 | 3218 | 706 |
| Number of unique individuals | 18 974 | 12 803 | 1763 | 430 |
| Percent readmission | 11.5 | 8.7 | 15.6 | 7.8 |
| Age (years), mean±SD | 50.3±18.0 | 51.5±18.6 | 51.4±14.0 | 51.4±11.7 |
| Male (%) | 46 | 49 | 47 | 61 |
| LOS (days), mean±SD | 5.3±7.0 | 3.6±3.3 | 6.4±6.6 | 4.6±4.7 |
| Number of observed diagnoses | 5665 | 4507 | 3696 | 1229 |
| Number of observed procedures | 599 | 376 | 751 | 196 |
| Number of observed CPT codes | 1815 | 1274 | 1333 | 883 |

Number of observed diagnoses/procedures/CPTs is the total number of codes that appear at least once in the population cohort.

BMC, Bayview Medical Center; CP, patients with chronic pancreatitis; CPT, Current Procedural Terminology; JHH, Johns Hopkins Hospital; LOS, length of stay; ME, all patients admitted to the medical service.

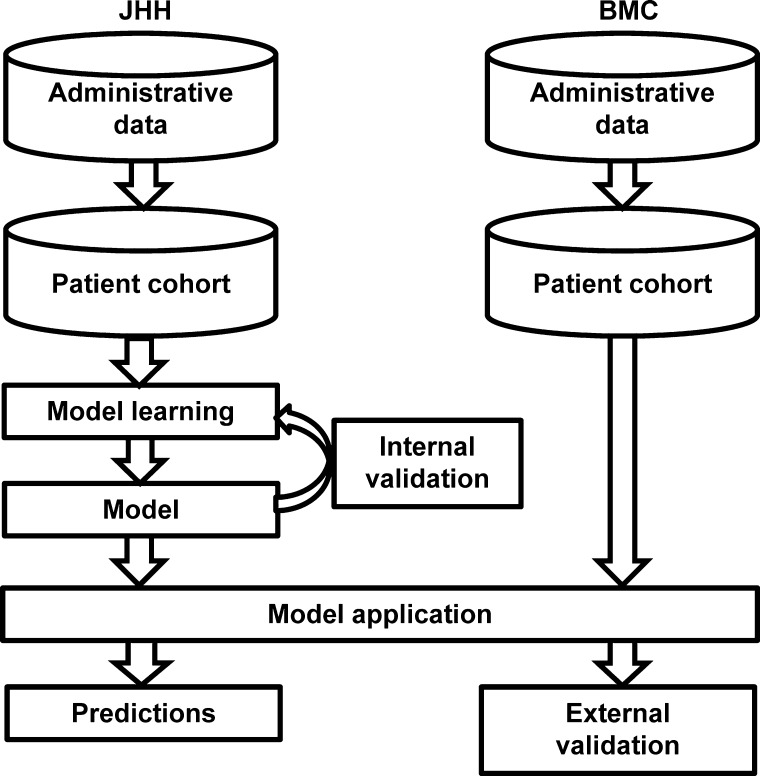

Study design

We evaluated our approach using two applications: (1) predicting 30-day outcomes in medical patients; (2) predicting 30-day outcomes in CP patients. As shown in figure 1, for each application, we curated the patient cohort from the administrative database and supplied it to the algorithm. On the training dataset, the algorithm took as input all billing codes except those used to identify planned readmissions, together with a set of administrative attributes, and learned the model fully automatically. The output of the algorithm (the model) was a set of selected attributes together with their estimated coefficients. For within-site performance evaluation, we used fivefold cross-validation. For across-site performance evaluation, the model learned from the entire JHH cohort was tested on the BMC cohort, and in a similar fashion, the model learned from the entire BMC cohort was tested on the JHH cohort (figure 1).

Figure 1.

Evaluation workflow. The figure shows the model learning on the entire Johns Hopkins Hospital (JHH) cohort and tested on the entire Bayview Medical Center (BMC) cohort. Training on BMC and testing on JHH were performed in a similar fashion (not pictured).

Branch and bound algorithm

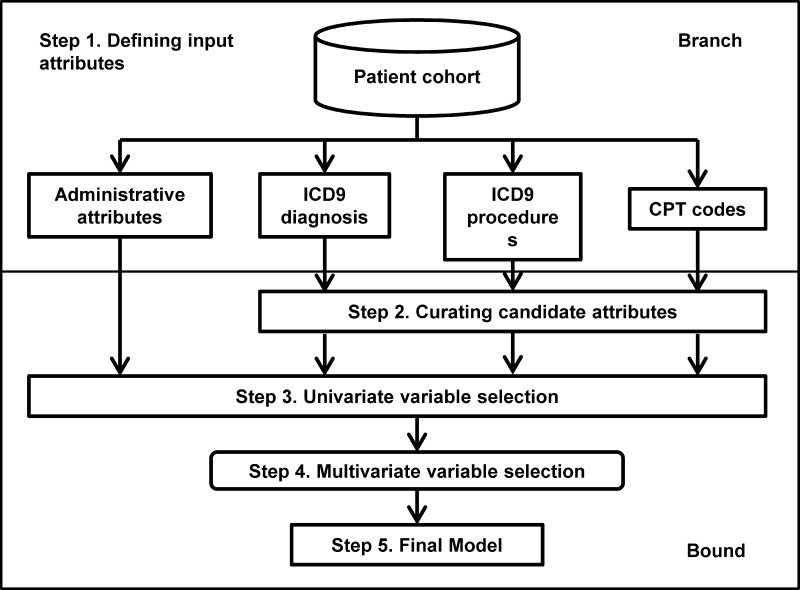

We used a five-step procedure to achieve automatic feature selection and model learning (figure 2). The latest source code for the algorithm is available at https://my.vanderbilt.edu/readmission/.

Defining input attributes. Input attributes included age, sex, ethnicity, marital status, length of stay, accumulated number of inpatient admissions in the past 5 years, ICD-9-CM diagnosis and procedure codes, and CPT codes. For billing codes, an attribute takes a value of 1 if that code is observed during the index admission, or 0 otherwise.

Curating potential attributes by prevalence prioritization. This step excluded the attributes with low prevalence (<1%) from further analyses. Prevalence is the proportion of individuals who have a specific code. Although candidate attributes were of high dimension (table 1), the majority of them occurred in <1% of the population. In hierarchical coding, increasing the granularity decreases the prevalence,19 suggesting that generic codes may be preferable. However, fine-level information serves as a better proxy of the conditions and procedures experienced by the patient, making it easier for healthcare providers to interpret models. We therefore kept all codes at their original level.
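The binary coding of billing codes and the prevalence filter described in the two steps above can be sketched as follows. This is an illustrative sketch rather than the released source code: the function name and the `(patient_id, codes)` input layout are assumptions, and prevalence is computed over unique individuals, following the definition given in the text.

```python
def encode_and_filter(admissions, min_prevalence=0.01):
    """Binary-encode billing codes and drop low-prevalence codes.

    `admissions` is a list of (patient_id, set_of_codes) pairs, one per index
    admission (hypothetical layout). Returns (kept_codes, matrix), where
    matrix[i][j] is 1 if admission i carries kept_codes[j] and 0 otherwise.
    """
    patients_with_code = {}
    all_patients = set()
    for patient_id, codes in admissions:
        all_patients.add(patient_id)
        for code in codes:
            patients_with_code.setdefault(code, set()).add(patient_id)

    n_patients = len(all_patients)
    # Keep codes whose prevalence is at least the threshold (text: exclude <1%).
    kept = sorted(
        code for code, patients in patients_with_code.items()
        if len(patients) / n_patients >= min_prevalence
    )
    matrix = [[1 if code in codes else 0 for code in kept] for _, codes in admissions]
    return kept, matrix

toy = [
    ("A", {"83690", "36430"}),
    ("A", {"83690"}),
    ("B", {"83690"}),
    ("C", {"J1170"}),
    ("D", set()),
]
# A 50% threshold is used only so that the toy example filters something.
kept, matrix = encode_and_filter(toy, min_prevalence=0.5)
```

With four unique patients, only code 83690 (seen in patients A and B) reaches the 50% toy threshold; the other two codes are dropped.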

Univariate variable selection. This step identified top ranked attributes. We measured the significance of each attribute's association with 30-day outcome by the Likelihood Ratio Test (LRT) p value of a fitted logistic regression in which the response was 30-day outcome and the sole explanatory factor was the tested attribute. Attributes whose p value fell below the user-supplied threshold (that is, attributes more significant than the cutoff) were retained for further analyses.
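For a binary billing-code attribute, the univariate LRT has a closed form, because a logistic regression with an intercept and one 0/1 predictor reproduces the observed readmission rate in each of the two groups. The sketch below relies on that fact and on the chi-square distribution with 1 degree of freedom; the function names are hypothetical, and continuous attributes such as age or length of stay would need an iterative fit instead.

```python
import math

def _binomial_loglik(events, total):
    """Binomial log-likelihood evaluated at the observed proportion."""
    if events == 0 or events == total:
        return 0.0
    p = events / total
    return events * math.log(p) + (total - events) * math.log(1.0 - p)

def univariate_lrt_pvalue(x, y):
    """LRT p value for a logistic regression of the 0/1 outcome y on one 0/1 attribute x."""
    n1 = sum(x)
    n0 = len(x) - n1
    e1 = sum(yi for xi, yi in zip(x, y) if xi == 1)
    e0 = sum(y) - e1
    ll_null = _binomial_loglik(e0 + e1, n0 + n1)                      # intercept only
    ll_full = _binomial_loglik(e0, n0) + _binomial_loglik(e1, n1)    # intercept + attribute
    stat = max(0.0, 2.0 * (ll_full - ll_null))
    # Survival function of a chi-square variable with 1 degree of freedom.
    return math.erfc(math.sqrt(stat / 2.0))

def select_univariate(columns, y, p_cutoff):
    """Keep the attribute names whose LRT p value is below the cutoff."""
    return [name for name, x in columns.items() if univariate_lrt_pvalue(x, y) < p_cutoff]

y = [1] * 40 + [0] * 10 + [1] * 10 + [0] * 40
columns = {
    "associated": [1] * 50 + [0] * 50,   # 80% vs 20% readmission rate
    "unrelated": [1, 0] * 50,            # same rate in both groups
}
retained = select_univariate(columns, y, p_cutoff=1e-6)
```

Only the attribute whose presence changes the readmission rate survives the cutoff in this toy example.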

Multivariate variable selection. This step performed subset selection for the multivariate model by forward variable selection along a path generated by random ordering of the variables. LRT was conducted to determine whether the addition of a new attribute significantly reduced the unexplained variance. An insignificant attribute was dropped, and the next attribute in the path was added. The process was repeated until all attributes had been tested once. Further details about this approach and discussion of its limitations are provided in online supplementary text and in the source code.
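The forward pass along a random path can be sketched as below. This is an illustration under stated assumptions, not the released implementation: the logistic regression is fitted with a small hand-written Newton-Raphson routine (with a tiny ridge term that only stabilizes the linear solve), each candidate is tested with a 1-degree-of-freedom LRT against the current model, and all function names and the `seed` parameter are hypothetical.

```python
import math
import random

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [A[i][:] + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def max_loglik(X, y, iters=25, ridge=1e-8):
    """Maximized log-likelihood of a logistic regression (intercept added here)."""
    rows = [[1.0] + [float(v) for v in r] for r in X]
    k = len(rows[0])
    beta = [0.0] * k

    def prob(r):
        eta = sum(b * v for b, v in zip(beta, r))
        return 1.0 / (1.0 + math.exp(-max(-30.0, min(30.0, eta))))

    for _ in range(iters):
        grad = [0.0] * k
        hess = [[ridge if a == b else 0.0 for b in range(k)] for a in range(k)]
        for r, yi in zip(rows, y):
            p = prob(r)
            for a in range(k):
                grad[a] += (yi - p) * r[a]
                for b in range(k):
                    hess[a][b] += p * (1.0 - p) * r[a] * r[b]
        beta = [b + s for b, s in zip(beta, solve(hess, grad))]
    return sum(math.log(prob(r)) if yi else math.log(1.0 - prob(r)) for r, yi in zip(rows, y))

def forward_select(X, y, names, p_cutoff, seed=0):
    """Test each attribute once, in random order; keep it only if the LRT is significant."""
    order = list(range(len(names)))
    random.Random(seed).shuffle(order)              # random path through the candidates
    selected = []
    ll_current = max_loglik([[] for _ in y], y)     # intercept-only model
    for j in order:
        trial = selected + [j]
        ll_trial = max_loglik([[row[c] for c in trial] for row in X], y)
        stat = max(0.0, 2.0 * (ll_trial - ll_current))
        p_value = math.erfc(math.sqrt(stat / 2.0))  # chi-square, 1 degree of freedom
        if p_value < p_cutoff:
            selected, ll_current = trial, ll_trial
    return [names[j] for j in selected]

# Toy data: 'a' drives the outcome, 'a_copy' duplicates it, 'noise' is unrelated.
X, y = [], []
for a in (0, 1):
    for c in (0, 1):
        events = 40 if a else 10
        for i in range(50):
            X.append([a, a, c])
            y.append(1 if i < events else 0)
chosen = forward_select(X, y, ["a", "a_copy", "noise"], p_cutoff=1e-6)
```

Whichever of the two duplicated attributes appears first on the path is kept and the other is dropped as redundant, which illustrates how the pass retains attributes that contribute independently; it also shows that the selected set can depend on the random ordering.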

Final model. We estimated a risk score for each patient as the predicted probability of 30-day readmission conditional on attributes selected from step 4. We performed logistic regression on the selected attributes using the GLM function in the statistical program R.
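The risk score in the final step is the inverse logit of the linear predictor. The sketch below uses the coefficient estimates reported in table 5 for the JHH CP model purely for illustration; the intercept is not reported in the text, so the value used here is a hypothetical placeholder, and the dictionary keys other than `adm_acc` are invented names.

```python
import math

def risk_score(intercept, coefficients, attributes):
    """Predicted probability of 30-day readmission from a fitted logistic model."""
    eta = intercept + sum(
        coef * attributes.get(name, 0) for name, coef in coefficients.items()
    )
    return 1.0 / (1.0 + math.exp(-eta))

# Coefficient estimates as reported in table 5 (JHH CP cohort).
cp_coefficients = {
    "proc_527": -1.301,   # ICD-9-CM procedure 527
    "cpt_82803": -0.138,  # blood gases
    "cpt_83690": 0.652,   # lipase
    "cpt_88309": -0.818,  # surgical pathology
    "adm_acc": 0.021,     # 5-year accumulated number of admissions
}
HYPOTHETICAL_INTERCEPT = -2.0  # assumption: the intercept is not given in the text

low = risk_score(HYPOTHETICAL_INTERCEPT, cp_coefficients, {})
high = risk_score(HYPOTHETICAL_INTERCEPT, cp_coefficients, {"cpt_83690": 1, "adm_acc": 10})
```

Because the intercept is a placeholder, the absolute probabilities are not meaningful; the example only shows that a lipase test and a history of prior admissions raise the score relative to a patient with neither.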

Figure 2.

Overview of the algorithm. The flow chart outlines the five steps in the algorithm, which can be classified as branch and bound. CPT, Current Procedural Terminology; ICD9, International Classification of Diseases, 9th Revision.

Issues related to parameter adjustment

The algorithm required two parameters: the p value for univariate variable selection and the p value for multivariate variable selection, which were chosen via internal fivefold cross-validation. The search of p values was on a log10 scale. The search spaces for the first p values were those that allow 0.5–1.5% of the total observed codes to be included in the logistic regression model, and the search spaces for the second p values were those that allow <0.5% of the total observed codes to be in the final model (table 2).
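The construction of the search space for the first parameter can be sketched as follows: walk over cutoffs on a log10 scale and keep those for which the retained share of attributes falls in the stated 0.5–1.5% band. The exponent range and function name are assumptions, and the internal cross-validation that picks the final cutoff from this grid is not shown.

```python
def candidate_exponents(p_values, low=0.005, high=0.015, min_exp=-30, max_exp=-1):
    """Return (exponent, retained_fraction) pairs for cutoffs 10**exponent whose
    retained fraction of attributes lies within [low, high]."""
    n = len(p_values)
    grid = []
    for exponent in range(max_exp, min_exp - 1, -1):
        cutoff = 10.0 ** exponent
        fraction = sum(1 for p in p_values if p < cutoff) / n
        if low <= fraction <= high:
            grid.append((exponent, fraction))
    return grid

# Toy p values: 10 very strong attributes, 5 moderately strong, 985 uninformative.
toy_p_values = [3e-26] * 10 + [3e-10] * 5 + [0.5] * 985
grid = candidate_exponents(toy_p_values)

# Arithmetic check against table 2 (JHH ME cohort): 47 of 8083 attributes.
jhh_me_percentage = round(100 * 47 / 8083, 2)
```

In the toy example every cutoff from 1E-01 down to 1E-25 retains 1.0–1.5% of the attributes, so all of them enter the grid, whereas cutoffs of 1E-26 and below retain nothing.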

Table 2.

Parameter adjustment and model size after univariate variable selection and multivariate variable selection

| Cohort | Site | Total attributes | Univariate selection: p value cutoff | Univariate selection: number of attributes | Univariate selection: percentage | Multivariate selection: p value cutoff | Multivariate selection: number of attributes | Multivariate selection: percentage |
|---|---|---|---|---|---|---|---|---|
| ME | JHH | 8083 | 1.00E-20 | 47 | 0.58 | 1.00E-06 | 18 | 0.21 |
| ME | BMC | 6161 | 1.00E-20 | 75 | 1.20 | 1.00E-06 | 20 | 0.30 |
| CP | JHH | 5784 | 1.00E-06 | 37 | 0.60 | 1.00E-02 | 5 | 0.09 |
| CP | BMC | 2312 | 1.00E-02 | 12 | 0.50 | 1.00E-02 | 7 | 0.30 |

The models refer to the ones learned from the entire patient cohort that were subject to across-site analyses using the p value cutoffs identified via internal cross-validation.

BMC, Bayview Medical Center; CP, patients with chronic pancreatitis; JHH, Johns Hopkins Hospital; ME, all patients admitted to the medical service.

Evaluation of performance

Within-site performance was evaluated by fivefold cross-validation. The complete algorithm (step 1 through step 5) was applied to the four training folds, and the final model learned from these four folds was validated on the remaining fold. We reported performance metrics such as AUC, sensitivity, specificity, optimal F-measure, positive predictive values, and negative predictive values at the optimal cutoff (averaged over folds for cross-validation). We plotted receiver operating characteristic curves using the ROCR package.31 We based our interpretation of the model on the final models learned from the entire JHH cohort because it was the larger cohort.
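The reported metrics can be computed from predicted risk scores as in the sketch below. It is not the ROCR-based code used in the study: AUC is computed directly as the probability that a readmitted case outranks a non-readmitted one (a quadratic-time formulation chosen for clarity, not speed), the F-measure is assumed to be the balanced F1, and the function names are hypothetical.

```python
def auc(scores, labels):
    """AUC as the probability that a random positive outranks a random negative;
    ties count as one half."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def metrics_at_best_f(scores, labels):
    """Sensitivity, specificity, PPV, and NPV at the cutoff that maximizes the F-measure."""
    best = None
    for cutoff in sorted(set(scores)):
        tp = sum(1 for s, l in zip(scores, labels) if s >= cutoff and l == 1)
        fp = sum(1 for s, l in zip(scores, labels) if s >= cutoff and l == 0)
        fn = sum(1 for s, l in zip(scores, labels) if s < cutoff and l == 1)
        tn = sum(1 for s, l in zip(scores, labels) if s < cutoff and l == 0)
        if tp == 0:
            continue  # F-measure is undefined without true positives
        ppv = tp / (tp + fp)
        sensitivity = tp / (tp + fn)
        f_measure = 2 * ppv * sensitivity / (ppv + sensitivity)
        if best is None or f_measure > best["f_measure"]:
            best = {
                "cutoff": cutoff,
                "f_measure": f_measure,
                "sensitivity": sensitivity,
                "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
                "ppv": ppv,
                "npv": tn / (tn + fn) if (tn + fn) else float("nan"),
            }
    return best

scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0]
toy_auc = auc(scores, labels)
best = metrics_at_best_f(scores, labels)
```

For cross-validation, these quantities would be computed on each held-out fold and then averaged, as described above.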

Results

We list basic demographic and admission characteristics for the patient cohorts from JHH and BMC in table 1. The JHH cohorts had longer lengths of stay (Wilcoxon test p value=1.6E-120), possibly because the patient population included sicker patients seen at a referral center.

Evaluation on medical patients

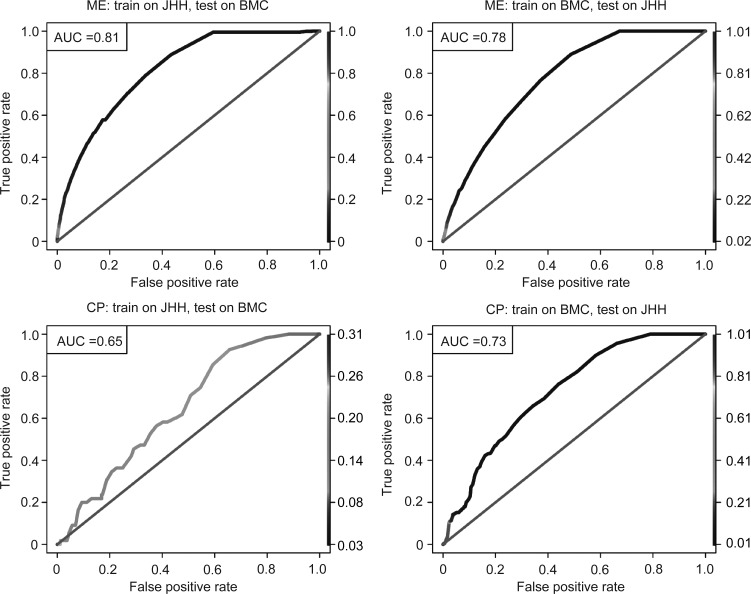

In the JHH medical patient cohort, of 26 091 indexed admissions between January 2012 and April 2013 (corresponding to 18 974 individuals), 11.5% were unplanned readmissions within 30 days of the prior discharge. From a pool of 5665 ICD-9-CM diagnoses, 599 ICD-9-CM procedures, and 1815 CPT procedures (each occurring at least once in the population), the learned model contained 18 attributes (one ICD-9-CM procedure, 16 CPT procedures, and one administrative attribute). We list the coefficient estimates and their p values for the top 10 attributes in table 3, and provide a full model in online supplementary table S2. Across-site validation on the BMC cohort had AUC=0.81 (figure 3A). We provide the model derived from the entire BMC cohort (n=16 194) in online supplementary table S3. Across-site validation on the JHH cohort had AUC=0.78 (figure 3B). In general, the algorithm showed similar performance for JHH and BMC. Across-site validations showed similar performance to within-site fivefold cross-validation (AUC=0.75±0.01 on the JHH cohort and AUC=0.79±0.02 on the BMC cohort). Other performance metrics are shown in table 4.

Table 3.

Coefficients for the top 10 attributes included in model derived from the JHH ME cohort

| Category | Code | Description | Estimate | SE | Z value | Pr(>\|z\|) |
|---|---|---|---|---|---|---|
| CPT procedure | 36430 | Transfusion, blood or blood components | 0.74 | 0.12 | 5.99 | 2.16E-09 |
| CPT procedure | J1170 | Injection, hydromorphone, up to 4 mg | 0.29 | 0.05 | 5.60 | 2.12E-08 |
| CPT procedure | 86900 | Blood typing: ABO | 0.29 | 0.05 | 5.53 | 3.15E-08 |
| CPT procedure | J7507 | Tacrolimus, oral, per 1 mg | 0.37 | 0.07 | 4.99 | 6.06E-07 |
| CPT procedure | 87086 | Culture, bacterial; quantitative colony count, urine | 0.20 | 0.04 | 4.70 | 2.61E-06 |
| CPT procedure | 96375 | Therapeutic, prophylactic, or diagnostic injection (specify substance or drug); each additional sequential intravenous push of a new substance/drug (list separately in addition to code for primary procedure) | 0.49 | 0.11 | 4.34 | 1.40E-05 |
| CPT procedure | 83605 | Lactate (lactic acid) | 0.19 | 0.05 | 4.15 | 3.34E-05 |
| CPT procedure | 64891 | Nerve graft (includes obtaining graft), single strand, hand or foot; more than 4 cm length | −1.09 | 0.30 | −3.64 | 2.70E-04 |
| CPT procedure | 83690 | Lipase | 0.17 | 0.05 | 3.34 | 8.50E-04 |
| Admission | adm_acc | 5-year accumulated number of admissions | 0.07 | 0.00 | 27.98 | 3.13E-172 |

We provide the complete model in online supplementary table S2.

Estimate, estimated coefficients; Pr(>|z|), coefficient t test p values.

CPT, Current Procedural Terminology; JHH, Johns Hopkins Hospital; ME, all patients admitted to the medical service.

Figure 3.

Receiver operating characteristic curves for across-site analyses on medical patient (ME) cohort and chronic pancreatitis (CP) cohort. (A) Training ME cohort on Johns Hopkins Hospital (JHH) and testing on Bayview Medical Center (BMC) (area under the curve (AUC)=0.81). (B) Training ME cohort on BMC and testing on JHH (AUC=0.78). (C) Training CP cohort on JHH and testing on BMC (AUC=0.65). (D) Training CP cohort on BMC and testing on JHH (AUC=0.73). Line color: threshold cutoff.

Table 4.

Model performance

| Cohort | Validation | Evaluation | F-measure | Sensitivity | Specificity | PPV | NPV | AUC |
|---|---|---|---|---|---|---|---|---|
| ME | Within-site | CV on JHH | 0.37±0.01 | 0.50±0.05 | 0.84±0.03 | 0.29±0.01 | 0.93±0.01 | 0.75±0.01 |
| ME | Within-site | CV on BMC | 0.36±0.10 | 0.50±0.05 | 0.88±0.02 | 0.28±0.02 | 0.95±0.01 | 0.79±0.02 |
| ME | Across-site | Test on BMC | 0.34 | 0.47 | 0.88 | 0.27 | 0.95 | 0.81 |
| ME | Across-site | Test on JHH | 0.34 | 0.58 | 0.76 | 0.24 | 0.93 | 0.78 |
| CP | Within-site | CV on JHH | 0.36±0.10 | 0.50±0.11 | 0.68±0.20 | 0.34±0.06 | 0.84±0.02 | 0.71±0.05 |
| CP | Within-site | CV on BMC | 0.28±0.05 | 0.56±0.19 | 0.79±0.11 | 0.20±0.06 | 0.95±0.02 | 0.65±0.05 |
| CP | Across-site | Test on BMC | 0.19 | 0.85 | 0.41 | 0.11 | 0.97 | 0.65 |
| CP | Across-site | Test on JHH | 0.38 | 0.60 | 0.71 | 0.27 | 0.91 | 0.73 |

We report sensitivity, specificity, positive predictive values (PPVs), and negative predictive values (NPVs) at the cutoff thresholds that maximize the F-measure. For cross-validation, the table shows the mean±SD of the performance metric over the folds.

AUC, area under the curve; BMC, Bayview Medical Center; CP, patients with chronic pancreatitis; JHH, Johns Hopkins Hospital; ME, all patients admitted to the medical service.
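As a consistency check on table 4, and assuming the F-measure is the balanced F1 (the harmonic mean of PPV and sensitivity; the text does not state the weighting), the across-site result for the ME model tested on BMC can be reproduced from the reported PPV and sensitivity:

$$
F = \frac{2 \cdot \mathrm{PPV} \cdot \mathrm{Sensitivity}}{\mathrm{PPV} + \mathrm{Sensitivity}}
  = \frac{2 \times 0.27 \times 0.47}{0.27 + 0.47}
  = \frac{0.2538}{0.74}
  \approx 0.34
$$

This matches the tabulated F-measure of 0.34 for that row. Small discrepancies in other rows are expected because the inputs are rounded to two decimal places.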

Of the 18 attributes in the model derived from the JHH cohort, the top predictor was the accumulated number of inpatient admissions in the past 5 years (t test p value=3.13E-172). Consistent with previous studies of readmissions,28 29 32 33 the accumulated number of prior admissions was an important predictor of early readmission.

Evaluation on CP patients

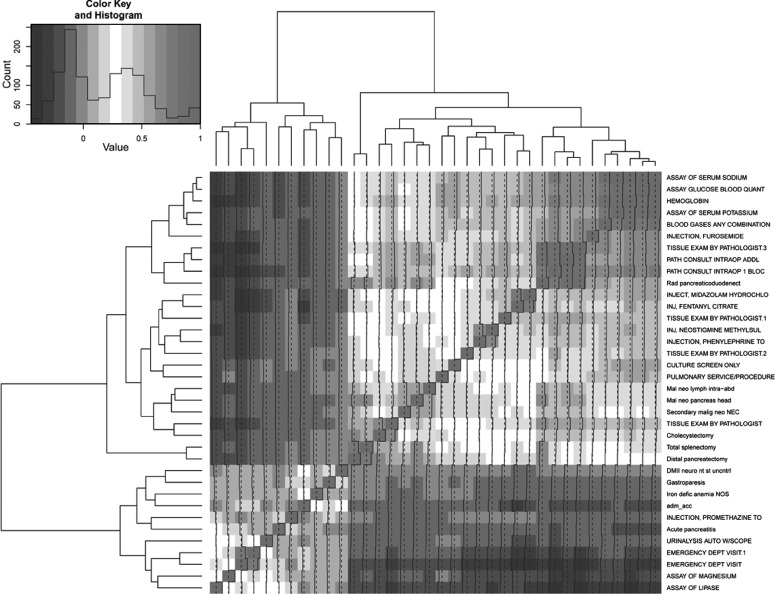

In the JHH CP cohort, of 3218 indexed admissions between January 2007 and April 2013 (corresponding to 1763 individuals), 15.6% were readmissions within 30 days of the previous discharge. There were 3696 ICD-9-CM diagnoses, 751 ICD-9-CM procedures, and 1333 CPT procedures that occurred at least once in the population, of which seven ICD-9-CM diagnoses, four ICD-9-CM procedures, 24 CPT procedures, and one administrative attribute passed univariate variable selection (we list their Spearman correlation coefficients in figure 4). The final model consisted of five attributes (one ICD-9-CM procedure, three CPT procedures, and one administrative attribute); we list their coefficient estimates and p values in table 5. Testing the model on the BMC cohort produced an AUC=0.65 (figure 3C). We provide the model derived from the BMC CP cohort (n=706) in online supplementary table S4. Testing it on the JHH cohort produced an AUC=0.73 (figure 3D). We list various performance metrics in table 4.

Figure 4.

Correlation matrix for the 37 attributes in Johns Hopkins Hospital chronic pancreatitis (CP) cohort after univariate variable selection. The matrix represents the set of attributes highly correlated with outcome. The final model contains five attributes that independently contribute to predicting outcome. Darker color corresponds to higher correlation, and lighter color corresponds to lower correlation. Diagonal entries represent self-correlation, which is always 1.0.

Table 5.

Final chronic pancreatitis readmission model learned from the JHH cohort

| Category | Code | Description | Estimate | SE | Pr(>\|z\|) |
|---|---|---|---|---|---|
| ICD-9-CM procedure | 527 | Radical pancreaticoduodenectomy | −1.301 | 0.649 | 4.50E-02 |
| CPT procedure | 82803 | Gases, blood, any combination of pH, pCO2, pO2, CO2, HCO3 (including calculated O2 saturation) | −0.138 | 0.150 | 3.56E-01 |
| CPT procedure | 83690 | Lipase | 0.652 | 0.125 | 1.78E-07 |
| CPT procedure | 88309 | Level IV - surgical pathology, gross and microscopic (colon biopsy, lymph node biopsy, colorectal polyp) | −0.818 | 0.439 | 6.26E-02 |
| Admission | adm_acc | 5-year accumulated number of admissions | 0.021 | 0.002 | 1.41E-28 |

CPT, Current Procedural Terminology; ICD-9-CM, International Classification of Diseases, 9th Revision, Clinical Modification; JHH, Johns Hopkins Hospital.

Of the five attributes in the model derived from JHH, the strongest predictor was the accumulated number of inpatient admissions over the past 5 years (t test p value=1.41E-28), the same attribute as that of medical patients. Other predictors were disease specific. The next two predictors were lipase (CPT: 83690, t test p value=1.78E-07), which is related to pancreatic enzyme analysis, and radical pancreaticoduodenectomy (ICD-9-CM procedure: 527, LRT p value=6.45E-20), a surgical procedure for total pancreatic resection. The other two attributes were arterial blood gas (CPT: 82803, t test p value=3.56E-01) and surgical pathology (CPT: 88309, t test p value=6.26E-02).

Discussion

Significance of the method

We developed an algorithm to predict hospital readmission based on standardized billing codes and basic administrative attributes that are readily accessible in most billing data. Our data-driven method was inspired by pharmacoepidemiologic studies,19 34 which identified empirical covariates such as diagnoses and medications from administrative data to adjust for confounders. We subsequently evaluated the predictive performance of the algorithm on large patient cohorts at both a tertiary and community hospital. The proposed algorithm has four significant advantages. First, it is fully automatic and can be applied to a wide range of patient cohorts, from medical patients to patients with specific diseases such as CP. Second, a type of branch and bound feature selection was applied by exploiting high-dimensional candidate attributes. No assumptions were made about the potential predictors of interest, with the algorithm automatically selecting top ranked, independent attributes specific to the given patient cohort. Such automated routines are most appropriate for fast exploitation of a large number of attributes to identify candidate risk factors and their surrogates for further detailed analysis, since little is known about the potential risk factors at this preliminary stage of discovery and there is no a priori reason to prefer one candidate attribute over another. Third, the algorithm requires only basic administrative attributes and billing codes that are readily available and standardized in billing databases across institutions. We were able to develop the model using a referral center cohort, and successfully validate it on a community hospital cohort (and vice versa) because of its easy portability. 
Although additional information that is not stored in billing records or standard electronic medical records, such as functional status or physiological status at the time of discharge,29 may add to the predictability of 30-day readmission, these measures are not universally available. We aimed to create an algorithm that was widely applicable to various settings, and therefore did not include these measures as potential attributes. Fourth, our method demonstrated fairly good discriminatory power to predict the risk of readmission in medical patients (internal validation, AUC=0.75±0.01 and 0.79±0.02; across-site validation, AUC=0.78 and 0.81). In a recent systematic review2 that summarized 14 unique prediction models for 30-day readmission, of eight models targeting medical patients, only two had an AUC >0.70,3 26 and only one had an AUC >0.75 (this study was based on a much smaller cohort with a training size of 700 patients and validation size of 704 patients; internal validation, AUC=0.77).26 The most recently published study29 on 30-day readmission in medical patients had an AUC of 0.71 via internal validation. We had hoped to compare our model with the most recent model, but we were not able to because three laboratory test values required by the model were not available in our administrative data. In summary, our model was created in a fully automatic fashion yet still showed comparable or even better performance when compared with state-of-the-art approaches that involve data not available in standard billing records.

Admittedly, there are many other machine learning techniques serving similar purposes. There are several reasons why we prefer the current approach to many others. (1) Our approach is simpler and more intuitive than other sophisticated machine learning techniques; it is important for non-statisticians such as clinicians and administrative staff to understand the method in order to adopt it. (2) Our choice of approach is also related to the administrative data we used. Unlike clinical data, which contain fine-grained detail at high accuracy, administrative data only provide coarse-grained information about a patient. Given that the documented diagnoses and procedures themselves only serve as proxies for the true conditions experienced by the patients and that our goal is fast exploitation of all attributes to identify candidate risk factors or their surrogates for further detailed clinical investigation, we believe the current approach is sufficient for pivotal discovery. (3) We compared the computation and predictive performance of our approach with the standard stepwise forward approach: our approach has lower computational complexity but similar predictive performance (using AUC as the performance metric). Since we aimed to produce an algorithm applied to high-dimensional data for cross-institutional analyses, speed is a key concern here. (4) The contribution of our study lies in the algorithmic workflow as a whole; further research can build on our proposed framework while replacing the simple approach with more sophisticated machine learning techniques.

Significance of the discovery

While the large cohort of medical patients served as a good patient population for evaluating the performance of our algorithm relative to recent readmission prediction approaches, the additional application to a cohort of patients with a specific disease known to be at high risk of readmission may allow targeted interventions to reduce early readmissions. CP was selected for evaluation for several reasons. First, there is no current prediction model available for readmission in CP patients. Second, many patients with this condition need early unplanned readmission to hospital, resulting in significant healthcare costs. Finally, the incidence of CP is increasing in the USA. During hospitalizations, gastroenterologists often guide care. For an intervention to be implemented for patients with CP, the gastroenterologist must agree that these predictors have content validity before they are likely to support a readmission intervention as part of their care. Here, we implemented the algorithm in a CP patient cohort with the aim of identifying individual-level risk factors for preventable readmission. The risk factors, together with their highly correlated proxies (figure 4), differ from those for all patients admitted to the medical service and are specific to CP patients. Our model provides validated predictors for readmission on a going-forward basis creating a foundation for identifying potential targeted interventions. For example, in our CP cohort, this could mean real-time monitoring of specific test utilization associated with disease-specific readmission (eg, lipase and blood gas) through the electronic medical record, while also advocating more aggressive follow-up in a specific sub-population (eg, patients who have had a pancreaticoduodenectomy). 
The gastroenterology co-investigators are in the process of designing an intervention that will facilitate the adoption of best practice to prevent costly readmission of CP patients identified as high risk on the basis of predictors from our model and their proxies. The intervention may include delaying discharge, scheduling early outpatient follow-up, or phone calls from or home visits by gastroenterology nurses soon after discharge. In contrast, if the patient is predicted to be at low risk of readmission, the clinician might feel comfortable discharging him or her without the need for these interventions to prevent readmission.

Study limitations

Our study has several limitations. First, models based on administrative claims data may contain coding irregularities and lack clinical details. In addition, they generally cannot be used early in the course of an initial hospitalization, limiting the time and interventions available to effectively prevent potentially avoidable readmissions, especially for admissions with a short length of stay. Second, we were not able to identify readmissions to hospitals outside of JHH and BMC because of the lack of a universal identifier shared across sites. Third, our identification of unplanned readmissions was based on clinical judgment, since there is no follow-up indicator variable available in our administrative data. It is likely that our exclusion of planned readmissions is incomplete. Fourth, our identification of a CP patient cohort based solely on ICD-9-CM diagnosis codes may not be accurate enough, and predictive performance may be compromised as a result. Except for certain medical conditions, such as hip fracture and cancer, diagnosis/procedure codes for many other medical conditions, such as peripheral vascular disease (sensitivity=0.58, positive predictive value=0.53), are of limited accuracy.35 For example, the absence of a chronic disease diagnosis code may be the result of more serious and acute illnesses that push the chronic condition off the list.34 However, we found the average diagnosis ranking of CP was 4, and our administrative data allow up to 50 diagnosis positions, suggesting that this potential limitation had minimal impact on our cohort identification. Fifth, the predictive performance of CP-specific readmission is lower than that of medical patients. 
Possible reasons may be related to cohort identification (discussed in the section above) and the small size of the validation cohort at BMC (n=706) (a significant proportion of codes that occurred in the JHH CP cohort never occurred in the BMC CP cohort, whereas this is not the case for the medical patient cohort). Another possible reason is that, whereas the CP cohort is a homogeneous population, the medical patient cohort contains various disease types. Patients with some types of disease were more likely than average to be readmitted, and surrogates of such diseases increase the predictive performance of the model, although these predictors may not be the driving underlying reason for readmission itself. Sixth, we were unable to incorporate clinical laboratory data in our model and as a result were not able to directly compare its performance with other recent approaches. Nevertheless, our model had performance characteristics that were comparable to or better than existing alternatives. Seventh, there are many other more sophisticated machine learning techniques for variable selection than our proposed approach. However, given that the administrative data only provide coarse information on the patients, and the documented diagnoses and procedures themselves only serve as proxies for the true conditions experienced by the patients, we felt that a simple intuitive approach, which would be more acceptable for clinicians and administrative staff, would suffice for our purposes. In fact, a comparison with another variable-selection method reveals similar predictive performances (see online supplementary text).
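The code mismatch described under the fifth limitation (codes observed in the JHH CP cohort that never occur in the BMC CP cohort) could be quantified with a simple set comparison. The following is a minimal sketch, not the study's own code: the function name `unseen_code_fraction`, the representation of each admission as a set of code strings, and the toy code values are all assumptions made for illustration.

```python
from typing import Iterable, Set


def unseen_code_fraction(train_admissions: Iterable[Set[str]],
                         test_admissions: Iterable[Set[str]]) -> float:
    """Fraction of distinct training-site codes that never occur at the
    validation site (hypothetical helper, not part of the original study)."""
    # Pool every code observed in any admission at each site.
    train_codes = set().union(*train_admissions)
    test_codes = set().union(*test_admissions)
    if not train_codes:
        return 0.0
    # Codes a model could learn from at one site but never see at the other.
    return len(train_codes - test_codes) / len(train_codes)


# Toy admissions (illustrative code strings only, not study data).
jhh_toy = [{"577.1", "789.00"}, {"577.1", "305.1", "52.09"}]
bmc_toy = [{"577.1"}, {"577.1", "305.1"}]

fraction = unseen_code_fraction(jhh_toy, bmc_toy)
print(f"{fraction:.2f}")  # prints 0.50: 2 of the 4 training-site codes are unseen
```

A high fraction for one cohort and a low fraction for another would be consistent with the explanation offered above, since attributes built on codes absent from the validation site cannot contribute to prediction there.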

Conclusion

This study presents a branch and bound algorithm for predicting 30-day readmission using administrative claims data. Through exploitation of high-dimensional yet universally available information in claims, the algorithm is widely applicable to various patient cohorts across both tertiary and community-based hospital centers, with good performance characteristics. In summary, the advantage of the algorithm is its ability to maintain performance while maximizing portability and adaptability. Future application of this approach could include the use of nationally available data such as Medicaid and Medicare billing records for cross-institution analyses.

Acknowledgments

The authors wish to acknowledge and thank Harold Lehmann for his helpful suggestions on this study, and Eric Vohr for his editorial assistance with the manuscript.

Footnotes

Contributors: Study initialization: SH, ANK, DH, and SCM. Study design: DH and SH. Analysis and interpretation of data: DH, SH, and SCM. Drafting of the manuscript: DH, SH, SCM, and ANK. All authors contributed to refinement of the manuscript and approved the final manuscript.

Funding: The study was funded from departmental resources.

Competing interests: None.

Ethics approval: The Johns Hopkins Medicine Institutional Review Board approved this study (IRB#: NA_00082734).

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Ferver K, Burton B, Jesilow P. The use of claims data in healthcare research. Open Public Health J 2009;2:11–24

- 2. Kansagara D, Englander H, Salanitro A, et al. Risk prediction models for hospital readmission. JAMA 2011;306:1688–98

- 3. Halfon P, Eggli Y, Prêtre-Rohrbach I, et al. Validation of the potentially avoidable hospital readmission rate as a routine indicator of the quality of hospital care. Med Care 2006;44:972–81

- 4. Bottle A, Aylin P, Majeed A. Identifying patients at high risk of emergency hospital admissions: a logistic regression analysis. JRSM 2006;99:406–14

- 5. Howell S, Coory M, Martin J, et al. Using routine inpatient data to identify patients at risk of hospital readmission. BMC Health Serv Res 2009;9:96

- 6. Krumholz HM, Wang Y, Mattera JA, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with an acute myocardial infarction. Circulation 2006;113:1683–92

- 7. Zhan C, Miller M. Administrative data based patient safety research: a critical review. Qual Saf Health Care 2003;12(Suppl 2):ii58–63

- 8. Rosen AK, Rivard P, Zhao S, et al. Evaluating the patient safety indicators: how well do they perform on Veterans Health Administration data? Med Care 2005;43:873–84

- 9. Pine M, Jordan HS, Elixhauser A, et al. Enhancement of claims data to improve risk adjustment of hospital mortality. JAMA 2007;297:71–6

- 10. Klabunde CN, Warren JL, Legler JM. Assessing comorbidity using claims data: an overview. Med Care 2002;40:IV

- 11. Hebert PL, Geiss LS, Tierney EF, et al. Identifying persons with diabetes using Medicare claims data. Am J Med Qual 1999;14:270–7

- 12. Winkelmayer WC, Schneeweiss S, Mogun H, et al. Identification of individuals with CKD from Medicare claims data: a validation study. Am J Kidney Dis 2005;46:225–32

- 13. Losina E, Barrett J, Baron JA, et al. Accuracy of Medicare claims data for rheumatologic diagnoses in total hip replacement recipients. J Clin Epidemiol 2003;56:515

- 14. Freeman JL, Zhang D, Freeman DH, et al. An approach to identifying incident breast cancer cases using Medicare claims data. J Clin Epidemiol 2000;53:605

- 15. Mahajan R, Moorman AC, Liu SJ, et al. Use of the International Classification of Diseases, 9th revision, coding in identifying chronic hepatitis B virus infection in health system data: implications for national surveillance. J Am Med Inform Assoc 2013;20:441–5

- 16. Crystal S, Akincigil A, Bilder S, et al. Studying prescription drug use and outcomes with Medicaid claims data: strengths, limitations, and strategies. Med Care 2007;45(10 Suppl):S58

- 17. Laine C, Lin Y-T, Hauck WW, et al. Availability of medical care services in drug treatment clinics associated with lower repeated emergency department use. Med Care 2005;43:985–95

- 18. Du X, Goodwin JS. Patterns of use of chemotherapy for breast cancer in older women: findings from Medicare claims data. J Clin Oncol 2001;19:1455–61

- 19. Schneeweiss S, Rassen JA, Glynn RJ, et al. High-dimensional propensity score adjustment in studies of treatment effects using health care claims data. Epidemiology 2009;20:512

- 20. Jacobson G, Neuman T, Damico A. Medicare Spending and Use of Medical Services for Beneficiaries in Nursing Homes and Other Long-Term Care Facilities. 2010. http://kaiserfamilyfoundation.files.wordpress.com/2013/01/8109.pdf

- 21. Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med 2009;360:1418–28

- 22. Goldfield NI, McCullough EC, Hughes JS, et al. Identifying potentially preventable readmissions. Health Care Financ Rev 2008;30:75

- 23. van Walraven C, Bennett C, Jennings A, et al. Proportion of hospital readmissions deemed avoidable: a systematic review. CMAJ 2011;183:E391–402

- 24. Medicare Payment Advisory Commission (MedPAC). Report to the Congress: promoting greater efficiency in Medicare. 2007

- 25. Marks E. Complexity science and the readmission dilemma: comment on “Potentially Avoidable 30-Day Hospital Readmissions in Medical Patients” and “Association of Self-reported Hospital Discharge Handoffs With 30-Day Readmissions”. JAMA Intern Med 2013;173:629–31

- 26. Coleman EA, Min SJ, Chomiak A, et al. Posthospital care transitions: patterns, complications, and risk identification. Health Serv Res 2004;39:1449–66

- 27. Amarasingham R, Moore BJ, Tabak YP, et al. An automated model to identify heart failure patients at risk for 30-day readmission or death using electronic medical record data. Med Care 2010;48:981–8

- 28. van Walraven C, Dhalla IA, Bell C, et al. Derivation and validation of an index to predict early death or unplanned readmission after discharge from hospital to the community. CMAJ 2010;182:551–7

- 29. Donzé J, Aujesky D, Williams D, et al. Potentially Avoidable 30-Day Hospital Readmissions in Medical Patients: Derivation and Validation of a Prediction Model. JAMA Intern Med 2013;173:632–8

- 30. Yadav D, Muddana V, O'Connell M. Hospitalizations for Chronic Pancreatitis in Allegheny County, Pennsylvania, USA. Pancreatology 2011;11:546–52

- 31. Sing T, Sander O, Beerenwinkel N, et al. ROCR: visualizing classifier performance in R. Bioinformatics 2005;21:3940–1

- 32. Hasan O, Meltzer DO, Shaykevich SA, et al. Hospital readmission in general medicine patients: a prediction model. J Gen Intern Med 2010;25:211–19

- 33. Billings J, Dixon J, Mijanovich T, et al. Case finding for patients at risk of readmission to hospital: development of algorithm to identify high risk patients. BMJ 2006;333:327

- 34. Brookhart MA, Stürmer T, Glynn RJ, et al. Confounding control in healthcare database research: challenges and potential approaches. Med Care 2010;48:S114

- 35. Fisher ES, Whaley FS, Krushat WM, et al. The accuracy of Medicare's hospital claims data: progress has been made, but problems remain. Am J Public Health 1992;82:243–8
