Summary

People with autism spectrum disorder (ASD) show abnormal processing of faces. A range of morphometric, histological, and neuroimaging studies suggest the hypothesis that this abnormality may be linked to the amygdala. Here for the first time we recorded from single neurons within the amygdalae of two rare neurosurgical patients with ASD. While basic electrophysiological response parameters were normal, there were specific and striking abnormalities in how individual facial features drove neuronal response. Compared to control patients, a population of neurons in the two ASD patients responded significantly more to the mouth, but less to the eyes. Moreover, we found a second class of face-responsive neurons whose responses to faces appeared normal. The findings confirm the amygdala’s pivotal role in abnormal face processing by people with ASD at the cellular level, and suggest that dysfunction may be traced to a specific subpopulation of neurons with altered selectivity for the features of faces.

Introduction

Social dysfunction is one of the core diagnostic criteria for autism spectrum disorders (ASD), and is also the most consistent finding from cognitive neuroscience studies (Chevallier et al., 2012; Gotts et al., 2012; Losh et al., 2009; Philip et al., 2012). Although there is evidence for global dysfunction at the level of the whole brain in ASD (Amaral et al., 2008; Anderson et al., 2010; Dinstein et al., 2012; Geschwind and Levitt, 2007; Piven et al., 1995), several studies emphasize abnormalities in the amygdala both morphometrically (Ecker et al., 2012) and in terms of functional connectivity (Gotts et al., 2012). Yet all functional data thus far come from studies that have used neuroimaging or EEG, leaving important open questions about their precise source and neuronal underpinnings. We capitalized on the comorbidity between epilepsy and ASD (Sansa et al., 2011), together with the ability to record from clinically implanted depth electrodes in patients with epilepsy who are candidates for neurosurgical temporal lobectomy. This gave us the opportunity to record intracranially from the amygdala in two rare neurosurgical patients who had medically refractory epilepsy, but who also had a diagnosis of ASD, comparing their data to those obtained from eight control patients who also had medically refractory epilepsy and depth electrodes in the amygdala, but who did not have a diagnosis of ASD (see Tables S1 and S2 for characterization of all the patients).

Perhaps the best studied aspect of abnormal social information processing in ASD is face processing. People with ASD show abnormal fixations onto (Kliemann et al., 2010; Klin et al., 2002; Neuman et al., 2006; Pelphrey et al., 2002; Spezio et al., 2007b), and processing of (Spezio et al., 2007a), the features of faces. A recurring pattern across studies is the failure to fixate, and to extract information from, the eye region of faces in ASD. Instead, at least when high-functioning, people with ASD may compensate by making exaggerated use of information from the mouth region of the face (Neuman et al., 2006; Spezio et al., 2007a), a pattern also seen, albeit less prominently, in their first-degree relatives (Adolphs et al., 2008). Such compensatory strategies may also account for the variable and often subtle impairments that have been reported regarding recognition of emotions from facial expressions in ASD (Harms et al., 2010; Kennedy and Adolphs, 2012).

These behavioral findings are complemented by findings of abnormal activation of the amygdala in neuroimaging studies of ASD (Dalton et al., 2005; Kleinhans et al., 2011; Kliemann et al., 2012), an anatomical link also supported by results from genetic relatives (Dalton et al., 2007). Furthermore, neurological patients with focal bilateral amygdala lesions show intriguing parallels to the pattern of facial feature processing seen in ASD, also failing to fixate and use the eye region of the face (Adolphs et al., 2005). The link between the amygdala and fixation onto the eye region of faces (Dalton et al., 2005; Kleinhans et al., 2011; Kliemann et al., 2012) is also supported by a correlation between amygdala volume and eye fixation in studies of monkeys (Zhang et al., 2012), and by neuroimaging studies in healthy participants that have found correlations between the propensity to make a saccade towards the eye region and BOLD signal in the amygdala (Gamer and Buechel, 2009). The amygdala’s role in face processing is clearly borne out also by electrophysiological data: single neurons in the amygdala respond strongly to images of faces, in humans (Fried et al., 1997; Rutishauser et al., 2011) as in monkeys (Gothard et al., 2007; Kuraoka and Nakamura, 2007).

The amygdala’s possible contribution to ASD is supported by a large literature showing structural and histological abnormalities (Amaral et al., 2008; Bauman and Kemper, 1985; Ecker et al., 2012; Schumann and Amaral, 2006; Schumann et al., 2004) as well as atypical activation across BOLD-fMRI studies (Gotts et al., 2012; Philip et al., 2012). Yet despite this wealth of data linking ASD, the amygdala, and abnormal social processing, data broadly consistent with long-standing hypotheses about the amygdala’s contribution to social dysfunction in autism (Baron-Cohen et al., 2000), no study has yet examined the amygdala in ASD at the level of single neurons. Filling this gap is important for several reasons. First and foremost, one would like to confirm that the prior observations translate into abnormal electrophysiological responses from neurons within the amygdala, rather than constituting an epiphenomenon arising from altered inputs due to more global dysfunction, or from structural abnormalities without any clear functional consequence. Moreover, such findings should yield a deeper understanding of precisely which functional abnormalities can be attributed to the amygdala: are there nonspecific electrophysiological deviations, or is the dysfunction specific to processing faces? Are all neurons dysfunctional, or are some populations abnormal whereas others are not? Answers at this level of analysis would help considerably in constraining the interpretation of neuroimaging studies, would allow the formulation of more precise hypotheses about the amygdala’s putative role in social dysfunction in autism, and would better leverage animal models of autism that can be investigated at the cellular level.

Here for the first time we recorded from single neurons in the amygdalae of two rare neurosurgical patients with ASD. Basic electrophysiological response parameters as well as overall responsiveness to faces were comparable to responses recorded from a control patient group without ASD. However, there were specific differences in how individual facial features drove neuronal responses: neurons in the two ASD patients responded significantly more to the mouth, but less to the eyes. Additional analyses showed that the findings could not be attributed to differential fixations onto the stimuli, nor to differential task difficulty, but that they did correlate with behavioral use of facial features to make emotion judgments.

Results

Basic electrophysiological characterization and task performance

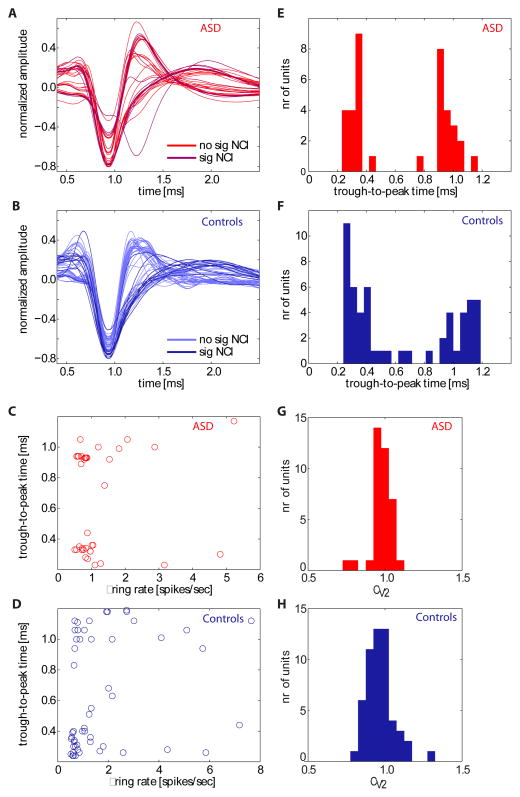

We isolated a total of 144 amygdala neurons from neurosurgical patients who had chronically implanted clinical-research hybrid depth electrodes in the medial temporal lobe (see Figure 1 and Figure S1 for localization of all recording sites within the amygdala). Recordings were mostly from the basomedial and basolateral nucleus of the amygdala (see Methods for details). We further considered only those units with firing rate ≥0.5 Hz (n = 91 in total, 37 from the patients with ASD). Approximately half the neurons (n = 42 in total, 19 from the patients with ASD) responded significantly to faces or parts thereof, whereas only 14% responded to a preceding “scramble” stimulus compared to baseline (Supplementary Tables S3 and S4; cf. Figure 3A for stimulus design). Waveforms and inter-spike interval distributions looked indistinguishable between neurons recorded from the ASD patients and controls (Fig. 2). To characterize basic electrophysiological signatures more objectively, we quantified the trough-to-peak time for each mean waveform of each neuron that was included in our subsequent analyses (Fig. 2 and Methods), a variable whose distribution was significantly bimodal with peaks around 0.4 and 1 ms (Hartigan’s dip test, P<1e-10) for neurons in both subject groups, consistent with prior human recordings (Viskontas et al., 2007). The distribution of trough-to-peak times was statistically indistinguishable between the two subject groups (Kolmogorov-Smirnov test, P = 0.16). We quantified the variability of the spike times of each cell using a burst index and a modified coefficient-of-variation (CV2) measure and found no significant differences in either measure when comparing neurons between the two subject groups (paired t-tests, Ps>0.05, see Supplementary Table S5). 
Similarly, measures of the variability of the spiking response following stimulus onset (see Methods) did not differ between cells recorded in ASD patients and controls (mean CV 1.02±0.04 in ASD vs. 0.93±0.04 in controls, P>0.05). Basic electrophysiological parameters characterizing spikes thus appeared typical in our two patients with ASD.
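
Two of the spike measures mentioned above can be sketched in a few lines of Python. The CV2 function uses the commonly cited definition of Holt et al. (1996), averaged over all adjacent interval pairs; the trough-to-peak helper assumes a single mean waveform sampled at a known rate `fs_hz` (an assumed parameter). These are illustrative sketches under those assumptions, not the analysis code used in this study; the burst index is omitted because its definition is not given in this section.

```python
from statistics import mean


def cv2(spike_times):
    """Mean CV2 of a spike train (needs at least 3 spikes).

    For each pair of adjacent inter-spike intervals (ISIs):
        CV2_i = 2 * |ISI_{i+1} - ISI_i| / (ISI_{i+1} + ISI_i)
    A Poisson-like train gives a mean near 1; a perfectly regular train gives 0.
    """
    isis = [b - a for a, b in zip(spike_times, spike_times[1:])]
    return mean(2 * abs(b - a) / (b + a) for a, b in zip(isis, isis[1:]))


def trough_to_peak_ms(waveform, fs_hz):
    """Time (ms) from the waveform minimum (trough) to the following maximum (peak).

    fs_hz is the sampling rate of the waveform (an assumed input).
    """
    trough = min(range(len(waveform)), key=waveform.__getitem__)
    tail = waveform[trough:]
    peak = trough + max(range(len(tail)), key=tail.__getitem__)
    return 1000.0 * (peak - trough) / fs_hz


# Hypothetical inputs, for illustration only.
print(cv2([0, 10, 20, 30]))                              # 0.0 (regular train)
print(cv2([0, 1, 4, 5, 8]))                              # 1.0 (ISIs alternate 1, 3)
print(trough_to_peak_ms([0, -5, -2, 3, 1], fs_hz=1000))  # 2.0
```

CV2 compares only neighbouring intervals, which makes it less sensitive than the ordinary coefficient of variation to slow drifts in firing rate over a recording.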

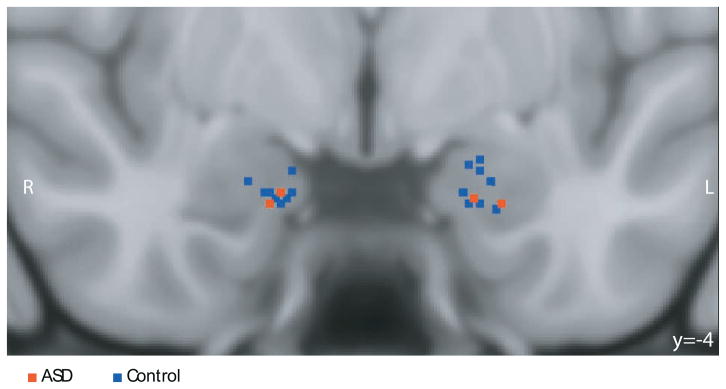

Figure 1.

Recording sites. Shown is a coronal MRI scan in MNI space showing the superposition of recording sites from the two patients with ASD (red) and controls (blue); image in radiological convention so that the left side of the brain is on the right side of the image. Recording locations were identified from the post-implantation structural MRI scans with electrodes in situ in each patient and coregistered to the MNI template brain (see Methods). The image projects all recording locations onto the same A-P plane (y = -4) for the purpose of the illustration. Detailed anatomical information for each recording site is provided in Supplementary Figure S1.

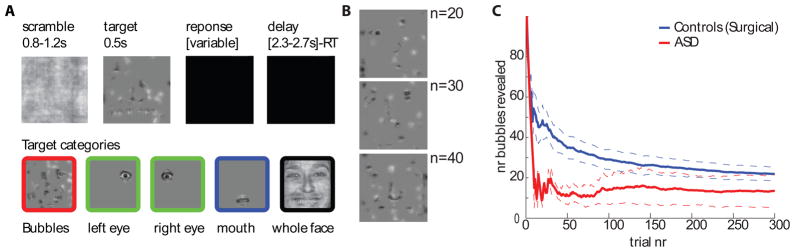

Figure 3.

Task design and behavioral performance. (A) Timeline of stimulus presentation and the different types of stimuli used. (B) Examples of bubbles stimuli with varying proportions of the face revealed (n is the number of bubbles, same scale as in C). (C) Performance (number of bubbles required to maintain 80% accuracy) over time. Dotted lines are s.e.m. See related Supplementary Figure S2.

Figure 2.

Electrophysiological properties of neurons in ASD patients and controls. (A,B) Mean spike waveforms (n = 37 units for ASD; n = 54 units for controls), shown separately for cells that have significant neuronal classification images (sig NCI) and those that do not (no sig NCI; see Figure 5). (C,D) Relationship between mean firing rate and trough-to-peak time. (E,F) Trough-to-peak times of the waveforms shown in (A,B). (G,H) Coefficient of variation (CV2). There was no significant correlation between trough-to-peak time and mean firing rate (P>0.05 in both groups), and CV2 was centered at 1 in both groups.

To investigate face processing we used an unbiased approach in which randomly sampled pieces of emotional faces (“bubbles”; Gosselin and Schyns, 2001) were shown to participants, who pressed a button to indicate whether the stimulus looked happy or fearful; we have previously used this technique to demonstrate that the amygdala is essential for processing information about the eye region in faces (Adolphs et al., 2005). Complementing this data-driven approach, we also showed participants specific cutouts of the eyes and mouth, as well as the whole faces from which all these were derived (Fig. 3A). The difficulty of the bubbles task was continuously controlled by adjusting the extent to which the face was revealed so as to achieve a target performance of 80% correct (Fig. 3B shows examples). As subjects improved, less of the face was revealed (as quantified by the number of bubbles), keeping the task equally difficult for all subjects. The two patients with ASD performed well on the task (Fig. 3C): to maintain the target accuracy they required, if anything, fewer bubbles than the controls (number of bubbles on the last trial was 13.5±8.5 and 20.0±2.8, respectively; see Fig. S2A for individual subjects). Similarly, reaction times (RTs) did not differ significantly between patients with ASD and controls (relative to stimulus onset across all bubbles trials, 1357±346 ms and 1032±49 ms, respectively; P = 0.28, two-tailed t-test), although one of the ASD patients did have slower RTs than any of the other participants (see Fig. S2B for individual subjects). Thus, overall behavioral performance of the patients with ASD did not appear impaired.
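
The section does not state which adaptive rule held accuracy at 80%. One standard way to do this is a weighted up/down staircase (Kaernbach, 1991), sketched below as an assumption rather than as the procedure actually used: after a correct response one bubble is removed, and after an error 0.8/0.2 = 4 bubbles are added, so the number of bubbles stops drifting only where accuracy is 80%. The observer's psychometric function in `simulate` is entirely hypothetical.

```python
import math
import random


def update_bubbles(n_bubbles, correct, step=1.0, target=0.80, n_min=1.0):
    """One step of a weighted up/down staircase (assumed rule, for illustration).

    Correct response -> fewer bubbles (harder); error -> more bubbles (easier).
    The 'up' step is scaled by target / (1 - target), i.e. about 4 for 80%, so the
    expected change in n_bubbles is zero exactly when accuracy equals the target.
    """
    if correct:
        return max(n_min, n_bubbles - step)
    return n_bubbles + step * target / (1.0 - target)


def simulate(n_trials=2000, n_start=20.0, seed=1):
    """Run the staircase against a hypothetical observer; return (accuracy, trace)."""
    rng = random.Random(seed)
    n, n_correct, trace = n_start, 0, []
    for _ in range(n_trials):
        # Hypothetical psychometric function: chance (0.5) with no face revealed,
        # approaching 1.0 as more of the face is revealed.
        p_correct = 0.5 + 0.5 * (1.0 - math.exp(-n / 10.0))
        correct = rng.random() < p_correct
        n_correct += correct
        n = update_bubbles(n, correct)
        trace.append(n)
    return n_correct / n_trials, trace


accuracy, trace = simulate()
print(f"accuracy = {accuracy:.3f}, final number of bubbles = {trace[-1]:.0f}")
```

Because accuracy is pinned near the target, a better observer shows up as a lower number of bubbles rather than as a higher percent correct, which is why Fig. 3C plots bubbles over time.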

Neurons in the primate amygdala are known to respond to faces (Fried et al., 1997; Gothard et al., 2007; Kuraoka and Nakamura, 2007), and in our prior work a subpopulation of about 20% of all amygdala neurons responded more to whole faces than to any of their parts (“whole-face selective”; Rutishauser et al., 2011). Using the same published metrics, we here found that about a third of the neurons in each group qualified as whole-face selective (WF): 12/37 in the ASD group and 17/54 in the control group. To further establish that the strength of face selectivity did not differ between the two subject groups, we quantified whole-face response strength relative to the response to face parts using the whole-face index (WFI, see Methods), which quantifies the difference in firing rate between whole faces and parts (bubbles) (Rutishauser et al., 2011). The average WFI for all units recorded in the ASD patients and controls was 19.1%±2.3% (n = 37) and 23.0%±4.5% (n = 54), respectively, which did not differ significantly (two-tailed t-test, P = 0.45). The average WFI for units classified as WF cells was 49.3±12.1% for ASD (n = 12) and 60.2±12.4% for controls (n = 17) (not significantly different, P = 0.55, two-tailed t-test). WF cells did not differ in their basic electrophysiological properties between ASD and controls: there was no significant difference in waveform shape, burst index or CV2 (see Supplementary Table S5). Thus, there was no significant difference in the whole-face selectivity of amygdala neurons between our two ASD subjects and controls. Together with the comparable basic electrophysiological properties described above, this provides a common background against which to interpret the striking differences we describe next.
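
As a rough illustration of the WFI comparison, the sketch below assumes a hypothetical WFI defined as the percent change of the mean firing rate to whole faces relative to the mean rate to bubbles; the exact normalization used in the study is given in its Methods and in Rutishauser et al. (2011) and may differ. The group comparison is a standard two-sample Student t statistic with pooled variance; the two-tailed P-value then follows from a t distribution with the returned degrees of freedom.

```python
import math
from statistics import mean, stdev


def whole_face_index(rates_whole, rates_parts):
    """HYPOTHETICAL WFI: percent change of the mean rate to whole faces relative to
    the mean rate to face parts (bubbles). The normalization is an assumption."""
    return 100.0 * (mean(rates_whole) - mean(rates_parts)) / mean(rates_parts)


def student_t(a, b):
    """Two-sample Student t statistic (pooled variance) and its degrees of freedom."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    t = (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / na + 1 / nb))
    return t, na + nb - 2


# Hypothetical firing rates (Hz), for illustration only.
print(whole_face_index([6, 6], [4, 4]))   # 50.0
print(student_t([1, 2, 3], [4, 5, 6]))    # t of about -3.67 with 4 df
```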

Abnormal processing of the eye region of faces

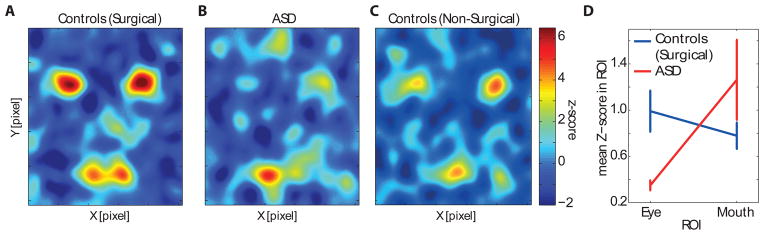

The “bubbles” method allows the extraction of a classification image that describes how specific (but randomly sampled) regions of a face drive a dependent measure (Adolphs et al., 2005; Gosselin and Schyns, 2001; Spezio et al., 2007a); here we considered two such measures, behavioral and neural. The behavioral classification image (BCI) depicts the facial information that influences behavioral performance in the task. It is based on the correlation between the trial-by-trial appearance of any part of the face and the RT and accuracy on that trial, calculated across all pixels and all trials (Gosselin and Schyns, 2001). The BCI showed that subjects with ASD selectively failed to make use of the eye region of faces, relying almost exclusively on the mouth (Fig. 4B,D and Supplementary Figure S2C,D), a behavioral pattern typical of people with ASD (Spezio et al., 2007a) and one which clearly distinguishes our two ASD patients from the controls (2-way ANOVA of subject group (ASD/control) by ROI (eye/mouth) showed a significant interaction; F(1,16)=6.0, P = 0.026; Fig. 4D).
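
The logic of a behavioral classification image can be sketched as follows, under simplifying assumptions: each trial's mask is a sum of Gaussian apertures at random locations (clipped at 1), and the image is the per-pixel Pearson correlation, across trials, between how much that pixel was revealed and accuracy on that trial. RT, which the study also used, is left out, and the image size, bubble width, trial count and simulated observer are all hypothetical.

```python
import math
import random


def bubbles_mask(h, w, centers, sigma):
    """Sum of Gaussian apertures ('bubbles') at the given (row, col) centers,
    clipped at 1: 1 = pixel fully revealed, 0 = fully hidden."""
    return [[min(1.0, sum(math.exp(-((r - cr) ** 2 + (c - cc) ** 2) / (2 * sigma ** 2))
                          for cr, cc in centers))
             for c in range(w)]
            for r in range(h)]


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy) if sxx > 0 and syy > 0 else 0.0


def classification_image(masks, outcome):
    """Per-pixel correlation, across trials, between how much a pixel was revealed
    and the trial outcome (here accuracy: 1 = correct, 0 = error)."""
    h, w = len(masks[0]), len(masks[0][0])
    return [[pearson([m[r][c] for m in masks], outcome) for c in range(w)]
            for r in range(h)]


# Hypothetical observer: correct whenever pixel (5, 5) is revealed, else guesses.
rng = random.Random(0)
H = W = 20
masks, outcome = [], []
for _ in range(300):
    centers = [(rng.uniform(0, H - 1), rng.uniform(0, W - 1)) for _ in range(5)]
    m = bubbles_mask(H, W, centers, sigma=2.0)
    masks.append(m)
    outcome.append(1 if m[5][5] > 0.3 or rng.random() < 0.5 else 0)

bci = classification_image(masks, outcome)
peak = max(((r, c) for r in range(H) for c in range(W)), key=lambda p: bci[p[0]][p[1]])
print("BCI peak at", peak)
```

Since bubble locations are random and independent of the task, any pixel whose visibility reliably co-varies with performance must carry information the observer actually uses; for a simulated observer who relies on one location, the image peaks at or next to that location.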

Figure 4.

Average behavioral classification images (BCIs) for (A) surgical controls (n = 8), (B) ASD patients (n = 2), and (C) an independent group of healthy, non-surgical controls for comparison (n = 6). The colorscale shown is valid for (A-C). (D) 2-way ANOVA of group (ASD/epilepsy control) versus ROI (eye/mouth) showed a significant interaction (P<0.05). Error bars are S.E.M. See related Supplementary Figure S2.

To understand what facial features were driving neuronal responses, we next computed a neuronal classification image (NCI) that depicts which features of faces were potent in modulating spike rates for a given neuron (the spike-rate-derived analog of the BCI). For each bubbles trial, we counted the number of spikes in a 1.5 s window beginning 100 ms after stimulus onset, and correlated this count with the parts of the face revealed in the stimulus shown (the locations of bubbles on the face, see Methods). This procedure yields one NCI for each neuron, summarizing the regions of the face most potent in driving its response.
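
The spike-counting step above can be sketched as follows. The 100 ms offset and 1.5 s window come from the text. Converting each per-pixel correlation to an approximate Z-score with the Fisher transform, Z = atanh(r)·sqrt(n − 3), is an assumption made here for illustration; the study's own Z-scoring is described in its Methods. The 3×3 "face" and the simulated neuron are hypothetical.

```python
import math
import random

WINDOW_START_S = 0.100  # counting window starts 100 ms after stimulus onset
WINDOW_LEN_S = 1.5      # and lasts 1.5 s


def spike_count(spike_times, onset):
    """Number of spikes in [onset + 0.1 s, onset + 1.6 s)."""
    lo = onset + WINDOW_START_S
    hi = lo + WINDOW_LEN_S
    return sum(1 for t in spike_times if lo <= t < hi)


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy) if sxx > 0 and syy > 0 else 0.0


def neuronal_classification_image(masks, spike_times, onsets):
    """Per-pixel correlation between pixel visibility and spike count, expressed as
    an approximate Z-score via the Fisher transform (assumed): atanh(r) * sqrt(n - 3)."""
    counts = [spike_count(spike_times, t0) for t0 in onsets]
    n = len(counts)
    h, w = len(masks[0]), len(masks[0][0])
    image = []
    for r in range(h):
        row = []
        for c in range(w):
            rho = pearson([m[r][c] for m in masks], counts)
            rho = max(-0.999999, min(0.999999, rho))  # keep atanh finite
            row.append(math.atanh(rho) * math.sqrt(n - 3))
        image.append(row)
    return image


# Hypothetical neuron driven by pixel (1, 1) of a 3x3 "face"; 40 trials 10 s apart.
rng = random.Random(0)
onsets = [10.0 * k for k in range(40)]
masks, spikes = [], []
for t0 in onsets:
    m = [[rng.random() for _ in range(3)] for _ in range(3)]
    masks.append(m)
    spikes += [t0 + 0.2 + 0.01 * i for i in range(round(10 * m[1][1]))]
nci = neuronal_classification_image(masks, spikes, onsets)
```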

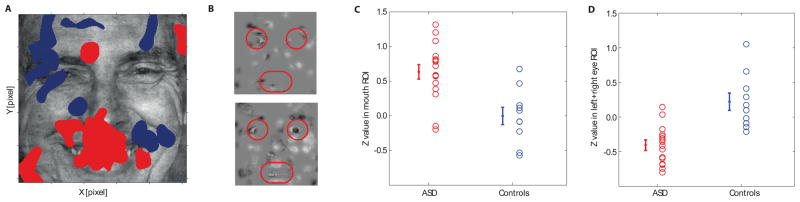

We found statistically significant NCIs in 26 of the 91 neurons: 43% (16/37) in ASD and 19% (10/54) in controls (thresholded at P<0.05, corrected cluster test with t=2.3, minimal cluster size 748 pixels; Supplementary Table S3; see Supplementary Figure S3 for single-unit examples). Strikingly, the significant NCIs in the two patients with ASD were located predominantly around the mouth region of the face, whereas those in the controls notably included the eye region (Fig. 5A; Supplementary Figure S3). We quantified the mean difference in NCI Z-scores within eye and mouth regions for all neurons with significant NCIs using a region-of-interest (ROI) approach (Fig. 5B shows the ROIs used). The mean Z-score from the NCIs of the neurons of the two patients with ASD within the mouth ROI was significantly larger than that in the controls (Fig. 5C, P<0.0001, two-tailed t-tests throughout unless otherwise noted) and vice versa for the eye ROIs (Fig. 5D, P<0.0001), an overall pattern also confirmed by a significant interaction between face region and patient group (2×2 ANOVA of subject group (ASD/control) by face region (eyes ROI/mouth ROI); F(2,68) = 14.3, P<0.00001; note this ANOVA controls for different cell numbers in different subjects using a nested random factor within the subject group factor). For the group of cells with significant NCIs, the proportion of neurons that had a higher mean Z-score within the eye ROI than within the mouth ROI was significantly smaller in ASD than in controls (6.25% vs. 60%, P=0.0026, χ2 test). In contrast, this proportion was not significantly different when considering only the neurons that did not have a significant NCI (P=0.26, χ2 test).
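
The cluster-size criterion can be illustrated with a small sketch: pixels with Z above the cluster-forming threshold (2.3) are grouped into connected clusters, and only clusters of at least 748 pixels are kept. Two details are assumptions here: 4-connectivity is used for "connected", and the 748-pixel minimum is simply taken as given (in a corrected cluster test it would be derived so that the family-wise P<0.05).

```python
from collections import deque


def cluster_threshold(z_img, z_thresh=2.3, min_size=748):
    """Keep only supra-threshold pixels that belong to a large enough cluster.

    Clusters are 4-connected sets of pixels with Z > z_thresh (connectivity assumed).
    Returns (thresholded image, sizes of the clusters that survived).
    """
    h, w = len(z_img), len(z_img[0])
    seen = [[False] * w for _ in range(h)]
    keep = [[0.0] * w for _ in range(h)]
    sizes = []
    for r0 in range(h):
        for c0 in range(w):
            if seen[r0][c0] or z_img[r0][c0] <= z_thresh:
                continue
            # Breadth-first search collects one connected supra-threshold cluster.
            cluster, queue = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                cluster.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < h and 0 <= cc < w and not seen[rr][cc]
                            and z_img[rr][cc] > z_thresh):
                        seen[rr][cc] = True
                        queue.append((rr, cc))
            if len(cluster) >= min_size:
                sizes.append(len(cluster))
                for r, c in cluster:
                    keep[r][c] = z_img[r][c]
    return keep, sizes


# Toy image with a 3-pixel cluster and an isolated pixel; min_size scaled down.
toy = [[3, 3, 0, 0],
       [3, 0, 0, 3],
       [0, 0, 0, 0]]
kept, sizes = cluster_threshold(toy, min_size=3)
print(sizes)  # [3]: the isolated pixel at (1, 3) is discarded
```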

Figure 5.

Neuronal classification images (NCIs). (A) Overlay of all significant NCIs (red: ASD; blue: controls, all NCIs thresholded at cluster test P<0.05 corrected; see Supplementary Figure S3 for individual NCIs and their overlap). (B) Example bubble face stimuli with the ROIs used for analysis indicated in red. (C,D) Quantification of individual unthresholded Z-scores for all the significant NCIs shown in (A) calculated within the mouth and eye ROIs. The overlap with the mouth ROI was significantly larger in the ASD group compared to the controls (C, P<0.0008), whereas the overlap with eye ROIs was significantly smaller for the ASD group (D, P<0.0001). All P-values from two-tailed t-tests.

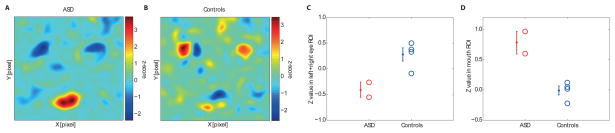

Our analyses used experimenter-defined ROIs in order to probe specific facial features. How sensitive is this analysis to the choice of ROIs? As a complementary analysis, we instead used the continuous z-scored behavioral classification image obtained from the independent group of healthy nonsurgical subjects tested on the same task during eye tracking in the laboratory. This image (Fig. 4C) highlights the eyes and mouth, similar to our previous ROI analyses, but does so in a continuous manner that directly reflects the strength with which these regions normally drive behavioral emotion discrimination. We compared the significant NCIs obtained from each patient (Fig. 5A) with this behavioral classification image (Fig. 4C) by pixel-wise correlation. This analysis highlights the impaired neuronal feature selectivity in the patients with ASD: whereas the correlation was large and positive within the eye region for the controls, it was absent or negative for the patients with ASD (Fig. 6, see legend for statistics and Supplementary Figure S4 for individual subjects), paralleling their abnormal behavioral classification image. Essentially the same pattern of results was obtained when we instead used the behavioral classification image derived from the surgical control patients without ASD (Fig. 4A rather than Fig. 4C) as the continuous behavioral ROI.
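
The exact form of the two-dimensional correlation is described in the Methods rather than here. One simple construction that yields both a map and ROI averages, assumed for this sketch, is the pixel-wise product of the two z-scored images: its mean over all pixels equals the Pearson correlation between the images, and its mean inside an ROI measures how much that region contributes to the agreement.

```python
import math


def zscore(img):
    """Z-score all pixels of an image (population SD; the image must not be constant)."""
    vals = [v for row in img for v in row]
    mu = sum(vals) / len(vals)
    sd = math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals))
    return [[(v - mu) / sd for v in row] for row in img]


def correlation_map(nci, bci):
    """Pixel-wise product of two z-scored images (assumed construction).
    Its mean over all pixels equals the Pearson correlation of the two images."""
    a, b = zscore(nci), zscore(bci)
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def roi_mean(img, roi):
    """Mean of img over pixels where the boolean mask roi is True."""
    vals = [v for row, mask_row in zip(img, roi) for v, inside in zip(row, mask_row) if inside]
    return sum(vals) / len(vals)


# Hypothetical 2x2 images: identical spatial pattern, so the whole-image mean is 1.
cm = correlation_map([[1, 2], [3, 4]], [[2, 4], [6, 8]])
print(roi_mean(cm, [[True, True], [True, True]]))
```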

Figure 6.

Correlation between neuronal and behavioral classification images. (A,B) Two-dimensional correlation between the BCI from an independent control group (Fig. 4C) and the NCIs of each patient, averaged over all neurons with significant NCIs recorded in the ASD patients (A) and all recorded in the controls (B). See Supplementary Figure S4 for individual correlations. (C,D) Statistical quantification of the same data: Average values inside the mouth and eye ROIs from the 2D correlations. The average Z-value inside the mouth and eye ROI was significantly different between patient groups (P=0.031 and P=0.0074, respectively). All P-values from two-tailed t-tests.

Further quantification of neuronal responses to face features

How representative were the neurons with significant NCIs of the population of all recorded amygdala neurons? To answer this question, we next generated continuous NCIs for all isolated neurons, regardless of whether these reached a statistically significant threshold or not. We used two approaches to quantify the NCIs of the population: first, we compared the results derived from the NCIs with those derived from independent eye and mouth cutout trials to validate the NCI approach (cf. Fig. 3A for classes of stimuli used), and second, we quantified the NCIs using an ROI approach.

If an NCI obtained from the bubbles trials had its maximal Z-score in one of the eye or mouth ROIs (Fig. 5B), an enhanced response would be expected on eye or mouth cutout trials (Fig. 3A), respectively. As trials showing the cutouts were not used to compute the NCI, they constitute an independent measure of the selectivity with which neurons responded to facial features. We grouped all recorded cells according to whether their NCI had the highest Z-score (across the entire image) in the mouth, the left eye, the right eye, or none of these, and then computed the response to cutout trials for each group of neurons. The response to mouth cutouts was significantly larger than to eye cutouts for neurons whose NCI peaked in the mouth (n = 23, Supplementary Figure S5), whereas it was significantly smaller for neurons whose NCI peaked in the eyes (n = 19; difference in response to mouth minus eye cutouts −12±3% for these eye-peaked neurons vs. 8±3% for the mouth-peaked neurons, both significantly different from zero, P<0.05). Cells that did not have a mouth- or eye-dominated NCI did not show a differential response between eye and mouth cutouts (n = 49, Supplementary Figure S5). Thus, the NCIs identified a general feature sensitivity across all neurons that was replicated on the independent trials showing only mouth or eye cutouts.
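
The grouping-and-validation step can be sketched as follows: each neuron is labeled by the ROI that contains its NCI maximum, and the mouth-minus-eye cutout response is then averaged within each label. The ROI masks, the 3×3 image size and the response values (assumed to be percent change from baseline) are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def peak_group(nci, rois):
    """Name of the ROI containing the NCI's maximum Z-score, or 'neither'.
    rois maps a name (e.g. 'mouth', 'left eye') to a boolean mask of the NCI's shape."""
    h, w = len(nci), len(nci[0])
    r, c = max(((r, c) for r in range(h) for c in range(w)),
               key=lambda p: nci[p[0]][p[1]])
    for name, mask in rois.items():
        if mask[r][c]:
            return name
    return "neither"


def cutout_difference_by_group(neurons, rois):
    """neurons: iterable of (nci, response_to_mouth_cutout, response_to_eye_cutout).
    Returns, per NCI-peak group, the mean mouth-minus-eye cutout response."""
    diffs = defaultdict(list)
    for nci, resp_mouth, resp_eye in neurons:
        diffs[peak_group(nci, rois)].append(resp_mouth - resp_eye)
    return {group: mean(values) for group, values in diffs.items()}


# Hypothetical 3x3 ROIs: eyes in the top corners, mouth along the bottom row.
rois = {
    "mouth": [[False] * 3, [False] * 3, [True] * 3],
    "left eye": [[True, False, False], [False] * 3, [False] * 3],
    "right eye": [[False, False, True], [False] * 3, [False] * 3],
}
neurons = [
    ([[0, 0, 0], [0, 0, 0], [0, 5, 0]], 10, 2),   # NCI peaks in the mouth
    ([[5, 0, 0], [0, 0, 0], [0, 0, 0]], 1, 13),   # NCI peaks in the left eye
    ([[0, 0, 0], [0, 5, 0], [0, 0, 0]], 5, 5),    # NCI peaks elsewhere
]
print(cutout_difference_by_group(neurons, rois))
```

Because the cutout trials play no part in computing the NCI, agreement between the two measures is an out-of-sample check rather than a restatement of the same data.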

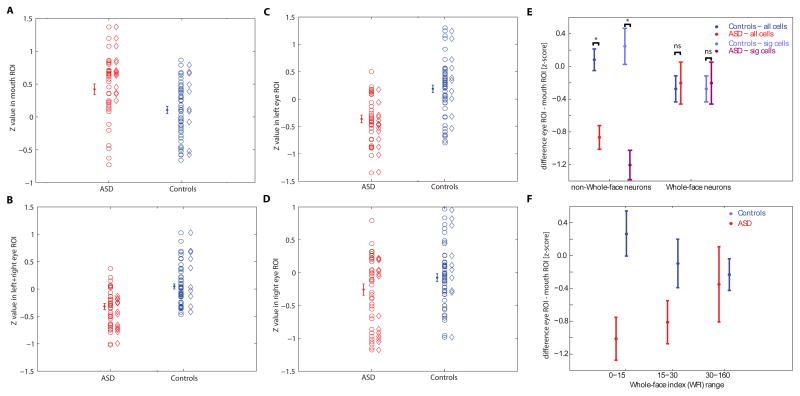

Examining all neurons (n = 91), we found that the average NCI Z-score within the mouth ROI was significantly greater in the patients with ASD than in the controls (Fig. 7A, P<0.001), whereas the average NCI Z-score within the eye ROI was significantly smaller (Fig. 7B, P<0.00001), a pattern again confirmed by a statistically significant interaction in a 2×2 ANOVA (mixed model, see Methods; F(2,263)=12.9, P<0.0001). Similarly, the proportion of all neurons with a larger average NCI Z-score in the eye ROI than in the mouth ROI differed significantly between the two subject groups (18.9% vs. 46.3%, P=0.0072, χ2 test). Thus, the impaired neuronal sensitivity to the eye region of faces in ASD that we found in Fig. 5 is representative of the overall response selectivity of all recorded amygdala neurons. Interestingly, when considering the left and right eye separately, we found that this difference was highly significant for the left eye (Fig. 7C, P<0.000001) but only marginally so for the right eye (Fig. 7D, P=0.07), an asymmetric pattern found in neurons from both the left and right amygdala. This finding at the neuronal level may be related to the prior finding that healthy subjects normally make more use of the left than the right eye region in this task (Gosselin et al., 2011).
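
The χ2 comparison of proportions can be checked with a small standard-library sketch of Pearson's χ2 test for a 2×2 table without continuity correction (whether a correction was applied is not stated here, so this is an assumption). The counts are reconstructed from the reported percentages: 18.9% of 37 ASD neurons is 7, and 46.3% of 54 control neurons is 25. With one degree of freedom the upper-tail probability is erfc(sqrt(χ2/2)).

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-square test without continuity correction for the 2x2 table
        [[a, b],
         [c, d]]
    Returns (chi2, p). With 1 degree of freedom, P(X > x) = erfc(sqrt(x / 2))."""
    observed = ((a, b), (c, d))
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    return chi2, math.erfc(math.sqrt(chi2 / 2))


# Rows: ASD, control. Columns: eye ROI > mouth ROI, otherwise.
# Counts reconstructed from the reported 18.9% (7 of 37) and 46.3% (25 of 54).
chi2, p = chi2_2x2(7, 30, 25, 29)
print(f"chi2 = {chi2:.2f}, P = {p:.4f}")  # chi2 = 7.22, P = 0.0072
```

The reconstructed counts reproduce the reported P=0.0072. Applied to the subset of neurons with significant NCIs (6.25% of 16 ASD neurons = 1; 60% of 10 control neurons = 6), `chi2_2x2(1, 15, 6, 4)` gives P ≈ 0.0026, matching the value reported for Fig. 5.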

Figure 7.

ROI analyses for all neurons recorded. Shown are Z-values from each neuron’s NCI within the ROIs shown in Fig. 5B. Cells recorded in ASD and in the controls are broken down into all cells (circles) and only those with statistically significant NCIs (diamonds). Error bars and statistics are based on all cells; in contrast, the data depicted in Fig. 5 are based only on cells with a significant NCI. (A) The average Z-value inside the mouth ROI was significantly larger in ASD compared to controls (P=0.001). (B) The average Z-value inside the eye ROI, on the other hand, was significantly smaller (P=2.6e-6). (C,D) Left and right eye (from the perspective of the viewer) considered separately. For both, controls had a larger Z inside the eye compared to ASD, but this effect was only marginally significant for the right eye (P=0.07) compared to the left eye (P=2.4e-7). (E,F) Comparison of ROI analysis and whole-face responsiveness. (E) Comparison of each neuron’s NCI for two groups of cells: those which were identified as WF cells (right) and those which were not (left). For all recorded neurons (blue, red), as well as for only those which were either identified as WF or had a significant NCI (light blue, light red), there was a significant difference in NCI between ASD and controls only for the cells which were not WF cells (P=1.3e-5 and P=4.4e-5, respectively, for non-WF cells; P=0.81 and P=0.81, respectively, for WF cells). (F) Comparison of each neuron’s NCI as a function of its WFI, regardless of whether the cell was a significant WF cell. Cells were grouped according to WFI alone. A significant difference was found only for cells with low WFI (P=0.0047) but not for medium or large WFI values (P=0.10 and P=0.79, respectively). All P-values are from two-tailed t-tests. See related Supplementary Figure S5.

Distinct neuronal populations are abnormal in ASD

There was no significant overlap between units that had significant NCIs and units that were classified as whole-face selective in the previous analysis (2 of the 26 units with a significant NCI were also WF-selective, a proportion expected by chance alone), and there was no evidence for increased WFIs among cells that had a significant NCI (average WFI 18.0%±3.6% for ASD patients and 12.3%±3.7% for controls). We further explored the relationship between WF selectivity and part selectivity (as evidenced by a significant NCI). We summarized each neuron’s NCI by subtracting the average NCI Z-score within the eye ROI from that within the mouth ROI. If the NCIs of a group of cells focus equally often on the eyes and the mouth, the average of this score should be approximately zero; if the group is biased towards the eyes or the mouth, the score will deviate from zero accordingly. We first compared all cells that were not identified as WF-selective with those that were. Note that the decision of whether a cell is WF-selective is based only on the cutout trials, so the bubbles trials used to quantify the NCIs remain statistically independent. Only the cells not identified as WF-selective showed a significant difference between ASD and controls, both for the entire population of cells and when restricting the analysis to the cells with significant NCIs (Fig. 7E, see legend for statistics). Second, we grouped all cells according to their WFI, regardless of whether they were significant WF cells. The higher the WFI, the more selectively a cell fires for whole faces rather than any of their parts (Rutishauser et al., 2011). Only the cells with very low WFIs differed significantly between ASD and controls; cells with high WFIs showed no significant difference in their NCIs between the groups (Fig. 7F, see legend for statistics).
Thus, cells with high WFIs showed no differential sensitivity to individual facial parts, either in ASD or in controls. Taken together with our earlier findings, this suggests that neurons with significant NCIs not only appear to be distinct from neurons with whole-face selectivity (perhaps unsurprisingly, since achieving a significant NCI requires responses to face parts), but may in fact constitute a specific cell population with abnormal responses in ASD. The cells with significant NCIs did not differ in their basic electrophysiology between the groups (see Fig. 2 for waveforms; Supplementary Table S5 shows statistics). Thus, the abnormal responses of NCI cells in ASD appear to reflect a true difference in facial information processing, rather than a defect in the basic electrophysiological integrity of neurons within the amygdala.
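
The per-neuron bias score described above (mean NCI Z in the mouth ROI minus mean NCI Z in the eye ROI) and its averaging within WFI groups can be sketched as below. The text does not give the WFI cut points separating low, medium and high WFI, so the `edges` used here are hypothetical, as are all input values.

```python
from statistics import mean


def roi_mean(img, roi):
    """Mean of img over pixels where the boolean mask roi is True."""
    return mean(v for row, mask_row in zip(img, roi) for v, inside in zip(row, mask_row) if inside)


def mouth_minus_eye(nci, mouth_roi, eye_roi):
    """Bias score: positive = mouth-biased NCI, negative = eye-biased.
    A group of cells without a systematic bias should average about zero."""
    return roi_mean(nci, mouth_roi) - roi_mean(nci, eye_roi)


def mean_bias_by_wfi(neurons, edges):
    """neurons: iterable of (wfi, bias_score). edges: increasing HYPOTHETICAL WFI cut
    points (e.g. low / medium / high). Returns the mean bias score per bin index."""
    bins = {}
    for wfi, score in neurons:
        k = sum(wfi >= e for e in edges)  # bin index = number of cut points <= wfi
        bins.setdefault(k, []).append(score)
    return {k: mean(v) for k, v in sorted(bins.items())}


# Hypothetical 2x2 NCI: top row = eye ROI, bottom row = mouth ROI.
nci = [[1, 1], [3, 5]]
eye = [[True, True], [False, False]]
mouth = [[False, False], [True, True]]
print(mouth_minus_eye(nci, mouth, eye))                                      # 3
print(mean_bias_by_wfi([(5, 2.0), (10, 4.0), (50, -1.0)], edges=[20, 40]))  # {0: 3.0, 2: -1.0}
```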

To explore whether the insensitivity to eyes in ASD at the neuronal population level might be driven by the subset of cells which had a significant NCI, we further classified the cells based on their response properties. There were two groups of cells that did not have a significant NCI: those classified as WF cells, and those classified neither as NCI nor WF cells. A 2×2 ANOVA revealed a significant interaction only for the subset of cells that was not classified as WF (F(2,128)=3.5, P=0.034) but not for the cells classified as WF cells (F(2,49)=0.5, P=0.60). Thus, the insensitivity to eyes we found in our ASD group appears in the responses of all amygdala neurons with the exception of WF cells.

Possible confounds

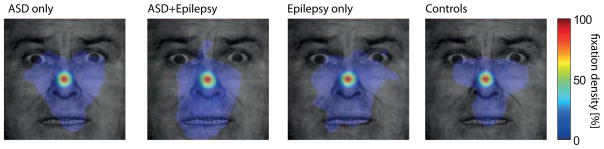

Since people with ASD may look less at the eyes in faces on certain tasks (Kliemann et al., 2010; Klin et al., 2002; Neuman et al., 2006; Pelphrey et al., 2002; Spezio et al., 2007b), we wondered whether differential fixation patterns to our stimuli might explain the neuronal responses we found. This possibility seems unlikely, since by design our stimuli were brief (500ms), small (approximately 9° of visual angle), and preceded by a central fixation cross. To verify that eye movements to our stimuli did not differ, we subsequently conducted high-resolution eye tracking with the identical stimuli in the laboratory in our two epilepsy patients with ASD, as well as in three of the epilepsy controls from whom we had analyzed neurons. To ensure that their data were representative, we also added two groups of subjects for comparison: 6 (nonsurgical) individuals with ASD (see Supplementary Table S2) and 6 matched healthy participants from the community. All subjects made a similar and small number of fixations onto the stimuli during the 500ms that the bubble stimuli were presented (1.5–2.5 mean fixations), and their fixation density maps did not differ (Fig. 8). In particular, the average fixation density within three ROIs (both eyes, mouth, center) showed that all subjects predominantly fixated the center and that fixations within none of the three ROIs depended significantly on subject group (one-way ANOVA with factor subject group, P>0.05; post-hoc pairwise t-tests, ASD vs. control: P=0.34, P=0.60, and P=0.63 for eyes, mouth, and center, respectively). Similarly, fixation density to the cutout stimuli (isolated eyes and mouth) showed no differences between groups in time spent looking at the center, eye, or mouth ROIs (Supplementary Figure S6A–C), even when we analyzed only the last 200ms of the trial to maximize fixation dispersion (all P>0.12, one-way ANOVAs; Supplementary Figure S6 and Supplementary Table S6).
Finally, we repeated the above analyses for the bubbles trials using a conditional probability approach, which quantified fixation probability conditional on a given region of the face being revealed on that trial, and again found no significant differences between the groups (Supplementary Figure S6D; see Methods for details).
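The fixation density maps and ROI averages described above can be illustrated with a minimal sketch. It assumes fixations are given as (row, col, duration) in image pixels, weights each fixation by its duration, smooths with a Gaussian of hypothetical width `sigma`, and uses rectangular ROIs with made-up coordinates; the study's actual smoothing, weighting, and ROI definitions are not specified here.

```python
import numpy as np

def fixation_density(fixations, shape, sigma=10.0):
    """Fixation density map: duration-weighted 2D Gaussians at fixation
    locations, normalized to sum to 1 (a probability map).

    fixations: iterable of (row, col, duration_ms); sigma is in pixels and is
    a hypothetical smoothing width.
    """
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    density = np.zeros(shape)
    for r, c, dur in fixations:
        density += dur * np.exp(-((rows - r) ** 2 + (cols - c) ** 2) / (2 * sigma ** 2))
    total = density.sum()
    return density / total if total > 0 else density

def roi_mean_density(density, rois):
    """Average density inside each rectangular ROI given as (r0, r1, c0, c1)."""
    return {name: float(density[r0:r1, c0:c1].mean())
            for name, (r0, r1, c0, c1) in rois.items()}

# Hypothetical ROIs on a 256x256 face image (illustrative coordinates only).
ROIS = {"eyes": (70, 110, 40, 216),
        "mouth": (170, 210, 80, 176),
        "center": (108, 148, 108, 148)}
```

Group comparisons would then be run on the per-subject ROI means, for example with the one-way ANOVA reported above.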

Figure 8.

Eye movements to the stimuli. Participants saw the same stimuli and performed the same task as during our neuronal recordings while we carried out eye tracking. Data were quantified using fixation density maps that show the probability of fixating different locations during the entire 500ms period after face onset. Shown are, from left to right, the average fixation density across subjects for the ASD-only (n=6), ASD-and-epilepsy (n=2, the same subjects from whom we recorded neurons), epilepsy-only (n=3), and control (n=6) groups. The color scale is common to all plots. See related Supplementary Figure S6.

We performed further analyses to test whether ASD and control subjects might have differed in where they allocated spatial attention. The task was designed to minimize such differences: stimuli were small and sparse, and their locations were randomized so as to be unpredictable. Because subjects were free to move their eyes during the task, covert and overt attention are expected to overlap largely. Attentional differences should therefore appear as differences in gaze position or saccade latency or, in the absence of eye movements, as shorter RTs to preferentially attended locations. Detailed analyses of all three of these aspects (see below) showed no significant differences between the ASD and control groups.

Fixation probability: In addition to comparing fixation probability across the different subject and control groups (see above), we also considered fixations to the individually shown cutouts (left eye, right eye, mouth) separately (Supplementary Figure S6E–G). First, if ASD subjects made anticipatory saccades to the mouth, they would be expected to fixate there even on trials in which no mouth was revealed. We found no such tendency (Supplementary Figure S6E–F). Second, if ASD subjects paid preferential attention to the mouth, their probability of fixating the mouth should increase when regions of the mouth are revealed on a trial. We found no significant difference in the conditional fixation probability for individually shown parts (see Table S8 for statistics).

Latency of fixations: Spatial attention might not only increase the probability of fixating a location but could also decrease the latency of saccades to it. While on most trials subjects fixated exclusively at the center of the image, they occasionally fixated elsewhere (as quantified above). We defined saccade latency as the first point in time, relative to stimulus onset, at which gaze position entered the eye or mouth ROI, conditional on a saccade having been made away from the center and on that part of the face being shown in the stimulus (this analysis was carried out only for cutout trials). For the non-surgical subjects, average saccade latencies were 199±27ms and 203±30ms for ASD and controls, respectively (±s.d.; n=6 subjects each; P=0.96). A 2-way ANOVA of subject group by ROI showed a significant main effect of ROI (F(1,20)=15.0, P<1e-4; a post-hoc test revealed that this was due to shorter latencies to the eyes in both groups) but no main effect of subject group (F(1,20)=1.71) and no interaction (F(1,20)=0.26). For the surgical subjects, average saccade latencies were 204±16ms and 203±30ms for ASD and controls, respectively, and did not differ significantly (2-way ANOVA: no effect of subject group, F(1,6)=0.37, or of ROI, F(1,6)=0.88, and no interaction, F(1,6)=0.38). We conclude that there were no significant differences between ASD and controls in saccade latency towards the ROIs.
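The saccade-latency definition above can be expressed as a short function. This is a sketch under stated assumptions: gaze samples come with timestamps in ms relative to stimulus onset, the central region is a circle of hypothetical radius `center_radius`, and ROIs are rectangles in screen coordinates. The trial-level condition that the relevant face part was shown (cutout trials only) is left to the caller.

```python
import numpy as np

def saccade_latency(t_ms, gaze_xy, roi, center_radius, center_xy):
    """First time (ms after stimulus onset) at which gaze enters `roi`.

    Returns None if gaze never leaves the central region (no saccade away
    from the center) or never reaches the ROI.
    t_ms: (T,) sample times; gaze_xy: (T, 2) gaze positions (x, y).
    roi: (x0, x1, y0, y1) rectangle, a hypothetical ROI format.
    """
    t_ms = np.asarray(t_ms, dtype=float)
    g = np.asarray(gaze_xy, dtype=float)
    dist = np.hypot(g[:, 0] - center_xy[0], g[:, 1] - center_xy[1])
    if not (dist > center_radius).any():
        return None  # no saccade away from the center: trial not counted
    x0, x1, y0, y1 = roi
    in_roi = (g[:, 0] >= x0) & (g[:, 0] < x1) & (g[:, 1] >= y0) & (g[:, 1] < y1)
    in_roi &= t_ms >= 0  # only samples after stimulus onset
    idx = np.flatnonzero(in_roi)
    return float(t_ms[idx[0]]) if idx.size else None
```

Per-subject means of these latencies would then enter the subject group by ROI ANOVA described above.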

Reaction times: Increased spatial attention should result in faster behavioral responses. We therefore compared RTs between individually shown eye and mouth cutouts, as well as between different categories of bubble trials (Tables S9, S10). A 2-way ANOVA with the factors subject group (ASD, control) and ROI (eye, mouth), as well as post-hoc pairwise tests, revealed no significant difference between ASD and controls for either the surgical or the non-surgical subjects. Another possibility is that attentional differences emerge only through competition between different face parts, as in bubble trials that reveal parts of both the eyes and the mouth. To account for this possibility, we separated the bubble trials into three categories (see Methods): 1) those in which mostly the eyes, but little of the mouth, were shown; 2) those in which mostly the mouth, but little of the eyes, was shown; and 3) those in which both were visible. We found no RT differences between ASD and controls in any of the 3 categories, for either the surgical or the non-surgical groups (see Table S10 for statistics). We thus conclude that there were no systematic RT differences between ASD and controls.

A final possibility we considered was that the behavioral performance (button presses) of the subjects influenced their amygdala responses. This also seems unlikely, because the behavioral task did not ask subjects to classify the presence or absence of the eyes or mouth but rather to make an emotion classification (fear vs. happy), and because reaction times (RT) did not differ significantly between trials showing substantial eyes or mouth, nor between the ASD and control groups (2-way ANOVA of subject group by ROI with RT as the dependent variable, based on cutout trials: no significant main effect of ROI, F(1,16)=0.5, or of subject group, F(1,16)=1.41, and no significant interaction, F(1,16)=0.81; similar results also held during eye tracking, see Table S9). There was no significant correlation between neuronal response and RT: only 2 of the 26 units with significant NCIs showed a significant (uncorrected) correlation, a number consistent with chance alone. Finally, the cells we identified responded to a variety of features, among them the eyes and the mouth but also less common features outside those regions that were unrelated to the behavioral classification image (cf. Fig. 5).
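The statement that 2 of 26 significant correlations is what chance alone would produce can be checked with a binomial calculation. This sketch assumes 26 independent tests, each with a false-positive rate of 0.05 (uncorrected); under that null, about 1.3 significant units are expected, and observing 2 or more has a probability of roughly 0.38.

```python
from math import comb

def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, j) * p ** j * (1 - p) ** (n - j) for j in range(k, n + 1))

n_units, alpha = 26, 0.05                          # units tested, uncorrected threshold
expected = n_units * alpha                         # about 1.3 false positives expected
p_two_or_more = prob_at_least(2, n_units, alpha)   # about 0.38
```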

Discussion

We compared recordings from a total of 56 neurons within the amygdala in two rare neurosurgical patients with ASD to recordings from a total of 88 neurons obtained from neurosurgical controls who did not have ASD. Basic electrophysiological response parameters of neurons did not differ between the groups, nor did responsiveness to whole faces. Yet a subpopulation of neurons in the ASD patients (namely, those neurons that were not highly selective for whole faces but instead responded to parts of faces) showed abnormal sensitivity to the mouth region of the face and abnormal insensitivity to the eye region. These results held independently whether we used “bubbles” stimuli that randomly sampled regions of the face or specific cutouts of the eyes or mouth.

The correspondence between behavioral and neuronal classification images (Fig. 4A,B and Fig. 5) suggests that responses of amygdala neurons may be related to behavioral judgments about the faces. Are the responses we recorded in the amygdala a cause or a consequence of behavior? We addressed several confounding possibilities above (eye movements, RT), but the question remains interesting and not fully resolved. In particular, one possibility still left open by our control analyses is that people with ASD might allocate spatial attention differently to our stimuli, attending more to the mouth than to the eyes compared to the control participants. While we consider this possibility unlikely, since it should be reflected in differential fixations, fixation latencies, or RTs to these facial features, it remains possible that attention operated covertly, or at a variable level, such that these control measures would not have detected it while amygdala responses were still affected. For example, the latency of amygdala neurons in primates depends on whether value-predicting cues are presented ipsi- or contralaterally (Peck et al., 2013). While that study confirmed that amygdala neurons respond to stimuli across the entire visual field, it also found that latency varied with where visual cues were presented and that firing rate correlated with RT. In contrast, we found no correlation of firing rates with RTs in the present study, but we note that our task was, by construction, not a speeded RT task, and subjects had ample time to respond. Future studies will be needed to assess attention in detail simultaneously with neuronal recordings, and it will also be important to compare complex social stimuli such as faces directly with simpler conditioned visual cues.

But even if effects arising from differences in fixation and/or attention could be completely eliminated, a question remains as to how abnormal amygdala responses could arise. The amygdala receives input about faces from cortices in the anterior temporal lobe, raising the question of whether the abnormal responses we observed in patients with ASD arise at the level of the amygdala or are passed on from abnormalities already present in temporal visual cortex. Patients with face agnosia due to damage in temporal cortex still appear able to make normal use of the eye region of faces and to render normal judgments of facial emotion (Tranel et al., 1988), whereas patients with lesions of the amygdala show deficits in both processes (Adolphs et al., 2005). These prior findings, together with the amygdala’s emerging role in detecting saliency (Adolphs, 2010), suggest that the abnormal feature selectivity of neurons we found in ASD may correspond to abnormal computations within the amygdala itself, a deficit that then arguably influences downstream processes including attention, learning, and motivation (Chevallier et al., 2012), perhaps in part through feedback to visual cortices (Hadj-Bouziane et al., 2012; Vuilleumier et al., 2004).

Yet the otherwise normal basic electrophysiological properties of amygdala neurons we found in ASD, together with their normal responses to whole faces (also see below), argue against any gross pathological processing within the amygdala itself. One possibility raised by a prior fMRI study is that neuronal responses might be more variable in people with ASD (Dinstein et al., 2012). We found no evidence for this in our recordings: amygdala neurons in ASD had coefficients of variation equivalent to those seen in the controls (both for cells with significant NCIs and for whole-face cells). This finding is consistent with the data of Dinstein et al. (2012), who found reduced signal-to-noise in BOLD responses only in cortex, not in subcortical structures such as the amygdala. The conclusion that cellular function within the amygdala is otherwise intact raises the question of how the abnormal feature selectivity we observed in ASD might be synthesized. One natural candidate is the interaction between the amygdala and the prefrontal cortex: prior studies have provided evidence for abnormal connectivity of the prefrontal cortex in ASD (Just et al., 2007), and we ourselves have found subtle deficits in functional connectivity in the brains of people with ASD that may be restricted to anterior regions (Tyszka et al., 2013). The abnormal response selectivity of amygdala neurons in ASD may thus arise from a more “top-down” effect (Neumann et al., 2007), reflecting the important role of the amygdala in integrating motivation and context, an interpretation also consistent with the long response latencies of amygdala neurons we observed.

In contrast to the abnormal responses of part-sensitive cells, whole-face (WF) selective cells in ASD subjects responded with strengths comparable to those in controls, as quantified by the WFI, and their responses did not differ between facial parts. One possible model for the generation of WF cell response properties is that these cells represent the sum of the responses of part-selective cells. This model predicts that WF cells in ASD subjects should be overly sensitive to the mouth, which we did not observe. We previously found that WF-selective cells respond highly non-linearly to partially revealed faces (Rutishauser et al., 2011), which is also incompatible with this model. The present findings in ASD thus add evidence to the hypothesis that WF-selective cells respond holistically to faces rather than simply summing the responses to their parts.

Another key question is whether our findings are related to increased avoidance of, or decreased attraction towards, the eye region of faces. Prior findings have shown that people with ASD actively avoid the eyes in faces (Kliemann et al., 2010) and that this avoidance is correlated with BOLD response in the amygdala (Dalton et al., 2005; Kliemann et al., 2012). Others, however, have found that amygdala BOLD response in healthy individuals correlates with fixations towards the eyes (Gamer and Buechel, 2009), and one framework hypothesizes that this attraction to the eyes is decreased in ASD as part of a general reduction in social motivation and reward processing (Chevallier et al., 2012). While both active social avoidance and reduced social motivation likely contribute to ASD, future studies using concurrent eye tracking and electrophysiology could examine this complex issue further. As noted above, in our specific task we found no evidence for differential gaze or visual attention that could explain the amygdala responses we observed. It does remain plausible, however, that the amygdala neurons we describe here in turn trigger attentional shifts at later stages of processing.

It is noteworthy that our ASD subjects performed the task as well as our control subjects, showing no gross impairment. This was true both when comparing the ASD and non-ASD neurosurgical subjects (see Results) and when comparing the non-surgical ASD subjects with their matched neurotypical controls (see Methods). RTs of the neurosurgical subjects in experiments conducted in the hospital were longer by approximately 300ms (Table S9, bottom row) than RTs obtained in the laboratory outside the hospital, which is not surprising given that these experiments take place while subjects are recovering from surgery. However, this slowing affected ASD and non-ASD neurosurgical subjects equally. Unimpaired behavioral performance in emotion categorization tasks such as ours is a common finding in high-functioning ASD subjects, demonstrated by several previous studies (Spezio et al., 2007a; Neuman et al., 2006; Harms et al., 2010; Ogai et al., 2003). Despite their normal performance, however, our ASD subjects used a distinctly abnormal strategy to solve the task, confirming earlier reports. Thus, while they performed equally well, they used different features of the face to do so.

Brain abnormalities in ASD have been found across many structures and white matter regions, arguing for a large-scale impact on distributed neural networks and their connectivity (Amaral et al., 2008; Anderson et al., 2010; Courchesne, 1997; Geschwind and Levitt, 2007; Kennedy et al., 2006; Piven et al., 1995). Neuronal responses in ASD have been proposed to be noisier (less consistent over time) (Dinstein et al., 2012) or to have an altered balance of excitation and inhibition (Yizhar et al., 2011), putative processing defects that could result in a global abnormality in sensory perception (Markram and Markram, 2010). The specificity of our present findings is therefore noteworthy: the abnormal feature selectivity of amygdala neurons we found in ASD contrasts with otherwise intact basic electrophysiological properties and whole-face responses. Given the case-study nature of our ASD sample, together with the patients’ epilepsy and normal intellect, our two ASD patients may represent only a subset of high-functioning individuals with ASD, and it remains an important challenge to determine the extent to which the present findings generalize to other cases. Our findings raise the possibility that particular populations of neurons within the amygdala are differentially affected in ASD, which could inform links to synaptic and genetic levels of explanation and aid the development of more specific animal models.

Experimental procedures

Patients

Intracranial single-unit recordings were obtained from 10 neurosurgical inpatients (Supplementary Table S1) with chronically implanted depth electrodes in the amygdalae for epilepsy monitoring, as previously described (Rutishauser et al., 2010). Of these, only 7 ultimately yielded usable single units (the 2 with ASD and 5 without); the other 3 did not have ASD and provided only behavioral data. Electrodes were placed along trajectories orthogonal to the midline and were used to localize seizures for possible surgical treatment of epilepsy. We included only participants who had normal or corrected-to-normal vision, an intact ability to discriminate faces on the Benton Facial Discrimination Task, and who were fully able to understand the task. Each patient performed one session of the task consisting of multiple blocks of 120 trials each (see below). Although some patients performed several sessions on consecutive days, we included only the first session of each patient to allow a fair comparison with the autism subjects (who performed the task only once). In all included sessions, patients had never before performed the task or anything similar. All participants provided written informed consent according to protocols approved by the Institutional Review Boards of the Huntington Memorial Hospital and the California Institute of Technology.

Autism diagnosis

The two patients with ASD had a clinical diagnosis according to DSM-IV-TR criteria and met algorithm criteria for an ASD on the Autism Diagnostic Observation Schedule (ADOS). Scores on the Autism Quotient and the Social Responsiveness Scale, where available, further confirmed the diagnosis of ASD. All ASD diagnoses were corroborated by at least two independent clinicians who were blind to the identity of the participants and to the hypotheses of the study. While not diagnostic, the behavioral performance of the two epilepsy patients with ASD on our experimental task was also consistent with that of a different group of subjects with ASD that we had reported previously (Spezio et al., 2007a), as well as with a new group of 6 nonsurgical subjects with ASD that we tested for the present paper (see Table S2).

Electrophysiology

We recorded bilaterally from implanted depth electrodes in the amygdala. Target locations were verified using post-implantation structural MRIs (see below). At each site, we recorded from eight 40μm microwires inserted into a clinical electrode, as described previously (Rutishauser et al., 2010). Only data acquired from recording contacts within the amygdala are reported here. Electrodes were positioned such that their tips were located in the upper third to center of the deep amygdala, about 7mm from the uncus. The microwires projected medially out of the end of the depth electrode, so the electrodes were likely sampling neurons in the mid-medial part of the amygdala (basomedial nucleus or deepest part of the basolateral nucleus) (Oya et al., 2009). Bipolar recordings, using one of the eight microwires as reference, were sampled at 32 kHz and stored continuously for off-line analysis with a 64-channel Neuralynx system (Digital Cheetah; Neuralynx, Inc.). The raw signal was filtered, and spikes were sorted using a semiautomated template-matching algorithm as described previously (Rutishauser et al., 2006). Channels with interictal epileptic spikes in the LFP were excluded. For wires that had several clusters of spikes (47 wires had at least one unit, 25 of which had at least two), we additionally quantified the goodness of separation by applying the projection test (Rutishauser et al., 2006) to each possible pair of neurons. The projection test measures the number of standard deviations by which two clusters are separated after the data are normalized so that each cluster is normally distributed with a standard deviation of 1. The average distance between all possible pairs (n = 170) was 12.6±2.8 S.D. The average SNR of the mean waveforms relative to the background noise was 1.9±0.1, and the average percentage of interspike intervals shorter than 3ms (a measure of sorting quality) was 0.31±0.03%.
All of the above sorting statistics refer only to units included in the analysis (baseline firing rate of 0.5 Hz or higher).
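The sorting-quality measures above can be sketched as follows. The projection distance follows the verbal description (separation of two clusters in standard-deviation units after projecting onto the line joining the cluster means); pooling the two clusters' standard deviations is our assumption, not necessarily the exact normalization used by Rutishauser et al. (2006). The SNR definition (peak absolute amplitude of the mean waveform over the background-noise SD) is likewise one common convention, assumed here.

```python
import numpy as np

def projection_distance(wf_a, wf_b):
    """Separation of two spike clusters in SD units (sketch of a projection test).

    wf_a, wf_b: (n_spikes, n_samples) waveforms. Each waveform is projected onto
    the unit vector joining the two cluster means; the distance between projected
    means is divided by the pooled within-cluster SD (an assumption).
    """
    a, b = np.asarray(wf_a, dtype=float), np.asarray(wf_b, dtype=float)
    axis = b.mean(axis=0) - a.mean(axis=0)
    axis /= np.linalg.norm(axis)
    pa, pb = a @ axis, b @ axis
    pooled_sd = np.sqrt((pa.var(ddof=1) + pb.var(ddof=1)) / 2)
    return abs(pb.mean() - pa.mean()) / pooled_sd

def isi_violation_percent(spike_times_s, refractory_s=0.003):
    """Percentage of inter-spike intervals shorter than refractory_s (3 ms)."""
    isi = np.diff(np.sort(np.asarray(spike_times_s, dtype=float)))
    return 100.0 * np.mean(isi < refractory_s) if isi.size else 0.0

def waveform_snr(mean_waveform, noise_sd):
    """Peak absolute amplitude of the mean waveform divided by the noise SD."""
    return float(np.max(np.abs(mean_waveform)) / noise_sd)
```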

Stimuli and Task

Patients were asked to judge whether faces (or parts thereof) shown for 500ms looked happy or fearful (2-alternative forced choice). Stimuli were presented in blocks of 120 trials. Stimuli consisted of bubbled faces (60% of all trials), cutouts of the eye region (left and right, 10% each), cutouts of the mouth region (10%), or whole (full) faces (10%), and were shown in fully randomized, interleaved order at the center of the screen of a laptop computer situated at the patient’s bedside. All stimuli were derived from the whole-face stimuli, which were happy and fearful faces from the Ekman and Friesen stimulus set that we had used in the same task previously (Spezio et al., 2007a). Mouth and eye cutout stimuli were all the same size. Each trial consisted of a sequence of images shown in the following order: 1) scrambled face, 2) face stimulus, 3) blank screen (cf. Figure 3a). Scrambled faces were created from the original faces by randomly re-ordering their phase spectrum; they thus had the same amplitude spectrum and average luminance. Scrambled faces were shown for 0.8–1.2s (randomized). Immediately afterwards, the target stimulus was shown for 0.5s (fixed time) and was then replaced by a blank screen. Subjects were instructed to make their decision as soon as possible. Regardless of reaction time (RT), the next trial started 2.3–2.7s after stimulus onset. If the subject had not responded by that time, a timeout was indicated by a beep (2.2% of all trials were timeouts and were excluded from analysis; timeouts did not differ between ASD patients and controls). Patients responded by pressing marked buttons on a keyboard (happy or fearful). The distance to the screen was 50 cm, resulting in a screen size of 30°×23° of visual angle and a stimulus size of approximately 9°×9° of visual angle.
Patients completed 5–7 blocks, during which we collected electrophysiological data continuously (on average 6.5 blocks for the epilepsy patients with ASD and 5.6 for the epilepsy patients without ASD, resulting in 696±76 trials on average). After each block, participants were shown their performance on the screen as an incentive.
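Phase scrambling of the kind described above can be sketched with NumPy's FFT. Note that this is a swapped-in, commonly used variant rather than the authors' exact procedure: instead of re-ordering the phase spectrum, it adds the phase spectrum of a real white-noise image, which keeps the spectrum conjugate-symmetric so that the scrambled image is real. The DC term is restored explicitly so that mean luminance is preserved, and the amplitude spectrum is unchanged by construction.

```python
import numpy as np

def phase_scramble(image, rng=None):
    """Phase-scramble a grayscale image, keeping its amplitude spectrum and mean.

    Adds the phase of a real white-noise image to the image's phase. Both phase
    spectra are antisymmetric, so the new spectrum stays conjugate-symmetric and
    its inverse FFT is real up to numerical error.
    """
    rng = np.random.default_rng() if rng is None else rng
    img = np.asarray(image, dtype=float)
    spectrum = np.fft.fft2(img)
    noise_phase = np.angle(np.fft.fft2(rng.standard_normal(img.shape)))
    scrambled = np.abs(spectrum) * np.exp(1j * (np.angle(spectrum) + noise_phase))
    scrambled[0, 0] = spectrum[0, 0]       # keep the DC term: same mean luminance
    return np.fft.ifft2(scrambled).real   # imaginary part is numerical residue only
```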

Derivation of behavioral and neuronal classification images

Behavioral classification images (BCIs) were derived as described previously (Gosselin and Schyns, 2001). Briefly, BCIs were calculated for each session based on accuracy and RT, using only bubble trials. Each pixel C(x,y) of the CI is the correlation, across trials, of the noise mask at that pixel with the trial outcome (correct or incorrect) or with the RT (Eq 1). Pixels with a high positive correlation indicate that revealing them improves task performance. The raw CI C(x,y) is then rescaled such that it follows a Student’s t distribution with N-2 degrees of freedom (Eq 2).

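$$C(x,y)=\frac{\sum_{i=1}^{N}\left(X_i(x,y)-\bar{X}(x,y)\right)\left(Y_i-\bar{Y}\right)}{\sqrt{\sum_{i=1}^{N}\left(X_i(x,y)-\bar{X}(x,y)\right)^{2}\,\sum_{i=1}^{N}\left(Y_i-\bar{Y}\right)^{2}}}\qquad\text{(Eq 1)}$$

$$t(x,y)=C(x,y)\,\sqrt{\frac{N-2}{1-C(x,y)^{2}}}\qquad\text{(Eq 2)}$$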

N is the number of trials, X_i(x, y) is the smoothed noise mask for trial i, Y_i is the response accuracy or the RT for trial i, and X̄(x, y) and Ȳ are the corresponding means over all trials. The noise masks X_i(x, y) are the result of convolving the bubble locations (each bubble center marked with a 1, all other pixels 0) with a 2D Gaussian kernel of width σ=10 pixels and a kernel size of 6σ (exactly as shown to subjects; no further smoothing is applied). Before convolution, images were zero-padded to avoid edge effects. For each session, we calculated two CIs, one based on accuracy and one based on RT, which were then averaged to obtain the BCI for that session. BCIs across patients were averaged using the same equation, resulting in spatial representations of where on the face image there was a significant association between the part of the face shown and accurate emotion classification (Fig. 4a–c). For comparison, we also computed BCIs considering only accuracy (not RT) and found very similar BCIs (not shown).

Neuronal classification images (NCIs) were computed using Eqs 1 and 2, except that the response Y_i and its average Ȳ were spike counts; otherwise, the calculation was identical. Spikes were counted for each correct bubble trial i in a 1.5s window starting 100ms after stimulus onset. Incorrect trials were not used to construct the NCI.
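Eqs 1 and 2 amount to a per-pixel Pearson correlation across trials, followed by the standard conversion of a correlation r into a t value, t = r·sqrt((N-2)/(1-r²)). The sketch below assumes that reading of Eq 2 and builds noise masks by placing truncated Gaussians (width σ, kernel size 6σ) at bubble centers, which is equivalent to convolving a zero-padded impulse image with the kernel. The function names are hypothetical; the same `classification_image` call yields an NCI when `y` holds per-trial spike counts instead of accuracy or RT.

```python
import numpy as np

def bubble_mask(centers, shape, sigma=10.0):
    """Noise mask for one trial: a truncated 2D Gaussian at each bubble center.

    The kernel is cut off at +/-3*sigma (kernel size 6*sigma). Evaluating it
    directly on the image grid matches zero-padded convolution of an impulse
    image, so nothing wraps around the image edges.
    """
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    mask = np.zeros(shape)
    for r, c in centers:
        g = np.exp(-((rows - r) ** 2 + (cols - c) ** 2) / (2 * sigma ** 2))
        g[(np.abs(rows - r) > 3 * sigma) | (np.abs(cols - c) > 3 * sigma)] = 0.0
        mask += g
    return mask

def classification_image(masks, y):
    """Per-pixel correlation C(x, y) (Eq 1) and its t transform (Eq 2).

    masks: (N, H, W) noise masks X_i; y: (N,) accuracy, RT, or spike counts Y_i.
    """
    masks = np.asarray(masks, dtype=float)
    y = np.asarray(y, dtype=float)
    n = y.size
    dx = masks - masks.mean(axis=0)          # X_i(x, y) minus its trial mean
    dy = y - y.mean()                        # Y_i minus its trial mean
    num = np.tensordot(dy, dx, axes=(0, 0))  # sum over trials, shape (H, W)
    den = np.sqrt((dx ** 2).sum(axis=0) * (dy ** 2).sum())
    c = np.divide(num, den, out=np.zeros_like(num), where=den > 0)
    t = c * np.sqrt((n - 2) / np.clip(1 - c ** 2, 1e-12, None))
    return c, t
```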

An NCI was calculated for every cell with a sufficient number of spikes. The NCI has the same dimensions as the image (256×256 pixels), but because of the structure of the noise masks used to construct the bubbles trials, it is a smooth 2D random Gaussian field. Nearby pixels are thus correlated, and appropriate statistical tests need to take this into account. We used the well-established cluster test developed for this purpose (Chauvin et al., 2005), with a threshold of t=2.3. The test requires both a minimal t value and a minimal cluster size for an area of the NCI to be significant, corrected for multiple comparisons. Note that the threshold and the minimal cluster size trade off against each other: the lower the threshold, the larger the minimal size of clusters considered significant. Our threshold of t=2.3 corresponds to a minimal cluster size of 748 pixels. The NCI of a cell was considered significant if at least 1 cluster satisfied the cluster test. For plotting purposes only, some figures show thresholded CIs that reveal only the portion determined to be significant by the cluster test (specifically, Figure 5a and Supplementary Figure S3). For analysis purposes, however, the raw, continuous NCI was always used. No analysis was based on thresholded behavioral or neuronal CIs, although some analyses are restricted to those neurons whose NCI had regions that surpassed the statistical threshold for significance.
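The core of the cluster test, finding connected supra-threshold regions and keeping those above a minimal size, can be sketched without any image-processing library. This is a simplified stand-in for the Chauvin et al. (2005) test: it takes the minimal cluster size as an input (748 pixels for t=2.3, as stated above) rather than deriving it from the smoothness of the random field, and it assumes positive clusters only and 4-connectivity.

```python
import numpy as np
from collections import deque

def significant_clusters(t_map, t_thresh=2.3, min_size=748):
    """Sizes of 4-connected clusters with t > t_thresh and >= min_size pixels."""
    above = np.asarray(t_map) > t_thresh
    seen = np.zeros_like(above, dtype=bool)
    h, w = above.shape
    sizes = []
    for r0 in range(h):
        for c0 in range(w):
            if not above[r0, c0] or seen[r0, c0]:
                continue
            # Breadth-first flood fill of one supra-threshold cluster.
            seen[r0, c0] = True
            queue, size = deque([(r0, c0)]), 0
            while queue:
                r, c = queue.popleft()
                size += 1
                for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                    if 0 <= rr < h and 0 <= cc < w and above[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
            if size >= min_size:
                sizes.append(size)
    return sizes

def nci_is_significant(t_map, **kwargs):
    """An NCI counts as significant if at least one cluster passes."""
    return len(significant_clusters(t_map, **kwargs)) > 0
```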

Data analysis: Spikes

Only single units with an average firing rate of at least 0.5 Hz (over the entire task) were considered. Only correct trials were considered, and all raster plots show only correct trials. The first 10 trials of the first block were also discarded. Trials were aligned to stimulus onset, except when comparing the baseline to the scramble response, for which trials were aligned to scramble onset (which precedes stimulus onset). Statistical comparisons between firing rates in response to different stimuli were based on the total number of spikes produced by each unit in a 1s interval starting 250ms after stimulus onset. Pairwise comparisons were made using two-tailed t-tests at P<0.05, Bonferroni-corrected for multiple comparisons where necessary. Average firing rates (PSTHs) were computed by counting spikes across all trials in consecutive 250ms bins. To convert the PSTH to an instantaneous firing rate, we used a Gaussian kernel with σ=300ms (for plotting purposes only; all statistics are based on the raw counts).
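The spike-count window, PSTH, and plotting-only smoothing described above might be implemented as below. Spike and onset times are assumed to be in seconds; the PSTH range and the choice to smooth the binned rate (rather than the raw spike train) are assumptions for illustration.

```python
import numpy as np

def window_counts(spike_times_s, onsets_s, start_s=0.25, length_s=1.0):
    """Spike count per trial in [onset + start_s, onset + start_s + length_s)."""
    spikes = np.sort(np.asarray(spike_times_s, dtype=float))
    lo = np.asarray(onsets_s, dtype=float) + start_s
    return (np.searchsorted(spikes, lo + length_s, side="left")
            - np.searchsorted(spikes, lo, side="left"))

def psth(spike_times_s, onsets_s, t_min=-1.0, t_max=2.0, bin_s=0.25):
    """Trial-averaged firing rate (Hz) in consecutive bins relative to onset."""
    edges = np.arange(t_min, t_max + bin_s / 2, bin_s)
    spikes = np.asarray(spike_times_s, dtype=float)
    counts = sum(np.histogram(spikes - on, bins=edges)[0] for on in onsets_s)
    return edges, counts / (len(onsets_s) * bin_s)

def smooth_psth(rate, bin_s=0.25, sigma_s=0.3):
    """Gaussian smoothing of a binned rate (sigma = 300 ms), for plotting only."""
    sigma_bins = sigma_s / bin_s
    half = int(np.ceil(3 * sigma_bins))
    x = np.arange(-half, half + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma_bins ** 2))
    kernel /= kernel.sum()
    return np.convolve(rate, kernel, mode="same")
```

Per-trial counts from `window_counts` would feed the pairwise t-tests, while `smooth_psth` only affects the plotted curves.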

Statistical tests

The 2-way ANOVAs quantifying the difference in NCIs between the ASD and control groups were mixed-model ANOVAs with cell number as a random factor nested within the fixed factor subject group; ROI was a fixed factor. Cell number was treated as a random factor because it is not known a priori how many significant cells will be found in each recording session. The 2-way ANOVAs quantifying behavior (BCI and RT) had only fixed factors (subject group and ROI).

All data analysis was performed using custom written routines in MATLAB. All errors are ±s.e.m. unless specified otherwise. All P-values are from two-tailed t-tests unless specified otherwise.

Supplementary Material

Highlights.

We recorded single neurons from the amygdala in two patients with autism

Some amygdala neurons in autism patients were hypersensitive to the mouth region of faces

These same neurons showed reduced sensitivity to the eye region of faces

Only a specific population of amygdala neurons appears abnormal in autism

Acknowledgments

We thank all patients and their families for their help in conducting the studies, Lynn Paul, Daniel Kennedy and Christina Corsello for performing ADOS, Christopher Heller for neurosurgical implantation of some of our subjects, Linda Philpott and William Sutherling for neuropsychological assessment, and the staff of the Huntington Memorial Hospital for their support with the studies. We also thank Erin Schuman for providing some of the electrophysiology equipment, and Frederic Gosselin, Michael Spezio, Julien Dubois, and Jeffrey Wertheimer for discussion. This research was made possible by funding from the Simons Foundation (R.A.), the Gordon and Betty Moore Foundation (R.A.), the Max Planck Society (U.R.), the Cedars-Sinai Medical Center (U.R., A.M.), a fellowship from Autism Speaks (O.T.), and a Conte Center from NIMH (R.A.).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Bibliography

- Adolphs R. What does the amygdala contribute to social cognition? Annals of the New York Academy of Sciences. 2010;1191:42–61. doi: 10.1111/j.1749-6632.2010.05445.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086. [DOI] [PubMed] [Google Scholar]

- Adolphs R, Spezio ML, Parlier M, Piven J. Distinct face-processing strategies in parents of autistic children. Current Biology. 2008;18:1090–1093. doi: 10.1016/j.cub.2008.06.073. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Amaral DG, Schumann CM, Nordahl CW. Neuroanatomy of autism. Trends in Neurosciences. 2008;31:137–145. doi: 10.1016/j.tins.2007.12.005. [DOI] [PubMed] [Google Scholar]

- Anderson JS, Druzgal TJ, Froehlich A, DuBray MB, Lange N, Alexander AL, Abildskov T, Nielsen JA, Cariello AN, Cooperrider JR, et al. Decreased interhemispheric functional connectivity in autism. Cerebral Cortex. 2010 doi: 10.1093/cercor/bhq190. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baron-Cohen S, Ring HA, Bullmore ET, Wheelwright S, Ashwin C, Williams SC. The amygdala theory of autism. Neurosci Biobehav Rev. 2000;24:355–364. doi: 10.1016/s0149-7634(00)00011-7. [DOI] [PubMed] [Google Scholar]

- Bauman M, Kemper TL. Histoanatomic observations of the brain in early infantile autism. Neurology. 1985;35:866–874. doi: 10.1212/wnl.35.6.866. [DOI] [PubMed] [Google Scholar]

- Chauvin A, Worsley K, Schyns P, Arguin M, Gosselin F. Accurate statistical tests for smooth classification images. Journal of Vision. 2005;5:659–667. doi: 10.1167/5.9.1. [DOI] [PubMed] [Google Scholar]

- Chevallier C, Kohls G, Troiani V, Brodkin ES, Schultz RT. The social motivation theory of autism. TICS. 2012;16:231–239. doi: 10.1016/j.tics.2012.02.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Courchesne E. Brainstem, cerebellar and limbic neuroanatomical abnormalities in autism. Current Opinion in Neurobiology. 1997;7:269–278. doi: 10.1016/s0959-4388(97)80016-5.

- Dalton KM, Nacewicz BM, Alexander AL, Davidson RJ. Gaze-fixation, brain activation, and amygdala volume in unaffected siblings of individuals with autism. Biological Psychiatry. 2007;61:512–520. doi: 10.1016/j.biopsych.2006.05.019.

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, Alexander AL, Davidson RJ. Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience. 2005;8:519–526. doi: 10.1038/nn1421.

- Dinstein I, Heeger DJ, Lorenzi L, Minshew NJ, Malach R, Behrmann M. Unreliable evoked responses in autism. Neuron. 2012;75:981–991. doi: 10.1016/j.neuron.2012.07.026.

- Ecker C, Suckling J, Deoni SC, Lombardo MV, Bullmore ET, Baron-Cohen S, Catani M, Jezzard P, Barnes A, Bailey AJ, et al. Brain anatomy and its relationship to behavior in adults with autism spectrum disorder. Archives of General Psychiatry. 2012;69:195–209. doi: 10.1001/archgenpsychiatry.2011.1251.

- Fried I, MacDonald KA, Wilson CL. Single neuron activity in human hippocampus and amygdala during recognition of faces and objects. Neuron. 1997;18:753–765. doi: 10.1016/s0896-6273(00)80315-3.

- Gamer M, Buechel C. Amygdala activation predicts gaze toward fearful eyes. The Journal of Neuroscience. 2009;29:9123–9126. doi: 10.1523/JNEUROSCI.1883-09.2009.

- Geschwind DH, Levitt P. Autism spectrum disorders: developmental disconnection syndromes. Current Opinion in Neurobiology. 2007;17:103–111. doi: 10.1016/j.conb.2007.01.009.

- Gosselin F, Schyns PG. Bubbles: a technique to reveal the use of information in recognition tasks. Vision Research. 2001;41:2261–2271. doi: 10.1016/s0042-6989(01)00097-9.

- Gosselin F, Spezio ML, Tranel D, Adolphs R. Asymmetrical use of eye information from faces following unilateral amygdala damage. Social Cognitive and Affective Neuroscience. 2011;6:330–337. doi: 10.1093/scan/nsq040.

- Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG. Neural responses to facial expression and face identity in the monkey amygdala. Journal of Neurophysiology. 2007;97:1671–1683. doi: 10.1152/jn.00714.2006.

- Gotts SJ, Simmons WK, Milbury LA, Wallace GL, Cox RW, Martin A. Fractionation of social brain circuits in autism spectrum disorders. Brain. 2012;135:2711–2725. doi: 10.1093/brain/aws160.

- Hadj-Bouziane F, Liu N, Bell AH, Gothard KM, Luh W-M, Tootell RBH, Murray EA, Ungerleider LG. Amygdala lesions disrupt modulation of functional MRI activity evoked by facial expression in the monkey inferior temporal cortex. Proceedings of the National Academy of Sciences of the United States of America. 2012. doi: 10.1073/pnas.1218406109.

- Harms MB, Martin A, Wallace GL. Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychology Review. 2010;20:290–322. doi: 10.1007/s11065-010-9138-6.

- Just MA, Cherkassky VL, Keller TA, Kana RK, Minshew NJ. Functional and anatomical cortical underconnectivity in autism: evidence from an FMRI study of an executive function task and corpus callosum morphometry. Cerebral Cortex. 2007;17:951–961. doi: 10.1093/cercor/bhl006.

- Kennedy DP, Adolphs R. Perception of emotions from facial expressions in high-functioning adults with autism. Neuropsychologia. 2012;50:3313–3319. doi: 10.1016/j.neuropsychologia.2012.09.038.

- Kennedy DP, Redcay E, Courchesne E. Failing to deactivate: resting functional abnormalities in autism. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:8275–8280. doi: 10.1073/pnas.0600674103.

- Kleinhans N, Richards T, Johnson LC, Weaver KE, Greenson J, Dawson G, Aylward E. fMRI evidence of neural abnormalities in the subcortical face processing system in ASD. NeuroImage. 2011;54:697–704. doi: 10.1016/j.neuroimage.2010.07.037.

- Kliemann D, Dziobek I, Hatri A, Baudewig J, Heekeren HR. The role of the amygdala in atypical gaze on emotional faces in autism spectrum disorders. The Journal of Neuroscience. 2012;32:9469–9476. doi: 10.1523/JNEUROSCI.5294-11.2012.

- Kliemann D, Dziobek I, Hatri A, Steimke R, Heekeren HR. Atypical reflexive gaze patterns on emotional faces in autism spectrum disorders. The Journal of Neuroscience. 2010;30:12281–12287. doi: 10.1523/JNEUROSCI.0688-10.2010.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.

- Kuraoka K, Nakamura K. Responses of single neurons in monkey amygdala to facial and vocal emotions. Journal of Neurophysiology. 2007;97:1379–1387. doi: 10.1152/jn.00464.2006.

- Losh M, Adolphs R, Poe M, Couture S, Penn D, Baranek G, Piven J. Neuropsychological profile of autism and broad autism phenotype. Archives of General Psychiatry. 2009;66:518–526. doi: 10.1001/archgenpsychiatry.2009.34.

- Markram K, Markram H. The intense world theory - a unifying theory of the neurobiology of autism. Frontiers in Human Neuroscience. 2010;4:224. doi: 10.3389/fnhum.2010.00224.

- Neumann D, Spezio ML, Piven J, Adolphs R. Looking you in the mouth: abnormal gaze in autism resulting from impaired top-down modulation of visual attention. Social Cognitive and Affective Neuroscience. 2006;1:194–202. doi: 10.1093/scan/nsl030.

- Ogai M, Matsumoto H, Suzuki K, Ozawa F, Fukuda R, Uchiyama I, Suckling J, Isoda H, Mori N, Takei N. fMRI study of recognition of facial expressions in high-functioning autistic patients. NeuroReport. 2003;14:559–563. doi: 10.1097/00001756-200303240-00006.

- Oya H, Kawasaki H, Dahdaleh NS, Wemmie JA, Howard MA 3rd. Stereotactic atlas-based depth electrode localization in the human amygdala. Stereotactic and Functional Neurosurgery. 2009;87:219–228. doi: 10.1159/000225975.

- Peck CJ, Lau B, Salzman CD. The primate amygdala combines information about space and value. Nature Neuroscience. 2013;16:340–348. doi: 10.1038/nn.3328.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Philip RCM, Dauvermann MR, Whalley HC, Baynham K, Lawrie SM, Stanfield AC. A systematic review and meta-analysis of the fMRI investigation of autism spectrum disorders. Neuroscience and Biobehavioral Reviews. 2012;36:901–942. doi: 10.1016/j.neubiorev.2011.10.008.

- Piven J, Arndt S, Bailey J, Havercamp S, Andreasen N, Palmer P. An MRI study of brain size in autism. American Journal of Psychiatry. 1995;152:1145–1149. doi: 10.1176/ajp.152.8.1145.

- Rutishauser U, Ross IB, Mamelak AN, Schuman EM. Human memory strength is predicted by theta-frequency phase-locking of single neurons. Nature. 2010;464:903–907. doi: 10.1038/nature08860.

- Rutishauser U, Schuman E, Mamelak AN. Online detection and sorting of extracellularly recorded action potentials in human medial temporal lobe recordings, in vivo. Journal of Neuroscience Methods. 2006;154:204–224. doi: 10.1016/j.jneumeth.2005.12.033.

- Rutishauser U, Tudusciuc O, Neumann D, Mamelak A, Heller AC, Ross IB, Philpott L, Sutherling W, Adolphs R. Single-unit responses selective for whole faces in the human amygdala. Current Biology. 2011;21:1654–1660. doi: 10.1016/j.cub.2011.08.035.

- Sansa G, Carlson C, Doyle W, Weiner HL, Bluvstein J, Barr W, Devinsky O. Medically refractory epilepsy in autism. Epilepsia. 2011. doi: 10.1111/j.1528-1167.2011.03069.x.

- Schumann CM, Amaral DG. Stereological analysis of amygdala neuron number in autism. The Journal of Neuroscience. 2006;26:7674–7679. doi: 10.1523/JNEUROSCI.1285-06.2006.

- Schumann CM, Hamstra J, Goodlin-Jones BL, Lotspeich L, Kwon H, Buonocore MH, Lammers CR, Reiss AL, Amaral DG. The amygdala is enlarged in children but not adolescents with autism; the hippocampus is enlarged at all ages. The Journal of Neuroscience. 2004;24:6392–6401. doi: 10.1523/JNEUROSCI.1297-04.2004.

- Spezio ML, Adolphs R, Hurley RS, Piven J. Abnormal use of facial information in high-functioning autism. Journal of Autism and Developmental Disorders. 2007a;37:929–939. doi: 10.1007/s10803-006-0232-9.

- Spezio ML, Adolphs R, Hurley RS, Piven J. Analysis of face gaze in autism using “Bubbles”. Neuropsychologia. 2007b;45:144–151. doi: 10.1016/j.neuropsychologia.2006.04.027.

- Tranel D, Damasio AR, Damasio H. Intact recognition of facial expression, gender, and age in patients with impaired recognition of face identity. Neurology. 1988;38:690–696. doi: 10.1212/wnl.38.5.690.

- Tyszka JM, Kennedy DP, Paul LK, Adolphs R. Largely intact patterns of resting-state functional connectivity in high-functioning autism. Cerebral Cortex. 2013. In press. doi: 10.1093/cercor/bht040.

- Viskontas IV, Ekstrom AD, Wilson CL, Fried I. Characterizing interneuron and pyramidal cells in the human medial temporal lobe in vivo using extracellular recordings. Hippocampus. 2007;17:49–57. doi: 10.1002/hipo.20241.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience. 2004;7:1271–1278. doi: 10.1038/nn1341.

- Yizhar O, Fenno LE, Prigge M, Schneider F, Davidson TJ, O’Shea DJ, Sohal VS, Goshen I, Finkelstein J, Paz JT, et al. Neocortical excitation/inhibition balance in information processing and social dysfunction. Nature. 2011;477:171–178. doi: 10.1038/nature10360.

- Zhang B, Noble PL, Winslow JT, Pine DS, Nelson EE. Amygdala volume predicts patterns of eye fixation in rhesus monkeys. Behavioural Brain Research. 2012;229:433–437. doi: 10.1016/j.bbr.2012.01.009.
