Abstract

The purpose of this study was to determine the effect of the addition of binary vibrotactile stimulation to continuous auditory feedback (vowel synthesis) for human-machine-interface (HMI) control. Sixteen healthy participants controlled facial surface electromyography to achieve two-dimensional (2D) targets (vowels). Eight participants used only real-time auditory feedback to locate targets whereas the other eight participants were additionally alerted to having achieved targets with confirmatory vibrotactile stimulation at the index finger. All participants trained using their assigned feedback modality (auditory alone or combined auditory and vibrotactile) over three sessions on three days and completed a fourth session on the third day using novel targets to assess generalization. Analyses of variance performed on the 1) percentage of targets reached and 2) percentage of trial time at the target revealed a main effect for feedback modality: participants using combined auditory and vibrotactile feedback performed significantly better than those using auditory feedback alone. No effect was found for session or the interaction of feedback modality and session, indicating a successful generalization to novel targets but lack of improvement over training sessions. Future research is necessary to determine the cognitive cost associated with combined auditory and vibrotactile feedback during HMI control.

Keywords: human-machine-interfaces, vibrotactile, auditory, electromyography

Introduction

Human-machine interfaces (HMIs) translate user intent into control signals used to operate machines such as computers or assistive devices. Many current HMIs can provide a means of communication for patients with locked-in syndrome who have little or no remaining motor function [1-4]. Despite this, HMIs are not widely used outside of specialized clinical or research settings because they often perform poorly in everyday situations.

In research settings, multiple HMIs have achieved successful real-time two-dimensional (2D) control, such as moving a computer cursor on a screen using measurements of brain activity [5-8]. In achieving this type of real-time closed-loop control of advanced HMIs, feedback from the HMI to the user is essential. Visual feedback is the most often used feedback modality, offering intuitive control and high performance. Despite this, there are some substantial disadvantages of employing visual feedback. Visual feedback systems can be cumbersome since they often require an external monitor. Additionally, use of visual feedback requires the user to exert constant visual attention. For instance, performance using a visually guided HMI has been shown to substantially decrease in the presence of distracting visual stimuli [9]. Thus, visual feedback may not be ideal for practical HMIs that need to be effective in situations where visual attention may be needed for other tasks. Use of alternative feedback modalities such as auditory or vibrotactile feedback may facilitate the translation of HMIs from research laboratories to patient homes.

Unfortunately, previous use of auditory feedback for HMI control has produced mixed results, depending on the nature of both the HMI and the auditory feedback provided to the user [10-16]. Many auditory-based HMIs rely on an elicited brain response to make discrete decisions (e.g., P300). The participant listens for a sound with known characteristics, and when an auditory stimulus that matches these characteristics is presented, a measurable brain response is evoked that is used to control the interface. These studies have shown that individuals can achieve control of HMIs using only auditory stimuli [10-12, 16]. However, only a few studies have used continuous auditory feedback for control of HMIs [13, 15, 17, 18]. Although some of these systems have produced somewhat promising results, all of them are outperformed by similar HMIs that use visual feedback.

For instance, Nijboer et al. [13] asked healthy individuals to control their sensorimotor rhythms as measured with electroencephalography (EEG) in real-time using either auditory or visual feedback. Participants were given either visual feedback in the form of a cursor moving up and down on a screen or auditory feedback in the form of increases and decreases in sound level. The average performance of participants given auditory feedback was 56%, compared with 74% in the participants given visual feedback. Similarly, Pham et al. [15] investigated healthy participants’ ability to control slow cortical potentials (SCP) using either continuous auditory feedback consisting of a tone with increasing or decreasing frequency, or continuous visual feedback consisting of a cursor moving up and down on a screen. Although participants were able to use auditory feedback to control the HMI with SCPs, control was more accurate using visual feedback. These studies suggest that auditory feedback for HMI control is possible, but that simple strategies in which feedback is provided in the form of changes in sound amplitude and frequency (pitch) that are linearly mapped to the user's motor intent may not be adequate to achieve the control possible with visual feedback.

There is some evidence that leveraging human speech perception may result in more effective auditory feedback for HMI control [17, 18]. Guenther et al. [17] trained an individual with locked-in syndrome to actively control vowel synthesis using signals measured with intracortical electrodes. Control of brain activity in 2D was mapped to the first and second formants (f1 and f2) of real-time vowel synthesis. By placing targets at f1-f2 values associated with American English vowels, they were able to exploit the perceptual magnet effect, in which continuous changes are mapped to distinct learned categories (i.e. vowels) [19]. Similarly, Larson et al. [18] asked participants to perform 2D control of vowel synthesis using surface electromyography (sEMG). Participants (native American English speakers) were presented targets that either corresponded to vowels from American English (categorical) or vowel-like sounds not part of American English (non-categorical). The authors found that individuals given categorical auditory targets performed significantly better than those given non-categorical targets at reaching trained targets as well as reaching novel untrained targets (auditory-motor generalization). These results suggest that auditory feedback implemented via vowel synthesis may provide effective feedback for HMI control.

Another appealing feedback modality for HMIs is vibrotactile stimulation. Like auditory feedback, it is simple to implement, safe, does not rely on constant visual attention [20], and has been previously used successfully in HMIs [9, 21-23]. Muller-Putz et al. [22] showed that when a participant attended to one of two tactors, a steady-state somatosensory evoked potential could be extracted to control an HMI. Similarly, work has shown that vibrotactile stimulation can be used to evoke a P300 event-related potential [21, 23]. Other studies have instead used vibrotactile stimulation as feedback for continuous HMI control.

Various levels of performance have been achieved using vibrotactile feedback as a primary feedback modality or as an adjunct to visual feedback. Cincotti et al. [9] used directional vibrotactile feedback (stimulating either the left or right of the participant) for EEG-based HMI control and showed similar performance using vibrotactile feedback relative to visual feedback. In additional work, Chatterjee et al. [24] asked participants to perform motor imagery of either their left or right hand using vibrotactile feedback consisting of a frequency modulated pulse train; increases in right hand motor imagery corresponded to increases in pulse frequency. Subjects achieved an average performance of 56% with a maximum of 72%. This result is somewhat promising; however, amplitude modulated vibrotactile stimulation has been shown to provide superior HMI control compared to pulse train frequency modulated vibrotactile stimulation [25]. This suggests that even higher performance could be attained with a similar system using alternative vibrotactile stimulation strategies. In sum, these studies suggest that vibrotactile stimulation may be an effective feedback modality for HMI control. Additionally, a high level of success has been shown for using vibrotactile stimulation to augment visual feedback [26-30] for HMI control. Thus, use of vibrotactile feedback may be especially beneficial when coupled with auditory feedback to develop an HMI based on multimodal (audio-tactile) feedback.

In fact, many previous studies have explored the use of multiple feedback modalities [15, 26-33]. Simple tasks such as reach, grasp, or object manipulation that employ both visual and tactile/haptic feedback have shown the most promising effects of multimodal feedback [26-28, 32, 33], while other studies have shown deterioration of performance [15, 30, 31]. For example, Rosati et al. [34] found that combining auditory and visual feedback resulted in improved performance during learning of tracking motion exercises. However, they found that performance worsened when multiple visual feedback paradigms were presented, demonstrating the advantage of multimodal feedback while highlighting the importance of not oversaturating sensory channels. This wide range of results is likely due to the nature of the underlying tasks as well as the particular formulation of the feedback modalities. So far, no studies have directly examined the effectiveness of combining vibrotactile with auditory feedback for HMI control.

The goal of this study was to assess the effect of adding binary vibrotactile stimulation to continuous auditory feedback (vowel synthesis) for HMI control. The present study expanded a previously designed auditory-based HMI [18] by adding vibrotactile feedback. The task consisted of trying to achieve categorical targets (American English Vowels) using 2D control of facial sEMG [18]. For simplicity, the HMI system employed in this study utilized sEMG for control: a safe, easy, and non-invasive technique to measure the electrical activity of muscles [35]. The impact of augmenting auditory feedback with binary vibrotactile stimulation for HMI control was explored by comparing performance of individuals using only auditory feedback to the performance of individuals using combined auditory and vibrotactile feedback. We hypothesized that the multimodal vibrotactile and auditory feedback would lead to higher performance than auditory feedback alone.

Methods

Participants

Sixteen healthy young adults participated in the experiment. All subjects were native speakers of American English with no history of speech, language, or hearing problems. Their average age was 21.5 years (STD = 2.6). Participants were randomly divided into two experimental groups: one received auditory and vibrotactile feedback and the other received only auditory feedback. Each group consisted of eight participants (four female). All participants provided written consent in compliance with the Boston University Institutional Review Board.

sEMG Data Acquisition

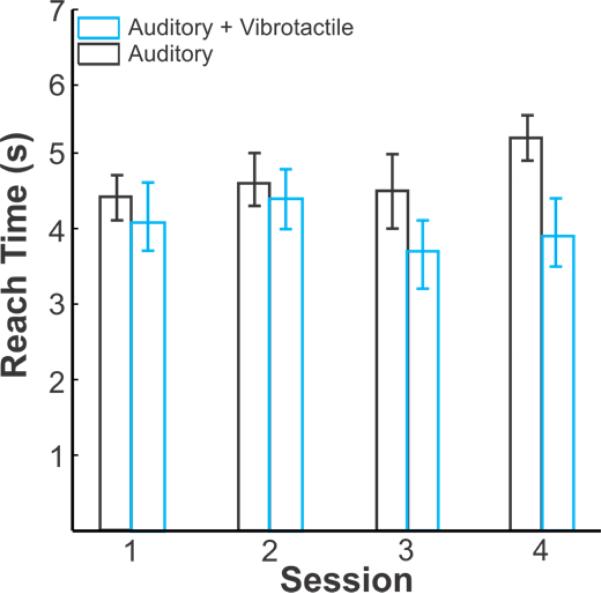

Two double differential sEMG electrodes (Delsys DE3.1) were placed over the left and right sides of the orbicularis oris muscle (see Figure 1) such that the electrode bars were perpendicular to the muscle fibers. A ground electrode was placed on the spinous process of C7. The skin was prepared with alcohol and peeling (exfoliation) to reduce the skin-electrode impedance [36]. The sEMG signals were pre-amplified (1000x) and filtered using a Delsys Bagnoli system (Delsys, Boston MA) with low and high cutoffs of 20 Hz and 450 Hz, respectively. sEMG signals were digitized at a sampling rate of 44,100 Hz using either a Fast Track Pro USB (M-Audio, Inc., USA) or a FP10 (Presonus Audio Electronics, Inc., Baton Rouge LA). Both systems have comparable input specifications.

Figure 1.

Electrodes were placed over the right and left side of the participant's orbicularis oris muscle.

The sEMG signals were windowed using a 2048-sample Hanning window. The power estimates of the left and right sEMG channels within each window were mapped to the f1-f2 (first and second formants) space by using the maximum voluntary contractions (MVCs) of the left and right sides of the orbicularis oris muscle. The MVCs were calculated as the maximum power from the left and right sEMG channels over four maximal contractions. The f1 axis (300 – 1200 Hz) and the f2 axis (600 – 3400 Hz) were linearly mapped to 10% – 85% of the MVC for the right and left sEMG channels, respectively. The sEMG signal was smoothed over time using a decaying exponential filter with a 1 second time constant.
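The mapping from windowed sEMG power to formant frequencies can be illustrated with a minimal sketch. The window length, formant ranges, MVC percentages, and time constant are taken from the text; everything else is an assumption made for illustration: power is defined here as the mean squared windowed amplitude, values outside 10% – 85% of MVC are clipped to the axis limits, smoothing is applied once per frame, and all function and class names are hypothetical. This is not the authors' implementation.

```python
import math

FS = 44100                  # sampling rate (Hz), from the text
WINDOW = 2048               # window length in samples, from the text
F1_RANGE = (300.0, 1200.0)  # Hz, driven by the right sEMG channel
F2_RANGE = (600.0, 3400.0)  # Hz, driven by the left sEMG channel
MVC_RANGE = (0.10, 0.85)    # fraction of MVC power spanned by each axis
TAU = 1.0                   # smoothing time constant (s), from the text


def hann(n):
    """Symmetric Hann (Hanning) window of length n."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (n - 1)) for i in range(n)]


def window_power(samples, win):
    """Assumed power estimate: mean squared amplitude of the windowed frame."""
    return sum((s * w) ** 2 for s, w in zip(samples, win)) / len(samples)


def power_to_formant(power, mvc_power, f_range):
    """Linearly map 10%-85% of MVC power onto a formant range.

    Clipping outside the range is an assumption; the text does not say
    how out-of-range values were handled.
    """
    lo, hi = MVC_RANGE
    frac = power / mvc_power
    t = min(max((frac - lo) / (hi - lo), 0.0), 1.0)
    return f_range[0] + t * (f_range[1] - f_range[0])


class ExpSmoother:
    """First-order exponential smoother with time constant tau (seconds)."""

    def __init__(self, tau, dt):
        self.alpha = 1.0 - math.exp(-dt / tau)  # per-frame update weight
        self.state = None

    def update(self, x):
        if self.state is None:
            self.state = x
        else:
            self.state += self.alpha * (x - self.state)
        return self.state
```

With non-overlapping frames (also an assumption), the frame period would be `WINDOW / FS`, roughly 46 ms, so `ExpSmoother(TAU, WINDOW / FS)` moves about 4.5% of the way toward each new power estimate.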

Auditory and Vibrotactile Feedback

Auditory feedback consisted of a continuous vowel sound (with varying f1 and f2) generated using the Synthesis ToolKit [37] implementation of a Klatt Synthesizer [38]. The first and second formants of the vowel synthesized corresponded to power of the right and left sEMG, respectively. Auditory feedback was played over a small speaker placed in front of the participant at a comfortable listening level.

Vibrotactile feedback was generated using a precision haptic 10 mm linear resonant actuator (LRA; Precision Microdrives Limited, England) placed on the distal pad of the right index finger of the participant. The LRA was driven by a sine wave at a frequency of 175 Hz (the resonant frequency of the LRA). The driving sine wave signal was digitally generated using the Synthesis ToolKit [37]. This stimulation frequency is easily perceptible on the distal pad of the finger [39] and was reported as such by all participants. The digital signal was converted to an analog driving output using the Presonus FP10 at 44,100 Hz. The signal was then amplified using the ART Pro MPA II as well as the TA105 (Trust Automation, Inc., San Luis Obispo CA). Vibrotactile stimulation was presented only while the participant was at the target.
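The binary nature of the vibrotactile signal can be sketched as a gated sine generator. The 175 Hz drive frequency and 44,100 Hz output rate come from the text; the block-based interface, the `amplitude` parameter, and the function name are hypothetical, and the study itself generated the signal with the Synthesis ToolKit rather than with code like this.

```python
import math

FS = 44100       # output sampling rate (Hz), from the text
F_DRIVE = 175.0  # LRA resonant frequency (Hz), from the text


def drive_block(n_samples, start_index, on_target, amplitude=1.0):
    """Return one block of the LRA drive signal.

    The output is a 175 Hz sine while the participant is at the target and
    silence otherwise. start_index is the running sample count, so the
    phase stays continuous across consecutive blocks.
    """
    if not on_target:
        return [0.0] * n_samples
    return [
        amplitude * math.sin(2.0 * math.pi * F_DRIVE * (start_index + i) / FS)
        for i in range(n_samples)
    ]
```

At these settings one period of the drive signal spans exactly 252 samples (44,100 / 175).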

Experimental Design

Participants completed four sessions over three consecutive days. Sessions lasted between 30-45 minutes depending on the individual's performance. The first three sessions were identical and served as training sessions. Participants were trained during the first three sessions to manipulate their sEMG to achieve the three training vowel targets /ɪ/, /u/, and /ɑ/ (e.g., “bit”, “boot”, and “pot”). The fourth session (generalization) occurred on the third day, immediately following session three. During the generalization session, participants were given three novel target vowels /i/, /æ/, and /o/ (e.g., “beat”, “bat”, and “boat”). The locations of the targets in the vowel space can be seen in Figure 2. Each session consisted of 40 trials for each of the three target vowels (total of 120 trials). At the beginning of each trial, the target vowel was played for two seconds while the token word was displayed in the center of the screen. After a two second gap, the participant was given 15 seconds to find and hold the target. The trial ended when the participant held the target vowel for one second or the 15 second time limit was reached. If the participant left the target vowel, the one second timer was reset; thus, a successful trial only ended when the target vowel was held continuously for one second. The token word remained in the center of the screen for the entire duration of the trial. See Figure 3 for trial diagram.
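The trial termination rule described above (one second of continuous hold, reset on leaving the target, 15 second limit) can be written as a small state machine. This is a sketch only: the per-frame boolean input, the `frame_rate` parameter, and the function name are assumptions, and frames are counted as integers to avoid floating-point drift in the hold timer.

```python
def run_trial(on_target_flags, frame_rate, hold_s=1.0, limit_s=15.0):
    """Simulate one trial from per-frame on-target flags.

    Returns (success, elapsed_seconds). The hold counter resets whenever
    the participant leaves the target, so success requires hold_s seconds
    of continuous time at the target before limit_s elapses.
    """
    hold_frames = round(hold_s * frame_rate)
    limit_frames = round(limit_s * frame_rate)
    flags = on_target_flags[:limit_frames]
    held = 0
    for frame, on_target in enumerate(flags, start=1):
        held = held + 1 if on_target else 0
        if held >= hold_frames:
            return True, frame / frame_rate
    return False, len(flags) / frame_rate


# Example at a hypothetical 10 frames per second: the participant reaches
# the target after 2 s, slips off after 0.5 s, then holds for a full second.
flags = [False] * 20 + [True] * 5 + [False] + [True] * 10
result = run_trial(flags, frame_rate=10)
```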

Figure 2.

Target locations in the vowel space. The x and y axes represent the frequency of the first and second formants respectively. Light pink ellipses (solid lines) denote the training target locations (/ɪ/, /u/, and /ɑ/) while the dark blue ellipses (dashed lines) denote the generalization target locations (/i/, /æ/, and /o/).

Figure 3.

Timing diagram for each trial. A two second cue was played followed by a two second pause. Continuous auditory feedback was then presented for 15 seconds or until the target was achieved and held for one second. There was a one second pause between trials.

Data Analysis

Participants were scored based on their ability to achieve each of the target locations within the trial period. “Performance” was calculated as the percentage of trials during each session for which the target vowel was achieved. Additionally, the metric “target time” was calculated as the mean percentage of time during each trial that the participant was able to hold the target vowel; this value can range from 0% (never achieving the target vowel) to nearly 100% (moving immediately to the target vowel and holding it for 1 second). Finally, “reach time,” the mean time needed to initially reach each target location, was calculated. Performance, target time, and reach time were computed off-line using custom software in MATLAB (Mathworks, Natick MA). Statistical analysis was performed using Minitab Statistical Software (Minitab Inc., State College, PA). A two-factor analysis of variance (ANOVA) was performed on performance, target time, and reach time to assess the effect of feedback modality (auditory alone vs. combined auditory and vibrotactile), session (1 – 4), and the interaction of feedback modality × session. All statistical analyses were performed using an alpha level of 0.05 for significance. The effect sizes were quantified using the squared partial curvilinear correlation (ηp²) [40].
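As an illustration of the three metrics, the following sketch computes them from per-trial records. The record layout (`success`, `duration`, `time_on_target`, `first_reach`) and the function name are hypothetical, and averaging reach time only over trials in which the target was reached at least once is an assumption, since the text does not state how unreached trials were treated. The study's own calculations were done in MATLAB.

```python
def session_metrics(trials):
    """Compute (performance %, target time %, reach time s) for one session.

    Each trial is a dict with:
      success        -- True if the target was held for the required second
      duration       -- trial length in seconds
      time_on_target -- total seconds spent at the target
      first_reach    -- seconds until the target was first reached, or None
    """
    n = len(trials)
    performance = 100.0 * sum(1 for t in trials if t["success"]) / n
    target_time = sum(100.0 * t["time_on_target"] / t["duration"] for t in trials) / n
    # Assumption: trials in which the target was never reached are excluded.
    reaches = [t["first_reach"] for t in trials if t["first_reach"] is not None]
    reach_time = sum(reaches) / len(reaches) if reaches else None
    return performance, target_time, reach_time


example = [
    {"success": True, "duration": 5.0, "time_on_target": 1.0, "first_reach": 4.0},
    {"success": False, "duration": 15.0, "time_on_target": 0.0, "first_reach": None},
]
metrics = session_metrics(example)
```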

Results

Figure 4 shows the mean performance as a function of session for the two feedback groups. Using combined auditory and vibrotactile feedback, participants were able to achieve an average performance of 76.6% (STD = 13.6) across all sessions. By comparison, participants using only auditory feedback achieved an average performance of 62.7% (STD = 17.6). The individual performances of participants using combined auditory and vibrotactile feedback ranged from 56.2% to 91.7%, while the individual performance scores for participants using only auditory feedback ranged from 49.8% to 79.2%. A two-factor ANOVA on performance (Table 1) revealed a main effect for feedback modality (p = 0.001): participants using combined auditory and vibrotactile feedback performed significantly better than those using auditory feedback alone. No significant effect was found for either session or the interaction of feedback modality and session (p > 0.1, both). The effect size (ηp²) for feedback modality was in the medium to large range (0.19) [40].

Figure 4.

Performance (% of targets achieved) as a function of session. Lighter (blue) bars indicate performance of participants given combined auditory and vibrotactile feedback. Darker (black) bars indicate performance of participants given only auditory feedback. Error bars represent one standard error. Sessions 1 – 3 were training sessions and session 4 was the generalization session, which consisted of novel targets. No paired (post hoc) tests were performed because there was no significant main effect of session.

Table 1.

Performance ANOVA

| Source | DF | ηp² | F | p |
|---|---|---|---|---|
| Feedback Modality | 1 | 0.19 | 12.86 | 0.001 |
| Session | 3 | 0.10 | 2.07 | 0.115 |
| Feedback Modality × Session | 3 | 0.03 | 0.50 | 0.685 |
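The effect sizes in Table 1 can be cross-checked against the reported F statistics using the standard identity ηp² = (F × DF_effect) / (F × DF_effect + DF_error). The error degrees of freedom are not reported in the text; the value of 56 used below is an assumption (16 participants × 4 sessions = 64 observations, minus 8 group-by-session cells) that happens to reproduce the tabulated values. The function name is hypothetical, and this is a consistency check rather than the analysis the authors ran in Minitab.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


DF_ERROR = 56  # assumed: 64 observations minus 8 cells; not stated in the text

# (source, DF, F) rows as reported in Table 1
table1 = [
    ("Feedback Modality", 1, 12.86),
    ("Session", 3, 2.07),
    ("Feedback Modality x Session", 3, 0.50),
]
effect_sizes = [round(partial_eta_squared(f, df, DF_ERROR), 2) for _, df, f in table1]
```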

The mean target time as a function of session for the two feedback groups can be seen in Figure 5. The mean target time across all sessions for participants using both auditory and vibrotactile feedback was 7.5% (STD = 2.3) whereas the mean target time across all sessions for participants using auditory feedback alone was 5.1% (STD = 2.5). Individual average target times ranged from 6.0% to 10.6% for participants using both auditory and vibrotactile feedback and 3.7% to 9.2% for participants using only auditory feedback. A two factor ANOVA of target time (Table 2) showed a significant effect of feedback modality (p < 0.001) but no effect of session or the interaction of feedback modality and session (p > 0.2, both). The effect size for feedback modality was in the large range (.22) [40]. Participants using combined auditory and vibrotactile feedback showed increased target times compared with participants using auditory feedback alone.

Figure 5.

Target Time (% of total trial time that target was held) as a function of session. Lighter (blue) bars indicate target time of participants given combined auditory and vibrotactile feedback. Darker (black) bars indicate target time of participants given only auditory feedback. Error bars represent one standard error. Sessions 1 – 3 were training sessions and session 4 was the generalization session, which consisted of novel targets. No paired (post hoc) tests were performed because there was no significant main effect of session.

Table 2.

Target Time ANOVA

| Source | DF | ηp² | F | p |
|---|---|---|---|---|
| Feedback Modality | 1 | 0.22 | 15.96 | <0.001 |
| Session | 3 | 0.07 | 1.43 | 0.245 |
| Feedback Modality × Session | 3 | 0.03 | 0.56 | 0.647 |

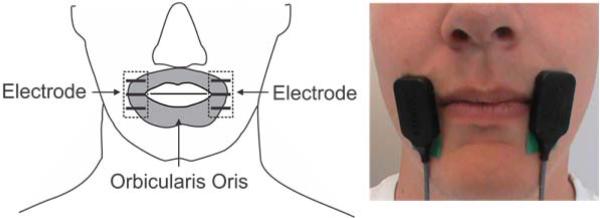

The average reach time is shown in Figure 6. Participants who received both auditory and vibrotactile feedback took an average of 4.08 seconds (STD = 1.25) to reach the target, while participants given only auditory feedback took 4.72 seconds. A two-factor ANOVA of reach time (Table 3) revealed a significant effect of feedback modality (p = 0.030) with a moderate effect size (ηp² = 0.081). Participants given both vibrotactile and auditory feedback achieved significantly lower reach times compared to participants given only auditory feedback. There was no significant effect of session or of the interaction of session and feedback modality.

Figure 6.

Reach Time as a function of session. Lighter (blue) bars indicate reach time of participants given combined auditory and vibrotactile feedback. Darker (black) bars indicate reach time of participants given only auditory feedback. Error bars represent one standard error. Sessions 1 – 3 were training sessions and session 4 was the generalization session, which consisted of novel targets.

Table 3.

Reach Time ANOVA

| Source | DF | ηp² | F | p |
|---|---|---|---|---|
| Feedback Modality | 1 | 0.08 | 4.94 | 0.030 |
| Session | 3 | 0.03 | 0.66 | 0.579 |
| Feedback Modality × Session | 3 | 0.04 | 0.84 | 0.479 |

Discussion

The goal of this study was to investigate the effect of adding binary vibrotactile feedback to an auditory-based HMI. We hypothesized that participants given combined auditory and vibrotactile feedback would perform better than participants given only auditory feedback. In fact, consistent with some previous studies incorporating multimodal feedback [26, 27, 32, 33], our results show that the addition of vibrotactile feedback significantly improved all three metrics studied: performance (the ability of individuals to achieve targets), target time (the ability of participants to hold at the target), and reach time (the time needed to get to the target).

Effects of Vibrotactile Stimulation

Compared to auditory feedback alone, combined auditory and vibrotactile feedback significantly increased both performance and target time and decreased reach time; i.e., participants were able to achieve a higher percentage of presented targets more quickly, and could hold those targets for a longer portion of the trial time. The increase in target time (the percentage of each trial that the target vowel was achieved) with the addition of vibrotactile feedback is likely a result of individuals’ ability to hold achieved targets once they have located them in the auditory space: once they find the target, vibrotactile stimulation signals that they should stop changing motor activity and hold their current activations steady. The increased number of achieved targets with the addition of vibrotactile feedback, and the fact that targets were achieved faster, is more interesting, since moving to and achieving targets happens prior to stimulation. While the lack of tactile stimulation prior to achieving a target provided subjects a form of feedback to alert them that they had not yet achieved a target, it is likely that participants were able to use confirmatory vibration after achieving targets in early trials to quickly learn auditory-motor mappings and thus improve performance in achieving targets in later trials.

These results are consistent with the findings of several studies that have shown increases in performance with combined continuous visual and haptic feedback [26-28, 32, 33]. These results however are in contrast with other previous studies that found no increase in performance when combining multiple feedback modalities [15]. This difference is likely due to the nature of the feedback used in each experiment. Studies that have shown decreased performance when using multimodal feedback often ask the user to attend to both feedback modalities simultaneously. For instance, Pham et al. [15] suggested that multiple simultaneous feedback modalities led to selective attention, decreasing performance.

The current study aimed to use two non-conflicting forms of feedback in an attempt to reduce the cognitive load of the participant. The primary (auditory) modality was used to locate targets in the perceptual space and confirmatory vibrotactile stimulation was used to signal task success to aid in holding the target once it was reached. In this way, participants could switch attention between the two modalities based on which was most salient and/or useful for the current portion of the task. To further achieve simplicity in feedback mappings, categorical auditory targets were chosen, which leverage the perceptual magnet effect for learned vowel targets [19]. These targets have previously been shown to be more effective and intuitive compared to other auditory feedback mappings [17, 18]. The increased performance of participants given vibrotactile and auditory feedback suggests that participants were not overburdened cognitively and were able to effectively exploit the advantages of the multimodal feedback.

Effect of Session

Some previous studies [17, 41] have shown that increased training time with augmentative feedback leads to increased performance. Although Figure 4 suggests a potential trend for learning in the auditory only group over sessions 1 – 3, statistical analysis did not show a significant effect of session or the interaction between session and feedback modality on any of the three metrics studied. Participants did not perform significantly better during later sessions, indicating that learning did not occur over multiple visits. These results suggest that the combination of auditory and vibrotactile feedback used by these participants allows for immediate utilization for motor tasks, without the need for an extensive training period. The lack of an effect of session also suggests high generalization from training to novel targets during session 4. Overall, these findings suggest that the auditory and tactile feedback modalities employed in this study are intuitive, simple to understand, and easily generalizable.

Clinical Implications

The present study demonstrates that combining auditory and vibrotactile feedback could be exploited to design HMIs with high performance and short training times. This finding has implications for the design of HMIs for practical applications. Performance when using HMIs, especially those that rely solely on auditory feedback, is currently too poor for widespread user acceptance. The current work shows that by adding simple binary vibrotactile feedback, auditory-based HMI performance can be significantly increased. In fact, the performance of the current system is comparable to performance using HMIs to control a cursor in 2D that rely on real-time visual feedback [5, 42-44]. When using the current system with auditory and vibrotactile feedback, participants were able to achieve an average of 76.6% of the targets. Performance using recorded brain signals (e.g., EEG, ECoG, and intracranial electrodes) to control 2D cursor movement with full visual feedback has ranged from 62.6% to 82.25% [5, 42-44]. While some of these studies used noisier control signals than the sEMG employed in the current study, the high performance achieved with audio and vibrotactile feedback is encouraging. Additionally, current HMIs can have very slow learning curves and can take a long time to achieve maximum performance [45]. By combining simple vibrotactile feedback with auditory feedback, higher performance may be available rapidly without the need for prolonged training. This may increase both the accessibility and acceptance of HMIs and is especially relevant when designing HMIs for at-home use. The observation that users were able to achieve more targets using auditory-tactile feedback (where reaching the targets occurred before the tactor vibrated) suggests it might even be advantageous to train subjects initially using multimodal feedback (e.g., auditory-tactile) even if the final HMI relies on feedback from only one sensory modality.

Another key disadvantage of current HMIs employing visual feedback is the intrusive hardware necessary to display the feedback. Visual feedback systems depend on conveying visual information to the user, which currently relies on use of an external monitor. This can be cumbersome and bulky and often hinders natural communication. Auditory and vibrotactile feedback are attractive, however, because they can be discreetly relayed to the user. Auditory feedback can be easily delivered by headphones; vibrotactile feedback can be delivered using small, lightweight tactors, including advanced designs that can cheaply provide independent modulation of both the amplitude and frequency of vibration [46]. Both auditory and vibrotactile feedback can be applied during everyday situations with minimal interference or intrusion. Thus, vibrotactile and audio feedback may be more intuitive for users and may reduce interference of the HMI with real-world communication. Future work will assess user preferences and determine the cognitive attention necessary to utilize auditory feedback with and without vibrotactile feedback compared with visual feedback.

Conclusion

This study investigated the effect of adding binary vibrotactile feedback to continuous auditory feedback in the form of vowel synthesis for HMI control. Using sEMG to control the HMI, participants trained over three sessions on three days and were tested on novel targets in a fourth session on the third day. The addition of vibrotactile feedback significantly improved both the ability of individuals to achieve targets and their ability to hold targets. No effect of session or the interaction between session and feedback modality was found, indicating that performance did not improve over the multiple sessions. Performance achieving targets was comparable to previous studies using visual feedback for 2D HMI control. Future research is necessary to determine the cognitive cost associated with combined auditory and vibrotactile feedback relative to visual feedback during HMI control.

Acknowledgements

The authors would like to thank Gabby Hands for assisting with participant recordings and Sylvan Favrot, PhD for help with software development. Portions of this work were funded by NIH NIDCD training grants T32DC000018 (EDL) and 1F32DC012456 (EDL) and Boston University.

References

- 1.Hinterberger T, Kubler A, Kaiser J, Neumann N, Birbaumer N. A brain-computer interface (BCI) for the locked-in: comparison of different EEG classifications for the thought translation device. Clin Neurophysiol. 2003 Mar;114:416–25. doi: 10.1016/s1388-2457(02)00411-x. [DOI] [PubMed] [Google Scholar]

- 2.Sellers EW, Donchin E. A P300-based brain-computer interface: initial tests by ALS patients. Clin Neurophysiol. 2006 Mar;117:538–48. doi: 10.1016/j.clinph.2005.06.027. [DOI] [PubMed] [Google Scholar]

- 3.Birbaumer N, Ghanayim N, Hinterberger T, Iversen I, Kotchoubey B, Kubler A, Perelmouter J, Taub E, Flor H. A spelling device for the paralysed. Nature. 1999 Mar 25;398:297–8. doi: 10.1038/18581.

- 4.Nijboer F, Sellers EW, Mellinger J, Jordan MA, Matuz T, Furdea A, Halder S, Mochty U, Krusienski DJ, Vaughan TM, Wolpaw JR, Birbaumer N, Kubler A. A P300-based brain-computer interface for people with amyotrophic lateral sclerosis. Clin Neurophysiol. 2008 Aug;119:1909–16. doi: 10.1016/j.clinph.2008.03.034.

- 5.Trejo LJ, Rosipal R, Matthews B. Brain-computer interfaces for 1-D and 2-D cursor control: designs using volitional control of the EEG spectrum or steady-state visual evoked potentials. IEEE Trans Neural Syst Rehabil Eng. 2006 Jun;14:225–9. doi: 10.1109/TNSRE.2006.875578.

- 6.Vaughan TM, McFarland DJ, Schalk G, Sarnacki WA, Krusienski DJ, Sellers EW, Wolpaw JR. The Wadsworth BCI Research and Development Program: at home with BCI. IEEE Trans Neural Syst Rehabil Eng. 2006 Jun;14:229–33. doi: 10.1109/TNSRE.2006.875577.

- 7.Birbaumer N, Kubler A, Ghanayim N, Hinterberger T, Perelmouter J, Kaiser J, Iversen I, Kotchoubey B, Neumann N, Flor H. The thought translation device (TTD) for completely paralyzed patients. IEEE Trans Rehabil Eng. 2000 Jun;8:190–3. doi: 10.1109/86.847812.

- 8.Blankertz B, Dornhege G, Krauledat M, Muller KR, Kunzmann V, Losch F, Curio G. The Berlin Brain-Computer Interface: EEG-based communication without subject training. IEEE Trans Neural Syst Rehabil Eng. 2006 Jun;14:147–52. doi: 10.1109/TNSRE.2006.875557.

- 9.Cincotti F, Kauhanen L, Aloise F, Palomaki T, Caporusso N, Jylanki P, Mattia D, Babiloni F, Vanacker G, Nuttin M, Marciani MG, Del RMJ. Vibrotactile feedback for brain-computer interface operation. Comput Intell Neurosci. 2007. doi: 10.1155/2007/48937.

- 10.Furdea A, Halder S, Krusienski DJ, Bross D, Nijboer F, Birbaumer N, Kubler A. An auditory oddball (P300) spelling system for brain-computer interfaces. Psychophysiology. 2009 May;46:617–25. doi: 10.1111/j.1469-8986.2008.00783.x.

- 11.Higashi H, Rutkowski TM, Washizawa Y, Cichocki A, Tanaka T. EEG auditory steady state responses classification for the novel BCI. Conf Proc IEEE Eng Med Biol Soc. 2011;2011:4576–9. doi: 10.1109/IEMBS.2011.6091133.

- 12.Lopez-Gordo MA, Fernandez E, Romero S, Pelayo F, Prieto A. An auditory brain-computer interface evoked by natural speech. J Neural Eng. 2012 Jun;9:036013. doi: 10.1088/1741-2560/9/3/036013.

- 13.Nijboer F, Furdea A, Gunst I, Mellinger J, McFarland DJ, Birbaumer N, Kubler A. An auditory brain-computer interface (BCI). J Neurosci Methods. 2008 Jan 15;167:43–50. doi: 10.1016/j.jneumeth.2007.02.009.

- 14.Oscari F, Secoli R, Avanzini F, Rosati G, Reinkensmeyer DJ. Substituting auditory for visual feedback to adapt to altered dynamic and kinematic environments during reaching. Exp Brain Res. 2012 Aug;221:33–41. doi: 10.1007/s00221-012-3144-2.

- 15.Pham M, Hinterberger T, Neumann N, Kubler A, Hofmayer N, Grether A, Wilhelm B, Vatine JJ, Birbaumer N. An auditory brain-computer interface based on the self-regulation of slow cortical potentials. Neurorehabil Neural Repair. 2005 Sep;19:206–18. doi: 10.1177/1545968305277628.

- 16.Schreuder M, Blankertz B, Tangermann M. A new auditory multi-class brain-computer interface paradigm: spatial hearing as an informative cue. PLoS One. 2010;5:e9813. doi: 10.1371/journal.pone.0009813.

- 17.Guenther FH, Brumberg JS, Wright EJ, Nieto-Castanon A, Tourville JA, Panko M, Law R, Siebert SA, Bartels JL, Andreasen DS, Ehirim P, Mao H, Kennedy PR. A wireless brain-machine interface for real-time speech synthesis. PLoS One. 2009;4:e8218. doi: 10.1371/journal.pone.0008218.

- 18.Larson E, Terry HP, Canevari MM, Stepp CE. Categorical vowel perception enhances the effectiveness and generalization of auditory feedback in human-machine-interfaces. PLoS One. 2013;8:e59860. doi: 10.1371/journal.pone.0059860.

- 19.Iverson P, Kuhl PK. Mapping the perceptual magnet effect for speech using signal detection theory and multidimensional scaling. J Acoust Soc Am. 1995 Jan;97:553–62. doi: 10.1121/1.412280.

- 20.Kaczmarek KA, Webster JG, Bach-y-Rita P, Tompkins WJ. Electrotactile and vibrotactile displays for sensory substitution systems. IEEE Trans Biomed Eng. 1991 Jan;38:1–16. doi: 10.1109/10.68204.

- 21.Brouwer AM, van Erp JB. A tactile P300 brain-computer interface. Front Neurosci. 2010;4:19. doi: 10.3389/fnins.2010.00019.

- 22.Muller-Putz GR, Scherer R, Neuper C, Pfurtscheller G. Steady-state somatosensory evoked potentials: suitable brain signals for brain-computer interfaces. IEEE Trans Neural Syst Rehabil Eng. 2006 Mar;14:30–7. doi: 10.1109/TNSRE.2005.863842.

- 23.van der Waal M, Severens M, Geuze J, Desain P. Introducing the tactile speller: an ERP-based brain-computer interface for communication. J Neural Eng. 2012 Aug;9:045002. doi: 10.1088/1741-2560/9/4/045002.

- 24.Chatterjee A, Aggarwal V, Ramos A, Acharya S, Thakor NV. A brain-computer interface with vibrotactile biofeedback for haptic information. J Neuroeng Rehabil. 2007;4:40. doi: 10.1186/1743-0003-4-40.

- 25.Stepp CE, Matsuoka Y. Vibrotactile sensory substitution for object manipulation: amplitude versus pulse train frequency modulation. IEEE Trans Neural Syst Rehabil Eng. 2012 Jan;20:31–7. doi: 10.1109/TNSRE.2011.2170856.

- 26.Huang FC, Gillespie RB, Kuo AD. Visual and haptic feedback contribute to tuning and online control during object manipulation. J Mot Behav. 2007 May;39:179–93. doi: 10.3200/JMBR.39.3.179-193.

- 27.Morris D, Hong T, Chang T, Salisbury K. Haptic Feedback Enhances Force Skill Learning. Second Joint EuroHaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems. 2007.

- 28.Stepp CE, Matsuoka Y. Object Manipulation Improvements with Single Session Training Outweigh the Differences among Stimulation Sites during Vibrotactile Feedback. IEEE Trans Neural Syst Rehabil Eng. 2011;19:677–685. doi: 10.1109/TNSRE.2011.2168981.

- 29.Suminski AJ, Tkach DC, Fagg AH, Hatsopoulos NG. Incorporating feedback from multiple sensory modalities enhances brain-machine interface control. J Neurosci. 2010 Dec 15;30:16777–87. doi: 10.1523/JNEUROSCI.3967-10.2010.

- 30.Vitense HS, Jacko JA, Emery VK. Multimodal feedback: an assessment of performance and mental workload. Ergonomics. 2003 Jan 15;46:68–87. doi: 10.1080/00140130303534.

- 31.Thurlings ME, Brouwer AM, Van Erp JB, Blankertz B, Werkhoven PJ. Does bimodal stimulus presentation increase ERP components usable in BCIs? J Neural Eng. 2012 Aug;9:045005. doi: 10.1088/1741-2560/9/4/045005.

- 32.Lee J-H, Spence C. Assessing the benefits of multimodal feedback on dual-task performance under demanding conditions. Proceedings of the 22nd British HCI Group Annual Conference on People and Computers: Culture, Creativity, Interaction. 2008;1:185–192.

- 33.Burke JL, Prewett MS, Gray AA, Yang L, Stilson FRB, Coovert MD, Elliott LR, Redden E. Comparing the Effects of Visual-Auditory and Visual-Tactile Feedback on User Performance: A Meta-analysis. Proceedings of the 8th international conference on Multimodal interfaces. 2006:108–117.

- 34.Rosati G, Oscari F, Spagnol S, Avanzini F, Masiero S. Effect of task-related continuous auditory feedback during learning of tracking motion exercises. J Neuroeng Rehabil. 2012 Oct 10;9:79. doi: 10.1186/1743-0003-9-79.

- 35.De Luca CJ. The use of surface electromyography in biomechanics. Journal of Applied Biomechanics. 1997;13:135–163.

- 36.Stepp CE. Surface electromyography for speech and swallowing systems: measurement, analysis, and interpretation. J Speech Lang Hear Res. 2012 Aug;55:1232–46. doi: 10.1044/1092-4388(2011/11-0214).

- 37.Scavone G, Cook P. RtMIDI, RtAudio, and a Synthesis (STK) Update. Proceedings of the International Computer Music Conference; Barcelona, Spain. 2005.

- 38.Klatt DH. Software for a cascade/parallel formant synthesizer. Journal of the Acoustical Society of America. 1980;67:971–995.

- 39.Verrillo RT. Vibrotactile thresholds measured at the finger. Perception and Psychophysics. 1971;9.

- 40.Witte RS, Witte JS. Statistics. 9th ed. J. Wiley & Sons; Hoboken, NJ: 2010.

- 41.Stepp CE, An Q, Matsuoka Y. Repeated training with augmentative vibrotactile feedback increases object manipulation performance. PLoS One. 2012;7:e32743. doi: 10.1371/journal.pone.0032743.

- 42.Kim SP, Simeral JD, Hochberg LR, Donoghue JP, Friehs GM, Black MJ. Point-and-click cursor control with an intracortical neural interface system by humans with tetraplegia. IEEE Trans Neural Syst Rehabil Eng. 2011 Apr;19:193–203. doi: 10.1109/TNSRE.2011.2107750.

- 43.Schalk G, Miller KJ, Anderson NR, Wilson JA, Smyth MD, Ojemann JG, Moran DW, Wolpaw JR, Leuthardt EC. Two-dimensional movement control using electrocorticographic signals in humans. J Neural Eng. 2008 Mar;5:75–84. doi: 10.1088/1741-2560/5/1/008.

- 44.Wolpaw JR, McFarland DJ. Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proc Natl Acad Sci U S A. 2004 Dec 21;101:17849–54. doi: 10.1073/pnas.0403504101.

- 45.Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clin Neurophysiol. 2002 Jun;113:767–91. doi: 10.1016/s1388-2457(02)00057-3.

- 46.Cipriani C, D'Alonzo M, Carrozza MC. A miniature vibrotactile sensory substitution device for multifingered hand prosthetics. IEEE Trans Biomed Eng. 2012 Feb;59:400–8. doi: 10.1109/TBME.2011.2173342.