Abstract

Timing cues are an essential feature of music. To understand how the brain gives rise to our experience of music we must appreciate how acoustical temporal patterns are integrated over the range of several seconds in order to extract global timing. In music perception, global timing comprises three distinct but often interacting percepts: temporal grouping, beat, and tempo. What directions may we take to further elucidate where and how the global timing of music is processed in the brain? The present perspective addresses this question and describes our current understanding of the neural basis of global timing perception.

Keywords: music, rhythm, grouping, meter, beat, tempo, brain, fMRI

Rhythm perception is essential to our appreciation of music. Since music is a human construct and rhythm is defined primarily by its use in music, it is unclear whether studies of rhythm in non-human species directly relate to human rhythm perception. In this perspective, we use the term “global timing” as a conceptual framework, to emphasize the temporal computation required to perceive and recognize rhythm that is not unique to musical contexts, but is nonetheless required to perceive and recognize a musical rhythm. With this terminology, we also aim to facilitate the comparison of research findings across different animal models, where perception may take a different behavioral form but neural mechanisms may nevertheless be shared. We discuss the conceptual framework of global timing in light of recent research in both humans and other animal species, examine which brain regions may underlie global timing perception, and speculate on its potential neural mechanisms.

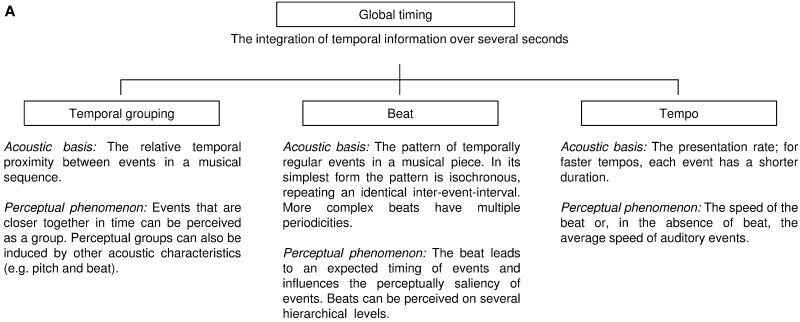

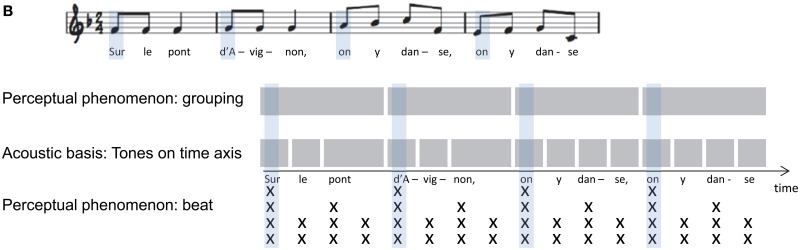

We define global timing as the percept of temporal patterns created by sequential acoustic events (i.e., notes and rests in a musical piece). It requires that the listener integrate temporal patterns spanning several seconds. While rhythm is also influenced by other acoustic characteristics such as pitch, intensity, and timbre, global timing perception is, by definition, driven by timing. The human auditory system likely processes temporal patterns at two levels: it computes the local timing of individual events (e.g., the duration of a single note), and it analyzes the global features of the pattern; in human music perception, these include temporal grouping, beat, and tempo (Box 1A). Differences in the relative temporal proximity between musical notes can lead to temporal grouping perception, sometimes referred to as rhythmic or figural grouping. That is, musical notes that are relatively close in time appear to be functionally linked, thus inducing perceptual groups. A behavioral measure of how temporal proximity induces perceptual groups is the discrimination of grouping patterns across different tempi (Trehub and Thorpe, 1989), or the subjective elongation of time intervals between temporal groups (Geiser and Gabrieli, 2013). The beat is the expected timing of regularly recurring, often hierarchically structured, events in a piece of Western music, to which humans often clap or dance (Drake et al., 2000a). In its simplest form, the acoustic basis for beat perception is a sequence of regularly occurring sounds that repeat an identical inter-event interval. The tempo of a musical sequence refers to how fast or slow the beat occurs (see also Box 1B). In the absence of a beat, the perceived tempo will reflect the average rate of auditory events. Details on how these three terms are used in music perception are provided in reviews by McAuley (2010), Deutsch (2013), and Fitch (2013), and theoretical approaches to temporal pattern perception in humans have been summarized by Grahn (2012).
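As a rough illustration of the three features defined above, the following Python sketch operates on a list of note onset times in seconds. The function names, the isochrony tolerance, and the gap-ratio grouping rule are assumptions made for illustration only; they are not taken from the studies cited here, and perceptual grouping and beat induction are certainly more complex than this.

```python
from statistics import mean


def inter_onset_intervals(onsets):
    """Return the intervals (in seconds) between successive onsets."""
    return [b - a for a, b in zip(onsets, onsets[1:])]


def is_isochronous(onsets, tolerance=0.01):
    """True if all inter-onset intervals are equal within `tolerance` seconds.

    This is the simplest acoustic basis for a beat: one repeated interval.
    The tolerance value is an arbitrary, hypothetical choice.
    """
    iois = inter_onset_intervals(onsets)
    return max(iois) - min(iois) <= tolerance


def average_rate_per_minute(onsets):
    """Average event rate in events per minute.

    In the absence of a beat, the text proposes that perceived tempo
    reflects this average rate.
    """
    return 60.0 / mean(inter_onset_intervals(onsets))


def group_by_proximity(onsets, gap_ratio=1.5):
    """Split onsets into groups at relatively long gaps.

    Hypothetical rule: a new group starts whenever an interval exceeds
    `gap_ratio` times the shortest interval in the sequence.
    Assumes at least two onsets.
    """
    iois = inter_onset_intervals(onsets)
    shortest = min(iois)
    groups = [[onsets[0]]]
    for onset, ioi in zip(onsets[1:], iois):
        if ioi > gap_ratio * shortest:
            groups.append([])
        groups[-1].append(onset)
    return groups
```

For example, under these assumptions the onsets `[0.0, 0.25, 0.5, 1.0, 1.25, 1.5]` are not isochronous and fall into two groups of three notes separated by the longer 0.5 s gap, whereas `[0.0, 0.5, 1.0, 1.5]` is isochronous with a rate of 120 events per minute.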

Box 1A. The framework of global timing with the three global temporal features in human music perception.

Box 1B. Musical notation of a temporal pattern in the French folk song “Sur le pont d'Avignon.”

The schematic depiction indicates the relative tone onsets and durations on the time axis (middle), the perceptual groups based on temporal grouping (top), and the perceived beat (x), where the number of stacked x's shows the number of overlapping levels in the beat hierarchy (schema adapted from McAuley, 2010, p. 168, with kind permission from Springer Science and Business Media).

In music perception, beat, grouping, and tempo are perceptually distinguishable. This is, for example, reflected in their perceptual invariance. That is, a perceived temporal grouping pattern can be recognized across a range of different tempi (see Hulse et al., 1992 for a review). Also, the distinction between sequences comprising a beat and those comprising no beat, as well as the recognition of a tempo in two musical sequences, can be carried out across various grouping patterns. However, the perceptions of global timing features also influence each other. Temporal grouping can influence the perceived beat that, in turn, affects the perceived tempo (Povel and Essens, 1985). Moreover, the tempo can influence which level of beat is perceived in a musical sequence (Drake et al., 2000b) and beat perception can affect how musical notes are perceptually grouped (Povel and Essens, 1985; Clarke, 1987; Schulze, 1989; Desain and Honing, 2003; Fitch and Rosenfeld, 2007). Finally, there are individual differences in how these percepts interact (Grahn and McAuley, 2009). Age and musical experience can influence how we perceive global timing (Drake et al., 2000b; Geiser et al., 2010; Hannon et al., 2011, 2012). In general, humans tend to perceive temporally grouped tone sequences as multiples of a small symbolic duration, comprising an underlying temporal regularity or beat even when such underlying regularity is objectively lacking (Povel and Essens, 1985; Clarke, 1987; Schulze, 1989; Desain and Honing, 2003; ten Hoopen et al., 2006). Consequently, global timing in music comprises the percept of the three global temporal features as a conglomerate.

Is global timing perception unique to humans?

Music is a human construct (for reviews on the evolutionary origin of music see McDermott and Hauser, 2005; Patel, 2006), but if non-human animals can be shown to share our perception of some features of global timing, then we can experimentally investigate the neural correlates of musical rhythm perception in these species. The characteristic temporal patterns of sounds produced by animals in nature (e.g., birdsong or the galloping of ungulates) suggest that many animals should be capable of global timing recognition. For example, the temporal pattern of vocal calls facilitates gender identification among emperor penguins (Jouventin et al., 1979) and species recognition in songbirds (Aubin and Brémond, 1983; Gentner and Hulse, 2000).

Can animals perceive specific features of global timing, such as temporal grouping, that are required for musical rhythm perception? Doves can detect a syllable delay within a conspecific vocalization (Slabbekoorn and ten Cate, 1999), which could reflect sensitivity to temporal grouping. Animals can also be trained to discriminate between non-ethologically relevant patterns for which temporal grouping might be a relevant cue (Ramus et al., 2000; Lomber and Malhotra, 2008). However, because many vocalizations also vary along non-temporal (e.g., spectral) dimensions, cues other than global timing could be used to differentiate these sounds. Further studies will be necessary to determine which species are able to distinguish grouping patterns solely on the basis of temporal cues.

The most striking difference in global timing perception between humans and many non-human species is that the latter exhibit a much poorer appreciation of beat. Human listeners readily detect and exploit temporal regularity in sound sequences. We remember auditory temporal patterns comprising a beat better than irregular patterns (Povel and Essens, 1985), and impose a regular beat on temporal patterns that we are required to remember (Povel, 1981). In contrast, animals have difficulty performing comparable tasks (pigeons: Hagmann and Cook, 2010; macaque monkeys: Zarco et al., 2009). Consequently, studies of beat perception in animals are rare. On the basis of our definition (Box 1A), one might expect that beat perception builds upon the basic ability to distinguish between temporally regular and irregular sound sequences. European starlings have been shown to discriminate between temporally regular (referred to as “rhythmic”) and irregular (referred to as “arrhythmic”) acoustic sequences and to transfer the acquired categorization across different tempi (Hulse et al., 1984). However, attempts to train pigeons to categorize sound sequences in this manner have failed (Hagmann and Cook, 2010). When a temporally regular sequence of sounds was presented to macaque monkeys, they did not show an electroencephalographic (EEG) response to beat deviants (Honing et al., 2012). This is in contrast to human newborns and adults, who show a mismatch-negativity response to beat deviants in similar stimuli (Winkler et al., 2009; Geiser et al., 2010). This finding suggests that not only do non-human primates fail to respond behaviorally to the beat, but they may also lack humans' neural correlates of beat perception.

Some parrots have been shown to synchronize their body movements to the beat of music as a result of model learning (Patel et al., 2009a,b) or even spontaneously (Schachner et al., 2009). Beat synchronization is one of the most commonly observed indicators of beat perception in humans from infancy onwards (Phillips-Silver and Trainor, 2005). The “vocal learning and rhythmic synchronization hypothesis” suggests that animals that are vocal learners, such as parrots, might have the unique capacity to synchronize to the beat of sound sequences (Patel, 2006). However, beat synchronization has recently been observed in the California sea lion, a species presumed to be a non-vocal learner (Cook et al., 2013). While beat synchronization is likely a demonstration of beat perception, it need not be a prerequisite for it. The discrimination between sequences comprising temporal regularity and sequences comprising no regularity, as evidenced in the European starling (Hulse et al., 1984), suggests that beat perception might occur in species that lack beat synchronization. Whether this ability in starlings extends to the more complex patterns found in the global timing of music, and whether it can be found in other species, remains to be investigated.

A number of species have been shown to discriminate the tempi of regular sound sequences, including birds (pigeons: Hagmann and Cook, 2010; European starlings: Hulse and Kline, 1993; emperor penguins: Jouventin et al., 1979; quails: Schneider and Lickliter, 2009), insects (crickets: Kostarakos and Hedwig, 2012), and non-human primates (cotton-top tamarins: McDermott and Hauser, 2007). Thus, it is likely that many animals share our perception of tempo. Moreover, like humans, starlings have been shown to ignore changes in tempo when categorizing the temporal pattern of sound sequences, relying instead on relative timing cues between acoustical events (Hulse and Kline, 1993). This perceptual invariance to tempo changes is a critical component of music perception in humans, as it allows us to recognize a familiar temporal pattern when it is played at a different speed. The finding that some animals share this aspect of global timing, at least when distinguishing regular and irregular sequences, is important because it means that we could study the mechanisms for tempo invariance at the neural level in these species.
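The idea that relative timing cues are unchanged by a tempo change can be made concrete with a small sketch. Here each inter-onset interval is expressed as a fraction of the total pattern duration, so that uniformly speeding up or slowing down the sequence leaves the representation untouched. The function names and the tolerance are hypothetical, and this normalization is only one of several possible ways to define relative timing; it is not claimed to be the cue that starlings or humans actually use.

```python
def relative_pattern(onsets):
    """Express each inter-onset interval as a fraction of the total duration."""
    iois = [b - a for a, b in zip(onsets, onsets[1:])]
    total = sum(iois)
    return [ioi / total for ioi in iois]


def scale_tempo(onsets, factor):
    """Return the same pattern played `factor` times faster."""
    return [t / factor for t in onsets]


def same_pattern(onsets_a, onsets_b, tolerance=1e-6):
    """True if two onset sequences share the same relative timing.

    The tolerance is an arbitrary numerical margin, not a perceptual threshold.
    """
    rel_a = relative_pattern(onsets_a)
    rel_b = relative_pattern(onsets_b)
    return len(rel_a) == len(rel_b) and all(
        abs(x - y) <= tolerance for x, y in zip(rel_a, rel_b)
    )
```

With these definitions, a short-short-long pattern such as `[0.0, 0.25, 0.5, 1.0]` matches its own double-speed version, but not the long-short-short pattern `[0.0, 0.5, 0.75, 1.0]`, even though both have the same total duration.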

In summary, we argue that various animals show sensitivity to the temporal grouping and the tempo feature of global timing in their spontaneous behavior and in experimental settings. Based on the above evidence one could speculate that some birds, such as European starlings, may also be able to distinguish the beat structure of sound sequences. The discussion of animal research above is selective, as we aimed to include only experimental paradigms that closely relate to perceptual mechanisms of global timing observed in humans.

Which brain regions are implicated in global timing processing?

Functional Magnetic Resonance Imaging (fMRI) studies in healthy humans have associated a network of cortico-striatal brain areas [including the superior temporal gyrus (STG), the Supplementary Motor Area (SMA), prefrontal (PFC) and premotor cortices (PMC), the basal ganglia, and the cerebellum] with the performance of global timing tasks. These areas show increased activity compared to baseline when participants perform same-different judgments (Grahn and Brett, 2007; Chen et al., 2008), categorization (Geiser et al., 2008), and tempo judgments on temporal patterns (Henry et al., 2013), or when they passively listen to temporal patterns (Bengtsson et al., 2008). The global timing features investigated in these studies included beat and tempo. This network of brain areas is, however, not exclusively linked to global timing in the auditory domain. It is also implicated in local timing (for a review see Bueti, 2011). Specifically, activity in the cerebellum and the basal ganglia has been observed during duration discrimination, both in the auditory (Rao et al., 2001; Belin et al., 2002) and visual modality (Coull and Nobre, 1998; Ferrandez et al., 2003; Lewis and Miall, 2003; Coull, 2004; Pouthas et al., 2005). Taken together, these findings could indicate a general temporal processing function for these brain areas (Teki et al., 2012). Alternatively, these brain areas could differ functionally on the micro-anatomical level. Such functional distinctions could be found in direct comparisons of local and global timing tasks using high-resolution imaging.

There is evidence for a functional distinction between cortical and subcortical brain areas of the network identified in global timing perception studies. The putamen, which is part of the basal ganglia, is associated specifically with beat and temporal regularity perception. In a rhythm discrimination task, increased activity in the putamen was observed for temporal patterns comprising temporal regularity compared to rhythms comprising no regularity (Grahn and Brett, 2007). This association of the putamen with beat perception was replicated in passive listening (Bengtsson et al., 2008), temporal pattern reproduction (Chen et al., 2008), pitch and intensity change detection (Grahn and Rowe, 2009; Geiser et al., 2012), and duration perception tasks (Teki et al., 2011). This suggests that the putamen responds to temporal regularity in auditory stimuli and that this response even occurs when listeners do not perform a temporal task. Future studies will need to investigate if such processing is automatic or attention dependent.

Beat and temporal regularity perception have also been investigated with magnetoencephalography (MEG) and electroencephalography (EEG). For example, steady-state evoked potentials have been measured to capture the brain's response to beat perception (Nozaradan et al., 2011, 2012). Moreover, several studies reported a correlation between neural oscillations and beat perception. Specifically, neural oscillations are correlated with the perception of beat (gamma frequency range: Snyder and Large, 2004), temporal regularity of a sound sequence (delta frequency range: Stefanics et al., 2010; Henry and Obleser, 2012), and the tempo of temporally regular sound sequences (beta frequency range: Fujioka et al., 2012). Fujioka et al. (2012) localized modulations in the beta frequency band that varied with the tempo of temporally regular sounds to the auditory cortex, postcentral gyrus, left cingulate cortex, SMA, and left inferior temporal gyrus. Whether this indicates a functional coupling among these brain areas during beat perception will need to be investigated in future studies. Furthermore, measures of neural oscillations in animal species that do not show spontaneous motor synchronization but might nevertheless show the ability to perceive beat (such as the European starling) could help elucidate which of these brain areas are sufficient for beat perception.

Tempo perception has been investigated with neuroimaging mostly in the context of beat perception. Not surprisingly, these studies have associated tempo perception with some of the same brain regions implicated in temporal regularity perception, specifically the primary sensory and cortico-striatal brain areas (Thaut, 2003; Schwartze et al., 2011; Henry et al., 2013), and the neural oscillations therein (Fujioka et al., 2012). Beat and tempo processing may therefore rely on a similar neural network, which may or may not be partially distinct from that involved in temporal grouping perception. Studying tempo perception by measuring the average perceived rate of a temporally non-regular stimulus could reveal the underlying mechanism of tempo perception without the confounding influence of beat perception.

Neuroimaging studies investigating temporal grouping perception are still sparse. Tasks referred to as rhythm discrimination have relied on the perception of temporal grouping and revealed the general network of timing-related brain areas, including the STG, (pre-)SMA, basal ganglia, and cerebellum (Grahn and Brett, 2007). Several studies in humans report behavioral measures for temporal grouping (Trehub and Thorpe, 1989; ten Hoopen et al., 2006; Geiser and Gabrieli, 2013), but, to our knowledge, these measures have not yet been implemented in neuroimaging studies. In the future, studies should elucidate whether specific regions of the cortico-striatal network play a role in temporal grouping perception and whether these are functionally distinguishable from the brain areas involved in beat and tempo perception.

What are the potential neural mechanisms for encoding global timing information?

Before global timing information can be processed by multiple higher-order brain structures, the acoustic signal must be encoded in auditory cortex. In cats, inactivation of the anterior, but not the posterior, field of secondary auditory cortex impairs performance on a temporal pattern-matching task (Lomber and Malhotra, 2008), suggesting that the initial stage of temporal pattern processing may take place in a subset of higher auditory cortical fields. A neuron within auditory cortex can exhibit time-locked responses to repeated events (e.g., brief tones) as long as only one event falls within its temporal integration window. When multiple events fall within that window, that is, for faster occurring events, the firing rate of the neuron will co-vary with the number of events occurring within its temporal integration window (Lu et al., 2001; Anderson et al., 2006; Dong et al., 2011). This rate code represents the synthesis of multiple acoustic events and could, thus, reflect a first neural encoding mechanism toward computing global timing information.
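The transition from time-locked responses to a rate code described above can be sketched with simple arithmetic on a periodic event train. The example below counts how many events of a regular train fall inside a single integration window and labels the resulting regime. The function names are hypothetical, and the 20 ms window used in the usage note is an illustrative value chosen for the example; this is a counting exercise, not a model of neuronal firing.

```python
import math


def events_in_window(inter_event_interval_ms, window_ms):
    """Count events of a periodic train that fall inside one window.

    Events occur at 0, IEI, 2*IEI, ... and the window covers [0, window_ms).
    """
    return math.ceil(window_ms / inter_event_interval_ms)


def coding_regime(inter_event_interval_ms, window_ms):
    """Label the regime suggested by the text for a given event rate.

    If at most one event falls in the window, each event can be followed
    individually ("time-locked"); otherwise the response is assumed to
    reflect the number of events in the window ("rate").
    """
    if events_in_window(inter_event_interval_ms, window_ms) <= 1:
        return "time-locked"
    return "rate"
```

With an assumed 20 ms window, a train with a 50 ms inter-event interval places one event in the window and is labeled time-locked, whereas trains with 10 ms and 5 ms intervals place two and four events in the window, so the count (and, by assumption, the firing rate) grows with event rate.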

In general, the temporal integration window in the auditory pathway varies. Previous reports suggest integration windows of less than 10 ms in subcortical structures, ~20–25 ms in primary auditory cortex, and more than 100 ms in auditory fields downstream from primary auditory cortex in primates (Lu et al., 2001; Bartlett and Wang, 2007; Bendor and Wang, 2007; Walker et al., 2008). In human auditory cortex, two different processing timescales have been postulated: 25–50 and 200–300 ms (Boemio et al., 2005). Unlike other acoustic information related to music, such as pitch (Bendor and Wang, 2005; Bizley et al., 2013) and timbre (Walker et al., 2011), global timing requires the integration of temporal patterns spanning several seconds, thus exceeding the temporal integration window in primary or secondary auditory cortex of humans and non-human species. How does our brain solve this problem and encode temporal patterns extending beyond a neuron's temporal integration window? One possibility is that these computations happen outside of auditory cortex in neurons with longer temporal integration windows (e.g., neurons sensitive to particular temporal patterns). However, evidence of such neurons is still lacking. Alternatively, the time-locked neural activity to each acoustic event could first provide a passive representation of the global temporal pattern. Then, a distributed neuronal ensemble could perform higher-level timing computations on this temporal representation. While such timing computations have not been reported in auditory cortex, neural circuits capable of ensemble-based encoding of temporal or sequential patterns have been reported in two brain regions: (1) area HVC in songbirds, and (2) the hippocampus of rodents. We speculate that similar mechanisms could be used to process the temporal patterns required for encoding global timing information and discuss these neural mechanisms in more detail below.

In the zebra finch, area HVC contains neurons that fire during the bird's song production in a mechanism referred to as a synfire chain. Neural activity in a synfire chain is sparsely present throughout the song sequence, with each neuron being active at only one single time-point within the sequence and not necessarily correlated with the onset of a syllable (Hahnloser et al., 2002). At a population level, the spiking of neurons defines the tempo for the song's production. If HVC is cooled bilaterally, the interval between each sparse burst lengthens, effectively slowing down the neural representation of the song, and generating a slowed-down production of the bird's song (Long and Fee, 2008). Warming the HVC has the opposite effect. These data suggest that HVC acts as a clock for song timing. Intracellular recordings from HVC during song production indicate that the sequential pattern of neuronal firing is created by feed-forward excitation within a synfire chain (Long et al., 2010). Although HVC is only thought to play a role in the production of a well-learned song (Aronov et al., 2008), one might speculate that a neural mechanism similar to a synfire chain could be used for computing global timing information in other brain regions.
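The clock-like behavior described above can be caricatured as a feed-forward chain in which each neuron fires exactly once, a fixed delay after its predecessor. In the toy sketch below, cooling is represented by a single multiplicative `slowdown` applied to every link delay, so the whole sequence stretches uniformly. The function names, the uniform delay, and the slowdown parameter are all assumptions made for illustration; this is not a biophysical model of HVC.

```python
def burst_times(n_neurons, link_delay_ms, slowdown=1.0):
    """Burst time (ms) of each neuron in a toy feed-forward chain.

    Neuron i fires once, i links after the first neuron. A `slowdown`
    greater than 1 lengthens every link (toy stand-in for cooling); a value
    below 1 shortens every link (toy stand-in for warming).
    """
    return [i * link_delay_ms * slowdown for i in range(n_neurons)]


def sequence_duration(n_neurons, link_delay_ms, slowdown=1.0):
    """Time from the first to the last burst in the chain."""
    return burst_times(n_neurons, link_delay_ms, slowdown)[-1]
```

In this caricature, lengthening every link by a factor of 1.5 lengthens the whole sequence by the same factor while leaving the order of bursts and their relative spacing unchanged, which is the sense in which the population activity sets the tempo of the output.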

A different neural mechanism for encoding sequential information has been reported in the hippocampus of rodents. Neurons in the hippocampus are tuned to behaviorally relevant spatial (O'Keefe and Dostrovsky, 1971) and temporal information (Pastalkova et al., 2008; Kraus et al., 2013). A behavioral episode (e.g., a rat moving along a spatial trajectory) is encoded by the sequential activity of an ensemble of hippocampal neurons, with each neuron tuned to a different time point or location. This encoding interacts with the ongoing background theta (~8 Hz) oscillation in local field potentials by a process called “phase precession.” That is, the neuron fires a burst of spikes every theta cycle, with the timing of this burst gradually drifting earlier in each successive cycle. When the rodent enters a second neuron's place field, the relative phase of activity for the second neuron will consistently lag the firing of the first neuron. In each theta cycle, the two neurons consequently fire in a sequence matching the order in which the rodent passed through the two place fields. Since the time it takes to move through two overlapping place fields is longer than a theta cycle, phase precession effectively compresses a sequential pattern to fit the duration of a theta cycle (~125 ms). While the rodent is resting or sleeping, place cells spontaneously reactivate the temporally compressed version of this sequential pattern, indicating that the hippocampus is capable of also storing the memory of a temporally compressed sequence (Lee and Wilson, 2002; Foster and Wilson, 2006). In principle, temporal compression of a sequence following the example of place field encoding and phase precession in the hippocampus could encode auditory temporal patterns spanning several seconds. Moreover, once a sequence is temporally compressed, neurons with shorter temporal integration windows (e.g., neurons in auditory cortex) could represent global timing information using a rate code. Recent evidence suggests that acoustical processing can influence hippocampal responses (Bendor and Wilson, 2012; Itskov et al., 2012). However, whether the hippocampus can encode acoustic temporal patterns in the same way it encodes space and time remains to be investigated.
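To give a sense of the scale of compression this would require, the sketch below linearly rescales a sequence of event times so that it spans a single ~125 ms theta cycle while preserving event order and relative spacing. The linear rescaling, the function names, and the example pattern are assumptions for illustration; the sketch shows only the arithmetic of compression and is not a model of phase precession.

```python
THETA_CYCLE_MS = 125.0  # one cycle of an ~8 Hz theta oscillation


def compression_factor(event_times_ms, cycle_ms=THETA_CYCLE_MS):
    """How many times shorter the sequence must become to fit one cycle."""
    span = event_times_ms[-1] - event_times_ms[0]
    return span / cycle_ms


def compress_to_cycle(event_times_ms, cycle_ms=THETA_CYCLE_MS):
    """Linearly rescale event times (ms) to span exactly one cycle.

    Order and relative spacing are preserved. Assumes at least two events
    with distinct first and last times.
    """
    start = event_times_ms[0]
    span = event_times_ms[-1] - start
    return [(t - start) / span * cycle_ms for t in event_times_ms]
```

Under these assumptions, a four-event pattern lasting 4 s (events at 0, 1000, 2000, and 4000 ms) would need to be compressed 32-fold, placing the events at 0, 31.25, 62.5, and 125 ms within the cycle.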

Conclusions

We suggest global timing as a conceptual framework to investigate the temporal aspects of musical rhythm perception that require listeners to integrate temporal patterns spanning several seconds. Music perception in humans comprises three features of global timing (temporal grouping, beat, and tempo), which are perceptually distinguishable yet interdependent. Both humans and non-human species make use of temporal grouping and tempo processing in auditory signals. These features are ecologically important in interpreting the vocal calls of many species. In contrast, beat perception, the aspect of global timing that often distinguishes music from other sounds, appears to be limited in most non-human species. Global timing perception relies on neural processes across a wide network of subcortical and cortical brain regions, including the basal ganglia, SMA, and prefrontal cortex, as well as a subset of fields within auditory cortex. The neural mechanisms that underlie global timing perception remain elusive. However, given the limited temporal integration window of neurons, it is likely that sequential activity within a neuronal ensemble is required to encode global timing. Further research into the neural basis of processing outside of auditory cortex may help in understanding how the brain processes global timing. The examination of vocal learners such as parrots or European starlings, which could be capable of beat perception, could be essential toward that goal. In future experiments, designs that allow the dissociation of temporal grouping, beat, and tempo perception will be a necessary step toward unraveling the neural mechanisms of global timing.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank the three reviewers for their comments, which helped improve this manuscript. Eveline Geiser is funded by the McGovern Institute at Massachusetts Institute of Technology and by the Swiss National Science Foundation. Kerry M. M. Walker is funded by an Early Career Research Fellowship from the University of Oxford. Daniel Bendor is funded by the Capita Foundation.

References

- Anderson S. E., Kilgard M. P., Sloan A. M., Rennaker R. L. (2006). Response to broadband repetitive stimuli in auditory cortex of the unanesthetized rat. Hear. Res. 213, 107–117. doi: 10.1016/j.heares.2005.12.011

- Aronov D., Andalman A. S., Fee M. S. (2008). A specialized forebrain circuit for vocal babbling in the juvenile songbird. Science 320, 630–634. doi: 10.1126/science.1155140

- Aubin T., Brémond J. C. (1983). The process of species-specific song recognition in the skylark Alauda arvensis: an experimental study by means of synthesis. Zeitschr. Tierpsych. 61, 141–152. doi: 10.1111/j.1439-0310.1983.tb01334.x

- Bartlett E. L., Wang X. (2007). Neural representations of temporally modulated signals in the auditory thalamus of awake primates. J. Neurophysiol. 97, 1005–1017. doi: 10.1152/jn.00593.2006

- Belin P., McAdams S., Thivard L., Smith B., Savel S., Zilbovicius M., et al. (2002). The neuroanatomical substrate of sound duration discrimination. Neuropsychologia 40, 1956–1964. doi: 10.1016/S0028-3932(02)00062-3

- Bendor D., Wang X. (2005). The neural representation of pitch in primate auditory cortex. Nature 436, 1161–1165. doi: 10.1038/nature03867

- Bendor D., Wang X. (2007). Differential neural coding of acoustic flutter within primate auditory cortex. Nat. Neurosci. 10, 763–771. doi: 10.1038/nn1888

- Bendor D., Wilson M. A. (2012). Biasing the content of hippocampal replay during sleep. Nat. Neurosci. 15, 1439–1444. doi: 10.1038/nn.3203

- Bengtsson S. L., Ullen F., Ehrsson H., Hashimoto T., Kito T., Naito E., et al. (2008). Listening to rhythms activates motor and premotor cortices. Cortex 45, 62–71. doi: 10.1016/j.cortex.2008.07.002

- Bizley J. K., Walker K. M. M., Nodal F. R., King A. J., Schnupp J. W. H. (2013). Auditory cortex represents both pitch judgments and the corresponding acoustic cues. Curr. Biol. 23, 620–625. doi: 10.1016/j.cub.2013.03.003

- Boemio A., Fromm S., Braun A., Poeppel D. (2005). Hierarchical and asymmetric temporal sensitivity in human auditory cortices. Nat. Neurosci. 8, 389–395. doi: 10.1038/nn1409

- Bueti D. (2011). The sensory representation of time. Front. Integr. Neurosci. 5:34. doi: 10.3389/fnint.2011.00034

- Chen J. L., Penhune V. B., Zatorre R. J. (2008). Listening to musical rhythms recruits motor regions of the brain. Cereb. Cortex 18, 2844–2854. doi: 10.1093/cercor/bhn042

- Clarke E. F. (1987). Categorical rhythm perception: an ecological perspective, in Action and Perception in Rhythm and Music, ed Gabrielsson A. (Stockholm: Royal Swedish Academy of Music), 19–33.

- Cook P., Rouse A., Wilson M., Reichmuth C. J. (2013). A California sea lion (Zalophus californianus) can keep the beat: motor entrainment to rhythmic auditory stimuli in a non vocal mimic. J. Comp. Psychol. 127, 1–16. doi: 10.1037/a0032345

- Coull J. T. (2004). fMRI studies of temporal attention: allocating attention within, or towards, time. Cogn. Brain. Res. 21, 216–226. doi: 10.1016/j.cogbrainres.2004.02.011

- Coull J. T., Nobre A. C. (1998). Where and when to pay attention: the neural systems for directing attention to spatial locations and to time intervals as revealed by both PET and fMRI. J. Neurosci. 18, 7426–7435.

- Desain P., Honing H. (2003). The formation of rhythmic categories and metric priming. Perception 32, 341–365. doi: 10.1068/p3370

- Deutsch D. (2013). Grouping mechanisms in music, in The Psychology of Music, ed Deutsch D. (San Diego, CA: Elsevier), 183–248. doi: 10.1016/B978-0-12-381460-9.00006-7

- Dong C., Qin L., Liu Y., Zhang X., Sato Y. (2011). Neural responses in the primary auditory cortex of freely behaving cats while discriminating fast and slow click-trains. PLoS ONE 6:e25895. doi: 10.1371/journal.pone.0025895

- Drake C., Jones M. R., Baruch C. (2000b). The development of rhythmic attending in auditory sequences: attunement, referent period, focal attending. Cognition 77, 251–288. doi: 10.1016/S0010-0277(00)00106-2

- Drake C., Penel A., Bigand E. (2000a). Tapping in time with mechanically and expressively performed music. Music Percept. 18, 1–24. doi: 10.2307/40285899

- Ferrandez A. M., Hugueville L., Lehericy S., Poline J. B., Marsault C., Pouthas V. (2003). Basal ganglia and supplementary motor area subtend duration perception: an fMRI study. Neuroimage 19, 1532–1544. doi: 10.1016/S1053-8119(03)00159-9

- Fitch W. T. (2013). Rhythmic cognition in humans and animals: distinguishing meter and pulse perception. Front. Syst. Neurosci. 7:68. doi: 10.3389/fnsys.2013.00068

- Fitch W. T., Rosenfeld A. J. (2007). Perception and production of syncopated rhythms. Music Percept. 25, 43–58. doi: 10.1525/mp.2007.25.1.43

- Foster D. J., Wilson M. A. (2006). Reverse replay of behavioural sequences in hippocampal place cells during the awake state. Nature 440, 680–683. doi: 10.1038/nature04587

- Fujioka T., Trainor L. J., Large E. W., Ross B. (2012). Internalized timing of isochronous sounds is represented in neuromagnetic beta oscillations. J. Neurosci. 32, 1791–1802. doi: 10.1523/JNEUROSCI.4107-11.2012

- Geiser E., Gabrieli J. D. (2013). Influence of rhythmic grouping on duration perception: a novel auditory illusion. PLoS ONE 8:e54273. doi: 10.1371/journal.pone.0054273

- Geiser E., Notter M., Gabrieli J. D. E. (2012). A cortico-striatal neural system enhances auditory perception through temporal context processing. J. Neurosci. 32, 6177–6182. doi: 10.1523/JNEUROSCI.5153-11.2012

- Geiser E., Sandmann P., Jancke L., Meyer M. (2010). Refinement of metre perception–training increases hierarchical metre processing. Eur. J. Neurosci. 32, 1979–1985. doi: 10.1111/j.1460-9568.2010.07462.x

- Geiser E., Zaehle T., Jancke L., Meyer M. (2008). The neural correlate of speech rhythm as evidenced by metrical speech processing. J. Cogn. Neurosci. 20, 541–552. doi: 10.1162/jocn.2008.20029

- Gentner T. Q., Hulse S. H. (2000). Perceptual classification based on the component structure of song in European starlings. J. Acoust. Soc. Am. 107, 3369. doi: 10.1121/1.429408

- Grahn J. A. (2012). Neural mechanisms of rhythm perception: current findings and future perspectives. Top. Cogn. Sci. 4, 585–606. doi: 10.1111/j.1756-8765.2012.01213.x

- Grahn J. A., Brett M. (2007). Rhythm and beat perception in motor areas of the brain. J. Cogn. Neurosci. 19, 893–906. doi: 10.1162/jocn.2007.19.5.893

- Grahn J. A., McAuley J. D. (2009). Neural bases of individual differences in beat perception. Neuroimage 47, 1894–1903. doi: 10.1016/j.neuroimage.2009.04.039

- Grahn J. A., Rowe J. B. (2009). Feeling the beat: premotor and striatal interactions in musicians and nonmusicians during beat perception. J. Neurosci. 29, 7540–7548. doi: 10.1523/JNEUROSCI.2018-08.2009

- Hagmann C. E., Cook R. G. (2010). Testing meter, rhythm, and tempo discriminations in pigeons. Behav. Process. 85, 99–110. doi: 10.1016/j.beproc.2010.06.015

- Hahnloser R. H., Kozhevnikov A. A., Fee M. S. (2002). An ultra-sparse code underlies the generation of neural sequences in a songbird. Nature 419, 65–70. doi: 10.1038/nature00974

- Hannon E. E., Soley G., Levine R. S. (2011). Constraints on infants' musical rhythm perception: effects of interval ratio complexity and enculturation. Dev. Sci. 14, 865–872. doi: 10.1111/j.1467-7687.2011.01036.x

- Hannon E. E., Vanden Bosch der Nederlanden C. M., Tichko P. (2012). Effects of perceptual experience on children's and adults' perception of unfamiliar rhythms. Ann. N.Y. Acad. Sci. 1252, 92–99. doi: 10.1111/j.1749-6632.2012.06466.x

- Henry M. J., Herrmann B., Obleser J. (2013). Selective attention to temporal features on nested time scales. Cereb. Cortex [Epub ahead of print]. doi: 10.1093/cercor/bht240

- Henry M. J., Obleser J. (2012). Frequency modulation entrains slow neural oscillations and optimizes human listening behavior. Proc. Natl. Acad. Sci. U.S.A. 109, 20095–20100. doi: 10.1073/pnas.1213390109

- Honing H., Merchant H., Háden G., Prado L., Bartolo R. (2012). Rhesus monkeys (Macaca mulatta) detect rhythm groups in music, but not the beat. PLoS ONE 7:e51369. doi: 10.1371/journal.pone.0051369

- Hulse S., Kline C. (1993). The perception of time relations in auditory tempo discrimination. Anim. Learn. Behav. 21, 281–288. doi: 10.3758/BF03197992

- Hulse S. H., Humpal J., Cynx J. (1984). Discrimination and generalization of rhythmic and arrhythmic sound patterns by European starlings. Music Percept. 1, 442–464. doi: 10.2307/40285272

- Hulse S. H., Takeuchi A. H., Braaten R. F. (1992). Perceptual invariances in the comparative psychology of music. Music Percept. 10, 151–184. doi: 10.2307/40285605

- Itskov P. M., Vinnik E., Honey C., Schnupp J., Diamond M. E. (2012). Sound sensitivity of neurons in rat hippocampus during performance of a sound-guided task. J. Neurophysiol. 107, 1822–1834. doi: 10.1152/jn.00404.2011

- Jouventin P., Guilotin M., Cornet A. (1979). Le chant du Manchot empereur et sa signification adaptative. Behaviour 70, 231–250. doi: 10.1163/156853979X00070

- Kostarakos K., Hedwig B. (2012). Calling song recognition in female crickets: temporal tuning of identified brain neurons matches behavior. J. Neurosci. 32, 9601–9612. doi: 10.1523/JNEUROSCI.1170-12.2012

- Kraus B. J., Robinson R. J. II, White J. A., Eichenbaum H., Hasselmo M. E. (2013). Hippocampal time cells: time versus path integration. Neuron 78, 1090–1101. doi: 10.1016/j.neuron.2013.04.015

- Lee A. K., Wilson M. A. (2002). Memory of sequential experience in the hippocampus during slow wave sleep. Neuron 36, 1183–1194. doi: 10.1016/S0896-6273(02)01096-6

- Lewis P. A., Miall R. C. (2003). Distinct systems for automatic and cognitively controlled time measurement: evidence from neuroimaging. Curr. Opin. Neurobiol. 13, 250–255. doi: 10.1016/S0959-4388(03)00036-9

- Lomber S. G., Malhotra S. (2008). Double dissociation of “what” and “where” processing in auditory cortex. Nat. Neurosci. 11, 609–616. doi: 10.1038/nn.2108

- Long M. A., Fee M. S. (2008). Using temperature to analyse temporal dynamics in the songbird motor pathway. Nature 456, 189–194. doi: 10.1038/nature07448

- Long M. A., Jin D. Z., Fee M. S. (2010). Support for a synaptic chain model of neuronal sequence generation. Nature 468, 394–399. doi: 10.1038/nature09514

- Lu T., Liang L., Wang X. (2001). Temporal and rate representations of time-varying signals in the auditory cortex of awake primates. Nat. Neurosci. 4, 1131–1138. doi: 10.1038/nn737

- McAuley J. D. (2010). Tempo and rhythm, in Music Perception, eds Jones M. R., Fay R. R., Popper A. N. (New York, NY: Springer), 165–199. doi: 10.1007/978-1-4419-6114-3_6

- McDermott J., Hauser M. D. (2005). The origins of music: innateness, uniqueness, and evolution. Music Percept. 23, 29–59. doi: 10.1525/mp.2005.23.1.29

- McDermott J., Hauser M. D. (2007). Nonhuman primates prefer slow tempos but dislike music overall. Cognition 104, 654–668. doi: 10.1016/j.cognition.2006.07.011

- Nozaradan S., Peretz I., Missal M., Mouraux A. (2011). Tagging the neuronal entrainment to beat and meter. J. Neurosci. 31, 10234–10249. doi: 10.1523/JNEUROSCI.0411-11.2011

- Nozaradan S., Peretz I., Mouraux A. (2012). Selective neuronal entrainment to the beat and meter embedded in a musical rhythm. J. Neurosci. 32, 17572–17581. doi: 10.1523/JNEUROSCI.3203-12.2012

- O'Keefe J., Dostrovsky J. (1971). The hippocampus as a spatial map. Preliminary evidence from single unit activity in the freely-moving rat. Brain Res. 34, 171–175. doi: 10.1016/0006-8993(71)90358-1

- Pastalkova E., Itskov V., Amarasingham A., Buzsaki G. (2008). Internally generated cell assembly sequences in the rat hippocampus. Science 321, 1322–1327. doi: 10.1126/science.1159775

- Patel A. D. (2006). Music rhythm, linguistic rhythm, and human evolution. Music Percept. 24, 99–104. doi: 10.1525/mp.2006.24.1.99

- Patel A. D., Iversen J. R., Bregman M. R., Schulz I. (2009a). Experimental evidence for synchronization to a musical beat in a nonhuman animal. Curr. Biol. 19, 827–830. doi: 10.1016/j.cub.2009.03.038

- Patel A. D., Iversen J. R., Bregman M. R., Schulz I. (2009b). Studying synchronization to a musical beat in nonhuman animals. Ann. N.Y. Acad. Sci. 1169, 459–469. doi: 10.1111/j.1749-6632.2009.04581.x

- Phillips-Silver J., Trainor L. J. (2005). Feeling the beat in music: movement influences rhythm perception in infants. Science 308, 1430. doi: 10.1126/science.1110922

- Pouthas V., George N., Poline J. B., Pfeuty M., VandeMoorteele P. F., Hugueville L., et al. (2005). Neural network involved in time perception: an fMRI study comparing long and short interval estimation. Hum. Brain Mapp. 25, 433–441. doi: 10.1002/hbm.20126

- Povel D. J. (1981). Internal representation of simple temporal patterns. J. Exp. Psychol. Hum. Percept. Perform. 7, 3–18. doi: 10.1037/0096-1523.7.1.3

- Povel D. J., Essens P. (1985). Perception of temporal patterns. Music Percept. 2, 411–440. doi: 10.2307/40285311

- Ramus F., Hauser M. D., Miller C. T., Morris D., Mehler J. (2000). Language discrimination by human newborns and cotton-top tamarins. Science 288, 349–351. doi: 10.1126/science.288.5464.349

- Rao S. M., Mayer A. R., Harrington D. L. (2001). The evolution of brain activation during temporal processing. Nat. Neurosci. 4, 317–323. doi: 10.1038/85191

- Schachner A., Brady T. F., Pepperberg I., Hauser M. (2009). Spontaneous motor entrainment to music in multiple vocal mimicking species. Curr. Biol. 19, 831–836. doi: 10.1016/j.cub.2009.03.061

- Schneider S., Lickliter R. (2009). Operant generalization of auditory tempo in quail neonates. Psychon. Bull. Rev. 16, 145. doi: 10.3758/PBR.16.1.145

- Schulze H. H. (1989). Categorical perception of rhythmic patterns. Psychol. Res. 51, 10–15. doi: 10.1007/BF00309270

- Schwartze M., Keller P. E., Patel A. D., Kotz S. A. (2011). The impact of basal ganglia lesions on sensorimotor synchronization, spontaneous motor tempo, and the detection of tempo changes. Behav. Brain Res. 216, 685–691. doi: 10.1016/j.bbr.2010.09.015

- Slabbekoorn H., ten Cate C. (1999). Collared dove responses to playback: slaves to the rhythm. Ethology 105, 377–391. doi: 10.1046/j.1439-0310.1999.00420.x

- Snyder J. S., Large E. W. (2004). Tempo dependence of middle- and long-latency auditory responses: power and phase modulation of the EEG at multiple time-scales. Clin. Neurophysiol. 115, 1885–1895. doi: 10.1016/j.clinph.2004.03.024

- Stefanics G., Hangya B., Hernadi I., Winkler I., Lakatos P., Ulbert I. (2010). Phase entrainment of human delta oscillations can mediate the effects of expectation on reaction speed. J. Neurosci. 30, 13578–13585. doi: 10.1523/JNEUROSCI.0703-10.2010

- Teki S., Grube M., Griffiths T. D. (2012). A unified model of time perception accounts for duration-based and beat-based timing mechanisms. Front. Integr. Neurosci. 5:90. doi: 10.3389/fnint.2011.00090

- Teki S., Grube M., Kumar S., Griffiths T. D. (2011). Distinct neural substrates of duration-based and beat-based auditory timing. J. Neurosci. 31, 3805–3812. doi: 10.1523/JNEUROSCI.5561-10.2011

- ten Hoopen G., Sasaki T., Nakajima Y., Remijn G., Massier B., et al. (2006). Time-shrinking and categorical temporal ratio perception: evidence for a 1:1 temporal category. Music Percept. 24, 1–22. doi: 10.1525/mp.2006.24.1.1

- Thaut M. H. (2003). Neural basis of rhythmic timing networks in the human brain. Ann. N.Y. Acad. Sci. 999, 364–373. doi: 10.1196/annals.1284.044

- Trehub S. E., Thorpe L. A. (1989). Infants' perception of rhythm: categorization of auditory sequences by temporal structure. Can. J. Psychol. 43, 217–229. doi: 10.1037/h0084223

- Walker K. M., Ahmed B., Schnupp J. W. (2008). Linking cortical spike pattern codes to auditory perception. J. Cogn. Neurosci. 20, 125–152. doi: 10.1162/jocn.2008.20012

- Walker K. M., Bizley J. K., King A. J., Schnupp J. W. (2011). Multiplexed and robust representations of sound features in auditory cortex. J. Neurosci. 31, 14565–14576. doi: 10.1523/JNEUROSCI.2074-11.2011

- Winkler I., Háden G. P., Ladinig O., Sziller I., Honing H. (2009). Newborn infants detect the beat in music. Proc. Natl. Acad. Sci. U.S.A. 106, 2468–2471. doi: 10.1073/pnas.0809035106

- Zarco W., Merchant H., Prado L., Mendez J. C. (2009). Subsecond timing in primates: comparison of interval production between human subjects and Rhesus monkeys. J. Neurophysiol. 102, 3191–3202. doi: 10.1152/jn.00066.2009