Abstract

Robotic surgery has expanded rapidly over the past two decades and is in widespread use among the surgical subspecialties. Clinical applications in plastic surgery have emerged gradually over the last few years. One of the promising applications is robotic-assisted microvascular anastomosis. Here the authors first describe the process by which an assessment instrument they developed, the Structured Assessment of Robotic Microsurgical Skills (SARMS), was validated. The instrument combines the previously validated Structured Assessment of Microsurgical Skills (SAMS) with other skill domains in robotic surgery. Interrater reliability for the SARMS instrument was excellent for all skill areas among four expert, blinded evaluators. They then present the process by which the learning curve for robotic-assisted microvascular anastomoses was measured and plotted. Ten study participants each performed five robotic microanastomoses, which were recorded, deidentified, and scored. Trends in SARMS scores were plotted. All skill areas and overall performance improved significantly for each participant over the five microanastomotic sessions, and operative time decreased for all participants. The results showed an initial steep ascent in technical skill acquisition followed by more gradual improvement, and a steady decrease in operative times for the cohort. Participants at all levels of training, ranging from minimal microsurgical experience to expert microsurgeons, gained proficiency over the course of five robotic sessions.

Keywords: robotic microsurgery, surgical skills assessment, surgical evaluation, surgical training, robotic skills assessment

Background

Despite marked advances in surgical training, microsurgery training remains primarily an apprenticeship model. Considering the complexity of microsurgery and the consequences of failure, the lack of a standardized, quantitative system to evaluate surgeon skills, provide feedback, and measure training endpoints raises major quality control issues.1 A standardized microsurgery training model with defined, measurable training endpoints is long overdue.

One instrument for surgical evaluation of conventional microsurgery is the Structured Assessment of Microsurgical Skills (SAMS). The SAMS model assesses four areas: dexterity, visuospatial ability, operative flow, and judgment. It was formulated from other previously validated assessment tools, including the Imperial College Surgical Assessment Device and the Objective Structured Assessment of Technical Skills.2 3 4 5 This model was designed to assess technical skills during conventional microsurgery and has been validated in a recent series at the authors' home institution.6

Robotically assisted microsurgery, or telemicrosurgery, is defined as a microsurgical technique that uses robotic telemanipulators to control an effector component that enhances the precision of surgical movements.7 Robotic microsurgical skills combine many of the same principles as conventional microsurgery with an additional skill set that is unique to the use of the surgical robot. To capture this blend of skills, we created the Structured Assessment of Robotic Microsurgical Skills (SARMS). This evaluation system combines the SAMS scoring system with other validated skill domains pertaining to robotic surgery.8 The SARMS includes three parameters to assess conventional microsurgical skills: (1) dexterity, (2) visuospatial ability, and (3) operative flow. The robotic skills include five additional parameters: (1) camera movement, (2) depth perception, (3) wrist articulation, (4) atraumatic needle handling, and (5) atraumatic tissue handling (Table 1).

Table 1. Structured Assessment of Robotic Microsurgical Skills (SARMS).

| Skill area | Item | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| Microsurgical skills | | | | | | |
| Dexterity | Bimanual dexterity | Lack of use of nondominant hand | | Occasionally awkward use of nondominant hand | | Fluid movements with both hands working together |
| | Tissue handling | Frequently unnecessary force with tissue damage | | Careful but occasional inadvertent tissue damage | | Consistently appropriate with minimal tissue damage |
| Visuospatial ability | Microsuture placement | Frequently lost suture and uneven placement | | Occasionally uneven suture placement | | Consistently, delicately, and evenly spaced sutures |
| | Knot technique | Unsecure knots | | Occasional awkward knot tying and improper tension | | Consistently, delicately, and evenly placed sutures |
| Operative flow | Motion | Many unnecessary or repetitive moves | | Efficient but some unnecessary moves | | Economy of movement and maximum efficiency |
| | Speed | Excessive time at each step due to poor dexterity | | Efficient time but some unnecessary or repetitive moves | | Excellent speed and superior dexterity without awkward moves |
| Robotic skills | | | | | | |
| 1. Camera movement | | Unable to maintain focus or suitable view | | Occasionally out of focus and inappropriate view | | Continually in focus and appropriate view |
| 2. Depth perception | | Frequent inability to judge object distance | | Occasional empty grasp | | Consistently able to judge spatial relations |
| 3. Wrist articulation | | Little or awkward wrist movement | | Occasionally inappropriate wrist movement or angles | | Continually using full range of endowrist motion |
| 4. Atraumatic needle handling | | Consistent bending/breakage of needle | | Occasional bending/breakage of needle | | Consistently undamaged needle |
| 5. Atraumatic tissue handling | | Consistent inappropriate grasping/crushing or overspreading of tissue | | Occasional inappropriate grasping/crushing or overspreading of tissue | | Consistently gentle handling of tissue |
| Overall performance | | Poor | Borderline | Satisfactory | Good | Excellent |
| Indicative skill | | Novice | Advanced Beginner | Competent | Proficient | Expert |
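As a rough illustration of how one rated video might be recorded with this instrument, the sketch below stores the 11 item scores from Table 1 and averages them. The names `SARMS_ITEMS`, `validate_scores`, and `average_score` are hypothetical, the example ratings are invented, and the unweighted mean is an assumption; the source does not state how an average score is formed.

```python
# Hypothetical score sheet for one rated video; item names follow Table 1.
SARMS_ITEMS = {
    "microsurgical": [
        "bimanual dexterity", "tissue handling",
        "microsuture placement", "knot technique",
        "motion", "speed",
    ],
    "robotic": [
        "camera movement", "depth perception", "wrist articulation",
        "atraumatic needle handling", "atraumatic tissue handling",
    ],
}

def validate_scores(scores):
    """Check that every item is present and scored on the 1 to 5 scale."""
    expected = [item for group in SARMS_ITEMS.values() for item in group]
    missing = [item for item in expected if item not in scores]
    if missing:
        raise ValueError(f"missing items: {missing}")
    for item in expected:
        if not 1 <= scores[item] <= 5:
            raise ValueError(f"{item}: score must be between 1 and 5")
    return expected

def average_score(scores):
    """Unweighted mean of the 11 item scores (an assumed aggregation)."""
    items = validate_scores(scores)
    return sum(scores[item] for item in items) / len(items)

# Invented ratings for illustration only, not study data.
example = {item: 3 for group in SARMS_ITEMS.values() for item in group}
example["speed"] = 2
example["camera movement"] = 4
print(round(average_score(example), 2))
```

Keeping the microsurgical and robotic items in separate groups mirrors the layout of the table and would make it straightforward to report subscores per group if that were wanted.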

We describe a process, the scientific details of which are presented elsewhere, that validates the SARMS as a tool to capture robotic microsurgical skill and then tracks the maturation of robotic microsurgical skills in a heterogeneous cohort. We demonstrate that operative times decrease steadily for all users and that SARMS scores improve in all skill areas for all participants. Ultimately, the goal of any technical training program should be a curriculum that includes targeted feedback and results in competency-based training. We believe the work presented here represents the first steps toward that goal in robotic microsurgery.

Training Model and Laboratory Experience

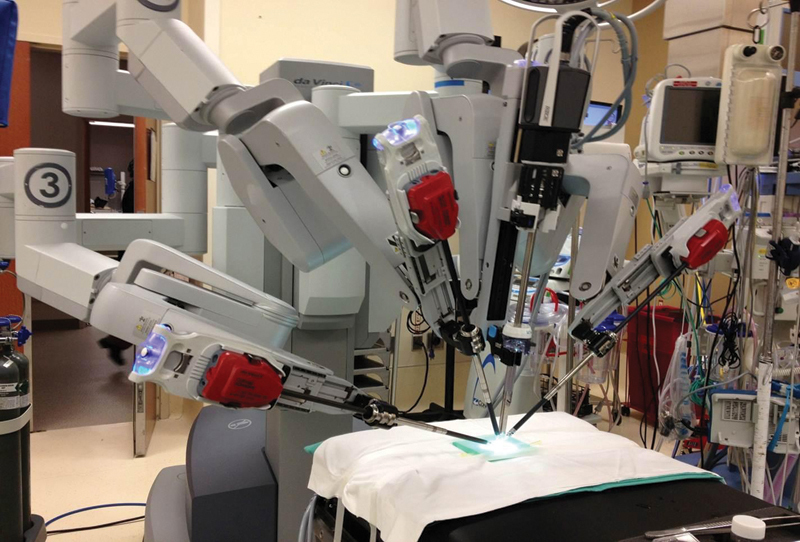

Ten participants from the Department of Plastic and Reconstructive Surgery at MD Anderson Cancer Center, with levels of training ranging from no experience to experienced microsurgeons, were studied. Participants completed a short survey covering demographic information and previous experience with conventional microsurgery, the robotic surgery simulator, and video games, to determine whether experience in any of these areas affected the primary and secondary outcomes of skill and operative time. Each participant then completed an online training module (da Vinci Surgical System Online Training Module, Intuitive Surgical, Sunnyvale, CA). Next, an interactive instructional session was designed and conducted in the operating room on an actual standard da Vinci system. This session began with verification of satisfactory completion of assigned precourse materials, followed by a hands-on demonstration of appropriate set-up and usage of all system components.

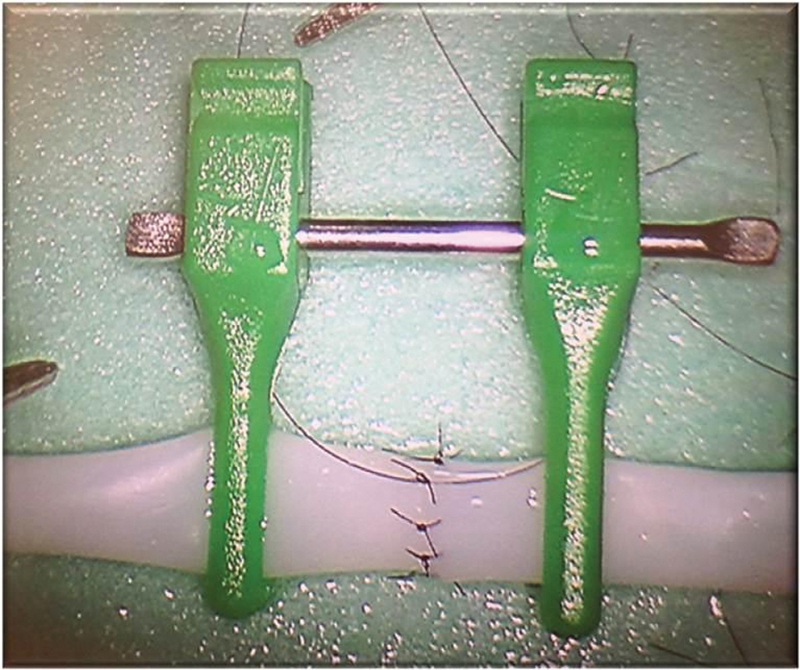

Following the survey and preparatory measures, a dry laboratory session was undertaken using the da Vinci robot to plot the learning curve of participants. For the experimental model, a 3-mm synthetic vessel (Biomet, Warsaw, IN) was fixed to a simple foam platform (Fig. 1). The vessel was divided in half and then secured with a double-opposing vascular clamp. For the robot set-up, two articulated arms equipped with Black Diamond forceps and a high-definition, 0-degree endoscope were used. Sessions were conducted in an actual operating room with a robotic system that is in regular clinical use. The microvascular anastomosis was performed using 9–0 nylon and an end-to-end, 0/180-degree suture technique, with eight interrupted sutures required to complete the task (Fig. 2). Each participant completed five robotic-assisted microvascular anastomoses over the study period.

Fig. 1.

The setup for the laboratory experiment used the da Vinci Surgical System (Intuitive Surgical, Sunnyvale, CA). Instrumentation included two robotic arms employing Black Diamond Micro forceps (Intuitive Surgical) and a high definition, 0-degree endoscope.

Fig. 2.

A 3-mm synthetic vessel was used for the anastomotic model. The vessel was divided and secured with double-opposing vascular clamps. Zero- and 180-degree sutures were placed, followed by three sutures in between on each side for a total of eight. All suturing was performed robotically and the synthetic vessel was mounted on a foam platform.

SARMS Validation

Because the SARMS had never been used before this study, it first had to be validated as an assessment tool. Six of the videos were selected at random. Four of the world's leading experts on robotic microsurgery were selected as blinded evaluators: Sijo Parekattil (University of South Florida), Philippe Liverneaux (University of Strasbourg, FR), Gustavo Mantovani (Sao Paulo, BR), and Jesse Selber (MD Anderson Cancer Center). The order of the videos was randomized, and raters were blinded to the order, the identity of the participant, and the session number. Each skill was evaluated using the SARMS on a 1 to 5 scale, 1 being the worst and 5 being the best. Raters were then compared for agreement.

Statistical Analysis

A paired t test was used to compare changes in SARMS scores for each technical component for each participant over the study period. The Cronbach's α coefficient was used to determine consistency in application of SARMS.9 The kappa statistic was used to determine agreement among raters.10 A value of p < 0.05 was considered significant. All analyses were performed in SAS 9.3 (SAS Institute Inc., Cary, NC) and R 3.0.2 (The R Foundation for Statistical Computing).
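To make the consistency measure concrete, the sketch below computes Cronbach's α with the standard formula α = k/(k − 1) · (1 − Σ s²ᵢ / s²ₜ), where k is the number of raters, s²ᵢ is the variance of rater i's scores across videos, and s²ₜ is the variance of the per-video score totals. Treating the raters as the "items" is an assumption about how the coefficient was applied here, the function name `cronbach_alpha` is hypothetical, and the scores are invented; the authors ran their analyses in SAS and R.

```python
from statistics import variance

def cronbach_alpha(ratings):
    """Cronbach's alpha; ratings[v][r] is the score rater r gave video v."""
    k = len(ratings[0])  # number of raters, treated here as the "items"
    if k < 2 or len(ratings) < 2:
        raise ValueError("need at least two raters and two videos")
    # Sample variance of each rater's scores across the videos.
    per_rater = [variance(column) for column in zip(*ratings)]
    # Sample variance of the summed scores, one total per video.
    total = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(per_rater) / total)

# Invented scores for one skill: 6 videos (rows) by 4 raters (columns).
scores = [
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [4, 4, 5, 4],
    [2, 3, 2, 2],
    [5, 4, 5, 5],
    [3, 3, 4, 3],
]
print(round(cronbach_alpha(scores), 3))
```

Values close to 1 mean the raters rank the videos in much the same way; the coefficient is undefined if the score totals do not vary at all between videos.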

Results

Ten plastic surgeons participated in this study, nine of whom completed all five video sessions. Each surgeon performed five robotic-assisted microsurgical anastomoses. All sessions were recorded. The length of the operative procedures ranged from 9 to 44 minutes. The 45 videos were subjected to blind evaluation using the Structured Assessment of Robotic Microsurgical Skills (SARMS) model, which consists of 11 items: 6 for microsurgical skills and 5 for robotic skills.

The Cronbach's α coefficient was used to determine consistency in the application of the SARMS instrument (Table 2). Our data show that interrater consistency for the SARMS is good on all items. The instrument has excellent consistency on overall performance and on individual skills such as bimanual dexterity, microsuture placement, motion, speed, depth perception, wrist articulation, and atraumatic handling of both needles and tissue. Kappa scores were used to assess agreement among multiple raters. The kappa statistic for most skills was between 0.4 and 0.6, which indicates moderate agreement among raters when using SARMS to assess the same video. Raters demonstrated good agreement in assessing overall performance (κ = 0.625).

Table 2. Consistency of Structured Assessment of Robotic Microsurgical Skills.

| Skill area | Item | Cronbach's α, all raters | Cronbach's α, without rater 1 |
|---|---|---|---|
| Dexterity | Bimanual dexterity | 0.916 | 0.969 |
| | Tissue handling | 0.823 | 0.795 |
| Visuospatial ability | Microsuture placement | 0.920 | 0.904 |
| | Knot technique | 0.872 | 0.907 |
| Operative flow | Motion | 0.939 | 0.914 |
| | Speed | 0.951 | 0.940 |
| Camera movement | | 0.888 | 0.841 |
| Depth perception | | 0.907 | 0.951 |
| Wrist articulation | | 0.917 | 0.922 |
| Communication | | 0.906 | 0.718 |
| Atraumatic needle handling | | 0.957 | 0.937 |
| Atraumatic tissue handling | | 0.924 | 0.901 |
| Average score | | 0.867 | 0.837 |
| Overall performance | | 0.925 | 0.857 |
| Indicative skills | | 0.909 | 0.855 |
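The kappa values reported in the text measure agreement beyond chance among several raters. The sketch below implements Fleiss' kappa, a common multi-rater form, for scores on the 1 to 5 scale. The source does not say which kappa variant or weighting was used, so the choice of the unweighted Fleiss form is an assumption, and the function name `fleiss_kappa` and the example ratings are hypothetical.

```python
from collections import Counter

def fleiss_kappa(ratings, categories=(1, 2, 3, 4, 5)):
    """Fleiss' kappa; ratings[v] lists the score each rater gave video v."""
    n_videos = len(ratings)
    n_raters = len(ratings[0])
    if any(len(row) != n_raters for row in ratings):
        raise ValueError("every video needs the same number of raters")
    counts = [Counter(row) for row in ratings]
    # Observed agreement: share of agreeing rater pairs, averaged over videos.
    p_obs = sum(
        sum(c[cat] * (c[cat] - 1) for cat in categories)
        / (n_raters * (n_raters - 1))
        for c in counts
    ) / n_videos
    # Chance agreement from the pooled proportion of each category.
    p_exp = sum(
        (sum(c[cat] for c in counts) / (n_videos * n_raters)) ** 2
        for cat in categories
    )
    # Undefined (division by zero) if every rating falls in one category.
    return (p_obs - p_exp) / (1 - p_exp)

# Invented ratings: 6 videos, each scored by 4 raters.
ratings = [
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [4, 4, 5, 4],
    [2, 3, 2, 2],
    [5, 4, 5, 5],
    [3, 3, 4, 3],
]
print(round(fleiss_kappa(ratings), 3))
```

Because this form treats the five scores as unordered categories, a disagreement of one point counts the same as a disagreement of four points; a weighted kappa would be the usual alternative for ordinal scales.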

Table 3 lists the mean SARMS scores for each skill area in each session. In general, microsurgical skill scores rose with each successive practice session. For all the robotic microsurgical skills, scores improved as the number of sessions increased (all β values > 0; Table 4). Among them, atraumatic needle handling and atraumatic tissue handling improved the fastest (β = 0.60 and 0.57, respectively).

Table 3. Summary of the score of Structured Assessment of Robotic Microsurgical Skills.

| Skill area | Item | Session 1 | Session 2 | Session 3 | Session 4 | Session 5 |
|---|---|---|---|---|---|---|
| Dexterity | Bimanual dexterity | 2.78 (0.67) | 3.22 (0.83) | 3.56 (0.73) | 3.78 (1.09) | 4.11 (0.93) |
| | Tissue handling | 2.00 (0.71) | 3.00 (0.71) | 3.67 (0.71) | 3.78 (0.83) | 3.89 (0.60) |
| Visuospatial ability | Microsuture placement | 2.22 (0.97) | 2.89 (0.93) | 3.22 (0.97) | 3.00 (0.87) | 3.56 (0.53) |
| | Knot technique | 2.22 (0.44) | 3.00 (0.71) | 3.44 (0.53) | 3.56 (0.73) | 3.67 (0.50) |
| Operative flow | Motion | 1.78 (0.67) | 2.33 (0.71) | 3.00 (0.87) | 3.00 (1.12) | 3.56 (0.73) |
| | Speed | 1.89 (0.60) | 2.44 (0.73) | 3.00 (0.87) | 3.11 (1.05) | 3.78 (0.83) |
| 1. Camera movement | | 2.33 (0.50) | 2.67 (0.71) | 3.11 (0.60) | 3.33 (1.00) | 4.00 (0.00) |
| 2. Depth perception | | 3.11 (0.60) | 3.44 (0.73) | 3.44 (0.73) | 3.67 (0.87) | 4.11 (0.33) |
| 3. Wrist articulation | | 2.67 (0.50) | 3.22 (0.67) | 3.67 (0.71) | 3.67 (1.12) | 4.11 (0.33) |
| 4. Atraumatic needle handling | | 2.33 (0.87) | 2.78 (0.97) | 3.56 (0.53) | 3.67 (0.87) | 4.11 (0.60) |
| 5. Atraumatic tissue handling | | 2.33 (0.71) | 3.11 (0.93) | 3.67 (0.71) | 3.89 (0.60) | 4.22 (0.44) |
| Overall performance | | 2.11 (0.33) | 2.44 (0.73) | 3.00 (0.50) | 3.11 (0.78) | 3.89 (0.60) |

Univariate analysis of previous experience in various domains demonstrated that microsurgical experience and number of robotic simulator hours were associated with certain robotic microsurgical skills. Participants with higher levels of conventional microsurgical expertise performed better overall in the robotic microsurgery tasks (β = 0.32, p = 0.048). Previous robotic experience, laparoscopic experience, and video game experience did not show any impact on robotic microsurgical skills. Microsurgery experience and laparoscopic experience were both related to faster improvement in motion and camera-movement skills. Table 4 lists the effect of session number on operative times. All participants experienced steady decreases in operative time over the course of the five sessions. Shorter operative times were associated with previous microsurgical experience, but not video game or laparoscopic experience, and changes in operative times were independent of any experience.

Table 4. Univariate analysis of time effect of robotic microsurgical skill acquisitions.

| Skill area | Item | β | 95% CI | p Value |
|---|---|---|---|---|
| Dexterity | Bimanual dexterity | 0.35 | 0.12–0.58 | 0.003 |
| | Tissue handling | 0.48 | 0.37–0.60 | <0.001 |
| Visuospatial ability | Microsuture placement | 0.30 | 0.15–0.45 | <0.001 |
| | Knot technique | 0.32 | 0.24–0.40 | <0.001 |
| Operative flow | Motion | 0.34 | 0.17–0.50 | <0.001 |
| | Speed | 0.32 | 0.12–0.52 | 0.002 |
| 1. Camera movement | | 0.38 | 0.28–0.48 | <0.001 |
| 2. Depth perception | | 0.23 | 0.14–0.31 | <0.001 |
| 3. Wrist articulation | | 0.30 | 0.15–0.46 | <0.001 |
| 4. Atraumatic needle handling | | 0.60 | 0.40–0.80 | <0.001 |
| 5. Atraumatic tissue handling | | 0.57 | 0.35–0.79 | <0.001 |
| Overall performance | | 0.42 | 0.36–0.47 | <0.001 |
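The β coefficients can be read as the change in SARMS score per additional session. As a minimal sketch of that idea, the code below fits an ordinary least-squares line to the mean overall performance scores per session taken from Table 3. The source does not specify the regression model behind Table 4, so fitting a straight line to session means is an assumption, and the function name `least_squares_slope` is hypothetical.

```python
def least_squares_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    return sxy / sxx

sessions = [1, 2, 3, 4, 5]
# Mean overall performance score per session, copied from Table 3.
overall = [2.11, 2.44, 3.00, 3.11, 3.89]

slope = least_squares_slope(sessions, overall)
print(round(slope, 3))  # 0.423
```

For overall performance this simple fit gives about 0.42 points per session, in line with the value in Table 4. The same fit on the session means does not reproduce every row of that table, which suggests the published coefficients came from participant-level data or a different model.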

Discussion

Robotic microsurgery has many theoretical advantages. Complete tremor elimination and up to 5-to-1 motion scaling provide the robot with superhuman levels of precision. In no area is this attribute more critical than in microsurgery where success is measured in nanometers. Because of the inherent benefit of the robotic surgical platform, there is considerable interest in defining its role in microsurgery. To do this systematically, we have developed a well-defined anastomotic model, a validated assessment tool, and a general plot of the trajectory of both naive and experienced learners.

In this article, we described a new robotic-assisted microsurgical evaluation system, the SARMS, which demonstrated good consistency and interrater reliability. We further showed improvement in robotic microsurgical skill across a heterogeneous group of learners over a relatively compressed learning schedule and number of practice sessions. All skill areas and overall performance improved significantly for each participant over the five robotic-assisted microanastomosis sessions. Operative time decreased over the study for all participants. The results showed an initial steep ascent in technical skill acquisition followed by more gradual improvement, and a steady decrease in operative times that ranged between 1.2 hours and 9 minutes. Prior experience with conventional microsurgery did help skill acquisition in certain specific areas but was not essential, indicating that the technical aspects of robotically assisted microsurgery can be gained by learners with no prior microsurgery experience.11 This has clear implications for the structure of future curricula: conventional and robotic microsurgical skills could be taught in series or in parallel, because they are not strictly cumulative.

The concept of a learning curve is being used increasingly in surgical training and education to denote the process of gaining knowledge and improving skills in performing a surgical procedure.12 It provides an objective assessment of technical ability and a benchmark to compare surgical approaches and technologies. The learning curve is defined by the number of cases required to achieve technical competence at performing a particular surgery.12 Learning curves are commonly assessed using operative time as a surrogate, assuming that operative times improve as the surgeon becomes more facile. Although this endpoint is easy to measure, it is fundamentally low resolution in that it does not provide objective definitions of learning and is not a direct indicator of learning.13 Other evaluation tools have been developed that promise more technique-specific evaluation of learning curves. Both microsurgical learning curves and robotic learning curves have previously been described, but robotic microsurgery has not been rigorously studied elsewhere.14 15 The SARMS evaluation system is unique in that it combines previously validated skill assessment parameters for both microsurgery and robotic surgery.

Attempting to define learning curves in a clinical setting is fraught with challenges. Variation in patient anatomy, operative conditions, and many other factors make each clinical situation different and introduce inherent variation into the evaluation of the learner. The microsurgical anastomosis is as close to an ideal model for evaluating skills and defining a learning curve as possible because, unlike the constantly shifting sands of a clinical case, it is a finite task with a straightforward sequence of steps. By using synthetic vessels of a predetermined size and a prescribed technical method, the model becomes extremely reproducible, with almost no variation introduced except the skill and alacrity of the learner. Once the model is established, an evaluation system can be tested, as we have done using the SARMS, and once validation is established, a learning curve can be plotted using a range of participants from the surgically naïve to the experienced microsurgeon, as we have shown.

Creating measurement tools and models of learning is the first step on the road to customized education, curricular design with individual assessment and targeted feedback, and competency-based learning, which is the future of surgical education.

Conclusion

The Structured Assessment of Robotic Microsurgical Skills (SARMS) is the first validated instrument for assessing robotic microsurgical skills. Proficiency in robotic microsurgical skills can be achieved over a relatively limited number of practice sessions. Standardized evaluation and systematic learning of both robotic and conventional surgical techniques are necessary and lie at the heart of competency-based training.

References

- 1. Liverneaux P A, Berner S H, Bednar M S. Telemicrosurgery: robot assisted microsurgery. Paris, France: Springer; 2013.

- 2. Balasundaram I, Aggarwal R, Darzi L A. Development of a training curriculum for microsurgery. Br J Oral Maxillofac Surg. 2010;48(8):598–606. doi: 10.1016/j.bjoms.2009.11.010.

- 3. Temple C L, Ross D C. A new, validated instrument to evaluate competency in microsurgery: the University of Western Ontario Microsurgical Skills Acquisition/Assessment instrument [outcomes article]. Plast Reconstr Surg. 2011;127(1):215–222. doi: 10.1097/PRS.0b013e3181f95adb.

- 4. Chan W, Niranjan N, Ramakrishnan V. Structured assessment of microsurgery skills in the clinical setting. J Plast Reconstr Aesthet Surg. 2010;63(8):1329–1334. doi: 10.1016/j.bjps.2009.06.024.

- 5. Chan W Y, Matteucci P, Southern S J. Validation of microsurgical models in microsurgery training and competence: a review. Microsurgery. 2007;27(5):494–499. doi: 10.1002/micr.20393.

- 6. Selber J C, Chang E I, Liu J, et al. Tracking the learning curve in microsurgical skill acquisition. Plast Reconstr Surg. 2012;130(4):550e–557e. doi: 10.1097/PRS.0b013e318262f14a.

- 7. Liverneaux P, Nectoux E, Taleb C. The future of robotics in hand surgery. Chir Main. 2009;28(5):278–285. doi: 10.1016/j.main.2009.08.002.

- 8. Dulan G, Rege R V, Hogg D C, et al. Developing a comprehensive, proficiency-based training program for robotic surgery. Surgery. 2012;152(3):477–488. doi: 10.1016/j.surg.2012.07.028.

- 9. Cronbach L J. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16:297–334.

- 10. Tavakoli A S, et al. Calculating multi-rater observation agreement in health care research using the SAS Kappa statistic. Paper presented at: SAS Global Forum 2012; April 22–25, 2012; Orlando, FL.

- 11. Karamanoukian R L, Bui T, McConnell M P, Evans G R, Karamanoukian H L. Transfer of training in robotic-assisted microvascular surgery. Ann Plast Surg. 2006;57(6):662–665. doi: 10.1097/01.sap.0000229245.36218.25.

- 12. Hernandez J D, Bann S D, Munz Y, et al. Qualitative and quantitative analysis of the learning curve of a simulated surgical task on the da Vinci system. Surg Endosc. 2004;18(3):372–378. doi: 10.1007/s00464-003-9047-3.

- 13. Darzi A, Smith S, Taffinder N. Assessing operative skill. Needs to become more objective. BMJ. 1999;318(7188):887–888. doi: 10.1136/bmj.318.7188.887.

- 14. Lee J Y, Matter T, Parisi T J, Carlsen B T, Bishop A T, Shin A Y. Learning curve of robotic-assisted microvascular anastomosis in the rat. J Reconstr Microsurg. 2012;28(7):451–456. doi: 10.1055/s-0031-1289166.

- 15. Seamon L G, Cohn D E, Richardson D L, et al. Robotic hysterectomy and pelvic-aortic lymphadenectomy for endometrial cancer. Obstet Gynecol. 2008;112(6):1207–1213. doi: 10.1097/AOG.0b013e31818e4416.