Abstract

Background

Intervention studies in participatory ergonomics (PE) are often difficult to interpret due to limited descriptions of program planning and evaluation.

Methods

In an ongoing PE program with floor layers, we developed a logic model to describe our program plan, along with process and summative evaluations designed to assess program delivery and program efficacy, respectively.

Results

The logic model was a useful tool for describing the program elements and subsequent modifications. The process evaluation measured how well the program was delivered as intended, and revealed the need for program modifications. The summative evaluation provided early measures of the efficacy of the program as delivered.

Conclusions

Inadequate information on program delivery may lead to erroneous conclusions about intervention efficacy due to Type III error. A logic model guided the delivery and evaluation of our intervention and provided information that aids interpretation of the results.

Keywords: intervention, injury prevention, musculoskeletal disorder, logic model, process evaluation

INTRODUCTION

Participatory ergonomics (PE) studies in complex work environments have shown limited efficacy in reducing musculoskeletal disorders (MSD) [Rivilis et al., 2008; van Eerd et al., 2010]. Even studies that observed efficacy of PE interventions in reducing symptoms, injuries, and lost days have been unable to explain which program elements were responsible for these outcomes [Evanoff et al., 1999], limiting the usefulness of findings to plan other interventions. Many PE studies do not clearly describe their implemented programs, nor measure the extent to which programs were implemented as planned [Rinder et al., 2008; Rivilis et al., 2008; van Eerd et al., 2010]. Studies without adequate process evaluation are subject to Type III error, concluding that the program was not effective without recognizing that it was not delivered as intended [Glasgow et al., 2003; Hasson, 2010; Linnan and Steckler, 2002].

Construction workers, including floor layers, have high rates of MSD [Jensen and Friche, 2010; Spector et al., 2011] and rates remain high in this industry despite a recent national downward trend in MSD across other industries [CDC, 2009]. Participatory ergonomic interventions have been recommended in construction since workers often have the autonomy to select the tools and methods used to complete tasks in a rapidly changing work environment [Kramer et al., 2009; Ringen and Stafford, 1998; Scharf et al., 2001], and experienced workers can bring to the program their expertise and knowledge of safe and productive work processes [Hess et al., 2004; Moir and Buchholz, 1996; van der Molen et al., 2005b]. While PE interventions should be well suited to construction work, it is not clear if PE interventions can be successfully incorporated into small construction firms, which often lack formal safety programs [Hasle, 2012; Rinder et al., 2008; Behm, 2008; Wojcik et al., 2003].

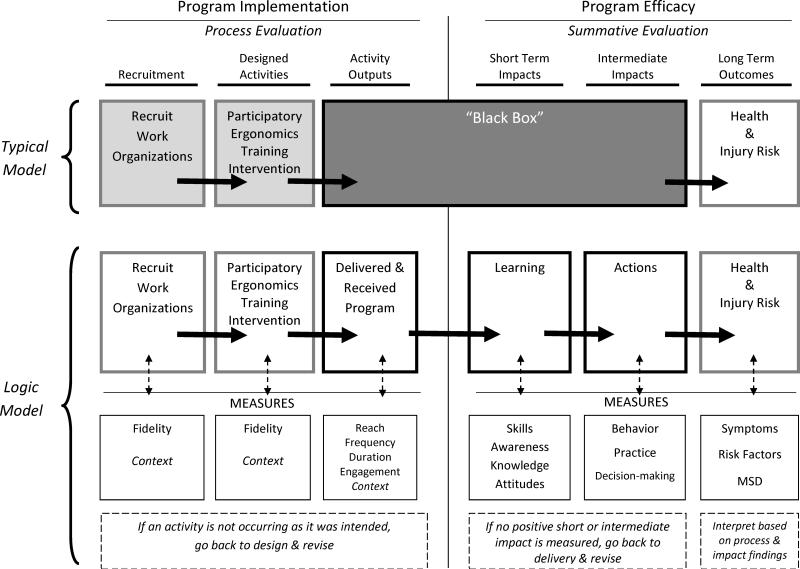

A major goal of MSD prevention research is to diffuse innovations and transfer research-based findings to workers, employers, health and safety professionals, researchers, and policy makers [CPWR, 2009; CRE-MSD, 2012; IRSST, 2010; IWH, 2012; NIOSH, 2011]. Without systematic program description and evaluation, we cannot determine why innovations or programs worked or did not work in the studied population, which limits dissemination to other work groups. Planning (logic) models and process evaluation methods are less commonly used by researchers in ergonomics and MSD prevention than in other areas of public health [Berthelette et al., 2012; Driessen et al., 2010; Hengel et al., 2011; Helitzer et al., 2009; Roquelaure, 2008; van der Molen et al., 2005a; van Eerd et al., 2010]. Most intervention programs measure only long term outcomes (e.g., injury rates), leaving information about program implementation and short term outcomes hidden in a “black box” [Hulscher et al., 2003; Saunders et al., 2005; Weiss, 1997]. Interpreting long term outcomes requires process evaluation of the intervention and measurement of short term outcomes to distinguish between lack of program impact (program failure) and lack of an effect of behavior change on health (theory failure) [Kristensen, 2005]. Detailed description and evaluation of PE programs will improve the usefulness of results to practitioners, researchers, and other stakeholders [Kristensen, 2005; Rogers, 2007]. Given that logic models have aided the evaluation of other public health programs, they may provide needed structure for delivering and evaluating complex PE programs.

In this manuscript, we use a logic model to describe the planning and evaluation structure of a PE program, and provide examples of measures used in a PE program delivered to a group of construction workers (floor layers). Using this PE program as an example, we show how a logic model can aid the interpretation of long term outcomes by describing the intermediate steps of program delivery and evaluation, which are often hidden in a “black box.”

MATERIALS AND METHODS

As part of an ongoing study of PE interventions in construction trades, we recruited three floor layer contractors in the St. Louis metropolitan area in Missouri, USA. We worked with the local floor layers union to identify flooring contractors, and met with willing contractors to discuss their study participation, which included providing access to one or more work groups, sharing their annual OSHA log, allowing formal and informal worker training, supporting survey implementation, and scheduling workers together in the same work group over a period of six months. Contractors who committed to all these PE components were invited to participate and signed a participation agreement form. Each of the contractors provided access to a work group for recruitment of floor layer apprentices, journeymen, and foremen who typically worked together. Our study protocol was reviewed and approved by the Institutional Review Boards at Washington University School of Medicine and Saint Louis University. All subjects provided informed written consent to participate in this study.

Development of a Logic Model

We constructed a logic model showing the relationship between our program implementation and program outcomes [IOM and NRC, 2009; WK Kellogg Foundation, 2004]. The model included both process and summative evaluation components to assess program implementation, activity outputs, and the short term and intermediate outcomes that are precursors to the desired long term outcomes [Edberg, 2007].

Figure I shows a traditional PE Study Model in the first row with recruitment, PE intervention, and a “black box” representing often omitted information about the activity description, outputs, and short term and intermediate impacts of the program. The second row shows a PE logic model, which follows recommendations for health behavior evaluation in describing the designed activities, activity outputs, impacts, and long term outcomes [Edberg, 2007]. Arrows indicate the expected progression through the program. The third row shows measures for each portion of the logic model, with bidirectional arrows to indicate an iterative process of feedback and program adjustment [Campbell et al., 2000].

Figure I.

Comparison of Participatory Ergonomics Study Models for Program Evaluation

Describing the Program

The tailored PE training intervention emphasized that workers should 1) identify targets for change and 2) be engaged in the social process of identifying solutions and procedures for implementation [Gherardi and Nicolini, 2002]. PE intervention models appeared to be well suited for the construction work environment, where small groups of floor layers work closely together to complete building projects [Hess et al., 2004; Wijk and Mathiassen, 2011]. These small group interactions provided the opportunity for workers to develop solutions to the new problems created by the rapidly changing work environment.

Our planned intervention included a variety of PE training activities described in Table I. Groups of workers would receive two 30-minute General Ergonomics Training sessions followed by weekly 30-minute Researcher-led Meetings (4 to 8 sessions), and after one to two months, would progress to weekly Worker-led Meetings (4 to 8 sessions). In addition to group training, researchers would engage in One-on-one Interactions with individual workers one to two times per week for two to three months (8 to 24 sessions).

Table I.

Planned Activities for Program Implementation

| Activity | Planned implementation |
|---|---|
| Recruitment | |
| Recruitment of Contractors | Contractors will be interviewed during an initial partnership meeting using a “wish list” of key factors that we identified for successful participation (eagerness to participate, secured work during the study period, a designated contact person, and an intervention group that has steady work and will stay together). Contractors who agree to participate will be required to sign a partnership agreement acknowledging their responsibilities. During a post-intervention meeting, researchers will interview the contractors to learn about their follow-through with the partnership agreement. |
| Participatory Ergonomics Training Intervention | |
| Group Training | |
| General Ergonomics Training 1 & 2 | Ergonomist-researchers will provide two 30-minute training sessions covering recognition of signs and symptoms of MSD, specific worksite examples of task-related risk factors, and how to identify problems and ergonomic solutions. |
| Researcher-led Meetings | After the General Ergonomics Training and at least 2 weeks of One-on-one Interactions, these 30-minute interactive group meetings will explore the workers’ problem tasks and solutions to those problems. The meetings will continue weekly for at least 1 to 2 months, until the PE group progresses to Worker-led Meetings. |
| Worker-led Meetings | The PE group will continue the problem-solution process, using a worksheet to guide discussion of the prior week's identified solutions, determine whether those solutions were implemented and whether they worked, and discuss new problems and solutions. |
| Individual Training | |
| One-on-one Interactions | One week post-training, the ergonomist-researchers will visit the trained workers and briefly discuss their current work tasks, problems related to the tasks, and solutions that workers are considering. These interactions will occur periodically for 2 to 3 months, after which the workers will continue the problem and solution identification process. |

Process Evaluation & Summative Evaluation Plans

A description of the evaluation plan is presented in Table II, which provides examples of measures for each component of the evaluation, evaluation questions, data sources, tools and procedures for data collection, data analysis, and application of the findings. We planned to use mixed methods to perform the process evaluation and to measure the short term and intermediate impacts of the program [Sandelowski, 2000]. Process evaluation measured program implementation, while summative evaluation measured program efficacy.

Table II.

Program Evaluation Plan

| Component | Evaluation Measures | Evaluation Questions | Data Sources | Tools / Procedures | Data Analysis or Synthesis | Use of the Findings |
|---|---|---|---|---|---|---|
| Program Implementation – Process Evaluation | | | | | | |
| Recruitment | | | | | | |
| Recruit Work Organizations | Fidelity – the extent to which researchers followed the “wish list” for recruitment, contractors’ adherence to the partnership agreement, and the surrounding context. | 1. To what extent were contractors recruited within the “wish list”? 2. Did contractors follow the partnership agreement? | Researchers | 1. Items from the “wish list.” 2. Items from the partnership agreement & meeting notes. | Content analysis of the initial partnership and post-intervention meeting notes. | Inform the criteria for recruitment and feasibility of items on the partnership agreement. |
| Designed Activities | | | | | | |
| Participatory Ergonomics (PE) Training Intervention | Fidelity – the extent to which the program was implemented as planned and the context in which it occurred. | To what extent were the training intervention and training content implemented as researchers planned? | Researchers | Descriptions of original training, training logs, and delivered training presentations. | Comparison of intended training program delivery to the actual training program delivery. | Determine what was feasible to provide in training and modifications for the training content. |
| Activity Outputs | | | | | | |
| Delivered & Received Program | Reach – proportion of the intended audience that participates in the training (attendance). | Was the training program delivered to at least 80% of the workers? | Researchers | Training logs | Calculate the proportion of workers who participated in each training component. | If attendance is low, consider adjusting delivery of training to improve reach. |
| | Frequency – the number of intended units of each training component that were delivered. | To what extent was the intended number of training units delivered? | Researchers | Training logs | Calculation of frequency of attendance for each training component. | Explore the context of training delivery to make adjustments. |
| | Duration of workers in training. | How long did the workers receive the training? | Workers | Training logs | Sum the duration of training. | Explore the context surrounding duration to make adjustments. |
| | Engagement of workers with the intervention. | 1. To what extent did workers actively engage in the training? 2. Were workers receptive to the program? | Workers | 1. Field notes. 2. Self-report surveys & focus groups. | Content analysis | Determine ways to improve active engagement & receptiveness. |
| | Context – aspects of the larger social & political environment that may influence the program. | Were there aspects of the larger environment that influenced implementation? | Contractors, union reps, local business reports. | Field notes, worker job logs, contractor & union interviews, and local business journal reports. | Review of the local construction economy from 2007 through 2011. | Modify the program to work within the limitations of the context. |
| Program Efficacy – Summative Evaluation | | | | | | |
| Short Term Impacts | | | | | | |
| Learning | Skills, awareness, knowledge, attitudes | To what extent did workers identify problems (e.g. risk factors) and solutions (e.g. work methods & tools or equipment)? | Workers | 1. Self-report surveys. 2. Observation field notes. 3. Interview field notes. | 1. Quantitative analysis. 2. Content analysis. 3. Content analysis. | Consider modifications to target increased learning. |
| Intermediate Impacts | | | | | | |
| Actions | Behavior, practice, decision-making | To what extent did workers perform solutions (e.g. work methods & tools or equipment) to make the job easier? | Workers & Researchers | 1. Self-report surveys. 2. Observation field notes. 3. Video analysis. 4. Interview notes. | 1. Quantitative analysis. 2. Content analysis. 3. Video analysis. 4. Content analysis. | Modify training to target increased learning and actions. |
| Long Term Outcomes | | | | | | |
| Health & Injury Risk | Symptoms, risk factors, MSD | To what extent did workers’ reports of discomforts, exposures to risk factors, & MSD change? | Workers & Contractors | 1. Self-report surveys administered at baseline, 3 and 12 months. 2. OSHA 300 Logs. | Quantitative analysis | Interpret these results based on findings from the process & impact evaluations. |

Process Evaluation and Description of Data Elements

The process evaluation was designed to determine whether the program was delivered as planned, whether it needed modifications to improve delivery or efficacy, and whether the intervention produced unintended consequences [Grembowski, 2001; Saunders et al., 2005]. Activity outputs were evaluated in terms of reach (participation), frequency, duration, and engagement. Data were recorded to describe the context surrounding the recruitment activities, PE training intervention, and activity outputs [Linnan and Steckler, 2002].

Fidelity of Recruitment

Fidelity of recruitment refers to the extent to which recruitment activities by the researchers and contractors were carried out as planned. During initial partnership meetings with contractors, researchers used a recruitment “wish list,” or guide, for selecting floor layer contractors for participation in the study; one item on the list was a contractor's ability to provide a floor layer group that would work together for the duration of the intervention.

Fidelity of Training

Fidelity of the PE training intervention measures the extent to which the intervention was implemented as designed. We measured fidelity by comparing the planned training objectives and content to the delivered training presentations and training logs to determine the amount of intended training content that was actually delivered.

Reach

Reach is the proportion of the intended audience that participated in each portion of the intervention. We utilized training logs to determine how many workers we planned to target and how many workers actually attended General Ergonomics Training from each contractor group.
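As a minimal sketch of the reach calculation, the Python below assumes a hypothetical training-log format in which each entry records one worker attending one training component. The `TrainingRecord` class, the `reach` function, and the example worker IDs are illustrative names, not tools or data from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingRecord:
    """Hypothetical training-log entry: one worker attending one training component."""
    worker_id: str
    component: str


def reach(log: list[TrainingRecord], intended: set[str], component: str) -> float:
    """Proportion of the intended audience that attended `component` at least once."""
    if not intended:
        raise ValueError("the intended audience must not be empty")
    # A set counts each worker once, however many sessions they attended;
    # workers outside the intended audience are ignored.
    attended = {r.worker_id for r in log
                if r.component == component and r.worker_id in intended}
    return len(attended) / len(intended)


# Illustrative data only: four targeted workers, two of whom attended the training.
example_log = [
    TrainingRecord("w1", "General Ergonomics Training"),
    TrainingRecord("w2", "General Ergonomics Training"),
    TrainingRecord("w2", "General Ergonomics Training"),
    TrainingRecord("w3", "One-on-one Interaction"),
]
print(reach(example_log, {"w1", "w2", "w3", "w4"}, "General Ergonomics Training"))  # 0.5
```

Counting distinct workers rather than log entries keeps repeat attendance from inflating reach, which matches the definition of reach as a proportion of the intended audience.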

Frequency

Frequency, a measure of training completeness, is the number of intended intervention activities that were provided. We used training logs to track delivery of training sessions. For One-on-one Interactions, we divided the number of delivered interactions by the total number of intended interactions.
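For One-on-one Interactions, frequency reduces to delivered units divided by intended units. The sketch below assumes, hypothetically, that the plan is recorded as a number of intended interactions per worker, and that interactions delivered beyond a worker's plan are capped so the proportion cannot exceed 1; the function name and example data are illustrative only.

```python
from collections import Counter


def frequency(delivered: list[str], intended_per_worker: dict[str, int]) -> float:
    """Delivered One-on-one Interactions as a proportion of those intended.

    `delivered` holds one worker ID per interaction that took place;
    `intended_per_worker` maps each targeted worker to the number of interactions planned.
    """
    total_intended = sum(intended_per_worker.values())
    if total_intended <= 0:
        raise ValueError("at least one interaction must be intended")
    counts = Counter(delivered)
    # Cap each worker at their planned number (an assumption of this sketch) and
    # ignore interactions with workers who were not part of the plan.
    delivered_within_plan = sum(min(counts[w], n) for w, n in intended_per_worker.items())
    return delivered_within_plan / total_intended


# Illustrative data only: two workers, each planned for 8 interactions.
plan = {"w1": 8, "w2": 8}
print(frequency(["w1"] * 6 + ["w2"] * 2, plan))  # 0.5
```

Aggregating over all workers gives a single program-level figure; computing the same ratio per worker would instead show which individuals received fewer interactions than planned.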

Duration

Duration is the total length of time the training program occurred for each work group. We intended for the training intervention to last at least two and up to six months for each of the contractor groups. We utilized training logs to track duration of the intervention for each contractor group.
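Duration can be derived from the session dates in the training logs. This sketch assumes that each contractor group's log reduces to a list of session dates, and it converts elapsed days to months using an average month of 365.25/12 days; both the conversion and the function names are assumptions made for illustration. The planned window of two to six months is taken from the text above, and the dates in the usage lines are invented, not study data.

```python
from datetime import date
from statistics import mean

DAYS_PER_MONTH = 365.25 / 12  # average month length, an assumption of this sketch


def duration_months(session_dates: list[date]) -> float:
    """Elapsed months from a group's first to its last logged training session."""
    if not session_dates:
        raise ValueError("no sessions logged for this group")
    return (max(session_dates) - min(session_dates)).days / DAYS_PER_MONTH


def within_plan(months: float, low: float = 2.0, high: float = 6.0) -> bool:
    """True if a duration falls inside the planned two-to-six-month window."""
    return low <= months <= high


def mean_duration(groups: dict[str, list[date]]) -> float:
    """Mean intervention duration, in months, across contractor groups."""
    return mean(duration_months(dates) for dates in groups.values())


# Illustrative dates only, not study data.
groups = {
    "A": [date(2000, 1, 3), date(2000, 2, 14), date(2000, 4, 24)],
    "B": [date(2000, 1, 10), date(2000, 5, 1)],
}
for name, dates in groups.items():
    months = duration_months(dates)
    print(name, round(months, 1), within_plan(months))
print(round(mean_duration(groups), 1))
```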

Engagement

Engagement is the extent to which workers were actively engaged with, interacted with, were receptive to, and used materials or resources from the training. We measured workers’ receptiveness to the training using self-report survey items (e.g. usefulness of the training and most helpful aspects of training). Post-intervention, researchers held focus groups to ask workers about their receptiveness to the PE training intervention and their use of ergonomic resources presented in training.

Context

Context data describe aspects of the larger social and political environment that may influence implementation of the program. We used contractor meeting notes and field notes to describe the circumstances surrounding recruitment and training (e.g. tight deadlines), and training logs to describe the context of the training environment (e.g. distractions during training). Contractor-provided daily worker job logs were used to describe the variability of work locations and the transiency of workers. Contractor and union interviews (e.g. on the state of the construction economy) and local business journal reports (e.g. local construction economy data) provided context on the local construction industry.

Summative Evaluation and Description of Data Elements

We designed this portion of our program evaluation to determine the impact of the PE training intervention on various short term, intermediate, and long term outcomes.

Short term impact

Short term impacts included the learning constructs of skills, awareness, knowledge, and attitudes related to the PE intervention. Skills were measured by the workers’ ability to identify problems (e.g. high risk tasks related to discomfort) and solutions (e.g. work methods, tools, or equipment). Awareness (e.g. ability to point out why work tasks are physically demanding), attitudes (e.g. willingness to try new tools), and knowledge (e.g. knowing how to use ergonomics in his job) were measured using survey items. Data were collected through repeated self-report surveys and interviews. During One-on-one Interactions, researchers explored the workers’ awareness and their skills in identifying problems and solutions within their work tasks, and recorded the data in written field notes.

Intermediate impact

Intermediate impact of the program was assessed by behaviors, practice of ergonomic methods, and decision-making. A variety of data were collected to determine the extent to which the outcomes were achieved. Data sources included surveys in which workers were asked to report their individual use of ergonomic solutions, field observations, videotapes, and periodic worker interviews. Focus groups conducted after the intervention period were used to explore the fabrication or purchase of new tools or equipment, decisions made related to ergonomics, and communication of ergonomics ideas with others.

Long term outcomes

Long term health and injury risk outcomes (i.e. reductions in MSD symptoms, MSD risk factors, and occurrence of MSD) were measured by worker surveys and by each contractor's OSHA Form 300, the “Log of Work-Related Injuries and Illnesses.” An example of a symptom item was, “Have you felt any muscle or joint pain or discomfort in the last 4 weeks?” [Village and Ostry, 2010]. Symptoms were assessed by location and severity. An item on exposure to hand force was “How much effort do you use to grip a power tool?”, rated on a scale of effort [Borg, 1990]. Other risk factor measures included use of vibrating hand tools, working with the hands above the head, bending forward, kneeling, and lifting or carrying objects.

Planned Data Collection Activities for Conducting Process & Summative Evaluations

Process evaluation data included training logs and worker job logs. The content and delivery of the planned training were compared with the delivered presentations to describe their differences. Focus group discussions were audio recorded and transcribed for content analysis. Short term and intermediate outcomes were collected through worker surveys at two, six, and twelve weeks after the ergonomics training and one year after the intervention. Two researchers extracted worker actions from observation and interview field notes and from video. Long term outcome data were collected through surveys at baseline, three months, and one year post-intervention, and from contractors’ annual logs of work-related MSD.

For this manuscript, we have illustrated the potential utility of our logic model using examples from our data sources in this ongoing intervention study.

RESULTS

We used our logic model (Figure I) to guide the delivery of an ongoing PE program and to demonstrate the use of process evaluation to modify this continuing program. We described the planned activities of the PE program (Table I) in order to compare them with the activities that actually occurred, as shown in the following examples from our delivered program. To illustrate the application of the logic model to the presentation of intervention results, we also provide examples of summative evaluation outcomes among a cohort of 25 floor layers participating in an ongoing PE intervention study.

Process Evaluation

Fidelity of Recruitment

At baseline, all of the invited contractors (n=3) demonstrated eagerness to participate and provided researchers with a contractor representative to act as the main contact person for coordinating visits with the workers. By the midpoint of participation, none of the three contractors was able to schedule its core work group together for long periods at one site: Contractor A's work was on hold, Contractor B had no planned work, and Contractor C's core work group was divided between two job sites with inconsistent work.

Fidelity of Training

Time limitations in the workers’ schedules did not allow all workers to participate in training as planned. We reduced the duration of the General Ergonomics Training by 50% while retaining all of the core educational elements. For workers who missed the original training, we provided a condensed version that included fewer examples of problems and solutions and fewer illustrative photos than the original training.

Process Evaluation: Activity Outputs

Reach

All workers (n=25) attended either the full or condensed version of General Ergonomics Training. Participation in one or more One-on-one Interactions was 92% (n=23).

Frequency

We delivered the full, two-session General Ergonomics Training to 32% (n=8) of workers and a condensed, one-session version to 68% (n=17). Researcher-led Meetings occurred in only one of the three participating contractor groups, and none of the groups progressed to Worker-led Meetings because of temporary layoffs and movement of workers to different sites.
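The proportions reported under Reach and Frequency can be checked against the cohort of 25 floor layers; each percentage is the corresponding count divided by 25:

```latex
\begin{aligned}
\text{Reach (General Ergonomics Training)} &= \tfrac{25}{25} = 100\% \\
\text{Reach (One-on-one Interactions)} &= \tfrac{23}{25} = 92\% \\
\text{Full two-session training} &= \tfrac{8}{25} = 32\% \\
\text{Condensed one-session training} &= \tfrac{17}{25} = 68\%, \qquad 8 + 17 = 25
\end{aligned}
```

The last two counts sum to the full cohort, consistent with every worker having received one of the two versions of the General Ergonomics Training.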

Duration

The length of the intervention for each group ranged from three to four months, with a mean duration of 3.6 months, within the planned range of two to six months.

Engagement

Workers were actively engaged in the General Ergonomics Training. When asked what was helpful, workers cited One-on-one Interactions and “just talking” more often than group meetings, supporting an emphasis on participatory discussions rather than formal meetings. In focus groups, we asked workers how the PE program changed the way they think about work technique, tools, and equipment. A quote from one worker was typical of responses in self-reported follow-up surveys: “Yeah for sure, now [you] got us all thinking of ways to try to make tools and make things easier.”

Context

The context includes factors that did not result from our program but may have influenced its delivery. Our review of each contractor's daily worker job logs showed high variability of job locations: workers often did not stay together and often moved to different work locations within a single work day or week. Our field notes indicated that some workers experienced tightened build deadlines and others’ workloads decreased, resulting in temporary layoffs.

Summative Evaluation

Short term impact: Learning

Early analysis of repeated surveys indicated that workers were aware of why some work tasks were physically demanding, felt knowledgeable about ergonomics, and had a positive attitude towards trying new tools to reduce the risk of pain and discomfort on the job. During One-on-one Interactions, workers indicated awareness of ergonomic problems in their work tasks, including activities that involved high force, repetition, awkward postures, and vibration (e.g. lifting boxes of ceramic tiles, troweling tile adhesive, kneeling and leaning on the hands at floor level, and operating vibrating power floor strippers). We incorporated workers’ awareness of specific ergonomic problems into the One-on-one Interactions and weekly meetings to expand on their ideas and interests related to ergonomics.

Intermediate Impacts (Actions) & Long Term Outcomes

Preliminary analysis showed that 83% (n=10) of workers surveyed one to three months post-intervention had changed their work methods, tools, or equipment in order to make their jobs physically easier, and described limits to their ability to consistently adopt new equipment or methods (e.g. too expensive, unable to plan ahead, may reduce productivity). Some workers reported changes in interviews and/or demonstrated during field observations how they implemented solutions (e.g. using a wheeled hand truck to transport boxes of tiles and reduce carrying). Other workers displayed no behavioral changes and indicated various barriers to implementing solutions, as described above under “Context.” Data collection on changes in MSD symptoms, reductions in physical exposures, and occurrence of MSD is still in progress for these longer term outcomes, and future analysis will provide further evaluation of program efficacy.

DISCUSSION

We applied a logic model to the design of a PE intervention in floor layers and found it useful in describing our program plan and in systematically providing feedback on its delivery. The process evaluation quickly showed that reach of the General Ergonomics Training would be low (32%) if we did not better accommodate the floor layers’ tight work schedules. By modifying the method of delivery, we were able to maintain fidelity and deliver the core principles of the General Ergonomics Training to all of the intended workers. Workers preferred informal discussions of ergonomic problems and solutions, which supported our focus on One-on-one Interactions; because groups of workers were difficult to gather for Researcher-led and Worker-led Meetings, this focus also increased the frequency of training delivered. It was important to use the process evaluation to determine how our program worked under usual, everyday work conditions, since contextual factors affected the degree of implementation [Cole et al., 2009; Hengel et al., 2011; Wells et al., 2009]. The high variability of worker movement between jobs and the time constraints imposed by profound economic pressure at the time of the study left little time for ergonomics training or other activities that were not necessary for rapid completion of the build. Broader influences of the construction environment on the program may include intense competition among contractors to perform low bid work within the time and cost constraints of construction contracts, particularly during the recent economic recession [NIOSH, 2012].

Our evaluation of the PE program's short term and intermediate impacts provided insight for interpreting the long term outcomes, even before injury risk factors and symptoms were analyzed post-intervention. Early findings from short term outcomes indicated that the workers benefitted from the program; more detailed analysis will evaluate longer term data and determine whether the delivered program was efficacious. In our program, workers learned from the training, identified work changes that would reduce risk for MSD, and attempted to take actions to reduce their risk of injury. If they are able to carry out these ergonomic solutions, we anticipate a measurable impact on some long term outcomes. Impacts on learning and actions are early indicators of efficacy based on the delivered program and, as mediators of the long term outcomes, will enable us to describe why the program did or did not reduce injury risk factors and MSD symptoms [Edberg, 2007].

Detailed knowledge of the delivered program makes it possible to interpret both negative and positive results more meaningfully. The typical evaluation model (Figure I) indicates that a program is not efficacious unless it can improve health outcomes. If health outcomes improve following our study, the improvement would result from a less intensive intervention than originally planned. Conversely, we would be at risk of committing Type III error if we concluded that the PE program was not efficacious based on health outcomes alone [Berthelette et al., 2012]. This case example demonstrates the value of describing the program plan, using a process evaluation to determine what was actually delivered, and interpreting both short and long term data based on the delivered program.

The most comprehensive way to evaluate this type of complex program (e.g. multiple intervention activities implemented among transient work groups in an ever-changing work environment) is to use multiple methods, an approach often underutilized in efficacy studies [Sandelowski, 2000]. Studying only quantitative results can produce incomplete data that are difficult to interpret without the context provided by qualitative data. Our study used mixed methods, fitting the complex nature of the work context and the interactive nature of the intervention [Glasgow et al., 2003].

Conclusion

Determining the efficacy of a PE intervention involves more than evaluating long term outcomes. Participatory ergonomics studies need to be reported with more details about the program plan, delivered program, and program evaluation to allow for interpretation of the outcomes and replication of interventions. In preparing for the diffusion of interventions in dynamically changing work environments, researchers must describe and measure their program implementation not only to determine efficacy and potential Type III error, but also to demonstrate how to make the program work within each environment's unique conditions.

ACKNOWLEDGEMENTS

This research was funded as part of a grant to CPWR—The Center for Construction Research and Training from the National Institute for Occupational Safety and Health / Centers for Disease Control (Grant# NIOSH U60 OH009762) and by the Washington University Institute of Clinical and Translational Sciences grant UL1 TR000448 from the National Center for Advancing Translational Sciences (NCATS) of the National Institutes of Health (NIH). We would like to thank the Carpenters’ District Council of Greater St. Louis and the contractors and floor layers who participated in this study.

Footnotes

Institution at which the work was performed: Washington University School of Medicine

Conflict of Interest Statement: None of the authors have conflicts of interest to report.

REFERENCES

- Behm M. Construction sector. J Saf Res. 2008;39(2):175–178. doi: 10.1016/j.jsr.2008.02.007.

- Berthelette D, Leduc N, Bilodeau H, Durand M-J, Faye C. Evaluation of the implementation fidelity of an ergonomic training program designed to prevent back pain. Appl Ergon. 2012;43(1):239–245. doi: 10.1016/j.apergo.2011.05.008.

- Borg G. Psychophysical scaling with applications in physical work and the perception of exertion. Scand J Work Environ Health. 1990;16(suppl. 1):55–58. doi: 10.5271/sjweh.1815.

- Campbell M, Fitzpatrick R, Haines A, Kinmonth AL, Sandercock P, Spiegelhalter D, Tyrer P. Framework for design and evaluation of complex interventions to improve health. BMJ. 2000;321(7262):694–696. doi: 10.1136/bmj.321.7262.694.

- Centers for Disease Control and Prevention. Average rate of occupational musculoskeletal disorders involving days away from work, Construction sectors, Private Industry, 2003–2007. [2013 Feb 16];National Institute for Occupational Safety and Health, Division of Surveillance, Hazard Evaluations & Field Studies. Worker Health eChartbook. 2009 Available from: http://wwwn.cdc.gov/niosh-survapps/echartbook/Chart.aspx?id=1310&cat=104.

- Centre of Research Expertise for the Prevention of Musculoskeletal Disorders (CRE-MSD) [2012 May 16];r2P (Research to practice) 2012 Available from: http://www.cre-msd.uwaterloo.ca/r2p_Research_to_Practice.aspx.

- Cole DC, Theberge N, Dixon SM, Rivilis I, Neumann WP, Wells R. Reflecting on a program of participatory ergonomics interventions: A multiple case study. Work. 2009;34(2):161–178. doi: 10.3233/WOR-2009-0914.

- Driessen MT, Proper KI, Anema JR, Bongers PM, van der Beek AJ. Process evaluation of a participatory ergonomics programme to prevent low back pain and neck pain among workers. Implement Sci. 2010;5:65. doi: 10.1186/1748-5908-5-65.

- Edberg M. Evaluation: What is it? Why is it needed? How does it relate to theory? In: Riegelman R, editor. Essentials of health behavior. Social and behavioral theory in public health. Jones and Bartlett Publishers, Inc.; Sudbury (MA): 2007. pp. 151–161.

- Evanoff BA, Bohr PC, Wolf LD. Effects of a participatory ergonomics team among hospital orderlies. Am J Ind Med. 1999;35(4):358–365. doi: 10.1002/(sici)1097-0274(199904)35:4<358::aid-ajim6>3.0.co;2-r.

- Gherardi S, Nicolini D. Learning the trade: A culture of safety in practice. Organization. 2002;9(2):191–223.

- Glasgow RE, Lichtenstein E, Marcus AC. Why don't we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health. 2003;93(8):1261–1267. doi: 10.2105/ajph.93.8.1261.

- Grembowski D. Evaluation of program implementation. In: Grembowski D, editor. The practice of health program evaluation. Sage Publications, Inc.; Thousand Oaks (CA): 2001. pp. 143–161.

- Hasle P, Kvorning LV, Rasmussen CDN, Smith LH, Flyvholm MA. A model for design of tailored working environment intervention programmes for small enterprises. Saf Health Work. 2012;3(3):181–191. doi: 10.5491/SHAW.2012.3.3.181.

- Hasson H. Systematic evaluation of implementation fidelity of complex interventions in health and social care. Implement Sci. 2010;5:67. doi: 10.1186/1748-5908-5-67.

- Helitzer D, Willging C, Hathorn G, Benally J. Using logic models in a community-based agricultural injury prevention project. Public Health Reports. 2009;124(Suppl 1):63–73. doi: 10.1177/00333549091244S108.

- Hengel KMO, Blatter BM, van der Molen HF, Joling CI, Proper KI, Bongers PM, van der Beek AJ. Meeting the challenges of implementing an intervention to promote work ability and health-related quality of life at construction worksites: A process evaluation. J Occup Environ Med. 2011;53(12):1483–1491. doi: 10.1097/JOM.0b013e3182398e03.

- Hess JA, Hecker S, Weinstein M, Lunger M. A participatory ergonomics intervention to reduce risk factors for low-back disorders in concrete laborers. Appl Ergon. 2004;35(5):427–441. doi: 10.1016/j.apergo.2004.04.003.

- Hulscher MEJL, Laurant MGH, Grol RPTM. Process evaluation on quality improvement interventions. Qual Saf Health Care. 2003;12(1):40–46. doi: 10.1136/qhc.12.1.40.

- Institut de recherche Robert-Sauvé en santé et en sécurité du travail (IRSST) [2012 May 16];Organizational priority: Knowledge translation and promotion of results. 2010 Available from: http://www.irsst.qc.ca/en/organizational-priority.html.

- Institute for Work & Health (IWH) [2012 May 16];Knowledge transfer and exchange. 2009 Available from: http://www.iwh.on.ca/knowledge-transfer-exchange.

- Institute of Medicine (IOM) and National Research Council (NRC). Evaluating occupational health and safety research programs: Framework and next steps. The National Academies Press; Washington, DC: 2009. p. 132.

- Jensen LK, Friche C. Implementation of new working methods in the floor-laying trade: Long-term effects on knee load and knee complaints. Am J Ind Med. 2010;53(6):615–627. doi: 10.1002/ajim.20808.

- Kramer D, Bigelow P, Vi P, Garritano E, Carlan N, Wells R. Spreading good ideas: A case study of the adoption of an innovation in the construction sector. Appl Ergon. 2009;40(5):826–832. doi: 10.1016/j.apergo.2008.09.006.

- Kristensen TS. Intervention studies in occupational epidemiology. Occup Environ Med. 2005;62(3):205–210. doi: 10.1136/oem.2004.016097.

- Linnan L, Steckler A. Process evaluation for public health interventions and research: An overview. In: Steckler A, Linnan L, editors. Process evaluation for public health interventions and research. Jossey-Bass; San Francisco (CA): 2002. pp. 1–23.

- Moir S, Buchholz B. Emerging participatory approaches to ergonomic interventions in the construction industry. Am J Ind Med. 1996;29(4):425–430. doi: 10.1002/(SICI)1097-0274(199604)29:4<425::AID-AJIM31>3.0.CO;2-1.

- National Institute for Occupational Safety and Health (NIOSH) [2012 May 16];R2p: Research to practice at NIOSH. 2011 Available from: http://www.cdc.gov/niosh/r2p/.

- National Institute for Occupational Safety and Health (NIOSH) [2012 Feb 27];NIOSH program portfolio. Construction, input: Economic factors. 2012 Available from: http://www.cdc.gov/niosh/programs/const/economics.html.

- Rinder MM, Genaidy A, Salem S, Shell R, Karwowski W. Interventions in the construction industry: A systematic review and critical appraisal. Hum Factor Ergon Man. 2008;18(2):212–229.

- Ringen K, Stafford EJ. Intervention research in occupational safety and health: Examples from construction. Am J Ind Med. 1996;29(4):314–320. doi: 10.1002/(SICI)1097-0274(199604)29:4<314::AID-AJIM7>3.0.CO;2-O.

- Rivilis I, Van Eerd D, Cullen K, Cole DC, Irvin E, Tyson J, Mahood Q. Effectiveness of participatory ergonomic interventions on health outcomes: A systematic review. Appl Ergon. 2008;39(3):342–358. doi: 10.1016/j.apergo.2007.08.006.

- Rogers PJ, Weiss CH. Theory-Based Evaluation: Reflections Ten Years On: Theory-based evaluation: Past, present, and future. New Dir Eval. 2007;2007(114):63–81.

- Roquelaure Y. Workplace intervention and musculoskeletal disorders: The need to develop research on implementation strategy. Occup Environ Med. 2008;65(1):4–5. doi: 10.1136/oem.2007.034900.

- Sandelowski M. Combining qualitative and quantitative sampling, data collection, and analysis techniques in mixed-method studies. Res Nurs Health. 2000;23(3):246–255. doi: 10.1002/1098-240x(200006)23:3<246::aid-nur9>3.0.co;2-h.

- Saunders RP, Evans MH, Joshi P. Developing a process-evaluation plan for assessing health promotion program implementation: A how-to guide. Health Promot Pract. 2005;6(2):134–147. doi: 10.1177/1524839904273387.

- Scharf T, Vaught C, Kidd P, Steiner L, Kowalski K, Wiehagen B, Rethi L, Cole H. Toward a typology of dynamic and hazardous work environments. Hum Ecol Risk Assess. 2001;7(7):1827–1841.

- Spector JT, Adams D, Silverstein B. Burden of work-related knee disorders in Washington state, 1999 to 2007. J Occup Environ Med. 2011;53(5):537–547. doi: 10.1097/JOM.0b013e31821576ff.

- The Center for Construction Research and Training (CPWR) [2012 May 16];Research to practice: Bridging the gap between research and practice. 2009 Available from: http://www.cpwr.com/r2p/index.php.

- van der Molen HF, Sluiter JK, Hulshof CTJ, Vink P, van Duivenbooden C, Frings-Dresen MHW. Conceptual framework for the implementation of interventions in the construction industry. Scand J Work Environ Health. 2005a;31(suppl. 2):96–103.

- van der Molen HF, Sluiter JK, Hulshof CT, Vink P, van Duivenbooden C, Holman R, Frings-Dresen MH. Implementation of participatory ergonomics intervention in construction companies. Scand J Work Environ Health. 2005b;31(3):191–204. doi: 10.5271/sjweh.869.

- van Eerd D, Cole D, Irvin E, Mahood Q, Keown K, Theberge N, Village J, St. Vincent M, Cullen K. Process and implementation of participatory ergonomic interventions: A systematic review. Ergonomics. 2010;53(10):1153–1166. doi: 10.1080/00140139.2010.513452.

- Village J, Ostry A. Assessing attitudes, beliefs and readiness for musculoskeletal injury prevention in the construction industry. Appl Ergon. 2010;41(6):771–778. doi: 10.1016/j.apergo.2010.01.003.

- Weiss C. Theory-based evaluation: past, present, and future. New Dir Eval. 1997;1997(76):68–81.

- Wells R, Laing A, Cole D. Characterising the intensity of changes made to reduce mechanical exposure. Work. 2009;34(2):179–193. doi: 10.3233/WOR-2009-0915.

- Wijk K, Mathiassen SE. Explicit and implicit theories of change when designing and implementing preventive ergonomics interventions - a systematic literature review. Scand J Work Environ Health. 2011;37(5):363–375. doi: 10.5271/sjweh.3159.

- W.K. Kellogg Foundation. Using logic models to bring together planning, evaluation, and action: Logic model development guide [Internet] W.K. Kellogg Foundation; Battle Creek (MI): 2004. 1998. [2012 Nov 5]. Available from: http://www.wkkf.org/knowledge-center/resources/2006/02/WK-Kellogg-Foundation-Logic-Model-Development-Guide.aspx.

- Wojcik S, Kidd PS, Parshall MB, Struttmann TW. Performance and evaluation of small construction safety training simulations. Occup Med. 2003;53(4):279–286. doi: 10.1093/occmed/kqg068.