Abstract

Concepts develop for many aspects of experience, including abstract internal states and abstract social activities that do not refer to concrete entities in the world. The current study assessed the hypothesis that, like concrete concepts, distributed neural patterns of relevant, non-linguistic semantic content represent the meanings of abstract concepts. In a novel neuroimaging paradigm, participants processed two abstract concepts (convince, arithmetic) and two concrete concepts (rolling, red) deeply and repeatedly during a concept-scene matching task that grounded each concept in typical contexts. Using a catch trial design, neural activity associated with each concept word was separated from neural activity associated with subsequent visual scenes to assess activations underlying the detailed semantics of each concept. We predicted that brain regions underlying mentalizing and social cognition (e.g., medial prefrontal cortex, superior temporal sulcus) would become active to represent semantic content central to convince, whereas brain regions underlying numerical cognition (e.g., bilateral intraparietal sulcus) would become active to represent semantic content central to arithmetic. The results supported these predictions, suggesting that the meanings of abstract concepts arise from distributed neural systems that represent concept-specific content.

Introduction

Conceptual knowledge underlies the interpretation of experience and guides action in the world (Barsalou, 1999, 2003a, 2003b, 2008a, 2009). Investigations of how concepts develop and operate have largely focused on concrete entities that can be perceived directly: objects, tools, buildings, animals, foods, musical instruments, and so forth (Medin, Lynch, & Solomon, 2000; Murphy, 2002). Despite their prevalence and importance, little is known about how abstract concepts such as cognitive processes (e.g., focus, rumination), emotions (e.g., exuberance, dread), social activities (e.g., party, gossip), and many others are represented and used to interpret experience.

Abstract concepts are most often discussed in terms of their general differences from concrete concepts. Classic theories of knowledge representation present dichotomies in which abstract concepts are primarily represented by linguistic or other amodal symbols, and concrete concepts are primarily represented by perceptually grounded or derived symbols (cf. Paivio, 1986, 1991; Schwanenflugel, 1991). These theories re-emerged when neuroimaging methodologies developed that could test their hypotheses. If abstract concepts are represented through associations with other words, brain regions central to language should be more active for abstract than for concrete concepts. In support of this prediction, a recent meta-analysis of 19 neuroimaging studies that compared the processing of abstract and concrete concepts during a wide variety of tasks found that left inferior frontal, superior temporal, and middle temporal cortices were consistently more active for abstract than for concrete concepts across studies (Wang, Conder, Blitzer, & Shinkareva, 2010). Conversely, left posterior cingulate, precuneus, fusiform gyrus, and parahippocampal gyrus were consistently more active for concrete concepts. The conclusion drawn was that abstract concepts rely on verbal systems, whereas concrete concepts rely on perceptual systems supporting mental imagery, consistent with the conclusions drawn in many of the individual studies reviewed (e.g., Fiebach & Friederici, 2004; Noppeney & Price, 2004; Sabsevitz, Medler, Seidenberg, & Binder, 2005).

The meta-analysis just described identified a relatively small number of brain areas that become active to process common elements of many diverse concepts classified as abstract or as concrete. Research on concrete concept types (e.g., living things, tools, foods, musical instruments) increasingly suggests, however, that activations aggregating across diverse concepts are only part of the distributed neural patterns that represent the sensory-motor and affective content<sup>1</sup> underlying the meaning of each individual concept (for reviews see Barsalou, 2008; Binder, Desai, Graves, & Conant, 2009; Martin, 2001, 2007). Idiosyncratic content central for representing individual concepts is lost when many diverse concepts compose one “concrete concept” condition. From this perspective, for example, the concept pear<sup>2</sup> would be represented by distributed neural patterns reflecting visual content for shape and color, gustatory content for sweetness, tactile content for juiciness, affective content for pleasure, and so forth. Whereas the focal content for pear may be relatively visual and gustatory in nature, the focal content for violin may be relatively auditory and tactile (Cree & McRae, 2003). Furthermore, pear is not represented in a vacuum, but is instead associated with various contexts (e.g., grocery store, kitchen) that form unified, situated conceptualizations (for related reviews see Bar, 2004; Barsalou, 2008b; Yeh & Barsalou, 2006). Collapsing across the activation patterns that underlie pear and violin to examine concrete concepts would only reveal shared content, obscuring much unique content, such as gustatory information relevant to the meaning of pear and auditory information relevant to the meaning of violin.

In a similar manner, theories of grounded cognition suggest that individual abstract concepts are represented by distributed neural patterns that reflect their unique content, which is often more situationally complex and temporally extended than that of concrete concepts (Barsalou, 1999, 2003b; Barsalou & Wiemer-Hastings, 2005; Wilson-Mendenhall, Barrett, Simmons, & Barsalou, 2011). According to this view, abstract concepts are represented by situated conceptualizations that develop as the abstract concept is used to capture elements of a dynamic situation. For example, situated conceptualizations for the abstract concept convince develop to represent events in which one agent is interacting with another person in an effort to change their mental state. Any number of situated conceptualizations may develop to represent convince in different contexts (e.g., to convince one’s spouse to rub one’s feet, to convince another of one’s political views). Thus the lexical representation for “convince” is associated with much non-linguistic semantic content that supports meaningful understanding of the concept, including the intentions, beliefs, internal states, affect, and actions of self and others that unfold in a spatiotemporal context. Consistent with this approach, neuroimaging evidence suggests that abstract social concepts (e.g., personality traits) are grounded in non-linguistic brain areas that process social and affective content relevant to social perception and interaction (e.g., medial prefrontal cortex, superior temporal sulcus, temporal poles) (Contreras, Banaji, & Mitchell, 2011; Martin & Weisberg, 2003; Mason, Banfield, & Macrae, 2004; Mitchell, Heatherton, & Macrae, 2002; Ross & Olson, 2010; Simmons, Reddish, Bellgowan, & Martin, 2010; Wilson-Mendenhall et al., 2011; Zahn et al., 2007). It remains unclear, however, whether various other kinds of abstract concepts are analogously represented by distributed, non-linguistic content that is specific and relevant to their meanings.

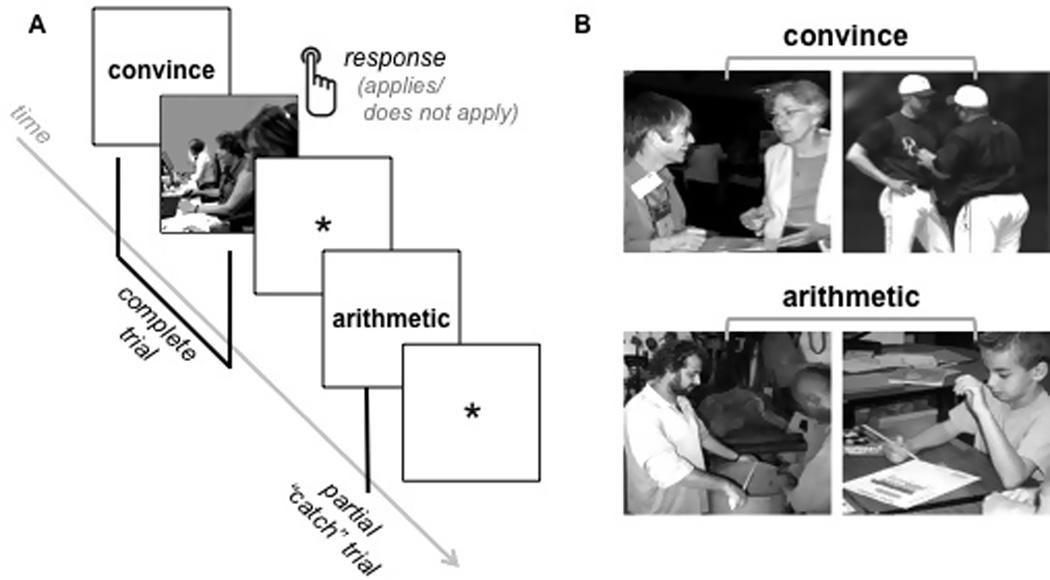

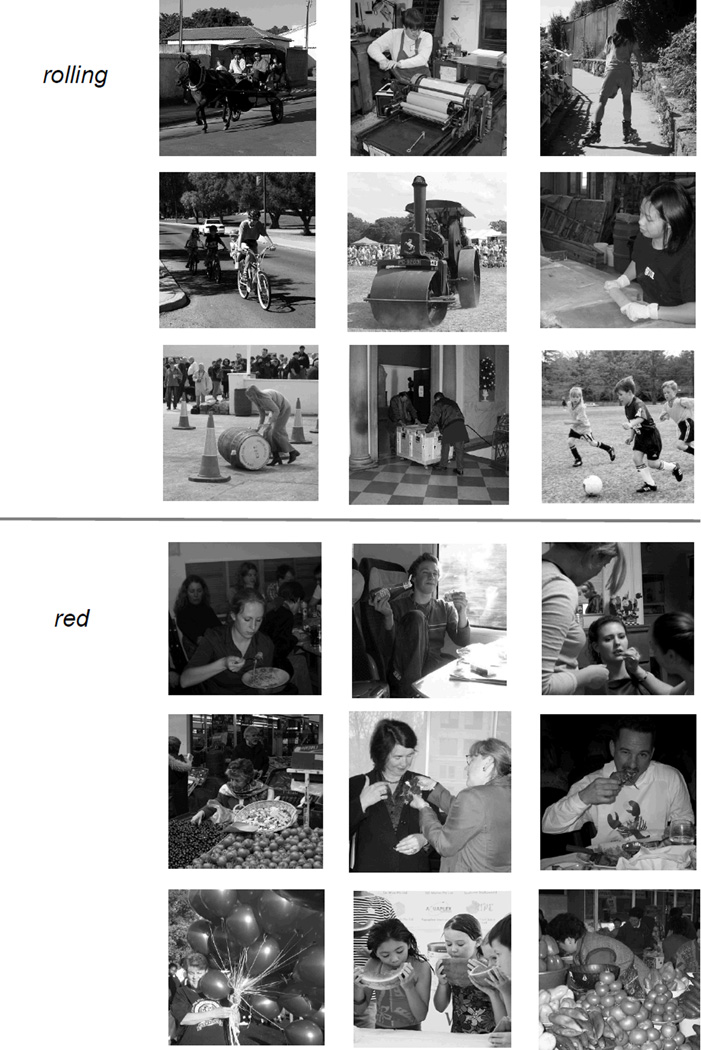

An empirical obstacle in studying abstract concepts is that people find it especially difficult to process the meaning of an isolated abstract concept when it is divorced from its typically rich context (Schwanenflugel, 1991). Moreover, existing neuroimaging paradigms often assess semantic knowledge with word tasks (e.g., lexical decision, synonym matching) that elicit fast conceptual processing (e.g., negative valence; Kousta et al., 2011; Estes & Adelman, 2008), but that typically do not require deeper processing because superficial linguistic strategies are sufficient to complete the task (Barsalou, Santos, Simmons, & Wilson, 2008; Kan, Barsalou, Solomon, Minor, & Thompson-Schill, 2003; Sabsevitz et al., 2005; Simmons, Hamann, Harenski, Hu, & Barsalou, 2008). To address these methodological challenges, and to investigate whether the meanings of abstract concepts are grounded in neural patterns reflecting relevant semantic content, we developed a new paradigm for studying them. During the concept-scene trials illustrated in Figure 1, the word for one of four concepts, two abstract (convince, arithmetic) and two concrete (rolling, red), was presented for several seconds prior to a visual scene that appeared briefly on most trials. The participants’ task was to think deeply about the meaning of the concept so they could quickly determine if it applied to the subsequent scene. This match/mismatch task elicited deep processing of the concepts (i.e., participants could not use simple word association strategies) and provided visual contexts in which to ground them. The four concepts were presented repeatedly with various matching and mismatching visual scenes in concept-scene trials, which were randomly intermixed with one other trial type. During unpredictable “catch” trials that accounted for a third of all trials, a visual scene did not follow the concept (see Figure 1). 
These critical catch trials allowed separation of neural activity during the concept period from neural activity during the scene period that often followed. With this design, it was possible to isolate the neural activity underlying each concept’s (deeply processed) semantics that occurred before the scene appeared.

Figure 1.

A illustrates the randomly intermixed events that occurred in the concept-scene match task: complete concept-scene trials, partial “catch” concept-only trials, and baseline fixation. Concrete concept (rolling, red) complete and partial trials were also randomly intermixed with the abstract concept trials (although not shown here). B provides a few examples of the scenes selected to match the abstract concepts convince and arithmetic.

We chose to investigate only four concepts – convince, arithmetic, rolling, and red – because specific predictions could be made about their content. Unlike previous studies that assessed many diverse concepts in an “abstract” condition, our design repeated only two abstract concepts. This design choice allowed us to identify the detailed content specific to each abstract concept that aggregated across trials and scene contexts, while avoiding the loss of such content that results from averaging many concepts. In other words, repeated trials of the same concept made up specific concept conditions as opposed to many different concepts making up an abstract concept condition. We predicted that brain regions underlying mental state inference, social interaction, and affective processing would represent the meaning of convince, whereas brain regions underlying number and mathematical processing would represent the meaning of arithmetic. Because previous research has established neural profiles for color and motion concepts (e.g., Simmons et al., 2007), we used the concrete concepts red and rolling to verify that these same brain regions were active in this new paradigm. We also included these concrete concepts so that we could examine the standard abstract (convince + arithmetic) versus concrete (rolling + red) contrast assessed in previous research.

To provide a strong test of these predictions, participants also performed four functional localizer tasks during the final scans of the experiment (one related to each concept’s content). To localize the regions associated with mentalizing and social cognition (e.g., dmPFC, posterior STS/TPJ), participants thought about what people in a visual scene might be thinking (for reviews and meta-analyses see Adolphs, 2009; Amodio & Frith, 2006; Kober et al., 2008; Van Overwalle, 2009). To localize regions involved in number and mathematical processing (e.g., bilateral IPS), participants counted the number of individual entities in a scene (for reviews and meta-analyses see Cantlon, Platt, & Brannon, 2009; Dehaene, Piazza, Pinel, & Cohen, 2003; Nieder & Dehaene, 2009). We computed the contrast of these independent localizer tasks (thought vs. count) to identify unique regions of interest (ROIs) in which to compare the activations for convince and arithmetic. Within localizer regions more active during mentalizing and social cognition, we tested the prediction that neural activity would be reliably greater for convince than for arithmetic. Within localizer regions more active during numerical cognition, we tested the prediction that neural activity would be reliably greater for arithmetic than for convince. Localizers for motion and color were analogously used to establish brain areas predicted to be relevant for the concrete concepts rolling (e.g., middle temporal gyrus) and red (e.g., fusiform gyrus) (for reviews of related research see Martin, 2001, 2007).

Methods

Design & Participants

The fMRI experiment consisted of six matching task runs, and two subsequent functional localizer runs. A fast event-related fMRI design, optimized to examine the two abstract concept conditions (convince, arithmetic) and the two concrete concept conditions (rolling, red), was developed for the concept-scene matching task. Two trial types existed in the four concept conditions. In 144 complete concept-scene trials, participants deeply processed one of the four concepts to subsequently judge whether it applied or did not apply to a visual scene. In 72 partial “catch” trials, participants deeply processed one of the concepts with no scene following. Partial trials were included so that the BOLD signal for concept events could be mathematically separated from signal for subsequent scene events (Ollinger, Corbetta, & Shulman, 2001; Ollinger, Shulman, & Corbetta, 2001). Separation of these events was critical for examining the concept events apart from the scene events that followed on many trials. The BOLD activations reported here are for the concept events (not for scene events). As necessary for this type of catch trial design, partial trials were unpredictable and accounted for a third of all trials.

In each run, six complete trials and three partial trials were presented for each concept amidst jittered visual fixation periods<sup>3</sup> (ranging from 2.5 to 12.5 s in increments of 2.5 s; average ISI = 5 s) in a pseudo-random order optimized for deconvolution using optseq2 software. The 36 visual scenes used in the experiment were randomly assigned to the 36 complete trials in each concept condition. The 36-scene set consisted of 9 scenes selected to match each concept (see Materials), such that the concept applied to the scene on 25% of the complete trials. All scenes were presented with each concept so that the visual information following each concept was held constant across concepts. As a result, each scene repeated four times across the six runs (but never within a single run). Finally, six random orders of the six runs were created to control for run-order effects, with participants being randomly assigned to the six versions.
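The trial counts in this design follow directly from the per-run numbers given above. As a quick check, the arithmetic can be sketched as follows; the variable names are illustrative and not taken from the study's materials.

```python
# Design parameters stated in the text
N_RUNS = 6
CONCEPTS = ["convince", "arithmetic", "rolling", "red"]
COMPLETE_PER_CONCEPT_PER_RUN = 6
PARTIAL_PER_CONCEPT_PER_RUN = 3
SCENES_MATCHING_EACH_CONCEPT = 9

# Totals across the whole matching task
complete_trials = N_RUNS * len(CONCEPTS) * COMPLETE_PER_CONCEPT_PER_RUN
partial_trials = N_RUNS * len(CONCEPTS) * PARTIAL_PER_CONCEPT_PER_RUN
total_scenes = SCENES_MATCHING_EACH_CONCEPT * len(CONCEPTS)

# Derived quantities reported in the Methods
complete_per_concept = complete_trials // len(CONCEPTS)
catch_fraction = partial_trials / (complete_trials + partial_trials)
match_fraction = SCENES_MATCHING_EACH_CONCEPT / total_scenes
scene_repetitions = complete_trials // total_scenes

print(complete_trials, partial_trials)       # 144 72
print(complete_per_concept, total_scenes)    # 36 36
print(match_fraction, scene_repetitions)     # 0.25 4
```

The catch fraction works out to 72 of 216 trials, which is the one third described in the text.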

An independent functional localizer followed the critical matching task runs. The localizer was implemented in a blocked design that cycled through four randomly ordered task blocks (thought, count, motion, color) three times within each of two runs. The sequence of the three cycles within a run was rotated to counterbalance for order (i.e., the last cycle became the first cycle in each alternate version), creating three versions to which participants were randomly assigned. In each task block, participants viewed a cue and then performed the corresponding task on three visual scenes that were randomly assigned to the block online. Fixation blocks following each task block allowed the hemodynamic response to return to baseline.

Thirteen right-handed, consenting native English speakers from the Emory community, ranging in age from 18–24 (7 female), participated in the experiment. Three additional participants were dropped due to excessive head motion in the scanner. Participants had no history of psychiatric illness and were not taking psychotropic medication. All participants received $50 in compensation.

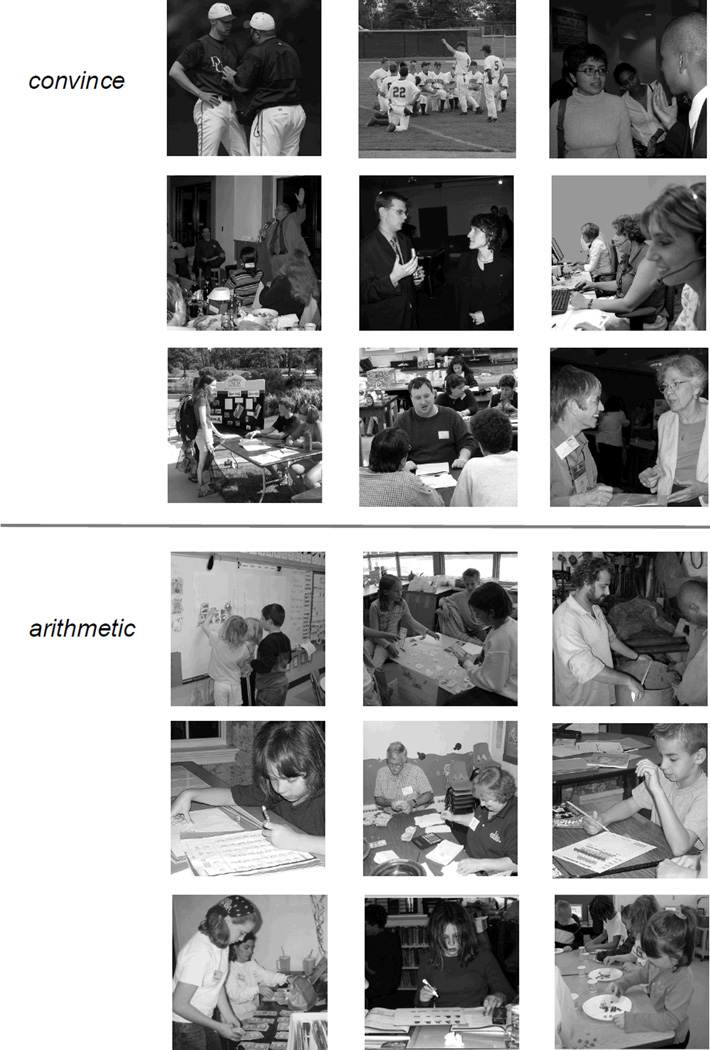

Materials

For the concept-scene matching task, 36 photographic images (600 × 600 pixels) of everyday scenes, all including people, were sampled from the Internet and then cropped, resized, and converted to grayscale bitmaps (see Appendix). Grayscale images were used so that performing the match task for red would require conceptual knowledge. Nine scenes that best applied to one of the four concepts (but not the other three) were selected based on ratings from a separate set of participants. This separate sample of 30 participants rated 26–31 scenes selected by the experimenters to apply to each concept (convince, arithmetic, rolling, red) for visual complexity (7-point Likert scale; 1 = Not Complex, 4 = Somewhat Complex, 7 = Very Complex) and for how easy it was to apply each of the four concepts to the scene (7-point Likert scale; 1 = Not Easy, 4 = Somewhat Easy, 7 = Very Easy). The nine scenes selected for a given concept (e.g., arithmetic) were rated as significantly easier to apply to that concept (e.g., arithmetic) than to the other concepts (e.g., convince, rolling, red; all pair-wise t-tests p < .001).<sup>4</sup> Furthermore, the four groups of scenes selected to apply to each concept did not significantly differ in visual complexity (one-way ANOVA; F(3,32) = .126, p = .944).

For the functional localizer, seventy-two grayscale photographic bitmap images (600 × 600 pixels) of everyday scenes (e.g., people flying kites, playing golf, sweeping the floor) were collected and edited in the manner described above. Every scene included multiple objects and entities, at least one person, and at least one object in motion so that participants could perform the localizer tasks described in more detail later.

Procedure

Participants were introduced to the concept-scene matching task during a short practice session before the imaging session. The researcher instructed participants to think deeply about the concept’s meaning for the entire 5 s that the concept word appeared on the screen. The researcher further explained that on some trials a visual scene would appear for 2.5 s when the concept word disappeared, and that the participant’s task was to press one of two buttons to indicate if the concept applied or did not apply to the scene. Because the scene would only appear briefly, deeply processing the meaning of the word was necessary to respond as quickly as possible. The researcher also explained that a fixation star would appear after some trials, indicating a rest period with no task (varying in duration). Participants practiced the task in a training run, with different concepts and scenes than would appear in the critical scanner runs.

Participants also practiced the functional localizer tasks prior to scanning. During each localizer block, a 2.5 s cue indicated which task to perform on the three visual scenes that appeared sequentially for 5 s each (total block duration 17.5 s). The “thoughts” cue indicated that the participant should infer the thoughts of people in the scene, the “count” cue indicated the participant should count the number of independent entities in the scene, the “motion” cue indicated the participant should infer the direction of motion for a scene object, and the “color” cue indicated the participant should infer the colors of objects in the (grayscale) scene. The 12.5 s fixation after each task block signaled a rest period.
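The localizer timing can be checked against the acquisition parameters with a few lines of arithmetic. The sketch below assumes that a localizer run contains nothing other than the task and fixation blocks described here (no additional lead-in or lead-out period is mentioned in the text), and it uses the 2.5 s TR reported under Imaging and Analysis.

```python
CUE_S = 2.5            # task cue duration (s)
SCENE_S = 5.0          # duration of each scene (s)
SCENES_PER_BLOCK = 3
FIXATION_S = 12.5      # rest period after each task block (s)
TASKS = ["thought", "count", "motion", "color"]
CYCLES_PER_RUN = 3
LOCALIZER_RUNS = 2
TR_S = 2.5             # repetition time (s)

block_s = CUE_S + SCENES_PER_BLOCK * SCENE_S        # 17.5 s per task block
cycle_s = len(TASKS) * (block_s + FIXATION_S)       # 120.0 s per cycle
run_s = CYCLES_PER_RUN * cycle_s                    # 360.0 s per run
volumes_per_run = run_s / TR_S                      # 144.0 volumes
scenes_needed = (LOCALIZER_RUNS * CYCLES_PER_RUN
                 * len(TASKS) * SCENES_PER_BLOCK)   # 72 scenes

print(block_s, cycle_s, run_s, volumes_per_run, scenes_needed)
```

Under these assumptions the numbers agree with the 17.5 s block duration, the 6-min runs of 144 volumes, and the seventy-two localizer scenes described in the Methods.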

Imaging and Analysis

Images were collected at the Emory Biomedical Imaging Technology Center on a 3T Siemens Trio scanner and preprocessed using standard methods in AFNI (Cox, 1996). In each 6-min functional run, 144 T2*-weighted echo planar MR volumes depicting BOLD contrast were acquired using a 4-channel head coil, with 33 axial slices (3 mm thick) per volume (TR = 2500 ms, TE = 35 ms, FOV = 192 mm, 64 × 64 matrix, voxel size = 3 × 3 × 3 mm). High-resolution T1-weighted MPRAGE volumes were also acquired during an initial 8-min anatomical scan (176 sagittal slices, 1 mm thick, voxel size = 1 × 1 × 1 mm, TE = 3.9 ms, TR = 2600 ms). Participants viewed stimuli through a mirror mounted on the head coil, which reflected a screen positioned at the head of the scanner. Stimuli were projected onto this screen and responses were collected using E-Prime software (Schneider, Eschman, & Zuccolotto, 2002).

Initial preprocessing steps included slice time correction and motion correction in which all volumes were aligned to the 20th volume in the first run. This base volume was acquired close in time to the collection of the anatomical dataset, facilitating later alignment of functional and anatomical data. The functional data were then smoothed using an isotropic 6 mm full-width half-maximum Gaussian kernel. Voxels outside the brain were removed from further analysis, as were high-variability low-intensity voxels likely to be shifting in and out of the brain due to minor head motion. Finally, the signal intensities in each volume were divided by the mean signal value for the respective run and multiplied by 100 to produce percent signal change from the run mean. All later regression analyses performed on these percent signal change data included the six motion regressors obtained from volume registration to remove any residual signal changes correlated with movement (translation in the X, Y, and Z planes; rotation around the X, Y, and Z axes), and a 3rd order baseline polynomial function to remove scanner drift.
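The scaling step described above can be illustrated with a minimal sketch. It assumes the run mean is computed separately for each voxel's time series, which is the usual convention but is not stated explicitly in the text; the function name and the example values are hypothetical.

```python
def percent_signal_change(run_timeseries):
    """Express one voxel's run as a percentage of that run's mean signal.

    Each value is divided by the run mean and multiplied by 100, so the
    scaled series fluctuates around 100.
    """
    run_mean = sum(run_timeseries) / len(run_timeseries)
    return [100.0 * value / run_mean for value in run_timeseries]


# Hypothetical raw intensities for one voxel across three volumes
raw = [990.0, 1000.0, 1010.0]
scaled = percent_signal_change(raw)
print(scaled)
```

After this scaling, a regression beta of 1 corresponds to a change of 1% of the run mean, which makes betas comparable across voxels and participants.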

The anatomical data were aligned to the same functional base volume used during motion correction to align the functional volumes. In preparation for later group analyses, the anatomical dataset was warped to Talairach space by a transformation in which the anterior commissure (AC) and posterior commissure (PC) were aligned to become the y-axis, and scaled to the Talairach-Tournoux Atlas brain size. The transformation matrix generated to warp the anatomical dataset was used in later steps to warp the functional data just prior to group analysis.

Functional Localizer Analyses

Individual regression analyses and subsequent group-level random effects contrasts were first computed on the functional localizer percent signal change data, establishing regions of interest associated with the four localizer tasks: thought, count, motion, color. A regression analysis was performed on each participant’s preprocessed signal change data using canonical gamma functions to model the hemodynamic response. The onset times of the four conditions corresponding to the thought, count, motion, and color task blocks were specified, with the 17.5 s block duration modeled as a boxcar convolved with the canonical gamma function.

Each participant’s condition betas from the regression were then warped to Talairach space and entered into a second-level random effects ANOVA. At the group level, four whole-brain contrasts were computed to define regions of interest for the concept-scene matching task data: 1) thought > count, 2) count > thought, 3) motion > color, and 4) color > motion. A voxel-wise threshold of p < .0001 was used in conjunction with a 189 mm³ extent threshold to produce an overall corrected threshold of p < .05. Extent thresholds were calculated with AFNI AlphaSim, which runs Monte Carlo simulations to estimate the minimum cluster size needed to keep the probability of false-positive clusters below the desired corrected level at a given voxel-wise threshold. The results for the color > motion contrast were slightly less robust, so a voxel-wise threshold of p < .001 was used for this contrast with a larger extent threshold of 486 mm³, which together again yielded an overall corrected threshold of p < .05.
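For readers who think of cluster extents in voxels rather than cubic millimeters, the conversion is a single division. The sketch below assumes that the group-level data retained the 3 × 3 × 3 mm acquisition voxel size after warping to Talairach space; the text does not state the resampled resolution, so the voxel counts are illustrative.

```python
# Assumed voxel dimensions at the group level (mm); not stated in the text
VOXEL_DIMS_MM = (3.0, 3.0, 3.0)
voxel_volume_mm3 = VOXEL_DIMS_MM[0] * VOXEL_DIMS_MM[1] * VOXEL_DIMS_MM[2]


def extent_in_voxels(extent_mm3):
    """Convert a cluster extent threshold from mm^3 to a voxel count."""
    return extent_mm3 / voxel_volume_mm3


print(extent_in_voxels(189))  # 7.0 voxels at voxel-wise p < .0001
print(extent_in_voxels(486))  # 18.0 voxels at voxel-wise p < .001
```

Both extent thresholds divide evenly by 27 mm³, which is consistent with (though does not prove) a 3 mm isotropic analysis grid.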

Concept-Scene Matching Task Analyses

In the regression analyses for each individual, the onset times for five conditions were specified: the four concept conditions (convince, arithmetic, rolling, red) and a scene condition that included the scenes following all concepts during complete trials. The scene condition was modeled using a canonical gamma function, and the four concept conditions were modeled as 5 s boxcar functions convolved with a gamma function. Concept events from complete trials (i.e., those followed by a scene) and concept events from partial trials were specified within a single concept condition (e.g., all convince events from both the complete and the partial trials as the convince condition). Specifying concept events in complete and partial trials as a single condition allowed each concept condition to be modeled separately from the scene condition. Prior to group-level analyses, each individual’s resulting condition betas from the regression analysis were warped to Talairach space.
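To make the modeling step concrete, the following sketch builds one concept regressor and the scene regressor for a handful of trials. It is an illustration under stated assumptions, not the AFNI implementation used in the study: the gamma parameters (b = 8.6, c = 0.547) are assumed because the text does not report them, the trial onsets are invented, and the names `gamma_hrf` and `regressor` are hypothetical.

```python
import math

TR = 2.5                 # seconds per volume (from the acquisition parameters)
CONCEPT_DURATION = 5.0   # seconds the concept word stayed on screen


def gamma_hrf(t, b=8.6, c=0.547):
    """Unit-peak gamma variate; b and c are assumed, not reported in the text."""
    if t <= 0:
        return 0.0
    return (t / (b * c)) ** b * math.exp(b - t / c)


def regressor(onsets, duration, n_vols, dt=0.1):
    """Predicted response at each volume for events with the given onsets.

    duration > 0: boxcar convolved with the HRF (midpoint-rule integration).
    duration == 0: the HRF itself placed at each onset.
    """
    n_steps = int(round(duration / dt))
    values = []
    for vol in range(n_vols):
        t = vol * TR
        total = 0.0
        for onset in onsets:
            if n_steps == 0:
                total += gamma_hrf(t - onset)
            else:
                total += dt * sum(
                    gamma_hrf(t - onset - (k + 0.5) * dt) for k in range(n_steps)
                )
        values.append(total)
    return values


# Invented trial list: (concept, concept onset in s, scene follows?)
trials = [
    ("convince", 0.0, True),
    ("red", 20.0, True),
    ("convince", 40.0, False),   # partial ("catch") trial: no scene
    ("arithmetic", 60.0, True),
]
N_VOLS = 36

# Concept events from complete AND partial trials form a single condition
convince_onsets = [on for name, on, _ in trials if name == "convince"]
# Scenes from all concepts are pooled into one scene condition
scene_onsets = [on + CONCEPT_DURATION for _, on, has_scene in trials if has_scene]

convince_reg = regressor(convince_onsets, CONCEPT_DURATION, N_VOLS)
scene_reg = regressor(scene_onsets, 0.0, N_VOLS)
```

Because the catch trial at 40 s contributes to the convince regressor but not to the scene regressor, the two columns of the design matrix are not simply time-shifted copies of each other, which is what allows the regression to estimate them separately.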

ROI analyses

At the group level, analyses of the concept conditions (i.e., neural activity during the concept phase, not the subsequent scene phase of the match task) were conducted in localizer regions of interest first. To test the key a priori hypotheses, the mean betas for the convince and arithmetic conditions were extracted across voxels in the thought and count localizer ROIs for each individual. A one-tailed t-test was then computed for each thought localizer ROI to determine if the mean beta across individuals for convince was significantly greater than the mean beta for arithmetic. A one-tailed t-test was analogously computed in each count localizer ROI to determine if the mean beta for arithmetic was significantly greater than the mean beta for convince. Because these tests were based on a priori predictions and because ROIs were defined by a separate dataset, the conventional criterion of p < .05 was adopted. As can be seen in Tables 1 and 2, many regions of interest were significant at more stringent levels of p < .01 and p < .005.

Table 1.

ROI analyses of the abstract concepts in regions defined by the independent thought and count localizers.

The first seven columns describe the regions of interest defined by the independent functional localizer task; the last three columns give the concept ROI analysis (match paradigm).

| Localizer contrast | Brain region | Volume (mm³) | Peak voxel x | Peak voxel y | Peak voxel z | Mean t | Concept contrast | t | Sig. |
|---|---|---|---|---|---|---|---|---|---|
| thought > count | L Anterior Temp/OFC/Hipp | 7011 | −47 | −15 | −4 | 7.00 | convince > arithmetic | 3.72 | *** |
| thought > count | L STS | 3387 | −55 | −58 | 14 | 6.63 | convince > arithmetic | 4.91 | **** |
| thought > count | R STS | 2498 | 47 | −59 | 9 | 6.92 | convince > arithmetic | 2.70 | ** |
| thought > count | Posterior Cingulate | 2250 | −2 | −51 | 35 | 6.86 | convince > arithmetic | 3.81 | *** |
| thought > count | dmPFC | 2212 | −9 | 48 | 41 | 6.42 | convince > arithmetic | 3.22 | *** |
| thought > count | SMA | 1594 | −3 | 3 | 64 | 6.96 | convince > arithmetic | 1.88 | * |
| thought > count | Medial OFC | 710 | 0 | 48 | −7 | 6.69 | convince > arithmetic | 3.68 | *** |
| thought > count | R Anterior STS | 698 | 56 | −4 | −9 | 6.87 | convince > arithmetic | 2.29 | * |
| thought > count | dmPFC | 566 | −8 | 35 | 50 | 6.95 | convince > arithmetic | 2.23 | * |
| thought > count | R Inferior Frontal g | 340 | 55 | 26 | 10 | 7.14 | convince > arithmetic | 3.20 | *** |
| thought > count | L Amygdala | 272 | −24 | −8 | −11 | 6.52 | convince > arithmetic | 0.54 | n.s. |
| thought > count | dmPFC | 272 | 7 | 44 | 41 | 7.61 | convince > arithmetic | −0.30 | n.s. |
| thought > count | R Temporal Pole | 267 | 47 | 21 | −18 | 6.12 | convince > arithmetic | 1.71 | n.s. (.06) |
| count > thought | L IPS/Superior Parietal | 3190 | −31 | −47 | 37 | 6.22 | arithmetic > convince | 3.08 | ** |
| count > thought | R Middle Frontal g (dlPFC) | 1473 | 34 | 34 | 35 | 6.42 | arithmetic > convince | 1.81 | * |
| count > thought | R IPS | 1324 | 42 | −44 | 51 | 6.24 | arithmetic > convince | 3.06 | ** |
| count > thought | R Superior Parietal | 1194 | 15 | −64 | 43 | 6.53 | arithmetic > convince | 1.46 | n.s. (.09) |

Note. \* p < .05; \*\* p < .01; \*\*\* p < .005; \*\*\*\* p < .001. L is left, R is right, g is gyrus, OFC is orbitofrontal cortex, dmPFC and dlPFC are dorsomedial and dorsolateral prefrontal cortex, Hipp is hippocampus, SMA is supplementary motor area, IPS is intraparietal sulcus, Temp is temporal, STS is superior temporal sulcus.

Table 2.

ROI analyses of the concrete concepts in regions defined by the independent motion and color localizers.

The first seven columns describe the regions of interest defined by the independent functional localizer task; the last three columns give the concept ROI analysis (match paradigm).

| Localizer contrast | Brain region | Volume (mm³) | Peak voxel x | Peak voxel y | Peak voxel z | Mean t | Concept contrast | t | Sig. |
|---|---|---|---|---|---|---|---|---|---|
| motion > color | L MTG/STS | 1176 | −54 | −60 | 8 | 6.48 | rolling > red | 4.76 | **** |
| motion > color | R MTG/STS | 1088 | 45 | −41 | 9 | 6.22 | rolling > red | 1.67 | n.s. (.06) |
| motion > color | L Supramarginal g | 1034 | −54 | −38 | 31 | 6.50 | rolling > red | 2.36 | * |
| motion > color | L Superior Parietal | 613 | −35 | −47 | 57 | 6.64 | rolling > red | 0.69 | n.s. |
| motion > color | Precuneus | 514 | −9 | −55 | 45 | 5.99 | rolling > red | 2.73 | ** |
| color > motion | L Fusiform/Middle Occipital g | 2697 | −37 | −85 | −7 | 5.11 | red > rolling | 2.45 | * |
| color > motion | L Superior Parietal | 985 | −23 | −66 | 41 | 5.26 | red > rolling | 3.55 | *** |
| color > motion | R Fusiform g | 569 | 36 | −62 | −11 | 4.73 | red > rolling | 1.99 | * |
| color > motion | R Parahippocampal g | 497 | 24 | −28 | −15 | 4.95 | red > rolling | 0.05 | n.s. |

Note. \* p < .05; \*\* p < .01; \*\*\* p < .005; \*\*\*\* p < .001. L is left, R is right, g is gyrus, STS is superior temporal sulcus, MTG is middle temporal gyrus.

The same procedure was used to test a priori predictions that the red and rolling conditions would replicate previous research. The mean betas for these concepts were extracted in all motion and color localizer ROIs for each individual. One-tailed t-tests were computed in each motion localizer ROI to examine if rolling was significantly greater than red, and in each color localizer ROI to examine if red was significantly greater than rolling.
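The ROI test can be sketched as follows. This is an illustration only: it assumes a paired (within-participant) t-test with 12 degrees of freedom for thirteen participants, which the text does not state explicitly, and the beta values are invented for the example rather than taken from the data.

```python
import math
from statistics import mean, stdev

# One-tailed critical value of t at p = .05 for df = 12 (assumed df = n - 1)
T_CRIT_ONE_TAILED_05_DF12 = 1.782


def roi_mean(voxel_betas):
    """Average a participant's betas across the voxels of one ROI."""
    return mean(voxel_betas)


def paired_t(predicted_higher, predicted_lower):
    """Paired t statistic for the directional prediction higher > lower."""
    diffs = [a - b for a, b in zip(predicted_higher, predicted_lower)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


# Invented ROI-mean betas for 13 participants in one thought-localizer ROI
convince = [0.42, 0.31, 0.55, 0.12, 0.38, 0.47, 0.29,
            0.60, 0.22, 0.35, 0.41, 0.18, 0.50]
arithmetic = [0.30, 0.28, 0.40, 0.15, 0.25, 0.33, 0.31,
              0.45, 0.10, 0.30, 0.29, 0.20, 0.36]

t_value = paired_t(convince, arithmetic)
significant = t_value > T_CRIT_ONE_TAILED_05_DF12
print(round(t_value, 2), significant)
```

Because the prediction is directional, only a positive t that exceeds the critical value counts as support; a large negative t would not.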

Whole-brain analyses

Voxel-wise group analyses across the whole brain were also conducted (again examining the concept conditions, not the scene condition). Each participant’s four condition betas for the concepts were entered into a second-level random effects ANOVA. Four whole-brain contrasts were computed that mirrored the ROI analyses just described: 1) convince > arithmetic, 2) arithmetic > convince, 3) rolling > red, and 4) red > rolling. In addition, the abstract vs. concrete contrast of [convince + arithmetic] vs. [rolling + red] was computed using the contrast weights convince (+1), arithmetic (+1), rolling (−1), and red (−1). All whole-brain contrasts were thresholded using a voxel-wise threshold of p < .001 in conjunction with a spatial extent threshold of 486 mm³, yielding an overall corrected threshold of p < .05.
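The contrast weights amount to a weighted sum of the four condition betas at each voxel. A minimal sketch for a single voxel in a single participant is shown below; the beta values are invented and the function name is hypothetical.

```python
# Contrast weights given in the text for abstract vs. concrete
ABSTRACT_VS_CONCRETE = {"convince": 1, "arithmetic": 1, "rolling": -1, "red": -1}
# One of the pairwise contrasts, expressed the same way
CONVINCE_VS_ARITHMETIC = {"convince": 1, "arithmetic": -1, "rolling": 0, "red": 0}


def contrast_value(betas, weights):
    """Weighted sum of condition betas for one voxel."""
    return sum(weights[condition] * betas[condition] for condition in weights)


# Invented betas (percent signal change) for one voxel
betas = {"convince": 0.5, "arithmetic": 0.25, "rolling": 0.125, "red": 0.125}

print(contrast_value(betas, ABSTRACT_VS_CONCRETE))    # 0.5
print(contrast_value(betas, CONVINCE_VS_ARITHMETIC))  # 0.25
```

Each set of weights sums to zero, so a voxel that responds equally to all four concepts yields a contrast value of zero.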

Split-half analyses

Because a novel feature of our paradigm was that only four concepts repeated across the experiment, we examined whether activity associated with each concept differed within the localizer ROIs across the first and second halves of the matching task. We observed a significant decrease in the reaction times (RTs) to the scenes during the second half (vs. the first half) of the experiment, which raised the possibility that neural activity during the separate concept period of interest here (that preceded the scene) was also changing across time. More specifically, a 2 (concept type: abstract, concrete) × 2 (response: applies, does not apply) × 2 (time: first half, second half) Repeated Measures ANOVA revealed that RTs decreased from the first half (M = 1444 ms) to the second half (M = 1308 ms) of the experiment; F(1,14) = 19.9, p < .001 (time main effect). To examine time effects in the neuroimaging data, event onsets for concept events in the first half of the experiment (runs 1–3) were specified as separate conditions from concept events in the second half of the experiment (runs 4–6). Nine conditions were modeled in this analysis: 1) convince-first half 2) convince-second half 3) arithmetic-first half 4) arithmetic-second half 5) rolling-first half 6) rolling-second half 7) red-first half 8) red-second half, and 9) scenes following all eight concept conditions. In all other regards, this analysis was identical to the original regression analysis. In the same manner as the initial ROI analysis, mean betas were extracted across voxels in each localizer ROI, for each individual. The group mean (across subjects) for convince in the first and second half, and for arithmetic in the first and second half, were compared using t-tests (p < .05) in both the thought and count localizer ROIs. The same analysis approach was also used to examine the concrete concepts in the motion and color localizer ROIs. 
As we will see, the RT decrease over time during the scene period does not appear to reflect processing during the earlier concept period, as neural activity largely remained stable across time for each concept.
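
The split-half comparison described above amounts to testing, within one ROI, whether a concept's mean beta differs between the two halves across participants. A minimal sketch follows, assuming a paired t-test (the text specifies only t-tests at p < .05); the beta values and the helper name `paired_t` are hypothetical. The last lines illustrate a general relation: in a fully within-participant design, the F for a two-level factor equals the squared paired t on the same marginal means, so the reported F(1,14) = 19.9 corresponds to |t(14)| of about 4.46.

```python
import math
import numpy as np


def paired_t(first_half, second_half):
    """Paired t statistic and degrees of freedom for first > second half."""
    diff = np.asarray(first_half, dtype=float) - np.asarray(second_half, dtype=float)
    n = diff.size
    t = diff.mean() / (diff.std(ddof=1) / math.sqrt(n))
    return t, n - 1


# Hypothetical mean betas in one ROI, one value per participant and half.
convince_first = [0.42, 0.55, 0.31, 0.60, 0.47]
convince_second = [0.40, 0.50, 0.35, 0.58, 0.45]

t, df = paired_t(convince_first, convince_second)
print(f"1st vs. 2nd half: t({df}) = {t:.2f}")

# For a two-level within-participant factor, F(1, df) = t**2.
print(f"F(1,14) = 19.9 corresponds to |t(14)| = {math.sqrt(19.9):.2f}")
```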

One additional finding of note occurred in the RT data. A significant concept type × response interaction revealed that RTs were significantly slower for the abstract concepts than for the concrete concepts when concepts applied to the subsequent scene (but not significantly different when concepts did not apply; F(1,14) = 11.2, p = .005). This difference could be interpreted in a number of ways. One possibility is that it reflects differences in task difficulty (i.e., greater difficulty in applying the abstract concepts to matching scenes), which has been a general concern in past work comparing the neural bases of abstract and concrete concepts. As we will see, though, regions associated with task difficulty in prior studies (e.g., anterior insula, anterior cingulate, dorsal inferior frontal gyrus) (Noppeney & Price, 2004; Sabsevitz et al., 2005) were not more active for the abstract concepts than for the concrete concepts during the initial concept period. Thus, it appears that the RT differences reflect difficulty (or perhaps complexity) only during the later scene period, when the concepts were mapped to the scenes.

Results

Functional Localizers

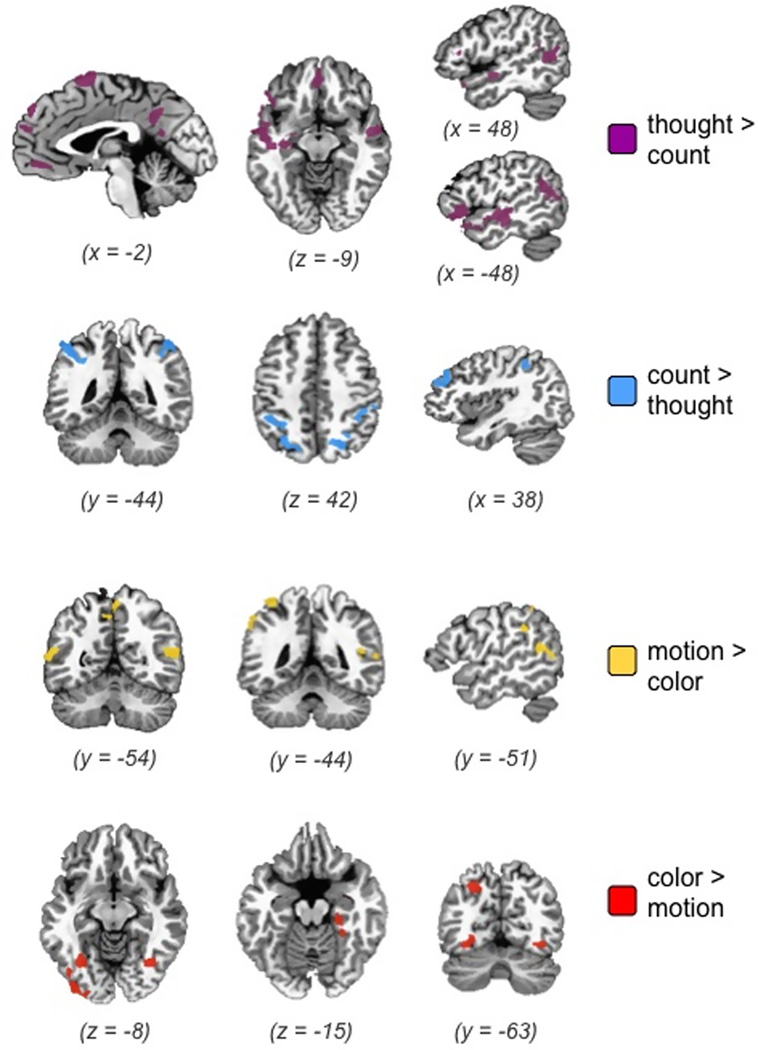

Each functional localizer contrast illustrated in Figure 2 identified brain regions consistent with previous research (see also Tables 1, 2). As just described, the four localizers defined ROIs in an independently obtained dataset, within which contrasts were computed for the separate and primary task of interest: the concept-scene matching task. The network of regions more active during the thought localizer has been consistently implicated in social cognition and emotion (Adolphs, 2009; Amodio & Frith, 2006; Kober et al., 2008; Simmons et al., 2010; Van Overwalle, 2009). Robust activity was observed along the cortical midline in medial prefrontal cortex and posterior cingulate, core regions of a network consistently identified during spontaneous thought (Buckner, Andrews-Hanna, & Schacter, 2008), and also in supplementary motor area. Bilaterally, the temporal poles, much of the superior temporal sulcus (both anterior and posterior), and inferior frontal gyrus were active. In the left hemisphere, activity in the anterior temporal lobe extended medially into the amygdala and hippocampus, and anteriorly into orbitofrontal cortex.

Figure 2.

Regions of interest defined by the independent functional localizer tasks.

The regions more active during the count localizer were those typically associated with number and magnitude (Cantlon et al., 2009; Dehaene et al., 2003; Nieder & Dehaene, 2009). These regions included bilateral intraparietal sulcus and posterior superior parietal regions, as well as right middle frontal gyrus.

The ROIs identified in the motion and color localizers were also consistent with previous research (Martin, 2001, 2007). The motion localizer identified predicted clusters of activity in lateral temporal (bilateral middle temporal gyrus and superior temporal sulcus) and parietal cortex (precuneus, left supramarginal gyrus and superior parietal), whereas the color localizer identified predicted clusters of activity in ventral temporal cortex (bilateral fusiform gyrus, right parahippocampal gyrus).

Concept-Scene Matching Task

Concept Contrasts in Functional Localizer ROIs Defined by an Independent Dataset

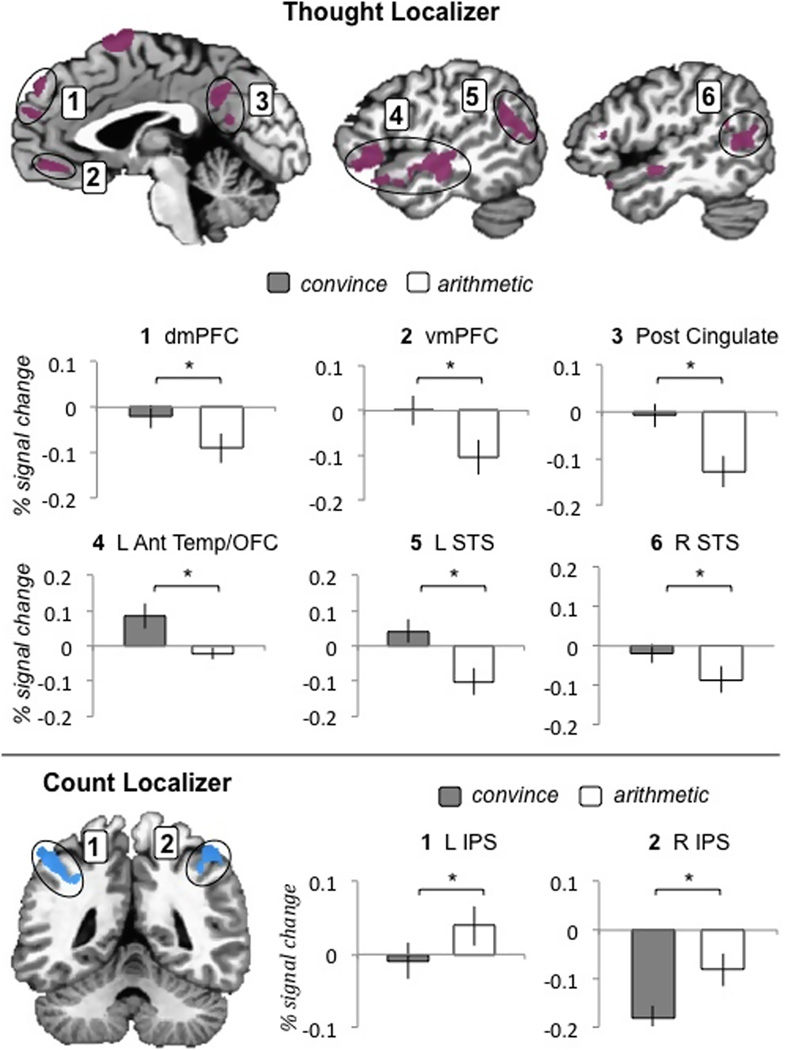

As Table 1 and Figure 3 illustrate, the results supported our predictions for the abstract concepts during the matching task (i.e., during the concept period prior to scene viewing). Specifically, our first prediction was that reliably greater activity would be observed in ROIs defined by the thought localizer when participants processed the meaning of convince as compared to when they processed the meaning of arithmetic. As predicted, regions of medial prefrontal cortex, posterior cingulate, orbitofrontal cortex, and superior temporal sulcus showed reliably greater activity for convince. Of the 13 ROIs defined in the thought localizer, 11 showed reliably greater activity when participants processed the meaning of convince versus arithmetic (see footnote 5). Because the first ROI defined by the thought localizer spanned multiple regions in frontal and temporal cortex (see Table 1), we also examined orbitofrontal cortex and the hippocampus separately from anterior temporal cortex. Whereas orbitofrontal cortex and anterior temporal cortex showed significantly more activity for convince than for arithmetic, no reliable difference was observed in the hippocampus.

Figure 3.

Histograms for convince and arithmetic in critical ROIs defined by the localizer.

Our second prediction was that reliably greater activity would be observed in ROIs defined by the count localizer when participants processed the meaning of arithmetic as compared to when they processed the meaning of convince. As predicted, bilateral IPS showed reliably greater activity for arithmetic. Both left IPS and left superior parietal cortex, which made up the first ROI defined by the count localizer (see Table 1), remained significant when examined as distinct regions. Right middle frontal gyrus also showed reliably greater activity for arithmetic, and right superior parietal cortex strongly trended toward reliably greater activity for arithmetic as well (p < .09).

The ROI analyses for the concrete concepts replicated previous research (see Table 2). Activity for rolling was reliably greater than that for red in lateral temporal and parietal regions; activity for red was reliably greater than that for rolling in bilateral fusiform regions.

Concept Contrasts Across the Whole-Brain

The brain regions that emerged when we computed the same contrasts across the entire brain overlapped with those identified in the ROI analysis. The one exception was a cluster in left lingual gyrus that was reliably more active for rolling than for red.

Concept Activations in the First and Second Half of the Experiment

Because the four concepts were presented repeatedly in our paradigm, and because reaction times to the scenes decreased in the second half of the experiment (see Methods), we examined whether activity associated with each concept in the localizer ROIs differed in the first and second half of the matching task. As shown in Table 3, brain activity within the ROIs was generally stable for both abstract concepts, showing few differences between the first and second halves of the experiment. This finding suggests that participants continued to process the concepts deeply throughout the experiment.

Table 3.

Stable concept activations occur across the first and second halves of the experiment.

| Functional Localizer ROIs | Concept ROI analysis | Concept Split-Half ROI analysis | |
|---|---|---|---|
| brain region | concept contrast | 1st > 2nd half critical concept | 1st > 2nd half comparison concept |
| thought > count | convince > arithmetic | (convince) | (arithmetic) |
| L Anterior Temp/OFC/Hipp | *** | n.s. | n.s. |
| L STS | **** | n.s. | n.s. |
| R STS | ** | n.s. | * |
| Posterior Cingulate | *** | n.s. | n.s. |
| dmPFC | *** | n.s. | n.s. |
| SMA | * | n.s. | * |
| Medial OFC | *** | n.s. | n.s. |
| R Anterior STS | * | n.s. | n.s. |
| dmPFC | * | n.s. | n.s. |
| R Inferior Frontal g | *** | n.s. | * |
| L Amygdala | n.s. | n.s. | n.s. |
| dmPFC | n.s. | n.s. | n.s. |
| R Temporal Pole | n.s. (.06) | n.s. | n.s. |
| count > thought | arithmetic > convince | (arithmetic) | (convince) |
| L IPS/Superior Parietal | ** | n.s. | n.s. |
| R Middle Frontal g (dlPFC) | * | n.s. | * |
| R IPS | ** | n.s. | n.s. |
| R Superior Parietal | n.s. (.09) | n.s. | *** |
| motion > color | rolling > red | (rolling) | (red) |
| L MTG/STS | **** | n.s. | n.s. |
| R MTG/STS | n.s. (.06) | n.s. | n.s. |
| L Supramarginal g | * | n.s. | n.s. |
| L Superior Parietal | n.s. | n.s. | n.s. |
| Precuneus | ** | n.s. | n.s. |
| color > motion | red > rolling | (red) | (rolling) |
| L Fusiform/Middle Occipital g | * | n.s. | n.s. |
| L Superior Parietal | *** | * | n.s. |
| R Fusiform g | * | n.s. | n.s. |
| R Parahippocampal g | n.s. | n.s. | n.s. |

Note. * p < .05; ** p < .01; *** p < .005; **** p < .001. L is left, R is right, g is gyrus, OFC is orbitofrontal cortex, dmPFC and dlPFC are dorsomedial and dorsolateral prefrontal cortex, Hipp is hippocampus, SMA is supplementary motor area, IPS is intraparietal sulcus, Temp is temporal, STS is superior temporal sulcus, MTG is middle temporal gyrus, n.s. is not significant.

Abstract vs. Concrete

Finally, we performed the standard whole-brain contrast between the abstract [convince + arithmetic] vs. concrete [rolling + red] concepts. Only a few regions emerged from this contrast, suggesting that averaging two concepts in the same condition eliminated important content specific to each concept, as described earlier. Significant activations for the abstract concepts occurred in posterior cingulate, precuneus, right parahippocampal gyrus, and bilateral lingual gyrus (see Table 4). In contrast, the concrete concepts showed a left-lateralized profile of activity in middle/inferior frontal gyrus, inferior temporal gyrus, and inferior parietal cortex. Interestingly, we did not observe activations for the abstract concepts in the left hemisphere language areas identified in previous studies that averaged across many abstract concepts and that used relatively shallow context-independent tasks. Because our paradigm differs in a number of ways from those used in prior studies, though, we lack a precise understanding of this result. Nevertheless, it suggests that very different results can occur when small numbers of abstract and concrete concepts are examined in detail under deep processing conditions.
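
Why a collapsed abstract vs. concrete contrast can hide concept-specific content can be seen with toy numbers. In the sketch below, the beta values and region labels are invented for illustration and are not values from this study; the two weight vectors are the convince > arithmetic contrast and the [convince + arithmetic] vs. [rolling + red] contrast described in the Methods.

```python
import numpy as np

# Invented betas for two hypothetical regions; columns are
# convince, arithmetic, rolling, red. These are not values from the study.
regions = {
    "social-type region": np.array([1.0, 0.0, 0.5, 0.5]),
    "number-type region": np.array([0.0, 1.0, 0.5, 0.5]),
}

specific = np.array([+1, -1, 0, 0])     # convince > arithmetic
collapsed = np.array([+1, +1, -1, -1])  # [convince + arithmetic] vs. [rolling + red]

for name, betas in regions.items():
    print(name, "specific:", betas @ specific, "collapsed:", betas @ collapsed)
```

In this toy case each region separates the two abstract concepts strongly under the specific contrast, yet the collapsed contrast is zero in both, because the high response to one abstract concept is averaged with the low response to the other.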

Table 4.

Abstract (convince, arithmetic) versus concrete (rolling, red) concepts contrast.

| brain region | Volume (mm³) | peak voxel x | peak voxel y | peak voxel z | mean t |
|---|---|---|---|---|---|
| abstract > concrete | | | | | |
| L Lingual g | 6071 | −8 | −86 | −3 | 5.04 |
| Posterior Cingulate/Precuneus | 3898 | 5 | −45 | 32 | 4.98 |
| R Parahippocampal g | 1120 | 27 | −58 | −4 | 4.83 |
| R Lingual g | 488 | 13 | −79 | 5 | 5.22 |
| concrete > abstract | | | | | |
| L Middle/Inferior Frontal g | 1468 | −36 | 27 | 19 | 5.16 |
| L Inferior Parietal | 978 | −42 | −37 | 38 | 4.90 |
| L Inferior Temporal g | 957 | −55 | −64 | −2 | 5.08 |

Note. L is left, R is right, and g is gyrus.

Discussion

Distributed patterns of activation for the abstract concepts, convince and arithmetic, occurred in brain areas predicted to represent relevant non-linguistic semantic content. Specifically, brain regions implicated in mentalizing and social cognition were active when participants processed the meaning of convince, whereas brain regions implicated in numerical cognition were active when participants processed the meaning of arithmetic. Previous results for color and motion concepts replicated in our novel paradigm, demonstrating its utility for studying different kinds of concepts. Specifically, red activated ventral occipitotemporal regions that underlie color simulation (Chao & Martin, 1999; Goldberg, Perfetti, & Schneider, 2006; Simmons et al., 2007; Wiggs, Weisberg, & Martin, 1999), whereas rolling activated lateral temporal and parietal regions that underlie visual motion simulation (Kable, Kan, Wilson, Thompson-Schill, & Chatterjee, 2005; Noppeney, Josephs, Kiebel, Friston, & Price, 2005; Tranel, Kemmerer, Adolphs, Damasio, & Damasio, 2003; Tranel, Martin, Damasio, Grabowski, & Hichwa, 2005). Similar to the research findings for concrete concepts, our results suggest that abstract concepts are represented by distributed neural patterns that reflect their semantic content.

Because different kinds of abstract concepts have unique content, it is important to consider this heterogeneity when investigating the neural bases of abstract knowledge. We have shown that different patterns of activity underlie the meanings of convince and arithmetic. We further demonstrated that these patterns were largely obscured when convince and arithmetic were collapsed into an abstract concept condition that was compared to a concrete concept condition. These findings suggest that the variety in abstract concepts must be examined in the same way that the variety in concrete concepts is being examined (e.g., animals vs. tools). Simply distinguishing between abstract and concrete concepts, broadly defined, is not sufficient to understand how people accumulate rich repertoires of abstract knowledge.

A challenge in studying abstract concepts is that the semantic content underlying their meanings is often quite complex (e.g., Barsalou & Wiemer-Hastings, 2005). Whereas concrete concepts typically categorize local elements of situations (e.g., cookie categorizes part of an event in which a child is trying to convince a parent to give him/her a cookie), abstract concepts typically integrate these local elements into configural relational structures during conceptualization (e.g., convince integrates various parts of the event, including the child, parent, their mental states, their interaction, the cookie, etc.) (Barsalou & Wiemer-Hastings, 2005; Wilson-Mendenhall et al., 2011). The most comprehensive illustrations of this complexity are currently found in linguistics. For example, Schmid (2000) demonstrated that many abstract nouns act as “conceptual shells” that construe the content of clauses preceding or following them.

To the extent that these accounts are correct, sophisticated methods for establishing the complex content of abstract concepts will be necessary. We adopted a straightforward approach in this experiment by assessing clear predictions about the semantic content of single concepts. Our functional localizer approach to investigating semantic properties in abstract concepts is similar to standard approaches used to study concrete concepts. Where our approach diverges from standard approaches to concrete concepts is in incorporating visual contexts that ground a single concept across a series of trials. We chose to prioritize the contextual grounding of a single concept in each condition, rather than including many related concepts in each condition (as is standard), because context plays a critical role in deeply processing complex abstract concepts (Schwanenflugel, 1991). It will be important for future work to systematically examine the neural patterns that underlie a wide variety of abstract concepts. Our experiment suggests that stable focal properties may be one source of variance among different abstract concepts (e.g., convince vs. arithmetic). Another systematic influence may be situational variance that occurs for individual concepts (e.g., knowledge exists for convince in very different situations: bargaining at a market, debating political issues, deliberating as a juror, etc.) (e.g., Wilson-Mendenhall et al., 2011; see also Barrett, 2009; Barsalou, 2003b, 2008b).

Integral to assessing the semantic meanings of abstract concepts is a better understanding of the dynamical processing involved in accessing this knowledge. What constitutes a concept in a specific situation depends on the automatic and strategic processing involved in accessing information stored in memory (including linguistic associations and grounded conceptual knowledge) that changes across experimental laboratory contexts and across real life contexts (cf. Barsalou et al., 2008). For example, reciting a memorized dictionary definition of the abstract concept gossip draws on different processes and representations than deciding whether the rumor circulating around the office is gossip. Much research demonstrates that information activated during fast, automatic processing is relatively superficial, whereas information activated during extended, strategic processing constitutes core meaning (e.g., Keil & Batterman, 1984; Smith, Shoben, & Rips, 1974; cf. Barsalou et al., 2008). In this study, our goal was to assess the semantic knowledge that people retrieve when they are required to deeply process a concept in anticipation of applying it to a situation. Because our tightly controlled experimental paradigm involved presenting abstract concepts as single words divorced from a typical linguistic context, we embedded the concepts in visual contexts throughout the experiment, with the initial concept period lasting several seconds to provide sufficient time for deep conceptual processing.

During language comprehension, one important element involved in semantic retrieval appears to be syntactic structure. Studies illustrate that verb aspect, for example, foregrounds specific semantic properties of concepts and backgrounds others (e.g., Madden & Zwaan, 2003). Consistent with this view, recent reviews suggest that patterns of neural activity observed for verbs (like convince) vs. nouns (like arithmetic) are driven primarily by semantics (as opposed to the grammatical distinction itself; e.g., Crepaldi, Berlingeri, Paulesu, & Luzzatti, 2011; Vigliocco, Vinson, Druks, Barber, & Cappa, 2011). The different neural patterns we observed for convince vs. arithmetic may reflect semantic content shaped by grammatical class, but this factor was likely minimized due to our functional localizer approach that isolated semantic properties of interest using a scene interpretation method.

In conclusion, our results suggest that abstract concepts are represented by distributed neural patterns that reflect their semantic content, consistent with research on concrete concepts. As this experiment illustrates, however, the semantic content unique to different abstract concepts is only revealed when individual concepts are processed deeply in context. Probing the representations in memory that support using abstract knowledge in context is a significant departure from previous research focusing on the linguistic or other processing that occurs quickly and similarly for many different abstract concepts. We propose that investigating this deeper, contextually-based processing of abstract concepts is of central importance for understanding human thought, reasoning, and decision-making. Much remains to be learned about the variety of abstract concepts that people acquire, and how they support thought and action in a complex world.

Acknowledgements

We are grateful to Robert Smith and to the Emory Biomedical Imaging Technology Center staff for their help with data collection. This work was supported by an NSF Graduate Research Fellowship to Christine D. Wilson-Mendenhall and by DARPA contract FA8650-05-C-7256 to Lawrence W. Barsalou.

Appendix

Footnotes

1. By “content,” we mean the general kind of information (e.g., visual) and also the operations that produce various forms of this information (e.g., object shape).

2. We use italics to indicate a concept and quotes to indicate the “word” associated with the concept.

3. A star (*) was presented instead of the standard cross (+) during fixation trials because piloting revealed that the cross elicited thinking about arithmetic.

4. The concept word “convincing” was initially used instead of “convince” to collect these ratings. Because “convincing” can be an adjective or verb, we changed the concept word to the unambiguous verb form “convince” for the neuroimaging study. We selected the present tense “convince” (as opposed to the past or future tense) to foreground the active process of changing the mental state of another person, which is central to the meaning of this concept and is also the property of interest here.

5. We include right temporal pole among significant clusters here given the very strong trend of p < .06.

References

- Adolphs R. The social brain: neural basis of social knowledge. Annu Rev Psychol. 2009;60:693–716. doi: 10.1146/annurev.psych.60.110707.163514.

- Amodio DM, Frith CD. Meeting of minds: the medial frontal cortex and social cognition. Nat Rev Neurosci. 2006;7(4):268–277. doi: 10.1038/nrn1884.

- Bar M. Visual objects in context. Nat Rev Neurosci. 2004;5(8):617–629. doi: 10.1038/nrn1476.

- Barrett LF. Variety is the spice of life: A psychological construction approach to understanding variability in emotion. Cogn Emot. 2009;23(7):1284–1306. doi: 10.1080/02699930902985894.

- Barsalou LW. Perceptual symbol systems. Behav Brain Sci. 1999;22(4):577–609. doi: 10.1017/s0140525x99002149. discussion 610-560.

- Barsalou LW. Abstraction in perceptual symbol systems. Philos Trans R Soc Lond B Biol Sci. 2003a;358(1435):1177–1187. doi: 10.1098/rstb.2003.1319.

- Barsalou LW. Situated simulation in the human conceptual system. Lang Cogn Process. 2003b;18:513–562.

- Barsalou LW. Grounded cognition. Annu Rev Psychol. 2008a;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639.

- Barsalou LW. Situating concepts. In: Robbins P, Aydede M, editors. Cambridge Handbook of Situated Cognition. New York: Cambridge University Press; 2008b. pp. 236–263.

- Barsalou LW. Simulation, situated conceptualization, and prediction. Philos Trans R Soc Lond B Biol Sci. 2009;364(1521):1281–1289. doi: 10.1098/rstb.2008.0319.

- Barsalou LW, Santos A, Simmons WK, Wilson CD. Language and simulation in conceptual processing. In: De Vega M, Glenberg AM, Graesser A, editors. Symbols, embodiment, and meaning. Oxford: Oxford University Press; 2008. pp. 245–283.

- Barsalou LW, Wiemer-Hastings K. Situating abstract concepts. In: Pecher D, Zwaan R, editors. Grounding Cognition: The Role of Perception and Action in Memory, Language, and Thought. New York: Cambridge University Press; 2005. pp. 129–163.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 2009;19(12):2767–2796. doi: 10.1093/cercor/bhp055.

- Buckner RL, Andrews-Hanna JR, Schacter DL. The brain's default network: anatomy, function, and relevance to disease. Ann N Y Acad Sci. 2008;1124:1–38. doi: 10.1196/annals.1440.011.

- Cantlon JF, Platt ML, Brannon EM. Beyond the number domain. Trends Cogn Sci. 2009;13(2):83–91. doi: 10.1016/j.tics.2008.11.007.

- Chao LL, Martin A. Cortical regions associated with perceiving, naming, and knowing about colors. J Cogn Neurosci. 1999;11(1):25–35. doi: 10.1162/089892999563229.

- Contreras JM, Banaji MR, Mitchell JP. Dissociable neural correlates of stereotypes and other forms of semantic knowledge. Soc Cogn Affect Neurosci. 2011. doi: 10.1093/scan/nsr053.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29(3):162–173. doi: 10.1006/cbmr.1996.0014.

- Cree GS, McRae K. Analyzing the factors underlying the structure and computation of the meaning of chipmunk, cherry, chisel, cheese, and cello (and many other such concrete nouns). J Exp Psychol Gen. 2003;132(2):163–201. doi: 10.1037/0096-3445.132.2.163.

- Crepaldi D, Berlingeri M, Paulesu E, Luzzatti C. A place for nouns and a place for verbs? A critical review of neurocognitive data on grammatical-class effects. Brain Lang. 2011;116:33–40. doi: 10.1016/j.bandl.2010.09.005.

- Dehaene S, Piazza M, Pinel P, Cohen L. Three parietal circuits for number processing. Cogn Neuropsychol. 2003;20(3):487–506. doi: 10.1080/02643290244000239.

- Estes Z, Adelman JS. Automatic vigilance for negative words is categorical and general. Emotion. 2008;8(4):453–457. doi: 10.1037/1528-3542.8.4.441.

- Fiebach CJ, Friederici AD. Processing concrete words: fMRI evidence against a specific right-hemisphere involvement. Neuropsychologia. 2004;42(1):62–70. doi: 10.1016/s0028-3932(03)00145-3.

- Goldberg RF, Perfetti CA, Schneider W. Perceptual knowledge retrieval activates sensory brain regions. J Neurosci. 2006;26(18):4917–4921. doi: 10.1523/JNEUROSCI.5389-05.2006.

- Kable JW, Kan IP, Wilson A, Thompson-Schill SL, Chatterjee A. Conceptual representations of action in the lateral temporal cortex. J Cogn Neurosci. 2005;17(12):1855–1870. doi: 10.1162/089892905775008625.

- Kan IP, Barsalou LW, Solomon KO, Minor JK, Thompson-Schill SL. Role of mental imagery in a property verification task: FMRI evidence for perceptual representations of conceptual knowledge. Cogn Neuropsychol. 2003;20(3):525–540. doi: 10.1080/02643290244000257.

- Keil FC, Batterman N. A characteristic-to-defining shift in the acquisition of word meaning. Journal of Verbal Learning and Verbal Behavior. 1984;23:221–236.

- Kober H, Barrett LF, Joseph J, Bliss-Moreau E, Lindquist K, Wager TD. Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. NeuroImage. 2008;42(2):998–1031. doi: 10.1016/j.neuroimage.2008.03.059.

- Kousta ST, Vigliocco G, Vinson DP, Andrews M, Del Campo E. The representation of abstract words: why emotion matters. Journal of Experimental Psychology: General. 2011;140(1):14–34. doi: 10.1037/a0021446.

- Madden CJ, Zwaan RA. How does verb aspect constrain event representations? Memory & Cognition. 2003;31(5):663–672. doi: 10.3758/bf03196106.

- Martin A. Functional neuroimaging of semantic memory. In: Cabeza R, Kingstone A, editors. Handbook of Functional Neuroimaging of Cognition. Cambridge, MA: MIT Press; 2001. pp. 153–186.

- Martin A. The representation of object concepts in the brain. Annu Rev Psychol. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Martin A, Weisberg J. Neural foundations for understanding social and mechanical concepts. Cogn Neuropsychol. 2003;20(3–6):575–587. doi: 10.1080/02643290342000005.

- Mason MF, Banfield JF, Macrae CN. Thinking about actions: the neural substrates of person knowledge. Cereb Cortex. 2004;14(2):209–214. doi: 10.1093/cercor/bhg120.

- Medin DL, Lynch EB, Solomon KO. Are there kinds of concepts? Annu Rev Psychol. 2000;51:121–147. doi: 10.1146/annurev.psych.51.1.121.

- Mitchell JP, Heatherton TF, Macrae CN. Distinct neural systems subserve person and object knowledge. Proc Natl Acad Sci U S A. 2002;99(23):15238–15243. doi: 10.1073/pnas.232395699.

- Murphy G. The Big Book of Concepts. Cambridge, MA: MIT Press; 2002.

- Nieder A, Dehaene S. Representation of number in the brain. Annu Rev Neurosci. 2009;32:185–208. doi: 10.1146/annurev.neuro.051508.135550.

- Noppeney U, Josephs O, Kiebel S, Friston KJ, Price CJ. Action selectivity in parietal and temporal cortex. Brain Res Cogn Brain Res. 2005;25(3):641–649. doi: 10.1016/j.cogbrainres.2005.08.017.

- Noppeney U, Price CJ. Retrieval of abstract semantics. NeuroImage. 2004;22(1):164–170. doi: 10.1016/j.neuroimage.2003.12.010.

- Ollinger JM, Corbetta M, Shulman GL. Separating processes within a trial in event-related functional MRI. NeuroImage. 2001;13(1):218–229. doi: 10.1006/nimg.2000.0711.

- Ollinger JM, Shulman GL, Corbetta M. Separating processes within a trial in event-related functional MRI. NeuroImage. 2001;13(1):210–217. doi: 10.1006/nimg.2000.0710.

- Paivio A. Mental Representations: A Dual Coding Approach. New York: Oxford University Press; 1986.

- Paivio A. Dual coding theory: retrospect and current status. Can J Psychol. 1991;45:255–287.

- Ross LA, Olson IR. Social cognition and the anterior temporal lobes. NeuroImage. 2010;49(4):3452–3462. doi: 10.1016/j.neuroimage.2009.11.012.

- Sabsevitz DS, Medler DA, Seidenberg M, Binder JR. Modulation of the semantic system by word imageability. NeuroImage. 2005;27(1):188–200. doi: 10.1016/j.neuroimage.2005.04.012.

- Schmid H-J. English abstract nouns as conceptual shells: From corpus to cognition. Kortmann B, Traugott EC, editors. Berlin: de Gruyter GmbH & Co; 2000.

- Schneider W, Eschman A, Zuccolotto A. E-prime computer software and manual. Pittsburgh, PA: Psychology Software Tools Inc; 2002.

- Schwanenflugel PJ. Why are abstract concepts so hard to understand? In: Schwanenflugel P, editor. The Psychology of Word Meanings. Hillsdale, NJ: Lawrence Erlbaum Associates; 1991. pp. 223–250.

- Simmons WK, Hamann SB, Harenski CL, Hu XP, Barsalou LW. fMRI evidence for word association and situated simulation in conceptual processing. J Physiol Paris. 2008;102(1–3):106–119. doi: 10.1016/j.jphysparis.2008.03.014.

- Simmons WK, Ramjee V, Beauchamp MS, McRae K, Martin A, Barsalou LW. A common neural substrate for perceiving and knowing about color. Neuropsychologia. 2007;45(12):2802–2810. doi: 10.1016/j.neuropsychologia.2007.05.002.

- Simmons WK, Reddish M, Bellgowan PS, Martin A. The selectivity and functional connectivity of the anterior temporal lobes. Cereb Cortex. 2010;20(4):813–825. doi: 10.1093/cercor/bhp149.

- Smith EE, Shoben EJ, Rips LJ. Structure and process in semantic memory: A featural model for semantic decisions. Psychol Rev. 1974;81:214–241.

- Tranel D, Kemmerer D, Adolphs R, Damasio H, Damasio AR. Neural correlates of conceptual knowledge for actions. Cogn Neuropsychol. 2003;20(3):409–432. doi: 10.1080/02643290244000248.

- Tranel D, Martin C, Damasio H, Grabowski TJ, Hichwa R. Effects of noun-verb homonymy on the neural correlates of naming concrete entities and actions. Brain Lang. 2005;92(3):288–299. doi: 10.1016/j.bandl.2004.01.011.

- Van Overwalle F. Social cognition and the brain: a meta-analysis. Hum Brain Mapp. 2009;30(3):829–858. doi: 10.1002/hbm.20547.

- Vigliocco G, Vinson DP, Druks J, Barber H, Cappa SF. Nouns and verbs in the brain: A review of behavioral, electrophysiological, neuropsychological and imaging studies. Neurosci Biobehav Rev. 2011;35:407–426. doi: 10.1016/j.neubiorev.2010.04.007.

- Wang J, Conder JA, Blitzer DN, Shinkareva SV. Neural representation of abstract and concrete concepts: a meta-analysis of neuroimaging studies. Hum Brain Mapp. 2010;31(10):1459–1468. doi: 10.1002/hbm.20950.

- Wiggs CL, Weisberg J, Martin A. Neural correlates of semantic and episodic memory retrieval. Neuropsychologia. 1999;37(1):103–118. doi: 10.1016/s0028-3932(98)00044-x.

- Wilson-Mendenhall CD, Barrett LF, Simmons WK, Barsalou LW. Grounding emotion in situated conceptualization. Neuropsychologia. 2011;49(5):1105–1127. doi: 10.1016/j.neuropsychologia.2010.12.032.

- Yeh W, Barsalou LW. The situated nature of concepts. Am J Psychol. 2006;119(3):349–384.

- Zahn R, Moll J, Krueger F, Huey ED, Garrido G, Grafman J. Social concepts are represented in the superior anterior temporal cortex. Proc Natl Acad Sci U S A. 2007;104(15):6430–6435. doi: 10.1073/pnas.0607061104.