Abstract

An improved guided image fusion scheme for magnetic resonance and computed tomography imaging is proposed. The existing guided filtering scheme uses a Gaussian filter and two-level weight maps, which limits its performance on noisy images. Modifications to the filter (based on a linear minimum mean square error estimator) and to the weight maps (with more than two levels) are proposed to overcome these limitations. Simulation results based on visual and quantitative analysis show the improvement offered by the proposed scheme.

1. Introduction

Medical images from different modalities reflect different levels of information (tissues, bones, etc.). A single modality cannot provide comprehensive and accurate information [1, 2]. For instance, structural images obtained from magnetic resonance (MR) imaging, computed tomography (CT), ultrasonography, and so forth provide high-resolution anatomical information [1, 3]. On the other hand, functional images obtained from positron emission tomography (PET), single-photon emission computed tomography (SPECT), functional MR imaging, and so forth provide functional information at low spatial resolution [3, 4]. More precisely, CT imaging provides better information on denser tissue with less distortion, whereas MR images have more distortion but provide information on soft tissue [5, 6]. PET is used for blood flow and flood activity analysis, but it provides low spatial resolution. Therefore, combining anatomical and functional medical images through image fusion to extract more useful information is desirable [5, 6]. Fusion of CT and MR images combines anatomical and physiological characteristics of the human body. Similarly, fusion of PET and CT images is helpful for tumor activity analysis [7].

Image fusion is performed at the pixel, feature, and decision levels [8–10]. Pixel-level methods fuse at each pixel and hence preserve most of the information [11]. Feature-level methods extract features from the source images (such as edges or regions) and combine them into a single concatenated feature vector [12, 13]. Decision-level fusion [11, 14] combines sensor information after the image from each sensor has been processed and some useful information has been extracted from it.

Pixel-level methods include addition, subtraction, division, multiplication, minimum, maximum, median, and rank, as well as more complicated operators like Markov random fields and the expectation-maximization algorithm [15]. Pixel-level fusion also includes statistical methods (principal component analysis (PCA), linear discriminant analysis, independent component analysis, canonical correlation analysis, and nonnegative matrix factorization). Multiscale transforms like pyramids and wavelets are also types of pixel-level fusion [11, 14]. Feature-level methods include feature based PCA [12, 13], segment fusion [13], edge fusion [13], and contour fusion [16]. They are usually robust to noise and misregistration. Weighted decision methods (voting techniques) [17], classical inference [17], Bayesian inference [17], and the Dempster-Shafer method [17] are examples of decision-level fusion methods. These methods are application dependent; hence, they cannot be used generally [18].

Multiscale decomposition based medical image fusion decomposes the input images into different levels. The decompositions used include pyramid decomposition (Laplacian [19], morphological [20], and gradient [21]); discrete wavelet transform [22]; stationary wavelet transform [23]; redundant wavelet transform [24]; and dual-tree complex wavelet transform [25]. These schemes produce blocking effects because the decomposition process is not accompanied by any spatial orientation selectivity.

To overcome these limitations, multiscale geometric analysis methods were introduced for medical image fusion. Curvelet transform based fusion of CT and MR images [26] does not provide a proper multiresolution representation of the geometry (as the curvelet transform is not built directly in the discrete domain) [27]. Contourlet transform based fusion improves the contrast, but shift-invariance is lost due to subsampling [27, 28]. The nonsubsampled contourlet transform with a variable weight for fusion of MR and SPECT images incurs high computational time and complexity [27, 29].

Recently, guided filter fusion (GFF) [30] has been used to preserve edges and avoid blurring effects in the fused image. The guided filter is an edge-preserving filter whose computational time is independent of the filter size. However, the method provides limited performance for noisy images due to the use of a Gaussian filter and two-level weight maps. An improved guided image fusion scheme for MR and CT imaging is proposed to overcome these limitations. Simulation results based on visual and quantitative analysis show the improvement offered by the proposed scheme.

2. Preliminaries

In this section, we briefly discuss the methodology of GFF [30]. The main steps of the GFF method are filtering (to obtain the two-scale representation), weight maps construction, and fusion of base and detail layers (using guided filtering and weighted average method).

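Let $F$ be the fused image obtained by combining input images $A$ and $B$ of the same size ($M \times N$). The base ($I_{11}$ and $I_{12}$) and detail ($I_{21}$ and $I_{22}$) layers of the source images are

$$I_{11} = A \ast f, \quad I_{12} = B \ast f, \quad I_{21} = A - I_{11}, \quad I_{22} = B - I_{12}, \tag{1}$$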

where $f$ is the average filter. The base and detail layers contain large- and small-scale variations, respectively. The saliency images are obtained by convolving $A$ and $B$ with a Laplacian filter $h$ followed by a Gaussian filter $g$; that is,

| (2) |

The weight maps $P_1$ and $P_2$ are

$$P_1 = \xi(S_1, S_2), \quad P_2 = \xi(S_2, S_1), \tag{3}$$

where $\xi(S_1, S_2)$ is a function with value 1 for $S_1(m, n) \ge S_2(m, n)$ and value 0 for $S_1(m, n) < S_2(m, n)$ (similarly for $\xi(S_2, S_1)$). $S_1(m, n)$ and $S_2(m, n)$ are the saliency values at pixel $(m, n)$ in $A$ and $B$, respectively.
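The three steps described so far (two-scale decomposition, saliency, binary weight maps) can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the filter sizes (`size=31`, `sigma=5.0`) are illustrative assumptions, the absolute value of the Laplacian response follows the original GFF formulation [30] and is assumed here, and the function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def two_scale(image, size=31):
    """Split an image into a base layer (average filter) and a detail layer (residual)."""
    base = ndimage.uniform_filter(image, size=size, mode="reflect")
    return base, image - base


def saliency(image, sigma=5.0):
    """Laplacian response magnitude smoothed by a Gaussian filter (abs is an assumption)."""
    high_pass = ndimage.laplace(image, mode="reflect")
    return ndimage.gaussian_filter(np.abs(high_pass), sigma=sigma, mode="reflect")


def binary_weight_maps(s1, s2):
    """xi(S1, S2) and xi(S2, S1): 1 where the first argument is >= the second, else 0."""
    p1 = (s1 >= s2).astype(float)
    p2 = (s2 >= s1).astype(float)
    return p1, p2


# Toy inputs standing in for registered source images A and B.
rng = np.random.default_rng(0)
img_a = rng.random((64, 64))
img_b = rng.random((64, 64))

base_a, detail_a = two_scale(img_a)
p1, p2 = binary_weight_maps(saliency(img_a), saliency(img_b))
```

Note that with this definition both maps equal 1 wherever the two saliency values tie, and otherwise exactly one of them is 1, so a small saliency difference has the same effect as a large one.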

Guided image filtering is performed to obtain the refined weights $W_{11}$, $W_{12}$, $W_{21}$, and $W_{22}$ as

| (4) |

where $r_1$, $\epsilon_1$, $r_2$, and $\epsilon_2$ are the parameters of the guided filter, and $W_{11}$, $W_{21}$ and $W_{12}$, $W_{22}$ are the base layer and the detail layer weight maps.

The fused image F is obtained by weighted averaging of the corresponding layers; that is,

| (5) |
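The weight refinement and weighted averaging can be sketched as follows. The guided filter below is the standard box-filter formulation (local linear fit of the weight map to the guidance image); the parameter values, the clipping and normalisation of the refined weights, and all function names are assumptions made for this toy example rather than details taken from [30] or from this paper.

```python
import numpy as np
from scipy import ndimage


def box(x, r):
    """Mean over a (2r + 1) x (2r + 1) window."""
    return ndimage.uniform_filter(x, size=2 * r + 1, mode="reflect")


def guided_filter(p, guide, r, eps):
    """Guided filter: locally fit p ~ a * guide + b, then average a and b."""
    mean_i, mean_p = box(guide, r), box(p, r)
    var_i = box(guide * guide, r) - mean_i * mean_i
    cov_ip = box(guide * p, r) - mean_i * mean_p
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    return box(a, r) * guide + box(b, r)


def fuse(img_a, img_b, p1, p2, r1=8, eps1=0.3, r2=2, eps2=1e-6, size=15):
    """Two-scale fusion with guided-filter-refined weights (illustrative parameters)."""
    base_a = ndimage.uniform_filter(img_a, size=size, mode="reflect")
    base_b = ndimage.uniform_filter(img_b, size=size, mode="reflect")
    detail_a, detail_b = img_a - base_a, img_b - base_b

    def refine(r, eps):
        # Assumed safeguard: clip to [0, 1], then normalise so the weights sum to one.
        w_a = np.clip(guided_filter(p1, img_a, r, eps), 0.0, 1.0)
        w_b = np.clip(guided_filter(p2, img_b, r, eps), 0.0, 1.0)
        total = w_a + w_b + 1e-12
        return w_a / total, w_b / total

    wb_a, wb_b = refine(r1, eps1)  # base-layer weights (strong smoothing)
    wd_a, wd_b = refine(r2, eps2)  # detail-layer weights (weak smoothing)
    return wb_a * base_a + wb_b * base_b + wd_a * detail_a + wd_b * detail_b


rng = np.random.default_rng(0)
img_a, img_b = rng.random((64, 64)), rng.random((64, 64))
s_a = np.abs(ndimage.laplace(img_a))
s_b = np.abs(ndimage.laplace(img_b))
fused = fuse(img_a, img_b, (s_a >= s_b).astype(float), (s_b >= s_a).astype(float))
```

A useful sanity check on any such implementation is that fusing an image with itself returns that image, since the normalised weights then multiply identical layers.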

The major limitations of the GFF [30] scheme are summarized as follows.

The Gaussian filter in (2) is not suitable for Rician noise removal, so the algorithm has limited performance for noisy images. Hence, the filter in (2) needs to be modified to account for noise.

The weight maps $P_1$ and $P_2$ in (3) can be improved by defining more levels. The main issue with binary assignment (0 and 1) is that when the saliency values are approximately equal, the contribution of one image is totally discarded, which results in a degraded fused image.

3. Proposed Methodology

The proposed scheme follows the methodology of GFF [30] with the modifications needed to address the limitations listed above. This section first discusses the modification proposed to handle noise artifacts and then presents the improved weight maps.

3.1. Improved Saliency Maps

The acquired medical images are usually of low quality (due to artifacts), which degrades fusion performance (both in terms of human visualization and quantitative analysis).

Besides other artifacts, MR images often contain Rician noise (RN), which causes random fluctuations in the data and reduces image contrast [31]. RN is generated when the real and imaginary parts of the MR data are corrupted with zero-mean, equal-variance, uncorrelated Gaussian noise [32]. RN has a nonzero mean. Note that the noise distribution tends to a Rayleigh distribution in low intensity regions and to a Gaussian distribution in high intensity regions of the magnitude image [31, 32].
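The noise model described above is easy to simulate, which is useful when testing a fusion method on clean images. The sketch below is illustrative only; the function name and the value of `sigma` are assumptions.

```python
import numpy as np


def add_rician_noise(image, sigma, rng):
    """Magnitude of a complex signal whose real and imaginary parts carry
    independent zero-mean Gaussian noise with standard deviation sigma."""
    real = image + rng.normal(0.0, sigma, image.shape)
    imag = rng.normal(0.0, sigma, image.shape)
    return np.hypot(real, imag)


rng = np.random.default_rng(0)
sigma = 0.1

# Zero-signal region: the magnitude follows a Rayleigh distribution.
background = add_rician_noise(np.zeros((256, 256)), sigma, rng)
# High-signal region: the magnitude is close to Gaussian around the true value.
bright = add_rician_noise(np.ones((256, 256)), sigma, rng)

rayleigh_mean = sigma * np.sqrt(np.pi / 2.0)  # theoretical mean for zero signal
print(background.mean(), rayleigh_mean)
print(bright.mean())
```

The background mean is strictly positive even though the underlying signal is zero, which illustrates why RN cannot be treated as zero-mean additive noise.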

Let the image obtained using MR imaging contain Rician noise $N_R$. The CT image $B$ has higher spatial resolution and a negligible noise level [33, 34].

The source images are first decomposed into base and detail layers following (1); the CT layers $I_{12}$ and $I_{22}$ are as before, while the MR layers are computed from the noisy image:

| (6) |

Compared to (1), the base and detail layers of the MR image have an added noise term. A linear minimum mean square error (LMMSE) estimator is used instead of the Gaussian filter to minimize RN, consequently improving the fused image quality.

The saliency maps are thus computed by applying the LMMSE based filter $q$, following (2):

| (7) |

The main purpose of $q$ is to make the extra term $N_R \ast h$ as small as possible while enhancing the image details.
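The text does not spell out the form of the LMMSE filter or how it is combined with the Laplacian response, so the following is only a sketch of one widely used closed-form LMMSE estimator for Rician magnitude data, built from local second and fourth moments. The noise level `sigma` is assumed known, the window size is an illustrative choice, and `lmmse_rician` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage


def lmmse_rician(magnitude, sigma, size=7):
    """Closed-form LMMSE estimate of the noise-free amplitude from local moments.

    A2_hat = <M^2> - 2 sigma^2 + K (M^2 - <M^2>),
    K = 1 - 4 sigma^2 (<M^2> - sigma^2) / (<M^4> - <M^2>^2), clipped to [0, 1].
    """
    m2 = magnitude ** 2
    mean_m2 = ndimage.uniform_filter(m2, size=size, mode="reflect")
    mean_m4 = ndimage.uniform_filter(m2 ** 2, size=size, mode="reflect")
    var_m2 = np.maximum(mean_m4 - mean_m2 ** 2, 1e-12)  # guard against division by zero
    gain = 1.0 - 4.0 * sigma ** 2 * (mean_m2 - sigma ** 2) / var_m2
    gain = np.clip(gain, 0.0, 1.0)
    a2 = mean_m2 - 2.0 * sigma ** 2 + gain * (m2 - mean_m2)
    return np.sqrt(np.maximum(a2, 0.0))


# Toy check on a flat region of true amplitude 1 corrupted by Rician noise.
rng = np.random.default_rng(0)
truth = np.ones((128, 128))
sigma = 0.5
noisy = np.hypot(truth + rng.normal(0.0, sigma, truth.shape),
                 rng.normal(0.0, sigma, truth.shape))
estimate = lmmse_rician(noisy, sigma)


def rmse(x):
    return float(np.sqrt(np.mean((x - truth) ** 2)))


print(rmse(noisy), rmse(estimate))
```

In flat regions the gain tends to zero and the estimate reduces to a bias-corrected local average, while near edges the gain grows and more of the observed value is retained, which matches the stated goal of suppressing noise while keeping image details.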

3.2. Improved Weight Maps

The saliency maps are linked with the detail information in the image. The main issue with the 0 and 1 weight assignment in GFF [30] arises when different images have approximately equal saliency values. In such cases, one value is totally discarded. For noisy MR images, the saliency value at a pixel may be higher because of noise, in which case that pixel is assigned the value 1, which is not desirable. An appropriate solution is to define a range of values for weight map construction.

Let $\Delta_{AB} = S_1(m, n) - S_2(m, n)$ and $S_1(m, n) \ge S_2(m, n)$;

| (8) |

Let $\Delta_{BA} = S_2(m, n) - S_1(m, n)$ and $S_2(m, n) \ge S_1(m, n)$;

| (9) |

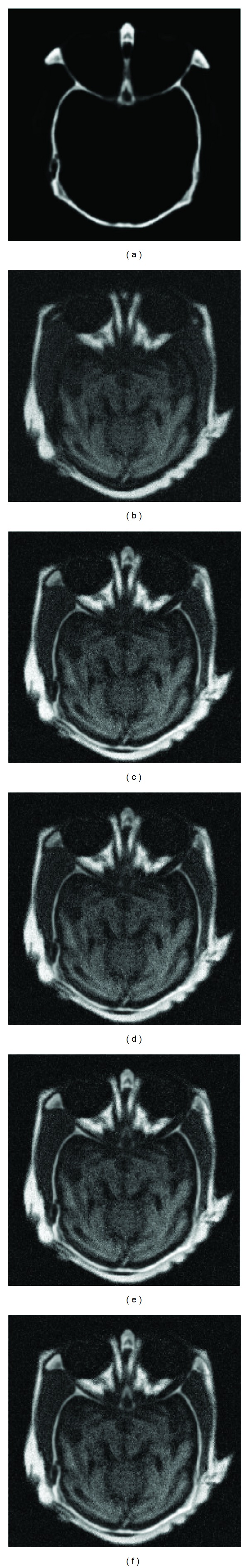

These values are selected empirically and may be further adjusted to improve results. Figures 1(a) and 1(b) show CT and noisy MR images, respectively. Figures 1(c)–1(f) show the results of applying different weights. The information in the upper portion of the fused image increases as more levels are added to the weight maps.

Figure 1.

Weight maps comparison: (a) CT image, (b) noisy MR image, (c) fused image with 3 weight maps, (d) fused image with 4 weight maps, (e) fused image with 5 weight maps, and (f) fused image with 6 weight maps.
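The idea of replacing the binary rule with several weight levels can be illustrated as below. The thresholds and level values are hypothetical placeholders, not the empirically selected values of (8) and (9), and the choice to make the two weights sum to one is likewise an assumption of this sketch.

```python
import numpy as np

# Hypothetical thresholds on the saliency difference and the weight given to
# the more salient image in each band; NOT the values used in (8) and (9).
THRESHOLDS = (0.05, 0.15, 0.30)
LEVELS = (0.5, 0.65, 0.8, 1.0)


def multilevel_weight_maps(s1, s2):
    """Assign graded weights from the saliency difference instead of 0/1."""
    delta = np.abs(s1 - s2)                  # Delta_AB or Delta_BA, whichever applies
    band = np.digitize(delta, THRESHOLDS)    # band index 0..3
    strong = np.asarray(LEVELS)[band]        # weight of the more salient image
    p1 = np.where(s1 >= s2, strong, 1.0 - strong)
    return p1, 1.0 - p1


s1 = np.array([1.0, 1.0, 1.0, 0.2])
s2 = np.array([1.0, 0.9, 0.1, 1.0])
p1, p2 = multilevel_weight_maps(s1, s2)
print(p1, p2)
```

With such a rule, nearly equal saliency values give both images a share of the weight, and only a clear saliency advantage yields the binary behaviour of GFF.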

The weight maps are passed through the guided filter to obtain the refined base and detail layer weights. Finally, the fused image is

| (10) |

The LMMSE based filter reduces the Rician noise, and the additional weight map levels ensure that more information is transferred to the fused image. Together, the LMMSE based filter and the range of weight map values make the proposed method suitable for noisy images.

4. Results and Analysis

The proposed method is tested on several pairs of source (MR and CT) images. For quantitative evaluation, the following measures are considered: the mutual information (MI) [35] measure $\zeta_{\text{MI}}$, the structural similarity (SSIM) [36] measure $\zeta_{\text{SSIM}}$, Xydeas and Petrović's [37] measure $\zeta_{\text{XP}}$, Zhao et al.'s [38] measure $\zeta_{\text{Z}}$, Piella and Heijmans's [39] measures $\zeta_{\text{P1}}$ and $\zeta_{\text{P2}}$, and the visual information fidelity fusion (VIFF) [40] metric $\zeta_{\text{VIFF}}$.

4.1. MI Measure

MI is a statistical measure of the degree of dependence between images. A larger MI value implies better fusion quality and vice versa [11, 33, 35]:

| (11) |

where $H_A$, $H_B$, and $H_F$ are the entropies of images $A$, $B$, and $F$, respectively. $P_{AF}$ is the jointly normalized histogram of $A$ and $F$, $P_{BF}$ is the jointly normalized histogram of $B$ and $F$, and $P_A$, $P_B$, and $P_F$ are the normalized histograms of $A$, $B$, and $F$, respectively.
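A histogram-based computation of an MI fusion measure can be sketched as follows. Since the display of (11) is not available here, the code assumes the entropy-normalised form associated with [35], $2\,[I(A,F)/(H_A + H_F) + I(B,F)/(H_B + H_F)]$; the bin count and function names are also assumptions.

```python
import numpy as np


def entropy(p):
    """Shannon entropy (bits) of a normalized histogram; empty bins are skipped."""
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def mutual_information(x, y, bins):
    """Return I(x; y), H(x), H(y) estimated from the jointly normalized histogram."""
    joint, _, _ = np.histogram2d(x.ravel(), y.ravel(), bins=bins)
    p_xy = joint / joint.sum()
    h_x, h_y = entropy(p_xy.sum(axis=1)), entropy(p_xy.sum(axis=0))
    return h_x + h_y - entropy(p_xy), h_x, h_y


def mi_fusion_metric(a, b, f, bins=16):
    """Entropy-normalised MI between each source and the fused image (assumed form)."""
    i_af, h_a, h_f = mutual_information(a, f, bins)
    i_bf, h_b, _ = mutual_information(b, f, bins)
    return 2.0 * (i_af / (h_a + h_f) + i_bf / (h_b + h_f))


rng = np.random.default_rng(0)
img = rng.random((64, 64))
other = rng.random((64, 64))
print(mi_fusion_metric(img, img, img))      # fused image identical to both sources
print(mi_fusion_metric(img, other, other))  # fused image identical to one source only
```

Under this normalisation the measure reaches its maximum of 2 when the fused image is identical to both sources and drops toward 0 as the fused image becomes statistically independent of them. Constant images (zero entropy) are not handled by this sketch.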

4.2. SSIM [36] Measure

The SSIM [36] measure is defined as

| (12) |

where $w$ is a sliding window and $\lambda(w)$ is

| (13) |

where $\sigma_{Aw}$ and $\sigma_{Bw}$ are the variances of images $A$ and $B$ within the window $w$, respectively.

4.3. Xydeas and Petrović's [37] Measure

Xydeas and Petrović [37] proposed a metric to evaluate the amount of edge information transferred from the input images to the fused image. It is calculated as

| (14) |

where $Q^{AF}$ and $Q^{BF}$ are the products of the edge strength and orientation preservation values at location $(m, n)$, respectively. The weights $\tau_A(m, n)$ and $\tau_B(m, n)$ reflect the importance of $Q^{AF}(m, n)$ and $Q^{BF}(m, n)$, respectively.
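Once the edge preservation maps and their weights are available, the pooling step is a normalised weighted sum. The sketch below assumes that form, as in [37], and covers only the pooling; computing the edge strength and orientation preservation maps themselves is not shown. The function name and the toy values are hypothetical.

```python
import numpy as np


def xp_pool(q_af, q_bf, tau_a, tau_b):
    """Weighted average of edge preservation values over all pixels."""
    numerator = np.sum(q_af * tau_a + q_bf * tau_b)
    denominator = np.sum(tau_a + tau_b)
    return float(numerator / denominator)


# Two-pixel toy example: edges of A are well preserved and weighted heavily.
q_af = np.array([[1.0, 0.5]])
q_bf = np.array([[0.0, 0.5]])
tau_a = np.array([[3.0, 1.0]])
tau_b = np.array([[1.0, 1.0]])
score = xp_pool(q_af, q_bf, tau_a, tau_b)
print(score)
```

Because the weights appear in both numerator and denominator, the score stays in $[0, 1]$ whenever the preservation values do, and pixels with strong source edges dominate the result.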

4.4. Zhao et al.'s [38] Metric

Zhao et al. [38] used phase congruency (which provides an absolute measure of image features) to define an evaluation metric. A larger value of the metric indicates a better fusion result. The metric $\zeta_{\text{Z}}$ is defined as the geometric product of the phase congruency and the maximum and minimum moments.

4.5. Piella and Heijmans's [39] Metric

Piella and Heijmans's [39] metrics $\zeta_{\text{P1}}$ and $\zeta_{\text{P2}}$ are defined as

| (15) |

where $Q_o(A, F \mid w)$ and $Q_o(B, F \mid w)$ are the local quality indexes calculated in a sliding window $w$ and $\lambda(w)$ is defined as in (13). Consider

| (16) |

where $\bar{A}$ is the mean of $A$, and $\sigma_A^2$ and $\sigma_{AF}$ are the variance of $A$ and the covariance of $A$ and $F$, respectively. Consider

| (17) |

where $\sigma_{Aw}$ and $\sigma_{Bw}$ are the variances of images $A$ and $B$ within the window $w$, respectively.
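A sliding-window quality index of this kind can be sketched with box filters. The code assumes that the local index is the universal image quality index (built from local means, variances, and covariance) and that the first metric is the $\lambda(w)$-weighted average over all windows; the window size, the small stabilising constant, and the function names are assumptions, and the second metric is not shown.

```python
import numpy as np
from scipy import ndimage


def local_stats(x, y, size):
    """Local means, variances, and covariance over size x size sliding windows."""
    def mean(z):
        return ndimage.uniform_filter(z, size=size, mode="reflect")

    mx, my = mean(x), mean(y)
    return mx, my, mean(x * x) - mx * mx, mean(y * y) - my * my, mean(x * y) - mx * my


def q0_map(x, y, size=8, tiny=1e-12):
    """Local quality index between x and y in every window."""
    mx, my, vx, vy, cxy = local_stats(x, y, size)
    return 4.0 * cxy * mx * my / ((vx + vy) * (mx ** 2 + my ** 2) + tiny)


def piella_q(a, b, f, size=8, tiny=1e-12):
    """Average of lambda(w) Q(A, F | w) + (1 - lambda(w)) Q(B, F | w) over windows."""
    _, _, var_a, var_b, _ = local_stats(a, b, size)
    lam = var_a / (var_a + var_b + tiny)  # local variance used as saliency
    return float(np.mean(lam * q0_map(a, f, size) + (1.0 - lam) * q0_map(b, f, size)))


rng = np.random.default_rng(0)
img_a, img_b = rng.random((64, 64)), rng.random((64, 64))
print(piella_q(img_a, img_a, img_a))                  # identical inputs
print(piella_q(img_a, img_b, 0.5 * (img_a + img_b)))  # simple average as fused image
```

The index equals 1 only when the fused image reproduces the sources exactly in every window; windows where one source has much higher variance are dominated by that source through $\lambda(w)$.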

4.6. VIFF [40] Metric

VIFF [40] is a multiresolution image fusion metric used to assess fusion performance objectively. It has four stages. (1) The source and fused images are filtered and divided into blocks. (2) Visual information is evaluated with and without distortion information in each block. (3) The VIFF of each subband is calculated. (4) The overall quality measure is determined by weighting the VIFF values of the different subbands.

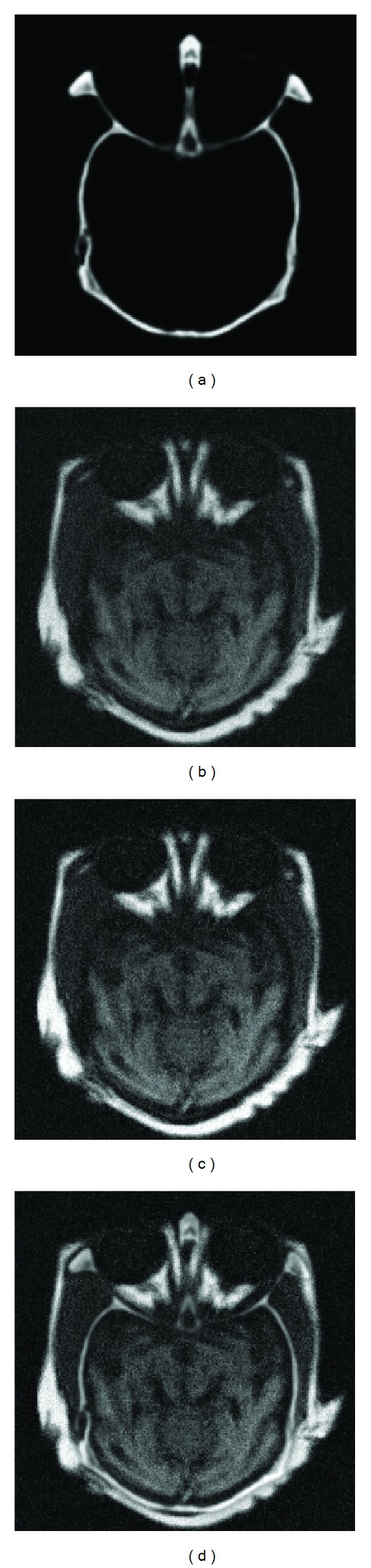

Figure 2 shows a pair of CT and MR images. The CT image (Figure 2(a)) provides clear bone information but no soft tissue information, whereas the MR image (Figure 2(b)) provides soft tissue information. The fused image must contain both bone and soft tissue information. The fused image obtained using the proposed scheme (Figure 2(d)) shows better results than the fused image obtained by GFF [30] (Figure 2(c)).

Figure 2.

(a) CT image, (b) noisy MR image, (c) GFF [30] fused image, and (d) proposed fused image.

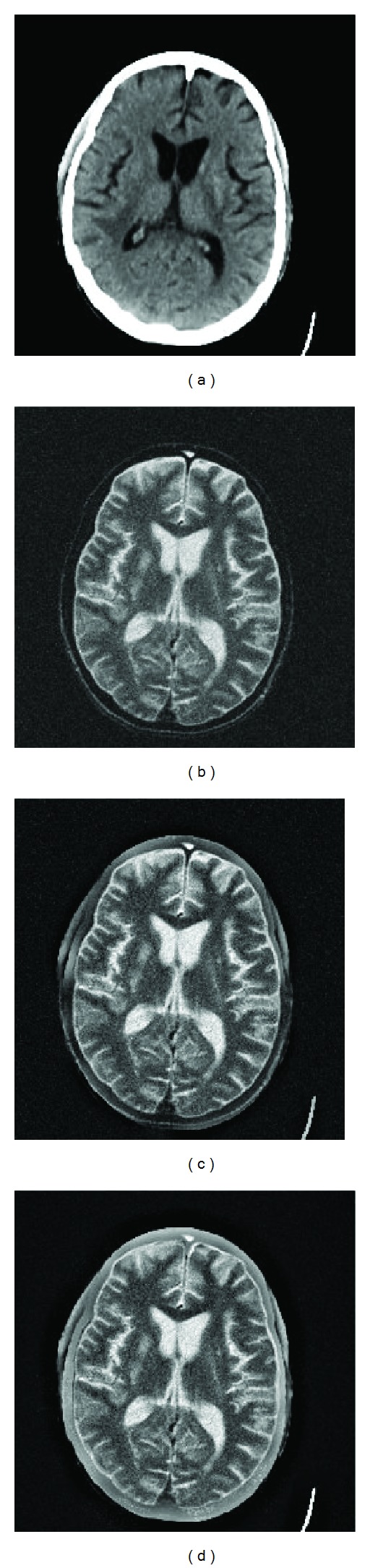

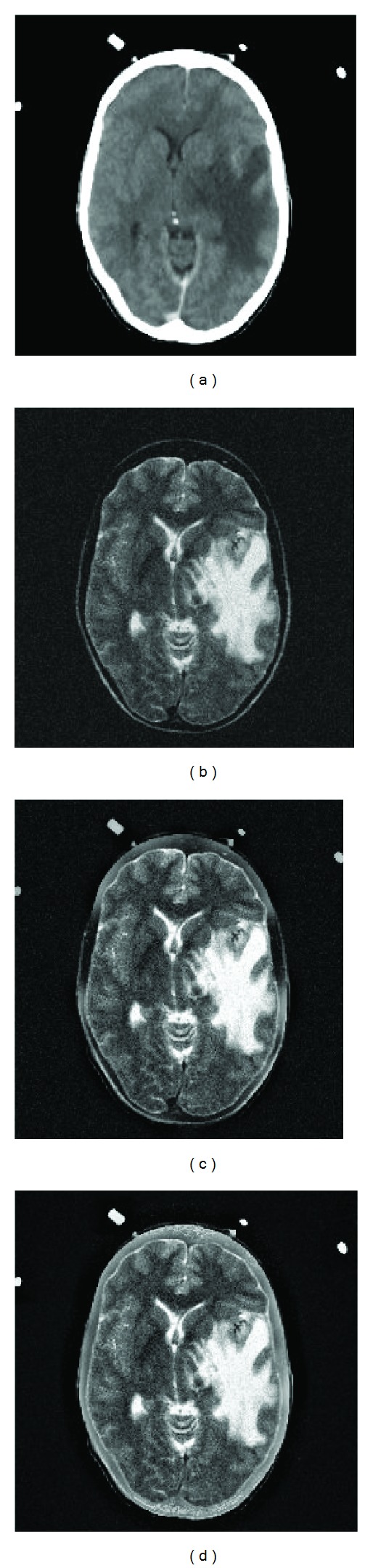

Figure 3 shows the images of a patient suffering from cerebral toxoplasmosis [41]. Clinical diagnosis requires more comprehensive information that combines both the CT and MR images. The improvement in the fused image obtained using the proposed scheme can be observed in Figure 3(d) compared to the image obtained by GFF [30] in Figure 3(c).

Figure 3.

(a) CT image, (b) noisy MR image, (c) GFF [30] fused image, and (d) proposed fused image.

Figure 4 shows a pair of CT and MR images of a woman suffering from hypertensive encephalopathy [41]. The improvement in the fused image obtained using the proposed scheme can be observed in Figure 4(d) compared to the image obtained by GFF [30] in Figure 4(c).

Figure 4.

(a) CT image, (b) noisy MR image, (c) GFF [30] fused image, and (d) proposed fused image.

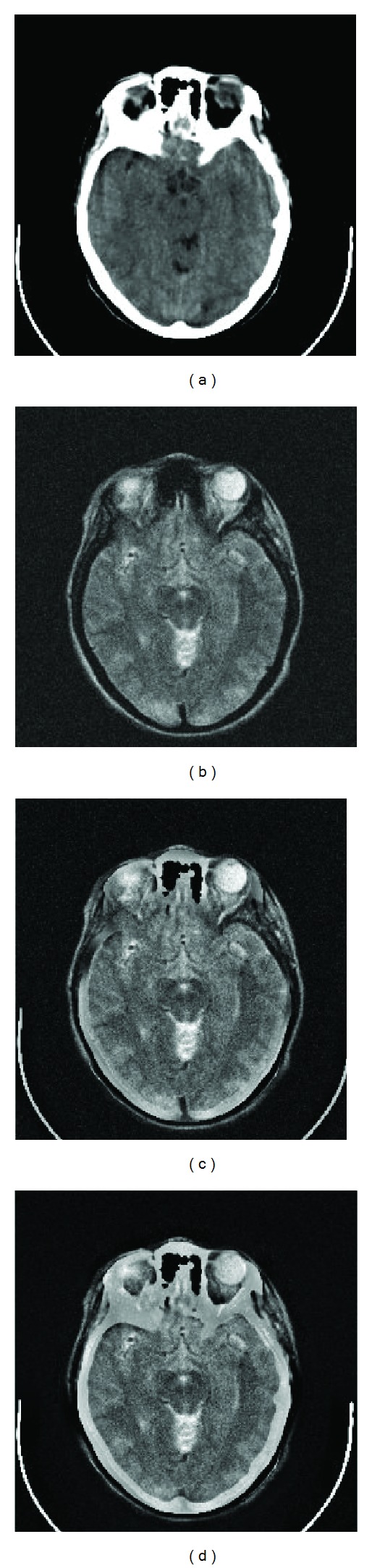

Figure 5 shows a pair of images of a patient with acute stroke [41]. The improvement in the quality of the fused image obtained using the proposed scheme can be observed in Figure 5(d) compared to Figure 5(c) (the image obtained by GFF [30]).

Figure 5.

(a) CT image, (b) noisy MR image, (c) GFF [30] fused image, and (d) proposed fused image.

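Table 1 shows that the proposed scheme provides better quantitative results than the GFF [30] scheme in terms of $\zeta_{\text{MI}}$, $\zeta_{\text{SSIM}}$, $\zeta_{\text{XP}}$, $\zeta_{\text{P1}}$, $\zeta_{\text{P2}}$, and $\zeta_{\text{VIFF}}$ in all four examples. For $\zeta_{\text{Z}}$, the proposed scheme is better in Examples 1 and 3, while GFF [30] scores higher in Examples 2 and 4.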

Table 1.

Quantitative analysis of GFF [30] and proposed schemes.

| Quantitative measure | Example 1: GFF [30] | Example 1: Proposed | Example 2: GFF [30] | Example 2: Proposed | Example 3: GFF [30] | Example 3: Proposed | Example 4: GFF [30] | Example 4: Proposed |
|---|---|---|---|---|---|---|---|---|
| ζ MI | 0.2958 | 0.2965 | 0.4803 | 0.5198 | 0.4164 | 0.4759 | 0.4994 | 0.5526 |
| ζ SSIM | 0.3288 | 0.3540 | 0.3474 | 0.3519 | 0.3130 | 0.3139 | 0.2920 | 0.2940 |
| ζ XP | 0.4034 | 0.5055 | 0.4638 | 0.4678 | 0.4473 | 0.4901 | 0.4498 | 0.4653 |
| ζ Z | 0.1600 | 0.1617 | 0.3489 | 0.3091 | 0.2061 | 0.2193 | 0.3002 | 0.2855 |
| ζ P1 | 0.4139 | 0.4864 | 0.2730 | 0.3431 | 0.2643 | 0.3247 | 0.2729 | 0.3339 |
| ζ P2 | 0.4539 | 0.7469 | 0.5188 | 0.6387 | 0.6098 | 0.7453 | 0.5268 | 0.6717 |
| ζ VIFF | 0.2561 | 0.3985 | 0.1553 | 0.2968 | 0.1852 | 0.3009 | 0.1842 | 0.3487 |
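To make the comparison in Table 1 easier to read, the relative change of each measure can be computed directly from the tabulated values. The snippet below only restates the numbers of Table 1; the dictionary layout and function name are choices made for this illustration.

```python
# (GFF, proposed) pairs for Examples 1 to 4, copied from Table 1.
TABLE_1 = {
    "MI":   [(0.2958, 0.2965), (0.4803, 0.5198), (0.4164, 0.4759), (0.4994, 0.5526)],
    "SSIM": [(0.3288, 0.3540), (0.3474, 0.3519), (0.3130, 0.3139), (0.2920, 0.2940)],
    "XP":   [(0.4034, 0.5055), (0.4638, 0.4678), (0.4473, 0.4901), (0.4498, 0.4653)],
    "Z":    [(0.1600, 0.1617), (0.3489, 0.3091), (0.2061, 0.2193), (0.3002, 0.2855)],
    "P1":   [(0.4139, 0.4864), (0.2730, 0.3431), (0.2643, 0.3247), (0.2729, 0.3339)],
    "P2":   [(0.4539, 0.7469), (0.5188, 0.6387), (0.6098, 0.7453), (0.5268, 0.6717)],
    "VIFF": [(0.2561, 0.3985), (0.1553, 0.2968), (0.1852, 0.3009), (0.1842, 0.3487)],
}


def relative_gain(gff, proposed):
    """Percentage change of the proposed scheme relative to GFF."""
    return 100.0 * (proposed - gff) / gff


gains = {m: [relative_gain(g, p) for g, p in rows] for m, rows in TABLE_1.items()}
lower = [(m, i + 1) for m, vals in gains.items() for i, v in enumerate(vals) if v < 0]

for measure, vals in gains.items():
    print(f"{measure:>4}: " + "  ".join(f"{v:+6.1f}%" for v in vals))
print("entries where GFF scores higher (measure, example):", lower)
```

All measures here are of the larger-is-better kind, so a positive percentage indicates an improvement over GFF [30].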

5. Conclusions

An improved guided image fusion scheme for MR and CT imaging is proposed. Modifications to the filter (LMMSE based) and to the weight maps (with more than two levels) are proposed to overcome the limitations of the GFF scheme. Simulation results based on visual and quantitative analysis show the improvement offered by the proposed scheme.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1. Yang Y, Park DS, Huang S, Rao N. Medical image fusion via an effective wavelet-based approach. Eurasip Journal on Advances in Signal Processing. 2010;2010:579341, 13 pages.

- 2. Maes F, Vandermeulen D, Suetens P. Medical image registration using mutual information. Proceedings of the IEEE. 2003;91(10):1699–1721.

- 3. Das S, Chowdhury M, Kundu MK. Medical image fusion based on ripplet transform type-I. Progress In Electromagnetics Research B. 2011;(30):355–370.

- 4. Daneshvar S, Ghassemian H. MRI and PET image fusion by combining IHS and retina-inspired models. Information Fusion. 2010;11(2):114–123.

- 5. Barra V, Boire J-Y. A general framework for the fusion of anatomical and functional medical images. NeuroImage. 2001;13(3):410–424. doi: 10.1006/nimg.2000.0707.

- 6. Polo A, Cattani F, Vavassori A, et al. MR and CT image fusion for postimplant analysis in permanent prostate seed implants. International Journal of Radiation Oncology Biology Physics. 2004;60(5):1572–1579. doi: 10.1016/j.ijrobp.2004.08.033.

- 7. Grosu AL, Weber WA, Franz M, et al. Reirradiation of recurrent high-grade gliomas using amino acid PET (SPECT)/CT/MRI image fusion to determine gross tumor volume for stereotactic fractionated radiotherapy. International Journal of Radiation Oncology Biology Physics. 2005;63(2):511–519. doi: 10.1016/j.ijrobp.2005.01.056.

- 8. Piella G. A general framework for multiresolution image fusion: from pixels to regions. Information Fusion. 2003;4(4):259–280.

- 9. Mitianoudis N, Stathaki T. Pixel-based and region-based image fusion schemes using ICA bases. Information Fusion. 2007;8(2):131–142.

- 10. Jameel A, Ghafoor A, Riaz MM. Entropy dependent compressive sensing based image fusion. Intelligent Signal Processing and Communication Systems; November 2013.

- 11. Yang B, Li S. Pixel-level image fusion with simultaneous orthogonal matching pursuit. Information Fusion. 2012;13(1):10–19.

- 12. Ranchin T, Wald L. Fusion of high spatial and spectral resolution images: the ARSIS concept and its implementation. Photogrammetric Engineering and Remote Sensing. 2000;66(1):49–61.

- 13. Al-Wassai F, Kalyankar N, Al-Zaky A. Multisensor images fusion based on feature-level. International Journal of Latest Technology in Engineering, Management and Applied Science. 2012;1(5):124–138.

- 14. Ding M, Wei L, Wang B. Research on fusion method for infrared and visible images via compressive sensing. Infrared Physics and Technology. 2013;57:56–67.

- 15. Mitchell HB. Image Fusion Theories, Techniques and Applications. Springer; 2010.

- 16. Sharma V, Davis W. Feature-level fusion for object segmentation using mutual information. In: Augmented Vision Perception in Infrared. 2008. pp. 295–319.

- 17. Hall DL, Llinas J. An introduction to multisensor data fusion. Proceedings of the IEEE. 1997;85(1):6–23.

- 18. Ardeshir Goshtasby A, Nikolov S. Image fusion: advances in the state of the art. Information Fusion. 2007;8(2):114–118.

- 19. Burt PJ, Adelson EH. The Laplacian pyramid as a compact image code. IEEE Transactions on Communications. 1983;31(4):532–540.

- 20. Toet A. A morphological pyramidal image decomposition. Pattern Recognition Letters. 1989;9(4):255–261.

- 21. Petrović VS, Xydeas CS. Gradient-based multiresolution image fusion. IEEE Transactions on Image Processing. 2004;13(2):228–237. doi: 10.1109/tip.2004.823821.

- 22. Chitade AZ, Katiyar SK. Multiresolution and multispectral data fusion using discrete wavelet transform with IRS images: cartosat-1, IRS LISS III and LISS IV. Journal of the Indian Society of Remote Sensing. 2012;40(1):121–128.

- 23. Li S, Kwok JT, Wang Y. Using the discrete wavelet frame transform to merge Landsat TM and SPOT panchromatic images. Information Fusion. 2002;3(1):17–23.

- 24. Singh R, Vatsa M, Noore A. Multimodal medical image fusion using redundant discrete wavelet transform. Proceedings of the 7th International Conference on Advances in Pattern Recognition (ICAPR '09); February 2009; pp. 232–235.

- 25. Chen G, Gao Y. Multisource image fusion based on double density dualtree complex wavelet transform. Proceedings of the International Conference on Fuzzy Systems and Knowledge Discovery; 2012; pp. 1864–1868.

- 26. Ali F, El-Dokany I, Saad A, Abd El-Samie F. A curvelet transform approach for the fusion of MR and CT images. Journal of Modern Optics. 2010;57(4):273–286.

- 27. Wang L, Li B, Tian L-F. Multi-modal medical image fusion using the inter-scale and intra-scale dependencies between image shift-invariant shearlet coefficients. Information Fusion. 2012.

- 28. Yang L, Guo BL, Ni W. Multimodality medical image fusion based on multiscale geometric analysis of contourlet transform. Neurocomputing. 2008;72(1–3):203–211.

- 29. Li T, Wang Y. Biological image fusion using a NSCT based variable-weight method. Information Fusion. 2011;12(2):85–92.

- 30. Li S, Kang X, Hu J. Image fusion with guided filtering. IEEE Transactions on Image Processing. 2013;22(7). doi: 10.1109/TIP.2013.2244222.

- 31. Nowak RD. Wavelet-based Rician noise removal for magnetic resonance imaging. IEEE Transactions on Image Processing. 1999;8(10):1408–1419. doi: 10.1109/83.791966.

- 32. Anand CS, Sahambi JS. Wavelet domain non-linear filtering for MRI denoising. Magnetic Resonance Imaging. 2010;28(6):842–861. doi: 10.1016/j.mri.2010.03.013.

- 33. Nakamoto Y, Osman M, Cohade C, et al. PET/CT: comparison of quantitative tracer uptake between germanium and CT transmission attenuation-corrected images. Journal of Nuclear Medicine. 2002;43(9):1137–1143.

- 34. Bowsher JE, Yuan H, Hedlund LW, et al. Utilizing MRI information to estimate F18-FDG distributions in rat flank tumors. Proceedings of the IEEE Nuclear Science Symposium Conference Record; October 2004; pp. 2488–2492.

- 35. Hossny M, Nahavandi S, Creighton D. Comments on 'Information measure for performance of image fusion'. Electronics Letters. 2008;44(18):1066–1067.

- 36. Yang C, Zhang J-Q, Wang X-R, Liu X. A novel similarity based quality metric for image fusion. Information Fusion. 2008;9(2):156–160.

- 37. Xydeas CS, Petrović V. Objective image fusion performance measure. Electronics Letters. 2000;36(4):308–309.

- 38. Zhao J, Laganière R, Liu Z. Performance assessment of combinative pixel-level image fusion based on an absolute feature measurement. International Journal of Innovative Computing, Information and Control. 2007;3(6):1433–1447.

- 39. Piella G, Heijmans H. A new quality metric for image fusion. Proceedings of the International Conference on Image Processing (ICIP '03); September 2003; pp. 173–176.

- 40. Han Y, Cai Y, Cao Y, Xu X. A new image fusion performance metric based on visual information fidelity. Information Fusion. 2013;14(2):127–135.

- 41. Harvard Image Database. https://www.med.harvard.edu/