Abstract

This paper derives new algorithms for signomial programming, a generalization of geometric programming. The algorithms are based on a generic principle for optimization called the MM algorithm. In this setting, one can apply the geometric-arithmetic mean inequality and a supporting hyperplane inequality to create a surrogate function with parameters separated. Thus, unconstrained signomial programming reduces to a sequence of one-dimensional minimization problems. Simple examples demonstrate that the MM algorithm derived can converge to a boundary point or to one point of a continuum of minimum points. Conditions under which the minimum point is unique or occurs in the interior of parameter space are proved for geometric programming. Convergence to an interior point occurs at a linear rate. Finally, the MM framework easily accommodates equality and inequality constraints of signomial type. For the most important special case, constrained quadratic programming, the MM algorithm involves very simple updates.

Keywords: arithmetic-geometric mean inequality, geometric programming, global convergence, MM algorithm, linearly constrained quadratic programming, parameter separation, penalty method, signomial programming

1 Introduction

As a branch of convex optimization theory, geometric programming is next in line to linear and quadratic programming in importance [4,5,15,16]. It has applications in chemical equilibrium problems [14], structural mechanics [5], integrated circuit design [7], maximum likelihood estimation [12], stochastic processes [6], and a host of other subjects [5]. Geometric programming deals with posynomials, which are functions of the form

$$f(x) = \sum_{\alpha \in S} c_\alpha \prod_{i=1}^n x_i^{\alpha_i}. \tag{1}$$

Here the index set S ⊂ ℝn is finite, and all coefficients cα and all components x1, …, xn of the argument x of f(x) are positive. The possibly fractional powers αi corresponding to a particular α may be positive, negative, or zero. For instance, is a posynomial on ℝ2. In geometric programming we minimize a posynomial f(x) subject to posynomial inequality constraints of the form uj(x) ≤ 1 for 1 ≤ j ≤ q, where the uj(x) are again posynomials. In some versions of geometric programming, equality constraints of posynomial type are permitted [3].

A signomial function has the same form as the posynomial (1), but the coefficients cα are allowed to be negative. A signomial program is a generalization of a geometric program, where the objective and constraint functions can be signomials. From a computational point of view, signomial programming problems are significantly harder to solve than geometric programming problems. After suitable change of variables, a geometric program can be transformed into a convex optimization problem and globally solved by standard methods. In contrast, signomials may have many local minima. Wang et al. [20] recently derived a path algorithm for solving unconstrained signomial programs.

The theory and practice of geometric programming have been stable for a generation, so it is hard to imagine saying anything novel about either. The attractions of geometric programming include its beautiful duality theory and its connections with the arithmetic-geometric mean inequality. The present paper derives new algorithms for both geometric and signomial programming based on a generic device for iterative optimization called the MM algorithm [9,11]. The MM perspective possesses several advantages. First, it provides a unified framework for solving both geometric and signomial programs. Second, the algorithms derived here operate by separating parameters and reducing minimization of the objective function to a sequence of one-dimensional minimization problems. Separation of parameters is apt to be an advantage in high-dimensional problems. Another advantage is ease of implementation compared to competing methods of unconstrained geometric and signomial programming [20]. Finally, straightforward generalizations of our MM algorithms extend beyond signomial programming.

We conclude this introduction by sketching a roadmap to the rest of the paper. Section 2 reviews the MM algorithm. Section 3 derives an MM algorithm for unconstrained signomial programming from two simple inequalities. The behavior of the MM algorithm is illustrated on a few numerical examples in Section 4. Section 5 extends the MM algorithm for unconstrained problems to the constrained case using the penalty method. Section 6 specializes to linearly constrained quadratic programming on the positive orthant. Convergence results are discussed in Section 7.

2 Background on the MM Algorithm

The MM principle involves majorizing the objective function f(x) by a surrogate function g(x | xm) around the current iterate xm (with ith component xmi) of a search. Majorization is defined by the two conditions

$$g(x_m \mid x_m) = f(x_m), \qquad g(x \mid x_m) \ge f(x), \quad x \ne x_m. \tag{2}$$

In other words, the surface x ↦ g(x | xm) lies above the surface x ↦ f(x) and is tangent to it at the point x = xm. Construction of the majorizing function g(x | xm) constitutes the first M of the MM algorithm.

The second M of the algorithm minimizes the surrogate g(x | xm) rather than f(x). If xm+1 denotes the minimizer of g(x | xm), then this action forces the descent property f(xm+1) ≤ f(xm). This fact follows from the inequalities

$$f(x_{m+1}) \le g(x_{m+1} \mid x_m) \le g(x_m \mid x_m) = f(x_m),$$

reflecting the definition of xm+1 and the tangency conditions (2). The descent property makes the MM algorithm remarkably stable. Strictly speaking, the validity of the descent property depends only on decreasing g(x | xm), not on minimizing g(x | xm).

3 Unconstrained Signomial Programming

The art in devising an MM algorithm revolves around intelligent choice of the majorizing function. For signomial programming problems, fortunately one can invoke two simple inequalities. For terms with positive coefficients cα, we use the arithmetic-geometric mean inequality

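$$\prod_{i=1}^n z_i^{\alpha_i} \le \sum_{i=1}^n \frac{\alpha_i}{\|\alpha\|_1} z_i^{\|\alpha\|_1} \tag{3}$$

for nonnegative numbers zi and αi and ℓ1 norm $\|\alpha\|_1 = \sum_{i=1}^n |\alpha_i|$ [19]. If we make the choice zi = xi/xmi in inequality (3), then the majorization

$$\prod_{i=1}^n x_i^{\alpha_i} \le \Big(\prod_{i=1}^n x_{mi}^{\alpha_i}\Big) \sum_{i=1}^n \frac{\alpha_i}{\|\alpha\|_1} \Big(\frac{x_i}{x_{mi}}\Big)^{\|\alpha\|_1} \tag{4}$$

emerges, with equality when x = xm. We can broaden the scope of the majorization (4) to cases with αi < 0 by replacing zi by the reciprocal ratio xmi/xi whenever αi < 0. Thus, for terms with cα > 0, we have the majorization

$$c_\alpha \prod_{i=1}^n x_i^{\alpha_i} \le c_\alpha \Big(\prod_{j=1}^n x_{mj}^{\alpha_j}\Big) \sum_{i=1}^n \frac{|\alpha_i|}{\|\alpha\|_1} \Big(\frac{x_i}{x_{mi}}\Big)^{\|\alpha\|_1 \operatorname{sgn}(\alpha_i)},$$

where sgn(αi) is the sign function.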
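The majorization for a single monomial with positive coefficient can be checked numerically. The following is a minimal sketch, assuming the reciprocal-ratio form described above; the function names `monomial` and `monomial_majorizer` are hypothetical and chosen only for illustration.

```python
import math


def monomial(x, alpha):
    """Evaluate prod_i x_i**alpha_i for a positive vector x."""
    return math.prod(xi ** ai for xi, ai in zip(x, alpha))


def monomial_majorizer(x, xm, alpha):
    """Separable upper bound on monomial(x, alpha) anchored at xm.

    Each ratio x_i / xm_i is raised to the power ||alpha||_1 * sgn(alpha_i),
    so a negative exponent is handled through the reciprocal ratio.
    """
    norm1 = sum(abs(a) for a in alpha)
    if norm1 == 0:
        return 1.0  # the monomial is the constant 1
    total = 0.0
    for xi, xmi, ai in zip(x, xm, alpha):
        if ai != 0:
            sign = 1.0 if ai > 0 else -1.0
            total += abs(ai) / norm1 * (xi / xmi) ** (norm1 * sign)
    return monomial(xm, alpha) * total
```

Evaluating both functions at `x == xm` should give the same value, and the majorizer should dominate the monomial at every other positive point. Because the bound is a sum of univariate functions, each coordinate can be treated separately.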

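The terms with cα < 0 are handled by a different majorization. Our point of departure is the supporting hyperplane minorization

$$z \ge 1 + \ln z$$

at the point z = 1. If we let $z = \prod_{i=1}^n (x_i/x_{mi})^{\alpha_i}$, then it follows that

$$\prod_{i=1}^n x_i^{\alpha_i} \ge \Big(\prod_{i=1}^n x_{mi}^{\alpha_i}\Big) \Big(1 + \sum_{i=1}^n \alpha_i \ln x_i - \sum_{i=1}^n \alpha_i \ln x_{mi}\Big) \tag{5}$$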

is a valid minorization in x around the point xm. Multiplication by the negative coefficient cα now gives the desired majorization. The surrogate function separates parameters and is convex when all of the αi are positive.

In summary, the objective function (1) is majorized up to an irrelevant additive constant by the sum

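$$g(x \mid x_m) = \sum_{i=1}^n g_i(x_i \mid x_m), \qquad g_i(x_i \mid x_m) = \sum_{\alpha \in S_+} c_\alpha \Big(\prod_{j=1}^n x_{mj}^{\alpha_j}\Big) \frac{|\alpha_i|}{\|\alpha\|_1} \Big(\frac{x_i}{x_{mi}}\Big)^{\|\alpha\|_1 \operatorname{sgn}(\alpha_i)} + \sum_{\alpha \in S_-} c_\alpha \Big(\prod_{j=1}^n x_{mj}^{\alpha_j}\Big) \alpha_i \ln x_i, \tag{6}$$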

where S+ = {α : cα > 0}, and S− = {α : cα < 0}. To guarantee that the next iterate is well defined and occurs on the interior of the parameter domain, it is helpful to assume for each i that at least one α ∈ S+ has αi positive and at least one α ∈ S+ has αi negative. Under these conditions each gi(xi | xm) is coercive and attains its minimum on the open interval (0, ∞).

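Minimization of the majorizing function is straightforward because the surrogate functions gi(xi | xm) are univariate functions. The derivative of gi(xi | xm) with respect to its left argument equals

$$g_i'(x_i \mid x_m) = \sum_{\alpha \in S_+} c_\alpha \Big(\prod_{j=1}^n x_{mj}^{\alpha_j}\Big) |\alpha_i| \operatorname{sgn}(\alpha_i)\, x_{mi}^{-1} \Big(\frac{x_i}{x_{mi}}\Big)^{\|\alpha\|_1 \operatorname{sgn}(\alpha_i) - 1} + \sum_{\alpha \in S_-} c_\alpha \Big(\prod_{j=1}^n x_{mj}^{\alpha_j}\Big) \alpha_i x_i^{-1}.$$

Assuming that the exponents αi are integers, this is a rational function of xi, and once we equate it to 0, we are faced with solving a polynomial equation. This task can be accomplished by bisection or by Newton’s method. In practice, just a few steps of either algorithm suffice since the MM principle merely requires decreasing the surrogate functions gi(xi | xm).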
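One MM step with univariate root finding can be sketched as follows. This is an illustrative implementation under stated assumptions, not the authors' code: each term is a hypothetical `(c, alpha)` pair, the derivative of each univariate surrogate is assumed to change sign from negative to positive on the bracket `[lo, hi]` (as in the coercive case described above), and bisection is carried out on a logarithmic scale.

```python
import math


def surrogate_derivative(xi, i, xm, terms):
    """Derivative of the i-th univariate surrogate at xi, anchored at xm."""
    total = 0.0
    for c, alpha in terms:
        a = alpha[i]
        if a == 0:
            continue
        # c_alpha times the monomial evaluated at the current iterate
        weight = c * math.prod(v ** p for v, p in zip(xm, alpha))
        if c > 0:
            norm1 = sum(abs(p) for p in alpha)
            sign = 1.0 if a > 0 else -1.0
            total += weight * a / xm[i] * (xi / xm[i]) ** (norm1 * sign - 1.0)
        else:
            total += weight * a / xi  # from the logarithmic surrogate term
    return total


def mm_step(xm, terms, lo=1e-8, hi=1e8, iters=200):
    """Minimize every univariate surrogate by bisection on log(x_i)."""
    new_x = []
    for i in range(len(xm)):
        a, b = math.log(lo), math.log(hi)
        for _ in range(iters):
            mid = 0.5 * (a + b)
            if surrogate_derivative(math.exp(mid), i, xm, terms) > 0:
                b = mid  # the root lies to the left
            else:
                a = mid  # the root lies to the right
        new_x.append(math.exp(0.5 * (a + b)))
    return new_x
```

Repeated calls such as `x = mm_step(x, terms)` generate the MM iterates. Since only a decrease of each surrogate is required, the inner bisection could be truncated after a few steps; the full bisection here is simply the easiest variant to verify.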

In a geometric program, the function $g_i'(x_i \mid x_m)$ has a single root on the interval (0, ∞). For a proof of this fact, note that making the standard change of variables $x_i = e^{y_i}$ eliminates the positivity constraint xi > 0 and renders the transformed function hi(yi | xm) = gi(xi | xm) strictly convex. Because $|\alpha_i| \operatorname{sgn}(\alpha_i)^2 = |\alpha_i|$, the second derivative

$$h_i''(y_i \mid x_m) = \sum_{\alpha \in S_+} c_\alpha \Big(\prod_{j=1}^n x_{mj}^{\alpha_j}\Big) |\alpha_i| \, \|\alpha\|_1 \Big(\frac{e^{y_i}}{x_{mi}}\Big)^{\|\alpha\|_1 \operatorname{sgn}(\alpha_i)}$$

is positive. Hence, hi(yi | xm) is strictly convex and possesses a unique minimum point. These arguments yield the even sweeter dividend that the MM iteration map is continuously differentiable. From the vantage point of the implicit function theorem [8], the stationary condition $h_i'(y_{m+1,i} \mid x_m) = 0$ determines ym+1,i, and consequently xm+1,i, in terms of xm. Observe here that $h_i''(y_{m+1,i} \mid x_m) \ne 0$ as required by the implicit function theorem.

It is also worth pointing out that even more functions can be brought under the umbrella of signomial programming. For instance, majorization of the two related functions − ln f(x) and ln f(x) is possible for any posynomial $f(x) = \sum_\alpha c_\alpha \prod_{i=1}^n x_i^{\alpha_i}$. In the first case,

$$-\ln f(x) \le -\sum_{\alpha} \frac{a_{m\alpha}}{b_m} \ln\Big(\frac{b_m}{a_{m\alpha}}\, c_\alpha \prod_{i=1}^n x_i^{\alpha_i}\Big) \tag{7}$$

holds for $a_{m\alpha} = c_\alpha \prod_{i=1}^n x_{mi}^{\alpha_i}$ and $b_m = \sum_\alpha a_{m\alpha}$ because Jensen’s inequality applies to the convex function − ln t. In the second case, the supporting hyperplane inequality applied to the convex function − ln t implies

$$\ln f(x) \le \ln f(x_m) + \frac{1}{f(x_m)}\,[f(x) - f(x_m)].$$

This puts us back in the position of needing to majorize a posynomial, a problem we have already discussed in detail. By our previous remarks, the coefficients cα can be negative as well as positive in this case. Similar majorizations apply to any composition ϕ ◦ f(x) of a posynomial f(x) with an arbitrary concave function ϕ(y).

4 Examples of Unconstrained Minimization

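Our first examples demonstrate the robustness of the MM algorithms in minimization and illustrate some of the complications that occur. In each case we can explicitly calculate the MM updates. To start, consider the posynomial

$$f_1(x) = \frac{1}{x_1^3} + \frac{3}{x_1 x_2^2} + x_1 x_2$$

with the implied constraints x1 > 0 and x2 > 0. The majorization (4) applied to the third term of f1(x) yields

$$x_1 x_2 \le x_{m1} x_{m2} \Big[\frac{1}{2}\Big(\frac{x_1}{x_{m1}}\Big)^2 + \frac{1}{2}\Big(\frac{x_2}{x_{m2}}\Big)^2\Big] = \frac{x_{m2}}{2 x_{m1}} x_1^2 + \frac{x_{m1}}{2 x_{m2}} x_2^2.$$

Applied to the second term of f1(x) using the reciprocal ratios, it gives

$$\frac{3}{x_1 x_2^2} \le \frac{3}{x_{m1} x_{m2}^2} \Big[\frac{1}{3}\Big(\frac{x_{m1}}{x_1}\Big)^3 + \frac{2}{3}\Big(\frac{x_{m2}}{x_2}\Big)^3\Big] = \frac{x_{m1}^2}{x_{m2}^2 x_1^3} + \frac{2 x_{m2}}{x_{m1} x_2^3}.$$

The sum g(x | xm) of the two surrogate functions

$$g_1(x_1 \mid x_m) = \frac{1}{x_1^3} + \frac{x_{m1}^2}{x_{m2}^2 x_1^3} + \frac{x_{m2}}{2 x_{m1}} x_1^2, \qquad g_2(x_2 \mid x_m) = \frac{2 x_{m2}}{x_{m1} x_2^3} + \frac{x_{m1}}{2 x_{m2}} x_2^2$$

majorizes f1(x). If we set the derivatives

$$g_1'(x_1 \mid x_m) = -\frac{3}{x_1^4} - \frac{3 x_{m1}^2}{x_{m2}^2 x_1^4} + \frac{x_{m2}}{x_{m1}} x_1, \qquad g_2'(x_2 \mid x_m) = -\frac{6 x_{m2}}{x_{m1} x_2^4} + \frac{x_{m1}}{x_{m2}} x_2$$

of each of these equal to 0, then the updates

$$x_{m+1,1} = \Big[3\Big(\frac{x_{m1}^3}{x_{m2}^3} + \frac{x_{m1}}{x_{m2}}\Big)\Big]^{1/5}, \qquad x_{m+1,2} = \Big(\frac{6 x_{m2}^2}{x_{m1}^2}\Big)^{1/5}$$

solve the minimization step of the MM algorithm. It is also obvious that the point $x = (6^{1/5}, 6^{1/5})^t$ is a fixed point of the updates, and the reader can check that it minimizes f1(x).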
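The closed-form iteration for the first example is easy to run. The sketch below assumes that f1(x) = x1^(-3) + 3 x1^(-1) x2^(-2) + x1 x2, a form consistent with the power vectors quoted for f1(x) in Section 7 and with the minimum reported in Table 1; the update formulas follow from setting the derivatives of the two univariate surrogates to zero under that assumption.

```python
def f1(x1, x2):
    """Assumed form of the first test posynomial."""
    return x1 ** -3 + 3.0 / (x1 * x2 ** 2) + x1 * x2


def mm_update_f1(x1, x2):
    """One MM update: each new coordinate solves a fifth-degree equation."""
    r = x1 / x2
    new_x1 = (3.0 * (r ** 3 + r)) ** 0.2
    new_x2 = (6.0 / r ** 2) ** 0.2
    return new_x1, new_x2


def run_mm_f1(x1, x2, n_iter=200):
    """Iterate the update and record the objective values along the way."""
    values = [f1(x1, x2)]
    for _ in range(n_iter):
        x1, x2 = mm_update_f1(x1, x2)
        values.append(f1(x1, x2))
    return (x1, x2), values
```

Starting from the initial point (1, 2) used in Table 1, the recorded objective values should never increase, which is the descent property in action. Both updates depend on the current iterate only through the ratio x1/x2.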

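It is instructive to consider the slight variations

$$f_2(x) = \frac{1}{x_1 x_2^2} + x_1 x_2^2, \qquad f_3(x) = \frac{1}{x_1 x_2^2} + x_1 x_2$$

of this objective function. In the first case, the reader can check that the MM algorithm iterates according to

$$x_{m+1,1} = \Big(\frac{x_{m1}}{x_{m2}}\Big)^{2/3}, \qquad x_{m+1,2} = \Big(\frac{x_{m2}}{x_{m1}}\Big)^{1/3}.$$

In the second case, it iterates according to

$$x_{m+1,1} = \Big(\frac{x_{m1}}{x_{m2}}\Big)^{3/5}, \qquad x_{m+1,2} = 2^{1/5} \Big(\frac{x_{m2}}{x_{m1}}\Big)^{2/5}.$$

The objective function f2(x) attains its minimum value whenever $x_1 x_2^2 = 1$. The MM algorithm for f2(x) converges after a single iteration to the value 2, but the converged point depends on the initial point x0. The infimum of f3(x) is 0. This value is attained asymptotically by the MM algorithm, which satisfies the identities $x_{m1} x_{m2}^{3/2} = 2^{3/10}$ and $x_{m+1,2} = 2^{2/25} x_{m2}$ for all m ≥ 1. These results imply that xm1 tends to 0 and xm2 to ∞ in such a manner that f3(xm) tends to 0. One could not hope for much better behavior of the MM algorithm in these two examples.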
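The contrasting behavior of the two variations can be reproduced in a few lines. This sketch assumes f2(x) = 1/(x1 x2^2) + x1 x2^2 and f3(x) = 1/(x1 x2^2) + x1 x2, forms that are consistent with the Table 1 entries and with the growth factor 2^(2/25) quoted above; the update formulas are the stationarity conditions of the separated surrogates under those assumptions.

```python
def f2(x1, x2):
    """Assumed form: minimized along the whole curve x1 * x2**2 == 1."""
    return 1.0 / (x1 * x2 ** 2) + x1 * x2 ** 2


def f3(x1, x2):
    """Assumed form: infimum 0 that is approached but never attained."""
    return 1.0 / (x1 * x2 ** 2) + x1 * x2


def mm_update_f2(x1, x2):
    """MM update for f2; it lands on the minimizing curve in one step."""
    return (x1 / x2) ** (2.0 / 3.0), (x2 / x1) ** (1.0 / 3.0)


def mm_update_f3(x1, x2):
    """MM update for f3; x1 drifts toward 0 while x2 grows without bound."""
    return (x1 / x2) ** 0.6, (2.0 * (x2 / x1) ** 2) ** 0.2
```

Calling `mm_update_f2(1.0, 2.0)` gives a point on the curve x1 x2^2 = 1, and a second call leaves that point unchanged. Iterating `mm_update_f3` shows the second coordinate growing by a constant factor per step after the first iteration.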

The function

is a signomial but not a posynomial. The surrogate function (6) reduces to

with all variables separated. The MM updates

converge in a single iteration to a solution of f4(x) = 0. Again the limit depends on the initial point.

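The function

$$f_5(x) = x_1 x_2 + x_1 x_3 + x_2 x_3 - \ln(x_1 + x_2 + x_3)$$

is more complicated than a signomial. It also is unbounded because the point $x = (m, 1/m, 1/m)^t$ satisfies f5(x) = 2 + m−2 − ln(m + 2/m). According to the majorization (7), an appropriate surrogate is

$$g(x \mid x_m) = \sum_{i=1}^3 \Big[\frac{s_m - x_{mi}}{2 x_{mi}}\, x_i^2 - \frac{x_{mi}}{s_m} \ln x_i\Big], \qquad s_m = x_{m1} + x_{m2} + x_{m3},$$

up to an irrelevant constant. The MM updates are

$$x_{m+1,i} = \frac{x_{mi}}{\sqrt{s_m (s_m - x_{mi})}}.$$

If the components of the initial point coincide, then the iterates converge in a single iteration to the saddle point with all components equal to $1/\sqrt{6}$. Otherwise, it appears that f5(xm) tends to −∞.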

The following objective functions

from the reference [20] are intended for numerical illustration. Table 1 lists initial conditions, minimum points, minimum values, and number of iterations until convergence under the MM algorithm. Convergence is declared when the relative change in the objective function is less than a pre-specified value ε, in other words, when

Optimization of the univariate surrogate functions easily succumbs to Newton’s method. The MM algorithm takes fewer iterations to converge than the path algorithm for all of the test functions mentioned in [20] except f6(x). Furthermore, the MM algorithm avoids calculation of the gradient and Hessian and requires no matrix decompositions or selection of tuning constants.

Table 1.

Numerical examples of unconstrained signomial programming. Test functions f4(x), f6(x), f7(x), f8(x) and f9(x) are taken from [20]. P: posynomial; S: signomial; G: general function.

| Fun | Type | Initial Point x0 | Min Point | Min Value | Iters (ε = 10⁻⁹) |
|---|---|---|---|---|---|
| f1 | P | (1,2) | (1.4310,1.4310) | 3.4128 | 38 |
| f2 | P | (1,2) | (0.6300,1.2599) | 2.0000 | 2 |
| f3 | P | (1,1) | diverges | 0.0000 | |
| f4 | S | (0.1,0.2,0.3,0.4) | (0.1596,0.3191,0.1954,0.2606) | 0.0000 | 3 |
| f5 | G | (1,1,1) | (0.4082,0.4082,0.4082) | 0.2973 | 2 |
| | | (1,2,3) | diverges | −∞ | |
| f6 | S | (1,1) | (2.9978,0.4994) | −14.2031 | 558 |
| f7 | S | (1, …, 10) | 0.0255x0 | 0.0000 | 18 |
| f8 | P | (1, …, 7) | diverges | 0.0000 | |
| f9 | P | (1,2,3,4) | (0.3969,0.0000,0.0000,1.5874) | 2.0000 | 7 |

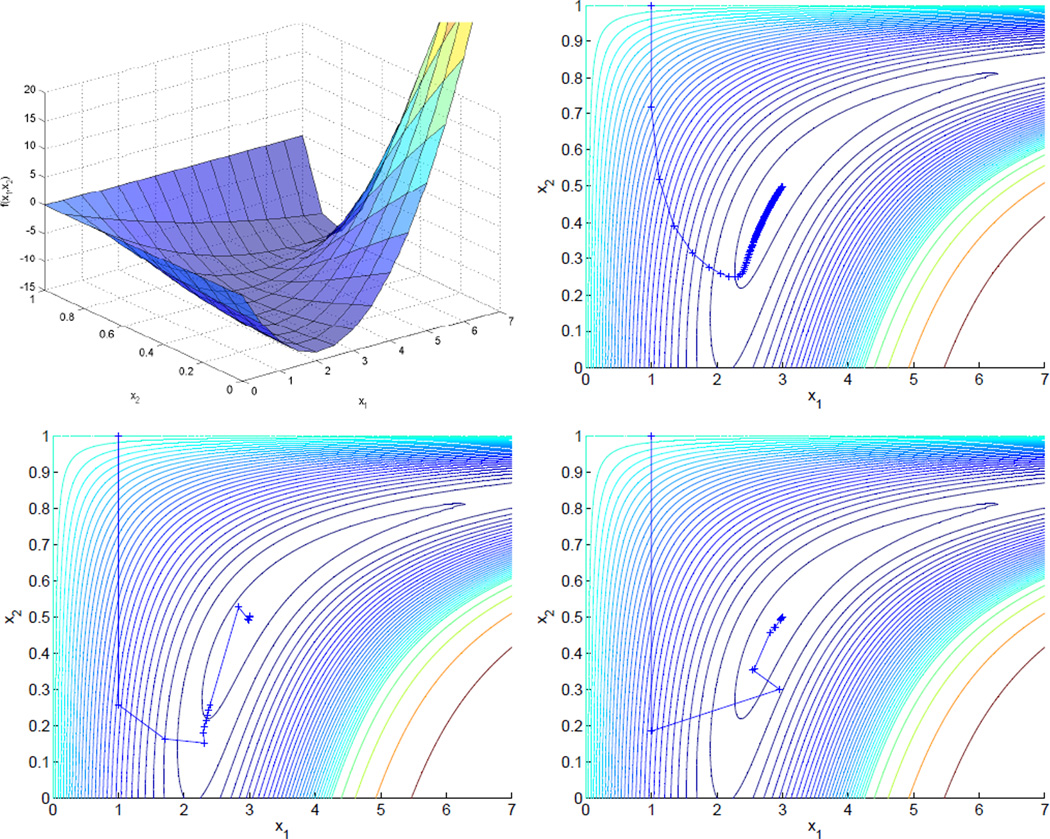

As Section 7 observes, MM algorithms typically converge at a linear rate. Although slow convergence can occur for functions such as the test function f6(x), there are several ways to accelerate an MM algorithm. For example, our published quasi-Newton acceleration [21] often reduces the necessary number of iterations by one or two orders of magnitude. Figure 1 shows the progress of the MM iterates for the test function f6(x) with and without quasi-Newton acceleration. Under a convergence criterion of ε = 10−9 and q = 1 secant condition, the required number of iterations falls to 30; under the same convergence criterion and q = 2 secant conditions, the required number of iterations falls to 12. It is also worth emphasizing that separation of parameters enables parallel processing in high-dimensional problems. We have recently argued [25] that the best approach to parallel processing is through graphics processing units (GPUs). These cheap hardware devices offer one to two orders of magnitude acceleration in many MM algorithms with parameters separated.

Fig. 1.

Upper left: The test function f6(x). Upper right: 558 MM iterates. Lower left: 30 accelerated MM iterates (q = 1 secant conditions). Lower right: 12 accelerated MM iterates (q = 2 secant conditions).

5 Constrained Signomial Programming

Extending the MM algorithm to constrained geometric and signomial programming is challenging. Box constraints ai ≤ xi ≤ bi are consistent with parameter separation as just developed, but more complicated posynomial constraints that couple parameters are not. Posynomial inequality constraints take the form

The corresponding equality constraint sets h(x) = 1. We propose handling both constraints by penalty methods. Before we treat these matters in more depth, let us relax the positivity restrictions on the dβ but enforce the restriction βi ≥ 0. The latter objective can be achieved by multiplying h(x) by for all i. If we subtract the two sides of the resulting equality, then the equality constraint h(x) = 1 can be rephrased as r(x) = 0 for the signomial , with no restriction on the signs of the eγ but with the requirement γi ≥ 0 in effect. For example, the equality constraint

becomes

In the quadratic penalty method [1,13,17] with objective function f(x) and a single equality constraint r(x) = 0 and a single inequality constraint s(x) ≤ 0, one minimizes the sum $f_\lambda(x) = f(x) + \lambda r(x)^2 + \lambda s(x)_+^2$, where s(x)+ = max{s(x), 0}. As the penalty constant λ tends to ∞, the solution vector xλ typically converges to the constrained minimum. In the revised objective function, the term r(x)2 is a signomial whenever r(x) is a signomial. For example, in our toy problem the choice has square

Of course, the powers in r(x) can be fractional here as well as integer. The term $s(x)_+^2$ is not a signomial and must be subjected to the majorization

$$s(x)_+^2 \le \begin{cases} s(x)^2, & s(x_m) \ge 0, \\ [s(x) - s(x_m)]^2, & s(x_m) < 0, \end{cases}$$

to achieve this status. In practice, one does not need to fully minimize fλ(x) for any fixed λ. If one increases λ slowly enough, then it usually suffices to merely decrease fλ(x) at each iteration. The MM algorithm is designed to achieve precisely this goal. Our exposition so far suggests that we majorize r(x)2, s(x)2, and [s(x) − s(xm)]2 in exactly the same manner that we majorize f(x). Separation of parameters generalizes, and the resulting MM algorithm keeps all parameters positive while permitting pertinent parameters to converge to 0. Section 7 summarizes some of the convergence properties of this hybrid procedure.
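The majorization of the squared positive part can be checked on scalar values. The following sketch assumes the two-case form suggested by the terms s(x)^2 and [s(x) − s(xm)]^2 mentioned in the text; the function name `plus_square_majorizer` is hypothetical, and the arguments stand for the constraint values s(x) and s(xm).

```python
def plus_square(s):
    """The penalty term max(s, 0)**2 for a scalar constraint value s."""
    return max(s, 0.0) ** 2


def plus_square_majorizer(s, sm):
    """Upper bound on plus_square(s) that is tight at s == sm.

    When the current constraint value sm is nonnegative, the plain square
    is used. When sm is negative (constraint inactive), the square is
    shifted so that the bound vanishes at the current iterate.
    """
    if sm >= 0:
        return s * s
    return (s - sm) ** 2
```

In both cases the bound is a square of a signomial whenever s(x) is a signomial, so it can be expanded and majorized term by term like the objective function.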

The quadratic penalty method traditionally relies on Newton’s method to minimize the unconstrained functions fλ(x). Unfortunately, this tactic suffers from roundoff errors and numerical instability. Some of these problems disappear with the MM algorithm. No matrix inversions are involved, and iterates enjoy the descent property. Ill-conditioning does cause harm in the form of slow convergence, but the previously mentioned quasi-Newton acceleration largely remedies the situation [21]. As an alternative to quadratic penalties, exact penalties take the form λ|r(x)| + λs(x)+. Remarkably, the exact penalty method produces the constrained minimum, not just in the limit, but for all finite λ beyond a certain point. Although this desirable property avoids the numerical instability encountered in the quadratic penalty method, the kinks in the objective functions f(x) + λ|r(x)| + λs(x)+ are a nuisance. Our recent paper [24] on the exact penalty method shows how to circumvent this annoyance.

6 Nonnegative Quadratic Programming

As an illustration of constrained signomial programming, consider quadratic programming over the positive orthant. Let

be the objective function, Ex = d the linear equality constraints, and Ax ≤ b the linear inequality constraints. The symmetric matrix Q can be negative definite, indefinite, or positive definite. The quadratic penalty method involves minimizing the sequence of penalized objective functions

as λ tends to ∞. Based on the obvious majorization

the term is majorized by , where

A brief calculation shows that fλ(x) is majorized by the surrogate function

up to an irrelevant constant, where Hλ and υλm are defined by

It is convenient to assume that the diagonal coefficients appearing in the quadratic form Hλx are positive. This is generally the case for large λ. One can handle the off-diagonal term hλijxixj by either the majorization (4) or the majorization (5) according to the sign of hλij. The reader can check that the MM updates reduce to

| (8) |

where

When , the update (8) collapses to

| (9) |

To avoid sticky boundaries, we replace 0 in equation (9) by a small positive constant ε such as 10−9. Sha et al. [18] derived the update (8) for λ = 0 ignoring the constraints Ex = d and Ax ≤ b.
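For orientation, the unpenalized case λ = 0 can be illustrated with a multiplicative update in the style of Sha et al. [18]. The sketch below is written from the commonly stated form of that update for minimizing (1/2) x^t Q x + c^t x over x ≥ 0 and is not a transcription of update (8). It assumes that every row of the positive part of Q has a positive entry (for example, a positive diagonal) and that the starting point is strictly positive.

```python
import math


def nqp_objective(Q, c, x):
    """Evaluate 0.5 * x'Qx + c'x for a list-of-lists matrix Q."""
    n = len(x)
    quad = sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
    return 0.5 * quad + sum(ci * xi for ci, xi in zip(c, x))


def nqp_multiplicative_step(Q, c, x):
    """One multiplicative update; positive entries of x stay positive."""
    n = len(x)
    new_x = []
    for i in range(n):
        # Split row i of Q into its positive and negative parts.
        pos = sum(max(Q[i][j], 0.0) * x[j] for j in range(n))
        neg = sum(max(-Q[i][j], 0.0) * x[j] for j in range(n))
        root = math.sqrt(c[i] ** 2 + 4.0 * pos * neg)
        new_x.append(x[i] * (-c[i] + root) / (2.0 * pos))
    return new_x
```

For a small hypothetical problem such as `Q = [[2, -1], [-1, 2]]` and `c = [-1, -1]`, repeated calls drive the iterate toward the solution of Qx = −c, which is strictly positive in that case. No matrix inversion is needed, only matrix-vector products.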

For a numerical example without equality constraints take

The minimum occurs at the point (2/3, 4/3)t. Table 2 lists the number of iterations until convergence and the converged point xλ for the sequence of penalty constants λ = 2k. The quadratic program

converges much more slowly. Its minimum occurs at the point (2.4, 1.6)t. Table 3 lists the numbers of iterations until convergence with (q = 1) and without (q = 0) acceleration and the converged point xλ for the same sequence of penalty constants λ = 2k. Fortunately, quasi-Newton acceleration compensates for ill conditioning in this test problem.

Table 2.

Iterates from the quadratic penalty method for the test function f10(x). The convergence criterion for the inner loops is 10−9.

| log2 λ | Iters | xλ |
|---|---|---|
| 0 | 8 | (0.9503,1.6464) |
| 1 | 6 | (0.8580,1.5164) |
| 2 | 5 | (0.8138,1.4461) |
| 3 | 23 | (0.7853,1.4067) |
| 4 | 32 | (0.7264,1.3702) |
| 5 | 31 | (0.6967,1.3518) |
| 6 | 30 | (0.6817,1.3426) |
| 7 | 29 | (0.6742,1.3380) |
| 8 | 28 | (0.6704,1.3356) |
| 9 | 26 | (0.6686,1.3345) |
| 10 | 25 | (0.6676,1.3339) |
| 11 | 23 | (0.6671,1.3336) |
| 12 | 22 | (0.6669,1.3335) |
| 13 | 21 | (0.6668,1.3334) |
| 14 | 19 | (0.6667,1.3334) |
| 15 | 18 | (0.6667,1.3334) |
| 16 | 16 | (0.6667,1.3333) |
| 17 | 15 | (0.6667,1.3333) |

Table 3.

Iterates from the quadratic penalty method for the test function f11(x). The convergence criterion for the inner loops is 10−16.

| log2 λ | Iters (q = 0) | Iters (q = 1) | xλ |
|---|---|---|---|
| 0 | 18 | 5 | (3.0000,1.8000) |
| 1 | 2 | 2 | (2.8571,1.7143) |
| 2 | 56 | 6 | (2.6667,1.6667) |
| 3 | 97 | 5 | (2.5455,1.6364) |
| 4 | 167 | 5 | (2.4762,1.6190) |
| 5 | 312 | 5 | (2.4390,1.6098) |
| 6 | 541 | 6 | (2.4198,1.6049) |
| 7 | 955 | 5 | (2.4099,1.6025) |
| 8 | 1674 | 4 | (2.4050,1.6012) |
| 9 | 2924 | 3 | (2.4025,1.6006) |
| 10 | 4839 | 3 | (2.4013,1.6003) |
| 11 | 7959 | 4 | (2.4006,1.6002) |
| 12 | 12220 | 4 | (2.4003,1.6001) |
| 13 | 17674 | 4 | (2.4002,1.6000) |
| 14 | 21739 | 3 | (2.4001,1.6000) |
| 15 | 20736 | 3 | (2.4000,1.6000) |
| 16 | 8073 | 3 | (2.4000,1.6000) |
| 17 | 111 | 3 | (2.4000,1.6000) |
| 18 | 6 | 4 | (2.4000,1.6000) |
| 19 | 5 | 2 | (2.4000,1.6000) |
| 20 | 3 | 2 | (2.4000,1.6000) |
| 21 | 2 | 2 | (2.4000,1.6000) |

7 Convergence

As we have seen, the behavior of the MM algorithm is intimately tied to the behavior of the objective function f(x). For the sake of simplicity, we now restrict attention to unconstrained minimization of posynomials and investigate conditions guaranteeing that f(x) possesses a unique minimum on its domain. Uniqueness is related to the strict convexity of the reparameterization

$$h(y) = \sum_{\alpha \in S} c_\alpha e^{\alpha^t y}$$

of f(x), where $\alpha^t y = \sum_{i=1}^n \alpha_i y_i$ is the inner product of α and y and $x_i = e^{y_i}$ for each i. The Hessian matrix

$$d^2 h(y) = \sum_{\alpha \in S} c_\alpha e^{\alpha^t y} \alpha \alpha^t$$

of h(y) is positive semidefinite, so h(y) is convex. If we let T be the subspace of ℝn spanned by {α}α∈S, then h(y) is strictly convex if and only if T = ℝn. Indeed, suppose the condition holds. For any υ ≠ 0, it then must be true that αtυ ≠ 0 for some α ∈ S. As a consequence,

$$\upsilon^t d^2 h(y)\, \upsilon = \sum_{\alpha \in S} c_\alpha e^{\alpha^t y} (\alpha^t \upsilon)^2 > 0,$$

and d2h(y) is positive definite. Conversely, suppose T ≠ ℝn, and take υ ≠ 0 with αtυ = 0 for every α ∈ S. Then h(y+tυ) = h(y) for every scalar t, which is incompatible with h(y) being strictly convex.
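The spanning condition amounts to a rank computation on the matrix whose rows are the power vectors. The following sketch uses a small Gaussian elimination so that it depends only on the standard library; the helper names and the tolerance `tol` are illustrative assumptions.

```python
def matrix_rank(rows, tol=1e-10):
    """Rank of a small dense matrix by Gaussian elimination with pivoting."""
    m = [[float(v) for v in row] for row in rows]
    rank = 0
    for col in range(len(m[0])):
        if rank == len(m):
            break
        # Pick the remaining row with the largest entry in this column.
        pivot = max(range(rank, len(m)), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            continue  # no usable pivot in this column
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, len(m)):
            factor = m[r][col] / m[rank][col]
            m[r] = [a - factor * b for a, b in zip(m[r], m[rank])]
        rank += 1
    return rank


def is_strictly_convex(exponents):
    """True when the power vectors span R^n, so that h(y) is strictly convex."""
    n = len(exponents[0])
    return matrix_rank(exponents) == n
```

For instance, the two power vectors (−1, −2) and (1, 2) are collinear, so `is_strictly_convex` returns False for them, whereas (−1, −2) and (1, 1) span the plane. Strict convexity alone does not guarantee that a minimum exists.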

Strict convexity guarantees uniqueness, not existence, of a minimum point. Coerciveness ensures existence. The objective function f(x) is coercive if f(x) tends to ∞ whenever any component of x tends to 0 or ∞. Under the reparameterization $x_i = e^{y_i}$, this is equivalent to h(y) = f(x) tending to ∞ as ‖y‖2 tends to ∞. A necessary and sufficient condition for this to occur is that maxα∈S αtυ > 0 for every υ ≠ 0. For a proof, suppose the contrary condition holds for some υ ≠ 0. Then it is clear that h(tυ) remains bounded above by h(0) as the scalar t tends to ∞. Conversely, if the stated condition is true, then the function q(y) = maxα∈S αty is continuous and achieves its minimum of d > 0 on the sphere {y ∈ ℝn : ‖y‖2 = 1}. It follows that q(y) ≥ d‖y‖2 and that

$$h(y) \ge \Big(\min_{\alpha \in S} c_\alpha\Big) e^{q(y)} \ge \Big(\min_{\alpha \in S} c_\alpha\Big) e^{d \|y\|_2}.$$

This lower bound shows that h(y) is coercive.

The coerciveness condition is hard to apply in practice. An equivalent condition is that the origin 0 belongs to the interior of the convex hull of the set {α}α∈S. It is straightforward to show that the negations of these two conditions are logically equivalent. Thus, suppose q(υ) = maxα∈S αtυ ≤ 0 for some vector υ ≠ 0. Every convex combination Σα pαα then satisfies (Σα pαα)t υ ≤ 0. If the origin is in the interior of the convex hull, then ευ is also for every sufficiently small ε > 0. But this leads to the contradiction $0 < \varepsilon \|\upsilon\|_2^2 = (\varepsilon \upsilon)^t \upsilon \le 0$. Conversely, suppose 0 is not in the interior of the convex hull. According to the separating hyperplane theorem for convex sets, there exists a unit vector υ with υtα ≤ 0 = υt0 for every α ∈ S. In other words, q(υ) ≤ 0. The convex hull criterion is easier to check, but it is not constructive. In simple cases such as the objective function f1(x) where the power vectors are α = (−3, 0)t, α = (−1, −2)t, and α = (1, 1)t, it is visually obvious that the origin is in the interior of their convex hull.
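In two dimensions the convex hull criterion reduces to a statement about angles: the origin lies in the interior of the hull exactly when no closed half-plane through the origin contains all of the nonzero power vectors, that is, when every gap between consecutive direction angles is smaller than π. The sketch below implements this planar test only; the function name and the tolerance are illustrative assumptions, and higher dimensions would need a linear programming formulation instead.

```python
import math


def is_coercive_2d(exponents, tol=1e-12):
    """Planar test: is the origin interior to the hull of the power vectors?"""
    angles = sorted(
        math.atan2(a2, a1) for a1, a2 in exponents if (a1, a2) != (0, 0)
    )
    if len(angles) < 3:
        return False  # one or two directions always leave a gap of at least pi
    gaps = [b - a for a, b in zip(angles, angles[1:])]
    gaps.append(angles[0] + 2.0 * math.pi - angles[-1])  # wrap-around gap
    return max(gaps) < math.pi - tol
```

Applied to the three power vectors of f1(x) listed above, the test confirms what is visually obvious. Applied to the pair (−1, −2) and (1, 1), it reports failure, since two vectors can never surround the origin.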

One can also check the criterion q(υ) > 0 for all υ ≠ 0 by solving a related geometric programming problem. This problem consists in minimizing the scalar t subject to the inequality constraints αty ≤ t for all α ∈ S and the nonlinear equality constraint $\|y\|_2^2 = 1$. If tmin ≤ 0, then the original criterion fails.

In some cases, the objective function f(x) does not attain its minimum on the open domain $\{x : x_i > 0 \text{ for all } i\}$. This condition is equivalent to the corresponding function ln h(y) being unbounded below on ℝn. According to Gordon’s theorem [2,10], this can happen if and only if 0 is not in the convex hull of the set {α}α∈S. Alternatively, both conditions are equivalent to the existence of a vector υ with αtυ < 0 for all α ∈ S. For the objective function f3(x), the power vectors are α = (−1, −2)t and α = (1, 1)t. The origin (0, 0)t does not lie on the line segment between them, and the vector (−3/2, 1)t forms a strictly oblique angle with each. As predicted, f3(x) does not attain its infimum on this domain.

The theoretical development in reference [10] demonstrates that the MM algorithm converges at a linear rate to the unique minimum point of the objective function f(x) when f(x) is coercive and its convex reparameterization h(y) is strictly convex. The theory does not cover other cases, and it would be interesting to investigate them. The general convergence theory of MM algorithms [10] states that five properties of the objective function f(x) and MM algorithmic map x ↦ M(x) guarantee convergence to a stationary point of f(x): (a) f(x) is coercive on its open domain; (b) f(x) has only isolated stationary points; (c) M(x) is continuous; (d) x* is a fixed point of M(x) if and only if x* is a stationary point of f(x); and (e) f[M(x*)] ≤ f(x*), with equality if and only if x* is a fixed point of M(x). For a general signomial program, items (a) and (b) are the hardest to check. Our examples provide some clues.

The standard convergence results for the quadratic penalty method are covered in the references [1,10,13,17]. To summarize the principal finding, suppose that the objective function f(x) and the constraint functions ri(x) and si(x) are continuous and that f(x) is coercive on its open domain $\{x : x_i > 0 \text{ for all } i\}$. If xλ minimizes the penalized objective function

$$f_\lambda(x) = f(x) + \lambda \sum_i r_i(x)^2 + \lambda \sum_j s_j(x)_+^2$$

and x∞ is a cluster point of xλ as λ tends to ∞, then x∞ minimizes f(x) subject to the constraints. In this regard observe that the coerciveness assumption on f(x) implies that the solution set {xλ}λ is bounded and possesses at least one cluster point. Of course, if the solution set consists of a single point, then xλ tends to that point.

8 Discussion

The current paper presents novel algorithms for both geometric and signomial programming. Although our examples are low dimensional, the previous experience of Sha et al. [18] offers convincing evidence that the MM algorithm works well for high-dimensional quadratic programming with nonnegativity constraints. The ideas pursued here – the MM principle, separation of variables, quasi-Newton acceleration, and penalized optimization – are surprisingly potent in large-scale optimization. The MM algorithm deals with the objective function directly and reduces multivariate minimization to a sequence of one-dimensional minimizations. The MM updates are simple to code and enjoy the crucial descent property. Treating constrained signomial programming by the penalty method extends the MM algorithm even further. Quadratic programming with linear equality and inequality constraints is the most important special case of constrained signomial programming. Our new MM algorithm for constrained quadratic programming deserves consideration in high-dimensional problems. Even though MM algorithms can be notoriously slow to converge, quasi-Newton acceleration can dramatically improve matters. Acceleration involves no matrix inversion, only matrix times vector multiplication. In our limited experiments with large-scale problems [22,23], MM algorithms with quasi-Newton acceleration can achieve comparable or better performance than limited-memory BFGS algorithms. Finally, it is worth keeping in mind that parameter separated algorithms are ideal candidates for parallel processing.

Because geometric programs are convex after reparameterization, it is relatively easy to pose and check sufficient conditions for global convergence of the MM algorithm. In contrast it is far more difficult to analyze the behavior of the MM algorithm for signomial programs. Theoretical progress will probably be piecemeal and require problem-specific information. A major difficulty is understanding the asymptotic nature of the objective function as parameters approach 0 or ∞. Even in the absence of theoretical guarantees, the descent property of the MM algorithm makes it an attractive solution technique and a diagnostic tool for finding counterexamples. Some of our test problems expose the behavior of the MM algorithm in non-standard situations. We welcome the help of the optimization community in unraveling the mysteries of the MM algorithm in signomial programming.

Acknowledgments

Research was supported by United States Public Health Service grants GM53275, MH59490, and R01HG006139.

Contributor Information

Kenneth Lange, Departments of Biomathematics, Human Genetics, and Statistics, University of California, Los Angeles, CA 90095-1766, USA. klange@ucla.edu.

Hua Zhou, Department of Statistics, North Carolina State University, 2311 Stinson Drive, Campus Box 8203, Raleigh, NC 27695-8203, USA. hua_zhou@ncsu.edu.

References

- 1. Bertsekas DP. Nonlinear Programming. Athena Scientific; 1999.
- 2. Borwein JM, Lewis AS. Convex Analysis and Nonlinear Optimization: Theory and Examples. New York: Springer-Verlag; 2000.
- 3. Boyd S, Kim S-J, Vandenberghe L, Hassibi A. A tutorial on geometric programming. Optimization and Engineering. 2007;8:67–127.
- 4. Boyd S, Vandenberghe L. Convex Optimization. Cambridge: Cambridge University Press; 2004.
- 5. Ecker JG. Geometric programming: methods, computations and applications. SIAM Review. 1980;22:338–362.
- 6. Feigin PD, Passy U. The geometric programming dual to the extinction probability problem in simple branching processes. Annals Prob. 1981;9:498–503.
- 7. del Mar Hershenson M, Boyd SP, Lee TH. Optimal design of a CMOS op-amp via geometric programming. IEEE Trans Computer-Aided Design. 2001;20:1–21.
- 8. Hoffman K. Analysis in Euclidean Space. Englewood Cliffs, NJ: Prentice-Hall; 1975.
- 9. Hunter DR, Lange K. A tutorial on MM algorithms. Amer Statistician. 2004;58:30–37.
- 10. Lange K. Optimization. New York: Springer-Verlag; 2004.
- 11. Lange K, Hunter DR, Yang I. Optimization transfer using surrogate objective functions (with discussion). J Comput Graphical Stat. 2000;9:1–59.
- 12. Mazumdar M, Jefferson TR. Maximum likelihood estimates for multinomial probabilities via geometric programming. Biometrika. 1983;70:257–261.
- 13. Nocedal J, Wright SJ. Numerical Optimization. Springer; 1999.
- 14. Passy U, Wilde DJ. A geometric programming algorithm for solving chemical equilibrium problems. SIAM J Appl Math. 1968;16:363–373.
- 15. Peressini AL, Sullivan FE, Uhl JJ Jr. The Mathematics of Nonlinear Programming. New York: Springer-Verlag; 1988.
- 16. Peterson EL. Geometric programming. SIAM Review. 1976;18:338–362.
- 17. Ruszczynski A. Optimization. Princeton University Press; 2006.
- 18. Sha F, Saul LK, Lee DD. Multiplicative updates for nonnegative quadratic programming in support vector machines. In: Becker S, Thrun S, Obermayer K, editors. Advances in Neural Information Processing Systems. Vol. 15. Cambridge, MA: MIT Press; pp. 1065–1073.
- 19. Steele JM. The Cauchy-Schwarz Master Class: An Introduction to the Art of Inequalities. Cambridge: Cambridge University Press and the Mathematical Association of America; 2004.
- 20. Wang Y, Zhang K, Shen P. A new type of condensation curvilinear path algorithm for unconstrained generalized geometric programming. Math. Comput. Modelling. 2002;35:1209–1219.
- 21. Zhou H, Alexander D, Lange KL. A quasi-Newton acceleration method for high-dimensional optimization algorithms. Statistics and Computing. 2011;21:261–273. doi: 10.1007/s11222-009-9166-3.
- 22. Zhou H, Lange KL. MM algorithms for some discrete multivariate distributions. Journal of Computational and Graphical Statistics. 2010;19(3):645–665. doi: 10.1198/jcgs.2010.09014.
- 23. Zhou H, Lange KL. A fast procedure for calculating importance weights in bootstrap sampling. Computational Statistics and Data Analysis. 2011;55:26–33. doi: 10.1016/j.csda.2010.04.019.
- 24. Zhou H, Lange KL. Path following in the exact penalty method of convex programming. arXiv:1201.3593. 2011. doi: 10.1007/s10589-015-9732-x.
- 25. Zhou H, Lange KL, Suchard MA. Graphical processing units and high-dimensional optimization. Statistical Science. 2010;25(3):311–324. doi: 10.1214/10-STS336.