ABSTRACT

Navigating is a complex cognitive task that places high demands on spatial abilities, particularly in the absence of sight. Significant advances have been made in identifying the neural correlates associated with various aspects of this skill; however, how the brain is able to navigate in the absence of visual experience remains poorly understood. Furthermore, how neural network activity relates to the wide variability in navigational independence and skill in the blind population is also unknown. Using functional magnetic resonance imaging, we investigated the neural correlates of audio‐based navigation within a large‐scale, indoor virtual environment in early profoundly blind participants with differing levels of spatial navigation independence (assessed by the Santa Barbara Sense of Direction scale). Performing path integration tasks in the virtual environment was associated with activation within areas of a core network implicated in navigation. Furthermore, we found a positive relationship between Santa Barbara Sense of Direction scores and activation within the right temporal parietal junction during the planning and execution phases of the task. These findings suggest that differential navigational ability in the blind may be related to the utilization of different brain network structures. Further characterization of the factors that influence network activity may have important implications for how this skill is taught in the blind community. Hum Brain Mapp 35:2768–2778, 2014. © 2013 Wiley Periodicals, Inc.

Keywords: early blind, virtual environments, navigation, way finding, path integration, posterior parietal cortex, temporal parietal junction

INTRODUCTION

Navigating independently remains a very important skill for blind individuals to master; yet, very little is known about how they are able to find their way without accessing spatial information and identifying landmarks through sight. Blind individuals typically undergo formal instruction [referred to as orientation and mobility (O&M) training] to develop adaptive strategies for efficient navigation and independent travel (Blasch et al., 1997). In particular, blind individuals learn to use their remaining senses (such as hearing, touch, and proprioception) for the purposes of planning appropriate routes, gathering and updating information regarding position, and reorienting to reestablish travel as needed (Long and Giudice, 2010; Loomis et al., 2001). Despite formal training and the advent of novel assistive technology (e.g., Chebat et al., 2011; Giudice et al., 2007; Kalia et al., 2010; Lahav et al., 2011; Loomis et al., 2005), it is clear that navigation abilities vary dramatically in the blind population. For example, some individuals completely master this skill and have an excellent sense of their spatial surroundings, while others struggle to maintain their orientation and find their way, especially when faced with unfamiliar environments. At present, it is unknown how this variability manifests itself in terms of the brain networks associated with navigation in the blind.

Extensive work in animal models and neuroimaging studies in humans has shown that navigation implicates a distributed network of occipital‐parietal‐temporal‐frontal regions (Latini‐Corazzini et al., 2010). Within this network, however, a core set of key brain structures has been identified as underlying the multiple facets of this skill (Burgess, 2008; Chrastil, 2013; Lithfous et al., 2012; Maguire et al., 1998; O'Keefe et al., 1998; Spiers and Maguire, 2006b). Within the medial temporal lobe, the hippocampus (as well as associated parahippocampal regions) plays a key role in storing and accessing spatial information to facilitate navigation to unseen goals and is crucial for maintaining allocentric (i.e., object based or survey level) representations of space (Maguire et al., 1998, 2000; O'Keefe et al., 1998). In contrast, the subcortical caudate nucleus is associated with egocentric (i.e., first person based or route level) representations of space, typical of following a familiar route (Hartley et al., 2003; Iaria et al., 2003). Retrosplenial cortex (strategically positioned between the medial temporal lobe and parietal cortex) is implicated in the processing of heading information (Iaria et al., 2003) and the transformation of spatial information into allocentric representations (Spiers and Maguire, 2006a; Vann et al., 2009). For their part, frontal areas (including the medial frontal gyrus and prefrontal cortical areas) participate in planning and in maintaining task‐relevant information in working memory, such as the temporal order of landmarks (Maguire et al., 1998; Wolbers et al., 2004).

A crucial step in successful navigation is the processing of spatial cues and the integration of sensorimotor information acquired not only from different modalities but also in different reference frames. For example, primary visual cortex represents space in retinotopic coordinates (Gardner et al., 2008), higher order auditory cortex represents space in head‐centered and ear‐centered reference frames (Maier and Groh, 2009), and hippocampal place cells represent information in an environment‐specific reference frame (Best et al., 2001). This suggests that the brain must integrate spatial information from these differing modalities into a single egocentric frame of reference (Maguire et al., 1998). Associative areas within the parietal lobe (e.g., inferior parietal cortex) are thought to play a key role in this integration (Galati et al., 2000, 2001; Maguire et al., 1998; Vogeley and Fink, 2003; Wolbers et al., 2004).

In the absence of visual experience, blind individuals may differ in terms of their reliance on nonvisual spatial information to navigate effectively. This difference (and furthermore, the overall variability in skill) may manifest itself within the core regions implicated in the task of navigation.

Much of the work carried out in human subjects has been accomplished by simulating real‐world navigation using virtual environments compatible with the scanner setting (e.g., Maguire et al., 1998; Spiers and Maguire, 2007). In the blind, identifying the structural and functional neural correlates associated with navigation has been comparatively more difficult given their reliance on nonvisual sensory information and spatial cues alone. This limitation notwithstanding, previous work has identified and compared activation patterns related to navigation in early blind participants and sighted (blindfolded) controls by using a visual‐to‐tactile sensory substitution device (Kupers et al., 2010). In this study, we expanded upon this approach using a virtual environment that represents an open (i.e., unconstrained), large‐scale, realistic setting of complexity comparable to that used in previous investigations with sighted subjects. Toward this end, we have worked closely with the blind community to develop virtual environment software called the audio‐based environment simulator (AbES), designed to promote navigation skill development specifically in this population. Using only iconic audio‐based cues and spatialized sound, a blind individual can explore the virtual layout of essentially any complex indoor environment. We have recently shown that this simulation approach can be used by early profoundly blind participants to generate an accurate spatial mental representation that corresponds to the spatial layout of a modeled physical building. Participants were able to transfer the spatial information acquired and successfully carry out a series of navigation tasks in the target physical building, with which they were previously unfamiliar (Merabet et al., 2012).

For the purposes of this investigation, we asked blind participants to navigate along a series of virtual path integration routes within the same large‐scale, complex, and realistic indoor environment with the goal of identifying the neural correlates associated with this task. Furthermore, we sought to characterize the variability observed in overall navigation abilities by correlating individual patterns of activation with a validated self‐report measure of spatial navigation and independence in blind individuals.

METHODS

Participants

Nine early blind individuals (all with documented diagnosis of profound blindness before age 3 and from known congenital causes; see Table 1) were recruited to participate in this study. All were right handed based on self‐report, and all had undergone formal O&M training. All participants provided written informed consent in accordance with procedures approved by the investigative review board of the Massachusetts Eye and Ear Infirmary (Boston, MA).

Table 1.

Participant Characteristics

| Subject | Independence score (SBSoD) | Age (y) | Gender | Visual function | Documented diagnosis | Primary mobility aid | Virtual navigation task performance (% correct) |
|---|---|---|---|---|---|---|---|
| 1 | 76 | 31 | M | Light perception | Congenital retinitis pigmentosa | Long cane | 85.7 |
| 2 | 70 | 36 | M | Hand motion | Leber's congenital amaurosis | Long cane | 71.4 |
| 3 | 68 | 23 | F | Light perception | Leber's congenital amaurosis | Long cane | 93.3 |
| 4 | 66 | 35 | M | Hand motion | Juvenile macular degeneration and glaucoma | Standard cane | 88.9 |
| 5 | 54 | 31 | F | No light perception | Retinopathy of prematurity | Guide dog | 83.4 |
| 6 | 50 | 25 | M | No light perception | Congenital anophthalmia | Guide dog | 60.0 |
| 7 | 48 | 26 | F | No light perception | Retinopathy of prematurity | Guide dog | 45.0 |
| 8 | 41 | 23 | F | Hand motion | Retinopathy of prematurity | Standard cane | 82.0 |
| 9 | 30 | 42 | M | Light perception | Congenital optic nerve atrophy | Driver, standard cane | 66.6 |

Sense of Direction Score and Navigational Independence Rating

To quantify each participant's overall navigation ability, we employed the Santa Barbara Sense of Direction (SBSoD) scale (Hegarty et al., 2002; Wolbers and Hegarty, 2010) modified for blind and visually impaired individuals (Giudice et al., 2011; Wolbers et al., 2011). This instrument quantifies an individual's perceived sense of direction, ability to find one's way in the environment, and ability to learn the layout of a large‐scale space (collectively referred to as environmental spatial ability). The instrument consists of statements regarding spatial and navigational abilities, preferences, and experiences, for which respondents rate their level of agreement on a 7‐point Likert‐type scale (ranging from 1, “strongly disagree,” to 7, “strongly agree”). Of the 15 items, roughly half are stated positively and the other half negatively. Thus, prior to analysis, the scoring of the negatively stated items is reversed so that a higher score indicates a higher overall sense of environmental spatial ability.
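As a minimal sketch of the scoring rule just described, the function below sums 15 Likert ratings and reverse‐scores the negatively stated items as 8 − rating (so 1 becomes 7, 2 becomes 6, and so on). Which items are negatively worded in the blind‐adapted scale is not listed here, so the `NEGATIVE_ITEMS` set is a hypothetical placeholder, and `score_sbsod` is an illustrative name rather than part of any published scoring tool.

```python
from typing import Sequence, Set

# HYPOTHETICAL: 1-based numbers of the negatively worded items.
# The actual set for the blind-adapted SBSoD is not given in the text.
NEGATIVE_ITEMS: Set[int] = {2, 6, 8, 10, 11, 12, 13, 15}


def score_sbsod(responses: Sequence[int], negative_items: Set[int] = NEGATIVE_ITEMS) -> int:
    """Sum 15 ratings on a 1-7 scale, reverse-scoring negatively worded items,
    so that a higher total means a better self-reported sense of direction."""
    if len(responses) != 15:
        raise ValueError("expected 15 item responses")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 7:
            raise ValueError(f"item {item_number}: rating {rating} is outside 1-7")
        # Reversal on a 1-7 scale maps 1<->7, 2<->6, 3<->5, 4<->4.
        total += (8 - rating) if item_number in negative_items else rating
    return total
```

With this rule the possible totals run from 15 to 105, and a respondent who answers the neutral midpoint (4) on every item scores 60 regardless of which items are reversed.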

Behavioral Task and Training

Each participant was given 2 h of combined structured instruction and free exploration time to learn the spatial layout of a target indoor virtual environment using the AbES software. AbES was developed using the XNA programming platform and runs on a standard PC laptop computer. Based on an original architectural floor plan of an existing building (located at the Carroll Center for the Blind; Newton, MA), the modeled indoor virtual environment includes 23 rooms, a series of connecting corridors, 3 separate entrances, and 2 stairwells (Fig. 1a). The design specifics of this user‐centered audio‐based interface have been described in detail elsewhere (see Connors et al., 2013). Briefly, using simple keystrokes, the user can explore the virtual environment (moving forward, right, or left) and explicitly learn the spatial layout of the building. Each virtual step approximates one step in the real physical building. Auditory‐based spatial information is updated dynamically and acquired sequentially and in context, allowing the user to build a corresponding spatial mental representation of the environment. Spatial and situational information is conveyed by iconic and spatialized sound cues provided after each step taken (e.g., hearing a knocking sound in the left stereo channel represents the presence of a door on the user's left side). Orientation is based on cardinal compass headings (e.g., “north” or “east”), and text‐to‐speech is used to provide further information regarding a user's current location, orientation, and heading (e.g., “you are in the corridor on the first floor, facing west”) as well as the identity of objects and obstacles in their path (e.g., “this is a door”). Distance cues are provided by modulating sound intensity. The spatial localization of the sounds is updated to match the user's egocentric heading.
The software is designed to play an appropriate audio file as a function of the location and egocentric heading of the user and keeps track of the user's position as they move through the environment. For example, if a door is located on the person's right side, the knocking sound is heard in the user's right ear. Conversely, if the person turns around 180° so that the same door is located on their left side, the same knocking sound is heard in the left channel. Finally, if the user is facing the door, the same knocking sound is heard equally in both ears. By continuously monitoring the user's egocentric heading, the software can play the appropriate spatially localized sounds that identify the presence and location of objects and keep track of these changes as the user moves through the virtual environment.
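The channel logic in the door example can be sketched as follows. This is a hypothetical illustration, not AbES source code: it assumes headings restricted to the four compass directions, ignores the distance‐based intensity changes, and labels an object directly behind the user separately because the text does not describe that case.

```python
from enum import IntEnum


class Heading(IntEnum):
    """Compass headings in degrees clockwise from north."""
    NORTH = 0
    EAST = 90
    SOUTH = 180
    WEST = 270


def stereo_channel(user_heading: Heading, object_direction: Heading) -> str:
    """Which ear carries an object's cue, given the user's compass heading and the
    compass direction of the object as seen from the user's current position."""
    # Clockwise angle from where the user faces to where the object lies.
    relative = (int(object_direction) - int(user_heading)) % 360
    if relative == 0:
        return "both"    # object straight ahead: equal level in both ears
    if relative == 90:
        return "right"   # object a quarter turn clockwise: right ear
    if relative == 270:
        return "left"    # object a quarter turn counterclockwise: left ear
    return "behind"      # 180 degrees: case not described in the text
```

A door to the east is reported on the right when the user faces north, on the left after the user turns to face south, and in both ears when the user faces east, mirroring the example above.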

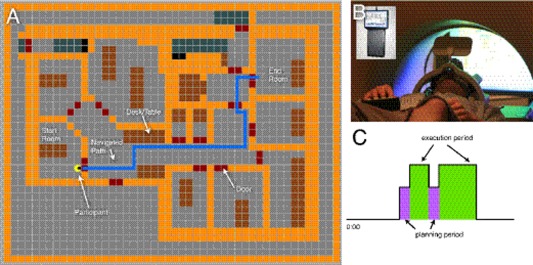

Figure 1.

Experimental protocol: (a) visual representation of the AbES environment. Participants were given audio cues to indicate their starting position and target room. The blue line indicates a representative navigation path. (b) Sample block period for the navigation task showing the relationship of the planning period to the execution period. Participants were instructed not to make any movements during the planning period while instructions were given. (c) Participants were blindfolded and performed this task within the MRI scanner using a four‐button response box to navigate the environment shown in (a).

The training involved complete step‐by‐step instruction in the building layout such that all the room locations, exits, and landmarks were encountered in a serial fashion (following a clockwise direction). This method of exploration is similar to a “shoreline” strategy along the interior perimeter of the building. The paths followed were virtual recreations of a typical lesson taught by a professional O&M instructor for the blind. After the initial assistance of a sighted facilitator, the participants were encouraged to freely explore the virtual environment on their own to get a sense of the entire area laid out over a three‐dimensional space. Participants wore stereo headphones and were blindfolded at all times during the training (and subsequent scanning) period.

Functional Magnetic Resonance Imaging Data Acquisition and Analysis

Neuroimaging was performed using a Philips 3T MRI scanner. Each subject completed four 8.5‐min functional runs (TR = 2.5 s; in‐plane resolution 3 × 3 mm; forty‐five 3‐mm slices; TE = 28 ms; flip angle = 90°). Each functional run consisted of three block types, each presented twice, with blocks separated by 30 s of rest. The three task blocks were a motor control task (30 s), a navigation control task (60 s), and a virtual navigation task (60 s). Within each virtual navigation block, two path integration tasks were presented, each consisting of an 8‐s audio instruction cue (planning period) followed by time to freely navigate to the target goal (execution period), up to a maximum time of 60 s. Out‐of‐scanner piloting suggested that all the tested paths could be navigated within 40–50 s, allowing for two periods of audio instruction cues within a 60‐s navigation block (Fig. 1b). Participation in the neuroimaging study occurred once the participant was able to successfully complete all the path integration tasks within the allotted time. Using a functional magnetic resonance imaging (fMRI)‐compatible button box and stereo headphones, participants explored the virtual environment in a manner similar to the training phase (Fig. 1c). Virtual navigation paths of comparable difficulty (i.e., distance traveled and number of turns) were chosen based on predetermined pairings of start and stop locations (i.e., rooms) (see Fig. 1a for an example route). Specifically, target routes required between 25 and 35 steps and incorporated three or four 90° turns. For the motor control task, subjects were asked to press the button keys in a random sequence. The order of blocks was pseudo‐randomized and counterbalanced across runs. Predetermined navigation paths were loaded into the AbES software and presented automatically after each completed task.
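To make the block timing concrete, the sketch below lays out one run and builds a boxcar regressor. The block order is hypothetical, and the sketch assumes a 30‐s rest before every block and after the last one; under that assumption a run lasts 7 × 30 + 2 × (30 + 60 + 60) = 510 s, which matches the stated 8.5 min and gives 204 volumes at TR = 2.5 s. FEAT would convolve such a boxcar with a hemodynamic response function; that step is omitted here.

```python
from typing import Dict, List, Tuple

TR_S = 2.5      # repetition time (s), from the text
REST_S = 30.0   # rest between blocks (s), from the text
BLOCK_S: Dict[str, float] = {"motor": 30.0, "nav_control": 60.0, "navigation": 60.0}

Block = Tuple[str, float, float]  # (label, onset in s, duration in s)


def build_run(order: List[str]) -> Tuple[List[Block], float]:
    """Place blocks in sequence, ASSUMING a rest before each block and one at the end.
    Returns the block list and the total run length in seconds."""
    blocks: List[Block] = []
    t = 0.0
    for label in order:
        t += REST_S                      # rest preceding this block
        blocks.append((label, t, BLOCK_S[label]))
        t += BLOCK_S[label]
    return blocks, t + REST_S            # closing rest


def boxcar(blocks: List[Block], label: str, n_volumes: int, tr: float = TR_S) -> List[float]:
    """1.0 for volumes whose acquisition starts inside a block of `label`, else 0.0.
    No hemodynamic convolution is applied."""
    return [
        1.0 if any(lab == label and onset <= i * tr < onset + dur
                   for lab, onset, dur in blocks) else 0.0
        for i in range(n_volumes)
    ]


# HYPOTHETICAL pseudo-randomized order: each block type appears twice.
ORDER = ["motor", "nav_control", "navigation", "navigation", "motor", "nav_control"]
blocks, run_s = build_run(ORDER)
n_volumes = int(run_s / TR_S)
nav_regressor = boxcar(blocks, "navigation", n_volumes)
```

Each 60‐s navigation block spans 24 volumes at this TR, so the navigation regressor marks 48 of the 204 volumes in this example run.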
During the navigation blocks, subjects were placed in a predetermined location (a room) and instructed to navigate to a target location (another room in the same building). Once the target was reached, subjects were automatically placed in a new location and instructed to navigate to the next target. The route paths, success, and time taken were automatically recorded by the AbES software, and performance on each run was videotaped to reconstruct regressors for the instruction and execution periods of each navigation task. For example, if a subject reached the second goal before the end of the 1‐min block, this time was noted and the remainder of the block was excluded from the execution regressor. Performance on the navigation task was assessed as the number of correctly navigated paths within the allotted 60‐s navigation period, expressed as a percentage (Table 1).
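The regressor reconstruction described above can be sketched as follows, assuming a hypothetical per‐block log of when each path's instruction began and when (if ever) its goal was reached, in seconds from block onset. The names `TaskLog`, `split_periods`, and `percent_correct` are illustrative only; the text does not describe the actual log format.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

INSTRUCTION_S = 8.0   # audio instruction (planning) period, from the text
BLOCK_S = 60.0        # navigation block length, from the text

Interval = Tuple[float, float]


@dataclass
class TaskLog:
    """HYPOTHETICAL log entry; times in seconds from block onset."""
    start_s: float                    # when this path's audio instruction began
    goal_reached_s: Optional[float]   # None if the target was not reached in time


def split_periods(tasks: List[TaskLog]) -> Tuple[List[Interval], List[Interval]]:
    """Return planning and execution intervals. Time after the last goal is
    reached falls in neither list, i.e. it is excluded from both regressors."""
    planning: List[Interval] = []
    execution: List[Interval] = []
    for task in tasks:
        plan_end = min(task.start_s + INSTRUCTION_S, BLOCK_S)
        planning.append((task.start_s, plan_end))
        # Execution runs until the goal is reached, or until the block ends.
        reached = task.goal_reached_s if task.goal_reached_s is not None else BLOCK_S
        exec_end = min(reached, BLOCK_S)
        if exec_end > plan_end:
            execution.append((plan_end, exec_end))
    return planning, execution


def percent_correct(tasks: List[TaskLog]) -> float:
    """Percentage of presented paths whose goal was reached within the block."""
    reached = sum(1 for t in tasks if t.goal_reached_s is not None)
    return 100.0 * reached / len(tasks)
```

For a block in which the first goal is reached at 25 s and the second at 52 s, the execution intervals are 8–25 s and 33–52 s, and the final 8 s of the block are left out of the execution regressor.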

Analysis

All functional analyses were conducted with FEAT version 5.98, part of FSL (FMRIB's Software Library, http://www.fmrib.ox.ac.uk/fsl). Data were processed using standard procedures. The following preprocessing steps were applied: motion correction, Gaussian spatial smoothing (5 mm FWHM), mean intensity normalization, and high‐pass temporal filtering (sigma = 50 s). After preprocessing, regressors for each task and nuisance motion variables were entered into a general linear model. Individual sessions were then incorporated into a second‐level analysis that included a regressor for navigational independence (SBSoD score). All volumes were thresholded using clusters determined by z > 2.3 and a corrected cluster significance threshold of P = 0.05. A secondary region of interest (ROI) analysis was performed on the right temporal parietal junction (TPJ) by drawing a 5‐mm radius spherical ROI centered on the site of TPJ previously identified and reported by Saxe and Kanwisher (2003) (x = 51, y = −54, z = 27) and extracting region‐based statistics. A linear regression was then performed between SBSoD scores and individual z‐statistic activation within this ROI. In an ancillary analysis, we also substituted SBSoD scores with behavioral task performance (% of target rooms reached successfully in the allotted navigation time).
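A spherical ROI of this kind can be sketched with NumPy as a boolean voxel mask, followed by the per‐subject mean z and a least‐squares line against SBSoD scores. The 2‐mm MNI152 grid geometry below (shape and affine) is an assumption, as is treating the Saxe and Kanwisher coordinate as lying in that space; the helper names are illustrative and this is not the FSL tooling the authors used.

```python
import numpy as np

# ASSUMPTION: FSL MNI152 2-mm template geometry (voxel -> mm affine and shape).
MNI_2MM_AFFINE = np.array([
    [-2.0, 0.0, 0.0, 90.0],
    [0.0, 2.0, 0.0, -126.0],
    [0.0, 0.0, 2.0, -72.0],
    [0.0, 0.0, 0.0, 1.0],
])
MNI_2MM_SHAPE = (91, 109, 91)


def sphere_mask(center_mm, radius_mm, affine=MNI_2MM_AFFINE, shape=MNI_2MM_SHAPE):
    """Boolean mask of voxels whose centers lie within radius_mm of center_mm."""
    i, j, k = np.indices(shape)
    vox = np.stack([i, j, k, np.ones_like(i)], axis=-1).astype(float)
    world = vox @ affine.T                       # homogeneous voxel -> mm coords
    dist = np.linalg.norm(world[..., :3] - np.asarray(center_mm, float), axis=-1)
    return dist <= radius_mm


def roi_mean(zstat: np.ndarray, mask: np.ndarray) -> float:
    """Mean z-statistic inside the ROI for one subject's map."""
    return float(zstat[mask].mean())


def linear_fit(x, y):
    """Least-squares slope and intercept plus Pearson r for y regressed on x."""
    slope, intercept = np.polyfit(x, y, 1)
    r = np.corrcoef(x, y)[0, 1]
    return float(slope), float(intercept), float(r)
```

In use, `roi_mean` would be applied to each subject's execution‐versus‐rest z map with `sphere_mask((51, -54, 27), 5)`, and `linear_fit(sbsod_scores, roi_means)` would give the regression line and r (the variable names here are hypothetical).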

RESULTS

The participants' self‐reported navigation skill scores (i.e., sense of environmental spatial ability) obtained with the SBSoD questionnaire are presented and ranked from highest to lowest in Table 1 (mean score 55.89 ± 15.18 SD). Despite a certain degree of variability, the scores subjectively matched clinical impressions of navigation skill and overall independence. Specifically, participants with the highest SBSoD scores were individuals who could be considered expert, highly independent navigators (e.g., long cane users and those who routinely used public transport). Lower SBSoD scores were associated with less independent navigators (e.g., those heavily reliant on assistive resources such as a personal driver). Middle‐range scores were associated with guide dog users.

All participants were able to carry out the virtual navigation tasks within the scanner environment (mean performance: 75.15% correct ± 15.68 SD). Interestingly, these same participants exhibited variable fMRI responses for the contrast of navigation versus rest. Specifically, during both the planning (instruction) and execution periods of the navigation task, a great degree of variability was observed in the magnitude, extent, and location of activation revealed by fMRI. To ascertain whether this variability in individual activation patterns was related to the level of self‐reported navigation skill, individual results were entered into a second‐level analysis in which each subject's SBSoD score was treated as a regressor.
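As a quick arithmetic check, the summary statistics above can be recomputed from the values in Table 1 using the sample standard deviation (n − 1 denominator). This is only a consistency check on the tabulated values, not the authors' analysis code; small differences in the last digit would be expected if the reported figures were computed from unrounded percentages.

```python
from statistics import mean, stdev

# Transcribed from Table 1 (subjects 1-9, in table order).
SBSOD = [76, 70, 68, 66, 54, 50, 48, 41, 30]
PERCENT_CORRECT = [85.7, 71.4, 93.3, 88.9, 83.4, 60.0, 45.0, 82.0, 66.6]

for label, values in (("SBSoD score", SBSOD), ("Task performance (% correct)", PERCENT_CORRECT)):
    # statistics.stdev uses the sample (n - 1) denominator.
    print(f"{label}: {mean(values):.2f} ± {stdev(values):.2f} SD")
```

The recomputed values agree with those reported (55.89 ± 15.18 and 75.15 ± 15.68) to within about 0.02.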

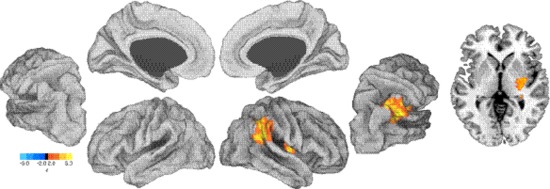

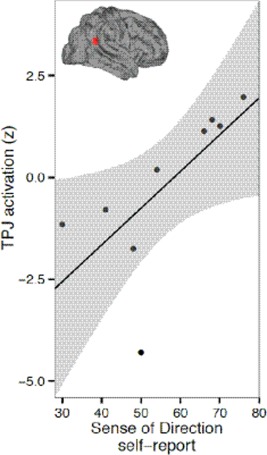

For the execution period, a positive relationship between navigational independence and fMRI activation was found within the right TPJ. This cluster of voxels extended down through the TPJ (cluster maximum, Montreal Neurological Institute (MNI): 48, −48, 22) and included the right lateral thalamus and the posterior putamen (local maximum MNI: 36, −6, 4). The spatial location of these relationships is illustrated in Figure 2, and cluster information is shown in Tables 2 and 3. To extend this analysis further, an independent region of interest analysis revealed a significant correlation between SBSoD scores and fMRI activation in right TPJ (r = 0.69, P < 0.05; Fig. 3).
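With only nine participants, it is worth seeing why r = 0.69 clears P < 0.05. The sketch below converts r to a t statistic with n − 2 degrees of freedom and also computes the smallest |r| that would reach significance. It assumes that the reported r is a Pearson coefficient over the nine participants and that the test is two‐tailed; neither detail is stated explicitly. The critical value 2.365 is the standard tabulated t for df = 7 at two‐tailed α = 0.05.

```python
import math


def r_to_t(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, from a Pearson r over n pairs (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def critical_r(t_crit: float, df: int) -> float:
    """Smallest |r| whose t statistic reaches t_crit at df degrees of freedom."""
    return t_crit / math.sqrt(t_crit * t_crit + df)


T_CRIT_DF7 = 2.365  # two-tailed alpha = 0.05, df = 7 (standard t table value)

t_obs = r_to_t(0.69, 9)
print(f"t(7) = {t_obs:.2f}; critical |r| = {critical_r(T_CRIT_DF7, 7):.3f}")
```

This gives t(7) ≈ 2.52 against a critical value of 2.365; equivalently, with nine participants |r| must exceed about 0.67 to reach P < 0.05 (two‐tailed), so the reported correlation is significant but not by a wide margin.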

Figure 2.

Group map for execution vs. rest contrast correlated with SBSoD scores. Left, partially inflated views of left and right hemispheres showing activation in right TPJ corresponds with higher SBSoD scores. Right, an axial slice through MNI z = 0. Color scale: red, regions positively related to SBSoD scores; blue, regions negatively related to SBSoD scores.

Table 2.

Loci of Activation

| Contrast | x | y | z | Cluster volume (mm³) | P | Cluster index |
|---|---|---|---|---|---|---|
| Navigation × SBSoD (+) | 44.6 | −32.1 | 21.6 | 19,160 | 0.0018 | 1+ |
| Planning × SBSoD (+) | 40.4 | −55.8 | 39 | 19,920 | 0.0006 | 1+ |
| Planning × SBSoD (−) | −52.5 | −27.1 | −2.08 | 39,728 | <0.0001 | 1− |
| Planning × SBSoD (−) | 57.6 | −16.2 | −2.02 | 11,944 | 0.0145 | 2− |
| Planning × SBSoD (−) | 31.3 | 6.67 | −34.8 | 9,520 | 0.0419 | 3− |

x, y, z: cluster center of gravity (voxels correlated with SBSoD questionnaire scores).

Table 3.

Loci of Activation

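| Contrast | x | y | z | z value | Cluster index |
|---|---|---|---|---|---|
| Navigation × SBSoD (+) | 62 | −56 | 30 | 4.08 | 1+ |
| Navigation × SBSoD (+) | 20 | −18 | 12 | 4.01 | 1+ |
| Navigation × SBSoD (+) | 54 | −46 | 34 | 3.96 | 1+ |
| Navigation × SBSoD (+) | 34 | −10 | 0 | 3.96 | 1+ |
| Navigation × SBSoD (+) | 54 | −32 | 34 | 3.94 | 1+ |
| Navigation × SBSoD (+) | 70 | −36 | 34 | 3.87 | 1+ |
| Planning × SBSoD (+) | 28 | −66 | 52 | 4.87 | 1+ |
| Planning × SBSoD (+) | 18 | −68 | 50 | 4.22 | 1+ |
| Planning × SBSoD (+) | 30 | −78 | 36 | 4 | 1+ |
| Planning × SBSoD (+) | 30 | −76 | 44 | 3.97 | 1+ |
| Planning × SBSoD (+) | 30 | −72 | 32 | 3.88 | 1+ |
| Planning × SBSoD (+) | 32 | −80 | 18 | 3.81 | 1+ |
| Planning × SBSoD (−) | −58 | −8 | 4 | 4.34 | 1− |
| Planning × SBSoD (−) | −66 | −8 | 0 | 4.3 | 1− |
| Planning × SBSoD (−) | −62 | −4 | −4 | 4.3 | 1− |
| Planning × SBSoD (−) | −56 | −74 | 26 | 4.22 | 1− |
| Planning × SBSoD (−) | −68 | −24 | −8 | 4.2 | 1− |
| Planning × SBSoD (−) | −58 | −8 | −4 | 4 | 1− |
| Planning × SBSoD (−) | 66 | −6 | −24 | 3.73 | 2− |
| Planning × SBSoD (−) | 58 | −18 | −8 | 3.73 | 2− |
| Planning × SBSoD (−) | 68 | 0 | −18 | 3.66 | 2− |
| Planning × SBSoD (−) | 62 | −10 | 4 | 3.63 | 2− |
| Planning × SBSoD (−) | 54 | −12 | 2 | 3.62 | 2− |
| Planning × SBSoD (−) | 64 | −22 | −2 | 3.61 | 2− |
| Planning × SBSoD (−) | 34 | 12 | −40 | 4.5 | 3− |
| Planning × SBSoD (−) | 26 | 2 | −38 | 3.96 | 3− |
| Planning × SBSoD (−) | 20 | 4 | −18 | 3.83 | 3− |
| Planning × SBSoD (−) | 14 | 10 | −20 | 3.68 | 3− |
| Planning × SBSoD (−) | 16 | 2 | −22 | 3.58 | 3− |
| Planning × SBSoD (−) | 34 | 22 | −44 | 3.54 | 3− |

x, y, z: cluster local maxima.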

Figure 3.

TPJ activation for execution vs. rest correlates with individual SBSoD scores. A spherical region of interest (5‐mm radius) was drawn at the coordinate x = 51, y = −54, z = 27 (Saxe and Kanwisher, 2003). BOLD activation for the execution vs. rest comparison was converted into z‐scores and plotted against individual SBSoD scores. Black line, best linear fit; gray bands, 95% confidence interval on linear fit.

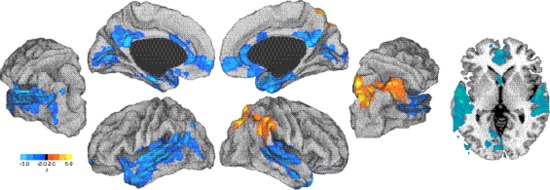

For the planning period (i.e., when subjects listened to the navigation task instructions), a positive relationship was again found in the right TPJ (local maximum MNI: 66, −44, 22) and, in addition, in the superior parietal cortex (local maximum MNI: 20, −66, 60). Negative relationships were found in medial prefrontal cortex (local maximum MNI: −6, 60, −4), bilateral superior temporal lobe [local maximum MNI: −54, −22, 6 (left), 56, −8, 2 (right)], left inferior temporal lobe (local maximum MNI: −40, −6, −22), bilateral anterior cingulate (local maximum MNI: 8, 14, 28), bilateral posterior cingulate (local maximum MNI: 2, −48, 20), and bilateral calcarine sulcus (local maximum MNI: 0, −72, 6). The spatial location of these relationships is shown in Figure 4, and cluster information is shown in Tables 2 and 3.

Figure 4.

Group map for planning vs. rest correlated with SBSoD scores. Left, partially inflated views of left and right hemispheres. Right, an axial slice through MNI z = 0. Red, regions positively related to SBSoD scores; blue, regions negatively related to SBSoD scores.

Finally, in an ancillary analysis, we substituted SBSoD scores with behavioral task performance scores and found that the patterns of activation were qualitatively similar (see Supporting Information). Behavioral task performance was positively correlated with SBSoD scores (Spearman r = 0.58).
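The Spearman coefficient can be reproduced from Table 1 by ranking each variable and computing a Pearson correlation on the ranks, with tied values given the average of the ranks they occupy. A stdlib‐only sketch follows; the data are transcribed from Table 1, and the helper names are illustrative rather than taken from the authors' analysis.

```python
import math
from typing import List, Sequence

# Transcribed from Table 1 (subjects 1-9, in table order).
SBSOD = [76, 70, 68, 66, 54, 50, 48, 41, 30]
PERCENT_CORRECT = [85.7, 71.4, 93.3, 88.9, 83.4, 60.0, 45.0, 82.0, 66.6]


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2.0 + 1.0   # mean of ranks pos+1 .. end+1
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson r computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

For these nine participants `spearman(SBSOD, PERCENT_CORRECT)` returns approximately 0.583, consistent with the reported value. Because neither column contains ties, the result equals the textbook 1 − 6Σd²/(n(n² − 1)) formula (Σd² = 50 here). With n = 9, a coefficient of this size is moderate, and the text does not report a P value for it.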

DISCUSSION

We report that, in the blind, path integration tasks performed within a virtual environment lead to differential activation of core regions of the navigation network, as revealed by fMRI. Furthermore, we assessed brain activation during the planning and execution phases of the task and compared it with individual self‐reported navigation ability as indexed by the SBSoD scale. In highly rated navigators, robust activation within a highly localized region corresponding to the right TPJ correlated significantly with individual SBSoD scores for both the execution and planning components of the navigation task. In contrast, lower rated navigators tended to show activation within a more distributed network of areas, including parahippocampal regions and early sensory processing areas such as the occipital‐calcarine sulcus, as well as the superior and inferior temporal lobe (rather than TPJ), for the planning component of the navigation task.

Activation within TPJ has previously been shown in a variety of cognitive tasks (see Decety and Grezes, 2006), including mentalizing the thoughts of others (Saxe and Kanwisher, 2003), autobiographical memory (Spreng et al., 2009), cross‐modal sensory integration (Downar et al., 2000), and self‐location and first‐person perspective (Ionta et al., 2011a, 2011b). Here, we propose that TPJ is implicated in egocentric‐based navigation and, furthermore, may serve as a marker of independent navigation ability. The exact role of TPJ in spatial navigation has not previously been clearly established. Interestingly, clinical data reported by Ciaramelli et al. (2010) have shown that lesions to TPJ impair performance on egocentric‐based (but not allocentric) navigation tasks, such as the identification of landmarks.

The neural correlates associated with navigation performance in the blind have recently been explored in a number of studies (albeit with approaches different from the one presented here). For example, Deutschlander et al. (2009) used fMRI in congenitally blind subjects while they performed a kinesthetic imagery task (imagined walking and running compared with standing and lying down). In contrast to sighted controls, robust activation within multisensory vestibular areas (posterior insula and superior temporal gyrus) was observed during imagined locomotion in blind subjects (activation within parahippocampal and fusiform areas was not observed). The authors interpreted these findings to suggest that, compared with sighted individuals, the blind are more reliant on vestibular and somatosensory feedback for locomotion control. Kupers et al. (2010) used fMRI in a route recognition task in congenitally blind and sighted (blindfolded) control subjects trained to use a vision‐to‐tactile sensory substitution device (the tongue display unit). Following training, participants carried out a recognition task based on previously explored routes learned with the tongue display unit. The authors reported that blind subjects (but not blindfolded sighted controls) activated right parahippocampus and areas corresponding to visual cortex during this route recognition task (Kupers et al., 2010). In a follow‐up study by the same group, fMRI was used to identify the neural correlates associated with solving a tactile maze pattern (using the index finger) in congenitally blind and sighted subjects. Here, activation was observed in the right medial temporal lobe (i.e., hippocampus and parahippocampus), occipital cortex, and fusiform gyrus in blind participants, while blindfolded sighted controls did not show increased activation in these areas, instead activating the subcortical caudate nucleus and thalamus (Gagnon et al., 2012).
Taken together, these results indicate that structures implicated in the core navigation network are activated during different types of navigation tasks and that differences in the recruitment of these areas are likely to exist not only between blind and sighted individuals but also in relation to the nature of sensory information acquired and the demands related to the navigation task performed.

The results of the study by Gagnon et al. (2012), as well as an earlier structural imaging study by Fortin et al. (2008) (the latter demonstrating an association between larger hippocampal volume and supranormal spatial navigation skills), highlight the importance of the hippocampus in navigation in the blind. While hippocampal activation was observed in certain participants of this study (not shown), overall activation within hippocampal‐parahippocampal regions did not appear to be correlated with individual SBSoD scores for either the execution or the planning component of the navigation task. The lack of a robust, correlated activation may be reconciled by the fact that the path integration routes used in our paradigm were heavily reliant on egocentric navigation strategies and may even have been overlearned. As mentioned in the introduction, the role of the hippocampus is tightly associated with allocentric navigation strategies. In contrast, the robust correlation we observed within parietal areas (TPJ and superior parietal cortex, the latter specifically for the planning phase) is in line with the purported role of these regions in egocentric‐based navigation, which is characterized by more rigid route‐based knowledge and navigation of well‐known paths or environments lacking visual features (Lithfous et al., 2012). It would be of interest to follow up these findings with a neuroimaging study in which participants switch between navigation demands (as previously carried out in sighted individuals by Iaria et al., 2003; Jordan et al., 2004; Latini‐Corazzini et al., 2010; Mellet et al., 2000) to disentangle the role of TPJ and the neural networks associated with egocentric and allocentric strategies in the blind. Indeed, effective navigation is often associated with an ability to switch between one reference frame and another depending on what is optimal for a given situation (Lithfous et al., 2012).
There is evidence that egocentric and allocentric strategies are likely to be mediated by different neural systems (Packard and McGaugh, 1996), and uncovering these spatial cues and corresponding strategies could be of great benefit for O&M instruction in the blind. This raises the interesting possibility of developing a neuroscience‐based approach to O&M training that emphasizes the fundamental differences in how sighted, early blind, and late blind individuals carry out navigation tasks and how these strategies may be taught and differentially employed in specific situations.

In the absence of vision, blind individuals may not be able to easily identify the location of distant landmarks; thus, the relative contribution of, and switching between, navigation strategies (specifically, egocentric vs. allocentric modes of navigation) are likely to differ from those in the sighted. An allocentric reference frame typically describes global (or “survey”) level knowledge of the surrounding environment. In contrast, an egocentric frame reflects a first‐person (or “route” level) perspective and is typically a precursor to developing survey level knowledge (Siegel and White, 1975). In allocentric navigation, sighted individuals maintain a viewpoint‐independent cognitive map of their environment and locate their current position within it. This is not to say that allocentric strategies and representations are impossible in the blind (Noordzij et al., 2006; Ruggiero et al., 2009); rather, it is reasonable to assume that blind individuals (at least initially) may rely more on proximal spatial cues and employ strategies such as maintaining a mental list of turns or body movements that must be made in a particular sequence to arrive at a target location, a hallmark of an egocentric navigation strategy. Indeed, in our study, blind individuals who reported being more independent navigators (as indexed by a higher score on the SBSoD scale) showed strong activation of TPJ. The close anatomical association of TPJ with parietal cortex (strongly associated with egocentric navigation) fits with this view. Alternatively (though not mutually exclusively), activation of TPJ may also be related to the nature of the virtual navigation task performed in this study. That is, the best navigators may have relied on an egocentric navigation strategy because it was the most appropriate for the complexity and scale of the environment used for this task.
As noted above, follow‐up imaging studies designed to selectively promote egocentric versus allocentric frames of reference would help disentangle network activity associated with navigation strategy from that associated with skill performance.
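The contrast between the two reference frames can be made concrete with a toy model of path integration on a grid. This is a deliberate simplification and not the virtual environment or analysis used in this study. An egocentric route is stored as an ordered list of body‐relative moves ('F' for one step forward, 'L' and 'R' for 90° turns); integrating those moves yields the navigator's position and heading in a world‐fixed (allocentric) frame. The move codes, the grid, and the function names `integrate_route` and `homing_vector` are hypothetical, chosen only for illustration.

```python
from typing import Iterable, Tuple

# Unit steps for each allocentric (world-fixed) heading:
# index 0 = north, 1 = east, 2 = south, 3 = west.
HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def integrate_route(
    moves: Iterable[str],
    start: Tuple[int, int] = (0, 0),
    heading: int = 0,
) -> Tuple[Tuple[int, int], int]:
    """Update an allocentric position and heading from egocentric moves.

    'F' = one step forward, 'L' = turn 90 degrees left,
    'R' = turn 90 degrees right. Returns ((x, y), heading_index).
    """
    x, y = start
    for move in moves:
        if move == "L":
            heading = (heading - 1) % 4   # left turn: counterclockwise
        elif move == "R":
            heading = (heading + 1) % 4   # right turn: clockwise
        elif move == "F":
            dx, dy = HEADINGS[heading]    # step in the current world direction
            x, y = x + dx, y + dy
        else:
            raise ValueError(f"unknown move: {move!r}")
    return (x, y), heading


def homing_vector(
    position: Tuple[int, int], start: Tuple[int, int] = (0, 0)
) -> Tuple[int, int]:
    """Straight-line displacement from the current position back to the start."""
    return (start[0] - position[0], start[1] - position[1])


if __name__ == "__main__":
    route = "FFRFFLF"  # hypothetical egocentric route: an ordered list of turns and steps
    position, heading = integrate_route(route)
    print(position, heading, homing_vector(position))
```

Here `integrate_route("FFRFFLF")` returns `((2, 3), 0)`: two steps north, a right turn, two steps east, a left turn, and one more step north. The egocentric list alone is enough to reproduce the route, whereas the integrated allocentric result also supports a shortcut back to the start (`homing_vector` gives `(-2, -3)`).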

Wolbers and Hegarty (2010) highlight that differences occurring at any one or several interdependent stages (including the encoding of sensory spatial information, the formation of spatial mental representations, and the efficiency with which these representations are used for navigation) can lead to variability in navigational abilities. In the case of blindness, differences in performance may arise at a very early stage, given that spatial information typically obtained through visual channels is not available. In our study, poor navigators showed correlated activity within a much more distributed network of areas, including early sensory processing areas, during the planning stage of the navigation task (in contrast to the highly localized activation within TPJ in high‐rated navigators). It is interesting to speculate how this more distributed pattern may relate to the poor navigation abilities observed in this group. Specifically, the steps involved in planning the route may recruit more widely distributed regions of the brain (including early sensory areas) in poor navigators, rather than the more consolidated pattern centered on TPJ observed in high‐rated navigators. The notion that enhanced skill performance is associated with “specific increases within global decreases” in brain activation has been proposed for motor sequence learning (Steele and Penhune, 2010). In this framework, practice‐related shifts in the foci and extent of brain activation are taken to reflect changes in attentional demands, the development of automaticity, and the suppression of task‐irrelevant processing (Petersen et al., 1998; Sakai et al., 1998; Tracy et al., 2003). Similar processes may underlie the mastery of navigation skill, at least as it is characterized using virtual‐environment assessments.

Ohnishi et al. (2006) carried out an fMRI study in sighted subjects with a similar goal of relating navigation performance to patterns of brain activation, and their results support the notion that navigation skill is related to differential brain activity. Using a passive maze task, this group found that, compared with poor navigators, good navigators showed strong activation in the hippocampus and parahippocampal areas (bilaterally) as well as in the precuneus. More specifically, activity in left medial temporal areas was positively correlated with task performance, whereas activity in right parietal areas was negatively correlated (the opposite of what we report here). By correlating performance with another measure of sense of direction (the Sense of Direction Questionnaire‐Short Form), they showed that egocentric route strategies were associated with poor navigators, whereas good navigators relied on allocentric orientation strategies. Intriguingly, the same study found less activation within TPJ during navigation in individuals with high scores on their measure of sense of direction (Ohnishi et al., 2006). Such differences in strategy may account for contradictory conclusions (particularly in relation to the importance of prior visual experience) regarding the accuracy of cognitive spatial representations and overall navigation skill in the blind compared with sighted individuals (Kitchin et al., 1997; Loomis et al., 2001).

In an ancillary analysis, we found that replacing the SBSoD scores with task performance scores yielded qualitatively similar results in terms of localizing activation within TPJ. This result is not surprising given that SBSoD scores were correlated with behavioral task performance. On one level, this makes it difficult to disentangle task performance from navigational independence within the context of this experiment. However, in line with the original aims of the study, the SBSoD is an independent measure that characterizes real‐world navigational abilities, and thus this observation does not detract from our fundamental finding that blind individuals with high navigation abilities show stronger activation within TPJ than individuals with lower abilities. Moreover, successful task performance is an artificial construct for the purposes of this study, characterizing the participant's ability to reach the target room within the allotted time. Thus, we argue that navigation performance alone (as carried out in this virtual environment) does not capture all of the challenges faced, and strategies employed, by blind individuals as they find their way in the real world.
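Why swapping one behavioral covariate for a correlated one gives similar results can be illustrated with a minimal sketch. It assumes a simplified region‐of‐interest setup with a single activation estimate per participant (for example, a mean response extracted from TPJ); it does not reproduce the imaging analysis of this study. The function names and every input value below are hypothetical and are not data from this study.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two samples of equal length (n >= 3)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations from the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t statistic for testing r != 0, with n - 2 degrees of freedom."""
    if abs(r) >= 1.0:
        raise ValueError("t is undefined for |r| = 1")
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r ** 2)


if __name__ == "__main__":
    # Hypothetical per-participant values (n = 9); NOT data from this study.
    sbsod = [2.1, 2.8, 3.0, 3.4, 3.9, 4.2, 4.8, 5.1, 5.6]      # questionnaire score
    task_perf = [0.2, 0.4, 0.3, 0.5, 0.6, 0.5, 0.8, 0.7, 0.9]  # proportion of targets reached
    roi_beta = [0.1, 0.3, 0.2, 0.4, 0.6, 0.5, 0.7, 0.9, 1.0]   # mean ROI activation

    n = len(roi_beta)
    for name, covariate in [("SBSoD", sbsod), ("task performance", task_perf)]:
        r = pearson_r(covariate, roi_beta)
        print(f"{name}: r = {r:.3f}, t({n - 2}) = {t_statistic(r, n):.2f}")
    print(f"SBSoD vs task performance: r = {pearson_r(sbsod, task_perf):.3f}")
```

Because the two hypothetical covariates are themselves strongly correlated, both produce similar correlations with the ROI values. This mirrors why the ancillary analysis cannot fully separate task performance from navigational independence.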

Finally, we attempted to recruit a cohort of individuals representing a wide range of navigation skill and independence. However, our sample size of nine participants is a potential limitation, particularly for a correlational study. As such, the conclusions drawn here are preliminary and await confirmation in a larger sample. Further studies should be designed to leverage the strengths of virtual environments within the constraints of neuroimaging settings to fully elucidate the neural correlates associated with this skill. For example, does activation within TPJ change as a function of training and skill development? To answer this question, a pre‐ to postintervention design could be employed to provide more direct evidence of training‐related changes in TPJ (and potentially other areas). Work by our group to explore this question is currently underway.
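To make the sample‐size concern concrete, the standard t test for a Pearson correlation can be inverted to give the smallest correlation that reaches significance with n = 9 participants. This worked example assumes a single two‐tailed test at α = 0.05 on one pair of measures; an imaging analysis that corrects for multiple comparisons would impose a stricter threshold.

```latex
\begin{aligned}
t &= \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}}
  \;\Longrightarrow\;
  r_{\mathrm{crit}} = \frac{t_{\mathrm{crit}}}{\sqrt{t_{\mathrm{crit}}^{2} + (n-2)}} \\
n &= 9,\quad \mathrm{df} = n-2 = 7,\quad t_{\mathrm{crit}} = t_{0.975,\,7} \approx 2.365 \\
r_{\mathrm{crit}} &= \frac{2.365}{\sqrt{2.365^{2} + 7}} = \frac{2.365}{\sqrt{12.593}} \approx 0.666
\end{aligned}
```

With nine participants, only correlations of roughly |r| ≥ 0.67 (about 44% shared variance) reach nominal significance, and the confidence interval around any observed r remains wide.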

CONCLUSION

Spatial navigation in the blind (as assessed by a virtual path integration task) appears to engage many of the core brain areas identified as part of the navigation network. In this study, we report that high navigational ability in the blind is correlated with the recruitment of brain regions associated with egocentric navigation. The development of neuroscience‐based strategies for O&M training may ultimately help reduce the apparent variability in navigation performance by better characterizing the factors associated with this skill.

Supporting information

ACKNOWLEDGMENTS

The authors would like to thank the research participants and the staff of the Carroll Center for the Blind (Newton, MA) for their support in carrying out this research and Marina Bedny for comments on an earlier draft of this manuscript. The authors declare no conflicts of interest related to the work presented.

REFERENCES

- Best PJ, White AM, Minai A (2001): Spatial processing in the brain: The activity of hippocampal place cells. Annu Rev Neurosci 24:459–486.

- Blasch BB, Wiener WR, Welsh RL, editors (1997): Foundations of Orientation and Mobility, 2nd ed. New York: AFB Press.

- Burgess N (2008): Spatial cognition and the brain. Ann N Y Acad Sci 1124:77–97.

- Chebat DR, Schneider FC, Kupers R, Ptito M (2011): Navigation with a sensory substitution device in congenitally blind individuals. Neuroreport 22:342–347.

- Chrastil ER (2013): Neural evidence supports a novel framework for spatial navigation. Psychon Bull Rev 20:208–227.

- Ciaramelli E, Rosenbaum RS, Solcz S, Levine B, Moscovitch M (2010): Mental space travel: Damage to posterior parietal cortex prevents egocentric navigation and reexperiencing of remote spatial memories. J Exp Psychol Learn Mem Cogn 36:619–634.

- Connors EC, Yazzolino LA, Sánchez J, Merabet LB (2013): Development of an audio‐based virtual gaming environment to assist with navigation skills in the blind. J Vis Exp 73. doi: 10.3791/50272.

- Decety J, Grezes J (2006): The power of simulation: Imagining one's own and other's behavior. Brain Res 1079:4–14.

- Deutschlander A, Stephan T, Hufner K, Wagner J, Wiesmann M, Strupp M, Brandt T, Jahn K (2009): Imagined locomotion in the blind: An fMRI study. Neuroimage 45:122–128.

- Downar J, Crawley AP, Mikulis DJ, Davis KD (2000): A multimodal cortical network for the detection of changes in the sensory environment. Nat Neurosci 3:277–283.

- Fortin M, Voss P, Lord C, Lassonde M, Pruessner J, Saint‐Amour D, Rainville C, Lepore F (2008): Wayfinding in the blind: Larger hippocampal volume and supranormal spatial navigation. Brain 131:2995–3005.

- Gagnon L, Schneider FC, Siebner HR, Paulson OB, Kupers R, Ptito M (2012): Activation of the hippocampal complex during tactile maze solving in congenitally blind subjects. Neuropsychologia 50:1663–1671.

- Galati G, Committeri G, Sanes JN, Pizzamiglio L (2001): Spatial coding of visual and somatic sensory information in body‐centred coordinates. Eur J Neurosci 14:737–746.

- Galati G, Lobel E, Vallar G, Berthoz A, Pizzamiglio L, Le Bihan D (2000): The neural basis of egocentric and allocentric coding of space in humans: A functional magnetic resonance study. Exp Brain Res 133:156–164.

- Gardner JL, Merriam EP, Movshon JA, Heeger DJ (2008): Maps of visual space in human occipital cortex are retinotopic, not spatiotopic. J Neurosci 28:3988–3999.

- Giudice NA, Bakdash JZ, Legge GE (2007): Wayfinding with words: Spatial learning and navigation using dynamically updated verbal descriptions. Psychol Res 71:347–358.

- Giudice NA, Betty MR, Loomis JM (2011): Functional equivalence of spatial images from touch and vision: Evidence from spatial updating in blind and sighted individuals. J Exp Psychol Learn Mem Cogn 37:621–634.

- Hartley T, Maguire EA, Spiers HJ, Burgess N (2003): The well‐worn route and the path less traveled: Distinct neural bases of route following and wayfinding in humans. Neuron 37:877–888.

- Hegarty M, Richardson AE, Montello DR, Lovelace K, Subbiah I (2002): Development of a self‐report measure of environmental spatial ability. Intelligence 30:425–447.

- Iaria G, Petrides M, Dagher A, Pike B, Bohbot VD (2003): Cognitive strategies dependent on the hippocampus and caudate nucleus in human navigation: Variability and change with practice. J Neurosci 23:5945–5952.

- Ionta S, Gassert R, Blanke O (2011a): Multi‐sensory and sensorimotor foundation of bodily self‐consciousness: An interdisciplinary approach. Front Psychol 2:383.

- Ionta S, Heydrich L, Lenggenhager B, Mouthon M, Fornari E, Chapuis D, Gassert R, Blanke O (2011b): Multisensory mechanisms in temporo‐parietal cortex support self‐location and first‐person perspective. Neuron 70:363–374.

- Jordan K, Schadow J, Wuestenberg T, Heinze HJ, Jancke L (2004): Different cortical activations for subjects using allocentric or egocentric strategies in a virtual navigation task. Neuroreport 15:135–140.

- Kalia AA, Legge GE, Roy R, Ogale A (2010): Assessment of indoor route‐finding technology for people with visual impairment. J Vis Impair Blind 104:135–147.

- Kitchin RM, Blades M, Golledge RG (1997): Understanding spatial concepts at the geographic scale without the use of vision. Prog Hum Geogr 21:225–242.

- Kupers R, Chebat DR, Madsen KH, Paulson OB, Ptito M (2010): Neural correlates of virtual route recognition in congenital blindness. Proc Natl Acad Sci U S A 107:12716–12721.

- Lahav O, Schloerb DW, Srinivasan MA (2012): Newly blind persons using virtual environment system in a traditional orientation and mobility rehabilitation program: A case study. Disabil Rehabil Assist Technol 7:420–435.

- Latini‐Corazzini L, Nesa MP, Ceccaldi M, Guedj E, Thinus‐Blanc C, Cauda F, Dagata F, Peruch P (2010): Route and survey processing of topographical memory during navigation. Psychol Res 74:545–559.

- Lithfous S, Dufour A, Despres O (2012): Spatial navigation in normal aging and the prodromal stage of Alzheimer's disease: Insights from imaging and behavioral studies. Ageing Res Rev 12:201–213.

- Long RG, Giudice NA (2010): Establishing and maintaining orientation for mobility. In: Blasch BB, Wiener WR, Welsh RL, editors. Foundations of Orientation and Mobility, 3rd ed., Vol. 1: History and Theory. New York: American Foundation for the Blind; pp 45–62.

- Loomis JM, Klatzky RL, Golledge RG (2001): Navigating without vision: Basic and applied research. Optom Vis Sci 78:282–289.

- Loomis JM, Marston JR, Golledge RG, Klatzky RL (2005): Personal guidance system for people with visual impairment: A comparison of spatial displays for route guidance. J Vis Impair Blind 99:219–232.

- Maguire EA, Burgess N, Donnett JG, Frackowiak RS, Frith CD, O'Keefe J (1998): Knowing where and getting there: A human navigation network. Science 280:921–924.

- Maguire EA, Gadian DG, Johnsrude IS, Good CD, Ashburner J, Frackowiak RS, Frith CD (2000): Navigation‐related structural change in the hippocampi of taxi drivers. Proc Natl Acad Sci U S A 97:4398–4403.

- Maier JX, Groh JM (2009): Multisensory guidance of orienting behavior. Hear Res 258:106–112.

- Mellet E, Briscogne S, Tzourio‐Mazoyer N, Ghaem O, Petit L, Zago L, Etard O, Berthoz A, Mazoyer B, Denis M (2000): Neural correlates of topographic mental exploration: The impact of route versus survey perspective learning. Neuroimage 12:588–600.

- Merabet LB, Connors EC, Halko MA, Sanchez J (2012): Teaching the blind to find their way by playing video games. PLoS One 7:e44958.

- Noordzij ML, Zuidhoek S, Postma A (2006): The influence of visual experience on the ability to form spatial mental models based on route and survey descriptions. Cognition 100:321–342.

- O'Keefe J, Burgess N, Donnett JG, Jeffery KJ, Maguire EA (1998): Place cells, navigational accuracy, and the human hippocampus. Philos Trans R Soc Lond B Biol Sci 353:1333–1340.

- Ohnishi T, Matsuda H, Hirakata M, Ugawa Y (2006): Navigation ability dependent neural activation in the human brain: An fMRI study. Neurosci Res 55:361–369.

- Packard MG, McGaugh JL (1996): Inactivation of hippocampus or caudate nucleus with lidocaine differentially affects expression of place and response learning. Neurobiol Learn Mem 65:65–72.

- Petersen SE, van Mier H, Fiez JA, Raichle ME (1998): The effects of practice on the functional anatomy of task performance. Proc Natl Acad Sci U S A 95:853–860.

- Ruggiero G, Ruotolo F, Iachini T (2009): The role of vision in egocentric and allocentric spatial frames of reference. Cogn Process 10 Suppl 2:S283–S285.

- Sakai K, Hikosaka O, Miyauchi S, Takino R, Sasaki Y, Putz B (1998): Transition of brain activation from frontal to parietal areas in visuomotor sequence learning. J Neurosci 18:1827–1840.

- Saxe R, Kanwisher N (2003): People thinking about thinking people. The role of the temporo‐parietal junction in “theory of mind.” Neuroimage 19:1835–1842.

- Siegel AW, White SH (1975): The development of spatial representations of large‐scale environments. Adv Child Dev Behav 10:9–55.

- Spiers HJ, Maguire EA (2006a): Spontaneous mentalizing during an interactive real world task: An fMRI study. Neuropsychologia 44:1674–1682.

- Spiers HJ, Maguire EA (2006b): Thoughts, behaviour, and brain dynamics during navigation in the real world. Neuroimage 31:1826–1840.

- Spiers HJ, Maguire EA (2007): Decoding human brain activity during real‐world experiences. Trends Cogn Sci 11:356–365.

- Spreng RN, Mar RA, Kim AS (2009): The common neural basis of autobiographical memory, prospection, navigation, theory of mind, and the default mode: A quantitative meta‐analysis. J Cogn Neurosci 21:489–510.

- Steele CJ, Penhune VB (2010): Specific increases within global decreases: A functional magnetic resonance imaging investigation of five days of motor sequence learning. J Neurosci 30:8332–8341.

- Tracy J, Flanders A, Madi S, Laskas J, Stoddard E, Pyrros A, Natale P, DelVecchio N (2003): Regional brain activation associated with different performance patterns during learning of a complex motor skill. Cereb Cortex 13:904–910.

- Vann SD, Aggleton JP, Maguire EA (2009): What does the retrosplenial cortex do? Nat Rev Neurosci 10:792–802.

- Vogeley K, Fink GR (2003): Neural correlates of the first‐person‐perspective. Trends Cogn Sci 7:38–42.

- Wolbers T, Hegarty M (2010): What determines our navigational abilities? Trends Cogn Sci 14:138–146.

- Wolbers T, Klatzky RL, Loomis JM, Wutte MG, Giudice NA (2011): Modality‐independent coding of spatial layout in the human brain. Curr Biol 21:984–989.

- Wolbers T, Weiller C, Buchel C (2004): Neural foundations of emerging route knowledge in complex spatial environments. Brain Res Cogn Brain Res 21:401–411.
