Abstract

Audiovisual speech consists of overlapping and invariant patterns of dynamic acoustic and optic articulatory information. Research has shown that infants can perceive a variety of basic audio-visual (A-V) relations but no studies have investigated whether and when infants begin to perceive higher order A-V relations inherent in speech. Here, we asked whether and when infants become capable of recognizing amodal language identity, a critical perceptual skill that is necessary for the development of multisensory communication. Because, at a minimum, such a skill requires the ability to perceive suprasegmental auditory and visual linguistic information, we predicted that this skill would not emerge before higher-level speech processing and multisensory integration skills emerge. Consistent with this prediction, we found that recognition of the amodal identity of language emerges at 10-12 months of age but that when it emerges it is restricted to infants’ native language.

Keywords: perceptual development; intersensory; speech; language

Interpersonal communication via the speech modality is usually represented by temporally and spatially synchronous auditory and visual streams of information. These streams correspond in terms of their intensity, duration, tempo, and rhythm (Yehia, Rubin, & Vatikiotis-Bateson, 1998) and, as a result, provide a highly salient and redundant communicative signal. Normally, we take advantage of the audio-visual (A-V) redundancy of everyday speech and because of this we not only perceive our interlocutors as unified amodal entities (Fowler, 2004; Rosenblum, 2008; Sumby & Pollack, 1954) but even recognize their specific amodal identity (Kamachi, Hill, Lander, & Vatikiotis-Bateson, 2003; Lachs & Pisoni, 2004). With specific regard to the latter, this means that adults can link a specific person’s face with that person’s voice. These facts raise questions about whether we might also be able to recognize other amodal features of talkers such as, for example, the amodal identity of a talker’s language and whether such an ability might be present early in development. In other words, can infants perceive the correlation between the vocalizations produced by a talker and the talker’s accompanying visual articulatory movements, integrate them into a unified percept, and then extract the multisensory identity information inherent in such a percept?

The theoretical expectation that the ability to recognize the amodal identity of a talker’s language might emerge early in life is consistent with a body of research showing that A-V intersensory perceptual abilities emerge during infancy and that they become relatively sophisticated by the end of the first year of life (Lewkowicz, 2000; Lewkowicz & Ghazanfar, 2009). For example, newborn infants can perceive A-V intensity equivalence (Lewkowicz & Turkewitz, 1980, 1981), learn their mother’s face if they have access to her voice in the first hours after birth (Sai, 2005), and can match monkey facial and vocal gestures purely on the basis of synchrony rather than their identity (Lewkowicz, Leo, & Simion, 2010). Two months later, infants begin to detect amodal phonetic information inherent in single syllables (Kuhl & Meltzoff, 1982; Patterson & Werker, 1999, 2003) and by three months infants can associate specific people’s faces and voices (Brookes, et al., 2001). By five months, infants can integrate conflicting audible and visible speech information in a manner consistent with the McGurk effect (Rosenblum, Schmuckler, & Johnson, 1997) and can recognize that human faces produce human speech sounds and that monkey faces produce monkey sounds (Vouloumanos, Druhen, Hauser, & Huizink, 2009). By six months, infants can perceive illusory spatiotemporal A-V relations (Scheier, Lewkowicz, & Shimojo, 2003) as well as A-V duration equivalence (Lewkowicz, 1986) and by seven months infants can perceive face-voice affect equivalence (Walker-Andrews, 1986). Finally, by eight months infants can perceive face-voice gender equivalence (Patterson & Werker, 2002) and can perform adult-like integration of auditory and visual spatial localization cues (Neil, Chee-Ruiter, Scheier, Lewkowicz, & Shimojo, 2006).

The rapid improvement in intersensory perception and the emergence of the ability to perceive amodal phonetic information (Kuhl & Meltzoff, 1982; Patterson & Werker, 1999, 2003) as well as amodal species identity (Vouloumanos, et al., 2009) suggest that the ability to perceive amodal language identity should emerge sometime during infancy as well. This ability must, however, be preceded by the ability to perceive the amodal attributes of audiovisual speech at the utterance level and this, in turn, requires that infants be able to process speech at the segmental level. Findings show, in fact, that infants begin to detect various segmental speech features during the second half of the first year of life. For example, it is then that infants first begin to detect typical word stress patterns (Jusczyk, Cutler, & Redanz, 1993), recognize language-specific sound combinations (Jusczyk, Luce, & Charles-Luce, 1994), use transitional probabilities and/or prosodic cues to identify words in continuous speech (Saffran, Aslin, & Newport, 1996), and begin to recognize word forms and mispronunciations of familiar words (Swingley, 2005; Vihman, Nakai, DePaolis, & Hallé, 2004). In addition, infants begin to understand words at around 10 months of age and by their first birthday they begin to acquire their native lexicon and its semantic properties (Benedict, 1979; Fenson, et al., 1994; Huttenlocher, 1974). Thus, it is likely that the ability to perceive the amodal character of audiovisual speech at the utterance (i.e., fluent speech) level emerges sometime during the second half of the first year of life. Critically, however, when this ability emerges it is likely that it is restricted to the infants’ native language because multisensory perceptual narrowing produces a decline in responsiveness to non-native audiovisual inputs by the end of the first year of life (Lewkowicz & Ghazanfar, 2009; Pons, Lewkowicz, Soto-Faraco, & Sebastián-Gallés, 2009).

To examine this prediction, we tested 6-8 and 10-12 month-old infants and used a paired-preference procedure to investigate looking at two identical faces speaking silently. One face could be seen speaking in the infants’ native language and the other in their non-native language. The experiment consisted of a baseline and a familiarization/test phase. During the baseline phase infants watched the two faces speaking silently. During the familiarization/test phase infants heard one of the audible languages, saw the pairs of silently talking faces, heard the same audible language again, and saw the pairs of silently talking faces again but reversed for side of presentation. During both phases we measured looking and asked whether preferences would change following exposure to audible-only speech. As suggested earlier, our general expectation was that the ability to perceive amodal language identity would emerge sometime in the second half of the first year of life and that this would be reflected in a shift in visual preferences following familiarization. Our more specific expectation was that we would not observe the shift until 10-12 months of age because it is then that intersensory perceptual abilities are sufficiently developed to permit infants to perceive amodal language identity. In addition, we expected that we would only observe the shift following familiarization with the infants’ native language because of multisensory narrowing.

Method

Participants

We tested 6-8 month-old (N = 96; 44 girls; M age = 7 months, range = 5 months, 25 days - 8 months, 29 days) and 10-12 month-old (N = 96; 47 girls; M age = 11 months, 6 days, range = 9 months, 24 days - 12 months, 19 days) infants. All infants were raised in mostly monolingual English homes. This was established by a detailed questionnaire (Bosch & Sebastián-Gallés, 2001) which determined which languages were spoken by the parents and other close relatives. As in previous studies (Bosch & Sebastián-Gallés, 2001; Weikum, et al., 2007), monolingual infants were defined as those infants who had at least 80-85% exposure to a specific language (in our case, English). All but eight of the infants were Caucasian.

Apparatus & Stimuli

Infants were seated in an infant seat or in their parent’s lap 50 cm from two side-by-side 17-inch (43.2 cm) LCD display monitors that were 6.7 cm apart. The experimenter was outside of the testing chamber and only started the experiment. Infant looking was recorded via a closed-circuit camera and was later coded off-line by observers who were blind with respect to the experimental hypothesis.

A single and continuous QuickTime movie was used to present all stimulus events. The visual events consisted of video clips showing the face of the same Caucasian bilingual female silently uttering a script in a highly prosodic style in English on one monitor and Spanish on the other and in a temporally similar way in each language (see Fig. 1). The auditory events consisted of 20 s clips of audible-only utterances (65±5 dB[A]) that corresponded to the first 20 s of one of the visible languages. To ensure that infants did not respond on the basis of some idiosyncratic features of the actor’s face and vocalizations, the audible utterances were not those of the female seen but rather those of two other and different females, each of whom was a native speaker of her respective language.

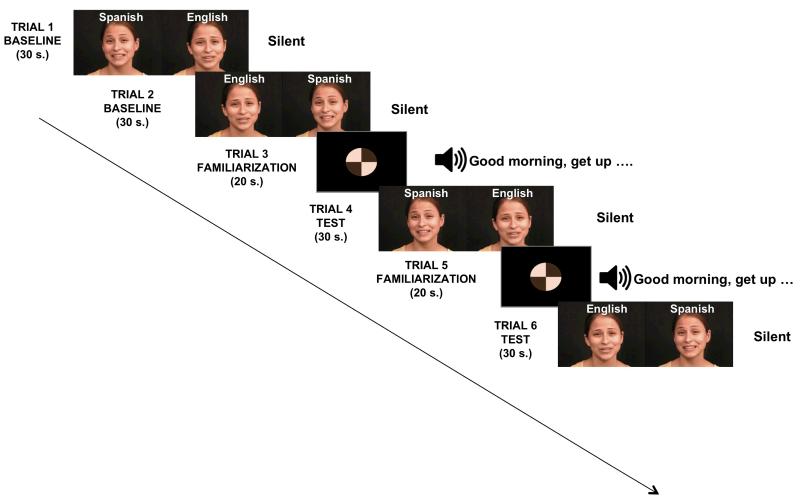

Figure 1.

A schematic representation of the types of stimuli presented during the experiment (showing only the English soundtrack condition).

Procedure

The experiment consisted of six trials: two baseline, two auditory familiarization, and two test trials (Fig. 1). Each baseline and test trial lasted 30 s and side of language presentation was switched on the second of each of these two types of trials. Each auditory familiarization trial was followed by a test trial and during the familiarization trials half the infants at each age heard the English soundtrack while half heard the Spanish soundtrack. We presented a multicolored rotating ball in the center prior to each visual test trial and during the familiarization trials to give infants something to look at while they listened to the utterance.
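The trial sequence described above can be sketched as a small data structure. This is an illustrative sketch only, not the presentation software used in the study: the names `Trial` and `build_schedule` are hypothetical, the 20 s familiarization duration is inferred from the length of the auditory clips, and the side on which English first appears is an assumption because the counterbalancing scheme is not described.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Trial:
    kind: str                    # "baseline", "familiarization", or "test"
    duration_s: int              # trial length in seconds
    english_side: Optional[str]  # side of the English face; None when only the ball is shown
    audio: Optional[str]         # audible language; None on silent trials


def build_schedule(audible_language: str, first_english_side: str = "left") -> List[Trial]:
    """Six trials: two baseline, then two familiarization/test pairs.

    Side of language presentation is switched on the second baseline
    trial and on the second test trial. The starting side is an assumption.
    """
    other_side = "right" if first_english_side == "left" else "left"
    return [
        Trial("baseline", 30, first_english_side, None),
        Trial("baseline", 30, other_side, None),
        Trial("familiarization", 20, None, audible_language),
        Trial("test", 30, first_english_side, None),
        Trial("familiarization", 20, None, audible_language),
        Trial("test", 30, other_side, None),
    ]


# Schedule for an infant assigned to the English soundtrack.
schedule = build_schedule("English")
```

Representing each trial as an immutable record keeps the side-switching rule explicit and makes it easy to check that every familiarization trial is immediately followed by a test trial.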

Results

We analyzed looking during the first 20 s of the baseline and test trials based on the expectation that infants would map the auditory utterance directly onto the corresponding visual utterance if they were perceiving amodal identity information. In the first analysis, we asked whether infants preferred either silent visual language by examining looking during the baseline trials. Results indicated that, regardless of age, infants looked equally at Spanish vs. English, respectively, in each language-familiarization group (younger, Spanish-familiarized infants: 9.4 s vs. 8.5 s [t (47) = 0.94, n.s.]; older, Spanish-familiarized infants: 8.8 s vs. 8.2 s [t (47) = 0.92, n.s.]; younger, English-familiarized infants: 8.3 s vs. 9.6 s [t (47) = 1.36, n.s.]; older, English-familiarized infants: 8.7 s vs. 9.1 s [t (47) = 0.56, n.s.]).

In a second analysis, we addressed the principal question of interest, namely whether infants recognized amodal language identity. To do so, we compared looking prior to auditory familiarization with looking following it based on separate baseline and test-trial proportion-of-total-looking-time (PTLT) scores. To compute each score, we divided the total amount of looking time accorded to the matching visible language by the total amount of time accorded to both languages. We then compared these PTLT scores by way of a mixed analysis of variance (ANOVA), with Age (6-8 and 10-12 months) and Audible-Language (English, Spanish) as the between-subjects factors and Experimental Condition (baseline, test) as the within-subjects factor.
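The PTLT computation described above can be illustrated with a minimal sketch. The function name `ptlt` and the looking times below are hypothetical and are not data from the study; the sketch only shows how a baseline score and a test score would be derived and compared for a single infant.

```python
def ptlt(match_s: float, nonmatch_s: float) -> float:
    """Proportion of total looking time directed at the matching visible language."""
    total = match_s + nonmatch_s
    if total <= 0:
        raise ValueError("no looking time recorded for either face")
    return match_s / total


# Hypothetical looking times in seconds for one infant (illustrative values only).
baseline_score = ptlt(match_s=9.0, nonmatch_s=9.0)   # equal looking at both faces
test_score = ptlt(match_s=6.0, nonmatch_s=10.0)      # less looking at the matching face

# A negative shift means the infant looked relatively less at the matching
# face after familiarization, i.e. a preference for the non-matching face.
shift = test_score - baseline_score
```

A score of .50 indicates no preference, so a drop below the baseline score following familiarization corresponds to increased looking at the non-matching language.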

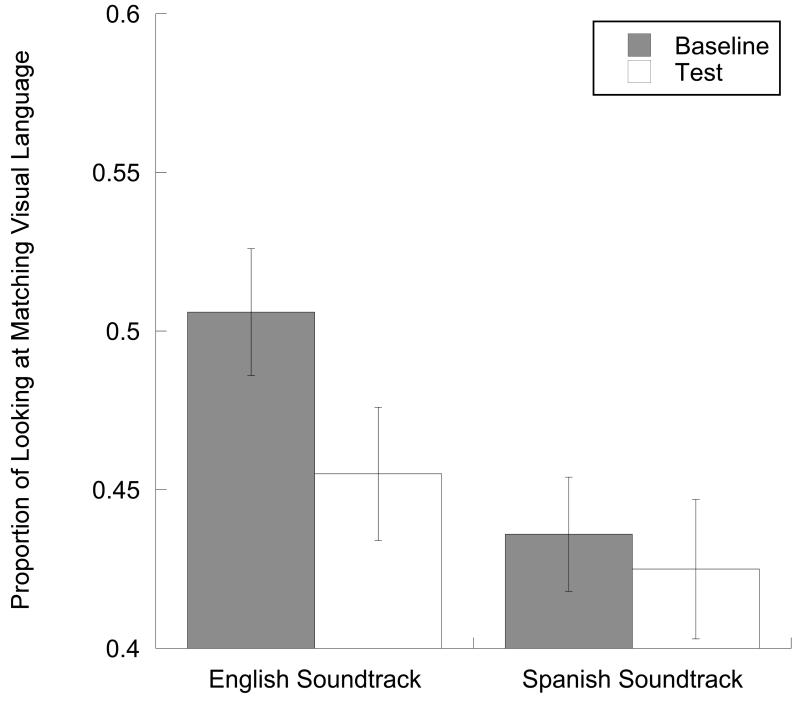

The ANOVA yielded a significant triple interaction [F (1, 188) = 5.34, p < .025]. Follow-up tests indicated that this interaction was due to the younger infants not exhibiting a shift in looking following familiarization and the older infants exhibiting a shift following familiarization with the English utterance but not with the Spanish one. That is, no significant effects were observed in the younger infants whereas a significant Audible-Language x Experimental Condition interaction [F (1, 94) = 3.83, p = .05] was found in the older infants (see Figure 2). Separate two-tailed t-tests revealed that those older infants who were familiarized with the English audible utterance looked longer at the Spanish face [t (47) = 2.51, p < .025] but that those who were familiarized with the Spanish audible utterance did not exhibit differential looking.
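The degrees of freedom reported for these tests follow from the sample sizes given in the Method section. The short check below is a sketch under the assumption of equal cell sizes (96 infants per age group, half hearing each language); the helper name `error_df` is hypothetical.

```python
def error_df(n_participants: int, n_between_cells: int) -> int:
    """Error degrees of freedom for an effect involving a two-level
    within-subjects factor in a mixed design: N minus the number of
    between-subjects cells."""
    return n_participants - n_between_cells


n_per_cell = 48  # 96 infants per age group, half familiarized with each language

# Full design: 2 ages x 2 audible languages = 4 between-subjects cells.
df_full_design = error_df(4 * n_per_cell, 4)

# Older infants only: 2 audible-language cells.
df_older_only = error_df(2 * n_per_cell, 2)

# t-test on the scores of a single cell of 48 infants.
df_single_cell_t = n_per_cell - 1

print(df_full_design, df_older_only, df_single_cell_t)  # prints: 188 94 47
```

These values match the reported F (1, 188), F (1, 94), and t (47).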

Figure 2.

Mean proportion of total looking at the matching visible language during the baseline trials and during the test trials following familiarization with either the English or the Spanish soundtrack in the 10-12 month-old infants. Error bars indicate the standard errors of the mean.

Discussion

The current findings demonstrate that infants begin to recognize the amodal identity of their native language by the end of the first year of life. Specifically, the findings showed that following familiarization with an auditory-only English speech utterance, older English-learning infants looked longer at a face speaking silently in Spanish than at a face speaking silently in English. This novelty effect is similar to previous reports of novelty effects in both the intersensory perception literature (Gottfried, Rose, & Bridger, 1977) and the visual perception literature (Pascalis, de Haan, & Nelson, 2002). It shows that the older infants recognized the correspondence between the previously heard English utterance and the English-speaking face during the test trials. This finding confirms our prediction that infants are likely to begin recognizing native-language, but not non-native language, amodal identity by the end of the first year of life.

The finding that the older infants recognized native-language amodal identity suggests that they extracted, remembered, and matched common visible and audible speech attributes. If so, they did this either by detecting the spatiotemporally correlated patterns of optic and acoustic energy and/or by detecting the invariant optic and acoustic lexical information. Most likely, it was the former because even 10-month-old infants can only comprehend approximately 10 words (Fenson, et al., 1994) and, thus, do not possess a large-enough mental lexicon to detect the specific lexical items in the utterances presented here. If recognition of amodal language identity depends on the detection of the correlated temporal patterns of acoustic and optic energy, then why did the older infants fail to detect the correlated patterns following familiarization with Spanish?

The most likely answer is that responsiveness to speech and language in older infants reflects the operation of two different and opposing developmental processes. The first is the process of learning and differentiation which leads to the developmental emergence of gradually improving perceptual detection skills (Gibson, 1969). It gradually enables infants to discover native-language segmental, suprasegmental, and lexical features (Johnson & Jusczyk, 2001; Jusczyk, et al., 1993; Jusczyk, et al., 1994; Saffran, et al., 1996; Swingley, 2005; Vihman, et al., 2004) and gradually leads to an improvement in intersensory perceptual skills (Lewkowicz, 2002; Lewkowicz & Ghazanfar, 2009). The second and opposing process is perceptual narrowing that occurs at both the unisensory and multisensory processing levels. It is illustrated by findings showing that younger infants exhibit broader unisensory and multisensory perceptual tuning than do older infants. For example, it has been found that younger infants can perceive native as well as non-native audible-only and visible-only speech (Weikum, et al., 2007; Werker & Tees, 1984), native and non-native faces (Pascalis, et al., 2002), native-race and non-native race faces (Pascalis & Kelly, 2009), and the amodal attributes of native and non-native speech (Pons, et al., 2009). In contrast, it has been found that older infants only perceive native audible-only and visible-only speech, faces, and amodal attributes of speech (Mattock & Burnham, 2006; Pascalis, et al., 2002; Pons, et al., 2009; Skoruppa, et al., 2009; Weikum, et al., 2007; Werker & Tees, 1984, 2005). Thus, even though infants’ perceptual expertise improves during development due to learning and differentiation, the breadth of that expertise narrows due to exposure to native-only auditory, visual, and audiovisual perceptual inputs.

If the older infants recognized amodal language identity by perceiving the correlated temporal patterns of acoustic and optic energy, the perceptual feature that most likely mediated responsiveness was language prosody. It is known that infants can distinguish between languages on the basis of their prosodic (i.e., rhythmic) characteristics starting at birth (Mehler, et al., 1988; Nazzi, Bertoncini, & Mehler, 1998) and that this early and broad sensitivity becomes refined and attuned to the specific prosodic characteristics of the infants’ native language (Jusczyk, et al., 1993; Nazzi, Jusczyk, & Johnson, 2000; Pons & Bosch, 2010). Because English and Spanish belong to different rhythmic classes (i.e., they are stress-timed and syllable-timed languages, respectively), it is likely that the older infants distinguished between these languages based on their unique prosodic characteristics. Consequently, the finding that the older infants successfully perceived their native amodal language identity reflects the emergence of perceptual expertise for multisensory native-language speech attributes.

The finding that the younger infants failed to recognize amodal language identity is consistent with our theoretical predictions offered earlier. Furthermore, the younger infants’ failure could not have been due to an inability to perform cross-modal transfer because prior studies have demonstrated that 6-month-old infants can perform this type of cross-modal transfer in an audiovisual speech perception task (Pons, et al., 2009) and that young infants can detect various types of multisensory relations including those inherent in audiovisual speech (Kuhl & Meltzoff, 1982). Therefore, the most likely reason for the younger infants’ failure is that they find it difficult to perceive the multisensory coherence of audiovisual speech beyond the syllable level.

In conclusion, the current findings demonstrate that the ability to recognize the amodal identity of one’s native language emerges by the end of the first year of life. The emergence of this critical perceptual/linguistic skill coincides with the start of the vocabulary explosion in early development (McMurray, 2007). This is important because multisensory redundancy effects are known to facilitate perception, learning, and memory (Lewkowicz & Kraebel, 2004) in infancy and because it has been found that infants take advantage of audiovisual speech redundancy when they begin learning how to talk in the second half of the first year of life (Lewkowicz & Hansen-Tift, 2012). If, by the end of the first year of life, infants take full advantage of the audiovisual redundancy of their native fluent speech, then they can gain access to the redundant auditory and visual attributes of new lexical items at precisely the point when the vocabulary explosion is beginning. These redundant attributes are likely to facilitate the acquisition of new lexical items and, in the process, facilitate the acquisition of language as well.

Acknowledgements

We thank Kelly McMullan for her assistance with data collection and Romà Segura-Fabregas for his assistance with data analysis.

Funding

This work was supported by a grant from the National Science Foundation (BCS-0751888) to DJL and the Spanish Ministerio de Ciencia e Innovación (PSI2010-20294) to FP.

References

- Benedict H. Early lexical development: comprehension and production. Journal of Child Language. 1979;6:183–200. doi: 10.1017/s0305000900002245.

- Bosch L, Sebastián-Gallés N. Evidence of early language discrimination abilities in infants from bilingual environments. Infancy. 2001;2(1):29–49. doi: 10.1207/S15327078IN0201_3.

- Brookes H, Slater A, Quinn PC, Lewkowicz DJ, Hayes R, Brown E. Three-month-old infants learn arbitrary auditory-visual pairings between voices and faces. Infant & Child Development. 2001;10(1-2):75–82.

- Fenson L, Dale P, Reznick J, Bates E, Thal D, Pethick S. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994;59.

- Fowler CA. Speech as a supramodal or amodal phenomenon. In: Calvert G, Spence C, Stein B, editors. MIT Press; Cambridge: 2004. pp. 189–201.

- Gibson EJ. Principles of perceptual learning and development. Appleton; New York: 1969.

- Gottfried AW, Rose SA, Bridger WH. Cross-modal transfer in human infants. Child Development. 1977;48(1):118–123.

- Huttenlocher J. The origins of language comprehension. In: Solso RL, editor. Theories in cognitive psychology: The Loyola Symposium. Lawrence Erlbaum; 1974. pp. 331–368.

- Johnson EK, Jusczyk PW. Word segmentation by 8-month-olds: When speech cues count more than statistics. Journal of Memory & Language. 2001;44(4):548–567.

- Jusczyk PW, Cutler A, Redanz NJ. Infants' preference for the predominant stress patterns of English words. Child Development. 1993;64(3):675–687.

- Jusczyk PW, Luce PA, Charles-Luce J. Infants' sensitivity to phonotactic patterns in the native language. Journal of Memory & Language. 1994;33(5):630–645.

- Kamachi M, Hill H, Lander K, Vatikiotis-Bateson E. Putting the face to the voice: matching identity across modality. Current Biology. 2003;13(19):1709–1714. doi: 10.1016/j.cub.2003.09.005.

- Kuhl PK, Meltzoff AN. The bimodal perception of speech in infancy. Science. 1982;218(4577):1138–1141. doi: 10.1126/science.7146899.

- Lachs L, Pisoni D. Crossmodal source identification in speech perception. Ecological Psychology. 2004;16(3):159–187. doi: 10.1207/s15326969eco1603_1.

- Lewkowicz DJ, Turkewitz G. Cross-modal equivalence in early infancy: Auditory-visual intensity matching. Developmental Psychology. 1980;16:597–607.

- Lewkowicz DJ, Turkewitz G. Intersensory interaction in newborns: modification of visual preferences following exposure to sound. Child Development. 1981;52(3):827–832.

- Lewkowicz DJ. Developmental changes in infants' bisensory response to synchronous durations. Infant Behavior & Development. 1986;9(3):335–353.

- Lewkowicz DJ. The development of intersensory temporal perception: An epigenetic systems/limitations view. Psychological Bulletin. 2000;126(2):281–308. doi: 10.1037/0033-2909.126.2.281.

- Lewkowicz DJ. Heterogeneity and heterochrony in the development of intersensory perception. Cognitive Brain Research. 2002;14:41–63. doi: 10.1016/s0926-6410(02)00060-5.

- Lewkowicz DJ, Kraebel K. The value of multimodal redundancy in the development of intersensory perception. In: Calvert G, Spence C, Stein B, editors. Handbook of multisensory processing. MIT Press; Cambridge: 2004. pp. 655–678.

- Lewkowicz DJ, Ghazanfar AA. The emergence of multisensory systems through perceptual narrowing. Trends in Cognitive Sciences. 2009;13(11):470–478. doi: 10.1016/j.tics.2009.08.004.

- Lewkowicz DJ, Leo I, Simion F. Intersensory perception at birth: Newborns match non-human primate faces & voices. Infancy. 2010;15(1):46–60. doi: 10.1111/j.1532-7078.2009.00005.x.

- Lewkowicz DJ, Hansen-Tift AM. Infants deploy selective attention to the mouth of a talking face when learning speech. Proceedings of the National Academy of Sciences. 2012;109(5):1431–1436. doi: 10.1073/pnas.1114783109.

- Mattock K, Burnham D. Chinese and English infants' tone perception: Evidence for perceptual reorganization. Infancy. 2006;10(3):241–265.

- McMurray B. Defusing the childhood vocabulary explosion. Science. 2007;317(5838):631. doi: 10.1126/science.1144073.

- Mehler J, Jusczyk P, Lambertz G, Halsted N, Bertoncini J, Amiel-Tison C. A precursor of language acquisition in young infants. Cognition. 1988;29(2):143–178. doi: 10.1016/0010-0277(88)90035-2.

- Nazzi T, Bertoncini J, Mehler J. Language discrimination by newborns: Toward an understanding of the role of rhythm. Journal of Experimental Psychology: Human Perception & Performance. 1998;24(3):756–766. doi: 10.1037//0096-1523.24.3.756.

- Nazzi T, Jusczyk PW, Johnson EK. Language discrimination by English-learning 5-month-olds: Effects of rhythm and familiarity. Journal of Memory & Language. 2000;43(1):1–19.

- Neil PA, Chee-Ruiter C, Scheier C, Lewkowicz DJ, Shimojo S. Development of multisensory spatial integration and perception in humans. Developmental Science. 2006;9(5):454–464. doi: 10.1111/j.1467-7687.2006.00512.x.

- Pascalis O, de Haan M, Nelson CA. Is face processing species-specific during the first year of life? Science. 2002;296(5571):1321–1323. doi: 10.1126/science.1070223.

- Pascalis O, Kelly DJ. The origins of face processing in humans: Phylogeny and ontogeny. Perspectives on Psychological Science. 2009;4(2):200–209. doi: 10.1111/j.1745-6924.2009.01119.x.

- Patterson ML, Werker JF. Matching phonetic information in lips and voice is robust in 4.5-month-old infants. Infant Behavior & Development. 1999;22(2):237–247.

- Patterson ML, Werker JF. Infants' ability to match dynamic phonetic and gender information in the face and voice. Journal of Experimental Child Psychology. 2002;81(1):93–115. doi: 10.1006/jecp.2001.2644.

- Patterson ML, Werker JF. Two-month-old infants match phonetic information in lips and voice. Developmental Science. 2003;6(2):191–196.

- Pons F, Lewkowicz DJ, Soto-Faraco S, Sebastián-Gallés N. Narrowing of intersensory speech perception in infancy. Proceedings of the National Academy of Sciences USA. 2009;106(26):10598–10602. doi: 10.1073/pnas.0904134106.

- Pons F, Bosch L. Stress pattern preference in Spanish-learning infants: The role of syllable weight. Infancy. 2010;15(3):223–245. doi: 10.1111/j.1532-7078.2009.00016.x.

- Rosenblum LD, Schmuckler MA, Johnson JA. The McGurk effect in infants. Perception & Psychophysics. 1997;59(3):347–357. doi: 10.3758/bf03211902.

- Rosenblum LD. Speech perception as a multimodal phenomenon. Current Directions in Psychological Science. 2008;17(6):405. doi: 10.1111/j.1467-8721.2008.00615.x.

- Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274(5294):1926–1928. doi: 10.1126/science.274.5294.1926.

- Sai FZ. The role of the mother's voice in developing mother's face preference: Evidence for intermodal perception at birth. Infant and Child Development. 2005;14(1):29–50.

- Scheier C, Lewkowicz DJ, Shimojo S. Sound induces perceptual reorganization of an ambiguous motion display in human infants. Developmental Science. 2003;6:233–244.

- Skoruppa K, Pons F, Christophe A, Bosch L, Dupoux E, Sebastián-Gallés N, Limissuri R, Peperkamp S. Language-specific stress perception by 9-month-old French and Spanish infants. Developmental Science. 2009;12(6):914–919. doi: 10.1111/j.1467-7687.2009.00835.x.

- Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26:212–215.

- Swingley D. 11-month-olds' knowledge of how familiar words sound. Developmental Science. 2005;8(5):432–443. doi: 10.1111/j.1467-7687.2005.00432.x.

- Vihman M, Nakai S, DePaolis R, Hallé P. The role of accentual pattern in early lexical representation. Journal of Memory and Language. 2004;50(3):336–353.

- Vouloumanos A, Druhen M, Hauser M, Huizink A. Five-month-old infants' identification of the sources of vocalizations. Proceedings of the National Academy of Sciences. 2009 doi: 10.1073/pnas.0906049106.

- Walker-Andrews AS. Intermodal perception of expressive behaviors: Relation of eye and voice? Developmental Psychology. 1986;22:373–377.

- Weikum WM, Vouloumanos A, Navarra J, Soto-Faraco S, Sebastián-Gallés N, Werker JF. Visual language discrimination in infancy. Science. 2007;316(5828):1159. doi: 10.1126/science.1137686.

- Werker JF, Tees RC. Cross-language speech perception: Evidence for perceptual reorganization during the first year of life. Infant Behavior & Development. 1984;7(1):49–63.

- Werker JF, Tees RC. Speech perception as a window for understanding plasticity and commitment in language systems of the brain. Developmental Psychobiology. Special Issue: Critical Periods Re-examined: Evidence from Human Sensory Development. 2005;46(3):233–234. doi: 10.1002/dev.20060.

- Yehia H, Rubin P, Vatikiotis-Bateson E. Quantitative Association of Vocal-Tract and Facial Behavior. Speech Communication. 1998;26(1-2):23–43.