Abstract

Objective

Coding of clinical communication for fine-grained features such as speech acts has produced a substantial literature. However, annotation by humans is laborious and expensive, limiting application of these methods. We aimed to show that through machine learning, computers could code certain categories of speech acts with sufficient reliability to make useful distinctions among clinical encounters.

Materials and methods

The data were transcripts of 415 routine outpatient visits of HIV patients which had previously been coded for speech acts using the Generalized Medical Interaction Analysis System (GMIAS); 50 had also been coded for larger scale features using the Comprehensive Analysis of the Structure of Encounters System (CASES). We aggregated selected speech acts into information-giving and requesting, then trained the machine to automatically annotate using logistic regression classification. We evaluated reliability by per-speech act accuracy. We used multiple regression to predict patient reports of communication quality from post-visit surveys using the patient and provider information-giving to information-requesting ratio (briefly, information-giving ratio) and patient gender.

Results

Automated coding produces moderate reliability with human coding (accuracy 71.2%, κ=0.57), with high correlation between machine and human prediction of the information-giving ratio (r=0.96). The regression significantly predicted four of five patient-reported measures of communication quality (r=0.263–0.344).

Discussion

The information-giving ratio is a useful and intuitive measure for predicting patient perception of provider–patient communication quality. These predictions can be made with automated annotation, which is a practical option for studying large collections of clinical encounters with objectivity, consistency, and low cost, providing greater opportunity for training and reflection for care providers.

Keywords: Patient-provider Communication, Automated Annotation, Computational Linguistics, Machine Learning

Background and significance

The many extant systems for coding and analyzing physician–patient communication by defining verbal behaviors and counting their frequencies have produced a considerable literature.1 2 Such studies have linked verbal communication behaviors to health outcomes3–7 and established that conversation is relevant to shared decision-making,8 cultural competence,9 and patient-centeredness.10

However, quantitative analysis of clinical conversation has a very high barrier to entry. Coding a single outpatient visit with non-trivial annotations can require hours of work even for a trained expert. Fine-grained annotation of transcripts is prohibitively expensive to scale to datasets large enough to offer statistical power for many applications, and even relatively small-scale studies are expensive. Instead, results often use simple counting of annotation frequency or similar analyses.11 12 More complex conversation analysis, such as studying variation in conversational behaviors throughout a discussion, requires a large amount of data to be annotated; this fine-grained annotation of large collected datasets is not affordable or practical for the majority of interested researchers.

With recent advances in machine learning for text, there is new opportunity for this cost barrier to be dismantled. In other medical contexts, the potential of natural language processing is already being realized. Within the clinical setting, in particular, natural language processing has been used for automated extraction of factual content (for instance, in clinical notes, electronic health records, and narratives13–16).

Social behavior, meanwhile, has been studied with machine learning methods in informal settings such as online discussion forums, covering diverse topics in social support, medical information and personal narrative sharing, and medical education and awareness.17–19 Using machine learning to study social behavior in clinical conversations, by contrast, is an essentially unexplored area of research.

This paper demonstrates an application of machine learning for automated annotation of one sociolinguistic aspect of conversation. We study the information-giving ratio, a speaker's balance between giving and requesting information. We begin with a validation of the annotation scheme's qualitative interpretability and quantitative ability to predict outcomes. We then demonstrate that machine learning is approaching the ability to replicate the results that would be found with human coding. Together, these results demonstrate both the utility of information-giving annotation and the process of using machine learning for automated conversation annotation more generally in a clinical context.

Annotating information-giving

Prior work has demonstrated that language from medical interactions confers social meaning extending beyond mere semantic content, making use of constructs from systemic functional linguistics.20 21 Our work draws from that subfield to define a measure of ‘information-giving.’ Information-giving annotations mark at the speech act level when a speaker gives information or requests information, similar to annotations of statements or questions.22 A slight variant of this annotation scheme has previously been used for analysis of direction-giving dialogs,23 24 collaborative learning,25 and online support groups for cancer patients.26 27 The work presented here is the first to transfer the scheme to clinical encounters.

Throughout this paper we use the speech act as the unit of annotation for information-giving. Speech act theory28–30 is a sociolinguistic approach which identifies the social act embodied in an utterance, such as questioning, representing reality, expressing the speaker's inner state, or giving instructions. A speech act is more fine-grained than a turn (an uninterrupted span of speech from one speaker); a turn may consist of multiple speech acts with different intentions. The annotation we use here includes only selected speech acts from coding done using the Generalized Medical Interaction Analysis System (GMIAS).22 GMIAS codes are hierarchical, with high-level categories (eg, Ask Questions, Give Information, and Conversation Management) that are broken down into more narrow and concrete behaviors (such as Open and Closed Questions, self-reports of behavior, or Introduce Topic). Our study is a secondary analysis of a dataset that had already been collected, segmented into speech acts, and annotated with GMIAS codes.

Additionally, a subset of that data was annotated with the Comprehensive Analysis of the Structure of Encounters System (CASES) coding scheme.31 CASES annotates several meta-discursive aspects of a transcript, such as assigning ‘ownership’ of topics in a dialog and subdividing conversations into distinct segments in the medical interview. We use this latter annotation, known as the process that a speech act is fulfilling, in our assessment of face validity of our information-giving scheme.

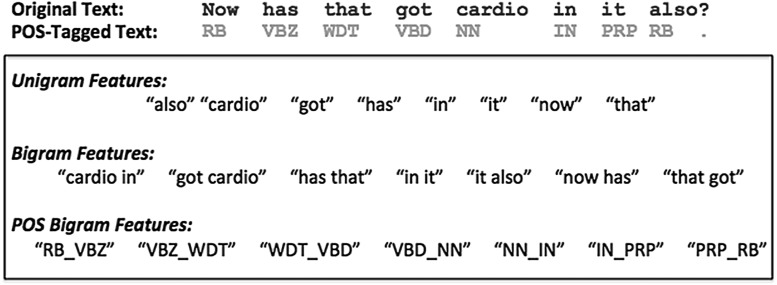

Social ritual (such as ‘thank you’ and ‘hello’), acknowledgments, promises, and various other categories of speech acts do not contribute new information from the speaker, nor do they request information, and are grouped as ‘other’ and ignored for our study, although those turns are contentful and have the potential to reveal insights in other analyses. The speech act classifications we do include are aggregated from more detailed categories in the GMIAS. An example of this annotation scheme is presented in figure 1.

Figure 1.

Annotated transcript excerpt from a provider–patient dialog.

From these speech act-level annotations, we define a metric of overall information-giving from a speaker in a conversation—the ‘information-giving ratio.’ This value can be assigned as an aggregate of a set of speech acts, and is useful as a per-speaker, rather than per-speech act, measurement.

Machine learning: background

We use machine learning as follows. After a set of initial training transcripts are annotated by hand, machine learning extracts features from those examples that represent the text content of speech acts, then discovers latent patterns across the training set. Those patterns are used to reproduce human coding behavior. No hand-derived rules or expert input is needed.

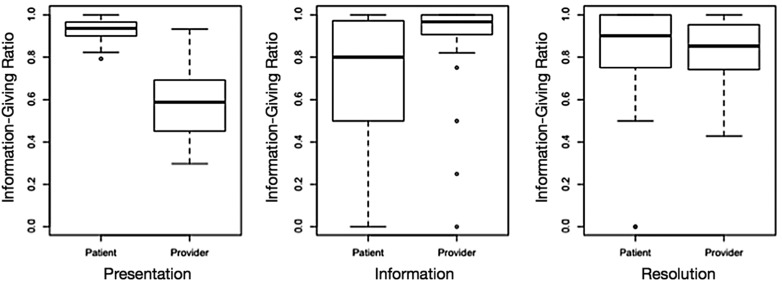

Computers are incapable of semantic understanding. Machine learning depends on statistical correspondence between linguistic features extracted from training input and the target labels. We use several lexical and syntactic features, each of which is simple on its own. In the aggregate, using many features allows flexibility for machine learning to identify patterns in language use. A description of the types of features we use is presented in table 1.

Table 1.

Features extracted for our machine learning pipeline, with references demonstrating them as standard published techniques, when applicable

| Feature type | Description | Purpose |

|---|---|---|

| Unigrams (‘bag-of-words’)32 | One feature for each word observed in the entire set of training transcripts. For a single line of dialog, the value of each unigram feature is set to ‘true’ if that word appears, and false if the word does not appear | Determining vocabulary that corresponds to particular labels, without having to define keywords or metrics ahead of time |

| Bigrams32 | Extends the unigram representation to adjacent pairs of words | Recognizing portions of phrases, rather than individual terms from the vocabulary |

| Part-of-speech bigrams32 | Identical to bigrams; however, rather than the surface form of a word, we abstract that word to the level of part-of-speech tags | Capturing grammatical structure on a basic, local level. This abstraction allows simple syntactic patterns to be recognized as indicative of a particular annotation |

| Role-specialized N-grams33 | All features in the three categories listed above are duplicated, but specialized by the predefined role of the speaker, using a domain adaptation approach described in prior work | Identifying differences in the use of a word across predefined social roles (for instance, using technical medical terms may indicate a different behavior from provider compared to patient) |

| Adjacent speech –content similarity24 | For both the previous and next speech acts from the other speaker in a transcript, we measure the similarity in vocabulary with the current speech act. To measure this, we use cosine similarity with TF-IDF term weighting | Recognizing shared topics and vocabulary between speakers can be a good indicator of the intention of a speaker; term weighting allows us to focus on rare, relevant words rather than function words like pronouns and conjunctions |

| Adjacent speech – hypothesis label | In a first pass, this feature is blank. In subsequent passes, this feature is the predicted label of the previous and next speech act from the other speaker in the transcript | Context changes the likelihood of information-giving behaviors; for instance, if the previous speaker was Requesting Information, then this speech act is more likely to be Giving Information in response |

Using the line of dialog originally highlighted in figure 1, we present some example features in more detail in figure 2. Each feature represents a local unit of text from the transcript, rather than a measurement of the speech act as a whole.

Figure 2.

Example features drawn from a single speech act. POS, part-of-speech.

In our task, each speech act in a dialog transcript must be assigned exactly one label from a small set of possibilities. The classifier is thus a mapping function between input features and output label.

Objective

The long-term goal of this research is to produce machine learning systems that can reliably annotate information-giving at a level that matches humans. Ensuring that this is useful as a goal and feasible in practice requires tests of validity and reliability at many stages of data analysis. In this work, we test this research workflow at four stages to test whether:

Findings from the information-giving annotation scheme correspond to intuitive understanding of provider–patient interactions.

Information-giving correlates meaningfully with indicators of outcomes that matter for patient healthcare quality.

An automated system can reproduce speech act annotations of information-giving with near human reliability.

The noisy automated annotation of information-giving can still detect meaningful trends in indicator variables.

Our evaluations are divided into two categories: first, evaluating information-giving as a metric for provider–patient interactions (#1 and #2); second, applying automated annotation to that scheme (#3 and #4).

Our higher-level objective is to define a generalized workflow for evaluating automated annotation systems. As machine learning becomes a viable option for some annotation tasks, a methodical way of testing its applicability in new domains will be desirable. Our work provides a roadmap for future researchers weighing the option of automating their data annotation.

Materials and methods

Data collection

This work is a secondary analysis of 415 transcripts of routine outpatient visits by people living with HIV, from four widely separated sites of care in the USA, collected and annotated for the Enhancing Communication and HIV Outcomes (ECHO) Study.34 Eligible providers were physicians, nurse practitioners (NPs), or physician assistants who provided primary care to HIV-infected patients. Eligible patients were HIV-infected; 19 years of age or older; English-speaking; identified in the medical record as non-Hispanic black, Hispanic, or non-Hispanic white; and had at least one prior visit with their provider.

Forty-five providers participated. Each patient was represented by a single visit, while providers typically saw 8–10 patients in our sample. A total of 435 visits were originally recorded, but 18 of the recordings were unusable due to recorder malfunction, and two of the visits turned out to be principally with providers other than the HIV specialist, leaving 415 available for analysis. In addition to the 45 index providers, many encounters also featured a second provider, an NP, or fellow, particularly at one site which uses a model in which patients are normally first seen by an NP and then by a physician. We call these ‘complex’ visits. There were a total of 36 such complex visits, 30 of them at one site (27 featuring an NP and a physician, 3 featuring 2 physicians), with six visits at another site featuring a second physician, presumably a fellow plus the attending physician. Seventeen visits featured only an NP, with no physician participating.

The original study was approved by Institutional Review Boards (IRBs) at all four participating sites. This secondary analysis was declared exempt by the IRB at the lead site which has custody of the data, as it uses only de-identified data.

A professional transcription service or research assistant transcribed audio recordings of visits, and a research assistant reviewed the resulting transcripts for accuracy. Research assistants then coded the transcripts using the GMIAS. These coded transcripts were used to train the machine learning system, and as the ‘gold-standard’ for evaluation. A subset (50 encounters) was also annotated with the CASES scheme.31

All of the above steps were performed manually by human annotators. To acquire ‘gold-standard’ information-giving annotations from this (which we can use for training our machine learning annotation system), we define a mapping from GMIAS codes to information-giving:

All Representative or Expressive Questions (questions soliciting the hearer's opinions, feelings, or other inner states) are mapped to ‘Requesting Information.’

Representative statements (except Repeat), expressive statements (except Compliment, Agree, Apologize, and Validating), emotion statements (except Laughter, Surprise/Awe, and Mild Satisfaction), and some directive statements (Convince, Give Permission, and Approve/Encourage) are mapped to ‘Giving Information.’

All other annotations are marked as ‘Other.’

Measurable outcomes

For each transcript, immediately following the medical encounter, research assistants for the original ECHO study administered a survey to patients that assessed demographic, social, and behavioral characteristics, as well as their experience of care and ratings of provider communication.

Before performing our analyses, we selected five indicator variables from these surveys which we felt were likely to relate to our measure of information-giving: communication quality,35 provider decision-making,36 participatory decision-making,8 37 interpersonal style,35 and interpersonal trust.38 Each of these are aggregate measures from multi-item scales. This subset of indicator variables was selected prior to our experiments with automated annotation, to avoid overfitting to in-sample data.

Information-giving: aggregating fine-grained annotations

From turn-level annotation, we assign an Information-Giving Ratio to each speaker. We define this by summing across speech acts and dividing:

Intuitively, an Information-Giving Ratio indicates the extent to which a speaker acts as the source of new information in the dialog. A score of 1.0 would indicate a speaker who never requested information from other speakers, while a score of 0.0 would indicate that they never provided new information. We assign an Information-Giving Ratio to each speaker separately, as one speaker's high Information-Giving Ratio does not necessitate that the other speaker gave correspondingly less information.

Validation and evaluations

We divide our study into two distinct evaluations. The first is a typical annotation study as applied to a collection of clinical transcripts. We then test our ability to automate that annotation, first at a fine-grained level and then for predicting outcomes.

Evaluation 1: validation

We evaluate the face validity of our analysis via comparison with the process annotations from the CASES coding scheme. The process annotation is based on the nature of the task being performed in that portion of the transcript, making it a good foundation on which to judge the interpretability of results. Processes span over multiple speech acts, from both speakers, and can reappear multiple times throughout a conversation. We focus on three processes in particular:

Presentation—patient-specific circumstances, including the patient's observations and experiences as well as test results or clinical observations.

Information—medical facts and information, both abstract and general, non-specific to the patient, biomedical or otherwise.

Resolution—solutions, treatment options, referrals, etc, and solving the problem that triggered the medical interaction.

For each transcript, we calculate the Information-Giving Ratios separately for each process in which each speaker participates. This results in three separate values for each speaker, one per process. If information-giving is useful for interpretation, we expect to see a differentiation in information-giving patterns among processes.

Next, we model our five outcome indicator variables as separate multiple regressions. Each regression uses aggregate patient and provider Information-Giving Ratios and patient gender as input. We include second- and third-level interaction terms between these three variables. In preliminary analysis, patient gender demonstrated significant interaction with the Information-Giving Ratio, but age and race did not; we therefore discarded those factors in further analyses and regressions. To enable a model to assign weight to balanced ratios, rather than merely high or low ratios, we convert our Information-Giving Ratio into a three-valued nominal variable, based on whether a speaker's ratio fell into the lowest quartile, the middle 50%, or the highest quartile among all speakers we observe in our training transcripts. Three transgender patients were excluded from these regressions.

Evaluation 2: automation

All machine learning was performed in LightSIDE, an open source research tool for automated text analysis.32 Our work uses L2-regularized logistic regression as our classification algorithm as implemented by LibLinear, an efficient and open source algorithm available within LightSIDE's user interface.39 Where not otherwise noted, each classifier was trained on 40 conversations. Reported automated performance is the averaged result of five ‘runs’; in each run, a distinct 40-conversation subset of our total dataset was used for training, and automated annotation of the remaining 375 conversations was compared to human annotation to calculate accuracy. To evaluate the reliability of annotation, we report Cohen's κ agreement statistic:

|

This statistic is widely used in annotation studies,40 especially where the distinction between two particular annotators is of interest (in our case, human versus automated annotation), rather than treating annotators as interchangeable.

We also test automated reproduction of the Information-Giving Ratios per speaker. For this measure, only two data points are collected for each transcript (one per speaker). To test this reproduction, we calculate correlation (r) between Information-Giving Ratios calculated with manual versus automated annotations. Finally, we study the impact of varying the number of initial training transcripts and the inclusion of hypothesis label features (the final row in table 1), to give an estimate of the manual effort required to achieve usable machine learning reliability.

Finally, we replicate the outcome indicator multiple regressions from manual annotation; however, in this case we calculate each Information-Giving Ratio with automatically annotated transcripts. With this test, we determine whether patterns can be identified with automated annotation. This measures the readiness of automated annotation for discovering overall conversational behaviors, rather than speech act-level annotations.

Results

In total, our data consist of 415 conversations consisting of 118 287 speech acts annotated as Giving Information (49.4%), 28 576 as Requesting Information (11.9%), and 92 448 as Other (38.6%). Descriptive statistics for our outcome indicator variables are presented in table 2.

Table 2.

Indicators of communication quality used for evaluating the utility of our annotation

Evaluation 1: validation

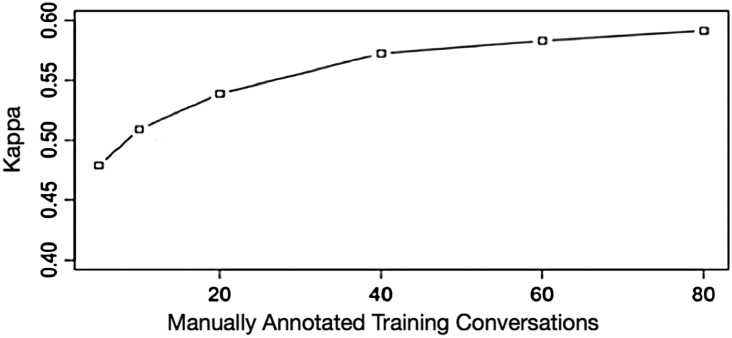

The distribution of CASES processes was highly skewed: 61.4% of speech acts, or an average of 175 provider and 148 patient speech acts per transcript, belong to the Presentation process. Information and Resolution processes accounted for 7.8% and 9.8% of speech acts, respectively. Differences in Information-Giving Ratios across processes are presented in figure 3. Our multiple regression from evaluation 2 significantly predicts four outcome variables; these results are presented in table 3.

Figure 3.

Information-Giving Ratio differences between provider and patient across Comprehensive Analysis of the Structure of Encounters System (CASES) processes.

Table 3.

Information-Giving Ratio and indicator outcome variable correlations

| Variable | Correlation (r) |

|---|---|

| Communication quality | 0.287* |

| Provider decision-making | 0.264* |

| Participatory decision-making | 0.252 |

| Interpersonal style | 0.344* |

| Interpersonal trust | 0.265* |

*p<0.05.

Evaluation 2: automation

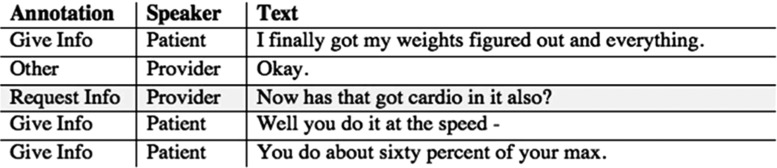

Our machine learning model trained on 40 conversations reproduces human annotation with κ=0.573 and an overall accuracy of 71.2%, which would be deemed fair agreement bordering on good agreement between two humans. We see high correlation between manual and automated predictions of each speaker's overall Information-Giving Ratio, r=0.96. Accuracies with smaller training set sizes are shown in figure 4. Performance continuously increases with more training data, but exhibits diminishing returns per new transcript used for training (the gain moving from 5 to 10 manual transcripts is similar to the gain moving from 10 to 20 transcripts, for instance).

Figure 4.

Impact of increasing training transcripts on performance.

The results of our automated prediction of outcomes are shown in table 4. In all cases, we see a correlation; in one variable we demonstrate a significant effect using automated coding (interpersonal style), compared to four significant correlations from manual annotation. Overall, using automated annotation decreases outcome correlations compared to manual annotation, but marginal correlative trends are apparent.

Table 4.

Outcome prediction using automated annotation

| Communication quality | Provider decision-making | Participatory decision-making | Interpersonal style | Interpersonal trust | |

|---|---|---|---|---|---|

| Human | 0.287* | 0.264* | 0.252 | 0.344* | 0.263* |

| Auto | 0.206 | 0.245 | 0.187 | 0.288* | 0.242 |

*p<0.05.

Discussion

Face validity

Our annotation scheme for information-giving performed well. Intuitively, giving versus receiving information parallels the surface level speech acts of statements and questions, although it is not based on syntactic structure. For instance, a physician checking a patient's understanding of an instruction may speak in the syntactical form of a question, but is seeking confirmation rather than eliciting new information. Additionally, we find that the ‘Other’ annotation includes acknowledgments and active listening, which play a distinct conversational role, separate from information sharing, in interaction.21 Our analysis of the face validity of these labels is built on processes, and in figure 4, we see distinct patterns emerge.

In the presentation process, the patient is typically the dominant source of information, asking few questions while the provider alternates between giving information and requesting more detail. Correspondingly, we see very high patient Information-Giving Ratios, with very little variance across transcripts, and lower average provider Information-Giving Ratios, with wider variance.

In the information process, providers consistently share new information—they are no longer at a stage of requesting information from the patient. Patient behaviors in this phase vary widely, with some requesting more information and others participating equally with their provider.

Finally, in the resolution process, information-giving is more equal, with each participant primarily giving, rather than requesting, information. Because information exchange is not the primary driver of this process—most information transfer occurs in previous processes—it is intuitively correct to see that roles are not primarily distinguished here by information-giving. Instead, pre-existing roles are differentiated in this process through instructions for action, a feature not captured in this analysis.

Throughout these three processes, we see alignment with qualitative expectations of the differing behaviors that speakers will exhibit, based on their pre-existing role as patient or provider. In this work, we do not consider a standalone analysis of the CASES coding scheme; future research may study those annotations in greater detail, rather than merely as a face validity test.

Information-Giving Ratios and outcomes

Several outcome indicator variables were significantly predicted by Information-Giving Ratios, and trends were similar across those variables. In general, conversations where both patient and provider had low Information-Giving Ratios (indicating many requests for information) resulted in poor evaluations of interaction quality. In men, this was indicative of a more general pattern where a low patient Information-Giving Ratio resulted in lower evaluations. The interaction between Information-Giving Ratios, gender, and outcomes was also significant. As a coarse generalization, males preferred fewer explicit requests for information, both from themselves and the provider.

Female patients differed; the condition where both speakers had high Information-Giving Ratios resulted in low scores, especially in overall communication quality. Instead, more balanced interactions, with a moderate amount of information being requested by both speakers, tended to result in higher evaluations from female patients.

Automated annotation

The automated annotation reliability reported in this paper is not sufficient to call for replacement of human annotators when they are available. However, fair agreement (with far less effort and cost) suggests there is already a place for this technology, with promising potential for future development.

Detailed analysis of automated annotation behavior suggests that confusion between giving and requesting information is relatively rare; instead, when error occurs, it comes from not recognizing speech acts as relevant to information-giving at all, mislabeling them as ‘Other.’ Future machine learning research should focus on this error type, to improve performance for future applied researchers.

Real-world applications

We suggest multiple use cases for automated annotation in clinical communication research. The most important application is as a preliminary evaluation of a new annotation scheme or dataset. Our evaluation in table 4 indicated that significant trends from manual annotation were apparent with automated results. Thus, using machine learning as a ‘pilot’ for testing new approaches to data analysis will enable rapid discovery of new directions for research with lowered cost compared to full annotation of entire datasets.

Additional applications for this technology exist in contexts where rapid screening of conversation transcripts would be useful and fair agreement (κ near 0.6) between annotators would be sufficient in practice. These include exploratory research for potential trends in existing datasets, rapid building of training materials for professional development, and acting as a ‘first pass’ screening tool to identify interesting subsets of data that merit further qualitative study. For example, a qualitative researcher with access to a large collection of transcripts might use automated annotation even at the current level of performance to prioritize their time towards interactions with unusual distributions of labels.

Until now, the difficulty and expense of human coding has limited researchers’ ability to capture signals from large sets of transcripts; because of this, understanding of the impact of communication process behavior across conversational contexts (such as first-time visits compared to long-term care) has been underexplored, along with the application of this behavior to patient-centered outcomes. With automated techniques, providers can receive fast, clear feedback on the impact of their communication behaviors for training tailored to specific contexts, and the impact of communicative interventions on health outcomes can be better measured.

Conclusion

Our work here uses information-giving, atop existing GMIAS codes, as an example of automated annotation that can be applied to clinical transcripts. The codes have both face validity and meaningful correlation with outcome variables. With machine learning, we can code transcripts at reliability approaching, though not yet matching, humans on a per-speech act basis (κ=0.57). We can also replicate some predictive power for outcome variables, particularly for dimensions of interpersonal style and trust.

There is ample room for future work based on these results. All machine learning techniques described in this work are essentially standard tools for using machine learning with text input. Incorporating more domain knowledge into our features will push reliability even closer to that of humans, as will development of better machine learning algorithms.

The current level of performance demonstrated in this paper already suggests that automated annotation can lower the barrier for deeper analysis of large collections of data. This invites broader exploration of inventive annotations, and invites cross-disciplinary analysis and discussion in the clinical domain, all while lowering the resource needs and expenses for clinical communication researchers.

Footnotes

Contributors: EM implemented all machine learning results presented in this paper, performed data transformation and analysis in order to map existing, collected data into a usable framework for machine learning, and wrote the majority of the paper. MBL was a principal developer of the speech act and process coding systems which were applied in this research. He consulted on mapping the systems onto the negotiation framework construct, on specification of hypotheses, and on interpretation of results. IBW has been closely involved with the development of the original coding systems and brought deep practical expertise on the domain of clinical communication, including analysis of both collected data and machine learning results, while acting as a liaison between research groups. CPR provided machine learning expertise, sociolinguistic expertise for mapping between annotations schemes, suggestions and advice on machine learning experiments, and revisions and editing in the writing process.

Funding: This work was supported by the National Institute of Mental Health through grants R01MH083595 and 2K24MH092242, the National Science Foundation through grant IIS-0968485, and the Health Resources and Services Administration and the Agency for Healthcare Research and Quality through grant AHRQ 290-01-0012.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1.Beck RS, Daughtridge R, Sloane PD. Physician-patient communication in the primary care office: a systematic review. J Am Board Fam Pract 2002;15:25–38 [PubMed] [Google Scholar]

- 2.Fine E, Reid MC, Shengelia R, et al. Directly observed patient-physician discussions in palliative and end-of-life care: a systematic review of the literature. J Palliat Med 2010;13:595–603 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Arbuthnott A, Sharpe D. The effect of physician-patient collaboration on patient adherence in non-psychiatric medicine. Patient Educ Couns 2009;77(1):60–7 [DOI] [PubMed] [Google Scholar]

- 4.Greenfield S, Kaplan SH, Ware JE, Jret al. Patients’ participation in medical care: effects on blood sugar control and quality of life in diabetes. J Gen Intern Med 1988;3(5):448–57 [DOI] [PubMed] [Google Scholar]

- 5.Griffin SJ, Kinmonth AL, Veltman MW, et al. Effect on health-related outcomes of interventions to alter the interaction between patients and practitioners: a systematic review of trials. Ann Fam Med 2004;2(6):595–608 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Stewart MA. Effective physician-patient communication and health outcomes: a review. J Can Med Assoc 1995;152(9):1423–33 [PMC free article] [PubMed] [Google Scholar]

- 7.Zolnierek KB, DiMatteo MR. Physician communication and patient adherence to treatment: a meta-analysis. Medical Care 2009;47(8):826–34 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Makoul G, Clayman ML. An integrative model of shared decision making in medical encounters. Patient Educ Couns 2006;60(3):301–12 [DOI] [PubMed] [Google Scholar]

- 9.Teal CR, Street RL. Critical elements of culturally competent communication in the medical encounter: a review and model. Soc Sci Med 2009;68(3):533–43 [DOI] [PubMed] [Google Scholar]

- 10.Mead N, Bower P. Patient-centredness: a conceptual framework and review of the empirical literature. Soc Sci Med 2000;51(7):1087–110 [DOI] [PubMed] [Google Scholar]

- 11.Waitzkin H. On studying the discourse of medical encounters. A critique of quantitative and qualitative methods and a proposal for reasonable compromise. Med Care 1990:473–88 [DOI] [PubMed] [Google Scholar]

- 12.Connor M, Fletcher I, Salmon P. The analysis of verbal interaction sequences in dyadic clinical communication: a review of methods. Patient Educ Couns 2009;75(2):169–77 [DOI] [PubMed] [Google Scholar]

- 13.Deleger L, Molnar K, Savova G, et al. Large-scale evaluation of automated clinical note de-identification and its impact on information extraction. J Am Med Inform Assoc 2013;20(1):84–94 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Roberts K, Harabagiu SM. A flexible framework for deriving assertions from electronic medical records. J Am Med Inform Assoc 2011;18(5):568–73 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Mork JG, Bodenreider O, Demner-Fushman D, et al. Extracting Rx information from clinical narrative. J Am Med Inform Assoc 2010;17(5):536–9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Torii M, Wagholikar K, Liu H. Using machine learning for concept extraction on clinical documents from multiple data sources. J Am Med Inform Assoc 2011; 18(5):580–7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Eysenbach G, Powell J, Englesakis M, et al. Health related virtual communities and electronic support groups: systematic review of the effects of online peer to peer interactions. BMJ 2004;328(7449):1166. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Barak A, Boniel-Nissim M, Suler J. Fostering empowerment in online support groups. Comput Hum Behav 2008;24(5):1867–83 [Google Scholar]

- 19.Chou WS, Hunt YM, Burke Beckjord E, et al. Social media use in the United States: implications for health communication. J Med Internet Res 2009;11(4):48. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Halliday MAK, Hasan R. Cohesion in English. Longman, London, 1976. [Google Scholar]

- 21.Mishler EG. The Discourse of Medicine: Dialectics of Medical Interviews. Praeger, Westport, CT, 1985. [Google Scholar]

- 22.Laws MB, Beach MC, Lee Y, et al. Provider-patient adherence dialogue in HIV care: results of a multisite study. J AIDS Behav 2013;17(1):148–59 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Mayfield E, Adamson D, Rudnicky A, et al. Computational representations of discourse practices across populations in task-based dialogue. Proceedings of IC Intercultural Collaboration, ACM, New York, NY, 2012:67–76. [Google Scholar]

- 24.Mayfield E, Rosé CP. Recognizing authority in dialogue with an integer linear programming constrained model. Proceedings of ACL, Association for Computational Linguistics, Stroudsburg, PA, 2011:1018–26. [Google Scholar]

- 25.Howley I, Adamson D, Dyke G, et al. Group composition and intelligent dialogue tutors for impacting students’ academic self-efficacy. Proceedings of Intelligent Tutoring Systems, Springer-Verlag, Berlin, Germany, 2012:551–6. [Google Scholar]

- 26.Mayfield E, Wen M, Golant M, et al. Discovering habits of effective online support group chatrooms. Proceedings of Supporting Group Work ACM, New York, NY, 2012:263–72. [Google Scholar]

- 27.Mayfield E, Adamson D, Rosé CP. Hierarchical conversation structure prediction in multi-party chat. In Proceedings of SIGDIAL Association for Computational Linguistics, Stroudsburg, PA, 2012:60–9. [Google Scholar]

- 28.Searle JR. Speech acts. An essay in the philosophy of language. Cambridge University Press, 1969 [Google Scholar]

- 29.Austin JL. How to do things with words. Oxford University Press, 1962 [Google Scholar]

- 30.Habermas J. The theory of communicative action. V.1. Reason and the rationalization of society. V.2. Lifeworld and system: a critique of functionalist reason. Boston: Beacon Press, 1984 [Google Scholar]

- 31.Laws MB, Taubin T, Bezreh T, et al. Problems and processes in medical encounters: the cases method of dialogue analysis. Patient Educ Couns 2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Mayfield E, Rosé CP. LightSIDE: Open Source Machine Learning for Text. In Handboook of Automated Essay Assessment, Routledge, New York, NY, 2013: 124–35

- 33.Daumé H. Frustratingly easy domain adaptation. In Proceedings of ACL, Association for Computational Linguistics, Stroudsburg, PA, 2007:256–63. [Google Scholar]

- 34.Beach MC, Saha S, Korthuis PT, et al. Patient-provider communication differs for black compared to white HIV-infected patients. AIDS Behav 2011;15(4):805–11 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Stewart AL, Napoles-Springer A, Perez-Stable EJ. Interpersonal processes of care in diverse populations. Milbank Q 1999;77(3):305–39 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Heisler M, Bouknight RR, Hayward RA, et al. The relative importance of physician communication, participatory decision making, and patient understanding in diabetes self-management. J Gen Intern Med 2002;17(4):243–52 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Brody DS, Miller SM, Lerman CE, et al. Patient perception of involvement in medical care: relationship to illness attitudes and outcomes. J Gen Intern Med 1989;4(6):506–11 [DOI] [PubMed] [Google Scholar]

- 38.Thom DH, Ribisl KM, Stewart AL, et al. Further validation and reliability testing of the Trust in Physician Scale. The Stanford Trust Study Physicians. Med Care 1999;37(5):510–17 [DOI] [PubMed] [Google Scholar]

- 39.Fan RE, Chang KW, Hsieh CJ, et al. LibLinear: a library for large linear classification. J Mach Learn Res 2008;9:1871–74 [Google Scholar]

- 40.Lombard M, Snyder-Duch J, Bracken CC. Content analysis in mass communication research: An assessment and reporting of intercoder reliability. Hum Commun Res 2002;28(4):587–604 [Google Scholar]