Publication in reputed journals is one of the criteria that research and scientific organizations often use to evaluate the potential and suitability of a researcher for recruitment, promotion, research fellowships, expert assignments, awards, etc. In combination with the number of publications and the number of first authorships, journal ranking is often used as a metric of a researcher's merit.

There are a number of journal ranking systems today, but the oldest and most influential is the Journal Impact Factor (JIF) devised by Eugene Garfield, the founder of the Institute for Scientific Information, which is now owned by Thomson Reuters (http://www.garfield.library.upenn.edu/papers/isichapter15centuryofscipub149-160y2001.pdf). IFs are calculated for those journals that are indexed in the Journal Citation Reports (JCR) of Thomson Reuters (http://thomsonreuters.com/journal-citation-reports/). The 2-year IF, which is calculated annually, is the one most often used for journal ranking. The IF of a journal in a given year is the average number of citations received in that year per paper published in the journal during the preceding 2 years. For example, if a journal has an IF of 3 in 2010, then its papers published in 2008 and 2009 received, on average, three citations each in 2010. The 2010 IF of a journal is calculated as follows:

JIF2010= A/B

where

A = the number of times the articles published in that journal in 2008 and 2009 were cited by articles in indexed journals during 2010.

B = the total number of “citable items” published by that journal in 2008 and 2009.

JIF2010 is calculated only after all of the 2010 publications have been processed by the indexing agency. Hence, a new journal will not get its IF until it has been indexed for at least 3 years.
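The calculation above can be sketched in a few lines of Python. This is only an illustration: the function name `impact_factor` and the journal figures (450 citations, 150 citable items) are hypothetical and chosen to reproduce the IF of 3 used in the example above.

```python
def impact_factor(citations_in_year: int, citable_items: int) -> float:
    """Two-year impact factor, A / B.

    citations_in_year (A): citations received in the census year (e.g., 2010)
        by items the journal published in the two preceding years.
    citable_items (B): number of citable items the journal published
        in those two preceding years.
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be a positive number")
    return citations_in_year / citable_items


# Hypothetical journal: 150 citable items published in 2008 and 2009,
# cited 450 times in total during 2010.
jif_2010 = impact_factor(citations_in_year=450, citable_items=150)
print(jif_2010)  # 3.0
```

Because B counts only citable items while A counts citations to anything the journal published, the choice of what is classed as citable directly affects the result.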

Since the 2-year citation window is often too short a period for adequate citation, the JCR also includes a 5-year IF. The IF is highly discipline-dependent and hence one cannot compare journals across disciplines on the basis of their relative IFs.

Journals employ several methods to increase their IF[1,2,3] (http://www.newworldencyclopedia.org/entry/Impact_factor). ‘Citable items’ are usually articles, reviews, proceedings, or notes, and not editorials or letters to the editor. One straightforward way to increase the JIF is to publish more review articles, which are generally cited more often than research reports. An editor may force an author to add spurious self-citations to an article before the journal will agree to publish it. Journals may also decline to publish articles that are unlikely to be cited, such as case reports in medical journals. Another tactic is to publish the papers expected to be highly cited early in the calendar year, which gives those papers more time to gather citations. A single article can also distort the figure: in one instance, the IF of a journal rose from just 2.05 to about 50 in a single year because one paper received 6,600 citations, whereas the most-cited paper in that journal in the previous year had received only 28[4](http://en.wikipedia.org/wiki/Impact_factor). Articles that address controversial issues also attract many citations, even when they contribute little or nothing to scientific progress. Hence, it is highly controversial to extend the use of the JIF to evaluate the merit of the papers in a journal and/or to assess an individual researcher.

An alternative measure for evaluating an individual researcher was suggested by Jorge E. Hirsch, a physicist at the University of California, San Diego (UCSD), and is named after him: the ‘h-index’.[5] It is an index that attempts to measure both the productivity and the impact of the published work of a scientist or scholar. The index can also be applied to measure the productivity and/or impact of a group of scientists, such as a department, university, or country, as well as that of a journal, in which case it is referred to as the ‘H-index’.

A scientist/researcher has an ‘index h’ if ‘h’ of his/her Np papers have at least ‘h’ citations each, and the other (Np-h) papers have no more than ‘h’ citations each. In other words, a scholar with an h-index of ‘h’ has published ‘h’ papers, each of which has been cited in other papers at least ‘h’ times. Thus, the h-index reflects both the number of publications and the number of citations per publication. The ‘H-index’ is also used to measure the standing of a journal: ‘h’ articles published in the journal have received at least ‘h’ citations each over the entire period. One drawback of this index is that it can be used only to compare researchers of similar years of standing in the same field.

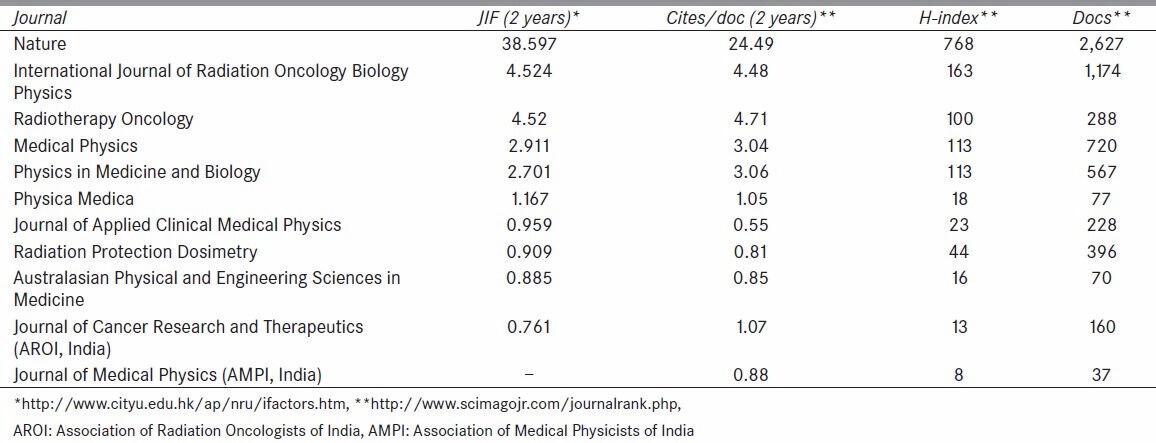
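The definition of the h-index translates directly into a short procedure: rank the papers by citation count in descending order and find the last rank at which the citation count is still at least equal to the rank. The following Python sketch illustrates this; the function name `h_index` and the citation counts are hypothetical.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break     # counts only decrease from here, so h cannot grow
    return h


# Hypothetical researcher with five papers.
papers = [10, 8, 5, 4, 3]
print(h_index(papers))  # 4: four papers have at least 4 citations each
```

Note that one very highly cited paper raises the h-index by at most one, which is why the index is less sensitive to outliers than an average.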

Journals that are not indexed in the JCR of Thomson Reuters find a place in the journal ranking system developed by SCImago Journal & Country Rank (http://www.scimagojr.com/). Cites per doc (2 year) is a measure of the scientific impact of an average article published in the journal, and it is computed using the same formula as the JIF. The site also gives the H-index of a journal and the number of articles it publishes per annum. Table 1 compares the published values of JIF and cites per doc (2 year) for the year 2012 for a few well-known journals related to medical physics. The H-index and the total number of articles published in the year are also given in Table 1. The values for ‘Nature’ (http://www.nature.com/npg_/company_info/impact_factors.html), the foremost journal dealing with interdisciplinary science, are included in Table 1 to show its standing compared to the others.

Table 1. Ranking indicators of a few medical physics related journals for 2012

Cites per doc, obtained using the same concept as the JIF but from a different citation database, shows reasonably good agreement with the JIF for seven of the ten journals compared. For Nature and Journal of Applied Clinical Medical Physics, the cites per doc (2 year) value is substantially lower than the published JIF. The reverse is true for Journal of Cancer Research and Therapeutics, a radiation oncology related journal published by the Association of Radiation Oncologists of India. Journal of Medical Physics, which has been online since 2006, is not yet indexed in the JCR of Thomson Reuters and hence has no JIF. Its cites per doc (2 year) value of 0.88 for 2012 is quite encouraging. However, the number of papers it publishes annually is comparatively small, and this calls for earnest contributions from researchers across the country to enhance the reputation of the journal and that of the Association of Medical Physicists of India.

Having compared the relative ranking of some of the journals, the next and most important question is whether all the papers published in a high-ranked journal are superior in quality to the papers published in a lower-ranked journal. The answer is ‘NO’, because a few really good and highly cited papers can offset the impact of many average or less significant papers. The total number of papers published also plays a significant role in this analysis.
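A small numerical illustration makes the point. The citation counts below are hypothetical; the sketch only shows how an average, which is what the JIF is, can be dominated by a few papers and so say little about a typical paper in the journal.

```python
from statistics import mean, median


def summarize(citations):
    """Return (mean, median, number of papers cited more than the mean)."""
    avg = mean(citations)
    above_avg = sum(1 for c in citations if c > avg)
    return avg, median(citations), above_avg


# Hypothetical journal: ten papers, two of which are highly cited.
citations = [0, 0, 1, 1, 1, 2, 2, 3, 40, 50]
avg, mid, above_avg = summarize(citations)
print(avg)        # 10  (the JIF-like average)
print(mid)        # 1.5 (the typical paper)
print(above_avg)  # 2   (only two papers exceed the average)
```

In this made-up case the average is 10 citations per paper, yet eight of the ten papers received 3 citations or fewer.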

Several sources criticize the use of the JIF for assessing the merit of an individual researcher; a few noteworthy ones are quoted here. An editorial[6] on the use and misuse of JIFs, published in Medical Physics by a group of editors of scientific journals led by William Hendee, stressed that if quantification is considered important for the qualitative assessment of an individual, then the h-index is a more meaningful measure than the JIF. The editorial also cautioned about the limitations of the h-index: it is useful only for comparing researchers working in similar fields and with similar years of experience. Further, self-citations can raise an individual’s h-index.

The European Association of Science Editors (EASE) issued an official statement in November 2007, recommending that JIFs be used only for measuring and comparing the influence of entire journals, not for the assessment of single papers, and certainly not for the assessment of researchers or research programs, either directly or as a surrogate (http://www.ease.org.uk/publications/impact-factor-statement).

Two items dated 16 May 2013 are noteworthy: “Scientists join journal editors to fight impact-factor abuse”, a news blog that appeared in “Nature”, and “Scientific insurgents say ‘Journal Impact Factors’ distort science” in Science Daily (Retrieved from http://www.sciencedaily.com/releases/2013/05/130516142537.html). Both are based on the San Francisco Declaration on Research Assessment (DORA), framed by a group of journal editors, publishers, and others convened by the American Society for Cell Biology (ASCB) in December 2012. DORA makes 18 recommendations for change in the scientific culture at all levels to reduce the dominant role of the JIF. The recommendations are addressed to funders, institutions, researchers, publishers, and suppliers of metrics. Broadly, they involve phasing out journal-level metrics in favor of article-level ones, being transparent and straightforward about metric assessments, and judging by scientific content rather than publication metrics wherever possible. A complete list of organizations that are signatories of DORA is given at http://www.ascb.org/SFdeclaration.html. It is notable that those signing DORA are almost all from US or European institutions, but the ASCB has a website where anyone can sign the declaration. Although Nature Publishing Group, which published this blog, has not signed DORA, its editor-in-chief, Philip Campbell, noted that the group’s journals had published many editorials critical of excesses in the use of JIFs. Even the company that creates the IF, Thomson Reuters, admits that it does not measure the quality of an individual article, because only a few articles in a journal receive most of the citations.

DORA states that the JIF was initially developed to help librarians make subscription decisions, but has now become a proxy for the quality of research and researchers. The JIF has become even more powerful in China, India, and other nations emerging as global research powers. The San Francisco declaration urges all stakeholders to focus on the content of papers, rather than the JIF of the journals in which they were published. DORA’s 18 recommendations call for sweeping changes in scientific assessment.

A statement in an editorial of Journal of Applied Clinical Medical Physics in 2008 (http://www.jacmp.org/index.php/jacmp/article/view/2823/1389) appears most apt for all journals in the field of medical physics. It states that “as the information presented in the Journal is directed more toward improving the clinical practice of medical physics, the real measure of impact of a paper is the number of times the information in that paper is used by a practitioner of medical physics to improve the quality of treatment”.

When submitting a manuscript to a journal, the most important consideration should be the readership and not the IF of the journal. The quality of a publication should be judged by its usefulness and acceptance, as evident in subsequent publications. A new, time-dependent factor for the impact of individual published papers could be devised and recommended as the criterion for evaluating the merit of a publication or of a researcher. This factor could easily be based on the citations of the paper (the number of times it is referred to by other researchers in a specified period) or on a system of rating by readers.
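As one possible reading of such a time-dependent, article-level factor, the sketch below computes the average number of citations a paper receives per year from its publication up to a chosen year. This is only an assumption for illustration; the function name `citations_per_year`, its parameters, and the data are hypothetical and do not represent an established metric.

```python
def citations_per_year(publication_year, citing_years, as_of_year):
    """Average citations per year from publication up to as_of_year (inclusive).

    citing_years holds one entry per citation: the year of the citing paper.
    """
    if as_of_year < publication_year:
        raise ValueError("as_of_year must not precede publication_year")
    # Count only citations that fall inside the assessment period.
    counted = [y for y in citing_years if publication_year <= y <= as_of_year]
    years_elapsed = as_of_year - publication_year + 1
    return len(counted) / years_elapsed


# Hypothetical paper published in 2010 and assessed at the end of 2012.
citing_years = [2010, 2011, 2011, 2012, 2012, 2012, 2013]
print(citations_per_year(2010, citing_years, as_of_year=2012))  # 2.0
```

Dividing by the years elapsed lets papers of different ages be compared on a similar footing, although differences between disciplines would still need to be taken into account.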

Footnotes

Source of Support: Nil

Conflict of Interest: None declared.

References

- 1. Pontille D, Torny D. The controversial policies of journal ratings: Evaluating social sciences and humanities. Res Eval. 2010;19:347–60.

- 2. Agrawal AA. Corruption of journal impact factors. Trends Ecol Evol. 2005;20:157. doi: 10.1016/j.tree.2005.02.002.

- 3. Fassoulaki A, Papilas K, Paraskeva A, Patris K. Impact factor bias and proposed adjustments for its determination. Acta Anaesthesiol Scand. 2002;46:902–5. doi: 10.1034/j.1399-6576.2002.460723.x.

- 4. Grant B. New impact factors yield surprises. [http://www.the-scientist.com/?articles.view/articleNo/29093/title/New-impact-factors-yieldsurprises/]

- 5. Hirsch JE. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci U S A. 2005;102:16569–72. doi: 10.1073/pnas.0507655102.

- 6. Hendee W, Bernstein MA, Levine D. Scientific journals and impact factors. Med Phys. 2011;12:38. doi: 10.1118/1.3660554.