Abstract

Uniformly most powerful tests are statistical hypothesis tests that provide the greatest power against a fixed null hypothesis among all tests of a given size. In this article, the notion of uniformly most powerful tests is extended to the Bayesian setting by defining uniformly most powerful Bayesian tests to be tests that maximize the probability that the Bayes factor, in favor of the alternative hypothesis, exceeds a specified threshold. Like their classical counterpart, uniformly most powerful Bayesian tests are most easily defined in one-parameter exponential family models, although extensions outside of this class are possible. The connection between uniformly most powerful tests and uniformly most powerful Bayesian tests can be used to provide an approximate calibration between p-values and Bayes factors. Finally, issues regarding the strong dependence of resulting Bayes factors and p-values on sample size are discussed.

Keywords: Bayes factor, Jeffreys–Lindley paradox, objective Bayes, one-parameter exponential family model, Neyman–Pearson lemma, nonlocal prior density, uniformly most powerful test, Higgs boson

1. Introduction

Uniformly most powerful tests (UMPTs) were proposed by Neyman and Pearson in a series of articles published nearly a century ago [e.g., Neyman and Pearson (1928, 1933); see Lehmann and Romano (2005) for a comprehensive review of the subsequent literature]. They are defined as statistical hypothesis tests that provide the greatest power among all tests of a given size. The goal of this article is to extend the classical notion of UMPTs to the Bayesian paradigm through the definition of uniformly most powerful Bayesian tests (UMPBTs) as tests that maximize the probability that the Bayes factor against a fixed null hypothesis exceeds a specified threshold. This extension is important from several perspectives.

From a classical perspective, the outcome of a hypothesis test is a decision either to reject the null hypothesis or not to reject the null hypothesis. This approach to hypothesis testing is closely related to Popper’s theory of critical rationalism, in which scientific theories are never accepted as being true, but instead are only subjected to increasingly severe tests [e.g., Mayo and Spanos (2006), Popper (1959)]. Many scientists and philosophers, notably Bayesians, find this approach unsatisfactory for at least two reasons [e.g., Howson and Urbach (2005), Jeffreys (1939)]. First, a decision not to reject the null hypothesis provides little quantitative information regarding the truth of the null hypothesis. Second, the rejection of a null hypothesis may occur even when evidence from the data strongly supports its validity. The following two examples, one contrived and one less so, illustrate these concerns.

The first example involves a test for the distribution of a random variable X that can take values 1, 2 or 3; cf. Berger and Wolpert (1984). The probability of each outcome under two competing statistical hypotheses is provided in Table 1. From this table, it follows that a most powerful test can be defined by rejecting the null hypothesis when X = 2 or 3. Both error probabilities of this test are equal to 0.01.

Table 1.

Probabilities of a random variable under competing hypotheses

| X | 1 | 2 | 3 |
|---|---|---|---|
| Null hypothesis | 0.99 | 0.008 | 0.002 |
| Alternative hypothesis | 0.01 | 0.001 | 0.989 |

Despite the test’s favorable operating characteristics, the rejection of the null hypothesis for X = 2 seems misleading: X = 2 is 8 times more likely to be observed under the null hypothesis than it is under the alternative. If both hypotheses were assigned equal odds a priori, the null hypothesis would be rejected at the 1% level of significance even though the posterior probability that it is true is 0.89. As discussed further in Section 2.1, such clashes between significance tests and Bayesian posterior probabilities can occur in a variety of situations and can be particularly troubling in large sample settings.
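The error probabilities and the posterior probability quoted above can be checked with a few lines of Python. This is a minimal sketch; the names `NULL`, `ALT`, `error_rates` and `posterior_null` are illustrative, and P0(X = 3) is taken as 0.002, the value that makes the null probabilities sum to 1 and gives the stated type I error of 0.01.

```python
# Table 1 probabilities (P0(X = 3) = 0.002 so the null column sums to 1).
NULL = {1: 0.99, 2: 0.008, 3: 0.002}
ALT = {1: 0.01, 2: 0.001, 3: 0.989}
REJECT = {2, 3}  # rejection region of the most powerful test


def error_rates(null, alt, reject):
    """Return (type I, type II) error probabilities of the test that rejects on `reject`."""
    type_i = sum(null[x] for x in reject)
    type_ii = sum(alt[x] for x in alt if x not in reject)
    return type_i, type_ii


def posterior_null(x, null, alt, prior_null=0.5):
    """Posterior probability of H0 after observing x, by Bayes' theorem."""
    num = prior_null * null[x]
    return num / (num + (1 - prior_null) * alt[x])


print(error_rates(NULL, ALT, REJECT))
print(round(posterior_null(2, NULL, ALT), 2))  # 0.89
```

With equal prior probabilities the posterior odds for H0 at X = 2 are simply 0.008/0.001 = 8, which is where the value 8/9 ≈ 0.89 comes from.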

The second example represents a stylized version of an early phase clinical trial. Suppose that a standard treatment for a disease is known to be successful in 25% of patients, and that an experimental treatment is concocted by supplementing the standard treatment with a new drug. If the supplemental agent has no effect on efficacy, then the success rate of the experimental treatment is assumed to remain equal to 25% (the null hypothesis). A single-arm clinical trial is used to test this hypothesis. The trial is based on a one-sided binomial test at the 5% significance level. Thirty patients are enrolled in the trial.

If y denotes the number of patients who respond to the experimental treatment, then the critical region for the test is y ≥ 12. To examine the properties of this test, suppose first that y = 12, so that the null hypothesis is rejected at the 5% level. In this case, the minimum likelihood ratio in favor of the null hypothesis is obtained by setting the success rate under the alternative hypothesis to 12/30 = 0.40 (in which case the power of the test is 0.57). That is, if the new treatment’s success rate were defined a priori to be 0.4, then the likelihood ratio in favor of the null hypothesis would be

$$\frac{0.25^{12}\,(0.75)^{18}}{0.4^{12}\,(0.6)^{18}} = 0.197. \tag{1}$$

For any other alternative hypothesis, the likelihood ratio in favor of the null hypothesis would be larger than 0.197 [e.g., Edwards, Lindman and Savage (1963)]. If equal odds are assigned to the null and alternative hypotheses, then the posterior probability of the null hypothesis is at least 16.5%. In this case, the null hypothesis is rejected at the 5% level of significance even though the data support it. And, of course, the posterior probability of the null hypothesis would be substantially higher if one accounted for the fact that a vast majority of early phase clinical trials fail.

Conversely, suppose now that the trial data provide clear support for the null hypothesis, with only 7 successes observed during the trial. In this case, the null hypothesis is not rejected at the 5% level, but this fact conveys little information regarding the relative support that the null hypothesis received. If the alternative hypothesis asserts, as before, that the success rate of the new treatment is 0.4, then the likelihood ratio in favor of the null hypothesis is 6.31; that is, the data favor the null hypothesis with approximately 6:1 odds. If equal prior odds are assumed between the two hypotheses, then the posterior probability of the null hypothesis is 0.863. Under the assumption of clinical equipoise, the prior odds assigned to the two hypotheses are assumed to be equal, which means the only controversial aspect of reporting such odds is the specification of the alternative hypothesis.
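The quantities in this example can be reproduced with exact binomial probabilities. The sketch below uses only the standard library; the helper names are hypothetical. It computes the size and power of the region y ≥ 12, the likelihood ratios in (1) and at y = 7, and the implied posterior probabilities under equal prior odds. One detail worth flagging: the exact size of {y ≥ 12} under p = 0.25 is about 0.051, so the 5% level is attained only approximately ({y ≥ 13} would have size of about 0.022).

```python
from math import comb


def binom_tail(k, n, p):
    """P(Y >= k) for Y ~ Bin(n, p)."""
    return sum(comb(n, y) * p**y * (1 - p) ** (n - y) for y in range(k, n + 1))


def lr_null(y, n, p0, p1):
    """Likelihood ratio f(y | p0) / f(y | p1); the binomial coefficients cancel."""
    return (p0 / p1) ** y * ((1 - p0) / (1 - p1)) ** (n - y)


def posterior_null(lr, prior_odds_null=1.0):
    """Posterior probability of H0 given its likelihood ratio and prior odds."""
    post_odds = lr * prior_odds_null
    return post_odds / (1 + post_odds)


n, p0, p1 = 30, 0.25, 0.40
size = binom_tail(12, n, p0)    # about 0.051
power = binom_tail(12, n, p1)   # about 0.57
lr12 = lr_null(12, n, p0, p1)   # about 0.197, equation (1)
lr7 = lr_null(7, n, p0, p1)     # about 6.31
print(size, power, lr12, lr7, posterior_null(lr12), posterior_null(lr7))
```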

For frequentists, the most important aspect of the methodology reported in this article may be that it provides a connection between frequentist and Bayesian testing procedures. In one-parameter exponential family models with monotone likelihood ratios, for example, it is possible to define a UMPBT with the same rejection region as a UMPT. This means that a Bayesian using a UMPBT and a frequentist conducting a significance test will make identical decisions on the basis of the observed data, which suggests that either interpretation of the test may be invoked. That is, a decision to reject the null hypothesis at a specified significance level occurs only when the Bayes factor in favor of the alternative hypothesis exceeds a specified evidence level. This fact provides a remedy to the two primary deficiencies of classical significance tests: their inability to quantify evidence in favor of the null hypothesis when the null hypothesis is not rejected, and their tendency to exaggerate evidence against the null when it is. Once the corresponding UMPBT has been determined, Bayes factors can be used to provide a simple summary of the evidence in favor of each hypothesis.

For Bayesians, UMPBTs represent a new objective Bayesian test, at least when objective Bayesian methods are interpreted in the broad sense. As Berger (2006) notes, “there is no unanimity as to the definition of objective Bayesian analysis … ” and “many Bayesians object to the label ‘objective Bayes,’ ” preferring other labels such as “noninformative, reference, default, conventional and nonsubjective.” Within this context, UMPBTs provide a new form of default, nonsubjective Bayesian tests in which the alternative hypothesis is determined so as to maximize the probability that a Bayes factor exceeds a specified threshold. This threshold can be specified either by a default value (say 10 or 100) or, as indicated in the preceding discussion, determined so as to produce a Bayesian test that has the same rejection region as a classical UMPT. In the latter case, UMPBTs provide an objective Bayesian testing procedure that can be used to translate the results of classical significance tests into Bayes factors and posterior model probabilities. By so doing, UMPBTs may prove instrumental in convincing scientists that commonly used levels of statistical significance do not provide “significant” evidence against rejected null hypotheses.

Subjective Bayesian methods have long provided scientists with a formal mechanism for assessing the probability that a standard theory is true. Unfortunately, subjective Bayesian testing procedures have not been, and likely never will be, generally accepted by the scientific community. In most testing problems, the range of scientific opinion regarding the magnitude of violations from a standard theory is simply too large to make the report of a single, subjective Bayes factor worthwhile. Furthermore, scientific journals have demonstrated an unwillingness to replace the report of a single p-value with a range of subjectively determined Bayes factors or posterior model probabilities.

Given this reality, subjective Bayesians may find UMPBTs useful for communicating the results of Bayesian tests to non-Bayesians, even when a UMPBT is only one of several Bayesian tests that are reported. By reducing the controversy regarding the specification of prior densities on parameter values under individual hypotheses, UMPBTs can also be used to focus attention on the specification of prior probabilities on the hypotheses themselves. In the clinical trial example described above, the value of the success probability specified under the alternative hypothesis may be less important in modeling posterior model probabilities than incorporating information regarding the outcomes of previous trials on related supplements. Such would be the case if numerous previous trials of similar agents had failed to provide evidence of increased treatment efficacy.

UMPBTs possess certain favorable properties not shared by other objective Bayesian methods. For instance, most objective Bayesian tests implicitly define local alternative prior densities on model parameters under the alternative hypothesis [e.g., Berger and Pericchi (1996), Jeffreys (1939), O’Hagan (1995)]. As demonstrated in Johnson and Rossell (2010), however, the use of local alternative priors makes it difficult to accumulate evidence in favor of a true null hypothesis. This means that many objective Bayesian methods are only marginally better than classical significance tests in summarizing evidence in favor of the null hypothesis. For small to moderate sample sizes, UMPBTs produce alternative hypotheses that correspond to nonlocal alternative prior densities, which means that they are able to provide more balanced summaries of evidence collected in favor of true null and true alternative hypotheses.

UMPBTs also possess certain unfavorable properties. Like many objective Bayesian methods, UMPBTs can violate the likelihood principle, and their behavior in large sample settings can lead to inconsistency if evidence thresholds are held constant. And the alternative hypotheses generated by UMPBTs are neither vague nor noninformative. Further comments and discussion regarding these issues are provided below.

In order to define UMPBTs, it is useful to first review basic properties of Bayesian hypothesis tests. In contrast to classical statistical hypothesis tests, Bayesian hypothesis tests are based on comparisons of the posterior probabilities assigned to competing hypotheses. In parametric tests, competing hypotheses are characterized by the prior densities that they impose on the parameters that define a sampling density shared by both hypotheses. Such tests comprise the focus of this article. Specifically, it is assumed throughout that the posterior odds between two hypotheses H1 and H0 can be expressed as

$$\frac{p(H_1 \mid x)}{p(H_0 \mid x)} = \frac{m_1(x)}{m_0(x)} \times \frac{p(H_1)}{p(H_0)}, \tag{2}$$

where BF10(x) = m1(x)/m0(x) is the Bayes factor between hypotheses H1 and H0,

$$m_i(x) = \int_{\Theta} f(x \mid \theta)\, \pi_i(\theta \mid H_i)\, d\theta \tag{3}$$

is the marginal density of the data under hypothesis Hi, f(x | θ) is the sampling density of data x given θ, πi(θ | Hi) is the prior density on θ under Hi and p(Hi) is the prior probability assigned to hypothesis Hi, for i = 0, 1. The marginal prior density for θ is thus

$$\pi(\theta) = \pi_0(\theta \mid H_0)\, p(H_0) + \pi_1(\theta \mid H_1)\, p(H_1).$$

When there is no possibility of confusion, πi(θ | Hi) will be denoted more simply by πi(θ). The parameter space is denoted by Θ and the sample space by χ. The logarithm of the Bayes factor is called the weight of evidence. All densities are assumed to be defined with respect to an appropriate underlying measure (e.g., Lebesgue or counting measure).

Finally, assume that one hypothesis, the null hypothesis H0, is fixed on the basis of scientific considerations, and that the difficulty in constructing a Bayesian hypothesis test arises from the requirement to specify an alternative hypothesis. This assumption mirrors the situation encountered in classical hypothesis tests in which the null hypothesis is known, but no alternative hypothesis is defined. In the clinical trial example, for instance, the null hypothesis corresponds to the assumption that the success probability of the new treatment equals that of the standard treatment, but there is no obvious value (or prior probability density) that should be assigned to the treatment’s success probability under the alternative hypothesis that it is better than the standard of care.

With these assumptions and definitions in place, it is worthwhile to review a property of Bayes factors that pertains when the prior density defining an alternative hypothesis is misspecified. Let πt(θ | H1) = πt(θ) denote the “true” prior density on θ under the assumption that the alternative hypothesis is true, and let mt(x) denote the resulting marginal density of the data. In general πt(θ) is not known, but it is still possible to compare the properties of the weight of evidence that would be obtained by using the true prior density under the alternative hypothesis to those that would be obtained using some other prior density. From a frequentist perspective, πt might represent a point mass concentrated on the true, but unknown, data generating parameter. From a Bayesian perspective, πt might represent a summary of existing knowledge regarding θ before an experiment is conducted. Because πt is not available, suppose that π1(θ | H1) = π1(θ) is instead used to represent the prior density, again under the assumption that the alternative hypothesis is true. Then it follows from Gibbs’s inequality that

$$\int_{\chi} m_t(x) \log\frac{m_t(x)}{m_0(x)}\,dx - \int_{\chi} m_t(x) \log\frac{m_1(x)}{m_0(x)}\,dx = \int_{\chi} m_t(x) \log\frac{m_t(x)}{m_1(x)}\,dx \ge 0.$$

That is,

$$\int_{\chi} m_t(x) \log\frac{m_t(x)}{m_0(x)}\,dx \ge \int_{\chi} m_t(x) \log\frac{m_1(x)}{m_0(x)}\,dx, \tag{4}$$

which means that the expected weight of evidence in favor of the alternative hypothesis is always decreased when π1(θ) differs from πt(θ) (on a set with measure greater than 0). In general, the UMPBTs described below will thus decrease the average weight of evidence obtained in favor of a true alternative hypothesis. In other words, the weight of evidence reported from a UMPBT will tend to underestimate the actual weight of evidence provided by an experiment in favor of a true alternative hypothesis.
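Inequality (4) is easy to check numerically when the marginal densities are available in closed form. The sketch below assumes a binomial sampling model with n = 10 and H0: p = 0.3 (borrowed from Section 3.1.1); the "true" prior (a point mass at 0.6) and the misspecified prior (an equal mixture of point masses at 0.45 and 0.75) are hypothetical choices made only for illustration. The gap between the two expected weights of evidence equals the Kullback–Leibler divergence of m1 from mt, which is the quantity Gibbs's inequality bounds below by zero.

```python
import math


def binom_pmf(x, n, p):
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)


def marginal(prior, n):
    """m(x) = sum_k w_k f(x | p_k) for a discrete prior given as [(p_k, w_k), ...]."""
    return [sum(w * binom_pmf(x, n, p) for p, w in prior) for x in range(n + 1)]


def expected_woe(m_true, m_alt, m_null):
    """Expected weight of evidence: E under m_true of log[m_alt(x) / m_null(x)]."""
    return sum(mt * math.log(ma / m0) for mt, ma, m0 in zip(m_true, m_alt, m_null))


def kl(m_p, m_q):
    """Kullback-Leibler divergence KL(p || q) between two pmfs on the same support."""
    return sum(p * math.log(p / q) for p, q in zip(m_p, m_q))


n = 10
m0 = marginal([(0.3, 1.0)], n)                # H0: p = 0.3
mt = marginal([(0.6, 1.0)], n)                # hypothetical "true" prior
m1 = marginal([(0.45, 0.5), (0.75, 0.5)], n)  # hypothetical misspecified prior

woe_true = expected_woe(mt, mt, m0)
woe_alt = expected_woe(mt, m1, m0)
print(woe_true, woe_alt, woe_true - woe_alt, kl(mt, m1))
```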

As in classical statistical hypothesis tests, the tangible consequence of a Bayesian hypothesis test is often the rejection of one hypothesis, say H0, in favor of the second, say H1. In a Bayesian test, the null hypothesis is rejected if the posterior probability of H1 exceeds a certain threshold. Given the prior odds between the hypotheses, this is equivalent to determining a threshold, say γ, that the Bayes factor between H1 and H0 must exceed in order to reject H0 in favor of H1. It is therefore of some practical interest to determine alternative hypotheses that maximize the probability that the Bayes factor from a test exceeds a specified threshold.
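Because the posterior odds in (2) equal the Bayes factor times the prior odds, a threshold on the posterior probability of H1 translates directly into an evidence threshold γ. A minimal sketch, with hypothetical function names:

```python
def bayes_factor_threshold(post_prob_h1, prior_prob_h1=0.5):
    """Return gamma such that P(H1 | x) > post_prob_h1 exactly when BF10(x) > gamma.

    Uses (2): posterior odds = BF10 * prior odds.
    """
    prior_odds = prior_prob_h1 / (1 - prior_prob_h1)
    target_post_odds = post_prob_h1 / (1 - post_prob_h1)
    return target_post_odds / prior_odds


def posterior_prob_h1(bf10, prior_prob_h1=0.5):
    """Posterior probability of H1 implied by a Bayes factor and the prior probability of H1."""
    post_odds = bf10 * prior_prob_h1 / (1 - prior_prob_h1)
    return post_odds / (1 + post_odds)


# Requiring 3:1 posterior odds (probability 0.75) with equal prior odds gives gamma = 3.
gamma = bayes_factor_threshold(0.75)
print(gamma, posterior_prob_h1(gamma))
```

With equal prior odds the threshold γ is just the required posterior odds; less favorable prior odds for H1 raise γ proportionally.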

With this motivation and notation in place, a UMPBT(γ) may be formally defined as follows.

Definition. A uniformly most powerful Bayesian test for evidence threshold γ > 0 in favor of the alternative hypothesis H1 against a fixed null hypothesis H0, denoted by UMPBT(γ), is a Bayesian hypothesis test in which the Bayes factor for the test satisfies the following inequality for any θt ∈ Θ and for all alternative hypotheses H2: θ ~ π2(θ):

$$P_{\theta_t}\left[BF_{10}(x) > \gamma\right] \ge P_{\theta_t}\left[BF_{20}(x) > \gamma\right]. \tag{5}$$

In other words, the UMPBT(γ) is a Bayesian test for which the alternative hypothesis is specified so as to maximize the probability that the Bayes factor BF10(x) exceeds the evidence threshold γ for all possible values of the data generating parameter θt.

The remainder of this article is organized as follows. In the next section, UMPBTs are described for one-parameter exponential family models. As in the case of UMPTs, a general prescription for constructing UMPBTs is available only within this class of densities. Specific techniques for defining UMPBTs or approximate UMPBTs outside of this class are described later in Sections 4 and 5. In applying UMPBTs to one-parameter exponential family models, an approximate equivalence between type I errors for UMPTs and the Bayes factors obtained from UMPBTs is exposed.

In Section 3, UMPBTs are applied in two canonical testing situations: the test of a binomial proportion, and the test of a normal mean. These two tests are perhaps the most common tests used by practitioners of statistics. The binomial test is illustrated in the context of a clinical trial, while the normal mean test is applied to evaluate evidence reported in support of the Higgs boson. Section 4 describes several settings outside of one-parameter exponential family models for which UMPBTs exist. These include cases in which the nuisance parameters under the null and alternative hypotheses can be considered to be equal (though unknown), and situations in which it is possible to marginalize over nuisance parameters to obtain expressions for data densities that are similar to those obtained in one-parameter exponential family models. Section 5 describes approximations to UMPBTs obtained by specifying alternative hypotheses that depend on data through statistics that are ancillary to the parameter of interest. Concluding comments appear in Section 6.

2. One-parameter exponential family models

Assume that x1, …, xn ≡ x are i.i.d. with a sampling density (or probability mass function in the case of discrete data) of the form

$$f(x \mid \theta) = h(x) \exp\left[\eta(\theta)\, T(x) - A(\theta)\right], \tag{6}$$

where T (x), h(x), η(θ) and A(θ) are known functions, and η(θ) is monotonic. Consider a one-sided test of a point null hypothesis H0: θ = θ0 against an arbitrary alternative hypothesis. Let γ denote the evidence threshold for a UMPBT(γ), and assume that the value of θ0 is fixed.

Lemma 1. Assume the conditions of the previous paragraph pertain, and define gγ(θ, θ0) according to

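$$g_\gamma(\theta, \theta_0) = \frac{\log(\gamma) + n\left[A(\theta) - A(\theta_0)\right]}{\eta(\theta) - \eta(\theta_0)}. \tag{7}$$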

In addition, define u to be 1 or −1 according to whether η(θ) is monotonically increasing or decreasing, respectively, and define v to be either 1 or −1 according to whether the alternative hypothesis requires θ to be greater than or less than θ0, respectively. Then a UMPBT(γ) can be obtained by restricting the support of π1(θ) to values of θ that belong to the set

$$\underset{\theta}{\arg\min}\; uv\, g_\gamma(\theta, \theta_0). \tag{8}$$

Proof. Consider the case in which the alternative hypothesis requires θ to be greater than θ0 and η(θ) is increasing (so that uv = 1), and let θt denote the true (i.e., data-generating) parameter for x under (6). Consider first simple alternatives for which the prior on θ is a point mass at θ1. Then

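$$\begin{aligned} P_{\theta_t}\left[\log BF_{10}(x) > \log(\gamma)\right] &= P_{\theta_t}\left[\sum_{i=1}^n \log\frac{f(x_i \mid \theta_1)}{f(x_i \mid \theta_0)} > \log(\gamma)\right] \\ &= P_{\theta_t}\left[\sum_{i=1}^n T(x_i) > \frac{\log(\gamma) + n\left[A(\theta_1) - A(\theta_0)\right]}{\eta(\theta_1) - \eta(\theta_0)}\right]. \end{aligned} \tag{9}$$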

It follows that the probability in (9) achieves its maximum value when the right-hand side of the inequality is minimized, regardless of the distribution of ΣT (xi).

Now consider composite alternative hypotheses, and define an indicator function s according to

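$$s(x, \theta) = \begin{cases} 1, & \sum_{i=1}^n T(x_i) > g_\gamma(\theta, \theta_0), \\ 0, & \text{otherwise}. \end{cases} \tag{10}$$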

Let θ* be a value that minimizes gγ (θ, θ0). Then it follows from (9) that

$$s(x, \theta) \le s(x, \theta^*) \quad \text{for all } \theta. \tag{11}$$

This implies that

$$\int_{\Theta} s(x, \theta)\, \pi(\theta)\, d\theta \le s(x, \theta^*) \tag{12}$$

for all probability densities π(θ). It follows that

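$$\int_{\chi} \left[\int_{\Theta} s(x, \theta)\, \pi(\theta)\, d\theta\right] f(x \mid \theta_t)\, dx \tag{13}$$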

is maximized by a prior that concentrates its mass on the set for which gγ (θ, θ0) is minimized.

The proof for other values of (u, v) follows by noting that the direction of the inequality in (9) changes according to the sign of η(θ1) − η(θ0).
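Lemma 1 reduces the construction of a UMPBT in a one-parameter exponential family to minimizing uv·gγ(θ, θ0). The sketch below approximates that minimization on a grid, which is an implementation choice rather than part of the lemma, and applies it to a Poisson model (η = log, A(λ) = λ, T(x) = x) with hypothetical settings λ0 = 1, n = 20 and γ = 10. It then checks the lemma's conclusion for a few data-generating values: no other point alternative yields a larger probability that BF10 exceeds γ. All function names are illustrative.

```python
import math


def g_gamma(theta, theta0, n, gamma, eta, A):
    """Rejection threshold for sum T(x_i) under a point alternative theta, eq. (7)."""
    return (math.log(gamma) + n * (A(theta) - A(theta0))) / (eta(theta) - eta(theta0))


def umpbt_point(theta0, n, gamma, eta, A, grid, u=1, v=1):
    """Grid approximation to the set (8): a grid point minimizing u * v * g_gamma."""
    return min(grid, key=lambda th: u * v * g_gamma(th, theta0, n, gamma, eta, A))


def poisson_sf(c, mean):
    """P(S > c) for S ~ Poisson(mean) and real c."""
    if c < 0:
        return 1.0
    term = math.exp(-mean)
    cdf = term
    for k in range(1, math.floor(c) + 1):
        term *= mean / k
        cdf += term
    return 1.0 - cdf


# Poisson model: f(x | lam) = exp(x log lam - lam) / x!, so eta = log and A(lam) = lam.
lam0, n, gamma = 1.0, 20, 10.0
grid = [lam0 + 0.001 * k for k in range(1, 3001)]  # alternatives lam > lam0 (u = v = 1)
lam1 = umpbt_point(lam0, n, gamma, math.log, lambda lam: lam, grid)


def prob_bf_exceeds(lam_alt, lam_true):
    """P(BF10 > gamma) under lam_true for the point alternative lam_alt, via eq. (9)."""
    c = g_gamma(lam_alt, lam0, n, gamma, math.log, lambda lam: lam)
    return poisson_sf(c, n * lam_true)  # the sum of n i.i.d. Poisson(lam_true) is Poisson(n * lam_true)


print(lam1, [prob_bf_exceeds(lam1, lt) for lt in (1.0, 1.5, 2.0)])
```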

It should be noted that in some cases the values of θ that maximize Pθt (BF10 > γ) are not unique. This might happen if, for instance, no value of the sufficient statistic obtained from the experiment could produce a Bayes factor that exceeded the γ threshold. For example, it would not be possible to obtain a Bayes factor of 10 against a null hypothesis that a binomial success probability was 0.5 based on a sample of size n = 1. In that case, the probability of exceeding the threshold is 0 for all values of the success probability, and a unique UMPBT does not exist. More generally, if T (x) is discrete, then many values of θ1 might produce equivalent tests. An illustration of this phenomenon is provided in the first example.
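Both forms of non-uniqueness can be seen directly. The sketch below (hypothetical helper names) first confirms that with n = 1 and p0 = 0.5 no point alternative can yield a Bayes factor above 10, since BF10 is at most p1/0.5 < 2, and then shows that, for the discrete binomial statistic of the clinical trial example in Section 3.1.1 (n = 10, p0 = 0.3, γ = 3), quite different alternatives produce the same rejection region.

```python
def bf10(x, n, p0, p1):
    """Bayes factor of the point alternative p1 against p0 after x successes in n trials."""
    return (p1 / p0) ** x * ((1 - p1) / (1 - p0)) ** (n - x)


def rejection_start(p1, p0, n, gamma):
    """Smallest x with BF10 > gamma (BF10 increases in x when p1 > p0), or None if none."""
    for x in range(n + 1):
        if bf10(x, n, p0, p1) > gamma:
            return x
    return None


# n = 1, p0 = 0.5: BF10 is at most p1 / 0.5 < 2, so the threshold 10 is never reached.
unreachable = all(
    rejection_start(0.5 + 0.001 * k, 0.5, 1, 10.0) is None for k in range(1, 500)
)

# n = 10, p0 = 0.3, gamma = 3: different alternatives give identical tests.
regions = {p1: rejection_start(p1, 0.3, 10, 3.0) for p1 in (0.45, 0.525, 0.7, 0.8)}
print(unreachable, regions)  # True {0.45: 6, 0.525: 6, 0.7: 6, 0.8: 7}
```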

2.1. Large sample properties of UMPBTs

Asymptotic properties of UMPBTs can most easily be examined for tests of point null hypotheses for a canonical parameter in one-parameter exponential families. Two properties of UMPBTs in this setting are described in the following lemma.

Lemma 2. Let X1, …, Xn represent a random sample drawn from a density expressible in the form (6) with η(θ) = θ, and consider a test of the precise null hypothesis H0: θ = θ0. Suppose that A(θ) has three bounded derivatives in a neighborhood of θ0, and let θ* denote a value of θ that defines a UMPBT(γ) test and satisfies

$$\frac{d\, g_\gamma(\theta^*, \theta_0)}{d\theta} = 0. \tag{14}$$

Then the following statements apply: (1) For some t ∈ (θ0, θ*),

$$|\theta^* - \theta_0| = \left[\frac{2\log(\gamma)}{A''(t)\, n}\right]^{1/2}. \tag{15}$$

(2) Under the null hypothesis,

$$\log(BF_{10}) \xrightarrow{\ d\ } N\left(-\log(\gamma),\; 2\log(\gamma)\right). \tag{16}$$

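Proof. The first statement follows immediately from (14) by expanding A(θ) in a Taylor series around θ*. The second statement follows by noting that the weight of evidence can be expressed as

$$\log(BF_{10}) = (\theta^* - \theta_0)\sum_{i=1}^n T(x_i) - n\left[A(\theta^*) - A(\theta_0)\right].$$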

Expanding in a Taylor series around θ0 leads to

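$$\log(BF_{10}) = \left[\frac{2\log(\gamma)}{A''(t)}\right]^{1/2} \frac{\sum_{i=1}^n T(x_i) - nA'(\theta_0)}{\sqrt{n}} - \log(\gamma) + \varepsilon, \tag{17}$$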

where ε represents a term of order O(n−½). From properties of exponential family models, it is known that

$$E_{\theta_0}\left[T(x_i)\right] = A'(\theta_0), \qquad \operatorname{Var}_{\theta_0}\left[T(x_i)\right] = A''(\theta_0).$$

Because A(θ) has three bounded derivatives in a neighborhood of θ0, [A′(t) − A′(θ0)] and [A″(t) − A″(θ0)] are of order O(n−½), and the statement follows from an application of the central limit theorem.

Equation (15) shows that the difference |θ* − θ0| is O(n−1/2) when the evidence threshold γ is held constant as a function of n. In classical terms, this implies that alternative hypotheses defined by UMPBTs represent Pitman sequences of local alternatives [Pitman (1949)]. This fact, in conjunction with (16), exposes an interesting behavior of UMPBTs in large sample settings, particularly when viewed from the context of the Jeffreys–Lindley paradox [Jeffreys (1939), Lindley (1957); see also Robert, Chopin and Rousseau (2009)].

The Jeffreys–Lindley paradox (JLP) arises as an incongruity between Bayesian and classical hypothesis tests of a point null hypothesis. To understand the paradox, suppose that the prior distribution for a parameter of interest under the alternative hypothesis is uniform on an interval I containing the null value θ0, and that the prior probability assigned to the null hypothesis is p(H0). If p(H0) is bounded away from 0, then it is possible for the null hypothesis to be rejected in an α-level significance test even when the posterior probability assigned to the null hypothesis exceeds 1 − α. Thus, the anomalous behavior exhibited in the example of Table 1, in which the null hypothesis was rejected in a significance test while being supported by the data, is characteristic of a more general phenomenon that may occur even in large sample settings. To see that the null hypothesis can be rejected even when the posterior odds are in its favor, note that for sufficiently large n the width of I will be large relative to the posterior standard deviation of θ under the alternative hypothesis. Data that are not “too far” from fθ0 may therefore be much more likely to have arisen from the null hypothesis than from a density fθ when θ is drawn uniformly from I. At the same time, the value of the test statistic based on the data may appear extreme given that fθ0 pertains.

For moderate values of γ, the second statement in Lemma 2 shows that the weight of evidence obtained from a UMPBT is unlikely to provide strong evidence in favor of either hypothesis when the null hypothesis is true. When γ = 4, for instance, the central 95% of the limiting distribution of the weight of evidence extends only from −4.65 to 1.88, no matter how large n is. Thus, the posterior probability of the null hypothesis does not converge to 1 as the sample size grows. The null hypothesis is never fully accepted, nor the alternative rejected, when the evidence threshold is held constant as n increases.

This large sample behavior of UMPBTs with fixed evidence thresholds is, in a certain sense, similar to the JLP. When the null hypothesis is true and n is large, the probability of rejecting the null hypothesis at a fixed level of significance remains constant at the specified level of significance. For instance, the null hypothesis is rejected 5% of the time in a standard 5% significance test when the null hypothesis is true, regardless of how large the sample size is. Similarly, when γ = 4, the probability that the weight of evidence in favor of the alternative hypothesis will be greater than 0 converges to 0.20 as n becomes large. As with the significance test, there remains a nonzero probability that the alternative hypothesis will be favored by the UMPBT even when the null hypothesis is true, regardless of how large n is.
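The numbers quoted for γ = 4 follow from the limiting normal law in (16). The sketch below evaluates that law with Python's `statistics.NormalDist` and, as an additional check, simulates the weight of evidence under H0 in the normal-mean model of Section 3.2 (σ = 1 and n = 100, both arbitrary choices). In that model, substituting the UMPBT alternative μ1 = μ0 + σ√(2 log γ / n) into the log Bayes factor makes the N(−log γ, 2 log γ) distribution exact for every n, not only asymptotically. Function names are illustrative.

```python
import random
from math import log, sqrt
from statistics import NormalDist, fmean


def null_woe_limit(gamma):
    """Limiting N(-log gamma, 2 log gamma) law of the weight of evidence under H0, eq. (16)."""
    return NormalDist(mu=-log(gamma), sigma=sqrt(2 * log(gamma)))


d = null_woe_limit(4.0)
z = NormalDist().inv_cdf(0.975)
interval = (d.mean - z * d.stdev, d.mean + z * d.stdev)  # about (-4.65, 1.88)
prob_favors_h1 = 1 - d.cdf(0.0)                          # about 0.20


def simulate_normal_woe(gamma, n, reps, seed=1):
    """Monte Carlo draws of log BF10 under H0 for the normal-mean UMPBT with sigma = 1."""
    rng = random.Random(seed)
    mu0, sigma = 0.0, 1.0
    mu1 = mu0 + sigma * sqrt(2 * log(gamma) / n)  # UMPBT alternative (upper one-sided)
    draws = []
    for _ in range(reps):
        xbar = rng.gauss(mu0, sigma / sqrt(n))  # sample mean under H0
        # log of the normal-mean Bayes factor for the point alternative mu1
        draws.append(n / sigma**2 * (xbar * (mu1 - mu0) + (mu0**2 - mu1**2) / 2))
    return draws


sims = simulate_normal_woe(4.0, n=100, reps=20000)
print(interval, prob_favors_h1, fmean(sims), fmean(w > 0 for w in sims))
```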

On a related note, Rousseau (2007) has demonstrated that a point null hypothesis may be used as a convenient mathematical approximation to interval hypotheses of the form (θ0 − ε, θ0 + ε) if ε is sufficiently small. Her results suggest that such an approximation is valid only if ε = o(n−½). The fact that the distance between UMPBT alternatives and θ0 decreases at a rate of O(n−½) suggests that UMPBTs may be used to test small interval hypotheses around θ0, provided that the width of the interval satisfies the constraints provided by Rousseau.

Further comments regarding the asymptotic properties of UMPBTs appear in the discussion section.

3. Examples

Tests of simple hypotheses in one-parameter exponential family models continue to be the most common statistical hypothesis tests used by practitioners. These tests play a central role in many science, technology and business applications. In addition, many test statistics are asymptotically distributed as standard normal deviates, which means that UMPBTs can be applied to obtain Bayes factors based on test statistics [Johnson (2005)]. This section illustrates the use of UMPBTs in two archetypical examples; the first involves the test of a binomial success probability, and the second the test of the value of a parameter estimate that is assumed to be approximately normally distributed.

3.1. Test of binomial success probability

Suppose x ~ Bin(n, p), and consider the test of a null hypothesis H0: p = p0 versus an alternative hypothesis H1: p > p0. Assume that an evidence threshold of γ is desired for the test; that is, the alternative hypothesis is accepted if BF10 > γ.

From Lemma 1, the UMPBT(γ) is defined by finding p1 that satisfies p1 > p0 and

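$$p_1 = \underset{p}{\arg\min}\; \frac{\log(\gamma) - n\left[\log(1-p) - \log(1-p_0)\right]}{\log\left[p/(1-p)\right] - \log\left[p_0/(1-p_0)\right]}. \tag{18}$$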

Although this equation cannot be solved in closed form, its solution can be found easily using optimization functions available in most statistical programs.

3.1.1. Phase II clinical trials with binary outcomes

To illustrate the resulting test in a real-world application that involves small sample sizes, consider a one-arm Phase II trial of a new drug intended to improve the response rate to a disease from the standard-of-care rate of p0 = 0.3. Suppose also that budget and time constraints limit the number of patients that can be accrued in the trial to n = 10, and suppose that the new drug will be pursued only if the odds that it offers an improvement over the standard of care are at least 3:1. Taking γ = 3, it follows from (18) that the UMPBT alternative is defined by taking H1: p1 = 0.525. At this value of p1, the Bayes factor BF10 in favor of H1 exceeds 3 whenever 6 or more of the 10 patients enrolled in the trial respond to treatment.
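Equation (18) has no closed-form solution, but a one-dimensional search suffices. This sketch uses a plain grid search with step 10⁻⁴ (an arbitrary choice; any bounded scalar optimizer would serve) and then finds the smallest number of responses for which BF10 exceeds 3. The objective in (18) is very flat near its minimum, so the grid optimum lands close to, but not necessarily exactly at, the reported value 0.525; because x is discrete, this does not change the rejection region. Function names are illustrative.

```python
import math


def g_binomial(p, p0, n, gamma):
    """Objective in (18): Lemma 1's g_gamma with eta(p) = logit(p) and A(p) = -log(1 - p)."""
    num = math.log(gamma) - n * (math.log(1 - p) - math.log(1 - p0))
    den = math.log(p / (1 - p)) - math.log(p0 / (1 - p0))
    return num / den


def umpbt_binomial(p0, n, gamma, step=1e-4):
    """Grid-search approximation to the minimizer p1 > p0 of (18)."""
    grid = [p0 + step * k for k in range(1, int(round((1 - p0) / step)))]
    return min(grid, key=lambda p: g_binomial(p, p0, n, gamma))


def bf10(x, n, p0, p1):
    """Bayes factor of the point alternative p1 against p0 after x successes in n trials."""
    return (p1 / p0) ** x * ((1 - p1) / (1 - p0)) ** (n - x)


p0, n, gamma = 0.3, 10, 3.0
p1 = umpbt_binomial(p0, n, gamma)  # close to 0.525
first_x = min(x for x in range(n + 1) if bf10(x, n, p0, p1) > gamma)  # 6
print(p1, first_x, bf10(5, n, p0, p1), bf10(6, n, p0, p1))
```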

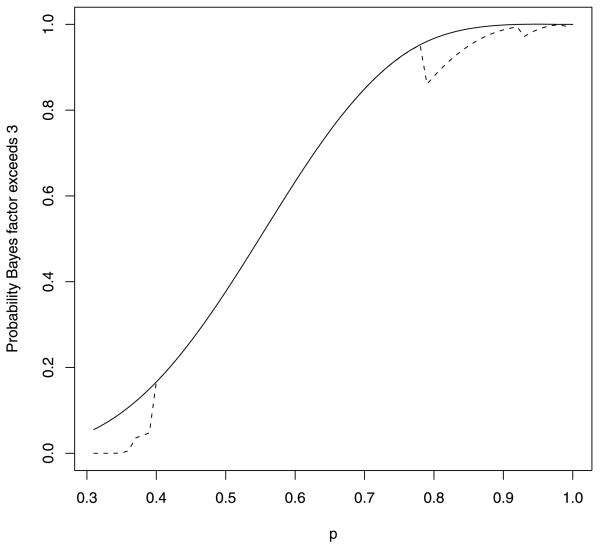

A plot of the probability that BF10 exceeds 3 as a function of the true response rate p appears in Figure 1. For comparison, also plotted in this figure (dashed curve) is the probability that BF10 exceeds 3 when p1 is set to the data-generating parameter, that is, when p1 = pt.

FIG. 1.

Probability that the Bayes factor exceeds 3 plotted against the data-generating parameter. The solid curve shows the probability of exceeding 3 for the UMPBT. The dashed curve displays this probability when the Bayes factor is calculated using the data-generating parameter.

Figure 1 shows that the probability that BF10 exceeds 3 when calculated under the true alternative hypothesis is substantially smaller than it is under the UMPBT alternative for values of p < 0.4 and for values of p > 0.78. Indeed, for values of p < 0.334, there is no chance that BF10 will exceed 3. This is so because BF10 is largest when x = 10, and even then (0.334/0.30)^10 ≈ 2.93 remains less than 3.0. The decrease in the probability that the Bayes factor exceeds 3 for large values of p stems from the relatively small probability that these models assign to the observation of intermediate values of x. For example, when p = 0.8, the probability of observing 6 out of 10 successes is only 0.088, while the corresponding probability under H0 is 0.037. Thus BF10 ≈ 2.4, and the evidence in favor of the true success probability does not exceed 3. That is, the discontinuity in the dashed curve at p ≈ 0.78 occurs because the Bayes factor for this test is not greater than 3 when x = 6. Similarly, the other discontinuities in the dashed curve occur when the rejection region for the Bayesian test (i.e., values of x for which the Bayes factor is greater than 3) excludes another intermediate value of x. The dashed and solid curves agree for all values of p at which the Bayes factor computed under the data-generating alternative exceeds 3 exactly when x ≥ 6.
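The features of the dashed curve can be verified by asking, for a given data-generating p, where the Bayes factor computed under that alternative crosses 3. The sketch below (hypothetical helper names, simple bisection) computes the smallest p for which BF10 > 3 is attainable at all, the Bayes factor at x = 6 when p = 0.8, and the value of p beyond which x = 6 drops out of the rejection region.

```python
def bf10(x, n, p0, p1):
    """Bayes factor of the point alternative p1 against p0 after x successes in n trials."""
    return (p1 / p0) ** x * ((1 - p1) / (1 - p0)) ** (n - x)


def bisect(f, lo, hi, tol=1e-10):
    """Root of f on [lo, hi], assuming f(lo) and f(hi) have opposite signs."""
    flo = f(lo)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        fmid = f(mid)
        if (fmid > 0) == (flo > 0):
            lo, flo = mid, fmid
        else:
            hi = mid
    return (lo + hi) / 2


p0, n, gamma = 0.3, 10, 3.0

# BF10 is largest at x = n, so BF10 > 3 is attainable only if (p / 0.3)^10 > 3.
p_min = p0 * gamma ** (1 / n)  # about 0.335

# Data-generating alternative p = 0.8: BF10 at x = 6 falls short of 3.
bf_6_at_08 = bf10(6, n, p0, 0.8)  # about 2.4

# BF10(6) decreases in p for p > 0.6; find where it crosses 3.
p_drop = bisect(lambda p: bf10(6, n, p0, p) - gamma, 0.6, 0.95)  # about 0.78
print(p_min, bf_6_at_08, p_drop)
```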

It is also interesting to note that the solid curve depicted in Figure 1 represents the power curve for an approximate 5% one-sided significance test of the null hypothesis that p = 0.3 [note that P0.3(X ≥ 6) = 0.047]. This rejection region for the 5% significance test also corresponds to the region for which the Bayes factor corresponding to the UMPBT(γ) exceeds γ for all values of γ ∈ (2.39, 6.82). If equal prior probabilities are assigned to H0 and H1, this suggests that a p-value of 0.05 for this test corresponds roughly to the assignment of a posterior probability between 1/7.82 ≈ 0.13 and 1/3.39 ≈ 0.29 to the null hypothesis. This range of values for the posterior probability of the null hypothesis is in approximate agreement with values suggested by other authors, for example, Berger and Sellke (1987).
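The endpoints of the γ interval can be obtained without any search. The UMPBT(γ) rejection region is {x ≥ 6} exactly when the minimized threshold in (18) lies in [5, 6), which happens when γ is at least the largest attainable Bayes factor at x = 5 but below the largest attainable Bayes factor at x = 6; each maximum is the likelihood ratio evaluated at the maximum likelihood estimate x/n. The sketch below computes both endpoints and cross-checks a few values of γ with a grid-based UMPBT (hypothetical helper names, grid step chosen arbitrarily).

```python
import math


def bf10(x, n, p0, p1):
    """Bayes factor of the point alternative p1 against p0 after x successes in n trials."""
    return (p1 / p0) ** x * ((1 - p1) / (1 - p0)) ** (n - x)


def g_binomial(p, p0, n, gamma):
    """Objective in (18)."""
    num = math.log(gamma) - n * (math.log(1 - p) - math.log(1 - p0))
    den = math.log(p / (1 - p)) - math.log(p0 / (1 - p0))
    return num / den


def umpbt_region_start(p0, n, gamma, step=1e-4):
    """Smallest x rejected by UMPBT(gamma): x must exceed the minimum of (18) over p > p0."""
    grid = [p0 + step * k for k in range(1, int(round((1 - p0) / step)))]
    threshold = min(g_binomial(p, p0, n, gamma) for p in grid)
    return math.floor(threshold) + 1


p0, n = 0.3, 10
gamma_lo = bf10(5, n, p0, 5 / n)  # largest attainable BF10 at x = 5, about 2.39
gamma_hi = bf10(6, n, p0, 6 / n)  # largest attainable BF10 at x = 6, about 6.82
post_range = (1 / (1 + gamma_hi), 1 / (1 + gamma_lo))  # about (0.13, 0.29)
print(gamma_lo, gamma_hi, post_range)
print([umpbt_region_start(p0, n, g) for g in (2.37, 2.5, 6.5, 7.0)])  # [5, 6, 6, 7]
```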

This example also indicates that a UMPBT can result in large type I errors if the threshold γ is chosen to be too small. For instance, taking γ = 2 in this example would lead to type I errors that were larger than 0.05.

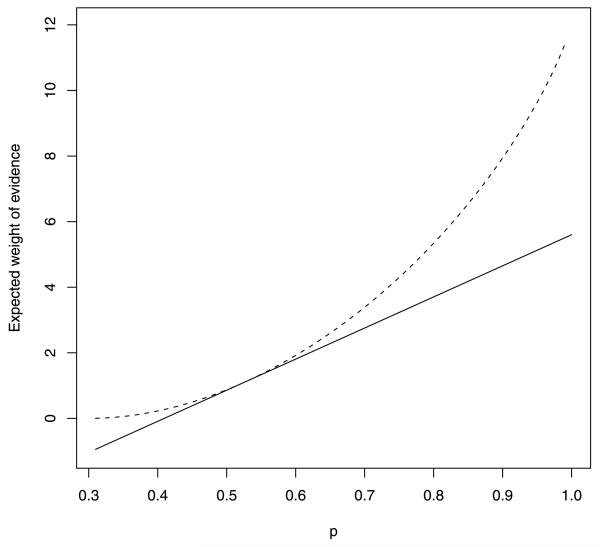

It is important to note that the UMPBT does not provide a test that maximizes the expected weight of evidence, as equation (4) demonstrates. This point is illustrated in Figure 2, which depicts (solid curve) the expected weight of evidence obtained in favor of H1 as the data-generating success probability is varied in (0.3, 1.0). For comparison, the dashed curve shows the expected weight of evidence obtained when the alternative hypothesis is set equal to the data-generating success probability. As predicted by the inequality in (4), on average the UMPBT provides less evidence in favor of the true alternative hypothesis for all values of p ∈ (0.3, 1.0) except p = 0.525, the UMPBT value.
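For a point alternative p1, the expected weight of evidence has the closed form n[pt log(p1/p0) + (1 − pt) log((1 − p1)/(1 − p0))], so the two curves in Figure 2 can be tabulated directly. In this sketch (grid spacing arbitrary, names illustrative), the difference between the curves is n times the Kullback–Leibler divergence of Bernoulli(0.525) from Bernoulli(pt), which is nonnegative and vanishes only at pt = 0.525, as (4) predicts.

```python
import math


def expected_woe(pt, p1, p0=0.3, n=10):
    """E_pt[log BF10] for the point alternative p1 in the binomial model with n trials."""
    return n * (pt * math.log(p1 / p0) + (1 - pt) * math.log((1 - p1) / (1 - p0)))


P1_UMPBT = 0.525  # UMPBT(3) alternative from Section 3.1.1
grid = [round(0.3 + 0.01 * k, 2) for k in range(1, 70)]  # data-generating p in (0.3, 1.0)
solid = [expected_woe(pt, P1_UMPBT) for pt in grid]      # UMPBT alternative
dashed = [expected_woe(pt, pt) for pt in grid]           # alternative = data-generating p
gaps = [d - s for d, s in zip(dashed, solid)]            # n * KL(Bern(pt) || Bern(0.525))
print(min(gaps))
```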

FIG. 2.

Expected weight of evidence produced by a UMPBT(γ) against a null hypothesis that p0 = 0.3 when the sample size is n = 10 (solid curve), versus the expected weight of evidence observed using the data-generating success probability at the alternative hypothesis (dashed curve). The data-generating parameter value is displayed on the horizontal axis.
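Because the log Bayes factor for a binomial observation is linear in x, both curves in Figure 2 have closed forms. The sketch below (the helper `expected_woe` is a hypothetical name) evaluates E[log BF10] = n[p log(p1/p0) + (1 − p) log{(1 − p1)/(1 − p0)}] under a data-generating p. The gap between the dashed and solid curves equals n times the Kullback–Leibler divergence between Bernoulli(p) and Bernoulli(0.525), which is why it vanishes only at p = 0.525.

```python
import math

def expected_woe(p_true, p1, p0, n):
    """E[log BF10] for H1: p = p1 versus H0: p = p0 when X ~ Binomial(n, p_true)."""
    return n * (p_true * math.log(p1 / p0)
                + (1 - p_true) * math.log((1 - p1) / (1 - p0)))

n, p0, p_umpbt = 10, 0.3, 0.525
for p_true in (0.4, 0.525, 0.7, 0.9):
    solid = expected_woe(p_true, p_umpbt, p0, n)   # alternative fixed at the UMPBT value
    dashed = expected_woe(p_true, p_true, p0, n)   # alternative at the data-generating value
    print(f"p = {p_true}: UMPBT {solid:.3f}, true-p alternative {dashed:.3f}")
```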

3.2. Test of normal mean, σ2 known

Suppose xi, i = 1, …, n, are i.i.d. N(μ, σ2) with σ2 known. The null hypothesis is H0: μ = μ0, and the alternative hypothesis is accepted if BF10 > γ. Assuming that the alternative hypothesis takes the form H1: μ = μ1 in a one-sided test, it follows that

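$$\mathrm{BF}_{10}(\bar{x}) = \exp\left\{\frac{n}{\sigma^2}\left[\bar{x}(\mu_1-\mu_0) + \frac{\mu_0^2-\mu_1^2}{2}\right]\right\}. \tag{19}$$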

If the data-generating parameter is μt, the probability that BF10 is greater than γ can be written as

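$$P_{\mu_t}(\mathrm{BF}_{10} > \gamma) = \begin{cases} P_{\mu_t}\left[\bar{x} > \dfrac{\sigma^2\log\gamma}{n(\mu_1-\mu_0)} + \dfrac{\mu_0+\mu_1}{2}\right], & \mu_1 > \mu_0,\\[1ex] P_{\mu_t}\left[\bar{x} < \dfrac{\sigma^2\log\gamma}{n(\mu_1-\mu_0)} + \dfrac{\mu_0+\mu_1}{2}\right], & \mu_1 < \mu_0. \end{cases} \tag{20}$$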

If μ1 > μ0, then the UMPBT(γ) value of μ1 satisfies

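$$\mu_1 = \arg\min_{\mu > \mu_0}\left\{\frac{\sigma^2\log\gamma}{n(\mu-\mu_0)} + \frac{\mu_0+\mu}{2}\right\}. \tag{21}$$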

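Conversely, if μ1 < μ0, then the optimal value of μ1 satisfies

$$\mu_1 = \arg\max_{\mu < \mu_0}\left\{\frac{\sigma^2\log\gamma}{n(\mu-\mu_0)} + \frac{\mu_0+\mu}{2}\right\}. \tag{22}$$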

It follows that the UMPBT(γ) value for μ1 is given by

$$\mu_1 = \mu_0 \pm \sigma\sqrt{\frac{2\log\gamma}{n}}, \tag{23}$$

depending on whether μ1 > μ0 or μ1 < μ0.
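The closed form for the UMPBT(γ) alternative, μ1 = μ0 + σ[2 log(γ)/n]^{1/2} in the upper-tailed case, can be checked numerically by minimizing the rejection threshold σ2 log(γ)/[n(μ1 − μ0)] + (μ0 + μ1)/2 over a grid. A minimal Python sketch, assuming μ0 = 0, σ = 1, n = 1 and γ = 10 as in Figure 3; the function names are hypothetical:

```python
import math

def umpbt_normal_mean(mu0, sigma, n, gamma, upper=True):
    """Closed-form UMPBT(gamma) alternative for a normal mean with known sigma."""
    delta = sigma * math.sqrt(2 * math.log(gamma) / n)
    return mu0 + delta if upper else mu0 - delta

def rejection_threshold(mu1, mu0, sigma, n, gamma):
    """Value that xbar must exceed for BF10 > gamma when mu1 > mu0."""
    return sigma ** 2 * math.log(gamma) / (n * (mu1 - mu0)) + (mu0 + mu1) / 2

mu0, sigma, n, gamma = 0.0, 1.0, 1, 10.0
grid = [mu0 + k / 10_000 for k in range(1, 100_000)]   # candidate mu1 values in (0, 10)
mu1_numeric = min(grid, key=lambda m: rejection_threshold(m, mu0, sigma, n, gamma))
mu1_closed = umpbt_normal_mean(mu0, sigma, n, gamma)
print(f"closed form {mu1_closed:.4f}, grid search {mu1_numeric:.4f}")
```

At the optimum the threshold on x̄ equals μ1 itself, because σ2 log(γ)/[n(μ1 − μ0)] = (μ1 − μ0)/2 when μ1 − μ0 = σ[2 log(γ)/n]^{1/2}.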

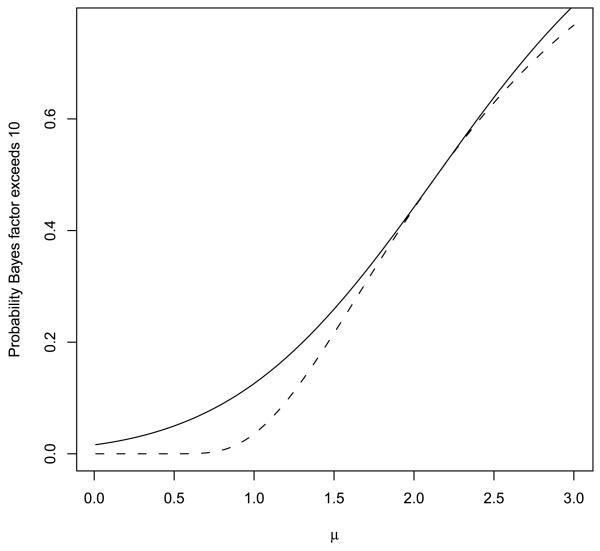

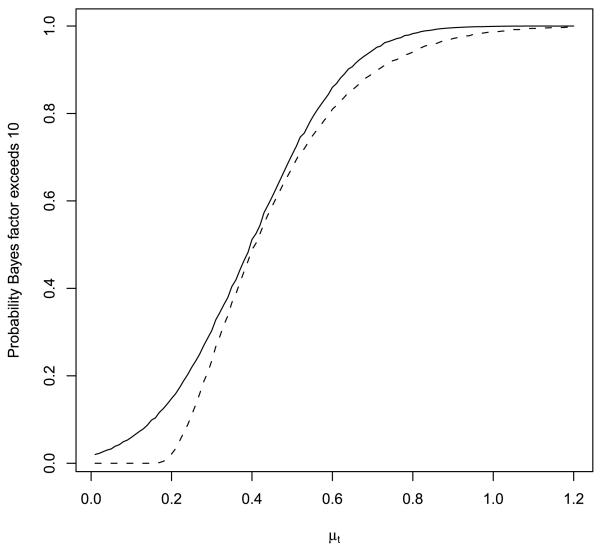

Figure 3 depicts the probability that the Bayes factor exceeds γ = 10 when testing a null hypothesis that μ = 0 based on a single, standard normal observation (i.e., n = 1, σ2 = 1). In this case, the UMPBT(10) is obtained by taking μ1 = 2.146. For comparison, the probability that the Bayes factor exceeds 10 when the alternative is defined to be the data-generating parameter is depicted by the dashed curve in the plot.

FIG. 3.

Probability that Bayes factor in favor of UMPBT alternative exceeds 10 when μ0 = 0 and n = 1 (solid curve). The dashed curve displays this probability when the Bayes factor is calculated under the alternative hypothesis that μ1 equals the data-generating parameter (displayed on the horizontal axis).
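Under a data-generating mean μt, x̄ ~ N(μt, σ2/n), so each curve in Figure 3 is a normal tail probability evaluated at the rejection threshold for x̄. A sketch, assuming the one-sided case μ1 > μ0 with μ0 = 0, σ = 1 and n = 1 (the helper name is hypothetical):

```python
import math
from statistics import NormalDist

def prob_bf_exceeds(mu_true, mu1, mu0=0.0, sigma=1.0, n=1, gamma=10.0):
    """P(BF10 > gamma) when xbar ~ N(mu_true, sigma^2 / n) and H1: mu = mu1 > mu0."""
    threshold = sigma ** 2 * math.log(gamma) / (n * (mu1 - mu0)) + (mu0 + mu1) / 2
    return 1 - NormalDist(mu_true, sigma / math.sqrt(n)).cdf(threshold)

mu1_umpbt = math.sqrt(2 * math.log(10.0))   # about 2.146 when n = 1 and sigma = 1
for mu_true in (0.5, 1.0, 2.0, 3.0, 4.0):
    solid = prob_bf_exceeds(mu_true, mu1_umpbt)    # UMPBT alternative
    dashed = prob_bf_exceeds(mu_true, mu_true)     # alternative at the data-generating mean
    print(f"mu = {mu_true}: UMPBT {solid:.3f}, true-mu alternative {dashed:.3f}")
```

Since the UMPBT minimizes the threshold, the solid curve is never below the dashed one.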

UMPBTs can also be used to interpret the evidence obtained from classical UMPTs. In a classical one-sided test of a normal mean with known variance, the null hypothesis is rejected if

$$\bar{x} > \mu_0 + z_\alpha\frac{\sigma}{\sqrt{n}},$$

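where α is the level of the test designed to detect μ1 > μ0 and zα is the upper α quantile of the standard normal distribution, so that P(Z > zα) = α. In the UMPBT, from (19)–(20) it follows that the null hypothesis is rejected if

$$\bar{x} > \frac{\sigma^2\log\gamma}{n(\mu_1-\mu_0)} + \frac{\mu_0+\mu_1}{2}.$$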

Setting μ1 = μ0 + σ[2 log(γ)/n]^{1/2}, the UMPBT(γ) value, and equating the two rejection regions, it follows that the rejection regions for the two tests are identical if

$$\gamma = \exp\left(\frac{z_\alpha^2}{2}\right). \tag{24}$$

For the case of normally distributed data, it follows that

$$\mu_1 = \mu_0 + z_\alpha\frac{\sigma}{\sqrt{n}}, \tag{25}$$

which means that the alternative hypothesis places μ1 at the boundary of the UMPT rejection region.
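The equivalence of the two rejection regions can be verified numerically. This sketch (hypothetical helper names; n = 25 is an arbitrary choice, since the equality holds for every n) computes γ = exp(zα²/2) and compares the z-test boundary for x̄ with the UMPBT boundary obtained at μ1 = μ0 + σ[2 log(γ)/n]^{1/2}:

```python
import math
from statistics import NormalDist

def gamma_from_alpha(alpha):
    """Evidence threshold matched to a one-sided level-alpha z-test: exp(z_alpha^2 / 2)."""
    z = NormalDist().inv_cdf(1 - alpha)
    return math.exp(z * z / 2)

def boundaries(alpha, mu0=0.0, sigma=1.0, n=25):
    """Rejection boundaries for xbar: (z-test, UMPBT with the matched gamma)."""
    z = NormalDist().inv_cdf(1 - alpha)
    gamma = gamma_from_alpha(alpha)
    mu1 = mu0 + sigma * math.sqrt(2 * math.log(gamma) / n)   # UMPBT(gamma) alternative
    ump = mu0 + z * sigma / math.sqrt(n)
    umpbt = sigma ** 2 * math.log(gamma) / (n * (mu1 - mu0)) + (mu0 + mu1) / 2
    return ump, umpbt

for alpha in (0.05, 0.01, 0.005):
    ump, umpbt = boundaries(alpha)
    print(f"alpha = {alpha}: gamma = {gamma_from_alpha(alpha):.2f}, boundaries {ump:.4f} vs {umpbt:.4f}")
```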

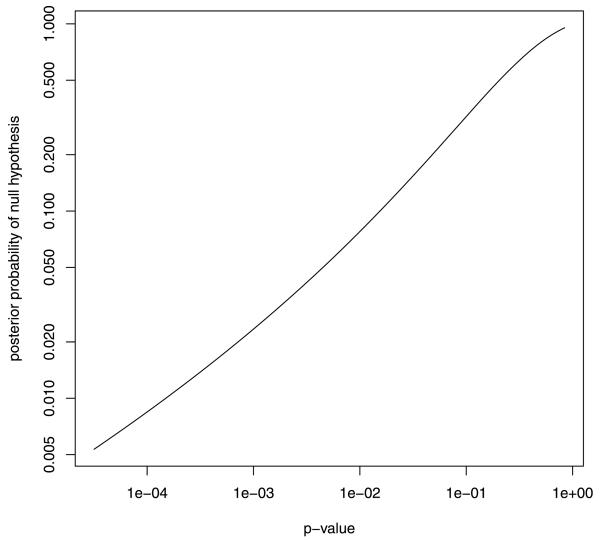

The close connection between the UMPBT and UMPT for a normal mean makes it relatively straightforward to examine the relationship between the p-values reported from a classical test and either the Bayes factors or posterior probabilities obtained from a Bayesian test. For example, significance tests for normal means are often conducted at the 5% level. Given this threshold of evidence for rejection of the null hypothesis, the one-sided γ threshold corresponding to the 5% significance test is exp(1.645²/2) ≈ 3.87, and the UMPBT alternative is μ1 = μ0 + 1.645σ/√n. If we assume that equal prior probabilities are assigned to the null and alternative hypotheses, then a correspondence between p-values and posterior probabilities assigned to the null hypothesis is easy to establish. This correspondence is depicted in Figure 4. For instance, this figure shows that a p-value of 0.01 corresponds to the assignment of posterior probability 0.08 to the null hypothesis.

FIG. 4.

Correspondence between p-values and posterior model probabilities for a UMPBT test derived from a 5% test. This plot assumes equal prior probabilities were assigned to the null and alternative hypotheses. Note that both axes are displayed on the logarithmic scale.
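The mapping in Figure 4 can be reproduced in a few lines. Assuming equal prior odds, a standardized statistic z and the UMPBT alternative placed zα standard errors above μ0, the log Bayes factor reduces to z·zα − zα²/2. The sketch below (helper name hypothetical) converts a one-sided p-value to z and then to the posterior probability of H0.

```python
import math
from statistics import NormalDist

def posterior_null_prob(p_value, alpha=0.05):
    """P(H0 | data) with equal prior odds, using the UMPBT matched to a level-alpha z-test."""
    std = NormalDist()
    z_alpha = std.inv_cdf(1 - alpha)        # standardized UMPBT alternative
    z_obs = std.inv_cdf(1 - p_value)        # observed z implied by a one-sided p-value
    log_bf = z_obs * z_alpha - z_alpha ** 2 / 2
    return 1 / (1 + math.exp(log_bf))

for p in (0.05, 0.01, 0.001):
    print(f"p-value {p}: posterior P(H0) = {posterior_null_prob(p):.3f}")
```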

3.2.1. Evaluating evidence for the Higgs boson

On July 4, 2012, scientists at CERN made the following announcement:

We observe in our data clear signs of a new particle, at the level of 5 sigma, in the mass region around 126 gigaelectronvolts (GeV). (http://press.web.cern.ch/press/PressReleases/Releases2012/PR17.12E.html).

In very simplified terms, the 5 sigma claim can be explained by fitting a model for a Poisson mean that had the following approximate form:

Here, x denotes mass in GeV, {ai} denote nuisance parameters that model background events, s denotes signal above background, m denotes the mass of a new particle, w denotes a convolution parameter and φ(x; m, w) denotes a Gaussian density centered on m with standard deviation w [Prosper (2012)]. Poisson events collected from a series of high energy experiments conducted with the Large Hadron Collider (LHC) at CERN provide the data to estimate the parameters in this stylized model. Interest, of course, focuses on testing whether s > 0 at a mass location m. The null hypothesis is that s = 0 for all m.

The accepted criterion for declaring the discovery of a new particle in the field of particle physics is the 5 sigma rule, which in this case requires that the estimate of s be 5 standard errors from 0 (http://public.web.cern.ch/public/).

Calculation of a Bayes factor based on the original mass spectrum data is complicated by the fact that prior distributions for the nuisance parameters {ai}, m, and w are either not available or are not agreed upon. For this reason, it is more straightforward to compute a Bayes factor for these data based on the test statistic z = ŝ/se(ŝ), where ŝ denotes the maximum likelihood estimate of s and se(ŝ) its standard error [Johnson (2005, 2008)]. To perform this test, assume that under the null hypothesis z has a standard normal distribution, and that under the alternative hypothesis z has a normal distribution with mean μ and variance 1.

In this context, the 5 sigma rule for declaring a new particle discovery means that a new discovery can only be declared if the test statistic z > 5. Using equation (24) to match the rejection region of the classical significance test to a UMPBT(γ) implies that the corresponding evidence threshold is γ = exp(5²/2) = exp(12.5) ≈ 268,000. In other words, a Bayes factor of approximately 268,000 corresponds to the 5 sigma rule required to accept the alternative hypothesis that a new particle has been found.

It follows from the discussion following equation (25) that the UMPBT alternative is μ1 = 5. This value is calculated under the assumption that the test statistic z has a standard normal distribution under the null hypothesis [i.e., σ = 1 and n = 1 in (23)]. If the observed value of z were exactly 5, then the Bayes factor in favor of a new particle would be approximately 268,000. If the observed value were, say, 5.1, then the Bayes factor would be exp(−0.5[(5.1 − 5)² − 5.1²]) = exp(13) ≈ 442,000. These values suggest very strong evidence in favor of a new particle, but perhaps not as much evidence as nonstatisticians might infer from the report of a p-value of 3 × 10⁻⁷.
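The Higgs boson figures follow from matching γ to the 5 sigma cutoff, γ = exp(5²/2), with σ = 1 and n = 1 so that the UMPBT alternative is μ1 = 5. A minimal sketch of the arithmetic (the names are illustrative):

```python
import math

z_cut = 5.0
gamma = math.exp(z_cut ** 2 / 2)   # evidence threshold matched to z > 5
mu1 = z_cut                         # UMPBT alternative for z ~ N(mu, 1)

def bf10(z, mu1=mu1):
    """Bayes factor for H1: z ~ N(mu1, 1) against H0: z ~ N(0, 1)."""
    return math.exp(-0.5 * ((z - mu1) ** 2 - z ** 2))

p_value = 0.5 * math.erfc(z_cut / math.sqrt(2))   # one-sided P(Z > 5)
print(f"gamma = {gamma:,.0f}")
print(f"BF10 at z = 5.0: {bf10(5.0):,.0f}")
print(f"BF10 at z = 5.1: {bf10(5.1):,.0f}")
print(f"one-sided p-value: {p_value:.2e}")
```

Using `math.erfc` rather than `1 - cdf` avoids cancellation error in the extreme tail.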

There are, of course, a number of important caveats that should be considered when interpreting the outcome of this analysis. This analysis assumes that an experiment with a fixed endpoint was conducted, and that the UMPBT value of the Poisson rate at 126 GeV was of physical significance. Referring to (23) and noting that the asymptotic standard error se(ŝ) used to standardize z decreases at rate n^{−1/2}, it follows that the UMPBT alternative hypothesis favored by this analysis is O(n^{−1/2}). For sufficiently large n, systematic errors in the estimation of the background rate could therefore eventually lead to the rejection of the null hypothesis in favor of the hypothesis of a new particle. This is of particular concern if the high energy experiments were continued until a 5 sigma result was obtained. Further comments regarding this point appear in the Discussion section.

3.3. Other one-parameter exponential family models

Table 2 provides the functions that must be minimized to obtain UMPBTs for a number of common exponential family models. The objective functions listed in this table correspond to the function gγ(·, ·) specified in Lemma 1 with v = 1. The negative binomial is parameterized by the fixed number of failures r and the random number of successes x = 0, 1, … observed before termination of sampling. The other models are parameterized so that μ and p denote means and proportions, respectively, while σ2 values refer to variances.

Table 2.

Common one-parameter exponential family models for which UMPBT(γ) tests exist

| Model | Test | Objective function |
|---|---|---|
| Binomial | p1 > p0 | {log(γ) − n log[(1 − p1)/(1 − p0)]} / log{[p1(1 − p0)]/[(1 − p1)p0]} |
| Exponential | μ1 > μ0 | {log(γ) + n[log(μ1) − log(μ0)]} / [1/μ0 − 1/μ1] |
| Neg. Bin. | p1 > p0 | {log(γ) − r log[(1 − p1)/(1 − p0)]} / [log(p1) − log(p0)] |
| Normal | | |
| Normal | μ1 > μ0 | |
| Poisson | μ1 > μ0 | [log(γ) + n(μ1 − μ0)] / [log(μ1) − log(μ0)] |
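The objective functions in Table 2 are simple enough to minimize numerically. The sketch below codes three of the rows (the function names are hypothetical) and, for a Poisson example with arbitrarily chosen γ = 10, n = 20 and μ0 = 1, finds the minimizer by grid search. The search interval (μ0, 10μ0) is an assumption that happens to contain the minimizer for these values.

```python
import math

# Objective functions from Table 2: each returns the value that the sufficient
# statistic must exceed for BF10 > gamma when the alternative is at the trial value.
def poisson_objective(mu1, gamma, n, mu0):
    return (math.log(gamma) + n * (mu1 - mu0)) / (math.log(mu1) - math.log(mu0))

def exponential_objective(mu1, gamma, n, mu0):
    return (math.log(gamma) + n * (math.log(mu1) - math.log(mu0))) / (1 / mu0 - 1 / mu1)

def neg_binomial_objective(p1, gamma, r, p0):
    return (math.log(gamma) - r * math.log((1 - p1) / (1 - p0))) / (math.log(p1) - math.log(p0))

def grid_minimize(objective, lo, hi, steps=100_000):
    """Crude grid search for the minimizer of objective on the open interval (lo, hi)."""
    points = (lo + (hi - lo) * k / steps for k in range(1, steps))
    return min(points, key=objective)

gamma, n, mu0 = 10.0, 20, 1.0
mu1 = grid_minimize(lambda m: poisson_objective(m, gamma, n, mu0), mu0, 10 * mu0)
threshold = poisson_objective(mu1, gamma, n, mu0)
print(f"Poisson UMPBT(10): mu1 = {mu1:.3f}; reject H0 when sum(x) > {threshold:.2f}")
```

Each objective is the point at which the log Bayes factor equals log(γ), so the Bayes factor evaluated at that value of the sufficient statistic is exactly γ.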

4. Extensions to other models

Like UMPTs, UMPBTs are most easily defined within one-parameter exponential family models. In unusual cases, UMPBTs can be defined for data modeled by vector-valued exponential family models, but in general such extensions appear to require stringent constraints on nuisance parameters.

One special case in which UMPBTs can be defined for a d-dimensional parameter θ occurs when the density of an observation can be expressed as

$$f(x \mid \theta) = h(x)\exp\left[\sum_{i=1}^{d}\eta_i(\theta)\,T_i(x) - A(\theta)\right], \tag{26}$$

and all but one of the ηi(θ) are constrained to have identical values under both hypotheses. To understand how a UMPBT can be defined in this case, without loss of generality suppose that ηi(θ), i = 2, …, d are constrained to have the same value under both the null and alternative hypotheses, and that the null and alternative hypotheses are defined by H0: θ1 = θ1,0 and H1: θ1 > θ1,0. For simplicity, suppose further that η1 is a monotonically increasing function.

As in Lemma 1, consider first simple alternative hypotheses expressible as H1: θ1 = θ1,1. Let θ0 = (θ1,0, …, θd,0)′ and θ1 = (θ1,1, …, θd,1)′. It follows that the probability that the logarithm of the Bayes factor exceeds a threshold log(γ) can be expressed as

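$$P\left[\log\mathrm{BF}_{10} > \log\gamma\right] = P\left[\sum_{j=1}^{n} T_1(x_j) > \frac{\log\gamma + n\left[A(\theta_1) - A(\theta_0)\right]}{\eta_1(\theta_1) - \eta_1(\theta_0)}\right]. \tag{27}$$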

The probability in (27) is maximized by minimizing the right-hand side of the inequality. The extension to composite alternative hypotheses follows the logic described in inequalities (11)–(13), which show that UMPBT(γ) tests can be obtained in this setting by choosing the prior distribution of θ1 under the alternative hypothesis so that it concentrates its mass on the set

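$$\arg\min_{\vartheta:\ \vartheta_1 > \theta_{1,0}}\left\{\frac{\log\gamma + n\left[A(\vartheta) - A(\theta_0)\right]}{\eta_1(\vartheta) - \eta_1(\theta_0)}\right\}, \tag{28}$$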

while maintaining the constraint that the values of ηi(θ), i = 2, …, d, are equal under both hypotheses. Similar constructions apply if η1 is monotonically decreasing, or if the alternative hypothesis specifies that θ1 < θ1,0.

More practically useful extensions of UMPBTs can be obtained when it is possible to integrate out nuisance parameters in order to obtain a marginal density for the parameter of interest that falls within the exponential family. An important example of this type occurs in testing whether a regression coefficient in a linear model is zero.

4.1. Test of linear regression coefficient, σ2 known

Suppose that

$$y \sim N(X\beta, \sigma^2 I_n), \tag{29}$$

where σ2 is known, y is an n × 1 observation vector, X an n × p design matrix of full column rank and β = (β1, …, βp)′ denotes a p × 1 regression parameter. The null hypothesis is defined as H0: βp = 0. For concreteness, suppose that interest focuses on testing whether βp > 0, and that under both the null and alternative hypotheses, the prior density on the first p − 1 components of β is a multivariate normal distribution with mean vector 0 and covariance matrix σ2Σ. Then the marginal density of y under H0 is

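$$m_0(y) = (2\pi\sigma^2)^{-n/2}\,|\Sigma|^{-1/2}\,|F|^{-1/2}\exp\left[-\frac{y'\left(I_n - X_{-p}F^{-1}X_{-p}'\right)y}{2\sigma^2}\right], \tag{30}$$

where

$$F = X_{-p}'X_{-p} + \Sigma^{-1}, \tag{31}$$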

and X−p is the matrix consisting of the first p − 1 columns of X.

Let βp* denote the value of βp under the alternative hypothesis H1 that defines the UMPBT(γ), and let xp denote the pth column of X. Then the marginal density of y under H1 is

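$$m_1(y) = (2\pi\sigma^2)^{-n/2}\,|\Sigma|^{-1/2}\,|F|^{-1/2}\exp\left[-\frac{(y - x_p\beta_p^*)'\left(I_n - X_{-p}F^{-1}X_{-p}'\right)(y - x_p\beta_p^*)}{2\sigma^2}\right]. \tag{32}$$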

It follows that the probability that the Bayes factor BF10 exceeds γ can be expressed as

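$$P(\mathrm{BF}_{10} > \gamma) = P\left[x_p'\left(I_n - X_{-p}F^{-1}X_{-p}'\right)y > \frac{\sigma^2\log\gamma}{\beta_p^*} + \frac{\beta_p^*}{2}\,x_p'\left(I_n - X_{-p}F^{-1}X_{-p}'\right)x_p\right], \tag{33}$$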

which is maximized by minimizing the right-hand side of the inequality. The UMPBT(γ) is thus obtained by taking

$$\beta_p^* = \sigma\sqrt{\frac{2\log\gamma}{x_p'\left(I_n - X_{-p}F^{-1}X_{-p}'\right)x_p}}. \tag{34}$$

The corresponding one-sided test of βp < 0 is obtained by reversing the sign of βp* in (34).
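A NumPy sketch of the UMPBT(γ) value for the last regression coefficient, written from the model assumptions stated in this section: known σ, and a N(0, σ2Σ) prior on the first p − 1 coefficients under both hypotheses. It uses βp* = σ{2 log(γ)/[xp′(I − X−pF⁻¹X′−p)xp]}^{1/2} with F = X′−pX−p + Σ⁻¹. The helper name is hypothetical, and the design matrix in the usage lines is simulated purely for illustration.

```python
import numpy as np

def umpbt_last_coefficient(X, sigma, gamma, Sigma):
    """Hypothetical helper: one-sided UMPBT(gamma) value beta_p* for the last column of X.

    Assumes y ~ N(X beta, sigma^2 I) with sigma known and, under both hypotheses,
    beta_{-p} ~ N(0, sigma^2 Sigma) for the first p - 1 coefficients.
    """
    X_rest = X[:, :-1]            # X_{-p}: first p - 1 columns
    x_p = X[:, -1]                # column whose coefficient is tested
    F = X_rest.T @ X_rest + np.linalg.inv(Sigma)
    # I - X_{-p} F^{-1} X_{-p}': removes the part of x_p explained by the nuisance columns
    W = np.eye(X.shape[0]) - X_rest @ np.linalg.solve(F, X_rest.T)
    return sigma * np.sqrt(2 * np.log(gamma) / (x_p @ W @ x_p))

rng = np.random.default_rng(0)
X = np.column_stack([np.ones(50), rng.normal(size=50), rng.normal(size=50)])
beta_star = umpbt_last_coefficient(X, sigma=1.0, gamma=10.0, Sigma=np.eye(2))
print(f"UMPBT(10) alternative for beta_p: {beta_star:.4f}")
```

When xp is orthogonal to the other columns, the quadratic form reduces to ‖xp‖² and the result matches the known-variance normal-mean formula with n replaced by ‖xp‖².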

Because this expression for the UMPBT assumes that σ2 is known, it is not of great practical significance by itself. However, this result may guide the specification of alternative models in, for example, model selection algorithms in which the priors on regression coefficients are specified conditionally on the value of σ2. For example, the mode of the nonlocal priors described in Johnson and Rossell (2012) might be set to the UMPBT values after determining an appropriate value of γ based on both the sample size n and number of potential covariates p.

5. Approximations to UMPBTs using data-dependent alternatives

In some situations, most notably in linear models with unknown variances, data-dependent alternative hypotheses can be defined to obtain tests that are approximately uniformly most powerful in maximizing the probability that a Bayes factor exceeds a threshold. This strategy is only attractive when the statistics used to define the alternative hypothesis are ancillary to the parameter of interest.

5.1. Test of normal mean, σ2 unknown

Suppose that xi, i = 1, …, n, are i.i.d. N(μ, σ2), that σ2 is unknown and that the null hypothesis is H0: μ = μ0. For convenience, assume further that the prior distribution on σ2 is an inverse gamma distribution with parameters α and λ under both the null and alternative hypotheses.

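To obtain an approximate UMPBT(γ), first marginalize over σ2 in both models. Noting that (1 + a/t)^t → e^a as t → ∞, it follows that the Bayes factor in favor of the alternative hypothesis satisfies

$$\mathrm{BF}_{10} = \left[\frac{2\lambda + \sum_{i=1}^{n}(x_i-\mu_0)^2}{2\lambda + \sum_{i=1}^{n}(x_i-\mu_1)^2}\right]^{n/2+\alpha} \tag{35}$$

$$= \left[\frac{1 + n(\bar{x}-\mu_0)^2/[(n+2\alpha)s^2]}{1 + n(\bar{x}-\mu_1)^2/[(n+2\alpha)s^2]}\right]^{n/2+\alpha} \tag{36}$$

$$\approx \exp\left\{\frac{n}{s^2}\left[\bar{x}(\mu_1-\mu_0) + \frac{\mu_0^2-\mu_1^2}{2}\right]\right\}, \tag{37}$$

where

$$s^2 = \frac{2\lambda + \sum_{i=1}^{n}(x_i-\bar{x})^2}{n + 2\alpha}. \tag{38}$$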

The expression for the Bayes factor in (37) reduces to (19) if σ2 is replaced by s2. This implies that an approximate, data-dependent UMPBT alternative hypothesis can be specified by taking

$$\mu_1 = \mu_0 \pm s\sqrt{\frac{2\log\gamma}{n}}, \tag{39}$$

depending on whether μ1 > μ0 or μ1 < μ0.

Figure 5 depicts the probability that the Bayes factor exceeds γ = 10 when testing a null hypothesis that μ = 0 based on n = 30 independent normal observations with unit variance (σ2 = 1), using (39) to set the value of μ1 under the alternative hypothesis. For comparison, the probability that the Bayes factor exceeds 10 when the alternative is defined by taking σ2 = 1 and μ1 to be the data-generating parameter is depicted by the dashed curve in the plot. Interestingly, the data-dependent, approximate UMPBT(10) provides a higher probability of producing a Bayes factor that exceeds 10 than do alternatives fixed at the data-generating parameters.

FIG. 5.

Probability that Bayes factor based on data-dependent, approximate UMPBT alternative exceeds 10 when μ0 = 0 and n = 30 (solid curve). The dashed curve displays this probability when the Bayes factor is calculated under the alternative hypothesis that μ1 equals the data-generating parameter (displayed on the horizontal axis) and σ2 = 1 (the true value).
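The data-dependent alternative can be computed directly from the sample. The sketch below assumes the inverse gamma(α, λ) prior of this section and takes s² = [2λ + Σ(xi − x̄)²]/(n + 2α), the scaling that arises when the exact Bayes factor [(2λ + Σ(xi − μ0)²)/(2λ + Σ(xi − μ1)²)]^(n/2+α) is written as a ratio of (1 + a/t)^t terms with t = n/2 + α. It then compares the exact and approximate Bayes factors. The hyperparameters α = λ = 1 and the simulated data are arbitrary illustrative choices, and the helper names are hypothetical.

```python
import math
import random

def _s2(x, alpha, lam):
    """Plug-in variance s^2 = (2*lam + sum((x_i - xbar)^2)) / (n + 2*alpha) (assumed form)."""
    n = len(x)
    xbar = sum(x) / n
    return (2 * lam + sum((xi - xbar) ** 2 for xi in x)) / (n + 2 * alpha)

def data_dependent_umpbt(x, mu0, gamma, alpha, lam, upper=True):
    """Approximate UMPBT(gamma) value of mu1: the known-variance formula with s^2 for sigma^2."""
    delta = math.sqrt(2 * _s2(x, alpha, lam) * math.log(gamma) / len(x))
    return mu0 + delta if upper else mu0 - delta

def exact_bf10(x, mu0, mu1, alpha, lam):
    """Exact BF10 for H1: mu = mu1 vs H0: mu = mu0 after integrating out sigma^2."""
    ss0 = sum((xi - mu0) ** 2 for xi in x)
    ss1 = sum((xi - mu1) ** 2 for xi in x)
    return ((2 * lam + ss0) / (2 * lam + ss1)) ** (len(x) / 2 + alpha)

def approx_bf10(x, mu0, mu1, alpha, lam):
    """Known-variance Bayes factor with s^2 in place of sigma^2."""
    n, xbar = len(x), sum(x) / len(x)
    return math.exp(n / _s2(x, alpha, lam) * (xbar * (mu1 - mu0) + (mu0 ** 2 - mu1 ** 2) / 2))

random.seed(1)
x = [random.gauss(0.5, 1.0) for _ in range(30)]   # simulated data, illustration only
mu1 = data_dependent_umpbt(x, 0.0, 10.0, alpha=1.0, lam=1.0)
print(f"mu1 = {mu1:.3f}, exact BF10 = {exact_bf10(x, 0.0, mu1, 1.0, 1.0):.2f}, "
      f"approximate BF10 = {approx_bf10(x, 0.0, mu1, 1.0, 1.0):.2f}")
```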

5.2. Test of linear regression coefficient, σ2 unknown

As a final example, suppose that the sampling model of Section 4.1 holds, but assume now that the observational variance σ2 is unknown and, under both hypotheses, is assigned an inverse gamma prior distribution with parameters α and λ. Also assume that the prior distribution for the first p − 1 components of β, given σ2, is a multivariate normal distribution with mean 0 and covariance matrix σ2Σ. As before, assume that H0: βp = 0. Our goal is to determine a value βp* so that H1: βp = βp* is the UMPBT(γ) under the constraint that βp > 0.

Define y1 = y − xpβp* and let y0 = y. By integrating with respect to the prior densities on σ2 and the first p − 1 components of β, the marginal density of the data under hypothesis i, i = 0, 1 can be expressed as

| (40) |

where F is defined in (31), and

| (41) |

It follows that the Bayes factor in favor of H1 can be written as

| (42) |

| (43) |

where

| (44) |

The UMPBT(γ) is defined from (43) according to

| (45) |

Minimizing the right-hand side of the last inequality with respect to βp* results in

| (46) |

This expression is consistent with the result obtained in the known variance case, but with substituted for σ2.

6. Discussion

The major contributions of this paper are the definition of UMPBTs and the explicit description of UMPBTs for regular one-parameter exponential family models. The existence of UMPBTs for exponential family models is important because these tests represent the most common hypothesis tests conducted by practitioners. The availability of UMPBTs for these models means that these tests can be used to interpret test results in terms of Bayes factors and posterior model probabilities in a wide range of scientific settings. The utility of these tests is further enhanced by the connection between UMPBTs and UMPTs that have the same rejection region. This connection makes it trivial to simultaneously report both the p-value from a test and the corresponding Bayes factor.

The simultaneous report of default Bayes factors and p-values may play a pivotal role in dispelling the perception held by many scientists that a p-value of 0.05 corresponds to “significant” evidence against the null hypothesis. The preceding sections contain examples in which this level of significance favors the alternative hypothesis by odds of only 3 or 4 to 1. Because few researchers would regard such odds as strong evidence in favor of a new theory, the use of UMPBTs and the report of Bayes factors based upon them may lead to more realistic interpretations of evidence obtained from scientific studies.

The large sample properties of UMPBTs described in Section 2.1 deserve further comment. From Lemma 2, it follows that the expected weight of evidence in favor of a true null hypothesis in an exponential family model converges to log(γ) as the sample size n tends to infinity. In other words, the evidence threshold γ represents an approximate bound on the evidence that can be collected in favor of the null hypothesis. This implies that γ must be increased with n in order to obtain a consistent sequence of tests.

Several criteria might be used for selecting a value for γ in large sample settings. One criterion can be inferred from the first statement of Lemma 2, where it is shown that the difference between the tested parameter’s value under the null and alternative hypotheses is proportional to [log(γ)/n]^{1/2}. For this difference to be a constant, as it would be in a subjective Bayesian test, log(γ) must be proportional to n, or γ = exp(cn) for some c > 0. This suggests that an appropriate value for c might be determined by calibrating the weight of evidence against an accepted threshold/sample size combination. For example, if an evidence threshold of 4 were accepted as the standard threshold for tests conducted with a sample size of 100, then c might be set to log(4)/100 = 0.0139. This value of c leads to an evidence threshold of γ = 16 for sample sizes of 200, a threshold of 64 for sample sizes of 300, and so on. From (24), the significance levels for the corresponding z-tests would be 5%, 1% and 0.2%, respectively.
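The calibration γ = exp(cn) and the matching significance levels follow from inverting the z-test matching condition, zα = [2 log(γ)]^{1/2}. A small sketch (helper name hypothetical):

```python
import math

def implied_level(gamma):
    """One-sided z-test level whose rejection region matches the UMPBT(gamma)."""
    z = math.sqrt(2 * math.log(gamma))
    return 0.5 * math.erfc(z / math.sqrt(2))   # upper-tail standard normal probability

c = math.log(4) / 100   # calibrated so that gamma = 4 when n = 100
for n in (100, 200, 300):
    gamma = math.exp(c * n)
    print(f"n = {n}: gamma = {gamma:.1f}, matching one-sided level = {implied_level(gamma):.4f}")
```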

The requirement to increase γ to achieve consistent tests in large samples also provides insight into the performance of standard frequentist and subjective Bayesian tests in large sample settings. The exponential growth rate of γ required to maintain a fixed alternative hypothesis suggests that the weight of evidence should be considered against the backdrop of sample size, even in Bayesian tests. This is particularly important in goodness-of-fit testing where small deviations from a model may be tolerable. In such settings, even moderately large Bayes factors against the null hypothesis may not be scientifically important when they are based on very large sample sizes.

From a frequentist perspective, the use of UMPBTs in large sample settings can provide insight into the deviations from null hypotheses when they are (inevitably) detected. For instance, suppose that a one-sided 1% test has been conducted to determine whether the mean of normal data is 0, and that the null hypothesis is rejected with a p-value of 0.001 based on a sample size of 10,000. From (24), the implied evidence threshold for the test is γ = 15, and the alternative hypothesis that has been implicitly tested with the UMPBT is that μ = 0.023σ. Based on the observation of x̄ = 0.031σ, the Bayes factor in favor of this alternative is 88.5. Although these are strong odds against the null, the scientific importance of this outcome may be tempered by the fact that the alternative hypothesis supported against the null represents a standardized effect size of only 2.3%.
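The numbers in this example can be reproduced by working in units of σ with μ0 = 0: γ = exp(zα²/2), μ1 = zα/√n, x̄ is the observed mean implied by the p-value, and the Bayes factor is exp{n[x̄μ1 − μ1²/2]}. A minimal sketch:

```python
import math
from statistics import NormalDist

std = NormalDist()
n, alpha, p_value = 10_000, 0.01, 0.001
z_alpha = std.inv_cdf(1 - alpha)
gamma = math.exp(z_alpha ** 2 / 2)              # implied evidence threshold, about 15
mu1 = z_alpha / math.sqrt(n)                     # UMPBT alternative in units of sigma
xbar = std.inv_cdf(1 - p_value) / math.sqrt(n)   # observed mean in units of sigma
bf = math.exp(n * (xbar * mu1 - mu1 ** 2 / 2))   # normal-mean Bayes factor, mu0 = 0, sigma = 1
print(f"gamma = {gamma:.1f}, mu1 = {mu1:.4f} sigma, xbar = {xbar:.4f} sigma, BF10 = {bf:.1f}")
```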

This article has focused on the specification of UMPBTs for one-sided alternatives. A simple extension of these tests to two-sided alternatives can be obtained by assuming that the alternative hypothesis is represented by two equally-weighted point masses located at the UMPBT values determined for one-sided tests. The Bayes factors for such tests can be written as

$$\mathrm{BF}_{10}(x) = \frac{m_l(x) + m_h(x)}{2\,m_0(x)}, \tag{47}$$

where ml and mh denote the marginal densities corresponding to the two one-sided UMPBTs and m0 denotes the marginal density under the null hypothesis. Letting m*(x) = max(ml(x), mh(x)) for the data actually observed, and assuming that the favored marginal density dominates the other, it follows that

$$\mathrm{BF}_{10}(x) \approx \frac{m^*(x)}{2\,m_0(x)}. \tag{48}$$

Thus, an approximate two-sided UMPBT(γ) can be defined by specifying an alternative hypothesis that equally concentrates its mass on the two one-sided UMPBT(2γ) tests.
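For the normal-mean case with σ known, the two-sided construction can be sketched as follows. The helper name is hypothetical. It places equal mass on μ0 ± σ[2 log(2γ)/n]^{1/2}, the one-sided UMPBT(2γ) values, and returns both the exact average of the two one-sided Bayes factors and the approximation that keeps only the larger one. The approximation is accurate when the data clearly favor one side and is off by a factor of 2 when x̄ = μ0.

```python
import math

def two_sided_bf(xbar, mu0, sigma, n, gamma):
    """Return (exact, approximate) two-sided Bayes factors for a normal mean, sigma known.

    Equal mass is placed on mu0 +/- sigma * sqrt(2 log(2 gamma) / n), the one-sided
    UMPBT(2 gamma) values; each one-sided Bayes factor is m(x) / m0(x).
    """
    delta = sigma * math.sqrt(2 * math.log(2 * gamma) / n)

    def one_sided(mu1):
        return math.exp(n / sigma ** 2 * (xbar * (mu1 - mu0) + (mu0 ** 2 - mu1 ** 2) / 2))

    bf_high, bf_low = one_sided(mu0 + delta), one_sided(mu0 - delta)
    return (bf_high + bf_low) / 2, max(bf_high, bf_low) / 2

exact, approx = two_sided_bf(3.0, 0.0, 1.0, 1, 10.0)
print(f"exact {exact:.3f}, approximation {approx:.3f}")
```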

Additional research is needed to identify classes of models and testing contexts for which UMPBTs can be defined. The UMPBTs described in this article primarily involve tests of point null hypotheses, or tests that can be reduced to a test of a point null hypothesis after marginalizing over nuisance parameters. Whether UMPBTs can be defined in more general settings remains an open question.

Acknowledgments

The author thanks an Associate Editor and two referees for numerous comments that improved this article. Article content is solely the responsibility of the author and does not necessarily represent the official views of the National Cancer Institute or the National Institutes of Health.

Footnotes

MSC2010 subject classifications. 62A01, 62F03, 62F05, 62F15.

REFERENCES

- BERGER J. The case for objective Bayesian analysis. Bayesian Anal. 2006;1:385–402. MR2221271.

- BERGER JO, PERICCHI LR. The intrinsic Bayes factor for model selection and prediction. J. Amer. Statist. Assoc. 1996;91:109–122. MR1394065.

- BERGER JO, SELLKE T. Testing a point null hypothesis: Irreconcilability of P values and evidence. J. Amer. Statist. Assoc. 1987;82:112–122.

- BERGER JO, WOLPERT RL. The Likelihood Principle. Institute of Mathematical Statistics Lecture Notes—Monograph Series 6. IMS; Hayward, CA: 1984. MR0773665.

- EDWARDS W, LINDMAN H, SAVAGE L. Bayesian statistical inference for psychological research. Psychological Review. 1963;70:193–242.

- HOWSON C, URBACH P. Scientific Reasoning: The Bayesian Approach. 3rd ed. Open Court; Chicago, IL: 2005.

- JEFFREYS H. Theory of Probability. Cambridge Univ. Press; Cambridge: 1939.

- JOHNSON VE. Bayes factors based on test statistics. J. R. Stat. Soc. Ser. B Stat. Methodol. 2005;67:689–701. MR2210687.

- JOHNSON VE. Properties of Bayes factors based on test statistics. Scand. J. Stat. 2008;35:354–368. MR2418746.

- JOHNSON VE, ROSSELL D. On the use of non-local prior densities in Bayesian hypothesis tests. J. R. Stat. Soc. Ser. B Stat. Methodol. 2010;72:143–170. MR2830762.

- JOHNSON VE, ROSSELL D. Bayesian model selection in high-dimensional settings. J. Amer. Statist. Assoc. 2012;107:649–660. doi: 10.1080/01621459.2012.682536. MR2980074.

- LEHMANN EL, ROMANO JP. Testing Statistical Hypotheses. 3rd ed. Springer; New York: 2005. MR2135927.

- LINDLEY D. A statistical paradox. Biometrika. 1957;44:187–192.

- MAYO DG, SPANOS A. Severe testing as a basic concept in a Neyman–Pearson philosophy of induction. British J. Philos. Sci. 2006;57:323–357. MR2249183.

- NEYMAN J, PEARSON E. On the use and interpretation of certain test criteria for purposes of statistical inference. Biometrika. 1928;20A:175–240.

- NEYMAN J, PEARSON E. On the problem of the most efficient tests of statistical hypotheses. Philos. Trans. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 1933;231:289–337.

- O’HAGAN A. Fractional Bayes factors for model comparison. J. R. Stat. Soc. Ser. B Stat. Methodol. 1995;57:99–118.

- PITMAN E. Lecture Notes on Nonparametric Statistical Inference. Columbia Univ.; New York: 1949.

- POPPER KR. The Logic of Scientific Discovery. Hutchinson; London: 1959. MR0107593.

- PROSPER. Personal communication to news@bayesian.org. 2012.

- ROBERT CP, CHOPIN N, ROUSSEAU J. Harold Jeffreys’s theory of probability revisited. Statist. Sci. 2009;24:141–172. MR2655841.

- ROUSSEAU J. Approximating interval hypothesis: p-values and Bayes factors. In: Bernardo J, Bayarri M, Berger J, Dawid A, Heckerman D, Smith A, West M, editors. Proceedings of the 2006 Valencia Conference. Oxford Univ. Press; Oxford: 2007. pp. 1–27.