Abstract

Extant research has examined the process of decision making under uncertainty, specifically in situations of ambiguity. However, much of this work has been conducted in the context of semantic and low-level visual processing. An open question is whether ambiguity in social signals (e.g., emotional facial expressions) is processed similarly or whether a unique set of processors comes on-line to resolve ambiguity in a social context. Our work has examined ambiguity using surprised facial expressions, as they have predicted both positive and negative outcomes in the past. Specifically, whereas some people tended to interpret surprise as negatively valenced, others tended toward a more positive interpretation. Here, we examined neural responses to social ambiguity using faces (surprise) and nonface emotional scenes (International Affective Picture System). Moreover, we examined whether these effects are specific to ambiguity resolution (i.e., judgments about the ambiguity) or whether similar effects would be demonstrated for incidental judgments (e.g., nonvalence judgments about ambiguously valenced stimuli). We found that a distinct task control (i.e., cingulo-opercular) network was more active when resolving ambiguity. We also found that activity in the ventral amygdala was greater to faces and scenes that were rated explicitly along the dimension of valence, consistent with findings that the ventral amygdala tracks valence. Taken together, there is a complex neural architecture that supports decision making in the presence of ambiguity: (a) a core set of cortical structures engaged for explicit ambiguity processing across stimulus boundaries and (b) other dedicated circuits for biologically relevant learning situations involving faces.

INTRODUCTION

Uncertainty is a prominent feature of our decision process (Kahneman, Slovic, & Tversky, 1982), as it is ubiquitous in realistic settings and poses a major obstacle to effective decision making (Brunsson, 1985; Corbin, 1980). Although such decision-making processes have been studied for several decades (Tversky & Kahneman, 1974), we have only recently begun to understand the neural architecture that allows for performing such a complex task. A clear understanding of these cognitive functions also requires a clear definition of uncertainty. Whereas some describe this concept as a psychological state in which an individual lacks sufficient knowledge about the outcome(s) of a given choice (see Platt & Huettel, 2008, for a review), a more specific kind of uncertainty is derived from ambiguity. In large part, ambiguity has been studied in the linguistic domain, as ambiguity resolution is a central problem in language comprehension (Rodd, Davis, & Johnsrude, 2005; see MacDonald, Pearlmutter, & Seidenberg, 1994, for a review). More recently, work in neuroeconomics has compared the effects of risk (levels of probability) and ambiguity (uncertain probabilities) in the context of choice (Levy, Snell, Nelson, Rustichini, & Glimcher, 2010; Hsu, Bhatt, Adolphs, Tranel, & Camerer, 2005).

Although this work has examined decision-making processes in the presence of ambiguity, it remains unclear how this may differ from the process of resolving ambiguity (i.e., making a discrete decision about an ambiguous stimulus, specifically along the dimension of the ambiguity). In other words, an incidental judgment in the presence of ambiguity might require participants to judge the gender of faces that have been morphed along the dimension of race, whereas an explicit resolution judgment would require participants to judge the races of those same faces. Previous studies have linked a set of cortical regions collectively referred to as the cingulo-opercular network (dorsal ACC [dACC]/medial superior frontal cortex and the bilateral frontal operculum [FO]/anterior insula [AI]; see Dosenbach et al., 2006) to decision making in the presence of semantic ambiguity (i.e., the selection between competing alternatives; Thompson-Schill, D’Esposito, Aguirre, & Farah, 1997) and to the detection of ambiguity in visual motion (Sterzer, Russ, Preibisch, & Kleinschmidt, 2002). With respect to face processing, one study found that these regions were more active only when participants were asked to resolve the ambiguity (i.e., when making race judgments about faces that are racially ambiguous [morphed Asian and white faces] and gender judgments about faces that are gender-ambiguous [morphed male and female faces; Demos, Wig, Moran, & Kelley, 2004]). One goal of the current work was to determine which aspects of the task demands recruit this network, that is, whether it is recruited by the mere presence of ambiguity or only when making decisions that resolve the ambiguity.

To date, much of the work examining ambiguity has been conducted in the context of semantic and low-level visual processing. An open question is whether ambiguity in social signals (e.g., emotional facial expressions) is processed in a similar manner or whether a unique set of processors comes on-line to resolve ambiguity in an emotional context. For example, surprised facial expressions are ambiguous in that they have predicted both positive and negative outcomes in the past. In fact, this ambiguity can be exploited to demonstrate that people show individual differences in their propensity to interpret surprised faces as either positive or negative. In the absence of contextual information that can be used to disambiguate the valence of this expression, some people interpret surprised faces negatively, whereas others interpret them positively (Neta & Whalen, 2010; Neta, Norris, & Whalen, 2009; Kim, Somerville, Johnstone, Alexander, & Whalen, 2003). The only known reports of neural responses to the ambiguity of surprised faces have shown that the amygdala is modulated by ambiguity but only in the absence of a task that requires ambiguity resolution per se (Kim et al., 2003, 2004). Accordingly, the main goal of this study was to examine ambiguity as it relates to social signals, both in faces and in other nonface emotional stimuli (i.e., International Affective Picture System [IAPS] scenes), and to determine how task demands might modulate responses to ambiguity (explicit valence ratings of ambiguously valenced stimuli, as compared with nonvalence evaluations).

To this end, we examined behavioral and neural responses to ambiguous stimuli across two stimulus and task categories. Specifically, we presented face stimuli (surprised, angry, and happy expressions) as well as scenes (ambiguous, clearly negative, and clearly positive) and asked participants to make either a valence or non-valence judgment about each one. In both stimulus categories, the clearly valenced stimuli were included purely to serve as anchors for behavioral valence ratings of the ambiguous stimuli. We predicted that there might be a set of cortical regions that come on-line when effortful processing of ambiguity is required (i.e., explicit valence judgments of ambiguously valenced stimuli), whereas the amygdala may play a dual role, in accordance with ventral and dorsal subregions tracking valence and arousal, respectively, that may be critically dependent on the task demands.

METHODS

Participants

Thirty-five healthy participants (right-handed, without neurological disease, and with normal/corrected vision; 21 women) volunteered. None were aware of the purpose of the experiment, and they were all compensated for their participation through monetary payment or course credit. Written informed consent was obtained from each participant before the session, and all procedures were approved by the Dartmouth College Committee for the Protection of Human Subjects. Three participants were removed because of technical complications with the stimulus-presenting computer, and an additional participant was removed because of nonnormative ratings (e.g., happy expressions were rated as negative on greater than 40% of trials). As a result, the final sample contained 31 participants (19 women). All included participants tested within normal limits for depression (Beck Depression Inventory [BDI]; Beck, Ward, & Mendelson, 1961; M = 3.74, SE = .66) and anxiety (Spielberger, Gorsuch, & Lushene, 1988; State-Trait Anxiety Inventory: STAIs = 31.06 ± 1.21, STAIt = 34.32 ± 1.43). One additional participant was removed from the fMRI data analysis because of scanner-related artifact, so the final sample for those analyses contained 30 participants (18 women).

Stimuli

For the face task, we used 34 face identities (17 men) posing angry, happy, and surprised expressions. We selected images of 14 identities (7 women, 7 men) from the NimStim standardized facial expression stimulus set (Tottenham et al., 2009) and 20 identities (10 women, 10 men) from the averaged Karolinska Directed Emotional Faces database (Lundqvist, Flykt, & Öhman, 1998). Of the 34 individuals whose images were included in the experiment, some posed all three expressions, and some posed only one or two of the expressions, providing us with 48 discrete stimuli. The facial expressions in this stimulus set have been validated by a separate set of participants who labeled each expression; only faces correctly labeled more than 60% of the time were included.

Importantly, not all of the identities are represented in each expression condition. However, the focus of this study was to assess the ambiguous conditions specifically (faces and scenes), and the clearly valenced conditions (e.g., happy and angry expressions) were included only to serve as anchors that would ground participants’ responses to the ambiguous (e.g., surprised) stimuli. As such, we used twice as many face identities in the surprise condition as in each of the angry and happy face conditions, so the conditions were never intended to be matched for identity. Rather, we selected identities within each expression condition that were labeled the most accurately in terms of the normative data provided with the stimulus set, so as to ensure that our clearly valenced faces were the ideal anchors (i.e., the most clearly valenced stimuli we could present), and the same goal was applied for the surprised faces.

For the scenes task, we used images from IAPS (Lang, Bradley, & Cuthbert, 2008) that were previously rated as either positive, negative, or ambiguous in valence. Ambiguity was based on discordant valence ratings across participants (some rated images as positive and others as negative; i.e., high standard deviation in valence ratings; Table 1). The mean (SEM) valence ratings on the normative 9-point scale (1 = very unpleasant, 9 = very pleasant) were 2.39 (0.08) for negative pictures, 7.93 (0.07) for positive pictures, and 5.19 (0.26) for ambiguous pictures (F(2, 10) = 1469.44, p < .001), such that positive pictures were rated as more positive than ambiguous and negative pictures, and negative pictures were rated as more negative than ambiguous pictures (all ps < .001). Importantly, arousal was equated across these three conditions: the mean (SEM) arousal ratings on the normative 9-point scale (1 = low arousal, 9 = high arousal) were 5.36 (0.20) for negative pictures, 4.96 (0.18) for positive pictures, and 4.99 (0.18) for ambiguous pictures (F(2, 10) = 1.56, p = .26; Table 1).

Table 1.

Results of Stimulus-matching Procedure for IAPS Scenes

| | Negative Pictures | Ambiguous Pictures | Positive Pictures |
|---|---|---|---|
| Mean (SEM) valence | 2.39 (0.08) | 5.19 (0.26) | 7.93 (0.07) |
| Mean (SEM) arousal | 5.36 (0.20) | 4.99 (0.18) | 4.96 (0.18) |

Valence scores are based on the normative 9-point scale (1 = very unpleasant, 9 = very pleasant). There was a significant difference between each condition of pictures (F(2, 10) = 1469.44, p < .001, η2 = .95), such that positive pictures were rated as more positive than ambiguous and negative pictures, and negative pictures were rated as more negative than ambiguous pictures (all ps < .001).

Arousal was also based on the normative 9-point scale (1 = low arousal, 9 = high arousal). Arousal was equated across these three conditions (F(2, 10) = 1.56, p = .26, η2 = .10).

In a behavioral pilot, we recruited 20 new participants (11 women). None were aware of the purpose of the experiment, and they were all compensated for their participation through monetary payment or course credit. Written informed consent was obtained from each participant before the session, and all procedures were approved by the Dartmouth College Committee for the Protection of Human Subjects. Each participant viewed 114 scenes and rated each image as positive or negative (consistent with the methods in Experiment 1). Of the 114 scenes, 24 were defined as clearly negative, 24 as clearly positive, and 66 as ambiguous, based on the valence and arousal ratings provided with the stimuli (Table 1). Each image was presented for 1500 msec on a black background, with an intertrial interval of 500 msec, during which a white fixation cross appeared on the screen. As with the face stimuli, 48 images were used in the main experiment, and each participant viewed 50% ambiguous images and 50% clear valence images (25% clear positive and 25% clear negative). Thus, from the data collected in the pilot, we selected 12 clearly negative and 12 clearly positive images and 24 with an ambiguous valence (i.e., chosen because they had the highest standard deviation in valence ratings across participants). Indeed, the standard deviation in ratings of ambiguous scenes was significantly greater than the standard deviation in ratings of clearly valenced scenes (t(23) = 19.2, p < .001).
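The selection rule described above (keep the scenes whose pilot ratings vary most across participants) can be sketched as follows. This is a minimal illustration, not the authors' procedure: the function name `most_ambiguous`, the scene identifiers, and the toy ratings are hypothetical, and coding the binary ratings as 1 = positive and 0 = negative is an assumption.

```python
from statistics import pstdev

def most_ambiguous(ratings, n_select):
    """Return the n_select scenes with the highest SD in ratings.

    ratings: dict mapping a scene id to a list of binary valence
    ratings (1 = positive, 0 = negative), one per pilot participant.
    This coding is an assumption made for illustration.
    """
    # For binary ratings, the SD is largest when participants split evenly.
    ranked = sorted(ratings, key=lambda scene: pstdev(ratings[scene]), reverse=True)
    return ranked[:n_select]

# Hypothetical toy data: four scenes rated by four pilot participants.
toy_ratings = {
    "scene_a": [1, 0, 1, 0],  # evenly split, most ambiguous
    "scene_b": [1, 1, 1, 1],  # unanimously positive
    "scene_c": [0, 0, 0, 1],  # mostly negative
    "scene_d": [0, 0, 0, 0],  # unanimously negative
}
selected = most_ambiguous(toy_ratings, 2)
```

With the pilot described above, the same call would take the ratings for the 66 candidate scenes and `n_select=24`.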

Finally, to directly compare the conditions across stimulus sets, we ran a Stimulus (IAPS, faces) × Valence (ambiguous, negative, positive) repeated-measures ANOVA on the normative ratings associated with each stimulus set. For valence ratings, we found a significant main effect of Valence (as expected) but no main effect of Stimulus (p > .2). For arousal ratings, however, there was a main effect of Stimulus (F(1, 11) = 5.66, p = .04), where arousal ratings were significantly higher for IAPS than faces, which is somewhat expected given the nature of the stimuli.

Experiment Design and Parameters

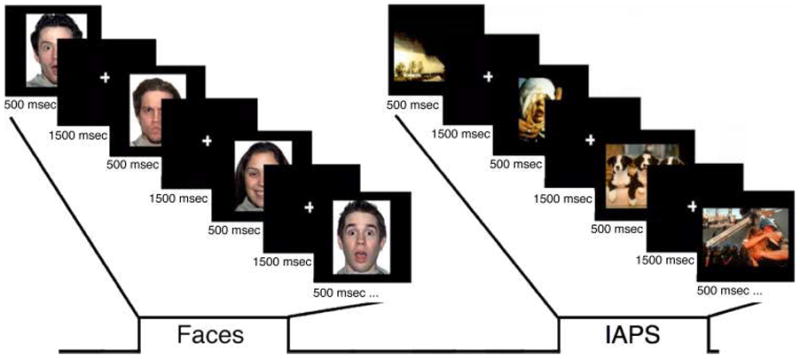

The fMRI paradigm consisted of eight functional runs of four experimental blocks each: two blocks each of facial expressions and IAPS stimuli interleaved. Each block began with a brief (2 sec) task instruction statement: “valence” or “gender” (nonvalence) for the face blocks and “valence” or “social/nonsocial” (i.e., “Does the picture contain a person?”; nonvalence) for the IAPS blocks. Each block consisted of 24 images, 12 from the ambiguous conditions and six from each clear valence condition (positive, negative). All images were presented sequentially for 500 msec, with an ISI of 1500 msec, in a randomized fashion for all conditions. An additional 24 trials were presented randomly within each block to provide jitter, during which a white fixation cross appeared on the screen, and 24 fixation trials were presented between each block (Figure 1). The order of the blocks was counterbalanced across participants. During imaging, participants responded by pressing one of two buttons with their dominant hand. Moreover, the ratings of surprised faces on the valence task allowed for the determination of positivity–negativity bias and RT.

Figure 1.

A depiction of the experimental design. The paradigm consisted of eight runs of four experimental blocks each: two blocks each of facial expressions and IAPS stimuli interleaved. Each block began with a brief (2000 msec) instruction statement: “valence” or “gender” (nonvalence) for the face blocks and “valence” or “social/nonsocial” (nonvalence; i.e., “Does the picture contain a person?”) for the IAPS blocks. Each block consisted of 24 images, 12 from the ambiguous conditions (e.g., surprised faces) and six from each clear valence condition (positive, negative; e.g., happy and angry faces, respectively). All images were presented sequentially for 500 msec, with an ISI of 1500 msec, in a randomized fashion for all conditions. An additional 24 trials were presented randomly within each block to provide jitter, during which a white fixation cross appeared on the screen, and 24 fixation trials were presented between each block. The order of the blocks was counterbalanced across participants.
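The composition of a single block can be sketched in a few lines. This is an illustrative sketch only: the names `build_block` and `block_duration_ms` are hypothetical, and the assumption that each fixation (jitter) trial lasts as long as an image trial (500 msec + 1500 msec) is not stated in the text.

```python
import random

STIM_MS = 500          # image duration, from the text
ISI_MS = 1500          # interstimulus interval, from the text
INSTRUCTION_MS = 2000  # task instruction at block start, from the text

def build_block(rng):
    """Return a randomized list of 48 trial labels for one block."""
    trials = (["ambiguous"] * 12 + ["positive"] * 6 + ["negative"] * 6
              + ["fixation"] * 24)  # 24 image trials plus 24 jitter trials
    rng.shuffle(trials)
    return trials

def block_duration_ms(trials, fixation_ms=STIM_MS + ISI_MS):
    """Total block duration; fixation_ms is an assumed value."""
    total = INSTRUCTION_MS
    for label in trials:
        total += fixation_ms if label == "fixation" else STIM_MS + ISI_MS
    return total

block = build_block(random.Random(0))
duration = block_duration_ms(block)
```

Under these assumptions, a block lasts 98 sec (2 sec of instruction plus 48 trials of 2 sec each), excluding the fixation trials presented between blocks.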

Following each scanning session, participants also completed the following behavioral scales: BDI (Beck et al., 1961) and the STAIs/STAIt (Spielberger et al., 1988).

Imaging Parameters

Images were acquired on a Philips Achieva 3.0-T scanner (Philips Medical Systems, Bothell, WA), equipped with a SENSE birdcage head coil. Anatomical T1-weighted images were collected using a high-resolution 3-D magnetization-prepared rapid gradient-echo sequence, with 160 contiguous 1-mm thick sagittal slices (echo time = 4.6 msec, repetition time = 9.8 msec, field of view = 240 mm, flip angle = 8°, voxel size = 1 × 0.94 × 0.94 mm). Functional images were acquired using an echo-planar T2*-weighted imaging sequence. Each volume consisted of 36 interleaved 3-mm thick slices, AC–PC aligned, with 0.5-mm interslice gap (echo time = 35 msec, repetition time = 2000 msec, field of view = 240 mm, flip angle = 90°, voxel size = 3 × 3 × 3.5 mm). Despite our focus on the amygdala, we used slices aligned with the AC–PC plane so that we could effectively interrogate whole-brain effects of processing ambiguity across stimulus and task categories.

fMRI Analysis

Processing of fMRI data took place in SPM2 (Wellcome Department of Cognitive Neurology, London, United Kingdom). First, several preprocessing steps were employed to increase the signal-to-noise ratio before formation of statistical images. Slice time correction was employed to correct for acquisition delays within functional volumes. Next, realignment corrected for participant head motion within and across runs using rigid body transformation. Functional data then underwent unwarping, which corrects for image distortions caused by movement-by-susceptibility interactions (Andersson, Hutton, Ashburner, Turner, & Friston, 2001). Functional data were normalized to the EPI.mnc template, which warped data into Montreal Neurological Institute space. Normalized functional data were then spatially smoothed (6-mm FWHM) using a Gaussian kernel.

Next, the general linear model was performed to examine separate task contributions to the fMRI signal. Surprised, angry, and happy expressions as well as ambiguous, negative, and positive IAPS were modeled as separate task regressors, which were convolved with a canonical hemodynamic response function. These task regressors were included in a general linear model along with covariates of noninterest (session mean, run regressor, linear trend, and six movement parameters derived from realignment corrections) to compute parameter estimates (images containing weighted parameter estimates) for each comparison at each voxel and for each participant.
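To illustrate what convolving a task regressor with a canonical hemodynamic response function involves, the sketch below builds a double-gamma response sampled at the 2-sec repetition time and convolves it with a stimulus onset vector. It is not the SPM2 implementation: the shape parameters (peak near 6 sec, undershoot near 16 sec, ratio 1/6) are commonly cited defaults assumed here, and the function names are hypothetical.

```python
import math

TR = 2.0  # repetition time in seconds, from Imaging Parameters

def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

def double_gamma_hrf(tr=TR, duration=32.0):
    """Assumed double-gamma HRF sampled every tr seconds."""
    times = [i * tr for i in range(int(duration / tr) + 1)]
    return [gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6 for t in times]

def convolve(onsets, hrf):
    """Convolve a per-volume onset vector with the HRF, trimmed to scan length."""
    out = [0.0] * (len(onsets) + len(hrf) - 1)
    for i, x in enumerate(onsets):
        if x:
            for j, h in enumerate(hrf):
                out[i + j] += x * h
    return out[:len(onsets)]

hrf = double_gamma_hrf()
onsets = [0] * 20
onsets[2] = 1  # hypothetical single event at the third volume
regressor = convolve(onsets, hrf)
```

Each condition (e.g., surprised faces) would get its own onset vector, and the resulting regressors would enter the model alongside the covariates of noninterest.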

Contrast maps were then entered into a random effects model, which accounts for intersubject variability and allows population-based inferences to be drawn. Parameter estimates for the ambiguous stimuli (i.e., surprised faces and ambiguously valenced IAPS) were then submitted to a 2 × 2 ANOVA examining the effects of material type (faces vs. IAPS) and task instruction (explicit valence judgments vs. incidental nonvalence judgments). Brain regions showing significant main effects in these analyses were extracted as ROIs (threshold: p < .001, as determined by Monte Carlo simulations implemented in AlphaSim within AFNI software [Cox, 1996] correcting for the whole brain). Given the current study’s focus on the amygdala, we imposed a significance threshold of p < .05, corrected for multiple comparisons over the amygdala volume (~4500 mm3, defined using the Automated Anatomical Labeling atlas; Maldjian, Laurienti, Kraft, & Burdette, 2003) and also determined by Monte Carlo simulations, a strategy we have implemented in previous studies (Davis, Johnstone, Mazzulla, Oler, & Whalen, 2010; Kim et al., 2003, 2010; Johnstone, Somerville, Alexander, et al., 2005).

ROI

ROI analyses were conducted using the MarsBaR tool within SPM2 (Wellcome Department of Imaging Neuroscience, London, United Kingdom). Spherical regions (6-mm radius) were defined around each of these peak activations, and all significant voxels (p < .001) were included. Signal intensities for each ROI were then calculated separately for each task comparison (i.e., ambiguously valenced faces and IAPS, for the valence and nonvalence tasks separately) and examined statistically using repeated-measures ANOVA. Then, the BOLD signal (beta weights) from the significantly activated voxels was extracted from each ROI for each participant and submitted for off-line testing.
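In a fully within-participant 2 × 2 design, each main effect and the interaction can be tested as a one-sample t test on a per-participant contrast score, with F(1, n − 1) = t². The sketch below uses that equivalence on extracted beta weights; it is an illustration rather than the authors' analysis code, and the function name, cell ordering, and toy values are hypothetical.

```python
import math
from statistics import mean, stdev

# Assumed cell order per participant:
# [faces/valence, faces/nonvalence, IAPS/valence, IAPS/nonvalence]
TASK = [1, -1, 1, -1]         # valence vs. nonvalence
STIMULUS = [1, 1, -1, -1]     # faces vs. IAPS
INTERACTION = [1, -1, -1, 1]  # Stimulus x Task

def contrast_f(betas, weights):
    """Return (F, (df1, df2)) for one contrast in a 2 x 2 within design."""
    scores = [sum(w * b for w, b in zip(weights, row)) for row in betas]
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t ** 2, (1, n - 1)

# Hypothetical beta weights for four participants in one ROI.
toy_betas = [
    [2.0, 1.0, 2.5, 1.0],
    [1.5, 1.0, 2.0, 1.5],
    [3.0, 1.5, 2.0, 1.0],
    [2.0, 2.0, 3.0, 1.0],
]
f_task, df_task = contrast_f(toy_betas, TASK)
```

With the 30 participants in the fMRI sample, the same computation yields the F(1, 29) degrees of freedom reported for the ROI analyses.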

RESULTS

Behavioral Results

Valence Ratings

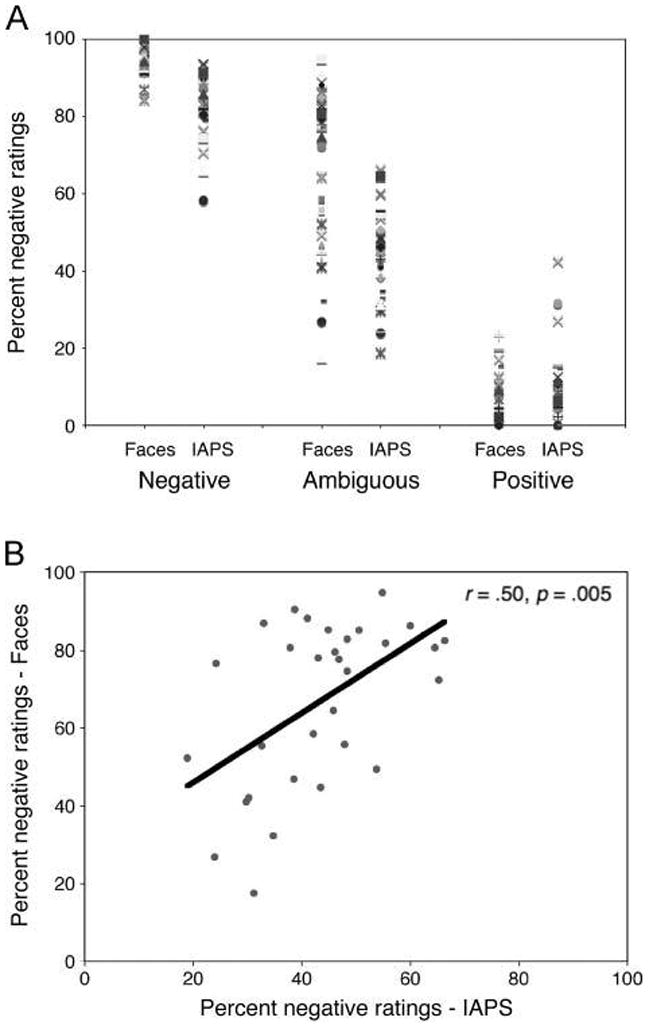

Angry and happy expressions were rated as consistently negative (95.2% of trials) and positive (93.1% of trials), respectively. Figure 2A shows that these same participants differed in their tendency to interpret surprised faces negatively versus positively. As a manipulation check of the valence conditions (ambiguously, as compared with clearly valenced stimuli), a Stimulus (IAPS, faces) × Valence (ambiguous, negative, positive) repeated-measures ANOVA revealed a significant main effect of Valence (F(2, 29) = 1576.1, p < .001), and pairwise comparisons (LSD corrected) revealed that negative stimuli were rated as more negative than ambiguous stimuli, which were rated as more negative than positive stimuli (ps < .001). Importantly, there was a significant positive correlation in ratings of ambiguous IAPS and faces (r = .50, p = .005; Figure 2B), such that participants who tended to interpret surprised faces as positive also tended to interpret ambiguous IAPS as positive.

Figure 2.

Individual differences in rating valence of surprised expressions and ambiguous scenes. (A) Consistent with previous work, angry faces are rated consistently negative, happy faces are consistently positive, and surprised ratings vary from negative to positive, revealing individual differences in how people interpret the valence of these expressions. (B) There was a significant positive correlation between ratings for ambiguous faces and scenes. Individuals who tended to rate surprised faces as positive also tended to rate the ambiguous IAPS scenes as positive.
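The relationship between the reported correlation and its significance can be checked with a short calculation. The sketch below computes a Pearson correlation and the corresponding t statistic on n − 2 degrees of freedom; the function names and the toy vectors are hypothetical, and only r = .50 and n = 31 come from the text.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def t_from_r(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)

# Reported values: r = .50 across the 31 participants in the behavioral sample.
t_reported = t_from_r(0.50, 31)

# Hypothetical toy data: percent negative ratings for faces and scenes.
toy_r = pearson_r([20.0, 40.0, 60.0, 80.0], [30.0, 35.0, 70.0, 65.0])
```

For r = .50 and n = 31, the statistic is approximately t(29) = 3.11, which is in line with the reported p = .005.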

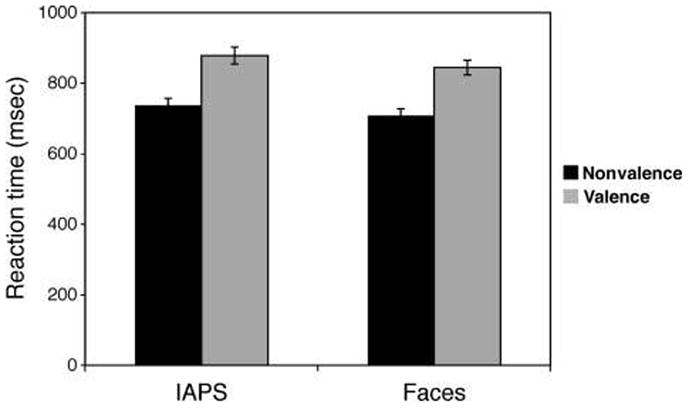

RT

A Stimulus (IAPS, faces) × Task (valence, nonvalence) repeated-measures ANOVA revealed a significant main effect of Stimulus (F(1, 30) = 7.3, p = .011), and pairwise comparisons (LSD corrected) revealed that participants took longer to rate the IAPS, as compared with the face stimuli (Figure 3). A significant main effect of Task (F(1, 30) = 295.3, p < .001) revealed that RTs were longer during the valence as compared with the nonvalence task. There was no significant Stimulus × Task interaction (p > .7).

Figure 3.

RTs (mean ± standard error) were greater when rating the valence of ambiguous stimuli, as compared with an incidental nonvalence rating, and they were also greater for the ambiguous scenes than for the faces.

Questionnaire Data

As noted in the Methods section, we administered depression and anxiety scales to document that all participants fell within a healthy psychiatric range on these measures. Results indicated that all scores for depression (BDI) and anxiety (STAI) were within normal limits.

fMRI Results

A voxelwise whole-brain 2 × 2 repeated-measures ANOVA on the ambiguous trials with the factors of Stimulus (IAPS, faces) and Task (valence, nonvalence) revealed brain regions showing a main effect of Stimulus, a main effect of Task, and an interaction between Stimulus and Task in response to ambiguity (Table 2). To explore the directionality of each effect, regions identified in the statistical F maps were examined further using ROI analyses. It should be noted that when RTs were regressed out, none of the reported effects changed.

Table 2.

Brain Regions Identified as Responding to Ambiguous Stimuli

| x | y | z | F | Region |
|---|---|---|---|---|
| Main Effect of Stimulus (Faces vs. Scenes) | | | | |
| −33 | −39 | −21 | 364.66 | Left fusiform gyrus |
| 33 | −36 | −21 | 201.71 | Right fusiform gyrus |
| 3 | 36 | 6 | 37.0 | Right ACC |
| −6 | 45 | 15 | 23.78 | Left ACC |
| −3 | −42 | 39 | 32.61 | Left dACC |
| 57 | 12 | 24 | 28.14 | Right inferior frontal gyrus |
| −6 | 39 | 54 | 38.92 | Left medial superior frontal gyrus |
| −36 | 18 | 57 | 27.70 | Left superior frontal gyrus |
| −51 | 18 | −30 | 26.70 | Left superior temporal gyrus |
| −57 | −3 | −21 | 34.26 | Left middle temporal gyrus |
| 54 | −6 | −27 | 38.48 | Right inferior temporal gyrus |
| −27 | −90 | 33 | 97.35 | Left superior occipital gyrus |
| −39 | −84 | 9 | 170.36 | Left middle occipital gyrus |
| 36 | −84 | 18 | 124.58 | Right middle occipital gyrus |
| Main Effect of Task (Valence vs. Nonvalence) | | | | |
| 0 | 18 | 48 | 69.82 | Medial superior frontal cortex |
| 6 | 30 | 27 | 35.09 | Right dACC |
| 42 | 24 | −9 | 47.56 | Right FO |
| −30 | 21 | −3 | 46.84 | Left insula |
| 45 | 6 | 36 | 34.42 | Right inferior frontal gyrus |
| −54 | 18 | 6 | 43.33 | Left inferior frontal gyrus |
| −30 | 51 | 9 | 24.26 | Left middle frontal gyrus |
| 30 | 48 | 18 | 15.07 | Right superior frontal gyrus |
| 48 | −42 | 0 | 18.51 | Right middle temporal gyrus |
| −12 | −78 | 6 | 18.42 | Left cuneus |
| 12 | −75 | 9 | 18.76 | Right cuneus |
| Stimulus × Task Interaction | | | | |
| −30 | −63 | 33 | 21.78 | Left middle occipital gyrus |
| −39 | −54 | 48 | 17.61 | Left inferior parietal lobule |
| −6 | 51 | 21 | 16.89 | Left medial frontal gyrus |

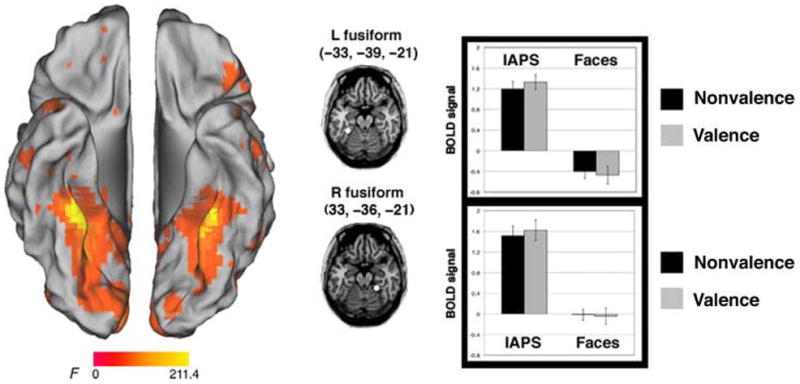

Brain Regions Preferentially Sensitive to Stimulus

The main effect of Stimulus revealed activations in inferior temporal cortex, with a peak on the bilateral medial fusiform gyrus (x, y, z: left = −33, −39, −21; right = 33, −36, −21; main effects of Stimulus, left: F(1, 29) = 191.0, p < .001; right: F(1, 29) = 126.3, p < .001). ROI analyses revealed that activity here was greater for the IAPS stimuli than the face stimuli (Figure 4). Many of the other regions that showed a main effect of Stimulus (Table 2) revealed a similar pattern (i.e., greater activity for IAPS than faces), with the exception of the right ACC (x, y, z = 3, 36, 6; main effects of stimulus: F(1, 29) = 45.8, p < .001) and the right inferior frontal gyrus (x, y, z = 57, 12, 24; main effects of Stimulus: F(1, 29) = 14.7, p = .001), which showed greater activity for the faces than the IAPS stimuli. Of these regions, only the latter one also showed a main effect of Task (F(1, 29) = 11.6, p = .002), with a preferential response to the valence, as compared with the nonvalence task. None of these regions showed a significant interaction (p > .1).

Figure 4.

Brain regions active for the main effect of stimulus. Activity in the bilateral ventral temporal cortex was greater for the IAPS, as compared with the face stimuli.

Brain Regions Preferentially Sensitive to Task

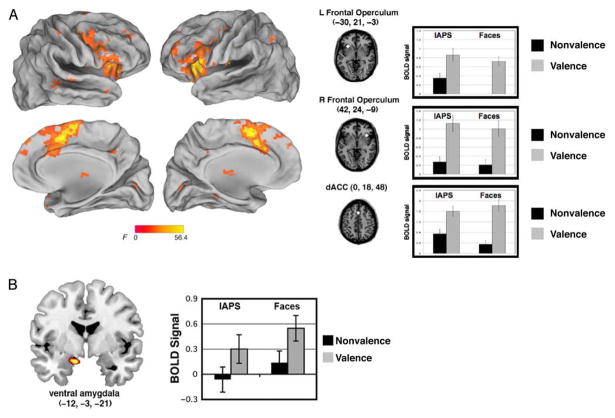

The main effect of Task revealed activity in the bilateral AI/FO (left = −31, 21, −3; right = 36, 21, −3; main effects of Task, left: F(1, 29) = 38.9, p < .001; right: F(1, 29) = 49.1, p < .001) as well as in the dACC (x, y, z = 0, 18, 48; main effect of Task: F(1, 29) = 49.8, p < .001; Figure 5A). ROI analyses in all of the regions that showed a main effect of Task (Table 2) revealed that activity here was greater for the explicit valence judgment task than for the nonvalence (gender and social) task for both categories (faces and scenes, respectively). Moreover, the left AI/FO and the left inferior frontal gyrus also showed a main effect of Stimulus (F(1, 29) = 12.5, p = .001; F(1, 29) = 15.6, p < .001; respectively), with a preferential response to the IAPS over the face stimuli. Several of these regions, including the dACC, showed a significant interaction of Stimulus and Task (F(1, 29) = 5.4, p = .03), where there was a preferential response to the IAPS, but only during the nonvalence task.

Figure 5.

Brain regions active for the main effect of task. (A) Three task control regions, comprising the cingulo-opercular network, showed greater activity during the valence task, as compared with the nonvalence task (gender judgment for face stimuli, social judgment for IAPS scenes). (B) A region of ventral amygdala showed greater activity to ambiguous stimuli during the valence task.

Finally, with a lower significance threshold (see fMRI Analysis), we found a region of ventral amygdala (x, y, z = −12, −3, −21) also showed a main effect of Task. An ROI analysis was used to examine Stimulus (IAPS, faces) × Task (valence, nonvalence) effects. We confirmed this main effect of Task (F(1, 29) = 10.6, p = .003), but no other main effects or interactions were significant (ps > .2). Like the other regions showing a main effect of Task, there was greater activity here for the valence task as compared with the nonvalence task (Figure 5B).1

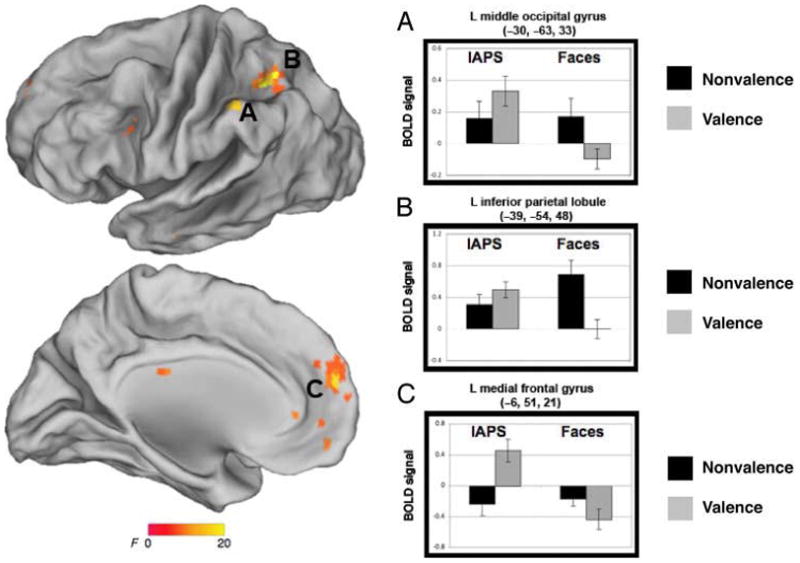

Brain Regions Exhibiting an Interaction of Stimulus and Task

Three distributed cortical regions demonstrated a crossover interaction, including the left middle occipital gyrus (x, y, z = −30, −63, 33; interaction: F(1, 29) = 16.0, p = .001), the left inferior parietal lobule (x, y, z = −39, −54, 48; interaction: F(1, 29) = 17.8, p < .001), and the left medial frontal gyrus (x, y, z = −6, 51, 21; F(1, 29) = 11.3, p = .002; Figure 6).

Figure 6.

Brain regions exhibiting an interaction of stimulus and task include (A) left medial frontal gyrus, (B) left inferior parietal lobule, and (C) left middle occipital gyrus.

DISCUSSION

The neural architecture for responding to ambiguity relies, in part, on cognitive control processing that is recruited when participants make a judgment that requires the resolution of ambiguity. We found that activity in regions comprising a task control network (i.e., cingulo-opercular network) provides a central part of this architecture, as it also has been shown to play a crucial role in processing ambiguity in other domains (i.e., linguistic, low-level perception). This domain-general processing is consistent with our behavioral results that demonstrated that individual differences in the bias to resolve ambiguity positively or negatively were correlated across the stimulus boundaries (i.e., the same participants who showed a positive bias when resolving ambiguity of facial expressions showed a similar positive bias when resolving ambiguity in scenes). Finally, we found that activity in the ventral amygdala was greater to faces and scenes that were rated explicitly along the dimension of valence, consistent with findings that the ventral amygdala tracks valence (Whalen et al., 2009; Kim et al., 2003). Here, we discuss the implications of these findings, specifically in the context of task control and specific emotion processing systems.

The Function of the Cingulo-opercular Network

A meta-analysis of 10 different tasks showed that three cortical regions, comprising the cingulo-opercular network (dACC and the bilateral AI/FO), showed three distinct types of task-control signals: (1) signals tied to the start of a task, which are likely related to the instantiation of task parameters; (2) activity sustained at a constant level across the task period, which likely reflects maintenance of those parameters; and (3) error-related activity, which is likely related to performance feedback (Dosenbach et al., 2006). Thus, this network has been referred to as a “core” task set system (Dosenbach et al., 2006, 2007), which is thought to be involved in controlling task performance through the stable maintenance of task parameters, as well as making and monitoring choices in accordance with those parameters (see Dosenbach, Fair, Cohen, Schlaggar, & Petersen, 2008, for a review).

Aside from these higher-order cognitive functions, there are widely replicated findings implicating the dACC in conflict monitoring (Botvinick, Braver, Barch, Carter, & Cohen, 2001; Miller & Cohen, 2001). Moreover, the AI shows transient responses related to electrodermal arousal (Critchley, Elliott, Mathias, & Dolan, 2000), empathy (Singer et al., 2004), the generation of subjective feelings and integration of sensory inputs (see Medford & Critchley, 2010, for a review), and other socioemotional processing (Chang, Smith, Dufwenberg, & Sanfey, 2011; Sanfey, Rilling, Aronson, Nystrom, & Cohen, 2003; Lane, Fink, Chua, & Dolan, 1997). Resting state functional connectivity (see Dosenbach et al., 2007; Fox, Corbetta, Snyder, Vincent, & Raichle, 2006; Fox et al., 2005) also shows that the AI coactivates with the dACC as part of a network that is nonspecifically involved in general goal-directed cognition (Chang, Yarkoni, Khaw, & Sanfey, 2012; Yarkoni, Poldrack, Nichols, Van Essen, & Wager, 2011; Dosenbach et al., 2006). Thus, task-related manipulations (e.g., conflict monitoring) that drive activity in one of these regions (dACC) are likely to also affect activation in the others (bilateral AI), providing further evidence that these regions comprise a network or system that is implicated in many instances requiring attentional resources or task control (see also Nee, Wager, & Jonides, 2007; Duncan & Owen, 2000, for meta-analyses of cognitive control paradigms).

As such, although the dACC and AI are each implicated in specific processes (e.g., conflict monitoring, generating subjective feelings), the primary role of these regions likely lies in the domain of general task control. In other words, many different task demands are likely to require an ability to sustain attention, monitor task parameters, and modulate arousal (Nelson et al., 2010; Dosenbach et al., 2006), but this does not indicate that the specific role of these regions is, for example, to generate subjective feelings.

A Role for the Cingulo-opercular Network in Ambiguity

We found increased activity in this network in response to surprised faces and ambiguous IAPS scenes, but only when participants made judgments that required the resolution of ambiguity. This network has been shown to respond to ambiguity in semantic (Thompson-Schill et al., 1997), visual motion (Sterzer et al., 2002), and face processing (Demos et al., 2004) paradigms. This is consistent with the notion that these regions are recruited during tasks where a stimulus–response mapping needs to be made concerning the ambiguous stimulus. In other words, ambiguity is defined here as something that can be interpreted in more than one way and, in turn, requires a selection between competing alternatives. Research in neuroeconomics has demonstrated a link between the AI and ambiguity in decision making, particularly in situations that require behavioral flexibility that is dependent on contextual analysis (Huettel, Stowe, Gordon, Warner, & Platt, 2006), even though ambiguous decisions (multiple possible outcomes with unknown probabilities) are made more quickly than risky decisions (multiple possible outcomes with known probabilities). In other words, as in our own data showing that these regions were still active during explicit valence judgments even when RT was regressed out, activity in this network seems to be unrelated to time on task (see also Neta, Schlaggar, & Petersen, under revision, but see Grinband et al., 2011).
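To make the logic of regressing out RT concrete, the following is a minimal sketch and not the analysis pipeline used in this study. It assumes hypothetical trial-wise activity estimates for a single region, a binary task indicator (1 = valence judgment, 0 = nonvalence judgment), and RTs in seconds; the function names and the toy numbers are illustrative only. The idea is that if a task effect remains after RT is partialled out of both activity and task, that effect is not explained by time on task.

```python
from statistics import mean


def residualize(y, x):
    """Residuals of y after a simple linear regression on x (with intercept)."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    return [(yi - y_bar) - slope * (xi - x_bar) for xi, yi in zip(x, y)]


def task_effect_controlling_for_rt(activity, is_valence_task, rt):
    """Task slope after RT is partialled out of both activity and task.

    By the Frisch-Waugh-Lovell theorem this equals the task coefficient
    in the multiple regression: activity ~ intercept + task + RT.
    """
    act_res = residualize(activity, rt)
    task_res = residualize(is_valence_task, rt)
    return sum(t * a for t, a in zip(task_res, act_res)) / sum(t * t for t in task_res)


# Toy data (hypothetical, not from the study): activity is built as
# 1.0 + 0.5 * task + 0.2 * RT, so the recovered task effect should be
# 0.5 up to floating-point error.
rt = [0.8, 1.0, 1.2, 0.9, 1.1, 1.3]
task = [0, 0, 0, 1, 1, 1]
activity = [1.0 + 0.5 * t + 0.2 * r for t, r in zip(task, rt)]

effect = task_effect_controlling_for_rt(activity, task, rt)
print(round(effect, 6))
```

In this toy case the raw difference between task means would also include the contribution of the slightly longer RTs in the valence task, whereas the partialled estimate isolates the task term.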

Recent work on perceptual recognition found that activity in the AI/FO region remained near baseline until the moment of recognition, suggesting a relation to the moment of the decision itself (Ploran et al., 2007). Similarly, the dACC was shown to learn from experiences by monitoring and integrating outcomes (Behrens, Woolrich, Walton, & Rushworth, 2007), playing a crucial role in the update (late) period of such decision making, not during the initial computation of uncertainty (see Singer, Critchley, & Preuschoff, 2009, for a review). These findings suggest that these regions may not be involved in the process of deciding how to resolve ambiguous stimuli, but rather, they are crucial at the point during which ambiguity is resolved per se. Taken together, these data support the notion that activity in the cingulo-opercular network is not simply related to task difficulty, and we propose that this activity is most readily observed in tasks that (1) require a decision relevant to the ambiguity (i.e., valence) and (2) require resolution rather than simple detection or perception of ambiguity. Future work can investigate how these transient responses to ambiguity relate to the transient error-related activity identified in the same regions (Neta et al., under revision).

Limitations

We note several limitations in interpreting the present findings. First, the IAPS stimuli were significantly more arousing than the face stimuli. As noted in the Methods section, this is somewhat expected given the nature of these stimulus categories, where the IAPS scenes carry much more information than individual facial expressions. Given this effect, the brain regions that were found to show a main effect of stimulus may be, at least in part, modulated by this difference in arousal. However, we focus here predominantly on the main effect of task, which is not affected by this difference in arousal.

Second, although we compared two stimulus categories, one of individual facial expressions and another that represents nonface emotional stimuli (IAPS scenes), we note that approximately half of our scenes contained (at least part of) faces and human forms. As such, the similar neural responses to ambiguity that we observed across these two stimulus sets might still require a social element, and the present result can be most cautiously summarized by saying that this network was similarly involved in processing ambiguity in directly presented faces and in other faces within more complex social scenes.

Finally, based on previous work showing that a region of the dorsal amygdala tracks surprised faces during passive viewing (Kim et al., 2003), we predicted that this region would show greater activity to stimuli with an ambiguous, as compared with a clear, valence and that this effect might be modulated by task demands. Indeed, this would fit well with nonhuman animal work implicating the amygdala in associative orienting, meaning that it functions to alert other brain areas in instances of uncertainty when there is a greater amount to learn (Gallagher, Graham, & Holland, 1990; Kapp, Frysinger, Gallagher, & Haselton, 1979). However, we did not find a region of dorsal amygdala in the omnibus ANOVA in the current study. It could be that our task manipulation did not provide the sensitivity to detect the amygdala response to ambiguity in the implicit nonvalence task (as our previous work has demonstrated such an effect only for passive viewing). Indeed, meta-analyses have demonstrated that amygdala responses to emotional stimuli can be diminished during an active task, as compared with passive viewing (Costafreda, Brammer, David, & Fu, 2008). Future work might help to disentangle these effects by directly comparing passive viewing to responses during both explicit and implicit evaluations of ambiguous stimuli.

Conclusions

The brain regions that were recruited while making a valence judgment about ambiguously valenced stimuli (i.e., the cingulo-opercular network, ventral amygdala) were active for both the ambiguous faces (surprised expressions) and scenes. Moreover, the bias with which people resolve ambiguity is consistent across these categories of stimuli. Thus, the neural and behavioral responses required when resolving ambiguity transcend stimulus boundaries and are better defined by an uncertainty in stimulus–response mapping that is created when people are required to make a selection between competing alternatives. At a weaker threshold, a targeted analysis of the amygdala based on previous data showed that the dorsal amygdala showed the highest response to surprised faces, but only during the nonvalence task. Together, these data suggest that, although some of the domain-general processes that come on-line in the “face” of ambiguity are similar across many categories of ambiguity (faces and scenes in the present work; semantic, visual motion, and economic decision-making ambiguity in previous work), there may be other dedicated circuits for some social and emotional ambiguity and, specifically, for biologically relevant learning situations involving faces.

Acknowledgments

We thank J. V. Haxby for advice on experimental design and comments on the manuscript as well as S. E. Petersen and D. D. Wagner for fruitful discussions about results. We also thank G. Wolford for statistical advising and comments on the manuscript, C. J. Norris for advice on experimental design, and A. L. Palmer and S. V. Fogelson for discussion about methods. Finally, we thank R. A. Loucks for assistance in recruitment of participants and help with data collection and survey data entry. This work was supported by NIMH 080716.

Footnotes

Given our previous demonstrations of amygdala responses to ambiguous facial expressions during passive viewing (Davis et al., 2010; Whalen et al., 2009; Kim et al., 2003), we predicted that there would be a dorsal amygdala response to ambiguity that was also modulated by task demands but may be limited to face stimuli. Accordingly, a small volume correction (see fMRI Analysis) revealed a region in the dorsal amygdala (x, y, z = −24, −3, −9) that was more active for surprised as compared with clearly valenced expressions, only for the nonvalence task. This effect was not observed for the IAPS at this more liberal threshold.

References

- Andersson JLR, Hutton C, Ashburner J, Turner R, Friston KJ. Image distortion correction in fMRI: A quantitative evaluation. Neuroimage. 2001;16:217–240. doi: 10.1006/nimg.2001.1054.

- Beck AT, Ward C, Mendelson M. Beck Depression Inventory (BDI). Archives of General Psychiatry. 1961;4:561–571. doi: 10.1001/archpsyc.1961.01710120031004.

- Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS. Learning the value of information in an uncertain world. Nature Neuroscience. 2007;10:1214–1221. doi: 10.1038/nn1954.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychological Review. 2001;108:624–652. doi: 10.1037/0033-295x.108.3.624.

- Brunsson N. The irrational organization: Irrationality as a basis for organizational action and change. Chichester, UK: Wiley; 1985.

- Chang LJ, Smith A, Dufwenberg M, Sanfey AG. Triangulating the neural, psychological, and economic bases of guilt aversion. Neuron. 2011;70:560–572. doi: 10.1016/j.neuron.2011.02.056.

- Chang LJ, Yarkoni T, Khaw MW, Sanfey AG. Decoding the role of the insula in human cognition: Functional parcellation and large-scale reverse inference. Cerebral Cortex. 2012. doi: 10.1093/cercor/bhs065.

- Corbin RM. Decisions that might not get made. In: Wallsten T, editor. Cognitive processes in choice and decision behavior. Hillsdale, NJ: Erlbaum; 1980. pp. 47–67.

- Costafreda SG, Brammer MJ, David AS, Fu CHY. Predictors of amygdala activation during the processing of emotional stimuli: A meta-analysis of 385 PET and fMRI studies. Brain Research Reviews. 2008;58:57–70. doi: 10.1016/j.brainresrev.2007.10.012.

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Critchley HD, Elliott R, Mathias CJ, Dolan RJ. Neural activity relating to generation and representation of galvanic skin conductance responses: A functional magnetic resonance imaging study. Journal of Neuroscience. 2000;20:3033–3040. doi: 10.1523/JNEUROSCI.20-08-03033.2000.

- Davis FC, Johnstone T, Mazzulla EC, Oler JA, Whalen PJ. Regional response differences across the human amygdaloid complex during social conditioning. Cerebral Cortex. 2010;20:612–621. doi: 10.1093/cercor/bhp126.

- Demos KE, Wig GS, Moran JM, Kelley WM. A role for ambiguity resolution in left inferior prefrontal cortex. Washington, DC: Society for Neuroscience; 2004.

- Dosenbach NUF, Fair DA, Cohen AL, Schlaggar BL, Petersen SE. A dual-networks architecture of top–down control. Trends in Cognitive Sciences. 2008;12:99–105. doi: 10.1016/j.tics.2008.01.001.

- Dosenbach NUF, Fair DA, Miezin FM, Cohen AL, Wenger KK, Dosenbach RAT, et al. Distinct brain networks for adaptive and stable task control in humans. Proceedings of the National Academy of Sciences, USA. 2007;104:11073–11078. doi: 10.1073/pnas.0704320104.

- Dosenbach NUF, Visscher KM, Palmer ED, Miezin FM, Wenger KK, Hyunseon CK, et al. A core system for the implementation of task sets. Neuron. 2006;50:799–812. doi: 10.1016/j.neuron.2006.04.031.

- Duncan J, Owen AM. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends in Neurosciences. 2000;23:475–483. doi: 10.1016/s0166-2236(00)01633-7.

- Fox MD, Corbetta M, Snyder AZ, Vincent JL, Raichle ME. Spontaneous neuronal activity distinguishes human dorsal and ventral attention systems. Proceedings of the National Academy of Sciences, USA. 2006;103:10046–10051. doi: 10.1073/pnas.0604187103.

- Fox MD, Snyder AZ, Vincent JL, Corbetta M, Van Essen DC, Raichle ME. The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proceedings of the National Academy of Sciences, USA. 2005;102:9673–9678. doi: 10.1073/pnas.0504136102.

- Gallagher M, Graham PW, Holland PC. The amygdala central nucleus and appetitive Pavlovian conditioning: Lesions impair one class of conditioned behavior. Journal of Neuroscience. 1990;10:1906–1911. doi: 10.1523/JNEUROSCI.10-06-01906.1990.

- Grinband J, Savitskaya J, Wager TD, Teichert T, Ferrera VP, Hirsch J. The dorsal medial frontal cortex is sensitive to time on task, not response conflict or error likelihood. Neuroimage. 2011;57:303–311. doi: 10.1016/j.neuroimage.2010.12.027.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science. 2005;310:1680–1683. doi: 10.1126/science.1115327.

- Huettel SA, Stowe CJ, Gordon EM, Warner BT, Platt ML. Neural signatures of economic preferences for risk and ambiguity. Neuron. 2006;49:765–775. doi: 10.1016/j.neuron.2006.01.024.

- Johnstone T, Somerville LH, Alexander AL, Oakes TR, Davidson RJ, Kalin NH, et al. Stability of amygdala BOLD response to fearful faces over multiple scan sessions. Neuroimage. 2005;25:1112–1123. doi: 10.1016/j.neuroimage.2004.12.016.

- Kahneman D, Slovic P, Tversky A, editors. Judgment under uncertainty: Heuristics and biases. Cambridge: Cambridge University Press; 1982.

- Kapp BS, Frysinger M, Gallagher M, Haselton JR. Amygdala central nucleus lesions: Effect on heart rate conditioning in the rabbit. Physiology & Behavior. 1979;23:1109–1117. doi: 10.1016/0031-9384(79)90304-4.

- Kim H, Somerville LH, Johnstone T, Alexander A, Whalen PJ. Inverse amygdala and medial prefrontal cortex responses to surprised faces. NeuroReport. 2003;14:2317–2322. doi: 10.1097/00001756-200312190-00006.

- Kim H, Somerville LH, Johnstone T, Polis S, Alexander AL, Shin LM, et al. Contextual modulation of amygdala responsivity to surprised faces. Journal of Cognitive Neuroscience. 2004;16:1730–1745. doi: 10.1162/0898929042947865.

- Kim MJ, Loucks RA, Neta M, Davis FC, Oler JA, Mazzulla EC, et al. Behind the mask: The influence of mask-type on amygdala responses to fearful faces. Social Cognitive & Affective Neuroscience. 2010;5:363–368. doi: 10.1093/scan/nsq014.

- Lane RD, Fink GR, Chua P, Dolan RJ. Neural activation during selective attention to subjective emotional responses. NeuroReport. 1997;8:3969–3972. doi: 10.1097/00001756-199712220-00024.

- Lang PJ, Bradley MM, Cuthbert BN. International affective picture system (IAPS): Affective ratings of pictures and instruction manual. Gainesville, FL: University of Florida; 2008.

- Levy I, Snell J, Nelson AJ, Rustichini A, Glimcher PW. Neural representation of subjective value under risk and ambiguity. Journal of Neurophysiology. 2010;103:1036–1047. doi: 10.1152/jn.00853.2009.

- Lundqvist D, Flykt A, Öhman A. The Karolinska Directed Emotional Faces–KDEF. CD ROM from Department of Clinical Neuroscience Psychology section. Karolinska Institutet; 1998.

- MacDonald MC, Pearlmutter NJ, Seidenberg MS. The lexical nature of syntactic ambiguity resolution. Psychological Review. 1994;101:676–703. doi: 10.1037/0033-295x.101.4.676.

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage. 2003;19:1233–1239. doi: 10.1016/s1053-8119(03)00169-1.

- Medford N, Critchley HD. Conjoint activity of anterior insular and anterior cingulate cortex: Awareness and response. Brain Structure and Function. 2010;214:535–549. doi: 10.1007/s00429-010-0265-x.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Nee DE, Wager TD, Jonides J. Interference resolution: Insights from a meta-analysis of neuroimaging tasks. Cognitive, Affective, & Behavioral Neuroscience. 2007;7:1–17. doi: 10.3758/cabn.7.1.1.

- Nelson SM, Dosenbach NUF, Cohen AL, Wheeler ME, Schlaggar BL, Petersen SE. Role of the anterior insula in task-level control and focal attention. Brain Structure and Function. 2010;214:669–680. doi: 10.1007/s00429-010-0260-2.

- Neta M, Norris CJ, Whalen PJ. Corrugator muscle responses are associated with individual differences in positivity–negativity bias. Emotion. 2009;9:640–648. doi: 10.1037/a0016819.

- Neta M, Schlaggar BL, Petersen SE. Separable responses to error, ambiguity, and reaction time in cingulo-opercular task control regions. Under revision. doi: 10.1016/j.neuroimage.2014.05.053.

- Neta M, Whalen PJ. The primacy of negative interpretations when resolving the valence of ambiguous facial expressions. Psychological Science. 2010;21:901–907. doi: 10.1177/0956797610373934.

- Platt ML, Huettel SA. Risky business: The neuroeconomics of decision making under uncertainty. Nature Neuroscience. 2008;11:398–403. doi: 10.1038/nn2062.

- Ploran EJ, Nelson SM, Velanova K, Donaldson DI, Petersen SE, Wheeler ME. Evidence accumulation and the moment of recognition: Dissociating perceptual recognition processes using fMRI. Journal of Neuroscience. 2007;27:11912–11924. doi: 10.1523/JNEUROSCI.3522-07.2007.

- Rodd JM, Davis MH, Johnsrude IS. The neural mechanisms of speech comprehension: fMRI studies of semantic ambiguity. Cerebral Cortex. 2005;15:1261–1269. doi: 10.1093/cercor/bhi009.

- Sanfey AG, Rilling JK, Aronson JA, Nystrom LE, Cohen JD. The neural basis of economic decision-making in the Ultimatum Game. Science. 2003;300:1755–1758. doi: 10.1126/science.1082976.

- Singer T, Critchley HD, Preuschoff K. A common role of insula in feelings, empathy, and uncertainty. Trends in Cognitive Sciences. 2009;13:334–340. doi: 10.1016/j.tics.2009.05.001.

- Singer T, Seymour B, O’Doherty J, Kaube H, Dolan RJ, Frith CD. Empathy for pain involves the affective but not sensory components of pain. Science. 2004;303:1157–1162. doi: 10.1126/science.1093535.

- Spielberger CD, Gorsuch RL, Lushene RE. STAI-Manual for the State Trait Anxiety Inventory. Palo Alto, CA: Consulting Psychologists Press; 1988.

- Sterzer P, Russ MO, Preibisch C, Kleinschmidt A. Neural correlates of spontaneous direction reversals in ambiguous apparent visual motion. Neuroimage. 2002;15:908–916. doi: 10.1006/nimg.2001.1030.

- Thompson-Schill SL, D’Esposito M, Aguirre GK, Farah MJ. Role of left inferior prefrontal cortex in retrieval of semantic knowledge: A reevaluation. Proceedings of the National Academy of Sciences, USA. 1997;94:14792–14797. doi: 10.1073/pnas.94.26.14792.

- Tottenham N, Tanaka J, Leon AC, McCarry T, Nurse M, Hare TA, et al. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- Tversky A, Kahneman D. Judgment under uncertainty: Heuristics and biases. Science. 1974;185:1124–1131. doi: 10.1126/science.185.4157.1124.

- Whalen PJ, Davis FC, Oler JA, Kim H, Kim MJ, Neta M. Human amygdala responses to facial expressions of emotion. In: Whalen PJ, Phelps EA, editors. The human amygdala. New York: Guilford Press; 2009. pp. 265–288.

- Yarkoni T, Poldrack RA, Nichols TE, Van Essen DC, Wager TD. Large-scale automated synthesis of human functional neuroimaging data. Nature Methods. 2011;8:665–670. doi: 10.1038/nmeth.1635.