Abstract

The World Health Organization (WHO) guidelines for monitoring the effectiveness of HIV treatment in resource-limited settings (RLS) are mostly based on clinical and immunological markers (e.g., CD4 cell counts). Recent research indicates that the guidelines are inadequate and can result in high error rates. Viral load (VL) is considered the “gold standard”, yet its widespread use is limited by cost and infrastructure. In this paper, we propose a diagnostic algorithm that uses information from routinely collected clinical and immunological markers to guide a selective use of VL testing for diagnosing HIV treatment failure, under the assumption that VL testing is available only at a certain portion of patient visits. Our algorithm identifies the patient sub-population such that the use of limited VL testing on them minimizes a pre-defined risk (e.g., misdiagnosis error rate). Diagnostic properties of our proposed algorithm are assessed by simulations. For illustration, data from the Miriam Hospital Immunology Clinic (RI, USA) are analyzed.

Keywords: Antiretroviral failure, constrained optimization, HIV/AIDS, resource limited, ROC, tripartite classification

1. INTRODUCTION

According to a recent report of the World Health Organization (WHO) (WHO 2010a), almost 40 million people world-wide are infected with human immunodeficiency virus (HIV). Among them, over 97% live in resource-limited settings (RLS), particularly in sub-Saharan Africa (UNAIDS 2010). Although the number of people living with HIV remains high, the mortality rate due to acquired immune deficiency syndrome (AIDS) has started to decline since 2006 (UNAIDS 2009), due in large part to the successful rollout of HIV antiretroviral treatment (ART) in RLS (WHO 2010b).

With more and more people having access to ART, treatment failure is inevitable and must be anticipated. Treatment failure occurs when antiretroviral medications fail to control HIV replication in infected patients. Common causes of treatment failure include lack of proper medication adherence and development of drug resistance. The former may be addressed by reinforcing adherence (Gardner et al. 2009), while the latter usually mandates a switch to a more effective “next line” ART regimen (e.g., from a first- to a second-line regimen).

Monitoring the effectiveness of HIV treatment and correctly diagnosing treatment failure in a timely manner is critical for preventing HIV-related morbidity and mortality and transmission of the virus. Incorrect diagnosis of treatment failure can lead to undesired consequences and compromise the success that has been achieved by rolling out ART in RLS. Specifically, failure to diagnose treatment failure can result in continued viral replication, deterioration of the patient’s immune system, extra clinical costs such as treatment of opportunistic infections, increased risk of HIV transmission, selection of resistant strains, and death (Anderson and Bartlett 2006; Calmy et al. 2007; Vekemans et al. 2007). Meanwhile, incorrectly diagnosing patients as having treatment failure when in fact they do not can prompt a premature switch to the next-line ART. This generates an unnecessary financial burden (second-line therapies cost up to ten times more than first-line therapies) and potentially accelerates progression toward resistance to next-line therapies, which are most probably the last line in RLS (Vekemans et al. 2007).

In resource-rich countries such as those in much of western Europe and North America, viral load (VL) testing is routine for HIV treatment monitoring (Thompson et al. 2010; DHHS 2011). In this paper, VL refers to the amount of HIV in the blood as measured using nucleic acid amplification (Hammer et al. 2006). It is a marker that directly reflects the effectiveness of HIV treatment. Although HIV cannot be eradicated now, patients with adequate adherence can be expected to have viral suppression, which generally means that VL is below the lower detection limit of the assay being used (assays used for clinical purposes have lower detection limits of between 20 and 1000 copies/mL). A patient on adequate ART who has detectable VL after having previously reached an undetectable level is said to have virological treatment failure (hereafter “viral failure” or “treatment failure”), an indication that the particular treatment regimen may no longer be effective.

In RLS, VL testing is either limited or not available due to factors such as cost, lack of facilities, and lack of properly trained personnel (Fiscus et al. 2006; Calmy et al. 2007; Schooley 2007). Therefore, diagnosis of HIV treatment failure is commonly made using lower-cost and less accurate markers such as current CD4 cell count, CD4 percent among all lymphocytes, and relative changes in these measures since last visit; and clinical indicators such as opportunistic infections, weight loss, and HIV-related malignancies. Indeed, these immunological and clinical markers form the basis of HIV treatment monitoring guidelines as recommended by the WHO (Calmy et al. 2007; WHO 2010a). These guidelines are widely adopted by countries in sub-Saharan Africa (e.g., Malawi 2003; Uganda 2003; Zambia 2004; Kenya 2005) and other developing regions.

Although CD4-based markers are generally associated with VL, a consensus has been reached recently that their use for diagnosing HIV treatment failure is prone to high misclassification rates (Deeks et al. 2000, 2002; Moore et al. 2005; Bisson et al. 2006; Schechter and Tuboi 2006; Tuboi et al. 2007; Bisson et al. 2008; Mee et al. 2008; Castelnuovo et al. 2009; Kantor et al. 2009; Keiser et al. 2009; Meya et al. 2009; Reynolds et al. 2009; Kiragga et al. 2012). Data from a recent study of patients receiving care through the Academic Model Providing Access to Healthcare (AMPATH) in western Kenya show that almost 40% of those having treatment failures would have been incorrectly diagnosed based on the WHO guidelines (Kantor et al. 2009).

Several studies have investigated monitoring HIV treatment using markers in addition to or instead of CD4 cell count (Bagchi et al. 2007; Kantor et al. 2009; Foulkes et al. 2010; Abouyannis et al. 2011). Bagchi et al. (2007) showed that weight loss is associated with treatment failure but pointed out that its clinical utility is limited because weight is influenced by many factors. Kantor et al. (2009) found in a Kenyan cohort that time on therapy and change in CD4 percent can be potentially incorporated into CD4-based rules to improve the diagnosis of treatment failure. Abouyannis et al. (2011) developed and tested a scoring system that incorporates CD4 count, mean cell volume, medication adherence, and HIV-associated clinical events for diagnosing treatment failure. Foulkes et al. (2010) proposed a prediction-based classification method that combines multiple time-varying clinical measures for predicting treatment failure. Each of these studies focuses on augmenting or replacing CD4 count with other immunological and clinical markers, assuming that VL testing is completely unavailable. Potential improvements are demonstrated, but often found to be marginal.

In this paper, we consider augmenting rules for diagnosing treatment failure based on low-cost markers (such as CD4 cell count) with a selective use of VL testing, under the assumption that VL testing can be ordered only for a fixed portion of patient visits. Our approach is motivated by the fact that several HIV care programs in developing countries have started to conduct VL testing for some of their patients. For example, as a result of the study by Kantor et al. (2009), AMPATH is currently conducting VL testing at about ten percent of its patient visits when treatment failure is suspected. Our approach is also motivated by the expectation that as technology and training advance (e.g., Greengrass et al. 2009), VL testing will become more affordable, even if it remains substantially limited in the near future.

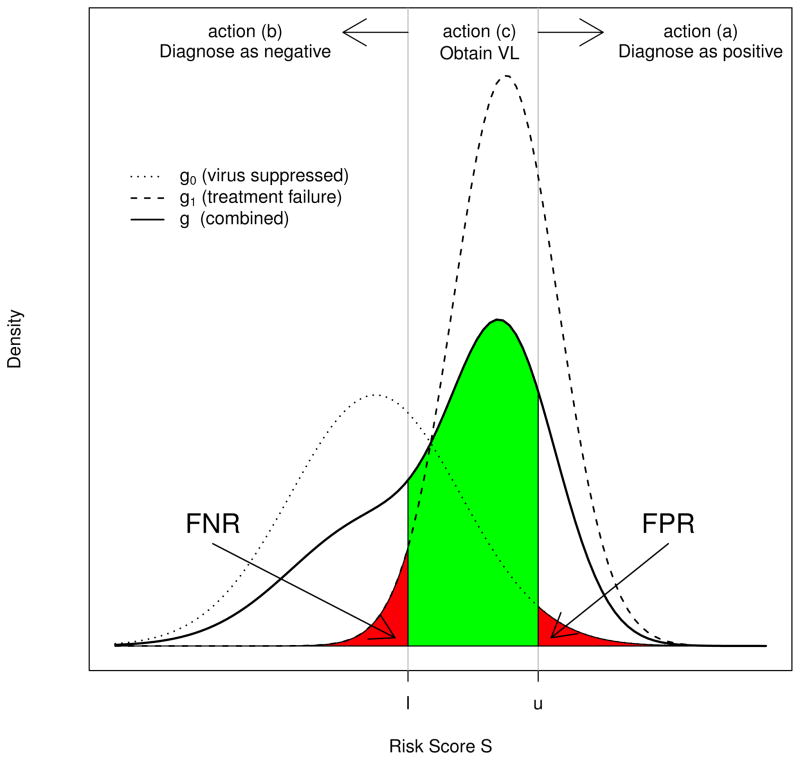

Assuming that VL testing is available but at a fixed portion of patient visits, we propose a tripartite classification procedure to triage VL testing based on a risk score S derived from low-cost non-VL markers. Specifically, the resulting tripartite diagnostic rule comprises two cut-off values l and u on S, with l ≤ u, that classify HIV patients into three mutually exclusive categories (refer to Figure 1), and correspondingly takes one of the following three actions for each category.

Figure 1.

Risk score distributions and diagnosis actions.

Those with S > u are diagnosed as failing treatment,

Those with S ≤ l are diagnosed as non-failing, and

Those with l < S ≤ u are designated for VL testing, which will provide an error-free diagnosis.

The tripartite diagnostic rule is designed to minimize a pre-specified risk (e.g., misclassification) subject to the constraint on the availability of VL assays. To identify the optimal rule, we develop both nonparametric and semiparametric approaches to inference about l and u. We also develop a receiver operating characteristic (ROC) analysis procedure for a general assessment of candidate tripartite rules. The ROC curve and the area under the ROC curve (AUC) provide a comprehensive measure of the diagnostic capacity of tripartite rules, and allow us to evaluate the potential improvement that can be achieved by increasing VL testing availability. ROC analysis of tripartite rules has many statistical properties that are similar to those of conventional ROC analysis of bipartite rules.

The rest of the paper is organized as follows: Notations, definitions, and criteria for rule development are given in Section 2; nonparametric and semiparametric approaches to optimal rule selection are presented in Section 3; ROC analysis of tripartite rules is described in Section 4; and simulation studies are conducted in Section 5. For illustration, data from the HIV Immunology Clinic of the Miriam Hospital (RI, USA) are analyzed in Section 6. We conclude with a summary and discussion of future research in Section 7.

2. NOTATIONS AND DEFINITIONS

2.1 HIV Viral Status and Risk Score

The objective of HIV treatment monitoring is to diagnose viral failure. Let V denote a patient’s (possibly unmeasured) viral load. Viral failure is said to occur when V exceeds a pre-specified threshold v*, where v* is typically the lower detection limit of the VL assay being used. Let Z = 1(V > v*) denote viral status with Z = 1 indicating a viral failure and 0 otherwise, where 1(·) is the indicator function. The prevalence of viral failure is denoted by p = Pr(Z = 1). At each patient encounter, a set of immunological, clinical, and demographic markers is usually collected, which may include CD4 count, CD4 percent among all lymphocytes (and recent changes in both), WHO stage, time on therapy, hemoglobin, weight, age, gender, and adherence measures. Henceforth, these markers are generically referred to as low-cost clinical markers and denoted by a vector X.

For each individual, these clinical markers are translated into a scalar risk score S = S(X). Several recent studies have proposed versions of S(X) for determining the risk of treatment failure (e.g., Lynen et al. 2009; Meya et al. 2009; Abouyannis et al. 2011). If S(X) is a predicted probability of viral failure given X, it can be derived using logistic regression, regression trees, or other types of prediction-based classification methods (e.g., Pepe and Thompson 2000; Hastie et al. 2001; Foulkes et al. 2010; Justice et al. 2010; van der Laan 2011). In this paper, we assume that the functional form of S(·) is known, but note that finding and validating an optimal form of S(X) is an important topic of research (see Huang et al. 2007; Pepe et al. 2008; Steyerberg et al. 2010; Pepe 2011).

Let G1 and G0 denote the distributions of S for patients with viral failure (Z = 1) and viral suppression (Z = 0), and g1 and g0 denote their associated densities, respectively. The population distribution of S is therefore a mixture distribution G = (1 − p)G0 + pG1, whose density is denoted by g. We assume that for independent observations S and S′, where S ~ G1 and S′ ~ G0, S is stochastically greater than S′ in the sense that on average, patients with viral failure have higher risk scores. An illustration of g0, g1, and g leading to a hypothetical distribution of S is presented in Figure 1.

2.2 Classification Cut-offs and Tripartite Rules

The tripartite diagnostic rule can be formalized as follows. Let l and u, with l ≤ u, subdivide the population into three categories: those whose risk of treatment failure is high (S > u), low (S ≤ l), or intermediate (S ∈ ℐ ≡ (l, u]). Let δℐ (S) denote the diagnostic decision based on S, with δℐ (S) = 1 indicating a treatment failure diagnosis and δℐ (S) = 0 a non-failing diagnosis. Then our tripartite rule is expressed as

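$$
\delta_{\mathcal{I}}(S) =
\begin{cases}
1, & S > u, \\
Z, & S \in \mathcal{I} = (l, u], \\
0, & S \le l.
\end{cases}
\tag{1}
$$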

This rule obtains the gold standard measurement for the intermediate risk subpopulation {S ∈ ℐ}, which carries the greatest uncertainty about true viral status. Note that when S ∈ ℐ, the diagnosis decision corresponds to the true viral failure status and therefore leads to a correct diagnosis.
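As a minimal sketch of how the tripartite rule could be applied at a single patient visit, the following Python function maps a risk score to a diagnosis. The function name and the `vl_test` callback, which stands in for ordering a VL assay and returning the true status Z, are hypothetical and introduced only for illustration.

```python
from typing import Callable


def tripartite_decision(s: float, l: float, u: float,
                        vl_test: Callable[[], int]) -> int:
    """Return 1 (treatment failure) or 0 (non-failing) for risk score s.

    vl_test is a hypothetical callback that performs the VL assay and
    returns the true viral status Z; it is called only when l < s <= u.
    """
    if l > u:
        raise ValueError("cut-offs must satisfy l <= u")
    if s > u:
        return 1            # high risk: diagnose failure without VL testing
    if s <= l:
        return 0            # low risk: diagnose non-failing without VL testing
    return vl_test()        # intermediate risk: defer to the gold standard
```

Because the VL result is used only on the interval (l, u], the number of assays consumed is exactly the number of visits whose scores fall in that interval.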

2.3 Loss and Risk Functions

Let L(d, z) denote the loss or cost incurred when the true viral failure status is Z = z and a diagnostic decision d is taken. Two commonly used loss functions in studies of medical diagnosis are L1(d, z) = 1(d ≠ z), which indicates whether a misdiagnosis occurs, and L2(d, z) = {1(d = 0, z = 1), 1(d = 1, z = 0)}⊤, which indexes misdiagnoses separately for those with viral failure (i.e., false negative, FN) and those without (i.e., false positive, FP). Loss functions can be made more elaborate and extended to incorporate potential costs as well as benefits of correct and incorrect diagnoses (e.g., expected mortality, cost of switching to next-line therapies, and gain of life expectancy); see Parmigiani (2002) for further discussions.

The development of our diagnostic rule also uses a weighted loss function

$$
L_3(d, z; \lambda) = \lambda\, 1(d = 0, z = 1) + (1 - \lambda)\, 1(d = 1, z = 0),
$$

where λ ∈ [0, 1] is a user-specified weight that reflects relative loss for the two types of misdiagnoses. At the extremes, setting λ = 1 places the highest priority on avoiding FN (incorrectly diagnosing a patient as non-failing), while λ = 0 prioritizes avoidance of FP (incorrectly diagnosing a patient as treatment failure). An appropriate and meaningful value of λ should be contextually specific and take into account the available information about patient’s health status and various costs associated with FP and FN.

The overall diagnostic accuracy of a diagnostic rule is summarized by a risk function defined as R(ℐ) = E[L(δℐ (S), Z)], where the expectation is taken over the joint distribution of (S, Z)⊤ (Berger 1985). For the loss function L1, R1(ℐ) = E[1{δℐ (S) ≠ Z}] is the total misclassification rate (TMR). For L2, R2(ℐ) = {pFNR, (1 −p)FPR}⊤, where FNR and FPR are the FN and FP rates, respectively. For L3, we have a weighted sum of FPR and FNR

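$$
R_3(\mathcal{I}; \lambda) = \lambda\, p\, \mathrm{FNR} + (1 - \lambda)(1 - p)\, \mathrm{FPR},
\tag{2}
$$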

where the weights depend on both λ and the prevalence of viral failure. Risk function R3(ℐ; λ) is one form of ‘net benefit’ functions that have been used in decision curve analyses and utility analyses (Vickers and Elkin 2006; Baker 2009). As a special case when λ = .5, minimizing R3(ℐ; .5) is equivalent to minimizing R1(ℐ).
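The risk functions can be evaluated empirically for any pair of cut-offs. The sketch below assumes that R3 weights FNR by λp and FPR by (1 − λ)(1 − p), with p replaced by the sample prevalence; the function name and return format are ours, not part of the method.

```python
def empirical_risks(scores, status, l, u, lam=0.5):
    """Empirical FNR, FPR, R1 (TMR) and R3 for the tripartite rule (l, u].

    scores: risk scores S_i; status: true viral status Z_i (0 or 1).
    Assumes both failures and non-failures are present in the data.
    """
    n = len(scores)
    n1 = sum(status)
    n0 = n - n1
    p = n1 / n                                   # sample prevalence of failure
    # False negatives: true failures (Z = 1) diagnosed as non-failing (S <= l).
    fn = sum(1 for s, z in zip(scores, status) if z == 1 and s <= l)
    # False positives: non-failures (Z = 0) diagnosed as failing (S > u).
    fp = sum(1 for s, z in zip(scores, status) if z == 0 and s > u)
    fnr, fpr = fn / n1, fp / n0
    r1 = p * fnr + (1 - p) * fpr
    r3 = lam * p * fnr + (1 - lam) * (1 - p) * fpr   # assumed form of R3
    return {"FNR": fnr, "FPR": fpr, "R1": r1, "R3": r3}
```

With `lam=0.5` the returned R3 is exactly half of R1, which is why the two criteria select the same rule in that case.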

In the next section, we develop methods for obtaining optimal rules under the risk criteria R1(ℐ) and R3(ℐ; λ). The optimal rules that minimize R1(ℐ) and R3(ℐ; λ) are called the min-TMR rules and min-λ rules, respectively. In Section 4, the vector-valued risk function R2(ℐ) is used to develop a ROC analysis procedure for a general assessment of tripartite diagnostic rules.

3. OPTIMAL RULE SELECTION: CONSTRAINED OPTIMIZATION

3.1 Characterization of Constraints on Gold Standard Testing

Suppose that VL tests can be ordered for a fixed portion φ of patient visits, where 0 ≤ φ ≤ 1. Then the proposed tripartite rules must satisfy the constraint

$$
\Pr(S \in \mathcal{I}) = G(u) - G(l) \le \varphi.
\tag{3}
$$

In the extreme cases, φ = 0 means that no VL testing is available, while φ = 1 means that it is available at all patient visits.

There can be infinitely many tripartite diagnostic rules that satisfy (3), because if δℐ (s) satisfies (3), so does δℐ′(s) for all ℐ′ ⊂ ℐ. We therefore restrict attention only to those rules that take maximum advantage of the available VL tests. All such rules form our decision space. Specifically, the decision space is defined as the set 𝒟φ = {δℐ (s) : G(u) − G(l) ≤ φ} with the condition that for any δℐ (s) ∈ 𝒟φ, there does not exist another rule δℐ′(s) with ℐ′ ⊃ ℐ and satisfying (3).

For a given risk function R(·) and a decision space 𝒟φ, the optimal rule is defined as

$$
\delta_{\mathcal{I}^*} = \arg\min_{\delta_{\mathcal{I}} \in \mathcal{D}_\varphi} R(\mathcal{I}),
\tag{4}
$$

where ℐ* indicates the optimal cut-offs on S for triaging the VL tests. We assume that the optimal rule is unique.

3.2 Optimal Rule Selection

In this section, we develop nonparametric and semiparametric approaches to determining the optimal rule from the decision space 𝒟φ. The nonparametric approach places no distributional assumption on either G0 or G1 and can therefore be broadly applied. The semiparametric approach assumes that G0 and G1 follow an exponential tilt model, whereby the densities g0(s) and g1(s) differ only by a factor proportional to exp(β1s), where β1 is an unknown scalar parameter (called the tilting parameter). In Section 5, we use simulations to show that when the exponential tilt assumption holds, the semiparametric approach is generally more efficient in estimating the optimal rule when the sample size is large.

Nonparametric Approach

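Suppose that we have a training data set of independent pairs (S1, Z1), …, (Sn, Zn). We first estimate G1, G0, and G empirically via

$$
\hat G_1(s) = \frac{1}{n_1}\sum_{i=1}^{n} Z_i\, 1(S_i \le s), \qquad
\hat G_0(s) = \frac{1}{n_0}\sum_{i=1}^{n} (1 - Z_i)\, 1(S_i \le s), \qquad
\hat G(s) = \frac{1}{n}\sum_{i=1}^{n} 1(S_i \le s),
$$

with $n_1 = \sum_{i=1}^{n} Z_i$ and $n_0 = n - n_1$. To determine the optimal rule using (4), we then obtain the empirical decision space 𝒟̂φ by the following steps: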

1. Write L̃ = (l̃1, l̃2, …, l̃n)⊤ = (S(1), S(2), …, S(n))⊤, where l̃j = S(j) is the j-th order statistic of S = (S1, …, Sn)⊤.

2. For each l̃j, calculate ũj = arg max_{u ∈ S} {Ĝ(u) − Ĝ(l̃j) ≤ φ}. Let Ũ = (ũ1, ũ2, …, ũn)⊤.

3. For ũj and ũj′ ∈ Ũ, j < j′, if ũj = ũj′, drop l̃j′ from L̃ and ũj′ from Ũ. Denote the resulting vectors by L̂ = (l̂1, l̂2, …, l̂m)⊤ and Û = (û1, û2, …, ûm)⊤ with m ≤ n.

4. The empirical decision space is given by 𝒟̂φ = {δℐ̂j : j = 1, …, m} with ℐ̂j = (l̂j, ûj].

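With the empirical decision space 𝒟̂φ, the optimal rule is then estimated via (4) with 𝒟φ replaced by 𝒟̂φ. This can be carried out using a grid search. For example, to estimate the optimal rule that minimizes TMR, we calculate the empirical error rates $\widehat{\mathrm{FNR}}_j = \hat G_1(\hat l_j)$ and $\widehat{\mathrm{FPR}}_j = 1 - \hat G_0(\hat u_j)$ for j = 1, …, m. Then, the optimal min-TMR rule is the rule in 𝒟̂φ that has a risk equal to $\min_j \{\hat p\, \widehat{\mathrm{FNR}}_j + (1 - \hat p)\, \widehat{\mathrm{FPR}}_j\}$, where p̂ is the sample proportion of patients with viral failure. Similarly, to identify the rule that minimizes R3(ℐ; λ) for a pre-specified λ, we select the rule in 𝒟̂φ that has a risk of $\min_j \{\lambda \hat p\, \widehat{\mathrm{FNR}}_j + (1 - \lambda)(1 - \hat p)\, \widehat{\mathrm{FPR}}_j\}$.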
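The construction of the empirical decision space and the grid search can be sketched as follows. This is an illustrative pure-Python implementation under our own choices: the function names are hypothetical, ties are handled by stepping down to the previous distinct score, and the empirical TMR is computed as the proportion of misdiagnosed visits in the training data.

```python
from bisect import bisect_left, bisect_right


def empirical_decision_space(scores, phi):
    """Candidate intervals (l, u] that use as many VL tests as phi allows."""
    s = sorted(scores)
    n = len(s)
    budget = int(phi * n + 1e-9)        # max number of scores allowed in (l, u]
    rules, seen_upper = [], set()
    for l in s:                         # lower cut-offs are order statistics
        below = bisect_right(s, l)      # n * G_hat(l)
        k = min(below + budget, n) - 1  # index of the tentative upper cut-off
        # Step down past tied values if including them would exceed the budget.
        while k >= below and bisect_right(s, s[k]) - below > budget:
            k = bisect_left(s, s[k]) - 1
        u = s[k] if k >= below else l
        if u not in seen_upper:         # keep only the smallest l for each u
            seen_upper.add(u)
            rules.append((l, u))
    return rules


def min_tmr_rule(scores, status, phi):
    """Grid search for the rule with the smallest empirical TMR."""
    n = len(scores)
    best = None
    for l, u in empirical_decision_space(scores, phi):
        fn = sum(1 for s, z in zip(scores, status) if z == 1 and s <= l)
        fp = sum(1 for s, z in zip(scores, status) if z == 0 and s > u)
        tmr = (fn + fp) / n             # equals p*FNR + (1 - p)*FPR
        if best is None or tmr < best[2]:
            best = (l, u, tmr)
    return best                         # (lower cut-off, upper cut-off, TMR)
```

The double loop costs O(n²) operations, which is adequate for a sketch; cumulative counts over the sorted scores would reduce this if needed.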

Semiparametric Approach

The exponential tilt model has been used to characterize the relationship between components of a mixture distribution (Anderson 1972, 1979; Prentice and Pyke 1979; Efron 1981; Qin 1999). The model places no parametric assumptions on individual components of the mixture, except assuming that they differ only by a factor of the form

$$
g_1(s) = g_0(s) \exp(\beta_0^* + \beta_1 s),
\tag{5}
$$

where β1 is an unknown tilting parameter and $\beta_0^* = -\log \int \exp(\beta_1 s)\, g_0(s)\, ds$ is a normalizing constant. Although no constraints are placed on g0, many commonly used parametric distribution families can be represented in the form of (5), such as binomial, Poisson, normal with a common variance, and gamma distributions with a common shape parameter. In our case, the exponential tilt model is equivalent to the logistic model

$$
\mathrm{logit}\{\Pr(Z = 1 \mid S = s)\} = \beta_0 + \beta_1 s,
\tag{6}
$$

with logit(y) = log{y/(1 − y)} and $\beta_0 = \beta_0^* + \log\{p/(1 - p)\}$.
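The equivalence between the exponential tilt model and the logistic model follows from Bayes' rule. As a short worked derivation, assume the tilt is written as g1(s) = g0(s) exp(β0* + β1 s), where β0* denotes the normalizing constant (our notation for this sketch):

```latex
\begin{align*}
\Pr(Z = 1 \mid S = s)
  &= \frac{p\, g_1(s)}{p\, g_1(s) + (1 - p)\, g_0(s)}, \\
\mathrm{logit}\{\Pr(Z = 1 \mid S = s)\}
  &= \log\frac{p\, g_1(s)}{(1 - p)\, g_0(s)}
   = \log\frac{p}{1 - p} + \beta_0^* + \beta_1 s .
\end{align*}
```

The intercept of the logistic model therefore absorbs both the normalizing constant and the log odds of viral failure, while the slope equals the tilting parameter β1.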

When the exponential tilt assumption holds, we can estimate G0 and G1 semiparametrically using the results in Appendix A.1, and then estimate the optimal rule using a grid-search in a similar way to what has been described in the last section.

If our goal is to identify a rule that minimizes TMR, it turns out that we can readily determine this rule without calculating the semiparametric estimates of G0 and G1. To see this, we write Γ(l, u, τ) = R1(ℐ) + τ(G(u) − G(l) − φ), and apply the method of Lagrange multipliers to solve ∂Γ/∂(l, u, τ)⊤ = 0. It is straightforward to verify that the resulting rule must satisfy

$$
\frac{l + u}{2} = -\frac{\beta_0}{\beta_1} \quad \text{and} \quad G(u) - G(l) = \varphi.
$$

That is, the optimal interval ℐ* for triaging the limited VL testing is centered at −β̂0/β̂1, independent of the VL test availability φ. The optimal cut-off values therefore can be estimated by l̂ = −β̂0/β̂1 − Δφ and û = −β̂0/β̂1 + Δφ, where β̂0 and β̂1 are parameter estimates of the logistic model (6) and

$$
\Delta_\varphi = \sup\left\{\Delta \ge 0 : \hat G\!\left(-\hat\beta_0/\hat\beta_1 + \Delta\right) - \hat G\!\left(-\hat\beta_0/\hat\beta_1 - \Delta\right) \le \varphi\right\}.
$$

In the above equation, the empirical estimate Ĝ is used because the semiparametric estimate of G under the exponential tilt model is the same as Ĝ.
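A minimal sketch of the semiparametric min-TMR cut-offs is given below. It assumes that the logistic estimates β̂0 and β̂1 have already been obtained by any standard routine, and it searches for the half-width only over the observed distances between the scores and the center, which is a simplification of ours. The function name is hypothetical.

```python
def semiparametric_min_tmr_cutoffs(scores, beta0, beta1, phi):
    """Cut-offs (l, u) centered at -beta0/beta1 that respect the VL budget.

    beta0, beta1: previously fitted logistic regression estimates.
    phi: fraction of visits at which VL testing is available.
    """
    if beta1 == 0:
        raise ValueError("beta1 must be non-zero")
    center = -beta0 / beta1
    budget = int(phi * len(scores) + 1e-9)

    def n_tested(delta):
        # Number of scores falling in (center - delta, center + delta].
        return sum(1 for s in scores if center - delta < s <= center + delta)

    # Candidate half-widths: zero and each observed distance from the center.
    candidates = sorted({0.0} | {abs(s - center) for s in scores})
    best = 0.0
    for delta in candidates:
        if n_tested(delta) <= budget:
            best = delta
        else:
            break                      # n_tested is non-decreasing in delta
    return center - best, center + best
```

For example, with scores 1, …, 10, β̂0 = −5.5, β̂1 = 1 and φ = 0.3, the interval is centered at 5.5 and widens until three scores (5, 6, and 7) fall inside it.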

Uniqueness of Estimated Optimal Rule

The estimated optimal rule based on a finite data set may not be unique, even though the true optimal rule is unique. When there are multiple rules that meet the optimality criterion, we propose to impose additional secondary criteria so as to determine a single optimal rule. For example, to determine an optimal rule from multiple rules that equally minimize R3(ℐ; λ), we may consider adding R1(ℐ) as a secondary criterion and choosing one from these rules that has the lowest TMR. It is also reasonable to randomly choose one for practical use if the estimated optimal rules differ little.

4. ROC ANALYSIS

ROC analyses have been widely used to assess the overall diagnostic accuracy of bipartite classification rules. An ROC curve is a graphical presentation of the risk function R2(·) associated with all candidate rules in a decision space. Comprehensive reviews of ROC analyses in biomedical research can be found in Pepe (2000), Zhou et al. (2002, Ch 2), Pepe (2003, Chs 4-5), and Gatsonis (2009). Recent applications of ROC analyses in studies of HIV-infected populations include Pahwa et al. (2008), Joska et al. (2011), and Mabeya et al. (2012), among many others.

4.1 ROC Curve for Tripartite Rules and AUC

ROC analyses for tripartite rules can be carried out in a fashion similar to conventional ROC analyses. With each rule in the decision space 𝒟φ represented by a point in a 2-dimensional space with its (FPR, 1−FNR) as the coordinates, an ROC curve for tripartite rules can be generated by connecting these points using a non-decreasing curve. Mathematically, we can express the ROC curve for tripartite rules as

$$
C_\varphi(t) = 1 - G_1 \circ H_\varphi \circ G_0^{-1}(1 - t), \qquad t \in [0, 1],
\tag{7}
$$

where $G_0^{-1}(t) = \inf\{s : G_0(s) \ge t\}$ is the generalized inverse of the cadlag function G0, Hφ(u) = arg inf_w {G(u) − G(w) ≤ φ}, and ‘∘’ denotes the function composition operator. Note that the difference between (7) and a conventional ROC curve is the operation induced by Hφ, which dictates that for each u and resulting FPR, the corresponding FNR is calculated based on a lower cut-off l = Hφ(u) ≤ u.

The area under the ROC curve (AUC) for tripartite rules is defined as,

$$
\mathrm{AUC}_\varphi = \int_0^1 C_\varphi(t)\, dt.
\tag{8}
$$

Like the AUC for bipartite classification rules, AUCφ provides an omnibus measure of the diagnostic capability of all candidate rules in the decision space 𝒟φ. It can be interpreted as the true positive rate averaged across all FPRs. In Appendix A.2, we present several properties of AUCφ, which turn out to be very similar to those of the AUC from a conventional ROC analysis.

As a special case when φ = 0, Cφ(t) and AUCφ reduce to the conventional ROC curve and AUC for bipartite rules.

4.2 Estimation

With a training data set of independent (S1, Z1), …, (Sn, Zn) and a given φ, we can estimate the ROC curve for tripartite rules nonparametrically by

$$
\hat C_\varphi(t) = 1 - \hat G_1 \circ \hat H_\varphi \circ \hat G_0^{-1}(1 - t),
\tag{9}
$$

where Ĝ0 and Ĝ1 are empirical estimates, and Ĥφ(u) = arg min_w {Ĝ(u) − Ĝ(w) ≤ φ}. The estimated ROC curve is a step function with jumps only at points representing the rules in the empirical decision space 𝒟̂φ. When the exponential tilt assumption holds, the ROC curve also can be estimated semiparametrically by replacing Ĝ0 and Ĝ1 in (9) by their corresponding semiparametric estimates given in Appendix A.1.

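Using the results in Appendix A.2 (see Eq. (A.1)), we can estimate AUCφ nonparametrically by

$$
\widehat{\mathrm{AUC}}_\varphi = \frac{1}{n_0 n_1} \sum_{i:\, Z_i = 1}\ \sum_{j:\, Z_j = 0} 1\{S_i > \hat H_\varphi(S_j)\},
\tag{10}
$$

where n1 and n0 are the numbers of observations with Z = 1 and Z = 0, respectively. In Appendix A.3, we present several large-sample properties of the nonparametric estimates Ĉφ(t) and $\widehat{\mathrm{AUC}}_\varphi$.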
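The nonparametric AUC for tripartite rules can be sketched in a Mann-Whitney-type form, obtained by integrating the empirical ROC step function against Ĝ0. This form is our assumption and may differ in detail (for example, in the treatment of ties) from the estimator derived in the appendix. The helper names are hypothetical, and Ĥφ(u) is taken to be −∞ when every score up to u can be VL tested.

```python
from bisect import bisect_right


def h_phi(sorted_scores, u, phi):
    """Smallest lower cut-off w with G_hat(u) - G_hat(w) <= phi."""
    n = len(sorted_scores)
    budget = int(phi * n + 1e-9)
    upto_u = bisect_right(sorted_scores, u)   # n * G_hat(u)
    need = upto_u - budget                    # require n * G_hat(w) >= need
    if need <= 0:
        return float("-inf")                  # all scores up to u are tested
    return sorted_scores[need - 1]            # the need-th order statistic


def tripartite_auc(scores, status, phi):
    """Proportion of (failure, non-failure) pairs with S1 > H_phi(S0)."""
    s_all = sorted(scores)
    failing = [s for s, z in zip(scores, status) if z == 1]
    non_failing = [s for s, z in zip(scores, status) if z == 0]
    total = 0
    for s0 in non_failing:
        lower = h_phi(s_all, s0, phi)
        total += sum(1 for s1 in failing if s1 > lower)
    return total / (len(failing) * len(non_failing))
```

With φ = 0 the lower cut-off equals the upper one, so the function returns the conventional Mann-Whitney estimate of Pr(S1 > S0); increasing φ can only raise the value, reaching 1 when φ = 1.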

4.3 Using ROC curve for Rule Selection

An ROC curve for tripartite rules also can be used for optimal rule selection, recognizing that the diagnostic properties of each rule in the decision space 𝒟φ are characterized by a point on the curve. For example, if the ROC curve is smooth and concave, it can be verified that the min-λ rule corresponds to the point on the ROC curve where the slope of the tangent is equal to (1−λ)(1−p)/(λp) (Metz 1978). Broader discussions on using ROC curves for optimal rule selection can be found in Zhou et al. (2002) and Pepe (2003).

5. SIMULATION STUDIES

In this section, we conduct simulation studies to examine 1) the diagnostic accuracy of the optimal rules estimated by the nonparametric and semiparametric approaches, and 2) the large-sample properties of the estimated optimal rules. For the first aim, we consider two scenarios when the exponential tilt assumption is and is not satisfied. For the second aim, we focus on the setting where the exponential tilt assumption holds. For simplicity, we consider estimating the optimal rules that minimize TMR.

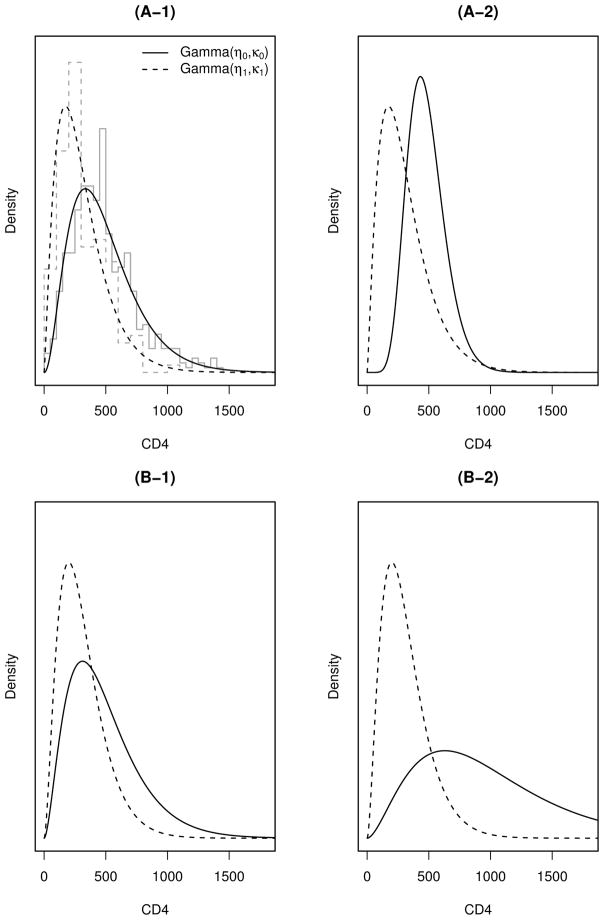

We use the negative value of CD4 count as a risk score. We first simulate viral status Z assuming that Z ~ Bernoulli(p), and then conditional on Z, simulate (CD4 | Z = z) = ⌈W⌉ with W ~ Gamma(ηz, κz), where ⌈·⌉ denotes the ceiling operation, and ηz and κz are the shape and scale parameters of the gamma distribution.
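The data-generating mechanism can be sketched with the Python standard library. The shape and scale values in the usage note are placeholders chosen only for illustration (they are not the fitted values used in our simulations), and the function name is hypothetical.

```python
import math
import random


def simulate_cd4(n, p, shape, scale, seed=0):
    """Simulate n pairs (S, Z) with S = -CD4 and CD4 = ceil(Gamma draw).

    shape, scale: dicts mapping viral status z (0 or 1) to the gamma
    shape (eta_z) and scale (kappa_z) parameters.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        z = 1 if rng.random() < p else 0              # Z ~ Bernoulli(p)
        w = rng.gammavariate(shape[z], scale[z])      # W ~ Gamma(shape, scale)
        cd4 = math.ceil(w)                            # CD4 count is an integer
        data.append((-cd4, z))                        # risk score is -CD4
    return data
```

For instance, `simulate_cd4(5000, 0.25, {0: 2.8, 1: 2.8}, {0: 350.0, 1: 100.0})` draws one dataset with a common shape parameter; the scale of 100 for failing patients is an arbitrary placeholder.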

Scenario (A) considers the case when the exponential tilt assumption does not hold. We conduct two simulations by simulating CD4 count data from gamma distributions with parameters,

| (A-1) |

| (A-2) |

The parameter values of (A-1) are chosen as the maximum likelihood estimates (MLEs) obtained by fitting gamma distributions to the Miriam Immunology Clinic data (which will be analyzed in Section 6). For (A-2), we choose the same values of (η1, κ1) as in (A-1), but set (η0, κ0) such that the exponential tilt assumption is further violated while keeping η0κ0 unchanged, i.e., the average CD4 count for patients without treatment failure stays the same as in (A-1). (Recall that the mean of a gamma distribution is ηκ.)

Scenario (B) considers the case when the exponential tilt assumption holds. We choose a common shape parameter η0 = η1 = 2.8, the mid-point of η0 and η1 in (A-1), and conduct two simulations with parameters

| (B-1) |

| (B-2) |

The values of κ0 and κ1 in (B-1) are the MLEs obtained by fitting gamma distributions to the Miriam Immunology Clinic data while fixing their shape parameters at 2.8. For (B-2), we choose a large value of κ0 = 350 to simulate a case in which the two gamma distributions are further separated. The gamma densities of the four simulations are shown in Figure 2.

Figure 2.

Gamma distributions used for simulating CD4 count data. The gray step lines in the top-left subplot are histograms of the CD4 data from the Miriam Hospital Immunology Clinic. The smooth dashed (solid) lines are gamma densities for those with (without) treatment failure.

5.1 Diagnostic Accuracy

For the first aim, we consider three prevalences of treatment failure, p = (.15, .25, .40), and assume that VL testing is available at proportions φ = (0, 20, 40)% of patient visits. For each parameter combination, we simulate 1000 datasets each having 5000 observations. The first 2500 observations of each dataset are used as the training data to develop an optimal rule, and the remaining 2500 observations as the testing data to calculate its associated misclassification rate. Results are shown in Table 1.

Table 1. Simulation results. For each condition, the estimated lower and upper cut-off values are averages over 1000 simulations, and converted to the original scale of CD4 count. The numbers in parentheses are standard errors.

| Scenario | p | φ | True lower | True upper | True TMR | Nonparametric lower | Nonparametric upper | Nonparametric TMR | Semiparametric lower | Semiparametric upper | Semiparametric TMR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| (A-1) | .15 | 0 | 65 | 65 | .15 | 70 (20) | 70 (20) | .148 | 0 (1) | 0 (1) | .150 |
| | | .2 | 0 | 230 | .09 | 29 (14) | 231 (5) | .086 | 0 (0) | 230 (5) | .086 |
| | | .4 | 0 | 348 | .05 | 25 (13) | 349 (6) | .051 | 0 (0) | 348 (6) | .051 |
| | .25 | 0 | 125 | 125 | .24 | 128 (23) | 128 (23) | .237 | 79 (25) | 79 (25) | .240 |
| | | .2 | 66 | 225 | .15 | 71 (21) | 228 (9) | .152 | 2 (8) | 214 (5) | .153 |
| | | .4 | 39 | 333 | .09 | 47 (20) | 336 (7) | .092 | 0 (0) | 329 (6) | .092 |
| | .4 | 0 | 239 | 239 | .32 | 240 (28) | 240 (28) | .327 | 268 (13) | 268 (13) | .327 |
| | | .2 | 177 | 288 | .23 | 192 (22) | 302 (22) | .233 | 212 (13) | 323 (14) | .234 |
| | | .4 | 149 | 378 | .15 | 148 (22) | 377 (22) | .153 | 153 (14) | 382 (14) | .152 |
| (A-2) | .15 | 0 | 130 | 130 | .14 | 132 (16) | 132 (16) | .135 | 35 (23) | 35 (23) | .147 |
| | | .2 | 72 | 268 | .07 | 71 (20) | 268 (6) | .075 | 0 (0) | 262 (5) | .075 |
| | | .4 | 57 | 373 | .04 | 59 (21) | 374 (6) | .045 | 0 (0) | 370 (5) | .045 |
| | .25 | 0 | 176 | 176 | .21 | 178 (17) | 178 (17) | .209 | 148 (17) | 148 (17) | .212 |
| | | .2 | 122 | 270 | .13 | 121 (17) | 269 (9) | .131 | 51 (28) | 247 (7) | .135 |
| | | .4 | 98 | 368 | .08 | 96 (18) | 368 (8) | .080 | 1 (3) | 351 (5) | .083 |
| | .4 | 0 | 249 | 249 | .29 | 251 (20) | 251 (20) | .291 | 290 (10) | 290 (10) | .295 |
| | | .2 | 200 | 312 | .20 | 203 (17) | 314 (15) | .201 | 236 (11) | 345 (11) | .204 |
| | | .4 | 167 | 394 | .13 | 169 (18) | 396 (15) | .130 | 177 (11) | 403 (10) | .130 |
| (B-1) | .15 | 0 | 0 | 0 | .15 | 27 (15) | 27 (15) | .150 | 0 (0) | 0 (0) | .150 |
| | | .2 | 0 | 220 | .10 | 20 (9) | 221 (5) | .095 | 0 (0) | 221 (5) | .095 |
| | | .4 | 0 | 338 | .05 | 18 (8) | 338 (6) | .056 | 0 (0) | 338 (6) | .055 |
| | .25 | 0 | 45 | 45 | .25 | 69 (34) | 69 (34) | .251 | 45 (25) | 45 (25) | .250 |
| | | .2 | 0 | 209 | .17 | 33 (19) | 211 (6) | .166 | 0 (2) | 209 (5) | .165 |
| | | .4 | 0 | 322 | .10 | 26 (14) | 323 (6) | .100 | 0 (0) | 322 (6) | .099 |
| | .4 | 0 | 259 | 259 | .35 | 257 (34) | 257 (34) | .355 | 259 (15) | 259 (15) | .353 |
| | | .2 | 215 | 321 | .26 | 206 (28) | 312 (29) | .259 | 207 (15) | 312 (15) | .258 |
| | | .4 | 159 | 379 | .17 | 148 (31) | 370 (30) | .171 | 149 (16) | 369 (15) | .170 |
| (B-2) | .15 | 0 | 241 | 241 | .13 | 241 (31) | 241 (31) | .127 | 242 (13) | 242 (13) | .126 |
| | | .2 | 99 | 383 | .05 | 103 (37) | 391 (19) | .047 | 97 (20) | 386 (11) | .047 |
| | | .4 | 0 | 619 | .01 | 38 (19) | 622 (14) | .011 | 0 (0) | 620 (13) | .010 |
| | .25 | 0 | 344 | 344 | .16 | 345 (30) | 345 (30) | .165 | 345 (10) | 345 (10) | .164 |
| | | .2 | 234 | 452 | .08 | 237 (22) | 455 (25) | .082 | 236 (10) | 454 (11) | .081 |
| | | .4 | 112 | 577 | .03 | 111 (33) | 581 (26) | .028 | 111 (13) | 578 (11) | .028 |
| | .4 | 0 | 457 | 457 | .18 | 458 (30) | 458 (30) | .184 | 458 (9) | 458 (9) | .183 |
| | | .2 | 344 | 564 | .10 | 347 (20) | 567 (30) | .100 | 347 (8) | 568 (12) | .100 |
| | | .4 | 237 | 671 | .05 | 240 (19) | 677 (38) | .047 | 239 (8) | 676 (13) | .046 |

When the prevalence of treatment failure is low (e.g., p ≤ .25) and VL test availability φ is high, the semiparametric approach may yield a negative estimate of the lower cut-off value on CD4 count, particularly when the center of the optimal cut-off interval is close to zero. When this occurs, we replace the negative cut-off values by zero. This adjustment does not imply that the algorithm fails. It can be verified that the upper cut-off estimate is still correct, and the optimal rule in this case is to assign VL test to those high-risk patients with CD4 count less than the upper cut-off value.

Table 1 shows that the nonparametric approach yields the correct estimates of the optimal cut-off values for both Scenarios (A) and (B), and the resulting TMRs are close to the underlying truth. When the exponential tilt assumption does not hold, as in Scenario (A), the optimal rules estimated by the semiparametric approach are slightly biased (in contrast with Scenario (B)). However, when the exponential tilt assumption holds, as in Scenario (B), the semiparametric approach yields the correct estimates of the optimal cut-off values, and the estimated cut-off values have much smaller standard errors than their corresponding nonparametric estimates.

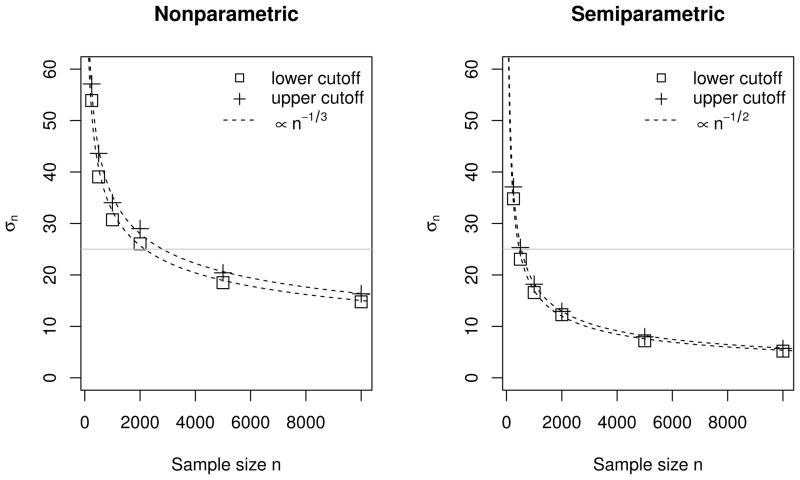

5.2 Convergence Rate and Efficiency

The second aim of our simulation studies is to examine the relative efficiency of the nonparametric and semiparametric approaches. For this aim, we consider only the parameter setup of (B-2) with p = .25 and φ = .20, but simulate the training data with increasing sample sizes of n = (250, 500, 1000, 2000, 5000, 10000). For each sample size, we simulate 1000 training datasets, and for each dataset, we estimate the optimal rules both nonparametrically and semiparametrically.

With a slight abuse of notation, we use σn² to denote the variances of both estimated upper and lower cut-off values. Then, assuming that σn ∝ n^{−w} (a sufficient condition for σn = O(n^{−w}) as n → ∞), we use simulations to approximate w for the two estimation approaches. Specifically, we compute σn based on the 1000 estimated optimal cut-off values for each sample size n. We then regress log(σn) on (−log n) using a simple linear model with slope w. By least-squares estimation, we find that w ≈ 0.33 when the optimal cut-off values are estimated nonparametrically, and w ≈ 0.50 when they are estimated semiparametrically. The results are shown in Figure 3.

Figure 3.

Large-sample convergence properties of estimated optimal cut-off boundaries. Horizontal lines are added to indicate the sample sizes needed to achieve σn = 25.

The simulations suggest that in this specific case, the nonparametric estimates of the optimal cut-off values converge approximately at a rate of O(n^{−1/3}), and the semiparametric estimates converge at a faster rate of about O(n^{−1/2}). The relative efficiency between the two estimation approaches is approximately O(n^{1/6}) when n is large.
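The rate estimate described above amounts to a straight-line fit on the log scale. The following is a minimal Python sketch, assuming hypothetical values of σn generated exactly as c·n^{−1/3}; the constant 360 and the function name `estimate_rate` are illustrative and are not taken from the simulations.

```python
import numpy as np

def estimate_rate(ns, sigmas):
    """Least-squares fit of log(sigma_n) = a + w * (-log n); returns (w, a)."""
    x = -np.log(np.asarray(ns, dtype=float))
    y = np.log(np.asarray(sigmas, dtype=float))
    w, a = np.polyfit(x, y, 1)  # polyfit returns [slope, intercept] for degree 1
    return w, a

# Hypothetical illustration (not the paper's simulated values):
# standard deviations that decay exactly like c * n**(-1/3).
ns = np.array([250, 500, 1000, 2000, 5000, 10000])
sigmas = 360.0 * ns ** (-1.0 / 3.0)

w_hat, a_hat = estimate_rate(ns, sigmas)
print(f"estimated w = {w_hat:.3f}, estimated c = {np.exp(a_hat):.1f}")
```

In an actual study, `sigmas` would hold the empirical standard deviations of the estimated cut-off values across replicated training datasets at each sample size.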

5.3 Simulation-Based Study Design

The results above also suggest that a study for tripartite rule development can be designed based on simulations. For example, suppose that the same assumptions as in Section 5.2 are made, and we would like to design a study to determine an optimal tripartite rule such that the 95% confidence intervals of both upper and lower cut-offs have widths of no more than 100 CD4 (i.e., σn ≤ 25). Then referring to the gray horizontal lines in Figure 3, a study with a sample size of about 3000 subjects is needed if the nonparametric approach is used to estimate the optimal rule, or a sample size of about 500 if the exponential tilt assumption holds and the semiparametric approach is used.
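The sample-size reading from Figure 3 can be written out as a short calculation. It assumes σn ≈ c·n^{−w}, where the constant c would have to be read off the fitted line (it is not reported in the text), and a normal-approximation 95% confidence interval:

```latex
2 \times 1.96\,\sigma_n \le 100
\;\Longrightarrow\;
\sigma_n \le 25.5 \approx 25,
\qquad
c\,n^{-w} \le 25
\;\Longleftrightarrow\;
n \ge \left(\frac{c}{25}\right)^{1/w}.
```

Under this approximation, halving the target σn multiplies the required sample size by 2^{1/w}, that is, by about 8 when w ≈ 1/3 and by about 4 when w ≈ 1/2.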

6. APPLICATION

6.1 Data from the Miriam Hospital Immunology Clinic

For illustration, we analyze data from the Miriam Immunology Clinic in Providence, RI, USA, the largest HIV clinic in the state (Gillani 2009). We recognize the essential differences between HIV-infected patients in the US and those in RLS. The main reason we use a US dataset to demonstrate the development of clinical rules is that this database contains high-quality CD4 and VL data that were routinely and frequently collected.

We use data from the most recent clinic visits of 597 patients who meet the following criteria: have taken ART for at least 6 months; have CD4 count, CD4% and VL measure available at the most recent clinic visit; and have CD4 count and CD4% available 6 months (with a window of 6 ± 1 mo) prior to that visit. We calculate the 6-month changes in CD4 count and in CD4%, where [6-month change] is defined as ([current] − [6-mo ago])/[6-mo ago]. Total time on ART, while a potentially important predictor (Kantor et al. 2009), is not available for all patients and therefore not used in formulating risk scores.

Table 2 provides summary statistics for key clinical and immunological markers in the data. For uniformity, viral failure is defined as having VL above 400 copies/mL (some of the VL test assays used have lower detection limits of < 400 copies/mL). Among the 597 patients, 146 have viral failure; so the estimated prevalence of viral failure is p̂ = 146/597 = .25.

Table 2.

Summary statistics for key variables (n = 597)

| Marker | Mean | Median | IQR | Range |
|---|---|---|---|---|
| Virological marker | | | | |
| VL at most recent visit (copies/mL) | 11.8K | 75 | (75, 400) | (12, > 500K) |
| Immunological markers | | | | |
| CD4 count at most recent visit (cells/μL) | 442 | 407 | (254, 576) | (8, 1412) |
| 6-month CD4 count change (%) | 7.3 | 18 | (−13, 33) | (−80, 736) |
| CD4% at most recent visit | 24 | 23 | (17, 30) | (.90, 59) |
| 6-month CD4% change (%) | 9.5 | 4.7 | (−6.1, 16) | (−74, 209) |

K: thousand; IQR: Interquartile range.

6.2 Risk Scores

Two risk scores are considered for developing diagnostic rules. The first risk score is S1 = −CD4, the negative of the most recent CD4 count (to be consistent with the notion that greater values of S correlate with increased risk of viral failure). The second risk score is a prediction-based composite score derived from a logistic regression of treatment failure on four immunological markers as follows,

where CD4 and CD4% refer to their measures at current visit. The MLEs (SEs) of the coefficients are β̂0 = .89 (.27), β̂1 = −.0021 (.00074), β̂2 = −.049 (.017), β̂3 = −.055 (.21), and β̂4 = −1.40 (.46). A Hosmer-Lemeshow test gives a p-value of .28, indicating no evidence of lack of fit. The distribution of S2 has a median .21, ranges from .01 to .87, and can be interpreted as the predicted probability of treatment failure.

The risk score S1 is easier to implement in clinical practice but known to have a high error rate for diagnosing viral failure. By incorporating more clinical information, S2 is potentially more accurate, but its use in clinical settings is not as straightforward as S1.

6.3 Two Simple Rules

Before calculating tripartite rules based on criteria laid out in Section 3, we summarize operating characteristics of two simple diagnostic rules that are similar in spirit to those commonly used in RLS when VL test has limited or no availability.

The first rule assumes that no VL testing is available (i.e., φ = 0) and uses CD4 < 200 as the hard cut-off for diagnosing treatment failure and CD4 ≥ 200 as non-failing, a criterion recommended by the WHO for the RLS (WHO 2010b). (Another criterion recommended by the WHO for the RLS is using CD4 = 350 as the cut-off threshold.)

The second rule assumes that the limited VL testing will be used only as a confirmative test for patients with CD4 < 200. This rule classifies those with CD4 count ≥ 200 as non-failure, and makes correct diagnoses for patients with CD4 count < 200. In the Miriam Immunology Clinic data, about 15% of patients have current CD4 count less than 200, so we consider the case that VL testing is available at 15% of patient visits, i.e., φ = .15.

The diagnostic accuracies of these two rules are summarized in Table 3. Both rules have an FNR of around .70. The second rule, by having 15% of patients tested for VL, reduces the FPR to 0 and the TMR from .26 to .18. The improvement realized by making VL testing available to a small fraction of patients is evident; however, whether the second rule is optimal requires further investigation.

Table 3.

Diagnostic accuracies of the two simple empirical rules.

| φ | CD4 range: test positive | CD4 range: request VL test | CD4 range: test negative | FPR | FNR | TMR |
|---|---|---|---|---|---|---|
| 0 | 0 – 200 | – | ≥ 200 | .10 | .70 | .26 |
| .15 | – | 0 – 200 | ≥ 200 | 0 | .70 | .18 |
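The error rates reported for such rules can be computed directly from CD4 counts and VL-confirmed failure status. The sketch below is illustrative only: the function name, the toy data, and the boundary convention (CD4 < lower is diagnosed as failure, lower ≤ CD4 < upper receives a VL test and is therefore diagnosed correctly, CD4 ≥ upper is diagnosed as non-failure) are assumptions, not taken from the clinic data.

```python
import numpy as np

def tripartite_error_rates(cd4, failure, lower, upper):
    """FPR, FNR, TMR and fraction VL-tested for a CD4-based tripartite rule."""
    cd4 = np.asarray(cd4, dtype=float)
    failure = np.asarray(failure, dtype=bool)
    test_pos = cd4 < lower                       # diagnosed as failure without VL
    vl_tested = (cd4 >= lower) & (cd4 < upper)   # VL test gives the correct diagnosis
    test_neg = cd4 >= upper                      # diagnosed as non-failure without VL
    return {
        "fpr": float(np.mean(test_pos[~failure])),   # P(diagnosed failure | no failure)
        "fnr": float(np.mean(test_neg[failure])),    # P(diagnosed non-failure | failure)
        "tmr": float(np.mean((test_pos & ~failure) | (test_neg & failure))),
        "vl_fraction": float(np.mean(vl_tested)),
    }

# Toy data (hypothetical): 8 patients, 4 with viral failure.
cd4 = np.array([50, 150, 150, 250, 300, 400, 500, 600])
failure = np.array([1, 1, 0, 1, 0, 0, 1, 0])

rule1 = tripartite_error_rates(cd4, failure, lower=200, upper=200)  # no VL testing
rule2 = tripartite_error_rates(cd4, failure, lower=0, upper=200)    # VL test if CD4 < 200
print(rule1)
print(rule2)
```

With failure prevalence p, the total misclassification rate computed this way satisfies TMR = p·FNR + (1 − p)·FPR, which is a convenient consistency check on tabulated values.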

6.4 Analysis I: CD4-Based Min-λ Rules

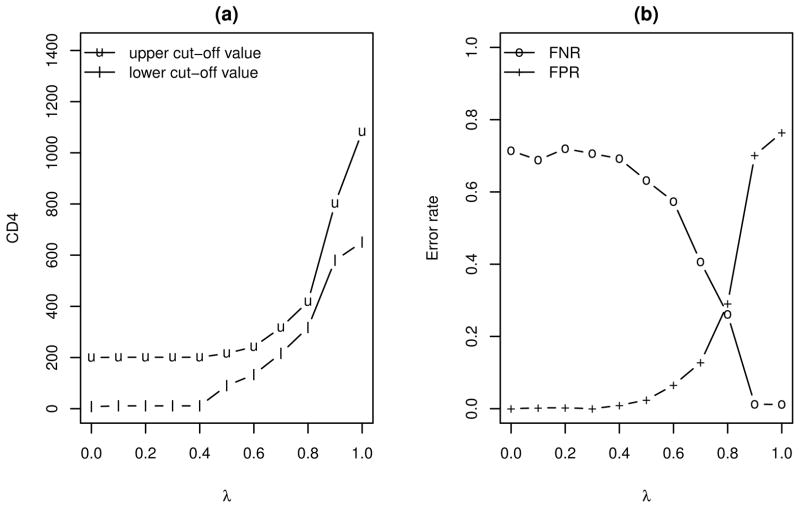

In this section, we evaluate the diagnostic performance of optimal tripartite rules based on S1, using R3(·) as the risk criterion. To make a direct comparison to the simple rules in the last section, we assume that VL testing is available at 15% of patient visits. The optimal tripartite rules are developed using the nonparametric approach as described in Section 3.

Figure 4 shows the estimated optimal rules and associated FNR and FPR, for λ varying from 0 to 1. The FNR and FPR are computed using 10-fold cross validations, carried out as follows. We randomly subdivide the data into 10 subsets of about equal size; determine the FPR and FNR for each subset using the optimal rule developed using the remaining 9 subsets; and then calculate the FPR and FNR as the averages over the 10 pieces (cf. Hastie et al. 2001).
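The cross-validation procedure can be sketched in Python as follows. This is a simplified illustration, not the authors' implementation: `fit_rule` is a hypothetical brute-force search that slides a VL-testing window of size ⌊φn⌋ over the sorted scores, the weighted empirical risk λ·P(false negative) + (1 − λ)·P(false positive) is assumed as a stand-in for R3(·) as defined in Section 3, scores are assumed to have no ties, and the data are synthetic.

```python
import numpy as np

def fit_rule(score, z, phi, lam):
    """Return (lower, upper): score < lower -> non-failure, score >= upper -> failure,
    otherwise request a VL test. Higher scores mean higher risk."""
    order = np.argsort(score)
    s, zs = score[order], z[order].astype(float)
    n = len(s)
    m = int(np.floor(phi * n))                              # VL tests available
    cum_fail = np.concatenate(([0.0], np.cumsum(zs)))       # failures among i lowest scores
    cum_ok = np.concatenate(([0.0], np.cumsum(1.0 - zs)))   # non-failures among i lowest
    starts = np.arange(n - m + 1)               # tested window = sorted positions [i, i+m)
    false_neg = cum_fail[starts]                # failures below the window
    false_pos = cum_ok[n] - cum_ok[starts + m]  # non-failures above the window
    risk = (lam * false_neg + (1.0 - lam) * false_pos) / n
    i = int(np.argmin(risk))
    lower = s[i] if i < n else np.inf
    upper = s[i + m] if i + m < n else np.inf
    return float(lower), float(upper)

def error_rates(score, z, lower, upper):
    """FPR and FNR of a fitted rule; assumes both classes are present."""
    z = z.astype(bool)
    fpr = np.mean(score[~z] >= upper)
    fnr = np.mean(score[z] < lower)
    return fpr, fnr

def cross_validate(score, z, phi, lam, k=10, seed=0):
    """k-fold CV: fit on k-1 folds, evaluate on the held-out fold, then average."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(score)), k)
    rates = []
    for held_out in folds:
        train = np.setdiff1d(np.arange(len(score)), held_out)
        lower, upper = fit_rule(score[train], z[train], phi, lam)
        rates.append(error_rates(score[held_out], z[held_out], lower, upper))
    fpr, fnr = np.mean(rates, axis=0)
    return float(fpr), float(fnr)

# Synthetic example (hypothetical data): 25% failures, higher score = riskier.
rng = np.random.default_rng(1)
z = (rng.random(600) < 0.25).astype(int)
score = rng.normal(loc=1.0 * z, scale=1.0)
cv_fpr, cv_fnr = cross_validate(score, z, phi=0.15, lam=0.5)
print(f"cross-validated FPR = {cv_fpr:.2f}, FNR = {cv_fnr:.2f}")
```

The search always uses the full testing budget because, under the assumed risk, testing more patients can never increase the empirical risk. A production version would need explicit handling of tied scores and of folds that happen to contain only one class.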

Figure 4.

The optimal min-λ rules based on S1 and associated FPR and FNR.

As shown in Figure 4, when λ increases (i.e., placing higher priority on avoiding false negative diagnoses), the estimated optimal rule shifts gradually toward triaging the VL tests to those with CD4 count in the middle and high range. At λ = .8, the estimated optimal rule calls for testing patients having CD4 count between 300 and 450; in this case, both FNR and FPR are around .30. At the extreme when λ = 1, the estimated optimal rule calls for VL tests on those with CD4 > 650, which reduces the FNR to ≈ 0 but increases FPR to ≈ .80.

The left panel of Figure 4 shows that when λ < .4, the estimated optimal rule is to obtain VL when 17 < CD4 < 201. That is, when avoidance of false positive diagnosis is prioritized, the simple rule using VL testing as a confirmative test is optimal and a reasonable choice.

6.5 Analysis II: Comparing S1- and S2-Based Rules that Minimize the Weighted Risk R3

Next, we compare the diagnostic accuracy of single-marker tripartite rules based on S1 to multiple-marker rules based on S2, using R3(·) as the risk criterion. We consider three values of λ = (.25, .50, .75) and three constraints on VL test availability φ = (0, .15, .30). Nonparametric estimates of the optimal rules, along with FPR, FNR and TMR obtained from cross-validation, are given in Table 4. Standard errors for all table entries are computed using the bootstrap method with 500 re-samples.

Table 4.

Comparison of the S1- and S2-based tripartite rules. The optimal cut-off points based on S1 are transformed back to the original scale of CD4 count. The numbers in parentheses are standard errors.

| Risk score | λ | φ | Lower cut-off | Upper cut-off | FNR | FPR | R3 | TMR |
|---|---|---|---|---|---|---|---|---|
| S1 | .25 | .00 | 17 (17) | 17 (17) | .98 (.02) | .00 (.00) | .06 (.01) | .24 (.02) |
| | | .15 | 17 (9) | 201 (18) | .72 (.04) | .00 (.00) | .04 (.00) | .18 (.01) |
| | | .30 | 17 (13) | 284 (13) | .46 (.04) | .00 (.00) | .03 (.00) | .12 (.01) |
| | .50 | .00 | 120 (64) | 120 (64) | .93 (.12) | .03 (.04) | .12 (.01) | .26 (.02) |
| | | .15 | 90 (16) | 216 (39) | .63 (.06) | .02 (.01) | .08 (.01) | .17 (.01) |
| | | .30 | 17 (15) | 284 (32) | .45 (.04) | .01 (.01) | .05 (.01) | .12 (.01) |
| | .75 | .00 | 302 (45) | 302 (45) | .43 (.06) | .26 (.06) | .13 (.01) | .30 (.04) |
| | | .15 | 216 (51) | 317 (50) | .40 (.07) | .13 (.06) | .10 (.01) | .20 (.04) |
| | | .30 | 226 (67) | 417 (81) | .30 (.07) | .14 (.06) | .08 (.01) | .18 (.04) |
| S2 | .25 | .00 | .64 (.04) | .64 (.04) | .91 (.04) | .01 (.00) | .06 (.00) | .23 (.01) |
| | | .15 | .39 (.01) | .75 (.04) | .62 (.04) | .00 (.00) | .04 (.00) | .16 (.01) |
| | | .30 | .29 (.01) | .71 (.04) | .42 (.04) | .00 (.00) | .03 (.00) | .11 (.01) |
| | .50 | .00 | .53 (.07) | .53 (.07) | .79 (.08) | .04 (.02) | .11 (.01) | .23 (.01) |
| | | .15 | .37 (.01) | .66 (.06) | .60 (.05) | .01 (.01) | .07 (.01) | .16 (.01) |
| | | .30 | .28 (.01) | .67 (.06) | .43 (.04) | .01 (.01) | .05 (.01) | .11 (.01) |
| | .75 | .00 | .26 (.04) | .26 (.04) | .35 (.08) | .30 (.08) | .12 (.01) | .31 (.04) |
| | | .15 | .26 (.04) | .34 (.05) | .32 (.08) | .21 (.07) | .09 (.01) | .24 (.03) |
| | | .30 | .19 (.03) | .34 (.08) | .25 (.06) | .13 (.05) | .07 (.01) | .16 (.03) |
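Bootstrap standard errors of the kind reported in parentheses can be obtained by re-estimating a quantity on data sets resampled with replacement. The following generic Python sketch assumes a hypothetical `statistic` callable; for the table entries it would return the estimated cut-off values or error rates for a resampled data set, whereas the example uses a sample mean purely for illustration.

```python
import numpy as np

def bootstrap_se(data, statistic, n_boot=500, seed=0):
    """Standard deviation of `statistic` over n_boot resamples drawn with replacement."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data)
    n = len(data)
    reps = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)   # row indices sampled with replacement
        reps.append(statistic(data[idx]))
    return np.std(reps, axis=0, ddof=1)

# Illustration with synthetic data: the bootstrap SE of a sample mean
# should be close to the usual s / sqrt(n).
rng = np.random.default_rng(2)
x = rng.normal(size=400)
se_boot = bootstrap_se(x, np.mean)
se_formula = x.std(ddof=1) / np.sqrt(len(x))
print(f"bootstrap SE = {se_boot:.4f}, analytic SE = {se_formula:.4f}")
```

When the data are rows of (score, failure status) pairs, resampling whole rows keeps each patient's markers and outcome together.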

In summary, the optimal rules based on S2 have a slightly better diagnostic performance than the optimal rules on S1. However, the magnitude of improvement by incorporating more non-VL markers is small, relative to the improvement that can be achieved by the selective use of VL testing on more patients. In Section 6.7, the diagnostic accuracies of the rules based on the two risk scores will be further compared using AUCs.

6.6 Analysis III: Optimal Rules under Exponential Tilt Assumption

In this section, we develop the optimal tripartite rules under the exponential tilt assumption. We consider two risk scores for rule development: S1 and a score based on log10(CD4). The reason for using the log-transformed score is that it avoids the need for an empirical adjustment when one cut-off value falls beyond the support of the risk score (as we encountered in our simulation studies). The log-transformed score may also be more suitable for the exponential tilt model.

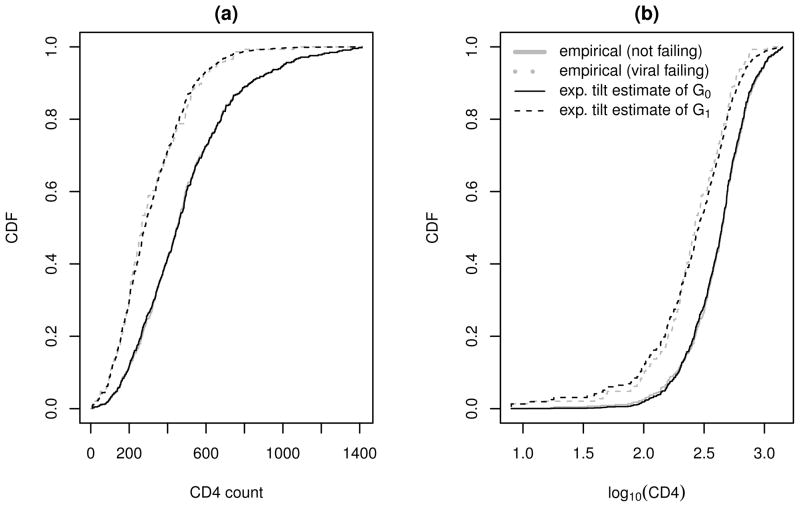

We first examine the suitability of the exponential tilt model for S1 and the log10(CD4)-based score by plotting the semiparametric estimates of G0 and G1 against their empirical estimates. The results are shown in Figure 5, where the semiparametric estimates of G0 and G1 are obtained using the results in Appendix A.1. Figure 5 suggests that the exponential tilt assumption is reasonable for both scores, although the goodness of fit for S1 is slightly better. (One can also use Q-Q plots, not shown, to examine the model goodness of fit.)

Figure 5.

Empirical and semiparametric estimates of the cumulative distribution functions of CD4 counts and log10(CD4).

The estimated optimal rules using TMR as the risk criterion are given in Table 5. The intervals for triaging VL assays are centered at CD4 = 77 and 109 for the optimal rules based on S1 and the log10(CD4)-based score, respectively. Overall, the diagnostic accuracies of the two sets of estimated optimal rules are comparable, and their estimated cut-off values differ only slightly relative to their standard errors. The optimal rules in Table 5 are also comparable to the optimal rules that are developed nonparametrically (see Table 4 with λ = .50), but in general have much smaller standard errors.

Table 5.

The optimal min-TMR rules under the exponential tilt assumption. Cut-off points were transformed back to the original scale of CD4 count. The numbers in parentheses are standard errors calculated using the bootstrap method.

| Risk score | φ | Lower cut-off | Upper cut-off | FNR | FPR | TMR |
|---|---|---|---|---|---|---|
| S1 | 0 | 77 (46) | 77 (46) | .94 (.06) | .01 (.02) | .24 (.02) |
| | .15 | 0 (29) | 199 (10) | .69 (.05) | .00 (.01) | .17 (.02) |
| | .30 | 0 (10) | 278 (11) | .47 (.04) | .00 (.00) | .11 (.01) |
| log10(CD4)-based | 0 | 109 (23) | 109 (23) | .89 (.05) | .03 (.01) | .24 (.02) |
| | .15 | 57 (22) | 206 (10) | .67 (.04) | .01 (.01) | .18 (.02) |
| | .30 | 41 (17) | 287 (12) | .46 (.04) | .01 (.00) | .12 (.01) |

6.7 Analysis IV: ROC Analyses for Tripartite Rules

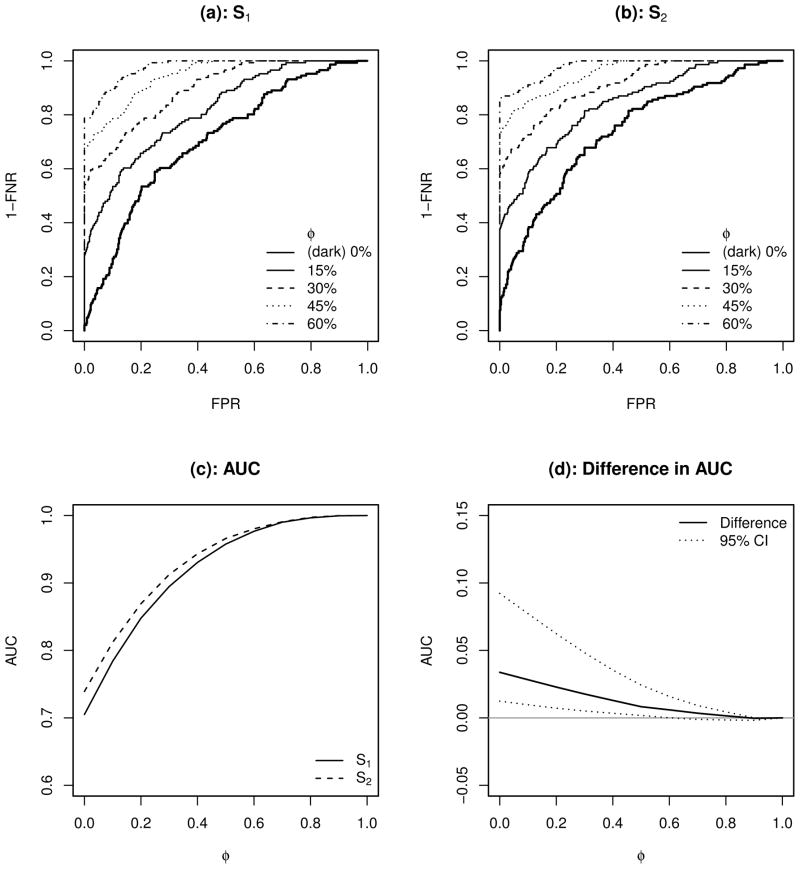

Figure 6 shows nonparametric estimates of ROC curves for tripartite rules based on S1 and S2, when the VL tests are available at φ = 0, 15, 30, 45, and 60% of patient visits. The ROC curves in the subplot (b) are slightly better than those in the subplot (a), which suggests that improvement in diagnostic capacity can be achieved using the composite score S2. See also the AUC curves and their difference in the subplots (c) and (d). Consistent with our findings in Analysis II (Section 6.5), the difference between the two AUC curves, although statistically significant for φ < .6, is marginal.

Figure 6.

ROC curves for diagnostic rules using S1 and S2 (subplots (a) and (b)); the resulting AUC curves as functions of φ (subplot (c)); and the difference of the two AUC curves (S2 “minus” S1, subplot (d)). The point-wise 95% CI of the difference in AUC is calculated using the bootstrap method with 500 re-samples.

Relative to not having VL tests available, the AUCs for tripartite rules based on both risk scores are substantially improved as VL testing is made available for some clinic visits. For example, as shown in subplot (c), when we increase the VL test availability from 0 to 20%, the absolute improvement in AUC is about 15%; and increasing availability to 40% improves AUC by more than 20%. In particular, the relative improvement from making VL testing accessible to some HIV patients is more pronounced when VL testing availability is low.

7. DISCUSSION

This paper is motivated by recent evidence that the CD4-based WHO guidelines for monitoring HIV treatment in RLS can lead to high treatment failure misclassification rates, and by the fact that VL tests are becoming available to programs and patients in RLS, typically on a limited basis. To make optimal use of VL tests, we propose a tripartite diagnostic rule based on a risk score that subdivides patients into a high-risk group (classified as treatment failure), a low-risk group (classified as virally suppressed), and an intermediate-risk group to whom the limited VL tests are assigned, where the size of the third group is constrained by the availability of VL tests. Nonparametric and semiparametric methods are proposed for determining an optimal rule that minimizes a given risk criterion. An ROC analysis procedure for characterizing the diagnostic performance of tripartite rules is developed, together with its associated asymptotic properties.

Our proposed method is demonstrated by analyzing data from the Miriam Hospital Immunology Clinic in Providence, RI. We show that with selective and targeted use of VL tests, the rate of misdiagnosis can be substantially reduced even when VL testing is available at a small portion of patient visits (e.g., φ = 15%). Our analysis also suggests that when avoidance of false positive diagnoses is prioritized, using VL testing strictly to confirm viral failure for those deemed to be at high risk is a reasonable choice. This finding applies only to patients at the Miriam Hospital Immunology Clinic; its external validity remains to be tested.

Our methods assume that the functional form of the risk score S(X) is known, although this assumption may be relaxed to allow unknown parameters. When the functional form of S(X) is unknown, methods of machine/statistical learning, such as boosting (Freund et al. 1999), targeted/super learner (Sinisi et al. 2007; van der Laan 2011), classification tree learning (Breiman et al. 1984), neural networks (Hagan et al. 1996; Sarle 1994), and prediction-based classification methods (Foulkes and De Gruttola 2002), can be implemented. We refer readers to Hastie et al. (2001) and Kotsiantis (2007) for a more comprehensive treatment of the topic.

We assume that there is no measurement error in VL, i.e. that VL test is the gold standard for determining the amount of circulating virus. In developed countries, repeated VL tests and HIV genotyping are usually required to confirm treatment failure and existence of drug resistance once the VL becomes detectable. HIV-infected patients in RLS however do not have such luxury, and a single VL test result (if done) is probably the most direct measure of viral failure, and is used for clinical decision making. So although the assumption is not ideal, it is reasonable in this context because the measured VL is the best available basis for decision making in RLS. Future work will address the issue of measurement error in VL and its effect on misclassification rates.

CD4 counts are known to be highly variable due to measurement error, diurnal variations, and other factors. The measurement error of CD4 count may be part of the reason for the high misclassification rates of the WHO guidelines. The impact of measurement error in biomarkers on predicting binary outcomes has been studied by Carroll et al. (1984, 2006), Buzas et al. (2003), and Fuller (2009), among others. Generally speaking, larger measurement errors in a biomarker are associated with greater attenuation of its capability to predict outcomes. One way to reduce the impact of measurement errors is through repeated measurements. Given that point-of-care CD4 technologies are being developed, it may become possible in practice to quantify and reduce the impact of CD4 measurement error by multiple testing at a single visit. On the other hand, with additional information such as prior history of CD4 counts, it may also be possible to evaluate the magnitude of measurement error by constructing appropriate measurement error models (which typically rely on certain subjective assumptions) and applying methods such as regression calibration (Carroll and Stefanski 1990; Rosner et al. 1990) and simulation-extrapolation (Stefanski and Cook 1995). Improving diagnostic accuracy by reducing the impact of CD4 measurement error is an area worthy of further investigation.

A final limitation of this paper, as it applies to developing rules for RLS, is that a US data set is used to demonstrate our proposed methods. Our ongoing work is focused on developing and calibrating rules based on data from sub-Saharan Africa and other RLS.

Acknowledgments

This research is funded by a 2009 developmental grant from the Lifespan/Tufts/Brown Center for AIDS Research (CFAR). The project described is supported by Grant Number P30AI042853 from the National Institute of Allergy and Infectious Diseases (NIAID). Work by Dr. Kantor is also supported by a grant (Number R01AI66922) from the National Institutes of Health (NIH). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIAID or NIH. The authors are grateful for the helpful comments from reviewers, the associate editor, and the editor. The authors also thank Ms. Allison K. DeLong for discussions and comments on early versions of the manuscript.

APPENDIX A

A.1 Semiparametric estimates of G0, G1, and G under the exponential tilt assumption

Suppose that we want to estimate the mixture distribution G using an i.i.d. sample of {(Si, Zi) : i = 1, …, n}. In the spirit of nonparametric likelihood estimation, we consider only the distributions with jumps at {Si}. Thus the (profile) likelihood for G0 can be written as (see Qin 1999)

where , β̂0 and β̂1 are the MLEs from the logistic regression (6), and θi = g0(Si) denotes the mass at the observed Si with Σi θi = 1. Here we proceed as if we have n distinct values in {Si}, which does not affect the following results. Applying the Lagrange multiplier, one can show that the likelihood is maximized at

where ν is the Lagrange multiplier solving

We then estimate G0, G1, and G semiparametrically by

Because the exponential tilt assumption places no constraint on the marginal distribution G, it can be verified that the semiparametric estimate G̃(s) is equal to the empirical estimate Ĝ(s).
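The property stated above can be checked numerically. The sketch below is not the authors' implementation: it uses the standard empirical-likelihood result for the two-sample exponential tilt (density ratio) model, written in terms of fitted logistic probabilities π̂i, under which the estimated mass of G0 at Si is (1 − π̂i)/n0 and that of G1 is π̂i/n1. This form is an assumption here and may differ in notation from the expressions in this appendix; the function names and the synthetic data are hypothetical.

```python
import numpy as np

def fit_logistic(s, z, n_iter=50, tol=1e-10):
    """Newton-Raphson MLE for logit P(Z = 1 | S = s) = b0 + b1 * s."""
    X = np.column_stack([np.ones_like(s), s])
    beta = np.zeros(2)
    for _ in range(n_iter):
        pi = 1.0 / (1.0 + np.exp(-(X @ beta)))
        grad = X.T @ (z - pi)                           # score vector
        hess = X.T @ (X * (pi * (1.0 - pi))[:, None])   # observed information
        step = np.linalg.solve(hess, grad)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta

def tilt_masses(s, z):
    """Estimated point masses of G0 and G1 at each observed score."""
    s = np.asarray(s, dtype=float)
    z = np.asarray(z, dtype=float)
    n1 = z.sum()
    n0 = len(s) - n1
    b0, b1 = fit_logistic(s, z)
    pi = 1.0 / (1.0 + np.exp(-(b0 + b1 * s)))
    return (1.0 - pi) / n0, pi / n1

# Synthetic scores: two normal distributions with a mean shift, for which
# the exponential tilt assumption holds.
rng = np.random.default_rng(3)
n = 500
z = (rng.random(n) < 0.25).astype(float)
s = rng.normal(loc=z, scale=1.0)

w0, w1 = tilt_masses(s, z)
n1 = z.sum()
n0 = n - n1
mixture = (n0 * w0 + n1 * w1) / n   # implied mass of the mixture G at each S_i
print(w0.sum(), w1.sum(), np.allclose(mixture, 1.0 / n))
```

Each set of masses sums to one because the logistic score equations force Σ π̂i = n1, and the implied mixture places mass 1/n on every observation, so the mixture estimate coincides with the empirical distribution function.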

A.2 Properties of AUCφ for tripartite rules

Property A.2.1

Let S ∼ G1, S′ ∼ G0, and S and S′ be independent. Then,

| (A.1) |

and

| (A.2) |

Proof

We have

where is added for ties and the term vanishes for continuous S. Further,

Property A.2.2

If S is stochastically greater than S′, then AUCφ is bounded by

The lower bound is achieved when G1 = G0, and the upper bound when φ = 1.

Proof

We prove the results for the case when S is continuous such that there exist l and u with G(u) − G(l) = φ. Manipulating this constraint slightly, we have 1 − G(l) = 1 − G(u) + φ ⇒ 1 − {pG1(Hφ(u)) + (1 − p)G0(Hφ(u))} = 1 − {pG1(u) + (1 − p)G0(u)} + φ. The condition that S is stochastically greater than S′ implies that

Therefore,

All equalities hold when G0 = G1.

A.3 Asymptotic properties of estimated ROC curve and AUC

The nonparametric estimate Ĉφ given by (9) has the following properties:

Property A.3.1

The nonparametric estimate Ĉφ is uniformly consistent.

Proof

Let us write

Then, it can be shown that the first term converges to zero almost surely by the Glivenko-Cantelli Theorem, and the second and third terms converge to zero almost surely by the Law of Large Numbers. See Hsieh and Turnbull (1996).

Property A.3.2

Suppose that the densities g0, g1 and g are continuous and bounded, and ΣZi/n → p as n → ∞. Then, the following approximation holds asymptotically as n → ∞,

where B1(v) and B2(v) are independent Brownian bridges, , and .

The strategy for proving A.3.2 is similar to Hsieh and Turnbull (1996).

Property A.3.3

The nonparametric estimate given by (10) has the property that, as n → ∞,

| (A.3) |

where σ2 = VarG1 {G0 ∘ Hφ (S)}p−1 + VarG0 {G1 ∘ Hφ (S)}(1 − p)−1

Proof

We prove (A.3) using the properties of U-statistics (Lee 1990). Applying the Hájek projection principle on (10) (van der Vaart 1998), we express as

where

is a U-statistic. Then conditional on Σi Zi, an ancillary statistic for the AUC, (A.3) is an immediate result of applying Slutsky’s lemma and the Central Limit theorem.

Contributor Information

Tao Liu, Email: tliu@stat.brown.edu, Assistant Professor, Department of Biostatistics, Center for Statistical Sciences, Brown University School of Public Health, Providence, RI 02912

Joseph W. Hogan, Professor, Department of Biostatistics, Center for Statistical Sciences, Brown University School of Public Health, Providence, RI 02912

Lisa Wang, Graduate Student, Department of Biostatistics, Center for Statistical Sciences, Brown University School of Public Health, Providence, RI 02912

Shangxuan Zhang, Statistical Programmer, Memorial Sloan-Kettering Cancer Center, New York City, NY 10016

Rami Kantor, Associate Professor of Medicine, Division of Infectious Diseases, the Alpert Medical School of Brown University, Providence, RI 02912

References

- Abouyannis M, Menten J, Kiragga A, Lynen L, Robertson G, Castelnuovo B, Manabe YC, Reynolds SJ, Roberts L. Development and validation of systems for rational use of viral load testing in adults receiving first-line ART in sub-Saharan Africa. AIDS. 2011;25:1627–1635. doi: 10.1097/QAD.0b013e328349a414.

- Anderson AM, Bartlett JA. Changing antiretroviral therapy in the setting of virologic relapse: review of the current literature. Current HIV/AIDS Reports. 2006;3:79–85. doi: 10.1007/s11904-006-0022-1.

- Anderson JA. Separate sample logistic discrimination. Biometrika. 1972;59:19–35.

- Anderson JA. Multivariate Logistic Compounds. Biometrika. 1979;66:17–26.

- Bagchi S, Kempf M, Westfall A, Maherya A, Willig J, Saag M. Can routine clinical markers be used longitudinally to monitor antiretroviral therapy success in resource-limited settings? Clinical Infectious Diseases. 2007;44:135–138. doi: 10.1086/510072.

- Baker S. Putting risk prediction in perspective: relative utility curves. Journal of the National Cancer Institute. 2009;101:1538–1542. doi: 10.1093/jnci/djp353.

- Berger JO. Statistical Decision Theory and Bayesian Analysis. 2nd ed. New York: Springer-Verlag; 1985.

- Bisson GP, Gross R, Bellamy S, Chittams J, Hislop M, Regensberg L, Frank I, Maartens G, Nachega JB. Pharmacy refill adherence compared with CD4 count changes for monitoring HIV-infected adults on antiretroviral therapy. PLoS Medicine. 2008;5:e109. doi: 10.1371/journal.pmed.0050109.

- Bisson GP, Gross R, Strom JB, Rollins C, Bellamy S, Weinstein R, Friedman H, Dickinson D, Frank I, Strom BL, Gaolathe T, Ndwapi N. Diagnostic accuracy of CD4 cell count increase for virologic response after initiating highly active antiretroviral therapy. AIDS. 2006;20:1613–1619. doi: 10.1097/01.aids.0000238407.00874.dc.

- Breiman L, Friedman J, Stone C, Olshen R. Classification and regression trees. Chapman & Hall/CRC; 1984.

- Buzas J, Tosteson T, Stefanski L. Measurement error. Institute of Statistics Mimeo Series, No. 2544. 2003;4:1–92.

- Calmy A, Ford N, Hirschel B, Reynolds SJ, Lynen L, Goemaere E, de la Vega FG, Perrin L, Rodriguez W. HIV viral load monitoring in resource-limited regions: optional or necessary? Clinical Infectious Diseases. 2007;44:128–134. doi: 10.1086/510073.

- Carroll R, Ruppert D, Stefanski L, Crainiceanu C. Measurement error in nonlinear models: a modern perspective. Vol. 105. Chapman & Hall/CRC; 2006.

- Carroll R, Stefanski L. Approximate quasi-likelihood estimation in models with surrogate predictors. Journal of the American Statistical Association. 1990;85:652–663.

- Carroll RJ, Spiegelman CH, Lan KKG, Bailey KT, Abbott RD. On errors-in-variables for binary regression models. Biometrika. 1984;71:19–25.

- Castelnuovo B, Kiragga A, Schaefer P, Kambugu A, Manabe Y. High rate of misclassification of treatment failure based on WHO immunological criteria in resource limited settings in Uganda. AIDS. 2009;23:1295–1296. doi: 10.1097/QAD.0b013e32832cbd43.

- Deeks SG, Barbour JD, Grant RM, Martin JN. Duration and predictors of CD4 T-cell gains in patients who continue combination therapy despite detectable plasma viremia. AIDS. 2002;16:201–207. doi: 10.1097/00002030-200201250-00009.

- Deeks SG, Barbour JD, Martin JN, Swanson MS, Grant RM. Sustained CD4+ T cell response after virologic failure of protease inhibitor-based regimens in patients with human immunodeficiency virus infection. Journal of Infectious Diseases. 2000;181:946–953. doi: 10.1086/315334.

- Efron B. Nonparametric standard errors and confidence intervals. Canadian Journal of Statistics. 1981;9:139–158.

- Fiscus S, Cheng B, Growe S, Dementer L, Jennings C, Miller V, et al. HIV-1 viral load assays for resource limited settings. PLoS Medicine. 2006;3:e417. doi: 10.1371/journal.pmed.0030417.

- Foulkes AS, Azzoni L, Li X, Johnson M, Smith C, Mounzer K, Montaner L. Prediction based classification for longitudinal biomarkers. The Annals of Applied Statistics. 2010;4:1476–1497. doi: 10.1214/10-AOAS326.

- Foulkes AS, De Gruttola V. Characterizing the Relationship between HIV-1 Genotype and Phenotype: Prediction-Based Classification. Biometrics. 2002;58:145–156. doi: 10.1111/j.0006-341x.2002.00145.x.

- Freund Y, Schapire R, Abe N. A short introduction to boosting. Journal-Japanese Society For Artificial Intelligence. 1999;14:1612.

- Fuller W. Measurement error models. Vol. 305. Wiley; 2009.

- Gardner E, Burman W, Steiner JF, AP, Bangsberg D. Antiretroviral medication adherence and the development of class-specific antiretroviral resistance. AIDS. 2009;23:1035–1046. doi: 10.1097/QAD.0b013e32832ba8ec.

- Gatsonis C. Receiver Operating Characteristic Analysis for the Evaluation of Diagnosis and Prediction. Radiology. 2009;253:593–596. doi: 10.1148/radiol.2533091633.

- Gillani FS. The Miriam Hospital Immunology Center Database (ICDB) Annual Data Report. Tech. rep. 2009. http://www.rhodeislandhospital.org/cfar/ICDB-guidelines.htm.

- Greengrass V, Lohman B, Morris L, Plate M, Steele PM, Walson JL, Crowe SM. Assessment of the low-cost Cavidi ExaVir Load assay for monitoring HIV viral load in pediatric and adult patients. JAIDS. 2009;52:387–390. doi: 10.1097/QAI.0b013e3181b05f62.

- Hagan M, Demuth H, Beale M, et al. Neural network design. Stamford, CT: Thomson Learning; 1996.

- Hammer S, Saag M, Schechter M, Montaner J, Schooley R, Jacobsen D, et al. Treatment for adult HIV infection: 2006 recommendations of the International AIDS Society-USA panel. JAMA. 2006;296:827–843. doi: 10.1001/jama.296.7.827.

- Hastie T, Tibshirani R, Friedman JH. The Elements of StatisticalLearning: Data Mining, Inference, and Prediction. Springer-Verlag Inc; 2001. [Google Scholar]

- Hsieh F, Turnbull BW. Nonparametric and Semiparametric Estimation of the Receiver Operating Characteristic Curve. The Annals of Statistics. 1996;24:25–40. [Google Scholar]

- Huang Y, Pepe M, Feng Z. Evaluating the predictiveness of a continuous marker. Biometrics. 2007;63:1181–1188. doi: 10.1111/j.1541-0420.2007.00814.x.

- Joska J, Westgarth-Taylor J, Hoare J, Thomas K, Paul R, Myer L, Stein D. Validity of the International HIV Dementia Scale in South Africa. AIDS Patient Care STDS. 2011;25(2):95–101. doi: 10.1089/apc.2010.0292.

- Justice A, McGinnis K, Skanderson M, Chang C, Gibert C, Goetz M, Rimland D, Rodriguez-Barradas M, Oursler K, Brown S, et al. Towards a combined prognostic index for survival in HIV infection: The role of ‘non-HIV’ biomarkers. HIV Medicine. 2010;11:143–151. doi: 10.1111/j.1468-1293.2009.00757.x.

- Kantor R, Diero L, DeLong A, Kamle L, Muyonga S, Mambo F, Walumbe E, Emonyi W, Chan P, Carter EJ, Hogan J, Buziba N. Misclassification of First-Line Antiretroviral Treatment Failure Based on Immunological Monitoring of HIV Infection in Resource-Limited Settings. Clinical Infectious Diseases. 2009;49:454–462. doi: 10.1086/600396.

- Keiser O, Macphail P, Boulle A, Wood R, Schechter M, Dabis F, et al. Accuracy of WHO CD4 cell count criteria for virological failure of antiretroviral therapy. Tropical Medicine and International Health. 2009;14:1220–1225. doi: 10.1111/j.1365-3156.2009.02338.x.

- Kenya. National AIDS and STD control Programme. 3. Kenya Ministry of Health; 2005. Guidelines to Antiretroviral Drug Therapy in Kenya.

- Kiragga AN, Castelnuovo B, Kamya MR, Moore R, Manabe YC. Regional differences in predictive accuracy of WHO immunologic failure criteria. AIDS. 2012;26:768–770. doi: 10.1097/QAD.0b013e32835143e3.

- Kotsiantis SB. Supervised Machine Learning: A Review of Classification Techniques. Informatica. 2007;31:249–268.

- Lee A. U-Statistics: Theory and Practice. Marcel Dekker; New York: 1990.

- Lynen L, An S, Koole O, Thai S, Ros S, De Munter P, et al. An algorithm to optimize viral load testing in HIV-positive patients with suspected first-line antiretroviral therapy failure in Cambodia. JAIDS. 2009;52:40–48. doi: 10.1097/QAI.0b013e3181af6705.

- Mabeya H, Khozaim K, Liu T, Orango O, Chumba D, Pisharodi L, Carter J, Cu-Uvin S. Comparison of Conventional Cervical Cytology Versus Visual Inspection With Acetic Acid Among Human Immunodeficiency Virus–Infected Women in Western Kenya. Journal of Lower Genital Tract Disease. 2012;16:92–97. doi: 10.1097/LGT.0b013e3182320f0c.

- Malawi. Treatment of AIDS, Guidelines for the use of Antiretroviral therapy in Malawi. 1. Malawi Ministry of Health; 2003.

- Mee P, Fielding K, Charalambous S, Churchyard G, ADG Evaluation of the WHO criteria for antiretroviral treatment failure among adults in South Africa. AIDS. 2008;22(15):1971–1977. doi: 10.1097/QAD.0b013e32830e4cd8.

- Metz CE. Basic principles of ROC analysis. Seminars in Nuclear Medicine. 1978;8:283–298. doi: 10.1016/s0001-2998(78)80014-2.

- Meya D, Spacek L, Tibenderana H, John L, Namugga I, Magero S, et al. Development and evaluation of a clinical algorithm to monitor patients on antiretrovirals in resource-limited settings using adherence, clinical and CD4 cell count criteria. Journal of the International AIDS Society. 2009;12:3. doi: 10.1186/1758-2652-12-3.

- Moore DM, Hogg RS, Yip B, Wood E, Tyndall M, Braitstein P, Montaner JS. Discordant immunologic and virologic responses to highly active antiretroviral therapy are associated with increased mortality and poor adherence to therapy. JAIDS. 2005;40:288–293. doi: 10.1097/01.qai.0000182847.38098.d1.

- Pahwa S, Read JS, Yin W, Matthews Y, Shearer W, Diaz C, Rich K, Mendez H, Thompson B, for the Women and Infants Transmission Study. CD4+/CD8+ T Cell Ratio for Diagnosis of HIV-1 Infection in Infants: Women and Infants Transmission Study. Pediatrics. 2008;122:331–339. doi: 10.1542/peds.2007-2308.

- Panel on Antiretroviral Guidelines for Adults and Adolescents. Guidelines for the use of antiretroviral agents in HIV-1-infected adults and adolescents. Department of Health and Human Services (DHHS), WHO; 2011.

- Parmigiani G. Wiley Series in Statistics in Practice. 2002. Modeling in Medical Decision Making: A Bayesian Approach.

- Pepe M. Problems with risk reclassification methods for evaluating prediction models. American Journal of Epidemiology. 2011;173:1327–1335. doi: 10.1093/aje/kwr013.

- Pepe M, Feng Z, Huang Y, Longton G, Prentice RL, Thompson I, et al. Integrating the predictiveness of a marker with its performance as a classifier. American Journal of Epidemiology. 2008;167:362–368. doi: 10.1093/aje/kwm305.

- Pepe MS. Receiver Operating Characteristic Methodology. Journal of the American Statistical Association. 2000;95:308–311.

- Pepe MS. The statistical evaluation of medical tests for classification and prediction. Oxford University Press; 2003.

- Pepe MS, Thompson ML. Combining diagnostic test results to increase accuracy. Biostatistics. 2000;1:123–140. doi: 10.1093/biostatistics/1.2.123.

- Prentice RL, Pyke R. Logistic disease incidence models and case-control studies. Biometrika. 1979;66:403–411.

- Qin J. Empirical Likelihood Ratio Based Confidence Intervals for Mixture Proportions. The Annals of Statistics. 1999;27:1368–1384.

- Republic Zambia. National Guidelines on Management and Care of Patients with HIV/AIDS. Republic Zambia Ministry of Health; 2004.

- Reynolds S, Nakigozi G, Newell K, Ndyanabo A, Galiwongo R, Boaz I, Quinn T, Gray R, Wawer M, Serwadda D. Failure of immunologic criteria to appropriately identify antiretroviral treatment failure in Uganda. AIDS. 2009;23(6):697–700. doi: 10.1097/QAD.0b013e3283262a78.

- Rosner B, Spiegelman D, Willett W. Correction of logistic regression relative risk estimates and confidence intervals for measurement error: the case of multiple covariates measured with error. American Journal of Epidemiology. 1990;132:734–745. doi: 10.1093/oxfordjournals.aje.a115715.

- Sarle W, editor. Proceedings of the Nineteenth Annual SAS Users Group International Conference. SAS Institute; 1994. Neural networks and statistical models; pp. 1538–1550.

- Schechter M, Tuboi SH. Discordant immunological and virologic responses to antiretroviral therapy. Journal of Antimicrobial Chemotherapy. 2006;58:506–510. doi: 10.1093/jac/dkl263.

- Schooley R. Viral load testing in resource-limited settings. Clinical Infectious Diseases. 2007;44:139–140. doi: 10.1086/510090.

- Sinisi SE, Polley EC, Petersen ML, Rhee S-Y, van der Laan MJ. Super learning: an application to the prediction of HIV-1 drug resistance. Statistical Applications in Genetics and Molecular Biology. 2007;6:7. doi: 10.2202/1544-6115.1240.

- Stefanski L, Cook J. Simulation-extrapolation: the measurement error jackknife. Journal of the American Statistical Association. 1995;90:1247–1256.

- Steyerberg E, Vickers A, Cook N, et al. Assessing the performance of prediction models: a framework for traditional and novel measures. Epidemiology. 2010;21:128–138. doi: 10.1097/EDE.0b013e3181c30fb2.

- Thompson MA, Aberg JA, Cahn P, Montaner JSG, Rizzardini G, Telenti A, Gatell JM, Günthard HF, Hammer SM, Hirsch MS, Jacobsen DM, Reiss P, Richman DD, Volberding PA, Yeni P, Schooley RT. Antiretroviral Treatment of Adult HIV Infection. JAMA. 2010;304:321–333. doi: 10.1001/jama.2010.1004.

- Tuboi SH, Brinkhof MW, Egger M, Stone RA, Braitstein P, Nash D, Sprinz E, Dabis F, Harrison LH, Schechter M. Discordant responses to potent antiretroviral therapy in previously naive HIV-1-infected adults initiating therapy in resource-constrained countries: The antiretroviral therapy in low-income countries (ART-LINC) collaboration. JAIDS. 2007;45:52–59. doi: 10.1097/QAI.0b013e318042e1c3.

- Uganda. National Antiretroviral Treatment and Care Guidelines for Adults and Children. 1. Uganda Ministry of Health; 2003.

- UNAIDS. 2009 AIDS Epidemic Update. World Health Organization (WHO); 2009. http://data.unaids.org/pub/Report/2009/2009_epidemic_update_en.pdf.

- UNAIDS. Report on the global AIDS epidemic. World Health Organization (WHO); 2010.

- van der Laan M. Targeted Learning: Causal Inference for Observational and Experimental Data. Springer; 2011.

- van der Vaart A. Asymptotic Statistics. Cambridge University Press; 1998.

- Vekemans M, John L, Colebunders R. When to switch for antiretroviral treatment failure in resource-limited settings? AIDS. 2007;21:1205–1206. doi: 10.1097/QAD.0b013e3281c617e8.

- Vickers A, Elkin E. Decision curve analysis: A novel method for evaluating prediction models. Medical Decision Making. 2006;26:565–574. doi: 10.1177/0272989X06295361.

- WHO. Antiretroviral therapy for HIV infection in adults and adolescents: 2010 Revision. World Health Organization; Geneva: 2010a.

- WHO. Towards universal access: Scaling up priority HIV/AIDS interventions in the health sector. World Health Organization; Geneva: 2010b.

- Zhou X-H, McClish DK, Obuchowski NA. Wiley Series in Probability and Statistics. 2002. Statistical Methods in Diagnostic Medicine.