Abstract

Background

Simulation is an effective method for teaching clinical skills but has not been widely adopted to educate trainees about how to teach.

Objective

We evaluated a curriculum for pediatrics fellows that used high-fidelity simulation (mannequin with vital signs) to improve pedagogical skills.

Intervention

The intervention included a lecture on adult learning and active-learning techniques, development of a case from the fellows' subspecialties, and teaching the case to residents and medical students. Teaching was observed by an educator using a standardized checklist. Learners evaluated fellows' teaching by using a structured evaluation tool; learner evaluations and the observer checklist formed the basis for written feedback. Changes in fellows' pedagogic knowledge, attitudes, and self-reported skills across baseline, immediate postintervention, and 6-month follow-up were analyzed by using the Friedman and Wilcoxon rank-sum tests.

Results

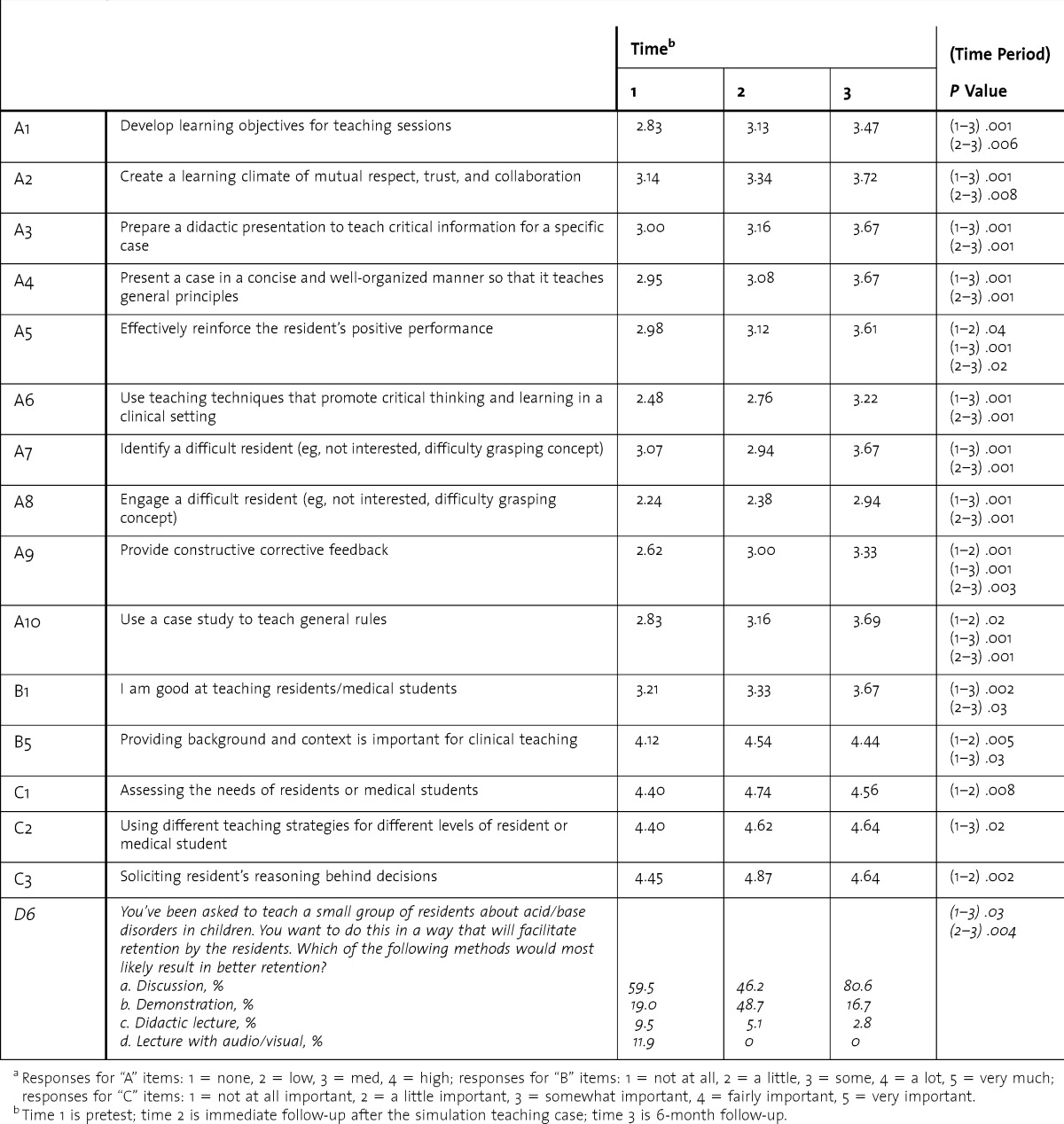

Forty fellows participated. Fellows' self-ratings significantly improved from baseline to 6-month follow-up for development of learning objectives, effectively reinforcing performance, using teaching techniques to promote critical thinking, providing constructive feedback, and using case studies to teach general rules. Fellows significantly increased agreement with the statement “providing background and context is important” (4.12 to 4.44, P = .02).

Conclusions

Simulation was an effective means of educating fellows about teaching, with fellows' attitudes and self-rated confidence improving after participation. The simulation identified common weaknesses of fellows as teachers, including failure to provide objectives to learners, failure to provide a summary of key learning points, and lack of inclusion of all learners.

What was known

Residents and fellows frequently teach junior learners despite often having received little education and preparation for this role.

What is new

A simulation, in which fellows' teaching abilities are assessed while teaching a case to junior learners, improved fellows' self-perceived teaching skills.

Limitations

Outcomes are limited to self-reported gains in skills. Multiple resources required to implement this curriculum may be a barrier to broad adoption.

Bottom line

The simulation identified common weaknesses of fellows as teachers, including failure to provide objectives to learners, failure to provide a summary of key learning points, and lack of inclusion of all learners.

Editor's Note: The online version of this article contains an education feedback checklist and fellow self-assessment questionnaire used in the study.

Introduction

Residents and fellows provide a significant amount of undergraduate clinical medical education. Yet, trainees have limited teaching experience and often no training. In an attempt to narrow this gap, resident-as-teacher programs are being incorporated into residency training.1 These programs are designed around adult-learning theories, which advocate self-directed learning and readily applicable information.2 Common elements among resident-as-teacher programs include discussion of basic teaching principles, resident-led teaching sessions observed by instructors and followed by a thorough debriefing, and success assessment through participant surveys.3–8 Inclusion of feedback strategies and leadership and communication skills helps ensure a safe learning environment.9 Objective, performance-based evaluations are recommended to provide summative feedback and guidance for residents as they continue to develop their pedagogic skills.1 The impact of resident-as-teacher programs is far reaching, with benefits for medical students and positive results in patient care as well.10

High-fidelity patient simulation (HPS) can enhance resident and fellow training, but this method has not been widely adapted to resident-as-teacher training programs.4 HPS exercises typically begin with an introduction followed by learner participation in a staged scenario and end with an instructor-led debriefing. This structured environment aids instructors by facilitating close observation and opportunities for “teachable moments.” HPS sessions can be video recorded, providing a valuable source of feedback and meaningful evaluation based on measurable criteria.8,11–14

We hypothesized that HPS would enhance a resident-as-teacher training program in our institution by allowing fellows to gain experience teaching residents and medical students while enabling close observation of the fellows leading sessions.

Methods

Structured Teaching Program

Our study included all pediatrics subspecialty fellows training at our institution between July 2009 and June 2011 whose fellowships were more than 1 year in duration. Fellows participated in a structured teaching program using HPS as a platform to conceptualize their standardized teaching encounter. Constructs and methods from social learning theory (SLT) were the basis for developing different modalities to systematically educate fellows about how to teach.15 This process encompassed several steps: (1) development of a standardized lecture emphasizing adult learning and teaching skills to be viewed by all fellows (SLT constructs: knowledge acquisition, vicarious learning, skills modeling); (2) development of guidelines for fellows to use in constructing a teaching case for simulation (SLT construct: outcome expectations); (3) providing an opportunity for the case to be simulated with the fellow in a teaching role for residents (SLT constructs: skills practice and self-efficacy); and (4) delivery of structured feedback by faculty (SLT constructs: feedback and reinforcement).15

The Institutional Review Board at the University of Alabama at Birmingham approved this study.

Standardized Lecture

We developed a standardized lecture, organized into 5 main pedagogic concepts: (1) Malcolm Knowles' adult learning theory2; (2) David Kolb's experiential learning theory16; (3) a video depicting simulation to illustrate the complexity achievable in simulation; (4) a video showing the 1-minute preceptor or 5-step “microskills” teaching technique19; and (5) material from the effective Bringing Education and Service Together program adapted for fellows.17,18 The lecture was delivered during a teaching conference and was video recorded so it would be available for fellows to view just before their teaching encounter. All fellows were required to review the video before their teaching experience unless they attended the lecture in the 6 months before the date of their teaching case.

Simulation Case Guidelines

Constructing and demonstrating a clinical case study provided an applied learning opportunity for fellows. A standardized form, including learning objectives, was developed by an instructional design expert along with 2 of the authors. This template allowed fellows to choose a case they felt was important for general pediatricians. The paper-based case was reviewed with the simulation staff and adapted into a simulation scenario. The fellow also developed a brief teaching points presentation directed toward the case's learning objectives.

Teaching Simulation Exercise

The simulation exercise allowed fellows to demonstrate their teaching skills. The main premise of the program was to guide fellows functioning as teachers through appropriate learner orientation techniques, giving feedback, and capturing bedside and clinical “teachable moments.”19 This process was piloted on 2 fellows and adjusted to optimize clarity and content before full implementation. Each fellow individually came to the simulation center with the residents/medical students assigned to their service for that month to enact their case. Learner group sizes ranged from 2 to 12 and included varying levels (eg, medical students, interns, residents, and occasionally junior fellows). The fellow watched the learners enact the case from a separate room by using a live audio/video feed. The case scenario was immediately followed by a debriefing/teaching session in which the fellow provided constructive corrective feedback, clarified confusing aspects of the case, and instructed the learners about the key teaching points. The case was recorded, allowing the video to be available for case discussion. The entire debriefing/teaching session was videotaped with copies given to the fellowship program director and to fellows for their learner portfolio.

Structured Feedback

An educational consultant and faculty member associated with the simulation center were present for each fellow teaching session. A structured, teaching behaviors–feedback checklist adapted with permission from a tool with some validity evidence by Morrison et al20 (provided as online supplemental material) was used to evaluate fellow teaching performance. This adapted instrument was reviewed by educational and statistical consultants as well as the original author of the tool for content validity. Immediately after the fellow's teaching session, the educational consultant and 1 of the 2 physician-authors met with fellows to review their reflections on the case and provide feedback based on the completed teaching behaviors checklist. This structured feedback served as an additional learning opportunity to review key concepts in adult learning, identify strengths and weaknesses in the fellow's teaching performance, and provide guidance for improving teaching skills. Along with the learners' feedback on the teaching performance, this teaching behaviors checklist was used to develop formal written feedback, which was provided to each fellow and his or her program director for inclusion in the fellow's learning portfolio. The same educational expert evaluated all of the teaching sessions.

Data Collection

Before listening to either the live or web-based teaching lecture, fellows completed a self-assessment of their current teaching knowledge, self-efficacy, and skills (provided as online supplemental material). Question content was developed by the evaluation team based on the objectives of the teaching lecture. Test items were reviewed by the educational consultant to ensure the questions would appropriately assess learning. The fellows completed this same teaching self-assessment immediately after their simulated case/teaching session and again 6 months later.

Statistical Analysis

To assess the impact of the teaching simulation, we examined response categories pairwise over time using categorical modeling for percentage agreement, disagreement, and change between time points. We used the Friedman test for change overall and the Wilcoxon rank-sum test for pairwise differences for each pair of the 3 time points. We grouped the fellows' clinical specialty into dichotomous groups of invasive and noninvasive specialties based on program director input. All tests were done by using SAS version 9.3 (SAS Institute Inc, Cary, NC).
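As an illustration of the overall test named above, the following is a minimal sketch of a tie-corrected Friedman test for 3 repeated measures, written in plain Python rather than SAS. It is not the authors' analysis code. The function names and the sample ratings are hypothetical, and the P value uses the chi-square approximation, whose survival function for 2 degrees of freedom is exactly exp(-x/2). The pairwise follow-up tests are not shown.

```python
import math


def average_ranks(values):
    """Rank values from 1..k, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j share this rank
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman_three_timepoints(rows):
    """Return (chi_square, p_value) for k = 3 repeated measures per participant.

    rows: one (baseline, immediate, six_month) tuple per participant.
    """
    n, k = len(rows), 3
    rank_sums = [0.0] * k
    tie_term = 0.0
    for row in rows:
        ranks = average_ranks(list(row))
        for j in range(k):
            rank_sums[j] += ranks[j]
        for value in set(row):
            t = row.count(value)
            tie_term += t * (t * t - 1)
    correction = 1.0 - tie_term / (n * k * (k * k - 1))
    if correction == 0:
        # Every participant gave identical ratings at all 3 times: no change.
        return 0.0, 1.0
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    q /= correction
    p_value = math.exp(-q / 2.0)  # chi-square survival function for df = 2
    return q, p_value


# Hypothetical 5-point self-ratings for one questionnaire item (not study data).
example_rows = [(3, 4, 4), (2, 4, 3), (3, 3, 4), (4, 5, 5), (3, 4, 5)]
chi_square, p = friedman_three_timepoints(example_rows)
print(f"Friedman chi-square = {chi_square:.2f}, P = {p:.3f}")
```

Likert-type items produce many tied ratings within a participant, which is why the sketch uses average ranks and the tie correction instead of the uncorrected statistic.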

The educational consultant reviewed all completed checklist forms and anecdotal notes to identify themes using a standard qualitative approach that was adapted for the checklist context. The first 2 stages of qualitative analysis proposed by Thomas and Harden21 were conducted. Stage 1 involved coding observer comments based on patterns of meaning within the checklist category context. Stage 2 consisted of establishing descriptive themes from these patterns. A constant comparative method was used to examine data across checklists at the same time and reviewed after coding to ensure no themes were overlooked.22 Finally, themes were prioritized by frequency of coding on the checklists.
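The final prioritization step described above amounts to tallying how often each theme was coded across checklists. A minimal sketch follows, assuming each coded observer comment has already been assigned a single theme label. The function name and the example labels are hypothetical and are not taken from the study's coding data.

```python
from collections import Counter


def prioritize_themes(coded_comments):
    """Return (theme, count) pairs ordered from most to least frequently coded.

    coded_comments: iterable of theme labels, one per coded observer comment.
    Themes with equal counts keep the order in which they first appeared.
    """
    return Counter(coded_comments).most_common()


# Hypothetical coded comments pooled across several checklists.
example_codes = [
    "no objectives stated",
    "no summary of key points",
    "no objectives stated",
    "not all learners included",
    "no objectives stated",
    "no summary of key points",
]
for theme, count in prioritize_themes(example_codes):
    print(f"{count:2d}  {theme}")
```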

Results

Forty fellows participated in the study. Approximately half (52%) were women and 80% were non-Hispanic White. Less than one-fourth of participants had prior teaching experience, and none had a teaching degree. Over time, fellows showed statistically significant improvements in all self-appraised teaching behaviors as well as some self-efficacy factors and 1 knowledge item (which improved and then declined to baseline level; table). There were no statistically significant learning differences between invasive and noninvasive groups except for 3 items: identification of a difficult learner who is not interested in content material or is having difficulty grasping a concept (3.51 versus 3.20, P = .005); use of a case study to teach general rules (2.29 versus 3.23, P = .02); and assessing the needs of the learner (4.65 versus 4.29, P = .002). All showed greater improvement in the invasive specialties. Learner feedback from residents and medical students was positive, especially focusing on the interactive and engaging teaching style of the sessions.

TABLE.

Fellows' Teaching Self-Assessment Significant Changes: Baseline to Immediate Follow-Up to 6-Month Follow-Up

Themes emerged for both strengths and weaknesses in performance. Strengths generated reinforcement, while weaknesses generated recommendations for teaching improvement. The themes that represent more than 75% of the coded comments are identified by italics. Weakness themes included, in prioritized order, failure to provide objectives to learners, failure to provide summary of key points, lack of inclusion of all learners, lack of elicitation of underlying reasoning, and lack of appropriate constructive corrective feedback. Strength themes, also prioritized, included provision of general principles, use of evidence, positive reinforcement, encouraging participation, and identification of learning deficits. While some coded statements appeared in both strength and weakness themes (well done and not well done), they are listed for the overall theme by which they were most represented.

Discussion

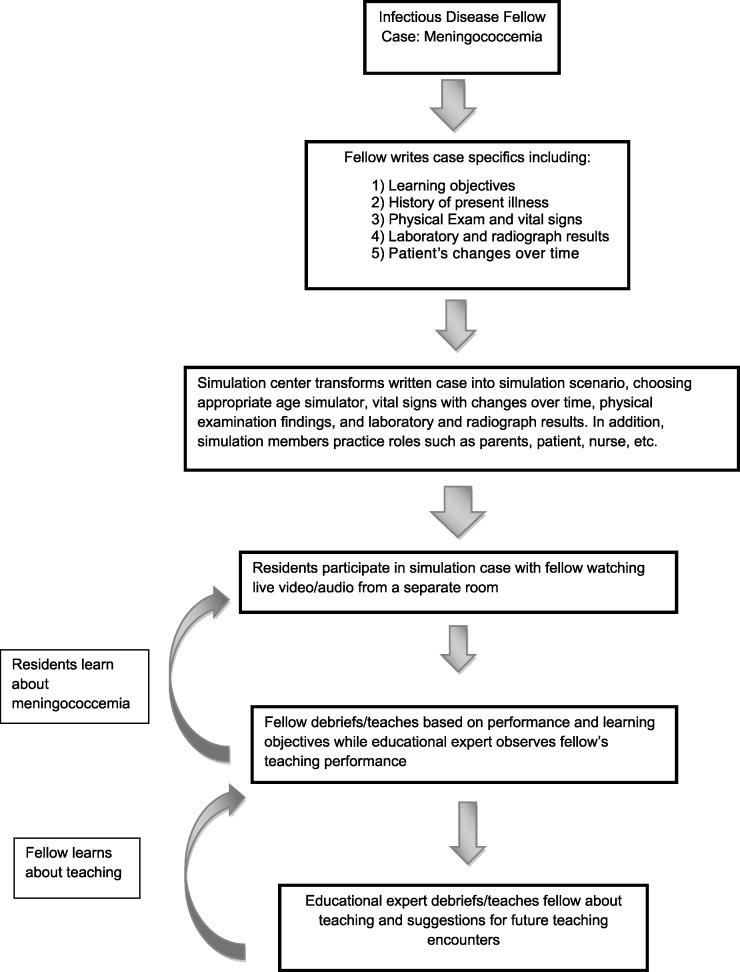

Fellows' attitudes and self-rated confidence improved significantly with this teaching program, and fellows continued to perceive improvements in their teaching skills at 6 months postintervention, indicating that our simulated teaching scenario was effective. Most existing resident-as-teacher programs use lectures and workshops as the primary means of teaching residents to teach, which deprives the resident of the opportunity to practice and apply pedagogic skills.23 Incorporating HPS into fellow teaching programs allows participants to have a more meaningful teaching experience by giving them the chance to apply and reinforce newly acquired skills and knowledge. Our study had similarities with that of Morrison and colleagues,18 which incorporated similar active learning techniques with multiple role-modeling sessions and assessed learners with an observed structured teaching encounter. Both active learning methods resulted in improvements in teaching skills. Neither study assessed whether participants used learned teaching techniques long term. Although offered, no fellow returned for the optional second simulated teaching encounter. It is unclear whether this was due to their belief that their skills were sufficient, as suggested by many of their self-assessment responses, or due to other clinical time demands. As in other aspects of simulation, specifically cardiopulmonary resuscitation skills, there may be value in repeated practice with guided structured feedback between teaching sessions.24 This is the focus of our ongoing evaluation of simulation as a tool to teach how to teach.

One unique aspect of this model is the complete cycle of learning that occurs. The figure reflects this cycle of learning. This structure allowed faculty time and cost to be better justified, as it was not just 1 fellow who was learning but everyone involved in the simulation case. This model appears to be effective for all pediatrics fellows and could be applied to other fellowships as well.

FIGURE.

Simulation Teaching Case Example

The teaching program was well accepted by medical students and fellows and feasible within the usual educational structures for a wide variety of pediatrics specialty fellowships. The intervention required considerable resources, which may reduce the ability of some settings to adopt or adapt this approach. There was a minimal 1-time cost to create the teaching video, produce copies of it, and place it on the university website. The total simulation faculty time for each fellow was approximately 3 hours. Preparation of the case, including laboratory results, radiograph results, and preparing simulation support staff to “perform” the case, took 90 minutes. Observation of the simulation case and debriefing took 1 hour, and 30 minutes was allotted to provide feedback to the fellow. The educational consultant spent 1½ to 2 hours per fellow observing their teaching, providing immediate feedback following the case, and preparing the formal written evaluation. Each fellow spent approximately 3 hours in the program. Forty minutes were required to review the video, at least 1 hour was needed to prepare the case, and approximately 90 minutes were needed to watch the simulation, participate in debriefing/teaching, and receive feedback.
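The per-fellow time figures above can be added up and scaled to the whole cohort. The sketch below does that arithmetic using only the durations stated in the text. The cohort totals are an extrapolation that assumes each of the 40 participating fellows required exactly the stated per-fellow time, which the study does not report directly. All names in the code are hypothetical.

```python
# Minutes per fellow, as stated in the text.
FACULTY_MINUTES = {
    "case preparation": 90,
    "observation and debriefing": 60,
    "feedback to fellow": 30,
}
FELLOW_MINUTES = {
    "review lecture video": 40,
    "prepare case (stated minimum)": 60,
    "simulation, debriefing, and feedback": 90,
}
CONSULTANT_HOURS_RANGE = (1.5, 2.0)  # educational consultant, per fellow
N_FELLOWS = 40  # participants reported in the Results


def total_hours(minutes_by_task):
    """Sum task durations given in minutes and convert to hours."""
    return sum(minutes_by_task.values()) / 60


def cohort_hours(per_fellow_hours, n_fellows=N_FELLOWS):
    """Scale a per-fellow time to the cohort (assumes identical time per fellow)."""
    return per_fellow_hours * n_fellows


faculty = total_hours(FACULTY_MINUTES)
fellow = total_hours(FELLOW_MINUTES)
low, high = (cohort_hours(h) for h in CONSULTANT_HOURS_RANGE)
print(f"Simulation faculty: {faculty:.1f} h per fellow, {cohort_hours(faculty):.0f} h for the cohort")
print(f"Each fellow: about {fellow:.1f} h")
print(f"Educational consultant: {low:.0f} to {high:.0f} h for the cohort")
```

The fellow's itemized minimum comes to slightly more than 3 hours, consistent with the text's "approximately 3 hours."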

Our study has several limitations, including its nonexperimental design and lack of a control group. Outcome data are largely limited to fellows' self-reported perceptions of improved skills and attitude about teaching. Finally, a single teaching/feedback session may not be enough practice for significant improvement to occur.

Conclusion

Fellows reported improved attitudes and self-rated confidence related to teaching after participating in a multilearner, multifaceted teaching skills simulation. The simulation identified common weaknesses of fellows as teachers, including failure to provide objectives to learners, failure to provide a summary of key learning points, and lack of inclusion of all learners.

Footnotes

Nancy M. Tofil, MD, MEd, is Medical Director, Pediatric Simulation Center, Children's of Alabama, and Associate Professor, Department of Pediatrics, University of Alabama at Birmingham School of Medicine; Dawn Taylor Peterson, PhD, is Program Director, Department of Pediatrics, University of Alabama at Birmingham School of Medicine, and Director of Simulation Education and Research, Pediatric Simulation Center, Children's of Alabama; Kathy F. Harrington, PhD, MPH, is Associate Professor, Department of Medicine, University of Alabama at Birmingham School of Medicine; Brian T. Perrin, MD, is Resident Physician, UT/St. Thomas Midtown Hospital, Nashville, TN; Tyler Hughes, MD, is Internal Medicine Resident Physician, University of Alabama at Birmingham Huntsville Regional Campus; J. Lynn Zinkan, MPH, BSN, RN, is Nurse Educator, Pediatric Simulation Center, Children's of Alabama; Amber Q. Youngblood, BSN, RN, is Nurse Educator, Pediatric Simulation Center, Children's of Alabama; Al Bartolucci, PhD, is Professor Emeritus, Department of Biostatistics, University of Alabama at Birmingham School of Public Health; and Marjorie Lee White, MD, MPPM, MEd, is Medical Co-Director, Pediatric Simulation Center, Children's of Alabama, and Associate Professor, Department of Pediatrics, University of Alabama at Birmingham School of Medicine.

Funding: This study was funded by Kaul Pediatric Institute, University of Alabama at Birmingham.

References

- 1. Yu T, Hill AG. Implementing an institution-wide resident-as-teacher program: successes and challenges. J Grad Med Educ. 2011;3(3):438–439. doi: 10.4300/JGME-03-03-29.

- 2. Knowles MS, Holton EF, Swanson RA. The Adult Learner. 5th ed. Woburn, MA: Butterworth-Heinemann Publications; 1998.

- 3. Pasquale SJ, Pugnaire MP. Preparing medical students to teach. Acad Med. 2002;77(11):1175–1176. doi: 10.1097/00001888-200211000-00046.

- 4. Farrell SE, Pacella C, Egan D, Hogan V, Wang E, Bhatia K, et al. Resident-as-teacher: a suggested curriculum for emergency medicine. Acad Emerg Med. 2006;13(6):677–679. doi: 10.1197/j.aem.2005.12.014.

- 5. Walton JM, Patel H. Residents as teachers in Canadian paediatric training programs: a survey of program director and resident perspectives. Paediatr Child Health. 2008;13(8):675–679. doi: 10.1093/pch/13.8.675.

- 6. Weissman MA, Bensinger L, Koestler JL. Resident as teacher: educating the educators. Mt Sinai J Med. 2006;73(8):1165–1169.

- 7. Lacasse M, Ratnapalan S. Teaching-skills training programs for family medicine residents: systematic review of formats, content, and effects of existing programs. Can Fam Physician. 2009;55(9):902–903.e1–e5.

- 8. Wamsley MA, Julian KA, Wipf JE. A literature review of “resident-as-teacher” curricula: do teaching courses make a difference? J Gen Intern Med. 2004;19(5, pt 2):574–581. doi: 10.1111/j.1525-1497.2004.30116.x.

- 9. Butani L, Paterniti DA, Tancredi DJ, Li ST. Attributes of residents as teachers and role models—a mixed methods study of stakeholders. Med Teach. 2013;35(4):1052–1059. doi: 10.3109/0142159X.2012.733457.

- 10. Snell L. The resident-as-teacher: it's more than just about student learning. J Grad Med Educ. 2011;3(3):440–441. doi: 10.4300/JGME-D-11-00148.1.

- 11. Mann KV, Sutton E, Frank B. Twelve tips for preparing residents as teachers. Med Teach. 2007;29(4):301–306. doi: 10.1080/01421590701477431.

- 12. Pinsky LE, Wipf JE. A picture is worth a thousand words: practical use of videotape in teaching. J Gen Intern Med. 2000;15(11):805–810. doi: 10.1046/j.1525-1497.2000.05129.x.

- 13. Decker S, Sportsman S, Puetz L, Billings L. The evolution of simulation and its contribution to competency. J Contin Educ Nurs. 2008;39(2):74–80. doi: 10.3928/00220124-20080201-06.

- 14. Tofil NM, Benner KW, Zinkan L, Alten J, Varisco BM, White ML. Pediatric intensive care simulation course: a new paradigm in teaching. J Grad Med Educ. 2011;3(1):81–87. doi: 10.4300/JGME-D-10-00070.1.

- 15. Bandura A. Social Learning Theory. Englewood Cliffs, NJ: Prentice Hall; 1977.

- 16. Kolb DA. Experiential Learning. Englewood Cliffs, NJ: Prentice Hall; 1984.

- 17. Morrison EH, Rucker L, Boker JR, Hollingshead J, Hitchcock MA, Prislin MD, et al. A pilot randomized, controlled trial of a longitudinal residents-as-teachers curriculum. Acad Med. 2003;78(7):722–729. doi: 10.1097/00001888-200307000-00016.

- 18. Morrison EH, Rucker L, Boker JR, Gabbert CC, Hubbell FA, Hitchcock MA, et al. The effect of a 13-hour curriculum to improve residents' teaching skills: a randomized trial. Ann Intern Med. 2004;141(4):257–263. doi: 10.7326/0003-4819-141-4-200408170-00005.

- 19. Neher JO, Gordon KC, Meyer B, Stevens N. A five-step “microskills” model of clinical teaching. J Am Board Fam Pract. 1992;5(4):419–424.

- 20. Morrison EH, Rucker L, Prislin MD, Castro CS. Lack of correlation of residents' academic performance and teaching skills. Am J Med. 2000;109(3):238–240. doi: 10.1016/s0002-9343(00)00473-3.

- 21. Thomas J, Harden A. Methods for the thematic synthesis of qualitative research in systematic reviews. BMC Med Res Methodol. 2008;8:45. doi: 10.1186/1471-2288-8-45.

- 22. Hewitt-Taylor J. Use of constant comparative analysis in qualitative research. Nurs Stand. 2001;15(42):39–42. doi: 10.7748/ns2001.07.15.42.39.c3052.

- 23. Fromme HB, Whicker SA, Paik S, Konopasek L, Koestler JL, Wood B, et al. Pediatric resident-as-teacher curricula: a national survey of existing programs and future needs. J Grad Med Educ. 2011;3(2):168–175. doi: 10.4300/JGME-D-10-00178.1.

- 24. Wayne DB, Butter J, Siddall VJ, Fudala MJ, Wade LD, Feinglass J, et al. Mastery learning of advanced cardiac life support skills by internal medicine residents using simulation technology and deliberate practice. J Gen Intern Med. 2006;21(3):251–256. doi: 10.1111/j.1525-1497.2006.00341.x.