Abstract

Background

Understanding quality improvement (QI) is an important skill for physicians, yet educational interventions focused on teaching QI to residents are relatively rare. Web-based training may be an effective teaching tool in time-limited and expertise-limited settings.

Intervention

We developed a web-based curriculum in QI and evaluated its effectiveness.

Methods

During the 2011–2012 academic year, we enrolled 53 first-year internal medicine residents in the online training. Residents were provided an average of 6 hours of protected time during a 1-month geriatrics rotation to complete 8 online QI modules in sequence. A pre-post design was used to measure changes in knowledge of QI principles and in self-assessed competence in the course objectives.

Results

Of the residents, 72% (37 of 51) completed all of the modules as well as the pretests and posttests. Among residents who completed all components of the course, mean knowledge scores measured immediately before and after the course improved from 6.1 to 8.5 out of a maximum score of 15 (P < .001), and mean self-assessed competence in QI principles improved from 1.7 to 2.7 on a 5-point scale (paired t test, P < .001).

Conclusions

In this study, web-based QI training was comparable to existing non–web-based curricula in improving learner confidence and knowledge of QI principles. Web-based training can be an efficient and effective mode of content delivery.

Editor's Note: The online version of this article contains the scoring rubric and validation evidence for the tool, the self-efficacy survey used in this study, and a table describing titles, objectives, and length of the developed online modules and mean scores of the 37 learners on the accompanying multiple-choice quizzes of the modules.

Introduction

Practice-based learning and improvement is a core competency,1–3 yet limited evidence exists for how best to train physicians to lead quality improvement (QI) initiatives.4 The literature indicates that QI curricula are generally well accepted, improve knowledge, and can lead to improvements in processes of care.5 Challenges to the widespread introduction of QI curricula include a shortage of faculty expertise, limited faculty and trainee time, and a lack of stakeholder buy-in.6–8 Web-based training may offer a solution by addressing learners' time constraints and expanding access to expertise.9 Although web-based training in medical education is promising,10 the effectiveness of such curricula in QI has not been widely studied.

We designed a web-based curriculum to teach QI principles to interns and assessed its feasibility and effectiveness.

Methods

Curriculum Description

We used Ogrinc and Headrick's Fundamentals of Health Care Improvement: A Guide to Improving Your Patients' Care,11 with permission from the publisher, to develop 8 web-based interactive modules. Interns in internal medicine, internal medicine-pediatrics, and internal medicine-psychiatry programs at Duke University Medical Center were enrolled in the course during the 2011–2012 academic year. Learners were provided an average of 6 hours of protected time during their 1-month geriatrics rotation to complete the modules and the accompanying pretests and posttests. Completion of the modules was tracked. E-mails were sent at the midpoint and end of the rotation to remind learners to complete the curriculum.

The study protocol was reviewed by Duke University's Institutional Review Board, which granted exemption.

Evaluation

A pre-post design was used to measure changes in QI knowledge and skills. To measure self-efficacy, we created a survey based on the objectives of the modules and rated on a 5-point Likert scale (0 = no confidence at all to 4 = completely confident). Before viewing the modules, learners completed the self-efficacy survey and the Quality Improvement Knowledge Application Tool (QIKAT) pretest. The QIKAT has previously been validated in trainees and has been shown to discriminate between learners with and without practice-based learning and improvement training.12 The tool presents 3 scenarios; for each, learners must state an aim, measures, and an intervention for a QI initiative. Responses are scored from 0 to 5 for each scenario, for a maximum total score of 15 (the scoring rubric is provided as online supplemental material).13 Because the format is open-ended, learners must generate answers spontaneously, which avoids cueing and allows assessment of problem-solving ability.

Learners then completed the modules in sequential order without bypassing any parts. Each module was immediately followed by a multiple-choice quiz. After finishing all 8 modules, learners completed the same self-efficacy survey again and the QIKAT posttest.

Using the first 30 QIKAT scenarios, 2 authors (M.Y. and G.T.B.) calibrated their scoring until they reached a weighted kappa of 0.90, which is considered excellent agreement.14 The same 2 authors then divided the remaining QIKAT scenarios between them and scored them independently. Scorers were blinded to learner identity and to whether each QIKAT was a pretest or a posttest. Residents who completed the study were encouraged to give the investigators feedback on the curriculum in open-text fields.
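To make the interrater agreement statistic concrete, the sketch below computes Cohen's weighted kappa for two raters who score the same items on an ordinal scale, such as the 0 to 5 QIKAT scenario scores. The article does not state which weighting scheme was used, so the sketch assumes linear disagreement weights and offers quadratic weights as an option. The function name `weighted_kappa` and the example scores are hypothetical and are not study data.

```python
from collections import Counter


def weighted_kappa(rater_a, rater_b, categories, weighting="linear"):
    """Cohen's weighted kappa for two raters scoring the same items on an ordinal scale.

    Hypothetical helper for illustration; weighting may be "linear" or "quadratic".
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    if weighting not in ("linear", "quadratic"):
        raise ValueError("weighting must be 'linear' or 'quadratic'")
    categories = list(categories)
    k = len(categories)
    if k < 2:
        raise ValueError("need at least two ordinal categories")
    pos = {c: i for i, c in enumerate(categories)}  # ordinal position of each score
    n = len(rater_a)

    # Joint counts of (rater A, rater B) score pairs and each rater's marginal counts.
    joint = Counter((pos[a], pos[b]) for a, b in zip(rater_a, rater_b))
    marg_a = Counter(pos[a] for a in rater_a)
    marg_b = Counter(pos[b] for b in rater_b)

    def penalty(i, j):
        # Disagreement weight: 0 on the diagonal, 1 for the most distant categories.
        d = abs(i - j) / (k - 1)
        return d if weighting == "linear" else d * d

    cells = [(i, j) for i in range(k) for j in range(k)]
    observed = sum(penalty(i, j) * joint[(i, j)] / n for i, j in cells)
    expected = sum(penalty(i, j) * (marg_a[i] / n) * (marg_b[j] / n) for i, j in cells)
    if expected == 0:
        raise ValueError("kappa is undefined when expected disagreement is zero")
    return 1.0 - observed / expected


# Hypothetical per-scenario QIKAT scores (0-5) from two raters; not study data.
scores_rater_1 = [2, 3, 1, 4, 2, 0, 3, 5]
scores_rater_2 = [2, 3, 2, 4, 2, 0, 3, 4]
print(round(weighted_kappa(scores_rater_1, scores_rater_2, categories=range(6)), 2))
```

Weighted kappa suits ordinal rubric scores because a 1-point disagreement (for example, 2 vs 3) is penalized less than a 4-point disagreement, whereas unweighted kappa treats both as equally wrong.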

The primary outcome measure was the change in QIKAT scores. The secondary outcome measure was the change in self-efficacy scores.

Statistical Analysis

Paired t tests were conducted on the change scores (post − pre) for the QIKAT and the self-efficacy survey to determine whether knowledge (QIKAT) and confidence (self-efficacy) changed significantly. The analysis was conducted using SPSS Version 20 (IBM Corp, Armonk, NY).
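The study ran its paired t tests in SPSS. As an illustration of what that test computes, the sketch below takes each learner's change score (post − pre), which requires a complete pre/post pair for every learner, and forms t = mean change / (SD of change / √n) with n − 1 degrees of freedom. The function `paired_t` and the example scores are hypothetical and are not study data. Obtaining the P value additionally requires the t distribution; for example, SciPy's `scipy.stats.ttest_rel(post, pre)` reports both t and P.

```python
import math
import statistics


def paired_t(pre, post):
    """Paired t statistic computed on change scores (post - pre).

    Hypothetical helper; returns (mean change, SD of change, t statistic, degrees of freedom).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two complete pre/post pairs")
    changes = [after - before for before, after in zip(pre, post)]
    n = len(changes)
    mean_change = statistics.mean(changes)
    sd_change = statistics.stdev(changes)  # sample SD with an n - 1 denominator
    if sd_change == 0:
        raise ValueError("t is undefined when every change score is identical")
    standard_error = sd_change / math.sqrt(n)
    return mean_change, sd_change, mean_change / standard_error, n - 1


# Hypothetical QIKAT totals (0-15) for five learners; not study data.
pre_scores = [5, 6, 7, 6, 5]
post_scores = [8, 9, 7, 10, 7]
mean_change, sd_change, t, df = paired_t(pre_scores, post_scores)
print(f"mean change = {mean_change:.2f}, SD = {sd_change:.2f}, t({df}) = {t:.2f}")
# prints: mean change = 2.40, SD = 1.52, t(4) = 3.54
```

Analyzing change scores, rather than comparing the pre and post groups as if they were independent, removes between-learner variation in baseline ability. This is why the paired test is the natural choice for a pre-post design in which the same learners are measured twice.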

Results

Of the interns assigned to the course, 96% (51 of 53) completed all of the modules, and 72% (37 of 51) completed the accompanying pretests and posttests. Participants were mostly categorical interns (n = 27) and women (n = 20), with a mean age of 28 years; few interns had prior QI experience (n = 2).

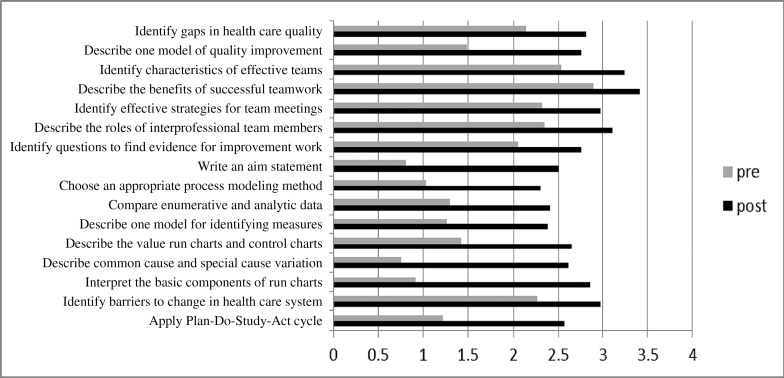

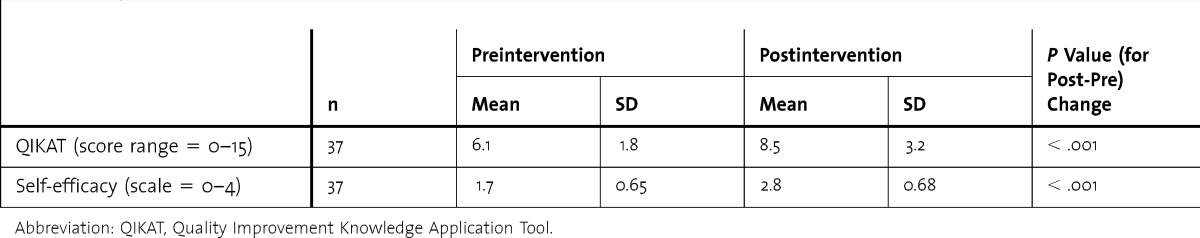

A statistically significant difference in knowledge was seen between the preintervention and postintervention QIKAT scores (maximum score = 15; preintervention mean = 6.1, SD = 1.8; postintervention mean = 8.5, SD = 3.2; P < .001; table). Statistically significant differences in confidence were also noted (scale of 0–4; preintervention mean = 1.7, SD = 0.65; postintervention mean = 2.8, SD = 0.68; P < .001; table), and scores improved on all 16 items (figure). The mean scores on the multiple-choice quizzes that followed the 8 modules are shown in the table provided as online supplemental material. The mean score across all quizzes was 82%.

TABLE.

Knowledge and Self-Efficacy Scores of Interns Who Completed All Elements of the Course

FIGURE.

Interns' Self-Efficacy in Quality Improvement Concepts Measured Before and After Completion of the Modules (n = 37)

Discussion

Our online QI curriculum produced small gains in knowledge. Residents also reported greater confidence in their proficiency with QI concepts. Our results are comparable to those of several non–web-based QI curricula that showed similar knowledge gains on the QIKAT, despite involving different types of learners (a table presenting validity evidence for the QIKAT in other settings is provided as online supplemental material).12,15–17 Although statistically significant, the improvement in QIKAT scores was modest and highly variable (postintervention SD of 3.2 on a test with a maximum score of 15). This variability may reflect the fact that some learners spent insufficient time on the free-text responses. On average, interns scored 2.5 points higher than at baseline: about 2 points per scenario before the course and 3 points after. According to the scoring criteria, a score of 2 indicates that a response needs substantial modification, whereas a score of 3 indicates a good response that still needs some modification. We believe this is a practically meaningful change.
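Because the QIKAT total is the sum of 3 scenario scores (each scored 0 to 5), dividing the group means reported in the Results by 3 converts them to the per-scenario scale of the scoring rubric. This simple check shows where the per-scenario figures in the paragraph above come from:

```latex
\bar{x}_{\text{scenario}} = \frac{\bar{x}_{\text{total}}}{3}, \qquad
\frac{6.1}{3} \approx 2.0 \;(\text{pre}), \qquad
\frac{8.5}{3} \approx 2.8 \;(\text{post})
```

The postcourse value of about 2.8 is what rounds to the 3 points per scenario cited above.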

We used self-efficacy as 1 of the evaluation tools because confidence and personal drive are major components of initiating and completing a QI project. Self-efficacy is a learner's belief that he or she can successfully complete a task. It predicts success because it influences choices, effort, and persistence in the face of challenges.18,19 Self-efficacy scores reflected a positive change in learners' confidence, although these results may be affected by self-report bias.

Learner feedback suggested several improvements for future implementations of the curriculum. These included time for group discussion to promote active learning and reflection. Learners also suggested shorter modules with the option to advance through slides at their own pace, once they achieved the minimum passing score on the posttest. This requirement would prevent learners from clicking through slides without engaging with the content.

Our study has several limitations. First, the curriculum did not include a hands-on QI project. Such an experience would have been desirable, because health care is best improved by combining skill-based activities with theoretical instruction.20–24 Second, the evaluation measured only immediate gains in knowledge and self-efficacy, not long-term retention.

Our web-based QI curriculum was generally acceptable to and feasible for learners. The course promoted self-directed learning that could occur at the learner's convenience, and it required less faculty time than other methods of teaching QI. Our residency program did not have a preexisting QI curriculum, and the web-based approach provided a good starting point and foundation for future project work. The curriculum could easily be transferred to other specialties without additional cost or faculty expertise.

Conclusion

Our QI curriculum was effective, as evidenced by statistically significant gains in knowledge and confidence among learners who completed the course. Positive learner feedback, together with improvements in QIKAT and self-efficacy scores in these specific content areas, supports this finding.

Footnotes

Mamata Yanamadala, MBBS, MS, is Medical Instructor, Division of Geriatrics, Department of Medicine, Duke University Medical Center; Jeffrey Hawley, BS, is Analyst/Programmer, Duke Office of Clinical Research; Richard Sloane, MPH, is Biostatistician, Center for the Study of Aging, Duke University Medical Center; Jonathan Bae, MD, is Assistant Professor, Division of Hospital Medicine, Department of Medicine, Duke University School of Medicine; Mitchell T. Heflin, MD, MHS, is Associate Professor, Division of Geriatrics, Department of Medicine, Duke University Medical Center; and Gwendolen T. Buhr, MD, MHS, MEd, CMD, is Assistant Professor, Division of Geriatrics, Department of Medicine, Duke University Medical Center.

Funding: This study was supported by Duke GME Innovation Funding.

References

1. Accreditation Council for Graduate Medical Education. Common Program Requirements. Effective July 2013. http://www.acgme.org/acgmeweb/Portals/0/PFAssets/ProgramRequirements/CPRs2013.pdf. Accessed January 14, 2014.

2. Leach DC. A model for GME: shifting from process to outcomes. A progress report from the Accreditation Council for Graduate Medical Education. Med Educ. 2004;38(1):12–14. doi: 10.1111/j.1365-2923.2004.01732.x.

3. Accreditation Council for Graduate Medical Education. ACGME Program Requirements for Graduate Medical Education in Internal Medicine. 2009. http://www.acgme.org/acgmeweb/Portals/0/PFAssets/2013-PR-FAQ-PIF/140_internal_medicine_07012013.pdf. Accessed November 4, 2013.

4. Morrison LJ, Headrick LA. Teaching residents about practice-based learning and improvement. Jt Comm J Qual Patient Saf. 2008;34(8):453–459. doi: 10.1016/s1553-7250(08)34056-2.

5. Wong BM, Etchells EE, Kuper A, Levinson W, Shojania KG. Teaching quality improvement and patient safety to trainees: a systematic review. Acad Med. 2010;85(9):1425–1439. doi: 10.1097/ACM.0b013e3181e2d0c6.

6. Heard JK, Allen RM, Clardy J. Assessing the needs of residency program directors to meet the ACGME general competencies. Acad Med. 2002;77(7):750. doi: 10.1097/00001888-200207000-00040.

7. Kerfoot BP, Conlin PR, McMahon GT. Health systems knowledge and its determinants in medical trainees. Med Educ. 2006;40(11):1132. doi: 10.1111/j.1365-2929.2006.02589.x.

8. Peters AS, Ladden MD, Kotch JB, Fletcher RH. Evaluation of a faculty development program in managing care. Acad Med. 2002;77(11):1121–1127. doi: 10.1097/00001888-200211000-00014.

9. Kerfoot BP, Nabha KS, Hafler JP. Designing a surgical “resident-as-teacher” programme. Med Educ. 2004;38(11):1190. doi: 10.1111/j.1365-2929.2004.02005.x.

10. Ruiz JG, Mintzer MJ, Leipzig RM. The impact of e-learning in medical education. Acad Med. 2006;81(3):207–212. doi: 10.1097/00001888-200603000-00002.

11. Ogrinc GS, Headrick L. Fundamentals of Health Care Improvement: A Guide to Improving Your Patients' Care. Oak Brook Terrace, IL: Joint Commission Resources; 2008.

12. Ogrinc G, Headrick LA, Morrison LJ, Foster T. Teaching and assessing resident competence in practice-based learning and improvement. J Gen Intern Med. 2004;19(5, pt 2):496–500. doi: 10.1111/j.1525-1497.2004.30102.x.

13. Varkey P, Karlapudi SP, Bennet KE. Teaching quality improvement: a collaboration project between medicine and engineering. Am J Med Qual. 2008;23(4):296–301. doi: 10.1177/1062860608317764.

14. Fleiss JL. Statistical Methods for Rates and Proportions. 2nd ed. New York, NY: John Wiley & Sons; 1981.

15. Reardon CL, Ogrinc G, Walaszek A. A didactic and experiential quality improvement curriculum for psychiatry residents. J Grad Med Educ. 2011;3(4):562–565. doi: 10.4300/JGME-D-11-0008.1.

16. Vinci LM, Oyler J, Johnson JK, Arora VM. Effect of a quality improvement curriculum on resident knowledge and skills in improvement. Qual Saf Health Care. 2010;19(4):351–354. doi: 10.1136/qshc.2009.033829.

17. Ogrinc G, West A, Eliassen MS, Liuw S, Schiffman J, Cochran N. Integrating practice-based learning and improvement into medical student learning: evaluating complex curricular innovations. Teach Learn Med. 2007;19(3):221–229. doi: 10.1080/10401330701364593.

18. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence. JAMA. 2006;296(9):1094–1102. doi: 10.1001/jama.296.9.1094.

19. Eva KW, Regehr G. Self-assessment in the health professions: a reformulation and research agenda. Acad Med. 2005;80(suppl 10):46–54. doi: 10.1097/00001888-200510001-00015.

20. Headrick LA, Knapp M, Neuhauser D, Gelmon S, Norman L, Quinn D, et al. Working from upstream to improve health care: the IHI interdisciplinary professional education collaborative. Jt Comm J Qual Improv. 1996;22(3):149–164. doi: 10.1016/s1070-3241(16)30217-6.

21. Baker GR, Gelmon S, Headrick L, Knapp M, Norman L, Quinn D, et al. Collaborating for improvement in health professions education. Qual Manag Health Care. 1998;6(2):1–11. doi: 10.1097/00019514-199806020-00001.

22. Headrick L. Guidelines to Accelerate Teaching and Learning About the Improvement of Health Care. Washington, DC: Community-based Quality Improvement Education for Health Professions Health Resources and Services Administration/Bureau of Health Professions; 1999. pp. 1–23.

23. Ogrinc G, Headrick LA, Mutha S, Coleman MT, O'Donnell J, Miles PV. A framework for teaching medical students and residents about practice-based learning and improvement, synthesized from a literature review. Acad Med. 2003;78(7):748–756. doi: 10.1097/00001888-200307000-00019.

24. Dean Cleghorn G, Ross Baker G. What faculty need to learn about improvement and how to teach it to others. J Interprof Care. 2000;14(2):147–159.