ABSTRACT

BACKGROUND

Studies have documented strong associations between cognitive function, health literacy skills, and health outcomes, such that the relationship between health literacy and outcomes may be partially explained by cognitive ability. Common cognitive assessments such as the Mini-Mental State Examination (MMSE) may therefore measure the same latent construct as existing health literacy tools.

OBJECTIVES

We evaluated the potential of the MMSE as a surrogate measure of health literacy by comparing its convergent and predictive validity with those of the three most commonly used health literacy assessments and of education.

SUBJECTS

827 older adults recruited from an academic general internal medicine ambulatory care clinic or one of five federally qualified health centers in Chicago, IL. Non-English speakers and those with severe cognitive impairment were excluded.

MEASURES

Pearson correlations were computed to test the convergent validity of the MMSE with measures of health literacy and with education. Receiver operating characteristic (ROC) curves and the d statistic were used to determine the optimal MMSE cut point for classifying participants with limited health literacy. Multivariable regression models were used to assess the predictive validity of the new MMSE cut point.

KEY RESULTS

The MMSE had moderate to high convergent validity with the existing health literacy measures. The ROC and d statistic analyses suggested an optimal cut point of ≤ 27 on the MMSE. At this threshold, the MMSE predicted health outcomes as well as the existing health literacy measures or education alone.

CONCLUSIONS

The MMSE has considerable face validity as a health literacy measure that could be easily administered in the healthcare setting. Further research should aim to validate this cut point and examine the constructs being measured by the MMSE and other literacy assessments.

KEY WORDS: health literacy, MMSE, measurement, cognition, clinical strategies

INTRODUCTION

Health literacy, as currently defined by the Institute of Medicine, is “the degree to which individuals have the capacity to obtain, process, and understand basic health information and services needed to make appropriate health decisions.”1 Existing tools to measure health literacy have predominantly focused on an individual’s ability to read or to perform basic math.2 Early on, this led many researchers and health professionals to design solutions that solely involved modifying health materials according to best practices for plain language and format.3,4 These interventions were somewhat effective at improving comprehension and knowledge, but had variable or no impact on behavior.

Regardless of existing controversies over how health literacy should be defined, these crude measures of reading fluency, vocabulary, and numeracy have demonstrated a high degree of sensitivity in detecting an at-risk group of individuals who consistently experience poorer health outcomes, ranging from inadequate knowledge and self-care ability to suboptimal chronic disease outcomes and greater risk of hospitalization and mortality.5–9 The effect of ‘low health literacy’ on physical and mental health, as measured by the SF-36, has previously been likened in magnitude to that of a diagnosis of cancer or chronic obstructive pulmonary disease (COPD).10 Any effective solution to this problem will therefore need to include a means of identifying those at risk. Yet the tools currently available were designed for research rather than clinical use.

There may be an opportunity to screen for limited health literacy using existing clinical assessments that measure the same latent trait as common health literacy tools. Specifically, more recent studies have found that health literacy assessments are strongly correlated with various tests of cognitive ability,11,12 such that the relationship between literacy and health outcomes may be partly explained by a more global cognitive skill set.13 If cognitive and health literacy measures reveal a similar latent trait, then a clinical screening tool such as the Mini-Mental State Examination (MMSE),14,15 a brief global assessment of cognitive function, may serve as a viable surrogate measure to identify patients at risk for limited health literacy. The advantages of using the MMSE in this manner could be twofold: 1) it would offer greater face validity for the actual problem, namely limited proficiency in understanding and acting on health information; and 2) the MMSE is already a well-vetted assessment used for clinical purposes. In this study, we sought to explore associations between the MMSE and the most common measures used in health literacy research, and to determine an MMSE threshold that would reflect limited health literacy. We then compared the predictive validity of this threshold with that of the common health literacy assessments by examining the associations of each with physical and mental health outcomes.

METHODS

This investigation was embedded within a larger cohort study of health literacy, cognitive function and decline, and health among older adults known as ‘LitCog’ (R01 AG030611). A more complete description of the LitCog study and its assessment battery has been published previously.13 Northwestern University’s Institutional Review Board approved this study.

Sample and Procedure

English-speaking adults aged 55 to 74 years who received care at an academic general internal medicine ambulatory care clinic or one of five federally qualified health centers in Chicago, IL were recruited for study participation. Patients were initially identified through each institution’s electronic health record; eligibility was determined by age, and patients with a severe cognitive or hearing impairment (identified by ICD-9 diagnosis codes) were excluded. Additional exclusion criteria were limited English proficiency and not being connected to a clinic physician (defined as < 2 visits in 2 years). Eligible patients were then invited to participate by telephone, at which time the Six-Item Screener was administered to further rule out cognitive impairment or dementia (scores < 4 indicating impairment).16 The final sample included 827 participants, each of whom completed two in-person, structured interviews. Together, the interviews covered basic demographic information, socioeconomic status, comorbidity, functional health status, health literacy measures, the MMSE, a comprehensive battery of cognitive tests, and an assessment of performance on everyday health tasks.

Measurement

Health literacy measures included the Test of Functional Health Literacy in Adults (TOFHLA), the Rapid Estimate of Adult Literacy in Medicine (REALM), and the Newest Vital Sign (NVS). The TOFHLA uses actual materials patients might encounter in healthcare to test reading fluency and numeracy, assessing comprehension of labeled prescription vials, an appointment slip, a chart describing eligibility for financial aid, and an example of results from a medical test17 (range 0–100; limited literacy < 75). The REALM is a word-recognition test consisting of 66 health-related words arranged in order of increasing difficulty.18 Patients are scored on the total number of words pronounced correctly (range 0–66; limited literacy < 61). Finally, the NVS is a screening tool used to determine risk for limited health literacy.19 Patients are given a nutrition label and asked six questions about how they would interpret and act on the information (range 0–6; limited literacy < 4).
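To make the dichotomization concrete, the sketch below applies the limited-literacy thresholds used in Table 3 (TOFHLA < 75, REALM < 61, NVS < 4), which correspond to scores below each instrument's adequate range. It is a minimal illustration only; the dictionary and function names are hypothetical and not part of any published scoring software.

```python
# Hypothetical helper: flag limited health literacy from raw instrument scores.
# Thresholds mirror the "limited" definitions used in Table 3 (a score strictly
# below the adequate range of each instrument).
LIMITED_BELOW = {
    "TOFHLA": 75,  # scored 0-100
    "REALM": 61,   # scored 0-66
    "NVS": 4,      # scored 0-6
}

SCORE_RANGE = {"TOFHLA": (0, 100), "REALM": (0, 66), "NVS": (0, 6)}


def is_limited(instrument: str, score: int) -> bool:
    """Return True if `score` falls in the limited health literacy range."""
    low, high = SCORE_RANGE[instrument]
    if not low <= score <= high:
        raise ValueError(f"{instrument} score {score} outside {low}-{high}")
    return score < LIMITED_BELOW[instrument]


if __name__ == "__main__":
    print(is_limited("TOFHLA", 74))  # True
    print(is_limited("REALM", 61))   # False
    print(is_limited("NVS", 3))      # True
```

Keeping the thresholds in one table makes it easy to check them against each instrument's published scoring rules before use.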

Cognitive function was assessed with the MMSE, which includes tasks of orientation, serial subtraction, working memory, delayed recall, and multi-step instructions.15 Items are grouped into domains: orientation to time, orientation to place, registration, serial subtraction, recall, language, and praxis/command, with total scores ranging from 0 to 30. The MMSE is commonly used to screen for dementia in a variety of clinical settings; despite its widespread use, it is recognized that, much as with health literacy measures, less educated but cognitively intact individuals may perform more poorly on its tasks.20

Outcomes included self-reported physical and mental health status. Physical function was assessed using the SF-36 physical health summary subscale.21 Anxiety and depression were measured using the Patient-Reported Outcomes Measurement Information System (PROMIS) short form subscales.22 Possible scores ranged from 7 to 35 for anxiety, from 8 to 40 for depression, and from 0 to 100 for physical function.

Analysis Plan

Descriptive statistics were calculated for demographic variables (including education), health literacy measures, the MMSE, and health status. Pearson product-moment correlations were computed to examine the convergent validity of the MMSE and its components with the three health literacy measures and education. We calculated the accuracy of the MMSE in correctly classifying those with limited health literacy by computing receiver operating characteristic (ROC) curves, using the limited health literacy cut points of the TOFHLA, REALM, and NVS. To identify the optimal MMSE cutoff score for predicting limited health literacy, we computed the d statistic for each potential MMSE threshold from 25 to 29 (scores below 25 reflect cognitive impairment) and selected the threshold that minimized d.23
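The cut-point search described above can be sketched in a few lines. This is a minimal illustration, not the authors' Stata code. It assumes that a participant is flagged as at risk when the MMSE score is at or below the candidate cut point, and it takes d to be the Euclidean distance from each cut point's ROC coordinates to the ideal corner, d = √[(1 − sensitivity)² + (1 − specificity)²], a definition that reproduces the d values reported in the Results. The function names are hypothetical.

```python
from math import hypot


def sens_spec(mmse_scores, limited, cut):
    """Sensitivity and specificity of the rule 'MMSE <= cut' for flagging
    participants classified as having limited literacy by a reference measure."""
    tp = fn = tn = fp = 0
    for score, is_limited in zip(mmse_scores, limited):
        flagged = score <= cut
        if is_limited and flagged:
            tp += 1
        elif is_limited:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


def d_statistic(sensitivity, specificity):
    """Distance from the ROC point to the ideal corner (sensitivity = specificity = 1)."""
    return hypot(1 - sensitivity, 1 - specificity)


def best_cut(mmse_scores, limited, candidates=range(25, 30)):
    """Return (cut, sensitivity, specificity, d) for the candidate with the smallest d."""
    results = []
    for cut in candidates:
        se, sp = sens_spec(mmse_scores, limited, cut)
        results.append((cut, se, sp, d_statistic(se, sp)))
    # min() keeps the first minimum, so ties go to the lower cut point.
    return min(results, key=lambda r: r[3])
```

For example, with the made-up scores `mmse = [24, 26, 27, 28, 29, 30, 27, 29, 30, 30]` and reference classifications `limited = [True, True, True, False, True, False, False, False, False, False]`, `best_cut(mmse, limited)` selects a cut point of 27 (sensitivity 0.75, specificity about 0.83). The sketch raises `ZeroDivisionError` if either the limited or the adequate group is empty.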

Once a cut point was identified, we computed the sensitivity and specificity of the MMSE in identifying limited health literacy as defined by each literacy measure. Multivariable regression analyses were conducted to compare the predictive validity of the MMSE (dichotomized at the newly calculated cut point) with that of the three literacy assessments (dichotomized as limited/adequate) and education (< high school, ≥ high school), by examining the associations of each with physical and mental health outcomes. Each model included age, sex, race, and chronic conditions as covariates. Specific cognitive tasks within the MMSE were also examined separately. The Vuong test,24 a likelihood-ratio-based approach for comparing non-nested models, was used to determine whether the variance explained (R²) differed significantly between models containing the MMSE and those containing each health literacy measure or education. All analyses were performed using Stata version 12 (StataCorp, College Station, TX).
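The study ran the Vuong test in Stata. As a rough illustration of the underlying idea, the standard-library Python sketch below computes the uncorrected Vuong statistic from the per-observation log-likelihoods of two non-nested models, here assumed to be normal linear models summarized by their residuals. For such models fitted to the same outcome, a higher log-likelihood corresponds to a lower residual sum of squares and hence a higher R². The helper names are hypothetical, and the sketch omits the AIC/BIC-style parameter correction, which cancels when, as in Table 3, each model contains the same covariates plus a single literacy or cognition term.

```python
import math
from statistics import fmean, pstdev


def gaussian_loglik(residuals):
    """Per-observation log-likelihoods of a normal linear model,
    using the maximum-likelihood variance estimate RSS / n."""
    n = len(residuals)
    sigma2 = sum(r * r for r in residuals) / n
    const = -0.5 * math.log(2 * math.pi * sigma2)
    return [const - r * r / (2 * sigma2) for r in residuals]


def vuong_test(loglik_a, loglik_b):
    """Uncorrected Vuong (1989) test for two non-nested models fitted to the
    same observations. Returns (z, two-sided p-value); z > 0 favors model A,
    z < 0 favors model B."""
    if len(loglik_a) != len(loglik_b):
        raise ValueError("models must be evaluated on the same observations")
    diffs = [a - b for a, b in zip(loglik_a, loglik_b)]
    n = len(diffs)
    # Vuong's variance estimate divides by n, hence pstdev rather than stdev.
    z = math.sqrt(n) * fmean(diffs) / pstdev(diffs)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))  # P(|Z| > |z|), Z ~ N(0, 1)
    return z, p_two_sided
```

In use, one would pass `gaussian_loglik(residuals)` from, for instance, the adjusted model containing MMSE ≤ 27 and from the model containing TOFHLA < 75; a two-sided p-value above 0.05 indicates no significant difference in fit. The statistic is undefined (division by zero) if the two models fit every observation identically.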

RESULTS

The sample is described in Table 1. Participants were mostly female, with a mean (SD) age of 63.1 (5.5) years. The sample was diverse in education level; about half of participants were white and about half reported an annual income above $50,000. The mean (SD) MMSE score was 28.0 (2.3) of a possible 30 points. The total MMSE score was moderately to strongly correlated with each of the health literacy measures and with education (TOFHLA r = 0.70, REALM r = 0.63, NVS r = 0.54, education r = 0.52; all p < 0.001; Table 2).

Table 1.

Sample Characteristics and Test Performance (N = 827)

| Characteristic | Summary Value |
|---|---|
| Age, mean (SD) | 63.1 (5.5) |
| Gender (%) | |
| Female | 68.0 |
| Race (%) | |
| Black | 42.7 |
| White | 50.1 |
| Other | 7.2 |
| Education Level (%) | |
| High School or less | 27.1 |
| Some College or Technical School | 22.1 |
| College Graduate | 20.4 |
| Graduate Degree | 30.4 |
| Income (%) | |
| < $10,000 | 12.2 |
| $10,000–$24,999 | 19.7 |
| $25,000–$49,999 | 15.3 |
| > $50,000 | 52.8 |
| Number of Comorbidities, mean (SD) | 1.9 (1.4) |
| Health Literacy and Cognitive Measures, mean (SD) | |
| REALM (0–66) | 59.6 (12.1) |
| TOFHLA (0–100) | 77.0 (16.0) |
| NVS (0–6) | 3.2 (2.1) |
| MMSE (0–30) | 28.0 (2.3) |
| Functional Health Status, mean (SD) | |
| Anxiety (7–35) | 15.3 (5.8) |
| Depression (8–40) | 13.0 (6.1) |
| Physical Functioning (0–100) | 81.5 (18.1) |

Table 2.

Correlations Between the MMSE, Its Subparts and Health Literacy Measures (n = 827)

| Measure | TOFHLA | REALM | NVS | Education |
|---|---|---|---|---|
| MMSE Total | 0.70* | 0.63* | 0.54* | 0.52* |
| Orientation | 0.38* | 0.28* | 0.25* | 0.18* |
| Registration | 0.08 | 0.09 | 0.06 | 0.03 |
| Serial Subtraction | 0.52* | 0.44* | 0.45* | 0.45* |
| Delayed Recall | 0.35* | 0.28* | 0.46* | 0.37* |
| Language | 0.46* | 0.40* | 0.37* | 0.33* |
| Praxis | 0.55* | 0.50* | 0.39* | 0.41* |

*p < 0.001

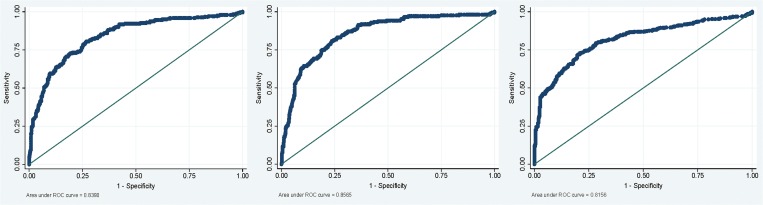

Across the candidate MMSE cut points (25–29), the greatest areas under the ROC curve for classifying limited health literacy as defined by the TOFHLA, REALM, and NVS were 0.84, 0.86, and 0.82, respectively (Fig. 1). Based on the TOFHLA, the greatest area under the curve and the smallest d statistic were obtained with a cut score of ≤ 27, which had a sensitivity of 67.9 % and a specificity of 84.4 % for classifying limited health literacy (d = 0.36). At the same threshold, the MMSE had a sensitivity of 71.6 % and a specificity of 82.2 % for identifying limited health literacy as defined by the REALM (d = 0.34). Based on the NVS, however, the optimal MMSE threshold was ≤ 28, with a sensitivity of 74.0 % and a specificity of 76.0 % (d = 0.35).
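As an arithmetic check, the d values above can be reproduced from the reported sensitivities and specificities, assuming d is the distance in ROC space from each cut point to the ideal corner (sensitivity = specificity = 1):

```python
from math import hypot

# Sensitivity (%), specificity (%), and d as reported in the text for each
# reference measure and its optimal MMSE cut point.
reported = {
    "TOFHLA, MMSE <= 27": (67.9, 84.4, 0.36),
    "REALM, MMSE <= 27": (71.6, 82.2, 0.34),
    "NVS, MMSE <= 28": (74.0, 76.0, 0.35),
}

for label, (sens_pct, spec_pct, d_reported) in reported.items():
    d = hypot(1 - sens_pct / 100, 1 - spec_pct / 100)
    print(f"{label}: d = {d:.2f} (reported {d_reported})")

# TOFHLA, MMSE <= 27: d = 0.36 (reported 0.36)
# REALM, MMSE <= 27: d = 0.34 (reported 0.34)
# NVS, MMSE <= 28: d = 0.35 (reported 0.35)
```

The agreement to two decimal places supports reading the d statistic as this distance, although the source does not state the formula explicitly.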

Figure 1.

Receiver operating characteristic (ROC) curves for the TOFHLA, REALM, NVS, and education.

Using a cut point of ≤ 27, the MMSE was a significant independent predictor of physical and mental health outcomes: those scoring at or below this threshold had significantly poorer physical health (β = −2.72, p < 0.05) and higher anxiety (β = 0.96, p < 0.05) and depression scores (β = 1.76, p < 0.001; Table 3). The variance explained (R²) by models with the dichotomized MMSE as a predictor was similar to that explained by models with any of the common health literacy measures or with educational attainment. By the Vuong test, differences in variance explained among the models containing the MMSE, TOFHLA, REALM, NVS, and education were not statistically significant (Table 3).

Table 3.

Models Predicting Physical and Mental Health Outcomes by Three Health Literacy Measures and the MMSE

| Measure | Physical Health β (95 % CI) | Anxiety Score β (95 % CI) | Depression Score β (95 % CI) |
|---|---|---|---|
| MMSE ≤ 27 | −2.72 (−5.06, −0.38)* | 0.96 (0.11, 1.81)* | 1.76 (0.88, 2.63)*** |
| | R² = 0.34 | R² = 0.13 | R² = 0.18 |
| - Orientation | 2.99 (1.20, 4.77)** | −0.73 (−1.38, −0.75)* | −1.28 (−1.95, −0.60)*** |
| | R² = 0.35 | R² = 0.13 | R² = 0.18 |
| - Registration | 0.05 (−14.58, 1.65) | −0.62 (−5.93, 4.68) | 1.63 (−3.83, 7.10) |
| | R² = 0.35 | R² = 0.13 | R² = 0.17 |
| - Serial Subtraction | 0.90 (0.15, 1.65)* | −0.29 (−0.57, −0.01)* | −0.65 (−0.94, −0.36)*** |
| | R² = 0.35 | R² = 0.14 | R² = 0.19 |
| - Delayed Recall | 0.84 (−0.03, 1.72) | −0.19 (−0.51, 0.13) | −0.47 (−0.80, −0.15)** |
| | R² = 0.34 | R² = 0.14 | R² = 0.18 |
| - Language | −0.77 (−3.09, 1.54) | −0.55 (−1.39, 0.29) | −0.99 (−1.86, −0.12)* |
| | R² = 0.33 | R² = 0.14 | R² = 0.18 |
| - Praxis | 0.94 (−0.63, 2.51) | −0.28 (−0.86, 0.29) | −1.00 (−1.28, −0.41)** |
| | R² = 0.33 | R² = 0.14 | R² = 0.19 |
| TOFHLA < 75 | −3.99 (−6.40, −1.58)** | 0.47 (−0.42, 1.37) | 1.57 (0.66, 2.49)** |
| | R² = 0.35 | R² = 0.13 | R² = 0.18 |
| REALM < 61 | −2.61 (−5.20, −0.03)* | 0.03 (−0.91, 0.97) | 0.96 (−0.01, 1.94) |
| | R² = 0.34 | R² = 0.13 | R² = 0.17 |
| NVS < 4 | −4.07 (−6.29, −1.85)*** | 0.71 (−0.10, 1.52) | 1.47 (0.63, 2.30)** |
| | R² = 0.34 | R² = 0.13 | R² = 0.18 |
| Education < HS | −5.92 (−9.21, −2.63)*** | 0.76 (−0.44, 1.96) | 2.84 (1.61, 4.07)*** |
| | R² = 0.34 | R² = 0.13 | R² = 0.19 |

Models are adjusted for age, gender, race, and comorbidity

*** p < 0.001, ** p < 0.01, * p < 0.05

In analyses that examined associations between specific MMSE tasks and health status, only orientation and serial subtraction were associated with all three outcomes of physical health, anxiety, and depression (Table 3). However, all MMSE tasks except registration were significantly associated with a higher number of reported depressive symptoms.

DISCUSSION

The links between health literacy and health outcomes, and between health literacy and the ability to manage self-care, have long been established.5,7,8 These relationships have led the World Health Organization and the Institute of Medicine to promote improving health literacy as a public health goal.1 While the American Medical Association has recommended a ‘universal precautions’ approach using clear health communication practices,25 some studies have shown that simply using plain language and ‘teach back’ methods to confirm understanding may not be sufficient.26 Creating, identifying, and validating rapid assessment tools that measure health literacy for clinical purposes has therefore become of increasing interest.

Currently, routinely collected patient information cannot adequately identify those with limited health literacy. With a simple screening tool, however, a healthcare provider might be able to readily learn which patients are at greater risk for misunderstanding medical instructions or having difficulty with self-care behaviors. With this information, additional resources, increased communication and instruction, or more frequent monitoring could be allocated.

Our study found that the MMSE demonstrated moderate to high convergent validity with each of the most common health literacy measures, suggesting that it may capture the same problems related to personal self-care management as the current, relatively crude, assessments. The MMSE was also significantly associated with functional health status at the suggested cut point (≤ 27), with effect sizes similar to those of the “limited” thresholds for each of the three existing health literacy measures. Although this threshold lies only a narrow margin from normal cognitive performance, with as few as three incorrect responses identifying someone as at risk for limited health literacy, our analyses also suggest which specific incorrect responses may matter most. Errors on the serial subtraction and orientation tasks were most predictive of poorer health status. Serial subtraction was moderately correlated with all of the health literacy measures, and prior research by members of this team found that this task partially explained the association between health literacy (measured by the TOFHLA) and mortality risk.5 Problems with orientation to time or place, however, are less likely to be direct evidence of limited health literacy and may instead reflect mild cognitive impairment.

Interestingly, our suggested cut point is similar to a more recently identified MMSE threshold for mild cognitive impairment (MCI) and dementia (< 27).27 Both MCI and limited health literacy often go undetected in routine primary care.28 Although we cannot draw conclusions about the underlying latent trait that results in a score of ≤ 27, whether cognitive impairment or limited health literacy, we argue that we are capturing an individual-level deficit in self-care proficiency that would be missed with the original MMSE thresholds.24 Patients falling within this range therefore might be presumed to have inadequate ability to understand and act on medical instructions, which could warrant a more in-depth assessment of what remediation is necessary. Future studies are needed to better understand the latent traits driving lower MMSE scores, so that clinicians can better differentiate cognitive impairment from limited health literacy. Clearly, an MMSE score suggestive of moderate or severe impairment would warrant appropriate cognitive and psychosocial interventions. Yet patients whose only errors are three to five items on ‘fluid’ (active learning) tasks, such as serial subtraction, might be considered at risk for limited health literacy. This might give clinicians pause and suggest the need to confirm these patients’ disease and treatment knowledge, as well as their self-care ability.

Given the striking similarities in face validity between the MMSE and health literacy assessments, it is not entirely clear why the MMSE was associated with anxiety symptoms while the other measures were not. One possibility is that participants perceived the MMSE as an assessment of their cognition, whereas the purpose of the other tests was less apparent. Those who were experiencing cognitive decline may have been acutely aware of, and concerned by, it.29 Health literacy assessments, while clearly capturing a similar latent trait, may not appear to measure cognitive skills from the patient’s perspective.

This study has several limitations. Our sample was older, English-speaking, and predominantly female. In addition, individuals classified as severely cognitively impaired during eligibility screening were not included. We therefore cannot generalize the usefulness of this new cut point beyond the studied population, most notably to younger adults. More broadly, although predictive, the health literacy assessments examined here are all crude measures; in clinical practice they would serve only for initial screening and would require detailed diagnostic follow-up.

Additionally, the health outcomes used to measure the predictive validity of the MMSE were cross-sectional and self-reported; we therefore cannot determine whether participants’ reported health status reflected their actual health. Finally, the optimal cut point based on the NVS was ≤ 28. This finding is not surprising, as the NVS typically classifies more people as having limited health literacy,9,30 which raises the MMSE threshold needed to identify them. Studies in larger and more diverse populations are needed to confirm the most appropriate MMSE threshold for identifying limited health literacy, and validated clinical outcomes are needed to confirm the predictive validity of the new cut point.

In summary, the MMSE may serve as a reasonable proxy measure for health literacy skills. It has considerable face validity as a health literacy measure and could be easily administered in the healthcare setting. Based on our findings, we believe the MMSE may capture the same underlying skill set as the existing measures, and its ability to identify limited health literacy and to predict health outcomes is comparable. Further research will help to clarify the proposed cut point, differentiate the underlying constructs captured by each measure, and investigate whether the MMSE is useful to clinicians as a health literacy screening tool during routine clinical exams.

Acknowledgements

Funders: This project is supported by the National Institute on Aging (R01 AG030611; PI: Wolf).

Prior Presentations: These data were presented in poster format at the Health Literacy Annual Research Conference (HARC) on 21 October 2012 in Bethesda, Maryland.

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Funding Source

This project was supported by the National Institute on Aging (R01 AG030611; PI: Wolf).

REFERENCES

- 1. Health Literacy: A Prescription to End Confusion. Washington, DC: National Academy Press; 2004.

- 2. Rudd RE. Health literacy skills of US adults. Am J Health Behav. 2007;31(Suppl 1):8–18. doi: 10.5993/AJHB.31.s1.3.

- 3. Baker DW. The relationship of patient reading ability to self-reported health and use of health services. Am J Public Health. 1997;87(6):1027–30. doi: 10.2105/AJPH.87.6.1027.

- 4. Baker DW. Reading between the lines: deciphering the connections between literacy and health. J Gen Intern Med. 1999;14(5):315–7. doi: 10.1046/j.1525-1497.1999.00342.x.

- 5. Baker DW, Wolf MS, Feinglass J, Thompson JA. Health literacy, cognitive abilities, and mortality among elderly persons. J Gen Intern Med. 2008;23(6):723–6. doi: 10.1007/s11606-008-0566-4.

- 6. Baker DW, Wolf MS, Feinglass J, Thompson JA, Gazmararian JA, Huang J. Health literacy and mortality among elderly persons. Arch Intern Med. 2007;167(14):1503–9. doi: 10.1001/archinte.167.14.1503.

- 7. DeWalt DA, Berkman ND, Sheridan S, Lohr KN, Pignone MP. Literacy and health outcomes: a systematic review of the literature. J Gen Intern Med. 2004;19(12):1228–39. doi: 10.1111/j.1525-1497.2004.40153.x.

- 8. Williams MV, Baker DW, Honig EG, Lee TM, Nowlan A. Inadequate literacy is a barrier to asthma knowledge and self-care. Chest. 1998;114(4):1008–15. doi: 10.1378/chest.114.4.1008.

- 9. Baker DW, Parker RM, Williams MV, Clark WS. Health literacy and the risk of hospital admission. J Gen Intern Med. 1998;13(12):791–8. doi: 10.1046/j.1525-1497.1998.00242.x.

- 10. Wolf MS, Gazmararian JA, Baker DW. Health literacy and functional health status among older adults. Arch Intern Med. 2005;165(17):1946–52. doi: 10.1001/archinte.165.17.1946.

- 11. Baker DW, Gazmararian JA, Sudano J, Patterson M, Parker RM, Williams MV. Health literacy and performance on the Mini-Mental State Examination. Aging Ment Health. 2002;6(1):22–9. doi: 10.1080/13607860120101121.

- 12. Federman AD, Sano M, Wolf MS, Siu AL, Halm EA. Health literacy and cognitive performance in older adults. J Am Geriatr Soc. 2009;57(8):1475–80. doi: 10.1111/j.1532-5415.2009.02347.x.

- 13. Wolf MS, Curtis LM, Wilson EA, et al. Literacy, cognitive function, and health: results of the LitCog study. J Gen Intern Med. 2012;27(10):1300–7. doi: 10.1007/s11606-012-2079-4.

- 14. Tangalos EG, Smith GE, Ivnik RJ, et al. The Mini-Mental State Examination in general medical practice: clinical utility and acceptance. Mayo Clin Proc. 1996;71(9):829–37. doi: 10.4065/71.9.829.

- 15. Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”: a practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12(3):189–98. doi: 10.1016/0022-3956(75)90026-6.

- 16. Callahan CM, Unverzagt FW, Hui SL, Perkins AJ, Hendrie HC. Six-item screener to identify cognitive impairment among potential subjects for clinical research. Med Care. 2002;40(9):771–81. doi: 10.1097/00005650-200209000-00007.

- 17. Parker RM, Baker DW, Williams MV, Nurss JR. The test of functional health literacy in adults: a new instrument for measuring patients’ literacy skills. J Gen Intern Med. 1995;10(10):537–41. doi: 10.1007/BF02640361.

- 18. Davis TC, Long SW, Jackson RH, et al. Rapid estimate of adult literacy in medicine: a shortened screening instrument. Fam Med. 1993;25(6):391.

- 19. Weiss BD, Mays MZ, Martz W, et al. Quick assessment of literacy in primary care: the newest vital sign. Ann Fam Med. 2005;3:514–22. doi: 10.1370/afm.405.

- 20. Small GW. What we need to know about age related memory loss. BMJ. 2002;324(7352):1502–5. doi: 10.1136/bmj.324.7352.1502.

- 21. Ware JE. SF-36 Physical and Mental Health Summary Scales: A User’s Manual. Boston: The Health Institute, New England Medical Center; 1994.

- 22. Hays RD, Bjorner JB, Revicki DA, Spritzer KL, Cella D. Development of physical and mental health summary scores from the patient-reported outcomes measurement information system (PROMIS) global items. Qual Life Res. 2009;18(7):873–80. doi: 10.1007/s11136-009-9496-9.

- 23. Yovanoff PS, Squires J. Determining cutoff scores on a developmental screening measure: use of receiver operating characteristics and item response theory. J Early Interv. 2006;29(1):48–62. doi: 10.1177/105381510602900104.

- 24. Vuong QH. Likelihood ratio tests for model selection and non-nested hypotheses. Econometrica. 1989;57(2):307–33.

- 25. Schwartzberg JG, Cowett A, VanGeest J, Wolf MS. Communication techniques for patients with low health literacy: a survey of physicians, nurses, and pharmacists. Am J Health Behav. 2007;31(Suppl 1):S96–104. doi: 10.5993/AJHB.31.s1.12.

- 26. Davis TC, Wolf MS, Bass PF III, et al. Literacy and misunderstanding prescription drug labels. Ann Intern Med. 2006;145(12):887–94. doi: 10.7326/0003-4819-145-12-200612190-00144.

- 27. O’Bryant SE, Humphreys JD, Smith GE, et al. Detecting dementia with the mini-mental state examination in highly educated individuals. Arch Neurol. 2008;65(7):963–7. doi: 10.1001/archneur.65.7.963.

- 28. Winblad B, Palmer K, Kivipelto M, et al. Mild cognitive impairment–beyond controversies, towards a consensus: report of the International Working Group on Mild Cognitive Impairment. J Intern Med. 2004;256(3):240–6. doi: 10.1111/j.1365-2796.2004.01380.x.

- 29. Caselli RJ, Chen K, Locke DE, Lee W, Roontiva A, Brandy D, Fleisher AS, Reiman EM. Subjective cognitive decline: self and informant comparisons. Alzheimers Dement. 2013.

- 30. Osborn CY, Weiss BD, Davis TC, et al. Measuring adult literacy in health care: performance of the newest vital sign. Am J Health Behav. 2007;31(Suppl 1):S36–46. doi: 10.5993/AJHB.31.s1.6.